NASA’s Earth Observatory reports that there was a record low Arctic sea ice concentration in June 2005. There was a record number of typhoons over Japan in 2004. In June, there were reports of a number of record-breaking events in the US. And on July 28, the British newspaper The Independent reported on record-breaking rainfall (~1 m) in India, claiming hundreds of lives. These are just a few examples of recent observations. So, what is happening?

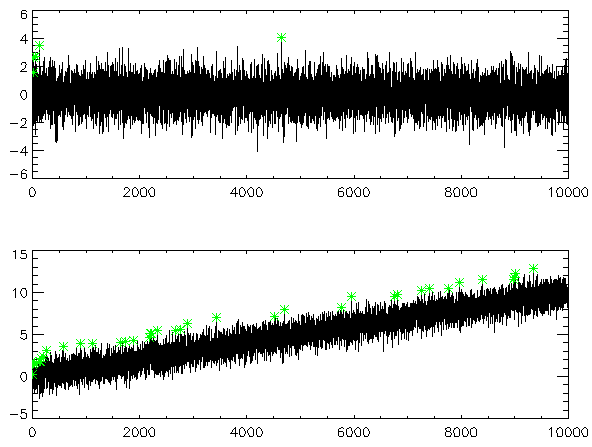

Whenever there is a new record-breaking weather event, such as record-high temperatures, it is natural to ask whether the occurrence of such an event is due to a climate change. Before we proceed, it may be useful to define the term ‘statistically stationary’, the meaning here being that statistical aspects of the weather (means, standard deviation etc.) aren’t changing. In statistics, there is a large volume of literature on record-breaking behaviour, and statistically stationary systems will produce new record-breaking events from time to time. On the other hand, one would expect to see more new record-breaking events in a changing climate: when the mean temperature level rises, new temperatures will surpass past record-highs. This is illustrated in Fig. 1.

Fig. 1. An example showing two different cases, one which is statistically stable (upper) and one that is undergoing a change with a high occurrence of new record-events. Green symbols mark a new record-event. (courtesy William M Connolley)

Record-events and extremes are closely related. Record-high and low values define the limits (bound or the range) between which observed values lie, and extremes are defined as those values in the vicinities of these limits. The third assessment report (TAR) of the IPCC reported some studies that have found regional trends in temperature extremes (particularly low temperatures). There have also been some reports on trends of more extreme precipitation, although The International Ad Hoc Detection and Attribution Group (IDAG, 2005) did not manage to attribute trends in precipitation to anthropogenic greenhouse gases (G) – a quote from their review article is: “For diurnal temperature range (DTR) and precipitation, detection is not achieved”, here ‘detection’ implying the signal of G. A high occurrence of new record-events is an indication of a change in the ‘tails’ of the frequency distribution and thus that values that in the past were considered extreme are becoming more common.

But how does one distinguish the behaviour of a stable system from one that is undergoing a change in terms of record-events? This kind of question has traditionally not been discussed much in the climate research literature (e.g. record-events are not discussed in IDAG, 2005 or the IPCC TAR), perhaps because it has been perceived that analysis of record-breaking events is difficult if not impossible. There are many different types of record-events related to climatic and weather phenomena. One hurdle is that the number of record-/extreme-events is very low and there is not enough data for the analysis. Another problem is the data quality: do the sensors give good readings near the limits of their calibrated scales?

Yet, there is a volume of statistical literature on the subject of record-statistics, and the underlying theory for the likelihood of a record-breaking event taking place in a stable system is remarkably simple (Benestad, 2003, 2004). In fact, the simplicity and the nature of the theory for the null-hypothesis (stable behaviour/stationary statistics for a set of unrelated observations, referred to as independent and identically distributed data, or ‘iid’, in statistics) make it possible to test whether the occurrence of record-events is consistent with the null-hypothesis (iid). I will henceforth refer to this as the ‘iid-test’ (unlike many other tests, this analysis does not require that the data are normally distributed, as long as there are no ties for the record-event). The results of such a test on monthly absolute minimum/maximum temperatures in the Nordic countries and monthly mean temperatures worldwide are inconsistent with what we would see under a stable climate. Further analysis showed that the absolute monthly maximum/minimum temperature was poorly correlated with that of the previous month, ruling out dependency in time (this is also true for monthly mean temperature – hence, ‘seasonal forecasting’ is very difficult in this region). Additional tests (Monte Carlo simulations) were used to check whether a spatial dependency could explain the deviation from the iid-rule, but the conclusion was that it could not explain the observed number of records. A similar conclusion was drawn from a similar analysis applied to a (spatially sparse) global network of monthly mean temperatures, where the effect of spatial dependencies for inter-annual and inter-decadal variations could be ruled out (Benestad, 2004). Thus, the frequent occurrence of record-high temperatures is consistent with a global warming.

It is not possible to apply the iid-test to one single event, but the test can detect patterns in a series of events. The test requires a number of independent observations of the same variable over a (sufficiently long) period of time. Since climate encompasses a large number of different parameters (temperature, precipitation, wind, ice extent, etc), it is probable that a climate change would affect the statistics of a number of different parameters simultaneously. Thus, the iid-test can be applied to a set of parallel series representing different aspects of one complex system to examine whether its general state is undergoing a change. Satellite observations tend to be too short for concluding whether they are consistent with the null-hypothesis that there is no climate change (i.e. the data being iid) or the alternative hypothesis that the climate is in fact changing (or the observations are not independent). Nevertheless, the record-low sea ice concentration is consistent with a shrinking ice-cap due to a warming. Rainfall series tend to be longer and therefore more appropriate for such tests, but such an analysis has not yet been done on a global scale to my knowledge. Results of an iid-test on series of maximum monthly 24-hour rainfall within the Nordic countries (Norway, Sweden, Finland, Denmark & Iceland) could not rule out the null-hypothesis (i.e. the possibility that there is no change in the rainfall statistics), but this case was on the borderline and the signal could also be too weak for detection. In a recent publication, however, Kharin & Zwiers (2005) analysed extreme values from model simulations of a changing climate and found that an enhanced greenhouse effect will likely lead to ‘more extreme’ precipitation. This would imply an anomalously high occurrence of record-high rainfall amounts. They discussed the effect of variables being non-iid on the extreme value analysis, and after taking that into account, proposed that changes in extreme precipitation are likely to be larger than the corresponding changes in annual mean precipitation under a global warming. Thus, new record-high precipitation amounts are consistent with the climate change scenarios.

Theory for the mathematically minded

The simple theory behind the iid-test is that we have N observations of the same object. If all the values are independent and represent a variable that follows the same distribution (i.e. exhibits the same behaviour), then the probability that the last observation is a record-breaking event (the highest number) is 1/N. It is then easy to estimate the expected number of record-events (E) for a series of length N: E = 1/1 + 1/2 + 1/3 + 1/4 + … + 1/N (the first observation being a ‘record-event’ by definition). It is also easy to estimate the likelihood that the observed number of record-events deviates from E by a given amount (i.e. using an analytical expression for the variance of the number of record-events or so-called ‘Monte-Carlo’ simulations). The probability of seeing new record-events diminishes for an iid variable as the number N increases.
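The expressions above can be tried out numerically. The following is a minimal Python sketch, not the code used in the cited papers; the function names are illustrative. It assumes the standard result that, for iid data without ties, the record indicators are independent with probability 1/n at step n, which gives the variance as the sum of (1/n)(1 - 1/n).

```python
import random

def expected_records(n):
    """Expected number of record-events in an iid series of length n:
    E = 1/1 + 1/2 + ... + 1/n."""
    return sum(1.0 / k for k in range(1, n + 1))

def variance_records(n):
    """Variance of the record count under iid (standard result, assumed here):
    sum over k of (1/k) * (1 - 1/k)."""
    return sum((1.0 / k) * (1.0 - 1.0 / k) for k in range(1, n + 1))

def count_records(series):
    """Count values strictly greater than every earlier value.
    The first value is a record by definition."""
    count = 0
    highest = float("-inf")
    for x in series:
        if x > highest:
            count += 1
            highest = x
    return count

def monte_carlo_mean_records(n, trials=20000, seed=0):
    """Average record count over many synthetic iid series of length n."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        total += count_records([rng.random() for _ in range(n)])
    return total / trials
```

For N = 100 the expected number of records is only about 5.2, and `monte_carlo_mean_records(100)` should land close to `expected_records(100)`. An observed count far above that, measured against `variance_records(100) ** 0.5`, would be hard to reconcile with the iid assumption.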

References

Benestad, R.E. (2004) Record-values, non-stationarity tests and extreme value distributions Global and Planetary Change vol 44, issue 1-4, p.11-26

Benestad, R.E. (2003) How often can we expect a record-event? Climate Research Vol 23, 3-13.

IDAG (2005) Detecting and Attributing External Influences on the Climate System: A Review of Recent Advances, J. Clim., vol 18, 1291-1313, 1 May

Kharin & Zwiers (2005), Estimating Extremes in Transient Climate Change Simulations, J. Clim., 18, 1156-1173

Why weren’t all these records set in 1998, “the warmest year in the last millennium” (according to Mann)?

[Response:New record-breaking events take place as time moves on, and the analysis for the time before 1998 cannot include observations that have ‘not yet happened’. The latest record for the global and annual mean was set in 1998, but the picture may be slightly different when looking at local temperatures and on a monthly basis. -rasmus]

Thank you for a lovely column.

Why is the sequence of records (asterisks) in the lower part of Figure 1 not strictly increasing? Is something other than “maximum value seen so far” being used to define a record event?

Thanks for all the effort you and your colleagues put into this blog.

[Response:Your interpretation “maximum value seen so far” is correct. If you refer to the rate of occurrence, then this depends on the mean trend and the rate of change of the variance. -rasmus]

[Response:The asterisks *are* strictly increasing… it’s an optical illusion – William]

Another way of putting it is that if the weather record that was broken last week was set last year then we’re probably in the midst of a rapid change in climate.

Just to add to the records – the south west of W.A. had its driest July on record (normally the wettest month) which was preceded by the wettest June for some time (although it wasn’t a record). I suspect that the structure of the southern hemisphere climate system is starting to change. By that I mean the series of cells that convey heat from the tropics to the poles.

More pertinently, it worries me that climate scientists have spent so much time ensuring that their predictions are defensible that they may have minimized the risk of dramatic climate change.

In particular, I think that there are signs that severe impacts may be starting to occur in widespread animal and plant communities. It’s worth bearing in mind that living things that can’t migrate only need one year of adverse conditions to go extinct.

The real possibility exists that extinctions may cascade especially where ‘keystone’ species are affected.

It is also worth bearing in mind that (conspiracy theorists notwithstanding) we humans can’t migrate either.

Re#3,

Thanks to another poster here (I don’t think it was you) some time ago, I did some research on the data at your Bureau of Meteorology concerning the “south west of Australia.” I found plenty of contradictory information (especially with regards to variability, as I recall). I would be happy to re-hash when I have some time (privately or otherwise), if necessary. I can also find recent quotes from a Dr. Ian Smith of CSIRO claiming that climate changes in the south-western portion of Western Australia are “most likely due to long-term natural climate variations.”

Would you be so kind as to comment on how such extreme value statistics illuminate a changing mean within cyclical systems, such as NAO or ENSO? Inferences would seem much more difficult.

[Response:It depends on the length of the data series and time resolution. As long as the criterion that the data are independent is met, the iid-test can detect a climate change. A cyclical system sets a kind of ‘beat’ and in order to detect a change, it is best to sample at the frequency of the cycle. A cyclical system would furthermore produce autocorrelation structures, and in the analysis I referred to, it was shown that the autocorrelation was low. The NAO is not really very ‘cyclical’ (despite being called an ‘oscillation’ – it has proven very difficult to predict the NAO, and cycles are easy to forecast). In the former paper, we are also talking about the hottest day in a month in a region where ENSO has little impact. In the latter paper, the monthly means came from stations scattered all over the world, most of them not affected by the NAO. Temperature was subsampled once every 3 months, taking different months from adjacent stations. A visual plot showed when the record-events took place, and it was hence possible to show that the sub-sampling removed clustering in time. It’s really easy to tell whether you have a dependency – just plot the times of the record-events. -rasmus]

Your second graph shows an upward trend line. Another possibility is a somewhat horizontal trend line with a widening cone of readings. That would seem to allow for record lows as well as record highs in temperature, rainfall etc. Incidentally it is quite balmy here in southern mid-winter. The local farmers are convinced of a warming trend, so maybe we are all programmed to run tests using fuzzy criteria.

I would like to clarify your usage of the term stationary particularly as it relates to autocorrelation. The second graph you show is, to my mind, trend stationary. This is particularly so given your comment about low autocorrelation in response to the last comment (#5). Have there been any studies that investigate whether ‘global’ temperature (or similar climate indicator) is trend stationary rather than non-stationary? When I look at any of the graphs of global temperature I am struck by an impression of a very high degree of autocorrelation (indeed, tending towards I(1) behaviour) – particularly given the inflection around the turn of the century that seems inconsistent with a deterministic trend.

[Response:When there is a trend, then the distribution function (probability distribution function, pdf, or frequency distribution if you like) is changing. I use the word ‘stationary’ in the meaning of the pdf. By definition, a variable that does not have a constant pdf is non-iid. The test does not discriminate between a linear or a non-linear trend (I think it is this you refer to with ‘stationary trend’ and ‘non-stationary trend’). Many curves for the global mean have been filtered (which introduces a higher autocorrelation), but if you use unfiltered data, remove the trend and look at month-to-month variations, then you will see that the persistence becomes less important. When we use the iid-test in studying climate change, we want to exclude the dependency between successive values related to aspects not related to climate change (i.e. ENSO, NAO), but want to retain the part that explains long-term changes (trend). -rasmus]

I was somewhat put off when Roger Pielke said that here on the eastern front range in Colorado, during the week where we had +100 degree (fahrenheit) highs for 5 days in a row, the heat did not indicate anything unusual going on. Apparently this had occurred in the late 19th century according to some old temperature records, so we were within some kind of normal pattern: just a 100-year event, I guess. But we set some new temperature records that week and are continuing to set such records on some days even now, a few weeks later.

Climate is the statistics of weather over time, I know. But since the probability of such anomalies is greater as time goes on, I expect people to say so instead of giving some reassuring message to the evening news that “It’s All Good”, nothing unusual going on here.

Climate scientists don’t want to get all entangled with day-to-day weather, I understand this. But, when is this backing off on relating the probability of extreme weather events to climate change going to stop?

[Response:Climate can be regarded as ‘weather statistics’, of which the occurrence of record-breaking events is naturally one subject. The longer the series of observations is, the lower the chance of seeing a new record-breaking event given the process is iid. Even so, they will still happen from time to time, and if there are ‘too many’ record-events, then this is improbable given an iid-process. You can have many parallel (contemporary) observations and the chance of seeing at least one new record amongst a volume of observables may remain high even after some time. But it’s easy to calculate the probability of seeing a number of new records from the binomial law if you know the true degrees of freedom. -rasmus]
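The binomial calculation mentioned in the response can be sketched as follows. This is only an illustration with hypothetical function names. It assumes that the parallel series are truly independent and all of the same length, so that the number of series is the ‘true degrees of freedom’; spatially correlated stations would give fewer effective series.

```python
from math import comb

def prob_at_least_one_record(n_years, n_series):
    """Chance that at least one of n_series independent iid series sets a
    new record in year n_years (each does so with probability 1/n_years)."""
    p = 1.0 / n_years
    return 1.0 - (1.0 - p) ** n_series

def binomial_tail(k, m, p):
    """P(X >= k) for X ~ Binomial(m, p): the chance that k or more of the
    m independent series set a record when each has probability p."""
    return sum(comb(m, j) * p**j * (1.0 - p) ** (m - j) for j in range(k, m + 1))
```

With 100 independent series that are each 100 years long, `prob_at_least_one_record(100, 100)` is still roughly 0.63, so a new record somewhere is unremarkable. A call such as `binomial_tail(5, 100, 0.01)` then gives the chance of five or more simultaneous records under the iid assumption.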

Of course, one has to be wary of the extreme value fallacy.

Cheers, — Jo

[Response:Thanks for the link! It’s important to be cautious and aware of common mistakes. I do like the iid-test because of its simplicity: of a batch of N random rational numbers (eg ordered chronologically), the probability that one particular number (eg the most recent one) has the greatest value is 1/N. The nice thing about it is that you can test it with permutations or create synthetic data and test it (Monte-Carlo simulations). The iid-test makes no assumption about the distribution of the data – the only requirement is that there are no ties. -rasmus]
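The permutation idea in the response can be sketched in a few lines of Python. This is an illustrative sketch with hypothetical function names, not the procedure from the papers. It shuffles the series many times, which destroys any trend while keeping the values, and asks how often a shuffled series has at least as many record-events as the original chronological order.

```python
import random

def count_records(series):
    """Count values strictly greater than every earlier value."""
    count = 0
    highest = float("-inf")
    for x in series:
        if x > highest:
            count += 1
            highest = x
    return count

def permutation_p_value(series, trials=5000, seed=0):
    """Fraction of random re-orderings with at least as many records as the
    series in its original order. A small value suggests the chronological
    order matters, i.e. the data are not iid."""
    rng = random.Random(seed)
    observed = count_records(series)
    shuffled = list(series)
    hits = 0
    for _ in range(trials):
        rng.shuffle(shuffled)
        if count_records(shuffled) >= observed:
            hits += 1
    return hits / trials
```

A steadily rising series such as `list(range(30))` sets a record at every step, which essentially no shuffle reproduces, so the returned fraction is close to zero. No assumption about the distribution of the values is needed, only that there are no ties.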

Rasmus, re your response to my comment #7. By trend stationary I mean this. Thus, I am distinguishing between a deterministic trend (which includes both linear and non-linear trends) and a stochastic trend. I take your research to be establishing the question of whether there is a trend. My question was directed to exploring the nature of that trend (which has profound implications for forecastability).

[Response:Thanks for the clarifications. I think you’re asking whether there might be some kind of ‘time structures’ (types of cycles, etc) present in addition to that of a global-warming trend. A good point! The answer is probably yes (inter-decadal variability, natural external forcings and so on). I’m not sure whether statistical trend models would be sufficient, and in order to examine the ‘residuals’ (the data after the trends have been removed), one really needs to use a fully-fledged climate model with all important forcings and feedback processes accounted for. If the residual is non-stationary, then this will also make the iid-test reject the null-hypothesis (it would be easy to test by applying the iid-test to the residuals). The iid-test does not attribute a behaviour to one specific forcing, but gives the probability of a variable representing independent and identically distributed data. A dependency may influence the test results and therefore must be eliminated (e.g. by sub-sampling) before we can say whether there is an ongoing change in the pdf. It reveals whether the upper tail of the pdf is being ‘stretched’. -rasmus]

Regarding the extreme value fallacy link (provided by Jo):

The awful website to which Jo links speaks informatively about the topic of records in general and then talks about the “myth” of global warming. Rather than asserting this, the author could provide some analysis with data. For example, with global warming, we might predict that record hot events will be more likely than record cold events. A simple sign test could determine if this is true and, since autocorrelations (both spatial and temporal) should act similarly on records at both extremes, I suspect it could be argued that statistical problems would cancel each other out once the test was adjusted for the correct degrees of freedom. More problematic are cases like precipitation and other processes where the prediction is an increase in records at both extremes. In such cases trends in measures for one extreme cannot be used as controls for the evaluation of trends in the other.

Back to the website, if one clicks FAQs at the bottom and goes to the page where the Greenhouse Effect is described one views a reasonable physical description followed by this ridiculous statement: “There are other potential greenhouse gases, such as carbon dioxide and methane, but their atmospheric concentrations are so low that they may be ignored (CO2 at 0.033% and CH4 at 0.0002%).” Better people than me can learn something about extreme events from someone who sees no significance in record-breaking concentrations of CO2. Perhaps CO2 records would be a useful teaching tool for demonstrating the analytical theory.
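The sign test suggested in this comment could look roughly like the sketch below. It is a plain exact two-sided sign test with a hypothetical function name, taking counts of record-hot and record-cold events as input. It assumes the counted events are independent; as the comment notes, the counts would first have to be adjusted to the correct degrees of freedom.

```python
from math import comb

def sign_test_p_value(n_hot, n_cold):
    """Exact two-sided sign test of the hypothesis that a new record is
    equally likely to be a hot record or a cold record."""
    n = n_hot + n_cold
    k = min(n_hot, n_cold)
    # Probability of k or fewer events of the rarer kind under p = 1/2
    tail = sum(comb(n, j) for j in range(0, k + 1)) / 2**n
    return min(1.0, 2.0 * tail)
```

Ten hot records against no cold records gives `sign_test_p_value(10, 0)` equal to 2/1024, about 0.002, whereas an even split gives 1.0. For precipitation, where more records are expected at both extremes, this comparison is not informative, for the reason given in the comment.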

Let me see if I got it right:

(1) With GW we’re not sure of getting much change in overall global average precip, but when it rains it pours … & floods, which also means on the flip side we would expect increasing periods of no precip (aka droughts), since the global average precip is not changing.

(2) The reason the changing climate in the second graph does not show more extreme events, is because they are only being compared to the nearby previous timeframe or the new mean (?), not the x=0 point on the graph, because the interest is in finding trends, not absolutes. Right?

(3) If 2 is correct, then from a layperson’s or farmer’s perspective, we might also be interested in absolute changes, comparing the x=10,000 time to the x=0 time, in which case ALL of the y values, say, between x=0 to x=2000 (even the low extremes) would be higher than ALL of the values closer to x=10,000, and all would be extreme in the comparison. And with many other indicators that GW was happening, incl causing the upward trend in extremes, then we might attribute in this absolute analysis all values to GW, or at least all values would have some GW component.

What about any logical HYPOTHESES/THEORIES re heavier precip events, greater dry periods, and same global average precip. Would it have to do with GW causing more water vapor to be held (hoarded) in the air, then when something triggers it, it just pours it out?

I understand scientists cannot attribute a single extreme event to GW; it may in God-only-knows reality be due to GW, but scientists don’t have the tools to make such a claim. The way I handle this (such as a dangerous hurricane) is to say, “We can only expect worse in the future with GW.” But I’d really like to say, “The most dangerous 5% of that hurricane’s intensity has been scientifically proven to be attributed to GW.” But I guess we can’t say that – at least for now. My thinking (perhaps wrong), is that in the future we may be able to come back to these current floods, droughts, hurricanes, etc and attribute portions of them to GW. I know that 1/2 the heat deaths in Europe in 2003 were attributed to GW.

And as I always mention, as a layperson, I don’t need scientific certainty to understand that the drought in Niger & mudslide in India are at least partly due to GW. I think at this point there may be a preponderance of evidence (which means it is more likely than not (more than 50% sure) that GW has a part in these single events). And my standard is even lower than that; “MIGHT be partly (the worst part) due to GW” is for me a clarion call to reduce GHGs.

[Response:Dear Lynn, the answer to (1) is that I have not done the analysis for historical observations. I have analysed several climate model results and find that under a GW regime we would expect to see more record-breaking events at mid- to high latitudes and actually fewer new records than one would expect for the sub-tropics and where there is large-scale subsidence. There are also indications of more record-breaking events in regions with convection (e.g. ITCZ). This study, though, has only been submitted. (2) There are more record-events (green asterisks) in the lower panel and it is the absolute value that matters. The definition of a record-breaking event is a value greater than any previously recorded (since the record started). There are physical reasons to believe that a GW can result in more heavy precipitation: a surface warming results in a higher rate of evaporation. Again, this is what the (preliminary) model results suggest. – rasmus]

RE response in #12, what is subsidence? I think it means land sinking or settling. And what in GW is causing that? I think I live in a borderline area btw the mid-lat & subtropics, though people here refer to it as subtropical (my latitude is 26.2 N).

[Response:In this context it means the downward branch of the atmospheric Hadley circulation which exists in the sub-tropics to balance the upward mass flux that occurs near the equator. -gavin]

Re#12-“I know that 1/2 the heat deaths in Europe in 2003 were attributed to GW.”

Who did this?!?!? And does this person (or group of people) also attribute lives saved in European winters to GW? I’ve shown before that the typical European winter gets far more weather-attributed deaths than the extreme heat wave of 2003, so it only stands to reason that GW is saving far more lives in Europe than it is taking, right?

“And as I always mention, as a layperson, I don’t need scientific certainty to understand that the drought in Niger & mudslide in India are at least partly due to GW.”

Annual rainfall in India is heavily dependent on ENSO and shows tremendous variability from year-to-year. As the globe has warmed in recent decades, this variability has actually appeared to DECREASE, which you can see graphically here. http://climexp.knmi.nl/plotseries.cgi?someone@somewhere+p+ALLIN+All-India_Rainfall+precipitation+yr0

Looking at monthly data for India, the same is also generally true http://climexp.knmi.nl/plotseries.cgi?someone@somewhere+p+ALLIN+All-India_Rainfall+precipitation+month .

FWIW, the mean rainfall for India in June-Sept (which includes the monsoon season) from 1871-1995 is 85cm…the same as it is from 1871-1950 and 1950-1995 http://tao.atmos.washington.edu/data_sets/india/parthasarathy.html . I went ahead and looked at 1995-2000 at the KNMI Climate Explorer link…range was 79 cm to 87 cm with a mean of 84 cm.

Neither 20th century warming overall nor the warming of recent decades seem to show an effect on India’s rainfall, either taken annually, seasonally, or monthly. But you can dismiss 130 yrs of rainfall data and cry “GW” due to ONE DAY of rain?

As for the typhoon record mentioned in the contribution above…there were 29 Pacific typhoons, just 2 above the annual mean. It’s hard for me to consider one year’s worth of unusual typhoon landfalls in one nation to represent a significant phenomenon. In fact, one might consider a highly increasing landfall count during a roughly average typhoon year overall to indicate less chaotic weather patterns at play. To me, this would contradict the idea of “more extreme, less predictable” climatic events.

[Response:True, the count of one year may not represent significant scientific evidence (despite otherwise severe consequences for those hit by them), and it is the pattern of occurrences over time that will tell us whether there are strange things going on. If there is a trend that continues with increasingly higher typhoon landfalls over Japan, even if there is a near-constant total number of typhoons over the Pacific, this would have significance on many levels. This would suggest a systematic change in the storm tracks. Having said that, the record number of landfalls was ‘only’ 10 and the previous was six, which suggests that either the length of the (official) observations is very short or there have so far not been many record-breaking events. I haven’t dug up the data concerning the landfalls in Japan (many of the Japanese websites are in Japanese, a language that I do not master), so I have not had the chance to make a more thorough analysis of this. -rasmus]

Re #14

Re #12: “I know that 1/2 the heat deaths in Europe in 2003 were attributed to GW.”

Who did this?!?!? And does this person (or group of people) also attribute lives saved in European winters to GW? I’ve shown before that the typical European winter gets far more weather-attributed deaths than the extreme heat wave of 2003, so it only stands to reason that GW is saving far more lives in Europe than it is taking, right?

I think Lynn was referring to Human contribution to the European heatwave of 2003. Peter A. Stott, D. A. Stone & M. R. Allen.

This includes

Using a threshold for mean summer temperature that was exceeded in 2003, but in no other year since the start of the instrumental record in 1851, we estimate it is very likely (confidence level >90%) that human influence has at least doubled the risk of a heatwave exceeding this threshold magnitude.

Michael, didn’t you quote some figures for low deaths in Scandinavia from cold? Doesn’t this show that people are prepared for what they expect? Isn’t it the case that if GW throws some unusual weather, that is what causes deaths?

Regarding the comment about the driest July in south west Western Australia: the previous record dry July was about 100 years ago; we had already had the Jan 1 – July 31 average rainfall by the first week of the month; the local Bureau of Met person stated that August and September have been getting wetter.

I put it all down to my driving a large V8 from Perth to Bridgetown on a regular basis.

A retiring meteorologist once remarked that a month without a new record would be a record!

[Response:True, with some qualifications: depending on how long the observational series are and how many independent parallel observations you make, there is an expected number of record-events according to the iid-test. Numbers consistently significantly lower or higher point to a non-iid process. Furthermore, as the observational series becomes longer, the probability of seeing new record-events diminishes and the expected number of new records gradually declines. -rasmus]

Without suggesting that the studies at the centre of this posting are at fault – there is a tendency to generate new ‘records’ with alarming regularity.

It almost seems to be a product of the human condition – we are always looking for something exceptional in recent events and, if you look hard enough, you can find it. You have to work very explicitly to overcome that inherent bias.

I particularly like sporting records – “that was the highest fourth wicket partnership between left- and right-handed batsmen on the second day of a Test at Lord’s between Australia and England” – almost as good as “the driest July in south-west Western Australia”

[Response:You are right, and that was the original motivation of my research into this – I recalled frequent headlines with new record-breaking events and wondered if this was normal. Now, I know that the iid-test can give some indication about what we would expect if the variable we observe is statistically stationary. -rasmus]

Re #15: The expectation is not for more frequency, but for more strength. The total number of typhoons might actually go up a bit because of the promotion of some tropical storms to typhoons, but of course the distinction between these classes of cyclone is arbitrary. The important issue is the total energy involved. Regarding your last point, I’m not aware of any evidence for such an effect as regards North Atlantic cyclones.

[Response:Thanks! I think we will soon have a new post dealing with this. Stay tuned… -rasmus]

A recent study done by the Australian Bureau of Meteorology (don’t have the link handy, but can post it later if people are interested) showed that Australian high temperature records were being broken at above the rate one would statistically expect for stable temperature, while low temperature records were being broken at below the rate one would expect.

Highly consistent with warming temperatures. The authors themselves said it would be more interesting to look at monthly records rather than daily ones, and they were planning to do this next. Precipitation is much more difficult, and I am not sure if they had plans to move on that. I don’t think the paper was published in a peer reviewed journal, but it was presented at a large conference of meteorologists, so if there were any flaws one would expect it to have been picked to pieces.

Re #19 by John S:

That’s precisely why I was jabbering on about the pseudo-control of record low temperatures in #11. As we are now putting more measures into databases and have much greater ability to search for and identify new records, we may need to control for this so that new records say more about weather/climate than they do about our abilities.

On the other hand, if you think of some measure for which there should be a reliable long-term database, this problem should disappear (as suggested in the original contribution). Take your example of moisture in July in south-west Western Australia. Let’s say that this year’s dry July is noticed by someone interested in the statistical method described. He/She can then look at the history of records for dryness in July in Western Australia. Contrast these two differing possibilities:

(i) Immediately after the first measure was taken, a high frequency of records in both dry and wet July conditions gradually gives way to a lower frequency of record dry or wet July conditions that could be expected due to the increasing number of years in the comparison until 2005 which was a dry record.

(ii) The same as (i) except that over the past two decades (1984-2004, say) the rate of record-setting for July conditions is higher than for the two decades prior to that (as opposed to the expectation that records should be harder to attain).

These two scenarios should be informative of a climate change, and very little explicit effort is required to overcome any inherent bias. The only requirement is that all such investigations are recorded [e.g., no publication bias toward scenario (i) or (ii)] so that meta-analyses or corrections for multiple tests [e.g., sequential Bonferroni] are not confounded.
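The "records should be harder to attain" expectation in (ii) can be quantified under the iid assumption: since observation k is a record high with probability 1/k, the expected number of new record highs within any window of years is the sum of 1/k over that window. A minimal sketch, assuming a hypothetical 100-year series and counting record highs only (the function name is illustrative):

```python
def expected_records_in_window(first, last):
    """Expected number of new record highs among observations first..last
    (1-based positions in an iid series); observation k is a record
    with probability 1/k."""
    return sum(1.0 / k for k in range(first, last + 1))


# Hypothetical 100-year series: compare the last two decades
# with the two decades before them.
recent = expected_records_in_window(81, 100)
earlier = expected_records_in_window(61, 80)
print(recent, earlier)
```

For these assumed numbers the expectation is roughly 0.22 record highs in the last twenty years against roughly 0.29 in the twenty before, so observing more records in the later window than in the earlier one runs against the iid expectation.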

I have a harder time explaining to myself how this would work spatially rather than temporally. In that case, and in the temporal case for that matter, I would think that somehow looking at trends in variance (continuous data) would be more powerful than converting data into rank form or nominal form (“record” or “non-record”). Perhaps Rasmus could explain. Is it because of the more intuitive appeal of results that you have chosen this method?

[Response: I think the analysis probably works best in the temporal case. It is not clear how we could apply this test in a spatial dimension, since we do not expect temperatures to be iid with respect to geographic location. The spatial aspect comes in by aggregating parallel series from different locations. This has to be done with care, since opposite trends in different series will appear consistent with the null-hypothesis. The iid-test is an alternative to trends in variance. -rasmus]

Re #14 & #16:

People adapt (or are genetically predisposed) to average climate/temperatures in their own neighbourhood. A study in Europe by Keatinge et al. (confirmed by a similar one in the USA) found that mortality is lowest in a small (3 °C) band, which is 14.3-17.3 °C for north Finland, but 22.7-25.7 °C for Athens. Above these temperature bands, mortality increases, but below this band, mortality increases much faster: there are roughly 10 times more cold-related deaths than heat-related deaths.

Thus an increase of average temperature, due to global warming (which has most effect in winter), will reduce average mortality, not increase it…

Re #16. Chris, there was also ‘Hot news from summer 2003’ by Christoph Schar & Gerd Jendritzky, Nature, 2 December 2004. It included a startling graph of mortality rates in one region of Germany that year. The spike during the heatwave is stark and obvious.

RE #21

I couldn’t find the study you mentioned but I don’t think you need one if you look at these maps and graphs from the Aussie BOM. The raw data is available if someone wants to try it out.

Re #16: Yes, I believe I did report low numbers for at least one nation in Scandinavia (if not the region as a whole). However, the numbers I presented for annual deaths attributed to cold weather for England and Wales combined regularly approach those attributed Europe-wide for the 2003 summer heat wave. I believe I provided a figure someone had come up with which correlated the number of additional deaths to be expected for a one degree drop in avg winter temps, and it stands to reason one could easily reverse that and determine how many lives were saved annually thanks to warmer temps.

Thank you for the link to the article. The question I have with it is that I understand that much of the 2003 heat wave resulted from very unusual pressure system activities. I am not certain whether the Hadley Centre model they used accounts for this. I would like to see their model results of “natural forcing” coupled with these pressure patterns. One could, of course, argue that these anomalous pressure patterns were the result of human activities, but that would be another paper, I guess!

Re #21: “Highly consistent with warming temperatures.”

I don’t think anyone disputes that temperatures have warmed since “record keeping” began.

Above link more proof that cloud dynamics have ELECTRICAL forcing… [edited]

[Response:Please try to stay at least vaguely on topic – William]

The period over which figures are looked at is obviously important. The maximum temperature on August 3 at one particular place is going to be far more variable than the average maximum August temperatures etc. But as periods get larger they don’t just become statistically more uniform – consider what happens when a period starts to encompass a seasonal change.

Record high temperatures might be an occurrence before the monsoon, but once it’s started the only records likely to be broken are rainfall. Temperature under cloud cover doesn’t vary greatly. So a period (of three months, say) that includes the monsoon will show average temperatures that are dampened by the presence of the monsoon and I think I’m correct in guessing that this would show up as an anomaly within your iid null hypothesis. Of course, the timing of the monsoon may also change!

What I’m trying to get at is that, although the problem with records is clearly the arbitrarily large number of degrees of freedom that they invoke, these degrees of freedom can be meaningfully reduced, and as that happens information (about events local in time and space) will disappear. The records of annual average global temperature represent the extreme but also carry weight because all other local information has been lost.

Monthly averages to me make the most sense from a time point of view because they are too short for seasonal effects and long enough to reduce noise.

As far as rainfall is concerned – one way of creating a figure similar to temperature (with minima and maxima) is to do what was done by hand in 1948 for most Australian rainfall stations. A meteorologist (whose name escapes me) calculated the monthly average for all the records, then took the difference between the first month’s actual rain and the average as the first point in a time series. The second point was the same difference for the next month, added to or subtracted from the previous month’s value. And so on.

He then had a graph of the cumulative deficit or surplus above (or below) the monthly average. This clearly highlighted dry and wet spells extending beyond single seasons. In naturally dry months the graph stays below if there is a preceding rainfall deficit. This enabled one to get a clear view of the length of dry periods.
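The hand calculation described above amounts to a running sum of departures from the monthly mean. Here is a minimal Python sketch, assuming that "monthly average" means a separate long-term mean for each calendar month and that the record starts in January; both are my assumptions rather than details given in the comment, and the function name is illustrative.

```python
def cumulative_departure(monthly_rain):
    """Running sum of (actual rainfall - long-term mean for that calendar month).

    monthly_rain: list of monthly totals in chronological order,
    assumed to start in January.
    """
    sums = [0.0] * 12
    counts = [0] * 12
    for i, rain in enumerate(monthly_rain):
        sums[i % 12] += rain
        counts[i % 12] += 1
    # Long-term mean for each calendar month (0.0 if a month never occurs).
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]

    curve = []
    running = 0.0
    for i, rain in enumerate(monthly_rain):
        running += rain - means[i % 12]
        curve.append(running)
    return curve


if __name__ == "__main__":
    # Toy data: one uniformly dry year followed by one uniformly wet year.
    rain = [10.0] * 12 + [30.0] * 12
    print(cumulative_departure(rain))
```

In the toy data the curve falls steadily through the dry year and climbs back to zero through the wet one; on real station data, long stretches where the curve keeps falling would correspond to the multi-season dry spells described above.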

In a rough analysis of the above data I was able to identify that most short term droughts in coastal Australia were 15 months in length. The inadequacy of relying upon 12 month intervals for rainfall records is clearly problematic.

Interestingly I was able to identify 4 distinct droughts of 7 years duration although this was nowhere near statistically significant given the limit of the records (most stations had between 50 and 100 years of records). The east coast drought in Australia is currently in its seventh year.

The comments regarding the narrow range of temperatures and the importance of habituation influencing European weather related deaths highlight the point I was trying to make regarding the unknown potential for climate change to cause a cascading effect upon biological systems including our own.

[Response: The iid-test ought to be applied to, say, one fixed day of the year, e.g. July-01 of each year. Then influences from, say, a Monsoon will constitute random noise, unless the Monsoon itself changes systematically. The iid-test becomes more powerful if you apply it to a set of parallel independent series, e.g. January 1st, January 6th, January 11th, and so on. The reason for not using every day is to reduce the influence of dependencies (day-to-day correlations). -rasmus]
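The sub-sampling described in the response can be sketched as follows in Python: take every fifth calendar day, build one series per chosen day running across the years, and compare the total number of record highs with the iid expectation. The data layout (a dict mapping year to a list of daily values) and the function names are assumptions made for illustration, not the interface of the iid.test package.

```python
def parallel_series(daily_by_year, step=5):
    """One series per selected day-of-year, each running across the years.

    daily_by_year: dict mapping year -> list of daily values (same day order
    every year). Days 0, step, 2*step, ... are kept to limit day-to-day
    correlation between the parallel series.
    """
    years = sorted(daily_by_year)
    n_days = min(len(daily_by_year[y]) for y in years)
    return [[daily_by_year[y][d] for y in years] for d in range(0, n_days, step)]


def count_records(series):
    """Count new record highs; the first value always counts as a record."""
    count, best = 0, float("-inf")
    for x in series:
        if x > best:
            count += 1
            best = x
    return count


def total_records(series_list):
    """Observed record highs summed over all parallel series."""
    return sum(count_records(s) for s in series_list)


def expected_total_records(series_list):
    """iid expectation: each series of length n contributes 1 + 1/2 + ... + 1/n."""
    return sum(sum(1.0 / k for k in range(1, len(s) + 1)) for s in series_list)
```

A usage pattern would be to call `parallel_series` on the station data and then compare `total_records` with `expected_total_records`; judging whether the difference is significant needs the variance as well, which this sketch leaves out.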

What I’d like to know is what would be the likelihood (best guesstimate), say, of the Venezuela deluge and mudslide of 1999 being partly due to GW. I understand that such events may have happened over 100 years ago, but they would have not been due to AGW in that era, because it wasn’t happening then. Now that we are pretty sure AGW is happening, it might be reasonable to at least suspect that AGW might be partly involved in extreme weather events that are predicted to increase, according to the models, even if scientists cannot prove it for individual events beyond a reasonable doubt. So which might be the best guesstimate for the 1999 Venezuela deluge?

A. AGW most assuredly played at least a small part in the Venezuela deluge (>90% sure).

B. It is somewhat sure (but not certain) or more likely than not that AGW played at least a small part in the Venezuela deluge (>50% sure).

C. It is possible that AGW played at least a small part in the Venezuela deluge, but we cannot be sure (> 0%, but <50% sure).

D. It is impossible that AGW played any part in the deluge (e.g., because … that isn’t even predicted for that area in the models… or ??)

Maybe in the future science will advance to the point (beyond our wildest imaginations) that it can give a more accurate assessment of AGW’s role (or lack thereof) in the 1999 Venezuela deluge. And just think because of AGW (as with war) our science would have advanced so much.

RE #14, I have no problem crying “GW.” I’m not a scientist trying to protect my reputation, but a person concerned about others. In fact I have been crying GW for 15 years & attributing all sorts of harms to AGW (as predicted by climate scientists & their models to happen or increase over time). I say, “Well, that unusual flood looks like it might be due to GW” (and my husband tells me to shut up, though he believes me), and I haven’t seen a dangerous stampede to reduce GHGs yet. People just think I’m crazy. But even if I’m wrong and miraculously people do respond by reducing GHGs, they will save money & help the economy & solve other problems; but if the contrarians are wrong and they persuade people not to reduce GHGs (which seems to me what is happening), then we (at least our progeny & other creatures) will have hell to pay.

Lynn, re #27:

Rainfall in Latin America is heavily tied to the Southern Oscillation (El Nino – La Nina) conditions. These show a large(r) variation in recent decades, which may – or not – be tied to (A)GW. Most models are much too coarse to predict trends on regional scale (and certainly can’t predict extreme events), thus there is no clear answer to your question.

(A)GW can not be excluded, the possibility that it is indirectly involved can not be excluded either. Thus the nearest scientific answer to your question is C.

See further the IPCC synthesis

Btw, you are not crazy by reducing your own use of fossil fuels. Even if CO2 is not a big problem, it will reduce pollutants and our dependence on not-so-stable countries. But for a lot of energy-dependent industries, there is little way to reduce energy use, as most measures were taken a long time ago (after the first oil crisis), and alternatives (still) are too expensive…

# 29

Caracas 1999 was due to dam changes in the region, again, impacting the conductivity of the region’s oceans and its ability to support a surface low sustaining its electrical conductivity over time despite roiling and depressurization depleting ocean carbonation. The Bates et al research (Nature) on Hurricane Felix indicates that it takes about 2 weeks for the oceans to ‘recharge’ after a storm. Obviously, if there is more CO2 from human activity, the time it takes to ‘recharge’ is reduced and the equilibrium partial pressure would be greater, allowing for more gas to the surface to run back to ion form and increase conductivity. But IMHO in following the dam constructions and flow and sedimentation delays, it was pretty clear that for several years the Caribbean was impacted by the changes to the Orinoco from huge hydroelectric projects in the region.

“In fact I have been crying GW for 15 years & attributing all sorts of harms to AGW (as predicted by climate scientists & their models to happen or increase over time).”

Lynn,

I commend you on your energy and water-conservation methods (although I wonder if not watering your lawn reduces the carbon sink in your yard by restricting growth and whether that should take priority over water conservation). But as for the predictions and models…

We can look a full century into the future of the US climate using some top-flight climate models http://www.usgcrp.gov/usgcrp/Library/nationalassessment/overviewlooking.htm from 2000. There are two models used for this report – the Canadian Centre model and the Hadley Centre model. What do they predict? Warming, of course! Note that the Canadian model projects more warming and that the two conflict with regard to winter warming (Canadian shows max warming in the midwest while Hadley model shows min warming there). Ok, fine…let’s move to precipitation. They agree on increased precipitation in Cali and Nevada – with the specific note that “some other models do not simulate these increases.” So even their agreement here may conflict with the results of other models. The Canadian model projects decreases in precipitation across the eastern half of the US while the Hadley model projects increases. Hmmm…so which is it that climate scientists and their models predict for the eastern half of the US? If these areas are turned into virtual swamps in 100 yrs, will someone be able to say that it was predicted to happen due to AGW? Or what if they instead become virtual deserts – can the same claim be made? Last but not least, we have the combination of rainfall and temperature to produce soil moisture changes in the models. The level of disagreement between the models is quite amazing, isn’t it? So which is it that the climate scientists and their models predict for, say, Oklahoma City? The northwest corner of California? South-Central Texas? The Rust Belt? I think the models have strongly conflicting predictions over a greater area than that over which they somewhat agree. So what exactly can the climate scientists and their models predict?

The latest onslaught of heat and precipitation records really gets very little attention from the world press unless someone dies or gets seriously injured. There has been, in my opinion, a trend, not really appreciated by most, which is stable extreme weather: one can name many zones of the world having some extreme facet of continuous drought, rain, heat and mostly changes in dominant wind directions for extended periods of time, which is in itself unusual. I suggest a slowing of Hadley cell circulation, caused by weaker differential temperatures between major air masses; this would cause all the phenomena cited. It is known that there is a pronounced difference in Hadley cell circulation between winter and summer; heat spreading more evenly would mean greater water vapor density, exacerbating AGW effects a great deal more. It would be nice to see if there are active Hadley cell models on the web; in the meantime, observing what Hadley, Ferrel and Polar cells are doing must be done through met models, with a particular eye on the meandering jet streams…

# ref 32

Mike

I have to say that you seem to have talked yourself into admitting that the scientists are predicting ‘climate disruption’!?!

I would have thought that there would have been many studies of just this question. Why haven’t there been: is it lack of long term data, or is the work not sexy enough?

Given the data that exists, do we have any idea how long it would take to get a reasonable confidence level that extreme events are or are not increasing? Do we have enough data to do the analysis? To be convincing, many locations would have to be analyzed.

RE Comment #31, Mike Doran wrote:

“Caracas 1999 was due to dam changes in the region, again, impacting the conductivity of the region’s oceans and its ability to support a surface low sustaining its electrical conductivity over time despite roiling and depressurization depleting ocean carbonation.”

and “But IMHO in following the dam constructions and flow and sediment delays, it was pretty clear that for several years the Caribbean was impacted by the changes to the Orinoco from huge hydroelectric projects in the region.”

I would be interested in some detailed citations supporting these assertions, since they don’t seem very likely to me, for the following reasons:

1) My impression is that it is pretty well established that El Niños cause low rainfall in Venezuela and La Niñas cause heavy rainfall there.

2) The Guri project on the Caroni is one of the world’s largest, but the reservoir started filling in the late 60’s or early 70’s and the project was fully operational in 1978, 21 years before the 1999 floods and mudslides. The Macagua dam is a run of the river project near the confluence of the Caroni and the Orinoco. Caruachi, between Macagua and Guri, is partly operational. The two reservoirs below Guri will not have much impact on flow and sediment. What sediment there is is being trapped in the Guri reservoir, and has been for about 25 years. More dams are planned on the Caroni, both above and below Guri, and will equally have little incremental impact on sediment entering the Orinoco and less impact on sediment entering the North Atlantic, because …

3) The Caroni and the other right-bank tributaries of the Orinoco are blackwater rivers with a load of dissolved organic carbon (tinting them), but very little suspended material or electrolytes. Almost all of the sediment load in the Orinoco is dumped in the river by the left-bank tributaries, most prominently the Apure, which arise in the Andes and the northern mountains of Venezuela and drain the huge Venezuelan llanos. There has been little or no dam-building on those rivers and no significant reduction in their sediment load or in the overall Orinoco sediment and electrolyte load. See (in English) and .

4) From experience of living in Venezuela in the mid-60s and many visits to Caracas, I will assert that the slopes of the Pico Avila chain between the Valley of Caracas and the Caribbean were on their way to being a mudslide waiting to happen, with settlement moving up the slopes and deforestation.

5) I find nothing in the cited Bates et al. paper to support the idea that formation and sustenance of low pressure systems over the ocean depends on electrical conductivity. Do you have some sources for that belief?

6) My answers to Lynn’s question are A or B.

Best regards.

Jim Dukelow

I have now added a link to the papers cited: they can be found on

http://regclim.met.no/results_iii_artref.html

(one of the papers has not been placed there as a pdf file, but it will hopefully be available within a few days).

I have also submitted an R-package called iid.test to CRAN: http://cran.r-project.org under contributed packages. It’s not yet posted there, but should hopefully be available soon for people who want to play around with it.

-rasmus

An article which seems to make Lynn’s comments very “a propos”:

http://www.sciencedaily.com/releases/2005/08/050804050702.htm

RE #32, you’re right about not watering & letting the grass die back, thus not allowing it to be a carbon sink (though it’s unclear if there is a net reduction in GHGs if you water your lawn).

However, there’s a solution. Greywater. That is, rigging your bath & washing machine water to be used for your garden (which also saves on your water bill). Water from the kitchen sink is referred to as black water (because it’s a bit dirtier). So you can have a picture perfect garden AND reduce your water bill & water depletion & GW all at once. Who said you can’t have your cake and eat it too?

RE #30, I think you may be wrong re industries not being able to reduce GHGs much. Amory Lovins is continually helping industries to reduce substantially their GHGs in cost-effective/money saving ways (& finding examples of such). He cites a fairly recent example of a business cutting its energy (used for production) by 90% by what he refers to as “tunnelling through.” I would guesstimate that industries on the whole could cut their GHGs cost-effectively by at least 1/4 or 1/3. They just need to seriously put their mind to it, or call in experts.

Re Comment #36

I managed to confuse the software that manages the process of posting comments by citing in the last sentence of paragraph 3 of my Comment #36 a couple of URLs, enclosed in angle brackets. The software tried to understand them as one of the small collection of HTML commands it understands, failed to do so, and discarded the offending text. The truncated sentence should read:

“See http://www.edelca.com.ve/descargas/cifras_ingles_2003.pdf (in English) and http://www.icsu-scope.org/downloadpubs/scope42/chapter05.html ”

Jim Dukelow

Have you examined any dendro or other proxy records with this statistic?

It would be interesting to know whether there is more record-breaking now than in the past (for example at the onsets of the MWP and LIA in Europe).

[Response: Sorry, I haven’t had the chance to look at dendro or proxy records. Hopefully, someone will… –rasmus]

Robin McKie reports from Svalbard where scientists have been sunbathing in record temperatures of almost 20C

Sunday July 17, 2005

The Observer

These are unusual times for Ny-Alesund, the world’s most northerly community. Perched high above the Arctic Circle, on Svalbard, normally a place gripped by shrieking winds and blizzards, it was caught in a heatwave a few days ago.

Temperatures soared to the highest ever recorded here, an extraordinary 19.6C, a full degree-and-a-half above the previous record. Researchers lolled in T-shirts and soaked up the sun: a high life in the high Arctic.

It was an extraordinary vision, for this huddle of multi-coloured wooden huts – a community of different Arctic stations run by various countries and perched at the edge of a remote, glacier-rimmed fjord – is only 600 miles from the North Pole.

That they could bask in the sun merely confirms what these scientists have long suspected: that Earth’s high latitudes are warming dangerously thanks to man-made climate change, with temperatures rising at twice the global average. Clearly, Ny-Alesund has much to tell us.

For a start, this bleakly beautiful landscape is changing. Twenty years ago, giant icy fingers of glaciers spread across its fjords, including the Kungsfjorden where Ny-Alesund is perched.

‘When I first came here, 20 years ago, the Kronebreen and Kongsvegen glaciers swept round either side of the Colletthogda peak at the end of the fjord,’ said Nick Cox, who runs the UK’s Arctic Research Station, one of several different national outposts at Ny-Alesund.

‘Today they have retreated so far the peak will soon become completely isolated from ice. Similarly, the Blomstrand peninsula opposite us is now an island. Not long ago a glacier used to link it with coastline.’

You get a measure of these changes from the old pictures in Ny-Alesund’s tiny museum, dedicated to the miners who first created this little community and dug in blizzards, winters of total darkness and bitter cold until 1962, when explosions wrecked the mine, killing 22 people. The landscape then was filled with bloated glaciers. Today they look stunted and puny.

This does not mean all its glaciers are losing ice, of course. Sometimes it builds up at their summits but is lost at their snouts, and this can be misleading, as the climate sceptics claim. Recent research at Ny-Alesund indicates this idea is simply wrong, however.

Gareth Rees and Neil Arnold of Cambridge University are using a laser measuring instrument called Lidar, flown on a Dornier 228 aircraft, to measure in pinpoint detail the topography of glaciers. An early survey of the Midre-Lovenbreen glacier at Ny-Alesund ‘shows there has been considerable loss of [the] glacier’s ice mass in recent years,’ said Arnold.

‘Natural climatic changes are no doubt involved, but there is no doubt in my mind that man-made changes have also played a major role.’

This turns out to be an almost universal refrain of scientists working here. They know the behaviour of these great blue rivers of ice can tell us much about the health of our planet. As a result, droves of young UK researchers, armed with water gauges, GPS satellite positioning receivers and rifles (to ward off predatory polar bears) head off from Ny-Alesund to study the local glaciers and gauge how man-made global warming affects them.

It is exhausting work that requires lengthy periods of immersion in glacier melt-water. The outcome of these labours is clear, however. ‘Glaciers are shrinking all over Svalbard,’ said Tristram Irvine-Fynn of Sheffield University. ‘Ice loss is about 4.5 cubic kilometres of ice a year.’

The Arctic is melting, in other words. And soon the rest of the world will be affected, as Phil Porter of the University of Hertfordshire, another UK researcher on field studies in Ny-Alesund, points out: ‘I have studied glaciers across the world, for example in the Himalayas, and we are seeing the same thing there. The consequences for that region are more serious, however. Rivers rise and wash away farming land.’

Humanity clearly needs to take careful notice of what is happening to this beautiful, desolate area. ‘This place is important because it is so near the pole, far closer than most Antarctic bases are to the South Pole,’ said Trud Sveno, head of the Norwegian Polar Institute here.

‘We have found that not only are glaciers retreating dramatically, but the extent of the pack ice that used to stretch across the sea from here to the pole is receding. It is now at an absolute minimum since records began.’

Nor is it hard to pinpoint the culprit. On Mount Zeppelin, which overlooks Ny-Alesund, Swedish and Norwegian researchers have built one of the most sensitive air-monitoring laboratories in the world, part of a network of stations that constantly test our atmosphere.

Instruments in the little station – reached by an antiquated two-person cable car that can swing alarmingly in the whistling, polar wind – suck in air and measure its levels of carbon dioxide, methane, other pollutants and gases. It is a set-up of stunning sensitivity. Light up a cigarette at the mountain’s base and the station’s instruments will detect your exhalations, it is claimed.

Carbon dioxide, produced by cars and factories across the globe, is the real interest here, however. Over the past 15 years, not only have levels continued to rise from around 350 to 380 parts per million (ppm), but this rise is now accelerating.

In 1990 this key cause of global warming was rising at a rate of 1 ppm; by 1998 it was increasing by 2ppm; and by 2003 instruments at Mount Zeppelin showed it was growing by 3ppm. ‘Never before has carbon dioxide increased at the rate it does now,’ adds Sveno.

Such research reveals the importance of Svalbard to Europe and the rest of the world, and explains why there is a constant pilgrimage of hydrologists, ornithologists, marine biologists, glaciologists, plant experts and other researchers to the station. The work is hard, although life here has improved a lot since coal was mined at Ny-Alesund.

Buildings are warm and snug, radios and GPS receivers ensure safety in bad weather, and fresh food is shipped in weekly. The base is also kept under careful ecological control. Barnacle geese and their goslings, fiercely territorial Arctic terns, reindeer and Arctic foxes are allowed to wander between the station’s huts.

Apart from the ferocious, unpredictable weather, it is all quite idyllic and very different from Ny-Alesund’s former days. Miners had none of this, nor did the great Norwegian explorer Roald Amundsen.

After beating Scott to the South Pole, Amundsen used Ny-Alesund as his base for his next great triumph, setting sail by zeppelin to cross the North Pole to Alaska in 1926. The mooring gantry for his ship still towers over the settlement as well as a statue dedicated to the great man.

Teams of young researchers now march past these monuments every day, heading out to work on the Arctic landscape he helped to open up and which is now sending humanity a grim warning about our planet.

Re#42,

I don’t imagine the proxies have the temperature sensitivity nor the data accuracy required to do such an analysis with a good degree of confidence. I think you’d have a lot of missed records and false records due to the wide range of error.

[Response: Missing records can lead to an under-count, but not an over-count. The iid-test can be run in chronological and reversed chronological order, and if the former leads to an over-count while the latter leads to an under-count, then the process appears inconsistent with being iid and there is not a strong effect from missing data, if there were any. Often, we would choose only high-quality series with no holes. As for noise, as long as the errors are random and do not undergo a systematic change – i.e. they are also iid – this does not affect the analysis given a large number of data. It is possible to use many parallel series to increase the sample size, and hence effects from errors will tend to cancel. You can of course test these in numerical exercises with synthetic data. -rasmus]
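The forward/backward idea in the response can be tried on synthetic data along these lines. This is only a rough Python sketch: the trend size, noise level, series length and function names are arbitrary choices of mine, and it is not the iid.test R package.

```python
import random


def count_records(series):
    """Count new record highs; the first value always counts as a record."""
    count, best = 0, float("-inf")
    for x in series:
        if x > best:
            count += 1
            best = x
    return count


def forward_backward(series):
    """Record-high counts in chronological and in reversed order."""
    return count_records(series), count_records(list(reversed(series)))


def synthetic(n_years, trend, rng):
    """Linear trend plus unit-variance Gaussian noise (assumed test data)."""
    return [trend * t + rng.gauss(0.0, 1.0) for t in range(n_years)]


def mean_counts(n_series=200, n_years=50, trend=0.1, seed=1):
    """Mean forward and backward record counts over many parallel series."""
    rng = random.Random(seed)
    fwd_total = 0
    bwd_total = 0
    for _ in range(n_series):
        fwd, bwd = forward_backward(synthetic(n_years, trend, rng))
        fwd_total += fwd
        bwd_total += bwd
    return fwd_total / n_series, bwd_total / n_series


if __name__ == "__main__":
    expected = sum(1.0 / k for k in range(1, 51))  # iid expectation, n = 50
    print("iid expectation:", expected)
    print("with trend:     ", mean_counts(trend=0.1))
    print("without trend:  ", mean_counts(trend=0.0))
```

With an upward trend the forward count should sit above the iid expectation and the backward count below it, while with no trend both should fall close to it. Random gaps could be mimicked by dropping values from each series before counting.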

#36 Jim

http://groups.yahoo.com/group/methanehydrateclub/message/2481

[Response:Please let the discussion be on this site. -rasmus]

RE#44 “I don’t imagine the proxies have the temperature sensitivity nor the data accuracy required (Snip)… I think you’d have a lot of missed records and false records due to the wide range of error.”

In my opinion, if this matters to the final conclusions, it will be exposed by legitimate scientists in the scientific arena in legitimate scientific journals over time. Even the global warming skeptic Richard Lindzen gets published in Geophysical Research Letters (although his evidence does not stand up). He and others would have a field day if there were holes in this conclusion.

Re #44, #42, #46 etc.

The non-parametric iid test described here is very simple, which is what gives it a lot of its power (although not statistical ‘power’). However, that simplicity means you need to apply a lot of care to the interpretation.

For example, I would be surprised if this test did not find that the ring widths from practically any tree were not iid. Aha! Some people might exclaim – proof of global warming. No, proof that trees grow at different rates during their life. Or, proof that rainfall varied over the life of the tree. Or, proof that CO2 concentrations have changed over the life of the tree. A simple test like this can’t discriminate between all the possible reasons for a change in the distribution of tree-ring widths. Thus, this test will not provide useful results if applied to those sorts of proxies – it’s just not well designed for the task. It will be useful for examining series that you have a priori reasons for expecting to be constant (or in which change is significant of itself). But if you expect that whatever you are examining will have reasons for changing over time that are ‘mundane’ then a positive result from this test will not illuminate you any further.

[Response:You’re correct in that the test is simple and only answers the question of whether the data are likely to be iid. Thus, it is very important to have physical insight and to complement it with further tests. It does not do the attribution-bit and does not say why the data would be non-iid. We can merely say that non-iid is consistent with a climate change in this exercise. -rasmus]
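The point that a non-iid result does not say why the data are non-iid can be illustrated with synthetic data. In the sketch below, which is only an illustration and not part of the published analysis, two made-up processes are compared: one with a rising mean and one with a constant mean but a growing spread. The trend of 0.03 and the spread growth of 0.05 per time step are arbitrary assumptions. Both produce more record-highs than iid data would, so the record-high count alone cannot tell them apart; counting record-lows as well is one example of a complementary check.

```python
import random


def count_records(series):
    """Count record-high values; the first value always counts as a record."""
    count = 0
    best = float("-inf")
    for x in series:
        if x > best:
            count += 1
            best = x
    return count


def mean_high_low(make_series, n_series=2000, seed=1):
    """Average number of record-highs and record-lows over many series."""
    rng = random.Random(seed)
    highs = lows = 0
    for _ in range(n_series):
        s = make_series(rng)
        highs += count_records(s)
        # Record-lows of s are the record-highs of the sign-flipped series.
        lows += count_records([-x for x in s])
    return highs / n_series, lows / n_series


N = 100  # assumed series length
EXPECTED = sum(1.0 / k for k in range(1, N + 1))  # iid expectation


def rising_mean(rng):
    # Assumed: mean rises by 0.03 per step, spread stays constant.
    return [rng.gauss(0.03 * t, 1.0) for t in range(N)]


def growing_spread(rng):
    # Assumed: mean stays constant, standard deviation grows from 1 to about 6.
    return [rng.gauss(0.0, 1.0 + 0.05 * t) for t in range(N)]


rm_high, rm_low = mean_high_low(rising_mean)
gs_high, gs_low = mean_high_low(growing_spread)

print("expected under iid:", round(EXPECTED, 2))
print("rising mean    highs/lows:", round(rm_high, 2), round(rm_low, 2))
print("growing spread highs/lows:", round(gs_high, 2), round(gs_low, 2))
```

In this toy setting a rising mean gives an excess of record-highs together with a deficit of record-lows, whereas a growing spread gives an excess of both. Real series can of course change in more than one way at once, which is why physical insight is still needed.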

Thanks for the interesting post, your time and effort. The balanced and informative contributions of this site are invaluable.

“one would expect to see more new record-breaking events in a changing climate: when the mean temperature level rises new temperatures will surpass past record-highs.”

Here are the monthly record high and low temperatures recorded in Denmark over the last 125 years:

http://www.dmi.dk/dmi/index/danmark/oversigter/meteorologiske_ekstremer_i_danmark.htm

The record highs seem, by eye, to cluster around post-midcentury years, while the record lows around the pre-midcentury years. Does this mean that these statistics are a clear manifestation of the warming trend which has been observed:

http://www.dmi.dk/dmi/tr04-02.pdf (page 2)

and which was illustrated in Table 2 Fig 1? In other words, can I confidently quote these record statistics as being the result of a warming climate?

“The results for such a test on monthly absolute minimum/maximum temperatures in the Nordic countries and monthly mean temperatures worldwide are inconsistent with what we would see under a stable climate.”

Are these results available?

[Response:The papers are available on http://regclim.met.no/results_iii_artref.html (one of them is there now, and the other will hopefully soon be available from this site). The original data is available from http://www.smhi.se/hfa_coord/nordklim/. -rasmus]

where did this one go?

https://www.realclimate.org/index.php?p=178

[Response: Was accidentally deleted due to a technical glitch. We’ve restored it now, though some comments were lost. Our apologies to our readers! ]

Can you weigh in on this or direct me to an appropriate site? I’m looking for some predictions of how the climate will actually change (assuming GW is occurring and no major effort is made to stop it). I’m not looking for a denier site or for a worst-case site, just a reasonable best guess. In particular, I am interested in broad changes over the globe (who gets warmer, who gets colder), and in Virginia specifically. Any chance of palm trees or alligators in my lifetime?

[Response:Try http://www.grida.no/ -rasmus]