Do you remember when global warming was small enough for people to care about the details of how climate scientists put together records of global temperature history? Seems like a long time ago…

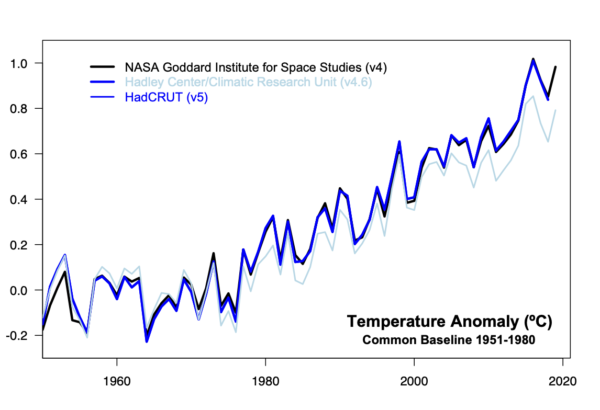

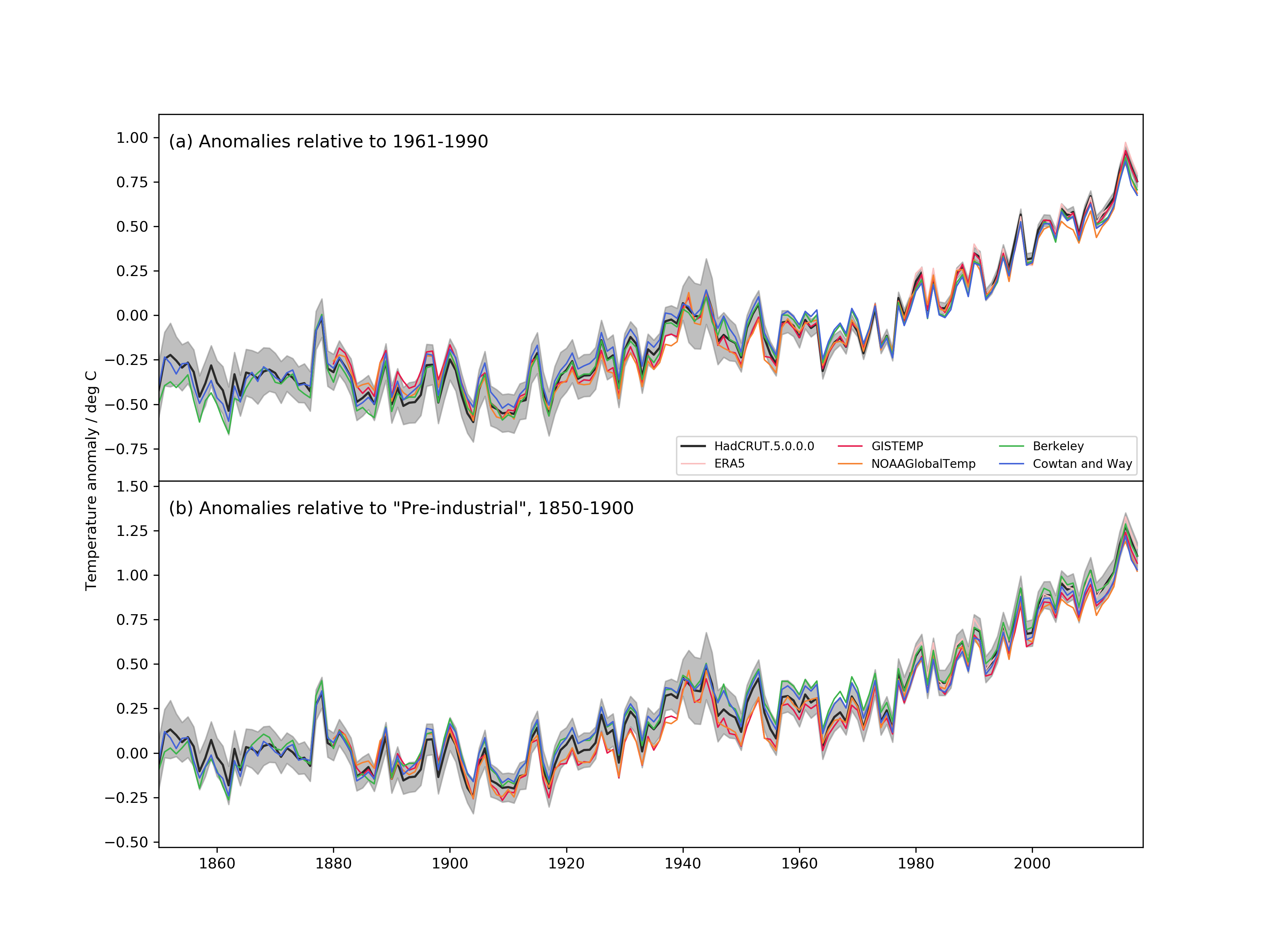

Nonetheless, it’s worth a quick post to discuss the latest updates in HadCRUT (the data product put together by the UK’s Hadley Centre and the Climatic Research Unit at the University of East Anglia). They have recently released HadCRUT5 (Morice et al., 2020), which marks a big increase in the amount of source data used (similar to the upgrade from GHCN3 to GHCN4 used by NASA GISS and NOAA NCEI, and comparable to the data sources used by Berkeley Earth). Additionally, they have improved their analysis of the sea surface temperature anomalies (a perennial issue), which leads to an increase in the recent trends. Finally, they have started to produce an infilled data set which uses an extrapolation to fill in data-poor areas (like the Arctic – first analysed by us in 2008…) that were left blank in HadCRUT4 (an approach similar to GISTEMP, Berkeley Earth and the work by Cowtan and Way). Because the Arctic is warming faster than the global mean, the new procedure corrects a bias that existed in the previous global means (by about 0.16°C in 2018 using a 1951-1980 baseline). Combined, the new changes give a result that is much closer to the other products:

Differences persist around 1940, or in earlier decades, mostly due to the treatment of ocean temperatures in HadSST4 vs. ERSST5.
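To make the coverage bias mentioned above concrete, here is a toy sketch in Python. It is not the HadCRUT5 method (which uses a statistical infilling procedure); the latitude bands, the anomaly values and the function name `area_weighted_mean` are all hypothetical and chosen only for illustration. It shows why leaving a fast-warming Arctic blank pulls an area-weighted global mean down compared with a version where those bands are filled in.

```python
import math

def area_weighted_mean(band_anomalies):
    """Average anomalies over latitude bands, weighted by cos(latitude).

    band_anomalies maps a band's central latitude (degrees) to its anomaly
    in degrees C, or to None where there is no data. Bands with None are
    skipped, which implicitly assumes they behave like the average of the
    bands that do have data.
    """
    total = 0.0
    weight_sum = 0.0
    for lat, anomaly in band_anomalies.items():
        if anomaly is None:
            continue
        weight = math.cos(math.radians(lat))  # proportional to band area
        total += weight * anomaly
        weight_sum += weight
    return total / weight_sum

# Hypothetical anomalies: 1.0 C everywhere, 3.0 C in the two Arctic bands.
filled = {lat: 1.0 for lat in range(-85, 90, 10)}
filled[75] = 3.0
filled[85] = 3.0

# Same field, but with the Arctic bands left blank (no data).
blank_arctic = dict(filled)
blank_arctic[75] = None
blank_arctic[85] = None

masked_mean = area_weighted_mean(blank_arctic)
infilled_mean = area_weighted_mean(filled)

print(f"Arctic left blank: {masked_mean:.3f} C")
print(f"Arctic filled in:  {infilled_mean:.3f} C")
print(f"Coverage bias:     {infilled_mean - masked_mean:.3f} C")
```

The Arctic covers only a small fraction of the globe, so the difference is modest, but it always has the same sign when the missing region is warming faster than the rest.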

In conclusion, this update further solidifies the robustness of the surface temperature record, though there are still questions to be addressed, and there remain mountains of old paper records to be digitized.

The implications of these updates for anything important (such as the climate sensitivity or the carbon budget) will, however, be minor, because all sensible analyses would have been using a range of surface temperature products already.

With 2020 drawing to a close, the next annual update and intense comparison of all these records, including the various satellite-derived global products (UAH, RSS, AIRS), will occur in January. Hopefully, HadCRUT5 will be extended beyond 2018 by then.

In writing this post, I noticed that we had written up a detailed post on the last HadCRUT update (in 2012). Oddly enough, the issues raised were more or less the same, and the most important conclusion remains true today:

> First and foremost is the realisation that data synthesis is a continuous process. Single measurements are generally a one-time deal. Something is measured, and the measurement is recorded. However, comparing multiple measurements requires more work – were the measuring devices calibrated to the same standard? Were there biases in the devices? Did the result get recorded correctly? Over what time and space scales were the measurements representative? These questions are continually being revisited – as new data come in, as old data is digitized, as new issues are explored, and as old issues are reconsidered. Thus for any data synthesis – whether it is for the global mean temperature anomaly, ocean heat content or a paleo-reconstruction – revisions over time are both inevitable and necessary.

Mostly, we only know what we think we know about climate science because of the climate science. I have had many run-ins with denialists, contrarians or climate change dismissives as they are variously called. Over the past two years especially, concern has also moved to the other end of the spectrum, to alarmism. Both ends, while the latter has been more thinly tapered, can represent forms of denial. In this abridged adaptation I will start with denialism, but round on the more recent friendly fire on science that has emerged in alarmism.
