For the last few years (since at least 2016), I’ve shared predictions for the next annual global mean surface air temperature (GMSAT) anomaly based on the long term trend and the state of ENSO at the start of the year. Generally speaking, this has been quite skillful compared to persistence or just the long term trend alone – the eventual anomaly was consistently within the predicted bounds. Until 2023.

[Read more…] about Annual GMSAT predictions and ENSO

Science denial is still an issue ahead of COP28

It is 33 years now since the IPCC in its first report in 1990 concluded that it is “certain” that greenhouse gas emissions from human activities “will enhance the greenhouse effect, resulting on average in an additional warming of the Earth’s surface.” That has indeed happened as predicted, it has been confirmed by a zillion studies and has been scientific consensus for decades. Yet, when the next global climate summit is coming up (it’s starting tomorrow), we don’t only learn that the host, United Arab Emirates, intends to use the event for new oil deals. We also see more attempts to cast doubt that global warming is caused by emissions from burning oil, gas and coal – as so often before these summits.

This time making the rounds is a “discussion paper” published by Statistics Norway. It is noteworthy not because it contains anything new (it doesn’t), but because despite clearly violating the established standards of good scientific practice, it was published by a government agency. That’s why it is having an impact in non-scientific quarters including the corporate world, and it has even been cited in a submission to proceedings of the German parliament.

The flood of fallacies or deceptions begins with the paper’s title: “To what extent are temperature levels changing due to greenhouse gas emissions?” But the effect of greenhouse gases is not even investigated in the paper – which suggests the title is politically motivated. And the paper revolves around ignoring past studies and basic physics, using dubious sources, and the glaring blunder of arguing that warming at any individual weather station might be caused by random weather variations, without ever wondering how it is possible that these supposed random variations go in the same direction all over the planet: in the direction of warming.

The paper provides a good opportunity to illustrate how climate science obfuscation works, and to remind readers how we actually know for sure that greenhouse gas emissions are indeed responsible for modern global warming.

Egregious scientific errors

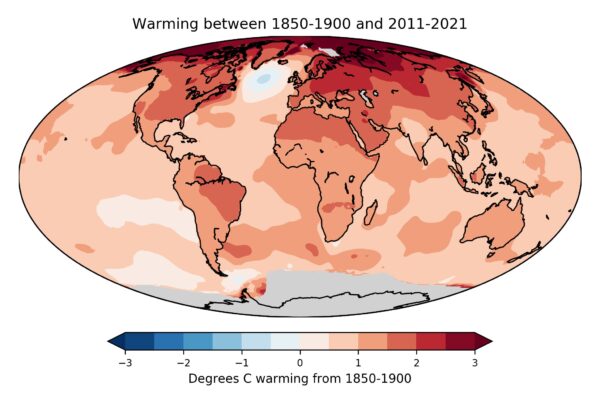

The paper contains far too many egregious scientific errors and logical fallacies to review here, but let’s look at one: The paper continually mixes up local and global temperatures. It performs some statistical analysis on local temperature changes and argues they individually might just be within random fluctuations (a 25-year-old argument, which works if you assume long autocorrelation) – but even if that were true, the same does not apply to the global temperature. In an unchanging climate, the random fluctuations would lead to warming in some parts of the world and cooling in others. The fact that all parts of the world, with very few exceptions, show warming at the same time cannot be explained by random internal fluctuations.

It’s not hard to understand. In a world with just random local fluctuations but no climate change, about half the weather stations would show a (more or less significant) warming, the other half a cooling. With a modest amount of global warming, perhaps 60% would be warming and 40% cooling. With strong global warming, close to 100% will show warming, and that is exactly what is happening. It shows global warming has overwhelmed natural temperature variability, and that is what the Statistics Norway paper confirms yet again. Its authors literally don’t see the forest for the trees when they falsely claim the opposite.
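
The argument can be made concrete with a toy calculation. The sketch below assumes (purely for illustration, this is not the paper's method) that each station's estimated trend is a common global trend plus independent Gaussian noise, and asks what share of stations would then show warming. The function name and the independence assumption are mine; real stations are spatially correlated.

```python
from statistics import NormalDist

def fraction_warming(global_trend, local_noise_sd):
    """Expected share of stations whose estimated trend is positive.

    Toy model (an assumption, not the paper's method): each station's
    estimated trend is the common global trend plus independent Gaussian
    noise with standard deviation local_noise_sd.
    """
    station_trend = NormalDist(mu=global_trend, sigma=local_noise_sd)
    # P(trend > 0) = 1 - P(trend <= 0)
    return 1.0 - station_trend.cdf(0.0)

# Global trend expressed in units of the local noise standard deviation.
for ratio in (0.0, 0.25, 1.0, 3.0):
    share = fraction_warming(ratio, 1.0)
    print(f"trend = {ratio:.2f} x noise: {share:.1%} of stations warm")
```

In this toy model, no global trend gives one half of the stations warming, a trend of a quarter of the local noise gives roughly 60%, and a trend of three times the noise gives more than 99%.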

Figure 1: Map of observed near-surface air temperature changes since the late 19th Century. Gray areas show lack of data. The only region of cooling is the northern Atlantic, where climate models have long predicted just that due to a slowing of the Atlantic Ocean overturning circulation. The data are from the independent open-source Berkeley Earth project – a project by the formerly outspoken climate skeptic physicist Richard Muller, which in 2010 set out to do better than the traditional climate institutes and in the end obtained almost exactly the same results, just a slightly faster global warming. Muller was converted to accepting mainstream climate science by his own results. Image: Zeke Hausfather / Berkeley Earth.

Such statistics have of course been investigated for other climate parameters, too. For extreme rainfall events, a study of a global dataset of 8326 high-quality weather stations found that “64% of stations show increasing trends and 36% show decreasing trends”. Another study has shown the same for 940 Western European weather stations. That confirms that extreme rainfall is increasing – as predicted by elementary physics as well as climate models.

Blind use of statistics without understanding physics

Perhaps the most important law of physics is the conservation of energy, and the observed warming of Earth requires a huge energy input, which cannot be provided by random weather fluctuations. But even first-term physics is completely ignored in the Statistics Norway paper.

The heating of the global ocean has been going on at a steady rate of nine zettajoules per year for decades, which is 15 times the worldwide primary energy consumption. We know this from the thousands of Argo floats drifting in the oceans, regularly diving down to 2000 meters while taking measurements. And we know where this staggering amount of heat energy comes from: It represents 91% of the additional heat retained on our planet by the human-caused increase in greenhouse gases. The energy balance of our planet, the radiation arriving and leaving, is continually monitored by a global radiation network at the surface and by dedicated satellites.
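
To get a feel for these numbers, here is a rough back-of-envelope conversion. The nine zettajoules per year, the factor of 15 and the 91% share come from the text above; the Earth surface area (about 5.1e14 m²) and the length of a year are standard values I am adding, so treat the output as approximate.

```python
ZETTA = 1e21
SECONDS_PER_YEAR = 365.25 * 24 * 3600
EARTH_SURFACE_M2 = 5.1e14   # approximate total surface area (added assumption)

ocean_heating_j_per_year = 9 * ZETTA   # from the text
ocean_share_of_excess_heat = 0.91      # from the text

# Average heating power, and the same spread over the whole Earth surface.
ocean_heating_watts = ocean_heating_j_per_year / SECONDS_PER_YEAR
ocean_flux_w_m2 = ocean_heating_watts / EARTH_SURFACE_M2

# If the ocean takes up 91% of the excess heat, the total is a bit larger.
total_excess_flux_w_m2 = ocean_flux_w_m2 / ocean_share_of_excess_heat

# "15 times the worldwide primary energy consumption" implies this value.
implied_primary_energy_ej = ocean_heating_j_per_year / 15 / 1e18

print(f"ocean heating: {ocean_heating_watts:.2e} W")
print(f"  = {ocean_flux_w_m2:.2f} W/m2 averaged over the Earth surface")
print(f"implied total excess heat: {total_excess_flux_w_m2:.2f} W/m2")
print(f"implied primary energy use: {implied_primary_energy_ej:.0f} EJ/yr")
```

The ocean heating works out to a bit more than half a watt per square meter of Earth surface, and the implied primary energy consumption is about 600 exajoules per year.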

The greenhouse effect is in fact the largest control knob to dial up the temperature of our planet. We are receiving 342 Watts per square meter of Earth surface in back-radiation from the greenhouse effect, which is more than twice the Sun’s energy absorbed at Earth’s surface. And yes, the increase in back-radiation towards the Earth surface from the CO2 greenhouse effect is also a measured fact.

The physics behind the greenhouse effect and the gases that cause it have been understood since the 1800s, and that is why global warming has been correctly predicted since the 1970s, even before observations unequivocally showed it. This warming was predicted not only by independent university and government scientists, but also by scientists from the oil company Exxon.

Is it sheer incompetence or is it politically motivated?

So the Statistics Norway paper ignores physics, misinterprets statistics and cherry-picks data – but is that just sheer incompetence, or is it politically motivated? In addition to the title, there are many tell-tale signs that strongly suggest the latter. Here are just a few examples.

The paper shows a graph of local Greenland temperature from the famous Camp Century ice core drilled in 1960-1966. But rather than the data from the original publication in Science, it shows a hand-drawn version that has never been published in the peer-reviewed literature and is mislabeled, with the vertical axis showing variations around an average temperature of 15 °C (rather than -25 °C) to suggest it represents the global mean. This version originates from a 1995 German book and to this day is highly popular with the climate skeptics bubble on social media, and with the German right-wing AfD climate denial party (see my 2019 blog article).

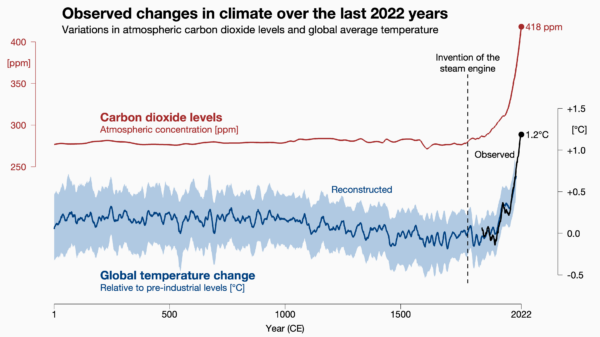

The Statistics Norway authors try to cast doubt on modern warming being human-caused by pointing to the fact that Greenland was warmer during past millennia. But they don’t tell you why: as explained for example in the paleoclimate chapter of the 4th IPCC report of 2007 (which I co-authored), this is as expected from Earth’s natural orbital cycles. And they conveniently ignore global data reconstructions, which show Earth is warmer now than at any time at least since the last Ice Age 24,000 years ago.

Similarly, they show Antarctic ice core data, taken from the climate skeptic website climate4you rather than a scientific source, with the figure caption falsely claiming that these data show global temperature when in fact, it is local. Greenland and Antarctica are perhaps the two locations on Earth where temperature variations are least representative of the global average.

The statistical analysis in fact confirms climate change

The paper analyses only one temperature data set which is actually global: the HadCRUT3 data, one of the well-established global temperature series. Strangely, the data shown end in December 2010 and the diagram is copied from the same climate skeptic website, instead of using the current HadCRUT5 data which have improved global coverage and are readily available from the source (which Google finds in one second). But regardless: for this data set even their method “found that the HadCRUT3 time series is far from stationary”. The real result of their statistical analysis is thus: global temperature does show climate change! They even wonder why it does that while their home-baked aggregate of a small number of weather stations does not, even though it shows a similar trend. They don’t seem to understand that the signal-to-noise ratio matters, and that it gets worse the fewer data one uses (they used a meagre 74 stations).
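
The signal-to-noise point can be sketched in a few lines. This assumes the idealised case where every station carries the same signal plus independent noise of equal size, so the noise in the average shrinks with the square root of the number of stations. The numbers other than 74 are illustrative, and real station noise is spatially correlated, so the actual gain is smaller.

```python
import math

def snr_of_mean(signal, station_noise_sd, n_stations):
    """Signal-to-noise ratio of an average over n_stations.

    Idealised assumption: independent, equally noisy stations, so the
    noise of the mean is station_noise_sd / sqrt(n_stations).
    """
    noise_of_mean = station_noise_sd / math.sqrt(n_stations)
    return signal / noise_of_mean

# Same signal and station noise (arbitrary units), different network sizes.
for n in (1, 74, 7400):
    print(f"{n:5d} stations: signal-to-noise = {snr_of_mean(1.0, 1.0, n):.1f}")
```

Under these assumptions, a hundred times more stations gives a ten times better signal-to-noise ratio.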

Figure 2: Carbon dioxide levels and global temperature over the past 2022 years. Carbon dioxide data from air bubbles enclosed in Antarctic ice. Global temperature data from the PAGES2k project, a collaboration of 78 paleo-climatologists from 24 countries. The Statistics Norway paper conveniently ignored these well-known state-of-the-art data, even though they are shown in the IPCC report. Image: Prof. Ed Hawkins, National Centre for Atmospheric Science.

Unscientific sources

The paper also repeatedly cites a climate skeptics book by Fritz Vahrenholt and Sebastian Lüning, a former German CEO and an employee of the energy giant RWE, the largest CO2 emitter in Europe. The US edition of this book, called The Neglected Sun, is published by the climate denial lobby Heartland Institute. Yes, that’s the think tank which ran a poster campaign comparing the Unabomber and Osama Bin Laden to those concerned about global warming.

The original German title of this book is Die kalte Sonne (The Cold Sun), referring to the fact that solar activity has declined, and thus has counteracted a small amount of the greenhouse warming caused by burning coal, oil and gas. This book badly overestimated the importance of solar variations and thus predicted an imminent global cooling. When this was soon disproven by observations, they accused NASA of doctoring the data.

I could go on. The paper presents many more hair-raising false statements and misleading climate-change-denial talking points. The authors have clearly swallowed a great mouthful of the toxic brew found on climate denial websites. But they have apparently not bothered to look at real climate science or talk to a climate scientist before publishing this “discussion paper”.

A massive blow to Statistics Norway’s credibility

It is more than embarrassing that Statistics Norway has published this nonsense. It is a scandal. Let’s hope it was not political on the part of that institution, but just a bad mistake. If they want to salvage their reputation and credibility, they should withdraw it immediately, with an appropriate explanation of the real science of global warming.

Area-based global hydro-climatological indicators

The World Meteorological Organisation (WMO) Global Climate Observing System (GCOS) and Copernicus Climate Change Services (C3S) both provide sets of global climate statistics to summarise the state of Earth’s climate. They are indeed valuable indicators for the global or regional mean temperature, greenhouse gas concentrations, both ice volume and area, ocean heat, acidification, and the global sea level.

Still, I find it surprising that the set does not include any statistics on the global hydrological cycle, relevant to rainfall patterns and droughts. Two obvious global hydro-climatological indicators are the total mass of water falling on Earth’s surface each day P and the fraction of Earth’s surface area on which it falls Ap.
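
As a rough illustration of how these two indicators could be computed from a gridded daily precipitation field, here is a minimal sketch. The regular latitude-longitude grid, the function names and the 1 mm/day threshold for counting a cell as "wet" are all my assumptions, not something specified above.

```python
import numpy as np

EARTH_RADIUS_M = 6.371e6

def cell_areas(lat_edges_deg, lon_edges_deg):
    """Area in m^2 of each cell of a latitude-longitude grid on a sphere."""
    lat = np.deg2rad(np.asarray(lat_edges_deg, dtype=float))
    lon = np.deg2rad(np.asarray(lon_edges_deg, dtype=float))
    band = np.sin(lat[1:]) - np.sin(lat[:-1])   # one value per latitude row
    width = lon[1:] - lon[:-1]                  # one value per longitude column
    return EARTH_RADIUS_M**2 * np.outer(band, width)

def hydro_indicators(precip_mm_per_day, areas_m2, wet_threshold_mm=1.0):
    """Return (P, Ap): daily precipitation mass in kg and wet-area fraction.

    1 mm of rain on 1 m^2 is 1 litre, i.e. about 1 kg of water.
    The wet threshold is an illustrative choice.
    """
    total_mass_kg = float(np.sum(precip_mm_per_day * areas_m2))
    wet = precip_mm_per_day >= wet_threshold_mm
    wet_fraction = float(areas_m2[wet].sum() / areas_m2.sum())
    return total_mass_kg, wet_fraction

# Made-up field on a 10-degree grid: 3 mm/day north of the equator, dry south.
areas = cell_areas(np.linspace(-90, 90, 19), np.linspace(0, 360, 37))
precip = np.zeros_like(areas)
precip[9:, :] = 3.0
P, Ap = hydro_indicators(precip, areas)
print(f"P = {P:.3e} kg/day, Ap = {Ap:.2f}")
```

The area weighting matters because grid cells shrink towards the poles; simply counting wet cells would overstate the contribution from high latitudes.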

[Read more…] about Area-based global hydro-climatological indicators

Evaluation of GCM simulations with a regional focus.

Do the global climate models (GCMs) we use for describing future climate change really capture the change and variations in the region that we want to study? There are widely used tools for evaluating global climate models, such as the ESMValTool, but they don’t provide the answers that I seek.

I use GCMs to provide information about large-scale conditions, processes and phenomena in the atmosphere that I can use as predictors in downscaling future climate projections. I also want to know whether the ensemble of GCM simulations that I use provides representative statistics of the actual regional climate I’m interested in.

[Read more…] about Evaluation of GCM simulations with a regional focus.

The established ground and new ideas

Science is naturally conservative and scepticism towards new ideas ensures high scientific quality. We have more confidence when different scholars arrive at the same conclusion independently of each other. But scientific research also brings about discoveries and innovations, and it typically takes time for such new understanding to receive acknowledgement and acceptance. In the meantime, it’s uncertain whether they really represent progress or if they are misconceived ideas. Sometimes we can shed more light on new ideas through scientific discussions.

[Read more…] about The established ground and new ideas

2022 updates to the temperature records

Another January, another annual data point.

As in years past, the annual rollout of the GISTEMP, NOAA, HadCRUT and Berkeley Earth analyses of the surface temperature record has brought forth many stories about the long term trends and specific events of 2022 – mostly focused on the impacts of the (ongoing) La Niña event and the litany of weather extremes (UK and elsewhere having record years, intense rainfall and flooding, Hurricane Ian, etc. etc.).

But there are a few things that don’t get covered much in the mainstream stories, and so we can dig into them a bit here.

What influence does ENSO really have?

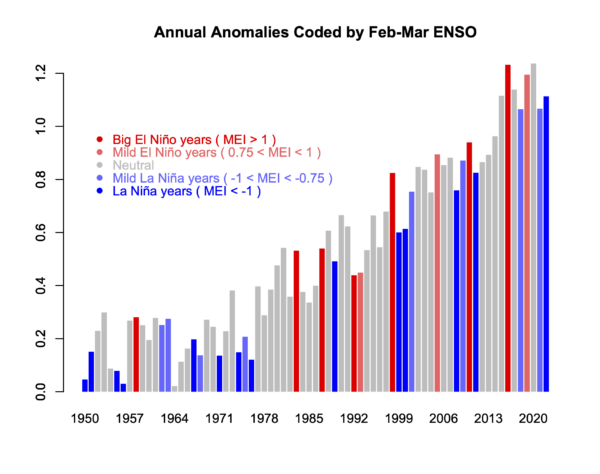

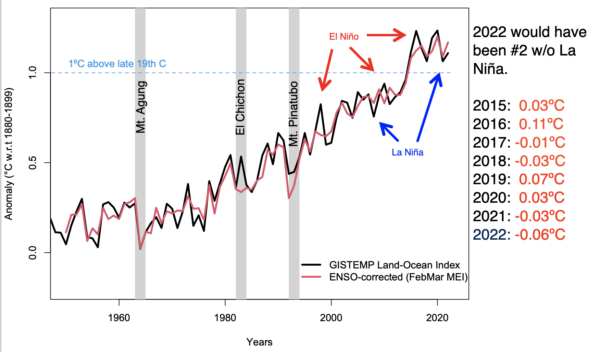

It’s well known (among readers here, I assume), that ENSO influences the interannual variability of the climate system and the annual mean temperatures. El Niño events enhance global warming (as in 1998, 2010, 2016 etc.) and La Niña events (2011, 2018, 2021, 2022 etc.) impart a slight cooling.

Consequently, a line drawn from an El Niño year to a subsequent La Niña year will almost always show a cooling – a fact well known to the climate disinformers (though they are not so quick to show the uncertainties in such cherry picks!). For instance, the trends from 2016 to 2022 are -0.12±0.37ºC/dec but with such large uncertainties, the calculation is meaningless. Far more predictive are the long term trends which are consistently (now) above 0.2ºC/dec (and with much smaller uncertainties ±0.02ºC/dec for the last 40 years).

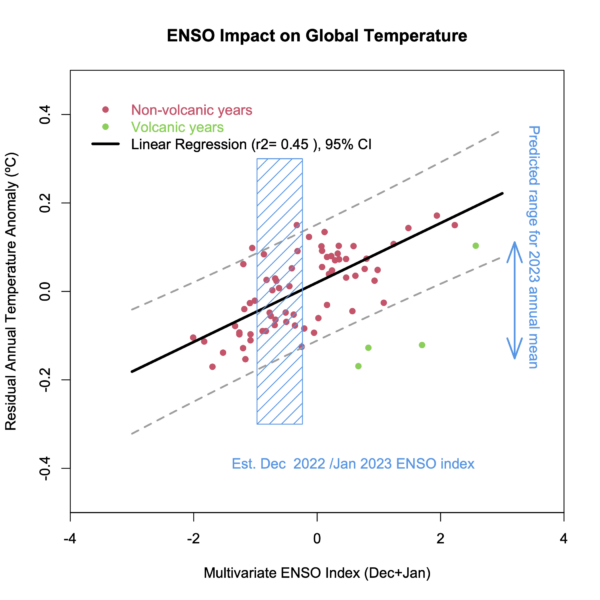

It’s worth exploring quantitatively what the impact is, and this is something I’ve been looking at for a while. It’s easy enough to correlate the detrended annual anomalies with the ENSO index (maximum correlation is for the early spring values), and then use that regression to estimate the specific impact for any year, and to estimate an ENSO-corrected time series.
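
The steps just described can be sketched roughly as follows. This is a minimal illustration on made-up data, not the actual calculation behind the figures: the function name, the simple linear detrending and the synthetic ENSO index are all assumptions. In practice the index would be the early-spring ENSO value for each year.

```python
import numpy as np

def enso_corrected(years, anomalies, enso_index):
    """Return (corrected anomalies, regression coefficient).

    1. Remove a linear trend from the annual anomalies.
    2. Regress the detrended anomalies on the ENSO index.
    3. Subtract the estimated ENSO contribution from the original series.
    """
    years = np.asarray(years, dtype=float)
    anomalies = np.asarray(anomalies, dtype=float)
    enso_index = np.asarray(enso_index, dtype=float)

    slope, intercept = np.polyfit(years, anomalies, 1)
    detrended = anomalies - (slope * years + intercept)

    beta, _ = np.polyfit(enso_index, detrended, 1)
    enso_effect = beta * (enso_index - enso_index.mean())
    return anomalies - enso_effect, beta

# Synthetic example: 0.02 degC/yr trend plus 0.1 degC per unit of the index.
years = np.arange(1980, 2020)
enso = np.tile([1.0, -1.0, -1.0, 1.0], 10)   # made-up index, not real data
anoms = 0.02 * (years - 1980) + 0.1 * enso

corrected, beta = enso_corrected(years, anoms, enso)
print(f"estimated ENSO coefficient: {beta:.3f} degC per index unit")
```

The per-year impact is then the coefficient times that year's index value. Fitting the trend and the ENSO term jointly in a single regression would be a reasonable alternative when the index itself has a trend.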

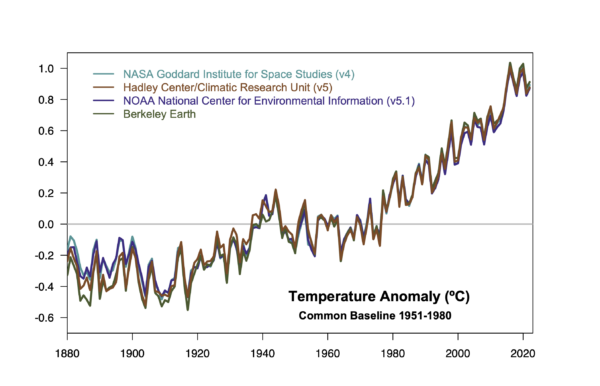

The surface temperature records are becoming more coherent

Back in 2013/2014, the differences between the surface indices (HadCRUT3, NOAA v3 and GISTEMP v3) contributed to the initial confusion related to the ‘pause’, which was seemingly evident in HadCRUT3, but not so much in the other records (see this discussion from 2015). Since then all of the series have adopted improved SST homogenization, and HadCRUT5 adopted a similar interpolation across the pole as was used in the GISTEMP products. From next month onwards, NOAA will move to v5.1 which will now incorporate Arctic buoy data (a great innovation) and also provide a spatially complete record. The consequence is that the surface instrument records will be far more coherent than they have ever been. Some differences remain pre-WW2 (lots of SST inhomogeneities to deal with) and in the 19th C (where data sparsity is a real challenge).

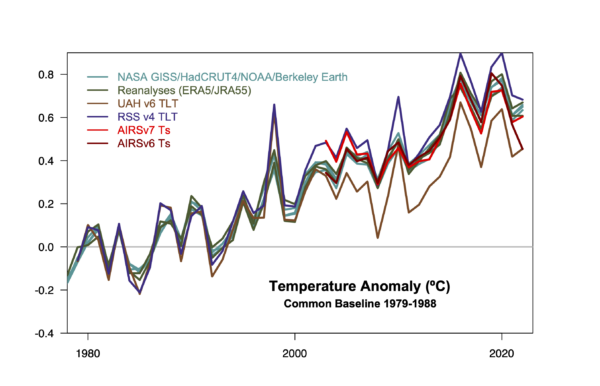

The structural uncertainty in satellite records is large

While the surface-based records are becoming more consistent, the various satellite records are as far apart as ever. The differences between the RSS and UAH TLT records are much larger than the spread in the surface records (indeed, they span those trends), making any claims of greater precision somewhat dubious. Similarly, the differences between the versions of the AIRS records (v6 vs. v7) of ground temperature anomalies produce quite distinct trends (in the case of AIRS v6, Nov 2022 was exceptionally cold, which was not seen in other records).

When will we reach 1.5ºC above the pre-industrial?

This was a very common question in the press interviews this week. It has a few distinct components – what is the ‘pre-industrial’ period that’s being referenced, what is the uncertainty in that baseline, and what are the differences in the long term records since then?

The latest IPCC report discusses this issue in some depth, but the basic notion is that since the impacts that are expected at 1.5ºC are derived in large part from the CMIP model simulations that have a nominal baseline of ~1850, ‘pre-industrial’ temperatures are usually assumed to be some kind of mid-19th Century average. This isn’t a universally accepted notion – Hawkins et al (2017) for instance, suggest we should use a baseline from the 18th Century – but it is one that is easier to operationalise.

The baseline of 1880-1900 can be calculated for all the long temperature series, and with respect to that 2022 (or the last five years) is between 1.1 and 1.3ºC warmer (with Berkeley Earth showing the most warming). For the series that go back to 1850, the difference between 1850-1900 and 1880-1900 is 0.01 to 0.03ºC, so probably negligible for this purpose.

Linear trends since 1996 are robustly just over 0.2ºC/decade in all series, so that suggests between one and two decades are required to have the mean climate exceed 1.5ºC, that is around 2032 to 2042. The first specific year that breaches this threshold will come earlier and will likely be associated with a big El Niño. Assuming something like 2016 (a +0.11ºC effect), that implies you might see the exceedance some 5 years earlier – say 2027 to 2037 (depending a little on the time-series you are following).
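
For anyone who wants to check the arithmetic, here it is spelled out. The inputs are the figures quoted above (1.1 to 1.3ºC of warming as of 2022, a trend rounded to 0.2ºC/decade, and a 2016-like El Niño effect of +0.11ºC); the variable names are just for illustration.

```python
def years_until(threshold, current, trend_per_decade):
    """Years needed to warm from `current` to `threshold` at a linear rate."""
    return (threshold - current) / (trend_per_decade / 10.0)

REFERENCE_YEAR = 2022   # year the 1.1-1.3 degC estimates refer to
TREND = 0.2             # degC per decade, rounded from "just over 0.2"
EL_NINO_BOOST = 0.11    # degC, the 2016-like effect quoted in the text

earliest = REFERENCE_YEAR + years_until(1.5, 1.3, TREND)
latest = REFERENCE_YEAR + years_until(1.5, 1.1, TREND)
lead_time = EL_NINO_BOOST / (TREND / 10.0)

print(f"mean climate crosses 1.5 degC around {earliest:.0f} to {latest:.0f}")
print(f"a 2016-like El Nino brings the first such year ~{lead_time:.1f} years forward")
```

A trend slightly above 0.2ºC/decade would pull all of these dates a little earlier.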

2023 is starting the year with a mild La Niña, which is being forecast to switch to neutral conditions by mid-year. Should we see signs of an El Niño developing towards the end of the year, that will heavily favor 2024 to be a new record, though not one that is likely to exceed 1.5ºC however you calculate it.

[Aside: In contrast to my reasoning here, the last decadal outlook from the UK Met Office/WMO suggested that 2024 has a 50-50 chance of exceeding 1.5ºC, some 5 or so years earlier than I’d suggest, and that an individual year might reach 1.7ºC above the PI in the next five years! I don’t know why this is different – it could be a larger variance associated with ENSO in their models, it could be a higher present day baseline (but I don’t think so), or a faster warming rate than the linear trend (which could relate to stronger forcings, or higher effective sensitivity). Any insight on this would be welcome!]

References

- E. Hawkins, P. Ortega, E. Suckling, A. Schurer, G. Hegerl, P. Jones, M. Joshi, T.J. Osborn, V. Masson-Delmotte, J. Mignot, P. Thorne, and G.J. van Oldenborgh, "Estimating Changes in Global Temperature since the Preindustrial Period", Bulletin of the American Meteorological Society, vol. 98, pp. 1841-1856, 2017. http://dx.doi.org/10.1175/BAMS-D-16-0007.1

Serious mistakes found in recent paper by Connolly et al.

Guest post by Mark Richardson who is a Research Scientist in the Aerosol and Clouds Group at NASA’s Jet Propulsion Laboratory, California Institute of Technology. All opinions expressed are his own and do not in any way represent those of NASA, JPL or Caltech.

Should scientists choose to believe provably false things? Even though that would mean more inclusive debates with a wider range of opinions, our recent paper Richardson & Benestad (2022) argues no: “instead of repeating errors, they should be acknowledged and corrected so that the debate can focus on areas of legitimate scientific uncertainty”. We were responding to Connolly et al., who suggested that maybe the Sun caused “most” of the warming in “recent decades” based on a simple maths mistake.

[Read more…] about Serious mistakes found in recent paper by Connolly et al.

References

- M.T. Richardson, and R.E. Benestad, "Erroneous use of Statistics behind Claims of a Major Solar Role in Recent Warming", Research in Astronomy and Astrophysics, vol. 22, pp. 125008, 2022. http://dx.doi.org/10.1088/1674-4527/ac981c

Scafetta comes back for more

A new paper from Scafetta and it’s almost as bad as the last one.

Back in March, we outlined how a model-observations comparison paper in GRL by Nicola Scafetta (Scafetta, 2022a) got wrong basically everything that one could get wrong (the uncertainty in the observations, the internal variability in the models, the statistical basis for comparisons – the lot!). Now he’s back with a new paper in a different journal (Scafetta, 2022b) that could be seen as trying to patch the holes in the first one, but while he makes some progress, he now adds some new errors while attempting CPR on his original conclusions.

[Read more…] about Scafetta comes back for more

References

- N. Scafetta, "Advanced Testing of Low, Medium, and High ECS CMIP6 GCM Simulations Versus ERA5‐T2m", Geophysical Research Letters, vol. 49, 2022. http://dx.doi.org/10.1029/2022GL097716

- N. Scafetta, "CMIP6 GCM ensemble members versus global surface temperatures", Climate Dynamics, vol. 60, pp. 3091-3120, 2022. http://dx.doi.org/10.1007/s00382-022-06493-w

Watching the detections

The detection and the attribution of climate change are based on fundamentally different frameworks and shouldn’t be conflated.

We read about and use the phrase ‘detection and attribution’ of climate change so often that it seems like it’s just one word ‘detectionandattribution’ and that might lead some to think that it is just one concept. But it’s not.

[Read more…] about Watching the detections

Climate adaptation should be based on robust regional climate information

Climate adaptation steams forward with an accelerated speed that can be seen through the Climate Adaptation Summit in January (see previous post), the ECCA 2021 in May/June, and the upcoming COP26. Recent extreme events may spur this development even further (see previous post about attribution of recent heatwaves).

To aid climate adaptation, Europe’s Climate-Adapt programme provides a wealth of resources, such as guidance, case studies and videos. This is a good start, but a clear and transparent account of how to use the actual climate information for adaptation seems to be missing. How can projections of future heatwaves or extreme rainfall help practitioners, and how should this kind of information be interpreted?

[Read more…] about Climate adaptation should be based on robust regional climate information