This month’s open thread. We’ve burned out on mitigation topics (again), so please focus on climate science issues this month…

Climate Science

Climate Oscillations and the Global Warming Faux Pause

No, climate change is not experiencing a hiatus. No, there is not currently a “pause” in global warming.

Despite such widespread claims in contrarian circles, human-caused warming of the globe proceeds unabated. Indeed, the most recent year (2014) was likely the warmest year on record.

It is true that Earth’s surface warmed a bit less than models predicted over the past decade and a half or so. This doesn’t mean that the models are flawed. Instead, it points to a discrepancy that likely arose from a combination of three main factors (see the discussion in my piece last year in Scientific American). First, the actual warming that has occurred has likely been underestimated, due to gaps in the observational data. Second, scientists have failed to include in model simulations some natural factors (low-level but persistent volcanic eruptions and a small dip in solar output) that had a slight cooling influence on Earth’s climate. Finally, there is the possibility that internal, natural oscillations in temperature may have masked some surface warming in recent decades, much as an outbreak of Arctic air can mask the seasonal warming of spring during a late-season cold snap. One could call it a global warming “speed bump”. In fact, I have.

Some have argued that these oscillations contributed substantially to the warming of the globe in recent decades. In an article my colleagues Byron Steinman, Sonya Miller and I have in the latest issue of Science magazine, we show that internal climate variability instead partially offset global warming.

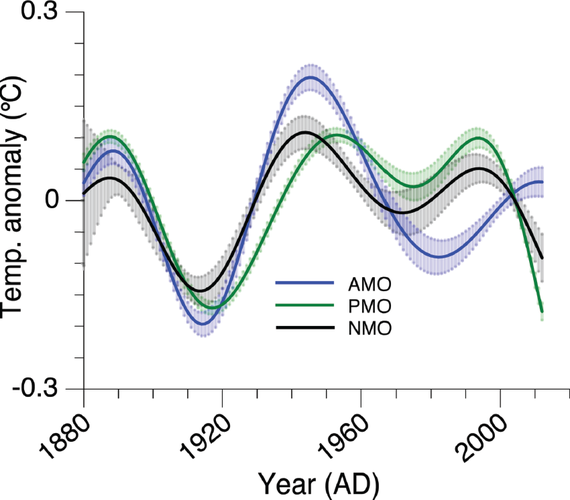

We focused on the Northern Hemisphere and the role played by two climate oscillations known as the Atlantic Multidecadal Oscillation or “AMO” (a term I coined back in 2000, as recounted in my book The Hockey Stick and the Climate Wars) and the so-called Pacific Decadal Oscillation or “PDO” (we use a slightly different term, Pacific Multidecadal Oscillation or “PMO”, to refer to the longer-term features of this apparent oscillation). The oscillation in Northern Hemisphere average temperatures (which we term the Northern Hemisphere Multidecadal Oscillation or “NMO”) is found to result from a combination of the AMO and PMO.

In numerous previous studies, these oscillations have been linked to everything from global warming, to drought in the Sahel region of Africa, to increased Atlantic hurricane activity. In our article, we show that the methods used in most if not all of these previous studies have been flawed. They fail to give the correct answer when applied to a situation (a climate model simulation) where the true answer is known.

We propose and test an alternative method for identifying these oscillations, which makes use of the climate simulations used in the most recent IPCC report (the so-called “CMIP5” simulations). These simulations are used to estimate the component of temperature changes due to increasing greenhouse gas concentrations and other human impacts plus the effects of volcanic eruptions and observed changes in solar output. When all those influences are removed, the only thing remaining should be internal oscillations. We show that our method gives the correct answer when tested with climate model simulations.
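The idea of removing the forced signal and inspecting what is left can be illustrated with a minimal sketch. This is not the procedure from the Science article: it assumes a simple least-squares scaling of an ensemble-mean series onto the observations, and the function name, the synthetic "observations", the 20-member ensemble and every numeric value below are made up purely for illustration.

```python
import numpy as np


def estimate_internal_variability(observed, forced_ensemble):
    """Return (residual, scale) after removing a scaled forced signal.

    observed:        1-D array of temperature anomalies, one value per year.
    forced_ensemble: 2-D array (members x years) of simulated anomalies.
    The ensemble mean is treated as the forced signal (an assumption of
    this sketch), fitted to the observations by least squares, and
    subtracted. What remains is the estimate of internal variability.
    """
    observed = np.asarray(observed, dtype=float)
    forced_mean = np.asarray(forced_ensemble, dtype=float).mean(axis=0)

    # Fit observed ~= scale * forced_mean + offset.
    design = np.column_stack([forced_mean, np.ones_like(forced_mean)])
    coeffs, *_ = np.linalg.lstsq(design, observed, rcond=None)
    scale, offset = coeffs

    residual = observed - (scale * forced_mean + offset)
    return residual, scale


# Synthetic test case where the "true answer" is known by construction.
rng = np.random.default_rng(0)
years = np.arange(1900, 2020)
elapsed = years - years[0]

forced_true = 0.008 * elapsed                          # hypothetical forced trend
oscillation = 0.1 * np.sin(2 * np.pi * elapsed / 40)   # hypothetical internal mode

# Each hypothetical ensemble member is the forced trend plus its own noise.
ensemble = forced_true + 0.05 * rng.standard_normal((20, years.size))
observed = forced_true + oscillation

residual, scale = estimate_internal_variability(observed, ensemble)
```

Because the synthetic oscillation is known, the residual can be compared with it directly, which mirrors the logic of testing a method on simulations before applying it to observations. Note that any part of the oscillation that happens to correlate with the forced trend is absorbed by the fit, so the recovery is close but not exact.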

Estimated history of the “AMO” (blue), the “PMO” (green) and the “NMO” (black). Uncertainties are indicated by shading. Note how the AMO (blue) has reached a shallow peak recently, while the PMO is plummeting quite dramatically. The latter accounts for the precipitous recent drop in the NMO.

Applying our method to the actual climate observations (see figure above) we find that the NMO is currently trending downward. In other words, the internal oscillatory component is currently offsetting some of the Northern Hemisphere warming that we would otherwise be experiencing. This finding expands upon our previous work coming to a similar conclusion, but in the current study we better pinpoint the source of the downturn. The much-vaunted AMO appears to have made relatively little contribution to large-scale temperature changes over the past couple decades. Its amplitude has been small, and it is currently relatively flat, approaching the crest of a very shallow upward peak. That contrasts with the PMO, which is trending sharply downward. It is that decline in the PMO (which is tied to the predominance of cold La Niña-like conditions in the tropical Pacific over the past decade) that appears responsible for the declining NMO, i.e. the slowdown in warming or “faux pause” as some have termed it.

Our conclusion that natural cooling in the Pacific is a principal contributor to the recent slowdown in large-scale warming is consistent with some other recent studies, including a study I commented on previously showing that stronger-than-normal winds in the tropical Pacific during the past decade have led to increased upwelling of cold deep water in the eastern equatorial Pacific. Other work by Kevin Trenberth and John Fasullo of the National Center for Atmospheric Research (NCAR) shows that there has been increased sub-surface heat burial in the Pacific Ocean over this time frame, while yet another study by James Risbey and colleagues demonstrates that model simulations that most closely follow the observed sequence of El Niño and La Niña events over the past decade tend to reproduce the warming slowdown.

It is possible that the downturn in the PMO itself reflects a “dynamical response” of the climate to global warming. Indeed, I have suggested this possibility before. But the state-of-the-art climate model simulations analyzed in our current study suggest that this phenomenon is a manifestation of purely random, internal oscillations in the climate system.

This finding has potential ramifications for the climate changes we will see in the decades ahead. As we note in the last line of our article,

Given the pattern of past historical variation, this trend will likely reverse with internal variability, instead adding to anthropogenic warming in the coming decades.

That is perhaps the most worrying implication of our study, for it implies that the “false pause” may simply have been a cause for false complacency, when it comes to averting dangerous climate change.

The Soon fallacy

As many will have read, there were a number of press reports (NYT, Guardian, InsideClimate) about the non-disclosure of Willie Soon’s corporate funding (from Southern Company (an energy utility), Koch Industries, etc.) when publishing results in journals that require such disclosures. There are certainly some interesting questions to be asked (by the OIG!) about adherence to the Smithsonian’s ethics policies, and the propriety of Smithsonian managers accepting soft money with non-disclosure clauses attached.

However, a valid question is whether the science that arose from these funds is any good. It’s certainly conceivable that Soon’s work was too radical for standard federal research programs and that these energy companies were really taking a chance on blue-sky, high-risk research that might have the potential to shake things up. In such a case, someone might be tempted to overlook the ethical lapses and conflicts of interest for the sake of scientific advancement (though far too many similar post-hoc justifications have been used to excuse horrific unethical practices for this to be remotely defensible).

Unfortunately, the evidence from the emails and the work itself completely undermines that argument because the work and the motivation behind it are based on a scientific fallacy.


The mystery of the offset chronologies: Tree rings and the volcanic record of the 1st millennium

Guest commentary by Jonny McAneney

Volcanism can have an important impact on climate. When a large volcano erupts it can inject vast amounts of dust and sulphur compounds into the stratosphere, where they alter the radiation balance. While the suspended dust can temporarily block sunlight, the dominant effect in volcanic forcing is the sulphur, which combines with water to form sulphuric acid droplets. These stratospheric aerosols dramatically change the reflectivity and absorption profile of the upper atmosphere, causing the stratosphere to heat and the surface to cool, resulting in climatic changes on hemispheric and global scales.

Interrogating tree rings and ice cores

Annually-resolved ice core and tree-ring chronologies provide opportunities for understanding past volcanic forcing and the consequent climatic effects and impacts on human populations. It is common knowledge that you can tell the age of a tree by counting its rings, but it is also interesting to note that the size and physiology of each ring provides information on growing conditions when the ring formed. By constructing long tree ring chronologies, using suitable species of trees, it is possible to reconstruct a precisely-dated annual record of climatic conditions.

Ice cores can provide a similar annual record of the chemical and isotopic composition of the atmosphere, in particular volcanic markers such as layers of volcanic acid and tephra. However, ice cores can suffer from ambiguous layers that introduce errors into the dating of these layers of volcanic acid. To short-circuit this, attempts have been made to identify known historical eruptions within the ice records, such as Öraefajökull (1362) and Vesuvius (AD 79). This can become difficult since the ice chronologies can only be checked by finding and definitively identifying tephra (volcanic glass shards) that can be attributed to these key eruptions; sulphate peaks in the ice are not volcano-specific.

Thus, it is fundamentally important to have chronological agreement between historical, tree-ring and ice core chronologies: The ice cores record the magnitude and frequency of volcanic eruptions, with the trees recording the climatic response, and historical records evidencing human responses to these events.

But they don’t quite line up…


Noise on the Telegraph

I was surprised by the shrill headlines from a British newspaper with the old-fashioned name the Telegraph: “The fiddling with temperature data is the biggest science scandal ever”. So what is this all about?

Unforced Variations: Feb 2015

Thoughts on 2014 and ongoing temperature trends

Last Friday, NASA GISS and NOAA NCDC had a press conference and jointly announced the end-of-year analysis for the 2014 global surface temperature anomaly which, in both analyses, came out top. As you may have noticed, this got much more press attention than their joint announcement in 2013 (which wasn’t a record year).

In press briefings and interviews I contributed to, I mostly focused on two issues – that 2014 was indeed the warmest year in those records (though by a small amount), and the continuing long-term trends in temperature which, since they are predominantly driven by increases in greenhouse gases, are going to continue and hence produce (on a fairly regular basis) continuing record years. Response to these points has been mainly straightforward, which is good (if sometimes a little surprising), but there have been some interesting issues raised as well…


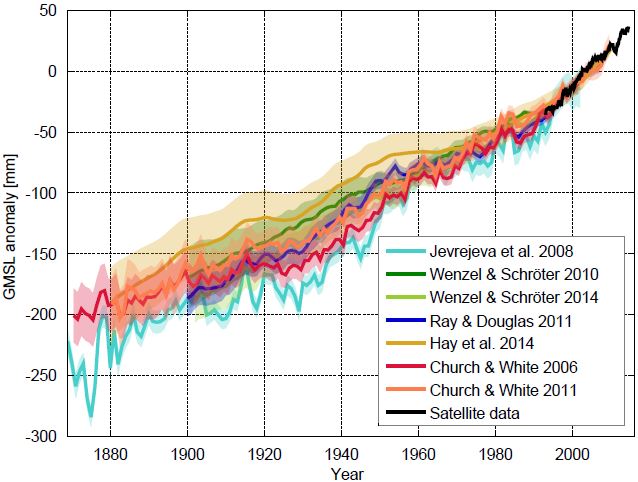

A new sea level curve

The “zoo” of global sea level curves calculated from tide gauge data has grown – tomorrow a new reconstruction by our US colleagues led by Carling Hay of Harvard University will appear in Nature (Hay et al. 2015). That is a good opportunity for an overview of the available data curves. The differences are really in the details; the “big picture” of sea-level rise does not change. In all curves, the current rates of rise are the highest since records began.

The following graph shows the new sea level curve as compared to six known ones.

Fig 1 Sea level curves calculated by different research groups with various methods. The curves show the sea level relative to the satellite era (since 1992). Graph: Klaus Bittermann.

All curves show the well-known modern sea level rise, but the exact extent and time evolution of the rise differ somewhat. Up to about 1970, the new reconstruction of Hay et al. runs at the top of the existing uncertainty range. For the period from 1880 AD, however, it shows the same total increase as the current favorites by Church & White. Starting from 1900 AD it is about 25 mm less. This difference is at the margins of significance: the uncertainty ranges overlap.

References

- C.C. Hay, E. Morrow, R.E. Kopp, and J.X. Mitrovica, "Probabilistic reanalysis of twentieth-century sea-level rise", Nature, vol. 517, pp. 481-484, 2015. http://dx.doi.org/10.1038/nature14093

Diagnosing Causes of Sea Level Rise

Guest post by Sarah G. Purkey and Gregory C. Johnson,

University of Washington / NOAA

I solicited this post from colleagues at the University of Washington. I found their paper particularly interesting because it gets at the question of sea level rise from a combination of ocean altimetry and density (temperature + salinity) data. This kind of measurement and calculation has not really been possible, at least not at this level of detail, until quite recently. A key finding is that one can reconcile the various estimates of the contributions to observed sea level rise only if the significant warming of the deep ocean is accounted for. There was a good write-up in The Guardian back when the paper came out. – Eric Steig

Sea level rise reveals a lot about our changing climate. A rise in the mean sea level can be caused by decreases in ocean density, mostly reflecting an increase in ocean temperature; this is steric sea level rise. It can also be caused by an increase in ocean mass, reflecting a gain of fresh water from land. A third, and smaller, contribution to mean sea level is from glacial isostatic adjustment. The contribution of glacial isostatic adjustment, while small, has a range of possible values and can be a significant source of uncertainty in sea level budgets. Over recent decades, very roughly half of the observed mean sea level rise is owing to changes in ocean density, with the other half owing to the increase in ocean mass, mostly from melting glaciers and polar ice sheets. The exact proportion has been difficult to pin down with great certainty.
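The three contributions named above can be written schematically as a budget. The symbols are introduced here only for illustration and are not taken from the paper:

$$
\Delta h_{\text{total}} = \Delta h_{\text{steric}} + \Delta h_{\text{mass}} + \Delta h_{\text{GIA}}
$$

Here $\Delta h_{\text{steric}}$ is the change due to ocean density, $\Delta h_{\text{mass}}$ the change due to added water from land, and $\Delta h_{\text{GIA}}$ the glacial isostatic adjustment term. The "very roughly half and half" statement corresponds to $\Delta h_{\text{steric}} \approx \Delta h_{\text{mass}}$ over recent decades, with $\Delta h_{\text{GIA}}$ small but uncertain.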

References

- S.G. Purkey, G.C. Johnson, and D.P. Chambers, "Relative contributions of ocean mass and deep steric changes to sea level rise between 1993 and 2013", Journal of Geophysical Research: Oceans, vol. 119, pp. 7509-7522, 2014. http://dx.doi.org/10.1002/2014JC010180