Guest article by William Ruddiman

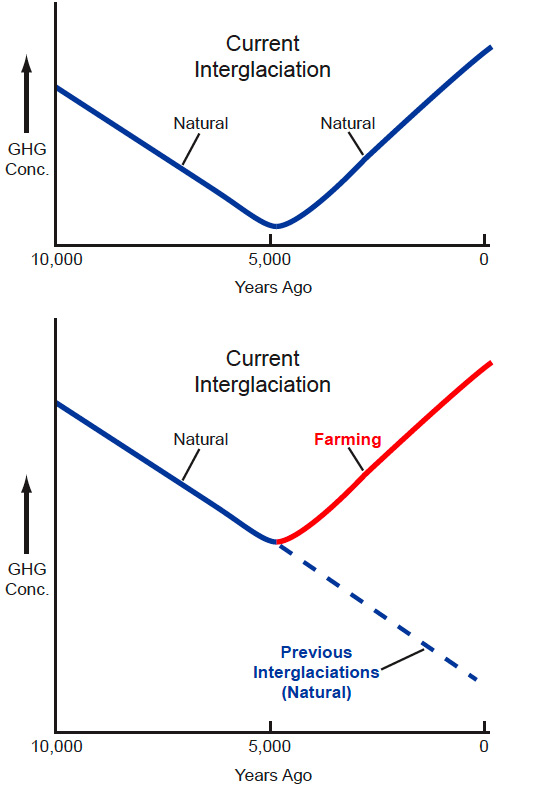

More than 20 years ago, analyses of greenhouse gas concentrations in ice cores showed that downward trends in CO2 and CH4 that had begun around 10,000 years ago later reversed direction, and both gases rose steadily during the last several thousand years. Competing explanations for these increases have invoked either natural changes or anthropogenic emissions. Reasonably convincing evidence for and against both causes has been put forward, and the debate has continued for almost a decade. Figure 1 summarizes these different views.

Last week, there was a