Guest commentary from Paul Bates, Peter Bauer, Tim Palmer, Julia Slingo, Graeme Stephens, Bjorn Stevens, Thomas Stocker and Georg Teutsch

Gavin Schmidt claims that the benefits of k-scale climate models (i.e. global climate models with grid spacing on the order of 1 km) have been “potentially somewhat oversold” in two recent Nature Climate Change papers. By this, we suppose Gavin means that the likely benefits of k-scale cannot justify the cost and time their development demands.

The benefit of k-scale — which is laid out in the papers (Slingo et al., 2022 and Hewitt et al., 2022) and only briefly recapitulated here — is that it enables the use of physics, instead of fitting, to represent some of the most important components of the climate system (precipitating systems in the atmosphere, eddies in the ocean, and topographic relief), and hence build more reliable models.

Those who haven’t been following the technology might be surprised to learn how far we’ve come. Later this year the NICAM group will perform global simulations on a 220 m grid. If they had the Fugaku supercomputer to themselves, they could deliver 1500 years of throughput with their 3.5 km global coupled model within a year. Likewise, at the MPI in Hamburg, benchmarking of a 1.25 km version of ICON on pre-exascale platforms suggests that, over the course of a year, DOE’s Frontier machine could deliver 800 years of coupled 1.25 km output.
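Model throughput is more often benchmarked in simulated years per day (SYPD). The sketch below converts the two figures above into that unit. It assumes the machine runs the model around the clock for 365.25 days a year, which is our assumption and not something stated by the authors; the function name is illustrative.

```python
# Assumption: the machine is dedicated to the model 24/7 for the whole year.
DAYS_PER_YEAR = 365.25


def sypd(sim_years_per_wallclock_year: float) -> float:
    """Convert 'simulated years per wall-clock year' into simulated years per day."""
    return sim_years_per_wallclock_year / DAYS_PER_YEAR


# The two configurations quoted in the paragraph above.
for label, years in [
    ("NICAM 3.5 km coupled, Fugaku", 1500),
    ("ICON 1.25 km coupled, Frontier", 800),
]:
    print(f"{label}: {sypd(years):.2f} SYPD")
```

On that assumption, 1500 and 800 simulated years per year correspond to roughly 4.1 and 2.2 SYPD.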

Yes, investments in science and software engineering will be required to get the most out of k-scale models running on exascale machines. This realization motivates new programmes like Destination Earth (EU), as well as national projects in Germany (WarmWorld) and Switzerland (EXCLAIM). However, if we wish to benefit from the fruits of these efforts, and we must, we will need to better align the development of k-scale models with exascale machines in ways that makes their developing information content accessible by all. How much would this cost? Given access to the hardware, we estimate that about 100 M€/year of additional funding would be sufficient to support the staff needed to use it to make multi-decadal, multi-model, k-scale climate information systems a reality; not by 2080, but by the end of this decade.

We don’t pretend that k-scale modelling will solve every problem, but it will solve many important ones, and put us in a much better position to tackle those that remain. This conviction is rooted in the experiences of scientists world-wide, who perform their numerical experiments and run their weather models at k-scale if they can. It is also why the European Centre, and many leading climate centers are retooling their labs to develop k-scale coupled global models. No-one says that the benefits of high resolution, e.g. in terms of lives saved, have been oversold for weather prediction. Why should the same not be true of climate prediction? If anything is being oversold, it is the idea that business as usual, which favors fitting over physics, will be adequate to address the challenges of a changing climate.

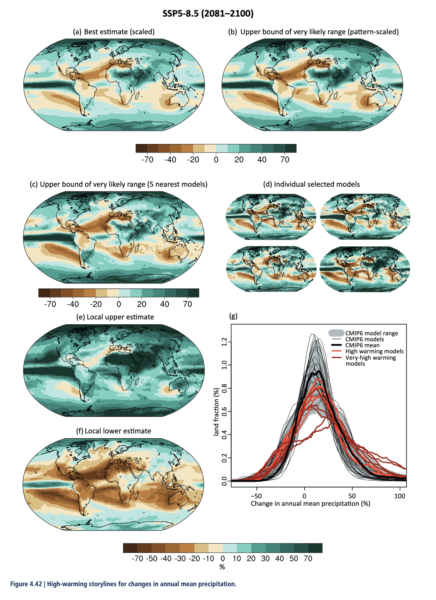

No-one who understands the notion of nonlinearity can be comfortable with the fact that the precipitation biases in CMIP-class models are larger than the signal, and that the oceans, which buffer emissions and export enthalpy to ice sheets, behave like asphalt. As societies begin to confront the reality of climate change, and the existential threats it poses, our science cannot countenance complacency. To build resilience to the changing nature of weather extremes, communities and countries need to know what is coming: more droughts and heat waves, or more storms and floods, and with what intensity, scale and duration? The brutal truth is that we don’t know enough, a fact testified to by, for example, Fig. 3 and Fig. 8 of the SPM, or Fig. 4.42 of the full AR6 report (above).

If six phases of CMIP have taught us anything, it is that a seventh phase based on similar models won’t help. Unless we up the scientific ante, nothing will change.

If people are happy to endorse satellite missions to observe convective cloud systems, surely they should support the development of models which have the capability to assimilate the data from such missions and then exploit them in a physically robust manner? We have no beef with the argument that the current generation of climate models have helped advance climate science; we just happen to be convinced that we can, and must, do better — ideally by working together.

References

- J. Slingo, P. Bates, P. Bauer, S. Belcher, T. Palmer, G. Stephens, B. Stevens, T. Stocker, and G. Teutsch, "Ambitious partnership needed for reliable climate prediction", Nature Climate Change, vol. 12, pp. 499-503, 2022. http://dx.doi.org/10.1038/s41558-022-01384-8

- H. Hewitt, B. Fox-Kemper, B. Pearson, M. Roberts, and D. Klocke, "The small scales of the ocean may hold the key to surprises", Nature Climate Change, vol. 12, pp. 496-499, 2022. http://dx.doi.org/10.1038/s41558-022-01386-6

I’d like to thank Tim and the other authors for engaging on this in order to help pin down where we do and don’t agree.

To start with, where do we agree? I’m sure that “k-scale” climate modeling is likely to be very exciting and will almost certainly have better background climatologies than current climate models. I look forward to seeing some of the preliminary results from the ongoing efforts.

However, this response clearly articulates two points that continue to give me pause.

First, the authors explicitly state that because of the increasing success of higher-resolution weather models, we should just expect similar improvements in climate prediction, and they go on to explain that this will be in the forecasting of extreme events (heat waves, intense rain, droughts, floods, etc.). In my mind, this really requires a demonstration, not just a claim. Why am I sceptical? Weather models clearly benefit from higher resolution because their skill comes from the ability to track dynamical movements in the atmosphere (storm fronts, convective systems, etc.) given initial atmospheric conditions – something clearly tied to the accuracy of the dynamics code through reduced numerical diffusion, sharper fronts, better wave patterns, etc. Climate model skill is measured instead through changes over time in the statistics of the key features (such as temperature or weather events). Clearly, if a particular climate model doesn’t have the resolution to capture intense tropical cyclones, derechos or tornadoes, for instance, one would not expect it to have skillful predictions of their changes. But many extremes are already captured by climate models (heat waves, shifts in the distribution of intense rain, etc.), and their predicted changes are in line with observations. Major tropical cyclones form in these models at resolutions of 4 km, but reasonable distributions of slightly weaker storms occur at 50 or 25 km resolution. But even k-scale models don’t get you to actual tornadoes (perhaps to areas of potential tornadic activity). What would go a long way to convincing me on this is for the k-scale atmospheric models to show that the changes in statistics of extremes, even in a simple 2xCO2 simulation, are distinctly different from what lower-resolution climate models show. It simply isn’t obvious to me that this will inevitably be the case.
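One way to make “distinctly different” concrete is to compare the fractional change in a high percentile of daily precipitation between control and 2xCO2 runs at each resolution, with a bootstrap interval on the difference between resolutions. This is only an illustrative sketch under stated assumptions: the function names are hypothetical, the 99th percentile is an arbitrary choice, the demo series are synthetic gamma-distributed numbers rather than model output, and resampling days independently ignores day-to-day autocorrelation (a block bootstrap would be more defensible).

```python
import numpy as np


def extreme_change(control: np.ndarray, perturbed: np.ndarray, q: float = 99.0) -> float:
    """Fractional change in the q-th percentile between a control and a perturbed run."""
    return float(np.percentile(perturbed, q) / np.percentile(control, q) - 1.0)


def difference_ci(ctrl_lo, pert_lo, ctrl_k, pert_k, q=99.0, n_boot=500, seed=0):
    """95% bootstrap interval for (k-scale change) minus (low-resolution change).

    Each run is resampled independently, day by day; this ignores
    autocorrelation in daily data.
    """
    rng = np.random.default_rng(seed)

    def resample(x):
        return x[rng.integers(0, len(x), len(x))]

    diffs = np.array([
        extreme_change(resample(ctrl_k), resample(pert_k), q)
        - extreme_change(resample(ctrl_lo), resample(pert_lo), q)
        for _ in range(n_boot)
    ])
    return np.percentile(diffs, [2.5, 97.5])


# Purely synthetic stand-in for daily precipitation (mm/day); NOT model output.
rng = np.random.default_rng(1)
ctrl = rng.gamma(shape=0.5, scale=8.0, size=50_000)

# Identical (hypothetical) 7% scaling at both "resolutions".
lo, hi = difference_ci(ctrl, 1.07 * ctrl, ctrl, 1.07 * ctrl)
print(f"95% interval for the difference in fractional change: [{lo:+.3f}, {hi:+.3f}]")
```

With identical inputs at both resolutions, as in the demo, the interval should straddle zero; a difference would only count as distinct if the interval excluded zero.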

The second point that gives me pause is the somewhat curt dismissal of the CMIP process. To counter their point, we have actually learned many things from this almost three-decade-long effort. We have learned that we need initial-condition ensembles to really address internal variability, and that we need to explore structural and parametric uncertainty not only in the physics, but also in the forcings and scenarios. We have learned that oceans, ice sheets, and soils have very long memories that sometimes require thousands of years of spin-up. We have learned that atmospheric chemistry, aerosols and the carbon cycle are a fundamental part of the climate system. We have learned that out-of-sample paleoclimate simulations are essential to build confidence in predictions. The post-CMIP6 developments aren’t yet finalized (and it’s not yet clear that there will be a CMIP7 anytime soon), but it’s not likely that any of these issues will be dropped. For reference, the GISS-E2.1-G model in CMIP6 simulated more than 50,000 years as it completed the various DECK runs and sub-MIPs. This will not be achievable with k-scale models for decades (even accepting the claims above), and so their ability to be compared to current and near-future models will be limited.
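As a rough back-of-envelope check on that last point, suppose a k-scale model ran at the 800 simulated years per wall-clock year quoted in the guest post for 1.25 km ICON on a dedicated Frontier (an optimistic assumption that ignores spin-up, I/O, analysis and queueing on a shared machine). Matching the GISS-E2.1-G CMIP6 workload would then take at least

$$
\frac{50\,000\ \text{simulated years}}{800\ \text{simulated years per year}} = 62.5\ \text{years of dedicated machine time.}
$$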

The authors ask why one would support expensive new observing platforms but not equally large investments in new model efforts. One difference is that observations are forever – the 40+ years of existing satellite data are an invaluable resource for both current and future climate models that can’t be replicated without waiting another 40 years. New instruments observing unexplored processes have the potential to be revolutionary for our understanding. New k-scale climate models might be similarly revolutionary in ways that the currently funded evolutionary efforts are not, but again, this needs to be demonstrated, not just assumed. The process to get a new instrument in space is sometimes decades-long and involves multiple proofs of concept of the technology, lots of compromises, careful preparation, and some luck. I don’t think it’s too much to ask for something similar for new proposed modeling efforts.

Thank you, Gavin, for your thoughtful reply. However, I have to disagree with you on your first point. The UK recently published its 3rd Climate Change Risk Assessment which included, for the first time, km-scale regional modelling. The results showed some big changes in extreme statistics at the k-scale compared with a 12km model. I led the Science Chapter which discusses these results and can be found here: https://www.ukclimaterisk.org/independent-assessment-ccra3/technical-report/. So we already know that we can simulate much more intense and realistic rainfall by resolving the dynamics of the storms. This built on over a decade of experience running a k-scale system in NWP at the Met Office and is a great example of climate gearing off weather.

Furthermore, there has been a lot of research over the last 15 years or so on the importance of resolution in the tropics, using a hierarchy of models down to the k-scale. Some of this was led by my group when I was at Reading University. We showed that only at the k-scale can we correctly simulate the phase of the diurnal cycle in tropical rainfall, the MJO and other convectively coupled equatorial waves. Organised convection and mesoscale convective systems are fundamental to the tropical Warm Pool and are therefore critical drivers of Rossby wave dynamics and global teleconnections. As we argue in Slingo et al. 2022, k-scale is not just about better extreme statistics, but about the zero-order dynamics of the global climate system. The model biases in ENSO that we show reinforce this point.

I have worked on climate models all my career and appreciate the great value that CMIP has contributed to the debate on climate change. But with these new scientific perspectives we need to accept that there may be a different paradigm for providing the climate information that society needs so badly and embrace the challenge of k-scale. I, for one, see no alternative.

Thanks. And thanks for the link to the CCRA3. The statements there about the convection-permitting (regional) model (2.2 km) are certainly food for thought (mostly based on the work of Kendon et al. (2020, https://journals.ametsoc.org/view/journals/clim/33/17/jcliD200089.xml), right?). If I were picking nits, I’d point out that there are still structural issues with the CPM (as evidenced by the potential impact of the Fosser et al. (2019) changes on the results), and that the CPM results for the extremes aren’t obviously better than the RCM (Table 1.1; note there’s an obvious typo in the 38ºC threshold/CPM 2021-2040 box: the median must be greater than “1”). Additionally, it’s clear from Fig. 1.23 that it was only for winter precipitation that the CPM even differs substantively from the RCM or the driving global model. That’s an interesting result, and I have no reason to doubt the explanation given, but it remains to be seen how robust that is, or whether we’d see other impacts elsewhere on the globe. The dependence on the driving model and its climate sensitivity also suggests that better constraints on ECS might have a bigger impact on UK future extremes projections.

As for the tropics and the MJO, etc., I have less expertise there, but I will point out that the representation of the MJO improved enormously in CMIP6 (Orbe et al., 2020).

My overall point is not to discourage anyone from pursuing this line of research, but I am yet to be convinced it is the near-term (1 decade!) panacea for all (or even much) that ails climate modeling. I am indifferent to being proved wrong on this though!

Gavin, in this context I’d like to draw attention to our statement:

“However, if we wish to benefit from the fruits of these efforts, and we must, we will need to better align the development of k-scale models with exascale machines in ways that makes their developing information content accessible by all”

Like it or not, the approaches we advocate are being adopted by the rich countries as a way to save lives and manage infrastructure. If it’s good enough for them, why would you spend your energy nitpicking over the value of extending this capability to the rest of the world?

Once the nits are picked, I’d hope we can start talking about how to approach this professionally and democratically, using science as an example of how we have to work together to solve global problems. One of the things I find appealing about an international center, which gets too little attention, is its ability to engage those who don’t have labs like mine, which can develop their own models, or labs like yours, which can make them better. As a case in point, the expertise in cloud microphysics at GISS would (in my mind) be much better engaged working with models that had updrafts than trying to compensate for errors in models that don’t.

@Julia Slingo: –

” Water is Earth’s life blood and fundamental to our future. Hydro-meteorological extremes (storms, floods and droughts) are among the costliest impacts of climate change, …

threatening our food security, water security, health and infrastructure investments. …

Yet the current generation of global climate models struggles to represent precipitation and related extreme events, especially on local and regional scales. …

As water is an essential resource for humans and ecosystems, these shortcomings complicate efforts to effectively adapt to climate change, particularly in the Global South, and to assess the risk of catastrophic regional changes. ”

ms. — WELCOME to my regional locality to discuss drought and flood events and “new” models.

The last costly catastrophe in my home region (Germany, Rhine catchment area 220,000 km²), almost exactly a year ago on July 15th, cost ~180 lives and caused more than €15 billion in damage in the Ahr Valley (900 km²); the Ahr is a rather small tributary of the Rhine.

The forecast from July 11th looked like this:

https://wetterkanal.kachelmannwetter.com/wp-content/uploads/2021/07/ez11000.png

However, the ECMWF and GFS weather models are coarse-meshed global models that cannot resolve convection. If such a weather situation occurs, it must therefore be assumed that even larger amounts of rain can occur locally in this area. In short: These precipitation amounts of 100 to 200 mm in a coarse-meshed global model are VERY ALARMING – especially in hilly terrain.

The high number of deaths could not have been prevented even with k-scale models: not a liter less rain would have fallen because of a locally higher-resolution weather forecast, and most of those affected down in the Ahr Valley did not care whether the water came from the km² at the back left or the one at the front right.

Rather, it was mistakes in the alarm chain (functioning weather warning –> politics/administration –> population) that led to poor evacuation and crisis management: a communication problem between climate/meteorological science and the rest of the population.

I therefore strongly advocate that climate science move into the regions and localities, and higher-resolution k-scale models are welcome there too. Once there, you can, for example, hike up the Ahr valley and its small tributaries and examine every single km².

–

You may use as many meteorological ground observations in the region as you need to calculate rainfall, soil moisture, groundwater/river levels, incoming/outgoing SW & LW radiation, (soil) temperature, humidity, evaporation, and any other factors you want. Instead of many individual 1 km² cells, preferably observe the 900 km² catchment area, with its irregular outer borders, as a whole.

Create a regional energy balance for this area from:

– incoming solar radiation TOA,
– albedo,
– thermal outgoing TOA,
– solar absorbed surface,
– evapotranspiration,
– sensible heat,
– thermal up/down surface,
– imbalance

to show the region whether and where its climate balance can improve. This also includes monitoring regional CO2 emissions/uptake and expanding renewable energy.
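The budget terms listed above combine through simple arithmetic. The sketch below assumes every term is available as an area-averaged flux in W/m²; the class and function names are hypothetical, and the latent heat of vaporization is an approximate value. For a 900 km² catchment, winds carry energy in and out of the air column, so the atmospheric term is a residual to be estimated, not something that closes to zero locally.

```python
from dataclasses import dataclass

LATENT_HEAT_VAPORIZATION = 2.45e6  # J/kg, approximate value near 20 °C
SECONDS_PER_DAY = 86_400


def et_mm_per_day_to_wm2(et_mm_per_day: float) -> float:
    """1 mm of water over 1 m^2 is 1 kg, so ET (mm/day) * L_v / 86400 s gives W/m^2."""
    return et_mm_per_day * LATENT_HEAT_VAPORIZATION / SECONDS_PER_DAY


@dataclass
class RegionalBudget:
    """Area-averaged fluxes over the catchment, all in W/m^2 (hypothetical structure)."""
    sw_in_toa: float      # incoming solar radiation at TOA
    albedo: float         # planetary albedo seen from TOA (0..1)
    olr_toa: float        # thermal (longwave) outgoing at TOA
    sw_abs_sfc: float     # solar absorbed at the surface
    lw_down_sfc: float    # thermal downward at the surface
    lw_up_sfc: float      # thermal upward from the surface
    latent_sfc: float     # evapotranspiration expressed as latent heat flux
    sensible_sfc: float   # sensible heat flux

    def toa_imbalance(self) -> float:
        """Net downward flux at TOA: absorbed solar minus outgoing longwave."""
        return self.sw_in_toa * (1.0 - self.albedo) - self.olr_toa

    def surface_imbalance(self) -> float:
        """Net flux into ground and water (heat storage), positive downward."""
        return (self.sw_abs_sfc + self.lw_down_sfc
                - self.lw_up_sfc - self.latent_sfc - self.sensible_sfc)

    def atmospheric_residual(self) -> float:
        """Energy gained by the air column above the region; over a small region
        this is balanced mainly by lateral export (or storage), not locally."""
        return self.toa_imbalance() - self.surface_imbalance()
```

Observed evapotranspiration in mm/day would first go through `et_mm_per_day_to_wm2` before being entered as `latent_sfc`.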

The best strategy against drought AND flooding is to distribute water over a large area in the region and to use or create high-volume water reservoirs. This could be a higher organic content in the agricultural soil, artificial rainwater retention areas/cisterns or simple drainage or diversions (sowing and harvesting water) as shown here: https://hidraulicainca.com/

But disused mines, underground pumped storage and even rain barrels on roofs can also add up to a massive retention volume.

Climate science that is willing and able to oversee, plan and organize the implementation of all these measures in the region will be able to make money there too.

The UN needs a fire brigade that actually gets to the places of cause and effect!

The group response says:

I have no idea how a model can be matched to the observations without some amount of fitting. Just consider one aspect related to ENSO. Since ENSO is a standing wave behavior, the standing wave mode needs to be selected. Is this determined completely from the physics?

Or consider tidal analysis, which is a very mature scientific discipline. Even there, a predictive tidal analysis that relies purely on the physics, without back-fitting to known data, is rarely performed. Maybe pure geophysics alone could get close? In that case one would need to know the exact orbital track of the Moon + Sun + Earth system, and also the fluid-dynamics and boundary-condition solution for the local region being analyzed. Instead, what is done is a harmonic fit of all the known tidal cycles to a previously collected time interval. That works very well, and it is anticipated that one day a similar approach will also capture ENSO cycles very accurately.
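The harmonic fit described above amounts to ordinary least squares on cosine/sine pairs at frequencies known in advance. A minimal sketch using four major constituents (M2, S2, K1, O1) on a synthetic record with made-up amplitudes; real tidal analyses use many more constituents plus nodal corrections, and nothing here says anything about ENSO. The function names are illustrative.

```python
import numpy as np

# Periods (hours) of four major constituents: M2, S2 (semidiurnal), K1, O1 (diurnal).
PERIODS_H = [12.4206012, 12.0, 23.9344696, 25.8193417]


def _design(t_h, periods_h):
    """Design matrix: a constant column, then a cos/sin pair per constituent."""
    cols = [np.ones_like(t_h)]
    for p in periods_h:
        w = 2 * np.pi / p
        cols += [np.cos(w * t_h), np.sin(w * t_h)]
    return np.column_stack(cols)


def harmonic_fit(t_h, height, periods_h=PERIODS_H):
    """Least-squares coefficients at the known tidal frequencies."""
    coef, *_ = np.linalg.lstsq(_design(t_h, periods_h), height, rcond=None)
    return coef


def harmonic_predict(coef, t_h, periods_h=PERIODS_H):
    """Evaluate the fitted harmonic series at arbitrary times."""
    return _design(t_h, periods_h) @ coef


# Synthetic "gauge" record (NOT real data): hourly, made-up amplitudes plus noise.
rng = np.random.default_rng(0)
t_fit = np.arange(0.0, 60 * 24)          # 60 days used for fitting
t_new = np.arange(60 * 24, 90 * 24.0)    # the following 30 days, predicted
true_coef = np.array([0.5, 1.0, 0.2, 0.3, 0.1, 0.25, 0.0, 0.15, 0.05])

truth_fit = harmonic_predict(true_coef, t_fit)
coef = harmonic_fit(t_fit, truth_fit + rng.normal(0.0, 0.05, t_fit.size))
err = harmonic_predict(coef, t_new) - harmonic_predict(true_coef, t_new)
rms = np.sqrt(np.mean(err ** 2))
print(f"RMS prediction error over the next 30 days: {rms:.4f} m")
```

Because the frequencies are fixed by astronomy, only amplitudes and phases are fitted, which is why such a fit extrapolates well beyond the fitting window.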

The only problem, Mr. Pukite, is that ENSO is neither a cycle nor a standing wave.

Calling it a cycle is a false and misleading conceptual model, since it is rather a burbling and rumbling, splashing and gushing… non-periodic noise, with amplitudes indeed, but hardly with any reliably predictable frequencies and wavelengths.

Of course it is — it sits in place and cycles back and forth for eternity. That’s a standing wave.

It’s only not predictable in linear terms. There’s an entire discipline devoted to extracting order from chaos — it’s called decryption. The inverse of that is called encryption. Nature could be hiding the key and we would never know it.

I think what he meant is that ENSO is not a cycle in terms of having a fixed wavelength and amplitude. It’s an oscillation, which is not quite the same thing. Not everything that goes up and down repeatedly is a cycle.

Thanks for that remark, Mr. Levenson.

I once also gave Killian a thorough lecture on pattern recognition, signal analysis and understanding.

The general principle of consciousness is that you tend to sense and grasp what you already somehow expect to see, hear and feel, and to suppress and ignore “noise and bullshit”, or whatever hardly interests you, even when those are much stronger signals.

This is a vital property of mind, the LOGOS.

“Nihil est in intellectu quod non prius fuerit in sensu” (“nothing is in the intellect that was not first in the senses”): that is not true. A priori categories, ideas, mentalities and archetypes come first.

This holds for faint planets and galaxies in astronomy and for badly written messages, and further for radio and other listening to faint and dubious signals under noisy conditions. If you have the “concept” of what it is about and what it might entail, then you hear it much better, because the conscious mind “repairs” foggy, dirty holes in the signal.

You are at least able to “glimpse” it.

In the wilderness, what is it really? Is it a troll? A dragon? A bear? Or just common stones, moss and rubbish in the dusk?

Having a false, or rather an unreasonable and implausible, conceptual idea of what kind of thing it might be shields and blocks you from grasping it and finding out.

In that context, that famous “cycle” for anything in climate, weather and geophysics is rather stupid and dilettantish and should be ridiculed, like Hinduistic pseudo-Buddhist New Age folklore cosmology or Marxist-Leninist vulgar pseudo-Hegelianism, which must be scrubbed and rinsed out first.

Because it is not that easy to repeat the success of Milankovitch.

Your basic ideas of what it might possibly be, your basic model concepts, namely your basic EXPERIMENTAL ARCHETYPES, follow you all your life, and if you were unlucky enough to have had ugly priests, GURUs and teachers, such things may poison your brain, your feelings and your thoughts.

The famous cycle and the quite popular cosmic wheel, the pure sine and the straight line, as managed through atomic, molecular, particulate digital DATA LEGO with confidence levels and error bars…

…is one of the least applicable model concepts of Nature and Reality.

Of course it is. Consider that tidal cycles are also a thing, even though the repeat cycle is very long due to the incommensurate nature of the 4 major tidal factors (3 lunar and 1 solar). What is not generally acknowledged is that applying the slightest bit of non-linearity to the complex tidal cycle will make the cycle inscrutable (to put it mildly). A few years ago, I was able to come up with an alternate solution to Laplace’s Tidal Equations applied to ENSO (see Mathematical Geoenergy, Wiley/2018). This formulation looks somewhat like the classical sinusoidal wave solution, but it includes a strong non-linearity that bears some resemblance to the Mach-Zehnder interference modulation well known to spectroscopists.

Now consider that Mach-Zehnder modulation has increasingly been used for optical pattern encryption (see https://www.spiedigitallibrary.org/journals/optical-engineering/volume-61/issue-3/033101/Phase-shifting-hiding-technology-for-fully-phase-based-image-using/10.1117/1.OE.61.3.033101.short ). What does that imply? Well, bad news for anyone who thinks that extracting the periodic signal from ENSO is going to be a walk in the park. Since formulating that non-standard wave solution, I have spent most of my time exploring techniques that can be used to come up with a canonical fit by essentially decrypting/decoding an assumed M-Z modulation of the ENSO signal. The software for doing this is available on GitHub, under /pukpr

Bottom line: Nature does not readily reveal its secrets. So, will more compute cycles reveal them? Will some variation of machine learning reveal them? Or will working smart reveal them?

BPL: “ENSO is not a cycle “

PP: Of course it is.

BPL: Of course it is not, and the more you double down on this obvious mistake, the stupider you look.

Mr. Pukite

A wave that is reflected, comes back mirrored and phase-couples with the incoming wave by non-classical, non-Newtonian forces: that makes a standing wave.

It sticks, glues, phase-couples and drives in space by van der Waals forces.

When it sounds and whistles out of empty air with no moving or oscillating mechanical parts, it does so in the way described, and that is quite common in the universe. Oscillating electrical and electromagnetic currents do it the same way, as for instance in radio transmitters, inside the conducting metal volumes and their electromagnetic fields.

But if the wave breaks and falls into material and energetic chaos, which rather “ends” that wave without reflecting or conducting it further, it is a breaking or falling wave, not a standing wave, and not a cycling thing.

The white breaker chaos at sea, the end and death of waves, should have told you this. And we find the same breakers, burble and hysteresis phenomena in electrically driven Chladni plates under sustained sinusoidal forcing of variable power (W) and frequency (Hz).

And in radio transmitters, if they are driven too hard and in conflict with their boundary conditions: then you get “Wolf im Ton” (a wolf tone), burble and hysteresis there too.

And in musical wind instruments, which are pneumatic oscillators.

And in the experimental water bath, the wind tunnel and the experimental ship tank.

And in oscillating flames and plasma.

A breaking, crushing, splashing and falling wave in the middle of its field in space and time should not be called a standing wave, but taken seriously as what it is, and not in terms of what it is not.

You should think that over one more time, at least for ENSO in the Pacific. “Non-linear” does not save or explain it, and “Psi”, the operator of disobedience and of not understanding things, will not help you either.

A splash, a crack, a foam and a chaos is not a cycle. It does not cycle on further, and it does not reflect or conduct the wave further in time and space.

Any further waves must then originate and rise up anew.

It is a material and phenomenal discontinuity in macroscopic space and time.

This is easily shown on an oscilloscope, and easily found, heard, seen and shown in nature too.

Remember, there is no “Psi” and there are no “aliens”, but they are a famous El Dorado of superstition and human quackery, like the sea dragon and the sea serpent, for instance, and the Gremlins.

There is no “Psi” at the skunkworks either, where they paint their planes with powdered Fe3O4 in a skunky solution to absorb incoming electromagnetic wave signals and transform them into heat. It is skunk and powdered magnetite at work, not “psi … tiniest non-linear…”, which just makes people stupid.

For “yell” and tone in a room you need phase-coupled reflection echo, which is why you do not get it in an echo-free (anechoic) room.

It is not “non-linear effects” that make ENSO hard to understand and predict; it is that the ENSO wave does not circulate, cycle and stand. It breaks, splashes, rumbles and falls.

No problem doubling down. Consider sunspot cycles, even though those are even less of a periodic oscillation than ENSO. ENSO at least is triggered by, or synchronized to, the annual cycle, as the majority of researchers agree; this is also verified by basic signal-processing analysis of the sidebands in the NINO34 time series. Yet sunspot cycles have no fundamental period, just a heuristic connection to an 11-year periodic envelope. I have tried using my software on solar activity data, and haven’t found anything but weak matches to reported heuristics.

https://github.com/pukpr/GeoEnergyMath/wiki/MMF

They are still referred to as sunspot cycles, tho.
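To illustrate what “sidebands” means here (this is not the method in the linked software, and it uses no NINO34 data): a slower oscillation whose amplitude is modulated by the annual cycle shows spectral lines at its own frequency and at the sum and difference with 1 cycle/year. The sketch assumes a hypothetical 48-month carrier, a modulation depth of 0.8 and 100 years of monthly samples, so that every line falls on an exact FFT bin; the function name is illustrative.

```python
import numpy as np


def dominant_periods(n_months=1200, carrier_period=48.0, modulation_depth=0.8, k=3):
    """Periods (months) of the k strongest spectral lines of an annually
    amplitude-modulated sinusoid, sorted from longest to shortest."""
    t = np.arange(n_months)
    annual = np.cos(2 * np.pi * t / 12.0)
    signal = (1.0 + modulation_depth * annual) * np.cos(2 * np.pi * t / carrier_period)
    amp = np.abs(np.fft.rfft(signal)) / n_months
    freqs = np.fft.rfftfreq(n_months, d=1.0)  # cycles per month
    top = np.argsort(amp)[-k:]                # indices of the k largest lines
    return sorted((1.0 / freqs[top]).tolist(), reverse=True)


print(dominant_periods())
```

It should report periods of 48, 16 and 9.6 months: the carrier, the difference line |1/48 − 1/12| and the sum line 1/48 + 1/12 (in cycles per month).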

Mr. P. Pukite @ whut

Then check whether your “software” is well understood and in order on such things.

I use eye and ear measurements and self-made, well-understood hardware, and I find the difference.

With too much positive feedback to itself, your device and method will only document itself, regardless of signal input, and say nothing but “…oooOOOOOOOOOOOOooooooo……..” at one and only one cycle per decade, year, week or second, simply by virtue of its own resistance and capacitance.

So your method and device has obviously gone into eternal spin, from eternity to eternity, regardless of the input signal and regardless of how we beat and knock it at any point from any side; it answers only with that monochromatic “….oooOOOOOOOOOoooooo….!”

…until we resort to more radical means: cut off the power supply, and short-circuit, damp and ground the output of your sublimely professional, scientific and methodical device…

…which seems to have been spinning for a while now from too much positive feedback to its own input…

It only documents its own internal capacitance and resistance in time, by $\nu = 1/(2\pi RC)$.

I would suggest looking at small-scale fluid dynamics experiments to see how the non-linear harmonics arise, and then imagine it scaling up. The harmonics are generated via a wave-breaking mechanism, creating waveforms compatible with the fluid-dynamics equations but not your garden-variety sinusoids.

This paper:

“An experimental study of two-layer liquid sloshing under pitch excitations”, Journal of Fluids, May 2022

https://www.researchgate.net/profile/Dongming-Liu-7/publication/360620472_An_experimental_study_of_two-layer_liquid_sloshing_under_pitch_excitations/links/62822b7f4f1d90417d70f205/An-experimental-study-of-two-layer-liquid-sloshing-under-pitch-excitations.pdf

@BPL:

“ENSO is not a cycle “

PP: Of course it is.

BPL: “Of course it is not, and the more you double down on this obvious mistake, the stupider you look.”

MS. — Nobody here looks stupider than you and @Carbomontanus.

Two more “Silver Lemons” trophies to line up in your boreholes.

And before you insult established climate scientists from NOAA and the Met Office, read through their posts and their definitions of ENSO.

https://oceanservice.noaa.gov/facts/ninonina.html

” Scientists call these phenomena the El Niño-Southern Oscillation (ENSO) cycle. ”

https://www.metoffice.gov.uk/research/climate/seasonal-to-decadal/gpc-outlooks/el-nino-la-nina/enso-description

” These episodes alternate in an irregular inter-annual cycle called the ENSO cycle. ”

So whut ?

A self-proclaimed astrophysicist like you should at least perceive that planets move in large circular orbits. El Niño (the Christ child) is called El Niño because it usually begins around Christmas time.

– Is spring-summer-autumn-winter a cycle?

– Is the Walker circulation a cycle that “only” shifts through ENSO?

– Doesn’t La Nina also shift the water cycles and precipitation from the ocean to land areas?

– Are your heads just too flat to fit all these cycles?

(if you want to say NO to all questions – you have to start reading again at the top)

Whether you’re discussing open/closed systems – circles or other simple things like albedo – you always do it with your snouts amazingly wide open and your output ridiculously flat.

In return, you both have excellent abilities to synchronize your stupidities.

Only for the fact Hr Schürle that you believe rather blindly in the scriptyures here and stumble and are being fooled by your own language, giving a damn to what it is about.

How especially typical of certain Germans.

I have chosen to tease ande po0ison and crush certain keyewords and keye- conscepts of climate surrealism. Smash that cycle and that cycling for instance and they become helpless because they lack words and conscepts, They have got but a cycle to ride against Mann with the scytch ( Der Mann mit der Senze) that scares them so deeply.

(It is no hockeystick, can`t you see that it is a scytch?)

They cannot derive concepts, formulas, and language from actual, relevant experience and learning because they have no concepts except their catechismal, liturgical words such as “cycle” and “cycling” for anything that splashes, sloshes, and moves irregularly within large limits.

I gave them “rumble, burble, sneezing, splashing, roaring… Wolf im Ton (a wolf tone)…”, which is phenomenologically different from “tone” or “Klang” (sound) and has no harmonic, phonetic, numerically ordered Helmholtz spectrum of the kind you find in laminar, coherent, phase-coupled oscillating water drops, Chladni plates, driven radio oscillators with LC resonators and antennas, pneumatically driven wind instruments, and sustained-tone organ pipes.

Those must run laminar, phase-coupled, and coherent, with flexible dynamics and without burble or breakers in the tone.

You also don't give a damn about the rest of physics and chemistry that might guide you critically, and you badger the Mittlere Reife (the German intermediate school-leaving certificate) because you seem to lack it, proclaiming that you are an “artist”; but that is surrealism and Dadaism.

It is not circling or cycling once it is already elliptical, and not at all when it is squeezing, rumbling, sloshing, and splashing.

The slosh, the splash, and the rumble do not cause the next one and are not caused by the previous one. It does not run on an assembly line in classical industrial space, or like a military drill that can be commanded and administered by industrialized dilettantes…

…when it comes to how to analyze, interpret, and predict the weather and El Niños,

Then you and “people” must first respect these elementary realities, so that you do not resort to inadequate and false thoughts and methods.

You should also really stop teaching inadequate, phenomenologically incongruent, and false thoughts and methods for it, which are simply stupefying.

I master laminar, coherent, smooth, harmonic oscillators and deliver highly refined wind instruments at master level of craft, and am famous for it,

because I do not cheat, deny, or ignore such things. I tune finely, and by the same thinking, art, and techniques I can also predict and guarantee complex, smoothly efficient, flexibly dynamic engines.

Hr Pukite

“The wave breaking mechanism, creating waveforms…” does not cause or create any “standing waves” or phase-coupled, coherent, harmonic travelling waves.

No “tone”, only a severe Wolf im Ton (wolf tone).

You seem to be deeply misconceived with your alternative physics there.

It does not reflect any given wave, macro, or movement forms; it ends and kills them forever, so it cannot “cycle” further.

Wake up and remember elastic and inelastic collisions, and reversible versus irreversible processes.

An elastic bump and a total or severe crash are two categorically different things.

In an inelastic crash, matter, energy, and momentum remain, but the material form is lost forever,

and it does not cause the next event of the same kind.

That is caused by what came before and from elsewhere. Call that Psi or Phi and you will never find out.

Your repeating “whut-whut-whut-whut-whut-whut-whut…” from eternity to eternity in your system is well known as “motorboating” in traditional radio and hi-fi systems that have gone into a spin.

Try a small capacitor, a low-pass filter after your input resistance. Use RC = τ.
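For readers unfamiliar with the filter being suggested, here is a minimal Python sketch of a first-order RC low-pass filter. It assumes illustrative, hypothetical component values (R = 10 kΩ, C = 1 µF) and uses a simple discrete single-pole approximation of the analogue circuit. It computes the cutoff frequency f_c = 1/(2πRC), then shows that an oscillation well below f_c passes almost unchanged while one well above f_c is strongly attenuated. It illustrates the filter only and is not a diagnosis of any particular amplifier's motorboating.

```python
import math


def rc_cutoff_hz(r_ohm: float, c_farad: float) -> float:
    """-3 dB cutoff of a first-order RC low-pass: f_c = 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r_ohm * c_farad)


def rc_lowpass(samples, dt: float, tau: float):
    """Discrete single-pole approximation of an RC low-pass with tau = R*C.

    Each step moves the output a fraction alpha = dt / (tau + dt)
    of the way toward the current input sample.
    """
    alpha = dt / (tau + dt)
    y = samples[0] if samples else 0.0
    out = []
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def amplitude(signal, skip: int) -> float:
    """Half the peak-to-peak swing, ignoring the first `skip` samples (start-up transient)."""
    tail = signal[skip:]
    return (max(tail) - min(tail)) / 2.0


if __name__ == "__main__":
    R, C = 10e3, 1e-6   # hypothetical values: 10 kOhm and 1 uF
    tau = R * C         # time constant tau = RC = 0.01 s
    dt = 1e-4           # 10 kHz sampling step
    ts = [n * dt for n in range(20000)]
    slow = [math.sin(2 * math.pi * 1.0 * t) for t in ts]     # 1 Hz, far below f_c
    fast = [math.sin(2 * math.pi * 1000.0 * t) for t in ts]  # 1 kHz, far above f_c
    print(f"f_c = {rc_cutoff_hz(R, C):.1f} Hz")
    print(f"1 Hz amplitude after filter:  {amplitude(rc_lowpass(slow, dt, tau), 5000):.3f}")
    print(f"1 kHz amplitude after filter: {amplitude(rc_lowpass(fast, dt, tau), 5000):.3f}")
```

With these values the cutoff is about 16 Hz, so the 1 Hz signal keeps nearly all of its amplitude while the 1 kHz signal is reduced to under 2% of it.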

It is not due to the moonn or to Saturnus or to the sun, but is an feedback- error of your own system, documenting and displaying hardly anything but itself and its o0wn spinning that way in its own RC, resistance- capacity- field.


Come on, Carbonlifeform, you obviously aren’t trying hard enough. Spotted this paper in my inbox today — “On the Natural Causes of Global Warming” https://journalijecc.com/index.php/IJECC/article/view/30803

Abstract: “It is demonstrated that temperature anomalies in 1900 – 2009 are inextricably linked to the speed of the magnetic North Pole, used as a proxy for the Earth’s magnetic field; and UFO sightings, used as a proxy for energy transfer from the near-terrestrial space to the Earth’s atmosphere. Also explained is why 2010 – 2016 was a very unusual period and how it affected global temperature and the environment.”

International Journal of Environment and Climate Change (April 2022)

The key you missed is UFO sightings! Might not be due to carbon lifeforms! UGW: Unidentified Global Warming.

I wonder if k-scale models could better capture land characteristics, such as stable soil organics and water retention. These processes are important to the global cycling of carbon and water.

Perhaps a local enhancement of the greenhouse effect is observed in profiles over areas undergoing erosion, i.e. in σT⁴(surface) − OLR, the surface longwave emission minus the outgoing longwave radiation.
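The quantity named here, the greenhouse effect G = σT_s⁴ − OLR, is simple to compute once surface temperature and outgoing longwave radiation are known. Below is a minimal Python sketch. It assumes a blackbody surface by default (emissivity = 1) and uses round global-mean values (T_s ≈ 288 K, OLR ≈ 240 W m⁻²) purely as a sanity check. Testing the erosion idea would need observed T_s and OLR over eroding and non-eroding areas, which are not supplied here. The normalized form g = G / (σT_s⁴) is a common way to compare regions with different surface temperatures.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4 (CODATA value, truncated)


def surface_emission(ts_k: float, emissivity: float = 1.0) -> float:
    """Longwave emission of the surface, eps * sigma * Ts^4, in W m^-2."""
    return emissivity * STEFAN_BOLTZMANN * ts_k ** 4


def greenhouse_effect(ts_k: float, olr_w_m2: float, emissivity: float = 1.0) -> float:
    """G = surface emission - OLR: longwave energy trapped by the atmosphere (W m^-2)."""
    return surface_emission(ts_k, emissivity) - olr_w_m2


def normalized_greenhouse_effect(ts_k: float, olr_w_m2: float, emissivity: float = 1.0) -> float:
    """g = G / surface emission: fraction of surface emission that does not escape to space."""
    return greenhouse_effect(ts_k, olr_w_m2, emissivity) / surface_emission(ts_k, emissivity)


if __name__ == "__main__":
    # Round, illustrative global-mean values, not observations for any eroding region.
    ts, olr = 288.0, 240.0
    print(f"G = {greenhouse_effect(ts, olr):.1f} W m^-2")
    print(f"g = {normalized_greenhouse_effect(ts, olr):.3f}")
```

For these round numbers G comes out at roughly 150 W m⁻² and g at a little under 0.39. A local "greenhouse enhancement" over eroding land would show up as a larger G or g than over comparable intact land.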

Once land is cleared, loss of soil organics proceeds for up to 100 years before usually settling below 1%. A commenter on a previous thread was asking how loss of soil organics can accumulate. It is a function of the land area cleared, the duration of time, and land management practices. This would be valuable to model.

https://www.researchgate.net/publication/29659561_Application_of_sustainability_indicators_soil_organic_matter_and_electrical_conductivity_to_resource_management_in_the_northern_grains_region/figures

This has significance to water cycles, carbon cycles, and surface energy budgets.

I noticed CNBC recently used a NASA visualization to demonstrate the coincidence between soil tillage in spring & fall, harvest, and annual CO2 cycles. Rates of erosion (loss of stable soil organics and dust production) follow a similar cycle.

https://youtu.be/NJhpoYwAqFA?t=248

In 1989 a Washington policy quarterly asked me to review the state of climate modeling as reported by the NAS in Global Change and Our Common Future, and in Jim Hansen’s then-recent Senate testimony. As today, more computation for less money was sought, but there were already glimmerings of k-scale regional modeling.

I noted in The National Interest that it was “an uncomfortable time” to be called upon to testify to Congress:

“there is presently no joy for atmospheric scientists in having to testify that the answers policy-makers seek are beyond the scope of the available data or the present limits of computational power. Nor is there consolation in the grim realization that their computerized global circulation models have but an ephemeral capacity to predict the future.

They can jump forward to model the climate of the distant future on a “what if” basis, but they can at best conjure up a coarsely realistic picture of global weather that lasts for a few weeks before beginning to disintegrate into gibberish. Even modeling the evolution of a single thunderhead’s birth and death is an absolute tour de force of today’s computer modeling….

“‘What bothers a lot of us is,’ one modeler remarked, ‘telling Congress things we are reluctant to say ourselves.’”

Small wonder: the computer on which I wrote my plea for more computational power boasted 256 K of RAM and ran at a blazing 16 megahertz, and I was looking forward to the release of the first Mac pizza so I could watch NCAR’s Pac-Man-resolution models live.

The story is set in an unnamed global climate modelling centre on the west coast of Europe. A smartly dressed visiting scientist is taking photographs when he notices a shabbily dressed local model developer reading the latest IPCC report while running, on his laptop, a single-column atmospheric model on a challenging stratocumulus-to-cumulus transition case study. The visiting scientist is disappointed with the model developer’s apparently lazy attitude towards his work, so he approaches him and asks why he is running a single-column model over a remote limited area in the North Pacific rather than a k-scale global climate model. The model developer explains that he ran a 150-km global version of his atmospheric model in the morning and found enough uncertainty in the atmospheric boundary-layer and cloud microphysical schemes to keep him investigating for the next two months.

The visiting scientist tells him that if he ran a high-resolution global climate model, he would get much sexier results and much more funding, could buy a more powerful supercomputer, ask for more money, buy another one in less than half a decade, and so on. The visiting scientist further explains that one day the model developer could even run a k-scale global climate model that could tell him how strong the Equilibrium Climate Sensitivity is and how close to Clausius-Clapeyron the rate of increase in heavy precipitation would be after a CO2 doubling.

The nonchalant model developer asks, “Then what?”

The scientist enthusiastically continues, “Then, without a care for your publication record, you could sit and read the IPCC reports from WG2 and WG3.”

“But I’m already doing that”, says the model developer.

The enlightened visiting scientist walks away pensively, with no trace of envy for the model developer, only a little pity.

Freely adapted from the short story by Heinrich Böll

It’s good we have these debates, but at the end of the day we as a community have to decide whether the cost/benefit ratio of this project is worth it or not (not only for science, but also for those who will benefit from improved climate predictions). If we simply pick at nits but avoid answering the big question, we simply sow doubt in the minds of those who might be minded to fund the type of international centre we are advocating. As a recent UK Govt Chief Scientific Advisor told me when I briefed him on our proposal: “come back when your community speaks with one voice”.

If we agree that it is worth it, then we have to say so, nits and other inevitable uncertainties notwithstanding. We have tried to argue that by comparison with other big projects (LHC, James Webb, etc.) this is not a big deal at all. But neither are the costs negligible.

What’s your view on this, Gavin? Worth it or not?

Hi Tim, my feeling is that it is not only a go/no-go question but also a question of when. I agree that, in the absolute, saving more lives is always a good reason for conducting more ambitious research. Yet ultra-high-resolution global climate modelling may not be the quantum leap that you claim and may therefore not be the priority. Once other scientists have helped us decarbonize energy and reduce the electricity needed by supercomputers, it will still be possible to improve our NWP and S2S forecasting systems in a warmer climate, one that more parsimonious approaches and, unfortunately, a growing number of observations will have enabled us to characterise well enough to implement the necessary adaptation (and mitigation) policies.

By focusing on this particular project, as opposed to asking more generally how we could help people benefit from current and foreseeable future climate-related projects, you are skewing the discussion. An attempt to impose a pre-decided approach as a consensus for agencies as opposed to having a consensus emerge organically is more likely to raise hackles than funds.

In discussions I’ve had with US agencies, other funders and other centers, many ideas have been proposed that could greatly improve decision-makers’ ability to both prepare for changes and hone their mitigation efforts. Many of those ideas relate to better accessibility and customization of existing data sources, and linking climate and air quality and public health issues coherently. I note that DestinE has a new ‘Data Lake’ as a core component that would serve a similar role. IMO developing national (and perhaps international) facilities focused on the needs of end users of climate data is a higher priority than using a million times more computational power to marginally improve the statistics on a single season’s rainfall regionally. I think I could also make the case that better integration (of remote sensing, reanalyses, in situ observations and climate models) on a custom data analytics platform would also enable leaps forward in the science as we would be able to truly move towards more process-based analysis and model development.

This is not to deny the interest in k-scale modeling or to prevent the current projects you mention above from moving forward, but rather it is a call for a more balanced and integrated approach that supports the multiple ways in which we (collectively) can make a bigger difference.

I’ll make one further point. Other big physics projects you mention did not spring into existence ex nihilo. They are the product of literally decades of work on prototypes, proofs of concept, competing designs, tests, changes of course, delays, problems, and (continual!) debate. And the SSC demonstrated that there is no guarantee you’ll even get there in the end. If that’s the road that you envisage our community going down, you can’t short-circuit all of that.

The supporters of the k-scale model might be right; the problem is timing. We don’t have time to improve the climate models, and we might or might not have enough time for solutions. I want to believe we have time for solutions, so we need to focus on and invest in them.

Speaking of solutions, 100 M€/year could create n wind or solar utilities, n EVs, n railways, n rewilding projects, n sustainable farms, etc. In other words, the opportunity cost of the k-model project is too high.

Quote from Peter Kalmus’ book “Being the Change”: “There’s already enough science to know what we need to do, and we’re not doing it. – Ken Caldeira, climate scientist”

Right now, the problem is devolving to how much time an individual scientist might want to devote to k-scale. A set of labs are already developing models, so we will get some feedback about what they add to the modeling. Gavin is basically saying that it is not worth his time to explore this type of modeling; that time can be better devoted to higher priority problems.

There is no need to argue about whether we should or shouldn’t do this. Some are doing it, and we will see whether the results match some of the expectations. I am trying to picture how such a monster model would be initialized, especially if biogeochemical data are thrown in.

Chill, Mitch.

Like quantum computing, monstrosity is still in its infancy.

I am enjoying reading the discussion and would like to take a slightly different angle to this. What I have read so far is about climate sensitivity and other “large-scale” issues. I note that those happen to be affected by pretty small-scale processes, but I think we are all aware of that. I have also read about extremes and our inability to nail the details around them. The focus on either or both of these topics is missing what I see as one of the biggest needs for the next decades and might well be the reason for the polarised debate.

Based on the broad-scale climate information current approaches (including but not limited to modelling) can provide, most of the world has decided to build carbon-neutral economies over the next few decades. We now must implement the actual measures to achieve the broad-scale mitigation targets. First and foremost this means deploying renewables at a scale never seen before. I would like to point out what should be obvious but seems to be forgotten: our already weather-dependent economies will become much more so! And I am not talking about the headline disruptive events that are extremes; I am talking about day-to-day weather. If you are sceptical, I recommend following up on the material on the 2021 wind drought over Europe, such as https://energypost.eu/climate-change-wind-droughts-and-the-implications-for-wind-energy/ or, for the more meteorologically minded: https://climate.copernicus.eu/esotc/2021/low-winds

To support this deployment of huge resources we need to know the future of our weather, which requires us to simulate not only realistic mean-rainfall patterns, Hadley Cell boundaries, ENSO variability and jet-stream locations, but the day to day variability in wind, sunshine and rainfall. These are not statistical entities, but they are causally linked to weather systems, such as fronts, cyclones, breaking Rossby waves, …. I am yet to meet a climate modeller who would claim that their model can simulate these systems with any fidelity. And importantly, it is the same weather systems all over the globe (even in the tropics!), that shape the long term averages and extremes discussed by everyone above.

In an earlier contribution the question of why Numerical Weather Prediction has anything to do with this discussion was raised. Well, what we learned in NWP is that simulating realistic weather systems requires significantly higher resolution than that of contemporary climate models. What we are still learning is that turning the realistic simulation of these systems into a realistic simulation of the “weather” (think wind and sunshine to stay on the topic of carbon neutral), requires higher resolutions still. Add to this the ability of k-scale models to remove a massive uncertainty in our current systems – the parametrisation of convection, which I worked on improving most of my scientific life – and it can only boggle my mind why we would not aim to run k-scale models for climate problems.

Much of the framing of the discussion also seems to be an either/or. Why is that? Do we really believe that k-scale modelling will stop groups from working with low-resolution models to explore the climate system’s behaviour? There is no evidence in other parts of the field and also not in other fields. The formation of ECMWF in the late 70s has not stopped global weather prediction in other countries. What it did though is enable scientists from countries who could not afford global NWP systems to engage in building them. Wouldn’t that be something. An international frontier climate modelling centre that all scientists across the globe, not just those in rich countries, could join and contribute to.

In summary, I believe that for us to continue to do very nice science on the climate system and learn more of the things mentioned in many comments above, evolving the status quo might well be adequate, although we would not have found the Higgs boson taking that approach. But, to be true to our increasingly frequent claim that we do this to serve society and support the critical decisions ahead, we need the step change in our modelling capabilities that global k-scale modelling brings. It needn’t be either/or but if we do not unite behind this cause as a major capability need, we will fail society in supporting it through the critical next 2 decades.

My two cents:

K-scale models are extremely important and need to be further developed with high priority. At the same time it would be foolish to limit all the funding and HPC resources to k-scale models until (a) we have fully coupled Storm-Resolving Models that have a reasonable Top-of-Atmosphere balance and do not drift away within years or months like current versions, and (b) we can afford to run moderate single-model initial-condition ensembles with these models. Yes, k-scale models are very promising for studying extremes. But to evaluate the added value, and particularly to inform decisions, we need good enough statistics, as nicely shown in the Deser et al. and follow-up papers. To this end, 2-3 decades of coupled simulations, let alone just a few years, are not enough.

Please recall, everybody, how tedious reading repetitive accusations of stupidity and the like gets. Snark is one thing, as at least it has entertainment value. Flat-out name calling is just a bore. (Yes, I’m reminding myself, too.)

“Scrollin’, scrollin’, scrollin’…

Scrollin’, scrollin’, scrollin’…

Scrollin’, scrollin’, scrollin’…

No find!

Scrollin’, scrollin’, scrollin’…

Though my eyes are swollen,

See those posters trollin’,

No find!”

For the younger (& non-North American) crowd, an explainer for that:

https://www.youtube.com/watch?v=PFGyhSifqzA

Kevin, this is nothing; there is nothing at stake here. Compare it with the cruelty of trying to pursue actual research in the earth sciences; read Mike’s entire Twitter thread:

https://twitter.com/IceSheetMike/status/1544690862029819904

You seem to be making an equivalence that would never have occurred to me.

I was saying “Please don’t insult people,” which is somewhat like “Please don’t leave the toilet seat up, or the toothpaste uncapped.” A plea for manners. Active discrimination is a whole ‘nother thing.

All of which said, manners still matter.

Kevin, that’s the equivalence I made, since I was the one accused of being stupid by two other commenters in this post. Not a big deal in the greater scheme of things.

I regret that the insult was made. Indeed, I’m sure it was in the background of my mind when I wrote the comment above.

I really appreciate reading the group contribution (the article) and the discussion that followed. I’ve read papers by many of the authors.

The amount of money outlined in “Ambitious partnership needed for reliable climate prediction” seems very small in comparison with the scale of the problem and any adaptation costs.

When will the underselling of M scale climate modeling cease?

Visual acuity is finite, and the escalation of computer and TV screen resolution may slow a few powers of two past 8K, as the number of pixels displayed exceeds the number of rod and cone cells in the human retina.
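The pixels-versus-retina comparison is simple arithmetic. Below is a minimal Python sketch. It assumes roughly 120 million rods and 6 million cones per retina; these are commonly quoted round figures, and published estimates vary. It also takes "16K" and "32K" to mean doubling both dimensions of 8K UHD. Each doubling of linear resolution quadruples the pixel count, so under these assumptions the pixel count passes the photoreceptor count one doubling beyond 8K.

```python
# Display resolutions as (width, height) in pixels; each step doubles both dimensions.
RESOLUTIONS = {
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
    "16K": (15360, 8640),
    "32K": (30720, 17280),
}

# Assumed round photoreceptor counts for one human retina (estimates vary).
ROD_CELLS = 120_000_000
CONE_CELLS = 6_000_000
PHOTORECEPTORS = ROD_CELLS + CONE_CELLS


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels on a width x height display."""
    return width * height


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        px = pixel_count(w, h)
        ratio = px / PHOTORECEPTORS
        print(f"{name:>7}: {px / 1e6:7.1f} Mpx = {ratio:5.2f} x photoreceptor count")
```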

As the ambition of computational hydrodynamics knows only economic bounds, the demand for higher grid resolution may continue until modelers recall the Borges Limit: modeling a planet at native resolution may tend to exhaust the supply of real estate available for other purposes, as the chip architecture needed to realize M-scale models may require in excess of 1 M2 of silicon.