Two opinion pieces (Slingo et al., and Hewitt et al.) and a supportive Nature Climate Change editorial were published this week, extolling the prospects for what they call “k-scale” climate modeling. These are models that would have grid boxes around 1 to 2 km in the horizontal – some 50 times smaller than what was used in the CMIP6 models. This would be an enormous technical challenge and, while it undoubtedly would propel the science forward, the proclaimed benefits and timeline are perhaps being somewhat oversold.

The technical wall to climb

Climate model resolution has always lagged the finest-scale weather model resolution which, for instance, at ECMWF is now around 9km. This follows from the need to run for much longer simulation periods (centuries, as opposed to days; a factor of ~5000 more computation), and to include more components of the climate system (the full ocean, atmospheric chemistry, aerosols, bio-geochemistry, ice sheets etc.; another factor of 2). These additional cost factors (~10,000 in total) for climate models can be expressed in terms of resolution, since each doubling of resolution leads to about a factor of 10 increase in cost (2 horizontal dimensions, half the timestep, and a not-quite proportionate increase in vertical resolution and/or complexity). A factor of 10,000 therefore corresponds to about four doublings, so you would expect climate model grid spacings to be around 2⁴ = 16 times larger than those of current weather models (and lo, 16*9km is ~144km).
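This back-of-the-envelope arithmetic is easy to check in a few lines. The sketch below is only an illustration, assuming (as the paragraph does) a ~10× cost increase per doubling of resolution, the ~5000× and ~2× cost factors, and a 9 km weather-model grid; the function names are made up for this example.

```python
import math

# Order-of-magnitude cost factors quoted in the text (estimates, not measurements)
LONGER_RUNS = 5000        # centuries of simulation instead of days
MORE_COMPONENTS = 2       # full ocean, chemistry, aerosols, ice sheets, ...
COST_PER_DOUBLING = 10    # ~2 (x) * 2 (y) * 2 (timestep) * a bit more in the vertical

WEATHER_DX_KM = 9.0       # finest current ECMWF weather-model grid spacing


def doublings_affordable(extra_cost: float) -> float:
    """How many halvings of grid spacing an extra cost factor corresponds to."""
    return math.log(extra_cost) / math.log(COST_PER_DOUBLING)


def expected_climate_dx(weather_dx_km: float, extra_cost: float) -> float:
    """Grid spacing a climate model ends up with if it 'pays' the extra cost in resolution."""
    return weather_dx_km * 2 ** doublings_affordable(extra_cost)


extra = LONGER_RUNS * MORE_COMPONENTS      # ~10^4
n = doublings_affordable(extra)            # ~4 doublings
print(f"extra cost ~{extra}, ~{n:.1f} doublings, "
      f"ratio ~{2 ** n:.0f}x, climate grid ~{expected_climate_dx(WEATHER_DX_KM, extra):.0f} km")
```

Run as is, it reports about 4 doublings, a resolution ratio of ~16 and a climate grid of ~144 km, matching the numbers in the text.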

For standard climate models to get to ~1 km resolution then, we need at least a 10⁶ increase in computation. For reference, effective computational capacity is increasing at about a factor of 10 per decade. At face value then, one would not expect k-scale climate models to be standard before around 2080. Of course, special efforts can be made to push the boundaries well before then and indeed these efforts are underway and referenced in the papers linked above. But, to be clear, many things will need to be sacrificed to get there early (initial condition ensembles, forcing ensembles, parameter ensembles, interactive chemistry, biogeochemical cycles, paleoclimate etc.) which are currently thought of as essential in order to bracket internal and structural uncertainties.
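The same scaling gives a rough timeline. A minimal sketch, assuming the ~10× cost per doubling from the previous paragraph, ~10× per decade growth in effective capacity, and 2022 (the year of the papers) as a starting point; the 50× and 100× refinement factors are chosen here to bracket the "some 50 times smaller" than CMIP6 grids mentioned at the top.

```python
import math

COST_PER_DOUBLING = 10   # ~10x more computation per halving of grid spacing
GROWTH_PER_DECADE = 10   # effective computational capacity, ~10x per decade
START_YEAR = 2022        # assumption: year the k-scale papers appeared


def cost_factor(refinement: float) -> float:
    """Extra computation needed to make the grid `refinement` times finer."""
    return COST_PER_DOUBLING ** math.log2(refinement)


def years_to_afford(cost: float) -> float:
    """Years of hardware growth needed before `cost` becomes routine."""
    return 10 * math.log(cost) / math.log(GROWTH_PER_DECADE)


for refinement in (50, 100):
    c = cost_factor(refinement)
    print(f"{refinement}x finer: cost ~10^{math.log10(c):.1f}, "
          f"~{years_to_afford(c):.0f} years of growth")

print("a 10^6 increase becomes routine around", round(START_YEAR + years_to_afford(1e6)))
```

Under these assumptions a 10⁶ increase takes about 60 years (so roughly 2080), and the 50× to 100× range spans roughly 56 to 66 years.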

Both the Slingo and Hewitt papers suggest some shortcuts that could help – machine learning to replace computationally expensive parameterizations for instance, but since part of the attraction in k-scale modeling is that many parameterizations will no longer be needed, there is a limited role there for this. More dedicated hardware has also been posited instead of using general purpose computing and that has historically helped (temporarily) in other fields of physics. Unfortunately, the market for climate modeling supercomputers is not large enough to really drive the industry forward on its own, and so it’s almost inevitable that general purpose computing will advance faster than application-specific bespoke machines.

The ‘ask’ is therefore considerable.

Potential breakthroughs

Increasing resolution in climate models has, historically, allowed for new emergent behaviour and improved climatology. However, the big question in climate prediction is the sensitivity to changing drivers and this has not shown much correlation (if any) with resolution. Conceptually, it’s easy to imagine cases where an improved background climate sharpens the predictions of change, e.g. in regions with strong precipitation gradients. Similarly, one can imagine (as the authors of Hewitt et al. do) that the sensitivity of ocean circulation will be radically different when mesoscale eddies are explicitly included. Indeed, the worth of these models will rely almost entirely on these kinds of new rectification effects where the inclusion of smaller scales makes the larger scale response different. If the k-scale models produce similar dynamic sensitivities of the North Atlantic overturning circulation or the jet stream position as current models, that would be interesting and confirming, but I think would also be slightly disappointing.

There are real and important targets for these models at regional scales. One would be the interaction of the ocean and the ice sheets in the ice shelf cavity regions around Antarctica, but note we are still ignorant of key boundary conditions (like the shape of the cavities!), and so more observations would also be needed. In the atmosphere, the mesoscale organization of convection would clearly be another target. Indeed, there are multiple other areas where such models could be tapped to provide insight or parametrizations for the more standard models. All of this is interesting and relevant.

Missing context?

But neither of the comments nor the editorial discusses the issue of climate sensitivity in the standard sense (the warming of the planet if CO2 is doubled). The reason is obvious. The sensitivity variations in the existing CMIP6 ensemble are both broader than the observational constraints and are mostly due to variations in cloud micro-physics which would still need to be parameterized in k-scale models. Thus there is no expectation (as far as I can tell) that k-scale models would lead to models converging on the ‘right’ value of the climate sensitivity. Similarly, aerosol-cloud interactions are the most important forcing uncertainty and, again, this is a micro-physical issue that will not be much affected by better dynamics. Since these are the biggest structural uncertainties at present (IMO), we can’t look to k-scale models to help reduce them. Note too, that it will be much longer before k-scale models are used in the paleo-climate configurations (e.g. Zhu et al., 2022) that have proved so useful in building credibility (or not) for the shifts predicted by current climate models.

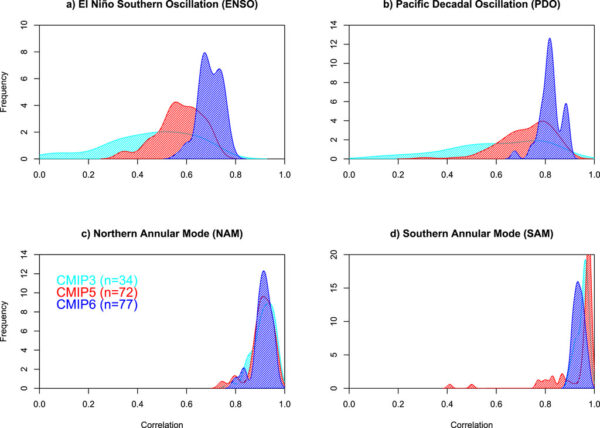

More subtly, I often get the impression from these kinds of articles that progress in climate modeling has otherwise come to a standstill. Indeed, in the Slingo et al. paper, they use Fiedler et al. (2020) (in Box 1) to suggest that rainfall biases in response to El Niño have ‘remained largely unchanged over two decades’. However, Fiedler et al. actually say that “CMIP5 witnessed a marked improvement in the amplitude of the precipitation signal for the composite El Niño events. This improvement is maintained by CMIP6, which additionally shows a slight improvement in the spatial pattern”. This result is consistent with results from just the US models (Orbe et al., 2020), where the correlations related to ENSO and PDO have markedly improved in the latest round of models.

Perhaps this is a glass half-full issue, and while I agree there is a ways to go, the models have already come far. Based on previous successes, I assume that this progress will continue even with the normal process of model improvement.

A hybrid way forward?

Some modeling groups will continue to prioritize higher model resolution and that’s fine. We may even see cross-group and cross-nation initiatives to accelerate progress (though it probably won’t be as rapid as implied). But we are not going to be in a situation where these efforts can be the only approaches for many decades. Thus, in my view, we should be planning for an integrated effort that supports cutting edge higher resolution work, but that also improves the pipeline for parameterisation development, calibration and tuning for the standard models (which will be naturally increasing their resolution over time though at a slower rate). We should also be investing in hybrid efforts, where for instance, the highest resolution operational weather model ensemble (currently at 18km resolution) could be run with snapshots of ocean temperatures and sea ice from 1.5ºC or 2ºC worlds derived from the coupled climate models. Do these simulations give different statistics for extremes than the originating coupled climate model? Can we show that the hybrid set-up is more skillful in hindcast mode? We could learn an enormous amount using existing technology with only minimal additional investment. Indeed, these simulations could be key ‘proof of concept’ tests that could support some of the more speculative statements being used to justify a full k-scale effort.

And so…

Back in 1997, the Japanese Earth Simulator came online with similar ambitions to the aims outlined in these papers. Much progress was made with the technology, with the IO, and with the performance of the climate model. Beautiful visualizations were created. Yet I have the impression that the impact on the wider climate model effort has not been that profound. Are there any parameterizations in use that used the ES as the source of the high resolution data? (My knowledge of how the various Japanese climate modeling efforts intersect is scant, so I may be wrong on this. If so, please let me know).

I worry that these new proposed efforts will be focused more on exercising flashy new hardware than on providing insight and usable datasets. I worry that implicit claims that climate model prediction will be as improved by higher resolution as weather forecasts have been will backfire. I also worry that the excitement of shiny new models will lead to a neglect of the workhorse climate model systems that we will still need for many years (decades!) to come.

Over the years, we have heard frequent claims that paradigm-shattering high resolution climate models are just around the corner and that they will revolutionize climate modeling. At some point this will be true – but perhaps not quite yet.

References

- J. Slingo, P. Bates, P. Bauer, S. Belcher, T. Palmer, G. Stephens, B. Stevens, T. Stocker, and G. Teutsch, "Ambitious partnership needed for reliable climate prediction", Nature Climate Change, vol. 12, pp. 499-503, 2022. http://dx.doi.org/10.1038/s41558-022-01384-8

- H. Hewitt, B. Fox-Kemper, B. Pearson, M. Roberts, and D. Klocke, "The small scales of the ocean may hold the key to surprises", Nature Climate Change, vol. 12, pp. 496-499, 2022. http://dx.doi.org/10.1038/s41558-022-01386-6

- "Think big and model small", Nature Climate Change, vol. 12, p. 493, 2022. http://dx.doi.org/10.1038/s41558-022-01399-1

- J. Zhu, B.L. Otto‐Bliesner, E.C. Brady, A. Gettelman, J.T. Bacmeister, R.B. Neale, C.J. Poulsen, J.K. Shaw, Z.S. McGraw, and J.E. Kay, "LGM Paleoclimate Constraints Inform Cloud Parameterizations and Equilibrium Climate Sensitivity in CESM2", Journal of Advances in Modeling Earth Systems, vol. 14, 2022. http://dx.doi.org/10.1029/2021MS002776

- S. Fiedler, T. Crueger, R. D’Agostino, K. Peters, T. Becker, D. Leutwyler, L. Paccini, J. Burdanowitz, S.A. Buehler, A.U. Cortes, T. Dauhut, D. Dommenget, K. Fraedrich, L. Jungandreas, N. Maher, A.K. Naumann, M. Rugenstein, M. Sakradzija, H. Schmidt, F. Sielmann, C. Stephan, C. Timmreck, X. Zhu, and B. Stevens, "Simulated Tropical Precipitation Assessed across Three Major Phases of the Coupled Model Intercomparison Project (CMIP)", Monthly Weather Review, vol. 148, pp. 3653-3680, 2020. http://dx.doi.org/10.1175/MWR-D-19-0404.1

- C. Orbe, L. Van Roekel, Á.F. Adames, A. Dezfuli, J. Fasullo, P.J. Gleckler, J. Lee, W. Li, L. Nazarenko, G.A. Schmidt, K.R. Sperber, and M. Zhao, "Representation of Modes of Variability in Six U.S. Climate Models", Journal of Climate, vol. 33, pp. 7591-7617, 2020. http://dx.doi.org/10.1175/JCLI-D-19-0956.1

Geophysical modelers have complained of lack of computational power since the punchcard days of the International Geophysical Year.

Until Moore’s Law cut in in earnest at the end of the 20th Century, global circulation modeling remained too coarse to sustain physical realism for long, and modelers candidly conceded that it would take decades of further parameter research and a vast acceleration of computational speed to move past the limits of uncertainty.

This became a source of great political vexation, and considerable glee at the American Petroleum Institute, but as the models advanced and the weather started to catch up with them, parametric uncertainty began to recede and the scientific foundations of a disinterested consensus began to emerge.

Computational grids operating on the scale of naked eye meteorology are seriously good news, but now as ever it remains to be seen how true believers and professional publicists on all sides of the Climate Wars will react.

Will they be governed by the upshift to the point of rewriting their playbooks as fast as reality dictates, or will they persevere in deploying one set of facts for the construction of public opinion, and another for the advancement of public understanding of climate science?

“Back in 1997, the Japanese Earth Simulator came online with similar ambitions to the aims outlined in these papers. Much progress was made with the technology, with the IO, and with the performance of the climate model. Beautiful visualizations were created. Yet I have the impression that the impact on the wider climate model effort has not been that profound”

Really?

Gavin, now more than ever the world lives on images, and none are more universal than animations of the Earth.

As W.H. Auden noted in The Dyer’s Hand

“Now it is not artists who collectively decide what is sacred truth, but scientists, or the scientific politicians, who are responsible for forming mankind in the true faith. Under them, an artist becomes a mere technician, an expert in effective expression, and who is hired to express effectively what the scientist-politician requires to be said”.

It is technically and intellectually exciting, and I understand why modelers in any field want to improve the accuracy of their predictions, all the more so in a field as consequential as climate science.

However, the current climate models have proved accurate enough to act on. Why we don’t act, and invest all our resources, including financial ones, in action instead of in better models, is difficult to understand. It is not better models that will convince the ignorant, obscurantists and vested interests that our fossil-based way of living poses an existential threat to life. It is action by the rest of us.

As regards computational power, I think one solution might be shared computing. Since 2011 I have been running the https://www.climateprediction.net/ models on my computer. It is a good use of idle capacity and a valuable way to contribute to science together with many other global citizens.

Interesting, thanks, Gavin!

The tension you describe is one that has parallels in other related realms, including some discussed here on RC. I think most of us are prone to underestimate the difficulties involved in transforming complex systems, whether they be communal science enterprises, energy economies, or social structures writ large. That’s not to say that profound transformations are either impossible or undesirable, of course. But in many contexts, it may take rather more time than we’d either like, or have.

Maybe a comparison from a different field, ecology, is appropriate here. My experience with planning for the environmental impact of building projects is that, if somebody really wants to build regardless of the impact, the study of possible avoidance measures or continuous ecological functionality measures (CEF) becomes more and more detailed, until it gets so abstract that anything planned seems to fulfill the requirements of nature protection law, at least on paper.

By “has remained largely unchained” did you mean “largely unchanged?”

[Response: Ha. Yes I did. – gavin]

A couple of comments went into spam by mistake and I didn’t realize before deleting them. Please resubmit if you can. Sorry.

Disclaimer: I am a layman. My first impression is that this push for k-scale modeling is motivated less by the Science than by dreams of Empire. The Big Iron required usually brings big funding lines and requires a small army of personnel for its care and feeding.

Besides my concerns about Empire, another concern troubles me about this push for k-scale modeling. The suggestions of machine learning raise similar concern. Climate science promises some prediction of the directions Climate Chaos might take and their time frames. I am past worry about climate sensitivity to CO2 given how little action or effect I expect from efforts to slow, or better halt, adding greenhouse gases to the atmosphere. It might be nice to know what we currently know to 1 km resolution, but I would far rather know at a coarser granularity what happens after the nonlinear components of the climate models begin to more plainly manifest. How much knowledge and understanding about climate might be lost or hidden in the coefficients of machine learning — or parameterizations for that matter? How much might efforts at achieving greater resolutions focus attention away from greater efforts to discover and characterize the unknown components of climate that have not been modelled? Given a choice, I would far rather see efforts to compute climates further out into the future — which also makes demands for Big Iron — but before that, I would hope more effort were spent seeking to learn and understand the unknown and unmodelled. My awareness about paleoclimate is largely limited to Hansen et al. 2016 and Dr. White’s 2014 Nye Lecture — it appears Humankind is in for a rough ride. I am not sure how well the existing models capture all the effects that might explain the climate that paleoclimates suggest could be in our future. I want to know how rough the ride could be, where to move for the future, and what kinds of climate will first challenge efforts to adapt. I believe 9 km resolution is sufficient resolution in space for those purposes.

Much as I monitor climate science I also monitor molecular biology. A lot of money and effort has gone into computing ever more accurate descriptions of protein structures. The hope is that function follows form, that knowing the structure of proteins we might better understand their functions and how they operate and interact with other proteins and the menagerie of molecules in the cell. There are ample databases of protein structure and 3-D viewers to use for exploring them. But after many decades of computing ever more protein structures to ever greater accuracy, I cannot but wonder how much efforts to achieve accuracy have diverted funds and attention from efforts to understand how proteins function.

Greater accuracy is a nice metric for assessing the results of a research contract. I do not believe it provides much measure of gain in knowledge and understanding of a problem. Greater accuracy too easily supplants other concerns, and especially so when it also levers funding lines and personnel for an aspiring manager of science.

Yes, you have important points there.

Is the particle a consequence of the whole, or is the whole a consequence (sum and product) of the particles?

People live and think according to different and contradictory views there.

Is the house a function of the brick, or is the brick a consequence of the house? And many people are automatically damned sure of what they think about that.

Is the wholeness in the aquarium or the test tube a function of dry, mechanical, material atomic and molecular statistics with confidence intervals and error bars?

I heard this at the institute of forest research: in years past they were all climbing the trees in the woods doing forest research. But now they have all got desktop PCs that are supposed to help them write more of the scientific articles we live by (publish or perish). Yet they are all sitting alone in their offices playing computer games on their PCs.

We had an Atrium with flowers and trees where we could sit together for lunch and discuss flowers and trees also. And there it was understood, said, and confessed.

“what happens after the nonlinear components of the climate models”

One has to realize that there are infinitely more possible non-linear formulations than linear ones (proof: raise any linear relationship to a real-valued power; there are infinitely many real numbers). What that means is that using linear methods to find solutions may be equivalent to the drunk looking for his car-keys under the streetlamp (“a type of observational bias that occurs when people only search for something where it is easiest to look.”).

Someone or some machine has to explore the territory there as there are no other options.

Is this the future of climate modeling posed in a recent thread? https://www.realclimate.org/index.php/archives/2022/03/the-future-of-climate-modeling/

Hewitt et al state: “Small-scale ocean processes will also play a role in the response to mitigation efforts and possible overshoots of global temperature, given their role in heat and carbon uptake by the ocean”

Slingo et al state: “The failure of models to capture the observed rainfall response limits confidence in predictions of the current and future impacts of El Niño, and may disproportionately affect regions of the world where population growth is largest and the needed capital for adaptation is the scarcest.”

Dare we say that better predictive models could save “countless lives”?

People can invest in computational solutions any way they want. It’s their money after all. Yet, there are always ways to work smart and come up with modeling solutions on the cheap. I just re-read math wunderkind Terry Tao’s analysis on the shallow water wave equation here: https://terrytao.wordpress.com/2011/03/13/the-shallow-water-wave-equation-and-tsunami-propagation/. Pay attention to the ansatz, as that leads the way.

It’s going to be real fun at a scale of 1 km to specify the historic (let alone the paleohistoric) surface structure.

At a 1 km scale it’s going to be more than amusing to reconstruct the historical surface furniture (let alone the paleo) during a run

Hi Gavin, thanks for the post.

I sometimes wonder whether the IPCC should consider writing a summary for climate scientists. As a CLA of the AR6 WG1 Chapter 8, I was (to be honest, not so much) surprised by the Slingo et al. opinion paper, which I would not put in the same category as the more careful and better-argued article by Hewitt et al.

The AR6 assessment of high-resolution models (including convection-permitting Regional Climate Models) is obviously biased towards their benefits, given that nobody wants to run such monsters without showing a somehow “positive effect” (rather than a real added value in most cases). In spite of this systematically ignored “confirmation bias”, the AR6 WG1 Ch8 remains careful in its conclusions (cf. Section 8.5.2.1) and might have been even more so without the strong lobbying of the proponents of high resolution.

Of course, transient climate simulations with ultra-high-resolution global climate models are still not available and cannot be assessed. Yet, there are at least two more reasons not to rush in this direction:

– we still need the current-generation GCMs to quantify (changes in) the internal climate variability (large initial condition ensembles) and to better explore the parametric uncertainties (perturbed parameter ensembles) so that we should stop discouraging model developers by arguing that the current-generation GCMs are all the same and all fail at capturing key phenomena, especially when it may not be true (e.g., https://doi.org/10.1088/1748-9326/abd351)

– we may want to wait and see whether the key findings of early (and not so clean) convection-permitting RCM intercomparisons (e.g., https://doi.org/10.1007/s00382-021-05657-4) do suggest better model agreement (for regional climate change) than the former generation of RCMs.

More importantly, the AR6 WG3 SPM states that “All global modelled pathways that limit warming to 1.5°C (>50%) with no or limited overshoot, and those that limit warming to 2°C (>67%), involve rapid and deep and in most cases immediate GHG emission reductions in ALL sectors.” Can we really sit on this conclusion after years of convincing our contemporaries that there is a real (and serious) climate issue?

The model reliability assessment challenge cannot, IMHO, be met for parametrized (read non-mechanistic) models. The only available approach here is hindcasting. The conclusions we are trying to support by the modelling are all in the future, where an additional factor is progressively more significant: us.

In fact, it’s the effects of this factor that we are most interested in. It’s what most of the conclusions drawn are about: we must stop this, we must start that etc. Hindcasting can’t tell you anything about how good a model is going to be at forecasting climate impacted by something that hasn’t played a role in your paleoclimate dataset. AI is no help either: you can’t have a training set for AI filled with data from the future, not without a time machine.

So there’s no way we can trust heuristic models to predict emergent behavior. And there’s no way we can look at an emergent factor (us) and call it both relevant for conclusions we draw – and irrelevant for the models we use.

Sorry but IMHO we’ll have to do the actual holistic mechanistic science to have any confidence in our forecasts for the real emergent world. Old models are not good enough, and new meshing with old models is not good enough either. What might help is a new paradigm.

I fully agree, Gavin. Another illustration: more than 25 years ago I already had these discussions with people developing high-resolution ocean models which resolve eddies, who claimed that the sensitivity of the Atlantic Overturning Circulation could be very different compared to coarse models with parameterised eddy effects. The idea was that eddies might make the AMOC more stable. Since then, I have not seen any evidence supporting this claim. Let me know whether I missed anything. Instead, paleoclimate evidence has convincingly supported the instability of the AMOC.

I see a great danger of megalomaniac projects like k-scale climate models sapping the climate science funding which would be much more effectively utilised by a host of smaller projects. The lure of BIG tech projects is very tempting for politicians, and a BIG computer running a BIG model is a prestige thing they can show off with, like a flashy big telescope, but it’s probably not the best way to spend limited research funds.

Mr. Schmidt

This is more difficult, but I may come in later when I see what the other people have to say.

Model-making, and worshipping the same, amounts to a violation of the second commandment of Moses, i.e. it is not sustainable! It brings bad luck to your grandchildren and great-grandchildren.

A. Einstein and N. Bohr (both of them Jews who knew better) explicitly warned and advised against it from their own experience and understanding.

I must specify this, so that Dr. G Schmidt also gets a longer mask.

“Professor Bohr, do you believe in the existence of the electron?…”

“…Hmmmm… NO!

I cannot believe that it exists in the same way as the tables, chairs, windows and doors, blackboard, chalk and sponge that we see around us here in this room. But the devices on the laboratory desk by which we can assume its existence, they do exist!”

Bohr was a passionate amateur football player and began his career with hydraulic experiments in the water bath, so he was apparently very observant and aware of the present, local and practical situation of cosmology.

In my physical chemistry textbook I find Niels Bohr quoted:

“We must renounce all attempts to visualize or to explain classically the behaviour of the electron during its transition from one stationary state to the other. …This raised a storm of controversy among philosophers and physicists!”

It is the typical Bohr point of view, where we can glimpse a still very worshipful Jew.

Einstein and Bohr came together and had a chat for a while. That was a typical Jewish scholarly chat, two cocks in the same basket.

Einstein hated Bohr’s discontinuity and indeterminism. Einstein obviously believed in the continuum, and that it can be determined all the way. So he said: “GOD does not play dice!”

to which Bohr replied: “Albert, do not tell GOD how to play!”

Einstein was once asked whether it had meant anything and whether he had had any advantage of being a Jew. To which he replied:

“When all is said and done, I may have had an advantage after all. Because we Jews have a certain ceremonial training, due to the second commandment, not to make any pictures or carved images. So we are ceremonially trained in thinking without having to make pictures and models of it.

In my work on the theories of relativity, where you definitely cannot make any visualization or 3D sculptured models, this may have been to my advantage!”

I say: very fine, thank you, because the same rule indeed also applies to the microcosmos, to proper chemistry in the flasks and in the test tubes. Nothing is more inhibiting to proper and efficient chemical thought and work than those dry, material atomic and molecular particles with mechanical spiral-spring bonds between the grains.

I have found that I have, all in all, 5 different ways of writing the electron and the chemical bond on the blackboard. But keep the sponge at hand and keep it wet, because they are all false statements about what it really is.

All this, I believe, applies further to macroscopic and climate modeling, display and forecasts in virtual reality.

But you can already train on the weather forecasts and, quite importantly, make such forecasts for yourself too. Then go out and look and feel, see and observe whether it really is so and whether you are right or misconceived, because that is really what it is about. For this we have the institute of meteorology to help us. They are also wrong many times, but follow them and see and judge how they think and how they develop, or not. Be your own amateur meteorologist and climatologist as well as you can, and see whether you can beat them or not.

All weather prophets worth taking seriously do it that way. They follow the best meteorological institutes quite minutely and make up their minds for themselves.

Might it be possible to compress the data array with a wavelet transform, and do less math on the compressed data while keeping similar resolution? If one adjusts the gamma on a wavelet-compressed image, is that operation done on the compressed data, or does the data have to be expanded first? https://link.springer.com/article/10.1007/s12650-021-00813-8
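On the gamma question, a small illustration may help. The sketch below assumes a plain orthonormal Haar wavelet and simple "keep the largest coefficients" compression (not necessarily what the linked paper does), applied to a synthetic 1-D signal. Because the wavelet transform is linear, linear operations such as brightness scaling can be applied directly to the compressed coefficients, whereas a gamma curve is nonlinear and in general has to be applied after the data are expanded. The helper names are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(x):
    """Full orthonormal 1-D Haar transform; len(x) must be a power of two."""
    out = [float(v) for v in x]
    n = len(out)
    while n > 1:
        half = n // 2
        pairs = [(out[2 * i], out[2 * i + 1]) for i in range(half)]
        out[:half] = [(a + b) / SQRT2 for a, b in pairs]    # smooth coefficients
        out[half:n] = [(a - b) / SQRT2 for a, b in pairs]   # detail coefficients
        n = half
    return out


def haar_inverse(c):
    """Inverse of haar_forward: rebuild one level at a time, coarse to fine."""
    out = list(c)
    n = 1
    while n < len(out):
        avg, det = out[:n], out[n:2 * n]
        rebuilt = []
        for a, d in zip(avg, det):
            rebuilt += [(a + d) / SQRT2, (a - d) / SQRT2]
        out[:2 * n] = rebuilt
        n *= 2
    return out


def compress(c, keep):
    """Lossy compression: zero all but the `keep` largest-magnitude coefficients."""
    order = sorted(range(len(c)), key=lambda i: abs(c[i]), reverse=True)
    kept = set(order[:keep])
    return [v if i in kept else 0.0 for i, v in enumerate(c)]


def close(u, v, tol=1e-9):
    """Element-wise comparison of two equal-length sequences."""
    return all(abs(a - b) <= tol for a, b in zip(u, v))


# Synthetic, strictly positive 1-D "image row" (illustration only)
signal = [10 + math.sin(3 * math.pi * i / 63) for i in range(64)]
coeffs = compress(haar_forward(signal), keep=16)
approx = haar_inverse(coeffs)

# Linear operation (brightness scaling): same result in either space
print(close(haar_inverse([2 * v for v in coeffs]), [2 * v for v in approx]))  # True

# Nonlinear operation (gamma = 0.5): applying it to the coefficients gives something else
gamma = 0.5
on_data = [max(v, 0.0) ** gamma for v in approx]
on_coeffs = haar_inverse([math.copysign(abs(v) ** gamma, v) for v in coeffs])
print(close(on_data, on_coeffs))  # False
```

Linear operators commute with the transform, so they can act on the coefficients; nonlinear ones (a gamma curve, or nonlinear terms in general) usually cannot.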

Try both and compare the results. :) I don’t like to compress the data, since I don’t mind the math.

Moderator: please delete if not appropriate.

If anyone is seriously interested in exploring the output from a set of k-scale seasonal simulations by ECMWF [1], please express your interest and register at:

https://www.olcf.ornl.gov/ifs-nr-data-hackathon/

Model output is available at all model levels, every 3 hours, for an NDJF (November to February) seasonal experimental nature run (atmosphere only) using an ECMWF IFS T7999 configuration.

[1] Wedi, N. P., Polichtchouk, I., Dueben, P., Anantharaj, V. G., Bauer, P., Boussetta, S., et al. (2020). A baseline for global weather and climate simulations at 1 km resolution. Journal of Advances in Modeling Earth Systems, 12, e2020MS002192. https://doi.org/10.1029/2020MS002192
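For readers wondering how a spectral label like "T7999" relates to the "1 km" in the title of [1], here is a rough conversion. It assumes the cubic-octahedral ("TCo") grid used in ECMWF's IFS, where truncation N is paired with an octahedral reduced Gaussian grid O(N+1) containing 4M(M+9) points for M = N+1, and it takes the mean spacing as the square root of the Earth's surface area per grid point. This is a back-of-the-envelope sketch, not ECMWF's official definition of resolution.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def octahedral_points(m: int) -> int:
    """Number of grid points on an octahedral reduced Gaussian grid O<m>."""
    return 4 * m * (m + 9)


def mean_spacing_km(truncation: int) -> float:
    """Approximate mean grid spacing for TCo<truncation> (assumes grid O<truncation+1>)."""
    area = 4 * math.pi * EARTH_RADIUS_KM ** 2
    return math.sqrt(area / octahedral_points(truncation + 1))


for t in (1279, 7999):
    print(f"TCo{t}: {octahedral_points(t + 1):,} points, ~{mean_spacing_km(t):.1f} km")
```

This gives roughly 9 km for TCo1279 (in line with the ~9 km ECMWF figure quoted in the post) and roughly 1.4 km for a TCo7999 grid.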