Not small enough to ignore, nor big enough to despair.

There is a new review paper on climate sensitivity published today (Sherwood et al., 2020 (preprint)) that gives the most thorough and coherent picture of what we can infer about the sensitivity of climate to increasing CO2. The paper is exhaustive (and exhausting, coming in at 166 preprint pages!) and concludes that equilibrium climate sensitivity is likely between 2.3 and 4.5 K, and very likely to be between 2.0 and 5.7 K.

For those looking for some context on climate sensitivity (what it is, how we can constrain it from observations, and what observations are available), browse our previous discussions on the topic: On Sensitivity, Sensitive but Unclassified Part 1 + Part 2, A bit more sensitive, and Reflections on Ringberg, among others. In this post, I’ll focus on what is new about this review.

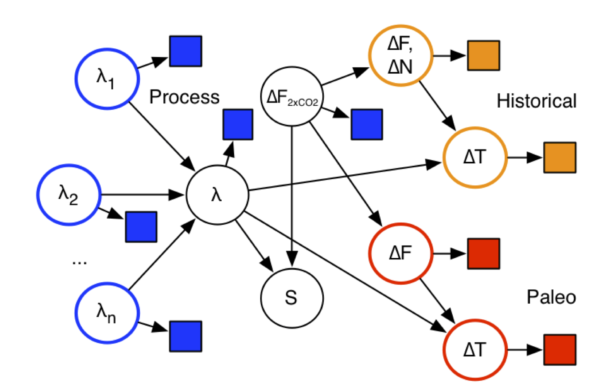

Climate Sensitivity is constrained by multiple sets of observations

The first thing to note about this study is that it attempts to include relevant information from three classes of constraints: processes observed in the real climate, the historical changes in climate since the 19th Century, and paleo-climate changes from the last ice age (20,000 years ago) and the Pliocene (3 million years ago) (see figure 1). Each constraint has (mostly independent) uncertainties, whether in the spatial pattern of sea surface temperatures, or quality of proxy temperature records, or aerosol impacts on clouds, but the impacts of these are assessed as part of the process.

Importantly, all the constraints are applied to a coherent, yet simple, energy balance model for the climate. This is based on the standard ‘temperature change = energy in – energy out + feedbacks’ formula that people have used before, but it explicitly tries to take into account issues like the spatial variations of temperature, non-temperature-related adjustments to the forcings, and the time/space variation in feedbacks. This leads to more parameters that need to be constrained, but the paper tries to do this with independent information. The alternative is to assume these factors don’t matter (i.e. set the parameters to a fixed number with no uncertainty), and then end up with mismatches across the different classes of constraints that are due to the structural inadequacy of the underlying model.
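A minimal sketch of the linear energy-balance relation this builds on, assuming the common form N = F + λT (N: top-of-atmosphere imbalance, F: forcing, T: warming, λ: net feedback parameter, negative for a stable climate) and an illustrative doubling forcing of 4 W/m². The paper's model adds pattern effects, adjustments and time-varying feedbacks that this sketch leaves out.

```python
# Minimal linear energy-balance sketch, assuming N = F + lam * T.
F_2X = 4.0  # illustrative forcing for doubled CO2, W/m^2 (assumption)


def toa_imbalance(forcing, lam, warming):
    """Top-of-atmosphere imbalance N (W/m^2) for forcing F, feedback lam (W/m^2/K), warming T (K)."""
    return forcing + lam * warming


def equilibrium_warming(forcing, lam):
    """Warming at which N returns to zero: T = -F / lam (needs lam < 0)."""
    if lam >= 0:
        raise ValueError("lam must be negative for a stable climate")
    return -forcing / lam


def sensitivity(lam, f_2x=F_2X):
    """Sensitivity S: equilibrium warming for a doubling of CO2."""
    return equilibrium_warming(f_2x, lam)


if __name__ == "__main__":
    lam = -1.3  # hypothetical net feedback, W/m^2/K
    s = sensitivity(lam)
    print(f"S = {s:.2f} K")  # S = 3.08 K
```

In this form, a larger (less negative) feedback parameter means a higher sensitivity, which is why so much of the uncertainty analysis is framed in terms of constraining λ.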

This is fundamentally a Bayesian approach, and there is inevitably some subjectivity when it comes to assessing the initial uncertainty in the parameters that are being sampled. However, because the subjective priors are explicit, they can be adjusted based on anyone else’s judgement and the consequences worked out. Attempts to avoid subjectivity (so-called ‘objective’ Bayesian approaches) end up with unjustifiable priors (things that no-one would have suggested before the calculation) whose mathematical properties are more important than their realism.
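To make the point about explicit priors concrete: once the prior is written down, swapping it for someone else's is a one-line change and the consequences can be computed directly. A minimal grid sketch, assuming a hypothetical Gaussian constraint λ ≈ -1.3 ± 0.4 W/m²/K and F = 4 W/m² (illustrative numbers, not the paper's); it compares a prior uniform in λ with one uniform in S.

```python
import math

F_2X = 4.0  # illustrative doubling forcing, W/m^2 (assumption)

# Evenly spaced grid over the net feedback lam (W/m^2/K), stable climates only.
N_GRID = 4001
LAM = [-4.0 + i * 3.8 / (N_GRID - 1) for i in range(N_GRID)]
S = [-F_2X / lam for lam in LAM]  # S rises monotonically along the grid

# Hypothetical observational constraint: lam ~ -1.3 +/- 0.4 (Gaussian likelihood).
LIKELIHOOD = [math.exp(-0.5 * ((lam + 1.3) / 0.4) ** 2) for lam in LAM]

# Two explicit priors, both expressed as densities over lam.
PRIOR_UNIFORM_LAM = [1.0] * N_GRID
# Uniform in S becomes a density in lam proportional to |dS/dlam| = F / lam^2.
PRIOR_UNIFORM_S = [F_2X / lam ** 2 for lam in LAM]


def posterior_median_s(prior):
    """Median of S under the posterior proportional to prior * likelihood on the grid."""
    weights = [p * like for p, like in zip(prior, LIKELIHOOD)]
    total = sum(weights)
    cumulative = 0.0
    for w, s in zip(weights, S):
        cumulative += w / total
        if cumulative >= 0.5:
            return s
    return S[-1]


for name, prior in (("uniform in lam", PRIOR_UNIFORM_LAM), ("uniform in S", PRIOR_UNIFORM_S)):
    print(f"{name:>15}: posterior median S = {posterior_median_s(prior):.2f} K")
```

With the same likelihood, the prior that is uniform in S pulls the posterior toward higher sensitivities, which is exactly the kind of consequence that can be checked once priors are explicit.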

What sensitivity is being constrained?

There are a number of definitions of climate sensitivity in the literature, varying in what is included and in how easy they are to calculate and to apply. No single definition is perfect for every application, so there is always a need to translate between them. For practicality, and so as not to preclude future increases in the scope of climate models, this paper focuses on the Effective Climate Sensitivity (Gregory et al., 2004), based on the 150-year response to an abrupt quadrupling of CO2, which is a little smaller than the Equilibrium Climate Sensitivity in most climate models (Dunne et al., 2020). It can allow for a wider range of feedbacks than the standard Charney sensitivity, but it limits the very long-term feedbacks because of its focus on the first 150 years of the response.
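The effective sensitivity is usually diagnosed with the regression method of Gregory et al. (2004): regress the top-of-atmosphere imbalance N against the warming T over the abrupt-4xCO2 run; the intercept estimates the forcing and the slope the feedback parameter, and S is taken as half of -F/λ. A minimal sketch on synthetic data (the forcing, feedback, response timescales and noise are invented for illustration; this is not real model output), assuming forcing is logarithmic so that a quadrupling counts as two doublings.

```python
import math
import random

F_4X_TRUE = 8.0  # assumed forcing for abrupt 4xCO2, W/m^2 (synthetic)
LAM_TRUE = -1.2  # assumed constant feedback, W/m^2/K (synthetic)


def synthetic_abrupt_4x(years=150, noise_sd=0.3, seed=0):
    """Synthetic annual (T, N) pairs from a two-timescale response; not real model output."""
    rng = random.Random(seed)
    t_eq = -F_4X_TRUE / LAM_TRUE
    temps, imbalances = [], []
    for year in range(1, years + 1):
        t = t_eq * (0.6 * (1 - math.exp(-year / 4.0)) + 0.4 * (1 - math.exp(-year / 250.0)))
        temps.append(t)
        imbalances.append(F_4X_TRUE + LAM_TRUE * t + rng.gauss(0.0, noise_sd))
    return temps, imbalances


def ols(x, y):
    """Ordinary least squares fit y = intercept + slope * x; returns (intercept, slope)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


temps, imbalances = synthetic_abrupt_4x()
f_4x_hat, lam_hat = ols(temps, imbalances)
s_eff = -f_4x_hat / lam_hat / 2  # halve the 4xCO2 value to get a per-doubling sensitivity
print(f"F_4x = {f_4x_hat:.2f} W/m^2, lam = {lam_hat:.2f} W/m^2/K, S_eff = {s_eff:.2f} K")
```

Because the synthetic feedback is held constant, the regression recovers it; in real models the points curve over time, which is one reason the effective sensitivity comes out a little below the equilibrium value.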

Issues

Each class of constraint has its own issues. For the paleo-climate constraints, the uncertainties relate to the fidelity of the temperature reconstructions and knowledge of the forcings (greenhouse gas levels, ice sheet extent and height, etc.). Subtler issues are whether ice sheet forcing has the same impact per W/m2 as greenhouse gas forcing (e.g. Stap et al., 2019), and whether there is an asymmetry between climates colder and warmer than today. For the transient constraints, there are questions about how the pattern of sea surface temperature change over the last century differs from what we’ll see in the long term, and about the implications of aerosol forcing over the twentieth century, which is still quite uncertain.

The process-based constraints, sometimes called emergent constraints, face challenges in enumerating all the relevant processes and finding enough variability in the observational records to assess their sensitivity. This is particularly hard for cloud-related feedbacks, the most uncertain part of the sensitivity.

The paper goes through each of these issues in somewhat painful detail, highlighting as it does so areas that could do with further research.

Putting it together

There have been a few earlier papers that tried to blend these three classes of constraints, notably Annan and Hargreaves (2006), but doing so credibly while accounting for possible shared assumptions has been difficult. This paper explicitly looks at the sensitivity to the (subjective) priors, the quality of the evidence, and reasonable estimates of missing information. Notably, the paper also addresses how wrong the assumptions would need to be to have a notable impact on the final results.
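Why combining lines of evidence narrows the range, and why shared assumptions matter: if the constraints are independent, their likelihoods multiply. A minimal grid sketch with three hypothetical Gaussian constraints on λ (values invented for illustration, not taken from the paper), a uniform prior in λ, and an assumed F = 4 W/m².

```python
import math

F_2X = 4.0  # illustrative doubling forcing, W/m^2 (assumption)
N_GRID = 4001
LAM = [-4.0 + i * 3.8 / (N_GRID - 1) for i in range(N_GRID)]  # uniform prior on this grid
S = [-F_2X / lam for lam in LAM]


def gaussian_likelihood(mean, sd):
    return [math.exp(-0.5 * ((lam - mean) / sd) ** 2) for lam in LAM]


# Hypothetical constraints on lam (W/m^2/K), invented for illustration only.
LINES = {
    "process": gaussian_likelihood(-1.2, 0.5),
    "historical": gaussian_likelihood(-1.4, 0.6),
    "paleo": gaussian_likelihood(-1.3, 0.5),
}


def central_range(weights, lo=0.17, hi=0.83):
    """Central credible interval of S from unnormalised weights on the lam grid."""
    total = sum(weights)
    cumulative, lower, upper = 0.0, S[0], S[-1]
    found_lower = False
    for w, s in zip(weights, S):
        cumulative += w / total
        if not found_lower and cumulative >= lo:
            lower, found_lower = s, True
        if cumulative >= hi:
            upper = s
            break
    return lower, upper


# Assuming independence, the combined likelihood is the product of the individual ones.
combined = [1.0] * N_GRID
for like in LINES.values():
    combined = [c * x for c, x in zip(combined, like)]

ranges = {name: central_range(like) for name, like in LINES.items()}
ranges["combined"] = central_range(combined)
for name, (lower, upper) in ranges.items():
    print(f"{name:>10}: likely S range {lower:.1f}-{upper:.1f} K")
```

If two lines of evidence share an assumption, multiplying their likelihoods double-counts it and the combined range comes out too narrow; that is the difficulty of doing this credibly that the paragraph above describes.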

Bottom line

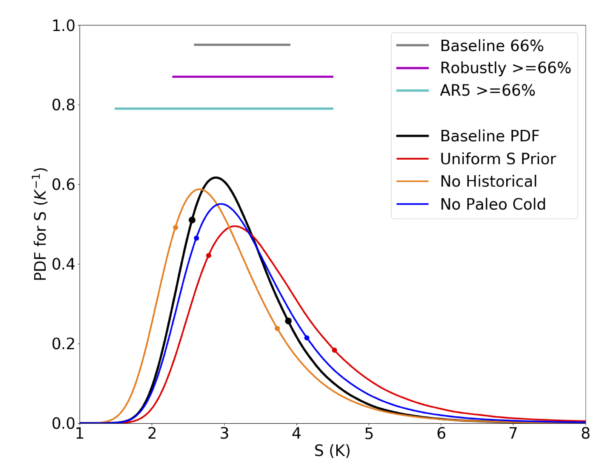

The likely range of sensitivities is 2.3 to 4.5 K, which covers the basic uncertainty (“the Baseline” calculation) plus a number of tests of the robustness (illustrated in Figure 2). This is slightly narrower than the likely range given in IPCC AR4 (2.0-4.5 K), and quite a lot narrower than the range in AR5 (1.5-4.5 K). The wider range in AR5 was related to the lack (at that time) of quantitative explanations for why the constraints built on the historical observations were seemingly lower than those based on the other constraints. In the subsequent years, that mismatch has been resolved through taking account of the different spatial patterns of SST change and the (small) difference related to how aerosols impact the climate differently from greenhouse gases.
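The "likely" and "very likely" ranges are central probability intervals of the sensitivity distribution; one common convention, assumed here, reads them as the 17-83% and 5-95% percentiles. A minimal Monte Carlo sketch with a hypothetical Gaussian distribution for λ (not the paper's posterior) shows why such ranges come out asymmetric, with a longer upper tail, when S = -F/λ.

```python
import random

F_2X = 4.0  # illustrative doubling forcing, W/m^2 (assumption)


def percentile(sorted_values, p):
    """Nearest-rank style percentile of an already sorted list."""
    index = round(p / 100 * (len(sorted_values) - 1))
    return sorted_values[index]


rng = random.Random(1)
samples = []
while len(samples) < 100_000:
    lam = rng.gauss(-1.3, 0.4)  # hypothetical distribution for the net feedback
    if lam < -0.2:  # drop near-zero or positive (unstable) feedbacks
        samples.append(-F_2X / lam)
samples.sort()

median = percentile(samples, 50)
likely = (percentile(samples, 17), percentile(samples, 83))
very_likely = (percentile(samples, 5), percentile(samples, 95))
print(f"median {median:.1f} K; likely {likely[0]:.1f}-{likely[1]:.1f} K; "
      f"very likely {very_likely[0]:.1f}-{very_likely[1]:.1f} K")
```

A symmetric uncertainty in the feedback maps into a skewed uncertainty in sensitivity, which is why the very likely upper bound sits much further from the centre than the lower bound.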

The range the paper comes up with is not a million miles from what most climate scientists have been saying for years. That a group of experts, trying their hardest to quantify expert judgement, comes up with something close to the expert consensus perhaps isn’t surprising. But, in making that quantification clear and replicable, people with other views (supported by evidence or not) now have the challenge of proving what difference their ideas would make when everything else is taken into account.

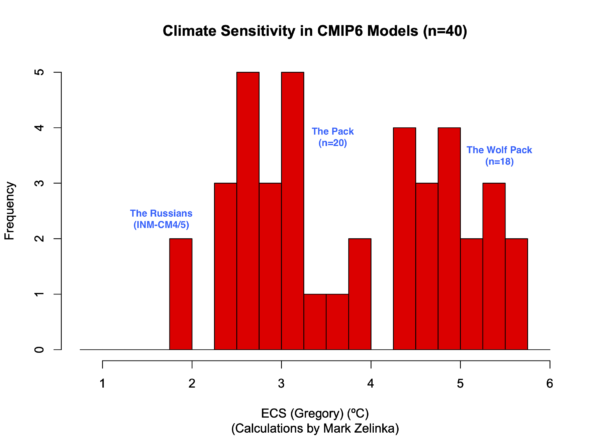

One further point: this assessment was started before anyone had seen any of the CMIP6 model results.

As many have remarked, some (but not all) reputable models have come out with surprisingly high climate sensitivities, and about a dozen go beyond the upper end (4.5 K) of the likely range proposed here. Should they therefore be ruled out completely? No, or at least not yet. There may be special combinations of features that allow these models to match the diverse observations while having such a high sensitivity. While that may not be likely, they should be tested to see whether or not they do. That means it is very important that these models are used for paleo-climate runs, or at least idealised versions of them, as well as for the standard historical simulations.

In the meantime, it’s certainly worth stressing that the spread of sensitivities across the models is not itself a probability function. That the CMIP5 (and CMIP3) models all fell within the assessed range of climate sensitivity is probably best seen as a fortunate coincidence. That the CMIP6 range goes beyond the assessed range merely underscores that. Given too that CMIP6 is ongoing, metrics like the mean and spread of the climate sensitivities across the ensemble are not stable, and should not be used to bracket projections.

The last word?

I should be clear that although (I think) this is the best and most thorough assessment of climate sensitivity to date, I don’t think it is the last word on the subject. During the research on this paper, and the attempts to nail down each element of the uncertainty, there were many points where it was clear that more effort (with models or with data analysis) could be applied (see the paper for details). In particular, we could still do a better job of tying paleo-climate constraints to the other two classes. Additionally, new data will continue to impact the estimates – whether it’s improvements in proxy temperature databases, cloud property measurements, or each new year of historical change. New, more skillful, models will also help, perhaps reducing the structural uncertainty in some of the parameters (though there is no guarantee they will do so).

But this paper should serve as a benchmark for future improvements. As new data come in, or as individual processes become better understood, the framework set up here can be updated and the consequences seen directly. Instead of claims at the end of papers such as “our results may have implications for constraints on climate sensitivity”, authors will be able to work them out directly; instead of cherry-picking one set of data to produce a conveniently low number, authors will be able to test their assumptions within the framework of all the other constraints – the code for doing so is here. Have at it!

References

- S.C. Sherwood, M.J. Webb, J.D. Annan, K.C. Armour, P.M. Forster, J.C. Hargreaves, G. Hegerl, S.A. Klein, K.D. Marvel, E.J. Rohling, M. Watanabe, T. Andrews, P. Braconnot, C.S. Bretherton, G.L. Foster, Z. Hausfather, A.S. von der Heydt, R. Knutti, T. Mauritsen, J.R. Norris, C. Proistosescu, M. Rugenstein, G.A. Schmidt, K.B. Tokarska, and M.D. Zelinka, "An Assessment of Earth's Climate Sensitivity Using Multiple Lines of Evidence", Reviews of Geophysics, vol. 58, 2020. http://dx.doi.org/10.1029/2019RG000678

- J.M. Gregory, W.J. Ingram, M.A. Palmer, G.S. Jones, P.A. Stott, R.B. Thorpe, J.A. Lowe, T.C. Johns, and K.D. Williams, "A new method for diagnosing radiative forcing and climate sensitivity", Geophysical Research Letters, vol. 31, 2004. http://dx.doi.org/10.1029/2003GL018747

- J.D. Annan and J.C. Hargreaves, "Using multiple observationally‐based constraints to estimate climate sensitivity", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2005GL025259

Sherwood et al. 2020 is really informative. It builds on prior work on how emergent constraints bring model-based climate sensitivity estimates closer to paleoclimate estimates. It’s also good to know that some of the very high climate sensitivities of the distant past [> ~4K for ECS] don’t necessarily apply to the conditions we’re seeing now and in the near future.

I’m sure the usual suspects (Lewis, Curry, Christy, McKitrick, etc.) will invent some flimsy pretext for objecting, as usual; otherwise, people might cite scientific evidence to support climate policies they dislike, like people cited scientific evidence on the health risks of smoking to support tobacco policies that various right-wing people disliked.

https://www.annualreviews.org/doi/full/10.1146/annurev-earth-100815-024150

https://www.nature.com/articles/s41561-018-0146-0

https://www.nature.com/articles/ngeo3017

On Sherwood et al. 2020:

https://climateextremes.org.au/wp-content/uploads/2020/07/WCRP_ECS_Final_manuscript_2019RG000678R_FINAL_200720.pdf

https://www.sciencemag.org/news/2020/07/after-40-years-researchers-finally-see-earths-climate-destiny-more-clearly

https://twitter.com/hausfath/status/1285937780246233088

Thanks for a valuable update.

Am I missing something, like a definition of climate sensitivity? Degrees K for what quantity of CO2?

[Response: A doubling. – gavin]

This is a much needed study to reconcile different lines of evidence and come up with an improved consensus climate sensitivity estimate for AR6 compared to AR5. There are many excellent scientists as authors of this study, so I expect that the study has been done very well. I can add more comments once I get through the paper. I suspect that Nic Lewis will inevitably write a blog post criticizing your use of priors (whatever priors you may have used) and claiming that climate sensitivity should be lower than estimated in this paper. I look forward to Lewis’ inevitable criticisms and your inevitable responses.

I forgot to ask; the RC post says this:

“Attempts to avoid subjectivity (so-called ‘objective’ Bayesian approaches) end up with unjustifiable priors (things that no-one would have suggested before the calculation) whose mathematical properties are more important than their realism.”

Should I assume that refers to work like this from Nic Lewis?:

https://link.springer.com/article/10.1007/s00382-017-3744-4

Also, folks should prepare for a deluge of people citing McKitrick and Christy’s latest under-estimate of climate sensitivity, as if it’s credible:

https://agupubs.onlinelibrary.wiley.com/doi/pdfdirect/10.1029/2020EA001281

The usual problems apply, such as:

– a muted ‘negative lapse rate feedback’ would imply greater climate sensitivity, not less

– we already know CMIP6 models, on average, over-estimate ECS; that doesn’t mean CMIP5 models over-estimate ECS or TCR, the claim McKitrick + Christy have tried to support for years

– CMIP5 models, on average, get surface warming correct when one accounts for coverage bias, observed SST vs. modelled ocean air temps, errors in inputted forcings, etc.; and CMIP5 models get the ratio of bulk tropospheric warming vs. surface warming correct, which means their lapse rate feedback is fine

– heterogeneities in the data-sets, especially UAH and its decades-long history of under-estimating warming due to faulty adjustments; also residual heterogeneities in the radiosonde-based analyses that Sherwood’s been pointing out for years

And so on.

How easy is it to translate ECS (etc.) from temperature predictions to other measures?

Sea level is the first measure that comes to mind.

e.g. For a doubling of CO2 what is the equilibrium sea level probability distribution?

So half a degree less sensitive than he thought 6 years ago

https://www.news.com.au/technology/science/university-of-nsw-study-predicts-5c-global-warming-before-2100/news-story/2d21519ac7bcf60a16662cd1f49ecbf8

@3 What is ECS?

Sometimes it helps to read the source article before commenting on it. ECS is a central parameter to the whole field. Here is the introduction to the article (open source preprint)…

Introduction

Earth’s equilibrium climate sensitivity (ECS), defined generally as the steady-state global temperature increase for a doubling of CO2, has long been taken as the starting point for understanding global climate changes. It was quantified specifically by Charney et al. (National Research Council, 1979) as the equilibrium warming as seen in a model with ice sheets and vegetation fixed at present-day values. Those authors proposed a range of 1.5-4.5 K based on the information at the time, but did not attempt to quantify the probability that the sensitivity was inside or outside this range. The most recent report by the Intergovernmental Panel on Climate Change (Stocker et al., 2013) asserted the same now-familiar range, but more precisely dubbed it a >66% (“likely”) credible interval, implying an up to one in three chance of being outside that range. It has been estimated that—in an ideal world where the information would lead to optimal policy responses—halving the uncertainty in a measure of climate sensitivity would lead to an average savings of US$10 trillion in today’s dollars (Hope, 2015). Apart from this, the sensitivity of the world’s climate to external influence is a key piece of knowledge that humanity should have at its fingertips. So how can we narrow this range?

The current atmospheric CO2 level is about 414 ppm so we are roughly half way to doubling (from preindustrial 280 ppm to 560 ppm).

Based on this study, what is the range of temperature increases we would expect from halfway-to-doubling?

If I recall correctly, global average temperature has increased about 1.2C already — how much more warming is expected from the greenhouse gases we have already added to the atmosphere?
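A back-of-envelope sketch for the halfway-to-doubling questions above, assuming CO2 forcing scales with ln(C/C0), a 280 ppm pre-industrial baseline, and applying the paper's likely range of 2.3-4.5 K. It ignores non-CO2 forcings (other greenhouse gases, aerosols) and the slow ocean response, so it estimates the eventual warming from CO2 alone held at 414 ppm, not a projection of how much more warming is in the pipeline.

```python
import math


def fraction_of_doubling(c_ppm, c0_ppm=280.0):
    """Fraction of a CO2 doubling in forcing terms, assuming forcing scales with ln(C/C0)."""
    return math.log(c_ppm / c0_ppm) / math.log(2.0)


def eventual_co2_warming(c_ppm, sensitivity_k, c0_ppm=280.0):
    """Warming once the climate adjusts to CO2 held at c_ppm (CO2 only, no other forcings)."""
    return sensitivity_k * fraction_of_doubling(c_ppm, c0_ppm)


frac = fraction_of_doubling(414.0)
print(f"414 ppm is {frac:.0%} of a doubling in forcing terms")  # 56%
for s in (2.3, 4.5):  # likely range from the paper
    print(f"S = {s} K -> about {eventual_co2_warming(414.0, s):.1f} K")  # 1.3 K, then 2.5 K
```

Because forcing is roughly logarithmic, 414 ppm is somewhat more than halfway to a doubling in forcing terms, even though it is slightly less than halfway in ppm.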

The definition of sensitivity always seems to be expressed using the phrase “a doubling of CO2 from pre-industrial levels.” What is the pre-industrial CO2 level we should use?

One obvious question is: how will this affect carbon budgets?

For example, the famous 420 GtCO₂ from 2018 for 67% chance of keeping to 1.5 °C? Despite an increase in the lower bound, will this new probability distribution paradoxically loosen the budget for the higher probabilities of staying within a given temperature target?

The study is for equilibrium climate sensitivity, and suggests that the transient climate response (transient climate sensitivity) is less. However, I do not understand how studies like Greater future global warming inferred from Earth’s recent energy budget https://www.nature.com/articles/nature24672 or Trajectories of the Earth System in the Anthropocene fit into this picture.

I would assume that the transient response may be much larger, hence yield PETM carbon spikes, or features like Siberian traps, all the stuff which is involved on the way to the new equilibrium, the next state.

However, this is discussed as carbon cycle feedbacks. Shouldn’t this also count towards TCR, since this is not a Planck value but should be the value derived from the current planetary configuration?

The notion that CCF is tied to a transient response is also visible on this 2013 graph: https://www.realclimate.org/index.php/archives/2013/01/on-sensitivity-part-i

Imho, what is important for us today is the transient response, not necessarily a value which may be applied in millennia.

jgnfld (8): “[ECS] is the equilibrium warming as seen in a model with ice sheets and vegetation fixed at present-day values”

Do these ECS calculations assume also present day sea-ice extent, i.e. with its present-day albedo effect?

Has anybody calculated the sensitivity of the model predictions to the above assumptions?

I ask because in 2xCO2 we can expect less albedo from ice and probably less albedo from clouds formed thanks to transpiration by plants, i.e. assuming those effects are fixed at present-day levels would systematically underestimate the warming.

So if somebody ran a sensitivity analysis for those (and other) assumptions and found that these changes are negligible, fair enough. But IF they are not, then the priority in your statement:

“the sensitivity of the world’s climate to external influence is a key piece of knowledge that humanity should have at its fingertips. So how can we narrow this range?”

may be misplaced, as concentrating on narrowing the range without addressing the assumptions, at some point may offer a false overconfidence in the predictions:

say you spent a lot of research effort narrowing ECS down to 2.5-3C, but the melting ice and degraded vegetation increased temperatures by an additional 1C: then you would have underestimated the real sensitivity of the climate, and suggested that you know more than you really do (mistaking precision for accuracy).

Furthermore, unless the effect of these assumptions is negligible, they would have to be addressed to have any meaningful comparison of the predictions of future ECS with those reconstructed from the past, since geological temps would have been affected not only by CO2 at the time, but also ice and vegetation there – so we may be comparing current apples with paleoclimatic oranges.

MS 10: The definition of sensitivity always seems to be expressed using the phrase “a doubling of CO2 from pre-industrial levels.” What is the pre-industrial CO2 level we should use?

BPL: 280 ppmv. Some studies use 300 ppmv.

Environmentalists like me are NOT alarmed about what might happen to us, and not even as concerned for ourselves as we are about what we’re doing to others. It’s about caring. And time doesn’t matter. A portion of our CO2 emissions can be up there for many 1000s of years, as David Archer says, going on harming people (if some are still left then) and other creatures from all the many and various knock-on effects of climate change; many of them needless killings when we could have been raking in $$ by becoming energy/resource efficient/conservative and going on alt energy. Killing is killing no matter when it happens, or if my emissions joined with 1000 others’ kills only one person per 100 years. It’s cause for utmost concern, action & activism. Also we tend to follow a holistic approach, considering all harms from resource extraction, shipping/piping, processing, leaks/spills, combustion/use, waste disposal, various pollutions, including acid rain and ocean acidification. You can’t separate these out in the real world, only analytically in science and in the minds of anti-alarmists.

I used to joke about the “alarmist” epithet thrown at us by saying, yeh there’s grave danger in folks rushing to the Home Depot’s lighting aisle to get the most efficient bulbs, shopping carts colliding, a foot is bound to be run over.

But now everything is crystal clear with the pandemic. Various non-alarmists and anti-alarmists in office and in the public are into killing or allowing to be killed many 1000s of people within a few months. That IS horrifyingly alarming to me. So how can we expect them to care at all about climate change?

Lynn, are you saying CO2 emissions are killing people?

piotr (13) ‘Do these ECS calculations assume also present day sea-ice extent, i.e. with its present-day albedo effect?’

No, changes in albedo are intended to be included. For a start, the paleoclimate evidence is from the actual reaction of the Earth system, including albedo changes in sea-ice, ice sheets and vegetation over thousands of years. Also AFAIK all the newer GCM models (in CMIP6) include sea-ice albedo changes and ‘fast’ feedbacks.

What they necessarily omit is the carbon-cycle feedbacks like carbon released from forests or permafrost, since the point of this assessment is to estimate sensitivity to a *fixed* amount of carbon. Earth-system models (ESMs) do attempt to include this. For more info, https://www.carbonbrief.org/analysis-how-carbon-cycle-feedbacks-could-make-global-warming-worse and Heinze et al, ‘Climate feedbacks in the Earth system and prospects for their evaluation’, Earth System Dynamics, 2019. https://doi.org/10.3929/ethz-b-000354206

—-

Which brings me to my own question as a non-scientist, actually more prompted by the Siberian heatwave and forest fires. I’ve often heard it said that the paleo studies constrain ECS from being at the high end (although I notice not all of them), but some GCM models find ECS of 5 K or more. I’ve also heard of the hypothesis that Arctic amplification will cause more blocking patterns and ‘quasi-stationary Rossby waves’ so greater prolonged extremes of temperature and precipitation.

Suppose that is correct and warming leads to more regional extremes of temperature, both hot and cold. Wouldn’t that reduce the overall positive forcing, because by T^4 the hot extremes radiate significantly more? So if GCMs include such stronger weather patterns they would produce lower estimates of ECS than those that don’t feature it? Might that even help reconcile the lower estimates of mean warming from paleo studies with the more sensitive models? (Could temperature heterogeneity be detected from paleo evidence?)

Has this been looked at? If the speculation is correct that ECS is say 3.3 K but extremes become severe with higher greenhouse forcing, it might be good news with respect to the *mean* warming for a given total of human GHG emissions, but bad news with respect to damage, adaptation and carbon feedbacks. You’ve constrained ECS, but Earth System Sensitivity to human emissions might be higher than expected.
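The T^4 intuition in the question can be checked with the Stefan-Boltzmann law: for the same mean temperature, a more uneven temperature field emits more on average, because T^4 is convex. A minimal sketch treating two hypothetical equal-area regions as blackbody surfaces; it says nothing about whether real warming patterns actually become more or less uneven.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def mean_blackbody_emission(temps_k):
    """Area-averaged blackbody emission (W/m^2) for equal-area regions at the given temperatures."""
    return sum(SIGMA * t ** 4 for t in temps_k) / len(temps_k)


uniform = [288.0, 288.0]  # hypothetical: two regions at the same temperature
uneven = [268.0, 308.0]   # hypothetical: same mean (288 K), +/-20 K spread

for label, temps in (("uniform", uniform), ("uneven", uneven)):
    mean_t = sum(temps) / len(temps)
    print(f"{label}: mean T = {mean_t:.0f} K, mean emission = {mean_blackbody_emission(temps):.1f} W/m^2")
```

For a given amount of outgoing energy, the uneven case therefore balances at a slightly lower mean temperature; whether that matters in practice depends on how real temperature patterns evolve.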

Congratulations to all who contributed to this step forward in understanding.

In my comment above 3.3 K would not be ‘good news’; I meant to say 2.3 K could be ‘good news’ except for possibly greater extremes.

I agree with Lynn (15). ‘Alarmism’ strictly means an overstated or unnecessary worry. At the moment the problem is that governments are under-reacting, by a factor of 4 or more. Peter Kalmus tweeted ‘What people don’t understand about climate breakdown, and what needs to become obvious, is that when you burn fossil fuel – whether that’s getting on a plane or green lighting a new coal-burning power plant – you’re killing other people, now and far into the future.’

SecularAnimist (9): back of the envelope suggests response to current levels of CO₂ would be ‘likely’ to be 1.3-2.6 °C with these results, but remember that ECS is the result after holding the same level of carbon dioxide concentrations for thousands of years including things like melting of sea ice. If we suddenly stopped emitting now, much of the CO₂ would be taken up by oceans so you wouldn’t ever see that level of warming.

Here’s my attempt to explain why doubling concentrations from *any* baseline (not just pre-industrial) should produce the same temperature rise: air expands and cools as it rises (you could also say the molecules lose kinetic energy), so for each km you climb it cools by the same amount (about 6 °C); but because pressure is maintained by the column of air above, density halves about every 7 km you rise. So for a given type/frequency of heat/infra-red emitted by the Earth back to space, if you double the concentration of a well-mixed gas, the layer of gas emitting that heat rises by the same amount. This is called the ‘emission layer displacement’ way of understanding things: https://doi.org/10.1002/2014JD022466 but I’ve probably got it wrong.
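A toy version of that emission-layer argument, assuming gas density falls by half every h km (the comment's rough 7 km is used as an illustrative value) and that the layer emitting to space sits where the optical depth of gas above reaches 1. The absorption coefficient k is hypothetical; only differences between heights mean anything here.

```python
import math

HALVING_HEIGHT_KM = 7.0  # illustrative height over which density halves (assumed, from the comment)


def emission_height_km(concentration, k=1.0, h=HALVING_HEIGHT_KM):
    """Toy emission height: where the optical depth of the gas above reaches 1.

    Assumes density falls as 2**(-z/h), so the optical depth above height z is
    tau(z) = k * concentration * (h / ln 2) * 2**(-z/h). Solving tau = 1 gives
    z = h * log2(k * concentration * h / ln 2).
    """
    return h * math.log2(k * concentration * h / math.log(2))


for c in (280.0, 300.0, 414.0):
    shift = emission_height_km(2 * c) - emission_height_km(c)
    print(f"{c:.0f} -> {2 * c:.0f} ppm: emission level rises {shift:.2f} km")
```

In this toy, doubling the concentration always lifts the emission level by exactly one halving height, whatever the starting concentration, which is the "same rise per doubling" intuition; in the real atmosphere the logarithmic behaviour is only approximate.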

GM 16: Lynn, are you saying CO2 emissions are killing people?

BPL: If so, Lynn is right. Droughts and storms that would not have happened, or would not have been so severe, can be attributed to global warming, which in turn can be attributed to CO2 emissions. So yes, CO2 emissions are killing people.

RE #15, I meant my remarks for the previous post, “Shellenberger’s op-ad.”

RE #16, yes. Not that ambient CO2 in the air kills people (plants love it), but the warming it causes causes many knock-on effects in addition to heat-related deaths, such as crop failure* & a large variety of other threats to life. Many of these are projected into the future at more death-causing levels as our CO2 emissions compound over the decades, centuries, and millennia. And one of those knock-on effects of our emissions is the warming we cause then causing nature to release more & more ghgs, causing more warming, causing more emissions from nature, & the decreasing albedo from snow & ice melting causing more warming.

But one can’t consider CO2 emissions in isolation. The whole fossil fuels material economy has to be considered, which involves harms from resource extraction, shipping/piping, pollution/leaks, processing, other pollutants emitted while emitting CO2, waste disposal. For instance, there is a 30 acre benzene plume under McAllen (the next city over from us), caused by the fossil fuel industry, causing leukemia and other harms, and it could explode.

If you delve into it some more you’ll see how dire the global warming situation is on into the future. Might want to start reading about the end Permian extinction.

_______________

*re crop failure, studies show that crops in some areas are for now doing a bit better with the increasing maximum diurnal (daytime) temps, but worse with the more greatly increasing minimum diurnal (night) temps (seems plants need cooler temps at night to recoup from high daytime temps), cancelling out the effect. For now. But in a decade or so both the increasing day and night temps will be causing harm. And that is only one factor of the very many that are threatening our food supply, which is only one problem of many caused by global warming.

It so happens that the high level of deaths from the 2003 European heatwave was also in part due to the high night temps, because people need cooler night temps to recoup from the stress of high daytime temps.

As higher temperatures cause deaths and higher temperatures result from higher CO2 levels, it follows that higher CO2 levels cause deaths. This seems obvious.

Are GHG emissions killing people?

Of course.

It’s not easy to attribute specific classes of deaths cleanly to climate change, much less to quantify the numbers with confidence. And of course, there are numerous other causes of premature mortality to muddy the waters.

That said, the WHO asserts that:

(And that estimate appears to be more than a decade old.)

Moreover, boldly wielding the back of an envelope, it’s easy to tot up casualties from climate-related disasters like heat waves, floods, and hurricanes, discount them by some estimated factor to account for the likelihood that considerable numbers would have occurred anyway (say, 67%), and come up with hundreds of thousands of casualties and hundreds of billions of dollars of economic loss. Not authoritative, of course, but it does provide a bit of a ‘sniff test’ for the WHO (and similar) estimates.

Cedric, #17

“Suppose that is correct and warming leads to more regional extremes of temperature, both hot and cold. Wouldn’t that reduce the overall positive forcing, because by T^4 the hot extremes radiate significantly more?”

The nighttime side of the planet, wherever that happens to be at a given moment, has been warming faster than the daytime side, which means global temperature extremes are decreasing, not increasing. Also, because of Arctic amplification, the temperature difference between the poles and the equator is decreasing.

So I finally finished reading the paper. As expected based on the authors, this is a fantastic paper of exceptionally high quality! The authors go to great lengths to account for various sources of uncertainty and account for robustness. They also make great effort to make the paper more accessible to the public by including more background information. I especially liked how the paper strongly links the empirical historical and paleoclimate observations to the underlying physics in a unified model. I could go on about how wonderful the paper is, but I’m sure that the authors are well aware of this.

Here are some comments/criticisms that I have of the paper:

1. For Cowtan and Way, I assume that the paper used the version that uses HadSST3 instead of the newer version that uses HadSST4, since uncertainty for the HadSST4 version is not available. Is this correct? If so, why was there no mention in the paper of the improvements in HadSST4, specifically the improvements to correcting for the shift from engine-intake measurements to buoys?

2. Cowtan and Way neglects measurement and sampling errors of HadCRUT4 and only takes into account bias errors. This means that the paper underestimates uncertainty of temperature change over the historical period. Bias errors would dominate measurement and sampling errors, so this isn’t a big issue. However, why was this issue not briefly mentioned in the paper?

3. In 3.2.1, it was mentioned that CO2 causes plants to close their stomata, thereby reducing low-level clouds over land. While I understand the effect, could it be offset by A) plants that have closed stomata getting wetter, which can cause more evaporation, or B) increased CO2 causing more primary production, which could cause more evaporation? If the plants have less evaporation due to closed stomata, then where does the water that would have evaporated go? Is it that plants would take up less water from the soil, so this water would sink to bedrock?

4. Constant ratios of ECS to S, ESS to S, and S to TCR were estimated from climate models. However, these ratios tend to increase with climate sensitivity, which makes sense given the interaction of feedbacks. If climate models are overall oversensitive, then this could mean that the approach overestimates ECS and ESS while it underestimates TCR. I think it would have been much better to relate the differences between these measures of climate sensitivity to differences in feedbacks, and then adjust Lambda to account for them, similar to what was done to relate S_hist to S.

5. Given that the paleoclimate record rules out an unstable climate, it might have been sensible to exclude all scenarios where Lambda is not positive. But maybe this was effectively done by eliminating combinations of Lambda where the likelihood is less than 10^-10.

6. I think that the prior used in the baseline scenario is very reasonable. Uniform priors over different feedbacks should have far fewer problems than uniform priors over climate sensitivity. The choice of a range of (-10,10) for the priors of the feedbacks seemed arbitrary and unjustified. To avoid the arbitrary choice of the range, why not instead use a uniform prior over the range (-infinity,infinity), in which case the posterior distribution of Lambda after taking into account the process understanding would simply be the (normalized) likelihood function? That said, this would make practically no difference, given that (-10,10) is a very large range relative to the width of the feedback likelihoods.

7. It is good that a uniform prior in S was not used for the base case. There are serious problems with using a uniform prior for S, as mentioned in the paper as well as in the past by Nic Lewis. While it may be fair to criticize Lewis’ use of a Jeffreys prior as unphysical, physically we know that S is the result of the interaction of various independent feedbacks. Thus one could argue that a uniform prior in S is itself unphysical, since a physically motivated prior should diminish in probability toward the upper end of the range of S.

It should be noted that our definition of S is artificial and that perhaps in a parallel universe or in an alien civilization, they might have defined climate sensitivity differently. For example, perhaps the logarithm of S could have been used instead, which would have the advantage of excluding unphysical negative S values. In this case, a uniform prior over (-infinity,ln(20)) for the logarithm of S would correspond to a prior proportional to 1/S over (0,20) for S, which falls off steeply toward high S and would give a very different posterior distribution. On this basis, I think it is very desirable to choose prior information in a way that does not depend on the artificial way that S is defined. Using a uniform prior for feedbacks, which is more strongly connected to underlying physics, is one way to do this, although there might be other approaches.

Overall, the use of a uniform (0,20K) prior for S arguably results in arbitrary and unjustified inflation of the posterior distribution of S. While I understand that it is useful to produce results using this uniform prior, in order to make comparisons with previous studies, I hope that this arbitrary and questionable prior isn’t used as justification to keep the upper range of climate sensitivity unnecessarily high in AR6. The other sensitivity tests already provide plenty of checks to robustness.
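The prior arguments in points 6 and 7 come down to a change of variables: a flat density in one quantity is not flat in a nonlinear function of it. Below is a minimal sketch, assuming S = -F/λ with an illustrative F = 4.0 W m⁻² per doubling, a hypothetical net-feedback range of (-3.0, -0.2) W m⁻² K⁻¹ (so S runs from about 1.3 to 20 K), and a hypothetical 0.5 K lower cutoff for the log-uniform case, since a uniform prior over (-infinity, ln(20)) is itself improper. It compares the chance of S exceeding a few thresholds under a uniform prior in S on (0, 20), a uniform prior in ln S (density proportional to 1/S), and a uniform prior on the net feedback (density proportional to 1/S²). Treating the net feedback as one uniform quantity is a simplification; the paper's priors are on the individual feedbacks.

```python
import math
import random

F2X = 4.0                     # illustrative forcing per CO2 doubling, W m^-2
LAM_LO, LAM_HI = -3.0, -0.2   # hypothetical net-feedback range, W m^-2 K^-1
S_LOG_MIN, S_MAX = 0.5, 20.0  # hypothetical cutoff for the log-uniform prior; 20 K upper bound


def exceed_uniform_s(s: float) -> float:
    """P(S > s) for S uniform on (0, S_MAX)."""
    return (S_MAX - s) / S_MAX


def exceed_log_uniform(s: float) -> float:
    """P(S > s) for ln S uniform on (ln S_LOG_MIN, ln S_MAX); density in S is proportional to 1/S."""
    return math.log(S_MAX / s) / math.log(S_MAX / S_LOG_MIN)


def exceed_uniform_lambda(s: float) -> float:
    """P(S > s) for net feedback uniform on (LAM_LO, LAM_HI) with S = -F2X / lambda.

    S > s exactly when lambda > -F2X / s, so the density in S is proportional to 1/S^2.
    """
    lam_threshold = -F2X / s
    frac = (LAM_HI - lam_threshold) / (LAM_HI - LAM_LO)
    return min(1.0, max(0.0, frac))  # clamp for thresholds outside the attainable S range


def monte_carlo_uniform_lambda(s: float, n: int = 200_000, seed: int = 0) -> float:
    """Cross-check exceed_uniform_lambda by sampling lambda and transforming it to S."""
    rng = random.Random(seed)
    hits = sum(-F2X / rng.uniform(LAM_LO, LAM_HI) > s for _ in range(n))
    return hits / n


for s in (3.0, 4.5, 8.0):
    print(
        f"P(S > {s} K): uniform S {exceed_uniform_s(s):.3f}, "
        f"uniform ln S {exceed_log_uniform(s):.3f}, "
        f"uniform lambda {exceed_uniform_lambda(s):.3f} "
        f"(MC {monte_carlo_uniform_lambda(s):.3f})"
    )
```

Under these assumed bounds, the uniform-S prior puts far more weight above 4.5 K than either alternative, which is the inflation point 7 describes; the exact numbers depend entirely on the hypothetical ranges chosen.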

Billy Pilgrim (23). Thanks. I know winters warm faster than summers and nights warm faster than days, but that’s long predicted and observations match, so I tentatively guessed those effects are already matched in CMIP6 models and don’t account for any discrepancy with observations. (Exactly how much does that ‘evening out’ of temperature reduce the Planck negative feedback compared to one with diurnal variation artificially maintained?)

I was thinking instead of synoptic-scale heterogeneity of temperatures, over which there is disagreement about trend; from that I presume a conclusion about it doesn’t fall out of models easily. I should have been clearer and asked about possibility of greater intensity of local or regional warming (such as Siberian heatwaves) *compared to what is modelled*. Suppose models, particularly the ‘wolf pack’ models like HadGEM3 or E3SM, underestimate Planck (or lapse rate) feedback because they have weather patterns much like today’s. Wouldn’t including the hypothetical blocking patterns bring them closer to paleo evidence? Would it only be a tiny effect? It’s all speculation on my part, and my question is really a concern that we don’t need to just worry about mean quantity of heating, but also changes to its quality and distribution.
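Whether a more uneven warming pattern changes the Planck response can at least be framed with the Stefan–Boltzmann law. Below is a minimal sketch, treating the planet as a bare blackbody made of equal-area regions (no atmosphere, clouds, or lapse rate, which is a strong simplification), with an illustrative mean emission temperature of 255 K and a hypothetical ±20 K regional spread. Because emission scales as T⁴, an uneven pattern with the same mean temperature emits more, and has a larger area-mean response 4σT³, than a uniform one.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def mean_emission(temps: list[float]) -> float:
    """Area-mean blackbody emission (W m^-2) for equal-area regions at temps (K)."""
    return sum(SIGMA * t**4 for t in temps) / len(temps)


def mean_planck_response(temps: list[float]) -> float:
    """Area-mean d(emission)/dT = 4*sigma*T^3 (W m^-2 K^-1): a bare-blackbody Planck response."""
    return sum(4 * SIGMA * t**3 for t in temps) / len(temps)


T_MEAN = 255.0  # illustrative effective emission temperature, K
SPREAD = 20.0   # hypothetical regional departure from the mean, K

uniform = [T_MEAN, T_MEAN]
uneven = [T_MEAN - SPREAD, T_MEAN + SPREAD]  # same area-mean temperature as `uniform`

for label, temps in (("uniform", uniform), ("uneven", uneven)):
    print(
        f"{label:>7}: emission {mean_emission(temps):6.1f} W m^-2, "
        f"Planck response {mean_planck_response(temps):.3f} W m^-2 K^-1"
    )
```

Evening out temperatures (as with nights warming faster than days) moves the other way, slightly lowering the area-mean blackbody response. How large either effect is in a real atmosphere is exactly the kind of question that needs a climate model rather than this toy calculation.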

Billy Pilgrim: “The nighttime side of the planet, wherever that happens to be at a given moment, has been warming faster than the daytime side; which means global temperature extremes are decreasing, not increasing.”

These would apply only to the cold extremes, not the heat extremes (and it is the latter where Cedric’s question might have merit)

Furthermore, even your argument about cold extremes is based on average values (_average_ nighttime temps increase faster than _average_ daytime temps), but these are not the only, and perhaps not the main, drivers of the “cold extremes” Cedric is talking about: changes in air circulation are probably more important regionally than the increase in night-time temps.

For instance, if the Jet Stream weakens, it becomes more wobbly, bringing polar air masses further south in the US and keeping them there for longer, hence more, not fewer, local cold extremes.

So coming back to Cedric’s questions – yes, more heat extremes would increase radiative output, but given the limited regional and temporal extent of these extremes, I doubt it would reduce GLOBAL warming in any significant way.

23: I don’t follow your logic here.

Just because the nights have been warming faster than the days does not imply temperature extremes are decreasing. If the temperature distribution of daytime temperatures in any one location is shifting towards warmer temperatures, the probability of a new temperature record is higher than if the climate is steady. The Arctic amplification effect can also make cold temperature records more likely if it is causing more frequent atmospheric blocking in winter. In the UK, our coldest winter temperatures usually occur when blocking anticyclones form over Greenland or Scandinavia, sending the jet stream troughing south and opening the doors to Arctic air masses.

Cedric, #25

I’m no expert, so anything I speculate about needs to be taken with a tablespoon of salt.

That said, I think you’ll get lost in the weeds trying to figure out how changes to the Jet Stream will affect the homogeneity of global temperatures.

I tried to simplify the problem – what if you could monitor in real time the average temperature of the colder/dark side of the planet as well as the warmer/sunlit side? Both metrics would show a warming trend, but the former would surely be warming the fastest; an increase in heat waves and/or blocking patterns notwithstanding. With the cooler side warming faster, how could temps be getting less homogenous?

Adam Lea, #27

“In the UK, our coldest winter temperatures usually occur when blocking anticyclones form over Greenland or Scandinavia, sending the jet stream troughing south and opening the doors to Arctic air masses.”

IOW, temperatures in parts of the Arctic had become similar to those found at mid-latitudes, and temperatures in parts of the mid-latitudes had become similar to those found in the Arctic. The result was a smaller north/south contrast, not a greater one.

(Of course, with an extra wavy Jet Stream, you could point to bigger contrasts moving west to west. The net is far from clear.)

The paper is far too long to be credible: real information should be concise and clear. It is like the curate’s egg: good in parts. In particular, the analysis of clouds is very subjective and does not relate to the real world. Also, the well-known interaction between CO2 and H2O in attenuating changes in the transmissivity of the mixed gas is not dealt with at all, a problem when looking at climate sensitivity as CO2 does not exist in isolation.

#29

Should be ‘east to west’, not west to west.

“We find that warming rates similar to or higher than modern trends have only occurred during past abrupt glacial episodes. We argue that the Arctic is currently experiencing an abrupt climate change event, and that climate models underestimate this ongoing warming.”

https://www.nature.com/articles/s41558-020-0860-7

Billy Pilgrim (28). ‘With the cooler side warming faster, how could temps be getting less homogenous?’

Trying to clarify again: I mean relatively less homogeneous than in modelled warming, not absolutely less homogeneous over time. I’m suggesting regional distribution of temperatures could diverge from models.

I was posting here in the hope of engaging a professional modeller on their coffee break. I’m guessing it’s a small effect from section 3.2.2, where λ[Planck] has a sd of only 0.1 W m⁻² K⁻¹, and models deviate from first principles only by that amount. I wondered if any such effects actually fall under λ[other], but that section says ‘Uncertainties [ in λ[Planck] ] arise from differences in the spatial pattern of surface warming, and the climatological distributions of clouds and water vapor that determine the planetary emissivity’. BTW I’m sure someone said the article would be free-to-view at Rev Geophysics, but it still isn’t.

My other question about carbon budgets also still stands. I expect Global Carbon Project & others will be working on it behind the scenes.

I’m also a little confused between likely (66%) ranges of S and ECS. According to Dr Schmidt’s blog here, the former has narrowed from the classic range to 2.6-3.9 K (or robustly 2.3-4.5 K). However Zeke Hausfather tweeted ‘We find that equilibrium climate sensitivity is likely between 2.6C and 4.1C per doubling of CO2, compared to 1.5C to 4.5C in the last IPCC report’. Is the difference in the upper limit (3.9 or 4.1 K) the difference between S and ECS? But in the first paragraph here 3.9 K is described as an ECS bound.

Cedric,

I thought I understood your questions, but realize now I was mostly over my head. Sorry about that. Clearly, you DO need to chat with a climate modeler.

Still, I ended up learning a few things that I wouldn’t have otherwise. For example, you got me wondering how the faster rate of land warming compared to ocean warming might be affecting the T^4 issue. Turns out the average global SST is more than 10 C higher than the average land surface. This was a shocker, and a surprisingly difficult bit of information to find. Best I could come up with was this article by Bob Tisdale (granted, a fairly controversial figure in climate blogs):

https://bobtisdale.wordpress.com/2014/11/09/on-the-elusive-absolute-global-mean-surface-temperature-a-model-data-comparison/amp/

Bob’s post also included an interesting link to NASA, where they pointed out some of the many problems in trying to determine ‘a global average temperature’.

https://data.giss.nasa.gov/gistemp/faq/abs_temp.html

My Question was: Does CO2 kill people? Nobody has shown that it does. The responses have gone off on a tangent going on to pollution which is a completely different subject.

Note the following:

Data collected on nine nuclear-powered ballistic missile submarines indicate an average CO2 concentration of 3,500 ppm with a range of 0-10,600 ppm, and data collected on 10 nuclear-powered attack submarines indicate an average CO2 concentration of 4,100 ppm with a range of 300-11,300 ppm (Hagar 2003). – page 46

Re 22:

**That said, the WHO asserts that:

Climatic changes already are estimated to cause over 150,000 deaths annually.**

WHO asserts, but has not proved it.

**BPL: If so, Lynn is right. Droughts and storms that would not have happened, or would not have been so severe, can be attributed to global warming, which in turn can be attributed to CO2 emissions. So yes, CO2 emissions are killing people.**

Droughts and storms that would not have happened??? This has not been proved.

RE 20.

**RE #16, yes. Not that ambient CO2 in the air kills people (plants love it), but the warming it causes causes many knock-on effects in addition to heat-related deaths, such as crop failure* & a large variety of other threats to life. Many of these are projected into the future at more death-causing levels as our CO2 emissions compound over the decades, centuries, and millennia. And one of those knock-on effects of our emissions is the warming we cause then causing nature to release more & more ghgs, causing more warming, causing more emissions from nature, & the decreasing albedo from snow & ice melting causing more warming.**

The future is mainly speculation.

**If you delve into it some more you’ll see how dire the global warming situation is on into the future. Might want to start reading about the end Permian extinction.**

I see cooling just as likely as warming.

**It so happens that the high level of deaths from the 2003 European heatwave was also in part due to the high night temps, because people need cooler night temps to recoup from the stress of high daytime temps.**

With reference to 2003, let us go back to 1911, when over 41,000 people died in France alone from the 70-day heat wave. Were these deaths caused by CO2? The CDC in the USA indicates that more people die from cold weather than hot weather. So is it not WEATHER that causes deaths?

Billy Pilgrim: The nighttime side of the planet, wherever that happens to be at a given moment, has been warming faster than the daytime side, which means global temperature extremes are decreasing, not increasing. Also, because of Arctic amplification, the temperature difference between the poles and equator is decreasing.

AB: So the question with regard to cold records is whether the long tails that blocking patterns bring are of greater magnitude than the mean’s warming shift.

So far cold records are still falling. When will the warming of the mean get loud enough that even Saddam’s Mother of all Blocking Events won’t break a cold record?

Gerald Machnee @35,

Your quote looks more a cut-&-paste from a piece of nonsense posted up on the boghouse door on the rogue planetoid Wattsupia than from the reference cited. The quote also appears in National Research Council (2007) ‘Emergency and Continuous Exposure Guidance Levels for Selected Submarine Contaminants’ Vol 1 Ch3 which thus gives some context.

To suggest that the inhalation of CO2 will become a direct physiological cause of death under the atmospheric levels projected under AGW is, of course, entirely risible. So ‘well done, you!!’ for bringing the issue up here. We do enjoy a good laugh.

Yet swivel-eyed lunatics do on occasion use findings from US navy submarines (where CO2 levels can rise to many thousands of ppm) to assure the world that there is absolutely nothing to fear from elevated levels of CO2 in the atmosphere. (I addressed the amazing levels of crass stupidity reached by one such commentator, a senile old twit called William Happer, whose level of stupidity was so high it took not just one posting to examine his commentary, but also a second posting where I examine his crazy analysis of the hockey-stick curve which apparently is also relevant to the damaging nature of CO2 levels.)

The findings from USN & NASA studies do show that breathing an atmosphere with CO2 at such levels for a couple of months will not have any direct adverse effects, this being the result of analyses of fit young folk. So how your granny would manage, or a growing child, we cannot say.

If that really was the issue you wished to discuss when you posted your question to Lynn Vincentnathan @16, I would suggest you re-state your question to Lynn Vincentnathan but this time with a little more care as to your meaning.

You might also wish to consider the relevance of such a question on this website which is about climatology not physiology.

#35, Gerald–

You’re playing silly games here. Good faith estimates are not “proof”–positive “proof” is rare in modern science anyway–cf., Karl Popper and his well-known paradigm–but that doesn’t mean you get to ignore them.

But the silliest thing you said is this:

“I see cooling just as likely as warming.”

We have a well-observed, well-understood and well-validated warming forcing which is relentlessly increasing. Your assessment flies in the face of the record of the last four decades, and in the record of predictions made during that time.

Al, #36

“When will the warming of the mean get loud enough that even Saddam’s Mother of all Blocking Events won’t break a cold record?”

I’m not sure if we’re on the same page. How does your question relate to equilibrium climate sensitivity?

@Machnee 35

Sheesh! Reality will catch up to you eventually. Meanwhile, please stop believing and spreading lies and distortions, though I suspect that’s a hopeless ask, since you’ve been told facts are part of the “deep state”.

https://en.wikipedia.org/wiki/Global_temperature_record

https://climate.nasa.gov/vital-signs/global-temperature/

And much much more …

“I see cooling as likely as warming.” (!!)

Holy cow, it’s been years since I’ve seen that put so bluntly, although Andy Revkin used to allow some troll on his old NYT blog to repeat it over and over.

I used to offer the trolls a USA-upper-middle-class-yearly-salary-sized bet on that trend over decades, but alas never got to top off my retirement nest egg by having anyone take the bait. This would be a good time to start one though, as averages by calendar decade are easily understandable by the ordinary TV news watcher, and we’ve hardly begun this calendar decade.

Gerald Machnee,

Yes. CO2 at concentrations of 100,000 ppmv can cause suffocation per the CDC, but that is irrelevant to the discussion.

And I am sorry. This site is about science, not mathematics. In math you get proofs. In science you get evidence.

Gerald: “I see cooling just as likely as warming.” Based on? Certainly NOT evidence.

Please educate yourself. Go to the “Start Here” tab in the upper left of this webpage. Read. Feel free to come back and ask questions. Until you do, it is unlikely you will benefit from this discussion.

Gerald Machnee #35: My Question was: Does CO2 kill people? Nobody has shown that it does.

Of course it does. It’s a waste product to animals, just like oxygen is a waste product to photosynthesizing creatures.

Actual deaths – Lake Nyos in Cameroon – August 26, 1986 – over 1,700 people in one night, and their cattle. Suffocation as heavier-than-air CO2 filled the area one night. https://en.wikipedia.org/wiki/Lake_Nyos_disaster

Any questions beyond that? CO2 does more than exist as a waste gas animals must expel to survive. Living things can’t do well when their cells are filled with waste – the plants that love our CO2 can’t put up with the O2, either, so they expel it.

GM 35: I see cooling just as likely as warming.

BPL: You never had a class in statistics and probability, did you?

@Cedric Knight 26 Jul 2020 at 3:32 AM “I’ve also heard of the hypothesis that Arctic amplification will cause more blocking patterns and ‘quasi-stationary Rossby waves’ so greater prolonged extremes of temperature and precipitation.” It’s more than a hypothesis –

“Two effects are identified that each contribute to a slower eastward progression of Rossby waves in the upper-level flow: 1) weakened zonal winds, and 2) increased wave amplitude.”

Evidence linking Arctic amplification to extreme weather in mid-latitudes

https://courses.seas.harvard.edu/climate/eli/Courses/global-change-debates/Sources/Jet-stream-waviness-and-cold-winters/2-Francis-Vavrus-2012.pdf

This is of course only one set of observations that are “evidence or argument establishing or helping to establish a fact or the truth of a statement,” i.e. PROOF.

Brian Dodge #45

The science is far from settled:

[Overall, Woollings says “there are no clear trends in blocking seen against this noise”.

Shaffrey agrees:

“Historical weather records suggest that blocking hasn’t changed significantly over the past few decades. However, this is somewhat dependent on the method used to define blocking events.”]

[In general, models “predict a general decrease in blocking occurrence in the future”, says Woollings. He explains:

“The dynamics of blocking is focused up at jet-stream level in the atmosphere and here models predict the strongest warming in the tropics. This strengthens the jet-stream winds, making it harder for blocks to form.”]

https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2017GL073336

https://www.carbonbrief.org/jet-stream-is-climate-change-causing-more-blocking-weather-events

Continued (Brian Dodge, #45)

From your link,

“Two effects are identified that each contribute to a slower eastward progression of Rossby waves in the upper-level flow:

1) weakened zonal winds,

2) increased wave amplitude.”

————

Worth noting, the 2019/2020 winter was characterized by:

1) strong zonal winds

2) decreased wave amplitude

https://www.latimes.com/california/story/2020-03-28/if-a-warm-u-s-winter-was-a-preview-of-global-warming-what-part-did-a-polar-vortex-play

Again, the science is far from settled.

I wonder whether ECS exists at all as a defined number, which can, with the best of all sciences, be given with an uncertainty of, say, one decimal place. It may well be that different paths and details of approaching the doubling of CO2 lead to a very different, but in itself stable, value; in other words, that our dear earth is a multistable system with many quasistable states.

This would mean that there is an intrinsic uncertainty in the concept which cannot be decreased by any means or effort, and the uncertainty we currently have may well already be in the vicinity of that intrinsic uncertainty.

Supporting this assessment is the threshold-like behaviour of many subsystems. An argument against it is the strong stochastic variation in time of the climate/weather system, which makes it pass through many different temporary states, smoothing out the nonlinearity of the subsystems and ending up with a well-defined ECS.

If the latter is the case, the very complexity of the system would lead to a well defined ECS in a similar way the high number of particles in a thermodynamic system leads to a well defined temperature.

So the question is: true multistability with badly defined ECS – or enough complexity for a well defined ECS.

Gerald Machnee (35) “Does CO2 kill people? Nobody has shown that it does. The responses have gone off on a tangent going on to pollution which is a completely different subject.”

“tangential” is only your relationship with logic – you come to a CLIMATE change group and lecture others that people killed by the CLIMATIC effects of CO2 are tangential to you, because YOU wanted to prove that emissions of CO2 are harmless by asking about the impact of CO2 ONLY via deaths by inhalation (if you want those – see b.fagan (43))

GM:”Note the following: Data collected on nine nuclear-powered ballistic missile submarines indicate an average CO2 concentration of 3,500 ppm with a range of 0-10,600 ppm”

So what? No normal person would claim that the emissions of CO2 are benign because we are not at conc. that kill people by … mere inhalation. That’s like saying that smoking tobacco is OK, because very few people choke on cigarette smoke while dismissing as unproven (“WHO asserts, but has not proved it”) and as “tangential” posts that linked smoking with lung cancer and other diseases.

GM: “I see cooling just as likely as warming.”

Which says nothing about climate, but quite a bit about you. Next time do some reading _before_ you expose your ignorance to the world. And I mean scientific papers, not blogs of denialist trolls.

BP (over and over again): the science is far from settled.

BPL: That the globe is warming, that we’re doing it, and that it’s extremely dangerous, is all quite settled. The rest is details.