“The greenhouse effect is here.”

– Jim Hansen, 23rd June 1988, Senate Testimony

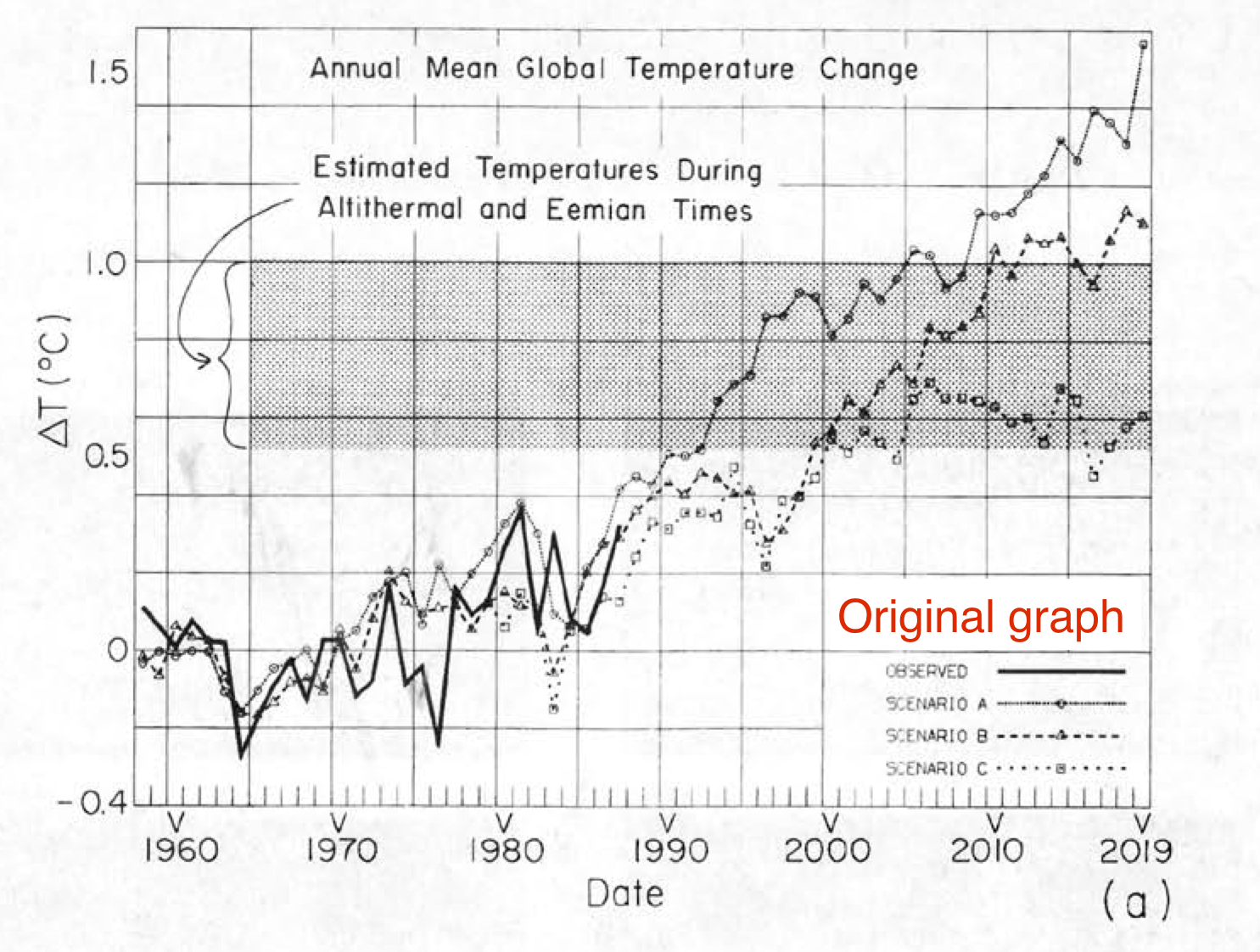

The first transient climate projections using GCMs are 30 years old this year, and they have stood up remarkably well.

We’ve looked at the skill in the Hansen et al (1988) simulations before (back in 2008), and we said at the time that the simulations were skillful and that differences from observations would be clearer with a decade or two’s more data. Well, another decade has passed!

How should we go about assessing past projections? There have been updates to historical data (what we think really happened to concentrations, emissions etc.), none of the future scenarios (A, B, and C) were (of course) an exact match to what happened, and we now understand (and simulate) more of the complex drivers of change which were not included originally.

The easiest assessment is the crudest. What were the temperature trends predicted and what were the trends observed? The simulations were run in 1984 or so, and that seems a reasonable beginning date for a trend calculation through to the last full year available, 2017. The modeled changes were as follows:

- Scenario A: 0.33±0.03ºC/decade (95% CI)

- Scenario B: 0.28±0.03ºC/decade (95% CI)

- Scenario C: 0.16±0.03ºC/decade (95% CI)

The observed changes 1984-2017 are 0.19±0.03ºC/decade (GISTEMP), or 0.21±0.03ºC/decade (Cowtan and Way), lying between Scenario B and C, and notably smaller than Scenario A. Compared to 10 years ago, the uncertainties on the trends have halved, and so the different scenarios are more clearly distinguished. By this measure it is clear that the scenarios bracketed the reality (as they were designed to), but did not match it exactly. Can we say more by looking at the details of what was in the scenarios more specifically? Yes, we can.
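The trend comparison above can be sketched as an ordinary least-squares fit. This is a minimal illustration, not the calculation behind the quoted numbers. The function name `decadal_trend` is hypothetical, the interval uses a normal critical value of 1.96 (a Student t value of about 2.04 for 32 degrees of freedom would be slightly more exact), and it ignores autocorrelation in the residuals, so it will generally be narrower than an interval that accounts for it. The annual anomaly series (e.g. GISTEMP for 1984-2017) has to be supplied by the reader.

```python
import math
from statistics import mean


def decadal_trend(years, temps, z=1.96):
    """Return (trend, half_width) in degrees per decade.

    Ordinary least squares on annual values. The half-width uses a normal
    critical value z and assumes independent residuals (no autocorrelation).
    """
    n = len(years)
    if n < 3:
        raise ValueError("need at least 3 points")
    xbar, ybar = mean(years), mean(temps)
    sxx = sum((x - xbar) ** 2 for x in years)
    slope = sum((x - xbar) * (y - ybar) for x, y in zip(years, temps)) / sxx
    intercept = ybar - slope * xbar
    resid = [y - (intercept + slope * x) for x, y in zip(years, temps)]
    s2 = sum(r * r for r in resid) / (n - 2)  # residual variance
    se = math.sqrt(s2 / sxx)                  # standard error of the slope
    return 10 * slope, 10 * z * se            # per year -> per decade
```

Called as `decadal_trend(list(range(1984, 2018)), anomalies)`, with `anomalies` holding one value per year, it returns the trend and its half-width in ºC/decade.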

This is what the inputs into the climate model were (CO2, N2O, CH4 and CFC amounts) compared to observations (through to 2014):

Estimates of CO2 growth in Scenarios A and B were quite good, but estimates of N2O and CH4 overshot what happened (estimates of global CH4 have been revised down since the 1980s). CFCs were similarly overestimated (except in scenario C which was surprisingly prescient!). Note that when scenarios were designed and started (in 1983), the Montreal Protocol had yet to be signed, and so anticipated growth in CFCs in Scenarios A and B was pessimistic. The additional CFC changes in Scenario A compared to Scenario B were intended to produce a maximum estimate of what other forcings (ozone pollution, other CFCs etc.) might have done.

But the model sees the net effect of all the trace gases (and whatever other effects are included, which in this case is mainly volcanoes). So what was the net forcing since 1984 in each scenario?

There are multiple ways of defining the forcings, and the exact value in any specific model is a function of the radiative transfer code and background climatology. Additionally, knowing exactly what the forcings in the real world have been is hard to do precisely. Nonetheless, these subtleties are small compared to the signal, and it’s clear that the forcings in Scenario A and B will have overshot the real world.

If we compare the H88 forcings since 1984 to an estimate of the total anthropogenic forcings calculated for the CMIP5 experiments (1984 through to 2012), the main conclusion is very clear – forcing in scenario A is almost a factor of two larger (and growing) than our best estimate of what happened, and scenario B overshoots by about 20-30%. By contrast, scenario C undershoots by about 40% (which gets worse over time). The slight differences because of the forcing definition, whether you take forcing efficacy into account and independent estimates of the effects of aerosols etc. are small effects. We can also ignore the natural forcings here (mostly volcanic), which is also a small effect over the longer term (Scenarios B and C had an “El Chichon”-like volcano go off in 1995).

The amount that scenario B overshoots the CMIP5 forcing is almost equal to the over-estimate of the CFC trends. Without that, it would have been spot on (the over-estimates of CH4 and N2O are balanced by missing anthropogenic forcings).

The model predictions were skillful

Predictive skill is defined as whether the model projection is better than you would have got assuming some reasonable null hypothesis. With respect to these projections, this was looked at by Hargreaves (2010) and can be updated here. The appropriate null hypothesis (which at the time would have been the most skillful over the historical record) would be a prediction of persistence of the 20-year mean, i.e., the 1964-1983 mean anomaly. Whether you look at the trends or annual mean data, this gives positive skill for all the model projections regardless of the observational dataset used. That is, all scenarios gave better predictions than a forecast based on persistence.
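One common way to quantify skill against a reference forecast is a mean-squared-error skill score, S = 1 - MSE(model)/MSE(reference), where S > 0 means the projection beats the reference. The sketch below assumes that form (it has not been checked against every detail of Hargreaves (2010)), and the function names are hypothetical. The persistence reference simply repeats the mean anomaly of a baseline period, such as 1964-1983, for every forecast year.

```python
from statistics import mean


def skill_score(model, obs, reference):
    """MSE-based skill: 1 - MSE(model) / MSE(reference).

    1 is a perfect forecast, 0 is no better than the reference,
    and negative values are worse than the reference.
    """
    if not (len(model) == len(obs) == len(reference)):
        raise ValueError("series must have equal length")
    mse_model = mean((m - o) ** 2 for m, o in zip(model, obs))
    mse_ref = mean((r - o) ** 2 for r, o in zip(reference, obs))
    return 1.0 - mse_model / mse_ref


def persistence_forecast(baseline_anomalies, n_years):
    """Null forecast: repeat the mean of the baseline period for n_years."""
    level = mean(baseline_anomalies)
    return [level] * n_years
```

A scenario's annual anomalies, the observed anomalies over the same years, and `persistence_forecast(baseline, len(obs))` are the three inputs; a positive result means the scenario outperformed persistence.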

What do these projections tell us about the real world?

Can we make an estimate of what the model would have done with the correct forcing? Yes. The trends don’t completely scale with the forcing but a reduction of 20-30% in the trends of Scenario B to match the estimated forcings from the real world would give a trend of 0.20-0.22ºC/decade – remarkably close to the observations. One might even ask how would the sensitivity of the model need to be changed to get the observed trend? The equilibrium climate sensitivity of the Hansen model was 4.2ºC for doubled CO2, and so you could infer that a model with a sensitivity of say, 3.6ºC, would likely have had a better match (assuming that the transient climate response scales with the equilibrium value which isn’t quite valid).
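The back-of-the-envelope scaling above is just multiplication. The sketch assumes, as the text cautions is only approximately true, that the modeled trend scales linearly with forcing. `rescale_trend` is a hypothetical helper, and the 0.28ºC/decade value is the Scenario B trend quoted earlier.

```python
def rescale_trend(model_trend, reduction):
    """Reduce a modeled trend (degC/decade) by a fractional amount.

    Assumes the trend is proportional to the forcing, which is only
    approximately true for a real model.
    """
    return model_trend * (1.0 - reduction)


SCENARIO_B_TREND = 0.28  # degC/decade, 1984-2017, as quoted in the text

low = rescale_trend(SCENARIO_B_TREND, 0.30)   # 30% reduction
high = rescale_trend(SCENARIO_B_TREND, 0.20)  # 20% reduction
print(f"{low:.2f}-{high:.2f} degC/decade")
```

Treating the overshoot as a ratio instead (dividing by 1.2-1.3 rather than subtracting 20-30%) gives roughly 0.22-0.23ºC/decade.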

Hansen was correct to claim that greenhouse warming had been detected

In June 1988, at the Senate hearing linked above, Hansen stated clearly that he was 99% sure that we were already seeing the effects of anthropogenic global warming. This is a statement about the detection of climate change – had the predicted effect ‘come out of the noise’ of internal variability and other factors? And with what confidence?

In retrospect, we can examine this issue more carefully. By estimating the response we would see in the global means from just natural forcings, and including a measure of internal variability, we should be able to see when the global warming signal emerged.

The shading in the figure (showing results from the CMIP5 GISS ModelE2) is a 95% confidence interval around the “all natural forcings” simulations. From this it’s easy to see that temperatures in 1988 (and indeed, since about 1978) fall well outside the uncertainty bands. 99% confidence is associated with data more than ~2.6 standard deviations outside of the expected range, and even if you think that the internal variability is underestimated in this figure (double it to be conservative), the temperatures in any year past 1985 are more than 3 s.d. above the “natural” expectation. That is surely enough clarity to retrospectively support Hansen’s claim.
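The link between standard deviations and confidence quoted above can be checked with the normal distribution. This is a minimal sketch, assuming the spread of the natural-only simulations is Gaussian. The helper names and the inflation factor `inflate` (2.0 to "double" the internal variability) are our own, and no real model output is used.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a standard normal deviate z."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def sigma_excess(anomaly, natural_mean, natural_sd, inflate=1.0):
    """How many standard deviations an observed anomaly sits above the
    'natural forcings only' mean; inflate > 1 widens the assumed spread."""
    return (anomaly - natural_mean) / (natural_sd * inflate)


print(two_sided_p(2.576))  # about 0.01, i.e. 99% two-sided confidence
print(two_sided_p(3.0))    # about 0.0027
```

Doubling the assumed spread halves the number of standard deviations, so an anomaly must sit 6 s.d. above the original natural-only mean to remain 3 s.d. above it after doubling.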

At the time, however, the claim was more controversial; modeling was in its early stages, and estimates of internal variability and the relevant forcings were poorer, so Hansen was going out on a little bit of a limb based on his understanding and insight into the problem. But he was right.

Misrepresentations and lies

Over the years, many people have misrepresented what was predicted and what could have been expected. Most (in)famously, Pat Michaels testified in Congress about climate changes and claimed that the predictions were wrong by 300% (!) – but his conclusion was drawn from a doctored graph (Cato Institute version) of the predictions where he erased the lower two scenarios:

Undoubtedly there will be claims this week that Scenario A was the most accurate projection of the forcings [Narrator: It was not]. Or they will show only the CO2 projection (and ignore the other factors). Similarly, someone will claim that the projections have been “falsified” because the temperature trends in Scenario B are statistically distinguishable from those in the real world. But this sleight of hand is trying to conflate a very specific set of hypotheses (the forcings combined with the model used) which no-one expects (or expected) to perfectly match reality, with the much more robust and valid prediction that the trajectory of greenhouse gases would lead to substantive warming by now – as indeed it has.

References

- J. Hansen, I. Fung, A. Lacis, D. Rind, S. Lebedeff, R. Ruedy, G. Russell, and P. Stone, "Global climate changes as forecast by Goddard Institute for Space Studies three‐dimensional model", Journal of Geophysical Research: Atmospheres, vol. 93, pp. 9341-9364, 1988. http://dx.doi.org/10.1029/JD093iD08p09341

- J.C. Hargreaves, "Skill and uncertainty in climate models", WIREs Climate Change, vol. 1, pp. 556-564, 2010. http://dx.doi.org/10.1002/wcc.58

Does this mean that:

“…3.6ºC, would likely have had a better match (assuming that the transient climate response scales with the equilibrium value which isn’t quite valid)”

over the 30 year time period of Hansen’s prediction, the equilibrium sensitivity has been 3.6C? That this 30 years includes some of the slow responses of the earth to CO2 increase?

Victor@42

Tony Heller aka Steven Goddard does not back up assertions with tangible evidence from the reality-based universe. So you have not successfully rained on anyone’s parade but your own.

https://www.desmogblog.com/steven-goddard

> Victor … Tony Heller

Yah, yet another “birther” conspiracy theorist. You do know how to pick’em, Victor.

I think the accuracy of the temperature predictions is rather irrelevant compared to the important possible consequences of the emissions already made and the resulting rise in temperature. For some time it has been clear that even during the warming at the end of the last ice age (which was much slower than the warming we have experienced during the last century, not to speak of the last thirty years), there were phases when big parts of the global atmospheric circulation shifted in just one to three years.

http://science.sciencemag.org/content/321/5889/680

If something like that was to happen now, the consequences for global food production would be fatal.

The important thing now is warning the public of this danger. During the 2010 Russian heatwave we already saw what even that relatively small disruption meant: no Russian wheat exports in 2011 and, resulting from that, the Arab Spring.

Johnno @ 35 says:

That is correct, but my point was twofold, first the Tesla battery is just one example of new and radically disruptive technologies applied to grid management. And second there is no longer a need for massive amounts of power for hours at a stretch. We are in the process of transitioning to a completely new energy producing paradigm which is making both coal and nuclear obsolete.

David B. Benson @ 37 says:

Thank you, but I have no horse in that race and am not particularly interested in pursuing that discussion. I think my previous point makes it moot. In any case, my reply to the initial point, made by Johnno @ 21, was that Dr Hansen’s opinion on the benefits of nuclear power generation, which he is certainly entitled to hold, is a completely separate matter from his quite well documented competence as a climate scientist. Therefore, IMHO, it is not so strange that some climate activists who hold Dr. Hansen in quite high esteem may not think that nuclear power is such a panacea, given how technology has been evolving. A prolonged discussion about the pros and cons of nuclear would be a distraction and does not address the original point.


Could Victor be a nom de guerre climatique for Tim Ball ?

It’s amusing to see all the usual suspects crawling out of the woodwork at the mention of Tony Heller’s name. Hello again, everyone!

Why am I not surprised to see a vituperative string of ad hominems and insults in response to a remarkably straightforward video presentation? I find it especially amusing when Heller is accused of rehashing “old stuff” in response to all the hype over forecasts now all of 30 years old. It’s Hansen and Co. who are now recycling all the old stuff — Heller is just trying to keep them honest about it.

Can we cut all the personal crap and get down to the facts?

In 1988, Hansen predicted “Our climate model simulations for the late 1980s and 90s indicate a tendency for an increase in heat wave drought situations in the southeast and midwest United States.”

According to Heller, “since Hansen’s forecast for Midwest drought, the Midwest has had above normal precipitation almost every year.” To support his contention, he displays the following graph, courtesy of NOAA: https://realclimatescience.com/wp-content/uploads/2018/04/2018-04-18044503_shadow.png

In case you might want to accuse Heller of cherry picking, here’s a graph, also courtesy of NOAA, representing “Average Drought Conditions in the Contiguous 48 States, 1895–2015”: https://www.epa.gov/sites/production/files/styles/large/public/2016-07/drought-figure1-2016.png (from https://www.epa.gov/climate-indicators/climate-change-indicators-drought) Do you see an upward trend? I don’t.

In 1988, “Hansen is forecasting a huge increase in the number of 90 degree and 100 degree days” in Washington D.C. and Omaha. Heller then displays a graph of days above 90 degrees in Ashland, Nebraska (weather station closest to Omaha), wherein we see a downward trend from 1896 to 2013. “Similarly the number of 100 degree days has also plummeted,” as pictured in the following graph he displays.

Heller then displays similar graphs representing similar declines in the Washington D.C. area for both 90 and 100 degree days.

Heller then refers to Hansen’s prediction of 2008 that “in five or ten years the arctic will be free of sea ice in the summer.” He then displays this graph, noting correctly that “sea ice volume is the highest it’s been since 2005.” https://realclimatescience.com/wp-content/uploads/2018/06/chart1_shadow-22.png

In 1988, Hansen predicted that “heat would cause inland waters to evaporate more rapidly, thus lowering the level of bodies of water such as the Great Lakes.” In response, Heller displays a “Great Lakes Dashboard,” showing that “Great Lakes water levels have increased and are near record highs.” https://realclimatescience.com/wp-content/uploads/2018/06/2018-06-21070140_shadow-1024×471.png

Heller notes that in 1988 (or possibly 1989), “Hansen predicted that Lower Manhattan would be underwater by 2018.”

According to Hansen, “The West Side Highway [which runs along the Hudson River] will be under water.” Heller then displays the following photo showing the highway more or less as it’s always been, with no sign of flooding: https://realclimatescience.com/wp-content/uploads/2018/06/2018-06-21070835_shadow.png

Heller then displays a graph of lower Manhattan sea levels over the last 20 years: https://realclimatescience.com/wp-content/uploads/2018/06/chart3_shadow-4.png

According to Heller, this graph indicates that “sea level there is about the same as it was 20 years ago.” While sea levels have in fact been steadily rising since around 1900, as can be seen in this more comprehensive graph (https://tidesandcurrents.noaa.gov/sltrends/plots/8518750_meantrend.png), the rise has been slow and steady overall, with no sign of the precipitous increase that would be required to fulfill Hansen’s alarming prophecy.

Admittedly there has been some question over whether Hansen really meant “20 or 30 years,” or the reporter got it wrong and the correct estimate was 40 years. However, if, after 30 years, we see no sign of any tendency toward the sort of extreme acceleration required by Hansen’s prophecy, it’s hard to accept that sea levels could suddenly lurch upward to the necessary extent over the next ten years.

While it might be possible to argue that at least some of Hansen’s predictions (or if you prefer, “projections”) seem to have panned out, I’m sorry but that’s not good enough. I too could predict all sorts of things pertaining to future weather conditions or climate conditions or even stock market prices, and the odds are that at least some of them will come true. Would that make me a prophet? Hardly — as should be obvious.

Comrades,

Ice breaker assistance needed later than expected in the Arctic:

https://thebarentsobserver.com/en/2018/06/midsummer-tankers-get-ice-trapped-near-russian-arctic-port

V 57: In 1988, Hansen predicted “Our climate model simulations for the late 1980s and 90s indicate a tendency for an increase in heat wave drought situations in the southeast and midwest United States.”

According to Heller, “since Hansen’s forecast for Midwest drought, the Midwest has had above normal precipitation almost every year.”

BPL: Precipitation isn’t the only factor in drought. Temperature, wind speed, and a number of other factors also matter. It’s an old prediction of AGW theory that precipitation would rise with temperature. Go get a look at the Penman-Monteith equation for evapotranspiration, Victor. That will begin to tell you why Tony “It snows CO2 in the Antarctic” Heller is wrong once again.
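To make BPL's point concrete, here is a minimal sketch of the FAO-56 daily reference-evapotranspiration form of the Penman-Monteith equation. Holding radiation, wind and relative humidity fixed, a warmer day evaporates more water, so drought stress can rise even if precipitation does too. Assumptions: the function names are ours, actual vapour pressure is approximated as RH% of the saturation value at the mean temperature (FAO-56 itself prefers daily maximum and minimum temperatures), and the example inputs are arbitrary round numbers, not observations.

```python
import math


def sat_vapour_pressure(t_c):
    """Saturation vapour pressure (kPa) at air temperature t_c (degC)."""
    return 0.6108 * math.exp(17.27 * t_c / (t_c + 237.3))


def reference_et0(t_c, rn, u2, rh, g=0.0, elevation=0.0):
    """Daily FAO-56 Penman-Monteith reference evapotranspiration (mm/day).

    t_c: mean air temperature (degC); rn, g: net radiation and soil heat
    flux (MJ m-2 day-1); u2: wind speed at 2 m (m/s); rh: mean relative
    humidity (%); elevation: metres above sea level.
    Simplification: actual vapour pressure = rh% of es(t_c).
    """
    es = sat_vapour_pressure(t_c)
    ea = es * rh / 100.0
    delta = 4098.0 * es / (t_c + 237.3) ** 2   # slope of es curve, kPa/degC
    pressure = 101.3 * ((293.0 - 0.0065 * elevation) / 293.0) ** 5.26  # kPa
    gamma = 0.665e-3 * pressure                 # psychrometric constant, kPa/degC
    num = (0.408 * delta * (rn - g)
           + gamma * (900.0 / (t_c + 273.0)) * u2 * (es - ea))
    den = delta + gamma * (1.0 + 0.34 * u2)
    return num / den
```

With `rn=15`, `u2=2` and `rh=50` at sea level, `reference_et0(20, ...)` comes out at roughly 5.3 mm/day and `reference_et0(25, ...)` at roughly 6.0 mm/day.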

Victor, #57–

What a Gish gallop of nonsense! I won’t try to address every point, but let’s take as a sample the sea ice bit. It’s sourced from here:

https://realclimatescience.com/2018/06/nasa-ice-free-prophesy-update/

It’s quite clear that the ‘five to ten years’ is not a scientific prediction, but an off-hand descriptive statement. (Note that Hansen’s exact words are not even quoted in the report; the statement is a summary by reporter Seth Borenstein.) In 2008 everyone concerned was still stunned by the then-record low extent of fall 2007 (following a pretty stunning record set in 2005) and was understandably thinking that it might be mostly forcing, as opposed to forcing plus variability.

More instructive, however, is how Goddard misuses data to suggest that sea ice is somehow just fine. By cherry-picking the Danish volume data, rather than the generally better-regarded PIOMAS data, and by focusing on just two points in the time series, he suggests that sea ice volume has increased since 2008. This is emphatically not the case (unless by ‘increase’ you mean short-term ‘wobbles’.)
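Why two hand-picked points can mislead is easy to show with a toy series. The numbers below are synthetic (a steady decline of 0.5 units per year plus two "wobbles"), not sea-ice data. Comparing year 5 with year 10 suggests an increase, while a least-squares slope over all eleven years is still clearly negative.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    return sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx


# Synthetic illustration only: steady decline, a dip at year 5, a bump at year 10.
years = list(range(11))
wobble = [0, 0, 0, 0, 0, -3, 0, 0, 0, 0, 1]
values = [20 - 0.5 * t + w for t, w in zip(years, wobble)]

two_point_change = values[10] - values[5]  # +1.5: looks like a "recovery"
trend = ols_slope(years, values)           # about -0.45 per year: still declining
print(f"two-point change {two_point_change:+.1f}, OLS slope {trend:+.2f}/yr")
```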

PIOMAS long-term record for April and September:

http://psc.apl.uw.edu/wordpress/wp-content/uploads/schweiger/ice_volume/BPIOMASIceVolumeAprSepCurrent.png

(Note that the record-low volume for April was set just last year.)

Long term anomaly trend:

http://psc.apl.uw.edu/wordpress/wp-content/uploads/schweiger/ice_volume/BPIOMASIceVolumeAnomalyCurrentV2.1.png

I’d also add that Goddard is cherry-picking in another way by using the volume data and completely ignoring anything else: the volume data is in fact modeled data (i.e., based on reanalysis). In a way, it’s the most important parameter, but it’s also the most uncertain, because ice thickness is really tough to measure on a pan-Arctic basis. And volume is pretty noisy, too, as you can see in the plots, which allows Goddard/Heller lots of latitude to distort the narrative by his selective focus, latitude he certainly takes full advantage of.

The bottom-line reality is that while ‘forecasts’ of an ice-free Arctic by this year were clearly not correct (most such, like the reported Hansen comment, were not actual predictions in a proper scientific sense but descriptive comments), the Arctic sea ice is in a robust decline which continues to this day. Luckily, this June has been relatively kind to it, with relatively slow melt rates recorded. But that isn’t a change in trend; that’s weather. And we are still at about the 3rd-lowest ice extent on record (depending on data set).

@58

So one port in one country is now “the Arctic” according to our esteemed troll? I know deniers like to scream “recovery” every time a wiggle goes up rather than down, but still. This one is a real stretch.

Right now it’s not a record-breaking year. It is however perfectly part of the downward trend observed over the past 30 years. https://nsidc.org/arcticseaicenews/charctic-interactive-sea-ice-graph/

Andy @51,

A Hansen model was used (along with a Manabe model) when the Charney Report set out ECS in 1979 so presumably the ice-cover and the carbon-cycle are modelled in the 1988 version. I would assume the ECS=3.6ºC is derived from the actual model’s ECS=4.2ºC due to the assessment of forcing from 2xCO2 having been higher back then.

So, yes, a 30-year-old model with an effective ECS=3.6ºC matches recent temperature rises. And to say that the Hansen model included “some of the slow responses” probably would also be answered ‘yes’ but it should be made clear with that ‘yes’ that not anything like “all” of those slow responses are modelled.

Count on Weaktor to be a faithful conduit for stupid. Stupid it may be. However, it is useful to dissect it to see how denialists lie by distortion.

First: Drought–here Heller/Goddard carefully selects a statistic that sounds like the quantity Hansen discusses but that differs in a subtle but significant manner. In this case, he is looking at the drought index for the lower 48–which is NOT the southeast or midwest. Average over a big enough area, and you lose the signal. Here is a more apples to apples comparison:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4577202/

As to the ice-free Arctic statement–that statement was made after the unprecedented fall in ice area after 2007. This was not a prediction of the models. It was an acknowledgement that the models grossly underpredict ice loss. So, yes, the models are flawed–but certainly not in a way that supports Weaktor/Heller/Other morons. At the time, NOBODY knew what would happen. Hansen made the mistake of extrapolating the short-term behavior into the future–a forgivable mistake given the unprecedented nature of what had just happened.

And the West Side Highway comment is known to be taken completely out of context–so much so, that we know anyone who cites it is dishonest.

Models are looking pretty good. Again, Weaktor, thanks to you and Tony for being on the other side.

Victor @57 posts copy-and-paste information: “In 1988, Hansen predicted that ‘heat would cause inland waters to evaporate more rapidly, thus lowering the level of bodies of water such as the Great Lakes.’ In response, Heller displays a ‘Great Lakes Dashboard,’ showing that ‘Great Lakes water levels have increased and are near record highs.’” https://realclimatescience.com/wp-content/uploads/2018/06/2018-06-21070140_shadow-1024×471.png

Selective, misleading rubbish that cherrypicks a water-level trend from 1990 to 2015. Look further back, from 1920 to 2018, by adjusting the slider controls on the website, and you can plainly see the water levels have a falling linear trend from the 1980s to 2018, corresponding nicely with the modern global warming period.

The recent so-called recovery is not so significant and is not a record when you do this. The long-term trend has various short-term recoveries, but the overall trend from the 1980s to 2018 is towards lower water levels.

I don’t frequent the sceptic blogs, so someone may have pointed this out already. Figure 1 here, posted by Gavin, does not really show that the recorded temperatures are following Scenario B, but rather Scenario C. By a strange coincidence, the recorded temperatures jumped in 2005 and 2006, probably due to the El Nino. It is now falling back. At the same time, in 2006, there was a drop in Scenario B, due to natural variation? Overall, Scenario C is a better match.

This implies that the models are overestimating the effects of greenhouse gases, and the unthinkable is true – they are wrong.

The radiation trapped by the greenhouse gases is saturated, and the effect of their increase is to raise the temperature of the air close to the surface in the surface layer where no convection happens. The effect is to melt snow and ice and increase evaporation from the oceans. These are the effects we see. However, melting of snow and ice also reduces the global albedo resulting in a rise in global temperatures. This is what is causing global warming, not the direct effect of greenhouse gases.

Although warming is only rising slowly at present, when the Arctic sea ice disappears, then there will be a major change in albedo and we can expect an abrupt climate change.

That, of course, means that any plans to keep temperatures below +1.5C will be scuppered.

Victor (@57) unloads a whole mess of dishonest data. Let’s take a look…

On drought, as pointed out already, Heller shows a graph of precipitation (problem 1 – not a direct measure of drought) for only a limited part of the Northwest (problem 2 – possible cherry-picking alert). The third problem is that the data starts at 1988, taking away the context from the previous decades.

Victor then links to EPA data on an actual drought metric and states that he doesn’t see an increasing trend. Not trusting Victor’s eyes (or my own), I actually did a regression of the last 4 decades (basically of the most dramatic warming period, and most likely to be relevant to Hansen’s study). Guess what? I got a trend showing drought. I got an increasing trend over the last two decades as well. Now, neither trend was significant, but then Hansen did state that it was only a “tendency”, so I think significance is not necessarily a deal-breaker.

So, on drought, I’d say Hansen did OK.

On the “huge increase” in hot days in two regions, Heller doesn’t state which scenario this is based on. If it was for scenario A, I am not remotely surprised if we vastly undershot, as total forcings didn’t remotely resemble scenario A. But this is Heller, a serial liar, reporting this. He gets NO benefit of the doubt. I would also add that regional forecasts are still the least robust, so I can’t imagine they had much skill back in 1988. To ding Hansen on this, even if it’s an honest ding (doubtful), is just silly.

I just don’t believe Hansen made the prediction that was reported about no summer sea ice in the Arctic. It certainly had no basis in any modeled result that Hansen published. I suspect the quote was taken horribly out of context. But what’s interesting here is just how incredibly dishonest Heller was in his video. He showed a graph of annual volume figures that shows volumes staying pretty steady. WOW! I thought for sure they had been falling pretty steadily (note the graph to which Kevin linked). Ahh… Heller showed the data for ONE DAY from each year. Who does he think he’s fooling? (Oh wait, we KNOW who he fooled!)

The lower Manhattan thing is well known and wildly misinterpreted. There’s no point in rehashing it, but it is instructive to see who “goes there”.

Finally, when people talk about the skill demonstrated by Hansen’s model from the seminal 1988 paper, they are talking about one thing: the skill in predicting global warming based on total forcings. To demonstrate that, I repost a link I put up last week:

Zeke Hausfather posted this fantastic graph via Twitter comparing temperatures vs. cumulative forcings for the scenarios and observed.

https://twitter.com/hausfath/status/1010240656004927491?s=12

All I have to say is DAMN that’s good.

Oh, one more thing. THIS is why Victor and his ilk are deniers. They accept uncritically almost any tripe put out by fellow members of the denialati. But when Mann links to some graphs of stratospheric temperatures, he is dismissed as a mathematician and of no account relative to the august geographer who wrote a report published by a think tank. Why a geographer’s comments about satellite-measured stratospheric temperatures would hold more weight is beyond me.

Alistair @65, you say “This implies that the models are overestimating the effects of greenhouse gases, and the unthinkable is true – they are wrong.”

Maybe read everything that Gavin wrote?

MartinJB @66: Beyond not trusting Heller on being honest in his reporting, you also have to add outright incompetence, as repeatedly shown by Nick Stokes.

With the “hot days” there remains the problem of changing methodology in measuring temperatures over the last century. Not just different Tobs, but also changes in instrumentation.

t marvell: The WSJ has a pay wall. I suspect others

AB: would never give that rag $1 to see behind the curtain. (but I do give to The Guardian, NPR, and others)

—————–

Kevin M: as ‘tangible’ as that CO2 snow he once claimed fell at the South Pole.

AB: dry ice sublimates at -78.5 degrees C at 1 atmosphere. Vostok Station in Antarctica on July 21, 1983 measured −89.2 °C. So case closed! Steve Goddard didn’t have to check whether it snowed CO2, cuz a degree is a degree, eh?

However, the phase change depends on the partial pressure of CO2 (as opposed to the total pressure of all gases).

Good thing Steve Goddard is a snow jobber cuz otherwise all those scientists would be dead when they inhaled dry ice and it exploded their lungs. (OK, I don’t actually know that)
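AB's partial-pressure point can be estimated with the integrated Clausius-Clapeyron relation. This is a rough sketch under stated assumptions: a constant latent heat of sublimation of about 25.2 kJ/mol, ideal-gas behaviour, a reference point of 194.7 K (-78.5 ºC) at 1 atm, and, for the example, round numbers of ~400 ppm CO2 and ~620 hPa station pressure for a high Antarctic site. None of these constants come from the thread, and `co2_frost_point` is a hypothetical helper.

```python
import math

R = 8.314         # J mol-1 K-1, gas constant
L_SUB = 25.2e3    # J/mol, approximate latent heat of sublimation of CO2 (held constant)
T_REF = 194.7     # K, sublimation point of pure CO2 at 1 atm (-78.5 degC)
P_REF = 101325.0  # Pa, 1 atm


def co2_frost_point(partial_pressure_pa):
    """Approximate temperature (K) at which CO2 at the given partial pressure
    would deposit as solid, from ln(p/p0) = -(L/R) * (1/T - 1/T0)."""
    inv_t = 1.0 / T_REF - (R / L_SUB) * math.log(partial_pressure_pa / P_REF)
    return 1.0 / inv_t


p_co2 = 400e-6 * 62000.0  # ~400 ppm of ~620 hPa, about 25 Pa
print(co2_frost_point(p_co2))
```

With those round numbers the estimated frost point is roughly 127 K (about -146 ºC), far colder than the -89.2 ºC Vostok record.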

————-

Jim B: still cling to the notion that 1.5C is still achievable?

AB: Because the oceans will keep drawing down CO2 levels at the current rate (if the overturning circulations don’t slow further or stall). Thus, 350 is still achievable. (In Fantasyland)

———

AND FOLKS, STOP POSTING FORCED RESPONSES STUFF ANYWHERE ELSE!!!!! If some twit says something off topic, don’t go nuclear here! Instead, RESPOND ON FORCED RESPONSES. Duh, eh?

I must say I’m puzzled by the use of the term “null hypothesis” in two graphs from the above essay; specifically why the null hypothesis is equated with “persistence.”

As I understand it, the null hypothesis, in laymen’s terms at least, is the generally accepted interpretation, such as, for example, the notion that the Earth is the center of the universe, which was commonly accepted prior to Copernicus. The “alternate” hypothesis is understood as a hypothesis that challenges the accepted view — so Copernicus’ theory would have been, at the time, the “alternate hypothesis.”

Here’s one example of how it’s been defined: “The null hypothesis, H0 is the commonly accepted fact; it is the opposite of the alternate hypothesis. Researchers work to reject, nullify or disprove the null hypothesis. Researchers come up with an alternate hypothesis, one that they think explains a phenomenon, and then work to reject the null hypothesis.” — http://www.statisticshowto.com/probability-and-statistics/null-hypothesis/

The generally accepted view of climate change, prior to the theories of Hansen and certain predecessors, was that it was due to natural variation, which, as it seems to me, would make natural variation the null hypothesis. I can’t imagine that anyone would assume that “persistence” was the generally accepted view, since as is well known, the climate has varied considerably for millions of years.

If we were to accept “persistence” as the null hypothesis, then of course it would be easy to claim that Hansen has refuted it, since all the graphs show temperature rise rather than persistence. If on the other hand, we see “natural variation” as the null hypothesis, which makes far more sense, then there would seem to be nothing in any of Hansen’s projections/predictions that comes close to refuting it — even if all his forecasts had been verified (they haven’t), there is nothing in any of them that challenges the possibility that every change could be due to natural variation.

Re #65 I should have written “the recorded temperatures jumped in 2015 and 2016, probably due to the El Nino. It is now falling back. At the same time, in 2016, there was a drop in Scenario B, due to “natural” variation? Overall, Scenario C is a better match for what really happened.” 2005 and 2006 were just typos.

My apologies to all those who have written to correct me :-)

“since as is well known, the climate has varied considerably for millions of years.”

Not on these time scales, Victor. You might want to try reading Julia Hargreaves’s paper. Oh wait, that’s science that contradicts your viewpoint, so you’ll do whatever you can to ignore it anyway.

“there is nothing in any of them that challenges the possibility that every change could be due to natural variation.”

Yes there is: there is no known natural driver that would push the variation into a persistent upward trend.

Victor, Aristotle couldn’t have put it better.

But then, he also insisted that quartz was just another form of water, like diamonds and pearls.

Victor @70: You wrote that you are ‘puzzled by the use of the term “null hypothesis” in two graphs from the above essay; specifically why the null hypothesis is equated with “persistence.”’

But the essay doesn’t equate the null hypothesis with persistence. It equates it with “a prediction of persistence of the 20 year mean, i.e. the 1964-1983 mean anomaly.” Isn’t that the natural variation you want?

@70

“As I understand it, the null hypothesis, in layman’s terms at least, is the generally accepted interpretation, such as, for example, the notion that the Earth is the center of the universe, which was commonly accepted prior to Copernicus.”

Unfortunately, and as usual, what you “understand” has little relation to reality. Nor does the “Stephanie” you quote. That site, you may note, is aimed specifically at non-statisticians, including students at AP level. She is trying to be helpful, but it is clear she is not speaking to anyone at a professional level. She also severely glosses over the difference between experimental and nonexperimental usages of the term. But then, she is talking to high school students, after all.

WRT your Hansen statements, the most basic null hypothesis is that over time period X “natural variation” (i.e., no trend) explains the observed variation. One could work in more refined null hypotheses that take into consideration the long term “climate is always changing” drivel you bring up. But the fact is that the “always changing” factors 1. operate at rates which are orders of magnitude less than that which is happening and 2. presently act in the cooling, not warming, direction.

I would suggest an actual book, tamino’s comes to mind http://www.lulu.com/shop/grant-foster/noise-lies-damned-lies-and-denial-of-global-warming/paperback/product-11254204.html but surely you would never read or understand it. If you understood the wiki page on the subject https://en.wikipedia.org/wiki/Null_hypothesis, you’d have a start.

Oh, Weaktor, is there any subject not encompassed by your vast cluelessness?

I respond only because there is general confusion about the meaning of a null hypothesis. The null hypothesis is simply an artifact of statistical methodology made possible by the fact that most methods of estimating statistical significance cannot produce an absolute answer, but only a comparative one.

In the above case, the question of interest is whether there is a significant positive forcing. In the absence of such a forcing, we would expect the average temperature to remain the same within the bounds of error due to natural variability. Thus, one could compare the moving average vs. the mean for the preceding period to see if the disagreement is outside what would be expected due to natural variation. This would likely be most easily done using Monte Carlo.

The question you are really asking is whether the data are better explained if your hypothesis is true than if it isn’t and how significant that improvement is.
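The Monte Carlo comparison described above can be sketched in a few lines of Python. This is a minimal illustration under a loudly stated simplifying assumption (independent, normally distributed annual noise with the baseline period’s mean and spread), not how the essay’s null-hypothesis panels were actually computed. The function name `persistence_null_test` is hypothetical, and the numbers in the usage block are synthetic values chosen only to show the mechanics.

```python
import random
import statistics


def persistence_null_test(baseline, recent, n_sims=10_000, seed=0):
    """One-sided Monte Carlo test of a 'persistence' (no forced change) null.

    Simplifying assumption: under the null, each recent year is an
    independent normal draw with the baseline period's mean and standard
    deviation. Real annual temperatures are autocorrelated, which would
    widen the null distribution; this sketch ignores that.
    """
    rng = random.Random(seed)
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    observed_shift = statistics.mean(recent) - mu

    exceed = 0
    for _ in range(n_sims):
        # Simulate a recent period of the same length with no forced change.
        simulated = [rng.gauss(mu, sigma) for _ in recent]
        if sum(simulated) / len(simulated) - mu >= observed_shift:
            exceed += 1

    # Fraction of no-change simulations at least as warm as observed.
    return observed_shift, exceed / n_sims


if __name__ == "__main__":
    # Synthetic, illustrative anomalies only (not real data).
    baseline = [0.0, 0.1, -0.1, 0.05, -0.05] * 4
    recent = [0.3, 0.25, 0.35, 0.3, 0.4]
    shift, p = persistence_null_test(baseline, recent)
    print(f"shift = {shift:.2f}, one-sided p = {p:.4f}")
```

A small fraction means a no-change world with the baseline’s noise level rarely produces a recent period that warm. A more careful version would simulate autocorrelated (for example AR(1)) noise instead of independent draws.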

Victor, #70–

Since natural variation is essentially random or quasi-random noise around a stationary mean, and since that noise is in principle (and in practice) not highly amenable to forecasting, “persistence” is (the practical version of) “natural variability.”

Victor, I don’t usually like people replying to Bore Holed comments (because there’s a reason things end up there), but I have to make an exception in this case. You accused me of fiddling with data and methods. I take my integrity pretty fucking seriously, so you can imagine the impolite two words I have in mind, but I’m gonna be more civil: Sorry Charlie. That’s what your denialist fellow travelers do. I just ran a simple linear regression on the data to which you linked. Doesn’t agree with your eyes? Please. Your ignore-the-numbers eyeball science is trash. Like every one of your arguments. Seriously. Almost every last argument you’ve made since you landed here has been utter garbage.
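For readers wondering what “a simple linear regression” involves, and how a trend’s significance is judged numerically rather than by eye, here is a minimal ordinary least-squares sketch in Python. It is not MartinJB’s actual calculation; the function name `ols_trend` is hypothetical, and it assumes independent residuals, so for autocorrelated climate data the standard error it reports would be too small. For reasonably long series, a |t| larger than roughly 2 corresponds to the conventional 5% two-sided significance level.

```python
import math


def ols_trend(x, y):
    """Ordinary least-squares slope, its standard error and t-statistic.

    Assumes independent, identically distributed residuals; serial
    correlation in real climate series would make the true uncertainty
    larger than this estimate.
    """
    n = len(x)
    if n < 3:
        raise ValueError("need at least 3 points to estimate a standard error")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance uses n - 2 degrees of freedom (slope and intercept fitted).
    ssr = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(ssr / (n - 2) / sxx)
    # A perfect fit has zero standard error; report an infinite t in that case.
    t = slope / se if se > 0 else math.copysign(math.inf, slope)
    return slope, se, t


if __name__ == "__main__":
    # Synthetic, illustrative series only (not the EPA drought data).
    years = [2000, 2001, 2002, 2003, 2004, 2005]
    values = [1.0, 1.4, 0.9, 1.6, 1.5, 1.9]
    slope, se, t = ols_trend(years, values)
    print(f"slope = {slope:.3f} per year, se = {se:.3f}, t = {t:.2f}")
```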

Victor moves from the argument from incredulity to the argument from incomprehension.

Please: you claim you’re an academic, so talk to someone in the college library about how to look things up.

Victor: it seems to me, would make natural variation the null hypothesis. I can’t imagine that anyone would assume that “persistence” was the generally accepted view,

AB: Uh, natural variation averages out to ZERO CHANGE. Your stance that increasing temperatures are the null hypothesis is bunk. I can’t imagine any null hypothesis other than no change over the teensy-weensy timeframes involved. Remember, climate has changed in the past, but ONLY either over thousands of years or due to a serious forcing.

Climate change debate video. The moderator is Lars Larson, from, I think, Porkyland, Oregon. The debate starts at 8:15 of this video:

http://www.larslarson.com/weather-wars-a-climate-change-debate-from-the-bloodworks-live-studio/

Full disclosure – I have not listened to much of it yet.

What a load of pseudo-scientific mumbo-jumbo we’ve been getting on the very simple notion of what a null hypothesis is. There are two explanations currently under consideration for the changes in global temperature we’ve been seeing over the last 120 years or so: either primarily natural variation or primarily CO2 emissions due to the burning of fossil fuels. Since natural variation would seem to be the only possible driver prior to the time when fossil fuels came into widespread use, natural variation is clearly the default explanation, i.e. the null hypothesis. AGW due primarily to the burning of fossil fuels is the alternate hypothesis. And since natural variation, by definition, varies, then it makes no sense to posit “persistence” as the null hypothesis. There are many forces that could be driving current temperatures up or down over ANY given period – CO2 emission is only one.

Here is yet another definition of the null hypothesis, also based on simple common sense:

“The null hypothesis (H0) is a hypothesis which the researcher tries to disprove, reject or nullify. The ‘null’ often refers to the common view of something, while the alternative hypothesis is what the researcher really thinks is the cause of a phenomenon.” (https://explorable.com/null-hypothesis)

And by the way, the Wiki page to which I was referred above states that “The null hypothesis is generally assumed to be true until evidence indicates otherwise.” (https://en.wikipedia.org/wiki/Null_hypothesis) In other words, it is not necessary to prove that natural variation explains the various ups and downs of temperature since it constitutes the null hypothesis. Burden of proof is on the alternative hypothesis to demonstrate that the null hypothesis is wrong.

72 Marco says, quoting me: “there is nothing in any of them that challenges the possibility that every change could be due to natural variation.”

M: Yes there is: there is no known natural driver that would push the variation into a persistent upward trend.

V: First of all: what persistent trend? Sorry but I can’t find one. What we see in the actual evidence is a roller coaster pattern of ups and downs. The only persistent upward trends occurred either early in the 20th century when CO2 emissions had only a small effect or late in the 20th century over a period of only 20 years. Secondly, the fact that there is no “known” natural driver does not mean that there could not be natural drivers we don’t yet know about. Nor does it exclude the very likely possibility of random drift, driven by chaotic forces that might never be explained. In any case, since natural variation is the null hypothesis there is no need to name any natural forcing that could produce the effects in question. The burden of proof is on the alternate hypothesis.

And by the way: technical definitions based exclusively on a rigid understanding of the role of statistics in science can only serve to muddy the waters. There is a huge difference between mathematics and physics, as explained so eloquently in this brief YouTube video clipped from a lecture by Richard Feynman: https://youtu.be/MZZPF9rXzes

“V: First of all: what persistent trend? Sorry but I can’t find one. What we see in the actual evidence is a roller coaster pattern of ups and downs. The only persistent upward trends occurred either early in the 20th century when CO2 emissions had only a small effect or late in the 20th century over a period of only 20 years. Secondly, the fact that there is no “known” natural driver does not mean that there could not be natural drivers we don’t yet know about. Nor does it exclude the very likely possibility of random drift, driven by chaotic forces that might never be explained. In any case, since natural variation is the null hypothesis there is no need to name any natural forcing that could produce the effects in question. The burden of proof is on the alternate hypothesis.”

Mods: Isn’t it about time to Bore Hole significance testing by eye? And, as well, further lectures on the nature of scientific inference, addressed to professionals by someone who now quotes two different high-school-level sites?

Victor, #82–

Let me repeat myself: ‘since natural variation, by definition, varies unpredictably about a mean’, persistence is the practical metric for natural variation. You’re trying to insist on a distinction which makes no practical difference, except that it would make it impossible to implement projections at all, since future natural drivers are not yet forecastable with sufficient precision and accuracy.

Which, amazingly (by pure coincidence!), has resulted in a net temperature rise of more than 1 °C by now.

Yes, just as we can’t exclude the ‘Vonnegut conjecture’ that there is a chocolate cake somewhere in Jovian orbit. Researchers have been examining natural drivers since at least the late 18th century, and with high intensity during the last half of the 20th century. The chances that something major is still missed are Vonnegutian for all practical purposes.

Ever hear of a little thing called “conservation of energy?” It’s technically unproven, but *exceedingly* well-accepted.

73 Russell says:

“Victor, Aristotle couldn’t have put it better.”

Why thank you, Russ.

R: But then, he also insisted that quartz was just another form of water, like diamonds and pearls.

V: True. But my critics on this blog insist that the human race is doomed unless the entire world conforms to their outrageous demands. Aristotle may have been wrong about some things, but he was not deluded.

78 MartinJB says:

“Victor, I don’t usually like people replying to Bore Holed comments (because there’s a reason things end up there), but I have to make an exception in this case. You accused me of fiddling with data and methods. I take my integrity pretty fucking seriously, so you can imagine the impolite two words I have in mind, but I’m gonna be more civil: Sorry Charlie. That’s what your denialist fellow travelers do. I just ran a simple linear regression on the data to which you linked. Doesn’t agree with your eyes? Please. Your ignore-the-numbers eyeball science is trash. Like every one of your arguments. Seriously. Almost every last argument you’ve made since you landed here has been utter garbage.”

Here once again is the graph in question:

https://www.epa.gov/sites/production/files/styles/large/public/2016-07/drought-figure1-2016.png

Anyone trying to argue before a group of unbiased scientists for some sort of long-term trend toward more frequently occurring droughts on the basis of this graph would be greeted by indifferent shrugs, for reasons that should be obvious. So you did a linear regression and found an (insignificant) trend. All that tells me is that you are a true believer who refuses to accept ANY evidence that might challenge your certainties. You are the one who’s in denial, and your effort to produce a trend where clearly no significant trend exists proves it. Sorry to ruffle your feathers, but it can’t be helped. I suggest you take another look at the Richard Feynman video I linked to, which might help you understand that mathematics is not physics. Feynman was a distinguished mathematician, but his insistence on the value of simple common sense is well known.

Friends,

I think you’re taking the wrong approach with Victor. Instead of arguing with him, accept the fact that he’s unable to learn. Use his misinformation as an opportunity to enlighten the passersby who might be confused by his mendacity.

You could post it in the comments here, or even do a blog post about it. Like this one: https://tamino.wordpress.com/2018/06/30/weak-sauce-from-climate-deniers/

All of the graphs describing global warming show a steep incline after 1970. That is the year jet aircraft transportation was introduced. It is the first time in the history of the earth that the upper atmosphere has been polluted. Is there a connection? My research, energyconservationtech.blogspot.com, says YES!

Victor@82:

Leaving aside your ignorance, the IPCC’s Fifth Assessment Report concludes

Therefore, the burden of proof is on you.

Piece of advice: you would be wise to rely less on ideology-driven propaganda and more on peer-reviewed scientific literature.

Tamino just now has provided eyeglasses for Victor to see the persistent trend: https://tamino.wordpress.com/2018/06/30/weak-sauce-from-climate-deniers/

Victor (and others): Tamino has a comment on the assertion that there has only been an upward trend over a period of 20 years in the late 20th century:

https://tamino.wordpress.com/2018/06/30/weak-sauce-from-climate-deniers/

As usual, Tamino actually looks at the data in a meaningful way.

Victor @82 repeated: “First of all: what persistent trend? Sorry but I can’t find one….” Here is the analysis you are missing that shows the trend and proves that it continued after the 20 year period you admit:

http://tamino.wordpress.com/2018/06/30/weak-sauce-from-climate-deniers/

Victor #57

“Heller notes that in 1988 (or possibly 1989), ‘Hansen predicted that Lower Manhattan would be underwater by 2018.’ ”

This is just completely false. It demonstrates nothing about Hansen but much about the quality of your source.

First, Hansen was referring specifically to a low-lying portion of the West Side Highway that was visible from his office, not “lower Manhattan.”

Second, and more important, the timeframe in question was 20 years after a doubling of CO2, not 20 years from the date of the interview.

Since we are still only halfway to a doubling of CO2, the date he was talking about is still decades in the future.

Heller knows this, of course. He just won’t tell you.
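The “halfway to a doubling” statement can be checked with a couple of lines of arithmetic. The sketch below assumes a pre-industrial baseline of 280 ppm and roughly 408 ppm for 2018 (an approximate Mauna Loa annual mean; treat it as an assumption rather than an authoritative figure) and measures progress toward 560 ppm two ways: linearly in concentration, and logarithmically, which is how the radiative effect scales. The function name `fraction_of_doubling` is hypothetical.

```python
import math

PREINDUSTRIAL_PPM = 280.0  # commonly used pre-industrial CO2 baseline
RECENT_PPM = 408.0         # assumption: approximate 2018 annual mean (Mauna Loa)


def fraction_of_doubling(c, c0=PREINDUSTRIAL_PPM):
    """Return progress from c0 toward 2 * c0, measured two ways."""
    by_concentration = (c - c0) / c0              # linear: share of the extra CO2 added so far
    by_forcing = math.log(c / c0) / math.log(2)   # logarithmic: share of the doubling's radiative effect
    return by_concentration, by_forcing


if __name__ == "__main__":
    conc, forcing = fraction_of_doubling(RECENT_PPM)
    print(f"by concentration: {conc:.0%}, by forcing: {forcing:.0%}")
```

Either way the answer comes out at roughly one half, so a timeline keyed to “20 years after a doubling” has not yet begun.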

Tamino has done some heavy lifting, in detail, on the Victor/Heller/Goddard unskeptical “skepticism” (aka time-wasting, unobservant, and repetitive denial of reality and science):

https://tamino.wordpress.com/2018/06/30/weak-sauce-from-climate-deniers/

However, I don’t absolve myself and my friends and colleagues here of feeding the troll in some detail. They thrive on responses, hot air at the ready in infinite supply.

Don’t we all have better things to do? Schopenhauer nailed it nearly 200 years ago (38 Ways to Win an Argument, if you’re interested).

Victor @82 is all worried that the recent warming trend is “bumpy”. Of course it will be bumpy, because you have some natural variation combining with the increasing greenhouse effect.

The point is that long-term temperature reconstructions going back thousands of years show an obvious upward warming trend since 1920 that stands out exactly like a hockey stick, and it’s much steeper than previous trends in the distant past, for example the rather weak so-called Medieval Warm Period. Mathematical analysis confirms all this. It doesn’t matter if it’s a bit bumpy, because you would EXPECT that.

Furthermore it has been explained to Victor many times that the characteristics of the warming period since 1920, and particularly 1980 are compatible with the greenhouse theory and not a solar forcing.

It’s ludicrous of Victor to fall back on some mysterious, implausible “hidden cause” of the recent warming. That is like saying the evidence points strongly to tobacco being a carcinogen, but wait, there “might” be some “hidden cause” we have missed. This is stupidity and wishful thinking, and it is not a plausible basis for human decision making.

This has all been explained to Victor 100 times. Victor is therefore not listening. He is repeating the same old rubbish over and over, and this falls into the category of denialist propaganda. I support free speech and Victor has a right to SOME space, BUT there’s a point where publishing blatant, repetitive, propaganda becomes stupid.

Perhaps Gavin publishes Victor’s nonsense because it’s so obviously nonsense; who would care? But I worry that gullible, politically motivated people will lap it up. With respect, it seems naive to give these people too much space when it’s repetitive and deceptive nonsense.

what persistent trend? Sorry but I can’t find one. What we see…

Lookie! Tamino made pictures for you.

Victor, I suggest you head over to Tamino’s site for an explanation of why your comment, “what persistent trend? Sorry but I can’t find one. What we see in the actual evidence is a roller coaster pattern of ups and downs. The only persistent upward trends occurred either early in the 20th century when CO2 emissions had only a small effect or late in the 20th century over a period of only 20 years.” is wrong.

However, I’m heartened that you think CO2 is a factor in rising temperatures (in your comment, “There are many forces that could be driving current temperatures up or down over ANY given period – CO2 emission is only one.”). Perhaps you can look up what atmospheric CO2 concentration has done over, at least, the last several decades. It’s also positive that you think Hansen may have gotten many things right, in your comment, “While it might be possible to argue that at least some of Hansen’s predictions (or if you prefer, ‘projections’) seem to have panned out”. Sadly, you added that this isn’t good enough, even though you concentrated on a few minor apparent predictions that Hansen may, arguably, have gotten wrong.

Keep learning, you may end up changing your mind!

@Victor. Science concerns explanation. The greenhouse effect is well understood: more greenhouse gas more warming. Nothing else explains the warming. Invoking some mysterious force when we have a perfectly good one is not science, it’s voodoo.

The scientific method is whatever produces science acceptable to the global community of scientists, represented by the Royal Society, National Academy of Sciences, American Association for the Advancement of Science, American Physical Society, American Chemical Society … American Statistical Association etc etc etc. It’s not some rigid procedure proposed by philosophers in the past.

You exhibit not scepticism and a willingness to learn, but disbelief, denial and delusion: you don’t know enough to know you don’t know, but you think you do. No wonder people who know what they’re talking about find you so irritating…

Weaktor: “Nor does it exclude the very likely possibility of random drift, driven by chaotic forces that might never be explained.”

I see Weaktor’s delusion has progressed to the point where it has its own jargon? Precisely what is “random drift”? And how would it explain the very definite multi-decadal rise since the 1970s?

Weaktor, here’s a hint. When you say that something is inexplicable, you have left the realm of science. Effects have causes, particularly effects that involve a significant increase in the energy of a system. Energy is conserved: it must come from somewhere.

Also, your contention that the effects of anthropogenic CO2 would only be recently significant is false. Humans have been burning coal in significant amounts since the late 17th century, and the effect of adding CO2 to the atmosphere is logarithmic in the amount added. That means that the first gigaton has much more effect than the 4000th gigaton. I believe your inability to understand this simple logarithmic dependence is a strong contributor to your stupidity.
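The logarithmic dependence mentioned above can be made concrete with the widely used simplified expression for CO2 forcing, ΔF ≈ 5.35 ln(C/C0) W/m² (Myhre et al., 1998). The Python sketch below works in ppm of concentration rather than gigatons emitted, since converting emissions to concentration needs extra assumptions about how much CO2 stays airborne; the helper names are hypothetical. The simplified formula is intended for concentrations around pre-industrial to present-day levels, not for the very first traces of CO2.

```python
import math

ALPHA = 5.35  # W/m^2; simplified CO2 forcing coefficient (Myhre et al., 1998)


def co2_forcing(c, c0=280.0):
    """Radiative forcing (W/m^2) from raising CO2 from c0 to c ppm."""
    return ALPHA * math.log(c / c0)


def marginal_forcing(c, dc=1.0, c0=280.0):
    """Extra forcing from adding dc ppm on top of an existing c ppm."""
    return co2_forcing(c + dc, c0) - co2_forcing(c, c0)


if __name__ == "__main__":
    for c in (280.0, 400.0, 560.0):
        print(f"{c:.0f} ppm: one more ppm adds {marginal_forcing(c):.4f} W/m^2")
    print(f"forcing for a doubling: {co2_forcing(560.0):.2f} W/m^2")
```

Each additional ppm adds less forcing than the one before, and at 560 ppm roughly half as much as at 280 ppm, which is the sense in which early additions count for more than later ones.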

Victor @82

Pure nonsense. There are multiple causes for the changes in temperatures over this time period. Suggesting that there are only two is a false dichotomy. Natural variation is one possibility to explain temperature fluctuations, but so are all the drivers such as changes in the solar constant, changes in the earth’s orbit and tilt, changes in aerosols, changes in greenhouse gases, changes in albedo, and so on.

So, when attempting to explain the temperature variation in its totality for the time frame Victor mentions, there is no null hypothesis, because there are multiple possibilities, all based on known physics. That is, the drivers of the average temperature of the earth are well known, based on physical laws that became well understood in the nineteenth century. The temperature is the result of the energy into the system versus the energy out.

And why would you pick *natural variation* as the default over any of the actual drivers? Especially since *natural variation* is strictly the movement of heat between the oceans and the atmosphere and does not affect the energy into or out of the system. Not in the least. It is not a driver; therefore, its possibilities are severely limited due to physical laws. It can only account for minor variation about the driven mean temperature. There is zero evidence that it can produce dramatic temperature swings. Zero. To call it a default position is just silly and ignorant.

In understanding what has occurred to temperatures over the last 120 years we need to understand what the effect of all the drivers were. All of them. Not just greenhouse gases and not just natural variation. Victor has a problem getting this through his thick skull.

The role of greenhouse gases in determining the planetary temperature is also well known, going back to Tyndall. Without greenhouse gases restricting heat loss, the average temperature of the world would be about zero degrees Fahrenheit rather than the approximately 60 degrees that it is now. The effect of changing greenhouse gases can be determined by physics and is well accepted.
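The “about zero degrees Fahrenheit” figure is the planet’s effective radiating temperature, which follows from balancing absorbed sunlight against Stefan-Boltzmann emission: T = (S(1 − a) / 4σ)^(1/4). Below is a minimal Python sketch, assuming a solar constant of about 1361 W/m² and holding the present-day planetary albedo of about 0.3 fixed (a simplification, since a world without greenhouse gases would also have different clouds and ice). The function names are hypothetical.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # W/m^2, approximate total solar irradiance
ALBEDO = 0.30            # assumption: present-day planetary albedo held fixed


def effective_temperature_k(s=SOLAR_CONSTANT, albedo=ALBEDO):
    """Temperature (K) at which a sphere radiates away the sunlight it absorbs."""
    absorbed = s * (1.0 - albedo) / 4.0  # sunlight averaged over the whole sphere
    return (absorbed / SIGMA) ** 0.25


def kelvin_to_fahrenheit(t_k):
    """Convert kelvin to degrees Fahrenheit."""
    return (t_k - 273.15) * 9.0 / 5.0 + 32.0


if __name__ == "__main__":
    t = effective_temperature_k()
    print(f"{t:.1f} K = {t - 273.15:.1f} C = {kelvin_to_fahrenheit(t):.1f} F")
```

This gives roughly 255 K, or about −18 °C (close to 0 °F), compared with an observed global mean near 15 °C (about 59 °F); the gap is the greenhouse effect.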

If there were in fact two competing theories for the warming experienced since the 1970s (there aren’t), the increase in greenhouse gases would be the null hypothesis, and one would have to prove why the increase in CO2 is not behaving as predicted by science. What Victor fails to comprehend is that greenhouse gas science predicted the warming, as per the Hansen discussion, and we are not going back and trying to create a theory to explain the current observations; hence the null hypothesis would be greenhouse gases. Any alternative cause would have to fit the evidence of the Arctic warming faster than the rest of the planet, nights warming faster than days, and winters faster than summers. Natural variation certainly cannot account for any of these, only phenomena that restrict heat loss.

It is Victor who is always full of pseudo-scientific mumbo-jumbo.