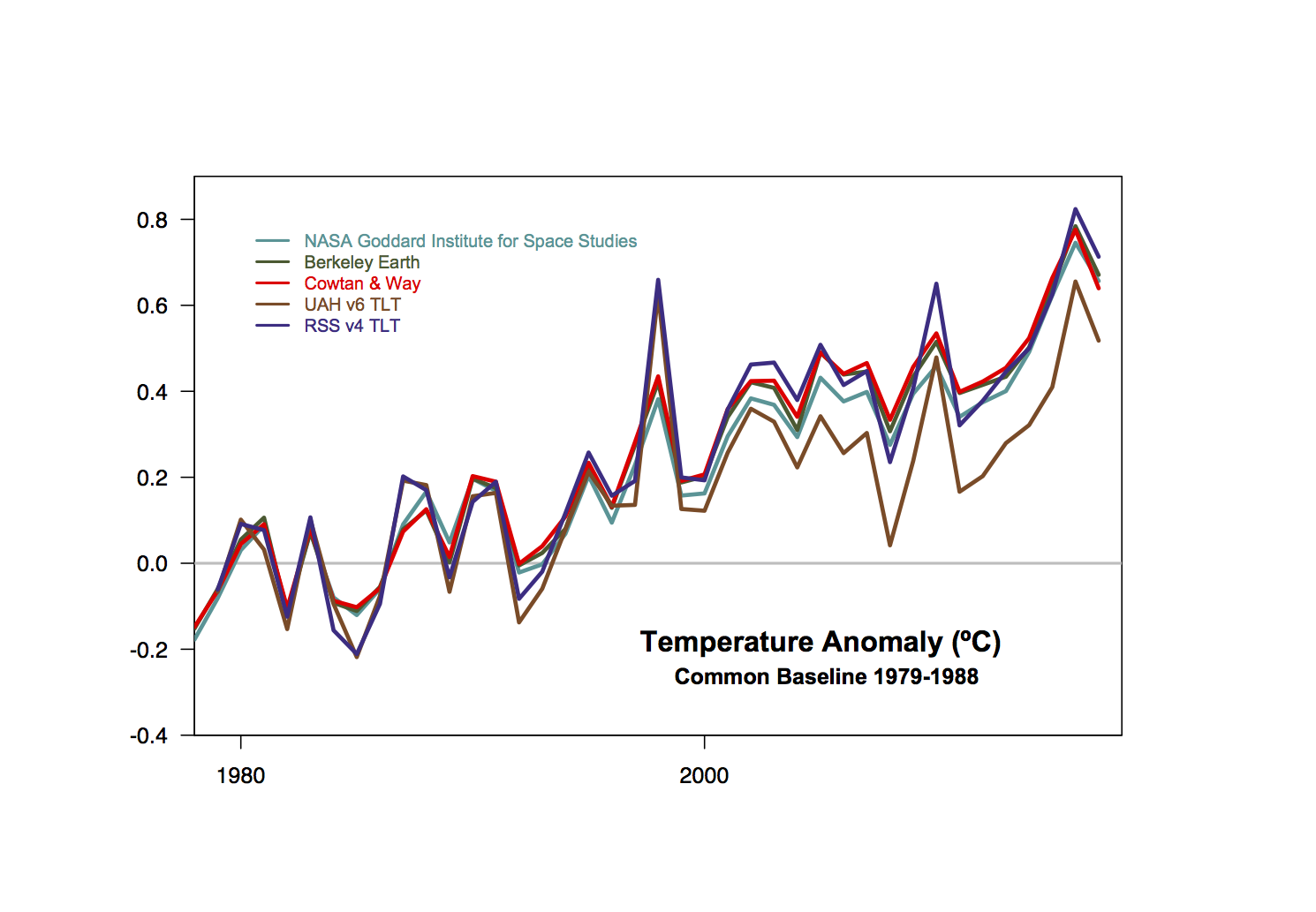

This is a thread to discuss the surface temperature records that were all released yesterday (Jan 18). There is far too much data visualization on this to link to, but feel free to do so in the comments. Bottom line? It’s still getting warmer.

[Update: the page of model/observational data comparisons has now been updated too.]

Old news: ECMWF had a press release on this on 4 January: https://www.ecmwf.int/en/about/media-centre/news/2018/2017-extends-exceptionally-warm-period-first-complete-datasets-show

Apologies if this is common knowledge, but is this time series sensitive to the baseline’s period? Is there a specific reason why we are looking at 1979-1988, and not for example 1979-2009?

[Response: On this graph, it’s just to show clearly the trends from a common base. If it’s too short, there is a lot of inter-annual noise that gets into the visual presentation, if it’s too long, you can’t really see the difference in the trends. – gavin]
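Gavin's answer can be checked numerically. Re-baselining subtracts one constant from every value in a series, so it moves the curve up or down but leaves the trend untouched. The sketch below assumes a synthetic series: the data and the helper names `rebaseline` and `ols_slope` are illustrative and are not taken from any of the temperature products.

```python
from statistics import fmean


def rebaseline(years, anomalies, start, end):
    """Re-express anomalies relative to their mean over the years start..end (inclusive)."""
    base = fmean(a for y, a in zip(years, anomalies) if start <= y <= end)
    return [a - base for a in anomalies]


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = fmean(xs), fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


# Synthetic series (illustrative only): 0.02 C/yr trend plus a repeating wiggle as "noise".
years = list(range(1979, 2018))
wiggle = [0.10, -0.05, 0.08, -0.12, 0.03]
series = [0.02 * (y - 1979) + wiggle[i % len(wiggle)] for i, y in enumerate(years)]

short_base = rebaseline(years, series, 1979, 1988)
long_base = rebaseline(years, series, 1979, 2009)

# The two versions differ only by a constant offset, so their trends agree.
print(ols_slope(years, short_base), ols_slope(years, long_base))
print(short_base[0] - long_base[0], short_base[-1] - long_base[-1])
```

The choice of baseline period is therefore a presentation decision. It determines where the curves line up, not how steeply they rise.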

Two other interesting findings on temperature

One is that seafloor methane is apparently not reaching the sea surface, and so is not reaching the atmosphere. This finding, if it holds true, is cause for sighs of relief: it would make the scenarios at the worst-case end of the range less likely.

The other is that neither 1.5 C nor 4 C now looks likely, but 2 C or more still does, so there is little cause for cheer there, unless, of course, that finding assumed that seafloor methane can make its way to the atmosphere.

#2 – presenting “anomaly” data is an unnecessary complexity, and makes it harder for the climate scientists to communicate with the public. I am not aware of any other discipline that regularly uses anomalies. People have a tendency to think there is some kind of trickery when they don’t understand – especially with an odd revision of data. Anomalies also make it harder to analyze the data statistically (e.g., cannot log it, or compute growth rates). At the least, the charts should give the baseline figure. In all, it would be better to show the charts in degrees centigrade. The curve would be the same, depending on the scaling.

[Response: The calculations are actually for the global anomalies directly; there isn’t an intermediate step of calculating the absolute value. And I think you’ll find that many measurements are of anomalies from a standard. Isotope ratios in ice cores? Anomalies. Atomic weights? Anomalies. Etc. It seems a little patronizing to assume that the public can’t understand differences, no? – gavin]

#4, cont. The public can be confused, as shown by post #2, and similar earlier posts. From my point of view, it is impossible to analyze anomaly data properly unless the baseline figure is given, and I have had much more trouble finding the baseline than should be the case. I gather that you think it is hard to establish the baseline, and easier to establish trends. As a practical matter, the baseline does not have to be precise for the purposes of understanding the graphs and for the purposes of analyzing the data (especially after converting to degrees Kelvin).

Again, at the least, the baseline should be stated when presenting anomalies, and it might limit the confusion.

Also, what is a “common baseline”? The average for all data series? If that is the case, one cannot compare the trends for the various measures, and the UAH trend is probably biased downward.

Hansen on 2017 GISS temps

Therefore, because of the combination of the strong 2016 El Niño and the phase of the solar cycle, it is plausible, if not likely, that the next 10 years of global temperature change will leave an impression of a ‘global warming hiatus’.

On the other hand, the 2017 global temperature remains stubbornly high, well above the trend line (Fig. 1), despite cooler than average temperature in the tropical Pacific Niño 3.4 region (Fig. 5), which usually provides an indication of the tropical Pacific effect on global temperature.[7]

Conceivably this continued temperature excursion above the trend line is not a statistical fluke, but rather is associated with climate forcings and/or feedbacks.

The growth rate of greenhouse gas climate forcing has accelerated in the past decade.[3] There is also concern that polar climate feedbacks may accelerate.[8]

Therefore, temperature change during even the next few years is of interest, to determine whether a significant excursion above the trend line is underway.

https://www.climatescienceawarenesssolutions.org/blog-1/2018/1/18/global-temperature-in-2017

and misc graphs

http://www.columbia.edu/~mhs119/Temperature/

” It’s still getting warmer. ”

Has been since when, the last ice age?

[Response: Nope. Only since the 19th Century. – gavin]


I think it would be a good idea to publish graphs with the influence of El Nino, etc, removed, if this is possible.

[Response: Like this?

– gavin]


What’s up with the UAH temperature series? It seems to be diverging from the others over time. Any thoughts on why that is?

@9 GuyFromFinland:

Satellite data have proven to show a downward bias, in particular UAH data.

Gary and Keihm 1991 showed that natural variability in only 10 years of UAH data was so large that the UAH temperature trend was statistically indistinguishable from that predicted by climate models.

http://science.sciencemag.org/content/251/4991/316.short

Hurrell and Trenberth 1997 found that UAH merged different satellite records incorrectly, which resulted in a spurious cooling trend.

http://www.cgd.ucar.edu/cas/abstracts/files/Hurrell1997_1.html

Wentz and Schabel 1998 found that UAH didn’t account for orbital decay of the satellites, which resulted in a spurious cooling trend.

http://www.nature.com/nature/journal/v394/n6694/abs/394661a0.html

Fu et al. 2004 found that stratospheric cooling (which is also a result of greenhouse gas forcing) had contaminated the UAH analysis, which resulted in a spurious cooling trend.

http://www.atmos.washington.edu/~qfu/Publications/nature.fu.2004a.pdf

Mears and Wentz 2005 found that UAH didn’t account for drifts in the time of measurement each day, which resulted in a spurious cooling trend.

http://science.sciencemag.org/content/309/5740/1548.abstract
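The first point in that list (Gary and Keihm 1991) is about how loosely a short record constrains a trend. The sketch below computes an ordinary least-squares trend and its standard error. It assumes independent residuals. Autocorrelated noise, which real temperature series have, would widen the interval further. The function name is illustrative.

```python
import math
from statistics import fmean


def trend_with_se(xs, ys):
    """OLS slope and its standard error, assuming independent residuals."""
    n = len(xs)
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    # Residual variance uses n - 2 degrees of freedom (slope and intercept are estimated).
    ssr = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    se = math.sqrt(ssr / (n - 2) / sxx)
    return slope, se


# Tiny worked example: slope 0.6, standard error sqrt(0.02), about 0.141.
slope, se = trend_with_se([0, 1, 2, 3], [0, 1, 1, 2])
print(slope, se)

# For equally spaced annual data, sxx = n * (n**2 - 1) / 12, so at a fixed noise
# level the slope's standard error shrinks roughly like n**-1.5. Ten years of
# data therefore gives a far wider trend interval than a few decades.
```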

Happy to oblige.

https://tamino.wordpress.com/2018/01/20/2017-temperature-summary/

Tamino factored out the ENSO (El Niño/La Niña) fluctuations here:

https://tamino.wordpress.com/2018/01/20/2017-temperature-summary/

and says, “Two interesting facts become clear. First, the hottest year on record after removing the influence of fluctuation factors was 2017, in all seven data sets. Second, the “odd man out” is clearly the data from UAH.”

That’s where we really are: we just had the hottest year ever, again.

Mike

@12 Mike

=> It’s Official: 2017 Was the Hottest Year Without an El Niño

The United Nations announced Thursday that 2017 was the hottest year on record without an El Niño event kicking up global annual temperatures. …

T Marvell:

“#2 – presenting ‘anomaly’ data is an unnecessary complexity, and makes it harder for the climate scientists to communicate with the public.”

I don’t see why. I doubt the general public even knows what anomalies are used for. They just look at lines on a graph, see a general temperature trend over time, and see what’s implied. They mostly don’t read the footnotes on what scales and baselines are used.

I have never heard denialists raise anomalies as an argument.

However, the word “anomaly” does have an unfortunate connotation, but as I said, most people probably don’t even realise it’s used.

Tamino’s graphic display of the various data sets, adjusted as indicated, is available here: https://tamino.wordpress.com/2018/01/20/2017-temperature-summary/#more-9559

UAH, even with their flawed data, show a 0.7 degree increase in global temps since 1980.

Mr KIA @7

Notice how it took 8000 years after the last ice age for temperatures to increase approximately 4 degrees C. We are doing the same or more in 100 years. Big difference in terms of rate and impacts on ability to adapt / adjust.
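The point here is about rate rather than total change. The back-of-the-envelope comparison below uses the comment's deglacial figure (about 4 C over about 8000 years). For the modern rate it assumes a round figure of about 1 C over the last century. Elsewhere in the thread, the warming since the 19th century is put at roughly 1 to 1.5 C.

```python
def rate_per_century(delta_c, years):
    """Average warming rate in degrees C per century."""
    return delta_c / years * 100.0


deglacial = rate_per_century(4.0, 8000)  # ~4 C over ~8000 years, as in the comment
modern = rate_per_century(1.0, 100)      # assumed round figure: ~1 C over ~100 years
print(f"deglacial: {deglacial:.2f} C/century, modern: {modern:.2f} C/century, "
      f"ratio: {modern / deglacial:.0f}x")
```

On these inputs the modern rate is about twenty times the deglacial average. An 8000-year average can hide faster episodes within it, and that question of resolution is exactly what the later paleo discussion in this thread turns on.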

in re: #4–1/19/18 @ 1:07P–(both to t.marvell & Dr. Schmidt’s response) – I have long thought that SOMETHING different needs to be tried, regarding the impasse twixt the Sci Guys & ‘the Public.’ Some percentage of the latter are quite capable of grasping differences and anomalies. True that. But, simplicity is useful in the attempt to WIDEN that membership.

(A political consensus, after all, will one day be required to practically limit human combustion, should Humanity decide to constrain atmospheric carbon loadings).

In saving us from the nightmare of the Quid & farthing, etc., Gouverneur Morris and Thomas Jefferson combined efforts to give the US a simplified Thaler (‘short for’ an ounce of Silver minted in the Ancient Czechoslovakian mining zone where the Curies got their uranium ores), and crucially, a decimal, or ten-based, system for fractioning the base unit. [Thank you, Gentlemen!!]

In our long running discourse (which oft times seems to get us frustratingly little “Ball Movement” upon the field), Humanity’s great difficulty in getting any real grasp of matters Thermal, instructs. It was tough. Real progress awaiting Rumford, Fahrenheit, & others. Not won until the early 18th Century.

The fantastic instability, within bounds, of our planet to Fail to decide upon an Average temperature–is a basis for MIT trained astronaut Buzz Aldrin to dis the whole of the AGW issue! And he is quite numerate. Beyond one’s wildest expectations for where Everyman, might ever get.

It is a “weakness,” as well as an “Accident,” after all, that both of our contemporary metrics are SUSPENDED as if by Magic, @ a physically meaningless midpoint. Where water freezes, or boils, or whatever.

On other forums the cold Northern Hemisphere weather in January 2018 is being taken as a sure sign of an imminent Ice Age. Never mind the fact that in the Southern Hemisphere heat records have been broken (with no El Nino) and sporting events cancelled or shortened. No doubt if things are toasty up north in July it will be interpreted as a hiccup. If that happens I’d say there’s little chance the new Ice Agers will reconsider.

Johnnt, #18–

Actually, to be pedantic, on a hemispheric basis it’s the Northern Hemisphere that is warmer than normal just now.

Per the Climate Reanalyzer site out of U. Maine, the anomaly for the planet is 0.6 C, for the Southern Hemisphere 0.3, but for the Northern, 0.9, as of today. The Arctic is nearly 3 degrees above climatology, as is often the case in this modern era.

In Eastern North America, we are experiencing a sudden ‘interglacial’–except of course for denialist crowing about immediate temperature, which has ironically hit a frosty patch. Central Siberia, on the other hand, is now in the cross hairs of ‘General Winter.’

http://cci-reanalyzer.org/wx/DailySummary/#t2anom
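The three numbers quoted are consistent with each other. Each hemisphere covers exactly half of Earth's surface, so the global mean is the plain average of the two: (0.9 + 0.3) / 2 = 0.6 C. More generally, a latitude band's share of the surface is proportional to the difference of the sines of its bounding latitudes. That is why a large Arctic anomaly moves the global number less than one might expect. The function names in the sketch below are illustrative. Real products weight each grid cell by its area in the same spirit, but their exact region definitions may differ.

```python
import math


def band_area_fraction(lat_south, lat_north):
    """Fraction of a sphere's surface lying between two latitudes (degrees)."""
    return (math.sin(math.radians(lat_north)) - math.sin(math.radians(lat_south))) / 2.0


def area_weighted_mean(bands):
    """bands: (lat_south, lat_north, anomaly) tuples; returns the area-weighted mean anomaly."""
    weights = [band_area_fraction(s, n) for s, n, _ in bands]
    total = sum(w * anom for w, (_, _, anom) in zip(weights, bands))
    return total / sum(weights)


# Hemispheric anomalies quoted in the comment above.
print(area_weighted_mean([(-90, 0, 0.3), (0, 90, 0.9)]))

# Share of the globe poleward of the Arctic Circle (about 66.5 N): roughly 4%.
print(band_area_fraction(66.5, 90))
```

Weighted by area, a 3 C anomaly confined to the region poleward of 66.5 N would add only about 0.12 C to the global mean.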

So Gavin in your response to #7…… do you really trust those error bars? The spread of modern day direct temperature readings reasonably spaced around the globe as shown in your blog post is as great as the possible error 22,000 years ago!

Makes you wonder why we need such detailed measurements

As an addendum to the land-atmosphere temperature trend, one with the longest-lasting consequences:

https://www.nodc.noaa.gov/OC5/3M_HEAT_CONTENT/

https://www.nodc.noaa.gov/OC5/3M_HEAT_CONTENT/heat_content55-07.png

Interesting: the NODC website linked in #20 is shut down due to the US government shutdown. I don’t remember this happening during the 2013 shutdown.

Could you express in words what you referenced?

Thanks…

Keith Woolard,

So, we’ll add reading a graph to the list of talents you do not possess. The modern era is entirely in the spike at the end.

This is the OHC through December 2017 for 0 to 700 meters:

https://i.imgur.com/nHQc005.png

When I took the screen shot, there was no 4th-quarter update for 0 to 2000 meters.

Response on comment #7:

Duh, I like that graph, it speaks so clearly and mercilessly :)

“Nothing we see or hear is perfect. But right there in the imperfection is perfect reality.”

– Shunryu Suzuki

… may it be pretty or may it be ugly, I always stick to reality 8) Kind regards,

Nemesis

With all due respect (and that’s a tremendous lot of it) to the folks who fuss over anomalies and graphs and removing El Nino from the trend (thanks Tamino) —

What the clueless folks you are trying to reach actually need to know is that each decade is warmer than the last, at a rate near 2 degrees Celsius per century, which might well increase, and for comparison the difference between Ice Age glaciation and the climate we like is about 6 degrees Celsius. There’s a bit more about pointing out lead times to change infrastructure, but muddying the waters with squabbles about El Nino-scale wobbles will not help that at all.

Anyone trying to mislead the clueless with quibbles should just be challenged to provide a prediction (especially one made decades ago that may be checked now, but a current one for the next few decades is fair game as well). Then slam them with the 1970s, the 1980s, the … The story since the 1970s is darn clear.

This is the best answer **for the general public** to nonsense about short wobbles, pauses that either don’t exist or don’t matter, etc. etc.

For the ocean heat content record from 1958-2017, see here:

https://twitter.com/Lijing_Cheng/status/954017104545701888

https://pbs.twimg.com/media/DT1ZfllU8AA8HQx.jpg:large

OK All,

Obviously I will have to explain things a little more simply, as Ray (who has never actually managed to copy the spelling of my name correctly) et al. clearly don’t understand.

From the graph in the blog post there is a spread of at least 0.4C across the various manipulations of the temperature data recordings. These have been derived from thousands of locations measured multiple times a day 365 days per year. And yet there is still AT LEAST a +/- 0.2C error bar.

The graph that Gavin posts as a response to #7 (ignoring for the moment the spike pasted on) is derived from a handful of non-random locations around the planet. It is sampled not 700 times a year but maybe once, or often once every hundred years or so. And on top of that, they aren’t actually temperature measurements; they are ALL proxies. Despite all these limitations, they still claim that same +/-0.2C margin of error.

Sure paleoclimatology can give us a good idea of past climate change but if Gavin thinks that we can know the temperature of the globe 22,000 years ago to within 0.4 of a degree then I have a bridge I would like to sell him

“From the graph in the blog post there is a spread of at least 0.4C across the various manipulations of the temperature data recordings.”

The spread is between the RSS and UAH. The thermometer series are almost a photo finish.

K Woollard @28

“From the graph in the blog post there is a spread of at least 0.4C across the various manipulations of the temperature data recordings. These have been derived from thousands of locations measured multiple times a day 365 days per year. And yet there is still AT LEAST a +/- 0.2C error bar.”

The spread of temperatures in the graph in the article is mostly between UAH, which measures the atmosphere rather than the surface, and the others, including NASA and HadCRUT, which measure the surface. These are completely different things, so a difference is not surprising, and UAH has always been an outlier set of data.

The different surface data sets do have some small differences, but not +/- 0.2 degrees. It’s not so much an issue related to numbers of sites. They all use the same raw temperature data, and the issue is they make slightly different adjustments due to problems of urban heat islands and so on.

Paleoclimate is obviously challenging, but there are various different ways of assessing it, which helps. You also don’t have the problems of modern data, where systems of measurement changed repeatedly, particularly the way ocean temperatures were measured. Paleoclimate data and its error bars are therefore perfectly credible.

And regardless of the accuracy of this paleo data, we are talking roughly 4 degrees coming out of the ice age over 8,000 years. Does it matter exactly what the error bar is, for example whether it’s +/- 0.2 degree or +/- 1.0 degree or whatever? It’s important academically, but the most important point is that temperatures coming out of the ice age increased very slowly. Over the last 100 years they have increased at a vastly more rapid rate. This is just undeniable, and is the most important issue.

Climate change sceptics are totally incapable of working out the things that matter most.

@7 Gavin’s [Response: Nope. Only since the 19th Century] + graph.

At first glance your graph gives me grave concerns on the message you are attempting to deliver.

The step up at the end of the graph can only be from data, as you say, from the last century or two. To have any credibility the graph would need to show temperature variation at this level of time scale for the whole series. Otherwise, in the current vernacular, it’s a contender for ‘FAKE NEWS’.

Please explain my dilemma in interpretation.

[Response: Your dilemma is entirely self-imposed. Your desire for confirmation of your predetermined conclusion (that nothing untoward is occurring) is preventing you from investigating the answers to your questions in any way that risks your current conclusions. This unfortunately is nothing I can help you with. Of course, you could surprise me by reading the papers involved, checking out the copious discussions about this very point at the time, and in general give a little credence to the idea that ppl have already thought about the obvious things that you came up with after 10 minutes thought. – gavin]

To KW, 28, I believe the paleo data are typically century or longer averages. Which you are comparing to error bars on annual data for the present day.

The Presidential Cagwallagists at Breitbart report Earth’s narrow escape from a lethal temperature plunge during the government shutdown.

Keith, I really don’t care how you spell your name. You’ve never said anything correct or interesting, so it’s really not worth my taking the time to learn. If you want to hog all the l’s that is your business.

So, in addition to reading a graph, we can also add a lack of understanding of basic statistics. The sample mean converges toward the actual mean with the error decreasing as the inverse of the square root of the number of samples. There are multiple sources of data and multiple constraints.

And here’s yet another news flash for you: Your inability to understand something does not make it wrong–which is a very good thing given the enormous number of things you do not understand.
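Ray's statistical claim can be illustrated with a quick simulation. For independent (white-noise) samples, the spread of the sample mean falls as 1/√n. The sketch below uses synthetic noise only and is not an analysis of any temperature dataset. Whether the independence assumption holds for proxies is exactly what is disputed later in the thread. The function name and parameters are illustrative.

```python
import random
import statistics


def empirical_se_of_mean(n, trials=1000, sigma=1.0, seed=0):
    """Standard deviation of the sample mean over many independent draws of n white-noise values."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n)) for _ in range(trials)]
    return statistics.stdev(means)


# Compare the simulated spread of the mean with the 1/sqrt(n) prediction.
for n in (10, 100, 400):
    print(n, round(empirical_se_of_mean(n), 4), round(1.0 / n ** 0.5, 4))
```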

Keith,

I think we ‘need to explain things a little more simply’ for YOU!

There’s virtually no distinguishable error bar visible at that scale on the ‘modern temperature record’ section of that graph, let alone one matching 22K yr ago!! See that big vertical straight line?…… the initial blue quarter of it.. need I continue?… the marked ‘0’ was over 2000 years ago.

About Mr. KIA, Keith Woollard et al:

Hahaha, I’m so happy that I’m done with funny deniers for some years now :’D Their funny game goes like this:

One jumps out of the bush, throws in any completely irrational “comment” and when that “comment” has been disproved, then he ducks and another one jumps out of the bush and throws in another completely irrational “comment” :’D

Yeah, I know that game inside out. But hey, I love you, brainf*s, because YOU are my guarantee that the funny system will fall quickly 8) So, PLEASE, go on for goodness sake, please, never stop denying, never ever stop, we are almost done. Thank you, beloved deniers, thank you very much for serving my purpose:

The fall of greedy, ignorant capitalism.

It will happen, I swear, thanks to endless ignorance and denial, it will happen 100%. So, thank you, thank you, thank you, beloved deniers!

Best wishes,

Nemesis

To these funny, beloved deniers:

CO2 is good for the plants, we are heading towards a wonderful future, we will grow wine and strawberries on Greenland, we will welcome millions and millions of climate refugees as cheap labour in Europe and elsewhere, Mr. Trump and alike will surely get what they want and deserve:

More heat.

Yeah, Karma is a funny thing I tell you.

Btw: Tomorrow we will get up to +16°C in Germany, gnahahaha, in the midst of Winter :’D Yoh yoh, that’s just weather, everything is fine, wonderful, please, never stop denying, beloved deniers, Hell needs to be Hot, Hotter. Please, make Hell real Hot. And I mean it 3:-(

A few comments…

1) Ray “The sample mean converges toward the actual mean with the error decreasing as the inverse of the square root of the number of samples.”

IFF the noise is white.

IFF you are sampling the same thing

Neither of those two conditions exist in the proxy datasets.

2) Putting a one-sigma uncertainty on a paleo temperature dataset is ludicrous. The errors are not normally distributed and there is no account taken of systemic issues.

3) “but the most important point is temperatures coming out of the ice age increased very slowly.” Yes, that is what the graph shows without realistic error bars. But the graph is extremely sparsely sampled and the composite of hugely varying datasets. Do any of the individual proxies look as smooth?

But Ray, I do like your comment about hogging all the L’s
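Keith's first caveat can be quantified for one common kind of non-white noise. Take a stationary AR(1) process with lag-1 autocorrelation φ. For large n, the variance of its sample mean is inflated by (1 + φ)/(1 − φ). That is equivalent to an effective sample size of n(1 − φ)/(1 + φ), so the mean converges more slowly but still converges. The sketch below uses synthetic AR(1) noise. The value φ = 0.6 is an arbitrary illustration, not an estimate for any proxy. The sketch says nothing about the second caveat, whether the proxies are sampling the same quantity.

```python
import math
import random
import statistics


def ar1_series(n, phi, rng):
    """Stationary AR(1) series with unit marginal variance and lag-1 autocorrelation phi."""
    x = rng.gauss(0.0, 1.0)                # draw the start from the stationary distribution
    innov_sd = math.sqrt(1.0 - phi * phi)  # keeps the marginal variance at 1
    out = []
    for _ in range(n):
        out.append(x)
        x = phi * x + rng.gauss(0.0, innov_sd)
    return out


def approx_se_of_mean(n, phi):
    """Large-n approximation: the white-noise 1/sqrt(n) inflated by sqrt((1 + phi) / (1 - phi))."""
    return math.sqrt((1.0 + phi) / (1.0 - phi) / n)


def effective_n(n, phi):
    """Independent samples that would give the same variance of the mean (large-n approximation)."""
    return n * (1.0 - phi) / (1.0 + phi)


rng = random.Random(1)
n, phi = 400, 0.6
means = [statistics.fmean(ar1_series(n, phi, rng)) for _ in range(1000)]
print("simulated SE of mean:", round(statistics.stdev(means), 4))
print("approximate SE:      ", round(approx_se_of_mean(n, phi), 4))
print("white-noise SE:      ", round(1.0 / math.sqrt(n), 4))
print("effective n:         ", round(effective_n(n, phi), 1))
```

With φ = 0.6, 400 correlated values pin down the mean about as well as 100 independent ones would. The error still shrinks with more data, just more slowly.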

@31 Gavin’s response:

According to the data, warming since the 19th century is around 1-1.5 degrees centigrade. I estimate your graph, up to the start of the upturn, is based on a 300-400 year resolution. It then increases 3.5 degrees centigrade over a couple of hundred years. That’s FAKE NEWS in my book. Please explain the graph.

Keith Woollard,

I’ve come up with a mnemonic to help me with your name. I’ll call you Woollard the Dullard.

1) On the time scales used in the paleo constructions, noise is probably pretty close to white.

2) Really? You really think that the concept of standard deviation is only meaningful for a normal distribution. That’s fricking adorable.

3) Dude, that is why you combine the proxies–to smooth out the noise. And it works.

Uh, Titus. Look at the date. 2100–that is a projection. I wonder whether your MAGA hat might be too tight.

Titus, #39–You do realize you’re repeating yourself, right? Did you actually check out the ‘copious discussions’ Gavin linked?

OK, here’s a BOTE model of a ‘quasi-modern’ temperature spike compared with a stable 400-year window.

First, the window: by definition, it averages out to some baseline, with only minor random variation about a mean. We will set the baseline as equal to 0.

Now, the temperature spike. For simplicity, we’ll model it as perfectly linear: every year, the temperature will increase by 0.01 C. (That’s considerably less than what we observe today, according to the instrumental data, but let’s be both simple and conservative.)

So, in Year 1, we get an anomaly of 0.01C, increasing to 0.02, and so on until in Year 100, we have 1 degree C of warming. Thereupon the Maxwell demons decide it would be amusing to undo all they just did, and they reverse the process exactly, so that in Year 200, we’re back to 0.

Conveniently, this means that you can pair years such that adding them gives you a 1-degree total. For example, Year 1–0.01 C–can be paired with Year 101–0.99 C. Thus, you find that there are 100 pairs of years, each of which sums to exactly 1, for a total of 100 degree-years. Spread over 400 years, that means the mean temperature of a 400-year window with a 200-year linear up-and-down spike is going to be increased by 0.25 degrees.

There’s nothing close to that in the relevant part of the curve. Yes, this is BOTE, not a rigorous demonstration. But I would think the logic is correct enough that it’s at least indicative.

(You could easily make it more realistic by using real data for the last 100 years instead of the linear model I used–it’d only be a bit more trouble to gin it up in Excel–but I think that would be still less flattering to the contrarian case.)
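Kevin's back-of-the-envelope arithmetic is easy to check. The sketch below builds his linear up-and-down spike (0.01 C per year for 100 years, then back down over the next 100) inside a 400-year window. The function name and defaults are illustrative.

```python
def bote_window_mean(rate=0.01, ramp_years=100, window=400):
    """Mean anomaly of a window holding a linear rise-then-fall spike on a zero baseline."""
    up = [rate * k for k in range(1, ramp_years + 1)]                   # 0.01 ... 1.00
    down = [rate * (ramp_years - k) for k in range(1, ramp_years + 1)]  # 0.99 ... 0.00
    rest = [0.0] * (window - 2 * ramp_years)                            # baseline years
    series = up + down + rest
    return sum(series) / len(series), max(series)


mean_anomaly, peak = bote_window_mean()
print(mean_anomaly, peak)  # a 1 C peak still raises the 400-year mean by about 0.25 C
```

Doubling the window to 800 years halves the shift to about 0.125 C, since the same 100 degree-years are spread over twice as many years.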

Ray (I am going to refrain from name calling – people who toe the line seem immune from censorship whereas anyone who questions anything seems to be treated somewhat differently)

You do realise white doesn’t mean unknown, it means all frequencies represented equally.

Obviously I don’t agree with (for example) Shakun 2012’s claimed precision or accuracy, but that is not the point. My point is Gavin’s pasting of two (three actually)completely different datatypes onto the same graph. That alone is bad enough but to then draw an inference from the exact differences between the data types to make a point is just bad science.

Gavin, resample the graph at a 300-year increment or cumulate your error bars like real scientists do.

So, Keith, why is the use of multiple data types in the same graph a bad thing, as long as all of them have error bars associated with them and are nominally measuring the same quantity? Might it not provide a more complete picture in some instances…like this one, where it serves to illustrate the utter uniqueness of the current epoch?

The hadsst3 data shows global sst temperatures are now below the pre El Nino trend.

https://3.bp.blogspot.com/-Bw6Bg3cSLAU/Wmoj7naWrQI/AAAAAAAAAmk/_z1Slvtf6kYnxrqlLbwAMH4ecojMN9XdwCLcBGAs/s1600/HADSST2018125.png

I see that reality is beginning to intrude upon the dangerous global warming team. They say “it is plausible, if not likely, that the next 10 years of global temperature change will leave an impression of a ‘global warming hiatus’.”

Climate is controlled by natural cycles. Earth is just past the 2003+/- peak of a millennial cycle and the current cooling trend will likely continue until the next Little Ice Age minimum at about 2650. See the Energy and Environment paper at http://journals.sagepub.com/doi/full/10.1177/0958305X16686488

and an earlier accessible blog version at http://climatesense-norpag.blogspot.com/2017/02/the-coming-cooling-usefully-accurate_17.html

Here is the abstract for convenience :

“ABSTRACT

This paper argues that the methods used by the establishment climate science community are not fit for purpose and that a new forecasting paradigm should be adopted. Earth’s climate is the result of resonances and beats between various quasi-cyclic processes of varying wavelengths. It is not possible to forecast the future unless we have a good understanding of where the earth is in time in relation to the current phases of those different interacting natural quasi periodicities. Evidence is presented specifying the timing and amplitude of the natural 60+/- year and, more importantly, 1,000 year periodicities (observed emergent behaviors) that are so obvious in the temperature record. Data related to the solar climate driver is discussed and the solar cycle 22 low in the neutron count (high solar activity) in 1991 is identified as a solar activity millennial peak and correlated with the millennial peak – inversion point – in the RSS temperature trend in about 2003. The cyclic trends are projected forward and predict a probable general temperature decline in the coming decades and centuries. Estimates of the timing and amplitude of the coming cooling are made. If the real climate outcomes follow a trend which approaches the near term forecasts of this working hypothesis, the divergence between the IPCC forecasts and those projected by this paper will be so large by 2021 as to make the current, supposedly actionable, level of confidence in the IPCC forecasts untenable.”

For the current situation and longer range forecasts see Figs 4 and 12 in the links above.

https://2.bp.blogspot.com/-ouMJV24kyY8/WcRJ4ACUIdI/AAAAAAAAAlk/WqmzMcU6BygYkYhyjNXCZBa19JFnfxrGgCLcBGAs/s1600/trend201708.png

Fig 4. RSS trends showing the millennial cycle temperature peak at about 2003 (14)

Figure 4 illustrates the working hypothesis that for this RSS time series the peak of the Millennial cycle, a very important “golden spike”, can be designated at 2003

The RSS cooling trend in Fig. 4 and the Hadcrut4gl cooling in Fig. 5 were truncated at 2015.3 and 2014.2, respectively, because it makes no sense to start or end the analysis of a time series in the middle of major ENSO events which create ephemeral deviations from the longer term trends. By the end of August 2016, the strong El Nino temperature anomaly had declined rapidly. The cooling trend is likely to be fully restored by the end of 2019.

https://4.bp.blogspot.com/-iSxtj9C8W_A/WKNAMFatLGI/AAAAAAAAAkM/QZezbHydyqoZjQjeSoR-NG3EN2iY93qKgCLcB/s1600/cyclesFinal-1OK122916-1Fig12.jpg

Fig. 12. Comparative Temperature Forecasts to 2100.

Fig. 12 compares the IPCC forecast with the Akasofu (31) forecast (red harmonic) and with the simple and most reasonable working hypothesis of this paper (green line) that the “Golden Spike” temperature peak at about 2003 is the most recent peak in the millennial cycle. Akasofu forecasts a further temperature increase to 2100 of 0.5 °C ± 0.2 °C, rather than the 4.0 °C ± 2.0 °C predicted by the IPCC, but this interpretation ignores the Millennial inflexion point at 2004. Fig. 12 shows that the well documented 60-year temperature cycle coincidentally also peaks at about 2003. Looking at the shorter 60+/- year wavelength modulation of the millennial trend, the most straightforward hypothesis is that the cooling trends from 2003 forward will simply be a mirror image of the recent rising trends. This is illustrated by the green curve in Fig. 12, which shows cooling until 2038, slight warming to 2073 and then cooling to the end of the century, by which time almost all of the 20th century warming will have been reversed.

Titus estimates that the sampling period in the Marcott temperature reconstruction posted above, going back 20,000 years, is only 300 – 400 years. He thinks this would miss short-term temperature spikes.

But apparently the sampling period is 120 years as below.

https://www.realclimate.org/index.php/archives/2013/03/response-by-marcott-et-al/

And any robust increase in temperatures would be expected to last several centuries wouldn’t it, so would show up in the graph?

Titus doesn’t like the way different sets of data are spliced together. They all relate to common base points, so I don’t see the problem.
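The resolution question can be explored the same way. The sketch below takes a 200-year triangular spike (1 C peak, as in the earlier back-of-the-envelope model). It smooths the spike with simple moving averages of different widths and reports the highest smoothed value. The plain boxcar average is an assumption made for illustration. It is not the procedure used to build the reconstruction, and the function names are hypothetical.

```python
def triangle_spike(total_years=1000, start=400, rate=0.01, ramp_years=100):
    """Zero baseline with a linear rise to a peak and an equal linear fall back to zero."""
    series = [0.0] * total_years
    for k in range(1, ramp_years + 1):
        series[start + k - 1] = rate * k                              # rising limb
        series[start + ramp_years + k - 1] = rate * (ramp_years - k)  # falling limb
    return series


def moving_average_peak(series, width):
    """Largest value of a simple (boxcar) moving average of the given width."""
    return max(sum(series[i:i + width]) / width for i in range(len(series) - width + 1))


spike = triangle_spike()
for width in (1, 120, 400):
    print(width, round(moving_average_peak(spike, width), 3))
```

With these inputs the 1 C peak survives as about 0.7 C at 120-year resolution and about 0.25 C at 400-year resolution. A spike of this size is damped by the averaging but is still present in the smoothed series.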

@41 Ray Ladbury says: “Look at the date. 2100–that is a projection”

Oh my goodness. So Gavin is actually using a projection to show it has only been warming since the 19th century. That confirms it, this is “VERY FAKE NEWS”.

@42 Kevin McKinney says: “Did you actually check out the ‘copious discussions’ Gavin linked”

I was questioning the graph. Ray @41 has just confirmed it is “FAKE NEWS”

Cheers

Ray @ 45,

They are not measuring the same thing, and the errors are not appropriate.

Go and grab the SI from Marcott2013. Let’s look at three proxies:

IOW225514 with a mean sample rate (sr) of 70 years shows a reasonably linear cooling trend of 3 degrees over its 6000 years.

GeoB 3313-1 with a sr of 564 (not 530 as stated, and almost twice the maximum for selection) shows a less believable, but still clear, warming trend of 2 degrees over the 11,000 years of the study.

Moose Lake (sr 47) has a noisy but identifiable cooling of 1 degree over 6000 years.

Note also that 2 of these don’t reach the minimum 6500-year period needed as a cut-off.

Sure, combining all these is helpful and gives a picture of the past, but to say that the current rate of change of temperature did not occur in the past 11,000 years is laughable. That is what Gavin is trying to do by combining the disparate data types. And that leads people like Kevin @43 to believe their own flawed model.

… to say that the current rate of change of temperature did not occur in the past 11,000 years is in fact correct!

That is what Gavin is doing by combining the disparate data types – being correct!