There has been a bit of excitement and confusion this week about a new paper in Nature Geoscience, claiming that we can still limit global warming to below 1.5 °C above preindustrial temperatures, whilst emitting another ~800 Gigatons of carbon dioxide. That’s much more than previously thought, so how come? And while that sounds like very welcome good news, is it true? Here are the key points.

Emissions budgets – a very useful concept

First of all – what the heck is an “emissions budget” for CO2? Behind this concept is the fact that the amount of global warming that is reached before temperatures stabilise depends (to good approximation) on the cumulative emissions of CO2, i.e. the grand total that humanity has emitted. That is because any additional amount of CO2 in the atmosphere will remain there for a very long time (to the extent that our emissions this century will likely prevent the next Ice Age due to begin 50 000 years from now). That is quite different from many atmospheric pollutants that we are used to, for example smog. When you put filters on dirty power stations, the smog will disappear. When you do this ten years later, you just have to stand the smog for a further ten years before it goes away. Not so with CO2 and global warming. If you keep emitting CO2 for another ten years, CO2 levels in the atmosphere will increase further for another ten years, and then stay higher for centuries to come. Limiting global warming to a given level (like 1.5 °C) will require more and more rapid (and thus costly) emissions reductions with every year of delay, and simply become unattainable at some point.

It’s like having a limited amount of cake. If we eat it all in the morning, we won’t have any left in the afternoon. The debate about the size of the emissions budget is like a debate about how much cake we have left, and how long we can keep eating cake before it’s gone. Thus, the concept of an emissions budget is very useful to get the message across that the amount of CO2 that we can still emit in total (not per year) is limited if we want to stabilise global temperature at a given level, so any delay in reducing emissions can be detrimental – especially if we cross tipping points in the climate system, e.g. trigger the complete loss of the Greenland Ice Sheet. Understanding this fact is critical, even if the exact size of the budget is not known.
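To make the cake arithmetic concrete, here is a minimal sketch of how a fixed budget translates into time. The budget of 200 GtC and the emission rate of 10 GtC per year are invented round numbers for illustration only, not values from any paper, and the straight-line decline is just one simple assumed pathway.

```python
def years_at_constant_rate(budget, annual_emissions):
    """Years until the budget is used up if emissions stay flat."""
    return budget / annual_emissions


def years_with_linear_decline(budget, annual_emissions):
    """Years to reach zero if emissions fall in a straight line from today's
    rate and the total emitted on the way down equals the budget.

    The emitted total is the area of a triangle:
    0.5 * annual_emissions * years = budget.
    """
    return 2.0 * budget / annual_emissions


# Hypothetical illustration values (assumed, not from the paper):
budget_gtc = 200.0        # remaining budget in GtC
rate_gtc_per_year = 10.0  # current emissions in GtC per year

flat = years_at_constant_rate(budget_gtc, rate_gtc_per_year)
decline = years_with_linear_decline(budget_gtc, rate_gtc_per_year)

# Ten more years at today's rate eat 100 GtC of the budget first.
budget_after_delay = budget_gtc - 10 * rate_gtc_per_year
delayed = years_with_linear_decline(budget_after_delay, rate_gtc_per_year)

print(f"flat emissions: budget gone in {flat:.0f} years")
print(f"linear decline starting now: {decline:.0f} years to reach zero")
print(f"linear decline after 10 years of delay: {delayed:.0f} years to reach zero")
```

With these made-up numbers, a ten-year delay halves the time left for the phase-out, which is the sense in which every year of delay makes the required reductions steeper.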

But of course the question arises: how large is this budget? There is not one simple answer to this, because it depends on the choice of warming limit, on what happens with climate drivers other than CO2 (other greenhouse gases, aerosols), and (given there are uncertainties) on the probability with which you want to stay below the chosen warming limit. Hence, depending on assumptions made, different groups of scientists will estimate different budget sizes.

Computing the budget

The standard approach to computing the remaining carbon budget is:

(1) Take a bunch of climate and carbon cycle models, start them from preindustrial conditions and find out after what amount of cumulative CO2 emissions they reach 1.5 °C (or 2 °C, or whatever limit you want).

(2) Estimate from historic fossil fuel use and deforestation data how much humanity has already emitted.

The difference between those two numbers is our remaining budget. But there are some problems with this. The first is that you’re taking the difference between two large and uncertain numbers, which is not a very robust approach. Millar et al. fixed this problem by starting the budget calculation in 2015, to directly determine the remaining budget up to 1.5 °C. This is good – in fact I suggested doing just that to my colleague Malte Meinshausen back in March. Two further problems will become apparent below, when we discuss the results of Millar et al.
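Why the difference of two large, uncertain numbers is fragile can be shown with a short sketch. The totals and error bars below are hypothetical, chosen only to make the effect visible, and the errors are assumed to be independent so that they add in quadrature.

```python
import math


def difference_with_uncertainty(a, sigma_a, b, sigma_b):
    """Return (a - b, sigma), assuming independent errors added in quadrature."""
    return a - b, math.sqrt(sigma_a ** 2 + sigma_b ** 2)


# Hypothetical numbers in GtC, each known to within 10 %:
total_budget, total_sigma = 600.0, 60.0  # emissions until the limit is reached
emitted, emitted_sigma = 500.0, 50.0     # emissions to date

remaining, sigma = difference_with_uncertainty(
    total_budget, total_sigma, emitted, emitted_sigma
)
relative = sigma / remaining

print(f"remaining budget: {remaining:.0f} +/- {sigma:.0f} GtC "
      f"({100 * relative:.0f} % relative uncertainty)")
```

Two inputs that are each good to 10 % leave a remainder that is uncertain by roughly 78 % in this toy case, which is why starting the budget calculation from the present day is the more robust choice.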

So what did Millar and colleagues do?

A lot of people were asking this, since actually it was difficult to see right away why they got such a surprisingly large emissions budget for 1.5 °C. And indeed there is not one simple catch-all explanation. Several assumptions combined made the budget so big.

The temperature in 2015

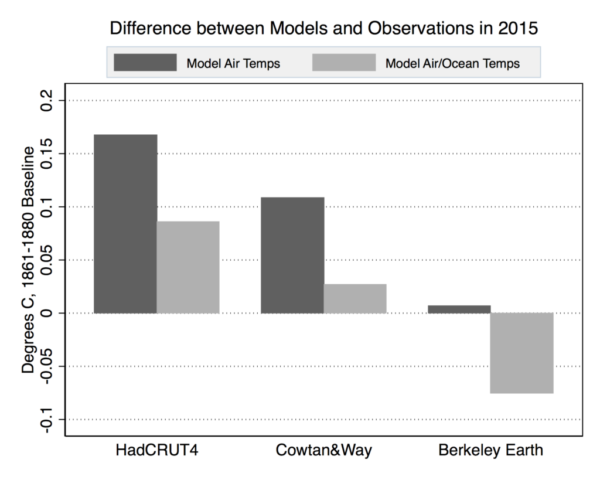

To compute a budget from 2015 to “1.5 °C above preindustrial”, you first need to know at what temperature level above preindustrial 2015 was. And you have to remove short-term variability, because the Paris target applies to mean climate. Millar et al. concluded that 2015 was 0.93 °C above preindustrial. That’s a first point of criticism, because this estimate (as Millar confirmed to me by email) is entirely based on the Hadley Centre temperature data, which notoriously have a huge data gap in the Arctic. (Here at RealClimate we were actually the first to discuss this problem, back in 2008.) As the Arctic has warmed far more than the global mean, this leads to an underestimate of global warming up to 2015, by 0.06 °C when compared to the Cowtan & Way data or by 0.17 °C when compared to the Berkeley Earth data, as Zeke Hausfather shows in detail over at Carbon Brief.

Figure: Difference between modeled and observed warming in 2015, with respect to the 1861-1880 average. Observational data has had short-term variability removed per the Otto et al. 2015 approach used in Millar et al. 2017. Both RCP4.5 CMIP5 multimodel mean surface air temperatures (via KNMI) and blended surface air/ocean temperatures (via Cowtan et al. 2015) are shown – the latter provide the proper “apples-to-apples” comparison. Chart by Carbon Brief.

As a matter of fact, as Hausfather shows in a second graph, HadCRUT4 is the outlier data set here, and given the Arctic data gap we’re pretty sure it is not the best data set. So, while the large budget of Millar et al. is based on the idea that we have 0.6 °C to go until 1.5 °C, if you believe (with good reason) that the Berkeley data are more accurate we only have 0.4 °C to go. That immediately cuts the budget of Millar et al. from 242 GtC to 152 GtC (their Table 2). [A note on units: you need to always check whether budgets are given in billion tons of carbon (GtC) or billion tons of carbon dioxide. 1 GtC = 3.7 GtCO2, so those 242 GtC are the same as 887 GtCO2.] Gavin managed to make this point in a tweet:

Headline claim from carbon budget paper that warming is 0.9ºC from pre-I is unsupported. Using globally complete estimates ~1.2ºC (in 2015) pic.twitter.com/B4iImGzeDE

— Gavin Schmidt (@ClimateOfGavin) September 20, 2017
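Since the units trip people up, here is a small sketch of the GtC to GtCO2 conversion mentioned in the note on units above. It assumes rounded molar masses of 44 g/mol for CO2 and 12 g/mol for carbon, which gives the factor of about 3.7; the function names are illustrative.

```python
# Mass ratio of CO2 to the carbon it contains, using rounded molar masses.
CO2_PER_C = 44.0 / 12.0  # about 3.67


def gtc_to_gtco2(gtc):
    """Convert billion tons of carbon to billion tons of carbon dioxide."""
    return gtc * CO2_PER_C


def gtco2_to_gtc(gtco2):
    """Convert billion tons of carbon dioxide to billion tons of carbon."""
    return gtco2 / CO2_PER_C


for budget_gtc in (242, 152):  # the two budgets quoted from Millar et al., Table 2
    print(f"{budget_gtc} GtC = {gtc_to_gtco2(budget_gtc):.0f} GtCO2")
```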

Add to that the question of what years define the “preindustrial” baseline. Millar et al. use the period 1861-80. For example, Mike has argued that the period AD 1400-1800 would be a more appropriate preindustrial baseline (Schurer et al. 2017). That would add 0.2 °C to the anthropogenic warming that has already occurred, leaving us with just 0.2 °C and almost no budget to go until 1.5 °C. So in summary, the assumption by Millar et al. that we still have 0.6 °C to go up to 1.5 °C is at the extreme high end of how you might estimate that remaining temperature leeway, and that is one key reason why their budget is large. The second main reason follows.
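The arithmetic behind the shrinking leeway can be laid out in a few lines. All numbers are the ones quoted in this post; the dictionary layout and function name are only an illustrative way of organising them.

```python
TARGET = 1.5              # °C above preindustrial
MILLAR_2015 = 0.93        # °C, HadCRUT4-based estimate used by Millar et al.
BASELINE_SHIFT = 0.2      # °C added by an AD 1400-1800 baseline (Schurer et al. 2017)

# Extra warming to 2015 implied by data sets with better Arctic coverage.
dataset_offsets = {
    "HadCRUT4": 0.0,
    "Cowtan & Way": 0.06,
    "Berkeley Earth": 0.17,
}


def remaining_warming(warming_to_2015, target=TARGET):
    """Warming still 'to go' before the target is reached, in °C."""
    return round(target - warming_to_2015, 2)


leeway = {
    name: remaining_warming(MILLAR_2015 + offset)
    for name, offset in dataset_offsets.items()
}
leeway_early_baseline = remaining_warming(
    MILLAR_2015 + dataset_offsets["Berkeley Earth"] + BASELINE_SHIFT
)

for name, value in leeway.items():
    print(f"{name}: {value:.2f} °C to go")
print(f"Berkeley Earth with earlier baseline: {leeway_early_baseline:.2f} °C to go")
```

The 0.57 °C for HadCRUT4 is the “0.6 °C to go” of Millar et al.; the other rows reproduce the 0.4 °C and 0.2 °C figures discussed above.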

To exceed or to avoid…

Here is another problem with the budget calculation: the model scenarios used for this actually exceed 1.5 °C warming. And the 1.5 °C budget is taken as the amount emitted by the time when the 1.5 °C line is crossed. Now if you stop emitting immediately at this point, of course global temperature will rise further. From sheer thermal inertia of the oceans, but also because if you close down all coal power stations etc., aerosol pollution in the atmosphere, which has a sizeable cooling effect, will go way down, while CO2 stays high. So with this kind of scenario you will not limit global warming to 1.5 °C. This is called a “threshold exceedance budget” or TEB – Glen Peters has a nice explainer on that (see his Fig. 3). All the headline budget numbers of Millar et al., shown in their Tables 1 and 2, are TEBs. What we need to know, though, is “threshold avoidance budgets”, or TAB, if we want to stay below 1.5 °C.
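The difference between the two budget types can be sketched with toy scenarios. The two trajectories below are invented for illustration and come from no model; the avoidance budget is simplified here to “the largest total among scenarios that never reach the threshold”, whereas real analyses work with large scenario ensembles and probabilities.

```python
def threshold_exceedance_budget(cumulative, temperatures, threshold):
    """Cumulative emissions at the first step where the threshold is reached.

    Returns None if the scenario never reaches the threshold.
    """
    for emitted, temp in zip(cumulative, temperatures):
        if temp >= threshold:
            return emitted
    return None


def threshold_avoidance_budget(scenarios, threshold):
    """Largest total emissions among scenarios whose peak stays below the threshold."""
    totals = [cum[-1] for cum, temps in scenarios if max(temps) < threshold]
    return max(totals) if totals else None


# Hypothetical scenarios: (cumulative emissions in GtC, warming in °C) per time step.
overshoot = ([0, 50, 100, 150, 200, 250], [1.00, 1.15, 1.30, 1.45, 1.60, 1.75])
# Emissions stop at 120 GtC, yet temperature still creeps up in the last step.
avoid = ([0, 40, 80, 110, 120, 120], [1.00, 1.12, 1.25, 1.38, 1.45, 1.48])

teb = threshold_exceedance_budget(*overshoot, threshold=1.5)
tab = threshold_avoidance_budget([overshoot, avoid], threshold=1.5)

print(f"TEB: {teb} GtC (taken from a scenario that peaks at {max(overshoot[1])} °C)")
print(f"TAB: {tab} GtC (scenario peaks at {max(avoid[1])} °C)")
```

In this toy setup the exceedance budget is the larger number, but it is read off a scenario that sails past the limit, which is exactly the objection raised above.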

Millar et al. also used a second method to compute budgets, shown in their Figure 3. However, as Millar told me in an email, these “simple model budgets are neither TEBs nor TABs (the 66 percentile line clearly exceeds 1.5 °C in Figure 3a), they are instead net budgets between the start of 2015 and the end of 2099.” What they are is budgets that cause temperature to exceed 1.5 °C in mid-century, but then global temperature goes back down to 1.5 °C in the year 2100!

In summary, both approaches used by Millar compute budgets that do not actually keep global warming to 1.5 °C.

How some media (usual suspects in fact) misreported

We’ve seen a bizarre (well, if you know the climate denialist scene, not so bizarre) misreporting about Millar et al., focusing on the claim that climate models have supposedly overestimated global warming. Carbon Brief and Climate Feedback both have good pieces up debunking this claim, so I won’t delve into it much. Let me just mention one key aspect that has been misunderstood. Millar et al. wrote the confusing sentence: “in the mean CMIP5 response cumulative emissions do not reach 545GtC until after 2020, by which time the CMIP5 ensemble-mean human-induced warming is over 0.3 °C warmer than the central estimate for human-induced warming to 2015”. As has been noted by others, this is comparing model temperatures after 2020 to an observation-based temperature in 2015, and of course the latter is lower – partly because it is based on HadCRUT4 data as discussed above, but equally so because of comparing different points in time. This is because it refers to the point when 545 GtC is reached. But the standard CMIP5 climate models used here are not actually driven by emissions at all, but by atmospheric CO2 concentrations. For the historic period, these are taken from observed data. So the fact that 545 GtC are reached too late doesn’t even refer to the usual climate model scenarios. It refers to estimates of emissions by carbon cycle models, which are run in an attempt to derive the emissions that would have led to the observed time evolution of CO2 concentration.

Does it all matter?

We still live in a world on a path to 3 or 4 °C global warming, waiting to finally turn the tide of rising emissions. At this point, debating whether we have 0.2 °C more or less to go until we reach 1.5 °C is an academic discussion at best, a distraction at worst. The big issue is that we need to see falling emissions globally very very soon if we even want to stay well below 2 °C. That was agreed as the weaker goal in Paris in a consensus by 195 nations. It is high time that everyone backs this up with actions, not just words.

Technical p.s. A couple of less important technical points. The estimate of 0.93 °C above 1861-80 used by Millar et al. is an estimate of the human-caused warming. I don’t know whether the Paris agreement specifies to limit human-caused warming, or just warming, to 1.5 °C – but in practice it does not matter, since the human-caused warming component is almost exactly 100 % of the observed warming. Using the same procedure as Millar yields 0.94 °C for total observed climate warming by 2015, according to Hausfather.

However, updating the statistical model used to derive the 0.93 °C anthropogenic warming to include data up to 2016 gives an anthropogenic warming of 0.96 °C in 2015.

Weblink

Statement by Millar and coauthors pushing back against media misreporting. Quote: “We find that, to likely meet the Paris goal, emission reductions would need to begin immediately and reach zero in less than 40 years’ time.”

Anyway, I would like to apologize. I did misread things when I made the comment that ‘at least try to remove El Nino’. An attempt was made, although looking at the model used it seems inadequate.

So, from what I can tell, the shape of a step response function is obtained from climate model output and then convolved with historical forcing data. The result is then fit to historical temperature data via simple linear regression.

If this is the case, then internal variability is effectively being treated as white noise by the statistical model used, which will clearly have problems and should result in an overestimate in the anthropogenic temperature trend if the data set ends in a strong El Nino year.
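The procedure described in this comment can be sketched roughly as follows. This is an illustration of the general technique as the comment describes it, not the code or data of Millar et al.; the forcing ramp, the step response, the scaling of 2.0 and the 0.2 °C warm final year are all made-up values, and the step response is assumed to stay at its last value for longer lags.

```python
def convolve_response(forcing, step_response):
    """Temperature response to a forcing series, given the response to a unit step.

    Each year's forcing increment triggers a scaled, time-shifted copy of the
    step response; the copies are summed. Beyond the end of step_response the
    response is assumed to stay at its final value.
    """
    n = len(forcing)
    increments = [forcing[0]] + [forcing[i] - forcing[i - 1] for i in range(1, n)]
    response = [0.0] * n
    for t in range(n):
        for s in range(t + 1):
            lag = min(t - s, len(step_response) - 1)
            response[t] += increments[s] * step_response[lag]
    return response


def fit_linear(x, y):
    """Ordinary least squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Sanity check: a unit step in forcing reproduces the step response itself.
unit_step = convolve_response([0.0, 1.0, 1.0, 1.0], [0.3, 0.5, 0.6])

# Hypothetical data: a forcing ramp and an invented step response.
forcing = [0.1 * i for i in range(10)]
predicted = convolve_response(forcing, [0.3, 0.5, 0.6, 0.65])

# "Observations" that follow the predicted shape exactly ...
observed_clean = [0.1 + 2.0 * p for p in predicted]
slope_clean, _ = fit_linear(predicted, observed_clean)

# ... and the same series with an unusually warm final year added on top.
observed_warm_end = observed_clean[:-1] + [observed_clean[-1] + 0.2]
slope_warm_end, _ = fit_linear(predicted, observed_warm_end)

print(f"fitted scaling without warm final year: {slope_clean:.3f}")
print(f"fitted scaling with warm final year:    {slope_warm_end:.3f}")
```

Because ordinary least squares treats every residual as independent noise, a warm anomaly in the final year pulls the fitted scaling upward in this toy case, which is the direction of bias the comment is worried about.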

At this point, I think it’s more constructive to simply assume we can and work as hard as possible to get carbon emissions down. Throwing our hands up and saying it can’t be done is incredibly dangerous at this stage, as people will assume a hedonist lifestyle, thinking that the world is doomed anyway…

Jonathan Richards @31

Scientists first brought the problem to the attention of politicians in 1965. This was when President Johnson was given a comprehensive report on the state of the environment. In the half-century since, the world’s politicians have either acknowledged the science and, effectively, done nothing or denied the science and done nothing.

What more could the scientists do? The world had been warned; it was up to the politicians to act. It was not the fault of the scientists that the politicians did not act. So I’m not at all surprised that in the intervening years the scientists just continued to do what they knew best: science. They’ve acquired a greater and greater knowledge and understanding of the planetary climate and accumulated a vast amount of evidence to back them up.

In a way, you are correct — it is irrelevant, but what else could they do? I’m reminded of a story from ancient Japan, where a samurai challenged a tea-master over some slight. The tea-master knew he had no chance against the samurai. Instead, resigned to his fate, he appeared at the appointed time and place with his tea-making paraphernalia and began brewing tea. The samurai relented.

Re 12, Mr. Know It All

I also find the use of models for predictions with 1/100 degree significant digits to be quite funny.

I think Stefan was somewhat unfair with some of his comments about the HadCRUT4 dataset. The reason the dataset “notoriously [has] a huge data gap in the Arctic” is because, well, there is not a lot of observational data there, especially in the past. HadCRUT4 uses SST measurements from buoys and ships, and land air temperatures from meteorological stations. There is no infilling of data into grid points where there have been no measurements.

Saying HadCRUT4 “is not the best data set” relies on assuming that those dataset techniques, that interpolate data into data sparse regions from regions where there is data, do not introduce uncertainties that may influence comparisons with model simulations.

We know for instance that the temporal/spatial variability in these in-filled regions is different to where there are observations, which need to be thought about when comparing with model variability.

We recently published a paper exploring the impact of observational uncertainty on an attribution analysis.

http://journals.ametsoc.org/doi/abs/10.1175/JCLI-D-16-0628.1

In our discussion exploring the (very minor) differences in results when using different datasets we said:-

“Dataset creation approaches that infill missing data areas may give overconfidence to climate changes in regions where there are no direct measurements, when compared with model simulations that have data in those regions.”

and “care should be taken in the interpretation of data comparisons when using datasets with infilled data areas.”

There are very good scientific reasons for using observational datasets that fill in data sparse regions in many analyses – I will continue using them – but we should be aware of not only their strengths but also of their weaknesses.

[Response: Hi Gareth, I think the main issue is whether HadCRUT4 is the most appropriate match to true global means in models (as it was effectively used in the Millar paper). An appropriate match to the masked model data would be fine, but is rarely done. One solution which has different assumptions than what is used to define the HadCRUT4 global values, would be to calculate the zonal means first and then area weight those – which assumes that missing data warms at the same rate as the local zonal average as opposed to the global means. Sampling from the reanalyses do suggest that would be more accurate (reduced bias), even if you don’t want to go to the Cowtan and Way methodology. – gavin]
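The two averaging choices contrasted in this response can be illustrated on a toy grid. The anomalies below are invented, every latitude band is given the same number of cells, and the first function is a simplification of how a global value is formed from incomplete coverage, not the actual HadCRUT4 procedure.

```python
import math


def global_mean_available_cells(bands):
    """Area-weighted mean over the cells that have data.

    Cells without data drop out, which implicitly treats them as if they
    warmed at the global-mean rate.
    """
    numerator = denominator = 0.0
    for latitude, cells in bands:
        weight = math.cos(math.radians(latitude))  # cell area scales with cos(lat)
        for value in cells:
            if value is not None:
                numerator += weight * value
                denominator += weight
    return numerator / denominator


def global_mean_zonal_first(bands):
    """Average each latitude band first, then area-weight the band means.

    Missing cells are implicitly treated as if they warmed at the rate of
    their own latitude band.
    """
    numerator = denominator = 0.0
    for latitude, cells in bands:
        present = [value for value in cells if value is not None]
        if not present:
            continue  # a band with no data at all cannot contribute
        weight = math.cos(math.radians(latitude))
        numerator += weight * sum(present) / len(present)
        denominator += weight
    return numerator / denominator


# Hypothetical anomalies in °C; None marks a cell without observations.
observed = [
    (75, [2.0, None, None, None]),  # sparsely sampled, fast-warming Arctic
    (45, [1.0, 1.0, 1.0, 1.0]),
    (0, [0.8, 0.8, 0.8, 0.8]),
    (-45, [0.8, 0.8, 0.8, 0.8]),
    (-75, [0.6, 0.6, 0.6, 0.6]),
]
# The same grid if the whole Arctic band had warmed like its one observed cell.
complete = [(75, [2.0, 2.0, 2.0, 2.0])] + observed[1:]

available = global_mean_available_cells(observed)
zonal = global_mean_zonal_first(observed)
truth = global_mean_available_cells(complete)

print(f"mean of available cells: {available:.3f} °C")
print(f"zonal means first:       {zonal:.3f} °C")
print(f"complete toy grid:       {truth:.3f} °C")
```

In this constructed case the zonal-first estimate matches the complete grid, while the mean over available cells comes out lower, because the gap sits in the band that warms fastest.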

Peak temperature arguments imply that bad things happen when global temperatures reach a certain threshold but their severity is independent of the path taken to reach that threshold.

Does this imply that a 1.5°C increase in global temperatures brings the same problems independent of the heat stored lower in the oceans or how much the ice caps have melted?

Does the emphasis on peak temperature sideline other important measurements?

Should we look for other measurements to supplement global temperature targets?

[Response: Good point – the outcome will depend on the path, of course, e.g. for the reasons you give. In practice that is not a big issue, though, since the practical paths from where we are now to stopping global warming at the 1.5 °C level all look very similar. Provided that you assume they are reasonably smooth without abrupt changes to our emissions. -Stefan]

Dan H., #47–

“We just need to reduce them to meet the terrestrial removal, which increase with increasing atmospheric concentrations.”

A dangerous and unwarranted assumption. There is good reason to believe that ‘terrestrial removal’ could begin to *reduce* in the future in response to rising temperatures–to note just two cases, conversion of rain forest to savannah in the Amazon, and radical methane emissions increases in the Arctic, due both to melting permafrost and to increasing microbial metabolism.

Mark Goldes:

Well, no wonder 8^D! Extraordinary claims require extraordinary evidence. You haven’t presented any.

Eric Swanson:

Claiming “The science violates expands the Second Law of Thermodynamics” tells us all we need to know.

Kevin,

“A dangerous and unwarranted assumption.” Not really. The bigger assumption would be that the rain forests cease to exist. Granted, there is significant destruction occurring today that could lead to that assumption, but that is a separate issue. Crops must continue to grow to support a growing population, most of which will increase uptake as concentrations and temperatures rise. The biggest sink is still the oceans, which will continue to dissolve carbon dioxide, continuously reaching new equilibriums.

#40 Larry: Fee and Dividend will lower emissions more than you think. The point of the policy is to (1) make fossil fuels more expensive than clean alternatives and (2) provide incentives for eliminating emissions. So even if the rich can afford it, why would they buy more expensive dirty energy when clean energy costs less? Also, at $50/ton-CO2, CCS will be cheaper than paying the fee. At $100/ton, Direct Air Capture will be cheaper than paying the fee. So, dramatic emissions reductions are possible when certain thresholds are reached.

While F&D is estimated to reduce emissions by over 50% in 20 years, we need to be down to about zero in 30 years, so we will also need direct regulations like the ones you talk about. But the chances that we will implement rationing anytime soon are much lower than the chances that we implement F&D, which, after all, is similar to the existing Alaska Permanent Fund policy that everyone in Alaska loves.

Dan H @59

“Crops must continue to grow to support a growing population, most of which will increase uptake as concentrations and temperatures rise.”

Where’s your evidence? Lands under cultivation are already pushed to the limits, with much land lost to erosion and over-farming.

Where’s your evidence that temperatures somehow increase carbon sink potential? This claim of yours makes no sense whatsoever.

There’s some published research suggesting more CO2 has already led to more plant and tree growth, but the research says this effect diminishes over time.

The IPCC also finds that higher temperatures will reduce crop productivity after 2050.

Improved soil management may increase soil potential as a carbon sink in theory but doing this in reality means considerable changes to farming techniques, on a GLOBAL scale, which looks very hard to make happen, certainly in the short time frames required. In other words nice idea, but don’t count on it.

“The biggest sink is still the oceans, which will continue to dissolve carbon dioxide, continuously reaching new equilibriums.”

This doesn’t work. The oceans’ ability to absorb CO2 saturates. Global warming is expected to reduce the ocean’s ability to absorb CO2, leaving more in the atmosphere…which will lead to even higher temperatures, as described in the NASA link below.

https://earthobservatory.nasa.gov/Features/OceanCarbon/

Stefan, I have two questions. In replying to #56 you said that practical pathways from here to an avoidance of 1.5 °C all look very similar, “provided that you assume they are reasonably smooth without abrupt changes to our emissions”.

Question #1 is, what are some pathways that you would consider to have abrupt changes in emissions?

Question #2 is, why not model these to potentially put them on the table, or to at least provide them as outlier proposals? Being that we all are frogs in an alarmingly warming pot, I believe it would be wise for modellers to give policymakers and the public some choices that go beyond what you think may be politically palatable.

Thanks for your valuable work. But I think some additional work in this direction by yourself or other whom you might encourage would be very valuable for informing public dialog.

“or others”, not “or other”.

Dan H., #59–

No, the rain forests don’t have to ‘cease to exist’ for their carbon-sinking capacity to be degraded. Any shrinkage, all other things being equal, will do that–has already done it, if the study below is correct.

https://phys.org/news/2015-04-amazon-rainforest-losses-impact-climate.html

Nor is the Amazon tipping point an ‘assumption’–it’s in the literature, and has been since at least 2000, after carbon cycle models started to be implemented in GCMs. The first such study I’m aware of was Cox et al (2000); it’s discussed in the “Three Degrees” section of Mark Lynas’ “Six Degrees”, which I summarized here:

https://letterpile.com/books/Mark-Lynass-Six-Degrees-A-Summary-Review

It was a modeled projection, and research on the topic continues, so I wouldn’t call it a settled matter. I’m not well up on the state of the research today, other than the facts that there is a lot of it, and that there are competing effects in play which researchers are attempting to pin down; but the risk is clearly well beyond ‘assumption.’ Here’s just one recent(ish) ‘alarmist’ example:

http://onlinelibrary.wiley.com/doi/10.1890/ES15-00203.1/full

“We also present a set of global vulnerability drivers that are known with high confidence: (1) droughts eventually occur everywhere; (2) warming produces hotter droughts; (3) atmospheric moisture demand increases nonlinearly with temperature during drought; (4) mortality can occur faster in hotter drought, consistent with fundamental physiology; (5) shorter droughts occur more frequently than longer droughts and can become lethal under warming, increasing the frequency of lethal drought nonlinearly; and (6) mortality happens rapidly relative to growth intervals needed for forest recovery. These high-confidence drivers, in concert with research supporting greater vulnerability perspectives, support an overall viewpoint of greater forest vulnerability globally.”

See the whole abstract for more discussion about the ‘competing effects’ I mentioned.

(And yes, that’s a larger geographical scope than we’ve been talking about–the boreal forest is quite important, too, and other forest lands shouldn’t be neglected–but I only specified the Amazon as one example and so don’t mind going a bit wider here.)

An assertion relying on multiple assumptions. Have you tested any of them, or even examined them? For example, what about agricultural carbon efficiency–I’ve seen some surprisingly high numbers for CO2 emitted by industrial ag per calorie consumed. It’s very plausible that this would completely outweigh the ‘uptake’ of CO2. (I’ve seen different numbers for how much agriculture emits, but I’ve *never* seen an estimate concluding that current ag functions as a CO2 sink!) For another example, surely it matters what new cropland *displaces*. The answer on that, in the case of the Amazon, is already in.

And for that matter, for your argument to make sense at all, wouldn’t the CO2 have to end up being sequestered? AFAIK, that pretty much doesn’t happen. Some of ag’s CO2 is emitted directly by feedlots, farm equipment, and related transportation activities; some (such as unwanted leaves, stems and the like) is recycled into the soil but still remains in the active carbon cycle; some (probably a small fraction) is transpired or excreted by humans and our commensals. Worth remembering, too, is that quite a lot of modern ag is functioning as an enormous topsoil mining operation–which is to say, it is functioning as a ‘soil-to-atmosphere carbon pump.’ Can you point to *any* net sequestration by current agriculture?

I left marine sinks out of it because in your original comment you specified ‘land sinks’. But since you bring it up, basic chemistry tells us that the marine sink will diminish with warming, since warmer waters take up less CO2 than cooler ones. That’s believed to be a crucial causal link in Milankovitch-driven glacial cycles, last I heard at least. So this point is perhaps the very worst argument that CO2 sinks will increase with warming. I’d call it an ‘own goal,’ actually.

Got anything else?

There was a comedy skit from ~60 years ago. It involved one comic offering a series of highly unlikely eventualities all of which his partner answered with the phrase “There’s a chance.” Until the last one. Finding good pastrami in Havana? The answer was, “There is no chance.” (Timing and delivery were involved in the comedy.)

Holding warming below 1.5C? There is no chance.

Dan DaSilva: I also find the use of models for predictions with 1/100 degree significant digits to be quite funny.

AB: And where have you seen such a thing? I’ve seen estimates with rather wide error bars, which is entirely different. There’s no problem with showing more digits than are “significant”. It’s the only way to make a graph that isn’t stairsteps, and since the uncertainty grows quickly, any output from a model which conforms to your insistence would quickly flatline and become worthless, eh?

———

Ahh, the “AESOP Institute” and no-fuel power. At least they have truth-in-naming. Wasn’t Aesop’s product line “fables”?

I wonder if, in the later Roman Empire, there were barbarian denialists: “provinces devastated, nah, just people getting hysterical about a few German lads out on the town”, “those emperors want you to think there’s a barbarian menace just so they can keep their jobs”, “this barbarian alarmism is just a conspiracy to inflict an authoritarian, militaristic, monarchical system on us, we never used to have that (oh, wait..)”.

Not so fast, Dan H. Growing crops are only a seasonal CO2 sink, in some cases less than seasonal. The carbon in the grain/fruit will be exhaled as CO2 after they are consumed and metabolized, and the discarded stems/leaves will emit CO2 as they decay, so there is no net benefit whatsoever in terms of CO2 draw-down. But then you probably already know this and are just peddling your usual nonsense.

PRACTICAL PATHS?

Thanks Stefan for your answer to #56 where you say

But what are practical paths?

In Well below 2°C: Mitigation strategies for avoiding dangerous to catastrophic climate changes, Ramanathan and Xu outline three levers that can control climate

1. The super pollutant lever, mitigating short-term climate pollutants

2. The Carbon Neutral Lever, mitigating CO2 emissions

3. The Carbon Extraction and Sequestration Lever

From what I’ve read, sequestration (3) seems the least practical lever. There are sub-levers in CO2 mitigation (2) that seem practical. Some sub-levers of the super pollutant lever (1) seem very practical, such as phasing out halocarbons.

CLIMATE LEVER PRACTICALITY

Like this posting, Ramanathan and Xu are discussing the aim to stop global warming at the 1.5°C level but it is clear that the levers they mention have different effects on measures such as ocean heat content, which are not directly related to peak temperature.

Ramanathan and Xu’s levers have different levels of practicality. When “lever practicality” is part of the argument, policy options estimating which paths are practical become clearer.

Example:

What is the link between animal husbandry and sea level rise, particularly the production of beef and lamb, which produces large quantities of methane? Because of methane’s short lifetime in the atmosphere, emissions have a limited effect on peak temperature – until the peak is near. However, while in the atmosphere, it will have caused some sea level rise, amongst other effects.

If a significant effect is there, would an easy lever to pull be telling the public?

nigelj,

You are forgetting your basic chemistry. The amount of carbon dioxide dissolved in the ocean will be in equilibrium with its partial pressure in the atmosphere (Henry’s Law). If the atmospheric concentration increases 10%, the partial pressure will increase correspondingly, and the concentration of dissolved gas will also increase 10%. Yes, warmer waters decrease the solubility, but to a much lesser extent. The current average surface temperature is ~16C, an increase of ~1C over the past century. The resulting drop in carbon dioxide solubility in water was about 2.5%. Compare that to the 35% increase in atmospheric concentrations during that time frame, and the overwhelming direction is towards more oceanic uptake, not less. This effect decreases as water warms, as solubility changes less at higher temperatures. Do the math yourself.

This simple illustration is only part of the carbon cycle: increased calcification will remove carbon dioxide from the water, allowing for the dissolution of more gas. The only way that the oceans will outgas carbon dioxide would be for the water temperature to increase, without an increase in atmospheric concentrations. Similarities occur with the land sinks also: they are driven by pressure much more than by temperature.
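For readers who want to “do the math”, the equilibrium arithmetic in this comment amounts to the following sketch. It takes the comment’s own figures (a 35 % rise in partial pressure and a 2.5 % drop in solubility) at face value without checking them, and it assumes a simple Henry’s Law equilibrium, C = k_H × p, so it says nothing about how quickly the real ocean approaches that equilibrium.

```python
def relative_equilibrium_change(pressure_change, solubility_change):
    """Fractional change in equilibrium dissolved concentration for C = k_H * p.

    Both arguments are fractional changes, e.g. 0.35 for +35 %.
    """
    return (1.0 + pressure_change) * (1.0 + solubility_change) - 1.0


# Figures as stated in the comment above (not independently verified):
net_change = relative_equilibrium_change(pressure_change=0.35,
                                         solubility_change=-0.025)

print(f"net change in equilibrium dissolved CO2: {100 * net_change:+.1f} %")
```

Under those stated assumptions the pressure term dominates and the equilibrium concentration rises by about 32 %; whether equilibrium is a fair assumption on human time scales is a separate question.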

“I wonder if, in the later Roman Empire, there were barbarian denialists…”

Dunno, though it wouldn’t be a shock.

However, it is documented that pretty much while the Appomattox surrender was happening, some Southern authorities were working to determine where they should put the next camp for Northern POWs.

Geoff, thanks for sharing that PNAS study.

Some may be interested to observe the occupation of Mitch McConnell’s office by hurricane survivors and(?) 350.org activists.

https://www.facebook.com/kevin.mckinney.1840

nigelj: The oceans ability to absorb CO2 saturates.

AB: but not until after the oceans die. Well, not die, but change to lifeforms yucky and toxic to humans. “Pass the jellyfish and a gas mask, please”.

——

Kevin McKinney: conversion of rain forest to savannah in the Amazon,

AB: But the Amazon represents a one-off injection of carbon into the atmosphere, while grasslands are true carbon sequesters. Note that Larry Edward’s link shows that the loss of 10% of the trees in the Amazon represents a mere 1.5% of increased carbon in the atmosphere. So a total loss of the Amazon would cost perhaps 15% while unlocking the tremendous carbon sucking action of subsequent grasslands. Perhaps we should kill the Amazon deliberately, eh? ;-)

Dan H: The biggest sink is still the oceans, which will continue to dissolve carbon dioxide, continuously reaching new equilibriums. …and… You are forgetting your basic chemistry. The amount of carbon dioxide dissolved in the ocean will be in equilibrium with its partial pressure in the atmosphere (Henry’s Law).

AB: The oceans aren’t at equilibrium for either temperature or CO2 concentration. That takes many, many centuries to achieve.

Note that we’re slowing down the overturning of ocean waters. This reduces CO2 uptake by the ocean. If you keep slowing the overturning, you keep reducing uptake because the atmosphere “sees” more and more stagnating surface water and less and less returning deep water.

Here are some quotes from https://scripps.ucsd.edu/programs/keelingcurve/2013/07/03/how-much-co2-can-the-oceans-take-up/

“although the oceans presently take up about one-fourth of the excess CO2 human activities put into the air, that fraction was significantly larger at the beginning of the Industrial Revolution.”

“This slowing of ocean mixing may have another effect. It stifles the transport of nutrients such as nitrate and phosphate from deeper waters to the surface, which diminishes the growth of phytoplankton, which store carbon in their tissue as a product of photosynthesis. The sinking tissue takes the carbon with it to the deep ocean when the organisms die.”

“All this adds up to what scientists expect to be a gradual slowing of ocean CO2 uptake if human fossil fuel use continues to accelerate. ”

“As a result, ocean waters deeper than 500 meters (about 1,600 feet) have a large but still unrealized absorption capacity … As emissions slow in the future, the oceans will continue to absorb excess CO2 … into ever-deeper layers … eventually, 50 to 80 percent of CO2 cumulative emissions will likely reside in the oceans”

So your whole argument crashes and burns for many reasons, but especially because of that word in the last paragraph: eventually. Yep, a thousand or so years from now equilibrium will be reached, but as for me, I’m kinda interested in human-scale periods of time.

Dan H @70

Your maths could be right or it could be wrong. To be honest, it’s over 30 years since I did basic university chemistry, and I don’t use it in my job, so I’m not going to comment. Clearly NASA thinks warming becomes the dominant factor at some stage and reduces the oceans’ ability to absorb CO2, and it’s unlikely their maths would be wrong.

But it appears oceans will still absorb less carbon due to the increasing acidity effect. There has “already” been a measured slowdown in the ability of oceans to absorb CO2 since 2000, and this is obviously really important real-world data. This is put down to this acidity effect. The research is below and may be of interest to you if you are into chemistry.

http://www.earthinstitute.columbia.edu/articles/view/2586

Increased calcification won’t be much help removing CO2 from the water. The pace is very slow, over many centuries, and given ocean acidification, there will be forces cancelling out this process by dissolving calcium.

You could be right about the outgassing thing, but it’s not the main issue; the main issue is the oceans’ reducing ability to absorb CO2.

Al Bundy @74, I agree that by the time oceans really do stop absorbing CO2, all sorts of other problems will have happened already. It seems every week there’s a report of some change or problem already happening, or projected to happen. It’s a rate-of-change problem in the main.

Ocean acidification is toxic for various species, and hard to adapt to because of rates of change. Fish require very exact temperatures to breed, and are slow to adapt. Some species have migrated already, but fish currently adapted to very cold oceans will not have any places to go. It goes on and on.

In fact it’s the multiple negative effects of climate change on oceans and land that bother me most, because they add up insidiously.

The authors of Millar et al (2017) “Emission budgets and pathways consistent with limiting warming to 1.5C” have a guest post at CarbonBrief.

AB, #74–

“Perhaps we should kill the Amazon deliberately, eh? ;-)”

“Do ya feel lucky? Do ya?” ;-)

(Remember, Amazonian soils are strongly lateritic, so what works–or worked!–on the Prairies may not work so well in a denuded Amazon.)

Thanks for the concise clarifications, Stefan.

The United States is clearly the biggest obstacle to global GHG reductions. Our political system is currently controlled by fossil fuel, timber, and big ag (especially meat and cattle feed) corporations. We might need more aggressive pressure from outside, especially Europe. There are several alternatives here. Maybe I can discuss them with you in Bonn in November; you or others can reach me and Linda Sills at mike.greenframe@gmail.com

@Geoff Beacon,

I would have to disagree 100% with your post. The single most practical path is the BCCS path: changing agriculture to regenerative models of production. First off, the land is already managed; all you need to do is change management. Secondly, it is both more profitable and more efficient than current models in almost every case. Thirdly, of all the possible mitigation strategies, it has by far the most additional emergent beneficial effects over a wide range of associated “wicked problems” defying solutions.

As Nobel prize winner Rattan Lal states, “Yes, agriculture done improperly can definitely be a problem, but agriculture done in a proper way is an important solution to environmental issues including climate change, water issues, and biodiversity.”

As far as methane from agriculture, it is a non issue if again, it is done properly. The grassland biome including the animals is a net sink for methane. In fact increasing the % of pastures, especially with blends including C4 grasses is an important practical AGW mitigation strategy for both CO2 and Methane.

Feedlots though? Yes they are a methane source.

Another major carbon sink seems to be in the process of failing and turning into a source: https://www.theguardian.com/environment/2017/sep/28/alarm-as-study-reveals-worlds-tropical-forests-are-huge-carbon-emission-source

Presumably this would alter whatever carbon budget we think we might have…

AL Bundy #74

Was this a reference to the recent paper by Daniel H. Rothman?

Thresholds of catastrophe in the Earth system

Is it sensible?

…and speaking, as we were, of the loss of tropical rainforest:

http://www.cbc.ca/news/technology/tropical-forests-carbon-study-1.4312154

I *don’t* feel lucky, reading that.

@74 Al Bundy,

Trying to act like your namesake I see. lolz

No, you do not cut down rain forests to plant grasslands when there is 1/2 the planet in degraded grasslands to restore! D’oh! Then we have both grasslands and rainforests, and a chance to actually repair the biosphere’s ecosystem functions at least a little.

We don’t need to be taking 3 steps back to move 2 steps forward, when restoring a grassland is just a direct 2 steps forward without any negative side effects…a win win for everyone.

For example:

How much grassland and prairie is plowed, herbicided, insecticided, fertilized and otherwise molested into non-existence to grow corn for biofuels, when grass makes better ethanol than corn anyway? It is more efficient, with more yield per acre, and the side effect is significantly increased carbon sequestration in the soil too! Not some trivial amount either: serious tonnage per acre per year. More profit too, BTW.

Grass Makes Better Ethanol than Corn Does

https://www.scientificamerican.com/article/grass-makes-better-ethanol-than-corn/

Soil Carbon Storage by Switchgrass Grown for Bioenergy

http://digitalcommons.unl.edu/cgi/viewcontent.cgi?article=1552&context=agronomyfacpub

Furthermore, as significant as that carbon sequestration by switchgrass is, there are farmers growing switchgrass and other plants to raise cattle that have double to triple+ the rate of carbon sequestration as the switchgrass for biofuel guys!

There are even a few guys “off the charts” like 30-40 tonnes CO2e/ha/yr for ten years straight or more, but we can safely assume them outliers until we see everyone doing that. But it does at least prove it is possible, however unlikely. If it is possible, then there should be ways to help others do it too.

My problem with the climate scientists as a whole is the silo effect. They have no idea what is possible regarding the new understanding in agricultural science. So they model their projections based on the idea at best agriculture can eliminate emissions and maybe sequester another 7% or so, when actually the high end best case scenario is well over 100% yearly emissions, and then you drop down from there based on what % of farmers actually convert their farms to carbon farming.

That might truly fall to the 7%, but that’s due to low adoption, not biophysical capacity. It makes a big difference especially when approaching political decision makers.

Some Murdoch papers are running an ad that seeks to guarantee >1.5, by claiming the world needs 1,200 more coal-burning power plants to stave off the existential threat of the next Ice Age.

https://vvattsupwiththat.blogspot.com/2017/09/mwanwhile-back-in-murdochland.html

Killian says:

23 Sep 2017 at 1:30 AM

“Bull, uf so.

The election was Trump’s as soon as Bernie got railroaded out of the nomination.

Even if there was Russian messing around, it wouldn’t have beaten Bernie’s double-digit margin.

Americans are not very bright these days.”

CH: If that’s your assessment you can include yourself in the group of “Not very bright Americans”. Bernie did not have the votes…. period. It’s called math.

calyptorhynchus @67,

The barbarian challenge to the empires of the classical era does make an interesting analogy for the challenge of modern AGW. But note, the “later Roman Empire” could mean a whole lot of things depending on the where, when and who. A landowner in 4th century Gaul would be alarmed by what were actually tiny barbarian raids & the inability of massive imperial forces to prevent them. Yet a century later, a landowner of Gaul may well not see much difference between being ruled by a succession of self-proclaimed warmongering Roman emperors and a Visigoth or Frankish king.

From the perspective of a resident of Rome, I can imagine quite a bit of 4th century denial, especially with the Aurelian Walls acting as a bit of adaptation to the barbarian situation. And when the barbarians did break through, it was on the third attempt so even on August 23rd AD410 there could have been folk saying it would all turn out okay, just like it did in AD409 & AD408.

Yet the analogy does demonstrate serious differences. The later Roman Empire faced many problems, and with no democracy to prevent reform, the difficulty was not denialism but identifying what to do about the empire’s underlying list of problems: ♠ Increasingly unstable imperial politics, ♠ Intractable economic problems including monetary inflation, ♠ Barbarian enhancement, they being no longer a crowd of naked savages with spears and now as well equipped and organised as the Roman army itself.

@70 Dan H,

Basic chemistry is incapable of explaining the rise in atmospheric CO2 at all. You say that:

“You are forgetting your basic chemistry. The amount of carbon dioxide dissolved in the ocean will be in equilibrium with its partial pressure in the atmosphere (Henry’s Law). If the atmospheric concentration increases 10%, the partial pressure will increase correspondingly, and the concentration of dissolved gas will also increase 10%.”

Consider the following:

Henry’s law doesn’t really work well for complex carbonate equilibria and big volumes of liquid water, but even as an approximation, let’s assume that if we have 38,000 Gt of dissolved inorganic carbon, DIC, (CO2 + HCO3 + CO3) in the oceans, and the preindustrial CO2 in the atmosphere is about 2,200 gigatons (300 ppm), that’s a ratio of about 0.06 (atm/ocean). Henry’s law says this ratio is a constant, the equilibrium constant. So if we double the atmospheric CO2 from the preindustrial to 4,400 Gt (600 ppm) then that’s not going to last, that’s a ratio of 0.11, and the ratio has to fall back to 0.06. A little algebra with these numbers shows that the final equilibrium should thus only be 2,400 gigatons in the atmosphere, 40,000 gigatons in the ocean!

So, Henry’s Law tells us that even if we raise atmospheric CO2 to 600 ppm, it should quickly fall back to around 310 ppm! So why is the CO2 level rising at all???
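The arithmetic in that thought experiment can be checked with a short sketch. It takes the comment's round numbers at face value and assumes, as the comment does for the sake of argument, a single well-mixed system with a fixed atmosphere-to-ocean ratio; the function and variable names are illustrative only.

```python
# Toy check of the Henry's-law thought experiment above.
# Assumption: the atmosphere/ocean carbon ratio is one fixed constant,
# which is exactly the simplification the comment argues is unrealistic.

def equilibrium_split(total_gt, ratio):
    """Split total_gt so that atmosphere / ocean == ratio."""
    ocean = total_gt / (1.0 + ratio)
    atm = total_gt - ocean
    return atm, ocean

OCEAN_DIC_GT = 38_000.0          # ocean reservoir, figure from the comment
ATM_PREINDUSTRIAL_GT = 2_200.0   # preindustrial atmosphere (~300 ppm), from the comment
PPM_PER_GT = 300.0 / ATM_PREINDUSTRIAL_GT

# Roughly 0.058; the comment rounds this to 0.06.
ratio = ATM_PREINDUSTRIAL_GT / OCEAN_DIC_GT

# Double the atmospheric amount, then let the toy system re-equilibrate.
total_after_doubling = OCEAN_DIC_GT + 2 * ATM_PREINDUSTRIAL_GT
atm, ocean = equilibrium_split(total_after_doubling, ratio)

print(f"ratio = {ratio:.3f}")
print(f"atmosphere = {atm:.0f} Gt ({atm * PPM_PER_GT:.0f} ppm)")
print(f"ocean = {ocean:.0f} Gt")
```

With the unrounded ratio this gives an atmosphere of about 2,320 Gt (about 316 ppm) and an ocean of about 40,080 Gt, in line with the rounded 2,400 and 40,000 gigatons quoted above. The point of the exercise is the same either way: a pure fixed-ratio model predicts almost no lasting rise, which is not what is observed.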

The answer is, our planet’s atmosphere and oceans are not that well described by the basic chemistry of a gas over a beaker of water. There’s the complex equilibria of DIC to take into account, plus the slow rate of ocean mixing. This was first investigated back in the 1950s, actually:

https://history.aip.org/climate/Revelle.htm

What this means is that the overall rate of absorption of CO2 by the oceans is a complex function of numerous processes – biological, chemical and physical – whose individual contributions are still a matter of active scientific research (and which are certainly changing as the planet warms). But, as the Keeling Curve shows, these processes are not capable of keeping up with fossil fuel emissions from the electricity generation and transportation sectors.

Incidentally, these kinds of dynamic processes also play roles in ocean acidification. A simple glance at the buffering power of the carbonate equilibrium system and the vast reservoir of DIC in the oceans would lead one to guess that CO2 acidification would be negligible – but it’s the rate of change, not the long-term equilibria, that matters in terms of the real-time effect. Here’s a nice discussion of that issue.

(The actual equilibrium takes on the order of a few thousand years, the mixing time of the oceans, to reach. . . But that’s at constant temperature. . . So if the oceans warm significantly, then we lock in a new equilibrium, at higher atmospheric CO2 for much longer timescales.)

Scott S: Trying to act like your namesake I see. lolz

AB: Yeah. My favorite invention of his, uh, mine, is “Shoe Lights.” And, as it turns out, shoe lights DID become cool. Who knew? Well, Al Bundy, of course.

“It’s a prediction because right now there are no biorefineries built that handle cellulosic material” (2008)

Fortunately, your link is obsolete. There are a few refineries up and running at commercial scale. Corn stover is a leading contender for feedstock. Food AND fuel. Electrification of transportation is NOT the only way to go renewable. Internal combustion engines with 50-60% efficiency using biofuel is a grand solution and two solutions are almost always superior to one.

Scott Strough:

Again, only if they persist ‘in perpetuity’, or about as long as the fossil carbon we’re digging up and burning did. Much of the high carbon-storage capacity grasslands were converted to intensive human economic use in the same time frame. A number of cultural changes appear prerequisite for any large-scale restoration of those lands to long-term carbon sequestration.

Trees, OTOH, can be grown for a few decades, harvested and deposited in offshore subduction trenches for rapid burial by anaerobic sediment: bye-bye carbon for the subsequent tectonic cycle. There’s lots of cut-over forest land in N. America that nobody wants for anything else. Other than to grow trees for lumber, that is, or for the incommensurable values one or more humans assigns to a ‘wild’ forest. Then there are the operating costs of growing the trees and depositing them in an oceanic trench, of course. A preposterous proposal? Will it scale? Hmm…

Meanwhile, AGW is driving extant forest boundaries up in elevation and/or latitude, most conspicuously by fire, cycling climatically-active carbon rapidly where I’ve been living the last couple of decades.

Don’t get me wrong, I’m all for removing the CO2 from the atmosphere that we’ve added to power prosperity. I’m in even greater favor of not adding any more. I assume that if either desideratum comes to pass, it will be by multiple, anastomosing, highly-contingent pathways. Some key events are more likely to occur than others, although few if any are certain to. Sort of like the origin of life, come to think of it. That had to wait until the Late Heavy Bombardment tapered off, and then took another hundred million years or two of remotely probable coincidences. Good thing for us there were lots of chances, but too bad about all the failed candidates.

In the time I have left, I’d be happily amazed to see the global economy decarbonized. I don’t know that it’s any more likely to happen than large-scale chernozem regeneration or direct transport of woody biomass to geologic sequestration are, though. IMHO a US Carbon Fee and Dividend with Border Adjustment Tariff has the economic appeal of being simple to implement and maintain, with a well-defined endpoint. Its political appeal has yet to be demonstrated, however. As for practical carbon re-fossilization proposals, well, you be the judge. So be the rest of us.

Modern democracy means one man one vote, a system promoted to avoid tyranny.

So in what sense is POTUS elected democratically? In what sense are voters represented in the Senate democratically?

However well democracy avoids dictatorships, it offers no guarantees against the tyranny of the majority.

We have made a mess of our habitat; we are too clever for our own good. Populations and politicians are in denial.

Already there is unbelievable human suffering around the globe. It’s just going to spread to areas we thought were exempt.

Makes no difference to the universe.

AL Bundy #74

“Pass the jellyfish and a gas mask, please”

The proliferation of jellyfish has already led to one of our top restaurants offering them on the menu. Rather tasteless apparently, but very rich in protein. But I will pass on it.

Don’t get me wrong, climate change is clearly a threat to the oceans. We have enough problems with overfishing, mercury contamination, nitrates, and plastics without climate change altering ocean chemistry as well.

Carbon budget studies are one of the grey areas of climate science. They include things that go beyond modelling and climate. For instance, fossil fuels. There are social, economic and technical issues that are overlooked by climate scientists. For instance, the idea that “we still live in a world on a path to 3 or 4 °C global warming” only applies under the assumption that fossil fuels are like tap water: all you have to do is open the tap and it will flow freely.

Unfortunately, we live in a world where militarism, the defence industry, the oil industry and nations’ interests are mixed together regarding energy ‘security’. While climate science promotes the narrative of cooperation for stopping the use of fossil fuels, one just needs to look at how much money is spent in national defence budgets to see that the world is still fiercely competing to control the remaining economically viable resources of fossil fuels.

Dennis N Horne:

IMHO, thoughtful US voters agree that the ideal of popular sovereignty in a pluralistic republic implies ‘one man, one vote’ as a top-level design goal of our system of government, but recognize that that implementation is necessarily more complicated, that our system’s strengths can also be weaknesses, and that not everyone can always be satisfied regardless. They may ask “What Is To Be Done?” but be risk-averse at the margin.

Benjamin Franklin said “Those who would give up essential Liberty, to purchase a little temporary Safety, deserve neither Liberty nor Safety.” His words may be stirring, but they are purely rhetorical. In reality, all US voters who aren’t deontological libertarians are consequentialist libertarians, so we trade Liberty and Safety back and forth all the time.

@91 Mal

You said, ” A number of cultural changes appear prerequisite for any large-scale restoration of those lands to long-term carbon sequestration.”

You are wrong. Many billions of dollars every year are being spent right now to prevent restoration of those lands to long term carbon sequestration. The cultural pressure is in the opposite direction as you presume. It is the full Governmental pressure hampering the change. The full power of the US government is difficult to fight when they push as hard as they are now to force farmers to follow their road to ruin or force them out of business. Very few farmers are so stupid as to fight directly against USDA directives telling them what to grow and how. Most who refused lost their farms.

There are a few lunatic farmers that figured out cracks in the governmental wall. I recommend reading the book “Everything I Want To Do Is Illegal: War Stories from the Local Food Front” so you understand the war going on right now by farmers trying to take back their culture and community from the odious governmental interference. They might even be capable of opening the floodgates. Time will tell. But the government is fighting back hard. Very hard. We will see who wins the battle, because if the government wins, the USA will most certainly collapse under its own misguided policies. It is a clear and present danger.

“The nation that destroys its soil destroys itself.” – Franklin D. Roosevelt

All that has to happen is to remove the regulatory barriers and stop subsidizing inefficient, unprofitable types of farming, and let farmers make their own decisions on what is more profitable and beneficial for THEIR farms, and the whole thing collapses in a decade and reverts back to raising animals ON the farm land instead of in inefficient CAFOs. The profit difference is stunning. Over 50% of all farms in the US operate at a LOSS. In the red. They get by because the whole family finds jobs off the farm and/or massive subsidies from the government setting prices and subsidizing crop insurance. The current systems are so inefficient and economically unsound they wouldn’t even last a decade without the government interference.

But convert that same land to sane types of agriculture and we get massive increases in efficiency, and profits in the $3,000-10,000 an acre range. Serious change from running negative. Even in the best “bumper crop” years the current corn and soy type farmers make maybe 300 dollars an acre, and as I said before, most years they actually operate at a loss. They are chasing their tails because when the bumper crops come the price per bushel drops. The only times they actually make a buck are when everyone else has crop failures but they end up with a bumper crop. That’s increasingly rare.

The commercial average farm in the US is between 300 to 500 acres in size, many 2000+ acres. To go from averaging no profit at all without government subsidy insurance checks to even $3,000 an acre means a farmer goes from welfare to $900,000.00/yr or more! You do NOT need to convince a farmer to do that. They just will do it the moment the government lets them. They will. Trust me on that. Many are trying and trying now! But the government always tries to quickly move in to crush them.
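For anyone who wants to check the multiplication, here is a minimal sketch. The acreages and per-acre figures are the claims made in this comment, not verified data, and the function name is made up for illustration.

```python
# Back-of-envelope check of the per-farm figures claimed in the comment.
# All inputs are the commenter's numbers, not independently verified.

def annual_profit_usd(acres, profit_per_acre_usd):
    """Whole-farm annual profit for a given acreage and per-acre profit."""
    return acres * profit_per_acre_usd

for acres in (300, 500, 2000):
    low = annual_profit_usd(acres, 3000)     # low end of the claimed range
    high = annual_profit_usd(acres, 10000)   # high end of the claimed range
    print(f"{acres} acres: ${low:,} to ${high:,} per year")
```

The $900,000 figure corresponds to the smallest case: 300 acres at the low end of the claimed range.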

Scott Strough:

Well, to the extent the US government is an obstacle to large-scale humic carbon storage, it sounds like that has to change. As you point out, however, the full power of the US government is difficult to fight. That’s often true of governments. They reflect class relations in the country’s population and more globally. Won’t those need to change first?

I’d love to see your proposal for addressing those issues, Scott. What Is To Be Done?

> Goldes

Boring, right?

Thank you to

People need to stop equating deforestation with “rainforests”.

The US produces and consumes roughly 25% of the world’s wood products. The vast majority of that is softwoods, such as pine, fir, and hemlock. Over half of our wood consumption goes toward building materials, in order to build plywood/ two by four houses that last an average of 60 years.

Hardwoods are not a common consumer or industrial product, and are not seen on many shelves in building supply stores, not to mention toilet paper and paper towels, courtesy of Canadian old growth softwood forests.

Much could be done on the demand side here in the US. We have little control over what happens in Brazil or Indonesia. Especially since America’s credibility is at an all-time low.

Scott Strough may already have linked this, but for those interested in soil carbon storage, I just stumbled across this article on the Nature Conservancy’s blog site while investigating something else: Can Grasslands, The Ecosystem Underdog, Play an Underground Role in Climate Solutions?. It’s an advocacy piece touting the American Carbon Registry for “carbon offsets/credits on the voluntary carbon market”, which this year claimed 100 Million Tonnes of carbon offset credits.

Not bad, but with all due credit, anthropogenic CO2 emissions in 2014 were an estimated 100 times that. Better not to emit that 100 Mt in the first place, no?