In a new paper in Science Express, Karl et al. describe the impacts of two significant updates to the NOAA NCEI (née NCDC) global temperature series. The two updates are: 1) the adoption of ERSST v4 for the ocean temperatures (incorporating a number of corrections for biases for different methods), and 2) the use of the larger International Surface Temperature Initiative (ISTI) weather station database, instead of GHCN. This kind of update happens all the time as datasets expand through data-recovery efforts and increasing digitization, and as biases in the raw measurements are better understood. However, this update is going to be bigger news than normal because of the claim that the ‘hiatus’ is no more. To understand why this is perhaps less dramatic than it might seem, it’s worth stepping back to see a little context…

Global temperature anomaly estimates are a product, not a measurement

The first thing to remember is that an estimate of how much warmer one year is than another in the global mean is just that, an estimate. We do not have direct measurements of the global mean anomaly, rather we have a large database of raw measurements at individual locations over a long period of time, but with an uneven spatial distribution, many missing data points, and a large number of non-climatic biases varying in time and space. To convert that into a useful time-varying global mean needs a statistical model, good understanding of the data problems and enough redundancy to characterise the uncertainties. Fortunately, there have been multiple approaches to this in recent years (GISTEMP, HadCRUT4, Cowtan & Way, Berkeley Earth, and NOAA NCEI), all of which basically give the same picture.

Composite of multiple estimates of the global temperature anomaly from Skeptical Science.

Once this is understood, it’s easy to see why there will be updates to the historical estimates over time: the raw measurement dataset used can be expanded, biases can be better understood and characterised, and the basic statistical methods for stitching it all together can be improved. Generally speaking these changes are minor and don’t affect the big picture.

Ocean temperature corrections are necessary and reduce the global warming trend

The saga of ocean surface temperature measurements is complicated, but you can get a good sense of the issues by reviewing some of the discussion that followed the Thompson et al. (2008) paper, for instance “Of buckets and blogs” and “Revisiting historical ocean surface temperatures”. The basic problem is that the method for measuring sea surface temperature has changed over time and across different ships, and this needs to be corrected for.

The new NOAA NCEI data is only slightly different from the previous version

The sum total of the improvements discussed in the Science paper is actually small. There is some variation around the 1940s because of the ‘bucket’ corrections, and a slight increase in the trend in the recent decade:

Figure 2 from Karl et al (2015), showing the impact of the new data and corrections. A) New and old estimates, B) the impact of all corrections on the new estimate.

The second panel is useful, demonstrating that the net impact of all corrections to the raw measurements is to reduce the overall trend.

The ‘hiatus’ is so fragile that even those small changes make it disappear

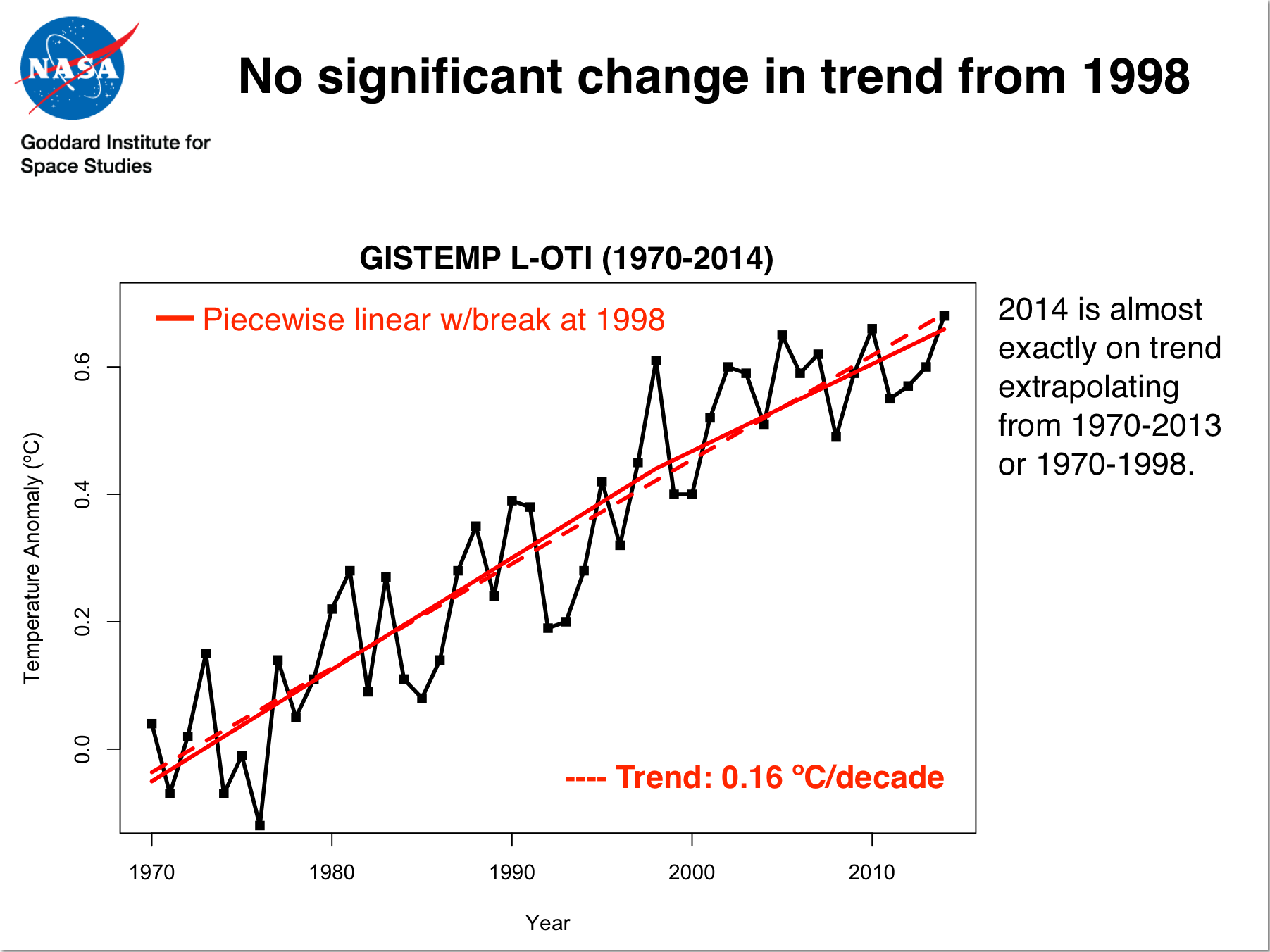

The ‘selling point’ of the paper is that with the updates to data and corrections, the trend over the recent decade or so is now significantly positive. This is true, but in many ways irrelevant. This is because there are two very distinct questions that are jumbled together in many discussions of the ‘hiatus’: the first is whether there is any evidence of a change in the long-term underlying trend, and the second is how to attribute short-term variations. That these are different is illustrated by the figure I made earlier this year:

As should be clear, there is no evidence of any significant change in trend post-1997. Nonetheless, if you just look at the 1998-2014 trend and ignore the error bars, it is lower – chiefly as a function of the pattern of ENSO/PDO variability. With the NOAA updates, the recent trend goes from 0.06±0.07 ºC/decade to 0.11±0.07 ºC/decade, becoming ‘significant’ at the 95% level. However, this is very much an example of where changes in (statistical) significance are not that (practically) significant. Using either record in the same analysis as shown in the last figure would give the same result – that there is no practical or statistical evidence that there has been a change in the underlying long-term trend (see Tamino’s post on this as well). The real conclusion is that this criterion for a ‘hiatus’ is simply not a robust measure of anything.
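Calling a trend ‘significant at the 95% level’ amounts to checking whether its confidence interval excludes zero. A minimal Python sketch of that check, assuming ordinary least squares with independent residuals (which understates the uncertainty for autocorrelated temperature data) and using an invented synthetic series rather than the NOAA record; the 1.96 multiplier is an assumption for a two-sided 95% interval:

```python
import math
import random

def ols_trend(years, values):
    """Ordinary least-squares slope and its standard error (units of `values` per year)."""
    n = len(years)
    if n < 3:
        raise ValueError("need at least 3 points")
    xm = sum(years) / n
    ym = sum(values) / n
    sxx = sum((x - xm) ** 2 for x in years)
    slope = sum((x - xm) * (y - ym) for x, y in zip(years, values)) / sxx
    intercept = ym - slope * xm
    resid = [y - (intercept + slope * x) for x, y in zip(years, values)]
    s2 = sum(r * r for r in resid) / (n - 2)  # residual variance
    return slope, math.sqrt(s2 / sxx)

def trend_per_decade(years, values, z=1.96):
    """Trend and half-width of an approximate 95% interval, both in degC/decade.
    Assumes independent residuals, which understates uncertainty for real temperature data."""
    slope, se = ols_trend(years, values)
    return 10 * slope, 10 * z * se

# Synthetic illustration only (not NOAA data): 0.17 degC/decade plus random noise.
random.seed(1)
years = list(range(1998, 2015))
anoms = [0.017 * (y - 1998) + random.gauss(0, 0.08) for y in years]
trend, half = trend_per_decade(years, anoms)
print(f"{trend:.2f} ± {half:.2f} degC/decade, interval excludes zero: {abs(trend) > half}")
```

Whether `abs(trend) > half` flips from False to True can hinge on a few hundredths of a degree, which is why crossing the threshold says little about whether the underlying trend has changed.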

Model-observation comparisons are not greatly affected by this update

I’ve been remiss in updating these comparisons (see 2012, 2011, and 2010), but this is a good opportunity to do so. First, I show how the CMIP3 model-data comparisons are faring. This is a clean continuation to what I’ve shown before:

It is clear that temperatures are well within the expected range, regardless of the NCDC/NCEI version. Note that the model range encompasses all of the simulated internal variability as well as an increasing spread over time which is a function of model structural uncertainty. These model simulations were performed in 2004 or so, using forcings that were extrapolated from 2000.

More recently (around 2011), a wider group of centers, with more and more up-to-date models, performed the CMIP5 simulations. The basic picture is similar over the 1950-to-present range, or looking more closely at the last 17 years:

The current temperatures are well within the model envelope. However, I and some colleagues recently looked closely at how well the CMIP5 simulation design has held up (Schmidt et al., 2014) and found that there have been two significant issues – the first is that volcanoes (and the cooling associated with their emissions) were underestimated post-2000 in these runs, and secondly, that solar forcing in recent years has been lower than was anticipated. While these are small effects, we estimated that had the CMIP5 simulations got this right, it would have had a noticeable effect on the ensemble. We illustrate that using the dashed lines post-1990. If this is valid (and I think it is), that places the observations well within the modified envelope, regardless of which product you favour.

The contrary-sphere really doesn’t like it when talking points are challenged

The harrumphing from the usual quarters has already started. The Cato Institute sent out a pre-rebuttal even before the paper was published, replete with a litany of poorly argued points and illogical non-sequiturs. From the more excitable elements, one can expect a chorus of claims that raw data is being inappropriately manipulated. The fact that the corrections for non-climatic effects reduce the trend will not be mentioned. Nor will there be any actual alternative analysis demonstrating that alternative methods for dealing with known and accepted biases give a substantially different answer (because they don’t).

The ‘hiatus’ is no more?

Part of the problem here is simply semantic. What do people even mean by a ‘hiatus’, ‘pause’ or ‘slowdown’? As discussed above, if by ‘hiatus’ or ‘pause’ people mean a change to the long-term trends, then the evidence for this has always been weak (see also this comment by Mike). If people use ‘slowdown’ to simply point to a short-term linear trend that is lower than the long-term trend, then this is still there in the early part of the last decade and is likely related to an interdecadal period (through at least 2012) of more La Niña-like conditions and stronger trade winds in the Pacific, with greater burial of heat beneath the ocean surface.

So while not as dead as the proverbial parrot, the search for dramatic explanations of some anomalous lack of warming is mostly over. As is common in science, anomalies (departures from expectations) are interesting and motivating. Once identified, they lead to a reexamination of all the elements. In this case, there has been an identification of a host of small issues (and, in truth, there are always small issues in any complex field) that have involved the fidelity of the observations (the spatial coverage, the corrections for known biases), the fidelity of the models (issues with the forcings, examinations of the variability in ocean vertical transports etc.), and the coherence of the model-data comparisons. Dealing with those varied but small issues has basically led to the evaporation of the original anomaly. This happens often in science – most anomalies don’t lead to a radical overhaul of the dominant paradigms. Thus I predict that while contrarians will continue to bleat about this topic, scientific effort on this will slow because what remains to be explained is basically now well within the bounds of what might be expected.

References

- T.R. Karl, A. Arguez, B. Huang, J.H. Lawrimore, J.R. McMahon, M.J. Menne, T.C. Peterson, R.S. Vose, and H. Zhang, "Possible artifacts of data biases in the recent global surface warming hiatus", Science, vol. 348, pp. 1469-1472, 2015. http://dx.doi.org/10.1126/science.aaa5632

- B. Huang, V.F. Banzon, E. Freeman, J. Lawrimore, W. Liu, T.C. Peterson, T.M. Smith, P.W. Thorne, S.D. Woodruff, and H. Zhang, "Extended Reconstructed Sea Surface Temperature Version 4 (ERSST.v4). Part I: Upgrades and Intercomparisons", Journal of Climate, vol. 28, pp. 911-930, 2015. http://dx.doi.org/10.1175/JCLI-D-14-00006.1

- D.W.J. Thompson, J.J. Kennedy, J.M. Wallace, and P.D. Jones, "A large discontinuity in the mid-twentieth century in observed global-mean surface temperature", Nature, vol. 453, pp. 646-649, 2008. http://dx.doi.org/10.1038/nature06982

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

This has probably come up before, but how did forcings develop relative to CMIP3 assumptions? If you put dashed lines on that comparison too, how might it look?

The first link in the post seems to be circular.

I read something about El Nino heat going to the Indian Ocean. Not that it would change the global temperature, but it would change what ENSO does. Since ENSO heat does not usually go into the Indian Ocean, does that preclude the El Nino from happening?

I learned long ago never to get too excited about noise, but:

“Nonetheless, if you just look at the 1998-2014 trend and ignore the error bars, it is lower – chiefly as a function of the pattern of ENSO/PDO variability.”

Can you summarize current state of the world regarding research on expected ENSO behavior in warming climate, i.e.:

a) About the same

b) Expect distribution of La Nina / El Nino / Neutrals to change

c) Insufficient data to be able to say much

Well summarized, and along with Tamino’s statistical post a good complement to the new Karl et al. paper.

The reference to the Monty Python dead parrot sketch may be even closer than intended, as Michael Palin’s pet store clerk, who knows perfectly well the parrot is dead, uses every dodge, rhetorical trick and outright lie to deny the obvious to an increasingly exasperated John Cleese.

As you note most of the difference in the new series comes from moving from ERSSTv3 to ERSSTv4. I seem to recall some speculation (at Skeptical Science I think) that GisTemp was also planning such a move. If so, do you have a rough target date for that change?

Surprisingly, Karl et al report almost no effect on the Arctic regional estimate in recent years despite enhanced coverage there. They attribute this at least in part to a more conservative interpolation technique (compared to kriging in other analyses). But there is also no extrapolation over sea ice as far as I can tell, so that omission could also lead to an underestimate of recent Arctic warming.

Finally, I note that statistical uncertainty has been estimated according to the IPCC AR5 method, which was in turn based on the autocorrelation adjustment method in Santer et al (2008) on which you (Gavin) were a co-author. But Karl et al and AR5 apply the method to annual rather than monthly series. I think that could be problematic, especially for short periods. Having said that, I do like the idea of combining statistical and structural uncertainty into one measure.
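The adjustment referred to here, as described in Santer et al. (2008), replaces the sample size n with an effective sample size n_eff = n(1 − r1)/(1 + r1), where r1 is the lag-1 autocorrelation of the regression residuals, and uses n_eff − 2 instead of n − 2 in the residual variance. A minimal Python sketch, assuming AR(1) noise; the r1 values in the example are invented to compare the two resolutions and are not estimates from any dataset:

```python
import math

def lag1_autocorr(resid):
    """Lag-1 autocorrelation coefficient r1 of a residual series."""
    m = sum(resid) / len(resid)
    d = [r - m for r in resid]
    return sum(a * b for a, b in zip(d[:-1], d[1:])) / sum(a * a for a in d)

def effective_n(n, r1):
    """Effective number of independent samples under an AR(1) noise assumption."""
    return n * (1 - r1) / (1 + r1)

def adjusted_se(se_ols, n, r1):
    """Inflate an OLS slope standard error by replacing n - 2 with n_eff - 2."""
    n_eff = effective_n(n, r1)
    if n_eff <= 2:
        raise ValueError(f"n_eff = {n_eff:.2f}: too few effective samples for a trend estimate")
    return se_ols * math.sqrt((n - 2) / (n_eff - 2))

# Hypothetical r1 values, chosen only to compare a 17-year window at two resolutions.
for label, n, r1 in [("annual", 17, 0.3), ("monthly", 204, 0.6)]:
    print(label, round(effective_n(n, r1), 1), round(adjusted_se(1.0, n, r1), 2))
```

With only 17 annual values, r1 itself is poorly constrained and n_eff can fall towards 2, where the formula breaks down; that is one way to see why applying the method to short annual series could be problematic.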

Tamino and others have persuaded me that “slowdown” is an inaccurate description. Firstly, there’s no significant change in trend (given ARMA(1,1) noise), and secondly it ignores knowledge about what the climatological temperature is at the beginning of the trend.

If you look at all of the data and want to get a “slowdown” for some period at the end, then you have to assume there’s some kind of jump in climatological temperature just before the beginning of your “slowdown” period. Let’s say you claim a “slowdown” in 1998: you can use the data before then to estimate what the climatological temperature is, e.g. by linear regression from 1970-1998. When you start your “slowdown” trend, its initial value should be close to this estimated climatological temperature, but to get the “slowdown” you have to ignore this information and allow some kind of magical jump in climatology during 1998.
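The continuity argument can be made concrete with a toy calculation: fit the earlier period, extrapolate it to the break year, and compare that with where a free fit over the later period starts. A minimal Python sketch, assuming a synthetic series with a perfectly constant underlying trend plus a single warm spike in the break year (standing in for a strong El Niño); the numbers are invented and are not any real record:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    xm, ym = sum(xs) / n, sum(ys) / n
    sxx = sum((x - xm) ** 2 for x in xs)
    slope = sum((x - xm) * (y - ym) for x, y in zip(xs, ys)) / sxx
    return slope, ym - slope * xm

def implied_jump(years, values, break_year):
    """Compare the level implied by the pre-break trend with the start of a free post-break fit."""
    early = [(x, y) for x, y in zip(years, values) if x < break_year]
    late = [(x, y) for x, y in zip(years, values) if x >= break_year]
    s0, b0 = fit_line(*zip(*early))
    s1, b1 = fit_line(*zip(*late))
    expected = b0 + s0 * break_year  # climatology extrapolated from the earlier data
    started = b1 + s1 * break_year   # where the free "slowdown" fit starts
    return started - expected, s0, s1

# Synthetic series: constant 0.017 C/yr trend, plus one +0.2 C spike in 1998.
years = list(range(1970, 2015))
values = [0.017 * (y - 1970) + (0.2 if y == 1998 else 0.0) for y in years]
jump, s_before, s_after = implied_jump(years, values, 1998)
print(f"before: {10 * s_before:.2f} C/decade, after: {10 * s_after:.2f} C/decade, jump: {jump:+.3f} C")
```

In this toy case the later trend comes out lower only because the free fit starts from the warm spike, and it does so by allowing a discontinuity in the climatological level at the break year.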

It seems that there has never been any solid statistical evidence of a slowdown, but there is some evidence that the observations have been running at the low end of model projections. Understanding why this has happened is fascinating and has led to some very interesting papers.

Here are the pre-“hiatus” and “hiatus” periods in the major temperature records as well as the new Karl et al dataset. Interestingly, Cowtan and Way have a higher trend over 1998-2014, and Berkeley Earth has the same trend as Karl et al.

Has the new series been posted somewhere on the NOAA website? I took a quick look for it but couldn’t find it.

> first link

Now:

http://www.sciencemag.org/content/early/2015/06/03/science.aaa5632.full

Longterm, not yet active as of this moment:

DOI: 10.1126/science.aaa5632

Will this change the way sea or land temperatures are recorded/reported going forward?

Ten years from now I can’t see it will make any difference since the trend is likely to be even clearer.

I agree with the last statement of your blog article Gavin as far as the global mean temperature is concerned. However, the regional impact of the tropical SST distribution associated with the “hiatus” has been significant. I think that scientific interest in this aspect of the ‘hiatus’ and in our ability to predict its onset and demise will remain for a while.

Jim White talked at AGU about abrupt climate change in the past, and he mentioned pause events in the trends. Thus, current slowing could have been interpreted to be such a characteristic.

However, with the new picture of the continued significant positive trend, the past appears to be a bad comparison for today’s anthro warming.

Thus, before abrupt change, there probably won’t be a pause, unless the force is reduced.

Hi

This is off topic, but well worth a listen given the current assault on science.

http://www.cbc.ca/radio/ideas/science-under-siege-part-1-1.3091552

Thanks to all of you for your dedication to truth and knowledge.

Hi Gavin – It would be interesting to know what implications the updated data would have for estimates of ECS and TCR, if any.

What do you say to this from the Cato statement? “The treatment of the buoy sea-surface temperature (SST) data was guaranteed to put a warming trend in recent data. They were adjusted upwards 0.12°C to make them “homogeneous” with the longer-running temperature records taken from engine intake channels in marine vessels. As has been acknowledged by numerous scientists, the engine intake data are clearly contaminated by heat conduction from the structure, and they were never intended for scientific use.”

The relevant passage from Karl et al seems to be: “In essence, the bias correction involved calculating the average difference between collocated buoy and ship SSTs. The average difference globally was −0.12°C, a correction which is applied to the buoy SSTs at every grid cell in ERSST version 4. [Notably, IPCC (1) used a global analysis from the UK Met Office that found the same average ship-buoy difference globally, although the corrections in that analysis were constrained by differences observed within each ocean basin (18).] More generally, buoy data have been proven to be more accurate and reliable than ship data, with better known instrument characteristics and automated sampling (16). Therefore, ERSST version 4 also considers this smaller buoy uncertainty in the reconstruction (13).”

They seem to be *reducing* bias, but I don’t know enough to understand what they did. Why would they apply a correction to buoy data equal to the difference between buoy and ship measurements? I don’t understand the context here or why a bias correction would work this way.

Curry suggests that the ERSST data is likely to be less careful or of lower quality than the already available HadSST3 data. It would be helpful to know what you think about that as well. It’s not clear to me how a new dataset or new adjustments are marked as an advance or improvement upon existing sets (other than adding 2014 or something.)

So what to make of the ~20 years of zero trend in both the RSS and UAH data for the lower troposphere?

Very informative article – thanks!

I have a technical question about your plot comparisons. How do you renormalise the absolute values of the 3 temperature anomaly series NCDC, GISS and Hadcrut4 when each uses a different seasonal normalisation period. GISS uses 1951-1980. H4 uses 1961 – 1990 and NCDC uses the 20th century. I believe BEST adopt a station dependent normalisation rather than a fixed time period which makes comparison even more tricky.

[Response: Each anomaly line is just baselined to a common period. This is different from (and easier than) re-doing the analyses using a different normalisation period or method. – gavin]
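Baselining to a common period just means subtracting each series' own mean over that period, so series quoted relative to different reference periods (1951-1980 for GISS, 1961-1990 for HadCRUT4, the 20th century for NCDC) end up on the same zero. A minimal Python sketch; the 1981-2010 period and the two series below are hypothetical choices for illustration, not the ones used in the figures:

```python
def rebaseline(series, start, end):
    """Shift an anomaly series (dict: year -> degC) so its mean over start..end is zero."""
    ref = [v for y, v in series.items() if start <= y <= end]
    if not ref:
        raise ValueError("series has no values in the baseline period")
    offset = sum(ref) / len(ref)
    return {y: v - offset for y, v in series.items()}

# Hypothetical series: identical shapes, but anomalies relative to different periods.
a = {y: 0.01 * (y - 1950) for y in range(1950, 2015)}
b = {y: v - 0.3 for y, v in a.items()}  # same data, offset by a different reference period
a2, b2 = rebaseline(a, 1981, 2010), rebaseline(b, 1981, 2010)
print(max(abs(a2[y] - b2[y]) for y in a2))
```

Only a constant offset changes, so trends and year-to-year differences are untouched; re-doing the underlying analysis with a different normalisation method is a different and harder job.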

Can someone clarify the difference between the GISTEMP L-OTI figures used for the graph titled ‘No significant change in trend from 1998’ (which look like the ones here, with 2014 higher than 2010: http://data.giss.nasa.gov/gistemp/tabledata_v3/GLB.Ts+dSST.txt ), and the GISTEMP figures in the graphical comparisons with CMIP3 and CMIP5, in which 2010 is the highest figure?

[Response: Possibly the colour bar is misleading, but that is the Cowtan and Way record. GISTEMP is kinda buried under the NCDC lines. – gavin]

“The ‘hiatus’ is so fragile that even those small changes make it disappear”.

How is that statement different from the “denier” viewpoint that any warming over the past two decades is so fragile that just small changes make it disappear? This looks like an admission of Curry’s uncertainty argument to me.

[Response: No – the long term warming trend is a much stronger signal relative to the non-anthropogenic baseline. – gavin]

Shouldn’t those who profess to know spend more time on the implications and the need for action? There seems to be a lot of ‘fiddling while Rome burns’ now, which allows the ‘skeptic/loonies’ to promote the ‘no need to worry’ angle.

I was gratified to see that at least one Major Meejuh outlet, the New York Times, gets it. Author Justin Gillis has unequivocally abandoned false balance [my italics]:

Gillis knows a climate-change denialist when he sees one, quoting “The Cato Institute, a libertarian think tank”:

In response to post 16 (Appell)

Some kind scientist here on RealClimate clued me in on some of the differences between the satellite data and the ground-based series. Maybe I should try to pass what I think I learned.

The RSS and UAH series attempt to infer temperatures from a thick slice of the atmosphere centering around 14,000 feet above sea level. They may differ from the ground readings either because temperature trends at that height really do differ from ground trends (i.e., both series are right, but measure different things) or due to purely technical issues (i.e., one or both are wrong).

Temperature trend at circa 14,000 feet can differ from the trend at ground level. Most importantly for the “last 20 years” issue, El Nino has a stronger effect there. The term of art is “lower tropospheric amplification”. So, compared to ground-based series, the satellite series substantially exaggerate the effect of El Nino, with some significant lag. This is why 1998 (“El Nino of the Century”) is a huge outlier in the satellite series.

Here is a link to two of Tamino’s analyses of the satellite and ground series, quantifying the degree of exaggeration of the El Nino in the satellite data, as well as greater sensitivity to volcanic activity.

https://tamino.wordpress.com/2010/12/16/comparing-temperature-data-sets/

https://tamino.wordpress.com/2011/01/06/sharper-focus/

There are numerous other systematic issues related to real differences between temperatures in the upper atmosphere and temperatures on the ground. A readable summary of those is given here:

http://www.climate-lab-book.ac.uk/2014/temperature-lower-troposphere/

Separately, there are purely technical issues related to interpreting readings from aging instruments on satellites. E.g., for years, Christy and Spencer famously claimed no warming in the UAH data, based on what turned out to be an algebra error in their corrections for satellite drift. A discussion that goes deeply into the technical weeds can be found here:

https://www.realclimate.org/index.php/archives/2005/08/et-tu-lt/

The upshot is that people who fixate on the satellite series and a circa 1998 start point are centering their entire argument around a known flaw in the satellite data. (A flaw in so far as one uses it as a proxy for ground temperature trends — which they invariably do.) Either they don’t know that there’s this well-documented issue, or, more likely, they just ignore it.

And so that’s why the real scientists here likely won’t take the time to address your post. If you know even the least little bit about the satellite data — which is how I characterize myself — the lack of concordance with the ground series, for short periods of time, starting in 1998, is a non-issue.

You speak of heat going into the oceans, but didn’t the last IPCC report show model projections of ocean heat content vs observations, and there was no extra heat in the oceans?

[Response: Not sure what you are referring to, but the increasing heat in the oceans is abundantly clear: https://www.nodc.noaa.gov/OC5/3M_HEAT_CONTENT/ (fig 2 especially). – gavin]

As an observation can I make a plea to anyone making model/data comparisons that the bar separating hindcast from forecast always be included on these plots? I’m sure it’s present on other graphs but this is the first time I’ve noticed it. It’s a very important piece of information when talking to people about these displays.

David Appell – Land Ahoy!

My brain exploded halfway down Curry’s post, where the GWPF, of all people, criticize Karl et al. for taking 1998 as a starting year. Fodder for a follow-up Lewandowsky paper – “Recursive Seepage”, anyone?

I’m really struggling with this study as it essentially implies that the data from all the other various temperature datasets is not correct. How can that be?

[Response: It might be less of a struggle if you actually read the second paragraph. – gavin]

Joe Duarte- There’s no implication for climate sensitivity here.

> David Appell says:

> So what to make of the ~20 years of zero trend …?

http://www.remss.com/research/climate

Gavin,

You say that volcanoes (and the cooling associated with their emissions) were underestimated post-2000 (your paper says the cooling of the Pinatubo eruption was overestimated).

Did you study this effect before 1991?

Joe Duarte:

They could add 0.12 C to the buoy data or subtract 0.12 C from the ship data. It doesn’t change the final result. Let’s go to an imaginary planet where we pretend that ships always measure temperatures that are 0.3 C warmer than reality, and buoys only measure 0.1 C warmer than reality. Let’s pretend that temperatures are 20 C and they stay the same over a decade during which we measure.

The ships always record 20.3 C and the buoys always record 20.1 C. We get rid of the constant bias in each type by looking at changes: both of them report the true answer of 0 C change (20.3 – 20.3 =0 C for the ships and 20.1 – 20.1 = 0 C for the buoys).

But what if we switched from using the ship measurements to using the buoys sometime in the middle? We would go from the ship record of 20.3 C at the beginning to the buoy record of 20.1 C at the end, inventing a non-existent cooling of 0.2 C.

We need to use the difference in measurements between the ships & buoys to correct. We could either add 0.2 C to all of the buoy measurements and do:

20.3 – (20.1 + 0.2) = 0 C

Or take 0.2 C from the ships and do:

(20.3 – 0.2) – 20.1 = 0 C

Either way, the result is the same.
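The imaginary planet can be run as a few lines of Python. A minimal sketch using the hypothetical biases from this comment (ships +0.3 C, buoys +0.1 C, true temperature 20 C) and assuming, for illustration, that the record switches from ships to buoys halfway through the decade:

```python
TRUE_T = 20.0     # true temperature on the imaginary planet, C
SHIP_BIAS = 0.3   # hypothetical warm bias of ship measurements, C
BUOY_BIAS = 0.1   # hypothetical warm bias of buoy measurements, C
SWITCH = 5        # hypothetical year index when the record switches from ships to buoys

years = range(10)
record = [TRUE_T + (SHIP_BIAS if y < SWITCH else BUOY_BIAS) for y in years]
naive_change = record[-1] - record[0]  # spurious cooling of about 0.2 C

offset = SHIP_BIAS - BUOY_BIAS  # known exactly here; estimated from collocated pairs in practice
buoys_raised = [v + offset if y >= SWITCH else v for y, v in zip(years, record)]
ships_lowered = [v - offset if y < SWITCH else v for y, v in zip(years, record)]

print(f"naive change: {naive_change:+.2f} C")
print(f"buoys raised: {buoys_raised[-1] - buoys_raised[0]:+.2f} C")
print(f"ships lowered: {ships_lowered[-1] - ships_lowered[0]:+.2f} C")
```

Both corrected versions give the same zero change, which is why adjusting the buoys up rather than the ships down does not alter the calculated trend.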

How can we get the bias differences? There are various ways, but in this case the group looked at all the cases where ship and buoy measurements were next to each other. They should both be measuring the same real T, but they will each report different measurements because of the instrument biases. In the example above, the ship will measure 20.3 C and the buoy 20.1 C so we know in this case the difference is 0.2 C. In reality you take lots of measurements to remove random instrumental error etc. Ultimately, you end up with the required adjustment size, and you should make this adjustment because it brings you closer to reality.
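The collocation step can be sketched the same way: simulate many pairs in which a ship and a buoy sample the same true temperature, each with its own bias and random error, then average the differences. The 0.12 C offset echoes the figure quoted from Karl et al. above, but the noise levels (0.5 C for ships, 0.2 C for buoys), the temperature range and the number of pairs are assumptions chosen purely for illustration:

```python
import random
import statistics

random.seed(42)
TRUE_OFFSET = 0.12  # hypothetical ship-minus-buoy bias, C

diffs = []
for _ in range(5000):
    t = random.uniform(0.0, 30.0)                  # true local SST, C
    ship = t + TRUE_OFFSET + random.gauss(0, 0.5)  # biased, noisier ship reading
    buoy = t + random.gauss(0, 0.2)                # buoy reading with smaller random error
    diffs.append(ship - buoy)

estimate = statistics.mean(diffs)
std_err = statistics.stdev(diffs) / len(diffs) ** 0.5
print(f"estimated offset: {estimate:.3f} ± {std_err:.3f} C")
```

The random errors average out as the number of pairs grows, leaving the systematic offset that is then applied as the correction.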

The consistency of the differences between buoys & ships (0.12 C observed) shows that the ship records appear to be quite stable, and our temperature change estimates are good so long as we use this correction. For the calculated global warming it doesn’t matter if you apply the correction to the ships or to the buoys, and the fact that ship intakes are warmer than the environment is irrelevant because of the bias correction and conversion to anomalies. Cato forgot to mention this.

Armando,

The 1988 GISS climate model included volcanic forcing as well as a prediction of what would happen in the future if a large tropical volcano went boom.

“I’m really struggling with this study as it essentially implies that the data from all the other various temperature datasets is not correct. How can that be?”

I think you may be confusing “correct” with “accurate”. All datasets are measurements. All measurements have inaccuracies.

Consider, for instance, eggs. There are 12 (count them) in a dozen; there aren’t 12 ± 0.5. According to USDA standards, a dozen large eggs weighs (measure them) a minimum of 24 ounces; the next grade minimum is 27 oz, so the scientific description would be 24 +3/−0 oz. That is a lot specification; an individual egg in a dozen large eggs can weigh as little as 23/12 of an ounce, but only 5% of the eggs per case can be lower weight. (A case is 30 dozen, so that’s 18 eggs.)

The “…uneven spatial distribution, many missing data points, and a large number of non-climatic biases varying in time and space” all contribute inaccuracies to the global temperature record – as do errors in orbital decay corrections, limb-corrections, diurnal corrections, and hot-target corrections, all of which rely on measurements (+- inherent errors), in the satellite temperature records.

In addition, since the global surface temperature records are a measure that responds to albedo changes (volcanic aerosols, cloud cover, land use, snow and ice cover), solar output, and differences in partition of various forcings into the oceans/atmosphere/land/cryosphere, teasing out just the effect of CO2 + water vapor over the short term is difficult to impossible. See https://tamino.wordpress.com/2014/12/04/a-pause-or-not-a-pause-that-is-the-question/

Eli Rabett,

That clears things up.

So all eruptions before 1990 comparable with the post-Pinatubo eruptions (see Gavin’s 2014 paper) were already taken into account.

The thing that looks fishy is that the adjustments all tend to make the past colder, the present warmer, and increase the trend. That is not necessarily wrong. But when you see that the adjustments are underweighting ARGO buoys for [heated] ship intake, extending Arctic land temps over the Arctic ocean…

Dr Peter Stott, commenting on Gavin’s study in the Guardian, http://www.theguardian.com/environment/2015/jun/04/global-warming-hasnt-paused-study-finds says the term slowdown is valid because the past 15 years might have been still hotter were it not for natural variations like deep ocean heat uptake.

I have to agree with Mike – 20; the need is for action based on the lessons learned from Nature’s response to planetary heating. i.e. converting some to mechanical energy in the form of storms and moving more to the planetary heat sinks of the poles and deeper water.

Moving the heat to the poles is clearly detrimental. Moving it to the depths is an opportunity to replace all fossil fuels with heat pipe ocean thermal energy conversion. http://climatecolab.org/web/guest/plans/-/plans/contestId/1301414/planId/1315102.

Armando,

To an extent, see Brian Dodge above. One of the most interesting mysteries is what appear to be large volcanic eruptions in the proxy records pre-1500, for which everybunny and their brother search for the volcano. It is an annual sport at AGU.

Just found this

Eli,

I don’t want to go too off-topic, but in recent years, people have started to call the “big” 1258 eruption by name (Samalas).

Forcing is complicated though because you need to know things like aerosol size distribution. Even for Pinatubo there are some issues with saturation of the SAGE satellite sensor, and the historic eruptions before that have even more uncertainty. I think there’s good evidence though that these “bigger than Pinatubo” eruptions are probably a bit smaller than what is being forced in the current generation of last millennium simulations. Usually it’s just a prescribed stratospheric AOD based on taking your favorite ice core reconstruction (VEI doesn’t necessarily correlate with climate impact) and trying to get information from the sulfate horizon while relying a lot on what happened with Pinatubo. Some groups do even worse and just treat the eruption as a global TOA forcing, like an equivalent reduction in total solar irradiance.

There’s some ongoing effort to do better, for example doing better interactive chemistry and aerosol evolution, but you need a well-resolved stratosphere. There’s a “volMIP” project underway to look at some of this stuff. But there’s still wiggle room for re-assessing what volcanic eruptions are actually doing to the climate, even the “well observed” ones, with the ‘hiatus period’ just being one extension of where this uncertainty can creep in.

The first graph, titled “various temperature measurements” has lines for rss and uah data which look nothing at all like those published from uah or rss. It’s not just due to scaling. Please explain.

[Response: Satellites only start in 1979. And if you plot the full time-series for MSU-TLT, they line up quite closely with surface indices. The other difference is that this is showing the annual mean. The monthly data is noisier, and it is easy to hide the actual trends by showing shorter time series. – gavin]

There has “always been a ‘pause’ ” in the various series if you cherrypick start points and do not correct for the bias you introduce in so doing.

On the other hand as many have pointed out, Tamino among the most clearly, using correct reasoning would not lead you to a reliably identified ‘pause’.

The ‘pause’ is really a variant of the Gambler’s Fallacy which proposes that short runs in a noisy system mean something. It leads the statistically unaware to reliably lose their shirts rather than anything more valuable.
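The cherry-picking point can be checked with a small simulation: generate a series whose underlying trend never changes, then compute the trend over every 15-year window. A minimal Python sketch, assuming AR(1) noise with made-up parameters (persistence 0.5, innovation standard deviation 0.1 C) rather than anything fitted to observations:

```python
import random

def slope(xs, ys):
    """Ordinary least-squares slope."""
    n = len(xs)
    xm, ym = sum(xs) / n, sum(ys) / n
    return sum((x - xm) * (y - ym) for x, y in zip(xs, ys)) / sum((x - xm) ** 2 for x in xs)

def window_trends(years, values, length):
    """OLS slope for every window of `length` consecutive points, keyed by start year."""
    return {years[i]: slope(years[i:i + length], values[i:i + length])
            for i in range(len(years) - length + 1)}

# Synthetic: constant 0.017 C/yr trend plus AR(1) noise (parameters are assumptions).
random.seed(7)
years = list(range(1970, 2015))
noise, e = [], 0.0
for _ in years:
    e = 0.5 * e + random.gauss(0, 0.1)
    noise.append(e)
values = [0.017 * (y - 1970) + eps for y, eps in zip(years, noise)]
trends = window_trends(years, values, 15)
lowest_start = min(trends, key=trends.get)
print(f"15-yr trends span {10 * min(trends.values()):.2f} to {10 * max(trends.values()):.2f} C/decade;"
      f" the lowest starts in {lowest_start}, though the true trend never changes")
```

Picking the lowest of these windows after the fact and calling it a ‘pause’ is exactly the selection bias described above.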

Okay, I’ve managed to track down the data used for that graph and plugged it into Excel to see for myself. It confirmed what Gavin says above. I’m a skeptic, but you’ve convinced me that the “pause” is insignificant over longer timescales.

Two problems I still have:

1. Non-satellite estimates from before 1979, too subjective. Estimated products might be biased, even unintentionally so. My experience as an estimator/contractor is that people almost always allow biases to creep into their work. It’s human nature. Since we are working with very small numbers, even the slightest bias could mean the difference between catastrophic AGW and “meh”.

2. CO2 correlation with T from ice core data over long periods. CO2 lags T. I’ve heard the argument that both CO2 drives T and T drives CO2. Not convincing enough. How does this explain trend reversals? Something must be affecting T that is overriding CO2.

(Sorry for off-topic stuff. Just wanted to put my thoughts out there.)

The sum total of the improvements discussed in the Science paper are actually small.

What made the paper worth publishing in Science Magazine? The word “hiatus” in the title?

Chris Colose, thanks. What about “observationally-based” estimates of ECS and TCR? I assume they plug in their choice of several datasets. Is ERSST not the kind of dataset that would be used by someone like Nic Lewis? In his latest paper in Climate Dynamics, he seems to draw on HadCRUT4v2. Are ERSST and HadCRUT4 apples and oranges? (https://niclewis.files.wordpress.com/2015/05/implications-of-recent-multimodel-attribution-studies_lewis_accepted_reformatted_climate-dynamics_2015.pdf)

MarkR, thanks. Your outline seems to assume that we know the true accuracy of the different methods a priori, or maybe that we have some third reference measure (satellites?). I guess true accuracy might not matter as much if a measure is reliable and we’re mostly interested in change over some interval.

That’s fine if we have one reliably inaccurate measure, or two reliably inaccurate measures A and B, where B replaces A at time T. This case looks more complicated for a few reasons. First, I’m not sure why they would just take the global average difference between ships and buoys and apply a universal adjustment. I would expect finer resolution in the adjustments than that, and I can imagine different outcomes depending on the texture and pattern of ship-buoy differences: if conditions such as temperature, season, humidity, etc. have a systematic influence on the ship-buoy (S-B) difference, that could skew the mean difference in a way that affects the results. I assume some of this has already been worked out.

Second, the difference between ships and buoys is based on instances where they are collocated. Yet it sounds like they applied the adjustment to *all* buoys (“every grid cell”). Is that correct? This is fine if there’s no systematic difference between the locations where they had collocated ship and buoy measurements (L1) and all buoy locations (L2). I assume L2 is a superset of L1, which creates the potential for a confound.
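The L1/L2 concern can be made concrete with a toy example. The sketch below assumes, purely hypothetically, two ocean regimes with different true ship-minus-buoy offsets, and collocated pairs that over-sample one regime relative to the full buoy network. It compares a single pooled offset with per-regime offsets reweighted to the buoy population. The regime names, offsets, sampling shares and seed are all made up; this is not how ERSST v4 computes its adjustment, only an illustration of when a pooled offset would or would not be adequate.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical true ship-minus-buoy offsets (degC) in two made-up regimes.
true_offset = {"tropics": 0.08, "extratropics": 0.16}

# Collocated pairs (L1): over-sample the tropics in this toy example.
n_pairs = 20000
regime = rng.choice(["tropics", "extratropics"], size=n_pairs, p=[0.8, 0.2])
ship_minus_buoy = (
    np.where(regime == "tropics", true_offset["tropics"], true_offset["extratropics"])
    + rng.normal(0.0, 0.3, n_pairs)  # measurement noise on each pair
)

# All buoy locations (L2): assumed split evenly between the regimes.
buoy_share = {"tropics": 0.5, "extratropics": 0.5}

# Option 1: one pooled offset from every collocated pair.
pooled = ship_minus_buoy.mean()

# Option 2: per-regime offsets, reweighted to the buoy population.
per_regime = {r: ship_minus_buoy[regime == r].mean() for r in true_offset}
reweighted = sum(per_regime[r] * buoy_share[r] for r in true_offset)

# The average offset a perfect correction would apply across L2.
target = sum(true_offset[r] * buoy_share[r] for r in true_offset)

print(f"pooled offset:     {pooled:.3f} degC")
print(f"reweighted offset: {reweighted:.3f} degC (target {target:.3f})")
```

If the per-regime offsets turn out to be indistinguishable, the pooled and reweighted estimates coincide and a single global correction loses nothing.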

Of course, if there are problems with their method, resolving them could show more warming, not less. It could go either way.

I’m also curious to know more about the difference in quality between HadSST3 and ERSST, and what would happen if the former is used instead, since Curry seemed to think this was an issue.

I don’t understand where Gavin’s L-OTI graph comes from. It’s not an option on Kevin Cowtan’s cool trend tool: http://www.ysbl.york.ac.uk/~cowtan/applets/trend/trend.html

There seems to be a slowdown in most of the datasets there. If the idea is that the slowdown spans too short a period, a Gambler’s Fallacy type of thing, then the requisite period should be defined up front. Twenty years? 25? 30? Whatever it is, it should be defined up front based on valid constraints so that people can stop arguing in circles over duration. Well, they won’t stop, but the issue could be clarified. It might not be possible or meaningful to pin down an exact duration at which a slowdown becomes relevant, but a range or ballpark would do. There are probably at least two duration-related disputes about the slowdown: whether it (assuming “it” is real) was predicted by models (and whether the models should be held culpable for not predicting it, whether our assessment of their quality should change, etc.); and whether the trend is “significant”. Maybe these are the same issue, but they sound different.

Scratch “humidity” from the hypothetical list of conditions that could influence ship-buoy differences…

@43 Three points re. “requisite period”.

1. Even a cursory examination of the records shows that “pauses” of 15 years, plus or minus, have occurred repeatedly. That is, such episodes are neither rare in the record nor have they shown that “warming has stopped”.

2. Many articles have attempted to quantify the requisite period. All of those I am aware of place it well beyond 15 years.

3. “Requisite period” is modified by cherrypicking. That is, it is a totally different matter to look at a distribution for runs of length n showing a certain characteristic–where n is “improbably” long–versus examining a randomly picked section of that distribution and observing an “improbably” long run.

The simplest way to see this is to compare the probability of getting 8 heads in a single, pre-chosen set of 8 throws with the probability of seeing a run of 8 heads somewhere in a long series of random throws. The second is much more probable, and it must be controlled for by making the alpha level far more conservative. This is why cherrypicking, without controlling for the alpha, leads to wrong conclusions.
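The coin-toss comparison can be computed exactly. This sketch (the function names are illustrative) gives the probability of 8 heads in one pre-chosen block of 8 fair tosses, (1/2)^8 = 1/256, and uses a small dynamic program over the length of the current run of heads to get the probability of at least one run of 8 or more somewhere in n tosses. The 1000-toss case stands in for scanning a long record for its most striking stretch.

```python
def p_specific_run(k: int) -> float:
    """P(k heads in one pre-chosen block of k fair tosses)."""
    return 0.5 ** k


def p_run_somewhere(n: int, k: int) -> float:
    """P(at least one run of >= k heads somewhere in n fair tosses)."""
    # state[j]: probability that no run of k has occurred yet and the
    # sequence currently ends in exactly j consecutive heads (0 <= j < k).
    state = [1.0] + [0.0] * (k - 1)
    for _ in range(n):
        new = [0.0] * k
        for j, p in enumerate(state):
            new[0] += 0.5 * p              # tails resets the run
            if j + 1 < k:
                new[j + 1] += 0.5 * p      # heads extends the run
            # if j + 1 == k, heads completes a run of k: that mass leaves `state`
        state = new
    return 1.0 - sum(state)


print(f"8 heads in one chosen block of 8:        {p_specific_run(8):.5f}")
print(f"run of 8 heads somewhere in 1000 tosses: {p_run_somewhere(1000, 8):.3f}")
```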

That’s @42. Sorry.

Ken @42. Good that you are taking a critical scientific attitude to things. One thing scientists try to do is corroborate findings by one method (here historical temperature methods), by other independent methods. We have many. To name just a few: glacier advances/retreats, dates of ice formation and melting, changes in vegetation and dates of flowering. All these corroborate the observations of temperature trends.

The theory of ice-age changes has several parts. The overall timing seems to be driven by changes in the Earth’s orbit, which redistribute solar energy. This directly drives some changes in global and regional temperatures, which affect the size of ice sheets, but the expected size of this effect isn’t nearly large enough to explain the full range of glacial-interglacial temperature change. The changes in ice volume and climate alter the planet’s albedo (how much sunlight is reflected) and affect carbon storage. These feedbacks are relatively slow, typically hundreds of years, but they drive the overall sensitivity of the planet to the relatively small variations in solar input.

Ken:

“Two problems I still have:

1. Non-satellite estimates from before 1979, too subjective. Estimated products might be biased, even unintentionally so. My experience as an estimator/contractor is that people almost always allow biases to creep into their work. It’s human nature. Since we are working with very small numbers, even the slightest bias could mean the difference between catastrophic AGW and “meh”.”

Imagine a homeowner gets three independent estimates, generated using different methodology, and they all agree very closely.

That would increase confidence in their accuracy.

Also, your claim that non-satellite estimates before 1979 are “too subjective” simply tells the world that you haven’t studied how global temperature reconstructions are made, or that there are multiple efforts using different methodologies that are independent of each other. I suggest you compare GISTemp’s methodology with the BEST project’s methodology before you claim “subjectivity”. Or at least take the time to point out just where the “subjectivity” lies. Just making unwarranted claims isn’t very convincing.

“I’ve heard the argument that both CO2 drives T and T drives CO2. Not convincing enough. How does this explain trend reversals? Something must be affecting T that is overriding CO2.”

Well, yes, something must be. Physicists have never claimed that CO2 is the only factor that drives changes in climate. There’s nothing wrong with “CO2 drives T and T drives CO2” other than its being incomplete. As far as other things that affect T go, there’s this big yellow hot thing in the sky above you, for one.

Again, your criticism appears to be uninformed. Perhaps you need to dive a bit more deeply into the science before dismissing it? A statement like “Something must be affecting T that is overriding CO2” makes it seem that you believe scientists have never thought of something so blindingly obvious and have no explanation for it, both of which are false.

I’m sorry, but your “skepticism” seems very close to “I don’t really understand the science, but I have certain uninformed beliefs about it, therefore I’m skeptical”.

Skeptical Science has a long series of articles answering common denialist complaints about climate science, complete with lots of references. That would be a good place to start.

Your follow-up to Gavin’s reply to your first post makes it clear that you’re open to learning … so do so!

Joe Duarte – Absolute temperatures are not the point; what matters is relative temperature and change. These are measured as anomalies from previous measurements made with the same instruments or, as with ship intakes and buoys, as anomalies from cross-calibrated instruments.
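A minimal sketch of why absolute offsets drop out: two hypothetical stations (a warm valley site and a cold mountain site, with made-up values) share the same underlying change but differ by 13 °C in absolute temperature. Subtracting each station’s own mean over a baseline period (1951-1980 here, an arbitrary choice for the example) leaves identical anomaly series.

```python
import numpy as np

# Hypothetical stations: same underlying change, very different absolute levels.
years = np.arange(1951, 2011)
signal = 0.01 * (years - years[0])   # shared warming, degC (made up)
valley = 15.0 + signal               # absolute temperature, degC
mountain = 2.0 + signal


def anomaly(series, years, base_start=1951, base_end=1980):
    """Subtract the mean over an (illustrative) baseline period from each value."""
    base = (years >= base_start) & (years <= base_end)
    return series - series[base].mean()


print(np.allclose(anomaly(valley, years), anomaly(mountain, years)))  # True
```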

And if, as in this case, the offsets between co-located instrument systems are shown to be consistent (0.12°C), a global correction is the only reasonable approach.
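To see why such a correction matters for trends and not just for absolute values, here is a toy calculation. It assumes, for illustration only, that buoys read 0.12°C cooler than ships at the same place and time (the sign is an assumption of this sketch), that the buoy share of observations rises linearly from 0 to 70% over 1995-2014, and that the true SST trend is 0.1°C per decade; none of these shares or trends are taken from ERSST v4.

```python
import numpy as np

# Illustrative assumptions: ships unbiased, buoys 0.12 degC cool,
# buoy share rising linearly from 0 to 70 %, true trend 0.1 degC/decade.
years = np.arange(1995, 2015)
true_sst = 0.01 * (years - years[0])           # degC
buoy_share = np.linspace(0.0, 0.7, years.size)
offset = 0.12                                  # ship minus buoy, degC

# Blended observation: weighted mix of ship and (cool) buoy readings.
raw_blend = true_sst - buoy_share * offset

# Global correction: add the offset back to every buoy reading.
corrected_blend = raw_blend + buoy_share * offset

raw_trend = np.polyfit(years, raw_blend, 1)[0] * 10        # degC/decade
corrected_trend = np.polyfit(years, corrected_blend, 1)[0] * 10
print(f"uncorrected trend: {raw_trend:.3f} degC/decade")
print(f"corrected trend:   {corrected_trend:.3f} degC/decade")
```

Because the blend is exactly linear in this setup, the result can be checked by hand: the growing share of cooler buoy readings removes 0.12 × 0.7 / 19 ≈ 0.0044°C per year (about 0.044°C per decade) from the trend, and adding the offset back to every buoy reading restores it.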

How much data, and how long a period, do you need to identify trend changes? That depends on the data, so you need to look at the variance of the particular record (a side note: satellite mid-tropospheric records have higher variance than surface records, and inherently require more data to make that determination), at autocorrelation, and at the scale of the trend changes, all of which determine whether current trends are significantly different from past ones. It’s not a fixed number, although as an example Santer et al 2011 demonstrates that for the RSS record at least 17 years are required in the absence of forcing changes and with correction for ENSO effects. Forcing changes or uncorrected ENSO variability would, needless to say, extend the data requirements significantly. The ‘hiatus’ claims of major trend changes are just not supportable in the presence of climate variability.
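One common way to fold autocorrelation into a trend’s uncertainty is to shrink the sample size to an effective value, n_eff = n(1 − r1)/(1 + r1), where r1 is the lag-1 autocorrelation of the regression residuals. The sketch below applies that approximation to synthetic monthly data (a linear trend plus AR(1) noise; the 0.6 coefficient, noise level, trend and seed are arbitrary assumptions) and compares a 17-year with a 35-year record. It illustrates why required record lengths depend on variance and autocorrelation; it is not the method of Santer et al 2011 or of any other specific study.

```python
import numpy as np


def ar1_noise(n, phi, sd, rng):
    """AR(1) noise: e[i] = phi * e[i-1] + white noise with std `sd`."""
    e = np.empty(n)
    e[0] = rng.normal(0.0, sd)
    for i in range(1, n):
        e[i] = phi * e[i - 1] + rng.normal(0.0, sd)
    return e


def trend_stats(y, dt=1.0):
    """OLS slope with naive and AR(1)-adjusted (effective-n) standard errors."""
    y = np.asarray(y, dtype=float)
    n = y.size
    t = np.arange(n) * dt
    slope, intercept = np.polyfit(t, y, 1)
    resid = y - (slope * t + intercept)
    sxx = np.sum((t - t.mean()) ** 2)
    ssr = np.sum(resid ** 2)
    r1 = np.corrcoef(resid[:-1], resid[1:])[0, 1]   # lag-1 autocorrelation
    n_eff = n * (1.0 - r1) / (1.0 + r1)
    naive_se = np.sqrt(ssr / (n - 2) / sxx)
    adj_se = np.sqrt(ssr / (n_eff - 2) / sxx) if n_eff > 2 else np.inf
    return {"slope": slope, "naive_se": naive_se, "adj_se": adj_se,
            "r1": r1, "n_eff": n_eff}


rng = np.random.default_rng(7)
trend_per_month = 0.015 / 12   # 0.15 degC/decade, illustrative
results = {}
for n_years in (17, 35):
    n = n_years * 12
    y = trend_per_month * np.arange(n) + ar1_noise(n, 0.6, 0.1, rng)
    results[n_years] = trend_stats(y, dt=1.0 / 12)   # time axis in years

for n_years, s in results.items():
    print(f"{n_years} yr: slope {s['slope'] * 10:.3f} degC/decade, "
          f"naive SE {s['naive_se'] * 10:.3f}, "
          f"AR(1)-adjusted SE {s['adj_se'] * 10:.3f}, r1 {s['r1']:.2f}")
```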

There are other, very separate issues you’ve raised (in minimal detail) regarding models, but quite frankly GCMs are not, and never were, intended to project decadal-scale variability, and over the scales that those climate models cover there’s certainly no ‘hiatus’, especially when you look at how models perform given _actual_ recent forcings, including volcanic and solar changes, rather than those projected for the CMIP5 runs (Schmidt et al 2014). The models are actually quite good.