Last Friday, NASA GISS and NOAA NCDC had a press conference and jointly announced the end-of-year analysis for the 2014 global surface temperature anomaly which, in both analyses, came out top. As you may have noticed, this got much more press attention than their joint announcement in 2013 (which wasn’t a record year).

In press briefings and interviews I contributed to, I mostly focused on two issues – that 2014 was indeed the warmest year in those records (though by a small amount), and the continuing long-term trends in temperature which, since they are predominantly driven by increases in greenhouse gases, are going to continue and hence produce (on a fairly regular basis) continuing record years. Response to these points has been mainly straightforward, which is good (if sometimes a little surprising), but there have been some interesting issues raised as well…

Records are bigger stories than trends

This was a huge media story (even my parents noticed!). This is despite (or perhaps because of?) the fact that the headline statement had been heavily trailed since at least September and cannot have been much of a surprise. In November, WMO put out a preliminary analysis suggesting that 2014 would be a record year. Earlier this month, the Japanese Meteorological Agency (JMA) produced their analysis, also showing a record. Estimates based on independent emulations of the GISTEMP analysis also predicted that the record would be broken (Moyhu, ClearClimateCode).

This is also despite the fact that differences of a few hundredths of a degree are simply not that important to any key questions or issues that might be of some policy relevance. A record year doesn’t appreciably affect attribution of past trends, nor the projection of future ones. It doesn’t re-calibrate estimated impacts or affect assessments of regional vulnerabilities. Records are obviously more expected in the presence of an underlying trend, but whether they occur in 2005, 2010 and 2014, as opposed to 2003, 2007 and 2015 is pretty much irrelevant.

But collectively we do seem to have an apparent fondness for arbitrary thresholds (like New Year’s Eve, 10 year anniversaries, commemorative holidays etc.) before we take stock of something. It isn’t a particularly rational thing (what was the real importance of Usain Bolt’s breaking the record for the 100m by 0.02 seconds in 2008?), but people seem to be naturally more interested in the record holder than in the also-rans. Given then that 2014 was a record year, interest was inevitably going to be high. Along those lines, Andy Revkin has written a piece about records as ‘front page thoughts’ that is also worth reading.

El Niños, La Niñas, Pauses and Hiatuses

There is a strong correlation between annual mean temperatures (in the satellite tropospheric records and surface analyses) and the state of ENSO at the end of the previous year. Maximum correlations of the short-term interannual fluctuations are usually with prior year SON, OND or NDJ ENSO indices. For instance, 1998, 2005, and 2010 were all preceded by a declared El Niño event at the end of the previous year. The El Niño of 1997/8 was exceptionally strong and this undoubtedly influenced the stand-out temperatures in 1998. 2014 was unusual in that there was no event at the beginning of the year (though neither was there for the then-record years of 1997, 1990, 1981 or 1980).

So what would the trends look like if you adjust for the ENSO phase? Are separate datasets differently sensitive to ENSO? Given the importance of the ENSO phasing for the ‘pause’ (see Schmidt et al (2014)), this can help assess the underlying long-term trend and whether there is any evidence that it has changed in the recent decade or so.

For instance, the regression of the short-term variations in annual MSU TLT data to ENSO is 2.5 times larger than it is to GISTEMP. Since ENSO is the dominant mode of interannual variability, this variance relative to the expected trend due to long-term rises in greenhouse gases implies a lower signal to noise ratio in the satellite data. Interestingly, if you make a correction for ENSO phase, the UAH record would also have had 2014 as a record year (though barely). The impact on the RSS data is less. For GISTEMP, removing the impact of ENSO makes 2014 an even stronger record year relative to previous ones (0.07ºC above 2005, 2006 and 2013), supporting the notion that the underlying long-term trend has not changed appreciably over the last decade or so. (Tamino has a good post on this as well).
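As a rough illustration of what "correcting for ENSO phase" can mean, here is a minimal sketch on synthetic data, not the actual GISTEMP or MSU series. It assumes the simplest possible approach: remove a linear trend from both the temperature series and the prior-year ENSO index, regress one set of residuals on the other, and subtract the fitted ENSO contribution. The function names, the synthetic coefficients and the noise level are all invented for the example, and the adjustments discussed above may well have been done differently.

```python
import random

def ols(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def detrend(t, y):
    """Residuals of y after removing a linear fit against t."""
    a, b = ols(t, y)
    return [yi - (a + b * ti) for ti, yi in zip(t, y)]

def remove_enso(t, temps, enso_prev):
    """Subtract the part of temps linearly related to the prior-year ENSO index."""
    temp_res = detrend(t, temps)
    enso_res = detrend(t, enso_prev)
    _, coef = ols(enso_res, temp_res)
    # Subtract only the detrended ENSO signal so the long-term trend is untouched.
    adjusted = [T - coef * e for T, e in zip(temps, enso_res)]
    return adjusted, coef

# Synthetic example: every number below is an assumption, not an observation.
rng = random.Random(0)
years = list(range(1980, 2015))
enso_prev = [rng.gauss(0.0, 1.0) for _ in years]  # stand-in ENSO index
temps = [0.016 * (y - 1980) + 0.10 * e + rng.gauss(0.0, 0.03)
         for y, e in zip(years, enso_prev)]

adjusted, coef = remove_enso(years, temps, enso_prev)
print(f"estimated ENSO coefficient: {coef:.3f} degC per index unit")
```

Running the same function on two datasets and comparing the fitted coefficients is one way to ask whether they are differently sensitive to ENSO.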

Odds and statistics, and odd statistics

Analyses of global temperatures are of course based on a statistical model that ingests imperfect data and has uncertainties due to spatial sampling, inhomogeneities of records (for multiple reasons), errors in transcription etc. Monthly and annual values are therefore subject to some (non-trivial) uncertainty. The HadCRUT4 dataset has, I think, the best treatment of the uncertainties (creating multiple estimates based on a Monte Carlo treatment of input data uncertainties and methodological choices). The Berkeley Earth project also estimates a structural uncertainty based on non-overlapping subsets of raw data. These both suggest that current uncertainties on the annual mean data point are around ±0.05ºC (1 sigma) [Update: the Berkeley Earth estimate is actually half that]. Using those estimates, and assuming that the uncertainties are uncorrelated from year to year (not strictly valid for spatial undersampling, but this gives a conservative estimate), one can estimate the odds of 2014 being a record year, or of beating 2010 – the previous record. This was done by both NOAA and NASA and presented at the press briefing (see slide 5).

In both analyses, the values for 2014 are the warmest, but are statistically close to those of 2010 and 2005. In the NOAA analysis, 2014 is a record by about 0.04ºC, while the difference in the GISTEMP record was 0.02ºC. Given the uncertainties, we can estimate the likelihood that this means 2014 was in fact the planet’s warmest year since 1880. Intuitively, the highest ranked year will be the most likely individual year to be the record (in horse racing terms, that would be the favorite) and indeed, we estimated that 2014 is about 1.5 to ~3 times more likely than 2010 to have been the record. In absolute probability terms, NOAA calculated that 2014 was ~48% likely to be the record versus all other years, while for GISTEMP (because of the smaller margin), there is a higher chance of uncertainties changing the ranking, so the probability is lower (~38%). (Contrary to some press reports, this was indeed fully discussed during the briefing). The data released by Berkeley Earth is similar (with 2014 at ~46%, corrected from ~35%; see comment below). These numbers are also fragile though and may change with upcoming updates to data sources (including better corrections for non-climatic influences in the ocean temperatures). An alternative formulation is to describe these results as being ‘statistical ties’, but to me that implies that each of the top years is equally likely to be the record, and I don’t think that is an accurate summary of the calculation.
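To make the logic of that kind of calculation concrete, here is a minimal Monte Carlo sketch. It is not the NOAA or NASA code: it simply perturbs each annual value with independent Gaussian noise and counts how often each year comes out on top. Only the 0.68ºC value for 2014, the 0.02ºC gap to 2010 and the 0.05ºC sigma are taken from this post; the other anomalies are placeholders chosen for illustration, so the printed probabilities should not be read as a reproduction of the published numbers.

```python
import random

def record_probabilities(anomalies, sigma, n_draws=20000, seed=1):
    """Estimate P(year is the true warmest) by adding independent Gaussian
    noise to each annual value and counting which year comes out on top."""
    rng = random.Random(seed)
    wins = {year: 0 for year in anomalies}
    for _ in range(n_draws):
        draw = {year: value + rng.gauss(0.0, sigma)
                for year, value in anomalies.items()}
        wins[max(draw, key=draw.get)] += 1
    return {year: count / n_draws for year, count in wins.items()}

# Illustrative anomalies in degC. The 2014 value, the 0.02 degC gap to 2010 and
# sigma = 0.05 degC follow the text; the remaining values are placeholders.
anomalies = {2014: 0.68, 2010: 0.66, 2005: 0.65, 2007: 0.62, 1998: 0.61}
probs = record_probabilities(anomalies, sigma=0.05)
for year, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(year, f"{p:.2f}")
```

The point of the exercise is the shape of the answer: the top-ranked year is the single most likely record holder, yet its probability can still fall below 50% when several years sit within one sigma of each other.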

Another set of statistical questions relates to a counterfactual – what are the odds of such a record or series of hot years in the absence of human influences on climate? This question demands a statistical model of the climate system which, of course, has to have multiple sets of assumptions built in. Some of the confusion about these odds as they were reported is related to exactly what those assumptions are.

For instance, the very simplest statistical model might assume that the current natural state of climate would be roughly stable at mid-century values and that annual variations are Gaussian, and uncorrelated from one year to another. Since interannual variations are around 0.07ºC (1 sigma), an anomaly of 0.68ºC is exceptionally unlikely (over 9 sigma, or a probability of ~2×10⁻¹⁹). This is mind-bogglingly unlikely, and is a function of the overly-simple model rather than a statement about the impact of human activity.
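The arithmetic behind that number can be checked in a few lines. This is a sketch under one assumption that the post does not state: that the quoted probability is a two-sided Gaussian tail evaluated at exactly 9 sigma, which is the convention that reproduces a value of about 2×10⁻¹⁹. The function name is made up for the example.

```python
import math

def two_sided_tail(z):
    """Probability that a standard normal variable exceeds |z| in magnitude."""
    return math.erfc(z / math.sqrt(2.0))

anomaly = 0.68   # degC, from the text
sigma = 0.07     # degC, interannual 1-sigma, from the text
z = anomaly / sigma

print(f"z = {z:.1f} sigma")
print(f"two-sided P at exactly 9 sigma: {two_sided_tail(9.0):.1e}")
print(f"two-sided P at z sigma:         {two_sided_tail(z):.1e}")
```

Since 0.68/0.07 is somewhat more than 9, the probability at the actual ratio is smaller still, which is consistent with the "over 9 sigma" wording.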

Two similar statistical analyses were published last week: AP reported that the odds of nine of the 10 hottest years occurring since 2000 were about 650 million to 1, while Climate Central suggested that a similar calculation (13 of the last 15 years) gave odds of 27 million to 1. These calculations are made assuming that each year’s temperature is an independent draw from a stable distribution, and so their extreme unlikelihood is more of a statement about the model used, rather than the natural vs. anthropogenic question. To see that, think about a situation where there was a trend due to natural factors: this would greatly reduce the odds against a hot streak towards the end (as a function of the size of the trend relative to the interannual variability) without it having anything to do with human impacts. Similar effects would be seen if interannual internal variability was strongly autocorrelated (i.e. if excursions in neighbouring years were related). Whether this is the case in the real world is an active research question (though climate models suggest it is not a large effect).
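For readers curious how an "independent draws" number of this kind can arise, here is a sketch of one way to set it up. It is an assumption on my part, not a description of how AP or Climate Central actually did their calculations: if every ranking of the years is equally likely, the count of top-ranked years landing in the recent window follows a hypergeometric distribution. The 135-year record length (1880-2014) and the reading of "since 2000" as the 15 years 2000-2014 are also assumptions.

```python
from math import comb

def prob_at_least(k, top, recent, total):
    """P(at least k of the `top` hottest years fall among the `recent` most
    recent years), if every ranking of `total` years is equally likely."""
    hits = sum(comb(recent, j) * comb(total - recent, top - j)
               for j in range(k, min(top, recent) + 1))
    return hits / comb(total, top)

# Assumed setup: 135 years of record, 15 of them 'since 2000'.
p = prob_at_least(k=9, top=10, recent=15, total=135)
print(f"about 1 in {1 / p:,.0f}")
```

Under those assumptions the result comes out in the hundreds of millions to 1, the same order as the AP figure. Changing `total`, `recent` or the threshold `k` moves the answer by orders of magnitude, which underlines how much these odds depend on the setup rather than on the climate.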

Better statistical models thus might take into account the correlation of interannual variations, or have explicit account of natural drivers (the sun and volcanoes), but will quickly run into difficulties in defining these additional aspects from the single real world data set we have (which includes human impacts).

A more coherent calculation would be to look at the difference between climate model simulations with and without anthropogenic forcing. The difference seen in IPCC AR5 Fig 10.1 between those cases in the 21st Century is about 0.8ºC, with an SD of ~0.15ºC for interannual variability in the simulations. If we accept that as a null hypothesis, seeing a 0.8ºC difference in the absence of human effects is an excursion of over 5 sigma, with odds (at minimum) of 1 in 1.7 million.

None of these estimates however take into account how likely any of these models are to capture the true behaviour of the system, and that should really be a part of any assessment. The values from a model with unrealistic assumptions are highly unlikely to be a good match to reality and its results should be downweighted, while ones that are better should count for more. This is of course subjective – I might feel that coupled GCMs are adequate for this purpose, but it would be easy to find someone who disagreed or who thought that internal decadal variations were being underestimated. An increase of decadal variance would increase the sigma for the models by a little, reducing the unlikelihood of the observed anomaly. Of course, this would need to be justified by some analysis, which itself would be subject to some structural uncertainty… and so on. It is therefore almost impossible to do a fully objective calculation of these odds. The most one can do is make clear the assumptions being made and allow others to assess whether that makes sense to them.
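The sensitivity to the assumed variability is easy to see numerically. The sketch below takes the 0.8ºC difference and the ~0.15ºC SD from the model-based estimate above and shows how the odds change if the SD is inflated. The inflated SD values are hypothetical, picked only to illustrate the direction of the effect, and the two-sided Gaussian convention is an assumption (it is the one that gives roughly 1 in 1.7 million at exactly 5 sigma).

```python
import math

def odds_against(difference, sd):
    """'1 in N' odds that Gaussian noise with standard deviation `sd`
    produces an excursion at least as large as `difference` (two-sided)."""
    p = math.erfc((difference / sd) / math.sqrt(2.0))
    return 1.0 / p

difference = 0.8  # degC, from the text
# 0.15 degC is from the text; the larger values are hypothetical inflations.
for sd in (0.15, 0.17, 0.20):
    print(f"sd = {sd:.2f} degC -> {difference / sd:.1f} sigma, "
          f"about 1 in {odds_against(difference, sd):,.0f}")

# The 'at minimum' figure corresponds to rounding down to exactly 5 sigma.
print(f"exactly 5 sigma: about 1 in {odds_against(5.0, 1.0):,.0f}")
```

Even a modest increase in the SD cuts the odds by orders of magnitude, while still leaving the outcome very unlikely, which is why the assumptions matter more than the headline number.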

Of course, whether the odds are 1.7, 27 or 650 million to 1 or less, that is still pretty unlikely, and it’s hard to see any reasonable model giving you a value that would put the basic conclusion in doubt. This is also seen in a related calculation (again using the GCMs) for the attribution of recent warming.

Conclusion

The excitement (and backlash) over these annual numbers provides a window into some of the problems in the public discourse on climate. A lot of energy and attention is focused on issues with little relevance to actual decision-making and with no particular implications for deeper understanding of the climate system. In my opinion, the long-term trends or the expected sequence of records are far more important than whether any single year is a record or not. Nonetheless, the records were topped this year, and the interest this generated is something worth writing about.

References

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

Victor Venema 23 Jan 2015 at 4:42 PM

“Thus the method used above by Gavin is right and it is no surprise that HadCRUT finds similar uncertainties”

Victor, my argument is about year-year independence, not about the value of sigma, on which indeed HADCRUT and GISS agree. An analogy. Suppose you wanted to test whether children do in fact, as scientists claim, grow. So every year on 31/12 you find 100 children born 1/1/2004 and determine their average height. You find that 2013 was a record, and then so was 2014. How uncertain are you of 2014’s record?

You could do a t-test, 2013 vs 2014. You would estimate sigma from within sample variability, and then test whether, if no-growth, the 2014 result was just a chance choice of tall children. I think that is the equivalent of what is done here.

But suppose you had tried to ensure that you had kept the same children in both years, with just a few dropouts. Then you would be much more confident. The sampling error would be virtually gone.

I think that is the same here (of course, child growth is much less random than weather). Many of the differences that HADCRUT varies in the ensemble do not in fact change in GISS 2014 vs 2010. Methodology, location etc have not changed, but the sigma allows for that. The proposition that the actual thing evaluated by GISS in 2014 exceeds 2010 is much more certain. And the same applies to other notional indices.

The 38% references whether GISS 2014 exceeds what an independently constructed index would have measured in 2010, and includes all the variation that such an index would show in a 2014 vs 2014 comparison.

I’ve generated a graphic using the latest GISTEMP data that plots the anomaly over decadal average bars for decades 2005-2014, 1995-2004, and so on. The graphic clearly demonstrates that 2005-2014 was indeed warmer than 1995-2004, and so on.

#38 Alan Millar might want to take note. In fact, I calculate the difference between the last two decades to be 0.12 K, which is the same as the long-term rate given by the IPCC.

The graphic is for anyone to use.

Nick, yes I think I understood your claim. I was not talking about sigma. Also not about the year to year correlations of the temperature itself, which are significant.

The example with the children makes it even clearer what you mean. If there were a strong year to year correlation in the uncertainties, you would definitely be right.

However, the uncertainty in the anomaly estimates from 2013 and 2014 is mainly due to interpolation, because we do not know what happened between the stations. That is likely to a large part not correlated from year to year would be my expectation.

The uncertainty in the absolute temperature is also determined to a large part by a stable deviation between the mean temperature at the station and the mean temperature of the area it is supposed to represent. Thus there I would expect a larger year to year correlation in the uncertainty.

For the example with children you could compute a t-test on the differences of their height between 2013 and 2014. Because of the importance of interpolation, I do not think that would be the solution for temperature anomalies from station data.

Steve Fish @ 37:

“What is the point of your questions?”

dftt

The point was made clear a while back. FUD regarding statistics because platitude: there are lies, damned lies and statistics. The excuse has been established, and so anything goes for trolls seeking to stir the pot.

It seems to me that a good outcome of Paris this year would be an agreement to set out a network of sensors that can reduce the noise in the annual global temperature calculation by a factor of 3 for at least three decades. This would give much more clarity about the nature of decade scale fluctuations that may provide important constraints on our understanding of how the climate system works. The present method of making this calculation relies on sparse enough data stations that improvement is quite possible since the uncertainty introduced by interpolation could be reduced.

#55–I can’t agree with you there, Chris. Paris is, and needs to be, about mitigation, not rebooting the temperature record yet again–and for that matter, the temperature record is about the least uncertain thing about the topic of climate change. FAR already identified a long-term trend of rising temps, and that was 25 years ago.

Kevin (#56),

I think Paris is also an opportunity for expanded scientific cooperation. There are places in Africa with poor coverage as well as in the Arctic and Antarctic where cooperation should already have provided dense instrumentation. I think that making a good advance on science could help with other forms of cooperation as well.

Stations that can provide satellite communications and a couple kw of solar power in remote regions might also serve some development goals. I’ve heard about computers being turned on in places with typically no more than 4th grade education and a whole math culture springing up around them among young people, without any formal instruction. Such stations might also be places where drugs that require refrigeration could be maintained.

I think we should be looking for more opportunities at Paris. This might be an excellent place for UNESCO to play a role.

Gavin, Re my #42. What is the slope from 1998 to 2012 on each figure? In the olden days, we thought it was close to zero, but now it is close to the predictions.

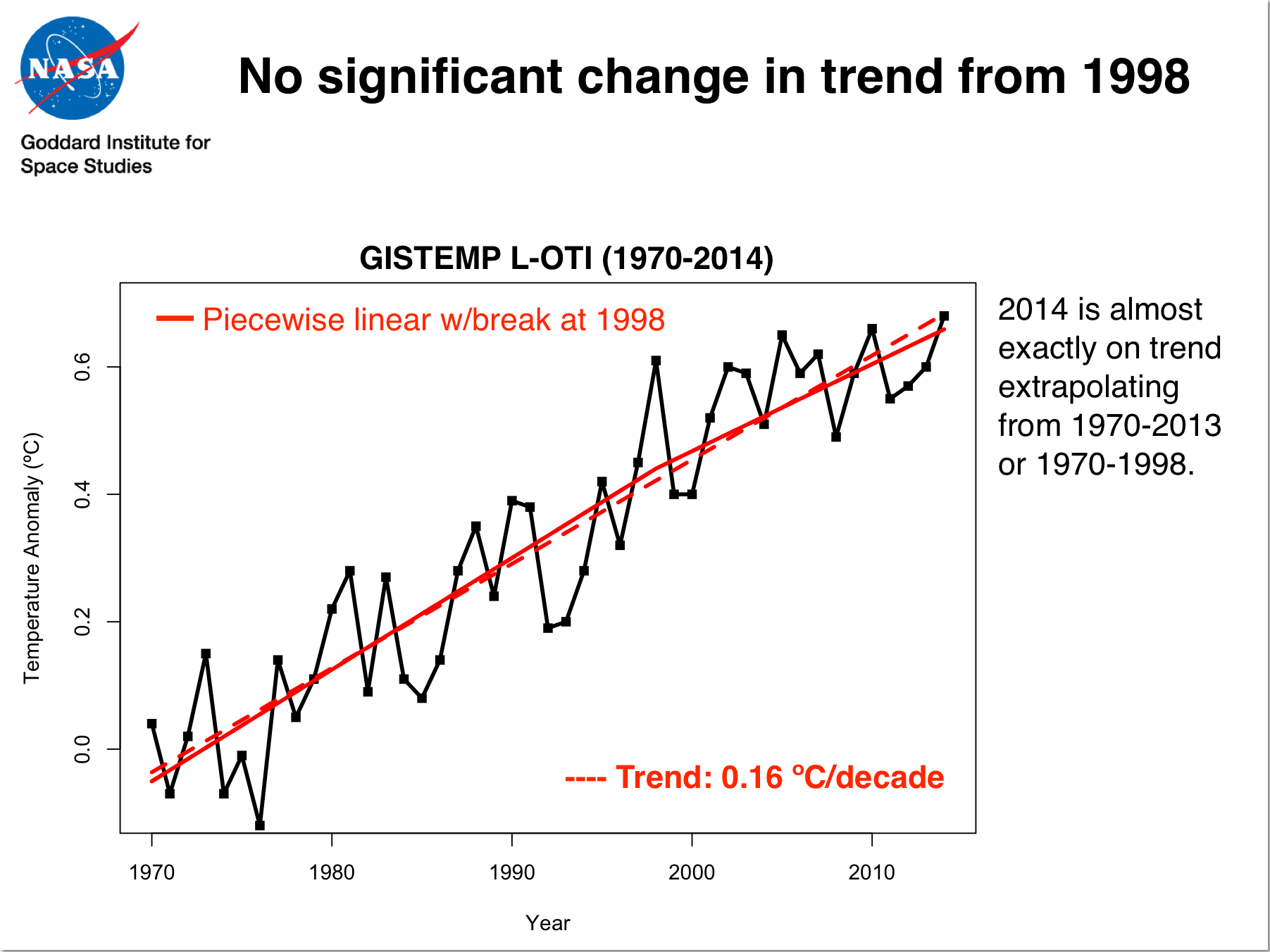

[Response: You are looking at something else. A piecewise continuous function is a better way of looking at whether a long-term trend is continuing. If instead you look at short term trends only you get these unphysical jumps at your break point. As I’ve said elsewhere, there is no physically coherent model of climate change that is linear, magic jump, then linear again. Now, are short term trends interesting? Sure – but their attribution to specific causes is going to be different than for long term trends and the shorter they get the more important interannual variations and ENSO are going to be relative to the long-term drivers. Attribution for 1998-2014 alone is largely dominated by the ENSO signal. – gavin]

> reduce the noise in the annual global temperature calculation

That’s Xeno’s Climate Plan.

Every meeting gets us halfway to doing something.

“Delay is the deadliest form of denial.”

Look at the measles outbreaks. As a public health person said today on the radio, science doesn’t convince people.

Period.

(PS ReCaptcha for me is now taking ten to fifteen attempts to get one right.)

56 KevinM, I agree. We’re going down the BAU path. Scientists and engineers will have to figure out how to dig us out of this mess, and the general public must become scared like in the world wars.

55 ChrisD, you’ve laid out a plan which might give traction to denialist memes for years. The information gathered might be useful, but I don’t see it rising to that great of importance. We’ll have plenty of new records. “Let’s recount it even more accurately” is compelling to scientific minds, but in our international situation, we’ve got to make treaties, as Kevin said, that speak to mitigation and adaptation.

Victor Venema,

“However, the uncertainty in the anomaly estimates from 2013 and 2014 is mainly due to interpolation, because we do not know what happened between the stations. That is likely to a large part not correlated from year to year would be my expectation.”

Yes, with more reading and thinking I believe you (and Gavin) are right. It’s clearer with the Hansen2010 description. They check past station distributions against GCM data, integrating both GISS-wise and with full GCM accuracy, and use the spread of differences. That sounds as if it might be a characteristic of the station placing, but since the GCM weather is not synchronised to Earth, it is really just comparing the integration method and the effect of limited sampling, not the sample.

The 38% number was a poor way to present the probabilities in my opinion. I don’t know how to do this perfectly properly, but using paired months (jan-jan, feb-feb etc) to get the average diff and variance (45 minutes of work in a spreadsheet), I did paired t on the last 15 years or so (NOAA data), and this is what I got and what I probably would say:

Compared individually to the three next highest years, all individual records in themselves, 2014 has a 1 in 5 chance of being lower than 2010, a 1 in 21 chance of being lower than 2005, and a 1 in 6 chance of being lower than 1998.

All other years have less than a 1 in 20 chance of being higher than 2014, with the exception of 2002 which has a 1 in 11 chance.

That might be harder for the denialists to misconstrue, although they can be very talented sometimes. I should have said, “it is claimed” before the “they”.

I don’t know why I did paired analysis, I guess because it seems the SST temp anomalies move seasonally. Using months is probably not the right way to do this, but it’s the data I had in my machine…

Cheers, everyone.

48% not 38%. Sorry. Bad when the second word in your post is wrong.

So .07C in 16 years comes in well below the IPCC projections.

1.7 million to 1 is good enough for me.

Anyone got an answer to this alleged tampering with South American data:

https://notalotofpeopleknowthat.wordpress.com/2015/01/20/massive-tampering-with-temperatures-in-south-america/

from this article in the UK Daily Telegraph

http://www.telegraph.co.uk/comment/11367272/Climategate-the-sequel-How-we-are-STILL-being-tricked-with-flawed-data-on-global-warming.html

Perhaps someone should reply to this issue and demonstrate why RAW data is going one way (allegedly) and the adjusted data the other.

#52 Jon Keller > GISTEMP 1-Year Running Average w/Decade Bars

Excellent graphic concept, I think. Thank you.

Andrew Revkin and others > to use.

WB:

I don’t bet on horse races, but it’s my understanding that race odds are figured based on the track record of the contenders. I don’t bet on which year is the warmest either, but if I had to choose between a working, publishing climate scientist named Gavin Schmidt and some guy on the Internet ‘nymed WB, my money would be on Gavin.

What are the odds that WB is actually Ken Cuccinelli?

Chris Dudley:

In three decades, much uncertainty will be resolved, and much opportunity to mitigate climate change will be lost. I’m hoping Paris has a different outcome.

Hank (#58),

When there are things to learn from improved measurements, then it is worth it to make those improvements. Gavin points out these sources of error: “…imperfect data and has uncertainties due to spatial sampling, inhomogeneities of records (for multiple reasons), errors in transcription etc.”

Transcription comes in with the means of cooperation between national meteorological organizations and spatial sampling is uneven owing to differences in national capabilities. Inhomogeneities might be addressed, in part, with more automation.

An agreement for greater mutual aid and transparency along with new deployment of instrumentation could likely advance data quality by even more than between the 1980s and now seen in the 5th figure.

Chris Dudley, I think that is a wonderful idea. A global reference climate network of the quality of the US climate reference network would be an enormous step forward. The same for the radiosonde network. The “global” reference radiosonde network is embarrassingly small.

Do you have any idea how one would go about inserting one or two sentences in the treaty? Who should we send an email to?

Kevin McKinney, just because the mitigation sceptics like to jump on the station data and because their arguments are normally wrong, does not mean that there are no real problems. With homogenization methods we can remove some of the bias in the data, but not all. And when it comes to daily data and changes in weather extremes and weather variability, I am not at all convinced yet that we are able to remove most of the bias.

Nick Stokes, great we agree. If a smart and sceptical person does not agree with me, I always wonder what I am overlooking.

Pete Best, so 3 stations in South America have been adjusted up. There are also at least 3 that were adjusted down. Is it really necessary to respond to such stupidity? We may have to start an action for people to stop buying The Telegraph. That would be a more useful use of our time.

NO amount of better quality data will do anything to sway denier interests any more than better quality data swayed tobacco denier interests. Unfortunately, it will take things happening. The best hope is that the cheapest things happen first…which is probably true.

#69,

A few decades are needed to capture some decade scale fluctuations. An equatorial volcano occurring during that period would allow much better model calibration, for example, settling questions about transient climate response.

I’m expecting more from Paris beyond renewed and expanded scientific cooperation, but I think it would be a good thing to include.

Jim (#60),

Well, for one thing we see climate models that are as noisy as the temperature record, yet as the errors reduce, it looks like the temperature record gets less noisy. Thus, the noise in the climate models is not reproducing just inter-annual fluctuations, but instrumental noise. That is not really something that we can learn from. So, getting better measurements would challenge models to be more physical.

There are times when getting more data is just stamp collecting, unmotivated by hypothesis testing, though even then, it may later be interpreted in a way that leads to new insights. But it appears that in this case, there would be excellent results from improving data collection.

Victor (#71),

“Do you have any idea how one would go about inserting one or two sentences in the treaty? Who should we send an email to?”

As I understand it, the personal diplomacy that has gone into putting together HadCrut or GISTEMP has been pretty extensive. HadCrut, in particular, has included data from for-profit efforts with restrictions on how the data may be used. I think we’d want to keep from stepping on toes there since maintaining the current network is crucial for continuity.

Yet, Paris will be a government-to-government affair. In some cases, new meteorological capabilities will be welcomed, which may well be a place for UNESCO to play a role in conjunction with the World Meteorological Organization.

I think the place to start is at the desired noise level, say 0.05 C Full Width at Half Max for the annual temperature. Then, design the sensor network that can achieve that. Then, look at the details on the ground that would make the side benefits enticing while also preserving relations already developed. Both GISS and CRU could provide an ideal network design I think, so that would be the first set of emails. Going forward from there, likely it would be up to UNESCO and WMO to give input to Paris and likely it would be cell phone network builders that would end up installing many of the new stations.

The rapidity of the ARGO float deployment in international waters suggests that it may well be the treading on toes that holds back getting a denser land-based climate network. So, a conference like Paris may be the place to work out those issues in a positive manner.

You don’t have to try to solve every captcha presented. Just use the refresh button until something legible turns up.

> Chris Dudley says:

> … When there are things to learn from improved measurements,

> then it is worth it to make those improvements.

That depends on what the definition of “it” is, doesn’t it?

Further study is needed translates into delay.

We knew enough 20 years ago to make a course correction — imprecisely.

That would have been a very good idea.

NO amount of better quality data will do anything to sway denier interests any more than better quality data swayed tobacco denier interests. Unfortunately, it will take things happening. The best hope is that the cheapest things happen first…which is probably true.

Comment by jgnfld — 25 Jan 2015

People will accept scientific fact UNTIL it disagrees with either their religion or their politics.

Re short-term vs. long-term trends. When I examine the temperature graphs for the 20th-21st centuries (e.g., this one, courtesy of NASA: http://en.wikipedia.org/wiki/File:Warming_since_1880_yearly.jpg), I don’t see much of a trend until ca. 1979. From then till ca. 1998, I see what looks like a very alarming upward trend, the famous “hockey stick” I suppose. According to the annual mean, this trend levels off from 1998 on — according to the 5 year running mean, the leveling commences around 2002. Looks like some sort of plateau has been reached. Temperatures still seem quite high, but regardless of how many “record-breaking” years we find from 1998 on, the slope from that year on has leveled off to approximately 0.

What I see, therefore is not a long term warming trend, covering all or most of the 20th century, as so often claimed, but a much shorter trend of 20 years or so (23, if you insist on the five year mean), preceded and followed by periods with no particular trend in either direction. In the light of the NASA graph, Gavin’s bar graph strikes me as misleading, as it glosses over distinctions that seem important. Of course, Gavin would claim that his graph is more meaningful because it represents a “long term” trend, but as we clearly see from the NASA graph, no such thing seems apparent.

So, if one wants to claim that only long-term trends really count, then I see nothing to take seriously as far as “global warming” is concerned, since I see no long term trend at all. What I see is a period of no trend, followed by a relatively short term (20-23 years) upward trend, followed by another relatively short term period with no particular trend (or if you prefer, an extremely shallow upward trend). The fact that we are now at a point where the temperatures are significantly higher than they were at the beginning of the 20th century may well be meaningful, but the present temperature was NOT arrived at via a long-term trend. What got us where we are today was a short-term trend, of only 20-23 years. But according to climate change orthodoxy, short term trends are not to be taken seriously, so . . . where does that leave us?

#56 Kevin, I agree with you. It seems to me the most significant climate takeaway for 2014 was the finding that heat movement into the ocean depths was the reason the rate of atmospheric warming the previous 15 years had flattened out. Therein at least lies the root of a solution.

Thank you for an excellent article, Gavin. I think the main significance of 2014 is that it continues the upward trend. I think your claim that the probability of it being the warmest year is around 40% is both honest and reasonable. I notice that those arguing that it might not be conveniently only talk about probabilities to the left of the mean, which could make it not the hottest year. Assuming the distribution is symmetric, half of it covers estimates higher than the mean you cite. You did not title the article “Five percent chance that 2014 hottest year by 0.12 deg Centigrade,” which, whilst it might be true, would be alarmist.

If I were involved in long term planning for responding to global warming, I would base my plans on a climate sensitivity of 3 deg C for CO2 doubling. I would hope that it would be less, maybe 2 deg. But I would certainly worry that it could end up between 4 and 5 deg, and would warn others that this was a possibility, even if not the most likely outcome.

Hank (#76),

Do you think climate scientists should all retire as soon as there is an agreement to end emissions? There is definitely more to learn about how climate behaves and there are now data sets for ocean warming and carbon dioxide distribution that could benefit from better surface temperature measurements. And, it can help to prime other cooperation to get scientific cooperation really cooking.

#78 Victor,

I’m sure others will also address what you’ve said, but I’ll throw in my piece.

First of all, the theory of AGW rests mostly on our scientific understanding of how climate works, specifically the heat-trapping properties of gases such as CO2. If CO2 does trap heat, and it increases in the atmosphere, it will trap heat in the atmosphere. It’s really as simple as that if you like. I think people have recommended to you Weart’s Discovery of Global Warming and I will also heartily recommend it, specifically the section entitled “The Carbon Dioxide Greenhouse Effect”.

Second, I think you are confusing the significance of the short term trend you are talking about (since circa 2000). While the trend since 2000 is certainly less (numerically) than the trend between 1970-2000, statistically speaking it isn’t a significant deviation from the 1970-2000 trend. The last 15 years actually follow the previous trend quite closely. See the recent Tamino post for more information.

There are more reasons to do research than to convince mitigation skeptics. That may even be the worst possible reason to do research. They have demonstrated over the decades that they have no interest in scientific understanding whatsoever.

We are performing a unique experiment with the global climate system. Future climatologists would never forgive us if we did not study it accurately. That needs a real climatological station network, rather than making do with the meteorological network.

Practically, we would need to know when we cross the 2 degree limit. The developing countries are compensated for climate change damages. Thus we need to know how much the climate and especially extreme weather is changing.

Working on the removal of non-climatic changes (homogenization), I must admit that I was mainly thinking of trying to make the network as stable as possible. Pristine places, so that relocations are not necessary and the surroundings change as little as possible. Metrologically traceable calibration standards. Open hardware, so that we can still build exactly the same instruments decades or centuries into the future. (Think back to 1900 to see what a challenge that is.) And the highest quality standards through triple redundancy and online data transmission, so that errors are found fast (like in the US climate reference network). Making sure that currently badly sampled regions are also observed would also be important.

We would need a design, and would need to demonstrate how much more accurate such a network would be and which questions it could thus answer better. With some colleagues we are brainstorming about that. But we would also need to get political attention. It sounds ideal if the global climate treaty would say we need a global climate reference network. I do not think it is realistic that every country, especially poorer countries, builds up such a climate network. Meteorological networks are directly useful for agriculture and so on; a climate network would be more expensive, would require more expertise and is more fundamental research. Thus doing this on a global scale seems to make sense.

(You can also not click on “I’m not a robot”, mostly you are not asked to do something.)

Who do you call when you want to talk to COP?

Because of the discussion here about how important it is to add instrumentation for better science, I would like to know what the scientists think.

Moderators, what is your wish list for added funding?

Victor: “I am not at all convinced yet…”

I rest my case.

59: Hank, re: re-captcha, sure you are not actually a robot in disguise Hank? haha!

Maybe when the temperature trend reaches 3 standard deviations world governments will take notice… no, I think not. No matter how adamant we are about the validity and certainty of the data’s message, it is still not going to change the minds of big oil and big vested interests. Next tack, guys?

Victor (#83),

I think that coming up with a fairly concrete proposal that addresses some of the issues you raise and would perform with a reliable improvement in precision would be the first thing to address so that the parties know what they would be signing up for. I’d envision the meteorological capabilities being of benefit in addition to suitability for good climate science. The grounds for undertaking this kind of thing can, I think, be found in the following language already ratified by all parties, which may be the few lines you are looking for.

Framework Convention on Climate Change http://unfccc.int/files/essential_background/background_publications_htmlpdf/application/pdf/conveng.pdf

Article 4 section 1

(g) Promote and cooperate in scientific, technological, technical, socio-economic and other research, systematic observation and development of data archives related to the climate system and intended to further the understanding and to reduce or eliminate the remaining uncertainties regarding the causes, effects, magnitude and timing of climate change and the economic and social consequences of various response strategies;

(h) Promote and cooperate in the full, open and prompt exchange of relevant scientific, technological, technical, socio-economic and legal information related to the climate system and climate change, and to the economic and social consequences of various response strategies;

(i) Promote and cooperate in education, training and public awareness related to climate change and encourage the widest participation in this process, including that of non-governmental organizations;

Gavin, your responses to my #42 and #58 don’t address my concerns. I am not talking about short and long term trends, but rather about the changes to the historical record that always seem to support the theory.

#82 Jon Keller: “I think people have recommended to you Weart’s Discovery of Global Warming and I will also heartily recommend it, specifically the section entitled “The Carbon Dioxide Greenhouse Effect”.”

Yes, I’ve read his historical summary and found it well written and clear. And yes, I know about the Greenhouse Effect. What’s in question is not the effect per se, which is certainly real, but the issue of sensitivity, i.e., how sensitive is the environment to the greenhouse effect produced by carbon from fossil fuel emissions?

As for Tamino’s post, I’d say the trend line in the graph he presents (https://tamino.files.wordpress.com/2015/01/nasa4.jpeg) looks as much like an artifact as the one presented above by Gavin. The fact that the trend line remains unchanged despite the very obvious change of direction beginning in 1998 (or a bit later, if you prefer), gives the game away. The data is straightforward enough to assess visually. A trend line is not necessary and, as in these cases, can be deceiving. I’m not claiming intentional deception. Many well meaning scientists have produced statistical artifacts; it’s an easy trap to fall into.

If you follow the bouncing ball you can see a very clear upward trend in both the uppermost and lowermost positions until 2000. After that, you can’t. From 1998 on, the line connecting the uppermost balls is very close to horizontal. From 2001, the lowermost balls actually dip down over time, followed by a brief upward zip at 2010, to a position roughly the same as 2001.

A brief perusal of the literature should make it clear that a great many climate scientists have accepted the hiatus as real, and as a problem, and a great many hypotheses have been published in various efforts to account for it (including a paper Tamino wrote with Stefan Rahmstorf, as I’m sure you are all aware). A consensus has formed around exactly none.

To quote the eminent detective Adrian Monk: “Here’s what happened”:

Beginning around 1979, climate scientists began to notice a very disturbing looking trend, in which atmospheric temperatures began to climb in tandem with atmospheric carbon associated with fossil fuel emissions that had already been steadily rising for some time. After a few years of this, it began to look very much like some sort of tipping point had been reached, in which the atmosphere began to respond rather dramatically to the increased carbon levels. The correlation between the two seemed so clear, so dramatic and so dangerous that scientists became convinced not only that fossil fuels were causing the rapid increase in atmospheric warmth, but that the increase was so dangerous as to destabilize the planetary weather system generally. The only recourse seemed to be: stop “polluting” the planet with fossil fuel emissions.

As the end of the century approached, the correlation seemed so obvious, and the dangers so clear and so extreme, that anyone could be forgiven for simply assuming the “global warming” effect had to be produced by these anthropogenically induced CO2 emissions. Most climate scientists became convinced during that time, and they managed to convince a great many others, including many very influential people in politics and the media. And since the remedy, drastic cutbacks in fuel production, was so extreme, many of these people went way out on a limb with their concerns, precipitating a veritable firestorm of anxiety — since nothing less than a form of sheer hysteria could be powerful enough to produce the necessary changes.

In view of the picture as it presented itself at the turn of the millennium, the extreme response did seem to be both reasonable and responsible.

But then a funny thing happened. The correlation between CO2 emissions and “global warming” suddenly vanished. While temperatures continued to climb, producing year after year of record breaking warmth globally, the rate of climb reduced to almost nothing, while the increase in CO2 emissions remained as high as ever. This was at first dismissed as “noise,” but as the trend of no trend continued for a period of anywhere from 15 to 18 years, it became increasingly clear that the earlier correlation had been misleading. There was in fact no longer much evidence that CO2 emissions were the cause of the warming trend over the last 20 or so years of the 20th Century.

By that time, however, it was too late for all those convinced by the earlier correlation to change their perspective. Too much sweat, tears, self esteem and self identity was invested in the original paradigm and the drastic efforts to deal with it. These were good people, whose concerns did seem, at the time, to be fully justified. How could anyone expect them to toss all that effort aside just because, well — as we detectives like to say: correlation does not imply causality — causality always implies correlation.

From Victor’s blog: “While climate change has always been an important factor in the saga of life on Earth, over the last several years the phrase has become a slogan for a worldwide movement looking more and more like a classic doomsday cult.”

Re #91 – nice try, but you’re talking a whole load of nonsense really

http://thinkprogress.org/climate/2015/01/22/3614256/hottest-year-ocean-warming/

Oceans are a major factor in Earth’s system, as are oscillations, the winds and other large scale factors in Earth system science.

I doubt greenhouse gases have suddenly stopped working as such, so let’s consider the entire system, shall we? The lower atmosphere is warming, the oceans’ upper layers are warming, Arctic summer sea ice is disappearing, WAIS and Greenland are both losing mass annually and the majority of the Earth’s glaciers are losing mass too. It’s not the Sun (ruled out) and it’s not random or ordained by a higher power either.

It is hard to refute all of these issues unless of course you just want to concentrate on a single subsystem and even then the temperature is still rising.

Victor: “But then a funny thing happened.”

Utter horsecrap. Anyone who contends there is now no correlation between CO2 and temperature is innumerate and ignorant of elementary physics or a liar.

Victor

You are focusing on the global land/ ocean temperature and ignoring total energy content.

The main energy reservoir in the climate system is ocean water. It is difficult to accept the hypothesis that global warming has stopped while ocean heat content continues to increase.

@90 “The fact that the trend line remains unchanged despite the very obvious change of direction beginning in 1998 (or a bit later, if you prefer), gives the game away. The data is straightforward enough to assess visually. A trend line is not necessary and, as in these cases, can be deceiving. I’m not claiming intentional deception. Many well meaning scientists have produced statistical artifacts”

Perhaps you are unaware of several statistical things:

1. that actually analyzing data against proposed alternatives is a better idea than eyeballing artifacts and “just knowing”. When you do this you find what you thought you knew is not the case.

2. that cherrypicking RELIES on the misuse of statistical artifacts to “see” so called “trends” or “nontrends” which are totally expectable given the distributional characteristics of the temp distribution.

3. STARTING your “visual inspection” in 1998 is starting at a statistical outlier or near outlier (depending on the particular series used). Starting at a picked point because of its characteristics (cherrypicking) COMPLETELY violates the standard assumptions of using OLS for inference. A proper sampling distribution could, in principle, be constructed which takes into account cherrypicking bias. Unfortunately, if you did that, all of your conclusions would be found groundless.

4. that in the annually aggregated GISS data (Jan-Dec column) from 1998 to present there is a significant statistical trend of .83/decade with a standard error which puts this trend well within the CIs of the longer term trends.

5. that the subsequent ranges, from 1999 and from 2000 to present, also show statistically significant trends.

6. that your old meme is dead. Your new meme has NOW to be: “It is ‘obvious’ that ‘something changed’ in 2001.” Something did: Statistical power became so low that doing an “analysis” becomes a silly thing to do by BOTH eyeball and calculation. In that regard, note that the error around the 2003 to present trend places it well within the long term trend. Your “visual inspection” picks up a “nontrend” for which there is simply no evidence. (This is Tamino’s main point which you fail to pick up on.)

Here are the CALCULATED OLS values using the R lm routine. (Note: All Durbin-Watson tests for autocorrelation not significant):

Year || Estimate || Std. Error || t value || Pr(>|t|)
1995 || 1.1602 || 0.2896 || 4.006 || 0.000829 ***
1996 || 1.1421 || 0.3217 || 3.551 || 0.00246 **
1997 || 0.8989 || 0.3235 || 2.778 || 0.0134 *
1998 || 0.826 || 0.361 || 2.288 || 0.0371 *
1999 || 1.1015 || 0.3675 || 2.997 || 0.00961 **
2000 || 0.8429 || 0.3840 || 2.195 || 0.0469 *
2001 || 0.4615 || 0.3633 || 1.27 || 0.228
2002 || 0.3187 || 0.4132 || 0.771 || 0.457
2003 || 0.4406 || 0.4818 || 0.914 || 0.382
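For anyone who wants to try this kind of start-year comparison without R, here is a minimal Python sketch of the same sort of calculation: an ordinary least-squares slope, its standard error and the t value, repeated for a range of start years. It runs on a SYNTHETIC series (a straight line plus random noise), not on the GISS data, so the numbers it prints will not match the table. The function name `ols_trend` and the trend and noise values are assumptions chosen purely for illustration, and the sketch does not include a Durbin-Watson check.

```python
import math
import random

def ols_trend(years, values):
    """Return (slope, standard error, t value) for an ordinary least-squares line."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual variance uses n - 2 degrees of freedom (slope and intercept are fitted).
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, values))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    t_value = slope / std_err if std_err > 0 else math.inf
    return slope, std_err, t_value

# SYNTHETIC data, not GISS: an assumed linear rise plus Gaussian noise.
rng = random.Random(0)
years = list(range(1970, 2015))
anoms = [0.017 * (y - 1970) + rng.gauss(0.0, 0.1) for y in years]

# Fit from each start year to the end of the series, as in the table above.
for start in range(1995, 2004):
    i = years.index(start)
    slope, se, t = ols_trend(years[i:], anoms[i:])
    print(f"{start}  slope={slope:.4f}  se={se:.4f}  t={t:.2f}")
```

With a fixed noise level, the standard error generally grows as the window gets shorter, which is the loss of statistical power described in point 6.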

Finally, that there may be second order effects presently subsumed in the error terms of various analyses may well be true. Physical scientists are of course interested in explaining those as well as the long term, more linear at the moment trend. But focusing on them as some sort of denial of the long term linear trend is either intentionally or mistakenly wrong-headed.

Victor (#83),

“Who do you call when you want to talk to COP?”

For general instructions, I’d mount a march in NYC. But, in this specific case, since the work of the World Meteorological Organization is acknowledged in the preamble of the Framework Convention, I’d think bringing them into the loop would be a good thing to do.

Victor,

Please take a look at page 264 of this and then tell me that there has been any “pause” in global warming.

About surface temperatures, you are drawing a trend from a short period that is greatly influenced by noise (such as ENSO). Notice that the general 1970-2000 trend in Tamino’s graph is posited to be the underlying trend for surface temperatures — that is, the actual trend of surface temperatures, with noise removed. Notice also that as we enter 2000, the global temperature with noise removed (that is, the trend) is at the bottom of the 1998 spike. That is because 1998 was an unusually warm year. So if that is where noiseless temperatures were as we entered 2000, anchor the start point there, not at the top of the 1998 spike. Then notice that a flat line from there to 2015 does not describe the data at all. You have to go up at basically the same rate as the 1970-2000 trend in order to get a good fit. (I seem to remember there being a Tamino post illustrating this but I can’t find it)

Now all of what I just said is not strictly statistical, because I seem to remember you expressing some distaste for statistics. However, if you were to perform some sort of basic analysis, you would find that the 1970-2000 trend extrapolated describes the data rather well, as demonstrated by Dr. Schmidt and Tamino. You would also find that any trend you calculate starting from circa 1998 will have a confidence interval which includes at least 0.12 K per decade, meaning that there is no significant deviation from the previous underlying trend.

You are correct that the numerical slowdown has been acknowledged by the scientific community, because it does exist and is of some scientific interest, so I understand. But it is not a significant deviation from the previous trend, and by significant I mean in the statistical sense. There is no evidence that it isn’t just due to chance/noise, thus these theories that CO2 and surface warming have become uncorrelated are totally baseless at the present time.
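The point about anchoring the start of a trend at the top of a spike can be illustrated with a small simulation. The sketch below generates many artificial series with a known constant trend plus noise, then compares the average fitted slope when the start year is fixed in advance against the average when the start year is picked because it sits unusually high. Everything here is an assumption made for illustration: the trend, the noise level, the window lengths and the function names are hypothetical, and none of it is real temperature data.

```python
import random

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def compare_start_choices(true_slope=0.017, noise_sd=0.1, n_years=30,
                          window=15, n_trials=2000, seed=1):
    """Average fitted slope for a fixed start versus a cherry-picked start.

    The trend and noise level are illustrative assumptions, not estimates.
    """
    rng = random.Random(seed)
    fixed_total = 0.0
    picked_total = 0.0
    xs = list(range(n_years))
    for _ in range(n_trials):
        ys = [true_slope * x + rng.gauss(0.0, noise_sd) for x in xs]
        # Rule 1: the start year is decided before looking at the data.
        start = n_years - window
        fixed_total += ols_slope(xs[start:], ys[start:])
        # Rule 2: start at whichever nearby year lies highest above the true trend.
        candidates = range(start - 2, start + 3)
        peak = max(candidates, key=lambda i: ys[i] - true_slope * xs[i])
        picked_total += ols_slope(xs[peak:], ys[peak:])
    return fixed_total / n_trials, picked_total / n_trials

fixed_mean, picked_mean = compare_start_choices()
print(f"true slope:        {0.017:.4f} per year")
print(f"fixed start mean:  {fixed_mean:.4f} per year")
print(f"picked start mean: {picked_mean:.4f} per year")
```

In this toy setup the fixed-start average recovers the true slope, while the picked-start average comes out lower even though the underlying trend never changed. That is the selection effect, shown here under the simplifying assumption of independent Gaussian noise.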

@Nick Stokes (comment 61 & earlier) and @Victor Venema (comment 53 and earlier):

Nick and Victor,

re. your discussion upthread about whether errors in year X (e.g. 2014) should be treated separately to errors in year Y (e.g. 2010).

Nick, you’re right that some errors in the HadCRUT4 error model are persistent through time and therefore could affect both years’ temperature estimates by a similar amount, if the two years are quite close in time. This component of the error would therefore almost disappear when calculating the difference between the temperature in 2014 and 2010. Ignoring this might slightly, and incorrectly, reduce the calculated probability that 2014 is the warmest year.

Figure 3 in Morice et al., 2012 (http://onlinelibrary.wiley.com/doi/10.1029/2011JD017187/abstract) shows this.

See fig. 3 here.

The urbanisation bias in 2010 will be similar in 2014. So both years will be biased in much the same way and that bias cannot contribute to making 2014 randomly warmer than 2010.

But the bias uncertainty is smaller than the errors which are not persistent in time (e.g. due to incomplete spatial coverage), so I don’t think accounting for this would make much difference, as Victor suggests. And anyway, when we’re down to differences of hundredths of a degC, the structural uncertainty in the error model itself may be difficult to ignore! The HadCRUT4 2014 value was released today (provisional number of course) and the difference from 2010 is in thousandths of a degC.
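As a rough illustration of why a persistent error drops out of the difference between two years, here is a small Python simulation. It compares the spread of the error in (year A minus year B) when a bias term is shared by both years against the spread when the same-sized bias is wrongly treated as independent in each year. The values `bias_sd` and `indep_sd` are made up for the example and are NOT taken from the HadCRUT4 error model; the function name is hypothetical as well.

```python
import random
import statistics

def simulate_difference_spread(bias_sd=0.03, indep_sd=0.04,
                               n_trials=20000, seed=2):
    """Spread of (year A - year B) errors with a shared versus independent bias.

    bias_sd and indep_sd are illustrative assumptions, not HadCRUT4 numbers.
    """
    rng = random.Random(seed)
    shared, separate = [], []
    for _ in range(n_trials):
        # Persistent bias: one draw affects both years, so it cancels in the difference.
        b = rng.gauss(0.0, bias_sd)
        err_a = b + rng.gauss(0.0, indep_sd)
        err_b = b + rng.gauss(0.0, indep_sd)
        shared.append(err_a - err_b)
        # Bias treated as independent in each year: it adds to the spread instead.
        err_a = rng.gauss(0.0, bias_sd) + rng.gauss(0.0, indep_sd)
        err_b = rng.gauss(0.0, bias_sd) + rng.gauss(0.0, indep_sd)
        separate.append(err_a - err_b)
    return statistics.pstdev(shared), statistics.pstdev(separate)

sd_shared, sd_separate = simulate_difference_spread()
print(f"spread of difference, shared bias:      {sd_shared:.4f}")
print(f"spread of difference, independent bias: {sd_separate:.4f}")
```

In this toy model the shared-bias spread approaches sqrt(2) times `indep_sd`, while the independent-bias spread approaches sqrt(2 * (`bias_sd`**2 + `indep_sd`**2)). How much that matters in practice depends on the relative sizes of the two terms, which is the caveat made above.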

Hey, Victor pretended to be naive for a long time, claimed to be an academic with scientists around him he relied on — but he’s never changed his tune.

He’s here doing PR or testing the PR he does elsewhere. Eschew.