As the World Meteorological Organisation (WMO) has just announced that “The year 2014 is on track to be the warmest, or one of the warmest years on record”, it is timely to have a look at recent global temperature changes.

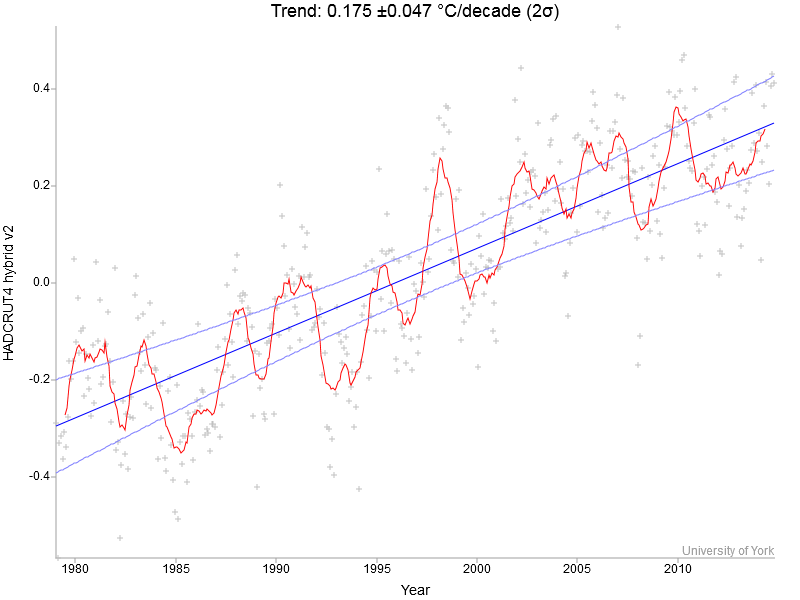

I’m going to use Kevin Cowtan’s nice interactive temperature plotting and trend calculation tool to provide some illustrations. I will be using the HadCRUT4 hybrid data, which have the most sophisticated method to fill data gaps in the Arctic with the help of satellites, but the same basic points can be illustrated with other data just as well.

Let’s start by looking at the full record, which starts in 1979 since the satellites come online there (and it’s not long after global warming really took off).

You clearly see a linear warming trend of 0.175 °C per decade, with confidence intervals of ±0.047 °C per decade. That’s global warming – a measured fact.
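For readers who want to see what sits behind a number like "0.175 ± 0.047 °C per decade", here is a minimal Python sketch of an ordinary least-squares trend with a 2σ interval. It runs on synthetic numbers (an assumed 0.0175 °C/yr trend plus Gaussian noise with a hypothetical 0.1 °C standard deviation), not on HadCRUT4. It also assumes uncorrelated residuals. Kevin Cowtan's tool additionally corrects for autocorrelation, so this naive interval is not the one the tool reports.

```python
import math
import random

def ols_trend(years, temps):
    """Return (slope, standard error) of an ordinary least-squares trend line.

    The standard error assumes independent (white-noise) residuals."""
    n = len(years)
    t_mean = sum(years) / n
    y_mean = sum(temps) / n
    sxx = sum((t - t_mean) ** 2 for t in years)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(years, temps))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    resid = [y - (intercept + slope * t) for t, y in zip(years, temps)]
    s2 = sum(r * r for r in resid) / (n - 2)  # residual variance, 2 fitted parameters
    return slope, math.sqrt(s2 / sxx)

# Synthetic annual anomalies (NOT HadCRUT4): assumed 0.0175 °C/yr trend plus noise
random.seed(1)
years = list(range(1979, 2015))
temps = [0.0175 * (yr - 1979) + random.gauss(0, 0.1) for yr in years]

slope, se = ols_trend(years, temps)
print(f"Trend: {10 * slope:.3f} ±{20 * se:.3f} °C/decade (2σ)")
```

Because real temperature residuals are positively autocorrelated, an interval computed this way would typically be narrower than the corrected one for the same data.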

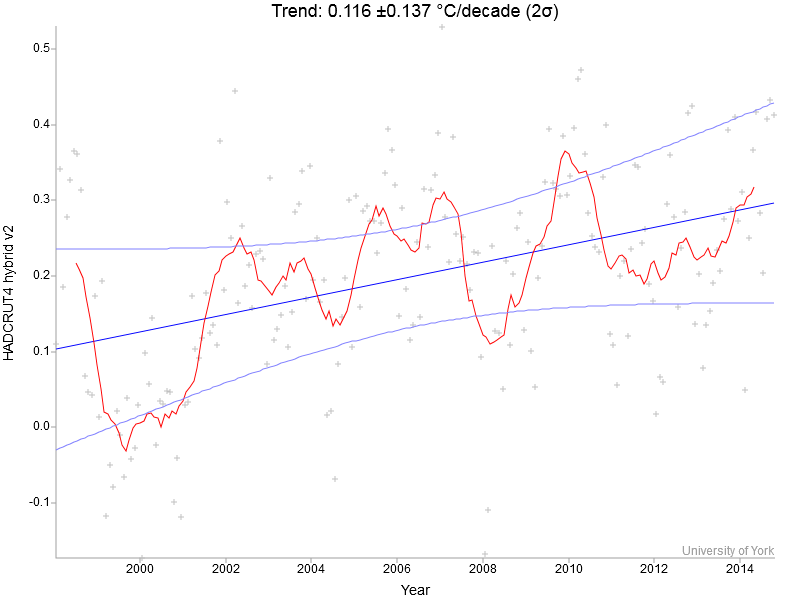

But you might have heard claims like “there’s been no warming since 1998”, so let us have a look at temperatures starting in 1998 (the year sticking out most above the trend line in the previous graph).

You see a warming trend (blue line) of 0.116 °C per decade, so the claim that there has been no warming is wrong. But is the warming significant? The confidence intervals on the trend (±0.137 °C per decade) suggest not – they seem to suggest that the temperature trend might have been as much as +0.25 °C per decade, or zero, or even slightly negative. So are we not sure whether there even was a warming trend?

That conclusion would be wrong – it would simply be a misunderstanding of the meaning of the confidence intervals. They are not confidence intervals on whether a warming has taken place – it certainly has. These confidence intervals have nothing to do with measurement uncertainties, which are far smaller.

Rather, these confidence intervals refer to the confidence with which you can reject the null hypothesis that the observed warming trend is just due to random variability (where all the variance beyond the linear trend is treated as random variability). So the confidence intervals (and claims of statistical significance) do not tell us whether a real warming has taken place; rather, they tell us whether the warming that has taken place is outside of what might have happened by chance.
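The test described here can be written out in a few lines of Python. This sketch simply reads the quoted ± values as symmetric 2σ bounds and asks whether the interval excludes a zero trend; it restates the check above and does not recompute any interval from data.

```python
def trend_interval(trend, half_width):
    """Return (low, high) for a trend quoted as trend ± half_width."""
    return trend - half_width, trend + half_width

def rejects_no_trend(trend, half_width):
    """True if the interval excludes zero, i.e. the null hypothesis that the
    trend is just random variability around a flat line is rejected."""
    low, high = trend_interval(trend, half_width)
    return low > 0 or high < 0

# Trends and 2σ half-widths quoted above (HadCRUT4 hybrid, °C per decade)
for label, trend, half_width in [("since 1979", 0.175, 0.047), ("since 1998", 0.116, 0.137)]:
    low, high = trend_interval(trend, half_width)
    print(f"{label}: {low:.3f} to {high:.3f}, significant: {rejects_no_trend(trend, half_width)}")
```

Both trends are positive; only the longer one has an interval that excludes zero. A failed significance test here says nothing about whether the warming took place.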

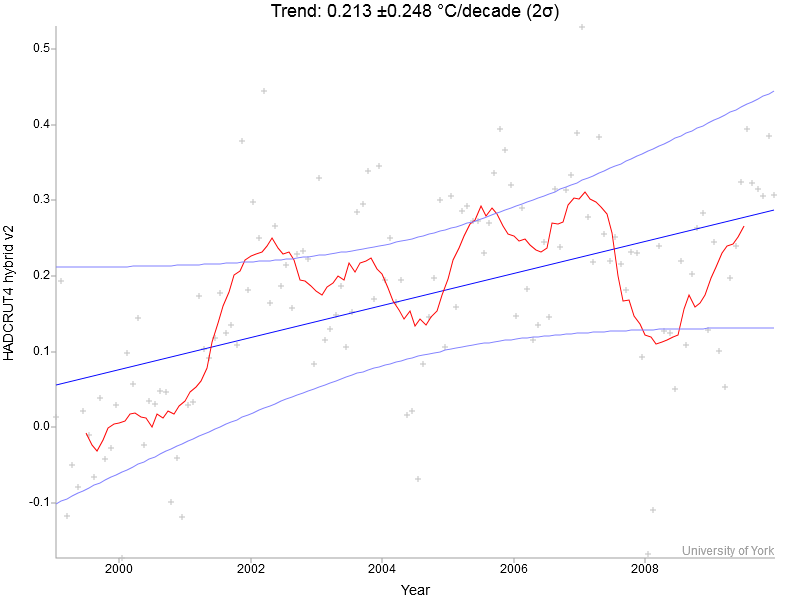

Even if there were no slowdown whatsoever, a recent warming trend might not be statistically significant. Look at this example:

Fig. 3. Global temperature 1999 to 2010.

Over this interval 1999-2010 the warming trend is actually larger than the long-term trend of 0.175 °C per decade. Yet it is not statistically significant. But this has nothing to do with the trend being small; it is simply due to the confidence interval being large, which is entirely due to the shortness of the time period considered. Over a short interval, random variability can create large temporary trends. (If today is 5 °C warmer than yesterday, then today is clearly, unequivocally warmer! But it is not “statistically significant” in the sense that it couldn’t just be natural variability – i.e. weather.)
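Why a short interval gives a wide confidence interval follows from the textbook standard error of a least-squares slope. With independent noise of standard deviation σ over n consecutive years, SE = σ / √(n(n² − 1)/12), which shrinks roughly as n^(−3/2). A minimal Python sketch, assuming a hypothetical σ of 0.1 °C and ignoring autocorrelation:

```python
import math

def slope_standard_error(noise_sd, n_years):
    """Standard error (°C/yr) of an OLS trend over n consecutive annual values,
    assuming independent residuals with standard deviation noise_sd."""
    sxx = n_years * (n_years ** 2 - 1) / 12  # sum of (t - mean(t))**2 for t = 1..n
    return noise_sd / math.sqrt(sxx)

noise_sd = 0.1  # hypothetical year-to-year scatter, °C
for n_years in (12, 36):  # e.g. 1999-2010 versus 1979-2014
    half_width = 2 * 10 * slope_standard_error(noise_sd, n_years)  # 2σ, °C/decade
    print(f"{n_years} years: ±{half_width:.3f} °C/decade")
```

With these assumed numbers, the 12-year interval comes out about five times wider than the 36-year one, whatever the underlying trend.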

The lesson of course is to use a sufficiently long time interval, as in Fig. 1, if you want to find out something about the signal of climate change rather than about short-term “noise”. All climatologists know this and the IPCC has said so very clearly. “Climate skeptics”, on the other hand, love to pull short bits out of noisy data to claim that they somehow speak against global warming – see my 2009 Guardian article “Climate sceptics confuse the public by focusing on short-term fluctuations” on Björn Lomborg’s misleading claims about sea level.

But the question the media love to debate is not: can we find a warming trend since 1998 which is outside what might be explained by natural variability? The question being debated is: is the warming since 1998 significantly less than the long-term warming trend? Significant again in the sense that the difference is unlikely to be just due to chance, to random variability. And the answer is clear: the 0.116 °C per decade since 1998 is not significantly different from the 0.175 °C per decade since 1979 in this sense. Just look at the confidence intervals. This difference is well within the range expected from the short-term variability found in that time series. (Of course climatologists are also interested in understanding the physical mechanisms behind this short-term variability in global temperature, and a number of studies, including one by Grant Foster and myself, have shown that it is mostly related to El Niño / Southern Oscillation.) There simply has been no statistically significant slowdown, let alone a “pause”.
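The "just look at the confidence intervals" check can be sketched in Python as well: does the long-term trend fall inside the 2σ interval of the recent trend? This is only a rough version. A formal test of the difference would have to account for the two periods overlapping, which this sketch does not do.

```python
def within_interval(value, trend, half_width):
    """True if value lies inside trend ± half_width."""
    return trend - half_width <= value <= trend + half_width

long_term = 0.175                      # °C/decade, 1979 onwards
recent, recent_2sigma = 0.116, 0.137   # °C/decade, 1998 onwards

print("long-term trend inside recent interval:",
      within_interval(long_term, recent, recent_2sigma))
print(f"difference {long_term - recent:.3f} vs 2σ half-width {recent_2sigma:.3f}")
```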

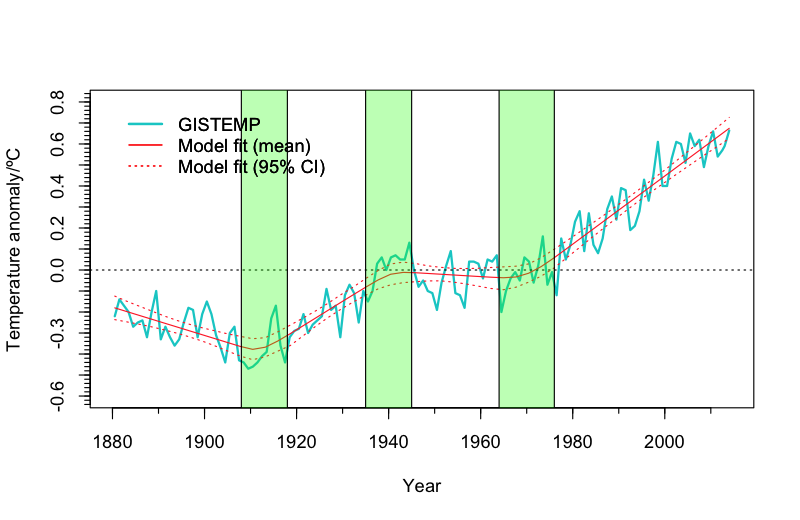

There is another more elegant way to show this, and it is called change point analysis (Fig. 4). This analysis was performed for Realclimate by Niamh Cahill of the School of Mathematical Sciences, University College Dublin.

Fig. 4. Global temperature (annual values, GISTEMP data 1880-2014) together with piecewise linear trend lines from an objective change point analysis. (Note that the value for 2014 will change slightly as it is based on Jan-Oct data only.) Graph by Niamh Cahill.

It is the proper statistical technique for subdividing a time series into sections with different linear trends. Rather than hand-picking some intervals to look at, like I did above, this algorithm objectively looks for times in the data where the trend changes in a significant way. It will scan through the data and try out every combination of years to check whether you can improve the fit with the data by putting change points there. The optimal solution found for the global temperature data is 3 change points, approximately in the years 1912, 1940 and 1970. There is no way you can get the model to produce 4 change points, even if you ask it to – the solution does not converge then, says Cahill. There simply is no further significant change in global warming trend, not in 1998 nor anywhere else.
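The post does not spell out Cahill's exact model, so what follows is only a generic sketch of the idea described. It fits continuous piecewise-linear trends by least squares and tries every allowed combination of change-point years. A penalty then decides how many change points the data support. Two choices here are assumptions: the penalty is the Bayesian information criterion (BIC), and the segments are assumed to join up. The minimum segment length `min_seg` and the count of two parameters per change point are also illustrative. The demo uses synthetic data with one built-in change point, not GISTEMP. It is pure Python so it runs without extra libraries. Exhaustive search grows quickly with the number of change points, so this brute-force form suits only short series or few change points.

```python
import itertools
import math
import random

def solve(a, b):
    """Solve the square system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_hinges(t, y, breaks):
    """Least-squares fit of a continuous piecewise-linear curve with kinks at
    `breaks`; returns the residual sum of squares."""
    rows = [[1.0, ti] + [max(0.0, ti - b) for b in breaks] for ti in t]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)
    return sum((yi - sum(bj * rj for bj, rj in zip(beta, r))) ** 2 for r, yi in zip(rows, y))

def best_change_points(t, y, max_k=2, min_seg=8):
    """Try every combination of up to max_k change points (at least min_seg steps
    apart and from the ends) and return the one with the lowest BIC."""
    n = len(t)
    candidates = t[min_seg:n - min_seg]
    best = (float("inf"), ())
    for k in range(max_k + 1):
        for breaks in itertools.combinations(candidates, k):
            if any(b2 - b1 < min_seg for b1, b2 in zip(breaks, breaks[1:])):
                continue
            sse = fit_hinges(t, y, breaks)
            n_params = 2 + 2 * k  # intercept, slope, plus slope change and location per break
            bic = n * math.log(sse / n) + n_params * math.log(n)
            best = min(best, (bic, breaks))
    return best[1]

# Synthetic series (NOT observed data): flat for 30 steps, then rising 0.02 per step
random.seed(0)
t = list(range(60))
y = [(0.02 * (ti - 30) if ti >= 30 else 0.0) + random.gauss(0, 0.05) for ti in t]
print("change points found at:", best_change_points(t, y))
```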

In summary: that the warming since 1998 “is not significant” is completely irrelevant. This warming is real (in all global surface temperature data sets), and it is factually wrong to claim there has been no warming since 1998. There has been further warming despite the extreme cherry pick of 1998.

What is relevant, in contrast, is that the warming since 1998 is not significantly less than the long-term warming. So while there has been a slowdown, this slowdown is not significant in the sense that it is not outside of what you expect from time to time due to year-to-year natural variability, which is always present in this time series.

Given the warm temperature of 2014, we already see the meme emerge in the media that “the warming pause is over”. That is doubly wrong – there never was a significant pause to start with, and of course a single year couldn’t tell us whether there has been a change in trend.

Just look at Figure 1 or Figure 4 – since the 1970s we have simply been in an ongoing global warming trend, on which short-term natural variability is superimposed.

Weblink: Statistician Tamino shows that in none of the global temperature data sets (neither for the surface nor the satellite MSU data) has there recently been a statistically significant slowdown in warming trend. In other words: the variation seen in short-term trends is all within what one expects due to short-term natural variability. Discussing short-term trends is simply discussing the short-term “noise” in the climate system, and teaches us nothing about the “signal” of global warming.

If I could take a few pokes at some questions:

@wili #2 – Accelerate sooner from 0.175? Yes. Using the merged land-ocean-ice datasets or Cowtan & Way and then selecting start and end points cunningly as is often done by the Cornwall Alliance except looking for the high rather than the low end of the trend range, we’re already able to find for an end date of 2008:

Trend: 0.193 ±0.064 °C/decade (2σ)

β=0.019338 σw=0.00084650 ν=14.435 σc=σw√ν=0.0032162

At the high end, that’s 0.257 °C/decade, a substantial 47% faster than six decades. We have a 5% chance to see 2 °C about 2040, discounting the post-2008 dip arguably attributable to some short-term influence like a volcano or La Nina. That’s without the acceleration we can expect under the current world policy as announced by the positions of the ten highest emitting nations. To my thinking, a 5% chance is too high.
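The tool output quoted above can be reproduced with a few lines of arithmetic, as a check on how the numbers fit together. This sketch reads the labels as follows: β is the least-squares slope in °C per year, σw its standard error under a white-noise assumption, and ν an inflation factor for autocorrelated residuals, so that σc = σw√ν. That reading is an assumption based on the labels, and how the tool estimates ν is not described here.

```python
import math

beta = 0.019338       # least-squares slope, °C per year (from the tool output)
sigma_w = 0.00084650  # standard error assuming white-noise residuals
nu = 14.435           # autocorrelation inflation factor

sigma_c = sigma_w * math.sqrt(nu)  # corrected standard error, °C per year
trend = 10 * beta                  # °C per decade
half_width = 2 * 10 * sigma_c      # 2σ half-width, °C per decade
print(f"σc = {sigma_c:.7f}")
print(f"Trend: {trend:.3f} ±{half_width:.3f} °C/decade (2σ)")
```

Adding the rounded figures, 0.193 + 0.064, gives the 0.257 °C/decade high end quoted above; adding them before rounding gives about 0.258.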

@Jef Jelten #3 – I distrust “lag” as a word for something as complex and convoluted as the role CO2 plays in the carbon cycle. Lags will be multimodal and dynamic; trying to simplify this to a single term without a clear single dominating relation will always give dubious results and open needless questions. It’s as bad to look for a single lag value as to seek a single climate sensitivity number.

@Random #10 – http://www.woodfortrees.org/plot/hadcrut4gl/mean:191/mean:193/detrend:-0.17/plot/hadcrut4nh/from:1880.25/mean:6/every:12/mean:3/detrend:-0.17/plot/hadcrut4gl/from:1880.75/mean:6/every:12/mean:3/detrend:-0.17/plot/esrl-co2/normalise/offset:0.15/mean:31/plot/esrl-co2/normalise/offset:0.55/mean:31/plot/hadcrut4sh/mean:191/mean:193/detrend:-0.17/plot/sidc-ssn/normalise/offset:0.2/from:1870/mean:31 gives another view of what you’re talking about (-0.17 detrend to approximate Cowtan & Way). h/t Trenberth for the idea of looking at seasonal subtrends.

I don’t see a hiatus. I see a Northern Hemisphere winter mid-1990s strange attractor, which makes sense. AGW is strongest where the sun shines most.

@eric #25 – Thanks for the response. Tamino always makes me feel like a messy child by comparison with the elegant work of straightforward presentations.

2010 was a long time ago in climatology. It’s a Marcott et al, Cowtan & Way, MLOST & MLOIST, and four rejigs and one critical independent audit each of RSS & UAH ago.

Moreover, we understand volcano, ENSO and the Great Conveyor’s roles somewhat better, I think, and the one key difference is we now realize — h/t Cowtan & Way — that the suitability to purpose, with that purpose often being comparison to the ensemble of model outputs, of the major datasets remains low. The models were omniscient within their simulations, excluded or randomly planted volcano and ENSO, and suffered granularity issues.

It is past time to revisit, with attention to how to treat the quality of each modern dataset in regards its fitness to compare to the models, I think.

I was rather startled when I found Hansen 1988 Scenario “B” to exactly match, with these factors accounted for; can anyone else verify?

> Fig. 4. Global temperature (annual values, GISTEMP data 1880-2014) together with piecewise linear trend lines from an objective change point analysis. (Note that the value for 2014 will change slightly as it is based on Jan-Oct data only.) Graph by Niamh Cahill.

Can you please provide the link to that specific change point analysis? Note, I posted the abstract to a different change point analysis over on the Unforced Variations thread.

> It is the proper statistical technique for subdividing a time series into sections with different linear trends. Rather than hand-picking some intervals to look at, like I did above, this algorithm objectively looks for times in the data where the trend changes in a significant way. It will scan through the data and try out every combination of years to check whether you can improve the fit with the data by putting change points there. The optimal solution found for the global temperature data is 3 change points, approximately in the years 1912, 1940 and 1970. There is no way you can get the model to produce 4 change points, even if you ask it to – the solution does not converge then, says Cahill. There simply is no further significant change in global warming trend, not in 1998 nor anywhere else.

to clarify my last request, I do find the result compelling, at least in part because it is what I expected a change-point analysis with linear trends between the cutpoints would show. I would just like to read more about the details of how this was done.

One need only consider the heuristics of performing changepoint analysis on a random walk to understand the common admonition against using this tool on an autoregressive timeseries. Was some new method used to avoid these difficulties and if so, could you provide a reference?

The above admonition aside, the results appear to be dataset dependent. Performing cp analysis on the Hadcrut4 northern hemisphere data using the standard R package yields 3 breakpoints (July 1925, July 1987, Jan 1997).

It’s also worth noting that any recent hiatus seems to be confined to boreal winter…e.g., here.

[Response: Good point Chris. Always good to look at seasonal changes, which can be quite different from the annual mean. In this case, isn’t this just more evidence that the use of 1998 as a start point simply emphasizes El Niño (which generally peaks in DJF)?–eric]

Am I seeing that the median temperature for circa 2010 was as high as the peak in temperature in 1998?

“This is perhaps the best and simplest way to visualize the absence of any ‘pause’, courtesy of Gavin:”

https://pbs.twimg.com/media/B38Na3TIAAAFWfU.png:large

I like the idea of the above link. The last period plotted would be about 1985 to 2014, which might present a problem. I unconventionally made a similar plot ending with recent trends of the last 28, 26, 24, 22…years. Noticeable flattening was seen. With change point analysis this diagram might be related to conceptually what happens: http://www.nature.com/nclimate/journal/v1/n4/images_article/nclimate1143-f2.jpg The relevant slopes change.

“I will be using the HadCRUT4 hybrid data, which have the most sophisticated method to fill data gaps in the Arctic … That’s global warming – a measured fact.”

This should not be a surprise to anyone as warming should be less ambiguous in the arctic due to arctic/polar amplification.

For Dimitris Poulos:

The deadline for next EGU meeting is Jan 7 2015. If the paper were accepted, even as a poster, useful feedback might be gained from an expert audience.

In Figure 4, what caused the pause between 1940 and 1970?

Of course there is no doubt about the ongoing radiative forcing; nevertheless, the “hiatus” has an influence: http://www.imgbox.de/show/img/VDB75GV2Sc.gif . The upper 95% confidence limit was reduced sharply when looking at GISS data. In 2007 the upper limit of warming was about 2.06 K/century; in 2014 (using the Jan.–Oct. average) it is reduced to 1.83 K/century. Could it be a hint of internal variability?

Random: the plot also shows the trends for the months other than winter; in the end there is no difference. The (non-significant) increase in the winter trend slopes before 2008 was the result of some winter warming in the 1990s.

@Victor #49

The answer may simply be not to fret over intervals while staring at the global mean. It might simply be looking at the seasonal data for each hemisphere. And should it turn out that in said interval of 20 years all four indicators went up and in said interval of 16 years three go up and one goes down – then maybe it’s just not about the intervals per se…

@49 (Victor): You write “The interval during which, as you put it, “global warming really took off,” is roughly 20 years. A difference of only 4 years.” From the article: “Let’s start by looking at the full record, which starts in 1979 since the satellites come online there (and it’s not long after global warming really took off).” From 1979 to 2014 is 35 years, not 20. Not sure where you get the 20 from. That would be from 1994, and nowhere in the article (unless I missed it somehow) does Stefan examine trends from 1994.

Also, you say “I’m wondering why you chose Hadcrut4 hybrid rather than simply Hadcrut4.” The answer is clearly explained — just follow the link provided by Stefan in which the Cowtan and Way paper and its meaning and significance is discussed.

Eric-

I don’t think the 1998 start date choice matters much for the “hiatus” (or a sabbatical?) in global temperature. Of course, the start date must matter quantitatively for trends of this length, but Jochem Marotzke and some others have played the game of plotting 15yr-ish trends with different start dates, and you don’t really escape the fact that there’s something somewhat interesting to explain.

> Frank says:7 Dec 2014 at 1:47 AM

> In Figure 4, what caused the pause between 1940 and 1970?

First, don’t assume there’s some one thing going on; that question’s much discussed. Look at some of the discussion here at RC for instance:

https://www.google.com/search?q=site:Arealclimate.org+climate+1940+1970

Or more generally

https://www.google.com/search?q=climate+1940+1970

But you might have heard claims like “there’s been no warming since 1998”, so let us have a look at temperatures starting in 1998 (the year sticking out most above the trend line in the previous graph)

I will be using the HadCRUT4 hybrid data, which have the most sophisticated method to fill data gaps in the Arctic with the help of satellites,

Keith Woollard said: “You lose me at the first graph. If you use the satellite data you cannot make any of the points you raise.”

[Response: Of course the same points about the meaning of significance and confidence intervals could have been made also with satellite data. But here we are interested in the evolution of global surface temperatures, which cannot be measured by satellites. – stefan]

I’m curious, why aren’t they using the satellite data?

[Response: Satellite data doesn’t measure the same thing and has different levels of signal and noise. – gavin]

The issue is that claims like “there’s been no warming since 1998” relate to the satellite data which do show a pause for that time no matter how you try to deny it.

You cannot both incorporate satellite data and then dismiss it.

On the topic of autocorrelation: the change point analysis uses a simple linear regression approach to model the data and so does not account for autocorrelation. However, the GISS data are yearly averages. The autocorrelation in the resulting annual data will be small. Augmenting the regression model with a first order (or higher) autoregressive term may address any issues with autocorrelation, but I don’t believe that it would alter the conclusions. In fact, autocorrelation in the data would likely mask the change point(s) and make a change in rate harder to establish. Therefore, while accounting for the small amount of autocorrelation may make estimating the regression coefficients slightly more efficient, it will not result in another change point.
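One way to check how small the autocorrelation in annual means actually is: estimate the lag-1 autocorrelation ρ of the regression residuals. A common rough rule for AR(1) residuals inflates the variance of a trend estimate by about (1 + ρ)/(1 − ρ), which is equivalent to shrinking the effective sample size by the same factor. The Python sketch below does both steps. The residual values are hypothetical, and the AR(1) rule is an approximation assumed here, not the method used in the change point analysis.

```python
def lag1_autocorrelation(resid):
    """Sample lag-1 autocorrelation of a residual series."""
    n = len(resid)
    m = sum(resid) / n
    num = sum((resid[i] - m) * (resid[i + 1] - m) for i in range(n - 1))
    den = sum((r - m) ** 2 for r in resid)
    return num / den

def ar1_variance_inflation(rho):
    """Approximate factor by which AR(1) noise inflates the variance of a trend."""
    return (1 + rho) / (1 - rho)

# Hypothetical residuals from an annual-mean trend fit, for illustration only
resid = [0.05, 0.02, -0.03, -0.06, 0.01, 0.04, -0.02, -0.01, 0.03, -0.03]
rho = lag1_autocorrelation(resid)
print(f"rho = {rho:.2f}, variance inflation ≈ {ar1_variance_inflation(rho):.2f}")
```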

Chris: see this graph. I used GISTEMP data, plotted 10, 15, 20 and 25-year regression slopes ending in a given year, through 2013. Very rough, but converts slopes into levels, easier to eyeball.
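The sliding-window idea described here (OLS slopes over the 10, 15, 20 or 25 years ending in each year) can be sketched in a few lines of Python. The input is a hypothetical, perfectly linear series. It is used only to show that every window then returns the same slope; with real GISTEMP data the curves would wander with the noise.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx

def trailing_slopes(years, temps, window):
    """Map each end year to the OLS slope (°C/decade) of the `window` years ending there."""
    return {
        years[i]: 10 * ols_slope(years[i - window + 1:i + 1], temps[i - window + 1:i + 1])
        for i in range(window - 1, len(years))
    }

# Hypothetical, perfectly linear 0.015 °C/yr series through 2013
years = list(range(1950, 2014))
temps = [0.015 * (y - 1950) for y in years]
for window in (10, 15, 20, 25):
    slopes = trailing_slopes(years, temps, window)
    print(window, "yr windows:", len(slopes), "end years, first slope",
          round(slopes[years[window - 1]], 3))
```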

Most of the heat from global warming goes into the oceans.

If AGW were a dog, the heat in the atmosphere would be the dog’s tail. When the dog is running in circles, sometimes the dog’s tail seems to go faster or slower than the body of the dog.

The air temps in the post just refer to how fast the dog’s tail moved as the dog ran an obstacle course.

If the dog is chasing a rabbit, what matters to the rabbit is how fast the dog’s teeth move. Likewise, if global warming is chasing us, we should not be focused on how fast the tail is moving. We should worry about how fast the whole process is moving including heat that goes into melting ice, melting permafrost, warming oceans, and increasing the latent heat in the atmosphere.

Plots of temperature anomalies vs year are of interest but this is not what we need.

The claim is that temperature increased because CO2 increased due to increase in the use of fossil fuel. This is the claim and therefore we must reduce the use of fossil fuel. This is what we must prove, or be relatively certain about it, before requesting the halt of use of fossil fuels.

We now have reliable measurements of CO2 since 1958. So give us a plot of yearly temperature anomalies vs yearly CO2 concentration, calculate the regression coefficient (r squared) and the p value and plot the 95% confidence interval. Pretty standard linear least squares regression analysis.

I don’t understand all this plotting temperature anomalies vs year. It has nothing to do with determination of a cause.

Gavin says, “the satellites measure something else”

Quite so, they measure the entire lower troposphere and the entire globe without the need for massive data interpolation.

[Response: Actually, they are indicative of a weighted mean with different channels having different weights through the atmosphere – including for MSU-MT significant weighting in the stratosphere which has completely opposite trends to the lower troposphere. Secondly, there is an enormous amount of data processing that goes into these estimates – combinations of data from different viewing angles, collation of data from different paths of the polar orbiting satellite (which only gives a complete view every 16 days), adjustments for non-climatic influences (orbital drift, sensor calibration) and homogenization of records from the multiple satellites over the years. That Spencer and Christy are working on version 6 of their algorithm should indicate to you that it isn’t quite as straightforward as you appear to believe. – gavin]

RE: angech #67

You maintain that there is “no warming since 1998”. There is significant warming from 1997 AND from 1999. Why is 1998 so much more important for humankind than any other year?

> You cannot both incorporate satellite data and then dismiss it.

angech, the rate of warming since 1998 in the UAH (satellite) record is 0.069 °C/decade.

Even Dr Roy Spencer has suggested that RSS are using data from a faulty sensor.

PS Any comments on this?: http://wattsupwiththat.com/2014/12/07/changepoint-analysis-as-applied-to-the-surface-temperature-record/#comment-1808948

I’ve been giving some thought to a recent paper by McKitrick which claimed to find a 19 year “pause” in HadCRUTv4, a 16 year “pause” in RSS and a 26 year “pause” in UAH. When I first read it, the methodology he used seemed strange. After giving it some thought for the last couple days, I think it is beyond strange.

To test his methodology out, I tried applying it to an earlier portion of the (HadCRUTv4) temperature record. I acted as though it was the beginning of 1997, and we had no idea the huge 1998 spike was coming. When I ran his code, I found a ~15 year “pause.” When I updated the data by just a single year, his code then said there had never been one in the first place.

That’s right. Under McKitrick’s methodology, if we rerun the analysis a year from now, the 19 year pause he claims we are experiencing could vanish completely. It’s crazy.

If you want to read the details, I wrote a post about it.

For the change point analysis method, it would be good to see an example of a time series where an end point is identified as a change point. It looks as though a discovered change point might require data on either side so that a slope change could be identified, in which case, it would not be sensitive to an emerging change.

@72

“Quite so, they measure the entire lower troposphere and the entire globe without the need for massive data interpolation”

Satellite data undergo all kinds of manipulation in order to produce a temperature anomaly. Surely you know this…

@74 Brandon

I actually emailed McKitrick about his paper and he was courteous enough to reply. He specifically refuted claims made for his paper, that it showed statistically significant periods of pause for the time periods quoted.

His method, strange or not, actually gives trends and 95% error margins that are very close to those delivered by Kevin Cowtan’s algorithm, except for UAH data where McKitrick claims the “hiatus” started in 1998:

McKitrick:

0.061 ±0.120 °C/decade

Cowtan:

Trend: 0.062 ±0.221 °C/decade (2σ)

McKitrick’s error is in concluding that the period when warming ceases to be statistically significant equates to the beginning of a hiatus or pause.

Non statistically significant warming does not mean no warming, statistically significant or otherwise.

McKitrick’s email:

“Dear Phil

I don’t know why the UAH result is so different. I don’t know what algorithm is used on the SKS website. Also there might have been revisions to the UAH data set since I accessed it.

My calculations didn’t aim to measure a statistically-significant trend in the neighbourhood of zero, instead I was aiming to measure how far back the hiatus apparently started.

Cheers,

Dr. Ross McKitrick”

With regards to the oft heard claim of no warming since 1998.

Here is the skeptic Roy Spencer’s UAH data:

http://tinyurl.com/lwpe4vr

The El Niño year of 1998 reduces the atmospheric warming rate if you take it as the start point. Wait another year until that effect is gone and it is warming as usual, paralleling the increase in CO2 concentration.

No (atmospheric) warming (or even reduced warming) for 16 years but warming as usual for 15 years is a logical nonsense based on a misinterpretation of the data.

Sixteen years ago there was an exceptionally hot year. That is all.

>”I don’t understand all this plotting temperature anomalies vs year. It has nothing to do with determination of a cause.”

But neither would

>”a plot of yearly temperature anomalies vs yearly CO2 concentration, calculate the regression coefficient (r squared) and the p value and plot the 95% confidence interval.”

It takes far more than any single graph to get at causation. The above seems to assume that CO2 concentration affects temperature on a yearly timescale. There is far more going on than just CO2 affecting temperature anomalies, so such a graph would have no probative value.

Re: #67 (angech)

You said:

You are mistaken on all points. Claims of a “pause” have related to both surface and satellite temperature records, in fact deniers have switched to emphasizing satellite records as the surface temperature data seems poised to embarrass their certainty. And, just as for the surface temperature data, the satellite records also lack valid evidence of a so-called “pause.”

I’ll be posting on the topic soon.

Philip Shehan, I was actually thinking about e-mailing him about his paper to see if he could offer an explanation for why people shouldn’t be bothered by what I demonstrated in that post. I agree with you that his calculations seem fine, but his conclusions seem untenable to me.

Using his methodology, one year we can say there was a “pause” from 1995-2014 while the next year we might say there was never any pause in the first place. That seems absurd. If temperatures didn’t rise for a decade, they didn’t rise for a decade. Whether or not temperatures rose years after that decade had passed shouldn’t change the answer.

I can’t even figure out how we’re supposed to interpret the results of the test. I don’t know what they could even mean. McKitrick says they show a “pause,” but as far as I can see, his definition of “pause” is weird and possibly useless.

Can you explain how it is determined whether a trend line is “statistically significant” or not? I understand the 2 sigma confidence level.

E.g., you show a result of 0.213 ± 0.248 °C/decade (2 sigma) and say that it is not statistically significant.

I have read the linked articles and none of them appear to say.

[Response: The long answer is that if you assume that the residuals from the linear trend are Gaussian with no auto-correlation, about 95% of the time a process with this trend and standard deviation will give a least squares trend within this range. If there is autocorrelation, or residuals are not Gaussian, then you need to adjust the bounds. Trends are generally just significant (or not) with respect to a null-hypothesis (which in this case is a zero trend) if the bounds do not include that null. A shorter answer is that ‘significance’ is a little arbitrary and we’d be better off giving pdf’s in any case. – gavin]
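The suggestion to think in terms of probability distributions rather than a yes/no threshold can be illustrated with the commenter's example, 0.213 ± 0.248 °C/decade. This sketch assumes the ± is 2σ of a Gaussian and ignores any further corrections. It converts the trend to a z-score and a two-sided p-value for the null hypothesis of zero trend, using only the Python standard library.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

trend, two_sigma = 0.213, 0.248  # °C/decade, from the example above
sigma = two_sigma / 2
z = trend / sigma
p_two_sided = 2 * (1 - normal_cdf(abs(z)))
print(f"z = {z:.2f}, two-sided p = {p_two_sided:.3f}")
print(f"P(trend > 0), reading the Gaussian loosely as a pdf = {normal_cdf(z):.3f}")
```

The p-value comes out a little under 0.09. That is not significant at the conventional 5% level, even though, read as a distribution, most of the probability lies on the warming side.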

Thanks I think I get it. It sounds like a rule of thumb.

Actually I think I understand it better visualizing it as a normal distribution graph or something like that – so good point about the PDFs.

rd50:

As I’m sure you realize, the claim will never be ‘proved’ to the satisfaction of motivated AGW-deniers. For the rest of us, the claim is more than sufficiently supported by the overwhelming preponderance of the verifiable evidence. The evidence further shows that the cost of climate change is likely to exceed the cost of switching from fossil fuels to alternative energy sources. With the information available, a rational economic actor would decide to switch. However, actors who would lose by the switch are introducing disinformation into the decision-making environment. That is impeding us, as an aggregation of economic actors, from making the rational decision. So sayeth I.

> rd50 says: 7 Dec 2014 at 10:13 PM … temperature vs. concentration

rd50 illustrates how people are fooled by Ploy #3.

Air and ocean temperature don’t instantly adjust when CO2 changes.

See: https://www.realclimate.org/index.php/archives/2014/12/the-most-popular-deceptive-climate-graph/#more-17765

So, according to Stefan, there has been no pause, no hiatus, only onward and upward. Yet the literature is full of “explanations” (hiding heat in the oceans, etc.) You can’t have it both ways. If there is nothing there then it doesn’t need an explanation.

[Response: The scientific community has indeed been trying to understand the specifics of the variations in temperature that have been observed, and in talking about that work, many scientists use the term “pause”. That’s unfortunate because it implies they agree with the fake-skeptics that the “pause” somehow shows that our fundamental understanding of climate is wrong. They don’t.–eric]

From Climate Change — How Do We Know? (http://climate.nasa.gov/evidence/)

“Data from NASA’s Gravity Recovery and Climate Experiment show Greenland lost 150 to 250 cubic kilometers (36 to 60 cubic miles) of ice per year between 2002 and 2006, while Antarctica lost about 152 cubic kilometers (36 cubic miles) of ice between 2002 and 2005.”

So, if 20 years is too brief a time to establish a trend, then obviously the loss of “150 to 250 cubic kilometers (36 to 60 cubic miles) of ice per year between 2002 and 2006” means little to nothing, right? Might just be “noise.” Yet, according to NASA, these ice sheets are shrinking. Sure looks like they’re shrinking to me.

Oh and by the way, note the absence of any linear regression analysis or confidence interval.

[Response: Or, you know, you could look at the up-dated numbers that go through to 2013: Williams et al (2014). – gavin]

First, I want to commend Stefan for airing his views out here, in the open, for anyone to comment on, and for being so willing to respond, even to the most skeptical of skeptics. I’d also like to thank the monitors of this blog for being so open to all viewpoints, with minimal censorship (so far, at least, as I can tell). Such behavior is, to me, the mark of the true scientist, more so than the use of sophisticated math or complicated experiments (those are good too). This site, as I see it, represents the Internet at its best, despite all the many personal attacks, though I suppose that can’t be helped on such an open site.

Now, in response to #64 Robert, who writes: “Also, you say “I’m wondering why you chose Hadcrut4 hybrid rather than simply Hadcrut4.” The answer is clearly explained — just follow the link provided by Stefan in which the Cowtan and Way paper and its meaning and significance is discussed.”

I ignored that link at first, but now that Robert mentioned it, I checked it out. VERY interesting. SO interesting it looks at first glance as though it could be a game changer. Problem is, as so eloquently stated by Tom C just above in #85, “You can’t have it both ways. If there is nothing there than it doesn’t need an explanation.” And we’ve already had so many “explanations” of the hiatus, or research projects attempting to explain it away (such as the Cowtan and Way paper, not to mention a very interesting, and completely different, paper co-authored not too long ago by Stefan himself), that they are starting to cancel one another out. (In the words of the Bard: “methinks the lady doth protest too much.”)

Realistically, what we find in all these various surveys, and all the attempts to interpret them in ways that support one viewpoint or the other, is just one huge opportunity for confirmation bias on BOTH sides. You picks your data and you takes your choice. What appears to be lacking is anything truly definitive. Yet the warming argument is being made as though it is in fact definitive. And more than just definitive, but a result so clear and irrefutable that we must act upon it NOW or we are — quite literally — doomed!

Interestingly, Stefan doesn’t make all that much of Cowtan and Way’s rather dramatic findings, noting that “the same basic points can be illustrated with other data just as well.” Is that really the case?

The Hadcrut4 (non-hybrid) trend from 1850 to present is only 0.047 ± 0.006 °C per decade, as opposed to 0.175 °C per decade for Hadcrut4 hybrid from 1979 on, which is much steeper. GISSTEMP is 0.065. The RSS trend from 1979 is 0.125, which looks like warming, yes. However, the RSS trend from 1997 to present is negative (-0.014). And even from 1999 to present (after the big El Niño) it’s only 0.025 (though admittedly with a very large error range).
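The figures above come from different datasets and, just as importantly, different start years. As a rough illustration of the start-year effect only, here is a minimal Python sketch on a hypothetical synthetic series (not real temperature data): one constant trend of 0.17 °C/decade plus white noise, fitted by ordinary least squares from several start years. The noise level, seed and start years are assumptions chosen for illustration, and the naive 95% interval ignores autocorrelation, so for real monthly data it would be too narrow.

```python
import numpy as np

def ols_trend_per_decade(t, y):
    """OLS slope in degC/decade and a naive 95% half-width.
    Assumes uncorrelated residuals, so it understates the uncertainty
    for autocorrelated monthly temperature data."""
    t = np.asarray(t, float)
    y = np.asarray(y, float)
    tc = t - t.mean()
    sxx = np.sum(tc ** 2)
    slope = np.sum(tc * (y - y.mean())) / sxx  # degC per year
    resid = y - y.mean() - slope * tc
    se = np.sqrt(np.sum(resid ** 2) / (len(t) - 2) / sxx)
    return 10 * slope, 10 * 1.96 * se

# Hypothetical synthetic monthly series, 1979-2014:
# a constant 0.17 degC/decade trend plus white noise (sd 0.15 degC)
rng = np.random.default_rng(1)
t = 1979 + (np.arange(36 * 12) + 0.5) / 12
y = 0.017 * (t - 1979) + rng.normal(0, 0.15, t.size)

results = {}
for start in (1979, 1990, 1998, 2005):
    m = t >= start
    results[start] = ols_trend_per_decade(t[m], y[m])
    trend, half = results[start]
    print(f"from {start}: {trend:+.3f} +/- {half:.3f} degC/decade")
```

Although the underlying trend never changes in this toy series, the estimates from later start years wander further from 0.17 and carry much wider intervals, simply because the windows are shorter.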

These statistical assessments are all over the place. So how do we determine which are “right on” and which are misleading? As I see it, we don’t. We just can’t. It’s all too complicated, starting with all the different data sources themselves and ending with all the various assessments, both subjective and statistical.

So what the whole matter appears to boil down to is: 1. we can’t really say for sure, but it looks like we’re dealing with AGW, because there just doesn’t seem to be any other explanation for the radically increased warming since 1850 (hiatus or not). AND 2. we just can’t afford to take the chance that AGW might not be significant, we have to act as though it were, because the potential consequences of the skeptics being wrong are just too dire.

I don’t buy the above assessment, by the way, but I do think that’s the best case the “warmists” can make. And by the way, you will never be able to separate “the science” from the politics, so don’t even bother to try.

#83, and priors (no pun intended)–

rd50 seems to have as the null that fossil fuel use is harmless.

Which raises the questions: what evidence would allow him or her to reject the null? And is the null well-chosen–particularly given that the ‘harm’ in ‘harmless’ is potentially very real?

I see that the temperature series is Land / ocean. How are measured water temperatures combined with measured air temperatures?

Total mass and heat capacity of air and water is very different as illustrated by these estimates:

Specific heat capacity of air: approximately 1.0 kJ/(kg·K)

Total mass of the atmosphere: 5.14 × 10¹⁸ kg

Specific heat capacity of water: approximately 4.2 kJ/(kg·K)

Total mass of the seas: 1.4 × 10²¹ kg
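Multiplying each mass by its specific heat gives the total heat capacity of each reservoir, which makes the size of the difference concrete. A minimal Python sketch using only the approximate values listed above (whole-reservoir totals, as given in the comment):

```python
# Approximate values as listed in the comment above
cp_air = 1.0e3     # J/(kg K)
m_air = 5.14e18    # kg
cp_water = 4.2e3   # J/(kg K)
m_ocean = 1.4e21   # kg

C_air = cp_air * m_air          # total heat capacity of the atmosphere, J/K
C_ocean = cp_water * m_ocean    # total heat capacity of the oceans, J/K
ratio = C_ocean / C_air

print(f"atmosphere: {C_air:.2e} J/K")   # atmosphere: 5.14e+21 J/K
print(f"ocean:      {C_ocean:.2e} J/K") # ocean:      5.88e+24 J/K
print(f"ratio:      {ratio:.0f}")       # ratio:      1144
```

On these numbers the oceans can absorb roughly a thousand times more heat than the atmosphere per kelvin of warming.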

What is the definition of the measurand?

> if 20 years is too brief a time to establish a trend

This is entirely dependent upon the dataset and is not a rule for all climate datasets. Again, learning statistics will help alleviate your confusion. The amount of time needed to establish a trend is a property of the data.
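One way to see why the required record length is a property of the data: for n equally spaced annual values with uncorrelated noise of standard deviation σ, the standard error of the OLS slope is σ / sqrt(n(n² − 1)/12). A minimal Python sketch, assuming white noise and a 1.96 standard-error detection threshold (both simplifications; real temperature noise is autocorrelated, which lengthens the required record), finds the shortest record in which a given trend stands out:

```python
import math

def years_to_detect(trend_per_year, noise_sd, z=1.96):
    """Smallest number n of annual values for which an OLS trend of the
    given size exceeds z standard errors, assuming white (uncorrelated) noise."""
    if trend_per_year <= 0 or noise_sd <= 0:
        raise ValueError("trend and noise must be positive")
    n = 3
    while True:
        sxx = n * (n * n - 1) / 12.0  # sum of squared deviations of t = 1..n
        se = noise_sd / math.sqrt(sxx)
        if trend_per_year > z * se:
            return n
        n += 1

# Hypothetical inputs: a 0.017 degC/yr trend with two different noise levels
print(years_to_detect(0.017, 0.1))  # 12
print(years_to_detect(0.017, 0.2))  # 19
```

Doubling the noise raises the required length from 12 to 19 years for the same trend, so no single "N years" rule can apply to every dataset.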

> How is measured water temperatures combined with measured air temperatures?

Click on the “Data Sources” link in the top menu of this site; there you can access all the various data sets used in climate science.

This post is using HadCRUT 4.

http://hadobs.metoffice.com/hadcrut4/index.html

Tamino says: 8 Dec 2014 at 8:52 AM Re: #67 “(angech) said: The issue is that claims like “there’s been no warming since 1998” relate to the satellite data which do show a pause for that time no matter how you try to deny it. ”

“You are mistaken on all points. I’ll be posting on the topic soon.”

I will look forward to reading it, and I know I can comment on your blog at the time. Thanks for coming back to the fray. I hope you will address the issue of the satellite data RSS, which shows an 18 year pause according to skeptics. I realise it is not sea surface data, but it is still a genuine instrumental data set of atmospheric warming that should not be ignored just because it differs from your view that there has been no pause. The concept of a pause is gaining traction, and others have pointed out that there have been numerous attempts by warmists to explain why it has been happening. Why would they bother putting up multiple explanations if what they are trying to explain away did not exist, to some extent, in the first place?

Comment by Unsettled Scientist — 8 Dec 2014 @ 11:24 PM

Thanks for your reply, but I cannot find any useful information by following links you provided.

This is what I find for HadCRUT at the following link:

http://www.cru.uea.ac.uk/cru/data/temperature/

“Why are sea surface temperatures rather than air temperatures used over the oceans?

Over the ocean areas the most plentiful and most consistent measurements of temperature have been taken of the sea surface. Marine air temperatures (MAT) are also taken and would, ideally, be preferable when combining with land temperatures, but they involve more complex problems with homogeneity than SSTs (Kennedy et al., 2011). The problems are reduced using night only marine air temperature (NMAT) but at the expense of discarding approximately half the MAT data. Our use of SST anomalies implies that we are tacitly assuming that the anomalies of SST are in agreement with those of MAT. Kennedy et al. (2011) provide comparisons of hemispheric and large area averages of SST and NMAT anomalies. ”

“How are the land and marine data combined?

Both the component parts (land and marine) are separately averaged into the same 5° x 5° latitude/longitude grid boxes. The combined version (HadCRUT4 ) takes values from each component and weights the grid boxes according to the area, ensuring that the land component has a weight of at least 25% for any grid box containing some land data. The weighting method is described in detail in Morice et al. (2012). The previous combined versions (HadCRUT3 and HadCRUT3v) take values from each component and weight the grid boxes where both occur (coastlines and islands) according their errors of estimate (see Brohan et al., 2006 for details). ”
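To make the quoted description concrete, here is a minimal Python sketch of one simplified reading of it. This is not the Morice et al. (2012) code: the function names are hypothetical, each box blends land and sea anomalies by land fraction with the land weight floored at 25% when land data exist, and the global mean uses cos(latitude) area weights. Note that what is averaged are temperature anomalies; no heat capacity enters the calculation.

```python
import numpy as np

def combine_box(land_anom, sea_anom, land_fraction, min_land_weight=0.25):
    """Blend land and sea anomalies (degC) in one grid box.
    Hypothetical simplification: weight by land fraction, but give the
    land component at least `min_land_weight` when land data are present."""
    if land_anom is None:
        return sea_anom
    if sea_anom is None:
        return land_anom
    w = max(land_fraction, min_land_weight)
    return w * land_anom + (1 - w) * sea_anom

def global_mean(box_anoms, box_lats):
    """Area-weighted mean of grid-box anomalies; weights are proportional
    to cos(latitude). Empty boxes (NaN) are skipped."""
    a = np.asarray(box_anoms, float)
    w = np.cos(np.deg2rad(np.asarray(box_lats, float)))
    ok = ~np.isnan(a)
    return float(np.sum(a[ok] * w[ok]) / np.sum(w[ok]))

# A mostly-ocean coastal box: land weight is raised to the 25% floor
print(combine_box(0.8, 0.3, land_fraction=0.1))
# Two boxes at the equator and at 60 degrees, plus one empty box
print(global_mean([0.4, 0.6, np.nan], [0.0, 60.0, 30.0]))
```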

Neither the paper by Kennedy et al. (2011) nor the paper by Morice et al. (2012) considers heat capacity.

Is this an issue at all? Anybody know of a paper considering differences in heat capacity?

Re: #55 (Jeff Patterson)

You say:

Autocorrelated time series are not necessarily a “random walk”; global temperature time series, for instance, aren’t. This has been demonstrated numerous times, most recently (for satellite data sets) by none other than Ross McKitrick, a leading proponent of the so-called “pause”. Using the random walk argument is faulty reasoning.

A caution against using change-point analysis on autocorrelated time series is that accounting for autocorrelation is a complicated process. But it can be done.

If you don’t, then there’s a distinct possibility you may identify a changepoint which isn’t real. What autocorrelation won’t do is cause you to miss changepoints that are real. If there really were a changepoint after 1970, it would not have been missed. It’s just not there.

And, by using annual averages the autocorrelation is greatly reduced, to the point that its impact is quite small. Those who insist on using monthly data must account for it explicitly, or run the risk of finding too many changepoints that aren’t real.
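The effect of annual averaging can be checked on synthetic noise. A minimal Python sketch, assuming monthly noise that follows an AR(1) process with lag-1 coefficient 0.6 (a hypothetical value, not fitted to any dataset), compares the lag-1 autocorrelation of the monthly series with that of its annual means:

```python
import numpy as np

def ar1_series(n, phi, sd, rng):
    """AR(1) noise x[i] = phi * x[i-1] + e[i], started from its stationary distribution."""
    x = np.empty(n)
    x[0] = rng.normal(0, sd / np.sqrt(1 - phi ** 2))
    for i in range(1, n):
        x[i] = phi * x[i - 1] + rng.normal(0, sd)
    return x

def lag1_autocorr(x):
    """Sample lag-1 autocorrelation."""
    x = np.asarray(x, float) - np.mean(x)
    return float(np.sum(x[1:] * x[:-1]) / np.sum(x * x))

rng = np.random.default_rng(0)
monthly = ar1_series(12 * 1000, phi=0.6, sd=0.1, rng=rng)  # hypothetical 1000 years
annual = monthly.reshape(-1, 12).mean(axis=1)               # calendar-year means

print(f"monthly lag-1 autocorrelation: {lag1_autocorr(monthly):.2f}")
print(f"annual  lag-1 autocorrelation: {lag1_autocorr(annual):.2f}")
```

With these settings the monthly value comes out near 0.6, while the annual means show only a small residual autocorrelation (theory gives roughly 0.09), consistent with the point that annual averaging greatly reduces its impact.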

It seems that you weren’t using it to find a change in slope, but a change in mean, which isn’t the topic of this post at all — and certainly wouldn’t lead to the same changepoints. More to the point, since you did changepoint analysis of monthly data you should expect to find false changepoints. All you’ve shown is the folly of doing it when the autocorrelation is very strong but isn’t accounted for.

Here’s an experiment for you: generate an artificial time series for 100 years of monthly data consisting of an upward linear trend with no noise at all. Perform cp analysis using the standard R package. See what you find.
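The suggested experiment can be approximated without R. The sketch below is a hypothetical, minimal penalized change-in-mean detector using binary segmentation; it is a stand-in written for illustration, not the R package, and the penalty and minimum segment length are arbitrary assumptions. Applied to 100 years of noiseless monthly data with a constant upward trend, it reports many changepoints, because a rising line is never well described by a single mean:

```python
import numpy as np

def segment_cost(y):
    """Sum of squared deviations from the segment mean (change-in-mean cost)."""
    return float(np.sum((y - y.mean()) ** 2))

def binary_segmentation(y, penalty, min_len=12):
    """Hypothetical minimal change-in-MEAN detector: recursively split a
    segment where the cost reduction exceeds `penalty`."""
    changepoints = []

    def split(lo, hi):
        seg = y[lo:hi]
        if len(seg) < 2 * min_len:
            return
        full = segment_cost(seg)
        best_gain, best_k = 0.0, None
        for k in range(min_len, len(seg) - min_len + 1):
            gain = full - segment_cost(seg[:k]) - segment_cost(seg[k:])
            if gain > best_gain:
                best_gain, best_k = gain, k
        if best_k is not None and best_gain > penalty:
            changepoints.append(lo + best_k)
            split(lo, lo + best_k)
            split(lo + best_k, hi)

    split(0, len(y))
    return sorted(changepoints)

# 100 years of monthly data: a pure linear trend, no noise at all
t = np.arange(1200)
y = 0.001 * t  # hypothetical 0.012 degC/month = 0.12 degC/decade
cps = binary_segmentation(y, penalty=0.01)
print(len(cps), "changepoints found in a series with no change in slope")
```

Every one of those changepoints is spurious: the series has exactly one slope throughout, which is the point of the experiment.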

Further to my #76, another reassurance that would be helpful would be an example where the change point analysis identifies changes in slope of the same sign rather than just changes in slope of alternating sign as in fig. 4.

> “warmists”

> …

> We just can’t. It’s all too complicated

— Victor, rebunking the old whine Ignoramus et ignorabimus — the argument from incompetence as well as from incomprehension.

Nonsense.

#90–A quick answer is, this paper:

http://onlinelibrary.wiley.com/doi/10.1002/qj.2297/abstract

I believe it’s paywalled, though, so you might have to dig a little bit more to actually access the information for free. Either go to a research institution library near you (presuming there is such), or search online to find pre-prints or summaries. You can also contact the researchers directly; usually they will be willing to help those who are not too importunate.

Some here may already have some pre-print links to share.

kdmsooboy:

I don’t know if rd50 was making that claim — after all, he/she did say “we must prove [that increasing atmospheric CO2 is caused by fossil fuel emissions]”. My point, and I presume kdmsooboy’s, was that it will never be proven to a pseudo-skeptic’s satisfaction, and that a genuine skeptic would be convinced by the preponderance of existing evidence.

I did want to amplify my comment a bit more for the benefit of lurkers who don’t already know this, by pointing out that climate change is a tragedy of the commons: as long as the cost of climate change is external to the price he pays for FFs, a rational economic actor might decide not to switch from FFs to alternatives. The solution is for the society of rational actors to agree to internalize climate change costs, for example by a carbon tax on FFs. But of course each individual actor is motivated by self-interest, and there are actors who stand to lose big if the external costs of FF use are internalized. Those individuals have incentive to propagate disinformation about the causes and effects of climate change, and in fact 100s of millions of dollars have been spent for that purpose. As a result, more than a decade after the scientific consensus on AGW emerged, polls persistently show that

IMHO, it’s not an information deficit but a flood of disinformation that has prevented an effective national climate mitigation policy. If not for that, I strongly suspect there would already be a sufficient political majority in the US to enact a carbon price. Paraphrasing Socrates by way of Josh Billings by way of Will Rogers, “It ain’t what people don’t know that’s the problem, it’s what they think they know that ain’t so!”.

#96 Kevin McKinney

Thank you for being helpful. The paper by Kevin Cowtan and Robert G. Way, “Coverage bias in the HadCRUT4 temperature series and its impact on recent temperature trends”, is mainly concerned with: “Incomplete global coverage is a potential source of bias in global temperature reconstructions if the unsampled regions are not uniformly distributed over the planet’s surface.”

However, the paper is not at all concerned with the difference in specific heat capacity between air and water.

If I combine temperature changes (anomaly) of sea water and air without taking into consideration heat capacities of the amounts of water and air in the system I believe I will be adding apples and oranges.

I am sure I must be missing something here. The IPCC does not mention heat capacity even once in its 2014 Synthesis Report. I just can’t imagine how heat capacity and specific heat capacity of air and water can be disregarded when combining sea-surface temperatures and air temperatures into a temperature data series. And I can’t find any information about how this is handled.