As the World Meteorological Organization (WMO) has just announced that “The year 2014 is on track to be the warmest, or one of the warmest years on record”, it is timely to have a look at recent global temperature changes.

I’m going to use Kevin Cowtan’s nice interactive temperature plotting and trend calculation tool to provide some illustrations. I will be using the HadCRUT4 hybrid data, which use the most sophisticated method to fill data gaps in the Arctic with the help of satellites, but the same basic points can be illustrated just as well with other data.

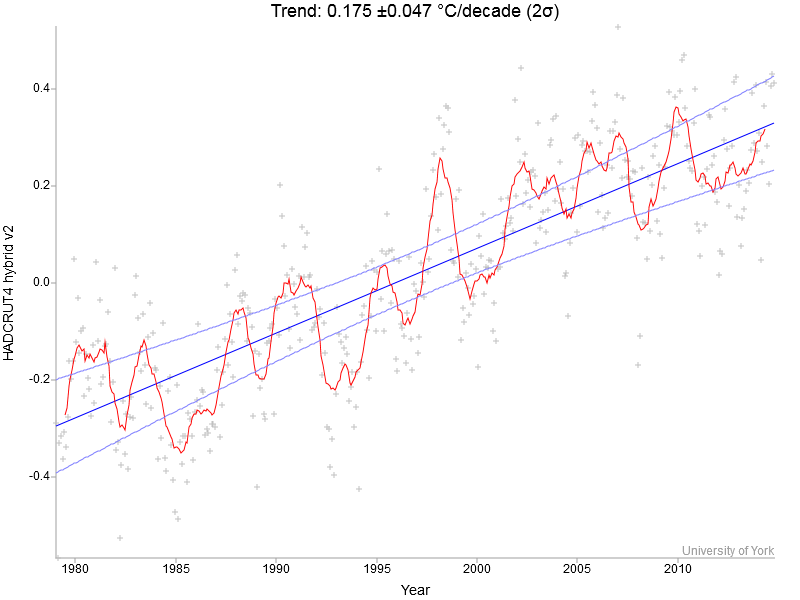

Let’s start by looking at the full record, which begins in 1979, when the satellite data become available (not long after global warming really took off).

Fig. 1. Global temperature 1979 to present – monthly values (crosses), 12-months running mean (red line) and linear trend line with uncertainty (blue)

You clearly see a linear warming trend of 0.175 °C per decade, with a confidence interval of ±0.047 °C per decade. That’s global warming: a measured fact.
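For readers who want to see where numbers of this kind come from, here is a minimal sketch of an ordinary least-squares trend with an approximate 95% interval (±1.96 standard errors). It assumes independent (white-noise) residuals, which temperature data do not strictly satisfy, so it is not necessarily the method behind Cowtan’s tool and will not reproduce the ±0.047 exactly. The function names and the synthetic monthly series are hypothetical, chosen only for illustration.

```python
import math


def linear_trend(times, values):
    """Ordinary least-squares slope and its standard error.

    Assumes independent, identically distributed residuals (white noise).
    `times` are in years, so the slope is in degrees per year.
    """
    n = len(times)
    if n < 3:
        raise ValueError("need at least three points")
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    intercept = v_mean - slope * t_mean
    rss = sum((v - intercept - slope * t) ** 2 for t, v in zip(times, values))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope, std_err


def trend_per_decade(times, values, z=1.96):
    """Trend in degrees per decade with an approximate 95% half-width."""
    slope, std_err = linear_trend(times, values)
    return 10 * slope, 10 * z * std_err


# Hypothetical monthly series: 0.0175 degrees/year plus a regular wiggle.
months = [1979 + i / 12 for i in range(36 * 12)]
anoms = [0.0175 * (t - 1979) + 0.1 * math.sin(2 * math.pi * t * 0.37) for t in months]
trend, half_width = trend_per_decade(months, anoms)
print(f"{trend:.3f} +/- {half_width:.3f} degrees per decade")
```

The 1.96 factor is the normal approximation; with few data points a Student-t quantile would give a slightly wider interval.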

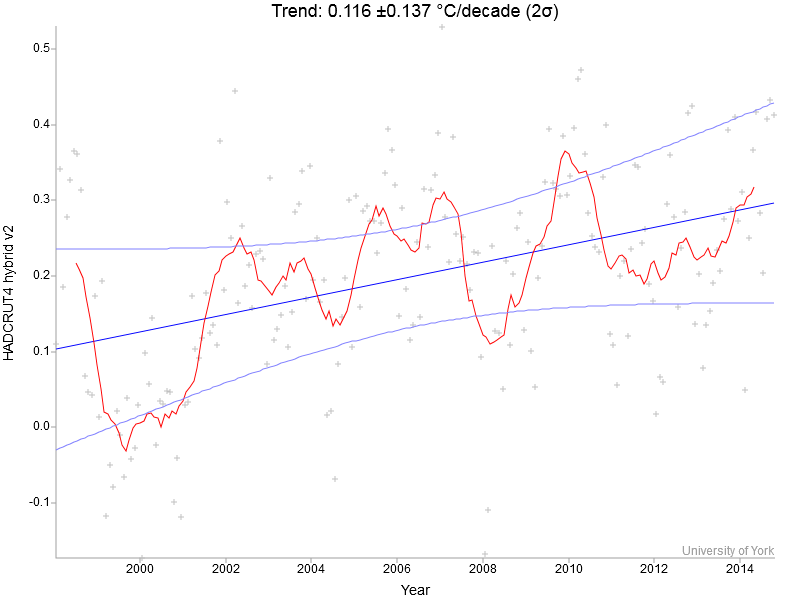

But you might have heard claims like “there’s been no warming since 1998”, so let us have a look at temperatures starting in 1998 (the year sticking out most above the trend line in the previous graph).

Fig. 2. Global temperature 1998 to present.

You see a warming trend (blue line) of 0.116 °C per decade, so the claim that there has been no warming is wrong. But is the warming significant? The confidence interval on the trend (±0.137 °C per decade) suggests not: taken at face value, it seems to say that the trend might have been as much as +0.25 °C per decade, or zero, or even slightly negative. So are we unsure whether there was a warming trend at all?

That conclusion would be wrong – it would simply be a misunderstanding of the meaning of the confidence intervals. They are not confidence intervals on whether a warming has taken place – it certainly has. These confidence intervals have nothing to do with measurement uncertainties, which are far smaller.

Rather, these confidence intervals refer to the confidence with which you can reject the null hypothesis that the observed warming trend is just due to random variability (where all the variance beyond the linear trend is treated as random variability). So the confidence intervals (and claims of statistical significance) do not tell us whether a real warming has taken place; rather, they tell us whether the warming that has taken place is outside of what might have happened by chance.
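One way to make “what might have happened by chance” concrete is a Monte Carlo test: generate many trend-free series of pure random noise, fit a trend to each, and count how often chance alone produces a trend at least as steep as the observed one. The sketch below assumes white (uncorrelated) Gaussian noise with a hypothetical standard deviation; real temperature noise is autocorrelated, which makes large chance trends more common. The function names are illustrative.

```python
import random


def ols_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sxx


def chance_probability(observed_slope, n_years, noise_sd, trials=2000, seed=1):
    """Two-sided Monte Carlo p-value: the fraction of trend-free white-noise
    series whose fitted slope is at least as large in magnitude as observed."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    xs = list(range(n_years))
    hits = 0
    for _ in range(trials):
        ys = [rng.gauss(0.0, noise_sd) for _ in xs]
        if abs(ols_slope(xs, ys)) >= abs(observed_slope):
            hits += 1
    return hits / trials


# Observed: 0.116 degrees/decade = 0.0116 degrees/year over 17 annual values
# (1998-2014). The noise levels are hypothetical; the answer depends strongly on them.
for sd in (0.05, 0.1, 0.2):
    print(sd, chance_probability(0.0116, 17, sd))
```

The loop at the end makes the key point: whether a given trend counts as “significant” depends on how large the variability around the trend is, not on whether the warming happened.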

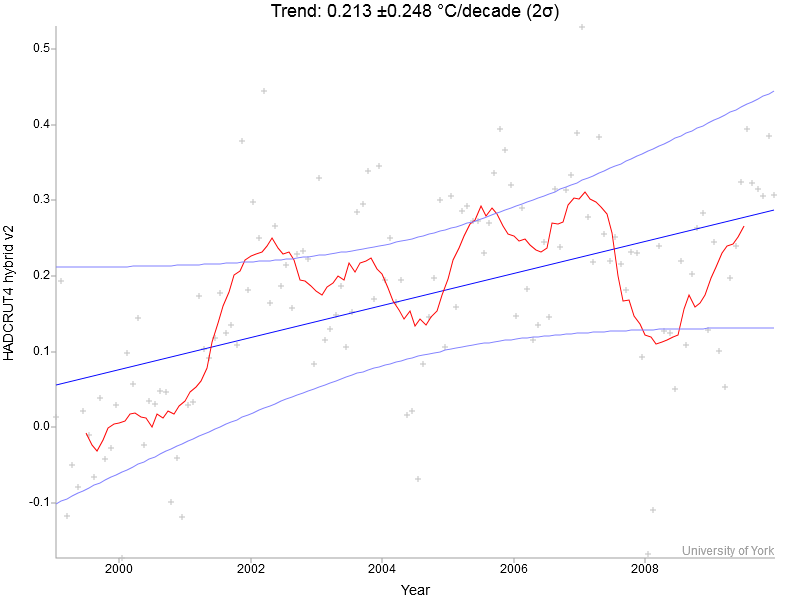

Even if there were no slowdown whatsoever, a recent warming trend might not be statistically significant. Look at this example:

Fig. 3. Global temperature 1999 to 2010.

Over this interval, 1999–2010, the warming trend is actually larger than the long-term trend of 0.175 °C per decade. Yet it is not statistically significant. This has nothing to do with the trend being small; it is simply due to the confidence interval being large, which in turn is entirely due to the shortness of the time period considered. Over a short interval, random variability can create large temporary trends. (If today is 5 °C warmer than yesterday, then today is clearly, unequivocally warmer! But that is not “statistically significant” in the sense of being something that could not just be natural variability, i.e. weather.)
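The dependence on interval length can be made explicit. For evenly spaced annual values with independent noise of standard deviation σ, the standard error of a least-squares slope is σ divided by the square root of Σ(t − t̄)², and that sum equals n(n² − 1)/12 for n consecutive years. The error therefore shrinks roughly as n^(−3/2). The sketch below assumes a hypothetical σ of 0.1 °C and no autocorrelation; under those assumptions a 12-year window, like the one in Fig. 3, has an interval about five times wider than a 36-year window.

```python
import math


def slope_standard_error(n_years, noise_sd):
    """Standard error (degrees/year) of an OLS slope fitted to n consecutive
    annual values with independent noise of the given standard deviation."""
    sxx = n_years * (n_years ** 2 - 1) / 12  # sum of (t - mean)^2 for t = 0..n-1
    return noise_sd / math.sqrt(sxx)


noise_sd = 0.1  # hypothetical year-to-year noise in degrees C
for n in (12, 17, 36):
    half_width = 10 * 1.96 * slope_standard_error(n, noise_sd)
    print(f"{n:2d} years: +/- {half_width:.3f} degrees per decade")
```

Autocorrelation in the noise, which real temperature series have, widens these intervals further.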

The lesson, of course, is to use a sufficiently long time interval, as in Fig. 1, if you want to find out something about the signal of climate change rather than about short-term “noise”. All climatologists know this, and the IPCC has said so very clearly. “Climate skeptics”, on the other hand, love to pull short bits out of noisy data to claim that they somehow speak against global warming; see my 2009 Guardian article “Climate sceptics confuse the public by focusing on short-term fluctuations” on Björn Lomborg’s misleading claims about sea level.

But the question the media love to debate is not whether we can find a warming trend since 1998 that lies outside what might be explained by natural variability. The question being debated is whether the warming since 1998 is significantly less than the long-term warming trend, “significant” again in the sense that the difference might not just be due to chance, to random variability. And the answer is clear: in this sense, the 0.116 °C per decade since 1998 is not significantly different from the 0.175 °C per decade since 1979. Just look at the confidence intervals. The difference is well within the range expected from the short-term variability found in this time series. (Of course climatologists are also interested in understanding the physical mechanisms behind this short-term variability in global temperature, and a number of studies, including one by Grant Foster and myself, have shown that it is mostly related to El Niño / Southern Oscillation.) There simply has been no statistically significant slowdown, let alone a “pause”.
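The informal check described here amounts to asking whether the long-term rate falls inside the short-term trend’s confidence interval. A minimal sketch using the numbers quoted in the post follows. It is only the rough comparison the text describes; a formal test would also have to account for the fact that the 1998-to-present period is itself part of the 1979-to-present record. The helper names are illustrative.

```python
def interval(center, half_width):
    """Return the (low, high) bounds of a symmetric confidence interval."""
    return center - half_width, center + half_width


def inside(value, bounds):
    """True if value lies within the closed interval bounds."""
    low, high = bounds
    return low <= value <= high


# Trends in degrees C per decade, as quoted in the post (HadCRUT4 hybrid).
short_term = interval(0.116, 0.137)   # 1998 to present
long_term_rate = 0.175                # 1979 to present

print("interval since 1998:", tuple(round(b, 3) for b in short_term))
print("long-term rate inside it:", inside(long_term_rate, short_term))
print("zero inside it:", inside(0.0, short_term))
```

Both checks come out true: the short trend is statistically compatible with the unchanged long-term rate, so on its own it gives no evidence of a slowdown.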

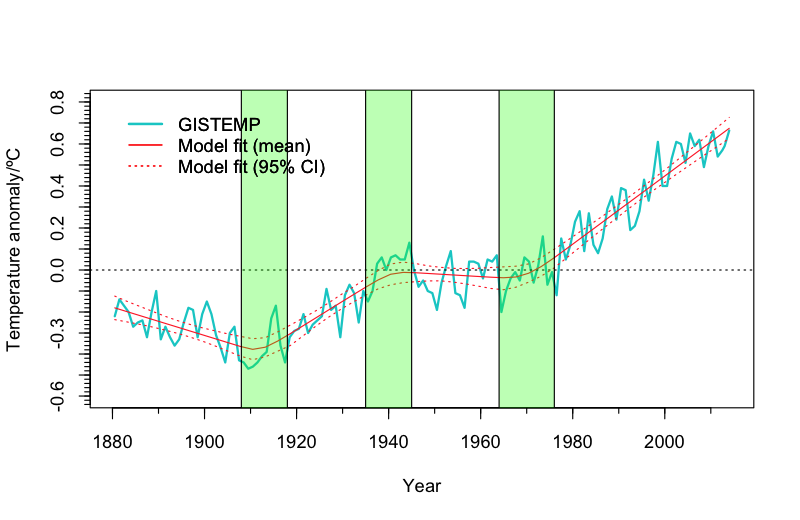

There is another, more elegant way to show this, called change point analysis (Fig. 4). This analysis was performed for RealClimate by Niamh Cahill of the School of Mathematical Sciences, University College Dublin.

Fig. 4. Global temperature (annual values, GISTEMP data 1880-2014) together with piecewise linear trend lines from an objective change point analysis. (Note that the value for 2014 will change slightly as it is based on Jan-Oct data only.) Graph by Niamh Cahill.

It is the proper statistical technique for subdividing a time series into sections with different linear trends. Rather than hand-picking some intervals to look at, as I did above, this algorithm objectively looks for times in the data where the trend changes in a significant way. It scans through the data and tries out every combination of years to check whether the fit to the data can be improved by putting change points there. The optimal solution found for the global temperature data is 3 change points, approximately in the years 1912, 1940 and 1970. There is no way to get the model to produce 4 change points, even if you ask it to: the solution then does not converge, says Cahill. There simply is no further significant change in the global warming trend, not in 1998 nor anywhere else.
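To give a feel for how such a search works, here is a deliberately simplified sketch. It fits a continuous two-segment line (one change point) by least squares for every candidate year, keeps the year with the smallest residual sum of squares, and compares that fit with a single straight line using the Bayesian information criterion (BIC). This is not Cahill’s method, which is Bayesian and allows several change points (a commenter further down describes that setup); the function names, the minimum segment length and the synthetic data are all assumptions for illustration.

```python
import math


def solve(matrix, rhs):
    """Solve a small linear system by Gauss-Jordan elimination with pivoting."""
    k = len(matrix)
    m = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(k):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[r][k] / m[r][r] for r in range(k)]


def residual_sum_of_squares(rows, ys):
    """Least-squares fit of ys on the feature rows; returns the RSS."""
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    beta = solve(xtx, xty)
    return sum((y - sum(b * x for b, x in zip(beta, r))) ** 2 for r, y in zip(rows, ys))


def best_change_point(years, temps, min_segment=10):
    """Try every candidate year as a single change point of a continuous
    piecewise-linear fit; return (year, rss) with the smallest RSS."""
    best = None
    for cp in years[min_segment:-min_segment]:
        rows = [[1.0, t - cp, max(0.0, t - cp)] for t in years]  # hinge basis
        rss = residual_sum_of_squares(rows, temps)
        if best is None or rss < best[1]:
            best = (cp, rss)
    return best


def bic(rss, n, k):
    """Gaussian BIC; k counts all fitted parameters, including the change point."""
    return n * math.log(rss / n) + k * math.log(n)


# Synthetic example: flat until 1970, then warming at 0.02 degrees/year,
# plus a small alternating wiggle standing in for noise.
years = list(range(1880, 2015))
temps = [0.02 * max(0, y - 1970) + 0.01 * (-1) ** y for y in years]

cp, rss_one = best_change_point(years, temps)
rss_line = residual_sum_of_squares([[1.0, y - years[0]] for y in years], temps)
n = len(years)
print("change point:", cp)
print("BIC straight line:", round(bic(rss_line, n, 2), 1))
print("BIC one change point:", round(bic(rss_one, n, 4), 1))
```

A lower BIC favours the model; the penalty term is what stops the search from adding change points that merely chase noise.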

In summary: the fact that the warming since 1998 “is not significant” is completely irrelevant. This warming is real (in all global surface temperature data sets), and it is factually wrong to claim there has been no warming since 1998. There has been further warming despite the extreme cherry pick of 1998.

What is relevant, in contrast, is that the warming since 1998 is not significantly less than the long-term warming. So while there has been a slowdown, it is not significant, in the sense that it is not outside what you expect from time to time due to year-to-year natural variability, which is always present in this time series.

Given the warm temperature of 2014, we already see the meme emerge in the media that “the warming pause is over”. That is doubly wrong – there never was a significant pause to start with, and of course a single year couldn’t tell us whether there has been a change in trend.

Just look at Figure 1 or Figure 4: since the 1970s we have simply been in an ongoing global warming trend on which short-term natural variability is superimposed.

Weblink: Statistician Tamino shows that none of the global temperature data sets (neither the surface data nor the satellite MSU data) has recently shown a statistically significant slowdown in the warming trend. In other words, the variation seen in short-term trends is all within what one expects from short-term natural variability. Discussing short-term trends is simply discussing the short-term “noise” in the climate system, and teaches us nothing about the “signal” of global warming.

Thank you. Very helpful for us semi-numerates.

Wow. That trend line in the first graph alone would be enough to silence any _real_ ‘skeptic,’ it seems to me. But the pseudo-skeptic will continue to natter, no matter what.

So at .175 C increase per decade, we would hit the 2 degree mark in about 6 decades. Most projections I hear these days estimate that we will hit that mark much sooner. Are there reasons to expect an acceleration soon?

Any comment on the 10 year lag vs 40 year lag?

http://iopscience.iop.org/1748-9326/9/12/124002/article

“Any given CO2 emission will have its maximum warming effect just 10 years later, new research from the Carnegie Institution for Science shows.”

I imagine the lag would shorten as we go along?

I would rather point to this assessment (in particular this figure and this one), which agrees with the result above but uses different methods. Note that the figure in that assessment expresses change per year, not change per decade.

Thanks. Is there something accessible elaborating on change point analysis?

In deniersville, only satellite numbers are considered anymore–thermometers are supposedly ‘outdated.’

You lose me at the first graph. If you use the satellite data you cannot make any of the points you raise.

[Response: Of course the same points about the meaning of significance and confidence intervals could have been made also with satellite data or a time series of beer prices. But here we are interested in the evolution of global surface temperatures, which cannot be measured by satellites. – stefan]

I’m curious, why aren’t they using the satellite data?

[Response: Satellite data doesn’t measure the same thing and has different levels of signal and noise. – gavin]

Hey Rud Istvan, I assume you meant to say Footnote 25. Footnote 24 of that chapter is a single sentence:

Whose link doesn’t even work. The post you intended to link to can be found here instead. You could even just fix the domain in the link:

https://bobtisdale.wordpress.com/2012/06/02/

Though that directs people to a monthly archive page with the title and introduction of the post; users would have to click another link to see the post itself.

Sorry about my last comment. I tried to submit a comment here asking about what changepoint analysis was used (and hopefully any parameters set for it), but my first attempt failed because of problems with the CAPTCHA. I didn’t mind because I had saved the text in a file where I write drafts of comments (so I don’t lose them in situations like this). Unfortunately, when I went to re-submit the comment, I copied the wrong one. Feel free to delete it.

I would be interested to know what change point analysis was used though.

I’m actually a bit frustrated by how those elaborate statistical discussions fail to communicate the basic point to the layman: that all the talk about the ‘hiatus’ is just a product of looking at the wrong metric.

I had much better results in discussions with ‘skeptics’ by simply stating that the *global* mean is not a good metric in a situation where three of four values go up and one goes down.

Just look at figure 6 on page 4 of Jim Hansen’s paper from early 2014:

http://www.columbia.edu/~jeh1/mailings/2014/20140121_Temperature2013.pdf

Can anybody spot a ‘hiatus’? No? I certainly can’t. Temperatures rise consistently in the southern hemisphere, in both summer and winter. Temperatures rise consistently in the northern hemisphere in summer. There’s no ‘hiatus’.

Only winter in the northern hemisphere has seen a decline in temperatures – which isn’t a ‘hiatus’ in my book either.

I think it would actually be better in this case to put away the fancy statistics, and not even argue about the length of the period the ‘skeptics’ choose to look at, but simply tell them that the global mean was a poor choice of metric in a situation where three indicators go up and one goes down, because it suggests a ‘hiatus’ where clearly there isn’t one. That’s simple arithmetic that average people can understand.

I’m not saying that the statistical argument is unimportant. I’m just saying that, instead of being frustrated by laymen and the media who “just don’t get it”, we should simply tell the story “you’re relying on the wrong calculation”, which is much easier to understand.

I for my part had very good success in discussions with “skeptics” here – no one has been able to counter that one yet. They either went mum or evasive.

When talking with deniers who say global warming has paused since 1998, I point out that 2005 and 2010 were the warmest years ever recorded (and 2014 is on its way to being warmer), and then I say:

“You can’t have a peak during a pause!”

Not as elaborate as the article above, but it’s catchy.

Good article. I’ve always thought that the hiatus argument was somewhat missing the point; it’s the comparison between observations and climate models that is far more interesting. Observations are still running low, though they are still within the two-sigma confidence interval of the spread of CMIP5 runs using a 1979-2000 base period:

http://i81.photobucket.com/albums/j237/hausfath/Modelsandobservationsannual1979-2000baseline_zpsaf7e2230.png

Significance in general is overrated, for it hides the uncertainty about the size of the effect. In the case of a significant result, the effect may be minimal if the data set is huge. Likewise, in the case of non-significance, the effect may still be huge if data are very few.

It is almost always better to communicate with confidence intervals. Confidence intervals are also easier to understand, at least from the Bayesian point of view.

With such short time periods, a confidence interval or posterior distribution of the trend would immediately reveal the uncertainty: as well as a zero trend, there could be strong warming going on behind the noise. The high side of the posterior distribution (confidence interval) is just as real, and just as worthy of attention, as the low side! (Unless one has a prior affiliation to the zero trend, that is.)

Of course, a long period hides short term trend changes. There is really no way around this complementarity (of uncertainty) between trend and time. Even change point analysis does not help. :)

The temperature time series has some autocorrelation. If properly taken into account in the analysis, it makes the effective number of samples even smaller, and the trend estimates even more uncertain.
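A common rough way to account for this is the AR(1) approximation: estimate the lag-1 autocorrelation ρ of the residuals and replace the sample size n by an effective size n(1 − ρ)/(1 + ρ), inflating the trend’s standard error accordingly. The sketch below shows only that approximation; the ρ of 0.6 in the example is a hypothetical value, the function names are illustrative, and other noise models (e.g. ARMA) can give different corrections.

```python
import math


def lag1_autocorrelation(residuals):
    """Sample lag-1 autocorrelation of a residual series."""
    n = len(residuals)
    mean = sum(residuals) / n
    d = [r - mean for r in residuals]
    num = sum(d[i] * d[i + 1] for i in range(n - 1))
    den = sum(x * x for x in d)
    return num / den


def effective_sample_size(n, rho):
    """AR(1) approximation to the number of independent samples (0 <= rho < 1)."""
    return n * (1 - rho) / (1 + rho)


def inflated_standard_error(std_err, n, rho):
    """Scale a white-noise standard error up to allow for AR(1) autocorrelation."""
    return std_err * math.sqrt(n / effective_sample_size(n, rho))


# 36 years of monthly data with a hypothetical lag-1 autocorrelation of 0.6:
n_months = 36 * 12
print(effective_sample_size(n_months, 0.6))         # about a quarter of 432
print(inflated_standard_error(1.0, n_months, 0.6))  # error bars about twice as wide
```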

RE #6

>You lose me at the first graph. If you use the satellite data you cannot make any of the points you raise.

One should not use satellite data to assess surface temperatures. The satellite data do not report on that. Rather, they report tropospheric temperatures averaged so that the numbers are valid for lower mid troposphere. This is nicely seen in the independent data of radiosondes, see http://www1.ncdc.noaa.gov/pub/data/ratpac/ratpac-b/RATPAC-B-annual-regions.txt

The surface-level radiosonde values are in good agreement with the development reported in the main post above. By contrast, the 400 mb radiosonde measurements look very much like the reported satellite data for the “lower troposphere” (often misreported as global temperature). When plotted, one can clearly see that the variation around the trend is much larger at 400 mb than at the surface. I suppose this is because the thermal energy is low at higher levels, which are therefore more prone to random variations. We see the same phenomenon when we compare the tropical temperature variation to that of the polar areas.

Our important ecosystems and human activities are at the surface. Hence what takes place in the mid troposphere, as shown by the satellites, is in itself irrelevant. It does not help us or our ecosystems at all that there was a very warm year at that level in 1998. We live at the surface, and that is what matters!

We use a simple linear regression change point analysis.

As an example, if the model had one change point, the mean of the distribution of the data would be a linear regression function with slope b1 before the change point (cp), temp = a + b1*(year - cp), and a function with a different slope (b2) after the change point, temp = a + b2*(year - cp).

A Bayesian model was used. Prior distributions were placed on the parameters to be estimated (a,b1,b2 and cp). We do not tell the model where the change point should go. We give the parameter cp a uniform prior distribution over the range of years we have. Therefore, the prior suggests that the change point has equal probability of occurring anywhere between 1880 and 2014. The data will then inform the model and the information about cp will update to give a posterior estimate for the change point.

Models with more change points are set up in a similar way, with prior distributions for the cp parameters always being uniform over the range of the data. However, when we have more than one change point in the model we set the condition that they must be ordered, so the 2nd comes after the 1st etc.

We fit models with 1,2,3,4 change points and check for parameter convergence using a number of different diagnostics. We use model comparison tools to decide on the best model for the data.
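For readers who want to experiment, here is a much-simplified sketch of the one-change-point version of this setup. It uses the a, b1, b2, cp parameterisation above and a uniform prior on cp but, unlike the model described, restricts cp to whole years and uses improper flat priors on a, b1, b2 and on log σ. With those priors the regression parameters can be integrated out analytically, so the posterior for each candidate year is proportional to |XᵀX|^(−1/2) · RSS^(−(n−3)/2), and no MCMC or convergence diagnostics are needed. It is an illustration only, with hypothetical function names and synthetic data, not the analysis behind Fig. 4.

```python
import math


def solve_with_logdet(matrix, rhs):
    """Gaussian elimination with partial pivoting.
    Returns the solution and log|det(matrix)| (the sum of log|pivot|)."""
    k = len(matrix)
    m = [row[:] + [b] for row, b in zip(matrix, rhs)]
    logdet = 0.0
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        logdet += math.log(abs(m[col][col]))
        for r in range(col + 1, k):
            f = m[r][col] / m[col][col]
            m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    x = [0.0] * k
    for r in range(k - 1, -1, -1):  # back substitution
        x[r] = (m[r][k] - sum(m[r][j] * x[j] for j in range(r + 1, k))) / m[r][r]
    return x, logdet


def change_point_posterior(years, temps, candidates):
    """Posterior probability of each candidate change-point year, assuming
    temp = a + b1*(year - cp) before cp and a + b2*(year - cp) after it."""
    n, p = len(years), 3
    log_post = {}
    for cp in candidates:
        rows = [[1.0, min(0.0, t - cp), max(0.0, t - cp)] for t in years]
        xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
        xty = [sum(r[i] * y for r, y in zip(rows, temps)) for i in range(p)]
        beta, logdet = solve_with_logdet(xtx, xty)
        rss = sum((y - sum(b * x for b, x in zip(beta, r))) ** 2
                  for r, y in zip(rows, temps))
        # Uniform prior on cp: log posterior = log marginal likelihood + constant.
        log_post[cp] = -0.5 * logdet - 0.5 * (n - p) * math.log(rss)
    top = max(log_post.values())
    weights = {cp: math.exp(v - top) for cp, v in log_post.items()}  # avoid underflow
    total = sum(weights.values())
    return {cp: w / total for cp, w in weights.items()}


years = list(range(1880, 2015))
temps = [0.02 * max(0, y - 1970) + 0.01 * (-1) ** y for y in years]  # synthetic
post = change_point_posterior(years, temps, years[5:-5])
print("most probable change point:", max(post, key=post.get))
```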

I looked into changepoint analysis a while back, for annual data it is straightforward using tools already available in R. Unfortunately problems arise when we look at monthly data, which have substantial autocorrelation, which means that standard changepoint analysis tools identify spurious changepoints. Does anyone know of good methods for change point analysis in autocorrelated data?

Good article (as is the one at Tamino’s http://tamino.wordpress.com/2014/12/04/a-pause-or-not-a-pause-that-is-the-question/)

Do these analyses confirm the physical science (the climate models)?

No, temperatures are going to go down; it is proven by my research.

http://www.itia.ntua.gr/en/docinfo/1486/

[Response: You seem to have a different concept of “proven” than we do here… At least it is a falsifiable hypothesis. Fitting cycles has a long history of dismal predictive success in the climate sciences. There are so many potential cycles that one always finds some combination that fits past data, but going into the future this approach has generally fallen apart. I prefer the physics of the Earth’s radiation balance (which, by the way, includes the Milankovitch cycles, which provide a clear and large radiative forcing). -stefan]

Re: #11 (Dan Miller)

Actually, you could have a peak during a pause, if that peak is a fluctuation rather than due to the trend. Claiming “no pause” because we had a record in 2010 is mistaken, as is claiming “pause” because we had an extreme peak in 1998.

It is catchy, though.

Note that this analysis from the outset refers to the Arctic, not to any other place on Planet Earth.

If you look at UAH and RSS since the last El Nino in 2012, it’s obvious they are doing a very poor job of tracking surface temperature when there is no El Nino or La Nina. They appear to overreact to those events, and those overreactions appear to correct their error in failing to measure accurately ENSO neutral. In 2013 and 2014, ENSO has been neutral throughout, and they’ve missed much of the SAT increase. Since the 2012 La Nina, ENSO neutral has been characterized by negative ONI and rapidly increasing surface air temperature, and the people producing the satellite series need to explain how they blew the period’s measurement so badly.

[Response: The MSU-LT estimates are not ‘over-reacting’ or ‘blowing’ the measurement at all. Rather they measure something else which has a different sensitivity to tropical variations than the surface measurements do. The satellite data aren’t necessarily wrong (though the structural variation between estimates is increasingly concerning), it’s just different. – gavin]

With the caveat that I’ve no familiarity with Change Point Analysis algorithms, I would guess that detecting a change point near the beginning or end of a data sequence would be problematic. It’s not clear to me that a mathematical/statistical argument refutes those that would suggest that we’re at a transition point to a cooling phase, long term hiatus, or some such. Note that in the absence of a plausible physical argument for such a state change I’m not suggesting that makes sense, but it seems to be a weakness in the argument presented above.

Thanks, Stefan, for taking the time to respond.

Two things to clarify:

i) there is enough proof that my model is correct: it is not just cycles, but the calculated phases are in accordance with physical trajectories,

ii) the Milankovitch cycles have problems explaining glacial-age variability (e.g. check here http://en.wikipedia.org/wiki/Milankovitch_cycles), problems that are overcome by my research.

[Response: Those Wikipedia claims of problems with Milankovitch are rather dated. Does the theory explain each and every detail? Probably not. Is it nevertheless correct? Absolutely. Anyone thinking that Milankovitch might have been wrong should read G. Roe’s excellent paper, In Defense of Milankovitch. –eric]

Jaime Sladarriaga:

This analysis uses the latest Hadcrut. This is world wide data. To make it simple for you, world wide means it takes in every place on earth. This data set is preferred because it contains more information about arctic temperatures than others.

Saying it’s Arctic-only is Monty Python silly.

It would be instructive to see comparison of trend quality. We know UAH and RSS have had their troubles that way, and continue to re-jig their proprietary algorithms though they haven’t so far as I can find been very transparent.

What tale do the various measures of trend quality tell us about the (rather inflated, I think) claims some make of the virtue of the satellite record compared to others, and of the various latest surface datasets, as trend tools?

It’s not an easy discipline, winnowing out which of a small set of candidates is the ‘most true’, but RSS in particular is a far outlier, inconsistent on spans above five years, I’m finding using simple measures.

What do you find when you look?

[Response: It’s always worth reading Tamino’s excellent discussion of comparing satellite and surface-based temperatures. I doubt the same analysis with more recent data (this was a 2010 post) would change anything: http://tamino.wordpress.com/2010/12/16/comparing-temperature-data-sets/ –eric ]

@Dimitris Poulos The fit of the model to the observations doesn’t look very convincing to me, I suspect the uncertainties in the model parameters are rather large and if you propagate that uncertainty through into the projections you will find that the error bars are so broad that the projection is not very informative. The problem with point estimates of the parameters is that many other parameter choices might fit the data almost as well.

If these cycles were actually affecting the sun, then I would expect there to be some measurable effect on observations of (i) the sun and (ii) other planets.

1) Because we have satellite (consistent) data since 1997 – why use any land based (inconsistent methods) in order to verify the “pause”? Land data has hundreds of apologists, and theorists, and rightly so.

2) Why use monthly Vs annual Sat data? Seems to add more visual confusion.

3) Least Mean Squares analysis of annual GSS data since 1997 seems to show dead flat. Even the annual data is “noisy” relative to decade trend.

Should we not wait for significant deviation, either up or down, from this decade long zero slope, in order to confirm actuality, before committing trillions to “control” its theoretical increase? Actuality has already taken so many deviations from the predicted behavior.

re: 20. Ugh, reading comprehension! The language is not that complicated. It refers to the HadCRUT4 data having the most sophisticated method to fill data gaps in the Arctic, not that the analysis can’t apply to a global data set.

Won’t change a thing. The more facts you throw at the denier set, the deeper into denial it sends them. I showed these numbers to one of them and he immediately wrote back, “it’s all faked by people who have a vested interest in it being so.” End of argument. It’s, IMO, a waste of time arguing with these people. We need only convince those with the power to change things that the trend line, if allowed to stand, will have increasingly severe consequences for life on this planet, sooner rather than later.

@Dikran Marsupial

The model is accurate: both its phases agree with the physical phenomena.

Thank you for this clear and concise explanation, especially the judicious use of graphics. Very well done.

Best,

D

John Evjen @27

per your number 1, could you clarify what you’re talking about?

John @ 27, Tamino has a post just for you!

@ John Evjen

“3) Least Mean Squares analysis of annual GSS data since 1997 seems to show dead flat.”

That’s an impressive amount of wrongness to fit in one short sentence:

– the method for estimating linear trends in data sets is called least squares; least mean squares is something else entirely.

– you presumably mean NASA GISS data (everyone makes the occasional typo, of course)

– then there’s the fact that if you had actually used least squares to find the trend for the annual average GISS temperature from 1997 to 2013 (or 1998 to 2013 – it’s not clear whether you meant since 1997 including 1997 itself or excluding it) you would have found that the trend over that time period isn’t dead flat, it’s positive.

The trend in Fig. 2 is 1/3 lower than the longer trend shown in Fig. 1. The Drijfhout et al. study “Surface warming hiatus caused by increased heat uptake across multiple ocean basins” suggests this “hiatus” is the result of different mechanisms operating over different time scales in the eastern Pacific, the subpolar North Atlantic, and the Southern and subtropical Indian Oceans. The western Pacific and North Atlantic have warmed considerably this year, with the result that it is likely to be the warmest on record, and the moderating influence of these bodies may therefore no longer be in play. Perhaps these bodies could be brought back into play with heat pipes that move heat to the deep. The University of Hawaii suggests the oceans have the capacity to produce 14 terawatts of ocean thermal energy without undue environmental influence. Due to the low Carnot efficiency of these systems, 20 times more heat than power would be moved to the deep, so virtually all of the excess heat accumulating in the oceans due to climate change could either be converted or moved to the relative safety of the deep. Estimates are that heat returns from the deep at a rate of about 4 meters per year, so heat moved to 1000 meters would take 250 years to return, whereas the mitigating influence of the Southern Ocean is multi-decadal at best. Fourteen terawatts also happens to be the rate at which we currently derive energy from fossil fuels.

#27 suggests another analysis, one that would debunk #27’s own idea that we should wait until the last decade confirms a trend up or down: a data analysis might show that there has never been a time when the last decade alone confirmed a trend up or down in the sense of rejecting the null hypothesis.

Brilliant explanation! That is to say, perfectly ordinary language to explain an apparently difficult concept (difficult for some, anyway.) Thank you, this will be very useful.

Fiddling with the Temperature Trends graphs is lots of fun. (In case anyone is in need of a reminder, here’s the link: http://www.ysbl.york.ac.uk/~cowtan/applets/trend/trend.html )

For instance, if I do the Hadcrut4 over its full length (1979 through 2014), I get a pretty steep trend of .158. From 1979 through 1999, the trend is even steeper: .169. However, from 1998 to 2014 Hadcrut4 shows a much shallower trend of only .049. And when I choose 2001 through 2014, the trend reverses, to -.001.

So I’m sorry Stefan, but there does seem to be a distinct change in trend from roughly 1998 to 2001 and on to the present. Consistent with a hiatus, yes. Fascinating!

[Response: I’m afraid you missed the point of my article. There is nothing unusual about short sections with smaller or greater trend – this is to be expected given the natural year-to-year variations around the trend and happens all the time. They do not constitute a significant change in the trend. Whether there really is a significant change in trend (rather than just a constant trend superimposed by variability) can be tested by change point analysis. That is its purpose. – stefan]

Just a quick reminder from the communications perspective that language is important. “Pause” is the language of deniers, “slowdown” is not. And the two are, of course, different. I’m not griping about the use of “pause” in the headline, just making a general observation for readers. A lot of valuable and insightful explanation can lose its value instantly for lay people with the wrong word choice. Science deniers are generally pretty skillful in this realm, probably because they cannot rely on the underlying science to bolster their assertions.

#27: answers…

1) Why use land thermometers, not just satellites?

Because the methodologies don’t measure the same thing. See #14 above, as well as Gavin’s inline at #21.

2) Why use monthly, not just annual?

Some folks like a little finer time resolution…

3) GSS?

Do you mean RSS? And are you seriously suggesting that we hold all mitigation efforts hostage to a single data set, when other sets paint a different picture, and multiple other lines of evidence show, in some cases quite dramatically, that warming is continuing?

#19 Tamino: Yes, you are technically correct. However, I am responding to people who don’t care at all about science and they believe that “temperatures have not gone up” since 1998. A little bit of fighting fire with fire. And in this case, the multiple peaks do indicate a continued warming.

This comment by Zeke Hausfather:

http://www.yaleclimateconnections.org/2011/02/global-temperature-in-2010-hottest-year/

Seems to plot the UAH and RSS data against GISTemp and HadCRUT. They seem pretty close. Trenberth had this to say about what we may be seeing: “in terms of the global mean temperature, instead of having a gradual trend going up, maybe the way to think of it is we have a series of steps, like a staircase. And, and, it’s possible, that we’re approaching one of those steps.” He was discussing a possible change to a warm PDO. The PDO has been described by some as confused: not a typical cool phase, but more like both phases alternating. It might be expected that a re-established cool phase would move the trend line down. Consider this line: “And the answer is clear: the 0.116 since 1998 is not significantly different from those 0.175 °C per decade since 1979 in this sense.” Using the error bars of the 0.116, yes, we can say we haven’t moved far enough away from the other number with enough confidence. The error bars for shorter time frames seem to be bigger. So the question is, has there been a relatively short-term change? We are uncertain. We seem to have slowed the ascent, but we can’t tell yet. If we believe in abrupt climate shifts, that would seem to place more weight on the short-term point of view, in order to identify these shifts in a timely manner.

Roy Spencer PhD is pretty clear that this is all bogus hype even before considering the relative neglect of the divergent UAH and RSS data and poignantly asking, “So, why have world governments chosen to rely on surface thermometers, which were never designed for high accuracy, and yet ignore their own high-tech satellite network of calibrated sensors, especially when the satellites also agree with weather balloon data?”

Good question.

I find it hard to not take Roy Spencer PhD seriously and must continue to withhold judgment. I used to be firmly in the warmist camp but am becoming increasingly skeptical.

[Response: Why does he think world governments “ignore” satellites? Government policy should be based on all available scientific information. Regarding global temperature, this shows that (a) satellites show essentially the same global warming trend in the troposphere as thermometer measurements do at the surface (namely 0.16 °C per decade over the past 30 years for Spencer’s UAH data, just as for the GISTEMP data), and that (b) the troposphere shows much larger short-term variability around this trend, highly correlated with El Niño (this is very plausible given that warm air rises in the tropics and spreads in the troposphere with the Hadley circulation). What possible policy implication would this higher tropospheric sensitivity to natural tropical variability have? Did Spencer explain this? -stefan]

This is perhaps the best and simplest way to visualise the absence of any ‘pause’, courtesy of Gavin:

> “Note that this analysis from the outset refers to the Arctic, not to any other place on Planet Earth.”

This statement is ironic, in the sense that it is so wrong it highlights the truth. HadCRUT actually has gaps in the Arctic rather than focusing on it. As stated in the article, the hybrid data fill in these gaps in the Arctic.

@27, John Evjen

See comment #10: only if you look at the global mean *and* only if you start the calculation in a narrow window before 1998 do you get something like that. Which only goes to show that in a situation where three indicators go up and one goes down, a mean value might suggest a situation that isn’t really there.

(though the structural variation between estimates is increasingly concerning) – Gavin

Okay, to me this is increasingly concerning.

If 2015 is another ENSO-neutral year, and another warmest year, how is RSS going to account for it?

@43,

Does Roy Spencer PhD know why RSS and UAH disagree with each other so much over the last several years? Does Roy Spencer PhD know that the UAH post-98 trend is now slightly higher than the pre-98 trend?

re Stefan’s response to #38: “I’m afraid you missed the point of my article. There is nothing unusual about short sections with smaller or greater trend . . . ”

The interval in question, from 1998 to 2014, is 16 years. The interval during which, as you put it, “global warming really took off,” is roughly 20 years. A difference of only 4 years. So how can a trend covering 20 years be regarded not only as diagnostic, but cause for considerable alarm, and a trend only 4 years shorter be dismissed as too brief? Should we wait another 4 years before the “hiatus” can be considered meaningful? And if it continues for another 4 years, what then? Would you change your mind?

And by the way, I’m wondering why you chose HadCRUT4 hybrid rather than plain HadCRUT4. The two present very different pictures for those last 16 years. According to plain old HadCRUT4, there is only a very small upward trend during that period, while HadCRUT4 hybrid, the graph you’ve displayed, shows the earlier trend continuing, effectively obliterating the hiatus. How would you defend yourself against an accusation of cherry-picking?

Shelama:

Shelama, by “warmist camp” do you mean “people who accept the consensus of 97% of working, publishing climate scientists that the Earth is warming and that the warming is anthropogenic”? If so, and assuming you’re not a member of the expert community yourself, why would a single dissenter, even one who is as well-qualified as Spencer, cause you to increase your skepticism?

I’ve been quoting Feynman a lot lately: “The first principle [of Science] is that you must not fool yourself, and you are the easiest person to fool.” Compared to every other method of understanding the Universe, Science is superior because it relies on both empiricism and intersubjective verifiability. A scientist is trained to follow rigorous discipline when collecting data (empiricism), but the self-aware scientist relies on other trained and disciplined scientists to keep him from fooling himself (intersubjective verification). He submits his methods and conclusions to the scrutiny of his peers, who can spot the errors he’s missed. He knows his peers won’t spare his feelings, because correct understanding of the Universe is too important. And he acknowledges that if he can’t convince them he’s right, he’s probably fooling himself.

Spencer’s arguments have been exhaustively debated by climate scientists who are as well-trained as he is, and have worked with the relevant data as much or more than he has. They’ve reached a lopsided consensus that he’s (mostly) wrong. We non-experts are left with this: sure it’s possible Spencer is right and the consensus is wrong, but isn’t it more likely that he’s fooling himself?