In a comment in Nature titled Ditch the 2 °C warming goal, political scientist David Victor and retired astrophysicist Charles Kennel advocate just that. But their arguments don’t hold water.

It is clear that the opinion article by Victor & Kennel is meant to be provocative. But even when making allowances for that, the arguments which they present are ill-informed and simply not supported by the facts. The case for limiting global warming to at most 2°C above preindustrial temperatures remains very strong.

Let’s start with an argument that they apparently consider especially important, given that they devote a whole section and a graph to it. They claim:

The scientific basis for the 2 °C goal is tenuous. The planet’s average temperature has barely risen in the past 16 years.

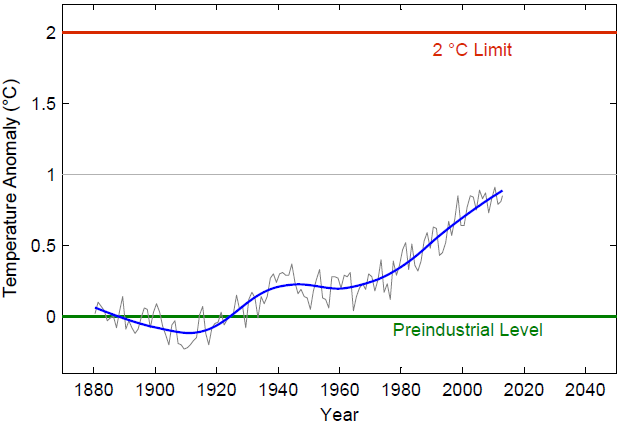

They fail to explain why short-term global temperature variability would have any bearing on the 2 °C limit – and indeed this is not logical. The short-term variations in global temperature, despite causing large variations in short-term rates of warming, are very small – their standard deviation is less than 0.1 °C for the annual values and much less for decadal averages (see graph – this can just as well be seen in the graph of Victor & Kennel). If this means that due to internal variability we’re not sure whether we’ve reached 2 °C warming or just 1.9 °C or 2.1 °C – so what? This is a very minor uncertainty. (And as our readers know well, picking 1998 as start year in this argument is rather disingenuous – it is the one year that sticks out most above the long-term trend of all years since 1880, due to the strongest El Niño event ever recorded.)

The logic of 2 °C

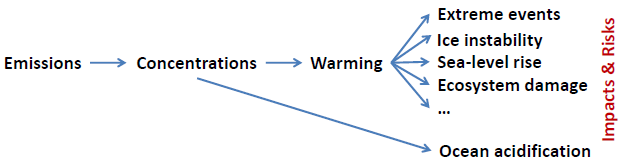

Climate policy needs a “long-term global goal” (as the Cancun Agreements call it) against which the efforts can be measured to evaluate their adequacy. This goal must be consistent with the concept of “preventing dangerous climate change” but must be quantitative. Obviously it must relate to the dangers of climate change and thus result from a risk assessment. There are many risks of climate change (see schematic below), but to be practical, there cannot be many “long-term global goals” – one needs to agree on a single indicator that covers the multitude of risks. Global temperature is the obvious choice because it is a single metric that is (a) closely linked to radiative forcing (i.e. the main anthropogenic interference in the climate system) and (b) most impacts and risks depend on it. In practical terms this also applies to impacts that depend on local temperature (e.g. Greenland melt), because local temperatures to a good approximation scale with global temperature (that applies in the longer term, e.g. for 30-year averages, but of course not for short-term internal variability). One notable exception is ocean acidification, which is not a climate impact but a direct impact of rising CO2 levels in the atmosphere – it is to my knowledge currently not covered by the UNFCCC.

From emissions to impacts.

Once an overall long-term goal has been defined, it is a matter of science to determine what emissions trajectories are compatible with this, and these can and will be adjusted as time goes by and knowledge increases.

Why not use limiting greenhouse gas concentrations to a certain level, e.g. 450 ppm CO2-equivalent, as long-term global goal? This option has its advocates and has been much discussed, but it is one step further removed from the actual impacts and risks we want to avoid along the causal chain shown above, so an extra layer of uncertainty is added. This uncertainty is that in climate sensitivity, and the overall range is a factor of three (1.5-4.5 °C) according to IPCC. This would mean that as scientific understanding of climate sensitivity evolves in coming decades, one might have to re-open negotiations about the “long-term global goal”. With the 2 °C limit that is not the case – the strategic goal would remain the same, only the specific emissions trajectories would need to be adjusted in order to stick to this goal. That is an important advantage.
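To make the factor-of-three point concrete, here is a minimal sketch (not taken from the IPCC or from Victor & Kennel) of the equilibrium warming that a fixed 450 ppm CO2-equivalent level would imply across the sensitivity range quoted above. It assumes the common logarithmic approximation, in which warming equals climate sensitivity times the number of CO2 doublings, and a preindustrial baseline of 280 ppm. The baseline value and the function name are assumptions made for illustration.

```python
import math

PREINDUSTRIAL_PPM = 280.0  # assumed preindustrial CO2-equivalent baseline


def equilibrium_warming(concentration_ppm, sensitivity_per_doubling):
    """Equilibrium warming in °C under the logarithmic approximation.

    sensitivity_per_doubling is the warming per doubling of CO2-equivalent.
    """
    doublings = math.log2(concentration_ppm / PREINDUSTRIAL_PPM)
    return sensitivity_per_doubling * doublings


# Low end, middle and high end of the 1.5-4.5 °C range quoted in the text.
for sensitivity in (1.5, 3.0, 4.5):
    warming = equilibrium_warming(450.0, sensitivity)
    print(f"sensitivity {sensitivity:.1f} °C per doubling -> "
          f"{warming:.1f} °C at 450 ppm CO2-eq")
```

Under these assumptions the same concentration level corresponds to roughly 1 °C of warming at the low end of the range and roughly 3 °C at the high end, which is why a concentration goal would be much more exposed to revisions in climate sensitivity than a temperature limit.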

2 °C is feasible

Victor & Kennel claim that the 2 °C limit is “effectively unachievable”. In support they only offer a self-citation to a David Victor article, but in fact they disagree with the vast majority of scientific literature on this point. The IPCC has only this year summarized this literature, finding that the best estimate of the cost of limiting warming to 2 °C is a reduction in annual consumption growth of 0.06 percentage points (1). This implies just a minor delay in economic growth. If you normally would have a consumption growth of 2% per year (say), the cost of the transformation would reduce this to 1.94% per year. This can hardly be called prohibitively expensive. When Victor & Kennel claim holding the 2 °C line is unachievable, they are merely expressing a personal, pessimistic political opinion. This political pessimism may well be justified, but it should be expressed as such and not be confused with a geophysical, technological or economic infeasibility of limiting warming to below 2 °C.
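The arithmetic behind that “minor delay” can be sketched as follows. The 2% baseline is the illustrative figure used above and the 0.06 percentage point reduction is the IPCC median from footnote (1). The 86-year horizon (2014 to 2100) and the assumption of constant growth rates are simplifications chosen purely for illustration.

```python
import math


def consumption_multiple(annual_growth_pct, years):
    """Factor by which consumption grows at a constant annual rate."""
    return (1.0 + annual_growth_pct / 100.0) ** years


def years_to_reach(multiple, annual_growth_pct):
    """Years needed to reach a given multiple of today's consumption."""
    return math.log(multiple) / math.log(1.0 + annual_growth_pct / 100.0)


YEARS = 86  # assumed horizon, 2014 to 2100

baseline = consumption_multiple(2.00, YEARS)    # no mitigation cost
mitigation = consumption_multiple(1.94, YEARS)  # growth reduced by 0.06 points

# How much later the mitigation path reaches the baseline's end-of-century level.
delay_years = years_to_reach(baseline, 1.94) - YEARS

print(f"baseline consumption multiple:   {baseline:.2f}")
print(f"mitigation consumption multiple: {mitigation:.2f}")
print(f"relative shortfall after {YEARS} years: {1 - mitigation / baseline:.1%}")
print(f"delay in reaching baseline level: {delay_years:.1f} years")
```

With these inputs, consumption on the mitigation path ends the century about 5% below the baseline path, which is equivalent to reaching the same level of prosperity between two and three years later.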

Because Victor & Kennel complain about policy makers “chasing an unattainable goal”, they apparently assume that their alternative proposal of focusing on specific climate impacts would lead to a weaker, more easily attainable limit on global warming. But they provide no evidence for this, and most likely the opposite is true. One needs to keep in mind that 2 °C was already devised based on the risks of certain impacts, as epitomized in the famous “reasons of concern” and “burning embers” (see the IPCC WG2 SPM page 13) diagrams of the last IPCC reports, which lay out major risks as a function of global temperature. Several major risks are considered “high” already for 2 °C warming, and if anything many of these assessed risks have increased from the 3rd to the 4th to the 5th IPCC reports, i.e. may arise already at lower temperature values than previously thought.

One of the rationales behind 2 °C was the AR4 assessment that above 1.9 °C global warming we start running the risk of triggering the irreversible loss of the Greenland Ice Sheet, eventually leading to a global sea-level rise of 7 meters. In the AR5, this risk is reassessed to start already at 1 °C global warming. And sea-level projections of the AR5 are much higher than those of the AR4.

Even since the AR5, new science is pointing to higher risks. We have since learned that parts of Western Antarctica probably have already crossed the threshold of a marine ice sheet instability (it is well worth reading the commentaries by Antarctica experts Eric Rignot or Anders Levermann on this development), and that significant amounts of potentially unstable ice exist even in East Antarctica, held back only by small “ice plugs”. Regarding extreme events, we have learnt that record-breaking monthly heat waves have already increased five-fold above the number in a stationary climate. (These are heat waves like the one in Europe in 2003, which caused ~70,000 fatalities.)

And we should not forget that after 2 °C warming we will be well outside the range of temperature variation of the entire Holocene; the planet will be hotter than anything experienced during human civilisation.

If anything, there are good arguments to revise the 2 °C limit downward. Such a possible revision is actually foreseen in the Cancun Agreements, because the small island nations and least developed countries have long pushed for 1.5 °C, for good reasons.

Uncritically adopted?

Victor & Kennel claim the 2 °C guardrail was “uncritically adopted”. They appear to be unaware of the fact that it took almost twenty years of intense discussions, both in the scientific and the policy communities, until this limit was agreed upon. As soon as the world’s nations agreed at the 1992 Rio summit to “prevent dangerous anthropogenic interference with the climate system”, the debate started on how to specify the danger level and operationalize this goal. A “tolerable temperature window” up to 2 °C above preindustrial was first proposed as a practical solution in 1995 in a report by the German government’s Advisory Council on Global Change (WBGU). It subsequently became the climate policy guidance of first the German government and then the European Union. It was formally adopted by the EU in 2005.

Also in 2005, a major scientific conference hosted by the UK government took place in Exeter (covered at RealClimate) to discuss and describe scientifically what “avoiding dangerous climate change” means. The results were published in a 400-page book by Cambridge University Press. Not least there are the IPCC reports as mentioned above, and the Copenhagen Climate Science Congress in March 2009 (synthesis report available in 8 languages), where the 2 °C limit was an important issue discussed also in the final plenary with then Danish Prime Minister Anders Fogh Rasmussen (‘Don’t give us too many moving targets – it is already complex’).

After further debate, 2 °C was finally adopted at the UNFCCC climate summit in Cancun in December 2010. Nota bene as an upper limit. The official text (Decision 1/CP.16 Para I(4)) pledges

to hold the increase in global average temperature below 2 °C above pre-industrial levels.

So talking about a 2 °C “goal” or “target” is misleading – nobody in their right mind would aim to warm the climate by 2 °C. The goal is to avoid just that, namely keeping warming below 2 °C. As an upper limit it was also originally proposed by the WBGU.

What are the alternatives?

Victor & Kennel propose to track a bunch of “vital signs” rather than global-mean surface temperature. They write:

What is ultimately needed is a volatility index that measures the evolving risk from extreme events.

As anyone who has ever thought about extreme events – which by definition are rare – knows, the uncertainties relating to extreme events and their link to anthropogenic forcing are many times larger than those relating to global temperature. It is rather illogical to complain about ~0.1 °C variability in global temperature, but then propose a much more volatile index instead.

Or take this proposal:

Because energy stored in the deep oceans will be released over decades or centuries, ocean heat content is a good proxy for the long-term risk to future generations and planetary-scale ecology.

It seems that the authors are not getting the physics of the climate system here. The deep oceans will almost certainly not release any heat for at least a thousand years to come; instead they will continue to absorb heat while slowly catching up with the much greater surface warming. It is also unclear what the amount of heat stored in the deep ocean has to do with risks and impacts at our planet’s surface – if deep ocean heat uptake increases (e.g. due to a reduction in deep water renewal rates, as predicted by IPCC), how would this affect people and ecosystems on land?

Vital Signs

The idea to monitor other vital signs of our home planet and to keep them within acceptable bounds is of course neither bad nor new. In fact, in addition to the 2 °C warming limit the WBGU has also proposed to limit ocean acidification to at most 0.2 (in terms of reduction of the mean pH of the global surface ocean) and to limit global sea-level rise to at most 1 meter. And there is a high-profile scientific debate about further planetary boundaries which Victor & Kennel don’t bother mentioning, although the 2009 Nature paper A safe operating space for humanity by Rockström et al. already has clocked up 932 citations in Web of Science. The key difference to Victor & Kennel, apart from the better scientific foundation of these earlier proposals, is that these bounds are intended as additional and complementary to the 2 °C limit and not to replace it.

If one wanted to sabotage the chances for a meaningful agreement in Paris next year, towards which the negotiations have been ongoing for several years, there’d hardly be a better way than restarting a debate about the finally-agreed foundation once again, namely the global long-term goal of limiting warming to at most 2 °C. This would be a sure recipe to delay the process by years. That is time which we do not have if we want to prevent dangerous climate change.

Footnote

(1) According to IPCC, mitigation consistent with the 2°C limit involves annualized reduction of consumption growth by 0.04 to 0.14 (median: 0.06) percentage points over the century relative to annualized consumption growth in the baseline that is between 1.6% and 3% per year. Estimates do not include the benefits of reduced climate change as well as co-benefits and adverse side-effects of mitigation. Estimates at the high end of these cost ranges are from models that are relatively inflexible to achieve the deep emissions reductions required in the long run to meet these goals and/or include assumptions about market imperfections that would raise costs.

Links

The Guardian: Could the 2°C climate target be completely wrong?

We have covered just the main points – a more detailed analysis [pdf] of the further questionable claims by Victor and Kennel has been prepared by the scientists from Climate Analytics.

Climate Progress: 2°C Or Not 2°C: Why We Must Not Ditch Scientific Reality In Climate Policy

Carbon Brief: Scientists weigh in on two degrees target for curbing global warming. Lots of leading climate scientists comment on Victor & Kennel (none agree with them).

Jonathan Koomey: The Case for the 2 C Warming Limit

Robert Watson and Marlene Moses: Is it time to abandon 2 degrees?

Update 6 October: David Victor has posted a lengthy response at Dot Earth. I was most surprised by the fact that he says that I use “the same tactics that are often decried of the far right—of slamming people personally with codewords like “political scientist” and “retired astrophysicist” to dismiss us as irrelevant”. I did not know either author, so I looked up Thomson Reuters Web of Science (as I routinely do) to see what their field of scientific work is. I have no idea why calling someone a political scientist or astrophysicist are “codewords” (for what?) or could be taken as “ad hominem slam” (I’m proud to have started my career in astrophysics), or why a political scientist should not be exactly the kind of person qualified to comment on the climate policy negotiation process. I thought that this is just the kind of thing political scientists analyse. In any case I want to make very clear that characterising these scientists by naming their fields of scientific work was not intended to call into question their expertise, nor did my final paragraph intend to imply they are trying to sabotage an agreement in Paris – I just fear that this would be the (surely unintended) effect if their proposal were to be adopted.

By your references to “global warming” I infer you mean global surface warming.

Krugman:

Continues here:

http://www.nytimes.com/2014/09/19/opinion/paul-krugman-could-fighting-global-warming-be-cheap-and-free.html

A combination of 4) and 5) in the progression

1) it’s not happening 2) it’s not us 3) it’s not bad 4) it’s too hard 5) it’s too late

There is much more embedded in the Victor and Kennel opinion piece than just a literal “2C” limit debate.

The article contains arguments that are highly flawed, for sure, starting with the opening line “… has been accepted uncritically and has proved influential” as anyone who has been following this issue knows that the 2 degree goal has always been contentious. That they can claim that 2 degrees has been accepted “uncritically” given so much easy-to-find-on-the-internet discussion about the problems with 2 degrees tells me that this is more about polemics than a serious discussion.

Victor and Kennel have provided plenty of fodder for the denialism noise machine: “… growth of average global surface temperature — which has stalled since 1998 … “.

Now Victor and Kennel may argue that they are not attempting to contribute to general confusion over climate change, but I believe it is naive to think publishing intentionally provocative articles on climate change will not somehow be used against any serious effort to address the issue.

It is not too cynical of me to believe that the usual suspects will read Victor and Kennel’s editorial and claim “SEE, the science is NOT settled!!”

I’ve been convinced for some time that the science communities’ effects on actual national policies on this issue have maxed out, that we are left with the large body problem of national and international politics which are driven by more basal issues than the fineries of intellectual debates within small circles of academics.

What is the likelihood we have enough energy in the system that we have already blown the 2 degree limit? It seems to me this is likely, especially if sensitivity is towards the top end of estimates.

If that is the case, what do we do then when 2 degrees no longer has value, simply push the next limit up to 3 degrees?

Perhaps the issue to be explored here is that natural variation has such a large influence on global average temperatures that, at the very least, we may need to factor in other measures with defined numbers to give governments a clearer idea of where we really are at in terms of the limits on carbon emissions.

While I disagree with the Nature article, I wonder if there is some merit in making other indicators as prominent as the 2 degree limit to highlight the urgency of the climate situation and encourage action.

In the mid-1980s as the debate over whether there was a causal link between lung cancer and smoking cigarettes was escalating, a tobacco company famously said, “Our product is doubt and confusion.” The scientists supporting that “product” were Victor & Kennel’s ancestors with similar expertise on the matter at hand.

One should always ask, “Cui bono?” about such opinion pieces.

Where did the 2C limit come from, what peer-reviewed papers?

That something is affordable (0.06 % of global GDP) doesn’t show that it is feasible. I expect the global population becoming largely vegetarian is hugely affordable, even profitable (due to the relative inefficiencies of meat production), but it isn’t going to happen any time soon. Whilst it’s clearly technically feasible to avoid 2C warming, in my opinion it is no longer politically/culturally feasible.

#7–Adam, your question about the genesis of the 2-degree limit seems to presuppose a simple answer–one in which there are a couple of papers you could look up and be done. But let’s reread the relevant description from the post, above:

That’s mostly about the political deliberations, to be sure, not the scientific underpinnings of the goal. But note the citations: the CUP book, the relevant Assessment Reports (presumably TAR and AR4) and the Copenhagen Synthesis Report of 2009. That’s a bunch of reading, of course. I presume that, as with so much in the climate change context, there are many, many papers on impacts, mitigation and adaptation that figured into the debate. Frustrating, but that may just be how it is.

Hi there, just wanted to give you a brief heads up and let you know a few of the images aren’t loading correctly. I’m not sure why but I think it’s a linking issue. I’ve tried it in two different browsers and both show the same outcome.

Part 8: The Invention of the Two-Degree Target

“A group of German scientists, yielding to political pressure, invented an easily digestible message in the mid-1990s: the two-degree target.

But this is scientific nonsense.

“Two degrees is not a magical limit — it’s clearly a political goal,” says Hans Joachim Schellnhuber, director of the Potsdam Institute for Climate Impact Research (PIK).

“The world will not come to an end right away in the event of stronger warming, nor are we definitely saved if warming is not as significant.

The reality, of course, is much more complicated.”

Schellnhuber ought to know.

He is the father of the two-degree target.

“Yes, I plead guilty,” he says, smiling.

The idea didn’t hurt his career. In fact, it made him Germany’s most influential climatologist. Schellnhuber, a theoretical physicist, became Chancellor Angela Merkel’s chief scientific adviser — a position any researcher would envy.”

http://www.spiegel.de/international/world/climate-catastrophe-a-superstorm-for-global-warming-research-a-686697-8.html

[Response: Typical Spiegel stuff, a rather imaginative story…

Of course the 2 °C limit is a political choice – who else other than elected representatives would have a mandate for a normative choice for climate policy? Science can only help to inform this choice, i.e. provide an analysis of the risks that come with different warming levels, as thousands of peer-reviewed studies and the assessment of this literature by the IPCC do. What level of risk society wants to accept, and thus what goals are considered appropriate for climate policy, is not for scientists to decide. It is a decision similar to that about a speed limit on a piece of road, which is also a risk assessment that can be informed by scientific data like accident statistics but remains a political decision. And of course a limit of 50 km/h is not chosen because at 51 km/h something terrible happens – risks overall increase gradually. That even applies in the presence of thresholds (like that for marine ice sheet instability), because it is not precisely known where they are, so that the risk of crossing them gradually increases with more warming. -stefan]

The IPCC’s “0.06%” reduction on GDP growth rates should be read – and should have been presented – as “zero to well within measurement error”.

Economic indicators are inherently much, much more uncertain than physical ones. GDP isn’t a measurement, it’s an estimate – consult any manual of national income statistics or methods pages of statistics agencies on the numerous judgement choices and corrections that you have to make. For instance, production (GDP/NNP) and income statistics (GNI/NNI) should match perfectly. They never do in practice. The discrepancy for the US in 2003 was $23bn, or roughly 0.2%. Past GDP is routinely corrected with changes an order of magnitude higher than 0.06%. Any economist would say that forecasting next year’s GDP to within 0.1% is fanciful. For 50 years, it’s a joke.

Adam,

Good question. It appears to have originated with the UNEP advisory group in 1989 when they stated, ‘2ºC increase was determined to be “an upper limit beyond which the risks of grave damage to ecosystems, and of non-linear responses, are expected to increase rapidly”.’

In 1996, the EU established its 2C policy target to avoid “the worst impacts” of global climate change.

The number appears to be more of a convenient choice, rather than a strict barrier. Indeed, some have been quite critical of this choice:

“The 2 deg C limit is talked about by a lot within Europe. It is never defined though what it means. Is it 2 deg C for the globe or for Europe? Also when is/was the base against which the 2 deg C is calculated from? I know you don’t know the answer, but I don’t either! I think it is plucked out of thin air.” — Phil Jones, email to Christian Kremer, 06Sept2007

[Response: In the original proposal by WBGU in 1995, in the EU decision of 2005 linked to above (“… to ensure that global average temperature increases do not exceed pre-industrial levels by more than 2°C”) and in the Cancun decision cited above these points are clearly defined. Not sure what the point is of citing some old email by someone who simply says he does not know how it is defined. -stefan]

“Climate policy needs a “long-term global goal””

I disagree – that’s one way of doing it.

The other way is a Pigovian tax that prices carbon based on the likely harm (taking into account the uncertainties and the non-linear impacts). A “goal” isn’t needed: fossil fuels will only be burnt if they provide more economic value than harm. Of course we can ramp up the carbon tax over time to work with the grain of the economic cycle and reduce the economic dislocation, so that, for example, existing power stations can be used for the remainder of their useful life, while the prospect of a steadily increasing carbon tax rate would make building new fossil fuel power stations uneconomic.

Off-topic, but necessary to say:

Indian Prime Minister Modi, during his visit to the US, at the event at Madison Square Garden and at the Council on Foreign Relations, spoke repeatedly of the crisis that climate change is bringing upon the planet, and of his plans for clean energy for India. He has the slogan “zero defect, zero effect” for manufacturing in India – i.e., Indian manufacturing will try to be world class and try to have zero net effect on the environment.

Strange that this has received next to zero coverage in the US media and blogs.

If you estimate the sustainable rate of carbon dioxide emission (so that CO2 concentration remains constant) for the planet, and divide it by the world population, you get a per capita carbon budget (tons of CO2 per person per year).

[Response: I would say that the sustainable rate of CO2 emission is zero. Simplifies the math, if not the situation. David]

Two important observations:

Indians as of 2007 were well below this per capita limit.

1250 million Indians want to industrialize and fast (that is why they voted for Modi).

So what India is able to do is crucial to the future of the planet’s climate.

When the Indian Prime Minister Modi comes to the US and talks about climate change, and his aspiration to industrialize in a carbon-neutral way, and the US media collectively gives a yawn, it is infinitely and amply clear, that climate change is not taken seriously here, even in the liberal section of the media. When a key player in the world’s carbon dioxide economy lands on their shores, and talks planet-friendly, it is a non-event.

Conclusion: Climate change doesn’t exist for the Republicans; and for the Democrats, it is merely a way to bash Republicans as anti-science.

Sorry, $25.6 bn (Wikipedia).

@7 Uh, did you read the article? A summary of the scientific process that led to the 2 degree guideline is pretty clearly given. This process included much peer review and discussion.

The link to Climate Analytics is malformed…..

[Response: Thanks, should work now, Stefan]

Sabotage is the correct word.

I think that we have not considered targets below preindustrial which is a shame. Dropping sea level by a couple meters would likely pay for itself in coastal real estate development.

We don’t need goals and targets. We need to end all fossil fuel use as rapidly as possible, period. It’s very simple.

William Nordhaus’s new book (the title of which triggered a spam warning?!) has a lot to say along these lines that is for the most part consistent with this post.

If this piece will be reposted on “Klimalounge” my clear projection is it’ll break the 200°-barrier by Schellnhuber-/Rahmstorf-/PIK-haters within hours. If only this energy could be conserved…

Thank you Stefan for this post.

It is a very informative and well documented history of the 2°C limit.

Adam Gallon @7.

I believe the 2ºC limit first appeared in 1990 as part of the work of the AGGG. (The limit thus predates the foundation of the WBGU suggested as the source in the Der Spiegel article mentioned @11.) The need to define targets had first been identified at the Villach & Bellagio workshops organised by WMO, UNEP & ICSU in 1987, which led to the AGGG being formed. While some of the 1990 numbers appeared first at Villach-Bellagio, the 2ºC wasn’t among them. The development of the targets as described in the full 1990 report was the work of G.W. Heil and M. Hootsmans, who acknowledge that their ‘temperature targets’ “agree with the temperature targets suggested by the Deutsche Bundestag (1989).” Those who can read German may be able to glean from Deutsche Bundestag, ed. 1989. Protecting the Earth’s Atmosphere: An International Challenge. what is meant by ‘agreeing’. Some may find this five page PDF extract from the full report’s Preface more manageable than the whole thing.

(I have also seen some rather fanciful commentary online that says these limits were first written on the back of a napkin in a Stockholm restaurant, presumably after a well ‘lubricated’ meal.)

Note that the “danger” engendered is not specifically about mankind but about ecosystems as well as about “non-linear responses” by the climate. The 2ºC was only one of six targets, two for SLR, one CO2e ppm, one for rate of surface warming and two “absolute temperature targets” of which the 2ºC was the higher limit which engenders “risks of grave damage to ecosystems” rather than just possible “extensive ecosystem damage.”

Arun, re India:

It remains to be seen if Modi means it. His clean energy goals are abstract and in the future. India is still building coal power plants, for example.

I hope you’re right, but I worked in India for a while. Modi’s political party, the BJP, has strong relationships with local holding companies such as Tata and Reliance. They are likely to block any attempts to decarbonize.

Unfortunately, I think that a lot of the incoherency (e.g., about the hiatus and ocean uptake) in the Victor and Kennel piece takes away from a good dialogue on why one could sensibly advocate for multiple climate targets, while still not losing sight of the “gold standard” (near-zero emissions, e.g., see Ken Caldeira’s tweet and further expressed here).

While zero emissions is a goal that can be made independent of targets, the science (in particular, the questions we can ask of climate models) has advanced to the point where we can answer practical questions that policymakers have, test the response to mitigation strategies (e.g., temperature response to carbon emitted, quantify responses to gases that exhibit feedbacks on atmospheric chemistry, or agents like soot that feature climate-health co-benefits, etc.); this acts to create improved discourse along the halfway point between a step-function zero emission extreme and the political reality that such a future won’t happen overnight.

Proposals to extend the 2 C target to other metrics (and to better fulfill Article 2 of the UNFCCC) have already been advanced (e.g., Steinacher et al., 2013). People have also argued that ocean acidification ought to fall under the Article 2 mandate, “…to allow ecosystems to adapt naturally to climate change, to ensure that food production is not threatened and to enable economic development to proceed in a sustainable manner.” However, Steinacher et al. have shown that temperature targets aren’t generally the most restrictive, and also that you encounter a lower probability of meeting multiple targets simultaneously (for given emissions) than you do for even the most restrictive target on a list of your favorite subset of things we ought to avoid. In such cases, I don’t see much distinction between replacing a 2 C limit and adding to it.

It is here where I take some issue with Stefan’s discussion relating to Figure 2 in the post (the arrow chart). We already have a very useful vehicle for linking anthropogenic input to impacts discussed here, including ocean acidification– this whole concept of “cumulative carbon emissions.” Stefan argues that moving further toward the beginning of the causation chain creates extra steps of uncertainty, but I’d argue that (1) There are countless papers now that rather robustly link peak temperatures (roughly linearly) to cumulative carbon emissions, plus some accounting for short-lived non-CO2 greenhouse gases, and (2) what you lose in creating another step from emissions to temperature to impacts, you gain from creating a more tractable and concise metric for policy-makers, and one that still projects onto non-temperature related metrics like ocean acidification.
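A rough sketch of that near-linear relationship, for illustration only: it assumes peak warming is simply proportional to cumulative carbon emissions, with the proportionality constant (the transient climate response to cumulative emissions) taken from the 0.8 to 2.5 °C per 1000 GtC range that the AR5 assesses as likely. The function names, the midpoint value and the neglect of non-CO2 forcings are simplifying assumptions of this sketch, not part of the argument above.

```python
def peak_warming(cumulative_gtc, tcre_per_1000_gtc):
    """Peak warming in °C, assumed proportional to cumulative emissions (GtC)."""
    return tcre_per_1000_gtc * cumulative_gtc / 1000.0


def carbon_budget(limit_c, tcre_per_1000_gtc):
    """Cumulative emissions in GtC compatible with a given warming limit."""
    return 1000.0 * limit_c / tcre_per_1000_gtc


# Low end, midpoint and high end of the assumed 0.8-2.5 °C per 1000 GtC range.
for tcre in (0.8, 1.65, 2.5):
    budget = carbon_budget(2.0, tcre)
    print(f"TCRE {tcre:.2f} °C/1000 GtC -> budget for 2 °C: {budget:.0f} GtC")
```

The single number a policy-maker would track in this framing is the remaining cumulative budget; the spread across the assumed range shows that the uncertainty does not disappear, it is carried by the size of the budget instead.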

So, a quick list of where we can make sense of a temperature target:

1) If your favorite metric is purely climatic, then I basically agree that you can usually scale the severity of most things to global-mean temperature. This includes the amount of water vapor in the air, Arctic warming, sea ice loss, land warming, and I suspect (but haven’t checked) the fraction of global land covered by n-sigma temperature extremes at any given time relative to climatology (pick your favorite n). For more local impacts, like rainfall over southern South America, these things may be less robustly linked or coupled strongly to other factors (e.g., ozone depletion/recovery) and the large-scale hydrologic cycle (but see point #2 below).

2) If you consider other forcings (e.g., black carbon) or aerosols that force interhemispheric asymmetries or vertical structures in radiation anomalies, then you get things like ITCZ migration and a breakdown of global precipitation-temperature scalings, and you start to lose contact between global temperature metrics and changes in the hydrologic cycle– things we care about, and which still exhibit some robust large-scale responses to CO2-forced warming.

3) Even for sea-level rise, even though this relates to temperature, SLR continues well after global temperatures stabilize due to heat mixing into the oceans (e.g., Meehl et al., 2012)– even before you think about slow components associated with ice sheets– so you end up with a breakdown in relating temperature to SLR commitments before equilibrium.

4) If we extend our interest to ocean acidification, then the temperature target is of no validity.

All of these things can be traced in some respect to total emissions, but there are many value systems, in addition to different vulnerabilities/risks, so I don’t see the inherent danger in presenting multiple metrics of what constitutes “dangerous.” What emerges from this is probably a cumulative emission goal that would lead to warming of far less than 2 C, as Stefan noted. But the logic that this added complexity will hinder political progress is just as odd as the notion that we should dispense with a 2 C target because we won’t have the political will to meet it.

Mike,

Modi feels he has already taken some action on climate prior to becoming PM. http://www.narendramodi.in/convenient-action-2/

I see the problem. Extrapolate the trend line out until it reaches the 2C line and we’re looking at well into the next century. That’s not going to frighten anyone sufficiently, is it? That’s why the tack is being changed.

Neither annual variation nor decadal changes are the right metric. Short term variations are irregular and involve significant changes in global surface temperature.

http://www.cru.uea.ac.uk/cru/data/temperature/HadCRUT4.png

It results in part from significant changes in cloud radiative forcing associated with changes in ocean and atmospheric circulation – e.g. http://meteora.ucsd.edu/~jnorris/reprints/Loeb_et_al_ISSI_Surv_Geophys_2012.pdf

The CERES net product looks like this.

http://watertechbyrie.files.wordpress.com/2014/06/ceres_ebaf-toa_ed2-8_anom_toa_net_flux-all-sky_march-2000toapril-2014.png

What it shows is large variability in TOA radiative flux.

Earlier ERBS data looks like this.

http://watertechbyrie.files.wordpress.com/2014/06/erbs.png

It shows a 0.7 W/m2 decrease in IR forcing and a 2.1 W/m2 increase in SW forcing (AR4 s3.4.4.1). This data shows a dominant role for natural warming in the 1976 to 1998 warming – although you may always critique the data. These are anomalies – an order of magnitude more precise than absolute values. At the very least it raises some interesting questions.

The critical multi-decadal periods are obvious in the surface record. Changes in the trajectory of surface temperature occur in 1909, 1944, 1976 and 1998. These coincide with shifts in ocean and atmospheric indices.

Anastasios Tsonis, of the Atmospheric Sciences Group at University of Wisconsin, Milwaukee, and colleagues used a mathematical network approach to analyse abrupt climate change on decadal timescales. Ocean and atmospheric indices – in this case the El Niño Southern Oscillation, the Pacific Decadal Oscillation, the North Atlantic Oscillation and the North Pacific Oscillation – can be thought of as chaotic oscillators that capture the major modes of climate variability. Tsonis and colleagues calculated the ‘distance’ between the indices. It was found that they would synchronise at certain times and then shift into a new state.

It is no coincidence that shifts in ocean and atmospheric indices occur at the same time as changes in the trajectory of global surface temperature. ‘Our interest is to understand – first the natural variability of climate – and then take it from there. So we were very excited when we realized a lot of changes in the past century from warmer to cooler and then back to warmer were all natural,’ Tsonis said.

The changes at these multi-decadal scales would seem to be climatologically significant. The complex, dynamical mechanisms in play suggest – on the basis of past behavior – that the hiatus could persist for decades. Indeed this has been addressed in a past RC post – Warming interrupted – much ado about natural variation.

Their argument was that a hemispheric annual average temperature is not a meaningful metric of anything. What is so wrong with a metric that objectively measures bad outcomes for human beings? Could it be that by all objective measures of extreme events, people and the planet are doing just fine despite a 1˚C increase in temperature?

Why don’t we quit wasting our time worrying about meaningless measures of global annual average temperature and worry about how to develop sustainably, protect our water resources and distribute natural resources more equitably?

@11 > “The idea didn’t hurt his career. In fact, it made him Germany’s most influential climatologist. Schellnhuber, a theoretical physicist, became Chancellor Angela Merkel’s chief scientific adviser–a position any researcher would envy.”

This is an outright slur because it doesn’t say what led this particular (and particularly brilliant) theoretical physicist to become a climatologist.

The PNAS profile of H.J. (John) Schellnhuber is very helpful. It’s a biography that is totally intriguing–an intrigue not of the Spiegel kind:

“The institute is on the north coast of Germany and specializes in tidal flat research. Tidal flats are ‘very peculiar ecosystems,’ he says. ‘[They are] a sort of fractal structure. It’s interesting how the water is transported and how nutrients are transported through these fractal structures, how algae start to settle there.’ Researchers from geology, biology, and chemistry came to ask him for advice about how to construct a mathematical model for their specialties. ‘So I started to become interested in ecosystems,’ he says, ‘not because I was a green activist, it was simply through sheer scientific curiosity.’

“His own group focused on the stability of nonperiodic orbits, using Kolmogorov-Arnold-Moser theory. ‘That is probably the most complicated mathematical issue you can do in nonlinear dynamics,’ he says. Meanwhile, his colleagues in the institute were considering how algae grew in the mud. ‘I found it refreshing.’ he says. ‘You cannot do 12 hours a day thinking of Kolmogorov-Arnold-Moser theory. There may be some people doing that but, in general, you do it a few hours a day. I found it more enjoyable to talk to people and even go out to the tidal flats to look at the structures.'”

http://www.pnas.org/content/105/6/1783.full

The Spiegel kind of intrigue is happening alright–as observed here:

http://www.slate.com/articles/health_and_science/science/2014/10/the_wall_street_journal_and_steve_koonin_the_new_face_of_climate_change.html#

“Being a smart physicist can just give you more elaborate ways to delude yourself and others, along with the arrogance to think you can do so without taking the time to really understand the subject you are discussing. …If you expose a panel of physicists who are ignorant of climate science to 50 percent wisdom and 50 percent nonsense, one cannot hold out much hope for a good outcome.”

Mike,

Modi is not going to not industrialize as fast as he can just because he lacks clean energy sources. He wants to, if he can, to the extent possible, etc., which is why I think he wanted to learn from Americans what might be possible, and to what extent they are willing to help.

Anyway, this was India’s stand on refrigerant gases before Modi:

http://www.thehindu.com/news/national/no-phasing-out-refrigerant-gases-india/article5266096.ece

This is after Modi:

http://www.washingtonpost.com/national/health-science/obama-modi-announce-modest-progress-in-joint-efforts-to-fight-global-warming/2014/09/30/c3e7464e-48aa-11e4-b72e-d60a9229cc10_story.html

You can see Obama & Modi had some tiny progress. I was hoping for much, much more.

-Arun

“2 degrees” needs uncertainty bars, or gray fuzz, around it, for the same reason any other number, point or line should be shown as ‘somewhere in this range the slippery slope begins to accelerate.’

http://0.tqn.com/y/grammar/1/G/S/S/-/-/blackboard_slippery_slope.jpg

“All politics takes place on a slippery slope ….”

George Will, Statecraft as Soulcraft: What Government Does (Simon & Schuster, 1983).

It appears from the graph that the warming from 1905 to 1945 is a very close match to the rise from 1965 to 2000. Can this be explained in layman’s terms that make a strong argument that the latest rise is man-made whereas the earlier rise was natural?

I think this is an obvious question from anybody looking at this graph and would be good to have a few words of explanation included.

Thanks, Stefan. However, you seem to be saying that there is a strong case for a temperature limit, rather than that there is a strong case for the 2°C limit. The UNFCCC wants to avoid dangerous climate change. As you’ve shown, the 2°C limit probably won’t do this (e.g. the risk of GIS destabilisation is at 1°C). The safe operating space for humanity, that you cited, puts the safe CO2 concentration at 350 ppm (IIRC). Hansen, et al (2013) puts the possibly safe limit at 1°C (“possibly” because it may be lower).

Regarding that estimate of the costs of achieving 2°C, I understand the IPCC report said that all analyses are incomplete, so I’m not sure what the benefit of stating an incomplete estimate is, especially as it is unknown what level the concentration of CO2 has to be limited to in order to achieve the goal.

Having an unmoving goal of something one doesn’t know how to achieve doesn’t seem to me to be an advantage of it. If, as you say, the CO2 concentration needs to be adjusted to keep warming below 2°C, then that greatly alters the possibility of achieving the goal. So I’m not convinced that it is a good goal. Hansen’s target of 350 ppm seems a much better one – it’s something tangible, but will likely cost far too much, so won’t be adopted. Which leaves us with …

#26–Mm, some of the Modi renewables initiatives seem pretty concrete to me. But it’s true that there seems little concern on climate; the emphasis on solar may be more motivated by the shortcomings of the coal-dominated status quo:

http://thediplomat.com/2014/10/indias-renewable-energy-opportunity/

MA,

Thank you for the reference. It was rather informative. Contrary to the post @17, the value was a target rather than a limit. There was nothing magical about this value; it was simply a convenient goal. It was much easier to target a whole number in subsequent discussion than a fraction, as those involved realized that there is a continuum of consequences that will occur as temperatures rise. Others jumped on this convenient value, and it was incorporated into the previously mentioned documents.

“Limiting global warming to 2 °C – why Victor and Kennel are WRONG” … ?

This article is what is known as a REACTIVE Response to the COGNITIVE FRAMING already created by David Victor and Charles Kennel.

What we have here is a classical example of the Dog being Wagged by the Tail.

This approach has and will always FAIL to achieve the Goals of the Author, Stefan and Real Climate. The only people this article will reach or positively influence are the already “True Believers”, and not the middle ground, and definitely not the Political Power Base. The only power that is able to effect lasting change.

This issue of “Framing public discourse” is well known, is scientifically based, and has been used in advertising and politics now for over 40 years. Defaulting to responding to the specific arguments put by David Victor and Charles Kennel only serves to REINFORCE those very arguments and the overall Framing of the Public discourse.

This approach is doomed to fail. It has never worked, and will never work to positively effect any change in people’s thinking or judgment.

Change your approach or you will lose not just this minor battle, but the whole War at hand. The Hook has been baited, and Stefan has “taken it hook, line and sinker.” To use a fishing METAPHOR. (It’s not an analogy)

The only solution is to bring in real professionals in public communications and set your own FRAMING of the “debate” using powerful Linguistic tools that are well known and science based.

Several come to mind here: George Lakoff, George Marshall, Will Stefan, Luke Menzies & Will Grant, Peter Ward, Hans Rosling, Kevin Anderson, Yale Forum on Climate Change & the Media, et al.

— —

Now what if the 2C is wrong anyway? Like because it is set too HIGH.

The article quotes: “…to hold the increase in global average temperature below 2 °C above pre- industrial levels.”

And yet the facts suggest, at least to many, that the past 10-20 years projections/estimates of the Physical Manifestations in Changes expected from increasing CO2 rising and Temperature increase over pre-industrial are OBVIOUSLY occurring much faster than any major body or qualified science group has ever “predicted” since Rio or before.

Many (several?) scientists/bodies are indicating that 2C is already in the pipeline on the existing CO2 emissions already produced.

The real question to be asked and answered urgently, in my humble opinion, is should the below 2C be lowered to below 1C?

That is the proper framing of the “debate” that needs to be had: the Scientific, Public and Political dialogues need to be FRAMED by the Scientific community using Scientific based communication approaches that actually do work and can generate real change.

This kind of approach means that the focus will not continue to be driven by the likes of the Murdoch Press, Der Spiegel, Judith Curry, nor by David Victor and Charles Kennel. Whatever they say will become redundant and irrelevant to the MAJORITY and the global political dialogues.

As interesting, factual, and logical as Stefan’s article is in attempting to “debunk” the Nature article, I fear all it has done is bring unnecessary attention to it, and allow them to take the High Ground in this particular “battle” of to 2C or not to 2C. But that ain’t the critical question anyway, and will answer and solve nothing.

Peace!

Nordhaus interview/story at NPR:

http://www.npr.org/2014/02/11/271537401/economist-says-best-climate-fix-a-tough-sell-but-worth-it

Alvin Stone – I really appreciate what Dr Mann said in this statement;

“If we miss the 2°C mark, it doesn’t mean we just stop and give up – because 3°C degrees is worse than 2.5°C, and 4°C is a whole lot worse. So if we miss that exit ramp, we still have to do everything we can to get off at the next exit.” – See more at: http://www.planetexperts.com/climate-scientist-michael-mann-looking-entirely-different-planet/#sthash.sql0Tj12.dpuf

I have personally witnessed nature bouncing back in the Sea of Cortez back in the 70’s and 80’s. It was dead or dying and serious conservation was implemented along with economic support for those adversely affected (a key issue for any conservation effort and ironically financed by Mexico’s petroleum extraction). The Sea is now fantastically recovered, at least it was last I was there in 1998.

Chris Dudley @19, be careful what you wish for. A below preindustrial CO2 target level could risk dangerous climate change in the opposite direction, seeing as we are several thousand years past peak Milankovitch forcing and should be nearing the end of the current interglacial.

It would be fairer to identify David Victor not as a “political scientist” but as a “coordinating lead author on the most recent IPCC report on climate-change mitigation” (http://mitigation2014.org/contributor/chapter-1). Incredibly, you don’t even mention that you are linking approvingly to an IPCC summary that he cowrote (http://report.mitigation2014.org/spm/ipcc_wg3_ar5_summary-for-policymakers_approved.pdf). And his coauthor, Charles Kennel, is not just a “retired astrophysicist” but the former director of NASA’s Mission to Planet Earth, a comprehensive survey and observation of our home planet that had a major climate-change focus. Meanwhile, you grossly misinterpret the costs, because the 0.06% figure you quote (from Victor’s summary for policy makers) is an ideal scenario that depends on massive deployment of carbon-capture technology for both biomass and coal–technology that environmental groups are fighting tooth and nail. In any real world scenario, as is made clear in chapters 1 and 6 of the same report, the actual costs of climate change mitigation are likely to be much higher. Since that is one of the roots of their worry about the feasibility of the 2-degree target, the point is important. There is real room to argue that Victor and Kennel are wrong. But you should not stack the deck by pretending they are not significant figures and that the worries about cost are trivial.

Setting a “target” for something that is outside our control does not make sense to me. We, mankind, can not directly control global-mean surface temperature.

This is true of climate science the same way it is true in setting statistical targets for global corporations — every target set has to be able to be directly controlled by the people responsible for it.

It makes much more sense to set targets for something controllable, like: CO2 emissions, number of new nuclear power plants opened, number of people newly having access to electrical power, percentage of those supplied from CO2 emissions controlled sources, etc.

And Dr James Hansen, retired director of NASA-GISS, who single-handedly in the 1980’s completely RESET and RE-FRAMED the global debate on Global Warming in one speech.

It was a cognitive switch he flicked that galvanized a world to take the steps which led to the Rio Summit on Global warming and Climate Change.

He didn’t follow the accepted play book. He created a new one, and he did it with WORDS alone. At that time there was no globally financed anti-AGW tag team of talking heads motivated by self-interest, greed and power.

Hansen said what he said with self-assured knowledge he was MORALLY RIGHT, and mixed that with personal passion and commitment to stand up and ACT.

Hansen used Metaphor (by accident or design) and captured the world’s Imagination in a way that made total sense to every man. Hansen just walked up to Congress and STOLE THE STAGE.

The chief ingredient in true individuality is self-responsibility. This is deciding for ourselves exactly how we are going to act and taking responsibility for what happens as a result.

This means giving up forever trying to blame others for anything that goes wrong along the way in our own lives or for not achieving our goals. Because everything we chose is our choice. No one else’s.

So, then how does pointing fingers at flaws in other people’s arguments fit with the goal of a true individual speaking truth to power?

It seems to me that all attempts at blaming or criticizing the leaders and spokespersons of the anti-Science & Reason Campaign only takes away our own power, because it implies that they have some kind of power over us and that what they have done and say defines our life.

The true individual, however, would never fall into this trap. They know that they make up their own minds and make their own choices and they choose what the TOPIC is and HOW that will be communicated to the whole world themselves – not anyone else!

Think about it. Then ask for help in solving the problem. For no man is an island, and no one can be all things to all people nor know all the answers to every question confronting us all.

Thanks

Revkin has a *very* lengthy response from Kennel and Victor on dot.earth today.

http://dotearth.blogs.nytimes.com/2014/10/03/getting-over-the-2-degree-limit-on-global-warming/#more-53273

“Of course the 2 °C limit is a political choice… What level of risk society wants to accept… is not for scientists to decide. It is a decision similar to that about a speed limit on a piece of road, which is also a risk assessment… but remains a political decision. And of course a limit of 50 km/h is not chosen because at 51 km/h something terrible happens… ”

-stefan

How will the hard-driving electorate regard advocates of the Precautionary Principle who think in terms of 31 mile an hour speed limits?

Wissenschaftkraft? Nein, Danke!

In Victor’s response, the discussion of what was agreed at Cancun seems mealymouthed. Regardless of their errors on climate science, this really is their core point, that a binding agreement can’t be achieved. It seems rather obvious that motion towards a binding agreement is happening. And, it is what the US has insisted on all along. Lack of binding commitments in Kyoto kept the US from ratifying, for example. Victor’s political theory thus seems very far separated from the actualities in international relations.

#43–Thanks, Steven; that’s well worth reading. I was struck by a couple of comments.

“What real firms and governments can actually deliver…” An aspect worth considering, given that the ‘delivery’ so far has been pretty disappointing.

The first-mentioned POV has certainly been on display here at RC, as regular readers will be able to recall without difficulty. Sometimes the proposed targets have been insisted upon with, er, considerable ferocity. The process wasn’t helpful here, where a considerable bent toward the ‘natural science world view’ exists, and would likely do less well in the wider world.

“The diplomats have been doing that for decades and people haven’t fallen into line.” Definition of insanity, anyone?

We won’t stop the UNFCCC process, which has considerable momentum as process (results are another question) and shouldn’t try. But perhaps there are ways to reinvigorate it, rather than simply ‘doubling down’, as Victor puts it elsewhere in the piece.

I’m not advocating for V & K here; I’m actually pretty agnostic on this whole question, since my foci on climate will be 1) social networking and organizing to get ‘boots on the ground;’ 2) writing and commenting; 3) research and analysis (basically to keep up, sort of.) The current topic right now for me falls into #3, and thus seems unlikely to imply changes to my behavior in the short term at least.

But in response to some of the ‘ferocious commenters’ of past RC debates, I’ve noted the messiness of real world responses to just about anything, and stated my intuition that there will never be a single, top-down climate plan covering everything neatly which will also have a hope in hell of adoption. (Especially if you take that word “everything” seriously.) Rather, responses will occur fractally, on many levels, as we see occurring now, and will change and interact over time. We’ll have to be responsive and change our behavior, probably at multiple points, to adjust to changing realities.

I still think that true, however much we might wish for clarity, simplicity or certainty.

Steven Sullivan @43.

That David Victor reply you link to has a very interesting sub-text. And for the record, it is 5,700 words long.

But the actual substance of the reply, apart from blaming Nature for restricting their word-count & citation-count and a whole lot of other waffle, comes down to firstly the cost of staying below a 2ºC rise and secondly the way measures of performance work.

The reply does seem to be starting to say that if technology works and is harnessed without problems, then a 2ºC rise can be avoided cheaply (the 0.06%). But it makes the point that technology will not always work and will not be harnessed without problems, so the cost will be….? And the reply then starts to say that we are not on target to stay below 2ºC.

Without being funny, that does appear to suggest the 2ºC limit is achievable and working as a measure of performance.

The reply also talks of a need for something other than a 2ºC limit because “abstract goals that may be well rooted in ultimate impacts are not what inspire actual changes in human behavior.” This is very true but by developing measures of performance that politicians and public can relate to, that can “actually affect the behavior of institutions (e.g., governments, firms) and people,” it does not follow that we have to ditch the 2ºC limit.

So it is difficult to see what Victor & Kennel are about with this piece, other than being loud and controversial (although much of the subtext of the reply is an apology). If they had come out batting for the adoption of the PwC Low Carbon Economy Index or some such, then maybe their message would find a more receptive audience. Instead they call for a return to square one which is surely the last thing we should be contemplating w.r.t. AGW.

I know that mitigation, particularly discussion of zero-emission energy sources, is generally off-topic at RC. However, discussing mitigation options seems inherent in any discussion of what is realistically achievable as far as limiting temperature increase.

As such, regarding the discussion of India earlier on this thread, I’d like to respectfully offer some recommended informative reading:

Can India Achieve 100% Renewable Energy?

By Darshan Goswami

http://www.EnergyCentral.com

August 21 2014

Excerpt:

Also, this link should retrieve a listing of recent articles on renewable energy developments in India:

http://cleantechnica.com/?s=India