I have written a number of times about the procedure used to attribute recent climate change (here in 2010, in 2012 (about the AR4 statement), and again in 2013 after AR5 was released). For people who want a summary of what the attribution problem is, how we think about the human contributions and why the IPCC reaches the conclusions it does, read those posts instead of this one.

The bottom line is that multiple studies indicate with very strong confidence that human activity is the dominant component in the warming of the last 50 to 60 years, and that our best estimates are that pretty much all of the rise is anthropogenic.

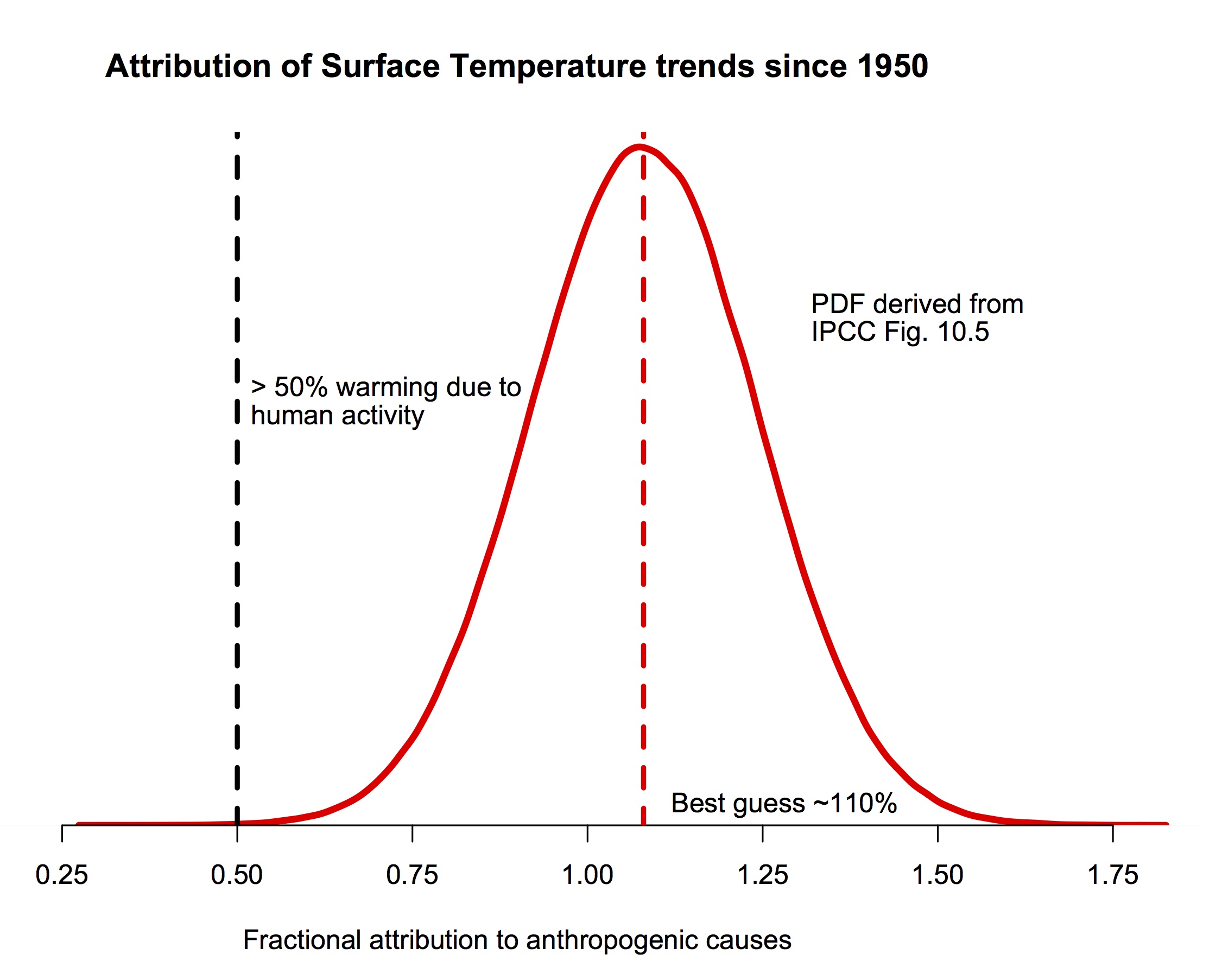

The probability density function for the fraction of warming attributable to human activity (derived from Fig. 10.5 in IPCC AR5). The bulk of the probability is far to the right of the “50%” line, and the peak is around 110%.

If you are still here, I should be clear that this post is focused on a specific claim that Judith Curry recently blogged about, supporting a “50-50” attribution (i.e. that trends since the middle of the 20th Century are 50% human-caused and 50% natural, a position that would center her pdf at 0.5 in the figure above). She also expressed puzzlement about why other scientists don’t agree with her. Reading over her arguments in detail, I find very little to recommend them, and perhaps the reasoning for this will be interesting for readers. So, here follows a line-by-line commentary on her recent post. Please excuse the length.

Starting from the top… (note, quotes from Judith Curry’s blog are blockquoted).

Pick one:

a) Warming since 1950 is predominantly (more than 50%) caused by humans.

b) Warming since 1950 is predominantly caused by natural processes.

When faced with a choice between a) and b), I respond: ‘I can’t choose, since I think the most likely split between natural and anthropogenic causes to recent global warming is about 50-50’. Gavin thinks I’m ‘making things up’, so I promised yet another post on this topic.

This is not a good start. The statements that ended up in the IPCC SPMs are descriptions of what was found in the main chapters and in the papers they were assessing, not questions that were independently thought about and then answered. Thus while this dichotomy might represent Judith’s problem right now, it has nothing to do with what IPCC concluded. In addition, framing this as a binary choice gives implicit (but invalid) support to the idea that each choice is equally likely. That this is invalid reasoning should be obvious by simply replacing 50% with any other value and noting that the half/half argument could be made independent of any data.

For background and context, see my previous 4 part series Overconfidence in the IPCC’s detection and attribution.

Framing

The IPCC’s AR5 attribution statement:

It is extremely likely that more than half of the observed increase in global average surface temperature from 1951 to 2010 was caused by the anthropogenic increase in greenhouse gas concentrations and other anthropogenic forcings together. The best estimate of the human induced contribution to warming is similar to the observed warming over this period.

I’ve remarked on the ‘most’ (previous incarnation of ‘more than half’, equivalent in meaning) in my Uncertainty Monster paper:

Further, the attribution statement itself is at best imprecise and at worst ambiguous: what does “most” mean – 51% or 99%?

Whether it is 51% or 99% would seem to make a rather big difference regarding the policy response. It’s time for climate scientists to refine this range.

I am arguing here that the ‘choice’ regarding attribution shouldn’t be binary, and there should not be a break at 50%; rather we should consider the following terciles for the net anthropogenic contribution to warming since 1950:

- >66%

- 33-66%

- <33%

JC note: I removed the bounds at 100% and 0% as per a comment from Bart Verheggen.

Hence 50-50 refers to the tercile 33-66% (as the midpoint)

Here Judith makes the same mistake that I commented on in my 2012 post – assuming that a statement about where the bulk of the pdf lies is a statement about where its mean is and that it must be cut off at some value (whether it is 99% or 100%). Neither of those things follows. I will gloss over the completely unnecessary confusion of the meaning of the word ‘most’ (again thoroughly discussed in 2012). I will also not get into policy implications since the question itself is purely a scientific one.

The division into terciles for the analysis is not a problem though, and the weight of the pdf in each tercile can easily be calculated. Translating the top figure, the likelihood of the attribution of the 1950+ trend to anthropogenic forcings falling in each tercile is 2×10⁻⁴% (below 33%), 0.4% (33-66%) and 99.5% (above 66%).
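As a rough check on how such tercile weights come about, the sketch below integrates a Gaussian stand-in for the pdf in the top figure. The mean (1.10) and standard deviation (0.167) are assumptions picked by eye to resemble that figure, not the values behind the actual calculation, and `normal_cdf` and `tercile_weights` are hypothetical helper names.

```python
import math

def normal_cdf(x, mean, sd):
    """Cumulative probability of a Gaussian; erfc keeps accuracy far out in the tail."""
    return 0.5 * math.erfc(-(x - mean) / (sd * math.sqrt(2.0)))

def tercile_weights(mean, sd, lower=0.33, upper=0.66):
    """Probability mass below `lower`, between the two bounds, and above `upper`."""
    below = normal_cdf(lower, mean, sd)
    middle = normal_cdf(upper, mean, sd) - below
    above = 1.0 - normal_cdf(upper, mean, sd)
    return below, middle, above

# ASSUMED parameters: a Gaussian eyeballed from the top figure, for illustration only.
MEAN, SD = 1.10, 0.167

below, middle, above = tercile_weights(MEAN, SD)
print(f"<33%: {below:.1e}   33-66%: {middle:.1e}   >66%: {above:.4f}")
```

With these assumed parameters almost all of the probability lands in the upper tercile, which is the qualitative point; the exact digits depend on the shape chosen for the pdf.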

Note: I am referring only to a period of overall warming, so by definition the cooling argument is eliminated. Further, I am referring to the NET anthropogenic effect (greenhouse gases + aerosols + etc). I am looking to compare the relative magnitudes of net anthropogenic contribution with net natural contributions.

The two IPCC statements discussed attribution to greenhouse gases (in AR4) and to all anthropogenic forcings (in AR5) (the subtleties involved there are discussed in the 2013 post). I don’t know what she refers to as the ‘cooling argument’, since it is clear that the temperatures have indeed warmed since 1950 (the period referred to in the IPCC statements). It is worth pointing out that there can be no assumption that natural contributions must be positive – indeed for any random time period of any length, one would expect natural contributions to be cooling half the time.

Further, by global warming I refer explicitly to the historical record of global average surface temperatures. Other data sets such as ocean heat content, sea ice extent, whatever, are not sufficiently mature or long-range (see Climate data records: maturity matrix). Further, the surface temperature is most relevant to climate change impacts, since humans and land ecosystems live on the surface. I acknowledge that temperature variations can vary over the earth’s surface, and that heat can be stored/released by vertical processes in the atmosphere and ocean. But the key issue of societal relevance (not to mention the focus of IPCC detection and attribution arguments) is the realization of this heat on the Earth’s surface.

Fine with this.

IPCC

Before getting into my 50-50 argument, a brief review of the IPCC perspective on detection and attribution. For detection, see my post Overconfidence in IPCC’s detection and attribution. Part I.

Let me clarify the distinction between detection and attribution, as used by the IPCC. Detection refers to change above and beyond natural internal variability. Once a change is detected, attribution attempts to identify external drivers of the change.

The reasoning process used by the IPCC in assessing confidence in its attribution statement is described by this statement from the AR4:

“The approaches used in detection and attribution research described above cannot fully account for all uncertainties, and thus ultimately expert judgement is required to give a calibrated assessment of whether a specific cause is responsible for a given climate change. The assessment approach used in this chapter is to consider results from multiple studies using a variety of observational data sets, models, forcings and analysis techniques. The assessment based on these results typically takes into account the number of studies, the extent to which there is consensus among studies on the significance of detection results, the extent to which there is consensus on the consistency between the observed change and the change expected from forcing, the degree of consistency with other types of evidence, the extent to which known uncertainties are accounted for in and between studies, and whether there might be other physically plausible explanations for the given climate change. Having determined a particular likelihood assessment, this was then further downweighted to take into account any remaining uncertainties, such as, for example, structural uncertainties or a limited exploration of possible forcing histories of uncertain forcings. The overall assessment also considers whether several independent lines of evidence strengthen a result.” (IPCC AR4)

I won’t make a judgment here as to how ‘expert judgment’ and subjective ‘down weighting’ is different from ‘making things up’

Is expert judgement about the structural uncertainties in a statistical procedure associated with various assumptions that need to be made different from ‘making things up’? Actually, yes – it is.

AR5 Chapter 10 has a more extensive discussion on the philosophy and methodology of detection and attribution, but the general idea has not really changed from AR4.

In my previous post (related to the AR4), I asked the question: what was the original likelihood assessment from which this apparently minimal downweighting occurred? The AR5 provides an answer:

The best estimate of the human induced contribution to warming is similar to the observed warming over this period.

So, I interpret this as saying that the IPCC’s best estimate is that 100% of the warming since 1950 is attributable to humans, and they then down weight this to ‘more than half’ to account for various uncertainties. And then assign an ‘extremely likely’ confidence level to all this.

Making things up, anyone?

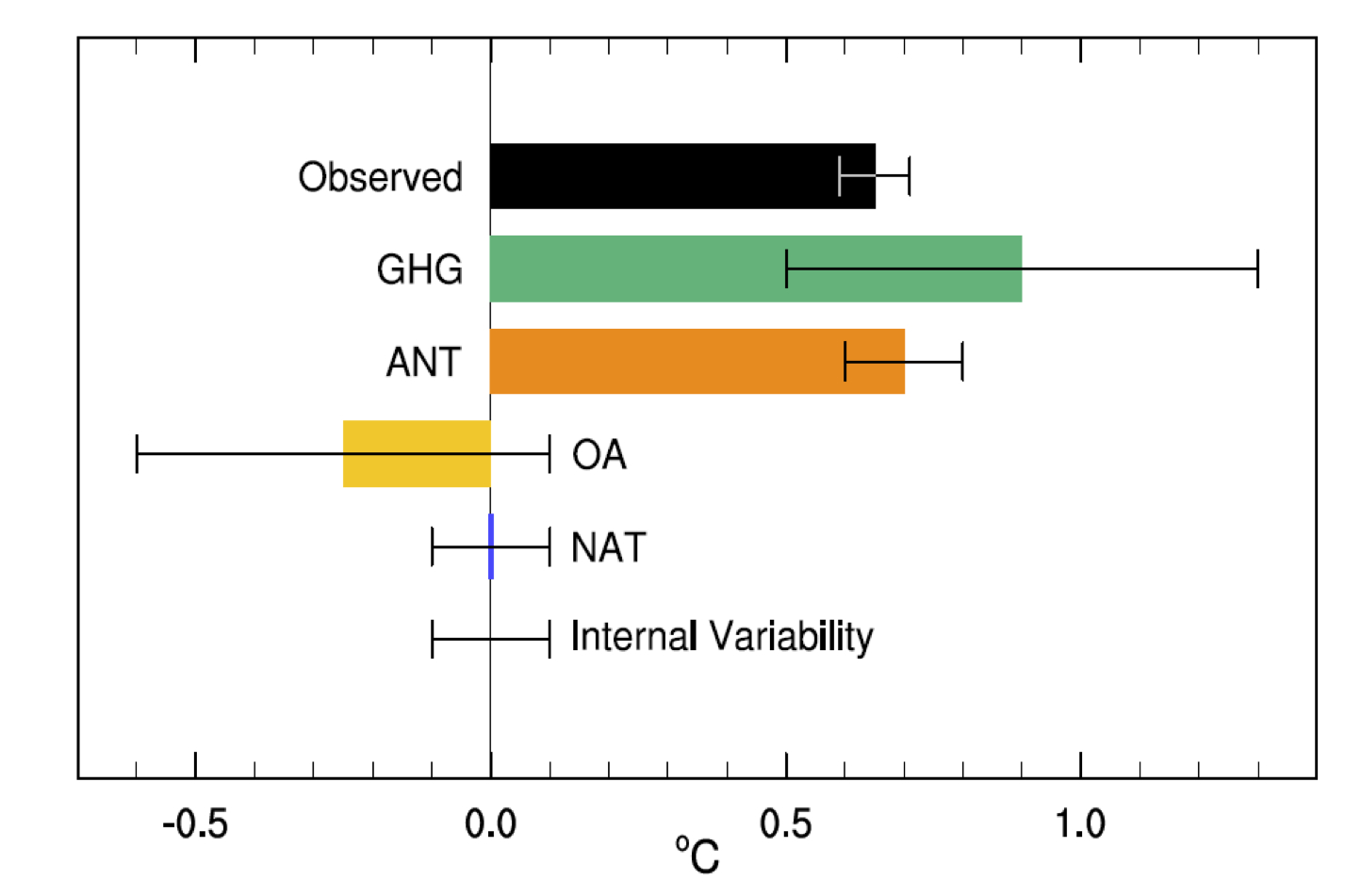

This is very confused. The basis of the AR5 calculation is summarised in figure 10.5:

The best estimate of the warming due to anthropogenic forcings (ANT) is the orange bar (noting the 1𝛔 uncertainties). Reading off the graph, it is 0.7±0.2ºC (5-95%) with the observed warming 0.65±0.06 (5-95%). The attribution then follows as having a mean of ~110%, with a 5-95% range of 80–130%. This easily justifies the IPCC claims of having a mean near 100%, and a very low likelihood of the attribution being less than 50% (p < 0.0001!). Note there is no ‘downweighting’ of any argument here – both statements are true given the numerical distribution. However, there must be some expert judgement to assess what potential structural errors might exist in the procedure. For instance, the assumption that fingerprint patterns are linearly additive, or uncertainties in the pattern because of deficiencies in the forcings or models etc. In the absence of any reason to think that the attribution procedure is biased (and Judith offers none), structural uncertainties will only serve to expand the spread. Note that one would need to expand the uncertainties by a factor of 3 in both directions to contradict the first part of the IPCC statement. That seems unlikely in the absence of any demonstration of some huge missing factors.
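To get a feel for how much the spread would have to grow before the “more than half” statement stopped being “extremely likely” (95% or more in the IPCC’s calibrated language), here is a minimal sketch. It assumes the attribution pdf is a Gaussian with mean 1.10 and standard deviation 0.167; both numbers are eyeballed assumptions rather than values taken from the AR5 analysis, and `prob_above` is a hypothetical helper.

```python
import math

def prob_above(threshold, mean, sd):
    """P(X > threshold) for a Gaussian X with the given mean and standard deviation."""
    return 0.5 * math.erfc((threshold - mean) / (sd * math.sqrt(2.0)))

# ASSUMED Gaussian stand-in for the attribution pdf (fraction of observed warming).
MEAN, SD = 1.10, 0.167
EXTREMELY_LIKELY = 0.95  # lower bound of "extremely likely" (95-100%)

results = {}
for factor in (1, 2, 3):
    # Inflate the spread symmetrically while keeping the mean fixed.
    results[factor] = prob_above(0.5, MEAN, SD * factor)
    verdict = "still" if results[factor] >= EXTREMELY_LIKELY else "no longer"
    print(f"spread x{factor}: P(>50%) = {results[factor]:.4f} ({verdict} extremely likely)")
```

Under these assumptions the statement survives a doubling of the spread and fails once it is tripled, which is broadly in line with the factor quoted above. A biased procedure (a shifted mean) would be a different matter, but that is not what structural uncertainty alone implies.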

I’ve just reread Overconfidence in IPCC’s detection and attribution. Part IV, I recommend that anyone who seriously wants to understand this should read this previous post. It explains why I think the AR5 detection and attribution reasoning is flawed.

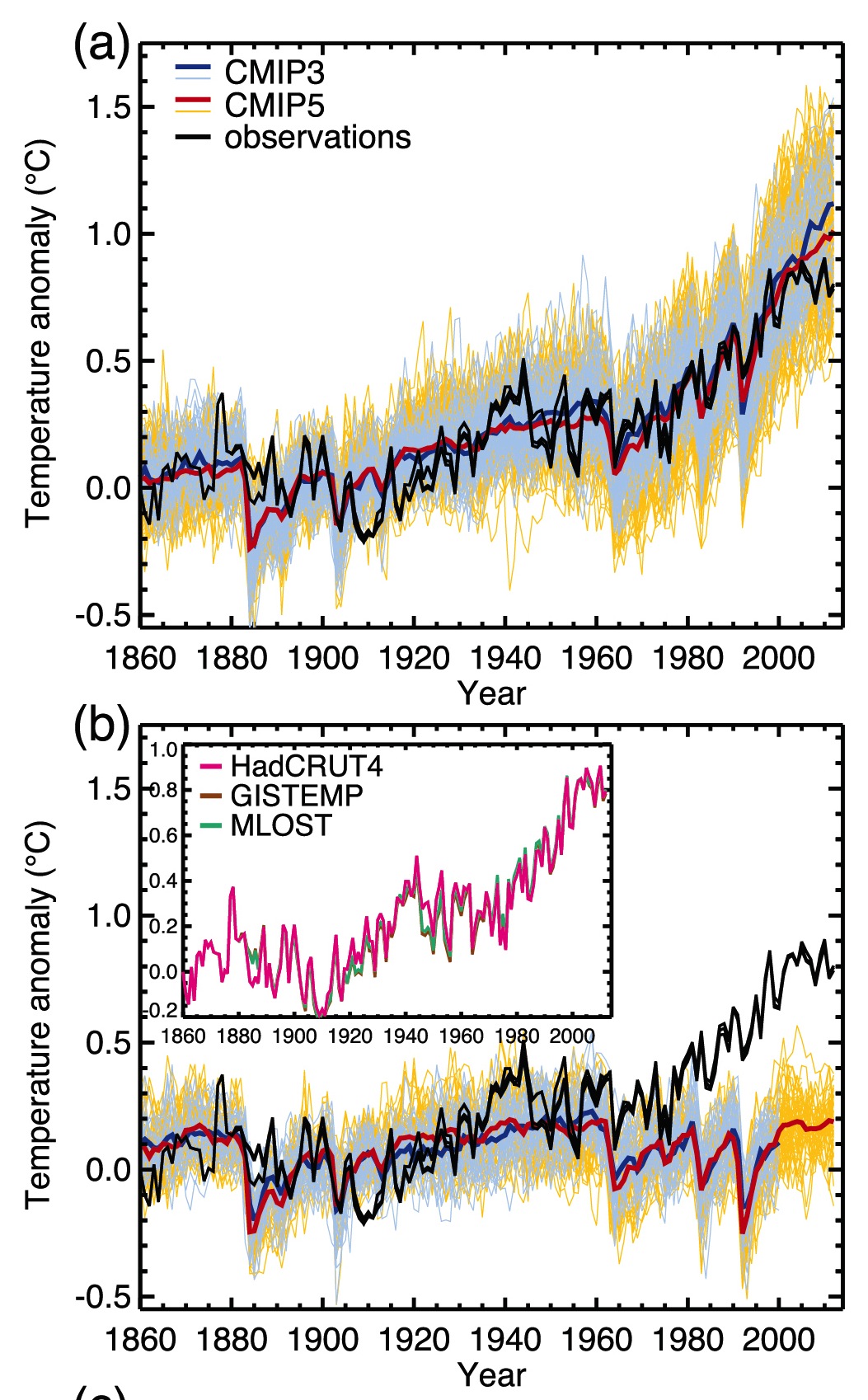

Of particular relevance to the 50-50 argument, the IPCC has failed to convincingly demonstrate ‘detection.’ Because historical records aren’t long enough and paleo reconstructions are not reliable, the climate models ‘detect’ AGW by comparing natural forcing simulations with anthropogenically forced simulations. When the spectra of the variability of the unforced simulations is compared with the observed spectra of variability, the AR4 simulations show insufficient variability at 40-100 yrs, whereas AR5 simulations show reasonable variability. The IPCC then regards the divergence between unforced and anthropogenically forced simulations after ~1980 as the heart of their detection and attribution argument. See Figure 10.1 from AR5 WGI (a) is with natural and anthropogenic forcing; (b) is without anthropogenic forcing:

This is also confused. “Detection” is (like attribution) a model-based exercise, starting from the idea that one can estimate the result of a counterfactual: what would the temperature have done in the absence of the drivers compared to what it would do if they were included? GCM results show clearly that the expected anthropogenic signal would start to be detectable (“come out of the noise”) sometime after 1980 (for reference, Hansen’s public statement to that effect was in 1988). There is no obvious discrepancy in spectra between the CMIP5 models and the observations, and so I am unclear why Judith finds the detection step lacking. It is interesting to note that given the variability in the models, the anthropogenic signal is now more than 5𝛔 over what would have been expected naturally (and if it’s good enough for the Higgs Boson….).

Note in particular that the models fail to simulate the observed warming between 1910 and 1940.

Here Judith is (I think) referring to the mismatch between the ensemble mean (red) and the observations (black) in that period. But the red line is simply an estimate of the forced trends, so the correct reading of the graph would be that the models do not support an argument suggesting that all of the 1910-1940 excursion is forced (contingent on the forcing datasets that were used), which is what was stated in AR5. However, the observations are well within the spread of the models and so could easily be within the range of the forced trend + simulated internal variability. A quick analysis (a proper attribution study is more involved than this) gives an observed trend over 1910-1940 as 0.13 to 0.15ºC/decade (depending on the dataset, with ±0.03ºC (5-95%) uncertainty in the OLS), while the spread in my collation of the historical CMIP5 models is 0.07±0.07ºC/decade (5-95%). Specifically, 8 model runs out of 131 have trends over that period greater than 0.13ºC/decade – suggesting that one might see this magnitude of excursion 5-10% of the time. For reference, the GHG related trend in the GISS models over that period is about 0.06ºC/decade. However, the uncertainties in the forcings for that period are larger than in recent decades (in particular for the solar and aerosol-related emissions) and so the forced trend (0.07ºC/decade) could have been different in reality. And since we don’t have good ocean heat content data, nor any satellite observations, or any measurements of stratospheric temperatures to help distinguish potential errors in the forcing from internal variability, it is inevitable that there will be more uncertainty in the attribution for that period than for more recent periods.
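The “5-10% of the time” figure is just the share of ensemble members whose trend exceeds the observed one. A minimal sketch of that count follows; `exceedance_fraction` is a hypothetical helper, and the short `toy_trends` list is made-up data for illustration only (the actual 131 model trends are not reproduced here).

```python
def exceedance_fraction(trends, threshold):
    """Fraction of ensemble trends strictly greater than `threshold`."""
    if not trends:
        raise ValueError("need at least one trend")
    return sum(1 for t in trends if t > threshold) / len(trends)

# Counts quoted in the text: 8 of 131 runs exceed 0.13 ºC/decade over 1910-1940.
fraction_from_text = 8 / 131
print(f"share of runs above the observed trend: {fraction_from_text:.1%}")

# HYPOTHETICAL toy ensemble (ºC/decade), only to show how the helper is called.
toy_trends = [0.01, 0.05, 0.07, 0.09, 0.14]
toy_fraction = exceedance_fraction(toy_trends, 0.13)
print(f"toy ensemble: {toy_fraction:.0%} of runs exceed 0.13 ºC/decade")
```

A raw count like this ignores sampling uncertainty and the uneven number of runs per model, which is one reason a proper attribution study is more involved.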

The glaring flaw in their logic is this. If you are trying to attribute warming over a short period, e.g. since 1980, detection requires that you explicitly consider the phasing of multidecadal natural internal variability during that period (e.g. AMO, PDO), not just the spectra over a long time period. Attribution arguments of late 20th century warming have failed to pass the detection threshold which requires accounting for the phasing of the AMO and PDO. It is typically argued that these oscillations go up and down, in net they are a wash. Maybe, but they are NOT a wash when you are considering a period of the order, or shorter than, the multidecadal time scales associated with these oscillations.

Watch the pea under the thimble here. The IPCC statements were from a relatively long period (i.e. 1950 to 2005/2010). Judith jumps to assessing shorter trends (i.e. from 1980) and shorter periods obviously have the potential to have a higher component of internal variability. The whole point about looking at longer periods is that internal oscillations have a smaller contribution. Since she is arguing that the AMO/PDO have potentially multi-decadal periods, then she should be supportive of using multi-decadal periods (i.e. 50, 60 years or more) for the attribution.

Further, in the presence of multidecadal oscillations with a nominal 60-80 yr time scale, convincing attribution requires that you can attribute the variability for more than one 60-80 yr period, preferably back to the mid 19th century. Not being able to address the attribution of change in the early 20th century to my mind precludes any highly confident attribution of change in the late 20th century.

This isn’t quite right. Our expectation (from basic theory and models) is that the second half of the 20th C is when anthropogenic effects really took off. Restricting attribution to 120-160 yr trends seems too constraining – though there is no problem in looking at that too. However, Judith is actually assuming what remains to be determined. What is the evidence that all 60-80yr variability is natural? Variations in forcings (in particular aerosols, and maybe solar) can easily project onto this timescale and so any separation of forced vs. internal variability is really difficult based on statistical arguments alone (see also Mann et al, 2014). Indeed, it is the attribution exercise that helps you conclude what the magnitude of any internal oscillations might be. Note that if we were only looking at the global mean temperature, there would be quite a lot of wiggle room for different contributions. Looking deeper into different variables and spatial patterns is what allows for a more precise result.

The 50-50 argument

There are multiple lines of evidence supporting the 50-50 (middle tercile) attribution argument. Here are the major ones, to my mind.

Sensitivity

The 100% anthropogenic attribution from climate models is derived from climate models that have an average equilibrium climate sensitivity (ECS) around 3C. One of the major findings from AR5 WG1 was the divergence in ECS determined via climate models versus observations. This divergence led the AR5 to lower the likely bound on ECS to 1.5C (with ECS very unlikely to be below 1C).

Judith’s argument misstates how forcing fingerprints from GCMs are used in attribution studies. Notably, they are scaled to get the best fit to the observations (along with the other terms). If the models all had sensitivities of either 1ºC or 6ºC, the attribution to anthropogenic changes would be the same as long as the pattern of change was robust. What would change would be the scaling – less than one would imply a better fit with a lower sensitivity (or smaller forcing), and vice versa (see figure 10.4).
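The scaling point can be illustrated with a deliberately stripped-down example: a single pattern fitted to a single series by ordinary least squares. Real detection and attribution studies are considerably more involved (several patterns fitted jointly, with internal variability accounted for), so treat this only as a sketch. All numbers below are synthetic and `scaling_factor` is a hypothetical helper.

```python
def scaling_factor(pattern, obs):
    """OLS scale beta that minimises sum((obs - beta * pattern)^2)."""
    return sum(p * o for p, o in zip(pattern, obs)) / sum(p * p for p in pattern)

# SYNTHETIC, made-up series (ºC anomalies), not real observations or model output.
obs = [0.05, 0.12, 0.22, 0.35, 0.48, 0.66]
pattern_mid = [0.06, 0.14, 0.25, 0.37, 0.52, 0.70]   # hypothetical model response
patterns = {
    "low sensitivity": [0.5 * x for x in pattern_mid],   # same shape, half the amplitude
    "mid sensitivity": pattern_mid,
    "high sensitivity": [2.0 * x for x in pattern_mid],  # same shape, double the amplitude
}

betas, attributed = {}, {}
for name, pattern in patterns.items():
    betas[name] = scaling_factor(pattern, obs)
    # Attributed change over the period = scaled pattern, last point minus first.
    attributed[name] = betas[name] * (pattern[-1] - pattern[0])
    print(f"{name}: beta = {betas[name]:.3f}, attributed change = {attributed[name]:.3f} ºC")
```

The scaling factor absorbs the difference in amplitude (above one for the weak response, below one for the strong one), while the attributed change is the same in all three cases because only the shape of the pattern matters to the fit.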

She also misstates how ECS is constrained – all constraints come from observations (whether from long-term paleo-climate observations, transient observations over the 20th Century or observations of emergent properties that correlate to sensitivity) combined with some sort of model. The divergence in AR5 was between constraints based on the transient observations using simplified energy balance models (EBM), and everything else. Subsequent work (for instance by Drew Shindell) has shown that the simplified EBMs are missing important transient effects associated with aerosols, and so the divergence is very likely less than AR5 assessed.

Nic Lewis at Climate Dialogue summarizes the observational evidence for ECS between 1.5 and 2C, with transient climate response (TCR) around 1.3C.

Nic Lewis has a comment at BishopHill on this:

The press release for the new study states: “Rapid warming in the last two and a half decades of the 20th century, they proposed in an earlier study, was roughly half due to global warming and half to the natural Atlantic Ocean cycle that kept more heat near the surface.” If only half the warming over 1976-2000 (linear trend 0.18°C/decade) was indeed anthropogenic, and the IPCC AR5 best estimate of the change in anthropogenic forcing over that period (linear trend 0.33Wm-2/decade) is accurate, then the transient climate response (TCR) would be little over 1°C. That is probably going too far, but the 1.3-1.4°C estimate in my and Marcel Crok’s report A Sensitive Matter is certainly supported by Chen and Tung’s findings.

Since the CMIP5 models used by the IPCC on average adequately reproduce observed global warming in the last two and a half decades of the 20th century without any contribution from multidecadal ocean variability, it follows that those models (whose mean TCR is slightly over 1.8°C) must be substantially too sensitive.

BTW, the longer term anthropogenic warming trends (50, 75 and 100 year) to 2011, after removing the solar, ENSO, volcanic and AMO signals given in Fig. 5 B of Tung’s earlier study (freely accessible via the link), of respectively 0.083, 0.078 and 0.068°C/decade also support low TCR values (varying from 0.91°C to 1.37°C), upon dividing by the linear trends exhibited by the IPCC AR5 best estimate time series for anthropogenic forcing. My own work gives TCR estimates towards the upper end of that range, still far below the average for CMIP5 models.

If true climate sensitivity is only 50-65% of the magnitude that is being simulated by climate models, then it is not unreasonable to infer that attribution of late 20th century warming is not 100% caused by anthropogenic factors, and attribution to anthropogenic forcing is in the middle tercile (50-50).

The IPCC’s attribution statement does not seem logically consistent with the uncertainty in climate sensitivity.

This is related to a paper by Tung and Zhou (2013). Note that the attribution statement has again shifted to the last 25 years of the 20th Century (1976-2000). There are a couple of major problems with this argument, though. First of all, Tung and Zhou assumed that all multi-decadal variability was associated with the Atlantic Multi-decadal Oscillation (AMO) and did not assess whether anthropogenic forcings could project onto this variability. It is circular reasoning to then use this paper to conclude that all multi-decadal variability is associated with the AMO.

The second problem is more serious. Lewis’ argument up until now has been that the best fit to the transient evolution over the 20th Century is with a relatively small sensitivity and small aerosol forcing (as opposed to a larger sensitivity and larger opposing aerosol forcing). However, in both these cases the attribution of the long-term trend to the combined anthropogenic effects is actually the same (near 100%). Indeed, one valid criticism of the recent papers on transient constraints is precisely that the simple models used do not have sufficient decadal variability!

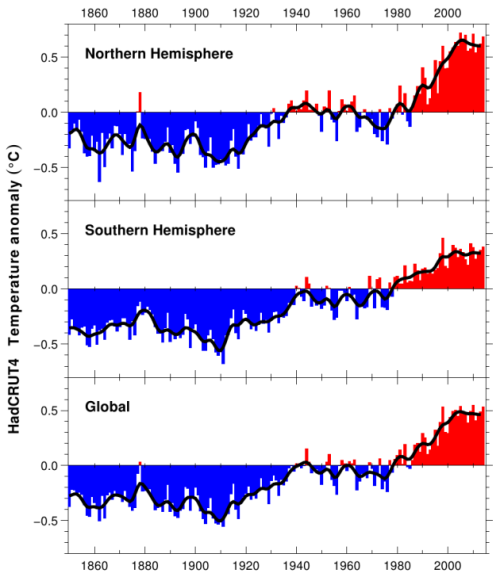

Climate variability since 1900

From HadCRUT4:

The IPCC does not have a convincing explanation for:

- warming from 1910-1940

- cooling from 1940-1975

- hiatus from 1998 to present

The IPCC purports to have a highly confident explanation for the warming since 1950, but it was only during the period 1976-2000 when the global surface temperatures actually increased.

The absence of convincing attribution of periods other than 1976-present to anthropogenic forcing leaves natural climate variability as the cause – some combination of solar (including solar indirect effects), uncertain volcanic forcing, natural internal (intrinsic variability) and possible unknown unknowns.

This point is not an argument for any particular attribution level. As is well known, using an argument of total ignorance to assume that the choice between two arbitrary alternatives must be 50/50 is a fallacy.

Attribution for any particular period follows exactly the same methodology as any other. What IPCC chooses to highlight is of course up to the authors, but there is nothing preventing an assessment of any of these periods. In general, the shorter the time period, the greater potential for internal variability, or (equivalently) the larger the forced signal needs to be in order to be detected. For instance, Pinatubo was a big rapid signal so that was detectable even in just a few years of data.

I gave a basic attribution for the 1910-1940 period above. The 1940-1975 average trend in the CMIP5 ensemble is -0.01ºC/decade (range -0.2 to 0.1ºC/decade), compared to -0.003 to -0.03ºC/decade in the observations, so the models are a reasonable fit. The GHG-driven trends for this period are ~0.1ºC/decade, implying that there is a roughly opposite forcing coming from aerosols and volcanoes in the ensemble. The situation post-1998 is a little different because of the CMIP5 design, and ongoing reevaluations of recent forcings (Schmidt et al, 2014; Huber and Knutti, 2014). Better information about ocean heat content is also available to help there, but this is still a work in progress and is a great example of why it is harder to attribute changes over small time periods.

In the GCMs, the importance of internal variability to the trend decreases as a function of time. For 30 year trends, internal variations can have a ±0.12ºC/decade or so impact on trends, for 60 year trends, closer to ±0.08ºC/decade. For an expected anthropogenic trend of around 0.2ºC/decade, the signal will be clearer over the longer term. Thus cutting down the period to ever-shorter periods of years increases the challenges and one can end up simply cherry picking the noise instead of seeing the signal.
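Put as a simple signal-to-noise ratio, using only the round numbers quoted above (the dictionary and variable names are just illustrative):

```python
# Values quoted in the text, all in ºC/decade.
EXPECTED_TREND = 0.2                      # approximate expected anthropogenic trend
INTERNAL_SPREAD = {30: 0.12, 60: 0.08}    # trend length (years) -> internal variability

signal_to_noise = {
    years: EXPECTED_TREND / spread for years, spread in INTERNAL_SPREAD.items()
}

for years, ratio in signal_to_noise.items():
    print(f"{years}-year trends: signal/noise = {ratio:.2f}")
```

The ratio rises from roughly 1.7 for 30-year trends to 2.5 for 60-year trends, which is the sense in which the signal is clearer over the longer term.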

A key issue in attribution studies is to provide an answer to the question: When did anthropogenic global warming begin? As per the IPCC’s own analyses, significant warming didn’t begin until 1950. Just the Facts has a good post on this When did anthropogenic global warming begin?

I disagree as to whether this is a “key” issue for attribution studies, but as to when anthropogenic warming began, the answer is actually quite simple – when we started altering the atmosphere and land surface at climatically relevant scales. For the CO2 increase from deforestation this goes back millennia, for fossil fuel CO2, since the invention of the steam engine at least. In both cases there was a big uptick in the 18th Century. Perhaps that isn’t what Judith is getting at though. If she means when was it easily detectable, I discussed that above and the answer is sometime in the early 1980s.

The temperature record since 1900 is often characterized as a staircase, with periods of warming sequentially followed by periods of stasis/cooling. The stadium wave and Chen and Tung papers, among others, are consistent with the idea that the multidecadal oscillations, when superimposed on an overall warming trend, can account for the overall staircase pattern.

Nobody has any problems with the idea that multi-decadal internal variability might be important. The problem with many studies on this topic is the assumption that all multi-decadal variability is internal. This is very much an open question.

Let’s consider the 21st century hiatus. The continued forcing from CO2 over this period is substantial, not to mention ‘warming in the pipeline’ from late 20th century increase in CO2. To counter the expected warming from current forcing and the pipeline requires natural variability to effectively be of the same magnitude as the anthropogenic forcing. This is the rationale that Tung used to justify his 50-50 attribution (see also Tung and Zhou). The natural variability contribution may not be solely due to internal/intrinsic variability, and there is much speculation related to solar activity. There are also arguments related to aerosol forcing, which I personally find unconvincing (the topic of a future post).

Shorter time-periods are noisier. There are more possible influences of an appropriate magnitude and, for the recent period, continued (and very frustrating) uncertainties in aerosol effects. This has very little to do with the attribution for longer-time periods though (since change of forcing is much larger and impacts of internal variability smaller).

The IPCC notes overall warming since 1880. In particular, the period 1910-1940 is a period of warming that is comparable in duration and magnitude to the warming 1976-2000. Any anthropogenic forcing of that warming is very small (see Figure 10.1 above). The timing of the early 20th century warming is consistent with the AMO/PDO (e.g. the stadium wave; also noted by Tung and Zhou). The big unanswered question is: Why is the period 1940-1970 significantly warmer than say 1880-1910? Is it the sun? Is it a longer period ocean oscillation? Could the same processes causing the early 20th century warming be contributing to the late 20th century warming?

If we were just looking at 30 year periods in isolation, it’s inevitable that there will be these ambiguities because data quality degrades quickly back in time. But that is exactly why IPCC looks at longer periods.

Not only don’t we know the answer to these questions, but no one even seems to be asking them!

This is simply not true.

Attribution

I am arguing that climate models are not fit for the purpose of detection and attribution of climate change on decadal to multidecadal timescales. Figure 10.1 speaks for itself in this regard (see figure 11.25 for a zoom in on the recent hiatus). By ‘fit for purpose’, I am prepared to settle for getting an answer that falls in the right tercile.

Given the results above it would require a huge source of error to move the bulk of that probability anywhere else other than the right tercile.

The main relevant deficiencies of climate models are:

- climate sensitivity that appears to be too high, probably associated with problems in the fast thermodynamic feedbacks (water vapor, lapse rate, clouds)

- failure to simulate the correct network of multidecadal oscillations and their correct phasing

- substantial uncertainties in aerosol indirect effects

- unknown and uncertain solar indirect effects

The sensitivity argument is irrelevant (given that it isn’t zero of course). Simulation of the exact phasing of multi-decadal internal oscillations in a free-running GCM is impossible so that is a tough bar to reach! There are indeed uncertainties in aerosol forcing (not just the indirect effects) and, especially in the earlier part of the 20th Century, uncertainties in solar trends and impacts. Indeed, there is even uncertainty in volcanic forcing. However, none of these issues really affect the attribution argument because a) differences in magnitude of forcing over time are assessed by way of the scales in the attribution process, and b) errors in the spatial pattern will end up in the residuals, which are not large enough to change the overall assessment.

Nonetheless, it is worth thinking about what impact plausible variations in the aerosol or solar effects could have. Given that we are talking about the net anthropogenic effect, the playing off of negative aerosol forcing and climate sensitivity within bounds actually has very little effect on the attribution, so that isn’t particularly relevant. A much bigger role for solar would have an impact, but the trend would need to be about 5 times stronger over the relevant period to change the IPCC statement and I am not aware of any evidence to support this (and much that doesn’t).

So, how to sort this out and do a more realistic job of detecting climate change and attributing it to natural variability versus anthropogenic forcing? Observationally based methods and simple models have been underutilized in this regard. Of great importance is to consider uncertainties in external forcing in context of attribution uncertainties.

It is inconsistent to talk in one breath about the importance of aerosol indirect effects and solar indirect effects and then state that ‘simple models’ are going to do the trick. Both of these issues relate to microphysical effects and atmospheric chemistry – neither of which are accounted for in simple models.

The logic of reasoning about climate uncertainty, is not at all straightforward, as discussed in my paper Reasoning about climate uncertainty.

So, am I ‘making things up’? Seems to me that I am applying straightforward logic. Which IMO has been disturbingly absent in attribution arguments, that use climate models that aren’t fit for purpose, use circular reasoning in detection, fail to assess the impact of forcing uncertainties on the attribution, and are heavily spiced by expert judgment and subjective downweighting.

My reading of the evidence suggests clearly that the IPCC conclusions are an accurate assessment of the issue. I have tried to follow the proposed logic of Judith’s points here, but unfortunately each one of these arguments is either based on a misunderstanding, an unfamiliarity with what is actually being done or is a red herring associated with shorter-term variability. If Judith is interested in why her arguments are not convincing to others, perhaps this can give her some clues.

References

- M.E. Mann, B.A. Steinman, and S.K. Miller, "On forced temperature changes, internal variability, and the AMO", Geophysical Research Letters, vol. 41, pp. 3211-3219, 2014. http://dx.doi.org/10.1002/2014GL059233

- K. Tung, and J. Zhou, "Using data to attribute episodes of warming and cooling in instrumental records", Proceedings of the National Academy of Sciences, vol. 110, pp. 2058-2063, 2013. http://dx.doi.org/10.1073/pnas.1212471110

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

- M. Huber, and R. Knutti, "Natural variability, radiative forcing and climate response in the recent hiatus reconciled", Nature Geoscience, vol. 7, pp. 651-656, 2014. http://dx.doi.org/10.1038/ngeo2228

99, meow: Huh? First, it’s a cycle, so all that speeding it up does is to raise the gross amount of heat absorbed to cause evaporation, which is exactly balanced by the gross heat released during condensation.

The cycle is not closed. Some of the latent heat carried from the surface to the upper troposphere is radiated to space. Hence, the energy returned to the surface is less than the energy expended to vaporize the surface water.

83 WebHubTelescope: Marler, you don’t seriously believe that there is some new state of matter or new phase transition that allows water vapor to be a positive feedback from 255K to 288K but then do a U-turn and become a negative feedback when the temperature hits 289K ?

What I “believe” is of no interest to anyone.

What I wrote was that the historical record does not rule out the possibility that at current temperatures and cloud covers, a future increase in CO2 or surface temperature may increase cloud cover. The oscillations in global mean temp over the last 11,000 years are not compatible with a strong positive feedback to temperature increase at the higher temperatures, and are compatible with a small negative feedback at high temperature (if not all related to changes in external forcings.) Increased cloud cover is a possible mechanism for such a negative feedback at the high temperatures.

A lot is known about mean temperature fluctuations over the past eons. We all know the problems with biased sampling, proxies and such, but there is a lot of evidence. About humidity, rainfall, and cloud cover much less is known. So future humidity, rainfall, and cloud cover are not predictable from historical data.

You made up that bit about new states of matter.

@99: Now I’m really worried, considering that the current radiative imbalance is ~0.85W/m^2. What prevents most of that from evaporating water instead of heating the deeps of the oceans?

[Response: All you are demonstrating is that over 6 years (1944 to 1950) internal variability can be a dominant factor. I doubt anyone disputes that. The difference between trends 1944 to 2010 versus 1950 to 2010 is much smaller (around 0.01-0.02ºC/decade depending on data set) and even more so relative to the overall trend of about 0.1ºC/decade. – gavin]

And from NASA.

Unlike El Niño and La Niña, which may occur every 3 to 7 years and last from 6 to 18 months, the PDO can remain in the same phase for 20 to 30 years. The shift in the PDO can have significant implications for global climate, affecting Pacific and Atlantic hurricane activity, droughts and flooding around the Pacific basin, the productivity of marine ecosystems, and global land temperature patterns. “This multi-year Pacific Decadal Oscillation ‘cool’ trend can intensify La Niña or diminish El Niño impacts around the Pacific basin,” said Bill Patzert, an oceanographer and climatologist at NASA’s Jet Propulsion Laboratory, Pasadena, Calif. “The persistence of this large-scale pattern [in 2008] tells us there is much more than an isolated La Niña occurring in the Pacific Ocean.”

Natural, large-scale climate patterns like the PDO and El Niño-La Niña are superimposed on global warming caused by increasing concentrations of greenhouse gases and landscape changes like deforestation. According to Josh Willis, JPL oceanographer and climate scientist, “These natural climate phenomena can sometimes hide global warming caused by human activities. Or they can have the opposite effect of accentuating it.” http://earthobservatory.nasa.gov/IOTD/view.php?id=8703

This latter from a NASA page gives the essence of the problem. There are multidecadal regimes – 20 to 40 years in the proxy records – that in the 20th century shifted from warmer to cooler to warmer again.

We are presuming that the internal variability of the mid century cooling was cancelled by the late century warming. Thus the residual over a long enough period is the anthropogenic component – and the rate of residual warming is some 0.07 degrees C/decade from 1944. I refer you again to the Swanson graph – which demonstrates a different method. He excludes climate shifts in 1976-78 and 1998-2001 – and gets a rate of late century warming that he presumes is the anthropogenic component.

Either way – the total of late century warming is not the relevant point as there is evidently a component of natural warming preceded by decades of natural cooling. Forgive me if this is not the ‘consensus’ understanding.

There are 2 problems with the 100% attribution from 1950. The cooling regime started in 1944 and cooled some 0.324 degrees C by 1950. So we are starting at a low point that seems all ENSO. The other problem involves centennial to millennial variability. For instance – the 20th century saw a 1000 year peak in El Nino activity.

I linked to Vance et al (2012) earlier – and linked to the graph showing a salt content proxy over 100 years in a Law Dome ice core. More salt = La Nina.

Especially if warming doesn’t resume anytime soon – as indeed suggested in a realclimate post some years ago -https://www.realclimate.org/index.php/archives/2009/07/warminginterrupted-much-ado-about-natural-variability/

Swanson et al (2009) suggest that non-warming for decades could present some empirical difficulties for CO2 mitigation. So it seems. The answer is seeing the gains that can come from sustainable development and in energy innovation. The answer is certainly not bristling at complexity, denying uncertainty and righteously smiting any deemed blogospheric deniers.

I am not referring to you – your reputation precedes you – and I have certainly not felt unwelcome or poorly treated. The run of the mill blogospheric climate warriors are a different story. Noise and fury – the fog of war writ small.

Wally Broecker has been warning us that climate is wild for a long time. A proper understanding of this suggests that surprises are inevitable – and this seems likely to include non-warming at least for decades – and that climate is inherently unstable. I am just trying to get with the program.

Cheers

M. Marler said:

I take it that you have little experience with materials science? The Arrhenius rate laws do not show runaway positive feedback with increases in temperature. This has to do with the Maxwell-Boltzmann factor having the temperature term in the denominator of the exponent — exp(-E/kT).

It is also laughable that you think these are “higher temperatures” — this is the Kelvin scale remember, which you may not know because of your apparent limited experience with thermal physics.

The positive feedback is what it is, a moderate positive feedback that won’t suddenly do a U-turn (in contrast to what you think it will do, yet as you say yourself, it doesn’t matter what you think).
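For readers who want the algebra behind the Arrhenius remark above, here is a short sketch. The symbols $A$ (prefactor), $E$ (activation energy) and $k_B$ (Boltzmann constant) are the standard textbook ones, not values taken from this thread, and the derivation only describes the rate law itself; it says nothing directly about climate feedbacks.

```latex
k(T) = A \exp\!\left(-\frac{E}{k_B T}\right),
\qquad
\frac{d \ln k}{dT} = \frac{E}{k_B T^{2}} > 0,
\qquad
\lim_{T \to \infty} k(T) = A .
```

The relative sensitivity of the rate to temperature falls off as $1/T^{2}$, and the rate is bounded above by $A$, so the exponential form on its own does not diverge as temperature increases.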

103, meow: Now I’m really worried, considering that the current radiative imbalance is ~0.85W/m^2.

radiative imbalance measured where?

Trenberth, Fasullo and Kiehl (2009) estimate the transport of latent heat from surface to upper troposphere at 80 W/m^2. (They put the transport by dry thermals at 17W/m^2.) All I have proposed is the possibility that a small (1C or less) increase in global mean temp or a doubling of CO2 concentration will raise the rate of latent heat transport and possibly increase the cloud cover, especially during the hottest time of the year in each region. Increased evaporation due to increased radiation and/or temperature increase is not exactly a revolutionary idea. What is not calculable at present is a quantitative estimate of the amount of increase in the dynamic climate system with its daily and annually changing temperatures and insolation.

Matthew R Marler.

Levitus OHC data (Graph here. Usually 2 clicks to ‘download your attachment’) 0-2000m for the last 5 years gives a linear rise requiring 0.82 Wm^-2 of warming. That suggests that a global figure of ~0.85 Wm^-2 rather understates the current value.

Matthew R Marler responds to Meow’s estimate of ~0.85 W/m^2 with :

Perhaps you are behind the curve in the current research? Heat that ends up in the ocean essentially contributes to the imbalance because thermal physics says it can not radiate from depth.

Now anyone can see from the data that ocean heat content (OHC) has been increasing at a rate on the order of 0.5 to 1 W/m^2.

See Balmaseda, et al

http://onlinelibrary.wiley.com/enhanced/doi/10.1002/grl.50382/

> Marler … I have proposed

Lindzen’s “Iris”? or something different how?

@101 & 106

But the extra water vapor created by an accelerated hydro cycle is also a strong GHG, so it traps more upwelling IR. Which effect wins out? Why?

The transport is from the surface to the atmosphere as a whole, not just the upper troposphere. How much goes to the upper troposphere is a different question, as is how much radiates to space. Gotta put numbers on these quantities to understand the importance of a faster hydro cycle. Got numbers?

TOA, per Hansen 2005 and endorsed by the same Trenberth et al paper you cite above.

…by some unknown amount with unknown consequences for radiation to space vs. stronger greenhouse effect due to more water vapor in the atmosphere.

Because? Why should clouds respond in that way? Why not, for example, more clouds at night and in winter? Further, if clouds were to respond as you say (i.e. some kind of Iris Effect), can orbital forcing still cause ice-age cycles? If not, what causes them, and how does it overcome such a strong negative feedback?

Agreed. Evaporation rates do indeed seem to be rising (Yu & Weller 2007), and so is tropospheric water vapor (Trenberth et al 2009 at 317). But this is the basis for the positive water vapor feedback, too.

“Amount of increase” of _what_ in the “dynamic climate system”? And so, the climate system is complex. That doesn’t mean we can’t understand a great deal about it. Nor does our incomplete understanding mean that ACC is nothing to be concerned about.

The “uncertainty monster” has _two_ mouths: though P > 0 that some negative feedback will save our bacon, also P > 0 that the climate system is more sensitive than consensus and/or that warming’s effects will be worse than we think.


110 meow: But the extra water vapor created by an accelerated hydro cycle is also a strong GHG, so it traps more upwelling IR. Which effect wins out? Why?

Good questions, aren’t they?

Because? Why should clouds respond in that way? Why not, for example, more clouds at night and in winter?

Good questions. To start with, water vapor pressure is greater at higher temps than lower (supralinearly), with a 2C increase producing a greater increase at 20C than 10C. So I would expect stronger water vapor effects in summer than winter, and stronger in daytime than at night, though clearly not by much. The giant thunderclouds that you see in the temperate and equatorial zones are more frequent and larger in summer than in winter. Palle and Laken, linked above: In particular, a strong positive correlation between SST and total cloud is identified over the Equatorial Pacific region (6ºN–6ºS) of r = 0.74 and 0.60 for ISCCP and MODIS, respectively, which is found to be highly statistically significant (p = 4.5×10^-6 and 0.03).

Stronger than the negative correlation exhibited in the lower temp overall mid-latitudes; clearly that is not definitive, but it supports the possibility.
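As a rough check on the vapor-pressure point above (a 2C warming raises saturation vapor pressure more at 20C than at 10C), here is a minimal sketch. It assumes a Magnus-type approximation over liquid water with the commonly quoted Alduchov and Eskridge coefficients; the function names are illustrative only, and the calculation says nothing about how clouds respond.

```python
import math


def saturation_vapor_pressure_hpa(temp_c: float) -> float:
    """Approximate saturation vapor pressure over liquid water, in hPa.

    Assumption: Magnus-type formula with coefficients 6.1094, 17.625, 243.04,
    adequate for ordinary surface temperatures.
    """
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def increase_for_warming(temp_c: float, delta_c: float = 2.0) -> float:
    """Absolute rise in saturation vapor pressure (hPa) for a warming of delta_c."""
    return (saturation_vapor_pressure_hpa(temp_c + delta_c)
            - saturation_vapor_pressure_hpa(temp_c))


if __name__ == "__main__":
    for base_c in (10.0, 20.0):
        rise = increase_for_warming(base_c)
        print(f"{base_c:.0f} C: +{rise:.2f} hPa for a 2 C warming")
```

Under this approximation the absolute increase at 20C comes out noticeably larger than at 10C, which is the supralinear behaviour described in the comment.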

“Amount of increase” of _what_ in the “dynamic climate system”? And so, the climate system is complex. That doesn’t mean we can’t understand a great deal about it. Nor does our incomplete understanding mean that ACC is nothing to be concerned about.

Sorry, I meant the amount of increase in the rate of non-radiative heat transport from the surface to the upper atmosphere. I agree that much is understood, which is why I expressed respect for the GCMs; but the GCMs and other over-predictions of warming show there is no basis for confidence in the predictions (or “model output” if you reject the notion that they were “predictions”), and show that there is much yet to be learned. My concern is the reason that I undertook to study more of this than I had before. As far as I can tell, there is no support for “alarm” or immediate major action to reduce fossil fuel use.

The “uncertainty monster” has _two_ mouths: though P > 0 that some negative feedback will save our bacon, also P > 0 that the climate system is more sensitive than consensus and/or that warming’s effects will be worse than we think.

To date, I think that the sensitivity has been overestimated by the equilibrium calculations and the GCMs, and the benefits of increased CO2 over at least the next few decades have been underestimated. If it takes 100 plus years to double the concentration of CO2, and if the equilibrium response is a 2C increase (Pierrehumbert, “Principles of Planetary Climate”, p 623), and if the increased CO2 produces increased vegetation and crop growth, then the present rate of development of non-fossil fuel power and fuel generation is more appropriate than an Apollo type project or attempt to get rid of all fossil fuel use by 2050 starting now as fast as can be done. So yes I am concerned, but I think that the kind of promotional literature I regularly receive from AAAS is inappropriately alarmist.

I want to say “thank you” to the moderators for letting my posts appear. Some years ago I was disappointed that some were suppressed. Thank you.

Matthew, I’m surprised by the number of ‘ifs’ in your conclusion here. We can all string out a few ‘ifs’ such that, if they all come through, things may not be so bad. But you seem over-confident that your ifs are winners. My (utterly unoriginal) view is, uncertainty is not our friend. I spend a fair bit on home insurance and life insurance, despite knowing the odds of disaster are very long indeed. Given the number of ways that things can go wrong with continued CO2 emissions (from ocean acidification and sea level rise to simple warming, shifting precipitation patterns, release of buried carbon in perma-frost, and the possibility of higher climate sensitivities– which seem to be needed to account for glacial/inter-glacial transitions), crossing our fingers and carrying on with BAU seems nothing short of crazy to me. The Apollo program wasn’t even aimed at a real public issue or danger. As I see it, a similar program aimed at a real, long term irreversible threat to the public interest everywhere on earth is very well justified.

From the Willis and Leuliette paper:

It is important to note that results of recent studies of the observational sea level budget are not truly global, but are limited to the region where all three observing systems are valid. The analyses to date have been limited to the Jason ground track coverage between 66°S and 66°N, regions where Argo has profiled 900 m or deeper, and areas away from coasts in order to limit potential leakage of land hydrology into the GRACE gravity signals … In particular, the regional seas surrounding Indonesia exhibit a large rise during 2005–2010 that significantly changes the trend when this area is excluded from the budget analyses (Han et al., 2010).

Marler said:

Can’t you cite a decent reference that doesn’t have the following caveat?

“This suggests that spurious changes exist within the ISCCP data that may have contributed to long-term changes, as suggested by numerous authors. A calibration artifact origin of these changes appears to be highly likely, as can be seen in Figure 3 where geographically-resolved long-term ISCCP trends are shown.”

That said, we can also point out other facts.

According to thermodynamics and a largely fixed lapse rate, the average cloud height will simply shift up and down in altitude with change in temperature, which you can see in their Figure 2. That looks like a strong indication of global warming, eh?

It also figures that you would use Rob Ellison’s favorite Palle and Laken reference, which he wheels out on a daily basis at Climate Etc. This paper has ZERO citations according to Google Scholar.

One can see how propaganda repeated ad nauseam has an effect on guys such as Marler.

Matthew Marler,

SST-cloud correlations in a given climate don’t tell you much about climate sensitivity or cloud feedback, for a number of reasons. Another point is that total cloud variation is a rather useless constraint on the TOA energy perturbation, since clouds act on both sides of the planetary energy budget.

The tropical free atmosphere is constrained dynamically to have rather small horizontal temperature gradients. Since convection sets in when the low level air is buoyant with respect to air aloft, it follows that the pattern of tropical cloud cover will be slaved largely to the spatial pattern of tropical SST. This doesn’t relate to the discussion of sensitivity because the free tropospheric temperatures will rise in a warming climate, thus raising the initiation temperature for free convection. There has been some (misguided) history of using the observed histogram of tropical SSTs (capped at 30 C-ish) and the fact that clouds form in such regimes as an indication of stabilizing feedbacks in the tropics.

True.

But again, why should that result in an increase in SW reflectance by clouds? Why not instead an exacerbated water-vapor feedback? Also, you are still not accounting for paleoclimate. If the Iris Effect is nontrivial, how do Milankovitch cycles (or whatever other forcing you might want to hypothesize) drive glaciation/deglaciation? You have an answer for that, right?

This is from the unreviewed paper “What do we really know about cloud changes over the past decades?”, right? So, do SST changes lead or lag cloud cover? What kind of cloud cover do you get: high/mid/low/deep convective? Day or night? Summer or winter? What’s the effect on the radiative budget?

Also, speaking of that paper, I notice that Fig. 1 shows a global reduction in cloudiness of ~3% between 1985 and 2001, a ~1% increase from 2001-03, and only a slight increase between 2003 and 2012. GAT (GISTEMP) shows strong warming for the first 2 periods, and almost no change for the last. One explanation for that correlation would be a moderately positive global cloud feedback. I’d also note Dessler’s 2010 work “A Determination of the Cloud Feedback from Climate Variations over the Past Decade”, which concludes cloud feedback is likely moderately positive, 0.54 +- 0.74 W/m^2/K. Other papers conclude a slightly negative cloud feedback, such as Zhou et al “An Analysis of the Short-Term Cloud Feedback Using MODIS Data” (-0.16 +- 0.83 W/m^2/K), but with the proviso that “The SW feedback is consistent with previous work; the MODIS LW feedback is lower than previous calculations and there are reasons to suspect it may be biased low.”

What I’m not seeing is a strongly negative feedback supported in the literature, and once again, if you adopt that idea, you’ve got to explain paleoclimate.

Um, the heat’s still accumulating, just not on the surface — for the moment.

Agreed.

As far as I can tell, there is no support for that position.

I disagree. If the heat that’s accumulated in the oceans between, say, 2003 and 2012 (~9*10^22 J) were instead entirely to heat the atmosphere, GAT would have risen ~17 K in that time, ex any feedbacks. We cannot count on the heat from the continuing radiative imbalance continuing to go into the deeps (once again, uncertainty cuts both ways), nor even that all of the heat sequestered there will stay there.
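The ~17 K figure above can be reproduced with a back-of-envelope calculation. This is a sketch only: the atmospheric mass (about 5.15×10^18 kg) and the specific heat of dry air at constant pressure (about 1004 J/kg/K) are standard round values assumed here, not numbers given in the comment, and feedbacks are ignored, as the comment itself stipulates.

```python
# Assumed round values (not from the comment thread):
ATMOSPHERE_MASS_KG = 5.15e18        # total mass of Earth's atmosphere
CP_DRY_AIR_J_PER_KG_K = 1004.0      # specific heat of dry air at constant pressure

# Value quoted in the comment for ocean heat gain, 2003 to 2012:
OCEAN_HEAT_GAIN_J = 9e22


def atmosphere_warming_k(energy_j: float,
                         mass_kg: float = ATMOSPHERE_MASS_KG,
                         cp: float = CP_DRY_AIR_J_PER_KG_K) -> float:
    """Temperature rise if energy_j went entirely into warming the atmosphere."""
    return energy_j / (mass_kg * cp)


delta_t_k = atmosphere_warming_k(OCEAN_HEAT_GAIN_J)

if __name__ == "__main__":
    print(f"Equivalent atmospheric warming: {delta_t_k:.1f} K")
```

With these assumed constants the result lands close to the ~17 K quoted, which mainly illustrates how small the heat capacity of the atmosphere is relative to the ocean.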

You forgot that higher CO2 concentrations make it easier to make carbonated soda, too.

Despite apparent artificial issues in long-term measurements of cloud from ISCCP, and the lack of reliability in low-cloud data from irradiance-based satellite cloud estimates, we find the ISCCP and MODIS datasets to be in close agreement over the past decade globally. In turn, we find these datasets to correspond well to independent observations of SST, suggesting that some particular regions of the globe are not as affected as others by calibration artifacts. This opens the door to the possibility of using SST temperatures as proxy for past cloud variations.’ http://www.benlaken.com/documents/AIP_PL_13.pdf

In summary, although there is independent evidence for decadal changes in TOA radiative fluxes over the last two decades, the evidence is equivocal. Changes in the planetary and tropical TOA radiative fluxes are consistent with independent global ocean heat-storage data, and are expected to be dominated by changes in cloud radiative forcing. To the extent that they are real, they may simply reflect natural low-frequency variability of the climate system. http://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch3s3-4-4-1.html

The interesting bit about the Palle and Laken article is that it cross validates ISCCP-FD and MODIS with SST.

It provides another way of looking at decadal variations in radiant flux shown in ERBS and ISCCP-FD. Recognizing that there are problems with view angle and cloud penetration using passive irradiance-based cloud estimations – this remains the most critical issue in climate science. The radiant flux data (ERBS and ISCCP) is consistent and avoids the cloud problems. We may – like the IPCC – draw the conclusion that the radiant flux changes are dominated by cloud radiative forcing changes without information on clouds.

What do we know about cloud? It changes decadally with multi-decadal shifts in the Pacific Ocean state. There are a number of references to be found here – http://judithcurry.com/2011/02/09/decadal-variability-of-clouds/

What the data shows as well is multi-decadal shifts in climate – e.g. http://www.geomar.de/en/news/article/klimavorhersagen-ueber-mehrere-jahre-moeglich/

Even if ultimately there is real confidence in ocean heat content data – i.e. the trends exceed the differences in data handling – without understanding changes in reflected SW and emitted IR it remains impossible to understand the global energy dynamic. It is all guesses and bias. We know in CERES – and in accordance with the Loeb et al 2012 study I quoted earlier – and which I quote much more often than Enric Palle and Ben Laken (2013) – that there are large fluctuations in TOA radiant flux in response to changes in ocean and atmospheric circulation.

So was there a climate shift after the turn of the century involving changes in ocean and atmospheric circulation involving cloud changes? Unfortunately CERES missed it – but there are intriguing correspondences between Project Earthshine and ISCCP-FD.

http://watertechbyrie.files.wordpress.com/2014/06/earthshine.jpg

‘Earthshine changes in albedo shown in blue, ISCCP-FD shown in black and CERES in red. A climatologically significant change before CERES followed by a long period of insignificant change.’

Clouds are in fact getting lower – http://onlinelibrary.wiley.com/doi/10.1029/2011GL050506/abstract;jsessionid=87A84B1A4DF2296B122261211CC7FED5.f02t02 – but I wouldn’t try to diagnose that from ISCCP-FD data as the webbly obviously can. And from a diagram that is intended to show problems with the data. Remarkable – albeit superficial and incredible.

You will find a dozen or two references to reputable sources just in the past couple of days – nor did I make much of Palle and Laken. Although it is an interesting study.

What I said was – the data shows quite clearly that changes in cloud radiative forcing in the satellite era exceeded greenhouse gas forcing. In both ERBS and ISCCP-FD. The decrease in reflected SW was 2.1 and 2.4 W/m2 between the 80’s and 90’s respectively. The increase in emitted IR was 0.7 and 0.5 W/m2. It is what it is.

But what is the significant point?

If as suggested here, a dynamically driven climate shift has occurred, the duration of similar shifts during the 20th century suggests the new global mean temperature trend may persist for several decades. Of course, it is purely speculative to presume that the global mean temperature will remain near current levels for such an extended period of time. Moreover, we caution that the shifts described here are presumably superimposed upon a long term warming trend due to anthropogenic forcing. However, the nature of these past shifts in climate state suggests the possibility of near constant temperature lasting a decade or more into the future must at least be entertained. http://onlinelibrary.wiley.com/doi/10.1029/2008GL037022/abstract

It remains astonishing to me that it still isn’t.

114 Chris Colose: SST-cloud correlations in a given climate don’t tell you much about climate sensitivity or cloud feedback, for a number of reasons. Another point is that total cloud variation is a rather useless constraint on the TOA energy perturbation, since clouds act on both sides of the planetary energy budget.

I agree: the effects of clouds are not known.

115 Meow: But again, why should that result in an increase in SW reflectance by clouds? Why not instead an exacerbated water-vapor feedback? Also, you are still not accounting for paleoclimate.

I agree. The net effect of the increased vaporization (with the increased transport of heat from surface to upper troposphere, where the vapor condenses and freezes) is not known. As far as I can tell from readings to date, the increased energy transferred to vaporization hasn’t been accounted for; and the net changes in total cloud cover and its distribution are not known.

As far as I can tell, no one can account for paleoclimate and recent climate to the level of accuracy required to resolve the questions that you have posed in response to my post.

We might not be in agreement with the thinking of Matthew R Marler but he could be on to something here.

If the mathematics of simple climate models fail to provide us with robust solutions and if the arithmetic of complex climate models produce similar outcomes (roughly the same results but still non-robust), why not solve the climate issue with philosophy? Does not existentialism show “I think therefore I am right”? So as those CO2-fertilized weeds grow strong amidst the wreckage of our smashed and crumbling civilisation, what better environment to trial the use of poetry to solve the myriad of engineering problems, feng shui for all our niggling medical crises or zoology to rebuild some semblance of a global monetary system?

And to keep this on-topic, I see Judy is ahead of the game and already applying philosophy to climate attribution (and perhaps also criminal psychology as she tells her chum David Rose at the Rail on Sunday that she “suspects” a solution).

Given its title, it is possible this use of philosophy within climate attribution being employed by Curry is explained in her great work on the subject Reasoning About Climate Uncertainty. So it might be worth a read. Or rhetorically, it might not.

The comments seemed very strange, and then I remembered that I had the Cloud2Butt app installed on this browser. So now “butt feedback is moderately positive” makes some sense.

I installed the app due to being inundated with corpo IT hype about “The Cloud,” but it makes climate blogs extra surreal, too.

Rob Ellison #98 – ‘The figure I linked to was from Louliette (2012) -http://www.tos.org/oceanography/archive/24-2_leuliette.pdf’

No, it isn’t. It’s from this apparently unpublished document residing on an NOAA server. You can tell it’s a work-in-progress by the inconsistency between numbers given in the write-up compared to the table from which you took the 0.2 +/-0.8 figure.

In terms of actual published estimates, the Antonov et al. 2005 method (depicted at http://www.nodc.noaa.gov/OC5/3M_HEAT_CONTENT/) produces a 2005-2011 trend of 0.59mm/yr for depth to 2000m. Chen et al. 2013 looked at three gridded Argo datasets produced by independent groups and found total steric trends of 0.48, 0.78 and 0.54mm/yr. All trend amounts are significant, at least in relation to OLS statistics.

Why did Leuliette find such a low figure compared to other constructions? There are a couple of clues in the paper:

1) The observed JASON1+JASON2 sea level rise given in the table is 1.6mm/yr, which is only 66% of the actual JASON1+JASON2 trend of 2.4mm/yr over the same period. The reason for this discrepancy is geographical coverage in his analysis, due to data availability and methodological choices (see Figure 3 in the document). This means the steric estimate is almost certainly biased low compared to the true global amount. However, looking at the spatial steric trends in Chen et al. 2013 SI, it’s clear those other estimates also suffer from this bias to some extent. See von Schuckmann et al. 2014.

2) Also in the write-up it’s stated that the estimate only includes depths to 900m. Measurements at further depths indicate substantial warming below 900m, so again there’s a likely low bias here.

Furthermore we now have another couple of years data. According to the Antonov et al. method from 2005 right up to the present (April-Jun 2014) the trend comes to 0.97mm/yr for 0-2000m.

‘GSSL, GOHC and GOFC derived from in situ observations are a useful benchmark for ocean and climate models and an important diagnostic for changes in the Earth’s climate system (Hansen et al., 2005; Levitus et al., 2005). Differences among various analyses and inconsistencies with other observations (e.g. altimetry, GRACE, Earth’s energy budget) require particular attention (Hansen et al., 2005; Willis et al., 2008; Domingues et al., 2008; Cazenave and Llovel, 2010; Trenberth, 2010; Lyman et al., 2010).’ http://www.ocean-sci.net/7/783/2011/os-7-783-2011.pdf

What is looked for is small changes against a background of large interannual variation to decadal variation. The changes include changes in the TOA energy dynamic related to changes in ocean and atmospheric circulation – changes in cloud.

e.g. http://www.ocean-sci.net/7/783/2011/os-7-783-2011.pdf

From the Loeb et al study cited earlier.

Thank you Paul S. I hope Rob Ellison corrects the statements on his blog and credits your work.

With regard to comments from Matthew Marler above, it’s very strange, from a risk management perspective, to see someone string together a series of ‘ifs’ all tending towards minimization of a risk, without also considering a parallel series of ‘ifs’ on the other side of the balance. I suppose that if all uncertainties are resolved in the direction of lower risk, we just might get away with BAU for the next few decades without a complete disaster (though continued sea level rise, ocean acidification and 2 degrees Celsius actually sound pretty risky to me, and the risk that there are other factors in play seems to be reinforced by paleo data on glacial-interglacial transitions). But basing our policy on the assumption that this is the way things will go is crazy, IMHO.

Thanks Paul S.

I couldn’t find the Leuliette figure that Rob Ellison linked to either, but I figured I may have overlooked something. It takes a lot of work to fact-check persistent skeptics such as Ellison. And even pointing out their errors is usually not enough, as they will generally ignore your suggestions and then simply repost again and again on blogs such as Climate Etc. Not much one can do in that case — which is why RC has moderation, thankfully.

Implement the precautionary principle and replace fossil fuel power plants, achieving the minimum 40% global emissions reduction requested by the IPCC.

If we are incorrect, then the downside will be reduced health care costs associated with airborne carbon pollution, reduced acidification of the oceans, and an end to contamination of fresh water supplies with sewage!

The redistribution of wealth will also be a good thing for many more people than it will affect negatively.

@119: That’s all but to say, “We don’t know everything, therefore we don’t know anything, therefore there’s no problem with partying on.” Your uncertainty monster, as Curry’s, has one mouth, but the real one has two mouths.

Finally, you’ve once more failed to explain paleoclimate given your claimed (but unsupported) strong negative feedback. Hint: average annual TSI has varied by ~ +-0.08% (~ +- 0.28 W/m^2) over the entire history of Milankovitch cycles [1], yet GAT varied by ~6K. How does such a tiny variation in TSI cause such a large change in GAT? What feedback regimes are compatible with that phenomenon? Explain at length. Then contrast with the >= 0.85 W/m^2 forcing currently being exerted by anthropogenic CO2 & feedbacks.

[1] Laskar et al, “A long-term numerical solution for the insolation quantities of the Earth” (2004), DOI: 10.1051/0004-6361:20041335 . BTW, they have a very handy web-based insolation calculator at http://www.imcce.fr/Equipes/ASD/insola/earth/earth.html , using which I got the total annual insolation figures for the last 1M years (several cycles) in 1000y steps.
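For anyone who wants to check the percentage-to-W/m^2 conversions in this comment, here is a rough sketch. It assumes a modern TSI of about 1361 W/m^2 and assumes the quoted W/m^2 figures are global means (TSI divided by 4 for the sphere); neither assumption is stated in the comment, and the function names are just for illustration.

```python
# Rough check of the percentages quoted above. Inputs are assumptions, not
# values taken from Laskar et al.
TSI = 1361.0  # W/m^2, approximate modern total solar irradiance (assumed)


def global_mean_flux(tsi: float) -> float:
    """Average the incoming beam over the whole sphere (factor of 4)."""
    return tsi / 4.0


def fraction_to_flux(fraction: float, tsi: float = TSI) -> float:
    """Convert a fractional change in TSI to a global-mean flux change (W/m^2)."""
    return fraction * global_mean_flux(tsi)


def flux_to_fraction(flux: float, tsi: float = TSI) -> float:
    """Express a global-mean flux change (W/m^2) as a fraction of mean insolation."""
    return flux / global_mean_flux(tsi)


milankovitch = fraction_to_flux(0.0008)  # the +-0.08% quoted in the comment
anthro = flux_to_fraction(0.85)          # the 0.85 W/m^2 quoted in the comment

print(f"0.08% of global-mean insolation: {milankovitch:.2f} W/m^2")
print(f"0.85 W/m^2 as a fraction of global-mean insolation: {anthro:.2%}")
```

Under those assumptions the first number comes out close to the ~0.28 W/m^2 quoted, and the second is a few times larger than the Milankovitch percentage.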

Bryson Brown wrote: “it’s very strange, from a risk management perspective, to see someone string together a series of ‘ifs’ all tending towards minimization of a risk, without also considering a parallel series of ‘ifs’ on the other side of the balance. I suppose that if all uncertainties are resolved in the direction of lower risk, we just might get away with BAU for the next few decades without a complete disaster … But basing our policy on the assumption that this is the way things will go is crazy”

It is indeed crazy — IF your goal is to reduce the risk of “a complete disaster”.

But it is not crazy if your goal is to “get away with BAU for the next few decades”.

128 meow: @119: That’s all but to say, “We don’t know everything, therefore we don’t know anything, therefore there’s no problem with partying on.” Your uncertainty monster, as Curry’s, has one mouth, but the real one has two mouths.

That isn’t what I wrote. The effects of increasing temperature on likely increasing the rate of vaporization of surface water and likely increasing the rate of non-radiative transport of latent heat from surface to upper troposphere have been ignored in the calculations of the sensitivity of the mean climate to a doubling of CO2 concentration. This biases the estimate of the climate sensitivity in the upward direction. Consideration of the energy flows necessary to increase temperature of the surface and troposphere and to vaporize the additional water provides no reason to think that the bias is negligible.

Other ways that the standard or “consensus” calculations bias the climate sensitivity upward also exist and are also not negligible (or at least there is no scientific case that they are negligible), but for now it is sufficient to think about, and try to estimate, the magnitude of the increase in H2O and latent heat flow from surface to upper troposphere.

A really good book on climate, “Principles of Planetary Climate” by Raymond T Pierrehumbert, who sometimes writes here, focuses on equilibrium calculations, and notes that they entail approximation error and that this approximation error is not very important when comparing Earth, Mars, Venus etc. He does not, however, address the size and bias of the approximation errors with respect to a small change (1% or less of mean temp in Kelvin) resulting on Earth from a small change in forcing (doubling of CO2 concentration), over a long but finite time (140 years or so for the concentration of CO2 to double from what it is now.)

If someone has good references addressing the related changes in these two rates of flow (H2O and latent heat) in our dynamic atmosphere (round Earth rotating on a tilted axis while revolving around the sun — and having a surface that is 70% H2O, with other areas land but “not dry”) I would like to see them.

It’s not of great importance where I get my information, but for the record I have Pierrehumbert’s book and some other recent monographs and textbooks; I have ordered “Thermodynamics, Kinetics, and Microphysics of Clouds” by Khvorostyanov and Curry (it’s due to arrive in a few days), and I download a few dozen articles per year on the relevant topics. When I say I have not found something, it is not for lack of effort, and I would like to have the specific gaps in my knowledge filled in with detailed, published scientific articles and books.

Hank Roberts @ 124 – if you would read Climate Etc, you would already know that. Paul S. told him the same thing a few months ago.

Not that anybody over there noticed.

Arun @3,4 on greater than 100% attribution.

My understanding is that, in addition to the slight cooling trend leading up to ~1850, the meaning is that the human forcings are both warming and cooling. Some things, like our sulfate emissions and some other particulates, have cooling effects. The warming we have seen is a result of the warming we have caused, minus the cooling we have caused. So, our warming effects are more than observed warming.
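A small worked example may make the bookkeeping clearer. The numbers below are hypothetical, picked only to show how the fraction can exceed 100%; they are not taken from AR5 or any attribution study.

```python
# All numbers are hypothetical, chosen only to illustrate the arithmetic.
ghg_warming = 0.90       # K, warming from greenhouse gases (hypothetical)
aerosol_cooling = -0.25  # K, cooling from sulfates and other particulates (hypothetical)
natural = -0.05          # K, net natural contribution, slightly cooling (hypothetical)


def attribution_fractions(ghg: float, aerosols: float, nat: float) -> dict:
    """Return observed warming and the fractions attributed to human factors."""
    observed = ghg + aerosols + nat   # what the thermometers see
    net_anthro = ghg + aerosols       # human warming minus human cooling
    return {
        "observed": observed,
        "net_anthropogenic": net_anthro / observed,
        "greenhouse_only": ghg / observed,
    }


result = attribution_fractions(ghg_warming, aerosol_cooling, natural)
print(f"observed warming:        {result['observed']:.2f} K")
print(f"net anthropogenic share: {result['net_anthropogenic']:.0%}")
print(f"greenhouse-only share:   {result['greenhouse_only']:.0%}")
```

With these made-up inputs both shares come out above 100%, because the human-caused warming has to overcome both the human-caused cooling and a small natural cooling.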

Marler said:

There are so many rhetorical tricks buried in this passage that it boggles the mind. First it is a subjective opinion and what sounds like a value judgement that you call it a “small change in forcing”. If you were just above freezing, a 1% change in Kelvin would have a significant effect. And why do you start the counting now on doubling and not start from 1860, the start of the oil age?

“The effects of increasing temperature on likely increasing the rate of vaporization of surface water and likely increasing the rate of non-radiative transport of latent heat from surface to upper troposphere have been ignored in the calculations of the sensitivity of the mean climate to a doubling of CO2 concentration.”

Now, perhaps I’m crazy, but that statement seems inconceivably bizarre to me. Constraining climate sensitivity isn’t a matter of a few ‘calculations,’ but of considering known or modeled climate forcings and responses.

In the case of paleoclimate, obviously ‘vaporization’ rates and convective heat fluxes changed, too. And in the case of model studies using the large, modern, fully-coupled models, the relevant physics would be in the model. (And, for good measure, from everything I’ve read, CS is not explicitly calculated, but ‘emergent.’)

@130:

So you retract that stuff about “benefits of increased CO2 over at least the next few decades have been underestimated”?

Really? That would be news to Trenberth et al who work full-time on energy balance. Why not see what they have to say about evapotranspiration [1] ? Also, “likely increasing the rate of…transport…of…heat…to upper troposphere” is your speculation. Got anything to back it up?

It does? Why? Further, “this” isn’t even occurring because no one is ignoring evapotranspiration.

These assertions are beginning to remind me of the oft-used (but false) accusation that climate scientists ignore convection.

Which errors you persistently take as falling in the direction of lower sensitivity. PDFs have _two_ tails, and uncertainty monsters two mouths.

Given that the Milankovitch TSI forcing is less than +- 0.1%, while nonetheless driving ice-age cycles, the current anthro forcing of > 0.85 W/m^2 (> +0.2%) can hardly be called “a small change in forcing”. Also the “1% or less of mean temp in Kelvin” political rhetoric is best saved for presentations at the Heartland Institute, where people will be impressed by it.

Huh? First, doubling is usually computed over preindustrial levels (~280 ppm). Second, RCP 8.5 reaches a doubling on those terms in ~2050 (36y) and RCP 6.0 in ~2075 (61y). [2] I’m pretty sure we’re on course for RCP 8.5 (no significant constraint on emissions).

The rhetoric, it works here not.

[1] E.g., “Earth’s Global Energy Budget” (2009), DOI:10.1175/2008BAMS2634.1 ; “Tracking Earth’s Energy” (2010), DOI: 10.1126/science.1187272 ; and especially

“Atmospheric Moisture Transports from Ocean to Land and Global Energy Flows in Reanalyses” (2011), doi:10.1175/2011JCLI4171.1

[2] AR5 WG1 Fig. 12.42(a).

[no need to repeat previous posts every time]

To imagine that there are no inconsistencies is a product of marshaling evidence to a meme. In figure 3 of von Schuckmann and Le Traon (2011) are the results of three different Argo ‘climatologies’. To imagine that there are no issues with data handling is statistically naïve. To think that a trend can be discerned in Argo against a background of large variability is magic thinking.

Ocean heat follows TOA radiant flux changes – as it must.

e.g – https://watertechbyrie.files.wordpress.com/2014/06/wong2006figure71.gif

From – http://www.image.ucar.edu/idag/Papers/Wong_ERBEreanalysis.pdf

So what did happen with TOA flux?

Shortwave – http://watertechbyrie.files.wordpress.com/2014/06/ceres_ebaf-toa_ed2-8_anom_toa_shortwave_flux-all-sky_january-2004todecember-2013.png

Longwave – http://watertechbyrie.files.wordpress.com/2014/06/ceres_ebaf-toa_ed2-8_anom_toa_longwave_flux-all-sky_march-2000toapril-2014.png

These are CERES anomalies that are accurate to 0.3W/m2. Most of the decadal variability is in SW. The trend in net flux (-SW -LW | positive warming) is negligible over the period.

The change in global energy content is given as precisely as you like by the first order differential energy equation. A global energy equation that – as far as I know – I personally formulated.

d(W&H)/dt (J/s) = energy in (J/s) – energy out (J/s)

W&H is work and heat – mostly oceans – and I have to add the units or the webbly insists that dimensional analysis is lacking.

Energy in changes very little – and the cycle has gone from peak to peak this century. Energy out changes considerably. So the change in ocean heat over the period should pretty much match the trend in net outgoing energy flux. Either as an increase or decrease in forcing. There are studies that claim this is so with CERES and Argo data.
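To put units on the energy equation above, here is a minimal sketch that turns a constant net TOA flux into accumulated heat. The 0.5 W/m^2 imbalance is a hypothetical input chosen for illustration, not a CERES or Argo result, and the Earth surface area is the usual approximate value.

```python
# Integrate d(W&H)/dt = energy in - energy out for a constant net flux.
EARTH_SURFACE_AREA_M2 = 5.1e14            # approximate surface area of the Earth
SECONDS_PER_YEAR = 365.25 * 24 * 3600     # about 3.156e7 s


def heat_gain_joules(net_flux_w_m2: float, years: float) -> float:
    """Energy accumulated (J) by a constant global-mean net flux (W/m^2)."""
    power_watts = net_flux_w_m2 * EARTH_SURFACE_AREA_M2  # J/s over the globe
    return power_watts * SECONDS_PER_YEAR * years


imbalance = 0.5  # W/m^2, hypothetical net imbalance (energy in minus energy out)
gain = heat_gain_joules(imbalance, years=10)
print(f"{imbalance} W/m^2 sustained for 10 years is about {gain:.1e} J")
```

A negative `imbalance` gives a loss of the same size, so the sign of the net flux trend carries straight through to the sign of the heat content change.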

[edit] It is not so simply because I claim that climate data is far from definitive at this stage – that science is suggesting that dynamical complexity is the mechanism for regime change at multi-decadal scales in the climate system [edit]

Emergent properties of models? While I am here let’s throw one at the modeler.

‘AOS models are therefore to be judged by their degree of plausibility, not whether they are correct or best. This perspective extends to the component discrete algorithms, parameterizations, and coupling breadth: There are better or worse choices (some seemingly satisfactory for their purpose or others needing repair) but not correct or best ones. The bases for judging are a priori formulation, representing the relevant natural processes and choosing the discrete algorithms, and a posteriori solution behavior.’ http://www.pnas.org/content/104/21/8709.full

This is how models evolve from slightly different – within the bounds of feasible inputs – initial and boundary conditions.

http://rsta.royalsocietypublishing.org/content/369/1956/4751/F2.large.jpg

There are no unique solutions.

‘Lorenz was able to show that even for a simple set of nonlinear equations (1.1), the evolution of the solution could be changed by minute perturbations to the initial conditions, in other words, beyond a certain forecast lead time, there is no longer a single, deterministic solution and hence all forecasts must be treated as probabilistic. The fractionally dimensioned space occupied by the trajectories of the solutions of these nonlinear equations became known as the Lorenz attractor (figure 1), which suggests that nonlinear systems, such as the atmosphere, may exhibit regime-like structures that are, although fully deterministic, subject to abrupt and seemingly random change.’ http://rsta.royalsocietypublishing.org/content/369/1956/4751.full

The comparison of model solutions to observations seems to miss a fundamental point. Each solution in an opportunistic ensemble is one of many possible model solutions and is chosen on the basis of expectations about plausible outcomes. As models are failing to model the pause – it would seem to be the expectations that are incorrect rather than the models as such.

If you’re going to frame a temperature response as a percentage change in response to given size of forcing change, then at a bare minimum you need to use the temperature change due to that forcing as the denominator. Given that the greenhouse effect warms the planet by approximately 33K or so, then your denominator should be approximately 33 rather than approximately 287.

That increases the size of the temperature change per “small change” of doubling of CO2 by almost one order of magnitude, and many people would dispute that an approximately 10% GHG-driven temperature increase for a “small change” in greenhouse forcing is a “small temperature change”.
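The effect of the choice of denominator is easy to check. This sketch assumes a warming of 3 K per doubling of CO2, which is an illustrative value only (the comment above gives no figure beyond "approximately 10%"); the two denominators are the ones quoted above.

```python
def percent_change(delta_t_k: float, baseline_k: float) -> float:
    """Temperature change expressed as a percentage of a chosen baseline."""
    return 100.0 * delta_t_k / baseline_k


DELTA_T_K = 3.0              # K per CO2 doubling (assumed, for illustration)
MEAN_SURFACE_TEMP_K = 287.0  # absolute mean surface temperature quoted above
GREENHOUSE_EFFECT_K = 33.0   # warming due to the greenhouse effect quoted above

vs_absolute = percent_change(DELTA_T_K, MEAN_SURFACE_TEMP_K)
vs_greenhouse = percent_change(DELTA_T_K, GREENHOUSE_EFFECT_K)

print(f"relative to 287 K: {vs_absolute:.1f}%")
print(f"relative to 33 K:  {vs_greenhouse:.1f}%")
print(f"ratio of the two:  {vs_greenhouse / vs_absolute:.1f}x")
```

The ratio depends only on the two denominators (287/33), so it stays the same whatever warming per doubling is assumed.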