I have written a number of times about the procedure used to attribute recent climate change (here in 2010, in 2012 (about the AR4 statement), and again in 2013 after AR5 was released). For people who want a summary of what the attribution problem is, how we think about the human contributions and why the IPCC reaches the conclusions it does, read those posts instead of this one.

The bottom line is that multiple studies indicate with very strong confidence that human activity is the dominant component in the warming of the last 50 to 60 years, and that our best estimates are that pretty much all of the rise is anthropogenic.

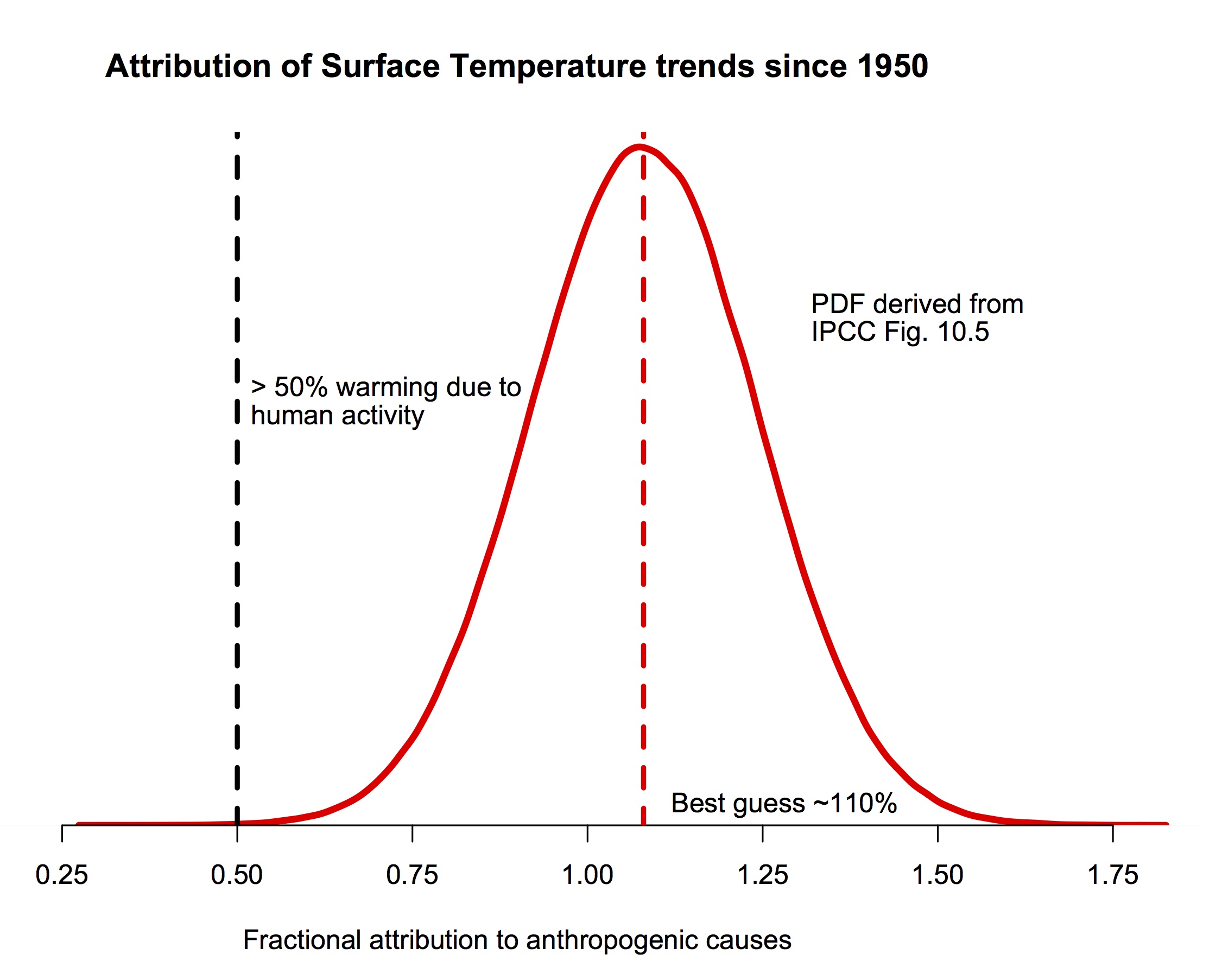

The probability density function for the fraction of warming attributable to human activity (derived from Fig. 10.5 in IPCC AR5). The bulk of the probability is far to the right of the “50%” line, and the peak is around 110%.

If you are still here, I should be clear that this post is focused on a specific claim, recently blogged about by Judith Curry, supporting a “50-50” attribution (i.e. that trends since the middle of the 20th Century are 50% human-caused and 50% natural, a position that would center her pdf at 0.5 in the figure above). She also expressed puzzlement about why other scientists don’t agree with her. Reading over her arguments in detail, I find very little to recommend them, and perhaps the reasoning for this will be interesting for readers. So here follows a line-by-line commentary on her recent post. Please excuse the length.

Starting from the top… (note, quotes from Judith Curry’s blog are blockquoted).

> Pick one:
>
> a) Warming since 1950 is predominantly (more than 50%) caused by humans.
>
> b) Warming since 1950 is predominantly caused by natural processes.
>
> When faced with a choice between a) and b), I respond: ‘I can’t choose, since I think the most likely split between natural and anthropogenic causes to recent global warming is about 50-50’. Gavin thinks I’m ‘making things up’, so I promised yet another post on this topic.

This is not a good start. The statements that ended up in the IPCC SPMs are descriptions of what was found in the main chapters and in the papers they were assessing, not questions that were independently thought about and then answered. Thus while this dichotomy might represent Judith’s problem right now, it has nothing to do with what IPCC concluded. In addition, framing this as a binary choice gives implicit (but invalid) support to the idea that each choice is equally likely. That this is invalid reasoning should be obvious by simply replacing 50% with any other value and noting that the half/half argument could be made independently of any data.

> For background and context, see my previous 4-part series Overconfidence in the IPCC’s detection and attribution.

> Framing
>
> The IPCC’s AR5 attribution statement:
>
> It is extremely likely that more than half of the observed increase in global average surface temperature from 1951 to 2010 was caused by the anthropogenic increase in greenhouse gas concentrations and other anthropogenic forcings together. The best estimate of the human induced contribution to warming is similar to the observed warming over this period.
>
> I’ve remarked on the ‘most’ (previous incarnation of ‘more than half’, equivalent in meaning) in my Uncertainty Monster paper:
>
> Further, the attribution statement itself is at best imprecise and at worst ambiguous: what does “most” mean – 51% or 99%?
>
> Whether it is 51% or 99% would seem to make a rather big difference regarding the policy response. It’s time for climate scientists to refine this range.
>
> I am arguing here that the ‘choice’ regarding attribution shouldn’t be binary, and there should not be a break at 50%; rather we should consider the following terciles for the net anthropogenic contribution to warming since 1950:
>
> - more than 66%
>
> - 33-66%
>
> - less than 33%
>
> JC note: I removed the bounds at 100% and 0% as per a comment from Bart Verheggen.
>
> Hence 50-50 refers to the tercile 33-66% (as the midpoint).

Here Judith makes the same mistake that I commented on in my 2012 post: assuming that a statement about where the bulk of the pdf lies is a statement about where its mean is, and that it must be cut off at some value (whether it is 99% or 100%). Neither of those things follows. I will gloss over the completely unnecessary confusion about the meaning of the word ‘most’ (again thoroughly discussed in 2012). I will also not get into policy implications since the question itself is purely a scientific one.

The division into terciles for the analysis is not a problem though, and the weight of the pdf in each tercile can easily be calculated. Translating the top figure, the likelihood of the attribution of the 1950+ trend to anthropogenic forcings falling in each tercile is 2×10⁻⁴% (below 33%), 0.4% (33-66%) and 99.5% (above 66%).
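For readers who want to see how tercile weights like these are obtained from a pdf, here is a minimal sketch. It assumes, purely for illustration, that the attribution pdf is Gaussian with a mean of 110% and a standard deviation set from the width of the 80-130% (5-95%) range quoted from Fig. 10.5 further down. The actual distribution is not exactly Gaussian, so the printed numbers will not match the percentages above exactly; the point is only the mechanics of integrating the pdf over each tercile.

```python
import math

def normal_cdf(x, mean, sd):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))

def tercile_weights(mean, p05, p95):
    """Probability mass below 33%, between 33% and 66%, and above 66%, assuming
    (for illustration only) a Gaussian whose width matches the 5-95% range."""
    sd = (p95 - p05) / (2 * 1.645)  # the 5-95% range spans about 2 x 1.645 sd
    cdf_low = normal_cdf(0.33, mean, sd)
    cdf_high = normal_cdf(0.66, mean, sd)
    return cdf_low, cdf_high - cdf_low, 1.0 - cdf_high

# Attribution fraction as quoted in the text: mean ~1.1, 5-95% range ~0.8-1.3
low, middle, high = tercile_weights(1.1, 0.8, 1.3)
print(f"<33%: {low:.1e}   33-66%: {middle:.2%}   >66%: {high:.2%}")
```

Under this Gaussian assumption the tails are thinner than in the real pdf, but the conclusion is the same: essentially all of the probability lies in the top tercile.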

> Note: I am referring only to a period of overall warming, so by definition the cooling argument is eliminated. Further, I am referring to the NET anthropogenic effect (greenhouse gases + aerosols + etc). I am looking to compare the relative magnitudes of net anthropogenic contribution with net natural contributions.

The two IPCC statements discussed attribution to greenhouse gases (in AR4) and to all anthropogenic forcings (in AR5) (the subtleties involved there are discussed in the 2013 post). I don’t know what she refers to as the ‘cooling argument’, since it is clear that the temperatures have indeed warmed since 1950 (the period referred to in the IPCC statements). It is worth pointing out that there can be no assumption that natural contributions must be positive – indeed for any random time period of any length, one would expect natural contributions to be cooling half the time.

> Further, by global warming I refer explicitly to the historical record of global average surface temperatures. Other data sets such as ocean heat content, sea ice extent, whatever, are not sufficiently mature or long-range (see Climate data records: maturity matrix). Further, the surface temperature is most relevant to climate change impacts, since humans and land ecosystems live on the surface. I acknowledge that temperature variations can vary over the earth’s surface, and that heat can be stored/released by vertical processes in the atmosphere and ocean. But the key issue of societal relevance (not to mention the focus of IPCC detection and attribution arguments) is the realization of this heat on the Earth’s surface.

Fine with this.

> IPCC
>
> Before getting into my 50-50 argument, a brief review of the IPCC perspective on detection and attribution. For detection, see my post Overconfidence in IPCC’s detection and attribution. Part I.
>
> Let me clarify the distinction between detection and attribution, as used by the IPCC. Detection refers to change above and beyond natural internal variability. Once a change is detected, attribution attempts to identify external drivers of the change.
>
> The reasoning process used by the IPCC in assessing confidence in its attribution statement is described by this statement from the AR4:
>
> “The approaches used in detection and attribution research described above cannot fully account for all uncertainties, and thus ultimately expert judgement is required to give a calibrated assessment of whether a specific cause is responsible for a given climate change. The assessment approach used in this chapter is to consider results from multiple studies using a variety of observational data sets, models, forcings and analysis techniques. The assessment based on these results typically takes into account the number of studies, the extent to which there is consensus among studies on the significance of detection results, the extent to which there is consensus on the consistency between the observed change and the change expected from forcing, the degree of consistency with other types of evidence, the extent to which known uncertainties are accounted for in and between studies, and whether there might be other physically plausible explanations for the given climate change. Having determined a particular likelihood assessment, this was then further downweighted to take into account any remaining uncertainties, such as, for example, structural uncertainties or a limited exploration of possible forcing histories of uncertain forcings. The overall assessment also considers whether several independent lines of evidence strengthen a result.” (IPCC AR4)
>
> I won’t make a judgment here as to how ‘expert judgment’ and subjective ‘down weighting’ is different from ‘making things up’.

Is expert judgement about the structural uncertainties in a statistical procedure associated with various assumptions that need to be made different from ‘making things up’? Actually, yes – it is.

> AR5 Chapter 10 has a more extensive discussion on the philosophy and methodology of detection and attribution, but the general idea has not really changed from AR4.
>
> In my previous post (related to the AR4), I asked the question: what was the original likelihood assessment from which this apparently minimal downweighting occurred? The AR5 provides an answer:
>
> The best estimate of the human induced contribution to warming is similar to the observed warming over this period.
>
> So, I interpret this as saying that the IPCC’s best estimate is that 100% of the warming since 1950 is attributable to humans, and they then down weight this to ‘more than half’ to account for various uncertainties. And then assign an ‘extremely likely’ confidence level to all this.
>
> Making things up, anyone?

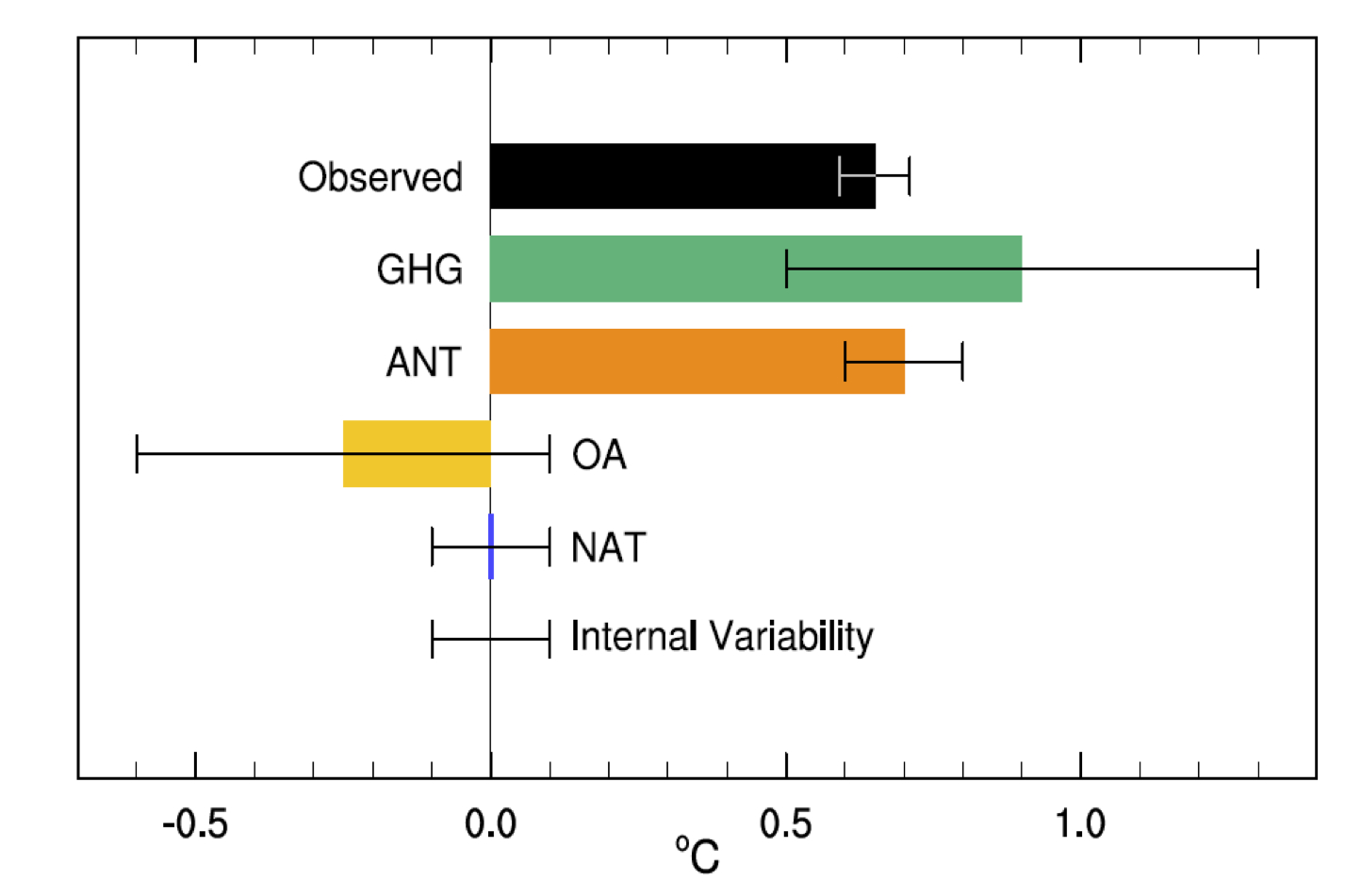

This is very confused. The basis of the AR5 calculation is summarised in figure 10.5:

The best estimate of the warming due to anthropogenic forcings (ANT) is the orange bar (noting the 1𝛔 uncertainties). Reading off the graph, it is 0.7±0.2ºC (5-95%) with the observed warming 0.65±0.06ºC (5-95%). The attribution then follows as having a mean of ~110%, with a 5-95% range of 80–130%. This easily justifies the IPCC claims of having a mean near 100%, and a very low likelihood of the attribution being less than 50% (p < 0.0001!). Note there is no ‘downweighting’ of any argument here – both statements are true given the numerical distribution. However, there must be some expert judgement to assess what potential structural errors might exist in the procedure, for instance the assumption that fingerprint patterns are linearly additive, or uncertainties in the pattern because of deficiencies in the forcings or models. In the absence of any reason to think that the attribution procedure is biased (and Judith offers none), structural uncertainties will only serve to expand the spread. Note that one would need to expand the uncertainties by a factor of 3 in both directions to contradict the first part of the IPCC statement. That seems unlikely in the absence of any demonstration of some huge missing factors.
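The step from the ANT and observed warming ranges to an attribution fraction can be sketched with a small Monte Carlo calculation. This is not the AR5 procedure: it simply assumes that both quantities are independent Gaussians whose 5-95% half-widths are the ± values quoted above, samples their ratio, and then repeats the exercise with the ANT range inflated threefold as a crude stand-in for additional structural uncertainty. Tail probabilities such as P(<50%) are very sensitive to these assumptions, so the printed values are illustrative rather than a reproduction of the numbers in the text.

```python
import random

random.seed(42)  # fixed seed so the sketch is reproducible
Z95 = 1.645      # a 5-95% half-width is roughly 1.645 standard deviations

def attribution_samples(ant_mean, ant_half, obs_mean, obs_half, n=100_000):
    """Sample the ratio ANT warming / observed warming, treating both as
    independent Gaussians (an assumption, not the AR5 method)."""
    ant_sd, obs_sd = ant_half / Z95, obs_half / Z95
    return [random.gauss(ant_mean, ant_sd) / random.gauss(obs_mean, obs_sd)
            for _ in range(n)]

def summarize(samples):
    """Median, 5th and 95th percentiles, and probability of a fraction below 50%."""
    s = sorted(samples)
    n = len(s)
    return s[n // 2], s[int(0.05 * n)], s[int(0.95 * n)], sum(x < 0.5 for x in s) / n

# ANT 0.7±0.2ºC and observed 0.65±0.06ºC (5-95%), as read off Fig. 10.5 in the text
median, p05, p95, p_below_half = summarize(attribution_samples(0.7, 0.2, 0.65, 0.06))
print(f"median {median:.2f}, 5-95% {p05:.2f}-{p95:.2f}, P(<50%) {p_below_half:.4f}")

# Same calculation with the ANT range inflated threefold (crude extra structural error)
_, _, _, p_wide = summarize(attribution_samples(0.7, 3 * 0.2, 0.65, 0.06))
print(f"P(<50%) with a 3x wider ANT range: {p_wide:.3f}")
```

Only with the much wider range does the probability of a sub-50% attribution become large enough to conflict with an ‘extremely likely’ (>95%) statement.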

I’ve just reread Overconfidence in IPCC’s detection and attribution. Part IV, I recommend that anyone who seriously wants to understand this should read this previous post. It explains why I think the AR5 detection and attribution reasoning is flawed.

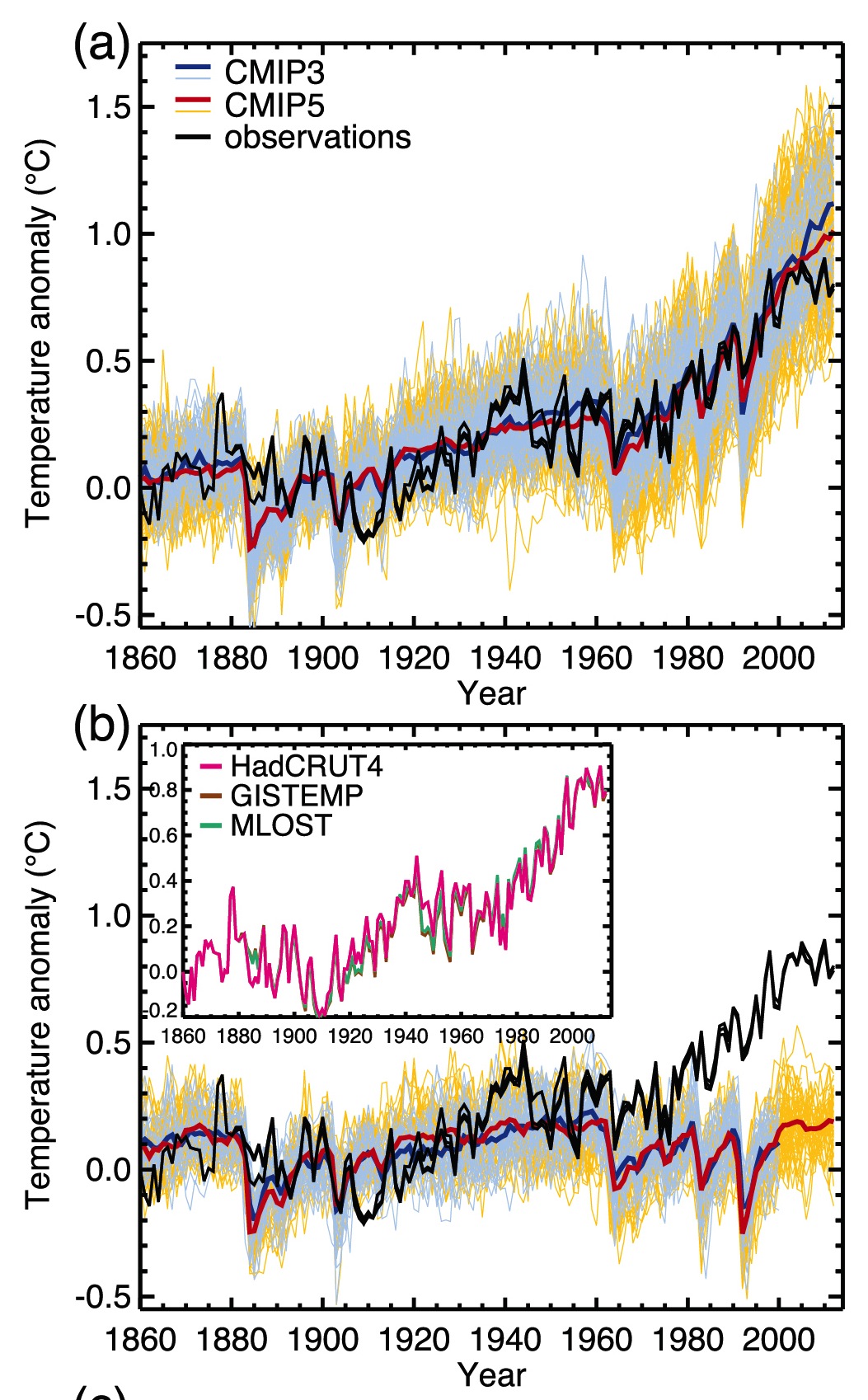

Of particular relevance to the 50-50 argument, the IPCC has failed to convincingly demonstrate ‘detection.’ Because historical records aren’t long enough and paleo reconstructions are not reliable, the climate models ‘detect’ AGW by comparing natural forcing simulations with anthropogenically forced simulations. When the spectra of the variability of the unforced simulations is compared with the observed spectra of variability, the AR4 simulations show insufficient variability at 40-100 yrs, whereas AR5 simulations show reasonable variability. The IPCC then regards the divergence between unforced and anthropogenically forced simulations after ~1980 as the heart of the their detection and attribution argument. See Figure 10.1 from AR5 WGI (a) is with natural and anthropogenic forcing; (b) is without anthropogenic forcing:

This is also confused. “Detection” is (like attribution) a model-based exercise, starting from the idea that one can estimate the result of a counterfactual: what would the temperature have done in the absence of the drivers compared to what it would do if they were included? GCM results show clearly that the expected anthropogenic signal would start to be detectable (“come out of the noise”) sometime after 1980 (for reference, Hansen’s public statement to that effect was in 1988). There is no obvious discrepancy in spectra between the CMIP5 models and the observations, and so I am unclear why Judith finds the detection step lacking. It is interesting to note that given the variability in the models, the anthropogenic signal is now more than 5𝛔 over what would have been expected naturally (and if it’s good enough for the Higgs Boson….).

> Note in particular that the models fail to simulate the observed warming between 1910 and 1940.

Here Judith is (I think) referring to the mismatch between the ensemble mean (red) and the observations (black) in that period. But the red line is simply an estimate of the forced trends, so the correct reading of the graph would be that the models do not support an argument suggesting that all of the 1910-1940 excursion is forced (contingent on the forcing datasets that were used), which is what was stated in AR5. However, the observations are well within the spread of the models and so could easily be within the range of the forced trend + simulated internal variability. A quick analysis (a proper attribution study is more involved than this) gives an observed trend over 1910-1940 of 0.13 to 0.15ºC/decade (depending on the dataset, with ±0.03ºC/decade (5-95%) uncertainty in the OLS fit), while the spread in my collation of the historical CMIP5 models is 0.07±0.07ºC/decade (5-95%). Specifically, 8 model runs out of 131 have trends over that period greater than 0.13ºC/decade, suggesting that one might see this magnitude of excursion 5-10% of the time. For reference, the GHG-related trend in the GISS models over that period is about 0.06ºC/decade. However, the uncertainties in the forcings for that period are larger than in recent decades (in particular for the solar and aerosol-related emissions), and so the forced trend (0.07ºC/decade) could have been different in reality. And since we don’t have good ocean heat content data, nor any satellite observations, or any measurements of stratospheric temperatures to help distinguish potential errors in the forcing from internal variability, it is inevitable that there will be more uncertainty in the attribution for that period than for more recent ones.
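The ‘quick analysis’ above boils down to two simple calculations: an OLS trend expressed per decade, and the fraction of ensemble members whose trend exceeds the observed value. A minimal sketch follows; the synthetic series and the placeholder ensemble (8 values above the threshold out of 131, mirroring the count in the text) are made up for illustration and are not CMIP5 output.

```python
def ols_trend_per_decade(years, temps):
    """Ordinary least-squares slope of temps against years, in ºC per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return 10.0 * sxy / sxx  # ºC per year -> ºC per decade

def exceedance_fraction(trends, threshold):
    """Fraction of ensemble members whose trend exceeds the threshold."""
    return sum(t > threshold for t in trends) / len(trends)

# Synthetic series with a 0.14 ºC/decade trend over 1910-1940 (not real data)
years = list(range(1910, 1941))
temps = [0.014 * (y - 1910) for y in years]
print(round(ols_trend_per_decade(years, temps), 3))  # 0.14

# Placeholder ensemble: 8 of 131 trends above 0.13 ºC/decade, as in the text
placeholder_trends = [0.15] * 8 + [0.05] * 123
print(f"{exceedance_fraction(placeholder_trends, 0.13):.1%}")  # 6.1%
```

The 6.1% exceedance rate is where the ‘5-10% of the time’ estimate comes from.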

> The glaring flaw in their logic is this. If you are trying to attribute warming over a short period, e.g. since 1980, detection requires that you explicitly consider the phasing of multidecadal natural internal variability during that period (e.g. AMO, PDO), not just the spectra over a long time period. Attribution arguments of late 20th century warming have failed to pass the detection threshold which requires accounting for the phasing of the AMO and PDO. It is typically argued that these oscillations go up and down, in net they are a wash. Maybe, but they are NOT a wash when you are considering a period of the order, or shorter than, the multidecadal time scales associated with these oscillations.

Watch the pea under the thimble here. The IPCC statements concerned a relatively long period (i.e. 1950 to 2005/2010). Judith jumps to assessing shorter trends (i.e. from 1980) and shorter periods obviously have the potential to have a higher component of internal variability. The whole point about looking at longer periods is that internal oscillations have a smaller contribution. Since she is arguing that the AMO/PDO have potentially multi-decadal periods, she should be supportive of using multi-decadal periods (i.e. 50, 60 years or more) for the attribution.

> Further, in the presence of multidecadal oscillations with a nominal 60-80 yr time scale, convincing attribution requires that you can attribute the variability for more than one 60-80 yr period, preferably back to the mid 19th century. Not being able to address the attribution of change in the early 20th century to my mind precludes any highly confident attribution of change in the late 20th century.

This isn’t quite right. Our expectation (from basic theory and models) is that the second half of the 20th C is when anthropogenic effects really took off. Restricting attribution to 120-160 yr trends seems too constraining – though there is no problem in looking at that too. However, Judith is actually assuming what remains to be determined. What is the evidence that all 60-80yr variability is natural? Variations in forcings (in particular aerosols, and maybe solar) can easily project onto this timescale and so any separation of forced vs. internal variability is really difficult based on statistical arguments alone (see also Mann et al, 2014). Indeed, it is the attribution exercise that helps you conclude what the magnitude of any internal oscillations might be. Note that if we were only looking at the global mean temperature, there would be quite a lot of wiggle room for different contributions. Looking deeper into different variables and spatial patterns is what allows for a more precise result.

> The 50-50 argument
>
> There are multiple lines of evidence supporting the 50-50 (middle tercile) attribution argument. Here are the major ones, to my mind.
>
> Sensitivity
>
> The 100% anthropogenic attribution from climate models is derived from climate models that have an average equilibrium climate sensitivity (ECS) around 3C. One of the major findings from AR5 WG1 was the divergence in ECS determined via climate models versus observations. This divergence led the AR5 to lower the likely bound on ECS to 1.5C (with ECS very unlikely to be below 1C).

Judith’s argument misstates how forcing fingerprints from GCMs are used in attribution studies. Notably, they are scaled to get the best fit to the observations (along with the other terms). If the models all had sensitivities of either 1ºC or 6ºC, the attribution to anthropogenic changes would be the same as long as the pattern of change was robust. What would change would be the scaling – less than one would imply a better fit with a lower sensitivity (or smaller forcing), and vice versa (see figure 10.4).
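The role of the scaling factors can be illustrated with a toy regression. The sketch below uses made-up ‘anthropogenic’ and ‘natural’ fingerprints and synthetic observations, and fits the scaling factors by ordinary least squares; real attribution studies use optimal fingerprinting with an estimated noise covariance, which is not reproduced here. Doubling the anthropogenic fingerprint, as a stand-in for a model with twice the sensitivity, halves its fitted scaling factor but leaves the attributed anthropogenic warming unchanged.

```python
import math
import random

random.seed(1)  # reproducible synthetic "observations"

def fit_scaling_factors(obs, f1, f2):
    """Least-squares scaling factors (b1, b2) for obs ~ b1*f1 + b2*f2,
    solved from the 2x2 normal equations (no intercept, ordinary least squares)."""
    s11 = sum(a * a for a in f1)
    s22 = sum(b * b for b in f2)
    s12 = sum(a * b for a, b in zip(f1, f2))
    y1 = sum(a * y for a, y in zip(f1, obs))
    y2 = sum(b * y for b, y in zip(f2, obs))
    det = s11 * s22 - s12 * s12
    return (s22 * y1 - s12 * y2) / det, (s11 * y2 - s12 * y1) / det

years = list(range(1951, 2011))
# Hypothetical fingerprints: a steady anthropogenic ramp and an 11-year natural cycle
ant = [0.012 * (y - 1951) for y in years]
nat = [0.05 * math.sin(2 * math.pi * (y - 1951) / 11) for y in years]
obs = [a + n + random.gauss(0, 0.08) for a, n in zip(ant, nat)]

results = {}
for factor in (1.0, 2.0):  # 2.0 mimics a model with twice the sensitivity
    ant_model = [factor * a for a in ant]
    b_ant, b_nat = fit_scaling_factors(obs, ant_model, nat)
    attributed = b_ant * (ant_model[-1] - ant_model[0])  # scaled ANT warming, ºC
    results[factor] = (b_ant, attributed)
    print(f"fingerprint x{factor:.0f}: scaling {b_ant:.2f}, attributed warming {attributed:.2f} ºC")
```

The invariance is exact for least squares: multiplying a regressor by a constant divides its coefficient by the same constant and leaves the other coefficient and the fitted values unchanged.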

She also misstates how ECS is constrained – all constraints come from observations (whether from long-term paleo-climate observations, transient observations over the 20th Century or observations of emergent properties that correlate to sensitivity) combined with some sort of model. The divergence in AR5 was between constraints based on the transient observations using simplified energy balance models (EBM), and everything else. Subsequent work (for instance by Drew Shindell) has shown that the simplified EBMs are missing important transient effects associated with aerosols, and so the divergence is very likely less than AR5 assessed.

> Nic Lewis at Climate Dialogue summarizes the observational evidence for ECS between 1.5 and 2C, with transient climate response (TCR) around 1.3C.
>
> Nic Lewis has a comment at BishopHill on this:
>
> The press release for the new study states: “Rapid warming in the last two and a half decades of the 20th century, they proposed in an earlier study, was roughly half due to global warming and half to the natural Atlantic Ocean cycle that kept more heat near the surface.” If only half the warming over 1976-2000 (linear trend 0.18°C/decade) was indeed anthropogenic, and the IPCC AR5 best estimate of the change in anthropogenic forcing over that period (linear trend 0.33 W m⁻²/decade) is accurate, then the transient climate response (TCR) would be little over 1°C. That is probably going too far, but the 1.3-1.4°C estimate in my and Marcel Crok’s report A Sensitive Matter is certainly supported by Chen and Tung’s findings.
>
> Since the CMIP5 models used by the IPCC on average adequately reproduce observed global warming in the last two and a half decades of the 20th century without any contribution from multidecadal ocean variability, it follows that those models (whose mean TCR is slightly over 1.8°C) must be substantially too sensitive.
>
> BTW, the longer term anthropogenic warming trends (50, 75 and 100 year) to 2011, after removing the solar, ENSO, volcanic and AMO signals given in Fig. 5 B of Tung’s earlier study (freely accessible via the link), of respectively 0.083, 0.078 and 0.068°C/decade also support low TCR values (varying from 0.91°C to 1.37°C), upon dividing by the linear trends exhibited by the IPCC AR5 best estimate time series for anthropogenic forcing. My own work gives TCR estimates towards the upper end of that range, still far below the average for CMIP5 models.
>
> If true climate sensitivity is only 50-65% of the magnitude that is being simulated by climate models, then it is not unreasonable to infer that attribution of late 20th century warming is not 100% caused by anthropogenic factors, and attribution to anthropogenic forcing is in the middle tercile (50-50).
>
> The IPCC’s attribution statement does not seem logically consistent with the uncertainty in climate sensitivity.

This is related to a paper by Tung and Zhou (2013). Note that the attribution statement has again shifted, this time to the last 25 years of the 20th Century (1976-2000). But there are a couple of major problems with this argument. First of all, Tung and Zhou assumed that all multi-decadal variability was associated with the Atlantic Multi-decadal Oscillation (AMO) and did not assess whether anthropogenic forcings could project onto this variability. It is circular reasoning to then use this paper to conclude that all multi-decadal variability is associated with the AMO.

The second problem is more serious. Lewis’ argument up until now has been that the best fit to the transient evolution over the 20th Century is with a relatively small sensitivity and small aerosol forcing (as opposed to a larger sensitivity and larger opposing aerosol forcing). However, in both these cases the attribution of the long-term trend to the combined anthropogenic effects is actually the same (near 100%). Indeed, one valid criticism of the recent papers on transient constraints is precisely that the simple models used do not have sufficient decadal variability!
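The arithmetic behind the TCR figure in Lewis’s quoted comment can be written out explicitly. The sketch assumes a forcing of 3.71 W m⁻² for doubled CO2 (close to the AR5 figure of 3.7 W m⁻²) and, like the quoted back-of-the-envelope estimate, ignores any lag between forcing and response. It shows that the implied TCR scales directly with the anthropogenic fraction assumed at the outset, so the low value follows from the 50% attribution taken as input.

```python
F_2X = 3.71  # W m^-2, assumed forcing for doubled CO2 (close to the AR5 value)

def implied_tcr(temp_trend, forcing_trend, anthropogenic_fraction):
    """Naive TCR estimate: the anthropogenic share of a temperature trend
    (ºC/decade) divided by the forcing trend (W m^-2/decade), scaled to 2xCO2.
    Ignores lags between forcing and response."""
    return F_2X * anthropogenic_fraction * temp_trend / forcing_trend

# 1976-2000 trends quoted by Lewis: 0.18 ºC/decade warming, 0.33 W m^-2/decade forcing
for fraction in (0.5, 1.0):
    print(f"anthropogenic fraction {fraction:.0%}: TCR ~ {implied_tcr(0.18, 0.33, fraction):.2f} ºC")
```

This prints a TCR of about 1.01ºC for a 50% anthropogenic fraction and about 2.02ºC for 100%.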

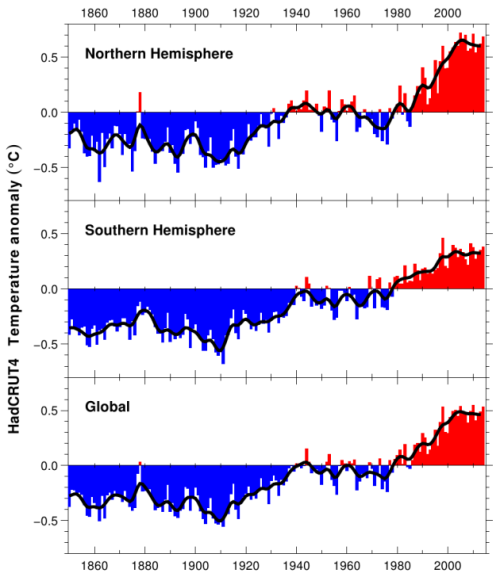

Climate variability since 1900

From HadCRUT4:

The IPCC does not have a convincing explanation for:

- warming from 1910-1940

- cooling from 1940-1975

- hiatus from 1998 to present

The IPCC purports to have a highly confident explanation for the warming since 1950, but it was only during the period 1976-2000 when the global surface temperatures actually increased.

The absence of convincing attribution of periods other than 1976-present to anthropogenic forcing leaves natural climate variability as the cause – some combination of solar (including solar indirect effects), uncertain volcanic forcing, natural internal (intrinsic variability) and possible unknown unknowns.

This point is not an argument for any particular attribution level. As is well known, using an argument of total ignorance to assume that the choice between two arbitrary alternatives must be 50/50 is a fallacy.

Attribution for any particular period follows exactly the same methodology as any other. What IPCC chooses to highlight is of course up to the authors, but there is nothing preventing an assessment of any of these periods. In general, the shorter the time period, the greater potential for internal variability, or (equivalently) the larger the forced signal needs to be in order to be detected. For instance, Pinatubo was a big rapid signal so that was detectable even in just a few years of data.

I gave a basic attribution for the 1910-1940 period above. The 1940-1975 average trend in the CMIP5 ensemble is -0.01ºC/decade (range -0.2 to 0.1ºC/decade), compared to -0.003 to -0.03ºC/decade in the observations, and is therefore a reasonable fit. The GHG-driven trends for this period are ~0.1ºC/decade, implying that there is a roughly opposite forcing coming from aerosols and volcanoes in the ensemble. The situation post-1998 is a little different because of the CMIP5 design, and ongoing reevaluations of recent forcings (Schmidt et al, 2014; Huber and Knutti, 2014). Better information about ocean heat content is also available to help there, but this is still a work in progress and is a great example of why it is harder to attribute changes over short time periods.

In the GCMs, the importance of internal variability to the trend decreases as the length of the trend period increases. For 30-year trends, internal variations can have an impact of ±0.12ºC/decade or so; for 60-year trends, closer to ±0.08ºC/decade. For an expected anthropogenic trend of around 0.2ºC/decade, the signal will be clearer over the longer term. Thus cutting the analysis down to ever-shorter periods increases the challenges, and one can end up simply cherry-picking the noise instead of seeing the signal.
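The way the trend uncertainty from internal variability shrinks with record length can be illustrated with synthetic noise. The sketch below assumes, purely for illustration, that internal variability is an AR(1) process with hypothetical parameters (phi = 0.6, sigma = 0.1ºC per year) and compares the spread of 30- and 60-year trends against a 0.2ºC/decade signal. AR(1) noise with these parameters has little multidecadal power, so its trend spread shrinks faster with length than the GCM figures quoted above; the direction of the effect is the same.

```python
import random
import statistics

random.seed(0)  # reproducible synthetic noise

def ols_slope(values):
    """OLS slope of values against their index (units per time step)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    return sxy / sxx

def ar1_series(n, phi, sigma):
    """Hypothetical AR(1) internal variability: x[t] = phi * x[t-1] + noise.
    Starts from zero rather than the stationary distribution (a simplification)."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + random.gauss(0, sigma)
        out.append(x)
    return out

def trend_spread(length, phi=0.6, sigma=0.1, trials=2000):
    """Standard deviation (ºC/decade) of trends fitted to pure-noise annual series."""
    return statistics.stdev([10 * ols_slope(ar1_series(length, phi, sigma))
                             for _ in range(trials)])

signal = 0.2  # ºC/decade, the expected anthropogenic trend mentioned in the text
spreads = {length: trend_spread(length) for length in (30, 60)}
for length, spread in spreads.items():
    print(f"{length}-year trends: noise sd {spread:.3f} ºC/decade, "
          f"signal/noise {signal / spread:.1f}")
```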

> A key issue in attribution studies is to provide an answer to the question: When did anthropogenic global warming begin? As per the IPCC’s own analyses, significant warming didn’t begin until 1950. Just the Facts has a good post on this When did anthropogenic global warming begin?

I disagree as to whether this is a “key” issue for attribution studies, but as to when anthropogenic warming began, the answer is actually quite simple – when we started altering the atmosphere and land surface at climatically relevant scales. For the CO2 increase from deforestation this goes back millennia, for fossil fuel CO2, since the invention of the steam engine at least. In both cases there was a big uptick in the 18th Century. Perhaps that isn’t what Judith is getting at though. If she means when was it easily detectable, I discussed that above and the answer is sometime in the early 1980s.

> The temperature record since 1900 is often characterized as a staircase, with periods of warming sequentially followed by periods of stasis/cooling. The stadium wave and Chen and Tung papers, among others, are consistent with the idea that the multidecadal oscillations, when superimposed on an overall warming trend, can account for the overall staircase pattern.

Nobody has any problems with the idea that multi-decadal internal variability might be important. The problem with many studies on this topic is the assumption that all multi-decadal variability is internal. This is very much an open question.

> Let’s consider the 21st century hiatus. The continued forcing from CO2 over this period is substantial, not to mention ‘warming in the pipeline’ from late 20th century increase in CO2. To counter the expected warming from current forcing and the pipeline requires natural variability to effectively be of the same magnitude as the anthropogenic forcing. This is the rationale that Tung used to justify his 50-50 attribution (see also Tung and Zhou). The natural variability contribution may not be solely due to internal/intrinsic variability, and there is much speculation related to solar activity. There are also arguments related to aerosol forcing, which I personally find unconvincing (the topic of a future post).

Shorter time-periods are noisier. There are more possible influences of an appropriate magnitude and, for the recent period, continued (and very frustrating) uncertainties in aerosol effects. This has very little to do with the attribution for longer time periods though (since the change in forcing is much larger and the impact of internal variability smaller).

> The IPCC notes overall warming since 1880. In particular, the period 1910-1940 is a period of warming that is comparable in duration and magnitude to the warming 1976-2000. Any anthropogenic forcing of that warming is very small (see Figure 10.1 above). The timing of the early 20th century warming is consistent with the AMO/PDO (e.g. the stadium wave; also noted by Tung and Zhou). The big unanswered question is: Why is the period 1940-1970 significantly warmer than say 1880-1910? Is it the sun? Is it a longer period ocean oscillation? Could the same processes causing the early 20th century warming be contributing to the late 20th century warming?

If we were just looking at 30 year periods in isolation, it’s inevitable that there will be these ambiguities because data quality degrades quickly back in time. But that is exactly why IPCC looks at longer periods.

> Not only don’t we know the answer to these questions, but no one even seems to be asking them!

This is simply not true.

> Attribution
>
> I am arguing that climate models are not fit for the purpose of detection and attribution of climate change on decadal to multidecadal timescales. Figure 10.1 speaks for itself in this regard (see figure 11.25 for a zoom in on the recent hiatus). By ‘fit for purpose’, I am prepared to settle for getting an answer that falls in the right tercile.

Given the results above it would require a huge source of error to move the bulk of that probability anywhere else other than the right tercile.

> The main relevant deficiencies of climate models are:
>
> - climate sensitivity that appears to be too high, probably associated with problems in the fast thermodynamic feedbacks (water vapor, lapse rate, clouds)
>
> - failure to simulate the correct network of multidecadal oscillations and their correct phasing
>
> - substantial uncertainties in aerosol indirect effects
>
> - unknown and uncertain solar indirect effects

The sensitivity argument is irrelevant (given that it isn’t zero of course). Simulation of the exact phasing of multi-decadal internal oscillations in a free-running GCM is impossible so that is a tough bar to reach! There are indeed uncertainties in aerosol forcing (not just the indirect effects) and, especially in the earlier part of the 20th Century, uncertainties in solar trends and impacts. Indeed, there is even uncertainty in volcanic forcing. However, none of these issues really affect the attribution argument because a) differences in magnitude of forcing over time are assessed by way of the scales in the attribution process, and b) errors in the spatial pattern will end up in the residuals, which are not large enough to change the overall assessment.

Nonetheless, it is worth thinking about what effect plausible variations in the aerosol or solar forcing could have. Given that we are talking about the net anthropogenic effect, the playing off of negative aerosol forcing and climate sensitivity within bounds actually has very little effect on the attribution, so that isn’t particularly relevant. A much bigger role for solar would have an impact, but the trend would need to be about 5 times stronger over the relevant period to change the IPCC statement, and I am not aware of any evidence to support this (and much that doesn’t).

> So, how to sort this out and do a more realistic job of detecting climate change and attributing it to natural variability versus anthropogenic forcing? Observationally based methods and simple models have been underutilized in this regard. Of great importance is to consider uncertainties in external forcing in context of attribution uncertainties.

It is inconsistent to talk in one breath about the importance of aerosol indirect effects and solar indirect effects and then state that ‘simple models’ are going to do the trick. Both of these issues relate to microphysical effects and atmospheric chemistry – neither of which are accounted for in simple models.

> The logic of reasoning about climate uncertainty is not at all straightforward, as discussed in my paper Reasoning about climate uncertainty.
>
> So, am I ‘making things up’? Seems to me that I am applying straightforward logic. Which IMO has been disturbingly absent in attribution arguments, that use climate models that aren’t fit for purpose, use circular reasoning in detection, fail to assess the impact of forcing uncertainties on the attribution, and are heavily spiced by expert judgment and subjective downweighting.

My reading of the evidence suggests clearly that the IPCC conclusions are an accurate assessment of the issue. I have tried to follow the proposed logic of Judith’s points here, but unfortunately each one of these arguments is either based on a misunderstanding, an unfamiliarity with what is actually being done or is a red herring associated with shorter-term variability. If Judith is interested in why her arguments are not convincing to others, perhaps this can give her some clues.

References

- M.E. Mann, B.A. Steinman, and S.K. Miller, "On forced temperature changes, internal variability, and the AMO", Geophysical Research Letters, vol. 41, pp. 3211-3219, 2014. http://dx.doi.org/10.1002/2014GL059233

- K. Tung, and J. Zhou, "Using data to attribute episodes of warming and cooling in instrumental records", Proceedings of the National Academy of Sciences, vol. 110, pp. 2058-2063, 2013. http://dx.doi.org/10.1073/pnas.1212471110

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

- M. Huber, and R. Knutti, "Natural variability, radiative forcing and climate response in the recent hiatus reconciled", Nature Geoscience, vol. 7, pp. 651-656, 2014. http://dx.doi.org/10.1038/ngeo2228

45, Fred Moolten: . . . His description should be read for details, but the essence of the evidence lies in the observation that ocean heat uptake (OHC) has been increasing during the post-1950 warming.

With respect, OHC is not that well characterized for the post-1950 period. Part of the surface warming of the period 1978-1998 was due to decreased cloud cover. Average cloud cover has increased since then. That makes it harder to attribute the warming to CO2 increase, unless there is a known mechanism by which the anthropogenic CO2 produced the changes in cloud cover.

51, Matthew R Marler says:

28 Aug 2014 at 5:30 PM

. . . with respect, OHC is not that well characterized for the post-1950 period. . . .

Held’s argument really only requires OHC to not be net negative over the period. If it is net negative then explaining the observed rate of sea level rise is problematic.

Fred-

Keep in mind the attribution effort is also concerned with separating out different forced signals too.

I agree the OHC data are incompatible with a predominantly internal contribution (I’m sure Judith would argue those data are too uncertain, though I don’t think anyone has argued that OHC decreased over the last half-century, at least not in the ocean basins/depths that communicate with the atmosphere on the relevant timescales).

Even putting aside the OHC data and fingerprinting, there is absolutely no evidence in model simulations (or in prevailing reconstructions of the Holocene) that an unforced climate would exhibit half-century timescale global temperature swings of order ~1 C. I don’t see a good theoretical reason why this should be the case, but since Judith lives on “planet observations” it should give pause for thought. Some work by Clara Deser et al. shows that for some diagnostics (e.g., regional sea level pressure 50-yr trends, wintertime western U.S. temperature, etc.) the sensitivity of trends in their decadal statistics to initial conditions can compete with the sensitivity of those same statistics in response to increases in GHGs.

But right now no credible coupled atmosphere-ocean-ice mechanism exists that could allow you to stretch that argument to global temperatures, let alone one compatible with the myriad observables that we do have. I’m sure this is true for Arctic sea ice extent trends as well, even though Gavin said he was fine with Judith’s quoted paragraph pertaining to sea ice. What’s more, no big missing link exists in paleoclimate that demands we search for such a process.

Finally, the amplitude of internal variability isn’t independent of climate sensitivity, so it seems that by positing a mysterious source of high-amplitude, low-frequency variability and a low climate sensitivity, Judith wants to have her cake and eat it at the same time.

Essentially what we are dealing with is a proposed paradigm framed around uncertainty which, by its sloppy construction, is immovable even with the addition of new data. It hasn’t been a very compelling (or necessary) prior. It also hasn’t been well argued that there is a need to replace the underlying framework we have of how climate changes on decadal timescales. Some of the other issues, like how we do a precise fractional attribution of Atlantic vs. Pacific vs. external vs. observation error, etc. during the recent ‘hiatus’, just don’t relate.

@Matthew Marler (51) – OHC since 1950, while subject to some uncertainty, is sufficiently characterized to know it’s strongly positive, which excludes a more than minor role for internal contributions to the warming. The data come from a multitude of sources that include not only temperature measurements but also commensurate steric sea level rise. The exact magnitude of the OHC increase may not be certain, but its direction is certain. The estimates of OHC change since 1975 are even more certain, and it is the interval since then when the warming occurred.

We don’t have OHC data before 1950, and so it’s possible internal variability played a more important warming role during that interval.

Note how M.Marler makes a pure assertion with absolutely nothing to back it up. He conveniently ignores that decreased cloud cover could be a result of the warming, executing a cause/effect bait-and-switch on us.

‘Using a new measure of coupling strength, this update shows that these climate modes have recently synchronized, with synchronization peaking in the year 2001/02. This synchronization has been followed by an increase in coupling. This suggests that the climate system may well have shifted again, with a consequent break in the global mean temperature trend from the post 1976/77 warming to a new period (indeterminate length) of roughly constant global mean temperature.’

http://onlinelibrary.wiley.com/doi/10.1029/2008GL037022/abstract

There are many ways that can be and have been used to calculate a maximum residual rate of warming on the order of 0.1 degree C/decade. If you divide the 1950-2010 increase of some 0.65 degrees by 6 decades, you get a little over 0.1 degree C/decade. Even more rationally, you could divide the increase between 1944 and 1998 by the elapsed time, assuming the cooler and warmer regimes net out, and you get 0.07 degrees C/decade.
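The division above can be checked in a few lines. This is a minimal sketch: `decadal_rate` is a hypothetical helper, the 0.65 °C figure is the commenter’s, and the second calculation assumes the interval 1944-1998 (the span of the two regimes discussed later in the thread) and simply back-calculates the increase implied by 0.07 °C/decade, since no increase is quoted for that interval.

```python
def decadal_rate(delta_c, start_year, end_year):
    """Average warming rate in deg C per decade (hypothetical helper)."""
    decades = (end_year - start_year) / 10.0
    return delta_c / decades


# Commenter's figure: ~0.65 deg C of warming over 1950-2010 (6 decades).
rate_1950_2010 = decadal_rate(0.65, 1950, 2010)

# Assumed interval 1944-1998: total rise implied by the quoted 0.07 deg C/decade.
implied_rise_1944_1998 = 0.07 * (1998 - 1944) / 10.0

print(f"1950-2010: {rate_1950_2010:.3f} deg C/decade")
print(f"1944-1998 rise implied by 0.07 deg C/decade: {implied_rise_1944_1998:.3f} deg C")
```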

Here’s one using models – http://www.pnas.org/content/106/38/16120/F3.expansion.html

Here’s one from realclimate – https://www.realclimate.org/index.php/archives/2009/07/warminginterrupted-much-ado-about-natural-variability/

Here’s one subtracting ENSO – ://watertechbyrie.files.wordpress.com/2014/06/ensosubtractedfromtemperaturetrend.gif

And of course there is Tung and Zhou.

The question has been and will be asked, especially if the ‘hiatus’ persists for another decade or two (as seems more likely than not): just how serious is this? The question is moot. Climate is a kaleidoscope: shake it up and a new and unpredictable pattern spontaneously emerges, bearing an ineluctable risk of climate instability. Climate is wild, as Wally has said.

Which, if you think about it, means that the late 20th century cooler and warmer regimes are overwhelmingly unlikely to net out. Indeed, a long-term ENSO proxy based on Law Dome ice core salt content suggests a 1000-year peak in El Niño frequency and intensity in the 20th century.

http://watertechbyrie.files.wordpress.com/2014/06/vance2012-antartica-law-dome-ice-core-salt-content.png

http://journals.ametsoc.org/doi/abs/10.1175/JCLI-D-12-00003.1

However – let’s put that in the imponderables basket for the time being.

The answer to the moot question – btw – is 12 phenomenal ways to save the world – http://watertechbyrie.com/

55 Web Hub Telescope: Note how M.Marler makes a pure assertion with absolutely nothing to back it up. He conveniently ignores that decreased cloud cover could be a result of the warming, executing a cause/effect bait-and-switch on us.

I am happy to agree with WebHubTelescope that the changes in observed cloud cover may be a result of increases in CO2. They contribute to the difficulties in detecting/estimating the effects of CO2.

My “bait and switch” was not intended to substitute my belief for another, but to introduce the confounding factor of cloud cover. Cloud cover and other water vapor effects for the future are completely unpredictable.

‘Climate forcing results in an imbalance in the TOA radiation budget that has direct implications for global climate, but the large natural variability in the Earth’s radiation budget due to fluctuations in atmospheric and ocean dynamics complicates this picture.’

http://meteora.ucsd.edu/~jnorris/reprints/Loeb_et_al_ISSI_Surv_Geophys_2012.pdf

The data shows quite clearly that changes in cloud radiative forcing in the satellite era exceeded greenhouse gas forcing.

e.g. – http://watertechbyrie.files.wordpress.com/2014/06/cloud_palleandlaken2013_zps3c92a9fc.png

It would be an odd sort of feedback that exceeded the forcing. There may be no equivalence at all. Zhu et al. (2007) found that cloud formation for ENSO and for global warming have different characteristics and are the result of different physical mechanisms. The change in low cloud cover in the 1997-1998 El Niño came mainly as a decrease in optically thick stratocumulus and stratus cloud. The decrease is negatively correlated to local SST anomalies, especially in the eastern tropical Pacific, and is associated with a change in convective activity. ‘During the 1997–1998 El Niño, observations indicate that the SST increase in the eastern tropical Pacific enhances the atmospheric convection, which shifts the upward motion to further south and breaks down low stratiform clouds, leading to a decrease in low cloud amount in this region. Taking into account the obscuring effects of high cloud, it was found that thick low clouds decreased by more than 20% in the eastern tropical Pacific… In contrast, most increase in low cloud amount due to doubled CO2 simulated by the NCAR and GFDL models occurs in the subtropical subsidence regimes associated with a strong atmospheric stability.’

At any rate it seems fairly clear that these changes are associated with changes in ocean and atmosphere circulation.

e.g. http://watertechbyrie.files.wordpress.com/2014/06/loeb2011-fig1.png

Which you can find in the Loeb et al 2012 paper.

And that OHC follows these changes in net TOA radiant flux quite closely.

e.g. https://watertechbyrie.files.wordpress.com/2014/06/wong2006figure7.gif

Which can be found in http://www.image.ucar.edu/idag/Papers/Wong_ERBEreanalysis.pdf

But are the oceans warming currently? It depends on the Argo ‘climatology’.

http://watertechbyrie.files.wordpress.com/2014/06/argograce_leuliette2012_zps9386d419.png

A steric sea level rise of 0.2 ± 0.8 mm/year?

Which can be found at – http://www.tos.org/oceanography/archive/24-2_leuliette.html

Which has the merit of being consistent with CERES net.

http://watertechbyrie.files.wordpress.com/2014/06/ceres_ebaf-toa_ed2-8_anom_toa_net_flux-all-sky_march-2000toapril-2014.png

And are sea levels rising? Depends on whether you believe Argo or Jason.

http://watertechbyrie.files.wordpress.com/2014/06/argosalinity_zpscb75296c-e1409295709715.jpg

Is it all as simple as the narratives of the climate wars suggest?

Fred Moolten: @Matthew Marler (51) – OHC since 1950, while subject to some uncertainty, is sufficiently characterized to know it’s strongly positive, which excludes a more than minor role for internal contributions to the warming.

I don’t think that is sufficient for a strong attribution concerning CO2, but you have persuaded me that I need to reread Isaac Held’s post that you referred to.

#48 Ray Ladbury “even if you start out with the wrong model, empirical evidence will eventually correct you”.

Unless you choose to ignore it. (It’s been nearly 20 years).

M.Marler, you intentionally call the cloud cover a “confounding factor”.

Cloud cover is likely not a forcing, and thus not a cause of warming, but more likely a positive warming feedback.

Dessler, Andrew E. “Cloud variations and the Earth’s energy budget.” Geophysical Research Letters 38.19 (2011).

Then you say that “water vapor effects for the future are completely unpredictable”.

Great that you learned from the Curry School of Uncertainty talking points.

Positive water vapor feedback is known to contribute largely to the +33C warming that prevents the earth from having a 255 K average temperature. What makes you think this positive feedback will take a U-turn and turn into a negative feedback with further warming?
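The 255 K figure is the effective emission temperature of a planet in radiative balance with the sunlight it absorbs. A minimal sketch, assuming round values for the solar constant (1361 W/m²), planetary albedo (0.30) and mean surface temperature (about 288 K); none of these numbers come from the comment itself:

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def effective_temperature(solar_constant=1361.0, albedo=0.30):
    """Temperature (K) at which a blackbody emits the absorbed sunlight.

    Absorbed flux is averaged over the whole sphere: S * (1 - albedo) / 4.
    """
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


t_eff = effective_temperature()
t_surface = 288.0  # assumed global mean surface temperature, K
greenhouse_warming = t_surface - t_eff

print(f"Effective temperature: {t_eff:.1f} K")
print(f"Greenhouse warming: {greenhouse_warming:.1f} K")
```

This only gives the total greenhouse effect; splitting it between water vapor, clouds and CO2 requires radiative transfer calculations.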

BTW, the “pea under the thimble” reference has been duly noted :)

[Response: At least that is a constructive influence on something! – gavin]

Congrats to verytallguy on getting a substantive response:

This is a better insight into Judith’s thinking and does reveal one thing I was confused about in her argument, but further confuses me on others. First, she appears to confound the point at which detection of a change becomes statistically clear (in this case around 1980) and when that change started (obviously well before that). It therefore makes no sense to only attribute changes from after the point of detection since you’ll miss the first 2 sigma of the change… Similarly, we can still calculate the forced component of a change even if it isn’t the only thing going on, and indeed, before it is statistically detectable in the global mean temperature anomaly.
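The difference between detection and onset can be illustrated with a toy calculation. This is a minimal sketch under deliberately simplified assumptions: a forced signal that grows linearly from a hypothetical start year, noise with a fixed standard deviation, and “detection” defined as the signal first exceeding two noise standard deviations. The numbers are illustrative only and are not meant to reproduce the actual detection date.

```python
def detection_year(start_year, rate_per_year, noise_sd, n_sigma=2.0):
    """Year at which a linear signal first exceeds n_sigma * noise_sd (toy model)."""
    return start_year + n_sigma * noise_sd / rate_per_year


def signal_at(year, start_year, rate_per_year):
    """Size of the linear forced signal at a given year (zero before it starts)."""
    return max(0.0, rate_per_year * (year - start_year))


# Hypothetical values: signal starts in 1950, grows 0.01 deg C/yr, noise sd 0.1 deg C.
start, rate, sd = 1950, 0.01, 0.1
t_detect = detection_year(start, rate, sd)
missed = signal_at(t_detect, start, rate)  # forced change accumulated before detection

print(f"Detected in {t_detect:.0f}; change already accumulated: {missed:.2f} deg C")
```

In this toy case the first 0.2 °C of the forced change, i.e. the first 2 sigma, occurs before detection, and that is exactly the part one would miss by only attributing changes after the detection point.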

The point that is further confusing is the contradiction between her insistence (without any quantitative support) that there should be a 50-50 split for the period since 1950, while at the same time insisting that for 1910-1940 it has to be either wholly internal variability or natural forcing (again without any quantitative support). If she accepts that attribution is amenable to quantitative analysis using some kind of model (doesn’t have to be a GCM), I don’t get why she doesn’t accept that the numbers are going to be different for different time periods and have varying degrees of uncertainty depending on how good the forcing data is and what other factors can be brought in. The comment above discussing the OHC data in this context is exactly right.

This whole conversation came about because I queried what the basis of Judith’s quantitative assessment was though. I am still waiting for that.

Judith seems to be saying to me that: looking at the 60 year period 1950-2010 is not enough to determine the magnitude of probability of AC (anthropogenic change). The fact that the longer record has variations not explained by the models puts into question the veracity of depending on the models and their assumptions.

[Response: “Enough” for what? There will always be unexplained variation at some point in the record, does that mean you can never come to any conclusions where you do have data? – gavin]

It is understandable, given the rise in temperature over such a long period, to say “something” is going on. There are models that can attribute that change largely to CO2. However, for that probability assessment to make sense, two things are required:

1) proof the models really do represent reality enough to model that period

I and others would posit this is far from known or provable since as you point out most of the data is unreliable in prior time periods and from what I’ve read the models do not coincide with great precision to the data we have anyway.

[Response: ‘Proof’ is the wrong word. What you mean is evidence that the models have skill. And yes, there is such evidence – in the predicted response to volcanic forcing, the ozone hole, orbital variations, the sun, paleo-lake outbursts, the response to ENSO etc. that all show models matching the observations skillfully (which is not to say they match perfectly). – gavin]

2) a longer-period analysis showing that this period is extraordinary, which may exclude prior factors that affected things in the past

This is also hard because of data problems, and it is also not at all clear, because we are pretty sure that over periods of thousands of years temperatures were higher than even now. The low quality of earlier data makes it impossible to compare the specific shape of the warming, to see whether this warming is faster or has a pattern detectably different from past temperature changes.

[Response: Attribution has nothing to do with whether something is unprecedented. You don’t have to rob more banks than anyone ever to be found guilty of robbing the one bank you did actually rob. – gavin]

This is not an unusual situation. It is unfortunate that the data quality in the past is too low, but it is what it is. Therefore the prudent thing is to say we don’t know. You have a theory that this latest heating is extraordinary. I believe that it probably is, but I’m not 100% convinced. I am 100% certain of the physics of CO2 as a GHG, and therefore, like everybody, I puzzle over how exactly that extra energy manifests itself in the environment. It is certainly reasonable that it results in higher temperatures, but because of the complexity of the system, the magnitude of things like ocean flows and heat fluctuations over time, and how clouds are affected, it makes sense to take a very basic approach to this science rather than the extremely high-level approach that is being taken currently.

For the last 30 years or so, the community of scientists has taken the position that overall temperatures must rise, and has focused on estimating the amount.

[Response: No. The temperatures have been observed to rise, and we have over a hundred years of science quantifying the potential causes. -gavin]

That is too hard a problem to solve reliably. It would be more constructive to concentrate on showing a causal link and proving specific theories of climate science, to establish some basic knowns. For instance, can we build a satellite that will give us precise, provable evidence that the energy from CO2 is being delivered to the surface?

[Response: You misunderstand what is going on – however, it would be great if we could have satellites that measured the top of atmosphere energy budget directly, but currently they are not accurate enough and so we have to rely on the ocean heat content changes instead. However, they do show clearly that the planet is gaining energy. – gavin]

The current basis for this seems to be more indirect and not 100% convincing. Doing this would be immensely valuable to establish the kind of proof that I see in a classic Newtonian physics class. The second proof concerns the largest feedback expected from the models: the water vapor feedback. We need proof that water vapor is indeed increasing, and in line with what a simple (non-model-driven) analysis will show.
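One such simple, non-model analysis is the Clausius–Clapeyron scaling: at roughly constant relative humidity, the water vapor content of the air rises by about 6–7% per kelvin of warming. A minimal sketch, assuming a Magnus-type approximation for saturation vapor pressure with Bolton’s (1980) constants and constant relative humidity:

```python
import math


def saturation_vapor_pressure_hpa(t_celsius):
    """Magnus-type approximation to saturation vapor pressure over water (hPa).

    Constants follow Bolton (1980); adequate for roughly -30 to +35 deg C.
    """
    return 6.112 * math.exp(17.67 * t_celsius / (t_celsius + 243.5))


def vapor_increase_per_kelvin(t_celsius):
    """Fractional rise in saturation vapor pressure for 1 K of warming.

    At fixed relative humidity, actual vapor pressure scales the same way.
    """
    return (saturation_vapor_pressure_hpa(t_celsius + 1.0)
            / saturation_vapor_pressure_hpa(t_celsius)) - 1.0


rate_at_15c = vapor_increase_per_kelvin(15.0)  # near the global mean surface temperature
print(f"Water vapor increase at 15 C: {100 * rate_at_15c:.1f}% per K")
```

The fractional rate is larger in colder air, which is why the scaling is usually quoted as a range rather than a single number.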

[Response: This has already been shown for short, medium and long term climate changes. Chapter 2 in AR5, pages 205-208. – gavin]

Such experiments and measurements will be hard and will require billions of dollars, similar for instance to the Argo float project. However, doing so will provide resources to leverage as well as critical data to assess the real effects. It will also lead to irrefutable proofs that will be impossible to debate.

[Response: That is a little optimistic. People can always be found to debate anything. – gavin]

Then we have a “science” in the sense that someone can show theory, measurements and calculated results, and then see the actual results. I realize the biggest problem is the scale and difficulty of doing these things, but it seems to me that absent this we are in an infinite debate until enough data can confirm or refute the current theory. I posit that, absent such data, the debate can never end and that it is possible to argue either side for the next 50 years.

[Response: It is *always* possible to argue if you don’t pay any attention to the actual data, observations or models. And that goes double for topics where people are heavily invested in the outcome. Scientists in general though learn to recognise what arguments are constructive and which ones are simply reactionary. – gavin]

[edit – not here].

Gavin @63,

glad to have been of help! I have asked a couple more questions there, we’ll see if Judith comes back.

I also have a question to help my understanding of the IPCC attribution which maybe you can help with.

My summary of the IPCC attribution process is:

1) Models produce a good simulation of natural variability. AR5 section 9.5.3 concludes “Nevertheless, the lines of evidence above suggest with high confidence that models reproduce global and NH temperature variability on a wide range of time scales.”

2) Model spread of natural variability in the 1950-2010 timeframe is roughly zero ± 0.1 °C

3) Therefore the rest must be anthro

4) To allow for “structural uncertainties” (is this effectively unknown unknowns?), the spread of natural variability is increased by an arbitrary amount determined by the judgment of the panel; this actually makes the attribution conservative compared to the direct model output.

Is that about right? If not, I think I may be confused about why the error bars on ANT are much smaller than those on OA and GHG separately.

[Response: Yes. So the assessed likelihood is not as tight as my first figure in the top post. The ANT vs. GHG+OA issue is slightly more subtle though. The issue there is that there is not as clean a distinction between the fingerprints for GHG and OA in the surface temperature fields as one might like. Therefore there is more flexibility in the exact proportions when you do the attribution with OA and GHG independently as when you lump them together in contrast to NAT factors. – gavin]
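The logic of the four-step summary above (not the actual optimal-fingerprinting regression used in AR5) can be caricatured in a few lines. This is a deliberately simplified sketch: it assumes the natural contribution is normally distributed with mean zero, takes ANT as observed minus natural, uses the ~0.65 °C 1950-2010 warming quoted elsewhere in this thread, and models the panel’s “structural uncertainty” allowance as a hypothetical doubling of the natural spread. It ignores observational error and uncertainty in the scaling factors.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_ant_fraction_above(threshold, observed, nat_sd, nat_mean=0.0):
    """P(ANT / observed > threshold), with NAT ~ Normal(nat_mean, nat_sd) and ANT = observed - NAT.

    For positive observed warming: ANT / observed > threshold  <=>  NAT < observed * (1 - threshold).
    """
    cutoff = observed * (1.0 - threshold)
    return normal_cdf((cutoff - nat_mean) / nat_sd)


observed = 0.65  # deg C, 1950-2010 (figure quoted in this thread)
p_model = prob_ant_fraction_above(0.5, observed, nat_sd=0.1)     # spread from models
p_inflated = prob_ant_fraction_above(0.5, observed, nat_sd=0.2)  # hypothetical 2x inflation

print(f"P(>50% anthropogenic), model spread:    {p_model:.4f}")
print(f"P(>50% anthropogenic), inflated spread: {p_inflated:.4f}")
```

Widening the natural spread lowers the probability, which is the sense in which the inflation makes the assessed statement more conservative than the raw model output.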

So, a couple of questions:

1) How good is the observational data that allows a comparison of observed vs. model natural variability? Presumably we have very limited thermometer data on centennial variations, so it must be an assessment relying primarily on proxies? Should the uncertainties in proxy data add significantly to uncertainties in attribution via this analysis?

[Response: No. This is all done with instrumental data – but it can include more than just the weather stations. – gavin]

2) Even if models produce an overall spectrum equivalent to natural variability, how effective are they at reproducing a specific realisation (i.e. the one since 1950)? In other words, even if in general models produce a reasonable statistical representation of natural variability, could they be a long way out for the specific case of 1950-2010?

[Response: That is always a possibility, but you can try and use the simulated distribution to infer how likely that is (not very). – gavin]

Regarding Curry’s response reprinted in comment 63:

“I think both 0% and 100% are extremely unlikely.”

The 100% part of that statement is equivalent to saying that it is extremely unlikely that the world could have cooled since 1950 if there hadn’t been any human contribution. Alternatively, that it is extremely likely that it would still have warmed.

How can she be so sure?


Um, that is a salinity graph!

[Response: Indeed. Argo doesn’t measure sea level anyway. Just as an aside, impact of 1mm/year SLR of freshwater on 0-1900m salinity is 0.00002 psu/year, or for 10 years, 0.0002 psu – which is not detectable in that record. – gavin]
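The dilution estimate in the response is easy to reproduce. A minimal sketch, assuming a mean salinity of 35 psu, conservation of salt, and a freshwater layer simply mixed into a 1900 m column (density differences ignored):

```python
def dilution_psu(freshwater_m, column_depth_m, salinity_psu=35.0):
    """Drop in mean salinity when freshwater of thickness h is mixed into a column of depth H.

    Salt is conserved: S_new = S * H / (H + h), so the drop is S * h / (H + h).
    """
    return salinity_psu * freshwater_m / (column_depth_m + freshwater_m)


per_year = dilution_psu(0.001, 1900.0)    # 1 mm of freshwater in one year
per_decade = dilution_psu(0.010, 1900.0)  # 10 mm accumulated over ten years

print(f"One year: {per_year:.6f} psu; ten years: {per_decade:.5f} psu")
```

Both results match, in order of magnitude, the 0.00002 and 0.0002 psu figures in the response.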

Further to my comment (above), von Schuckmann et al., 2014 (doi:10.5194/os-10-547-2014) says:

That sure doesn’t support the notion that JASON and ARGO are in any kind of disagreement–well (full disclosure), except for the TAA region. (See paper for discussion.)

WebHubTelescope: M.Marler, you intentionally call the cloud cover a “confounding factor”.

Cloud cover is likely not a forcing, and thus not a cause of warming, but more likely a positive warming feedback.

I have read more than one paper on the topic, and my assessment is that cloud cover and other water vapor effects are widely recognized as among the biggest unknowns going forward. Science magazine has published reviews and so have some other peer-reviewed journals.

The cloud cover changes from the early 70s to the most recent measurements are “confounding factors” because the changes occurred concomitantly with other changes, which makes it hard for empirical model building techniques like the multiple regression you use for your csalt model to provide reasonably accurate, unbiased estimates of their effect sizes.
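The confounding point is the familiar multicollinearity problem in regression: when two predictors trend together, their individual coefficients are poorly determined. A minimal sketch, using purely synthetic, hypothetical series (not real forcing or cloud data) and the two-predictor variance inflation factor, VIF = 1 / (1 - r^2), where r is the correlation between the predictors:

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """Variance inflation factor for either coefficient in a two-predictor regression."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)


# Hypothetical series over 40 years: both trend, one with a small wiggle.
years = range(40)
forcing_like = [0.02 * t for t in years]
cloud_like = [-0.015 * t + 0.05 * math.sin(t) for t in years]

vif = vif_two_predictors(forcing_like, cloud_like)
print(f"r = {pearson_r(forcing_like, cloud_like):.3f}, VIF = {vif:.1f}")
```

A VIF well above 1 means the standard error of each coefficient is inflated by roughly its square root, which is why separating the two effects is hard even when the fit as a whole is good.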

gavin wrote:

“This whole conversation came about because I queried what the basis of Judith’s quantitative assessment was though. I am still waiting for that.”

Wait no more.

As you and others have pointed out, Dr Curry’s claims are fundamentally at odds with themselves.

Curry argues for high internal variability, but low sensitivity.

Curry argues that attribution is highly uncertain, but claims that she thinks 100% ANT is “extremely unlikely”.

It’s all very reminiscent of:

http://rabett.blogspot.ca/2014/01/curry-vs-curry.html

There are no quantitative assessments that support contradictions.

“It is worth pointing out that there can be no assumption that natural contributions must be positive – indeed for any random time period of any length, one would expect natural contributions to be cooling half the time.”

The above statement is simply not true, unless it is scientifically established that the global temperature is pre-set at a specific level and does not change — upwards or downwards — over time based on natural contributions. Since the climate has demonstrably changed in the past many times, all assumed to be natural, then our random selections of time periods would most likely encompass periods of natural warming or natural cooling. Periods of no change would be rare in nature.

[Response: Read it again – that’s exactly what I said. – gavin]

Addition ==> This would be particularly true if we are talking of changes as small as 1 degree C or less.

61. WebHubTelescope: Positive water vapor feedback is known to contribute largely to the +33C warming that prevents the earth from a 255K average temperature. What makes you think this positive feedback will take a U-turn and turn into a negative feedback with further warming?

When we are formulating hypotheses and expectations about the future (“extrapolation”, forecasting, and such), which parts of the past record deserve the greatest weight? Global mean temperature since the last ice age has oscillated quasi-periodically between about +/- 1% of its mean; over that time, the mean has slightly declined, as have the maxima and minima of the excursions. That behavior is much more compatible with a negative feedback from the higher temperatures than with a positive feedback from the higher temperatures.

It is known that water evaporates faster at higher temperature than lower temperature, and it has been demonstrated through data analyses that cloud cover is slightly greater at higher temperatures than at lower temperatures; some regional effects are dramatic, such as the greater cloud cover throughout upstate New York in the daytime than the night time in summer.

So it is reasonable to entertain the hypothesis that, starting with the temperature distribution as it is now, future CO2 increases, if they produce temperature increases of any size, will produce increased cloud cover. Once you start to entertain the hypothesis seriously, you see that evidence against it is slim to none.

Ray Ladbury: “Frankly, I’ve never come away from anything Aunt Judy wrote with any new understanding. Her analysis is shallow and her logic flawed. You cannot draw scientific conclusions when you simply reject the best science available. Is it uncertain? Of course, but it is at least an edifice to build on. That is the thing about science: even if you start out with the wrong model, empirical evidence will eventually correct you, and you’ll know why you were wrong, as well. Judy’s biggest problem is that she’s afraid to be wrong, so she’s forever stuck in the limbo of “not even wrong”.”

This is probably why there don’t seem to be many peer-reviewed papers making the same arguments to actually perform attribution, either by Curry or others. I believe she had The Uncertainty Monster published by BAMS back in 2011 but that’s hardly an actual assessment of the climate applying her ideas. Who has tried to put Curry’s philosophy about attribution to work? The answer doesn’t seem to be “Judith Curry.”

Also, it’s instructive to look at her recent posts on this topic and then go back to the bad arguments she was making in 2012, as highlighted/addressed here. She’s had several years to try and absorb these mistakes after having them pointed out.

Matthew says:

So it is reasonable to entertain the hypothesis that, starting with the temperature distribution as it is now, future CO2 increases, if they produce temperature increases of any size, will produce increased cloud cover. Once you start to entertain the hypothesis seriously, you see that evidence against it is slim to none.

The hypothesis that there will be more clouds when there is more water vapor in the air isn’t particularly troubling to me.

Earlier, Matthew said:

I have read more than one paper on the topic, and my assessment is that cloud cover and other water vapor effects are widely recognized as among the biggest unknowns going forward. Science magazine has published reviews and so have some other peer-reviewed journals.

So what I think you’re trying to do here, Matthew, is to say that we’re going to have more clouds and that clouds are poorly quantified in the models, so we really don’t know that much.

I agree that the exact effect of clouds is hard to model, and that current models do a poor job with them.

I’ll add, however, that they’re fairly well constrained. Current literature has them somewhere between a very small negative feedback and a small positive feedback, with a most-likely value of a very small positive feedback.

There’s no doubt we’ll want to make these as accurate as possible in the future. “Where it rains” is vital to agriculture, as is “where it no longer rains”. However, there’s no evidence that clouds are a strong negative feedback that will keep things under control.

Let’s try to bring this back to basics. Let’s accept that observations and forcings are correct from 1950. The critical question is, first of all, whether the period since 1950 captures a multidecadal warm and a multidecadal cool regime such that the regimes cancel out.

What seems obvious is that regimes involve changes in the Pacific Ocean state and changes in the trajectory of global surface temperatures. These changes, abrupt shifts between quasi-equilibrium states, occurred in the mid-1940s, 1976/77 and 1998/2001. There is a cooler state from 1944 and a warmer state from 1976 to 1998. We may assume that the states cancel out over two full regimes, 1944 to 1998, and that the residual is entirely anthropogenic. The difference between 1944 and 1950 is substantial, 0.324 degrees C, and is ENSO-influenced, in the cool mode that was in full swing by 1950. The assumption (and it is just that) that natural regimes of warming and cooling cancel out from 1950 is not justified.

The assumption that these climate shifts between quasi-equilibrium states cancel out over less than centennial to millennial scales is astonishingly ill-founded.

There is nothing to suggest that the next shift – due perhaps in a decade to three – will follow the 20th century pattern to a warmer state.

These are the very poorly understood points – admittedly not all that clearly enunciated in this post – from Judy Curry.

[Response: All you are demonstrating is that over 6 years (1944 to 1950) internal variability can be a dominant factor. I doubt anyone disputes that. The difference between trends 1944 to 2010 versus 1950 to 2010 is much smaller (around 0.01-0.02ºC/decade depending on data set) and even more so relative to the overall trend of about 0.1ºC/decade. – gavin]

Rob Ellison (#58) claimed:

No, the figure linked does not even show changes in cloud radiative forcing. Nor does the Pallé and Laken paper it appears in. To the contrary, they are at pains to point out that “difficulties in measuring clouds means it is unclear how global cloud properties have changed over [the past 30 years]”, and suggest that “the [ISCCP] dataset contains considerable features of an artificial origin.” (The IPCC reaches similar conclusions, see AR5 WG1 ch. 2.5.6). You are adding to the confusion (cf. Kevin’s comments at #67–68).

Kip (#72)–

Whenever someone needs a sanity check on how internal variability fits into the overarching story of climate, it’s always good to turn back to this articulate comment by Andy Lacis.

‘Climate forcing results in an imbalance in the TOA radiation budget that has direct implications for global climate, but the large natural variability in the Earth’s radiation budget due to fluctuations in atmospheric and ocean dynamics complicates this picture.’ http://meteora.ucsd.edu/~jnorris/reprints/Loeb_et_al_ISSI_Surv_Geophys_2012.pdf

These large changes in TOA radiant flux are changes in cloud cover and utterly unrelated to CO2.

Specifically, anticorrelated with SST; see e.g. http://watertechbyrie.files.wordpress.com/2014/06/clementetal2009.png

75 David Miller: I’ll add, however, that they’re fairly well constrained. Current literature has them somewhere between a very small negative feedback and a small positive feedback, with a most-likely value of a very small positive feedback.

I do not think that they are well constrained. A small increase in cloud cover during summer daytimes can have an effect at least as strong as the doubling of CO2, and in the opposite direction. Whether that will actually happen remains to be seen, but all that is needed is a small feedback.

The other thing about water vapor, of course, is that more energy is required to vaporize water than to raise its temperature somewhat. If there is an increase in the rate of the hydrological cycle, even if it is small, then the temperature increase due to doubling of CO2 has been overestimated. The calculations I have seen that might “constrain” the size of this effect are based on equilibrium conditions, which I think are unlikely to be very accurate when applied to a system in which each surface region warms and cools every day, which has rainstorms over large regions, and which never achieves equilibrium.

I do not “believe” that overall feedback due to temperature increase and CO2 increase will be that strongly negative over the next decades, but I do think that the scientific case against it is full of liabilities and is inadequate.

I agree with most of what you wrote in that post, but I do not have a firm belief or expectation about the future consequences of future CO2 increases.
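For scale, the point about vaporizing water can be put in numbers. This short sketch uses approximate textbook constants (latent heat of vaporization of about 2.45 × 10⁶ J/kg near 20 °C, specific heat of liquid water of about 4184 J/(kg·K)); both are assumptions of the example. It only compares the two energies and says nothing about how the hydrological cycle actually responds to warming.

```python
# Approximate textbook constants (assumed for this illustration).
LATENT_HEAT_VAPORIZATION = 2.45e6   # J/kg, near 20 C
SPECIFIC_HEAT_WATER = 4184.0        # J/(kg K), liquid water

def equivalent_warming_kelvin(latent=LATENT_HEAT_VAPORIZATION,
                              cp=SPECIFIC_HEAT_WATER):
    """Temperature rise of liquid water that takes the same energy as
    evaporating the same mass of water."""
    return latent / cp

ratio = equivalent_warming_kelvin()
print(f"Evaporating 1 kg of water takes as much energy as warming it by ~{ratio:.0f} K")
```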

77 CM, from Pallé and Laken: Performing a correlation analysis between SST and ISCCP/MODIS total cloud amount we find that overall there is a negative correspondence between cloud at middle latitudes and a positive correspondence at low latitudes.

Other regions over the globe present localized significant positive and negative correlations [8], but this is the more extended region with consistent positive correlations

So my memory was at least incomplete on this point, and probably wrong. The cloud cover effects remain too complex for a simple summary.

All you are demonstrating is that over 6 years (1944 to 1950) internal variability can be a dominant factor. I doubt anyone disputes that. The difference between trends 1944 to 2010 versus 1950 to 2010 is much smaller (around 0.01-0.02ºC/decade depending on data set) and even more so relative to the overall trend of about 0.1ºC/decade. – gavin]

There are two separate issues. Between 1944 and 1998 there was a complete cooler regime and a complete warmer regime. Conveniently, this is when CO2 in the atmosphere started to take off. The total warming was some 0.4 °C. This is of course less than the 0.65 °C from 1950 in the diagram above. We assume that the warm and cool regimes in the late 20th century cancel out, and that the 20th-century pattern will be repeated. Questionable assumptions at best.

The trend of less than 0.1 °C/decade is broadly consistent with a number of results, some of which I have mentioned, including, it seems, Tung and Zhou.

So if total warming (assumed to be anthropogenic) is 0.4 °C thus far and the trend is 0.1 °C/decade, it will take some time to reach a 2 °C increase. Especially so if the current cool mode persists for another one to three decades and the next natural ‘climate shift’ is to yet cooler conditions.

[Response: It’s not linear. – gavin]
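The timeline above is a straight-line extrapolation, which is exactly what the response disputes. This is a minimal sketch contrasting the two cases. The 0.4 °C, 0.1 °C/decade and 2 °C figures come from the comment. The rate at which the trend itself grows (0.02 °C/decade, added each decade) is a purely hypothetical number chosen for illustration, not a projection. `decades_to_threshold` is a helper written for this example.

```python
def decades_to_threshold(warming_so_far, trend_per_decade, target,
                         trend_increase_per_decade=0.0, max_decades=100):
    """Count whole decades until cumulative warming reaches `target` (deg C).
    The trend grows by `trend_increase_per_decade` each decade; 0.0 gives
    a straight-line extrapolation."""
    total, trend = warming_so_far, trend_per_decade
    for decade in range(1, max_decades + 1):
        total += trend
        trend += trend_increase_per_decade
        if total >= target - 1e-9:  # small tolerance for float rounding
            return decade
    return None  # threshold not reached within max_decades

# Commenter's numbers: 0.4 C so far, 0.1 C/decade, 2 C target.
print(decades_to_threshold(0.4, 0.1, 2.0))        # straight line
# Hypothetical: trend rises by 0.02 C/decade every decade.
print(decades_to_threshold(0.4, 0.1, 2.0, 0.02))
```

The straight line takes 16 decades. Even this modest hypothetical acceleration cuts that to 9, so the answer depends heavily on the linearity assumption.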

The question has been and will be asked: just how serious is this? The question is moot; climate is a kaleidoscope. Shake it up and a new, unpredictable pattern spontaneously emerges, bearing an ineluctable risk of climate instability. Climate is wild, as Wally has said.

As for the changes in the TOA flux data that someone else mentioned:

‘One important development since the TAR is the apparent unexpectedly large changes in tropical mean radiation flux reported by ERBS (Wielicki et al., 2002a,b). It appears to be related in part to changes in the nature of tropical clouds (Wielicki et al., 2002a), based on the smaller changes in the clear-sky component of the radiative fluxes (Wong et al., 2000; Allan and Slingo, 2002), and appears to be statistically distinct from the spatial signals associated with ENSO (Allan and Slingo, 2002; Chen et al., 2002). A recent reanalysis of the ERBS active-cavity broadband data corrects for a 20 km change in satellite altitude between 1985 and 1999 and changes in the SW filter dome (Wong et al., 2006). Based upon the revised (Edition 3_Rev1) ERBS record (Figure 3.23), outgoing LW radiation over the tropics appears to have increased by about 0.7 W/m2 while the reflected SW radiation decreased by roughly 2.1 W/m2 from the 1980s to 1990s (Table 3.5)…

In summary, although there is independent evidence for decadal changes in TOA radiative fluxes over the last two decades, the evidence is equivocal. Changes in the planetary and tropical TOA radiative fluxes are consistent with independent global ocean heat-storage data, and are expected to be dominated by changes in cloud radiative forcing. To the extent that they are real, they may simply reflect natural low-frequency variability of the climate system.’ AR4 WG1 3.4.4.1

What the independent ERBS and ISCCP-FD data show is strong cooling in the IR and strong warming in the SW in the 1980s and 1990s, associated with ocean states and cloud cover. It is what it is.
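The two AR4 figures quoted above can be combined into one net number. This short sketch assumes the usual convention that outgoing longwave and reflected shortwave are both losses, and that incoming solar is unchanged. `net_absorbed_change` is a helper written for this example. Taken at face value, the changes imply the tropics absorbed roughly 1.4 W/m² more in the 1990s than in the 1980s, subject to AR4's own caveat that the evidence is equivocal.

```python
def net_absorbed_change(delta_olr, delta_reflected_sw):
    """Change in net downward TOA flux (W/m2) given changes in outgoing
    longwave and reflected shortwave. More outgoing LW is a loss; less
    reflected SW is a gain. Incoming solar is assumed unchanged."""
    return -delta_olr - delta_reflected_sw

# AR4 WG1 3.4.4.1, ERBS Edition 3_Rev1, tropics, 1980s to 1990s:
# OLR up ~0.7 W/m2, reflected SW down ~2.1 W/m2.
print(f"{net_absorbed_change(0.7, -2.1):+.1f} W/m2")
```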

Finally, Argo seems to be showing (with the Scripps data processing) that salinity is not trending.

http://watertechbyrie.files.wordpress.com/2014/06/argosalinity_zpscb75296c-e1409295709715.jpg

von Schuckmann and Le Traon actually show freshwater content decreasing, if you look carefully, in the period they covered. Argo results change over time partly because of data handling, but significantly because the system evolves with large natural variability.

So we have no trend in salinity and, according to Louliette 2011, a steric sea level rise of 0.2 ± 0.8 mm/yr. This is inconsistent with the Jason sea level rise.

[Response: A couple of points. If you are wanting an actual discussion, please provide links or doi’s to the papers you cite. Is it Louliette or Leuliette? 2011 or 2009? In either case I am unable to find the reference. Leuliette and Willis (2011) does however have the closure of the SLR in terms of steric and mass effects (fig3). – gavin]

[Further response: Your graph from von Schuckmann and Le Traon is from the preprint and has a typo in the OFC trend, not the final paper. – gavin]
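The sea level budget in the response is additive: total rise = steric (expansion from heat and salinity changes) + mass (water added to the ocean, as seen by GRACE). This is a minimal sketch, assuming independent 1-sigma errors combined in quadrature. The 0.2 ± 0.8 mm/yr steric rate and the ~3 mm/yr altimetry rate are the figures from the thread. The 0.4 mm/yr altimetry uncertainty is a placeholder assumption, not a value from Leuliette and Willis (2011). `implied_mass_rate` is a helper written for this example.

```python
import math

def implied_mass_rate(total, total_err, steric, steric_err):
    """Mass contribution implied by total = steric + mass, with
    independent 1-sigma errors combined in quadrature. Units: mm/yr."""
    mass = total - steric
    err = math.hypot(total_err, steric_err)
    return mass, err

# Steric 0.2 +/- 0.8 mm/yr and altimetry ~3 mm/yr are from the thread;
# the altimetry uncertainty of 0.4 mm/yr is a placeholder assumption.
mass, err = implied_mass_rate(3.0, 0.4, 0.2, 0.8)
print(f"implied mass contribution: {mass:.1f} +/- {err:.1f} mm/yr")
```

A small steric rate does not by itself conflict with ~3 mm/yr of total rise. It means most of the rise would have to come from added mass, which is the quantity GRACE is used to check.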

#73

Marler, you don’t seriously believe that there is some new state of matter or new phase transition that allows water vapor to be a positive feedback from 255 K to 288 K, but then makes it do a U-turn and become a negative feedback when the temperature hits 289 K?

That is plain preposterous, and an indicator that you belong to the Kloud Kult (not to be confused with the MN band Cloud Cult).
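The argument above rests on saturation vapour pressure rising smoothly with temperature (Clausius-Clapeyron), with nothing special happening at 288 or 289 K. This short sketch uses the Bolton (1980) Magnus-type fit over liquid water, an empirical approximation used here in place of the full Clausius-Clapeyron integral. Below 0 °C it refers to supercooled water. It shows only how much water vapour air can hold. It is not a feedback calculation.

```python
import math

def saturation_vapor_pressure_hpa(temp_k):
    """Saturation vapour pressure over liquid water (hPa) from the
    Magnus-type fit of Bolton (1980); adequate for roughly -35 to 35 C."""
    t_c = temp_k - 273.15
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))

def fractional_increase_per_kelvin(temp_k):
    """Fractional rise in saturation vapour pressure for a 1 K warming."""
    return (saturation_vapor_pressure_hpa(temp_k + 1)
            / saturation_vapor_pressure_hpa(temp_k) - 1)

for t in (255, 288, 289):
    print(t, f"{fractional_increase_per_kelvin(t):.1%} per K")
```

The per-kelvin increase declines slowly with temperature but stays positive throughout, at roughly 6-7% near 288 K, with no reversal at 289 K.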

‘A number of studies have suggested that long-term irradiance-based measurements of cloud cover from satellite may be unreliable due to the inclusion of artifacts, difficulties in observing low-cloud, biases connected to view angles, and calibration issues [1, 2, 3, 4]. Using monthly-averaged global satellite records from the International Satellite Cloud Climatology Project (ISCCP [5]) and the MODerate Resolution Imaging Spectroradiometer (MODIS) in conjunction with Sea Surface Temperature (SST) data from the National Oceanic and Atmospheric (NOAA) extended and reconstructed SST (ERSST) dataset [7] we have examined the reliability of long-term cloud measurements. The SSTs temperatures are used here, with success over certain regions of the globe, as a proxy and cross-check for cloud variability.’ http://www.benlaken.com/documents/AIP_PL_13.pdf

A change in cloud cover implies a change in cloud radiative forcing: net cooling for an increase in low cloud, and vice versa. While the difficulties in measuring cloud were acknowledged, the cross-validation enhances reliability.

Indeed. Argo doesn’t measure sea level anyway. Just as an aside, the impact of 1 mm/year of freshwater sea level rise on 0–1900 m salinity is 0.00002 psu/year, or 0.0002 psu over 10 years, which is not detectable in that record.

A more saline ocean has less mass. A more saline ocean is losing more water than it is gaining, so levels are not increasing. If we combine that with the Louliette 2011 steric sea level rise, calculated by reference to the Scripps Argo ‘climatologies’, then sea level rise is nowhere near the Jason result of some 3 mm/year.

We are trying to measure change at the limits of detectability against a background of vigorous natural variability. Avoiding the issues is not of much use at all in understanding.
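The salinity aside quoted above (1 mm/year of freshwater in the 0–1900 m layer giving about 0.00002 psu/year) can be reproduced with a simple dilution calculation. This is a minimal sketch that assumes a mean salinity of 35 psu for the layer and ignores the density difference between fresh water and sea water. `salinity_change_psu` is a helper written for this example.

```python
def salinity_change_psu(freshwater_m, layer_depth_m, salinity_psu=35.0):
    """Salinity change when a freshwater layer of thickness freshwater_m
    mixes into a column of depth layer_depth_m (salt is conserved, so
    salinity scales with H / (H + h)). Density differences are ignored."""
    new_salinity = salinity_psu * layer_depth_m / (layer_depth_m + freshwater_m)
    return new_salinity - salinity_psu

per_year = salinity_change_psu(0.001, 1900.0)    # 1 mm of freshwater
per_decade = salinity_change_psu(0.010, 1900.0)  # 10 mm over 10 years
print(f"{per_year:.1e} psu/yr, {per_decade:.1e} psu per decade")
```

This gives about -1.8 × 10⁻⁵ psu per year and -1.8 × 10⁻⁴ psu per decade, consistent with the 0.00002 and 0.0002 psu figures. Changes this small are far below what a salinity trend plot can resolve.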

Let me try to explain this with some graphs.

This is from Louliette 2011 – showing steric rise from Argo, sea level from Jason and mass from GRACE.

http://watertechbyrie.files.wordpress.com/2014/08/argograce_leuliette-2012.png

This is ocean heat content and ocean freshwater content from von Schuckmann and Le Traon 2011. It shows heat content increasing and freshwater content decreasing, i.e. increased salinity.

https://watertechbyrie.files.wordpress.com/2014/06/vonschuckmannampltroan2011-fig5pg_zpsee63b772.jpg

Over a longer period, using the Scripps Argo climatology, Louliette shows no heat content increase, i.e. a steric rise of 0.2 ± 0.8 mm/yr.

Jason and GRACE are showing sea level and mass increase. Argo is showing a mass decrease.

Now you may believe whatever you like on the basis of entrenched memes, but I challenge any rational observer to come to any conclusion other than that we are at the limits of observational precision.

John Mathon says:

29 Aug 2014 at 9:19 AM

[uncalibrated]

See Weart’s “Discovery of Global Warming” to get caught up on this topic. As an additional bolster to credibility, also check details such as the actual budget for the Argo system. Many nations contribute to Argo, so the exact figure is a little difficult to calculate, but $1 billion would run the network for perhaps 75-100 years. “Billions in money” for Argo is a duff rumor; whoever or whatever misinformed you is best ignored in the future.
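The budget estimate can be turned around into an annual figure. This trivial sketch uses only the comment's own numbers ($1 billion lasting 75-100 years). It does not use any published Argo budget. `annual_cost` is a helper written for this example.

```python
def annual_cost(total_dollars, years):
    """Average yearly spend implied by a total budget lasting `years`."""
    return total_dollars / years

for years in (75, 100):
    millions = annual_cost(1e9, years) / 1e6
    print(f"$1 billion over {years} years: ${millions:.1f} million per year")
```

On those assumptions the network costs roughly $10-13 million a year, orders of magnitude below “billions”.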

Rob Ellison @76.

You round off your comment by telling us “These are the very poorly understood points … from Judy Curry.” Unfortunately I am not clear whose understanding is poor. Is it Curry? Is it you? Is it me? Is it us? Is it those people not in the know? Is it everybody?

And may I also congratulate you on getting round to linking the content of your comment to the topic being discussed, albeit at the eighth attempt and still not with enough clarity to be useful, but well done you!

I do note you continue @79 & @83.

@79 you link to Loeb et al (2012) but it is not clear why. What are you hoping this paper demonstrates? The size of the “large natural variability”? The link of such wobbles to “atmospheric and ocean dynamics”? How all this “complicates the picture”?

Perhaps the nub of my problem here is that none of these uses of Loeb et al. leads to an obvious line of reasoning that makes any sense to me.

You kick off your comment @79 by saying any climate forcing results in TOA energy flux changes. And having read Loeb et al. (nobody is stupid enough to present a reference that they haven’t read through, are they?), you will be aware that their findings, as well as demonstrating the wobbly spatial/temporal net TOA fluxes, also show that their satellite data need yet more accuracy to “constrain cloud feedback.” It is therefore possible to say that their findings are ‘not simplifying’ our assessment. But that is surely not the same as ‘complicating’ it.

They also point out the crucial finding that “Net incoming TOA flux was positive during the 2007–2009 La Nina conditions,” so warming continues globally even through the deepest La Nina event. Of course, we know TOA fluxes must continue to warm globally on a decadal basis because we have OHC data. (And I mean here ‘measured’ OHC, not a value inferred by subtracting one big imprecise number from another big imprecise number.) Global surface temperature rise may have been a bit sluggish since 2007, but OHC, which comprises the vast bulk of AGW (no 50:50 debate possible here), continues to rise apace.
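The parenthetical about subtracting “one big imprecise number from another” is a standard error-propagation point. This is a minimal sketch, assuming independent errors combined in quadrature. The flux values and uncertainties are hypothetical round numbers, not CERES or any other dataset. `difference_with_error` is a helper written for this example.

```python
import math

def difference_with_error(a, a_err, b, b_err):
    """a - b with independent 1-sigma errors combined in quadrature."""
    return a - b, math.hypot(a_err, b_err)

# Hypothetical round numbers (W/m2): two large fluxes that nearly cancel.
net, err = difference_with_error(340.0, 2.0, 339.4, 2.0)
print(f"net = {net:.1f} +/- {err:.1f} W/m2")
```

When two large quantities nearly cancel, the uncertainty of their difference (about 2.8 W/m² here) can exceed the difference itself (0.6 W/m²). That is the commenter's reason for preferring directly measured OHC as the constraint.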