by Michael E. Mann and Gavin Schmidt

This time last year we gave an overview of what different methods of assessing climate sensitivity were giving in the most recent analyses. We discussed the three general methods that can be used:

The first is to focus on a time in the past when the climate was different and in quasi-equilibrium, and estimate the relationship between the relevant forcings and temperature response (paleo-constraints). The second is to find a metric in the present day climate that we think is coupled to the sensitivity and for which we have some empirical data (climatological constraints). Finally, there are constraints based on changes in forcing and response over the recent past (transient constraints).

All three constraints need to be reconciled to get a robust idea of what the sensitivity really is.

A new paper using the second ‘climatological’ approach by Steve Sherwood and colleagues was just published in Nature and, like Fasullo and Trenberth (2012) (discussed here), suggests that models with an equilibrium climate sensitivity (ECS) of less than 3ºC do much worse at fitting the observations than other models.

Sherwood et al focus on a particular process associated with cloud cover which is the degree to which the lower troposphere mixes with the air above. Mixing is associated with reductions in low cloud cover (which give a net cooling effect via their reflectivity), and increases in mid- and high cloud cover (which have net warming effects because of the longwave absorption – like greenhouse gases). Basically, models that have more mixing on average show greater sensitivity to that mixing in warmer conditions, and so are associated with higher cloud feedbacks and larger climate sensitivity.

The CMIP5 ensemble spread of ECS is quite large, ranging from 2.1ºC (GISS E2-R – though see note at the end) to 4.7ºC (MIROC-ESM), with a 90% spread of ±1.3ºC, and most of this spread is directly tied to variations in cloud feedbacks. These feedbacks are uncertain, in part, because they involve processes (cloud microphysics, boundary layer meteorology and convection) that occur on scales considerably smaller than the grid spacing of the climate models, and thus cannot be explicitly resolved. These processes must be parameterized, and different parameterizations can lead to large differences in how clouds respond to forcings.

Whether clouds end up being an aggravating (positive feedback) or mitigating (negative feedback) factor depends not just on whether there will be more or fewer clouds in a warming world, but on what types of clouds there will be. The net feedback potentially represents a relatively small difference of much larger positive and negative contributions that tend to cancel, and getting that right is a real challenge for climate models.

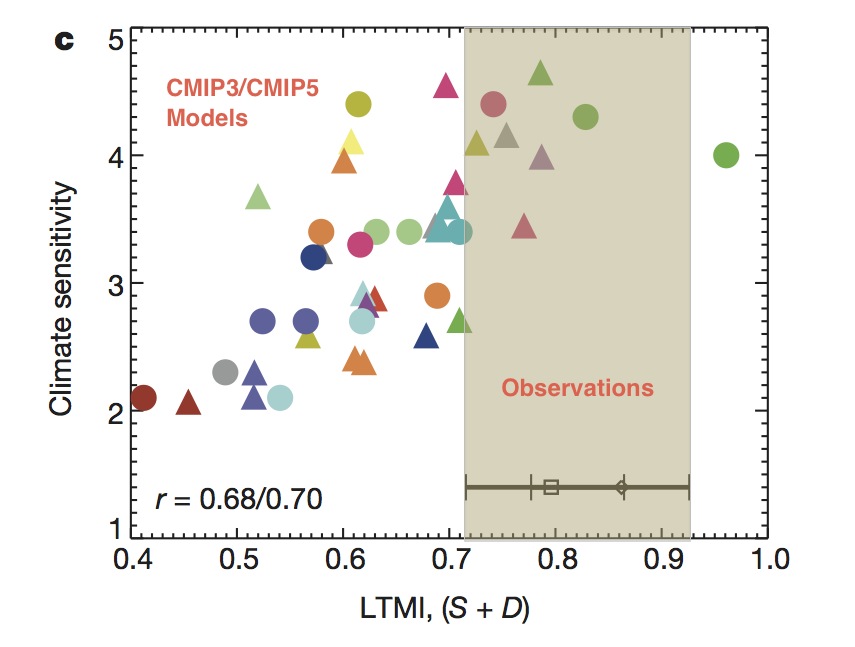

By looking at two reanalysis datasets (MERRA and ERA-Interim), Sherwood et al then try to assess which models have more realistic representations of the lower tropospheric mixing process, as indicated in the figure:

Figure (derived from Sherwood et al, fig. 5c) showing the relationship between the models’ estimate of Lower Tropospheric Mixing (LTMI) and sensitivity, along with estimates of the same metric from radiosondes and the MERRA and ERA-Interim reanalyses.

From that figure one can conclude that this process is indeed correlated with sensitivity, and that the observationally derived constraints suggest a sensitivity at the higher end of the model spectrum.

There was an interesting talk at AGU from Peter Caldwell (PCMDI) on how simply data mining for correlations between model diagnostics and climate sensitivity is likely to give you many false positives, just because of the number of possible options and the fact that individual models aren’t strictly independent. Sherwood et al get past that by focussing on physical processes that have an a priori connection to sensitivity, and are careful not to infer overly precise probabilistic statements about the real world. However, they do conclude that ‘models with ECS lower than 3ºC’ do not match the observations as well. This is consistent with the Fasullo and Trenberth study linked to above, and also with work by Andrew Dessler that suggests that models with amplifying net cloud feedback appear most consistent with observations.

There are a number of technical points that should also be added. First, ECS is the long term (multi-century) equilibrium response to a doubling of CO2 in a coupled ocean-atmosphere model. It doesn’t include many feedbacks associated with ‘slow’ processes (such as ice sheets, or vegetation, or the carbon cycle). See our earlier discussion for different definitions. Second, Sherwood et al are using a particular estimate of the ‘effective’ ECS in their analysis of the CMIP5 models. This estimate follows from the method used in Andrews et al (2011), but is subtly different than the ‘true’ ECS, since it uses a linear extrapolation from a relatively short period in the abrupt 4xCO2 experiments. In the case of the GISS models, the effective ECS is about 10% smaller than the ‘true’ value; however, this distinction should not really affect their conclusions.

Third, in much of the press coverage of this paper (e.g. Guardian, UPI), the headline was that temperatures would rise by ‘4ºC by 2100’. This unfortunately plays into the widespread confusion between an emergent model property (the ECS) and a projection into the future. These are connected, but the second depends strongly on the scenario of future forcings. Thus the temperature prediction for 2100 is contingent on following the RCP8.5 (business as usual) scenario.
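To make the second technical point a little more concrete, here is a minimal sketch of what a linear extrapolation from a short stretch of an abrupt 4xCO2 run can look like. It assumes the extrapolation takes the common form of regressing the top-of-atmosphere energy imbalance on surface warming and reading off the warming at which the imbalance would reach zero. The function names, the forcing of 7.0 W/m2 and the feedback parameter of 1.0 W/m2/K are all hypothetical, chosen only so the arithmetic is easy to follow; none of them come from Sherwood et al or from any CMIP5 model.

```python
# Sketch only: synthetic numbers, not output from any real model run.

def least_squares_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def effective_ecs_from_4xco2(warming, imbalance):
    """Extrapolate the imbalance-vs-warming line to zero imbalance.

    The x-intercept is the implied equilibrium warming for 4xCO2. Halving it
    (assuming forcing scales with the number of CO2 doublings) gives an
    effective sensitivity per doubling.
    """
    slope, intercept = least_squares_line(warming, imbalance)
    equilibrium_warming_4x = -intercept / slope
    return equilibrium_warming_4x / 2.0


# Hypothetical early portion of an abrupt 4xCO2 experiment.
ASSUMED_FORCING_4X = 7.0   # W/m2, illustrative assumption
ASSUMED_FEEDBACK = 1.0     # W/m2/K, illustrative assumption
synthetic_warming = [0.5 * k for k in range(1, 11)]  # 0.5 K up to 5.0 K
synthetic_imbalance = [
    ASSUMED_FORCING_4X - ASSUMED_FEEDBACK * t for t in synthetic_warming
]

effective_ecs = effective_ecs_from_4xco2(synthetic_warming, synthetic_imbalance)
print(round(effective_ecs, 2))
```

Because the synthetic data lie exactly on a straight line, the sketch simply recovers the sensitivity that was built in. In a real model the relationship need not stay linear over centuries, which is one way an ‘effective’ value fitted to a short period can end up differing from the ‘true’ equilibrium value.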

Implications

Last year, the IPCC assessment dropped the lower bound on the expected range of climate sensitivity slightly, going from 2-4.5ºC in AR4 to 1.5-4.5ºC in AR5. One of us (Mike) mildly criticized this at the time.

Other estimates that have come in since AR5 (such as Schurer et al.) support ECS values similar to the CMIP5 mid-range, i.e. ~3ºC, and it has always been hard to reconcile a sensitivity of only 1.5ºC with the paleo-evidence (as we discussed years ago).

However, it remains true that we do not have a precise number for the ECS. Sherwood et al’s results give weight to higher values than some other recent estimates based on transient constraints (e.g. Otto et al. (2013)), but it should be kept in mind that there is a great asymmetry in risk between the high and low end estimates. Uncertainty cuts both ways and is not our friend. If the climate indeed turns out to have the higher-end climate sensitivity suggested here, the impacts of unmitigated climate change are likely to be considerably greater than suggested by current best estimates.

References

- S.C. Sherwood, S. Bony, and J. Dufresne, "Spread in model climate sensitivity traced to atmospheric convective mixing", Nature, vol. 505, pp. 37-42, 2014. http://dx.doi.org/10.1038/nature12829

- J.T. Fasullo, and K.E. Trenberth, "A Less Cloudy Future: The Role of Subtropical Subsidence in Climate Sensitivity", Science, vol. 338, pp. 792-794, 2012. http://dx.doi.org/10.1126/science.1227465

- A.E. Dessler, "A Determination of the Cloud Feedback from Climate Variations over the Past Decade", Science, vol. 330, pp. 1523-1527, 2010. http://dx.doi.org/10.1126/science.1192546

- A.P. Schurer, G.C. Hegerl, M.E. Mann, S.F.B. Tett, and S.J. Phipps, "Separating Forced from Chaotic Climate Variability over the Past Millennium", Journal of Climate, vol. 26, pp. 6954-6973, 2013. http://dx.doi.org/10.1175/JCLI-D-12-00826.1

- A. Otto, F.E.L. Otto, O. Boucher, J. Church, G. Hegerl, P.M. Forster, N.P. Gillett, J. Gregory, G.C. Johnson, R. Knutti, N. Lewis, U. Lohmann, J. Marotzke, G. Myhre, D. Shindell, B. Stevens, and M.R. Allen, "Energy budget constraints on climate response", Nature Geoscience, vol. 6, pp. 415-416, 2013. http://dx.doi.org/10.1038/ngeo1836

Frank, have you looked at the effect of introducing a delay in your investigation–there is usually a delay for a temperature effect in response to a change in forcing.

wili asked above

> Is there a long, fat tail on high-temperature end of

> sensitivity estimates and little or no tail on the other side?

That’s right. This is one case where an image search is productive:

http://www.skepticalscience.com/graphics/Climate_Sensitivity_500.jpg

Because, whatever happens when CO2 is doubled*, we know the temperature won’t stay the same, and won’t decrease. Climate sensitivity won’t be zero or less than zero — no tail in that direction.

Looking at Google image results turned up this blog by Justin Bowles (new to me) working on a clear simple explanation:

http://therationalpessimist.com/tag/charney-sensitivity/

That blogger’s “About” page says:

Ray, what delay between temperatures and cloud forcing do you expect when we look at low-altitude clouds vs. medium-altitude clouds? According to http://www.atmos-chem-phys.net/6/2539/2006/acp-6-2539-2006.pdf fig. 5 the effect is almost instantaneous. Anyway, the trend of the June SST does not have a different slope than the trends for May or August.

Frank, you’re citing a 2006 paper; have you read any of the more recent work citing it? http://scholar.google.com/scholar?cites=3842847038906355457&as_sdt=2005&sciodt=0,5&hl=en

Another caution that climate sensitivity during the Anthropocene may differ from that in the paleo record:

27 May 2013 doi: 10.1098/rstb.2013.0121 Phil. Trans. R. Soc. B 5 July 2013 vol. 368 no. 1621 20130121

The marine nitrogen cycle: recent discoveries, uncertainties and the potential relevance of climate change

Hank, in my view the subject of http://www.nature.com/nature/journal/v505/n7481/full/nature12829.html is NOT high clouds but low and middle clouds. So googling for “stratosphere” or “tropopause” is not very useful.

In my refereed paper the important thing was not the time of transport to altitudes above the tropopause but the lower part: it takes only a few hours to reach the troposphere, as you can see in the daily behavior of low clouds. So the expected delay should be so small that it doesn’t matter when using monthly data. Of course, if you have a link to a paper that shows a meaningful time lag for the cloud forcing of low and middle clouds, please let me know.

Frank, check the “search within citing articles” to narrow the results; I didn’t suggest ‘googling to “stratosphere” or “tropopause”’ – I asked if you’d looked at the articles citing the 2006 paper you’re relying on.

Are you saying you found a source showing that sea surface temperature in the tropics is or isn’t varying along with the amount of low clouds in the same area? I just don’t follow what you’re saying yet.

Fig. 5 in Corti shows delays of hours to many days before you get the resulting change in insolation from above down to the surface, following a low cloud event that moves moisture up and away from that area, if I understand it.

But it’s an old paper. That’s why I asked if you’d read any of the citing papers subsequent to it, as it says much needs to be understood that they aren’t explaining yet in that paper.

Also, I don’t know how much of sea surface temperature depends on wind mixing at the surface, vs. upwelling of deeper water that’s often colder. Are you sure sea surface temp. follows low clouds closely due to changes in sunlight reaching the surface?

Hank, of course you are right when you look at the SST in detail. There are many influences, as you mentioned. Anyway, I looked at long-term averages: the seasonal monthly SST graph is averaged over 1900…2012. And I think the basic question still stands: if there is a strong positive feedback from lower/medium clouds (I think in this matter the physics didn’t change since 2006; the effect is very fast and well reflected in monthly data), then one should see it in different slopes of the trends for the different months, when there are temperature differences of 1K between them and a general warming trend since 1975. When you calculate the trends for the single months, you don’t see a dependence of the slope on the absolute seasonal temperature. And that makes me wonder…

Pete, thanks for the pithy summary. I guess I’m not with the program, in the sense that I’ve had some other conversations where people argue that this is a modeling cell size/operation issue…once you transport the water vapor outside the cell in which it originated, different rules apply.

I’ve felt a tension/confusion between real world results vs the world as seen through a model for Sherwood overall. At this point I’m throwing up my hands and am going to write to Sherwood directly.

Even if we get more cloud bursts, as I would describe what you said- a clear statement to that effect would be useful.

My criticism of the Sherwood paper is that it doesn’t provide sufficient information to allow duplication of their observational results. In particular:

1. The drying index S is restricted to an area mostly around Indonesia. What month(s) and year(s) were used for the MERRA data?

2. The mixing index D excludes Indonesia and the Indian Ocean and is apparently restricted to just one month – September (year?).

3. There is apparently zero error on the MERRA/ERAI values for index D. Can this be true? This is the crucial result affecting the whole conclusion of the paper, since the observational value of S lies in the middle of model sensitivities. Only D is the outlier. So were the model values likewise calculated for the same area and one moment in time because they must evolve with increasing CO2 ? If yes – does that correspond to the same time (e.g. September 2012) as that used to calculate the value of D.

So I am not convinced that a different selection of the observational data could not have had an opposite conclusion.

John L #12 – Is there some good qualitative explanation for the lower estimate in Otto et al?

Otto et al. used a simple, well-known energy balance formula for calculating effective climate sensitivity over the historical record. They took a plausible historical net forcing time series and related that to surface temperature and ocean heat content observations, then linearly scaled the resultant sensitivity parameter to a 2xCO2 forcing (3.5W/m2) to get an answer of 2ºC.

Really Otto et al. itself doesn’t bring anything new to the discussion on climate sensitivity. It would probably be better to ask directly if the input time series (HadCRUT4 surface temperature, various ocean heat content records, net forcing taken from AR5) are compatible with sensitivity being > 3ºC as indicated by Sherwood et al. I would suggest it couldn’t be ruled out.

It might seem counter-intuitive that the same data which point to a best estimate of 2ºC could be compatible with minimum 3ºC, but there are several key implicit assumptions made by Otto et al. which are, at best, unsupported and at worst generally contradicted by available evidence – a couple of examples:

1) They assume effective climate sensitivity measured over the historical record can give equilibrium warming. Multi-century GCM simulations have indicated that effective sensitivity can vary and typically increases over time following instigation of a significant forcing period. For example, see Figure 1 in Armour et al. 2012.

2) They assume the response to CO2-only forcing scales linearly with historical net forcing, which combines energy flux influence from numerous different factors. Some degree of linearity should be expected but it wouldn’t take a huge amount of divergence in each forcing to significantly alter the sensitivity result. As I understand it models have indicated a typical range of about +/-25% global temperature efficacy for various present day influences in comparison to CO2. As an illustration let’s say our present day net forcing is 2W/m2, which is a sum of +3W/m2 WMGHGs, -2W/m2 negative aerosol influence and +1W/m2 positive aerosol influence, then assign efficacies of 1, 1.25 and 0.75 respectively (which I understand is a plausible state of affairs). You then get an efficacy-adjusted net forcing of 1.25W/m2, which can be plugged into the Otto et al. formula with a resulting effective sensitivity of ~ 4.5ºC.

Taking into account the implications of these assumptions and the uncertainties in the input data, I think, makes reconciliation with a >3ºC sensitivity plausible.
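For anyone who wants to check the arithmetic in point 2, here is a rough sketch. It assumes the energy balance formula has the usual form, sensitivity = F2x × ΔT / (ΔF − ΔQ), which the comment above does not spell out. The forcing components, the efficacies and the 3.5W/m2 doubling forcing are the ones quoted above; the warming (0.75 K) and ocean heat uptake (0.65 W/m2) are illustrative assumptions added here, not values given in this thread.

```python
# Sketch only: checks the efficacy example above under stated assumptions.

def efficacy_adjusted_forcing(components):
    """Sum forcing components (W/m2), each weighted by its efficacy."""
    return sum(forcing * efficacy for forcing, efficacy in components)


def effective_sensitivity(f2x, warming, net_forcing, heat_uptake):
    """Assumed energy balance form: F2x * dT / (dF - dQ), in K per doubling."""
    return f2x * warming / (net_forcing - heat_uptake)


F2X = 3.5                   # W/m2, as quoted in the comment above
ASSUMED_WARMING = 0.75      # K, illustrative assumption
ASSUMED_HEAT_UPTAKE = 0.65  # W/m2, illustrative assumption

# (forcing in W/m2, efficacy) for WMGHGs, negative aerosol influence and
# positive aerosol influence, as in the illustration above.
components = [(3.0, 1.0), (-2.0, 1.25), (1.0, 0.75)]

raw_net = sum(forcing for forcing, _ in components)
adjusted_net = efficacy_adjusted_forcing(components)

sensitivity_raw = effective_sensitivity(F2X, ASSUMED_WARMING, raw_net, ASSUMED_HEAT_UPTAKE)
sensitivity_adjusted = effective_sensitivity(F2X, ASSUMED_WARMING, adjusted_net, ASSUMED_HEAT_UPTAKE)

print(raw_net, adjusted_net)
print(round(sensitivity_raw, 2), round(sensitivity_adjusted, 2))
```

With these assumed inputs the unadjusted net forcing of 2W/m2 gives a sensitivity close to 2ºC, while the efficacy-adjusted 1.25W/m2 gives a little over 4ºC, in line with the rough figures quoted above. The exact numbers move with the assumed warming and heat uptake, so this is a consistency check on the reasoning, not a reproduction of Otto et al.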

Frank–you have to fit to determine the best offset.

Clive Best – read the full methods section after the references. I guess it might not be included in the version you’re looking at so here’s a link. They used all months for years 2009-2010.

They also state ‘Values of D and S are similar over ten years of data or one year, and are similar whether individual months or long-term means for each month of the year are used.’ Although I think they’re referring specifically to the model data in this case.

To Gavin and Mike – Since they appear to be primarily using reanalysis data, is there really much independence between the reanalyses and GCM outputs for the variables in question?

Sherwood et al note :

“The spread arises largely from differences in the feedback from low clouds, for reasons not yet understood.”, and go on to attribute half the sensitivity spread to convective mixing.

May one ask: to what degree might the remainder arise from the correlation of low boundary layer cloud cover and variation in the dynamic albedo of the air-water interface, as calm seas and surface fogs are often correlated?

Despite the excellent work done by these recent papers, the fact remains that global temperatures have remained essentially the same for more than a decade. The standard answer to this is to scoff that it’s too short a time and besides there have been such stillstands before in the record. I note that those stillstands were shorter and occurred during times when the CO2 forcing was significantly lower.

Also I recall many studies from past records showing the probability of climate sensitivity against a range of temperatures. The general character of these has been a sharp peak just above 2°C with a long tail to higher temperatures. I think the generally accepted 3°C value resulted from integration under that curve. Still the sharp peak remains a thorn in the side of this interpretation.

Of course next month temperatures may start on another 1990’s run, but one wonders.

I am aware that there have been no dearth of mechanisms given for the lack of rise in global temps in the past decade, but one still wonders. Basically the general attitude is, if it’s warming, the temps gotta go up and it ain’t. Hmmmm.

May I draw attention to the following letters in The Scotsman newspaper concerning the recent Sherwood et al paper..

http://www.scotsman.com/news/opinion/letters/climate-chaos-1-3253630

http://www.scotsman.com/news/opinion/letters/climate-of-fear-1-3257529

The second letter, from a global warming denial organisation called “The Scientific Alliance” based in Scotland, makes reference to “a critical commentary in the same edition of Nature” to which I do not have access.

For anyone wishing to respond, the address for letters to The Scotsman is Letters_ts@scotsman.com.

Chick Keller @65.

As nobody here will know what it is you mean by “global temperatures have remained essentially the same for more than a decade”, nobody will know whether you are right in pronouncing that “the fact remains.”

My own view of the ‘hiatus’ in global average surface temperature is that it is far from being a decade in length. The graphic here (usually 2 clicks to ‘download your attachment’) shows the rate of global surface temperature rise increasing (red trace) up to 2007, which suggests to me that it is ‘less than a decade’.

So my scoffing at the longevity you propose is not unreasonable.

Concerning your recollection of the “many studies” of climate sensitivity, I would recommend you refresh your memory. AR4 Box 10.2 Figure 1 still provides the authoritative illustration of “sharp peaks”. And note that because OHC continues to rise, climate forcing (of which CO2 plays a significant part) remains positive and warming continues. Don’t it!

Chick Keller –

> The general character of these has been a sharp peak just above 2°C with a long tail to higher temperatures. I think the generally accepted 3°C value resulted from integration under that curve. Still the sharp peak remains a thorn in the side of this interpretation.

I think the issue is that taking the peak as the best estimate leaves you in a situation where sensitivity is more likely to be higher. As an analogy, let’s say you take a 6-sided die and define the possible outcomes by grouping 1 and 2 into a single set, then leave the other numbers as individuals. In this situation your most likely outcome is to roll into the “1 or 2” set – that’s your peak. However, you are actually more likely to roll any one of the other numbers.

With probabilities you need to carefully define the question of interest. If you have this peak at, say, 2 – 2.5ºC and want to ask which 0.5ºC slice is most likely then your answer is 2 – 2.5ºC. However, what if it’s more likely that sensitivity lies in the 2.5ºC – 4.5ºC space? It’s not clear to me why relative density should be considered the sole determinant for interpreting probability space in terms of where a single true value might be. How would you justify a best estimate of 2.3ºC if the true value were most likely higher?

In this framework of an individual study, a 3ºC result would be best characterised as the median probability rather than a best estimate. To the extent that it is, I think 3ºC is considered a best estimate because of the number of independent studies which find a median of around 3 +/-1ºC.
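The die analogy above can be checked in a few lines. This is only a sketch of that toy example, using exact fractions; the variable names are made up for illustration and nothing here is a real sensitivity distribution.

```python
# Sketch only: the grouped-die analogy, not a climate calculation.
from fractions import Fraction

# A fair 6-sided die with 1 and 2 grouped into a single outcome.
outcomes = {
    "1 or 2": Fraction(2, 6),
    "3": Fraction(1, 6),
    "4": Fraction(1, 6),
    "5": Fraction(1, 6),
    "6": Fraction(1, 6),
}

# The single most likely outcome plays the role of the peak.
peak = max(outcomes, key=outcomes.get)
p_peak = outcomes[peak]

# Probability of landing anywhere other than the peak.
p_elsewhere = 1 - p_peak

print(peak, p_peak, p_elsewhere)
```

The peak is the “1 or 2” set with probability 1/3, yet the chance of rolling something outside the peak is 2/3. The same distinction applies to a skewed sensitivity distribution: the most probable narrow slice and the side on which most of the probability sits are different questions.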

Slioch,

Doesn’t sound all that critical to me. Here’s the concluding paragraph:

“For now, Sherwood et al. have proposed and tested a convincing mechanism that explains half of the spread of models’ climate sensitivities, and which suggests that future climate will be warmer than expected. The fact that their findings are variously consistent and inconsistent with those of other studies poses further challenges for wide areas of research, including observations and reconstructions of climate systems, understanding of the processes involved, climate modelling, and analyses of climate simulations. All will be needed to solve the recondite climate-sensitivity puzzle.”

Methinks our denialist friend is counting on people not having access behind the paywall.

Paul S.

I’ve commented on this previously–but if you look at the distribution of different sensitivity estimates, it is actually bimodal, with one mode at ~3.5 degrees per doubling and one around 2.1 degrees per doubling. This suggests we may need to be more careful in how we define the concept.

#61 Paul S

Thanks for your explanation, it makes sense to me. One may also add that Trenberth and Fasullo noted that the Otto et al best ECS estimate was sensitive to the chosen data set for ocean heat content. They explicitly mentioned that using the new ORAS-4 ocean reanalysis from ECMWF for the 2000s instead would raise the value from 2.0K to 2.5K.

Clarifying my point, very different estimates, including Ray Bradbury’s “bimodal” clusters does not necessarily mean large uncertainties. Combining different lines of evidence is difficult but you must really first interpret each method/result individually against all knowledge. Typically there is a good reason to assume a bias. If you don’t do this you will overestimate the uncertainties. Perhaps that is why IPCC still thinks a ‘likely’ range means 1.5-4.5, 35 years and a large number of confirming (or at least not contradicting) papers, using different methods, after the Charney-report? For example, as Paul S mentions there might not be any real (or rather: large) contradiction between the Sherwood paper and the result of the methods used by e.g. Otto et al.

I think it would be very interesting if someone would do a similar expert elicitation survey as Horton et al did for the sea level.

> Chick Keller

I read your guest post at Pielke’s blog a while back and your posts at John Daly’s. I liked the quotes from you at the bottom of this news story on Chylek but can’t figure out what you refer to by “the fact remains” in your post in this thread.

What fact?

Ray,

Interestingly the CMIP5 ensemble ECS estimates, according to the Andrews et al. 2012 method, show no sign of following a normal distribution. The model mean is about 3.2C but that lies in almost the least populated area of the 2.1-4.7C sensitivity space. There are two relatively dense clusters at 2.6-2.9C and 3.8-4.1C.

Chick K said, “I am aware that there have been no dearth of mechanisms”

I assume you know of Foster and Rahmstorf (201?) which blames solar, ENSO and I think volcanoes. Back out their influence and the trend line continues upwards unabated.

So, “no dearth”? Please post one or two alternate mechanisms.

71 John L says, “very different estimates, including Ray Bradbury’s “bimodal” clusters does not necessarily mean large uncertainties.”

In this layman’s (pet peevish) opinion, either they do or a value judgement must be made about the quality of the models.

Hank, (and Gavin)

re #50

Thank you very much for the pointer to the 1986 Senate Hearings of the Subcommittee on Environmental Pollution of the Committee on Environment and Public Works, with Sherwood Rowland and James Hansen. It is fascinating to read. There is a real sense that there are adults in the room, taking things seriously. In contrast to some of what is happening presently. Sighhh…

Slioch #66,

The Nature commentary accompanying the Sherwood et al paper can be accessed here.

> Paul S

> … no sign of following a normal distribution.

You wouldn’t expect them to, would you?

Say you have a stack of wood, and in it a little fire going, and you pour a cup of gasoline over it.

What’s the distribution of likely outcomes going to look like?

Certainly not a bell curve. No chance the fire size will decrease, or stay around zero, or one.

No chance the outcome is going to be less than the size of the fire originally.

Odds are the fire is going to get bigger — how much bigger? That’s the probability distribution.

And that depends; does the gasoline just burn up? So you get maybe an increase from 1.0 to 1.5.

Or does it add enough heat that some, or all, the unburned wood in the pile catches fire? So you get, oh, 3x or 4x or 5x or ….

That’s how the long tail thing works.

> Basically the general attitude is, if it’s warming, the temps gotta go up and it ain’t.

I guess you missed those ice caps, the sea ice and even those big fluid oceans. They’re pretty hard to miss and to first order the thermodynamics involved in understanding this result is grade school stuff. Try harder.

At the Conversation Sherwood

http://theconversation.com/how-clouds-can-make-climate-change-worse-than-we-thought-21617

#69 Ray and #77 Stephen

Many thanks.

Wrong. 1979 to 1997.

Jim Larsen,

Foster & Rahmstorf, 2011,

Global temperature evolution 1979–2010

http://iopscience.iop.org/1748-9326/6/4/044022

Kaufmann et al, 2011,

Reconciling anthropogenic climate change with observed temperature 1998–2008,

http://www.pnas.org/content/early/2011/06/27/1102467108.abstract

Cohen et al, 2012,

Asymmetric seasonal temperature trends.

http://www.pnas.org/content/early/2011/06/27/1102467108.abstract

Which is to be read in conjunction with:

Kosaka & Xie, 2013,

Recent global-warming hiatus tied to equatorial Pacific surface cooling

http://www.nature.com/nature/journal/vaop/ncurrent/full/nature12534.html

Note that where K&X’s model finds a warming in Eurasia, Cohen finds a cooling, in reality there’s been a cooling.

This person’s not going to be able to come back with much more strictly relevant stuff. And yes, the field has been narrowed down to the main players being ENSO, Solar Activity, and Aerosols, with a small winter regional contribution from loss of sea ice in which the ENSO may be playing a role. As a set of mechanisms only the exact relative roles remain to be wholly tied down. Unfortunately as Kaufmann and Foster/Rahmstorf cover different periods they’re not readily comparable.

This whole idea that the lack of warming means AGW has gone away is as stupid as someone calling out a heating engineer because their heating isn’t working, and when the engineer arrives he finds that all the windows are open. It really is that stupid: an ongoing forcing to warming (the heating system / AGW) is over-ridden by an extraneous factor (the windows being left open / ENSO, Solar, Aerosols).

The people who continually raise this canard seem as stupid as the person who calls the heating engineer in the example above. What confirms their stupidity is that they repeat this stuff without realising how stupid it makes them look!

Of course it is possible that some of them see the flaw in the argument, yet continue to push it. That’s symptomatic of a deep disrespect for those they hope will fall for it, which is dishonourable and unworthy.

Either way, I use the raising of this issue as Chick Keller has done as an indicator that I can safely ignore them, without fear of missing something of use, and get on with more profitable uses of my time. The hiatus is real, it is interesting, but it doesn’t disprove AGW.

Warming since 1998:

See figure 13 here.

(There is a clear graph of all this somewhere but I can’t find it.)

The temperature graph has a long term upward trend. Year to year it steps up in an El Niño year and steps down in a La Niña year. The La Niña right after the very hot El Niño of 1998 was a quite cool year compared to the most recent years, as you would expect.

But now, La Niña years are warmer than all El Niños prior to 1998. That is a lot of warming. How much in Kelvins is it?

Pete Dunkelberg,

Thanks for pointing that out. We need to stop using the language of the contrarians. There has been surface warming since 1998, even though the rate has slowed over that cherry-picked time frame, versus the immediately preceding similar time frame (though I understand that the overlapping 15 years to 2006 shows a very high rate of change – we didn’t hear the contrarians talking about that). Even though we know there has been continued total warming, and that the trend is almost unchanged with temporary effects corrected for (perhaps, following Cowtan and Way’s paper, even without correcting for temporary effects), we may be helping to propagate a meme which is incorrect, by the language we use.

So we should deny the deniers, even when referring to surface warming. Let’s not acknowledge any pause or hiatus, just a possible slowing (of surface warming).

Hi Tony,

I just popped back because I remembered my rather intemperate rant above and thought I might have to reply.

I’m going to try to avoid being intemperate again, but: Anyone who says there has been no warming since 1998 can’t read graphs, and their opinion on AGW can safely be ignored on that basis alone.

Take HAD/CRU, which neglects high latitude northern hemisphere warming. Even in that dataset there was warming after 1998.

http://www.cru.uea.ac.uk/cru/data/temperature/HadCRUT4.png

The hiatus is seen to happen after about 2002, not 1998.

NASA GISS shows an increase in temperatures throughout the ‘hiatus’ and as 1) it’s publicly available data and methods, 2) it covers high latitude regions, 3) I’ve used it since I was a sceptic (when fellow sceptics seemed to like it because IIRC it had lower trend than HAD/CRU).

In my earlier comment I list research into this feature of recent global surface temperature evolution. Denying it really is playing into the hands of the denialists. It’s natural variation about a long term forced warming trend due to human activities, with CO2 being the most important single human forcing and the one with the potential to really ramp things up.

Letter:

Recent global-warming hiatus tied to equatorial Pacific surface cooling

Yu Kosaka & Shang-Ping Xie

Nature

(19 September 2013)

doi:10.1038/nature12534

Tony Weddle, speaking, I dare say, for many of us:

If only our flagship journals would cooperate. On the UF thread, Hank links to a recent Nature news feature:

It isn’t just the item’s author, who is after all a science journalist rather than a scientist, using the language of the deniers:

The news item is mostly about proposed explanations for where the “missing heat” is going (mostly into the oceans), but there’s plenty of denier-bait there too. With conflicting messages like these from the scientific community, it’s not hard to imagine that a layperson might be confused.

#83:

And statistically insignificant, i.e. there is no statistically significant departure from the existing long term (40 year) trend.
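For anyone who wants to check that kind of claim themselves, here is a minimal sketch of the comparison: fit an ordinary least-squares trend to the long record and to the short window, then ask whether the two slopes differ by more than their combined uncertainty. The function names and the series are hypothetical (the numbers are synthetic, not observations), and the test ignores autocorrelation and the overlap between the two windows, both of which would make a real short-term difference even harder to call significant.

```python
import math


def ols_trend(times, values):
    """Return (slope, standard_error) of an ordinary least-squares line."""
    n = len(times)
    if n < 3:
        raise ValueError("need at least three points")
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    intercept = v_mean - slope * t_mean
    # Residual sum of squares about the fitted line
    rss = sum((v - (intercept + slope * t)) ** 2 for t, v in zip(times, values))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope, std_err


def trends_differ(slope_a, se_a, slope_b, se_b, z=1.96):
    """True if the slopes differ by more than z combined standard errors.

    Assumes independent, uncorrelated errors, which real temperature
    series do not satisfy; treat the answer as optimistic.
    """
    return abs(slope_a - slope_b) > z * math.sqrt(se_a ** 2 + se_b ** 2)


# Synthetic 40-year series: a steady trend plus a deterministic wiggle.
years = list(range(1974, 2014))
anoms = [0.017 * i + 0.1 * math.sin(1.7 * i) for i in range(len(years))]

long_slope, long_se = ols_trend(years, anoms)
short_slope, short_se = ols_trend(years[-15:], anoms[-15:])
print("40-year trend:", long_slope, "+/-", long_se)
print("15-year trend:", short_slope, "+/-", short_se)
print("significantly different:",
      trends_differ(long_slope, long_se, short_slope, short_se))
```

The short window always carries the larger standard error, which is why a 15-year slope can look quite different from the 40-year one without being a significant departure from it.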

“The hiatus is real, it is interesting”

In the UAH satellite temperature record, which is the work of noted skeptics Spencer & Christy, the OLS trend before 1998 was less than the overall trend. In the last 180 months, the trend has increased. http://www.woodfortrees.org/plot/uah/last:180/plot/uah/last:180/trend/plot/uah/to:1999/plot/uah/to:1999/trend/plot/uah/trend

If by “hiatus” you mean “accelerated warming, accompanied by increasing loss in Arctic sea ice resulting in stuck extreme weather, increasing Greenland and Antarctic ice loss, collapsing ice shelves, worldwide accelerating glacial ice loss, more frequent deadly heat waves (including “black flag” days when it’s too hot for US Marines to train), near restriction levels in Lake Mead, record levels of heat and wildfire danger in Australia, declines in Lakes Chad and Victoria, declines in rice yields due to rising temperatures,….”

And if by “interesting” you mean “may you live in interesting times” then we agree.

Ref:

“First, ECS is the long term (multi-century) equilibrium response to a doubling of CO2 in a coupled ocean-atmosphere model.”

Do you know the time constant for this system?

Multi-century seems to indicate a much slower response of average temperature to an increase in CO2 level than I would expect from observing:

– Day to night changes in temperature at clear sky conditions

– Summer to winter changes in ground temperature

– Typical duration of El Niño/ Southern Oscillation cycles.

El Niño/Southern Oscillation (ENSO) is reported to recur at intervals of roughly 2 – 7 years. (Ref.: http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/ensostuff/ensofaq.shtml#ENSO)
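One way to see why both answers can be true is a back-of-the-envelope estimate: in a one-box energy balance model the e-folding time is the heat capacity of the layer being warmed divided by the net feedback parameter. The sketch below is only that, a sketch. The seawater density and specific heat are approximate, the feedback value of 1.2 W/m²/K is an assumption (roughly what a 3ºC sensitivity implies for a forcing of about 3.7 W/m² per doubling), and the layer depths and function name are illustrative choices, not anything taken from the post.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
SEAWATER_DENSITY = 1025.0        # kg/m^3, approximate
SEAWATER_SPECIFIC_HEAT = 3990.0  # J/(kg K), approximate


def e_folding_time_years(layer_depth_m, feedback_w_m2_k):
    """E-folding time (years) of a well-mixed ocean slab.

    tau = C / lambda, where C is the heat capacity per unit area of the
    slab and lambda is the net feedback parameter (assumed, not measured).
    """
    heat_capacity = SEAWATER_DENSITY * SEAWATER_SPECIFIC_HEAT * layer_depth_m
    return heat_capacity / feedback_w_m2_k / SECONDS_PER_YEAR


ASSUMED_FEEDBACK = 1.2  # W/m^2/K, hypothetical round number

# Illustrative depths: a surface mixed layer and the full-depth ocean.
for label, depth_m in [("mixed layer (70 m)", 70.0),
                       ("full ocean (3700 m)", 3700.0)]:
    print(label, e_folding_time_years(depth_m, ASSUMED_FEEDBACK), "years")
```

With these assumptions the mixed layer adjusts in under a decade, while a slab the depth of the whole ocean takes a few hundred years. Day-night and seasonal swings involve only thin surface layers, so they say little about how long the deep ocean takes to come into equilibrium. The real ocean is not a single well-mixed box, so these are order-of-magnitude figures only.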

It would be interesting to know how much this new discovery would affect ECS and Earth System Sensitivity:

Rapid Soil Production and Weathering in the Southern Alps, New Zealand

Isaac J. Larsen, Peter C. Almond, Andre Eger, John O. Stone, David R. Montgomery, and Brendon Malcolm

Science 1244908. Published online 16 January 2014 [DOI:10.1126/science.1244908]

Found via: http://www.climatenewsnetwork.net/2014/01/rainy-mountains-speed-co2-removal/

That’s what so annoys me right now. It wasn’t that long ago that 30 years to get a good strong trend in temperature was the thing, and only denialists made hay with 3 or 5 year trends. Now there’s been a short period of flat temperature, on the order of 5 years, or 9 since the last warmest, just as has happened all the way through the 20th century, and idiots start talking about a hiatus or a pause, as if 5 or 9 years is significant.

More on the heating of the oceans at SkS

http://www.skepticalscience.com/warming-oceans-rising-sea-level-energy-imbalance-consistent.html

“Warming oceans consistent with rising sea level & global energy imbalance”

Posted on 29 January 2014 by dana1981, Rob Painting, Kevin Trenberth

“Key Points:

The ocean is quickly accumulating heat and is doing so at an increased rate at depth during the so-called “hiatus” – a period over the last 16 years during which average global surface temperatures have risen at a slower rate than previous years.

This continued accumulation of heat is apparent in ocean temperature observations, as well as reanalysis and modeling experiments, and is now supported by up-to-date assessments of Earth’s energy imbalance.

Another key piece of evidence is rising global sea level. The expansion of the oceans (as they warm) has contributed to 35–40% of sea level rise over the last two decades – providing independent corroboration of the increase in ocean temperatures.”

Re continued warming and sea level (wili ~94):

I would like to see more emphasis on rising sea level in public discussions. The average person is quite capable of taking in the simple connection, and the evidence has become solid (so to speak). Sea level rise on a decadal scale comes mostly from expansion and melting ice. Both are due to warming. Tends to cut through the confusion and obfuscation, for anyone not terminally resistant to connected thought. Sea level is the single simplest diagnostic of global warming.

guthrie,

I have the same pet peeve. People use short-term data all too frequently to “prove” their point. Whether it is 5-, 9-, 15-, 30-years, whatever. Unless they can show why the recent short-term deviation from the long-term is significant, then it is just noise. Witness how many people have pointed to the 2012 summer heat wave in the U.S. as proof that the planet is warming, or to the 2013-14 winter cold snap as proof that it is not. All these are just natural variations, albeit somewhat towards the extreme ends, but not unprecedented. Long term, the planet is still warming at ~0.6C/century. This trend has been in place for at least 133 years (longest reliable temperature dataset). Proxy evidence hints that it could be much longer. Some would even say that that is just a short-term variation in the long-term scheme of things. Either way, using the last 15 years or the previous 15 to make a point misses the big picture. Unless, of course, someone is selling air conditioners or snow blowers, in which case short-term trends can influence business.

“This trend has been in place for at least 133 years (longest reliable temperature dataset). Proxy evidence hints that it could be much longer.”

Mm, not sure what proxy evidence you are thinking of; most reconstructions show a cooling trend over multi-century time scales:

http://i1108.photobucket.com/albums/h402/brassdoc/SpaghettiGraphoftempsAR5Fig5-7.png

Dan H. perhaps forgot

Dan H.,

There is a big difference between drawing conclusions based on a single event or a short time series and drawing conclusions based on extreme values and other order statistics. Extrema are a gift from the gods of chance. We should use them.

As to your assertion of 0.6 degrees per century, I would be careful. If we extrapolate back even 100 years, you can’t fit the data without a nonlinear term.
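A rough way to test whether a straight line is enough is to fit both a linear and a quadratic polynomial and compare the residuals. The sketch below does this in plain Python on a synthetic, deliberately curved series. The function name and the data are hypothetical, chosen only to illustrate the comparison, and say nothing about the actual temperature record.

```python
def polyfit_rss(xs, ys, degree):
    """Least-squares polynomial fit; returns the residual sum of squares."""
    x_mean = sum(xs) / len(xs)
    xc = [float(x) - x_mean for x in xs]  # centre x for better conditioning
    m = degree + 1
    # Normal equations A c = b for the coefficients of 1, x, x^2, ...
    a = [[sum(x ** (i + j) for x in xc) for j in range(m)] for i in range(m)]
    b = [sum(y * x ** i for x, y in zip(xc, ys)) for i in range(m)]
    # Gaussian elimination with partial pivoting
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, m):
            factor = a[row][col] / a[col][col]
            for k in range(col, m):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution
    coeffs = [0.0] * m
    for i in reversed(range(m)):
        tail = sum(a[i][k] * coeffs[k] for k in range(i + 1, m))
        coeffs[i] = (b[i] - tail) / a[i][i]
    return sum(
        (y - sum(c * x ** i for i, c in enumerate(coeffs))) ** 2
        for x, y in zip(xc, ys)
    )


# Synthetic curved series (NOT observations): slow early rise, faster later.
years = list(range(1900, 2014))
series = [0.00006 * (yr - 1900) ** 2 for yr in years]

print("linear RSS:   ", polyfit_rss(years, series, 1))
print("quadratic RSS:", polyfit_rss(years, series, 2))
```

Adding a term can never increase the residual, so a smaller quadratic RSS alone proves nothing. The question is whether the improvement is larger than chance would give, which on real data calls for something like an F-test or an information criterion.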

Ray,

Of course. The data more closely mimics a sinusoidal rise. However, using the steeper rises or falls of the curve to extrapolate long-term changes runs the risk of being seriously off target. Extremes have always existed, and are often clustered. Explanations for the extrema are seldom correlated with long-term averages.