A new study by British and Canadian researchers shows that the global temperature rise of the past 15 years has been greatly underestimated. The reason is the data gaps in the weather station network, especially in the Arctic. If you fill these data gaps using satellite measurements, the warming trend is more than doubled in the widely used HadCRUT4 data, and the much-discussed “warming pause” has virtually disappeared.

Obtaining the globally averaged temperature from weather station data has a well-known problem: there are some gaps in the data, especially in the polar regions and in parts of Africa. As long as the regions not covered warm up like the rest of the world, that does not change the global temperature curve.

But errors in global temperature trends arise if these areas evolve differently from the global mean. That’s been the case over the last 15 years in the Arctic, which has warmed exceptionally fast, as shown by satellite and reanalysis data and by the massive sea ice loss there. This problem was analysed for the first time by Rasmus in 2008 at RealClimate, and it was later confirmed by other authors in the scientific literature.
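The effect of a coverage gap can be illustrated with a toy calculation. The sketch below is only an illustration, not the procedure used by any of the data sets discussed here. It assumes one value per 10° latitude band, weights each band by the cosine of its latitude, and uses made-up trend values (0.1 everywhere, 0.5 in the two northernmost bands). The function name `weighted_mean` is hypothetical.

```python
import math

def weighted_mean(cells):
    """Cosine-latitude-weighted mean of (latitude_deg, value) pairs.

    Cells whose value is None are treated as data gaps and skipped.
    """
    num = 0.0
    den = 0.0
    for lat, value in cells:
        if value is None:
            continue
        weight = math.cos(math.radians(lat))
        num += weight * value
        den += weight
    return num / den

# Hypothetical trends (deg C per decade) at band centres from 85S to 85N.
latitudes = range(-85, 90, 10)
full = [(lat, 0.5 if lat >= 75 else 0.1) for lat in latitudes]
# Same field, but with the two northernmost bands unobserved.
with_gap = [(lat, None if lat >= 75 else value) for lat, value in full]

mean_full = weighted_mean(full)
mean_with_gap = weighted_mean(with_gap)
print(f"full coverage: {mean_full:.3f}, with Arctic gap: {mean_with_gap:.3f}")
```

Skipping the gap amounts to assuming that the missing bands follow the average of the covered ones, which is why the masked mean comes out lower when the hidden region warms faster.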

The “Arctic hole” is the main reason for the difference between the NASA GISS data and the other two data sets of near-surface temperature, HadCRUT and NOAA. I have always preferred the GISS data because NASA fills the data gaps by interpolation from the edges, which is certainly better than not filling them at all.

A new gap filler

Now Kevin Cowtan (University of York) and Robert Way (University of Ottawa) have developed a new method to fill the data gaps using satellite data.

It sounds obvious and simple, but it’s not. Firstly, the satellites cannot measure the near-surface temperatures but only those overhead at a certain altitude range in the troposphere. And secondly, there are a few question marks about the long-term stability of these measurements (temporal drift).

Cowtan and Way circumvent both problems by using an established geostatistical interpolation method called kriging – but they do not apply it to the temperature data itself (which would be similar to what GISS does), but to the difference between satellite and ground data. So they produce a hybrid temperature field. This consists of the surface data where they exist. But in the data gaps, it consists of satellite data that have been converted to near-surface temperatures, where the difference between the two is determined by a kriging interpolation from the edges. As this is redone for each new month, a possible drift of the satellite data is no longer an issue.
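The idea of interpolating the difference rather than the temperature itself can be sketched in a few lines. This is a deliberately simplified illustration and not Cowtan and Way's code: it works in one dimension, uses inverse-distance weighting as a stand-in for kriging, and all numbers, as well as the names `interpolate` and `hybrid_field`, are assumptions made for the example.

```python
def interpolate(x, known):
    """Inverse-distance-squared estimate at x from (position, value) pairs.

    A simple stand-in for kriging; x must not coincide with a known position.
    """
    num = 0.0
    den = 0.0
    for pos, val in known:
        weight = 1.0 / (x - pos) ** 2
        num += weight * val
        den += weight
    return num / den

def hybrid_field(positions, surface, satellite):
    """Keep surface values where present; elsewhere use satellite + interpolated offset."""
    offsets = [(p, s - t)
               for p, s, t in zip(positions, surface, satellite)
               if s is not None]
    field = []
    for p, s, t in zip(positions, surface, satellite):
        if s is not None:
            field.append(s)
        else:
            field.append(t + interpolate(p, offsets))
    return field

# Made-up values for a single month; None marks a gap in the surface data.
positions = [0, 1, 2, 3, 4, 5]
surface = [0.2, 0.3, None, None, 0.6, 0.5]
satellite = [0.1, 0.25, 0.5, 0.7, 0.45, 0.4]

hybrid = hybrid_field(positions, surface, satellite)
# A constant bias in the satellite record for this month cancels out.
biased = [t + 0.3 for t in satellite]
hybrid_biased = hybrid_field(positions, surface, biased)
```

Because the offset is re-estimated from the surface data every month, shifting all satellite values by the same amount leaves the hybrid field unchanged in this sketch, which is the sense in which a slow drift stops mattering.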

Prerequisite for success is, of course, that this difference is sufficiently smooth, i.e. has no strong small-scale structure. This can be tested on artificially generated data gaps, in places where one knows the actual surface temperature values but holds them back in the calculation. Cowtan and Way perform extensive validation tests, which demonstrate that their hybrid method provides significantly better results than a normal interpolation on the surface data as done by GISS.
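A hold-out test of this kind can be sketched as follows. It is a generic leave-one-out comparison, not the validation procedure of the paper: the two predictors (`nearest_neighbour` and `overall_mean`) and the smooth synthetic field are assumptions chosen only to show how two gap-filling methods would be ranked by their error on withheld values.

```python
import math

def leave_one_out_rmse(points, predict):
    """Hide each (position, value) in turn, predict it from the rest, return the RMSE."""
    squared_errors = []
    for i, (x, actual) in enumerate(points):
        known = points[:i] + points[i + 1:]
        squared_errors.append((predict(x, known) - actual) ** 2)
    return math.sqrt(sum(squared_errors) / len(squared_errors))

def nearest_neighbour(x, known):
    """Value of the closest remaining point."""
    return min(known, key=lambda pv: abs(pv[0] - x))[1]

def overall_mean(x, known):
    """Ignore position and return the mean of the remaining values."""
    return sum(v for _, v in known) / len(known)

# Hypothetical smooth field: value rises by 0.1 per unit of distance.
points = [(float(p), 0.1 * p) for p in range(6)]

rmse_nearest = leave_one_out_rmse(points, nearest_neighbour)
rmse_mean = leave_one_out_rmse(points, overall_mean)
print(f"nearest neighbour: {rmse_nearest:.3f}, overall mean: {rmse_mean:.3f}")
```

The method with the smaller error on the withheld values is the one that fills gaps better for this particular field; on a field with strong small-scale structure the ranking could differ.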

The surprising result

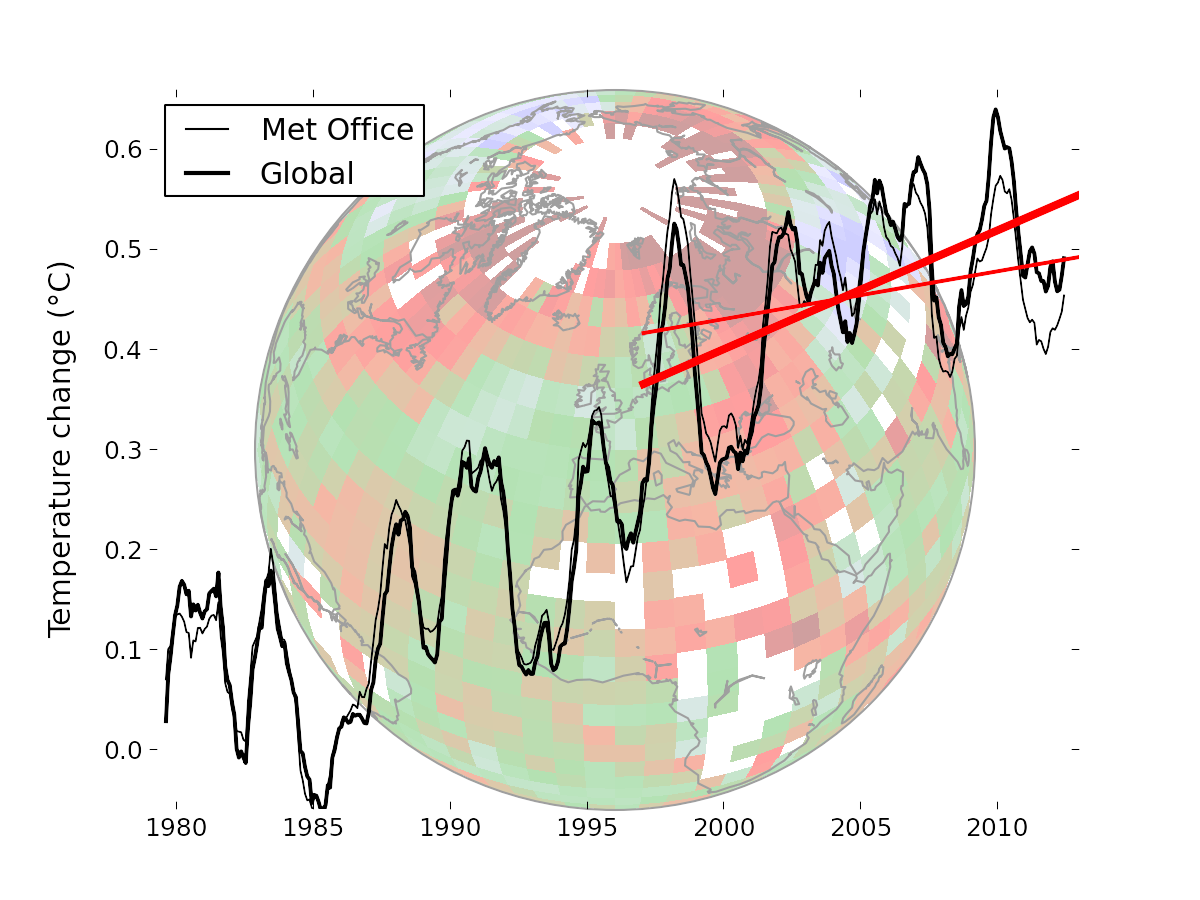

Cowtan and Way apply their method to the HadCRUT4 data, which are state-of-the-art except for their treatment of data gaps. For 1997-2012 these data show a relatively small warming trend of only 0.05 °C per decade – which has often been misleadingly called a “warming pause”. The new IPCC report writes:

Due to natural variability, trends based on short records are very sensitive to the beginning and end dates and do not in general reflect long-term climate trends. As one example, the rate of warming over the past 15 years (1998–2012; 0.05 [–0.05 to +0.15] °C per decade), which begins with a strong El Niño, is smaller than the rate calculated since 1951 (1951–2012; 0.12 [0.08 to 0.14] °C per decade).

But after filling the data gaps this trend is 0.12 °C per decade and thus exactly equal to the long-term trend mentioned by the IPCC.
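For readers who want to see how a figure in °C per decade is obtained from a series of annual values, here is a minimal sketch. It assumes an ordinary least-squares fit, which is a common choice but not necessarily the exact procedure behind the numbers quoted above, and it runs on synthetic values rather than on HadCRUT4 or the gap-filled data. The function name `trend_per_decade` is hypothetical.

```python
def trend_per_decade(years, values):
    """Ordinary least-squares slope, converted from per-year to per-decade."""
    n = len(years)
    mean_year = sum(years) / n
    mean_value = sum(values) / n
    covariance = sum((y - mean_year) * (v - mean_value)
                     for y, v in zip(years, values))
    variance = sum((y - mean_year) ** 2 for y in years)
    return 10.0 * covariance / variance

# Synthetic anomalies rising by exactly 0.012 deg C per year (not real data).
years = list(range(1997, 2013))
anomalies = [0.40 + 0.012 * (y - 1997) for y in years]

trend = trend_per_decade(years, anomalies)
print(f"{trend:.2f} deg C per decade")
```

With real data the fitted slope depends strongly on the chosen start and end years, which is the sensitivity the IPCC passage warns about.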

The corrected data (bold lines) are shown in the graph compared to the uncorrected ones (thin lines). The temperatures of the last three years have become a little warmer, the year 1998 a little cooler.

The trend of 0.12 °C per decade is at first surprising, because one might have expected the trend after gap filling to be close to that of the GISS data, i.e. 0.08 °C per decade. Cowtan and Way also investigated that difference. It is due to the fact that NASA has not yet implemented an improvement of sea surface temperature data which was introduced last year in the HadCRUT data (that was the transition from the HadSST2 to the HadSST3 data – the details can be found e.g. here and here). The authors explain this in more detail in their extensive background material. Applying the correction of ocean temperatures to the NASA data, their trend becomes 0.10 °C per decade, very close to the new optimal reconstruction.

Conclusion

The authors write in their introduction:

While short term trends are generally treated with a suitable level of caution by specialists in the field, they feature significantly in the public discourse on climate change.

This is all too true. A media analysis has shown that at least in the U.S., about half of all reports about the new IPCC report mention the issue of a “warming pause”, even though it plays a very minor role in the conclusions of the IPCC. Often the tenor was that the alleged “pause” raises some doubts about global warming and the warnings of the IPCC. We knew about the study of Cowtan & Way for a long time, and in the face of such media reporting it is sometimes not easy for researchers to keep such information to themselves. But I respect the attitude of the authors to only go public with their results once they’ve been published in the scientific literature. This is a good principle that I have followed with my own work as well.

The public debate about the alleged “warming pause” was misguided from the outset, because far too much was read into a cherry-picked short-term trend. Now this debate has become completely baseless, because the trend of the last 15 or 16 years is nothing unusual – even despite the record El Niño year at the beginning of the period. It is still a quarter less than the warming trend since 1980, which is 0.16 °C per decade. But that’s not surprising when one starts with an extreme El Niño and ends with persistent La Niña conditions, and is also running through a particularly deep and prolonged solar minimum in the second half. As we often said, all this is within the usual variability around the long-term global warming trend and no cause for excited over-interpretation.

Jim @ 151 and Hank @ 148, also not to mention virtually all the arable land in Canada is in production, and if we were growing wheat and corn in Canada rather than in the Midwest US we in the US wouldn’t be growing our own food. Is there a problem with that?

Chuckle. Nope, just commenting that people do still forget that Mercator’s projection is not an accurate representation of the surface area of the planet. Did WTF correct that misapprehension about the spacing of the data points?

Who is responsible for putting some pressure on countries such as China to reduce their emissions? Governments should develop mandatory standards for imports from those countries.

My latest post is now a complete reply to Curry and also Lucia. For the people who followed the discussion here, it does not contain many new ideas, however.

To the many questions about Arctic accuracy, it would be worth reading the paper on the cross-validation, which as Robert Way commented includes Arctic buoys in the middle of the region. They have done an excellent job on cross-validating their approaches.

Satellite trends (and any biases in them) aren’t relevant, as they use the satellite data patterns for each month individually to derive the satellite/surface relationship. Any satellite bias will drop out with the constant recalibration.

Roger Lambert – Regarding stoichiometry and the temperature change, atmospheric temperature represents perhaps 2.3% of the energy in the climate. I expect that the C&W estimates, which are within the 95% range of HadCRUT4, are far smaller than the uncertainties in ocean heat content.

—

To the authors, Way and Cowtan – I note that the trend difference for the short term (since 1998, which is of course statistically insignificant) appears to be due both to 2009-2013 increases above HadCRUT4 _and_ to a reduced excursion during the 1998 El Nino. Would it be correct to conclude that your estimate of global temperatures is less sensitive to ENSO than HadCRUT4?

> all this is within the usual variability

> around the long-term global warming trend

> and no cause for excited over-interpretation.

Are there any data outside the physics — phenology, breeding times, plankton bloom observations (handwaving frantically), maybe sound transmission across long distance in thermal layers — anything that would be expected to -amplify- a real warming signal but not noise? In the temperate zones, the phenology work suggests that nature’s a better detector of temperature changes than the instruments and statistics so far.

Hank Roberts, do you know of any quantitative estimates of the temperature trend based on phenological data? I would be very much interested.

Hank Roberts:

“Nope, just commenting that people do still forget that Mercator’s projection is not an accurate representation of the surface area of the planet.”

Poor Mercator. People also forget that Mercator’s projection was explicitly developed for use by mariners, and he was well aware of the scaling issue. The benefits for navigation at sea far outweighed the scaling issue which is why it became the standard projection for so long …

Victor Venema,

Of course, phenological studies are local. Nonetheless here is one:

http://arnoldia.arboretum.harvard.edu/pdf/articles/2007-65-2-climate-change-and-cherry-tree-blossom-festivals-in-japan.pdf

for Victor Venema — I’m an amateur and reader, so the best answer I can give you is “Yes, and here’s how I’d look for that”

https://www.google.com/search?q=estimates+of+the+temperature+trend+based+on+phenological+data

and

http://scholar.google.com/scholar?q=estimates+of+the+temperature+trend+based+on+phenological+data

This won’t be the global annual average temperature trend.

All phenology is local.

There’s at least one RealClimate discussion of the subject; the search box (upper right) will find “phenology”

As a scientist with an Ivy League Ph.D. I don’t care much about credentials and they do not play a role when I act as a peer reviewer or editor. In order to pass peer review, one needs to have spent quite a lot of time reading the literature to place a study in context. So, if scientists who have not published much in a field do their homework on the literature and come up with an important analysis of data, more power to them. This contrasts with bloggers and critics who have a poor understanding of scientific research, don’t know the relevant literature and usually are not competent to criticize the methods.

According to Google News, this story is being largely ignored in the media.

here’s another:

Environ. Res. Lett. 8 (2013) 035036 (12pp) doi:10.1088/1748-9326/8/3/035036

Shifts in Arctic phenology in response to climate and anthropogenic factors as detected from multiple satellite time series

Heqing Zeng1,2, Gensuo Jia1 and Bruce C Forbes3

http://iopscience.iop.org/1748-9326/8/3/035036/pdf/1748-9326_8_3_035036.pdf

(focusing more on what can be derived from satellite work, noting that phenology is local, and trying to make sense of the local data, which is highly variable — some of that local variation would be “noise” and some would be attributable to the site’s location/elevation/land use/history)

I’m posting what I find hoping to tempt some scientist who knows more about this to let that trickle down from her brain through her fingertips onto the screen here :-)

Thanks for the information on phenology. Looks like something I should look into.

In Germany, this story is covered by three main newspapers. Sueddeutsche Zeitung, TAZ, Spiegel Online (all in German).

The article in Spiegel Online by the infamous Axel Bojanowski is so bad that I gave the link a NoFollow tag in my post; normally I only NoFollow WUWT. One of the main climate blogs, PrimaKlima, just had the headline that Spiegel had written a balanced and interesting article on climate. That is news, in the case of Spiegel.

Whilst trying to bear in mind Stefan’s warning not to “over-interpret short-term trends, one way or the other”, I can’t help musing over the following:

Foster and Rahmstorf (2011) published a paper in Environmental Research Letters in which they largely removed the influence of ENSO, solar variations and volcanic eruptions from the five main global temperature series (three surface and two satellite) from 1979 onwards. The results, of course, have already shown that “the pause” is non-existent, see: http://www.skepticalscience.com/pics/FR11_Figure8.jpg.

However, one of the slightly surprising aspects of that Foster and Rahmstorf graph is the relentlessly linear nature of the trend in global temperatures that it shows since 1979, in spite of increasing levels of GHGs in the atmosphere.

Cowtan and Way’s contribution appears to be additional to that revealed by Foster and Rahmstorf. What might be the result of applying the Cowtan and Way methodology to HADCRUT4 AFTER its modification by the Foster and Rahmstorf method? Might it be that it would then show global warming, as expressed in the surface temperature data, to be accelerating?

BillD (#162): I would think that as an expert reviewer you can detect poor science, and thus do not have to worry about the author’s credentials. For a non-scientist trying to read a scientific paper, do you think that I should look up the background of the authors? I personally have seen a lot of climate misinformation from qualified scientists who are outside of the climate field. Or do you think I should only check to see if the paper is in a decent journal, and trust the peer review process? I am trying to learn how to evaluate scientific information from the perspective of a limited science background, and appreciate any help in this area.

> Cowtan and Way’s contribution appears to be additional

But if you look back at the beginning, you’ll see it’s not:

Their contribution points out that the appearance of a “hiatus” is over-interpretation of short term noise, and that better analysis reduces the noise level so the signal is clearer. And lo, the signal is the same, not different.

Wiggles “appear to be” because we over-interpret what we see.

That’s why statistics. The long-term global warming trend is.

#166 Slioch,

This is a Foster and Rahmstorf-style analysis (applying ENSO, solar, volcanic, etc) to Cowtan & Way’s hybrid correction:

http://contextearth.com/2013/11/19/csalt-ju-jutsu/

Amusing that Inspector McIntyre raises suspicions on C&W but it backfires on him as the jigsaw pieces fit tightly together. A slight recent cooling in comparison to the model is made up by the abnormally high temperatures in the Arctic. C&W reveal this correction and science marches forward.

Hank #167 – I don’t think that quote provides the information you attribute to it.

Foster and Rahmstorf removed the effect of ENSO from the temperature records. During La Nina episodes more heat than normal is absorbed into the oceans. Taking that into account in adjusting the temperature record results in a higher average global surface temperature in the record than formerly. Similarly, taking into account that the Arctic has warmed more rapidly than other regions results in a higher average global surface temperature in the record than formerly. The two phenomena, La Nina and Arctic warming, are not the same and it therefore appears that their effects on adjustments to the temperature series are additive.

#166 Slioch,

Bear in mind that although global emissions rose near-exponentially to the mid-1970s, the rise from then to c. 2000 was not *that* great – the West finished industrializing, oil crises limited consumption rises, and then the USSR collapsed. So to an approximation, the increase in CO2-forcing was linear from perhaps 1975-2000, after which China started on the path of digging Australia up and burning it.

Given the delays in the system, it follows that you’d expect a near-linear trend from 1980-2005 at least. All to a first approximation, of course.

Stefan: “The public debate about the alleged “warming pause” was misguided from the outset, because far too much was read into a cherry-picked short-term trend. Now this debate has become completely baseless, because the trend of the last 15 or 16 years is nothing unusual – even despite the record El Niño year at the beginning of the period.”

OK, well that’s good then, but it does NOT change the fact that at the release of the AR5 WGI a massive coordinated ‘marketing campaign’ [by rent seekers and special interest groups] was implemented globally that focused solely on “the IPCC confirms the pause”.

Sorry but Stefan, the billions of people exposed to that “public opinion making campaign” are not visiting this site now – so whether the “debate was baseless” is utterly irrelevant here, it was still “successful”. As a Public Policy issue it still has legs. Being “right” is one thing .. winning the minds of the Public is another matter entirely. In this regard the UNFCCC, the IPCC and all Climate Scientists have missed the boat here and failed to communicate the actual REALITY.

Also, if this “arctic region” error in surface temps over the last 15 years is correct, then what is being done about all the current figures in AR5, which are not correct going all the way back to whenever in your GCM models? Clearly, unless I am making a serious error here, if this paper is correct then every estimate of past Global mean avg surface temps is therefore incorrect. That is not a complaint about past errors, only a cry from the wilderness that Global Temps must have been significantly understated all the time, including the latest “data summaries” in the AR5 WGI barely 8 weeks old now.

To me this is a PR disaster of global proportions, not a reason to be chipper and smug about being “right”. Yes? No? thx Sean

@169 “after which China started on the path of digging Australia up and burning it.” excuse me, as an aussie, i just had to laugh out loud at that. :) Well damn it, at least we have the cleanest burning coal and NG on the planet. :)

@154 Alfred re “some pressure on countries such as China to reduce their emissions?” Whilst I understand the concern here I think it’s unfair, biased, and misplaced considering the big picture over time.

A rather large % of their CO2e growth in the last 2 decades has come from being the global manufacturer for OECD nations. Yet OECD emissions have not significantly reduced in relative terms to China’s increase.

and the real crux of this issue imho is about 1850 to now and the total cumulative carbon budget used up. [is it over 65% of total budget already used before 450ppm +3-4C?]

China, India, Africa & sth america did not contribute any significant amount of CO2e relative to nth america, europe, russia, japan, korea, australia etc have contributed to date. Sure the reality of now needs to be rationally dealt with, but this should not be done by ignoring History either. imho, don’t you think? these matters seem far harder to work out than the science itself.

> Clearly, unless I am making a serious error here,

> if this paper is correct then every estimate of past

> Global mean avg surface temps is therefore incorrect.

Seems to me you’re making a serious error here.

As I read what’s said above:

They improved the accuracy of some data points in the Arctic.

The global average for those years calculated using the improved data remains within the gray fuzzy uncertainty band drawn around the long term trend line. The trend is unchanged by adding this improved data point. The trend is unchanged using the data points over the last fifteen years. No lurch, no hiatus; wiggles.

Note this also doesn’t mean there’s a ruler-straight line inside. The uncertainty is shown by that gray fuzzy band, as best we can tell — that’s the measure of the uncertainty.

Robert already commented on this but I have the same question, where will we be if China and India don’t (or do) clean up their carbon dioxide issues? http://savetherainforest.com/pollution-and-politics-in-china/

And what about the US? We’re currently second to China in our CO2 emissions. Are we already past the point of no return or can we correct the damage done?

> Clearly, unless I am making a serious error here,

> if this paper is correct then every estimate of past

> Global mean avg surface temps is therefore incorrect.

Beyond what HR said, I would add that if the Arctic was more stable in the past and tracked what the rest of the world was doing (stationary scaling implied) then the correction would not be needed.

In fact, what we are seeing is a subtle divergence between model and data that has not been seen in the past. I created a model called CSALT which is based on applying variational principles wrt thermodynamic parameters, and this can detect these subtle changes across the historical records. This link shows how it is used with Cowtan and Way’s hybrid correction:

http://contextearth.com/2013/11/19/csalt-with-cw-hybrid/

BTW, this model was originally inspired by the success of F&R and C&W and K&X (Kosaka and Xie) and others in creating simple models of the temperature time series.

#176–A nice source for up-to-date carbon emissions data is here:

http://www.globalcarbonatlas.org/?q=emissions

China accounted for 27% of world emissions last year; India, roughly one quarter of that. Call the two about one third of total emissions. Clearly, if they magically stopped emitting tomorrow, we’d still be in trouble climate-wise. (Perhaps slightly less trouble, but still not out of the soup.)

To match that imaginary reduction, the rest of the top 11 or so emitters would have to stop cold turkey as well. That ‘distinguished’ club includes (in descending order) the US, Russia, Japan, Germany, South Korea, Iran, Saudi Arabia, Canada and Indonesia.

From there, we’d need the other 204 identified emitters all to pony up and do their bit.

From this I conclude that there’s really no way out of everybody mitigating emissions. Yes, historical realities and present realities both need to be considered. The best compromise I know about is called “contraction and convergence.” See:

http://www.gci.org.uk/index.html

For “Robert” and “Jenna” —

Click the Start Here button, top of the page.

Hi, I haven’t read all the comments so maybe this has been discussed already.

The polar region in question represents about 3% of the globe’s area (from 70N to 90N seems to be in the ballpark). So if this region contributes an extra 0.04 degC/decade to the global avg, then the unobserved or “extra” warming in this region is given by 0.04/3% = 1.33 degC/decade. Maybe someone can comment on how likely this is?
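The 3% figure and the resulting regional trend can be checked with a short calculation. The sketch assumes a spherical Earth, for which the fraction of the surface poleward of a given latitude is (1 − sin(latitude))/2, and it takes the 0.04 degC/decade contribution from the comment above as given. The function name `cap_area_fraction` is made up for the example.

```python
import math

def cap_area_fraction(lat_deg):
    """Fraction of a sphere's surface lying poleward of lat_deg in one hemisphere."""
    return (1.0 - math.sin(math.radians(lat_deg))) / 2.0

fraction = cap_area_fraction(70.0)
# Regional trend needed for the cap alone to add 0.04 degC/decade to the global mean.
implied_trend = 0.04 / fraction
print(f"area fraction: {fraction:.4f}, implied regional trend: {implied_trend:.2f} degC/decade")
```

Under these assumptions the cap covers close to 3% of the globe and the implied extra regional warming is about 1.3 degC/decade, in line with the estimate in the comment. Whether that rate is plausible is a separate question that the calculation does not address.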

Phenology!

http://www.cbc.ca/news/technology/missing-sea-ice-data-found-in-crusty-canadian-algae-1.2432103

I think that in some ways, discussions of who is “responsible” for emissions and who needs to cut back are misguided. The reality is that the planet needs to develop a new, clean, renewable energy infrastructure. Whichever nation cracks that nut first is going to make a killing selling it to everyone else. This isn’t about cutting back so much as it is about realizing that this is a race to get where the world needs to go first.

@Hank #180:

Yeah, looks interesting, but I can only access the abstract and the SI. Like to see RC do a piece on this. The last sentence of the abstract says:

But I can’t find a graph of this anywhere. I can only assume it was a more gradual decline until 50 – 60 ya when the polar amplification really kicked in. Of course, the denial-o-sphere is getting great mileage out of that little breadcrumb, no doubt assuming that the 150 y decline is linear or something (in the absence of further detail).

I was with you up to the second step but you lost me on the third one. I didn’t look, but I’d expect one of the authors is flagged as the “correspondence author” — the one to contact.

Asking is better than assuming. Paywalls aren’t absolute barriers, just hurdles, for getting at most science.

http://www.research-in-germany.de/dachportal/en/Research-Landscape/News/2013/11/2013-11-18-arctic-algae–witnesses-of-sea-ice-decline.html

#175 #177 TY. I understand the trend, as before the ‘lower’ temp was still inside the bands too. I can also appreciate the issue of increasing arctic melts in the last 15 years especially being an indicator which was outside the prior global avg temp figures. What I do not get is that one day we wake up and realise/are told the arctic temps are being significantly underestimated, and we have very limited surface temp stations today to ensure the data sets have a decent input to begin with.

Given it was already known for decades that most warming will rapidly rise in the polar regions first, and given it’s already clear that is exactly what’s happening in the nth arctic circle land masses, I am totally surprised (naively probably) that across the arctic north pole there is such a huge gap in real time surface temp monitoring and an over-reliance on ‘estimating’ it using satellite and modeling.

That this “gap/under-estimate” actually makes a significant lift to avg global surface temps as a whole over the last 15 years, enough to make such a major shift in the graph’s red lines, is to me “startling”.

From a PR reputation pov I’d be embarrassed if things are so out of whack. ie if the new study becomes the “norm” and proven reliable. Given the “pause/hiatus” issue already, it simply makes the whole process dubious to the average person, and even more so to the anti-activists of how unreliable the “figures” are just released in Sept by the ipcc. See?

I am not ‘arguing’ the validity of the science, nor trends, nor AGW, nor issues that constrain certainty … but if anyone ever needed a professional Saatchi & Saatchi type Advertising Public Relations and Public Communications Dept it is the IPCC and Climate Scientists globally.

That Cowtan and Way are on Curry’s blog may be seen as a positive but I think it’s the most dangerous of things. The real world simply doesn’t operate like the ‘controlled accepted norms’ inside of academia and climate science circles. “Business” is very very different. Truth is irrelevant. Winning is all. cheers

According to the Arctic Pilot, a drop in air temperature indicates the presence of ice. So, isn’t there a danger that an extrapolation of sea surface temperatures to ice covered regions could overstate the actual temperature?

Andrew #171

Barton Paul Levenson provides a handy table, including ln[atmospheric CO2 concentration] (not emissions), since 1880 (though only to 2007) here: http://bartonpaullevenson.com/Correlation.html

Since 1880 the graph of ln[CO2] against time shows an acceleration, though it is more or less linear for the last few decades.

Sean said:

I would instead say it is subtly significant. The bump it provides may not even be 0.1C while the year-to-year fluctuations can easily be that much.

Curry right now is mocking it as being a “2.8% solution” while she should really know better than that. She should actually be in the business of teaching her online students that science often advances by making many little steps instead of huge breakthroughs.

The C&W Hybrid model is one of those missing little pieces of the larger jigsaw puzzle that not only seems to fit but provides valuable insight into what is actually happening the last few years — that the heat buildup is spatially arranged and it has shifted northward the last few years.

Blair Dowden @167

There seems to be an assumption that there should be some way a person with limited knowledge of a technical discipline can evaluate a scientific paper in that discipline without doing the work to be at least a journeyman.

There are many things I can do, and my personal history is an argument that some scientific generalists are left in the world. However, if someone presents me with a paper on the fine details of resolving the x-ray crystal structure of an enzyme and its impact on a metabolic pathway or drug binding, I’m going to step back from making a judgement about it myself. I cannot evaluate the math or the theory behind the Higgs boson. You might want to consider the same possibility- that unless you put the archetypical 10,000 hours in, you won’t have the ability. As we’ve said in our family, “that’s a wish you can’t have little bear”. (props to anyone who gets that kiddy lit reference)

Now, if a paper is important enough to me, I will do two things you haven’t considered yet, one of which probably isn’t available to you.

First, I will use google scholar to look up citations of the paper in question to see if the conclusions have been affirmed or challenged, or simply ignored. In the same light, depending on how recent the paper is you can look for review articles on the topic that cite the paper, and see what at least one expert says about the position of that paper in the general body of knowledge. I will NOT use blogs as a source for those opinions.

The other alternative I have, that you do not, is that being able to sign my name with Ph.D. and having a visible professional presence in the sciences under my real name, I can and do write to scientists whose work interests me, ask a respectful question and get a reply. I have done so recently with scientists involved in an aspect of climate change and one has kindly responded and I’m waiting on the other.

> I can and do write to scientists whose work interests me,

> ask a respectful question and get a reply.

This, done right, works even for those of us without PhDs.

My father taught his Biology 101 students how to do it (back

in the days of paper offprints and long distance phone calls).

The best summary advice I know about asking smart questions — written long ago — came, ironically, from that gun’totin’ climate’nialist ‘ibertarian ‘nix guy ESR:

How To Ask Questions The Smart Way – Catb.org

(If he’d applied his own methods, he’d know better about climate)

This approach works — whether you’re a gradeschooler or college faculty — if you ask with preparation.

As Dave123 says: be interested.

Show you’ve read some of the person’s work, and looked into the citing papers.

I think we’d see a lot more scientists responding in public forums, if those asking questions made that much effort first.

Dave123 @~190,

Thanks for that! It is exhausting trying to point out that expertise is, well, expert. Current New Yorker has a bit about Syrian blogger who says something similar about his (different line of country) work:

Also WebHub just before: “science often advances by making many little steps instead of huge breakthroughs”

Blair Dowden #167:

I’d echo Dave123’s advice, with the proviso that a non-expert should suspend acceptance of a newly-published finding until it moves toward the Einstein end of John Nielsen-Gammon’s Crackpot-Einstein Scale. That may take years, but often takes less time for surprising new results than for incremental contributions.

Re: evaluating scientific information, here’s an excerpt from Matt Strassler’s Of Particular Significance blog:

What Matt seems to be pointing out is that we all need a good B.S. detector! Read a ton of abstracts, read a couple of the highly cited papers all the way through. Even if you can’t follow all the math pay close attention to Analysis, Discussion, and/or Conclusions sections. A slight familiarization with the topic can oftentimes be enough to make your B.S. detector useable.

@190-194 all great comments and ideas. I’d echo the point that one doesn’t need a PhD to email questions to “expert” sources and get not only a reply but a very useful and genuine one, with extra resources offered. Sincerity, some humility, and respect are the key imho.

The tip from Dave “I will NOT use blogs as a source for those opinions.” is a no-brainer and should be followed. No matter how many PhDs, “fellows” or science degrees the Blog owner or contributors may ‘appear’ to have.

[realclimate being an excellent exception that proves the rule]

> Crackpot-Einstein scale

see also: John Baez’s Crackpot Index

Dave123 (#190) and Mal Adapted (#193), thanks for the advice. I have done the work to be a journeyman in climate science, but of course there are many other fields I know much less about. I understand that this paper is a piece in the puzzle of where the extra long wave radiation retained by increasing greenhouse gases has gone. I know that the paper has a very reasonable thesis that a method of estimating the Earth’s average temperature that leaves out the high Arctic will return too low a result. I have no ability to evaluate if the authors did their work correctly. While you gave good advice about contacting the author, learning more about how to calculate global average temperature is not a priority for me.

I tried to use ‘scientific meta-literacy’ to get a quick feel for how reliable the paper is. There is no revolutionary hypothesis, and it is published in a decent peer reviewed journal. But I did notice the lead author was from outside climate science, and in my experience that is often a problem. My opinion now is that the paper contains little actual climate science, meaning that it could be written knowing nothing about the greenhouse effect, or maybe even without knowing about the existence of the sun. Therefore an X-ray crystallographer may be well qualified to write such a paper, and bring with him some new ideas.

Perhaps more interesting is my response to the Simple Physics and Climate article here last week. I am interested in the physics of the greenhouse effect, so I tried to follow the related paper. I was fine until section 1.2 when it switched gears into a higher level of math. While I cannot claim to fully understand what they are doing, the assumptions being made just look wrong to me. I would write this off as my ignorance except for their result. Forgive my arrogance, but I have High Confidence that raising pCO2 from 280 ppm to 400 ppm will not give a forcing of 6.6 deg C before feedbacks. That rather contradicts the IPCC WG1 reports which give 1.2 deg C for a CO2 doubling. To explain the order of magnitude difference by invoking unspecified (negative!) feedbacks is absurd. So what am I supposed to do? I asked a question in the forum, but it did not get answered. I don’t feel qualified to contact the authors.

I see no reason to think that cosmic radiation has any significant effect on our short term climate, so the conclusions of the paper are not very surprising. But either the authors chose to use an inappropriate method for calculating the greenhouse forcing, or there is something seriously wrong with my understanding, and I would like to know which.
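For readers who want to check the scale of the numbers in this comment, here is a minimal sketch. It assumes the widely used simplified logarithmic expression for CO2 forcing (the coefficient 5.35 W/m² is an assumption brought in for illustration, not something stated in the comment or taken from the paper under discussion) and simply scales the 1.2 deg C per doubling figure quoted above by the number of doublings. The function names are hypothetical.

```python
import math


def co2_forcing_wm2(c0_ppm, c_ppm, alpha=5.35):
    """Simplified logarithmic CO2 forcing in W/m^2.

    alpha = 5.35 is an assumed coefficient from the common
    simplified expression; it is not taken from the comment.
    """
    return alpha * math.log(c_ppm / c0_ppm)


def no_feedback_warming(c0_ppm, c_ppm, per_doubling_c=1.2):
    """Scale the no-feedback warming per doubling (1.2 deg C, as quoted
    in the comment) by the number of CO2 doublings between c0 and c."""
    doublings = math.log(c_ppm / c0_ppm) / math.log(2.0)
    return per_doubling_c * doublings


if __name__ == "__main__":
    print(round(co2_forcing_wm2(280.0, 400.0), 2), "W/m^2")
    print(round(no_feedback_warming(280.0, 400.0), 2), "deg C before feedbacks")
```

Under these assumptions, going from 280 ppm to 400 ppm is roughly half a doubling, which gives a forcing of about 1.9 W/m² and a no-feedback warming of roughly 0.6 deg C. That is about an order of magnitude below the 6.6 deg C figure questioned above.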

Blair, to the extent I follow the “related paper” link, the article is for various reasons proposing a vastly over-simplified climate model with basically only one or two major positive feedbacks involved, particularly the increased height of the warmer troposphere. They are not saying that this is in any way a realistic model, afaics.

Blair, physicist John Baez said the same thing you did, that further explanation was needed to clarify that question.

(in that thread, where John Baez says: 13 Nov 2013 at 2:35 AM)

Perhaps someone will make the attempt.

The Cowtan & Way paper distinctly says the area they cover is 16% of the globe. That is the area that is missing data.

Thus an early comment here goes: “Remarkable, 50% of the global warming has been hiding out in the 16% of the Globe we can’t accurately monitor temperature. Of the 50% increase in warming how much of that was attributed to the Poles and how much from Africa?”

Later someone responds “I guess the Artic.” [Arctic] RC says? Nothing?

The Arctic & Antarctic circles each equal 4% of the globe’s surface area = 8%

Meaning that if 16% is actually the combined area covered by Cowtan and Way, this leaves 8% outside the Arctic/Antarctic circles. [no, I haven’t checked through the full paper to see how this is delineated by them – yet]
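The 4% figure can be checked with the standard formula for the area of a spherical cap: the fraction of a sphere's surface poleward of latitude φ is (1 − sin φ)/2. The sketch below is only an illustration. It assumes a perfectly spherical Earth and a polar circle at about 66.56°, and the function name is hypothetical.

```python
import math


def cap_fraction(lat_deg):
    """Fraction of a sphere's surface poleward of the given latitude.

    Assumes a perfect sphere: fraction = (1 - sin(lat)) / 2.
    """
    return (1.0 - math.sin(math.radians(lat_deg))) / 2.0


if __name__ == "__main__":
    polar_circle = 66.56  # assumed latitude of the Arctic/Antarctic circle
    one_cap = cap_fraction(polar_circle)
    print(f"one polar cap:  {100 * one_cap:.1f}% of the globe")
    print(f"both caps:      {100 * 2 * one_cap:.1f}% of the globe")
    print(f"poleward of 70: {100 * cap_fraction(70.0):.1f}% of the globe")
```

With these assumptions each polar circle encloses roughly 4.1% of the globe, so the two together come to a little over 8%, in line with the estimate above. The cap poleward of 70° is roughly 3.0%.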

So we now come to Judith Curry http://judithcurry.com/2013/11/19/the-2-8-effect/ She heads her ‘blog post’ about their paper as “The 2.8% effect”

She gets 2.8% by geography for the surface area of the Arctic Ocean. And mentions 70-90N as being the location of Arctic region studied.

OK, no problem there. Then Kevin Cowtan shows up and posts this: “Thank you for that thoughtful post.” and a few other things. http://judithcurry.com/2013/11/19/the-2-8-effect/#comment-415504

Does he mention the Blog title 2.8%? No.

But he does say this: “I for one haven’t found a good way of communicating this last issue to a lay audience.”

Plus this short note: http://judithcurry.com/2013/11/19/the-2-8-effect/#comment-415547

OK so what we have here is that within 6 days of this material being posted on RC, you have a whole thread on Curry, shared and cross-posted across multiple anti-science blog sites and news reports (possibly, or soon), that has EFFECTIVELY RE-FRAMED THE BASIC PARAMETERS OF THIS Science Paper into:

“Climate Scientists claim that new corrections to 2.8% of the globe’s surface increase Global Warming by 50% over the last 16 years”

And even the authors do not say a word about this…..? Question mark because I cannot be sure. Maybe they specifically address the specific area, but that is NOT what I am addressing here. It is the PUBLIC IMAGE out there in the Public Consciousness.

Whether C&W are right or frauds or geniuses, within a week the Scientists have lost control of this public discourse…. and have been misrepresented, ie ‘verballed’, by others as well as complimented at times.

More “twisting/reframing/distortion” goes like this:

Kevin Cowtan | November 19, 2013 at 5:54 pm |

No, length is only one of the problems. We shouldn’t be drawing conclusions from trends at all, unless we can isolate the contribution we are interested in and know it is linearly increasing.

Peter Davies | November 19, 2013 at 6:29 pm |

+1 ‘No conclusions should be made from trends at all’. As David Springer would say. Write that down. /end quote

Meanwhile on another Blog page http://judithcurry.com/2013/11/18/uncertainty-in-arctic-temperatures/#comment-415279

Robert Way says: “We have updated our FAQ to address several of the comments from here including the incorrect assertion that we included reanalysis into our reconstruction.”

FAQ says: Do you use any form of atmospheric reanalysis in your data?

Atmospheric reanalysis data are not used in our global temperature reconstructions. We use them for validation where observational information is limited, and for uncertainty estimation.

and

In our results we show that some atmospheric reanalysis products perform reasonably well in determining surface air temperature (SAT) in the Arctic and Antarctic similar to results shown by Screen and Simmonds (2011), Screen et al (2012) and Screen and Simmonds (2012).

http://www-users.york.ac.uk/~kdc3/papers/coverage2013/faq.html

Do they mention **the incorrect assertion** by Curry? No. Has it been “corrected” by Curry or all the rest? No.

Who would know if the explanation on the FAQ means “Atmospheric reanalysis data are not used in our global temperature reconstructions.” Yes, no, who knows?

Way persists with Curry: “Finally if you will be discussing our paper I was wondering if you could give your opinion on our cross-validation measures, our comparison with the IABP data, the tests with interpolating from SSTs versus land and the errors associated with leaving regions null (e.g. setting trends to global average).

I look forward to this continued discussion but I feel that these issues were not addressed last time so it would be worth discussing further.”

Well, thanks, that does me in. :) All the best RC.

I would also suggest, given the ‘pattern’ shown already, that Judith Curry is “playing them” with a thin veneer of ‘genuineness’… which sends them off (especially Cowtan) chasing more and more info down the rabbit hole.

http://en.wikipedia.org/wiki/What_the_Tortoise_Said_to_Achilles