Readers will be aware of the paper by Shaun Marcott and colleagues, published a couple of weeks ago in the journal Science. That paper sought to extend the global temperature record back over the entire Holocene period, i.e. just over 11 kyr back in time, something that had not really been attempted before. The paper got a fair amount of media coverage (see e.g. this article by Justin Gillis in the New York Times). Since then, a number of accusations from the usual suspects have been leveled against the authors and their study, and most of them are characteristically misleading. We are pleased to provide the authors’ response, below. Our view is that the results of the paper will stand the test of time, particularly regarding the small global temperature variations in the Holocene. If anything, early Holocene warmth might be overestimated in this study.

Update: Tamino has three excellent posts in which he shows why the Holocene reconstruction is very unlikely to be affected by possible discrepancies in the most recent (20th century) part of the record. The figure showing Holocene changes by latitude is particularly informative.

____________________________________________

Summary and FAQs related to the study by Marcott et al. (2013, Science)

Prepared by Shaun A. Marcott, Jeremy D. Shakun, Peter U. Clark, and Alan C. Mix

Primary results of study

Global Temperature Reconstruction: We combined published proxy temperature records from across the globe to develop regional and global temperature reconstructions spanning the past ~11,300 years with a resolution >300 yr; previous reconstructions of global and hemispheric temperatures primarily spanned the last one to two thousand years. To our knowledge, our work is the first attempt to quantify global temperature for the entire Holocene.

Structure of the Global and Regional Temperature Curves: We find that global temperature was relatively warm from approximately 10,000 to 5,000 years before present. Following this interval, global temperature decreased by approximately 0.7°C, culminating in the coolest temperatures of the Holocene around 200 years before present during what is commonly referred to as the Little Ice Age. The largest cooling occurred in the Northern Hemisphere.

Holocene Temperature Distribution: Based on comparison of the instrumental record of global temperature change with the distribution of Holocene global average temperatures from our paleo-reconstruction, we find that the decade 2000-2009 has probably not exceeded the warmest temperatures of the early Holocene, but is warmer than ~75% of all temperatures during the Holocene. In contrast, the decade 1900-1909 was cooler than ~95% of the Holocene. Therefore, we conclude that global temperature has risen from near the coldest to the warmest levels of the Holocene in the past century. Further, we compare the Holocene paleotemperature distribution with published temperature projections for 2100 CE, and find that these projections exceed the range of Holocene global average temperatures under all plausible emissions scenarios.

Frequently Asked Questions and Answers

Q: What is global temperature?

A: Global average surface temperature is perhaps the single most representative measure of a planet’s climate since it reflects how much heat is at the planet’s surface. Local temperature changes can differ markedly from the global average. One reason for this is that heat moves around with the winds and ocean currents, warming one region while cooling another, but these regional effects might not cause a significant change in the global average temperature. A second reason is that local feedbacks, such as changes in snow or vegetation cover that affect how a region reflects or absorbs sunlight, can cause large local temperature changes that are not mirrored in the global average. We therefore cannot rely on any single location as being representative of global temperature change. This is why our study includes data from around the world.

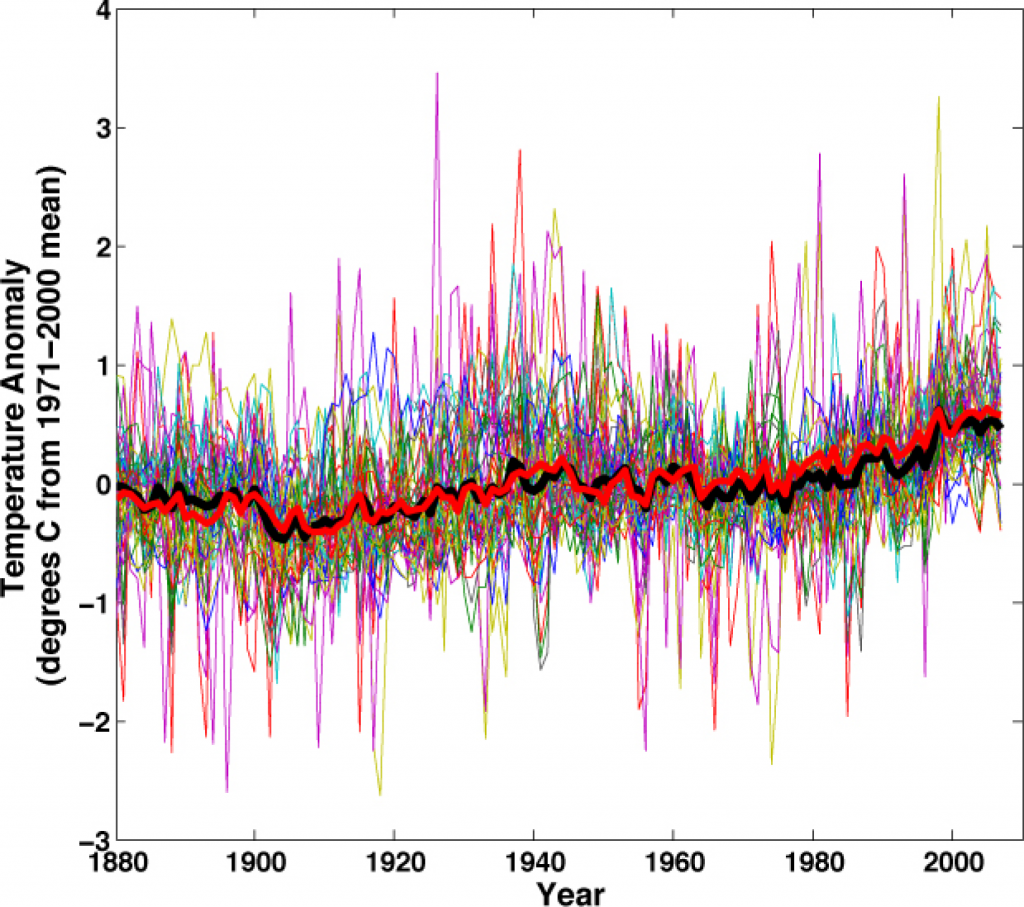

We can illustrate this concept with temperature anomaly data based on instrumental records for the past 130 years from the National Climatic Data Center (http://www.ncdc.noaa.gov/cmb-faq/anomalies.php#anomalies). Over this time interval, an increase in the global average temperature is documented by thermometer records, rising sea levels, retreating glaciers, and increasing ocean heat content, among other indicators. Yet if we plot temperature anomaly data since 1880 at the same locations as the 73 sites used in our paleotemperature study, we see that the data are scattered and the trend is unclear. When these same 73 historical temperature records are averaged together, we see a clear warming signal that is very similar to the global average documented from many more sites (Figure 1). Averaging reduces local noise and provides a clearer perspective on global climate.

Figure 1: Temperature anomaly data (thin colored lines) at the same locations as the 73 paleotemperature records used in Marcott et al. (2013), the average of these 73 temperature anomaly series (bold black line), and the global average temperature from the National Climatic Data Center blended land and ocean dataset (bold red line) (data from Smith et al., 2008).
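The effect of averaging can be sketched with synthetic data. The example below is only an illustration, not the analysis behind Figure 1: the trend of 0.006 °C per year, the local noise level of 0.8 °C, and the function names are all assumptions, and real station records have spatially correlated noise, so the improvement from averaging is less idealized in practice.

```python
import random
import statistics

def simulate_site_anomalies(n_sites=73, n_years=130, trend_per_year=0.006,
                            local_noise_sd=0.8, seed=0):
    """Return (global_signal, site_series): a shared linear trend plus
    independent local noise at each hypothetical site."""
    rng = random.Random(seed)
    global_signal = [trend_per_year * year for year in range(n_years)]
    site_series = [
        [value + rng.gauss(0.0, local_noise_sd) for value in global_signal]
        for _ in range(n_sites)
    ]
    return global_signal, site_series

def rms_error(series, reference):
    """Root-mean-square difference between a series and the reference signal."""
    squared = [(a - b) ** 2 for a, b in zip(series, reference)]
    return (sum(squared) / len(squared)) ** 0.5

global_signal, sites = simulate_site_anomalies()

# Average the 73 synthetic site series year by year.
stack = [statistics.fmean(values) for values in zip(*sites)]

single_site_error = rms_error(sites[0], global_signal)
stack_error = rms_error(stack, global_signal)
print(f"single site RMS error:     {single_site_error:.2f} °C")
print(f"73-site average RMS error: {stack_error:.2f} °C")
```

With independent noise, the error of the average shrinks roughly with the square root of the number of sites, which is why the averaged curve tracks the shared signal much more closely than any single site does.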

New Scientist magazine has an “app” that allows one to point-and-plot instrumental temperatures for any spot on the map to see how local temperature changes compare to the global average over the past century (http://warmingworld.newscientistapps.com/).

Q: How does one go about reconstructing temperatures in the past?

A: Changes in Earth’s temperature for the last ~160 years are determined from instrumental data, such as thermometers on the ground or, for more recent times, satellites looking down from space. Beyond about 160 years ago, we must turn to other methods that indirectly record temperature (called “proxies”) for reconstructing past temperatures. For example, tree rings, calibrated to temperature over the instrumental era, provide one way of determining temperatures in the past, but few trees extend beyond the past few centuries or millennia. To develop a longer record, we used primarily marine and terrestrial fossils, biomolecules, or isotopes that were recovered from ocean and lake sediments and ice cores. All of these proxies have been independently calibrated to provide reliable estimates of temperature.

Q: Did you collect and measure the ocean and land temperature data from all 73 sites?

A: No. All of the datasets were previously generated and published in peer-reviewed scientific literature by other researchers over the past 15 years. Most of these datasets are freely available at several World Data Centers (see links below); those not archived as such were graciously made available to us by the original authors. We assembled all these published data into an easily used format, and in some cases updated the calibration of older data using modern state-of-the-art calibrations. We made all the data available for download free-of-charge from the Science web site (see link below). Our primary contribution was to compile these local temperature records into “stacks” that reflect larger-scale changes in regional and global temperatures. We used methods that carefully consider potential sources of uncertainty in the data, including uncertainty in proxy calibration and in dating of the samples (see step-by-step methods below).

NOAA National Climate Data Center: http://www.ncdc.noaa.gov/paleo/paleo.html

PANGAEA: http://www.pangaea.de/

Holocene Datasets: http://www.sciencemag.org/content/339/6124/1198/suppl/DC1

Q: Why use marine and terrestrial archives to reconstruct global temperature when we have the ice cores from Greenland and Antarctica?

A: While we do use these ice cores in our study, they are limited to the polar regions and so give only a local or regional picture of temperature changes. Just as it would not be reasonable to use the recent instrumental temperature history from Greenland (for example) as being representative of the planet as a whole, one would similarly not use just a few ice cores from polar locations to reconstruct past temperature change for the entire planet.

Q: Why only look at temperatures over the last 11,300 years?

A: Our work was the second half of a two-part study assessing global temperature variations since the peak of the last Ice Age about 22,000 years ago. The first part reconstructed global temperature over the last deglaciation (22,000 to 11,300 years ago) (Shakun et al., 2012, Nature 484, 49-55; see also http://www.people.fas.harvard.edu/~shakun/FAQs.html), while our study focused on the current interglacial warm period (last 11,300 years), which is roughly the time span of developed human civilizations.

Q: Is your paleotemperature reconstruction consistent with reconstructions based on the tree-ring data and other archives of the past 2,000 years?

A: Yes, in the parts where our reconstruction contains sufficient data to be robust, and acknowledging its inherent smoothing. For example, our global temperature reconstruction from ~1500 to 100 years ago is indistinguishable (within its statistical uncertainty) from the Mann et al. (2008) reconstruction, which included many tree-ring based data. Both reconstructions document a cooling trend from a relatively warm interval (~1500 to 1000 years ago) to a cold interval (~500 to 100 years ago, approximately equivalent to the Little Ice Age).

Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?

A: Our global paleotemperature reconstruction includes a so-called “uptick” in temperatures during the 20th century. However, in the paper we make the point that this particular feature is of shorter duration than the inherent smoothing in our statistical averaging procedure, and that it is based on only a few available paleo-reconstructions of the type we used. Thus, the 20th century portion of our paleotemperature stack is not statistically robust, cannot be considered representative of global temperature changes, and therefore is not the basis of any of our conclusions. Our primary conclusions are based on a comparison of the longer term paleotemperature changes from our reconstruction with the well-documented temperature changes that have occurred over the last century, as documented by the instrumental record. Although not part of our study, high-resolution paleoclimate data from the past ~130 years have been compiled from various geological archives, and confirm the general features of the warming trend over this time interval (Anderson, D.M. et al., 2013, Geophysical Research Letters, v. 40, p. 189-193; http://www.agu.org/journals/pip/gl/2012GL054271-pip.pdf).

Q: Is the rate of global temperature rise over the last 100 years faster than at any time during the past 11,300 years?

A: Our study did not directly address this question because the paleotemperature records used in our study have a temporal resolution of ~120 years on average, which precludes us from examining variations in rates of change occurring within a century. Other factors also contribute to smoothing the proxy temperature signals contained in many of the records we used, such as organisms burrowing through deep-sea mud, and chronological uncertainties in the proxy records that tend to smooth the signals when compositing them into a globally averaged reconstruction. We showed that no temperature variability is preserved in our reconstruction at cycles shorter than 300 years, 50% is preserved at 1000-year time scales, and nearly all is preserved at 2000-year periods and longer. Our Monte-Carlo analysis accounts for these sources of uncertainty to yield a robust (albeit smoothed) global record. Any small “upticks” or “downticks” in temperature that last less than several hundred years in our compilation of paleoclimate data are probably not robust, as stated in the paper.
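One of the smoothing mechanisms mentioned above, chronological uncertainty, can be illustrated with a small sketch. It is not the transfer function reported in the paper, which was derived from synthetic data run through the full stacking procedure. It isolates a single effect: if a sinusoidal signal is averaged over many copies whose ages are shifted by Gaussian errors, the surviving amplitude is exp(-2π²σ²/P²), where P is the period and σ the age uncertainty. The value σ = 150 years and the function names are assumptions chosen only for illustration.

```python
import math
import random

def retained_fraction(period_yr, age_sd_yr):
    """Expected fraction of a sinusoid's amplitude that survives averaging
    over Gaussian age errors with standard deviation age_sd_yr."""
    return math.exp(-2.0 * (math.pi * age_sd_yr / period_yr) ** 2)

def simulated_retained_fraction(period_yr, age_sd_yr, n_draws=20000, seed=0):
    """Monte Carlo check: average a unit sinusoid at its peak over random age shifts."""
    rng = random.Random(seed)
    total = sum(
        math.cos(2.0 * math.pi * rng.gauss(0.0, age_sd_yr) / period_yr)
        for _ in range(n_draws)
    )
    return total / n_draws

AGE_SD_YR = 150.0  # assumed dating uncertainty, for illustration only

for period in (300, 1000, 2000):
    analytic = retained_fraction(period, AGE_SD_YR)
    simulated = simulated_retained_fraction(period, AGE_SD_YR)
    print(f"period {period:>4} yr: analytic {analytic:.2f}, simulated {simulated:.2f}")
```

The qualitative pattern is the point: short cycles are almost entirely averaged away while long cycles pass through nearly intact. Sampling resolution and sediment mixing add further smoothing that this sketch ignores.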

Q: How do you compare the Holocene temperatures to the modern instrumental data?

A: One of our primary conclusions is based on Figure 3 of the paper, which compares the magnitude of global warming seen in the instrumental temperature record of the past century to the full range of temperature variability over the entire Holocene based on our reconstruction. We conclude that the average temperature for 1900-1909 CE in the instrumental record was cooler than ~95% of the Holocene range of global temperatures, while the average temperature for 2000-2009 CE in the instrumental record was warmer than ~75% of the Holocene distribution. As described in the paper and its supplementary material, Figure 3 provides a reasonable assessment of the full range of Holocene global average temperatures, including an accounting for high-frequency changes that might have been damped out by the averaging procedure.
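The "warmer than ~75%" and "cooler than ~95%" statements are fractions of a distribution. A minimal sketch of that arithmetic follows; the anomaly values are hypothetical placeholders, not numbers from the paper, and `fraction_colder` is a name introduced here for illustration.

```python
def fraction_colder(holocene_anomalies, decade_anomaly):
    """Fraction of the Holocene temperature distribution that is colder
    than a given decadal-mean anomaly."""
    colder = sum(1 for value in holocene_anomalies if value < decade_anomaly)
    return colder / len(holocene_anomalies)

# Hypothetical anomalies in °C; placeholders only, not data from the study.
example_distribution = [-0.4, -0.3, -0.2, -0.1, 0.0, 0.05, 0.2, 0.3]

print(fraction_colder(example_distribution, 0.1))    # a warm decade
print(fraction_colder(example_distribution, -0.35))  # a cold decade
```

A decade that is colder than 95% of the distribution would return a value near 0.05 from this function, and one warmer than 75% of it would return a value near 0.75.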

Q: What about temperature projections for the future?

A: Our study used projections of future temperature published in the Fourth Assessment Report of the Intergovernmental Panel on Climate Change in 2007, which suggest that global temperature is likely to rise 1.1-6.4°C by the end of the century (relative to the late 20th century), depending on the magnitude of anthropogenic greenhouse gas emissions and the sensitivity of the climate to those emissions. Figure 3 in the paper compares these published projected temperatures from various emission scenarios to our assessment of the full distribution of Holocene temperatures. For example, a middle-of-the-road emission scenario (SRES A1B) projects global mean temperatures that will be well above the Holocene average by the year 2100 CE. Indeed, if any of the six emission scenarios considered by the IPCC and shown in Figure 3 is followed, future global average temperatures, as projected by modeling studies, will likely be well outside anything the Earth has experienced in the last 11,300 years.

Technical Questions and Answers:

Q. Why did you revise the age models of many of the published records that were used in your study?

A. The majority of the published records used in our study (93%) based their ages on radiocarbon dates. Radiocarbon is a naturally occurring isotope that is produced mainly in the upper atmosphere by cosmic rays. This form of carbon is then distributed around the world and incorporated into living things. Dating is based on the amount of this carbon left after radioactive decay. It has been known for several decades that radiocarbon years differ from true “calendar” years because the amount of radiocarbon produced in the atmosphere changes over time, as does the rate that carbon is exchanged between the ocean, atmosphere, and biosphere. This yields a bias in radiocarbon dates that must be corrected. Scientists have been able to determine the correction between radiocarbon years and true calendar year by dating samples of known age (such as tree samples dated by counting annual rings) and comparing the apparent radiocarbon age to the true age. Through many careful measurements of this sort, they have demonstrated that, in general, radiocarbon years become progressively “younger” than calendar years as one goes back through time. For example, the ring of a tree known to have grown 5700 years ago will have a radiocarbon age of ~5000 years, whereas one known to have grown 12,800 years ago will have a radiocarbon age of ~11,000 years.

For our paleotemperature study, all radiocarbon ages needed to be converted (or calibrated) to calendar ages in a consistent manner. Calibration methods have been improved and refined over the past few decades. Because our compilation included data published many years ago, some of the original publications used radiocarbon calibration systems that are now obsolete. To provide a consistent chronology based on the best current information, we thus recalibrated all published radiocarbon ages with Calib 6.0.1 software (using the databases INTCAL09 for land samples or MARINE09 for ocean samples) and its state-of-the-art protocol for site-specific locations and materials. This software is freely available for online use at http://calib.qub.ac.uk/calib/.

By convention, radiocarbon dates are recorded as years before present (BP). BP is universally defined as years before 1950 CE, because after that time the Earth’s atmosphere became contaminated with artificial radiocarbon produced as a by-product of nuclear bomb tests. As a result, radiocarbon dates on intervals younger than 1950 are not useful for providing chronologic control in our study.
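The BP convention amounts to a simple offset from 1950 CE. The helper names below are introduced only for illustration, and the sketch ignores the absence of a year zero in the BCE/CE calendar, so results for ages older than 1950 BP should be read as astronomical year numbers.

```python
BP_REFERENCE_YEAR_CE = 1950  # "present" in the BP convention

def bp_to_ce(years_bp):
    """Convert years before present (BP) to a calendar year CE."""
    return BP_REFERENCE_YEAR_CE - years_bp

def ce_to_bp(year_ce):
    """Convert a calendar year CE to years before present (BP)."""
    return BP_REFERENCE_YEAR_CE - year_ce

print(bp_to_ce(0))     # core top assumed at 0 BP
print(bp_to_ce(60))    # 60 yr BP
print(ce_to_bp(1750))  # a year within the Little Ice Age
```

For example, 60 yr BP corresponds to 1890 CE, and 1750 CE corresponds to 200 yr BP.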

After recalibrating all radiocarbon control points to make them internally consistent and in compliance with the scientific state-of-the-art understanding, we constructed age models for each sediment core based on the depth of each of the calibrated radiocarbon ages, assuming linear interpolation between dated levels in the core, and statistical analysis that quantifies the uncertainty of ages between the dated levels. In geologic studies it is quite common that the youngest surface of a sediment core is not dated by radiocarbon, either because the top is disturbed by living organisms or during the coring process. Moreover, within the past hundred years before 1950 CE, radiocarbon dates are not very precise chronometers, because changes in radiocarbon production rate have by coincidence roughly compensated for fixed decay rates. For these reasons, and unless otherwise indicated, we followed the common practice of assuming an age of 0 BP for the marine core tops.
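The linear-interpolation part of such an age model can be sketched as follows. The depths and ages are hypothetical, the function name is introduced here for illustration, and the statistical treatment of age uncertainty between dated levels is omitted.

```python
from bisect import bisect_right

def interpolate_age(depth_cm, control_depths_cm, control_ages_bp):
    """Calendar age (yr BP) at a given depth, by linear interpolation
    between dated levels. Control depths must be in increasing order."""
    if not control_depths_cm[0] <= depth_cm <= control_depths_cm[-1]:
        raise ValueError("depth lies outside the dated interval")
    i = bisect_right(control_depths_cm, depth_cm) - 1
    if i == len(control_depths_cm) - 1:
        return float(control_ages_bp[-1])  # exactly at the deepest dated level
    d0, d1 = control_depths_cm[i], control_depths_cm[i + 1]
    a0, a1 = control_ages_bp[i], control_ages_bp[i + 1]
    return a0 + (a1 - a0) * (depth_cm - d0) / (d1 - d0)

# Hypothetical core: the core top is assumed to be 0 BP, and the deeper
# control points stand in for calibrated radiocarbon ages.
depths_cm = [0.0, 50.0, 120.0, 200.0]
ages_bp = [0.0, 1500.0, 4200.0, 9000.0]

print(interpolate_age(25.0, depths_cm, ages_bp))  # 750.0
print(interpolate_age(85.0, depths_cm, ages_bp))  # 2850.0
```

The choice of 0 BP for the core top matters here because it anchors the youngest segment of the interpolation; a different core-top age would shift every sample above the first dated level.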

Q: Are the proxy records seasonally biased?

A: Maybe. We cannot exclude the possibility that some of the paleotemperature records are biased toward a particular season rather than recording true annual mean temperatures. For instance, high-latitude proxies based on short-lived plants or other organisms may record the temperature during the warmer and sunnier summer months when the organisms grow most rapidly. As stated in the paper, such an effect could impact our paleo-reconstruction. For example, the long-term cooling in our global paleotemperature reconstruction comes primarily from Northern Hemisphere high-latitude marine records, whereas tropical and Southern Hemisphere trends were considerably smaller. This northern cooling in the paleotemperature data may be a response to a long-term decline in summer insolation associated with variations in Earth’s orbit, and this implies that the paleotemperature proxies here may be biased to the summer season. A summer cooling trend through Holocene time, if driven by orbitally modulated seasonal insolation, might be partially canceled out by winter warming due to the well-known orbitally driven rise in Northern Hemisphere winter insolation through Holocene time. Summer-biased proxies would not record this averaging of the seasons. It is not currently possible to quantify this seasonal effect in the reconstructions. Qualitatively, however, we expect that an unbiased recorder of the annual average would show that the northern latitudes might not have cooled as much as seen in our reconstruction. This implies that the range of Holocene annual-average temperatures might have been smaller in the Northern Hemisphere than the proxy data suggest, making the observed historical temperature averages for 2000-2009 CE, obtained from instrumental records, even more unusual with respect to the full distribution of Holocene global-average temperatures.

Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?

A: Here we elaborate on our short answer to this question above. We concluded in the published paper that “Without filling data gaps, our Standard5×5 reconstruction (Figure 1A) exhibits 0.6°C greater warming over the past ~60 yr B.P. (1890 to 1950 CE) than our equivalent infilled 5° × 5° area-weighted mean stack (Figure 1, C and D). However, considering the temporal resolution of our data set and the small number of records that cover this interval (Figure 1G), this difference is probably not robust.” This statement follows from multiple lines of evidence that are presented in the paper and the supplementary information: (1) the different methods that we tested for generating a reconstruction produce different results in this youngest interval, whereas before this interval, the different methods of calculating the stacks are nearly identical (Figure 1D), (2) the median resolution of the datasets (120 years) is too low to statistically resolve such an event, (3) the smoothing presented in the online supplement results in variations shorter than 300 yrs not being interpretable, and (4) the small number of datasets that extend into the 20th century (Figure 1G) is insufficient to reconstruct a statistically robust global signal, showing that there is a considerable reduction in the correlation of Monte Carlo reconstructions with a known (synthetic) input global signal when the number of data series in the reconstruction is this small (Figure S13).

Q: How did you create the Holocene paleotemperature stacks?

A: We followed these steps in creating the Holocene paleotemperature stacks:

1. Compiled 73 medium-to-high resolution calibrated proxy temperature records spanning much or all of the Holocene.

2. Calibrated all radiocarbon ages for consistency using the latest and most precise calibration software (Calib 6.0.1 using INTCAL09 (terrestrial) or MARINE09 (oceanic) and its protocol for the site-specific locations and materials) so that all radiocarbon-based records had a consistent chronology based on the best current information. This procedure updates previously published chronologies, which were based on a variety of now-obsolete and inconsistent calibration methods.

3. Where applicable, recalibrated paleotemperature proxy data based on alkenones and TEX86 using consistent calibration equations specific to each of the proxy types.

4. Used a Monte Carlo analysis to generate 1000 realizations of each proxy record, linearly interpolated to constant time spacing, perturbing them with analytical uncertainties in the age model and temperature estimates, including inflation of age uncertainties between dated intervals. This procedure results in an unbiased assessment of the impact of such uncertainties on the final composite.

5. Referenced each proxy record realization as an anomaly relative to its mean value between 4500 and 5500 years Before Present (the common interval of overlap among all records; Before Present, or BP, is defined by standard practice as time before 1950 CE).

6. Averaged the first realization of each of the 73 records, and then the second realization of each, then the third, the fourth, and so on, to form 1000 realizations of the global or regional temperature stacks.

7. Derived the mean temperature and standard deviation from the 1000 simulations of the global temperature stack.

8. Repeated this procedure using several different area-weighting schemes and data subsets to test the sensitivity of the reconstruction to potential spatial and proxy biases in the dataset.

9. Mean-shifted the global temperature reconstructions to have the same average as the Mann et al. (2008) CRU-EIV temperature reconstruction over the interval 510-1450 years Before Present. Since the CRU-EIV reconstruction is referenced as temperature anomalies from the 1961-1990 CE instrumental mean global temperature, the Holocene reconstructions are now also effectively referenced as anomalies from the 1961-1990 CE mean.

10. Estimated how much higher frequency (decade-to-century scale) variability is plausibly missing from the Holocene reconstruction by calculating attenuation as a function of frequency in synthetic data processed with the Monte-Carlo stacking procedure, and by statistically comparing the amount of temperature variance the global stack contains as a function of frequency to the amount contained in the CRU-EIV reconstruction. Added this missing variability to the Holocene reconstruction as red noise.

11. Pooled all of the Holocene global temperature anomalies into a single histogram, showing the distribution of global temperature anomalies during the Holocene, including the decadal-to-century-scale high-frequency variability that the Monte-Carlo procedure may have smoothed from the record (largely from the accounting for chronologic uncertainties).

12. Compared the histogram of Holocene paleotemperatures to the instrumental global temperature anomalies during the decades 1900-1909 CE and 2000-2009 CE. Determined the fraction of the Holocene temperature anomalies colder than 1900-1909 CE and 2000-2009 CE.

13. Compared global temperature projections for 2100 CE from the Fourth Assessment Report of the Intergovernmental Panel on Climate Change for various emission scenarios.

14. Evaluated the impact of potential sources of uncertainty and smoothing in the Monte-Carlo procedure, as a guide for future experimental design to refine such analyses.
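Steps 4 through 7 above can be sketched in a few dozen lines. This is a simplified illustration under stated assumptions, not the authors' code: the records are synthetic, ages are perturbed with a single Gaussian error and then re-sorted to keep stratigraphic order (the paper instead inflates age uncertainty between dated levels), and the uncertainty values, grid spacing, and function names are all hypothetical. Area weighting, the mean shift to CRU-EIV, and the red-noise step are left out.

```python
import random
import statistics

def interp(x, xs, ys):
    """Linearly interpolate y at x; hold the end values outside the data range."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for i in range(1, len(xs)):
        if x <= xs[i]:
            weight = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
            return ys[i - 1] + weight * (ys[i] - ys[i - 1])

def realize(ages_bp, temps, grid, age_sd, temp_sd, rng):
    """Step 4: perturb ages and temperatures, then regrid to constant spacing."""
    noisy_ages = sorted(age + rng.gauss(0.0, age_sd) for age in ages_bp)
    noisy_temps = [temp + rng.gauss(0.0, temp_sd) for temp in temps]
    return [interp(g, noisy_ages, noisy_temps) for g in grid]

def to_anomaly(series, grid, ref_start=4500, ref_end=5500):
    """Step 5: express a realization relative to its 4500-5500 yr BP mean."""
    reference = [v for g, v in zip(grid, series) if ref_start <= g <= ref_end]
    baseline = statistics.fmean(reference)
    return [v - baseline for v in series]

def monte_carlo_stack(records, grid, n_realizations=1000,
                      age_sd=150.0, temp_sd=1.0, seed=0):
    """Steps 6-7: average the k-th realization of every record into the k-th
    stack, then take the mean and standard deviation across stacks."""
    rng = random.Random(seed)
    stacks = []
    for _ in range(n_realizations):
        members = [
            to_anomaly(realize(ages, temps, grid, age_sd, temp_sd, rng), grid)
            for ages, temps in records
        ]
        stacks.append([statistics.fmean(column) for column in zip(*members)])
    stack_mean = [statistics.fmean(column) for column in zip(*stacks)]
    stack_sd = [statistics.stdev(column) for column in zip(*stacks)]
    return stack_mean, stack_sd

# Synthetic demo: five hypothetical sites with different absolute temperatures
# and a shared gentle cooling toward the present (0.5 °C over 11,300 years).
grid = list(range(0, 11400, 100))        # 0 to 11,300 yr BP
sample_ages = list(range(0, 11500, 250))
records = [
    (sample_ages, [10.0 + site + 0.5 * age / 11300 for age in sample_ages])
    for site in range(5)
]

# Fewer realizations than the 1000 used in the study, to keep the demo fast.
stack_mean, stack_sd = monte_carlo_stack(records, grid, n_realizations=100)
print(f"anomaly at 11,000 yr BP: {stack_mean[grid.index(11000)]:+.2f} °C")
print(f"anomaly at 0 yr BP:      {stack_mean[grid.index(0)]:+.2f} °C")
```

Because every realization is referenced to the same 4500-5500 yr BP window before averaging, the differing absolute temperatures of the sites drop out and only the changes through time contribute to the stack.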

Hello, I found this FAQ after reading an update in the New York Times.

I read RealClimate occasionally as a non-scientist trying to understand the issues. I say occasionally because I hate the personal attacks and the gotcha approach I see on both sides. Sometimes it is like being invited to dinner and the hosts get in a huge fight that makes one want to crawl under the table.

I do feel a great deal of sympathy for authors who have done their best and then have to face what sometimes seems to be a pack of hungry wolves. And even as a lay-person I can see a great deal of bad faith argument: splitting of hairs, deflection, focusing on language etc. I really want to hear explanations that are clear and say: this is what our data shows, this is what we THINK it suggests, but doesn’t prove, and this is what it doesn’t really help with one way or another, even if we wish it did. That is why I appreciate the FAQ the authors have done. The FAQ takes into account that this is an important issue not just to scientists.

I recognize that scientists often rely on the press to explain their results to the public and that we can’t hold the scientists responsible for the press getting it wrong — as seems to be happening here to some extent.

I don’t agree with some posts that latch on to the press articles and ignore the clear statements of the authors in the paper. But I also can’t agree with the dismissive posts about a non-issue being ginned up. As a member of the public, I read the New York Times story, and Mann’s comments elsewhere and came away thinking that this paper said something that the FAQ seems to contradict. The Times, to their credit, followed up and that is how I found this FAQ. But I can understand why the “skeptics” jumped on the issue – and I think the FAQ, and a discussion of what the paper does and does not show is the appropriate response. Not back and forth accusations and ad hominem attacks I see here and (especially) on other blogs.

But I really do want to understand this, so I am commenting for the first time on this blog.

I hope the experts will indulge an outsider and someone will read this and try to explain it to me — and I apologize to everyone else for the level of my understanding …

In reading the FAQ, I THINK the authors are saying that some reporters (and some third-party scientists) commenting on their paper got a couple of things wrong. They clarify (or reiterate) that 1) their reconstruction for the past century is not robust. Therefore one could not say that their reconstruction independently reconfirms the sharp temperature rise of last century. Rather, that sharp rise is recorded in the previously published temperature record. Is that right? 2) the paper does NOT compare the rate of the sharp rise in the past century to the rate of any rise or fall in the paleotemperature reconstruction because the temporal resolution of the reconstruction is larger than 100 years — and other factors in the proxies tend to increase this “smoothing”. Thus, a sharp uptick or downtick in temperature during 100 or 200 years would not have been preserved in the paleoclimate record. Did I get that right?

None of that diminishes the value of the paper in extending our understanding (I really mean YOUR understanding) of the paleotemperature record to an earlier period. But it does mean that newspaper reports that said the paper confirmed the hockey stick or that it showed that the rate of rise in the past century is unprecedented (over the long period studied) are not correct. The observations in the paper are not INCONSISTENT with the hockey stick or the claim that the sharp rise is unprecedented. The reconstruction is simply not robust enough on those points to be said to confirm or prove them.

I really hope I got the above right because that’s the part I think I UNDERSTAND. And I would appreciate any of the experts gently correcting me if I missed the point.

Here is the part I am having trouble with. The FAQ also includes the following:

“Holocene Temperature Distribution: Based on comparison of the instrumental record of global temperature change with the distribution of Holocene global average temperatures from our paleo-reconstruction, we find that the decade 2000-2009 has probably not exceeded the warmest temperatures of the early Holocene, but is warmer than ~75% of all temperatures during the Holocene. In contrast, the decade 1900-1909 was cooler than ~95% of the Holocene. Therefore, we conclude that global temperature has risen from near the coldest to the warmest levels of the Holocene in the past century.”

This statement seems very significant on its surface. When I first read it, it almost seemed to be saying what the part of the FAQ I discuss above says the paper did NOT prove: that the sharp spike in the last 100 years (and the jump in the 2000-2010 decade) are very unusual and perhaps unprecedented in the long record.

But on closer reading I THINK it really is saying we had an unusually cold decade (1900-1910) and 100 years later we had an unusually hot decade (and a sharp 100 year rise in between). And that the sharp rise took us from a point near the bottom of Holocene temperatures to a point near the top of the Holocene temperatures. And it is one of the main findings listed at the top of the FAQ, so I feel like I am missing the significance. Is it saying something more than my summary in the first two sentences of this paragraph?

I guess the question I am asking is: yes, there is a 100 year spike, and now we see how that spike compares to smoothed out temperatures in sometimes two or three hundred year periods, but does this paper show that that spike over that temperature range tells us something new about the last hundred years as it compares to the past? (I.e., why is it a key finding?)

Based on the paper, what are the chances that similar spikes, from unusually low to unusually high temperatures, have happened one, two, three or more times in the past (including in the warm part of the Holocene itself) and that the past spikes are all hidden in the smoothing? Is there a way to look at the data in the paper and figure out whether this 100 year spike is probably unusual or is probably common? With what level of confidence?

I know I am probably misusing terms or mismatching concepts — so please don’t jump on the mistakes. I REALLY do want to understand this. I know that if the paper doesn’t prove X that doesn’t mean the opposite of X is true. (And that even if the paper showed X, someone some day might expand upon or take issue with X).

I got interested in this because of the initial press coverage, and now that it seems that coverage may have gone beyond what the authors of the actual paper intended, I just want to try to figure out what this one paper really, robustly and confidently did show.

On that note, thank you very much for the FAQ, the blog, and in advance for your patient, non-judgmental replies….

Spikers ought to consider

https://www.realclimate.org/index.php/archives/2013/03/response-by-marcott-et-al/comment-page-1/#comment-325945

before continuing.

KR: I think your attribution is insufficient: 3-4 species of magical beings are needed, not just 1:

For the post-Industrial Revolution temperature history to have nothing to do with GHG effects and cause the upward part of a spike, we need:

a) A set of unknown-to-science positive forcing agents (gremlins) that generate temperature increases indistinguishable from those of GHGs AND

b) Another set of agents (leprechauns) that magically cancel the known effects of post-IR GHGs. They are needed because we certainly have good post-IR data on GHG increases, and these things must apply a negative forcing large enough to cancel GHG forcing.

Some people seem to want worldwide early Holocene spikes, whose upsides are similar to the post-IR, but those may require magical entities of different sorts:

c) We need entities that can raise the temperature over 50-100 years, getting the heat from somewhere, call them demons, in honor of Maxwell, perhaps. Until both gremlins and demons are measured and understood, they should not be assumed to be identical. Gremlins need to exactly match all the other side-effects of GHGs, and other current data, i.e., gremlins can’t be a huge upward spike in solar activity or cessation of centuries of extreme vulcanism. Demons may have more flexibility, to the extent they can sneak among higher-resolution proxies. Since demons are less-constrained, gremlins may be a subspecies of them.

d) But finally, we need one more kind, basilisks that implement the downward 50-100-year parts of early Holocene spikes, via unknown mechanisms that escape notice by any proxies. They differ from leprechauns that nullify the effects of GHGs. They have to dissipate the heat content built up by the demons, and would be very useful today. I suppose they could be demons running in reverse. Basilisks seem to have died out (as weasels are not susceptible to their gaze and can harm them), but people are proposing modern equivalents under “geoengineering,” like giant solar shades, not available in the early Holocene.

For reasons others have given, it is hard for any of these to be state-change events like Younger Dryas or 8.2kyr. In any case, we need 3-4 magical species.

We could, of course, stick with science, but many prefer otherwise.

In their FAQ, Marcott et al. say:

“The smoothing presented in the online supplement results in variations shorter than 300 yrs not being interpretable.”

Why then do many of those commenting above assume that a spike has to occur within 100 years? With a data resolution of 120 years, this means that for a “spike-like” feature to be clearly visible as a “spike” (and then only with diminished height), it would have to have a much larger duration, about 800 to 1200 years.

This is the obvious interpretation of “not being interpretable” at 300 years or shorter.

And most likely such a thing would look like any one of the many small bumps clearly visible in the “averaged output” of the Monte Carlo simulation produced by Marcott (i.e., bumps with their height greatly reduced).

So it is clear by their own qualifications, and looking at their output, that many such large excursions (~ 0.5 C) in temperature have occurred (at least over an 800 to 1000 year interval). And, yes, there could be some of shorter duration in the paleoclimate record (prior to 2000 BP). How short on a global basis? We do not know the answer to that yet, until better global proxies are found, but ice cores would indicate there is a good probability of it (given their many such “local” spikes).

So no one should be too certain about ruling out 100, 200 or 300 year “spikes”, just yet.

PhysicsGuy @102 — Follow the links from comment #100 to discover why no such global spikes exist. There are plenty of other proxies in which such spikes would show up; none found.

“PhysicsGuy @102 — Follow the links from comment #100 to discover why no such global spikes exist. There are plenty of other proxies in which such spikes would show up; none found.”

And, of course, arguing that the paper’s analysis can’t capture such excursions is proof that they exist is just …

kinky.

Even without the fact that other proxies don’t show such spikes.

PhysicsGuy is right !

“The smoothing presented in the online supplement results in variations shorter than 300 yrs not being interpretable.”

The smoothing was due both to the Monte Carlo variations and to the 20-year interval interpolation. The reason why, despite this smoothing, Marcott’s paper still showed a large spike at the 1940 end point has been explained by NZ Willy.

“Marcott performed 1000 “perturbations” on the raw data, which permutated each datum 1000x time-wise within the age-uncertainty of that datum. But Marcott set the age-uncertainty of the 1940 bin to zero. Therefore the 1940 bin is protected from the homogenization which affects all other bins, so its uptick is protected.”

If you look at the raw data binned in 50 year bins then you can see other spikes over the entire period : see here

I’d still love to hear someone describe the physical processes that could lead to such short-term global temperature spikes. Simon? Clive? PhysicsGuy? Nancy? Bueller? Or is this more of the ever-mysterious “cycles” that so many are fond of?

@Ray Ladbury #84 “that means that when I interacted with him while I was at Physics Toady he was in his 70s”. Ray, shouldn’t that say “while I was a Physics Toady”?

@garhighways

These short term spikes in the proxy data are just statistical fluctuations and have no physical significance. However, there are some very interesting regional variations in the data, as noted in Marcott’s paper. The NH shows stronger cooling than does the SH; see here. There is also a good discussion of these differences on Tamino’s blog.

About 11,500 years ago the seasons were inverted. So NH summers occurred in “December” and coincided with the Earth being at its closest distance to the sun (as is the case now for the SH). In addition to this the obliquity was greater than now so there was also more insolation at higher latitudes in the summer. Perhaps this change can explain the slow cooling of the NH.

You can also view an interactive flash map of the 73 individual proxies and see how they vary with latitude.

If you look at the raw data binned in 50 year bins then you can see other spikes over the entire period : see here

1- That’s not “spikes”, that’s “noise”. Why do you think the authors performed this Monte-carlo simulation? Because the raw data itself is not interpretable on short timescales.

2- A short “spike” in the past could not be comparable to modern warming, because modern warming is not just fast, it is also *durable*. Even if CO2 emissions completely stop in 2100, the warmth will remain for centuries. If something like *that* had happened in the past, Marcott’s proxies and methods would have detected it.

They didn’t, so it hasn’t. Hence, “unprecedented”.

[Response: What a lot of this discussion of “spikes” is missing is that the point isn’t whether the Holocene had any sudden rises with a cause analogous to the present. As many people have pointed out, that couldn’t be, because the present rise is due to CO2 and is hence “durable”; it would show up in a record like Marcott’s. But also, as others have pointed out, we already know from ice cores that CO2 fluctuated very little in the pre-industrial Holocene, so that isn’t in the cards anyway. What is at issue is whether there are any other mechanisms of centennial-scale variability that could cause a 1C or even a .5C spike. We know that the AMO can go a smallish fraction of that distance, and it’s hard to rule out on first principles that some kind of ocean variability might not be able to do something bigger in amplitude if you wait long enough. There’s no evidence that it can, and I myself find it hard to see how you could make the ocean do a centennial-scale uptick (a centennial-scale downtick is easier, since there’s all that cold water you could conceivably bring to the surface). But the Marcott analysis has no bearing on that question. I don’t think the paper ever claimed it did, but the comparison with the instrumental era rise may have confused some people into thinking so. To repeat my earlier comment, it is useful to compare the instrumental era rise and the forecast of further rise to the Marcott record because we know the rise is durable and will last millennia. Thus, we know we are bringing about a durable increase that is huge compared to any long-duration variation over the Holocene. That’s a big, big deal. –raypierre ]

[Response: To add to Raypierre: it would have to be ocean variability causing a spike in global-mean temperature without causing a spike in the high-resolution proxies from the ice cores. That is exceedingly unlikely; the ocean’s deep-water formation sites near Greenland and Antarctica would surely experience major change during such a spike. Think of the 8k-event: the biggest in the Greenland ice cores over the entire Holocene, yet minor impact on global-mean temperature. -Stefan]

Physics Guy,

Where’s the physics?

Simon, so I’ll take your #95 as an admission that you have given up all rationality.

Dude, the planet is really, really big. It isn’t easy to pump that much energy in in a decade or two. We’ve only managed to do so by liberating most of the carbon sequestered since the Jurassic! The Paleocene-Eocene Thermal Maximum failed to achieve such rates of growth despite (probably) burning up the Deccan coal field!

Simon,

No, because I am still a physics toady. I no longer work at Physics Today (or Physics Toady to its friends).

@Ray #112 So it wasn’t a typo after all and the joke’s on me. Ah well. Luckily I have my Scents of Yuma handy.

Simon, how were you to know? Susan might be familiar with the typo-turned-humor, but Physics Today is pretty obscure, and the joke even more so.

Update! Tamino strikes again!

http://tamino.wordpress.com/2013/04/03/smearing-climate-data/

Tamino adds three real spikes and tries to “smear” their imprint as the method of Marcott might do. The result:

“The spikes are a lot smaller than with no age perturbations, which themselves are smaller than the physical signal. But they’re still there. Plain as day. All three of ‘em.”
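For readers who want to see the smearing effect for themselves, here is a toy sketch. It is not Tamino's code and not the Marcott et al. procedure. It assumes a single triangular 0.9 C spike (100 years up, 100 years down), a Gaussian age error with a 150 year standard deviation, and 20 year sampling; all of those numbers, and the function names, are illustrative assumptions. Averaging many randomly shifted copies shows the spike shrinking and broadening rather than vanishing.

```python
import random

def spike(t, center=5000.0, half_width=100.0, height=0.9):
    """Triangular temperature spike in deg C: rises for 100 yr, falls for 100 yr."""
    return max(0.0, height * (1.0 - abs(t - center) / half_width))

def smeared_stack(times, n_draws=1000, age_sigma=150.0, seed=0):
    """Average the spike over many random age shifts (one shift per draw).

    age_sigma is an assumed 1-sigma dating error in years, not a value
    taken from the paper.
    """
    rng = random.Random(seed)
    total = [0.0] * len(times)
    for _ in range(n_draws):
        shift = rng.gauss(0.0, age_sigma)
        for i, t in enumerate(times):
            total[i] += spike(t + shift)
    return [v / n_draws for v in total]

times = list(range(4000, 6001, 20))   # years BP, 20-year steps
stack = smeared_stack(times)
peak = max(stack)
print(f"true peak 0.90 C, smeared peak {peak:.2f} C")
```

In this toy setup the averaged spike is clearly lower and wider than the original but still stands out from the flat background. How much survives in a real reconstruction depends on proxy resolution, noise, and how the dating errors are distributed, none of which this sketch attempts to model.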

Physics Today is obscure? [scratches head, realizes he’s not *like* other people]

test – I can’t seem to post a simple comment here. Is there anything wrong with the site? I’m not having any trouble posting at other WordPress blogs.

[Response: All comments are moderated. They won’t appear until approved by one of us. So be patient. –raypierre]

The masthead here says “Climate science from climate scientists” (I have the impression, which may be wrong, that it once was “by scientists for scientists”.)

Visitors curious about or wishing to promote the Mac attack promulgated elsewhere and looking to comment here should be aware that all the scientists here have day jobs and are not amateurs. (I am an observant layperson and often just lurk.) They are too knowledgeable and experienced in both science and “blog science” to give equal weight to appearance and substance. It will not work to discredit the messenger here.

You must not blame them for any unwise assertions made by yours truly. On the whole, they have wisely refrained from getting down in the mud.

I have always believed that one reason I am tolerated is because I mostly act like the guest though like all hotheads I occasionally get a gentle warning to desist. Lately I’ve been wishing I took their most recent advice a little more to heart!

Ray L, thanks for the humor etc. – got myself in the hot seat without enough armor; it’s hard to put up with bullying without hitting back. Nobody’s perfect, so what else is new?

John Mashey @~103, magical beings indeed, that should make it clear to the open-minded.

And on the invisible past spikes, well, maybe, but it seems to me a mite of Occam’s razor is needed. In general, big events leave some kind of trace, and there appears so far to be none of it.

Lots of good nutrition here!

afeman,

Not everyone is a physicist. Not everyone loves physics. It was a pretty fun gig, though.

@clive #110

You are missing the point. No one was suggesting Marcott showed meaningful spikes. The deniers were saying that there COULD BE spikes but that Marcott’s resolution was too poor to show them, and therefore recent warming might not be remarkable.

My point was that those who sought to jam an unobserved spike into the Marcott graph ought to also explain how it could be: what would the physical explanation be of such a spike? How could it happen?

Now, since then, as has been noted upthread, Tamino put the whole thing to rest by showing that if such a spike in temps actually existed it really would show up in Marcott, so the point is now moot, and those who object to Marcott need another reason to do so. History being what it is, the next reason will not be consistent in any way with the prior reasons, but that’s normal I guess.

Re #13 – For the composite age model for MD01-2421, 3cm was location of a radiocarbon bomb spike and therefore post-1950. Because we only went up to 1940 in the reconstruction, we didn’t use data after that time.

Re #13 – The paper was never submitted to Nature, only to Science.

Re #13 – In the original publications for both MD95-2011 and MD95-2043, the core top ages were not defined in the text, so we assumed 0 yr BP as explained above in the FAQs.

Re #39 – Since the thesis, we updated our treatment of age models and their uncertainty, and corrected some programming errors when preparing the manuscript for publication. It is important to remember that with any reconstruction from geologic archives there is always uncertainty in the age component. The paleoclimate records used in our reconstruction typically have age uncertainties of up to a few centuries, which means the climate signals they show might actually be shifted or stretched/squeezed by about this amount. One of the primary goals of this study was to quantify these uncertainties to see how they might impact the final composited global temperature reconstruction using what is called a random walk model that allows uncertainty to expand moving away from an age control point (radiocarbon dates in this case).

After recalibrating all radiocarbon control points to make them internally consistent and compliant with the scientific state-of-the-art (see FAQ – Technical Questions), we constructed age models for each sediment core. It is pretty straightforward to model ages lower down in each core between radiocarbon dates, but various factors can make it more difficult to pin down ages near the tops of the cores above the uppermost date. For instance, radiocarbon dating does not work for the last couple centuries (see FAQ), uncompacted sediments near the core top can be lost during the coring process, burrowing organisms mix up older sediment so that a core collected today will not necessarily have 0 year old mud at the top, etc.

Given these complexities, there is often no simple “correct” way to define a core top age, and so researchers typically use one of a few different reasonable assumptions. For instance, the core top age of when the sediment core was extracted could be used, though this can be problematic for the reasons I just mentioned. Or, one could simply extrapolate the age of the core top from radiocarbon dates deeper in the core – though in our study this would have in many cases yielded a core top age close to present with an age uncertainty of +/- several hundred years using the random walk model. In other words, the core top age might be 0 years before present +/- 300 years, which would have generated some age models extending into the future in our Monte Carlo simulations – which is obviously not meaningful. Instead, we chose to use the common practice (e.g., http://www.sciencemag.org/content/suppl/2007/09/27/1143791.DC1/Stott.SOM.pdf, ftp://ftp.ncdc.noaa.gov/pub/data/paleo/contributions_by_author/tierney2012/tierney2012.txt) of setting core top ages to 0 yr BP, unless the original publications mentioned some firmer constraint on the core top ages. The take-home point here is that you almost always have to make some assumption when dealing with core top ages – this is one reason why we provided the published and our recalculated age models together, as well as the raw radiocarbon dates, so that future people working with these data can further evaluate this. It’s important to note though that the choice of core-top assumption should have little impact on our overall Holocene reconstruction or our main conclusion that 20th century warming from the instrumental record spanned much of the Holocene range.
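The point about extrapolated core tops "extending into the future" can be illustrated with a small sketch. It is a simplification: it replaces the random walk age model with a plain Gaussian draw, treats the +/- 300 years as a 1-sigma error, and uses a hypothetical function name. With a core top centered on 0 yr BP, about half of the simulated ages come out negative, i.e. later than 0 yr BP.

```python
import random

def fraction_in_future(core_top_age_bp=0.0, age_sigma=300.0,
                       n_draws=10000, seed=1):
    """Share of simulated core-top ages that fall after 0 yr BP (negative ages).

    A Gaussian stand-in for the random walk age model; age_sigma as a
    1-sigma value is an assumption made for illustration only.
    """
    rng = random.Random(seed)
    draws = (rng.gauss(core_top_age_bp, age_sigma) for _ in range(n_draws))
    return sum(1 for age in draws if age < 0.0) / n_draws

print(fraction_in_future())                       # core top at 0 yr BP
print(fraction_in_future(core_top_age_bp=300.0))  # core top one sigma older
```

Under these assumptions, pinning the core top at 0 yr BP with no extrapolated uncertainty avoids the negative ages entirely, which is the practical motivation described above.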

[Response: Jeremy, thanks for stopping by and helping clarify. – gavin]

[Response: Ditto. -mike]

Would it be fair to consider that –

An expectation that the global temperature suffers excursions of short duration (so as to be too short for the smoothing to capture) but equal to twice the maximum difference between high and low shown in the graph (as it has to average out to the values obtained), is NOT reasonable?

That in the absence of any known physical driver for such a phenomenon, there is no justification for assuming it JUST because the statistics leave open the possibility?

Would we not have noticed that sort of climate variation elsewhere in our histories?

Just sayin

I have a lot of trouble reconciling the original publicity about Marcott et al (what the majority of citizens accepting, uncritically, grasped from this recent AGW paper) with the yawning about the recent data, now.

Did this NSF misunderstand the research, as well? Does this press release not *state,* never mind imply, that AGW is getting bad, badder, worst — today? ….Lady in Red

NSF Press Release

[edit – cut and paste replaced with link]

Lady in Red:

“Did this NSF misunderstand the research, as well? Does this press release not *state,* never mind imply, that AGW is getting bad, badder, worst — today? ….Lady in Red

Earth Is Warmer Today Than During 70 to 80 Percent of the Past 11,300 Years”

No. We know what recent temps have been from the instrumental record. Marcott et al said they can’t rule out the possibility of short warm spikes in the past record due to the temporal resolution of their proxy ensemble. That does not change the conclusion that the earth today is warmer than during 70 to 80% of the last 11,300 years. If they had said 100%, that would be a misrepresentation, but they didn’t.

Tamino’s shown that a large spike on the order of the 0.9C warming seen over the past century, followed by equivalent cooling over the following century, would show up using their methodology (though attenuated). In other words, his analysis makes him believe the authors have been too conservative.

But that’s irrelevant.

“What that history shows, the researchers say, is that during the last 5,000 years, the Earth on average cooled about 1.3 degrees Fahrenheit–until the last 100 years, when it warmed about 1.3 degrees F.

The largest changes were in the Northern Hemisphere, where there are more land masses and larger human populations than in the Southern Hemisphere.

Climate models project that global temperature will rise another 2.0 to 11.5 degrees F by the end of this century, largely dependent on the magnitude of carbon emissions.

“What is most troubling,” Clark says, “is that this warming will be significantly greater than at any time during the past 11,300 years.””

And this is what models show. Actually 2F is extremely unlikely, it will be higher. Regardless, the previous century’s 0.9C rise plus another century of continued warming leads to a period of a couple of centuries of warming. Even at the low range, that’s about 2C for the two centuries.
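Since the press release quotes Fahrenheit and the discussion here is in Celsius, a quick conversion sketch may help. Note that temperature differences convert by the factor 5/9 only, with no 32 degree offset. The function name is a made-up helper; the inputs are the figures quoted above.

```python
def f_to_c_delta(delta_f):
    """Convert a temperature *difference* from deg F to deg C (no 32-degree offset)."""
    return delta_f * 5.0 / 9.0

cooling_c = f_to_c_delta(1.3)                           # 5,000-year cooling
low_c, high_c = f_to_c_delta(2.0), f_to_c_delta(11.5)   # projected further rise
two_century_low_c = 0.9 + low_c                         # past century plus low-end projection

print(f"{cooling_c:.2f} {low_c:.2f} {high_c:.2f} {two_century_low_c:.2f}")
# prints: 0.72 1.11 6.39 2.01
```

So the 1.3 F cooling matches the roughly 0.7 C quoted in the FAQ, and the low end of the projection plus the 0.9 C already observed gives the "about 2C for the two centuries" figure.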

If we’d seen this in the past, their methodology *would* have detected it. So the last statement is correct.

I’m not going to go through the rest of the quotes you’ve provided one-by-one, others can if they have the patience, but, you have a track record that leads me to believe you won’t listen.

After all, you believe stuff like this:

“lady in red:

It’s important not to underestimate the courage it took for CERN to *permit* this “science” in today’s intl “climate change” political climate: massive govt spending for “scientists” to “prove” AGW…. the IPCC fraud reports of the past two decades…. the pressure on scientists of all stripes to sign petitions backing the AGW theory…..

It will be interesting to see how Michael Mann’s hockey stick (which “evened” both the Medieval Warm Period and the Little Ice Age) and has been the poster star for the IPCC and Al Gore’s AGW green investment fraudsters, as well as the dupes who have PhD’s in “climate science” and want to save the world’s polar bears and may now hit the unemployment lines…..fare in all this. Hmmmmmm…..”

Regarding Peter Dunkelberg’s post in #117, I will stand by what I said in #104. Tamino’s analysis is flawed. It does not take into account the uncertainty inherent in the proxies themselves (time and temperature), many of which have uncertainties in temp of +/- 0.5 C or more and resolutions of 200+ years. It also synchronizes the timing of all test spikes across all proxies, which would not happen.

Clive has done a similar analysis as Tamino with different results taking into account these issues. See link.

http://clivebest.com/blog/?p=4833

Maybe some people need to go here first… http://en.wikipedia.org/wiki/Proxy_(climate) or use Real Climate’s own search for climate proxies for a better understanding of climate proxies. If you don’t like the explanation here or over at Tamino’s site, fine, but give us a detailed explanation of how these mysterious spikes somehow manage to elude all the proxies. Show us some real physical mechanisms you can back up with actual science.

Curious. Roger Pielke Jr. is claiming that the FAQ here is a ‘startling admission’ that the post 1890 ‘uptick’ is not robust. He is also asking as part of the ‘fix’ that the authors should change the paper to show this.

This goes against what most others have interpreted the paper to already say. This perhaps may be just a problem with how Roger is understanding the wording of ‘robust’. I would say that ‘the difference is not robust’ means that since the two methodologies do not support each other, that the authors are claiming no knowledge of the temperature post 1890 from their reconstruction.

Roger says this ‘suggests the opposite’ (scroll and read full conversation, Andreas Schmittner, myself, Roger, HaroldW).

Since this is being used as a way to call the integrity of several people into question, any input from RC would be helpful.

@Garhighways 123

“Now, since then, as has been noted upthread, Tamino put the whole thing to rest by showing that if such a spike in temps actually existed it really would show up in Marcott”

I disagree.

I repeated the same procedure as Tamino by simulating exactly the same 3 spikes. I then used the Hadley 5 degree global averaging algorithm to derive anomalies from the 73 proxies. The 3 peaks are visible but are smaller than shown by Tamino. This procedure assumes that there is perfect time synchronization between the peak and all proxy measurements. Once I include a time synchronization jitter between proxies equal to 20% of the proxy resolution then the peaks all but disappear. see plot here

Jeremy Shakun writes above …. “The paleoclimate records used in our reconstruction typically have age uncertainties of up to a few centuries, which means the climate signals they show might actually be shifted or stretched/squeezed by about this amount.”

Therefore I think that any climate excursions lasting less than about ~400 years will be lost in the noise.

[Response: Doing a proper synthetic example of this (taking all sources of uncertainty into account – including spatial patterns, dating uncertainty, proxy fidelity etc) is more complicated than anyone has done so far. However, one could also make an experiment of adjusting age models within uncertainties to line up anomalies. This is generally not done without independent information that there is a real event there – however, it might be fun to do here with the actual data. I’m doubtful whether any reasonable redating could produce anything as large as the 20th C. – gavin]

A far more interesting point in this respect is to look at the full set of the 1000 realizations in Marcott et al 2013 (Supplemental Fig. S3). If such a (gremlin-induced) two-century spike were in the data at least a few of the realizations would show it – even in the presence of dating errors, the space of perturbations would include some shifting the proxies into roughly the correct and reinforcing alignment. In fact, spikes above that level would be expected in a Monte Carlo reconstruction as unrelated variations were shifted to coincide with such a spike.

There are _no_ such 0.9C spikes in any realization in the 1000 set prior to the last 200 years. None.

Now – I have seen no physical mechanism proposed to drive a 25×10^22 Joule rise and fall (as per ocean heat content) of climate energy over 200 years, there is no sign of such a global spike in any of the paleo data including near-annual speleothems, and hence there is neither support for (a) such spikes to exist, let alone be missed in the Marcott analysis, nor (b) for any claims that current warming might therefore be natural rather than anthropogenic.

Marcott et al 2013 is a very interesting paper, and I expect will be expanded upon in future work. Can we _please_ drop the red herrings of mythical spikes from the discussion?

Since the CERN experiment keeps getting brought up, perhaps repeating what Eli has said elsewhere would be useful. First, it has been well known since the earliest atmospheric science textbooks that Eli has, that ions can form nuclei for aerosol growth and that cosmic rays produce ions in the atmosphere. The real question, which the CERN experiment does not touch, is what is the rate limiting step in nucleation.

That is a question which a recent experiment comes much closer to answering. A Finnish group recently published “Direct Observations of Atmospheric Aerosol Nucleation,” which found that at the surface a) less than 10% of nanometer-sized aerosols are ionic in character, b) that growth is limited by sulfate and organic vapor availability, and c) that growth above a few nm depends on the availability of organic vapors.

The Rabett will point out that he said this at RC about two years ago

—————-

The most interesting thing about aerosol growth to Eli is the role that SOx plays. Cleaning the air, at least locally, strongly affects cloud cover (see the Molina’s work in Mexico City, and Monet’s pictures of Parliament), and there is also something obvious there WRT volcanos. A much more direct and convincing part of the mechanism. Clean air is air without much SOx and that must have effects on global temperature.

—————

and at Rabett Run about 7 years ago.

It’s always a pleasure when science catches up with blog science

124 Jeremy Shakun: Since the thesis, we updated our treatment of age models and their uncertainty, and corrected some programming errors when preparing the manuscript for publication.

Do you plan to publish the code used in the Science paper? Last I looked at the supporting online material, the code was not there.

@Clive 131: Hey, maybe you are on to something. Maybe you should write a paper and submit it to peer review for publication. Let us know how that goes for you.

@ 135

The peer review process just might select Shakun, Marcott, and Tamino to be his reviewers ;)

It could be that his work gets rejected for publication because it’s not “interesting,” since he’s attempting to refute something that Marcott et al never specifically concluded. Perhaps it’s worth one of those famous “comments” that wind up alongside a publication.

To add to the Rabett’s points, the rate limiting process in cosmic rays’ putative effect on climate is not only the relative effect of ions in aerosol nucleation, but also (and perhaps more so) the growth of freshly nucleated particles (about a nanometer in diameter) to sizes where they can influence cloud formation (larger than approx 100 nanometer).

As I wrote here 4 years ago:

“Freshly nucleated particles have to grow by about a factor of 100,000 in mass before they can effectively scatter solar radiation or be activated into a cloud droplet (and thus affect climate). They have about 1-2 weeks to do this (the average residence time in the atmosphere), but a large fraction will be scavenged by bigger particles beforehand.”

https://www.realclimate.org/index.php/archives/2009/04/aerosol-effects-and-climate-part-ii-the-role-of-nucleation-and-cosmic-rays/
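The growth factor in the quote follows from simple geometry, and a short sketch makes the scaling explicit. It assumes spherical particles of constant density, so mass scales with the cube of diameter; the helper names are hypothetical. On that assumption, a 100,000-fold mass increase corresponds to a diameter ratio of about 46, while growth from exactly 1 nm to exactly 100 nm would be a factor of a million, so both round numbers should be read as order-of-magnitude figures.

```python
def mass_growth_factor(d_start_nm, d_end_nm):
    """Mass ratio for a sphere of fixed density growing between two diameters."""
    return (d_end_nm / d_start_nm) ** 3

def diameter_ratio_for(mass_factor):
    """Diameter ratio implied by a given mass ratio (cube root)."""
    return mass_factor ** (1.0 / 3.0)

print(mass_growth_factor(1.0, 100.0))   # 1 nm -> 100 nm
print(diameter_ratio_for(1.0e5))        # ratio implied by a 100,000x mass gain
```

Either way, the particle has to gain several orders of magnitude in mass within its atmospheric residence time before it matters for clouds.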

Given that the methodology imparts a different level of confidence to the 20th century part of the graph, and that the point of the study was the preceding ten centuries, much anguish could have been avoided by ending the graph at 1900 AD.

In fact, the right thing to do would be to republish, either deleting or reworking the 20th century.

Re- Comment by Chris Lynch — 6 Apr 2013 @ 6:27 PM

What you say might be true for those folks who are unable to read and understand science, or who enjoy distorting science for their own purposes. The rest of us, especially the non-experts like myself, like to have the instrumental record side by side with the previous Holocene period to provide context.

Steve

“Given that the methodology imparts a different level of confidence to the 20th century part of the graph, and that the point of the study was the preceding ten centuries, much anguish could have been avoided by ending the graph at 1900 AD.”

Even more anguish could’ve been prevented by not publishing at all, and by not doing a PhD thesis on paleoclimatology.

In this way, the intentional misreading, personal attacks, cries of “fraud”, etc would’ve been avoided.

Which is rather the point of all the attacks, no? Discredit scientists, put them on the defensive, and in general make life for climate scientists such a pain in the butt that graduate students will find something else to study.

“In fact, the right thing to do would be to republish, either deleting or reworking the 20th century.”

Like, oh, maybe presenting the instrumental record for the 20th century rather than their truncated proxy series which they openly said was most likely not robust?

Wait … they did that, only in addition to rather than instead of the truncated proxy series.

And cries of “scientific fraud” have been the result.

You don’t seem to understand what McI, Watts et al are up to here. The intent is to destroy, not learn, science.

Gavin et al. have been more than patient in their responses here. Scientists aren’t saying that the ending up-tick is robust. Marcott et al. never claimed that themselves. Anyone can see that there are only 18 of the 73 proxies remaining ca. 1940. Duh.

But what is the salient point of the paper, and what has the AGW deniers so obviously terrified is… that for 5000 years we had started the slow, inexorable slide into the next ice age that Milankovitch has explained so many years ago. But now mankind has reversed that. Yes, there are *mathematically possible* places in the proxy record where a .9C spike like we have produced since the start of the industrial revolution could hide due to their mean temporal resolution. But there is, on the other hand, no possible physical basis for these spikes. No known forcing could cause the climate to warm by more than .5C over just a hundred years or so, and then take it down by a corresponding amount just as quickly so as to be completely missed by *all the proxies*. You can’t hide that much heat, even if most of it might have gone into the ocean.

But whenever you posit the obvious, the AGW deniers just go all quiet, and attack again a few hours later with their “Hey, look, there goes a squirrel!” shtick. It’s becoming quite tiresome/juvenile.

“But what is the salient point of the paper, and what has the AGW deniers so obviously terrified is… that for 5000 years we had started the slow, inexorable slide into the next ice age that Milankovitch has explained so many years ago.”

Apparently the current line of attack over at CA, led by RomanM (who was also involved in the attack on Eric Steig’s temperature trend reconstruction for Antarctica a couple of years ago), is an attempt to show that Marcott et al have miscalculated their Monte Carlo error estimation. Nick Stokes brought this up at Tamino’s (and thinks they’re wrong).

Steve #141

Look, you are probably right that no-one can propose a possible physical basis for such spikes which are *mathematically possible* hiding in the proxy data due to their mean temporal resolution and have no theoretical basis – as 19th century physicists discovered to their cost.

dhogaza #142

Milankovitch is only a half victory as he doesn’t really explain the details of ice ages at all. No insolation orbital change predicted any cooling over the last 6000 years. Timing of ice ages for the last 1 million years is still a mystery – although it seems likely another one is due within 2000 years.

Clive Best @143 — Unfortunately you have it rather wrong. Look at June insolation for 65N for the past 10,000 years. As for another glacial soon, read David Archer’s “The Long Thaw”. Regarding the term ice age, Terra has been in one for at least the past 2.588 million years and remains in one despite the long thaw.

@David #144

Certainly Roe showed good correlation for June 65N insolation with Arctic ice volume, but the correlation with global benthic dO18 is not so clear. Nor can it explain the ~100 kyr cycles of interglacials.

> clivebest

Your blog refers to: “orbital plain (oblicity)”

What?

> Hank, My blog has not been “peer reviewed” :-)

E. Tziperman, M. E. Raymo, P. Huybers, C. Wunsch, 2006. Consequences of pacing the Pleistocene 100 kyr ice ages by nonlinear phase locking to Milankovitch forcing (pdf), Paleoceanography

Ridgwell and Maslin, Quaternary pacing review

http://lgmacweb.env.uea.ac.uk/e114/publications/manuscript_maslin_and_ridgwell.pdf

Archer & Ganopolski, A Movable Trigger

http://melts.uchicago.edu/~archer/reprints/archer.2005.trigger.pdf

I’d just like to thank the paper’s authors for putting together this FAQ and further responding to other comments, and thank RealClimate for making this happen. This has been a great opportunity, and I hope the acidic rhetoric from certain quarters doesn’t sour the team from engaging the public or doing their research.

For those interested in ‘spike’ discussions, I’ve run a 200 year 0.9 C spike through the measured Marcott et al frequency gain function (http://www.skepticalscience.com/news.php?p=2&t=71&&n=1951#93527), and find that a 0.3 C x 600 year spike remains after filtering – which would have shown in the Marcott data. The zero frequency average value increase (unaffected by the Marcott Monte Carlo procedures) has to go somewhere, after all.

Claims regarding mythic and unevidenced spikes, particularly when attempts are made to draw parallels to current conditions, are (IMO) quite unsupportable.
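For anyone who wants to play with the spike argument themselves, here is a minimal sketch of the idea, not the actual calculation linked above. It smooths a 200 year, 0.9 C triangular spike with a normalized Gaussian kernel. The kernel and its 150 year width are assumptions chosen purely for illustration; they are a stand-in for, not a copy of, the measured Marcott et al frequency gain function. The function names are hypothetical. The point it shows is that any smoothing with a zero frequency gain of 1 lowers and widens the spike but keeps its total area.

```python
import math

def triangular_spike(n_years, center, half_width, height):
    """Annual series that is zero except for a symmetric triangular spike."""
    return [max(0.0, height * (1 - abs(t - center) / half_width))
            for t in range(n_years)]

def gaussian_kernel(sigma_years):
    """Gaussian weights normalized to sum to 1 (zero frequency gain of 1)."""
    radius = int(4 * sigma_years)
    weights = [math.exp(-0.5 * (k / sigma_years) ** 2)
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth(series, kernel):
    """Full convolution, so none of the smeared-out spike is cut off."""
    out = [0.0] * (len(series) + len(kernel) - 1)
    for i, value in enumerate(series):
        if value == 0.0:
            continue  # skip the flat background
        for j, weight in enumerate(kernel):
            out[i + j] += value * weight
    return out

# 200 year wide, 0.9 C spike in an otherwise flat 3000 year series.
spike = triangular_spike(n_years=3000, center=1500, half_width=100, height=0.9)

# ASSUMPTION: sigma of 150 years is illustrative only, not the Marcott filter.
smoothed = smooth(spike, gaussian_kernel(sigma_years=150))

print("area before (C*yr):", round(sum(spike), 3))
print("area after  (C*yr):", round(sum(smoothed), 3))
print("peak before (C):", round(max(spike), 3))
print("peak after  (C):", round(max(smoothed), 3))
```

The peak drops well below 0.9 C, but the area under the curve is unchanged, so the warmth shows up as a lower, broader bump rather than vanishing. How low and how broad depends entirely on the filter assumed, so the numbers here should not be read as a reproduction of the 0.3 C x 600 year result.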