Readers will be aware of the paper by Shaun Marcott and colleagues, published a couple of weeks ago in the journal Science. That paper sought to extend the global temperature record back over the entire Holocene period, i.e. just over 11 kyr back in time, something that had not really been attempted before. The paper got a fair amount of media coverage (see e.g. this article by Justin Gillis in the New York Times). Since then, a number of accusations from the usual suspects have been leveled against the authors and their study, and most of them are characteristically misleading. We are pleased to provide the authors’ response, below. Our view is that the results of the paper will stand the test of time, particularly regarding the small global temperature variations in the Holocene. If anything, early Holocene warmth might be overestimated in this study.

Update: Tamino has three excellent posts in which he shows why the Holocene reconstruction is very unlikely to be affected by possible discrepancies in the most recent (20th century) part of the record. The figure showing Holocene changes by latitude is particularly informative.

____________________________________________

Summary and FAQs related to the study by Marcott et al. (2013, Science)

Prepared by Shaun A. Marcott, Jeremy D. Shakun, Peter U. Clark, and Alan C. Mix

Primary results of study

Global Temperature Reconstruction: We combined published proxy temperature records from across the globe to develop regional and global temperature reconstructions spanning the past ~11,300 years with a resolution >300 yr; previous reconstructions of global and hemispheric temperatures primarily spanned the last one to two thousand years. To our knowledge, our work is the first attempt to quantify global temperature for the entire Holocene.

Structure of the Global and Regional Temperature Curves: We find that global temperature was relatively warm from approximately 10,000 to 5,000 years before present. Following this interval, global temperature decreased by approximately 0.7°C, culminating in the coolest temperatures of the Holocene around 200 years before present during what is commonly referred to as the Little Ice Age. The largest cooling occurred in the Northern Hemisphere.

Holocene Temperature Distribution: Based on comparison of the instrumental record of global temperature change with the distribution of Holocene global average temperatures from our paleo-reconstruction, we find that the decade 2000-2009 has probably not exceeded the warmest temperatures of the early Holocene, but is warmer than ~75% of all temperatures during the Holocene. In contrast, the decade 1900-1909 was cooler than ~95% of the Holocene. Therefore, we conclude that global temperature has risen from near the coldest to the warmest levels of the Holocene in the past century. Further, we compare the Holocene paleotemperature distribution with published temperature projections for 2100 CE, and find that these projections exceed the range of Holocene global average temperatures under all plausible emissions scenarios.

Frequently Asked Questions and Answers

Q: What is global temperature?

A: Global average surface temperature is perhaps the single most representative measure of a planet’s climate since it reflects how much heat is at the planet’s surface. Local temperature changes can differ markedly from the global average. One reason for this is that heat moves around with the winds and ocean currents, warming one region while cooling another, but these regional effects might not cause a significant change in the global average temperature. A second reason is that local feedbacks, such as changes in snow or vegetation cover that affect how a region reflects or absorbs sunlight, can cause large local temperature changes that are not mirrored in the global average. We therefore cannot rely on any single location as being representative of global temperature change. This is why our study includes data from around the world.

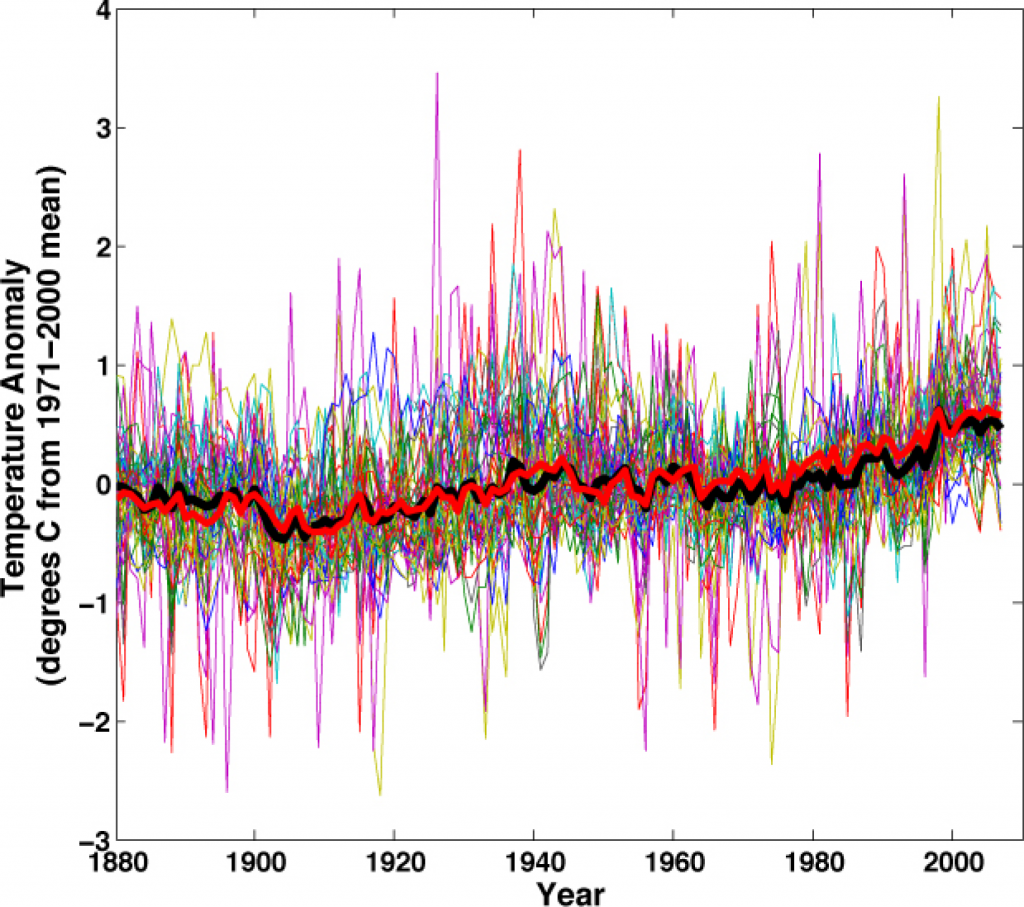

We can illustrate this concept with temperature anomaly data based on instrumental records for the past 130 years from the National Climatic Data Center (http://www.ncdc.noaa.gov/cmb-faq/anomalies.php#anomalies). Over this time interval, an increase in the global average temperature is documented by thermometer records, rising sea levels, retreating glaciers, and increasing ocean heat content, among other indicators. Yet if we plot temperature anomaly data since 1880 at the same locations as the 73 sites used in our paleotemperature study, we see that the data are scattered and the trend is unclear. When these same 73 historical temperature records are averaged together, we see a clear warming signal that is very similar to the global average documented from many more sites (Figure 1). Averaging reduces local noise and provides a clearer perspective on global climate.

Figure 1: Temperature anomaly data (thin colored lines) at the same locations as the 73 paleotemperature records used in Marcott et al. (2013), the average of these 73 temperature anomaly series (bold black line), and the global average temperature from the National Climatic Data Center blended land and ocean dataset (bold red line) (data from Smith et al., 2008).
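The effect of averaging can be sketched with synthetic data. The example below is only an illustration under stated assumptions: the trend (0.006 °C per year), the local noise level (0.8 °C) and the independence of the noise between sites are all made up for the demonstration and are not taken from the NCDC data or from our paper. Real local records share some of their variability, so the noise reduction in practice is smaller than in this idealized case.

```python
import random
import statistics

TREND_PER_YEAR = 0.006   # assumed common warming trend (degC per year), illustrative only
NOISE_SD = 0.8           # assumed local year-to-year noise (degC), illustrative only

def simulate_sites(n_sites=73, n_years=130, seed=0):
    """Return n_sites synthetic anomaly series: one shared trend plus independent local noise."""
    rng = random.Random(seed)
    return [
        [TREND_PER_YEAR * year + rng.gauss(0.0, NOISE_SD) for year in range(n_years)]
        for _ in range(n_sites)
    ]

def average_series(series):
    """Average the sites year by year to form a single stacked series."""
    return [statistics.fmean(values) for values in zip(*series)]

def rms_error(series):
    """Root-mean-square departure of a series from the shared trend."""
    squared = [(value - TREND_PER_YEAR * year) ** 2 for year, value in enumerate(series)]
    return (sum(squared) / len(squared)) ** 0.5

sites = simulate_sites()
stack = average_series(sites)
single_site_error = rms_error(sites[0])
stack_error = rms_error(stack)
print(f"RMS noise at one site:   {single_site_error:.2f} degC")
print(f"RMS noise in 73-site mean: {stack_error:.2f} degC")
```

With independent noise, the scatter of the average shrinks roughly as one over the square root of the number of sites, which is why the shared trend becomes visible in the mean even when it is hard to see at any one location.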

New Scientist magazine has an “app” that allows one to point-and-plot instrumental temperatures for any spot on the map to see how local temperature changes compare to the global average over the past century (http://warmingworld.newscientistapps.com/).

Q: How does one go about reconstructing temperatures in the past?

A: Changes in Earth’s temperature for the last ~160 years are determined from instrumental data, such as thermometers on the ground or, for more recent times, satellites looking down from space. Beyond about 160 years ago, we must turn to other methods that indirectly record temperature (called “proxies”) for reconstructing past temperatures. For example, tree rings, calibrated to temperature over the instrumental era, provide one way of determining temperatures in the past, but few trees extend beyond the past few centuries or millennia. To develop a longer record, we used primarily marine and terrestrial fossils, biomolecules, or isotopes that were recovered from ocean and lake sediments and ice cores. All of these proxies have been independently calibrated to provide reliable estimates of temperature.

Q: Did you collect and measure the ocean and land temperature data from all 73 sites?

A: No. All of the datasets were previously generated and published in peer-reviewed scientific literature by other researchers over the past 15 years. Most of these datasets are freely available at several World Data Centers (see links below); those not archived as such were graciously made available to us by the original authors. We assembled all these published data into an easily used format, and in some cases updated the calibration of older data using modern state-of-the-art calibrations. We made all the data available for download free-of-charge from the Science web site (see link below). Our primary contribution was to compile these local temperature records into “stacks” that reflect larger-scale changes in regional and global temperatures. We used methods that carefully consider potential sources of uncertainty in the data, including uncertainty in proxy calibration and in dating of the samples (see step-by-step methods below).

NOAA National Climatic Data Center: http://www.ncdc.noaa.gov/paleo/paleo.html

PANGAEA: http://www.pangaea.de/

Holocene Datasets: http://www.sciencemag.org/content/339/6124/1198/suppl/DC1

Q: Why use marine and terrestrial archives to reconstruct global temperature when we have the ice cores from Greenland and Antarctica?

A: While we do use these ice cores in our study, they are limited to the polar regions and so give only a local or regional picture of temperature changes. Just as it would not be reasonable to use the recent instrumental temperature history from Greenland (for example) as being representative of the planet as a whole, one would similarly not use just a few ice cores from polar locations to reconstruct past temperature change for the entire planet.

Q: Why only look at temperatures over the last 11,300 years?

A: Our work was the second half of a two-part study assessing global temperature variations since the peak of the last Ice Age about 22,000 years ago. The first part reconstructed global temperature over the last deglaciation (22,000 to 11,300 years ago) (Shakun et al., 2012, Nature 484, 49-55; see also http://www.people.fas.harvard.edu/~shakun/FAQs.html), while our study focused on the current interglacial warm period (last 11,300 years), which is roughly the time span of developed human civilizations.

Q: Is your paleotemperature reconstruction consistent with reconstructions based on the tree-ring data and other archives of the past 2,000 years?

A: Yes, in the parts where our reconstruction contains sufficient data to be robust, and acknowledging its inherent smoothing. For example, our global temperature reconstruction from ~1500 to 100 years ago is indistinguishable (within its statistical uncertainty) from the Mann et al. (2008) reconstruction, which included many tree-ring based data. Both reconstructions document a cooling trend from a relatively warm interval (~1500 to 1000 years ago) to a cold interval (~500 to 100 years ago, approximately equivalent to the Little Ice Age).

Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?

A: Our global paleotemperature reconstruction includes a so-called “uptick” in temperatures during the 20th-century. However, in the paper we make the point that this particular feature is of shorter duration than the inherent smoothing in our statistical averaging procedure, and that it is based on only a few available paleo-reconstructions of the type we used. Thus, the 20th century portion of our paleotemperature stack is not statistically robust, cannot be considered representative of global temperature changes, and therefore is not the basis of any of our conclusions. Our primary conclusions are based on a comparison of the longer term paleotemperature changes from our reconstruction with the well-documented temperature changes that have occurred over the last century, as documented by the instrumental record. Although not part of our study, high-resolution paleoclimate data from the past ~130 years have been compiled from various geological archives, and confirm the general features of the warming trend over this time interval (Anderson, D.M. et al., 2013, Geophysical Research Letters, v. 40, p. 189-193; http://www.agu.org/journals/pip/gl/2012GL054271-pip.pdf).

Q: Is the rate of global temperature rise over the last 100 years faster than at any time during the past 11,300 years?

A: Our study did not directly address this question because the paleotemperature records used in our study have a temporal resolution of ~120 years on average, which precludes us from examining variations in rates of change occurring within a century. Other factors also contribute to smoothing the proxy temperature signals contained in many of the records we used, such as organisms burrowing through deep-sea mud, and chronological uncertainties in the proxy records that tend to smooth the signals when compositing them into a globally averaged reconstruction. We showed that no temperature variability is preserved in our reconstruction at cycles shorter than 300 years, 50% is preserved at 1000-year time scales, and nearly all is preserved at 2000-year periods and longer. Our Monte-Carlo analysis accounts for these sources of uncertainty to yield a robust (albeit smoothed) global record. Any small “upticks” or “downticks” in temperature that last less than several hundred years in our compilation of paleoclimate data are probably not robust, as stated in the paper.
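To give a feel for how smoothing removes short-period variability, here is a minimal sketch that uses a plain running mean as a stand-in. This is an assumption made for illustration: the attenuation in our reconstruction comes from proxy resolution and the Monte Carlo treatment of age uncertainty, not from a boxcar filter, so the gains computed here will not match the 300-, 1000- and 2000-year figures quoted above. The 300-year window is likewise just a convenient choice.

```python
import math

def boxcar_gain(window_years, period_years):
    """Fraction of a sinusoid's amplitude that survives a running mean of the given width."""
    x = math.pi * window_years / period_years
    return abs(math.sin(x) / x)

def measured_gain(window_years, period_years, n_years=5000):
    """Same quantity, measured by actually smoothing an annually sampled unit sinusoid.

    The window is centred, so its true width is 2 * (window_years // 2) + 1 samples.
    """
    signal = [math.sin(2 * math.pi * t / period_years) for t in range(n_years)]
    half = window_years // 2
    width = 2 * half + 1
    smoothed = [
        sum(signal[t - half:t + half + 1]) / width
        for t in range(half, n_years - half)
    ]
    return max(abs(value) for value in smoothed)

for period in (100, 300, 1000, 2000):
    print(f"period {period:>4} yr: retained amplitude fraction {boxcar_gain(300, period):.2f}")
```

The qualitative behaviour is the point: cycles comparable to or shorter than the smoothing length are strongly damped, while cycles much longer than it pass through nearly unchanged.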

Q: How do you compare the Holocene temperatures to the modern instrumental data?

A: One of our primary conclusions is based on Figure 3 of the paper, which compares the magnitude of global warming seen in the instrumental temperature record of the past century to the full range of temperature variability over the entire Holocene based on our reconstruction. We conclude that the average temperature for 1900-1909 CE in the instrumental record was cooler than ~95% of the Holocene range of global temperatures, while the average temperature for 2000-2009 CE in the instrumental record was warmer than ~75% of the Holocene distribution. As described in the paper and its supplementary material, Figure 3 provides a reasonable assessment of the full range of Holocene global average temperatures, including an accounting for high-frequency changes that might have been damped out by the averaging procedure.
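The comparison itself amounts to asking what fraction of a distribution lies below a given value. A minimal sketch follows, with a toy list standing in for the Holocene distribution; the numbers are placeholders chosen for the example, not values from Figure 3, and `fraction_colder` is a hypothetical helper name.

```python
def fraction_colder(distribution, value):
    """Fraction of entries in `distribution` that are strictly colder than `value`."""
    if not distribution:
        raise ValueError("distribution must not be empty")
    return sum(1 for anomaly in distribution if anomaly < value) / len(distribution)

# Toy stand-in for a histogram of Holocene global anomalies (degC); placeholder values only.
toy_holocene = [-0.4, -0.2, 0.0, 0.2]

print(fraction_colder(toy_holocene, -0.3))  # a cold decade sits near the bottom of the range
print(fraction_colder(toy_holocene, 0.1))   # a warm decade sits near the top
```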

Q: What about temperature projections for the future?

A: Our study used projections of future temperature published in the Fourth Assessment of the Intergovernmental Panel on Climate Change in 2007, which suggest that global temperature is likely to rise 1.1-6.4°C by the end of the century (relative to the late 20th century), depending on the magnitude of anthropogenic greenhouse gas emissions and the sensitivity of the climate to those emissions. Figure 3 in the paper compares these published projected temperatures from various emission scenarios to our assessment of the full distribution of Holocene temperatures. For example, a middle-of-the-road emission scenario (SRES A1B) projects global mean temperatures that will be well above the Holocene average by the year 2100 CE. Indeed, if any of the six emission scenarios considered by the IPCC that are shown on Figure 3 are followed, future global average temperatures, as projected by modeling studies, will likely be well outside anything the Earth has experienced in the last 11,300 years.

Technical Questions and Answers:

Q: Why did you revise the age models of many of the published records that were used in your study?

A: The majority of the published records used in our study (93%) based their ages on radiocarbon dates. Radiocarbon is a naturally occurring isotope that is produced mainly in the upper atmosphere by cosmic rays. This form of carbon is then distributed around the world and incorporated into living things. Dating is based on the amount of this carbon left after radioactive decay. It has been known for several decades that radiocarbon years differ from true “calendar” years because the amount of radiocarbon produced in the atmosphere changes over time, as does the rate that carbon is exchanged between the ocean, atmosphere, and biosphere. This yields a bias in radiocarbon dates that must be corrected. Scientists have been able to determine the correction between radiocarbon years and true calendar years by dating samples of known age (such as tree samples dated by counting annual rings) and comparing the apparent radiocarbon age to the true age. Through many careful measurements of this sort, they have demonstrated that, in general, radiocarbon years become progressively “younger” than calendar years as one goes back through time. For example, the ring of a tree known to have grown 5700 years ago will have a radiocarbon age of ~5000 years, whereas one known to have grown 12,800 years ago will have a radiocarbon age of ~11,000 years.
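As a small worked example of the decay step only, the sketch below converts the fraction of radiocarbon remaining into an uncalibrated radiocarbon age. It assumes the standard reporting convention based on the Libby mean life of 8033 years (equivalent to a half-life of 5568 years), a detail not spelled out in this FAQ. It does not perform calibration to calendar years, which requires a calibration curve such as INTCAL09.

```python
import math

# Conventional Libby mean life in years (assumed reporting convention, half-life 5568 yr).
LIBBY_MEAN_LIFE_YEARS = 8033.0

def conventional_radiocarbon_age(fraction_remaining):
    """Uncalibrated radiocarbon age (years) from the fraction of radiocarbon still present."""
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in the interval (0, 1]")
    return -LIBBY_MEAN_LIFE_YEARS * math.log(fraction_remaining)

# A sample retaining half of its original radiocarbon is one Libby half-life old.
print(round(conventional_radiocarbon_age(0.5)))
```

The result is a radiocarbon age, which still has to be translated to a calendar age using the known-age comparisons described above.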

For our paleotemperature study, all radiocarbon ages needed to be converted (or calibrated) to calendar ages in a consistent manner. Calibration methods have been improved and refined over the past few decades. Because our compilation included data published many years ago, some of the original publications used radiocarbon calibration systems that are now obsolete. To provide a consistent chronology based on the best current information, we thus recalibrated all published radiocarbon ages with Calib 6.0.1 software (using the databases INTCAL09 for land samples or MARINE09 for ocean samples) and its state-of-the-art protocol for site-specific locations and materials. This software is freely available for online use at http://calib.qub.ac.uk/calib/.

By convention, radiocarbon dates are recorded as years before present (BP). BP is universally defined as years before 1950 CE, because after that time the Earth’s atmosphere became contaminated with artificial radiocarbon produced as a by-product of nuclear bomb tests. As a result, radiocarbon dates on intervals younger than 1950 are not useful for providing chronologic control in our study.

After recalibrating all radiocarbon control points to make them internally consistent and in compliance with the scientific state-of-the-art understanding, we constructed age models for each sediment core based on the depth of each of the calibrated radiocarbon ages, assuming linear interpolation between dated levels in the core, and statistical analysis that quantifies the uncertainty of ages between the dated levels. In geologic studies it is quite common that the youngest surface of a sediment core is not dated by radiocarbon, either because the top is disturbed by living organisms or during the coring process. Moreover, within the past hundred years before 1950 CE, radiocarbon dates are not very precise chronometers, because changes in radiocarbon production rate have by coincidence roughly compensated for fixed decay rates. For these reasons, and unless otherwise indicated, we followed the common practice of assuming an age of 0 BP for the marine core tops.
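The linear-interpolation part of an age model can be sketched as follows. The control points are hypothetical values invented for the example, with the core top assigned 0 BP as described above; `age_at_depth` is an illustrative helper, and the statistical treatment of age uncertainty between dated levels is not shown.

```python
from bisect import bisect_right

def age_at_depth(depth_cm, control_depths_cm, control_ages_bp):
    """Linearly interpolate a calendar age (yr BP) at a given depth between dated levels.

    control_depths_cm must be strictly increasing and aligned with control_ages_bp.
    """
    if not control_depths_cm[0] <= depth_cm <= control_depths_cm[-1]:
        raise ValueError("depth lies outside the dated interval")
    # Index of the dated level just below the sample, clamped for the deepest level.
    upper = min(bisect_right(control_depths_cm, depth_cm), len(control_depths_cm) - 1)
    lower = upper - 1
    d0, d1 = control_depths_cm[lower], control_depths_cm[upper]
    a0, a1 = control_ages_bp[lower], control_ages_bp[upper]
    return a0 + (depth_cm - d0) / (d1 - d0) * (a1 - a0)

# Hypothetical core: depths (cm) and calibrated ages (yr BP); the top is assumed to be 0 BP.
depths = [0, 50, 120, 200]
ages = [0, 1500, 4000, 9000]

print(age_at_depth(25, depths, ages))
print(age_at_depth(85, depths, ages))
```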

Q: Are the proxy records seasonally biased?

A: Maybe. We cannot exclude the possibility that some of the paleotemperature records are biased toward a particular season rather than recording true annual mean temperatures. For instance, high-latitude proxies based on short-lived plants or other organisms may record the temperature during the warmer and sunnier summer months when the organisms grow most rapidly. As stated in the paper, such an effect could impact our paleo-reconstruction. For example, the long-term cooling in our global paleotemperature reconstruction comes primarily from Northern Hemisphere high-latitude marine records, whereas tropical and Southern Hemisphere trends were considerably smaller. This northern cooling in the paleotemperature data may be a response to a long-term decline in summer insolation associated with variations in the earth’s orbit, and this implies that the paleotemperature proxies here may be biased to the summer season. A summer cooling trend through Holocene time, if driven by orbitally modulated seasonal insolation, might be partially canceled out by winter warming due to well-known orbitally driven rise in Northern-Hemisphere winter insolation through Holocene time. Summer-biased proxies would not record this averaging of the seasons. It is not currently possible to quantify this seasonal effect in the reconstructions. Qualitatively, however, we expect that an unbiased recorder of the annual average would show that the northern latitudes might not have cooled as much as seen in our reconstruction. This implies that the range of Holocene annual-average temperatures might have been smaller in the Northern Hemisphere than the proxy data suggest, making the observed historical temperature averages for 2000-2009 CE, obtained from instrumental records, even more unusual with respect to the full distribution of Holocene global-average temperatures.

Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?

A: Here we elaborate on our short answer to this question above. We concluded in the published paper that “Without filling data gaps, our Standard5×5 reconstruction (Figure 1A) exhibits 0.6°C greater warming over the past ~60 yr B.P. (1890 to 1950 CE) than our equivalent infilled 5° × 5° area-weighted mean stack (Figure 1, C and D). However, considering the temporal resolution of our data set and the small number of records that cover this interval (Figure 1G), this difference is probably not robust.” This statement follows from multiple lines of evidence that are presented in the paper and the supplementary information: (1) the different methods that we tested for generating a reconstruction produce different results in this youngest interval, whereas before this interval, the different methods of calculating the stacks are nearly identical (Figure 1D), (2) the median resolution of the datasets (120 years) is too low to statistically resolve such an event, (3) the smoothing presented in the online supplement results in variations shorter than 300 yrs not being interpretable, and (4) the small number of datasets that extend into the 20th century (Figure 1G) is insufficient to reconstruct a statistically robust global signal, showing that there is a considerable reduction in the correlation of Monte Carlo reconstructions with a known (synthetic) input global signal when the number of data series in the reconstruction is this small (Figure S13).

Q: How did you create the Holocene paleotemperature stacks?

A: We followed these steps in creating the Holocene paleotemperature stacks:

1. Compiled 73 medium-to-high resolution calibrated proxy temperature records spanning much or all of the Holocene.

2. Calibrated all radiocarbon ages for consistency using the latest and most precise calibration software (Calib 6.0.1 using INTCAL09 (terrestrial) or MARINE09 (oceanic) and its protocol for the site-specific locations and materials) so that all radiocarbon-based records had a consistent chronology based on the best current information. This procedure updates previously published chronologies, which were based on a variety of now-obsolete and inconsistent calibration methods.

3. Where applicable, recalibrated paleotemperature proxy data based on alkenones and TEX86 using consistent calibration equations specific to each of the proxy types.

4. Used a Monte Carlo analysis to generate 1000 realizations of each proxy record, linearly interpolated to constant time spacing, perturbing them with analytical uncertainties in the age model and temperature estimates, including inflation of age uncertainties between dated intervals. This procedure results in an unbiased assessment of the impact of such uncertainties on the final composite.

5. Referenced each proxy record realization as an anomaly relative to its mean value between 4500 and 5500 years Before Present (the common interval of overlap among all records; Before Present, or BP, is defined by standard practice as time before 1950 CE).

6. Averaged the first realization of each of the 73 records, and then the second realization of each, then the third, the fourth, and so on, to form 1000 realizations of the global or regional temperature stacks.

7. Derived the mean temperature and standard deviation from the 1000 simulations of the global temperature stack.

8. Repeated this procedure using several different area-weighting schemes and data subsets to test the sensitivity of the reconstruction to potential spatial and proxy biases in the dataset.

9. Mean-shifted the global temperature reconstructions to have the same average as the Mann et al. (2008) CRU-EIV temperature reconstruction over the interval 510-1450 years Before Present. Since the CRU-EIV reconstruction is referenced as temperature anomalies from the 1961-1990 CE instrumental mean global temperature, the Holocene reconstructions are now also effectively referenced as anomalies from the 1961-1990 CE mean.

10. Estimated how much higher frequency (decade-to-century scale) variability is plausibly missing from the Holocene reconstruction by calculating attenuation as a function of frequency in synthetic data processed with the Monte-Carlo stacking procedure, and by statistically comparing the amount of temperature variance the global stack contains as a function of frequency to the amount contained in the CRU-EIV reconstruction. Added this missing variability to the Holocene reconstruction as red noise.

11. Pooled all of the Holocene global temperature anomalies into a single histogram, showing the distribution of global temperature anomalies during the Holocene, including the decadal-to-century scale high-frequency variability that the Monte-Carlo procedure may have smoothed from the record (largely from the accounting for chronologic uncertainties).

12. Compared the histogram of Holocene paleotemperatures to the instrumental global temperature anomalies during the decades 1900-1909 CE and 2000-2009 CE. Determined the fraction of the Holocene temperature anomalies colder than 1900-1909 CE and 2000-2009 CE.

13. Compared global temperature projections for 2100 CE from the Fourth Assessment Report of the Intergovernmental Panel on Climate Change for various emission scenarios.

14. Evaluated the impact of potential sources of uncertainty and smoothing in the Monte-Carlo procedure, as a guide for future experimental design to refine such analyses.
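Steps 4 through 7 above can be sketched in a few lines. This is a simplified illustration, not the code used for the paper: the records are synthetic, the "true" signal, the 100-year age uncertainty and the 0.5 °C temperature uncertainty are assumed values, the average is unweighted, and perturbed ages are simply sorted to keep them in order. All function names are hypothetical.

```python
import random
import statistics

def true_signal(age_bp):
    """Hypothetical 'truth': flat before 5000 BP, then 0.7 degC of cooling toward 0 BP."""
    return 0.0 if age_bp >= 5000 else -0.7 * (5000 - age_bp) / 5000

def make_record(rng, offset):
    """Synthetic proxy record sampled every 200 yr from 0 to 11,000 BP."""
    ages = list(range(0, 11001, 200))
    temps = [true_signal(a) + offset + rng.gauss(0.0, 0.1) for a in ages]
    return ages, temps

def perturb(record, rng, age_sd, temp_sd):
    """One realization: jitter ages and temperatures within their assumed uncertainties."""
    ages, temps = record
    new_ages = sorted(a + rng.gauss(0.0, age_sd) for a in ages)  # sorting keeps ages monotonic
    new_temps = [t + rng.gauss(0.0, temp_sd) for t in temps]
    return new_ages, new_temps

def interpolate(ages, temps, grid):
    """Linear interpolation onto a constant time grid; None where the record has no data."""
    out = []
    for g in grid:
        value = None
        for j in range(len(ages) - 1):
            if ages[j] <= g <= ages[j + 1]:
                span = ages[j + 1] - ages[j]
                w = (g - ages[j]) / span if span else 0.0
                value = temps[j] + w * (temps[j + 1] - temps[j])
                break
        out.append(value)
    return out

def to_anomaly(series, grid, ref=(4500, 5500)):
    """Reference a realization to its own mean over 4500-5500 yr BP."""
    ref_vals = [v for g, v in zip(grid, series) if v is not None and ref[0] <= g <= ref[1]]
    base = statistics.fmean(ref_vals)
    return [None if v is None else v - base for v in series]

def mean_ignoring_gaps(values):
    present = [v for v in values if v is not None]
    return statistics.fmean(present) if present else None

def monte_carlo_stack(records, grid, n_real=1000, age_sd=100.0, temp_sd=0.5, seed=0):
    """Average the k-th realization of every record, for k = 1..n_real, then summarize."""
    rng = random.Random(seed)
    stacks = []
    for _ in range(n_real):
        members = []
        for rec in records:
            ages, temps = perturb(rec, rng, age_sd, temp_sd)
            members.append(to_anomaly(interpolate(ages, temps, grid), grid))
        stacks.append([mean_ignoring_gaps(vals) for vals in zip(*members)])
    # Assumes every grid point is covered in at least one realization.
    columns = [[v for v in col if v is not None] for col in zip(*stacks)]
    return [statistics.fmean(c) for c in columns], [statistics.pstdev(c) for c in columns]

rng = random.Random(42)
records = [make_record(rng, offset) for offset in (-2.0, -1.0, 0.0, 0.5, 1.0, 3.0, 5.0, 8.0)]
grid = list(range(500, 10501, 250))  # kept inside the records' span to avoid edge gaps
stack_mean, stack_sd = monte_carlo_stack(records, grid, n_real=100)
for g, m, s in zip(grid, stack_mean, stack_sd):
    if g % 1000 == 0:
        print(f"{g:>6} yr BP: {m:+.2f} +/- {s:.2f} degC")
```

Because each record is converted to an anomaly before averaging, the large offsets between the synthetic records (standing in for sites with very different absolute temperatures) do not affect the stack. The demonstration uses 100 realizations rather than 1000 only to keep the run time short.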

One cannot conclude from Marcott that there have been no short term events of similar magnitude to the Younger Dryas (approx 15C) within the period covered by Marcott. Such events would be hidden by the lack of resolution so long as they were shorter than 1/2 the sampling rate as per Nyquist.

[Response: These are the same cores that show the YD! You can’t claim that a YD-like event wouldn’t be shown in the same cores. Please, you can do better than this. – gavin]

The fact of the matter is that the 20th C rise is real, anomalous, pretty well understood…

The 20th C rise is clearly real. But with all the uncertainties stated (and restated) by Marcott et al., there’s little to support calling it “anomalous.” And the recent divergence of observed temperatures from predictions suggests it is not “pretty well understood.”

[Response: You can think whatever you like. – gavin]

The FAQ makes it clear that Marcott et al. have measured the broad Holocene temperature dependency at a maximum resolution of ~ 100 years. This shows a clear cooling trend over the last 5000 years. The data says nothing at all about any trends over the last 100 years.

It is only by comparing instrument data e.g. HadCRUT4 with the Marcott data that statements like

“We conclude that the average temperature for 1900-1909 CE in the instrumental record was cooler than ~95% of the Holocene range of global temperatures, while the average temperature for 2000-2009 CE in the instrumental record was warmer than ~75% of the Holocene distribution.”

can be made. I think it would have been better to have made this fact much clearer in the various press releases and media interviews.

Clive Best:

“This fact” was clear from the beginning. It was stated, more than once, in the paper. It was stated, more than once, by Shakun in his Skype interview. It was stated, more than once, in the FAQ.

What do you require? Should “this fact” be written on a 2×4 and be emphatically and repeatedly applied?

John McMaus

Marcott et al. tells us what temperatures were doing over the past few thousand years. GISTEMP, BEST, etc. tell us what temperatures have been doing over the past century.

Clearly a lot of people desperately want to forbid any comparison of A to B. Just because it hurts Anthony Watts’s feelings, though, doesn’t mean that the rest of us have to promise not to put 2 and 2 together.

What I see in this discussion is the death throes of organized denial.

Apologies if this is stepping away from the science too far for this discussion (please delete if so).

I am interested in messaging for policy makers so I have adapted Jos Hagelaar’s http://ourchangingclimate.wordpress.com/2013/03/19/the-two-epochs-of-marcott/ presentation of Marcott et al., Shakun et al., recent temperatures and IPCC’s A1B. I have added in temperature bands and the outlook temperatures and the simplistic alternative emissions outlooks. See here at https://docs.google.com/file/d/0B5NgIqKD_aX4RWF1MGZ4YjhDVzQ/edit .

Please do let me know if this adaptation is mis-representing the outlook choice or history in any policy-relevant way as I would like to get it right. Obviously this is intended purely as messaging to summarise the recent science as currently understood and not as a comment or development of the science.

Paul

@ The_J (55).

GISTEMP, BEST provide data based on one-year means. Marcott et al. provide data based on 100-year means. For any previous century without instrumental data (e.g. from year 1000 to year 1100), we have no way of knowing whether annual temperatures fluctuated over the century by zero degC, by 0.2 degC, 0.5 degC or even 1.0 degC. Therefore, we cannot use a comparison of GISTEMP/BEST and Marcott et al. to judge whether 20th C fluctuations are normal or unusual.

Or do you disagree with that?

Does Tamino’s deconstruction by latitude suggest that the Southern Hemisphere did not experience much change from end-glaciation to now, in fact a slight cooling? That the Holocene is/has been largely a NH experience (due to continental vs marine ice masses)? That the tropics were little affected once the glaciers started to melt?

If the beginning of the Holocene to now in the SH didn’t show much change, one wonders what happened during the glaciation relative to the NH.

Are we seeing sudden, serious climatic variations on a hemispheric level happening because of ocean currents, not atmospheric temperatures?

John McMaus,

Everyone from NYT to Daily Telegraph to McIntyre interpreted the statement as a direct result from the proxy data. It would have been easy to clarify immediately. There remains some uncertainty in my mind regarding the normalisation of proxy anomalies from 5000ybp to 1961-1990. I just added 0.3 C to the Marcott anomalies. What is the net shift used in the paper?

The phrase “not robust” keeps being used. Perhaps someone could explain exactly what this means in scientific terms. Content-free? Unreliable? Weak? Wrong? Vacuous random noise? In probabilities, anywhere from potentially 5% to 95% accurate, but nobody knows? See, eg, the work of William C. Wimsatt on ‘scientific robustness’.

In this matter “should not have been included” seems a good definition.

[Response: it’s not so hard. Any kind of complex analysis involves choices, and for each choice there are usually conflicting justifications. A result is robust if it is the same regardless of the choices made. If the result changes a lot depending on choices that can’t be a priori decided then it isn’t. Easy. – gavin]

Quercetum

You mean, aside from conservation of energy. Find me a mechanism that will cause temperatures to fluctuate by a degree on decadal timescales but remain unchanged on centennial timescales. THAT’s a neat trick.

S. A.: “What I see in this discussion is the death throes of organized denial.”

Optimist! I suspect they’ll be twitching a few decades more.

SecularAnimist is wrong to say this discussion is the “death throes of organised denial” (whatever that is). Instead it shows that climate scientists cannot admit even the slightest error, and it is this failure which drives many people such as myself to accept the sceptical position.

It does not even seem possible for RC to say something like “while accurate, on reflection the publicity was mis-judged” or that “Perhaps better choices could have been made about the end point of the graph”.

[Response: What tosh. We have criticized many press releases and suggested many papers could have ‘done something a little better’. But this isn’t one of those cases – instead we have people making a huge mountain out of an irrelevant molehill. Your assessment is based simply on your prior judgement and an inability to accept that other people can genuinely come to a different conclusion. You find it easier to impugn our integrity rather than agree to disagree. Readers can judge who is more credible. – gavin]

Once again we see Gavin patiently and expertly responding to a long series of determined point missers – what looks like (taking other web sites into consideration) powerful organized denial.

Why?

As Gavin @ 46 says:

“It was indeed predictable that this paper would be attacked regardless of where they ended the reconstruction since it does make the 20th C rise stand out in a longer context than previously, and for some people that is profoundly disquieting.”

So SA @ 56 is curious.

Re: #59 (Doug Proctor)

My deconstruction by latitude is remarkably similar to that of Marcott et al. except for the 20th century. Note in fact that I showed their reconstructions for these latitude bands as well and compared them to mine (which are done by the “differencing method”). Look at panels I, J, and K in their figure 2, you’ll see essentially the same thing.

Paul Rice #57:

Very nice graph! One thing I reacted to was the “Temperatures unknown to humans” label, as humans were probably around during at least the last interglacial, which was a little bit warmer than the present. Perhaps it should say “Temperatures unknown to human civilization”?

I also wonder about the projections for 2100? I guess it is the IPCC 2007 upper range projections. This should be annotated, and I would choose a different color for those projections than the temperature reconstruction (maybe orange and replace the A1B line).

The only cause for concern would be if the original paper was sufficiently incorrectly determining the paleoclimate reconstruction or if the modern instrument record was sufficiently incorrect to invalidate any conclusions comparing the two.

I’m not seeing either point in any of the objections.

This is an excellent piece of work. Like raypierre @3 I have concerns that it is being over-interpreted in the popular press (eg the Times article) and the blogosphere. Even the raw Marcott data is time-smoothed with a bandwidth of about 120 years plus. That means the smoothing may hide high frequency transients (such as spikes) or even high frequency oscillations. So it’s not strictly appropriate to add the recent instrumental record on to the end of the Holocene reconstruction and compare the two as done by the NYT, Tamino & others. It’s conceptually the same error as comparing weather and climate.

I guess there are two caveats to this:

1) Science communication seems to require some degree of simplification, but at what point do we throw the baby out with the bathwater?

2) There is good reason to believe the recent surge in global temperatures is the start of a larger more-prolonged episode due entirely to human actions. This is the key message, and this finding helps highlight that.
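The point above about smoothing hiding short transients can be sketched with a toy series. This assumes a simple centered moving average (boxcar) with a 121-year window and a made-up triangular spike that rises 1°C over 50 years and falls back over the next 50. Neither the filter nor the spike is taken from Marcott et al., whose proxies are smoothed in more complicated ways.

```python
def boxcar(series, width):
    """Centered moving average; only returns points where the full window fits."""
    half = width // 2
    smoothed = []
    for i in range(half, len(series) - half):
        window = series[i - half : i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


# Hypothetical annual anomalies: flat at zero, with a triangular spike
# peaking at +1.0 deg C in year 500 (50 years up, 50 years down).
raw = [max(0.0, 1.0 - abs(year - 500) / 50) for year in range(1000)]

smoothed = boxcar(raw, 121)

print("raw peak:", max(raw))
print("smoothed peak:", round(max(smoothed), 2))
```

In this toy case the smoothed peak is well under half the raw peak: attenuated, but not erased. How much of a real transient would survive depends on the actual resolution of the proxies, which this sketch does not attempt to model.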

I was venting about this to my father (PW Anderson), and he mentioned that he had read the article in Science and I could quote him, and even found the issue for me. Since he will be 90 soon and prefers to stay out of this donnybrook, this is quite a compliment, and I hope Marcott will see it!

He said he was impressed; the article was “very clean” and “well put together”.

Cf. “Death throes of denial”–

#64: Definition (and note spelling): “1. A severe pang or spasm of pain, as in childbirth. See Synonyms at pain.

2. throes A condition of agonizing struggle or trouble: a country in the throes of economic collapse.”

#63: Well, Ray, one can hope. Perhaps it’s just me, but the quality of argument amongst the usual suspects isn’t getting any higher–rather, I think, the reverse.

I have a (different, probably easier) question regarding the Marcott Study.

I was never under the impression that direct changes to obliquity (compared to precession for example, which is of course modulated by obliquity) were the main driver of Holocene climate change, in particular the high-latitude seasonality. This is mentioned in their text on a few occasions. Did they get this right?

There is a message in Marcott that I think many have missed. Marcott tells us almost nothing about how the past compares with today, because of the resolution problem. Marcott recognizes this in their FAQ. The probability function is specific to the resolution. Thus, you cannot infer the probability function for a high resolution series from a low resolution series, because you cannot infer a high resolution signal from a low resolution signal. The result is nonsense.

However, what Marcott does tell us is still very important and I hope the authors of Marcott et al will take the time to consider. The easiest way to explain is by analogy:

50 years ago astronomers searched extensively for planets around stars using lower resolution equipment. They found none and concluded that they were unlikely to find any at the existing resolution. However, some scientists and the press generalized this further to say there were unlikely to be planets around stars, because none had been found.

This is the argument that since we haven’t found 20th century equivalent spikes in low resolution paleo proxies, they are unlikely to exist. However, this is a circular argument and it is why Marcott et al has gotten into trouble. It didn’t hold for planets and now we have evidence that it doesn’t hold for climate.

What astronomy found instead was that as we increased the resolution we found planets. Not just a few, but almost everywhere we looked. This is completely contrary to what the low resolution data told us and this example shows the problems with today’s thinking. You cannot use a low resolution series to infer anything about a high resolution series.

However, the reverse is not true. What Marcott is showing is that in the high resolution proxies there is a temperature spike. This is equivalent to looking at the very first star with high resolution equipment and finding planets. To find a planet on the first star tells us you are likely to find planets around many stars.

Thus, what Marcott is telling us is that we should expect to find a 20th century type spike in many high resolution paleo series. Rather than being an anomaly, the 20th century spike should appear in many places as we improve the resolution of the paleo temperature series. This is the message of Marcott and it is an important message that the researchers need to consider.

Marcott et al: You have just looked at your first star with high resolution equipment and found a planet. Are you then to conclude that since none of the other stars show planets at low resolution, that there are no planets around them? That is nonsense. The only conclusion you can reasonably make from your result is that as you increase the resolution of other paleo proxies, you are more likely to find spikes in them as well.

MODS: This post’s artificial controversy is centered on a specific graph. IMHO, that graph oughta be front-and-center. For your readers to have to link to Denialist sites to see the graph (as everyone knows, seeing the actual paper is against the rules for regular folks), well….

58 Quer said, “For any previous century without instrumental data (eg. from year 1000 to year 1100), we have no way of knowing whether annual temperatures fluctuated over the century by zero degC, by 0.2 degC, 0.5 degC or even 1.0 degC.”

Doesn’t make sense to me. You’re really talking about four ~1C deviations. First, the ~1C rise in temps, then the ~1C decline to get back to “average”. Then, you’d need to compensate for the up-wiggle with another ~1C decline, and finally end with a second ~1C rise to get back to “normal”.

Fudge it as you desire, but to “hide the incline” would require something close to four 1C deviations over a few hundred years. That would surely leave a tremendous mark on the biosphere and our civilizations. Even if you could cobble up a scenario that this particular study wouldn’t pick up, it would leave traces. For example, freeze-intolerant species could show up in a core for a century and then disappear. Agriculture, cities, all kinds of stuff would be affected by such wild and constant swings. Are you saying that such things would be overlooked by the biologists who study the same (and other) cores and by the anthropologists who study past civilizations?

62 Ray L said, “You mean, aside from conservation of energy. Find me a mechanism that will cause temperatures to fluctuate by a degree on decadal timescales but remain unchanged on centennial timescales. THAT’s a neat trick.”

You forget Arthur C Clarke’s, “Any sufficiently advanced technology is indistinguishable from magic.”

Since ALL science is sufficiently advanced for that statement to apply to Denialists, they need not even reply.

But since you ask, a serious (Yellowstone) volcano at the beginning of a low solar period, followed by a solar max and almost no volcanoes might do it. (probably not, but hey, just speculating)

Now, if you add in, “a mechanism that leaves NO trace other than temperature changes”, then we’re into seriously Hogwarts territory….

AndyL #64: try a google scholar search on climate science error. There. I did it for you. Over 1.8-million hits. Even if you take out the papers where the word relates to “error bar” or “error bars” you still have 1.7-million hits to choose from.

Anyone attracted to a side that is extremely self-critical would not feel comfortable with the self-styled sceptics. Any argument goes, including some that are complete balderdash. Here is a compendium of articles from one of my blogs that makes the case. Just the tip of the iceberg. Read their stuff really sceptically and you will find a lot more.

Anyone who thinks climate scientists never admit to errors has never read the literature.

Here’s an example for you: previous studies of the volume and depth of Antarctic ice turn out to be flawed; the latest British Antarctic Survey paper on the subject corrects the error. It seems the Antarctic is a rather smaller land mass than previously thought and if all the ice went, a large part of it would be isolated islands.

Then there’s the error in estimates of the speed of loss of Arctic sea ice, which is happening a lot faster than generally modelled:

Going back a bit further, the IPCC’s 2007 report underestimated sea level rise.

And of course there is the well-known fiasco of the IPCC report on Himalayan glaciers melting by 2035.

The vast majority of significant errors understate the effects of climate change; see Hansen on scientific reticence to understand why.

This is all very well known, right out in the open. No cover up. No conspiracy. Not on this side of the aisle anyway. What about yours?

Chris Colose #72

I don’t know. But I found they have messed up the references for their insolation sources. I think they meant to refer to Laskar et al 2004. And used this site for the calculations.

@67 Perwis Thanks! And thanks for the suggestions. You are right I need to make the differences in projections clearer. The intention in the graph is to demonstrate policy choices faced now, but the original data should be separated more as you say.

@RealClimate.org Many thanks for this blog in general and this Q&A/discussion in particular. All immensely helpful.

I continue to be most dismayed by academics like Pielke Jr who continue to work so hard to miss the point. His critique of the reconstruction, using McIntyre as a reference, is made especially absurd in attempting to cloud the actual paper and its evidence by basing his attack on not liking the press release. At least many of these attacks are becoming seriously silly, which could be viewed as heartening. It seems the same in the media: Revkin did not like this Q&A because it came out on a Sunday. You couldn’t make it up.

Paul

Quercetum writes:

For any previous century without instrumental data (eg. from year 1000 to year 1100), we have no way of knowing whether annual temperatures fluctuated over the century by zero degC, by 0.2 degC, 0.5 degC or even 1.0 degC. Therefore, we cannot use a comparison of GISTEMP/BEST and Marcott et al to judge whether 20th C fluctuations are normal or unusual.

Or do you disagree with that?

Yes, I disagree with that. First of all, the present CO2-induced temperature rise is almost certainly going to be around for longer than a century. A paleofeature of equivalent magnitude and duration would certainly show up in Marcott’s reconstruction.

Secondly, we know that the current temperature rise is real and we know the physical basis for it, but as far as I know nobody has suggested a physical mechanism that would cause sub-century-scale global “warm spikes” on the order of 1C. And we see nothing like that in the high-resolution proxy record, do we? Before you ask me to believe in gremlins, shouldn’t you have some line of reasoning — a physical process that would produce gremlins, or paleoevidence of gremlins?

Remember that such a spike has to be *global* not just local, it has to be sustained for at least a few decades but not as long as a century, it has to be large (on the scale of 1C) and it has to be *warm* not *cold*.

Can you give me an example of when such an event occurred, and/or an explanation of what physical process did or would produce it?

Nancy Green, I would be more inclined to respond to your analogy about detecting planets around other stars, if you acknowledged how seriously wrong your previous comments in this thread have been.

You claimed that the Younger Dryas was a 15C swing in temperatures, and that the Younger Dryas isn’t detectable in the proxies. But both of those claims are wildly, absurdly wrong. I think you may have been confused by a statement on Wikipedia that the summit area on Greenland was 15C colder during YD than it is today. But that’s not a global-scale change of 15C! As discussed here, the entirety of the change from last glacial maximum to the Holocene was approximately 5C, and the Younger Dryas was approximately 0.6C. And of course the Younger Dryas is readily detectable in climate proxies … that’s how we know about it.

#73–A cross-post from Tamino’s where I called it “ingenious but perverse.”

Nancy’s point boils down to: ‘It is happening now, therefore it probably happened before.’

Except that we know quite well why it is happening now–it is because humanity has increased atmospheric CO2 by ~40% since the 19th century, with a fillip of miscellaneous other greenhouse gases. We are pretty much certain that that has never been done before–that whole Plato “Atlantis thing” being pretty much debunked and all.

So the ‘logic’ requires us to ignore what we do know about the present–the very period about which we have the best, most complete, least uncertain information.

Denialism in a nutshell!

This follows a very familiar path (Yamal springs to mind). Step 1 – Create a controversy. Step 2 – rely on sympathetic outlets to amplify the supposed controversy. Step 3 – try to mire other scientists in the supposed controversy. Makes no difference to science, and it matters to almost no-one outside the denier-sphere and their chosen targets. Nevertheless, I am grateful to this blog and to Tamino for clarifying matters.

@ 73 One fine analogy deserves another. You bury your head in your GPS but you don’t look out the window. Worse, you fail to imagine the reason to. It has been explained to you, but you don’t bother to look up.

You are explaining GPS to people who already know how to use GPS, but you still don’t know where you are.

Susan Anderson@70

Wow! Your father’s almost 90. That makes me feel old. Then again, that means that when I interacted with him while I was at Physics Today he was in his 70s. I wouldn’t have guessed that even then. My best to him.

I don’t think I can ever remember seeing the denialati so terrified!

And remember: when dealing with weapons-grade stupid, wear protection!

Marcott et al. disavow any statistical significance in their paleotemperature reconstructions for the recent 100 year period. In plain language, there is no evidence to be drawn from their work which would indicate that the temperature trend in the past 100 year period is different from the trend in earlier periods studied. Marcott et al. state the reasons in their FAQ at “Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?”

The overlay of data from other sources and the adjustment of the authors’ data baseline to conform to the Mann series serves to put the authors’ work in a comparative context. The only conclusion to be drawn from this is that the authors’ paleotemperature reconstructions are not at variance with models forecasting future average global temperature.

Nancy Green says:

I agree with nearly everything you write. However I don’t think there is strong evidence for the following statement.

“However, the reverse is not true. What Marcott is showing is that in the high resolution proxies there is a temperature spike. This is equivalent to looking at the first star with high resolution equipment and finding planets. To find a planet on the first star tells us you are likely to find planets around many stars.”

I studied only the measurement data. I binned the data in 50 year intervals to avoid interpolation, and then used the Hadley 5×5 grid global averaging software. The results for the published dates are here. The results for the corrected dates are here. Both sets agree with the instrument data, but there is no clear uptick.

I think it would be more correct to say that Marcott’s result is compatible with the observed instrumental rise in 20th century temperatures.
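For readers wondering what "binning to avoid interpolation" looks like in practice, here is a minimal sketch, assuming samples are (age in years before present, temperature anomaly) pairs. The function name `bin_means` and the sample values are invented for illustration; the 5×5 grid averaging step mentioned above is not reproduced here.

```python
from collections import defaultdict


def bin_means(samples, width=50):
    """Average samples within fixed-width age bins, keyed by bin start age.

    Bins with no samples are simply absent: nothing is interpolated.
    """
    bins = defaultdict(list)
    for age, value in samples:
        bin_start = int(age // width) * width
        bins[bin_start].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(bins.items())}


# Hypothetical, irregularly spaced proxy samples: (age in yr BP, anomaly in deg C).
samples = [(10, 0.1), (40, 0.3), (60, 0.2), (130, -0.1), (149, 0.1), (260, 0.4)]

for start, mean in bin_means(samples).items():
    print(f"{start}-{start + 50} yr BP: {mean:+.2f}")
```

The trade-off in this approach is that sparse records leave empty bins rather than filled-in values, so a feature that falls between samples just does not appear.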

Creationists have been predicting the imminent demise of Darwinism for 150 years. Deniers have been predicting the imminent end of warming and AGW for 30 years (while simultaneously admitting “Of course the planet is warming up” when pushed).

Their prognostications have been, and will continue to be, just as much in vain as before.

Simon, to the extent that you think that spikes like the current one lurk in the low-resolution history of the past, could you explain the physics of such spikes? What would cause them to start and stop?

simon abingdon wrote: “Just watch while the tenuous link between increasing atmospheric CO2 and global surface temperature grows ever weaker, almost by the month.”

What fantasy world are you living in? One surrounded by towering walls of willful ignorance, apparently.

“Nancy is saying ‘if we were able to peer more and more closely into the past we would find that it looked more and more like the recent present’…”

Except, of course, where we can, via specific proxy records, it doesn’t. She makes an assertion not backed by evidence, and, where the evidence exists, not consistent with that evidence.

“Kevin, everyone now knows that such an assertion is no more than mere hypothesising, flagrantly and unashamedly begging the question. Just watch while the tenuous link between increasing atmospheric CO2 and global surface temperature grows ever weaker, almost by the month.”

Oh … well, whatever.

@garhighway #89 “could you explain the physics of such spikes?”

I really shouldn’t have to. They’re only “spikes” because of the grossly compressed x-axis needed for millennial presentation. If you made the x and y axes commensurate you’d see ordinary natural variation rises and falls.

“If you made the x and y axes commensurate you’d see ordinary natural variation rises and falls.”

Oh, I see, CO2 doesn’t cause warming – graph paper causes warming!

Simon and Nancy have been asked repeatedly by others here to propose a *physical basis* for all these spikes that are thought to be hiding in the paleo records due to the mean proxy resolution of ~120 years in the proxies used by Marcott et al. So, what is it? Fairies? Leprechauns?

What could cause, say, a 0.5C upwards spike in temps over 50 years that would also dip back underneath the radar in the next 50 years? The answer is: nothing that we know of. For the Simons and the Nancys of the world it is simply enough that someone like McIntyre can cast doubt. It doesn’t matter if there is no rational basis for the doubt.

And so these elusive spikes become the climate science equivalent of evolution’s ‘god of the gaps’.

Simon,

Do you have any idea how rare it is to see the temperature spike, say, a degree in a few decades? Do you have any comprehension of the energies involved? Have you heard of conservation of energy? Or does the desperation behind your magical thinking make you willing to give up all rationality in the Universe?

@Ray Ladbury #94 “Do you have any idea how rare it is to see the temperature spike, say, a degree in a few decades?”

No Ray I’m afraid I don’t. Pray tell me, how rare is it? (I don’t suppose it’s happened recently or we’d already know about it).

Dr Schmidt I haven’t visited RC for some time and I’m struck by the difference in approach to posters. I congratulate you on your patient, considered, informative and numerous responses. I can’t think of another blog dealing with climate science where the number and calibre of responses even approaches let alone equals the level reached here.

SA. What? Did we repeal the laws of physics while I was at work? Or does Ray have it right; magic?

Gavin, Ray, and others. The authors chose your site to post the FAQ’s. Why is it that you don’t insist on having them answer questions, rather than the sometimes ambiguous answers you are forced to give. Not sarc, a serious question.

[Response: I’ve asked them to chime in when they can and hopefully they will. I’m not quite sure why you think we can ‘insist’ on anything though – blogging and/or commenting here or elsewhere is a voluntary activity and sometimes other things take precedence. I would much rather have a few considered responses come in slowly than hurried responses to dozens of queries. -gavin]

Nancy Green, simon abingdon:

Indeed, what physical mechanism could cause a spike that causes 25*10^22 Joules or more of warming (http://www.nodc.noaa.gov/OC5/3M_HEAT_CONTENT/), and then disperses it within 300 years?!? A spiking mechanism, I should note, other than the 40% increase in CO2 we’ve caused over the last one and a half centuries?

Gremlins, I would say. Definitely gremlins…. If you have neither a plausible mechanism, nor evidence, for such a spike (there’s lots of evidence against such occurrences, in fact), you’re just blowing smoke, promoting nonsense and confusion.

We _are_ responsible for recent warming, whether you like it or not.

Gavin, “I’ve asked them to chime in when they can and hopefully they will. I’m not quite sure why you think we can ‘insist’ on anything though – blogging and/or commenting here or elsewhere is a voluntary activity and sometimes other things take precedence. I would much rather have a few considered responses come in slowly than hurried responses to dozens of queries.”

Perhaps “insist” was not the proper word. I just think it odd that Marcott et al chose RC to post their FAQ’s, but thus far have not chosen to participate.