Progress has been made in recent years in understanding the observed past sea-level rise. As a result, process-based projections of future sea-level rise have become dramatically higher and are now closer to semi-empirical projections. However, process-based models still underestimate past sea-level rise, and they still project a smaller rise than semi-empirical models.

Sea-level projections were probably the most controversial aspect of the 4th IPCC report, published in 2007. As an author of the paleoclimate chapter, I was involved in some of the sea-level discussions during preparation of the report, but I was not part of the writing team for the projections. At the core of the controversy were the IPCC projections, which are based on process models (i.e. models that aim to simulate individual processes like thermal expansion or glacier melt). Many scientists felt that these models were not mature and understated the sea-level rise to be expected in future, and the IPCC report itself documented the fact that the models seriously underestimated past sea-level rise. (See our in-depth discussion published after the 4th IPCC report appeared.) That was confirmed again with the most recent data in Rahmstorf et al. 2012.

As a result of the IPCC-discussions, in 2006 I developed a complementary approach to estimating future sea-level rise and offered it to IPCC (but it was not used); this was published in Science in 2007 (and with over 300 citations to date it turned out to be the second-most-cited of the ~10,000 sea-level papers that were published since 2007). This “semi-empirical approach” linked the rate of global sea-level rise to global temperature in a simple physically motivated equation, calibrated with past data. It suggested that sea-level might rise about twice as much by 2100 AD as predicted by IPCC. My main conclusion was not that semi-empirical models are necessarily better, but that “the uncertainty in future sea-level rise is probably larger than previously estimated”. We will come back to this issue, i.e. the overall uncertainty across different model types and using all available information, in part 2 of this post.
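For readers who want to see the form of that relation, here is a minimal sketch in the notation of the 2007 Science paper. H is global-mean sea level, T is global-mean temperature, T_0 is a baseline temperature at which sea level would be in equilibrium, and a is a proportionality constant; both a and T_0 are calibrated with past data.

```latex
\frac{dH}{dt} = a \, (T - T_0)
\qquad\Longrightarrow\qquad
H(t) = H(t_0) + a \int_{t_0}^{t} \bigl( T(t') - T_0 \bigr) \, dt'
```

The integral form is what turns a temperature scenario into a sea-level projection: the warmer it is relative to T_0, and the longer it stays that way, the more sea level rises.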

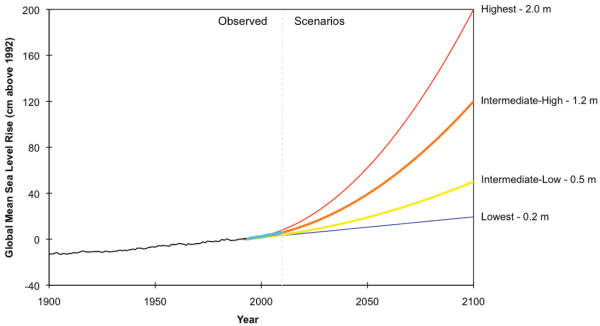

Much higher projections than IPCC are also a consistent feature of more recent assessments published since 2007, e.g. the Antarctic Science Report, the Copenhagen Diagnosis, the Arctic Report of AMAP and the recent World Bank Report. Higher projections are also commonly used in coastal planning, e.g. in the Netherlands, in California and North Carolina, and included in the recommendations of the US Army Corps of Engineers. And last month NOAA published the following new sea-level scenarios for the US National Climate Assessment:

Fig. 1. Source: Global Sea Level Rise Scenarios for the United States National Climate Assessment, NOAA (2012)

The range intermediate-low to intermediate-high of 0.5-1.2 meters is almost the same as the range 0.5-1.4 meters of my 2007 Science paper.

This week an expert elicitation by Bamber and Aspinall was published in Nature Climate Change, which confirms that the body of expert opinion expects much higher sea level rise than the 4th IPCC report. The median contribution from ice sheets alone by 2100 was estimated as 29 cm, with a 95th percentile value of 84 cm. The paper compares a range for total sea-level rise for the RCP4.5 scenario of 33-132 cm based on their expert elicitation to our recent semi-empirical range (Schaeffer et al., Nature Climate Change 2012) of 64-121 cm.

Recent progress in understanding sea-level rise

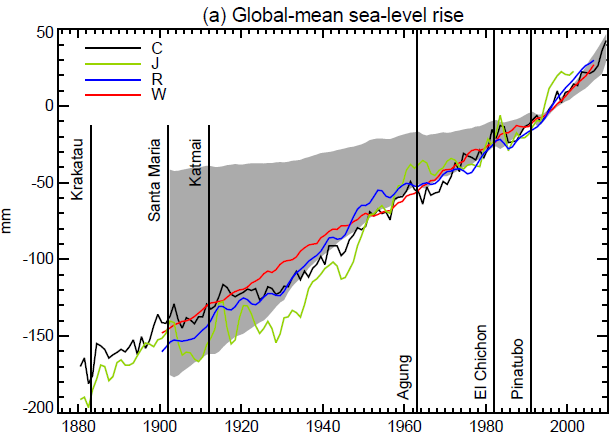

Just before Christmas an overview of process-based sea-level estimates for the 20th Century was published by Gregory et al in Journal of Climate, a paper with many authors that presents a whole suite of estimates for individual sea-level contributions, partly data-based and partly model-based. The paper then looks at the sum of all these components, see Fig. 2.

Fig. 2: Comparison of timeseries of annual-mean global-mean sea-level rise from four analyses of tide-gauge data (lines) with the range of 144 synthetic timeseries (grey shading). Each of the synthetic timeseries is the sum of a different combination of thermal expansion, glacier, Greenland ice-sheet, groundwater and reservoir timeseries. Source: Gregory et al in Journal of Climate.

This diagram shows the range of sea-level histories obtained by combining the single estimates in all possible combinations (144 in all). The observed sea-level history can be reproduced in this way, but the observations lie at the very edge of the range. The authors write:

We would judge that a given synthetic timeseries gave a satisfactory account of observed global mean sea-level rise if it lay within the uncertainty envelope for 90% of the time. Very few of the synthetic timeseries pass this test.

If we take the mid-point of the grey range, this shows that the central estimate of 20th Century sea-level rise, based on adding up all processes, is ~11 cm. The central estimate of observed rise is ~16 cm, i.e. ~40% larger.

The authors conclude that a residual trend is needed to make up for the discrepancy and argue that this must come from a long-term ice loss in Antarctica. They write:

If we interpret the residual trend as a long-term Antarctic contribution, an ongoing response to climate change over previous millennia, we may conclude that the budget can be satisfactorily closed.

I guess it depends on how easily one is satisfied. The estimated required residual trend is given as 0 – 0.2 mm/year, so it explains at most 2 cm of rise over the 20th Century and does not make up for the shortfall mentioned; as I understand it, it just increases the synthetic range enough to bring most observations into its 90% confidence interval, though still near its edge.
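The arithmetic behind this can be checked in a few lines. The sketch below uses only the rounded figures quoted above (11 cm from the processes, 16 cm observed, a residual trend of at most 0.2 mm/year over 100 years); the function and variable names are illustrative and do not come from the paper.

```python
def budget_gap(process_cm, observed_cm, residual_mm_per_yr, years=100):
    """Return (shortfall, residual contribution, remaining gap), all in cm."""
    shortfall = observed_cm - process_cm
    residual_cm = residual_mm_per_yr * years / 10.0  # convert mm to cm
    return shortfall, residual_cm, shortfall - residual_cm


# Rounded values quoted in the text, not read from the paper's data files.
shortfall, residual, remaining = budget_gap(11.0, 16.0, 0.2)
print(f"shortfall {shortfall:.0f} cm, residual at most {residual:.0f} cm, "
      f"still unexplained about {remaining:.0f} cm")
```

With these rounded inputs, even the upper end of the residual range covers less than half of the shortfall.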

In any case the now higher sea-level estimates from process models for the 20th Century naturally also imply higher projections for the 21st. Here it is important to compare like with like – same emissions scenario, same time interval. E.g. for the A1B scenario over the interval 1990-2095, the 4th IPCC report’s central estimate is 34 cm while the semi-empirical estimate of Science in 2007 is 78 cm. While we do not want to enter discussions about the draft 5th IPCC report (this would be premature, given there will still be numerous changes), given that it is in the public domain now it is no secret that for this scenario it projects 59 cm – a whopping 73% increase over the 4th report and much closer to my 2007 semi-empirical estimate. However, the more recent semi-empirical models have tended to give higher projections, so there remains a substantial gap between these two modelling approaches.

Personally, for various reasons I expect that process-based estimates may well keep edging up in future as the models are improved, considering e.g. the recent Nature paper by Winkelmann et al. and the remaining tendency to underestimate past rise. I dearly hope, however, that the truth will turn out to be a lower rise than suggested by semi-empirical models, for the sake of all people who live near the sea or love the coast.

Is 20th C sea-level rise related to global warming?

The Gregory et al. paper was greeted with enthusiasm in “climate skeptics” circles, since it includes the peculiar sentence:

The implication of our closure of the budget is that a relationship between global climate change and the rate of global-mean sea-level rise is weak or absent in the past.

The abstract culminates in a similar phrase, which can easily be misunderstood as meaning that global warming has not contributed to sea-level rise. That is wrong of course, and the claimed closure of the sea-level budget in this paper is only possible because increasing temperatures are taken into account as the prime driver of 20th Century sea-level rise.

When read in full context, the true meaning of the statement becomes clear: it is intended to discredit semi-empirical sea-level modelling. That is both fallacious and odd, given that the paper does not even contain any examination of the link between global temperature and the rate of global sea-level rise which is at the core of semi-empirical models, and which has been thoroughly examined in a whole suite of papers (e.g. Rahmstorf et al. 2011). Instead, it dismisses semi-empirical models offhand based on two arguments.

The first is that individual contributions to the sea-level budget do not show a clear link to global temperature. That is simply a fundamental misunderstanding of the semi-empirical approach: its principal idea is to cut through the uncertainty and complexity surrounding the time evolution of the individual components by considering only the overall sea-level rise and its link to global temperature. The usefulness of this idea is based on the following factors:

1. More accurate data. We may assume that the observational data for the time evolution of global sea-level rise are much more accurate than those for any individual component. A particular irony is that the glacier melt component of “process models” is in fact estimated by a semi-empirical equation quite similar to the one we use for sea level, but poorly validated since data are available only for ~350 of the world’s ~200,000 glaciers. Thus results from questionable semi-empirical modelling are used to dismiss rather better-validated semi-empirical modelling.

2. Partial cancellation of regional climate variability. It is obvious that e.g. the Greenland ice sheet responds to local and not global temperature, so it is not surprising that the Greenland component alone shows little relation to global temperature in the past. However, the ice sheet contributions come from both polar areas, the mountain glacier components from global land masses across a range of latitudes (with a mid- to high-latitude bias), while thermal expansion is particularly sensitive to warming over low- to mid-latitude oceans since the thermal expansion coefficient is much larger there than in colder waters. This broad mix of different regions contributing to sea-level rise makes it likely that the total rise is more clearly linked to global-mean temperature than any single component.

3. Future dominance of global warming over natural regional variability. Since the global warming signal increases over time while the amplitude of natural climate variability does not (much), the effect of global warming on sea level will become more dominant in future, making it likely that semi-empirical models are an even better approximation in future than they were in the past.

The counter-argument that with progressive warming we run out of glacier ice is an artifact of the split between mountain glaciers and larger ice masses and does not apply if total sea level is considered. As shown in Rahmstorf et al. 2011, the argument vanishes if we consider all continental ice together as a continuum (see their Fig. 13), in which melting progressively affects the colder ice surfaces as climate heats up.

Has sea-level rise accelerated?

The second argument for dismissing semi-empirical models in Gregory et al. is that “acceleration of global-mean sea-level rise during the 20th Century [is] either insignificant or small”. That argument was also put forth by Houston and Dean (2011) (see our discussion of this paper), and in our published comment on this we showed why it is false (Rahmstorf and Vermeer 2011). The argument is based only on considering the acceleration factor from a quadratic fit, an almost meaningless statistic (see our tutorial explanation). In fact, if the rate of sea-level rise perfectly follows global-mean temperature, then such a small acceleration factor is exactly what one gets, due to the specific shape of the global temperature curve. Thus, a small quadratic acceleration factor in no way speaks against semi-empirical models, but rather is what one would find if the semi-empirical model were perfect. Frankly, I am quite surprised that the authors (ten of whom are also authors of the sea-level chapter of the upcoming IPCC report) display such unfamiliarity with the fundamentals of (and prejudice against) semi-empirical models.
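To make concrete what the "acceleration factor from a quadratic fit" is, here is a minimal sketch that computes it in plain Python. It fits h ≈ c0 + c1·t + c2·t² by least squares and reports 2·c2. The function names are illustrative, and the rate history in the demo is made up (a rise, a mid-century plateau, a renewed rise); it is not real sea-level or temperature data.

```python
def quadratic_acceleration(years, levels):
    """Return 2*c2 from a least-squares fit levels ~ c0 + c1*t + c2*t**2,
    with t measured from the mean year (centring improves conditioning)."""
    mid = sum(years) / len(years)
    t = [y - mid for y in years]
    # Normal equations: sum_j s[i+j] * c_j = b[i] for i = 0, 1, 2.
    s = [sum(ti ** k for ti in t) for k in range(5)]
    b = [sum(h * ti ** k for ti, h in zip(t, levels)) for k in range(3)]
    m = [[s[i + j] for j in range(3)] + [b[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting on the 3x3 system.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return 2.0 * m[2][3] / m[2][2]


def synthetic_levels(rates):
    """Integrate yearly rates (mm/yr) into a sea-level curve (mm)."""
    level, out = 0.0, []
    for r in rates:
        out.append(level)
        level += r
    return out


years = list(range(1900, 2001))
rates = []  # made-up three-phase rate history in mm/yr, NOT real data
for y in years:
    if y < 1940:
        rates.append(0.7 + 1.3 * (y - 1900) / 40)
    elif y < 1970:
        rates.append(2.0)
    else:
        rates.append(2.0 + 1.2 * (y - 1970) / 30)

acc = quadratic_acceleration(years, synthetic_levels(rates))
print(f"quadratic acceleration factor: {acc:.4f} mm/yr^2")
print(f"rate at start / end: {rates[0]:.1f} / {rates[-1]:.1f} mm/yr")
```

Whatever rate history is fed in, the fit returns one number for the whole record, so the three distinct phases of the rate are not visible in it.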

As John Church phrased it right after the paper was published:

I would argue that there is an unhealthy focus on one single statistic — an acceleration number — and insufficient focus on the temporal history of sea level change.

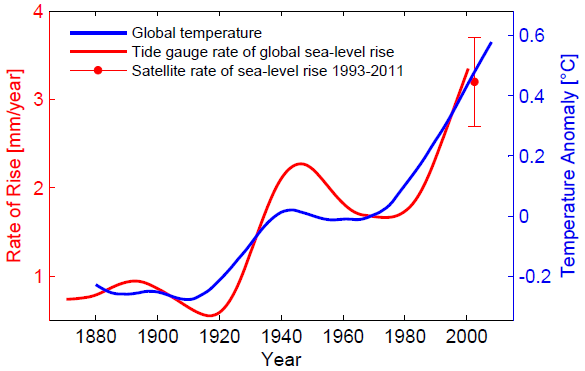

That is well said – and the temporal histories of the Church&White sea-level data and global temperature match rather well, as the following graph shows.

Fig. 3: Rate of global sea-level rise based on the data of Church & White (2006), and global mean temperature data of GISS, both smoothed. The satellite-derived rate of sea-level rise of 3.2 ± 0.5 mm/yr is also shown. The strong similarity of these two curves is at the core of the semi-empirical models of sea-level rise. Graph adapted from Rahmstorf (2007).

If we do focus on the temporal history, we find that in all but one of the sea-level reconstructions shown in Gregory et al. (their Fig. 6) the most recent rate of rise is unprecedented since the start of the record, despite the curves ending already in 2000 and all below the more reliable satellite rate of 3.2 mm/year. Early in the 20th Century, all show rates around 1.5 mm/year. In addition there is good evidence for very low rates of SLR in centuries preceding the 20th (presented e.g. in the 4th IPCC report or more recently in Kemp et al. 2011).

The one curve that does not show an unprecedented recent rate in Gregory et al. is the data of Jevrejeva et al. (2008). That contrasts with our treatment of the same data in Rahmstorf et al. 2011 (Fig. 5), where we applied a stronger and more sophisticated smoothing (as compared to the running average used by Gregory et al.) which lowers the temporary high peak in the rate around 1950. This peak is not found in any of the other data sets, and as shown in Fig. 2 above, it makes the Jevrejeva data run outside the grey range found by combining all contributions.

I think this peak is spurious and results from the fact that the data of Jevrejeva et al. cannot be considered an estimate of global-mean sea level on such relatively short time scales (a couple of decades). For example, in this data set the North Atlantic data (including Arctic and Mediterranean, overall 16.6% of the global ocean area) provide 31% of the global average and are weighted four times as strongly as the Indian Ocean, although the latter is larger (19.5% of the global ocean). The Northern Hemisphere is weighted more strongly than the Southern Hemisphere, although the latter has a greater ocean surface area. (For more on the Jevrejeva weighting scheme, see our reader’s exercise below.) That is not to say that other tide-gauge based estimates guarantee a properly area-weighted global sea-level history, but it means that Jevrejeva et al. are guaranteed to not represent an area-weighted global mean, while e.g. Church and White (2006, 2011) are making a decent attempt at representing a global mean.

All reconstructions to a different extent are affected by spurious variability that is not real variability in global sea level. The satellite data are least affected by this because they almost cover the entire global ocean (and they show a remarkably constant rate of sea-level rise since 1993). The spurious variability is bound to increase further back in time due to the fewer early tide gauges. It is bound to be much reduced by time-averaging, because in the longer run the effect of water “sloshing around” the global ocean under the influence of winds and currents (due to natural variability) will largely average out, given the restoring force of gravity. Hence, the further back in time one looks, the more time averaging is required to see a signal rather than noise, and the tide gauge data sets generally require much more averaging than the satellite data.

My bottom line: The rate of sea-level rise was very low in the centuries preceding the 20th, very likely well below 1 mm/yr in the longer run. In the 20th Century the rate increased, but not linearly due to the non-linear time evolution of global temperature. The diagnosis is complicated by spurious variability due to undersampling, but in all 20th C time series that attempt to properly area-average, the most recent rates of rise are the highest on record. At the end of the 20th and beginning of the 21st Century the rate had reached 3 mm/year, a rather reliable rate measured by satellites. This increase in the rate of sea-level rise is a logical consequence of global warming, since ice melts faster and heat penetrates faster into the oceans in a warmer climate.

Update 14 January: Today I received this 200-page report on sea-level rise by the National Research Council of the National Academies in the post. The committee that wrote it derived their own sea-level rise projections which are highly consistent with those of NOAA and my 2007 Science paper: “Global sea level is projected to rise 8-23 cm (3-9 in) by 2030, relative to 2000 levels, 18-48 cm (7-19 in) by 2050, and 50–140 cm (20-55 in) by 2100.”

Continue to Part 2 of this post, with some thoughts on “cycles” of sea level rise, the maturity of process models and the IPCC process.

—

A reader’s exercise: the “virtual station method” of Jevrejeva et al.

A thorough, critical assessment of different climate data sets is of course not the job of blogs but of the IPCC, and its experts get several years to prepare this. Nevertheless, some insights can already be obtained by the sea-level amateur spending half an hour on the following exercise.

In the virtual station method the global ocean is subdivided into 13 ocean regions. The global mean sea level is computed as the arithmetic average over these 13 regions. As mentioned above, this does not provide an area-weighted global average, since e.g. the North Atlantic consists of four regions (western and eastern North Atlantic, Arctic and Mediterranean) while the entire Indian Ocean is just one region. The North Pacific is two regions and a half: the western and eastern North Pacific, and the Central Pacific which stretches across both hemispheres.
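The mismatch between region count and ocean area is easy to quantify. The sketch below takes the 13 regions described above and the area shares quoted earlier in this post (16.6% for the North Atlantic including Arctic and Mediterranean, 19.5% for the Indian Ocean); the names are illustrative.

```python
N_REGIONS = 13  # regions in the virtual station method, as described above

# basin -> (number of regions, share of global ocean area in %), from this post
basins = {
    "North Atlantic (incl. Arctic, Mediterranean)": (4, 16.6),
    "Indian Ocean": (1, 19.5),
}


def weight_percent(n_regions, total=N_REGIONS):
    """Weight of a basin in an unweighted mean over `total` regions, in %."""
    return 100.0 * n_regions / total


for name, (n, area) in basins.items():
    w = weight_percent(n)
    print(f"{name}: weight {w:.1f}%, area share {area:.1f}%, "
          f"weight per unit area {w / area:.2f}")
```

A ratio of 1 in the last column would correspond to proper area weighting.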

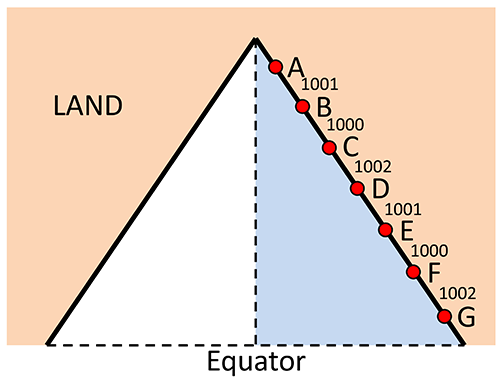

But now let us look at how the average sea level change within each region is computed. Take the example in the graph shown below, where the light-blue ocean region (think of it as eastern half of a northern hemisphere ocean basin) is covered by 7 tide gauges A-G. These are spaced about 1000 km apart – the exact distances are shown in the graph. Maybe you want to ponder first how you would average those stations to obtain a reasonable average over the light-blue ocean region!

The virtual station method averages the rise observed at these stations (over a given time interval) by application of the following simple set of rules:

1. Take the two stations closest to each other and average them.

2. Replace those two stations by a new “virtual station” which consists of the above average, located at the mid-point between the two stations.

3. Go back to step 1 with this new set of stations.

Repeat this until you are left with only one virtual station, which now is your regional average.

So here is our reader puzzle: with what weighting factors do the above 7 stations enter the final regional average?

Update 10 January: Our reader MARodger was the first to give the right answer:

A, G: 1/4

B, C, D: 1/8

E, F: 1/16

So the weights attached to these gauges differ by up to a factor of 4, apparently for no good reason. To be fair, the method was not designed to deal with regular spacing of tide gauges as in this idealised example, but with cases where spacing is highly irregular. I think it is a reasonable method e.g. to average a cluster of gauges into one number before averaging it with one far-away gauge. Nevertheless I think the example illustrates important limitations of this method, and the point that the weights by design must differ by factors of 2, 4, 8, 16… Maybe this post will inspire some mathematically-minded reader to propose a better scheme.
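The three rules can be sketched in a few lines of Python. This is a minimal illustration, not the code used by Jevrejeva et al.: stations are placed on a straight line rather than on a map, and the coordinates are invented (roughly 1000 km apart), since the exact distances from the graph are not reproduced here.

```python
from fractions import Fraction
from itertools import combinations


def virtual_station_weights(positions):
    """Apply the three rules to stations on a line.

    positions: dict name -> coordinate in km (1-D for simplicity).
    Returns dict name -> weight of that station in the final average.
    """
    # Each (virtual) station is its coordinate plus the weights of the
    # real stations that have been averaged into it so far.
    stations = [(pos, {name: Fraction(1)}) for name, pos in positions.items()]
    while len(stations) > 1:
        # Rule 1: find the two stations closest to each other.
        i, j = min(combinations(range(len(stations)), 2),
                   key=lambda p: abs(stations[p[0]][0] - stations[p[1]][0]))
        (xi, wi), (xj, wj) = stations[i], stations[j]
        # Rule 2: replace them by their plain average at the mid-point,
        # which halves the weight of every real station involved.
        merged = {k: w / 2 for k, w in {**wi, **wj}.items()}
        stations = [s for k, s in enumerate(stations) if k not in (i, j)]
        stations.append(((xi + xj) / 2, merged))
        # Rule 3: go round again with the new set of stations.
    return stations[0][1]


# Hypothetical coordinates in km, NOT the distances shown in the graph.
demo_positions = {"A": 0, "B": 1050, "C": 2000, "D": 3040,
                  "E": 4060, "F": 4960, "G": 6000}

for name, weight in sorted(virtual_station_weights(demo_positions).items()):
    print(name, weight)
```

With these made-up coordinates the function returns the same weights as in the answer above. Each merge halves the weight of every station already inside a virtual station, which is why the weights can only be powers of 1/2.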

There is an additional issue in that the weights change over time, since the number of tide gauges available changes over time. That is another avenue by which spurious variability can enter the final average curve.

[With thanks to Svetlana Jevrejeva for answering my questions on the virtual station method.]

References

- S. Rahmstorf, G. Foster, and A. Cazenave, "Comparing climate projections to observations up to 2011", Environmental Research Letters, vol. 7, pp. 044035, 2012. http://dx.doi.org/10.1088/1748-9326/7/4/044035

- S. Rahmstorf, "A Semi-Empirical Approach to Projecting Future Sea-Level Rise", Science, vol. 315, pp. 368-370, 2007. http://dx.doi.org/10.1126/science.1135456

- J.L. Bamber, and W.P. Aspinall, "An expert judgement assessment of future sea level rise from the ice sheets", Nature Climate Change, vol. 3, pp. 424-427, 2013. http://dx.doi.org/10.1038/nclimate1778

- M. Schaeffer, W. Hare, S. Rahmstorf, and M. Vermeer, "Long-term sea-level rise implied by 1.5 °C and 2 °C warming levels", Nature Climate Change, vol. 2, pp. 867-870, 2012. http://dx.doi.org/10.1038/nclimate1584

- J.M. Gregory, N.J. White, J.A. Church, M.F.P. Bierkens, J.E. Box, M.R. van den Broeke, J.G. Cogley, X. Fettweis, E. Hanna, P. Huybrechts, L.F. Konikow, P.W. Leclercq, B. Marzeion, J. Oerlemans, M.E. Tamisiea, Y. Wada, L.M. Wake, and R.S.W. van de Wal, "Twentieth-Century Global-Mean Sea Level Rise: Is the Whole Greater than the Sum of the Parts?", Journal of Climate, vol. 26, pp. 4476-4499, 2013. http://dx.doi.org/10.1175/JCLI-D-12-00319.1

- R. Winkelmann, A. Levermann, M.A. Martin, and K. Frieler, "Increased future ice discharge from Antarctica owing to higher snowfall", Nature, vol. 492, pp. 239-242, 2012. http://dx.doi.org/10.1038/nature11616

- S. Rahmstorf, M. Perrette, and M. Vermeer, "Testing the robustness of semi-empirical sea level projections", Climate Dynamics, vol. 39, pp. 861-875, 2011. http://dx.doi.org/10.1007/s00382-011-1226-7

- J.R. Houston, and R.G. Dean, "Sea-Level Acceleration Based on U.S. Tide Gauges and Extensions of Previous Global-Gauge Analyses", Journal of Coastal Research, vol. 27, pp. 409-417, 2011. http://dx.doi.org/10.2112/JCOASTRES-D-10-00157.1

- S. Rahmstorf, and M. Vermeer, "Discussion of: Houston, J.R. and Dean, R.G., 2011. Sea-Level Acceleration Based on U.S. Tide Gauges and Extensions of Previous Global-Gauge Analyses. Journal of Coastal Research, 27(3), 409–417", Journal of Coastal Research, vol. 27, pp. 784, 2011. http://dx.doi.org/10.2112/JCOASTRES-D-11-00082.1

- A.C. Kemp, B.P. Horton, J.P. Donnelly, M.E. Mann, M. Vermeer, and S. Rahmstorf, "Climate related sea-level variations over the past two millennia", Proceedings of the National Academy of Sciences, vol. 108, pp. 11017-11022, 2011. http://dx.doi.org/10.1073/pnas.1015619108

- S. Jevrejeva, J.C. Moore, A. Grinsted, and P.L. Woodworth, "Recent global sea level acceleration started over 200 years ago?", Geophysical Research Letters, vol. 35, 2008. http://dx.doi.org/10.1029/2008GL033611

- J.A. Church, and N.J. White, "A 20th century acceleration in global sea‐level rise", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2005GL024826

- J.A. Church, and N.J. White, "Sea-Level Rise from the Late 19th to the Early 21st Century", Surveys in Geophysics, vol. 32, pp. 585-602, 2011. http://dx.doi.org/10.1007/s10712-011-9119-1

Kees,

I would be leery of the prediction accuracy of any synthetic time series that so poorly estimates past rates.

Krige, of course, was a South African mining engineer*. Geostats appears to have moved on a bit to techniques based more in statistical simulation. As I understand it, you attempt to replicate the spatial field in a probabilistic model arranged to reproduce all the knowns: the point samples (with errors), the “geology” (inferred spatial patterns / discontinuities), and the variograms / correlations for each domain. Then you just monte carlo-up an answer.

You’re suggesting getting that “geology” from the satellite record patterns, and the variograms / correlations as well. Makes sense. A look at how some in another field have done it might be helpful.

(* I think he’s still with us, though I doubt practicing.)

#44 jake: the major natural influence on long-term climate variability is the sun and once you smooth out the solar cycle, that’s very long-term (millions of years for a significant effect).

Milankovitch cycles are on the order of multiples of 10k years (variations in the planet’s orbit, precession, etc.).

Very occasionally there is a big enough change in sunspot activity to put the planetary climate in a different regime.

You can find a summary of the scale of solar variability vs. greenhouse warming here. The critical thing is greenhouse warming is the bigger effect, but it increases in steps that are smaller in the short term than natural variability, so it does not overwhelm natural variability unless you look at a sufficiently long period.

You can find more detail at Skeptical Science.

Thanks for your thoughtful and useful response, Eric. It certainly would seem reasonable and prudent, given the best science, to start planning a move away from the lowest meter above sea level.

And of course, time does not stop at the end of the century. The Foster and Rohling paper is talking about an eventual rise of 9 to 31 meters. That means most of the major cities along the eastern seaboard will be totally or largely under water. Not tomorrow, perhaps, but something we now have to consider for long-term plans.

It takes a very long time to make changes necessary to accommodate the evacuation of cities holding collectively tens of millions of people (billions, worldwide, iirc). We should at least start sending the right signals, and avoid major infrastructure investments that will likely or certainly be threatened by high sea levels and by the increasingly intense surges and storms that are coming in the next few decades, at least. I see little hint, though, of this type of talk among policy makers yet.


Hong Kong and NYC both had large storm surges last year. NYC had large damage, but Hong Kong had prepared and suffered little damage.

The ice sheet models are being run in conjunction with the general circulation models that notoriously missed the Arctic Sea Ice Melt Event. While this has been blamed on various factors, I think these models understate transport of latent heat into the Arctic.

I expect the ice sheet models run in conjunction with the community circulation models to understate ice sheet melt and collapse to the same extent that Arctic Sea Ice Melt was understated, and for the same problems with the same models.

@Eric Steig, inline response #43,

Hansen & Sato think Pfeffer et al might still under-estimate the risk of fast SLR:

http://arxiv.org/ftp/arxiv/papers/1105/1105.0968.pdf

They say(pp.22-23):

“The kinematic constraint may have relevance to the Greenland ice sheet, although the assumptions of Pfeffer et al. (2008) are questionable even for Greenland. They assume that ice streams this century will disgorge ice no faster than the fastest rate observed in recent decades.

That assumption is dubious, given the huge climate change that will occur under BAU scenarios, which have a positive (warming) climate forcing that is increasing at a rate dwarfing any known natural forcing. BAU scenarios lead to CO2 levels higher than any since 32 My ago, when Antarctica glaciated. By mid-century most of Greenland would be experiencing summer melting in a longer melt season. Also some Greenland ice stream outlets are in valleys with bedrock below sea level. As the terminus of an ice stream retreats inland, glacier sidewalls can collapse, creating a wider pathway for disgorging ice.

The main flaw with the kinematic constraint concept is the geology of Antarctica, where large portions of the ice sheet are buttressed by ice shelves that are unlikely to survive BAU climate scenarios. West Antarctica’s Pine Island Glacier (PIG) illustrates nonlinear processes already coming into play. The floating ice shelf at PIG’s terminus has been thinning in the past two decades as the ocean around Antarctica warms (Shepherd et al., 2004; Jenkins et al., 2010).

Thus the grounding line of the glacier has moved inland by 30 km into deeper water, allowing potentially unstable ice sheet retreat. PIG’s rate of mass loss has accelerated almost continuously for the past decade (Wingham et al., 2009) and may account for about half of the mass loss of the West Antarctic ice sheet, which is of the order of 100 km3 per year (Sasgen et al., 2010).

PIG and neighboring glaciers in the Amundsen Sea sector of West Antarctica, which are also accelerating, contain enough ice to contribute 1-2 m to sea level. Most of the West Antarctic ice sheet, with at least 5 m of sea level, and about a third of the East Antarctic ice sheet, with another 15-20 m of sea level, are grounded below sea level. This more vulnerable ice may have been the source of the 25 ± 10 m sea level rise of the Pliocene (Dowsett et al., 1990, 1994). If human-made global warming reaches Pliocene levels this century, as expected under BAU scenarios, these greater volumes of ice will surely begin to contribute to sea level change.

Indeed, satellite gravity and radar interferometry data reveal that the Totten Glacier of East Antarctica, which fronts a large ice mass grounded below sea level, is already beginning to lose mass (Rignot et al., 2008).

The eventual sea level rise due to expected global warming under BAU GHG scenarios is several tens of meters, as discussed at the beginning of this section. From the present discussion it seems that there is sufficient readily available ice to cause multi-meter sea level rise this century, if dynamic discharge of ice increases exponentially.”

How certain can we be that Hansen & Sato are wrong and that the ice sheets are not as vulnerable as they fear or suspect?

Aaron,

Recent research suggests that a shift in the path of Russian fresh water from the Eurasian Basin towards the Canadian Basin, created by changes in the AO, led to increased ice melt in recent years. NASA brief and Nature paper cited below:

http://www.nasa.gov/topics/earth/features/earth20120104.html

http://www.nature.com/nature/journal/v481/n7379/full/nature10705.html


Dan H.,

Just to clarify, I am sure you meant that the shift could explain the huge downward fluctuations in 2007 and 2012, not the general nonlinear decline of sea ice that accounts for over 50% reduction in volume over 40 years. Right?

Ray,

That is correct.

#58, 60–Well, since Morison et al. say: “Here we use observations to show that during a time of record reductions in ice extent from 2005 to 2008…” one would be assuming & extrapolating even WRT 2012, let alone the long-term trend.

And actually, I don’t see anything in either link that supports Dan’s contention. For example, the lead in the NASA link says:

“A new NASA and University of Washington study allays concerns that melting Arctic sea ice could be increasing the amount of freshwater in the Arctic enough to have an impact on the global “ocean conveyor belt” that redistributes heat around our planet.”

Anything in there suggesting the paper is about attributing ice loss? Sounds like it’s about attributing Arctic freshening, rather.

The closest thing to Dan’s interpretation appears to be:

But note that that is a “could” statement, not a “does” statement, and that its scope is regional–“that part of the Arctic”–not pan-Arctic.

If the idea is to represent the SLR of the entire ocean area from samples just along the edge (and I think this is correct), then perhaps a better weighting scheme is:

1. Determine the center of the ocean area of interest. Call this point C.

2. Determine the distances D from each gauge to point C.

3. Weight each gauge by the factor W=1/D²

4. Run a standard weighted average using these weights.
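The four steps above can be sketched in a few lines of Python. This is only an illustration of the proposed scheme, not anything taken from the post or from Church and White: the function name, the gauge tuples and the choice of point C are all hypothetical, and the distances are plain planar ones (a real ocean basin would need great-circle distances).

```python
import math

def inverse_square_weighted_mean(gauges, center):
    """Weighted mean of gauge values with weights W = 1/D^2.

    gauges: list of (x, y, value) tuples (hypothetical planar coordinates).
    center: (x, y) of point C, assumed to be chosen by the user (step 1).
    """
    cx, cy = center
    weighted_sum = 0.0
    weight_total = 0.0
    for x, y, value in gauges:
        # Step 2: distance D from this gauge to point C.
        d = math.hypot(x - cx, y - cy)
        if d == 0.0:
            raise ValueError("a gauge located exactly at C has infinite weight")
        # Step 3: weight W = 1/D^2.
        w = 1.0 / d ** 2
        # Step 4: accumulate the standard weighted average.
        weighted_sum += w * value
        weight_total += w
    return weighted_sum / weight_total

# Two made-up gauges at distances 1 and 2 from C: weights are 1 and 0.25.
example = inverse_square_weighted_mean([(1.0, 0.0, 2.0), (2.0, 0.0, 6.0)], (0.0, 0.0))
```

Note that the gauge nearest to C dominates the result, which is a property of the 1/D² weighting itself and not of this sketch.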

Keith Pickering @63 — Far too simple:

http://en.wikipedia.org/wiki/Geoid

Actually kriging is just a special case of least-squares collocation, a variant of the least squares method especially suitable for time series and spatial fields developed by geodesists like Helmut Moritz and Torben Krarup, primarily for use in modelling the gravity field.

Geodesists would have things to give to climatology in the field of statistical methodology. Gauss was a land surveyor / geodesist ;-)

Also Church and White’s method is well known in the geodesy / adjustment theory literature, as a ‘hybrid-norm optimization method’ combining observational and a priori data (and I’m pretty sure its formal equivalence to LSC could be shown). It is well described in Kaplan et al. 2000.
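For readers who have not met least-squares collocation, here is a minimal one-dimensional sketch of its prediction step, assuming a zero-mean signal and a Gaussian covariance function. The covariance model, the parameter values and the function names are illustrative assumptions, not taken from Moritz, Krarup or Church and White. With a zero-mean signal this is the same formula usually written for simple kriging.

```python
import numpy as np

def gaussian_cov(a, b, variance=1.0, length=1.0):
    """Assumed covariance model: Gaussian in the separation between points."""
    diff = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (diff / length) ** 2)

def collocation_predict(obs_x, obs_val, new_x, noise_var=0.0,
                        variance=1.0, length=1.0):
    """Predict a zero-mean signal at new_x from observations at obs_x.

    Implements s_hat = C_new,obs (C_obs,obs + noise_var * I)^-1 * obs_val.
    """
    obs_x = np.asarray(obs_x, dtype=float)
    obs_val = np.asarray(obs_val, dtype=float)
    new_x = np.asarray(new_x, dtype=float)
    # Signal covariance between observation points, plus observation noise.
    c_oo = gaussian_cov(obs_x, obs_x, variance, length)
    c_oo = c_oo + noise_var * np.eye(len(obs_x))
    # Cross-covariance between prediction points and observation points.
    c_no = gaussian_cov(new_x, obs_x, variance, length)
    # Solve the linear system rather than forming the inverse explicitly.
    return c_no @ np.linalg.solve(c_oo, obs_val)

# Made-up observations; with noise_var=0 the predictor interpolates them.
x_obs = [0.0, 1.0, 2.0]
y_obs = [1.0, 2.0, 0.5]
y_hat = collocation_predict(x_obs, y_obs, [0.5, 1.5])
```

With zero noise the predictor passes exactly through the observations, and far from any data it falls back to the assumed mean of zero.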

Stefan, just curious: you say that your 2007 paper in Science was “with over 300 citations to date it turned out to be the second-most-cited of the ~10,000 sea-level papers that were published since 2007”.

Which paper is the most cited sea level paper in that period? Pfeffer et al. 2008?

So Krige just reinvented an existing technique in geodesy? I don’t know that it matters; popularising techniques across disciplines seems to be something important in itself. Another example is the Cooley–Tukey fast Fourier transform, one of the more technologically important algorithms of our time. Turns out that one is also down to Herr Gauss (and geodesy?), well over a century before.

On the formal equivalence suggestion — maybe, but it somehow feels unlikely. (How would multiple mean replacement equate with something involving squares of differences…) But then I admit to being surprised, long ago, to discover that Thiessen polygons and the Voronoi triangulation are equivalent. (If you use a conventional triangulation-based contouring algorithm to compute a spatial average, the answer should be identical to what you’d get with the Thiessen construction.)

BTW, John Church has been in the media a bit here lately. His humble but firm, “I’m sorry, but the quote is wrong” nicely deflated Mr Murdoch’s putative journal of record (The Australian newspaper).

I searched the Web of Science for “sea level” OR “sea-level” from 2007 onwards, and the winner in terms of number of citations was this paper:

http://www.pnas.org/content/105/6/1786.full (Hint: Stefan Rahmstorf is also a co-author)

Rahmstorf (2007) was second.

Third I cannot remember. :)

http://www.utexas.edu/news/2012/05/10/ice_sheet/

http://www.utexas.edu/news/files/figures-crop.jpg

“… The basin is divided into two components (A and B) and lies just inland of the West Antarctic Ice Sheet’s grounding line (black line), where streams of ice flowing toward the Weddell Sea begin to float.”

Steep reverse bed slope at the grounding line of the Weddell Sea sector in West Antarctica

doi:10.1038/ngeo1468

So imagine you have a fleet of bottom-trawlers,

and you can sell ‘most anything you drag up to hungry customers.

How’s these fresh new shallow seas look to you, eh? Productive?

http://www.earthtimes.org/newsimage/west-antarctic-ice-shelf-nudge-push-collapse_2_10512.jpg

There’s more: http://www.utexas.edu/news/2011/06/01/fjords_antarctic_ice/

excerpt follows (press release; references to journals at the original):

“… the first high-resolution topographic map of one of the last uncharted regions of Earth, the Aurora Subglacial Basin, an immense ice-buried lowland in East Antarctica larger than Texas….

…

“We chose to focus on the Aurora Subglacial Basin because it may represent the weak underbelly of the East Antarctic Ice Sheet, the largest remaining body of ice and potential source of sea-level rise on Earth,” said Donald Blankenship, principal investigator for the ICECAP project ….

Because the basin lies kilometers below sea level, seawater could penetrate beneath the ice, causing portions of the ice sheet to collapse ….

… the East Antarctic Ice Sheet grew and shrank widely and frequently, from about 34 to 14 million years ago, causing sea level to fluctuate by 200 feet. Since then, it has been comparatively stable, causing sea-level fluctuations of less than 50 feet. The new map reveals vast channels cut through mountain ranges by ancient glaciers that mark the edge of the ice sheet at different times in the past, sometimes hundreds of kilometers from its current edge.

“We’re seeing what the ice sheet looked like at a time when Earth was much warmer than today,” said Young. “Back then it was very dynamic, with significant surface melting. Recently, the ice sheet has been better behaved.”

However, recent lowering of major glaciers near the edge detected by satellites has raised concerns about this sector of Antarctica.

Young said past configurations of the ice sheet give a sense of how it might look in the future, although he doesn’t foresee it shrinking as dramatically in the next 100 years.

---- end excerpt ----

Oh, well, then, 100 years …. waitaminit, last I heard it was a thousand …

Thanks for the reference to the Aurora Basin. I have been watching Totten, Moscow University (which drain the Aurora region) and Amery further west for a while, and I suspect the EAIS is not as deep in slumber as is assumed. See e.g. Pritchard et al. (Nature 2012, v484 pp 502 et seq., doi:10.1038/nature10968) documenting dynamic thinning of EAIS shelves, driven by CDW.

Seems that everywhere we look we see big holes under the ice upstream of the grounding lines. I wonder if all those glaciers are perched on pinning points downstream of the giant pits.

sidd

I found only one paper citing the rapid drumlin paper (I mentioned over at Stoat a while back): http://scholar.google.com/scholar?cites=8777559913109189749&as_sdt=5,47&sciodt=0,47&hl=en

But that one has 20 cites. Might be more there worth looking at, about the rate of change under the ice.

Uh, oh. This is the kind of thing I was speculating about over at Stoat years ago, back when the notion of rapid change under the ice was just beginning to be mentioned as a possibility, back when drumlins were first noticed forming fast.

http://www.sciencedirect.com/science/article/pii/S0169555X08001347

http://dx.doi.org/10.1016/j.geomorph.2008.04.005

Geomorphology Volume 102, Issues 3–4, 15 December 2008, Pages 364–375

A meltwater origin for Antarctic shelf bedforms with special attention to megalineations

“The geomorphology of troughs crossing the Antarctic shelf is described and interpreted in terms of ice-stream hydrology. The scale of tunnel channels on the inner shelf and the absence of sediment at their mouths are taken to infer catastrophic drainage. Drumlins on the inner and outer shelves with pronounced crescentic and hairpin scours are also interpreted as products of catastrophic flow. Gullies and channels on the continental slope and turbidites on the rise and abyssal plain point to abundant meltwater discharge across the shelf.

“Attempts to explain this morphology and sedimentology in terms of release or discharge of meltwater by pressure melting, strain heating, Darcian flow, or advection in deforming till are shown to be unrealistic. We suggest that meltwater flow across the middle and outer shelves might have been in broad, turbulent floods, which raises the possibility that megascale glacial lineations (MSGL) on the shelf might originate by erosion in turbulent flow. This possibility is explored by use of analogs for MSGL from flood and eolian landscapes and marine environments. An extended discussion reflects on objections that stand in the way of the flood hypothesis.”

Here’s another worth a look, for thinking about how drainage happens: http://www.sciencedirect.com/science/article/pii/S104061820100091X

http://dx.doi.org/10.1016/S1040-6182(01)00091-X

and

http://www.sciencedirect.com/science/article/pii/S0169555X1000139X

http://dx.doi.org/10.1016/j.geomorph.2010.03.020

Hank, thanks for the interesting references.

Have you seen this? “Map of Antarctic bedrock elevation now available in Atlas of the Cryosphere” http://nsidc.org/data/atlas/news/bedrock_elevation.html

Really nice pictures, and with scalable online-bedrock maps. I guess there are better and more detailed maps available now, but they give a nice overview.

perwis, yep, thanks, I followed up in the Greenland Melt topic.

Just one thing: I know that acronyms sort of prove one’s deeper understanding of one’s subject, ahem, but I prefer to see how the writer thinks without needing my ‘lexicon of current acronyms’ :)

It can become a little tiresome for someone interested to look up all the acronyms used by you guys/gals. When I use one, I also make the effort to spell it out in words at least once (normally inside parentheses). It simplifies the understanding, and allows you to catch the flow of ideas better.

Otherwise it’s interesting reading.