Climate sensitivity is a perennial topic here, so the multiple new papers and discussions around the issue, each with different perspectives, are worth discussing. Since this can be a complicated topic, I’ll focus in this post on the credible work being published. There’ll be a second part from Karen Shell, and in a follow-on post I’ll comment on some of the recent games being played in and around the Wall Street Journal op-ed pages.

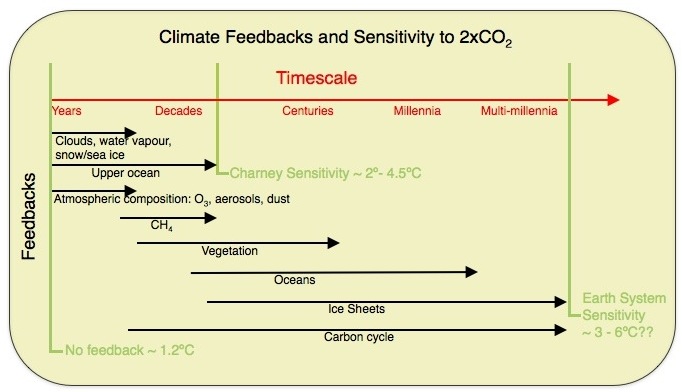

What is climate sensitivity? Nominally it is the response of the climate to a doubling of CO2 (or to a ~4 W/m2 forcing); however, it should be clear that this is a function of time, with feedbacks associated with different components acting on a whole range of timescales, from seconds to multi-millennial and even longer. The figure above gives a sense of the different components (see Palaeosens (2012) for some extensions).

In practice, people often mean different things when they talk about sensitivity. For instance, the sensitivity only including the fast feedbacks (e.g. ignoring land ice and vegetation), or the sensitivity of a particular class of climate model (e.g. the ‘Charney sensitivity’), or the sensitivity of the whole system except the carbon cycle (the Earth System Sensitivity), or the transient sensitivity tied to a specific date or period of time (e.g. the Transient Climate Response (TCR), the warming after 70 years of CO2 increasing at 1% per year). As you might expect, these are all different, and care needs to be taken to define terms before comparing things (there is a good discussion of the various definitions and their scope in the Palaeosens paper).

Each of these numbers is an ’emergent’ property of the climate system, i.e. something that is affected by many different processes and interactions, and that can’t simply be derived from knowledge of a small-scale process. It is generally assumed that these are well-defined and single-valued properties of the system (and in current GCMs they clearly are), and while the paleo-climate record (for instance the glacial cycles) is supportive of this, it is not absolutely guaranteed.

There are three main methodologies that have been used in the literature to constrain sensitivity: The first is to focus on a time in the past when the climate was different and in quasi-equilibrium, and estimate the relationship between the relevant forcings and temperature response (paleo constraints). The second is to find a metric in the present day climate that we think is coupled to the sensitivity and for which we have some empirical data (these could be called climatological constraints). Finally, there are constraints based on changes in forcing and response over the recent past (transient constraints). There have been new papers taking each of these approaches in recent months.

All of these methods are philosophically equivalent. There is a ‘model’ which has a certain sensitivity to 2xCO2 (that is either explicitly set in the formulation or emergent), and observations to which it can be compared (in various experimental setups) and, if the data are relevant, models with different sensitivities can be judged more or less realistic (or explicitly fit to the data). This is true whether the model is a simple 1-D energy balance, an intermediate-complexity model or a fully coupled GCM, but note that there is always a model involved. This formulation highlights a couple of important issues: the observational data don’t need to be direct (and the more complex the model, the wider the range of possible constraints), and the relationship between the observations and the sensitivity needs to be demonstrated (rather than simply assumed). The last point is important: while in a 1-D model there might be an easy relationship between the measured metric and climate sensitivity, that relationship might be much more complicated or non-existent in a GCM. This way of looking at things lends itself quite neatly to a Bayesian framework (as we shall see).
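To make the forward-model idea concrete, here is a minimal Python sketch, assuming the simplest possible ‘model’: a zero-dimensional equilibrium energy balance in which the response to a forcing ΔF is S·ΔF/F2x, with F2x taken as 3.7 W/m2. The forcing, the observed temperature change and its uncertainty in the example are hypothetical illustrative numbers, not values from any of the papers discussed here, and the uniform prior is only one (contested) choice.

```python
import math

F2X = 3.7  # assumed forcing for doubled CO2, W/m^2

def predicted_response(s, forcing):
    """Equilibrium warming (K) of a 0-D energy balance model with sensitivity s (K per 2xCO2)."""
    return s * forcing / F2X

def posterior_over_grid(s_grid, forcing, obs_dt, sigma_dt, prior):
    """Bayesian update on a grid: prior(s) times a Gaussian likelihood of the observed change."""
    weights = []
    for s in s_grid:
        misfit = (obs_dt - predicted_response(s, forcing)) / sigma_dt
        weights.append(prior(s) * math.exp(-0.5 * misfit ** 2))
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical paleo-style example: a cooling of 4.0 +/- 0.8 K under a forcing of -7.4 W/m^2,
# with a uniform prior on sensitivity between 0.01 and 10 K.
S_GRID = [0.01 * i for i in range(1, 1001)]
posterior = posterior_over_grid(S_GRID, forcing=-7.4, obs_dt=-4.0, sigma_dt=0.8, prior=lambda s: 1.0)
most_likely = S_GRID[posterior.index(max(posterior))]
posterior_mean = sum(s * p for s, p in zip(S_GRID, posterior))
print(f"most likely S = {most_likely:.2f} K, posterior mean = {posterior_mean:.2f} K")
```

Because the forcing is treated as exact here, the posterior is symmetric; uncertainty in the forcing, which enters the denominator of any sensitivity estimate, is what produces the long upper tails seen in real estimates. A more complex model would simply replace `predicted_response`.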

There are two recent papers on paleo constraints. The first is the already mentioned PALAEOSENS (2012) paper, which gives a good survey of existing estimates from paleo-climate and of the hierarchy of different definitions of sensitivity. Their survey gives a range for the fast-feedback climate sensitivity (CS) of 2.2-4.8ºC. The second, from Hargreaves et al., takes a more explicitly Bayesian approach and suggests a mean of 2.3°C and a 90% range of 0.5–4.0°C (with minor variations dependent on methodology). This can be compared to an earlier estimate from Köhler et al. (2010), who gave a range of 1.4-5.2ºC, with a mean value near 2.4ºC.

One reason why these estimates keep getting revised is that the observational analyses they use are continually updated: new data are included, non-climatic factors get corrected for, and models include more processes. For instance, Köhler et al used an estimate of the cooling at the Last Glacial Maximum of 5.8±1.4ºC, but a recent update from Annan and Hargreaves, used in the Hargreaves et al estimate, is 4.0±0.8ºC, which would translate into a lower CS value in the Köhler et al calculation (roughly 1.1 – 3.3ºC, with a most likely value near 2.0ºC). A paper last year by Schmittner et al estimated an even smaller cooling, and consequently a lower sensitivity (around 2ºC on a level comparison), but the latest estimates are more credible. Note, however, that these temperature estimates are strongly dependent on still unresolved issues with different proxies, particularly in the tropics, and may change again as further information comes in.

There was also a recent paper based on a climatological constraint from Fasullo and Trenberth (see Karen Shell’s commentary for more details). The basic idea is that across the CMIP3 models there was a strong correlation of mid-tropospheric humidity variations with the model sensitivity, and combined with observations of the real world variations, this gives a way to suggest which models are most realistic, and by extension, what sensitivities are more likely. This paper suggests that models with sensitivity around 4ºC did the best, though they didn’t give a formal estimation of the range of uncertainty.
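The general logic of such a climatological (‘emergent’) constraint can be sketched as a regression across a model ensemble, evaluated at the observed value of the metric. This is a generic illustration, not Fasullo and Trenberth’s actual procedure; the ensemble values in the example are hypothetical, and the returned spread ignores observational uncertainty in the metric.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def emergent_constraint(model_metric, model_sensitivity, observed_metric):
    """Regress sensitivity on a present-day metric across models; evaluate at the observed metric.

    Returns (estimate, residual standard error). This assumes the across-model
    relationship is physical, which has to be demonstrated rather than assumed.
    """
    slope, intercept = fit_line(model_metric, model_sensitivity)
    residuals = [y - (slope * x + intercept) for x, y in zip(model_metric, model_sensitivity)]
    spread = math.sqrt(sum(r * r for r in residuals) / (len(residuals) - 2))
    return slope * observed_metric + intercept, spread

# Hypothetical ensemble: a humidity-related metric and each model's sensitivity (K).
metric = [0.20, 0.35, 0.40, 0.55, 0.60, 0.75]
sensitivity = [2.1, 2.7, 3.0, 3.4, 3.9, 4.4]
estimate, spread = emergent_constraint(metric, sensitivity, observed_metric=0.65)
print(f"constrained sensitivity ~ {estimate:.2f} +/- {spread:.2f} K")
```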

And then there are the recent papers examining the transient constraint. The most thorough is Aldrin et al (2012). The transient constraint has been looked at before of course, but efforts have been severely hampered by the uncertainty associated with historical forcings, particularly aerosols, though other terms are also important (see here for an older discussion of this). Aldrin et al produce a number of (explicitly Bayesian) estimates: their ‘main’ one, with a range of 1.2ºC to 3.5ºC (mean 2.0ºC), assumes exactly zero indirect aerosol effects, while a possibly more realistic sensitivity test including a small aerosol indirect effect gives 1.2-4.8ºC (mean 2.5ºC). They also demonstrate that there are important dependencies on the ocean heat uptake estimates as well as on the aerosol forcings. One nice thing they added was an application of their methodology to three CMIP3 GCM results, showing that their estimates of 3.1, 3.6 and 3.3ºC were reasonably close to the true model sensitivities of 2.7, 3.4 and 4.1ºC.
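Why aerosol forcing and ocean heat uptake matter so much can be seen from the basic global energy budget relation, S ≈ F2x·ΔT/(ΔF − ΔN). This is a much simpler approach than Aldrin et al’s Bayesian fit of a simple climate model, and it yields an ‘effective’ sensitivity, which need not equal the equilibrium value. F2x = 3.7 W/m2 and all inputs in the example are hypothetical.

```python
F2X = 3.7  # assumed forcing for doubled CO2, W/m^2

def energy_budget_sensitivity(delta_t, delta_f, delta_n, f2x=F2X):
    """Effective sensitivity (K per 2xCO2) from the global energy budget.

    delta_t: surface warming (K); delta_f: total forcing change (W/m^2);
    delta_n: change in planetary heat uptake, mostly ocean (W/m^2).
    """
    net = delta_f - delta_n
    if net <= 0:
        raise ValueError("forcing minus heat uptake must be positive for a finite estimate")
    return f2x * delta_t / net

# Hypothetical inputs: 0.8 K warming, 2.6 W/m^2 non-aerosol forcing, 0.6 W/m^2 heat uptake.
for aerosol in (-0.5, -0.9, -1.3):
    s = energy_budget_sensitivity(0.8, 2.6 + aerosol, 0.6)
    print(f"aerosol forcing {aerosol:+.1f} W/m^2 -> effective sensitivity {s:.2f} K")
```

Because the net forcing sits in the denominator, equal steps in aerosol forcing produce increasingly large jumps in the estimate, which is one reason the upper tail is so sensitive to the aerosol assumptions.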

In each of these cases however, there are important caveats. First, the quality of the data is important: whether it is the LGM temperature estimates, recent aerosol forcing trends, or mid-tropospheric humidity – underestimates in the uncertainty of these data will definitely bias the CS estimate. Second, there are important conceptual issues to address – is the sensitivity to a negative forcing (at the LGM) the same as the sensitivity to positive forcings? (Not likely). Is the effective sensitivity visible over the last 100 years the same as the equilibrium sensitivity? (No). Is effective sensitivity a better constraint for the TCR? (Maybe). Some of the papers referenced above explicitly try to account for these questions (and the forward model Bayesian approach is well suited for this). However, since a number of these estimates use simplified climate models as their input (for obvious reasons), there remain questions about whether any specific model’s scope is adequate.

Ideally, one would want to do a study across all these constraints with models that were capable of running all the important experiments – the LGM, historical period, 1% increasing CO2 (to get the TCR), and 2xCO2 (for the model ECS) – and build a multiply constrained estimate taking into account internal variability, forcing uncertainties, and model scope. This will be possible with data from CMIP5, and so we can certainly look forward to more papers on this topic in the near future.

In the meantime, the ‘meta-uncertainty’ across the methods remains stubbornly high, with support for both relatively low numbers around 2ºC and higher ones around 4ºC, so that range is likely to remain the consensus. It is worth adding, though, that temperature trends over the next few decades are more likely to be correlated with the TCR than with the equilibrium sensitivity, so if one is interested in the near-term implications of this debate, the constraints on TCR are going to be more important.
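The gap between TCR and equilibrium sensitivity can be illustrated with a two-layer energy balance model driven by 1%/yr CO2 growth. This is a minimal sketch: the feedback, heat-exchange and heat-capacity values are hypothetical, not calibrated to any GCM, and the warming is read off near year 70 rather than averaged over years 60-80 as in the formal TCR definition.

```python
import math

F2X = 3.7  # assumed forcing for doubled CO2, W/m^2

def two_layer_tcr(lam=1.2, gamma=0.7, c_upper=8.0, c_deep=100.0, years=70, dt=0.05):
    """Upper-layer warming after `years` of 1%/yr CO2 growth in a two-layer model.

    lam: climate feedback parameter (W/m^2/K); gamma: upper-to-deep heat exchange
    coefficient (W/m^2/K); c_upper, c_deep: heat capacities (W yr/m^2/K).
    Integrated with forward Euler steps of dt years.
    """
    t_upper = t_deep = 0.0
    for i in range(int(round(years / dt))):
        time = i * dt
        forcing = F2X * time * math.log(1.01) / math.log(2.0)  # logarithmic CO2 forcing
        exchange = gamma * (t_upper - t_deep)
        t_upper += dt * (forcing - lam * t_upper - exchange) / c_upper
        t_deep += dt * exchange / c_deep
    return t_upper

lam = 1.2
ecs = F2X / lam  # equilibrium warming for doubled CO2 in this model
tcr = two_layer_tcr(lam=lam)
print(f"ECS = {ecs:.2f} K, TCR ~ {tcr:.2f} K")
```

The deep-ocean heat uptake term (`gamma`) is what holds the transient response below the equilibrium one; setting it to zero makes the upper layer track the forcing much more closely.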

References

- PALAEOSENS Project Members, "Making sense of palaeoclimate sensitivity", Nature, vol. 491, pp. 683-691, 2012. http://dx.doi.org/10.1038/nature11574

- J.C. Hargreaves, J.D. Annan, M. Yoshimori, and A. Abe‐Ouchi, "Can the Last Glacial Maximum constrain climate sensitivity?", Geophysical Research Letters, vol. 39, 2012. http://dx.doi.org/10.1029/2012GL053872

- P. Köhler, R. Bintanja, H. Fischer, F. Joos, R. Knutti, G. Lohmann, and V. Masson-Delmotte, "What caused Earth's temperature variations during the last 800,000 years? Data-based evidence on radiative forcing and constraints on climate sensitivity", Quaternary Science Reviews, vol. 29, pp. 129-145, 2010. http://dx.doi.org/10.1016/j.quascirev.2009.09.026

- J.D. Annan, and J.C. Hargreaves, "A new global reconstruction of temperature changes at the Last Glacial Maximum", Climate of the Past Discussions, vol. 8, 2012. http://dx.doi.org/10.5194/cpd-8-5029-2012

- A. Schmittner, N.M. Urban, J.D. Shakun, N.M. Mahowald, P.U. Clark, P.J. Bartlein, A.C. Mix, and A. Rosell-Melé, "Climate Sensitivity Estimated from Temperature Reconstructions of the Last Glacial Maximum", Science, vol. 334, pp. 1385-1388, 2011. http://dx.doi.org/10.1126/science.1203513

- J.T. Fasullo, and K.E. Trenberth, "A Less Cloudy Future: The Role of Subtropical Subsidence in Climate Sensitivity", Science, vol. 338, pp. 792-794, 2012. http://dx.doi.org/10.1126/science.1227465

- M. Aldrin, M. Holden, P. Guttorp, R.B. Skeie, G. Myhre, and T.K. Berntsen, "Bayesian estimation of climate sensitivity based on a simple climate model fitted to observations of hemispheric temperatures and global ocean heat content", Environmetrics, vol. 23, pp. 253-271, 2012. http://dx.doi.org/10.1002/env.2140

Bill,

Sorry, my bad. On my dataset, the line obscured the 2005 data point. Both the 2007 and 2012 lows were affected by favourable weather conditions, and I concur that it would be unlikely for another record to be set for several years. The data from 2008-2011 fell reasonably well on the linear trend established over the past two decades. I would expect the next few years to follow suit.

Dan H.,

I would be careful in drawing any conclusions about the temporal dependence of new sea ice minima. The most notable aspect of the graph is the downward trend, and it is arguable that a linear trend no longer cuts it as a fit. What is more, the decline in thickness is even more marked than the sea ice extent decline, and thin ice is easier to melt. While it is true that weather affects the ultimate decline year to year, I don’t think it is an accident that the last two records haven’t just broken their predecessors, but smashed them. The number of aces in the deck has increased.

> on my dataset the line obscured the 2005 data

But “Dan H.” claimed to be using the data file he pointed to, not a picture

> I concur that it would be unlikely for another record to be set

The familiar “I agree with myself and pretend you said it” bait again

This is the uncanny valley simulation of discourse.

@Hank #49. Not that simple. I agree on getting everyone together, but you can’t go back and revise published papers (fortunately). The way forward is to continue to debate and refine the estimates. In some areas it just takes time to get consensus, but if the problem is solvable, we’ll eventually get there.

[Response: actually you can go back and revise published results using updated datasets and/or calibrations and/or age models. Indeed we should be building archives that allow for that as a matter of course. I would expect that this might be much more cost effective than drilling new cores… ;-) -gavin]

“I would expect that this might be much more cost effective than drilling new cores… ;-) -gavin]”

So you turned something we all want into something “they” can say no to? Thanks…

> David Lea

> …. I agree on getting everyone together

Is there an appropriate umbrella organization (AGU?) that overlaps the two communities? (and hosts barbeques?)

Is there a journal where reviewers are drawn from both modeling and paleo communities?

Can scientists get such early feedback on choices before starting papers, without having their ideas stolen?

> Gavin

> … using updated datasets and/or calibrations and/or age models.

> … we should be building archives

Is there any project to create such archives?

Some sketch of what would be collected, etc.?

(Lists and pointers to lists, rather than copies — Rule One of Databases — if possible)

(I’d guess this might be long discussed but lacking funding — and not yet ready for Kickstarter)

Would authors (and journal editors) cooperate in creating a new layer of science publishing, dedicated to “using updated datasets and/or calibrations and/or age models” for revising/reworking papers?

Would original authors, whose approach was known good, agree with having someone else crank through their same procedure after the “datasets and/or calibrations and/or age models” were changed? Get credit? Not lose face or funds?

Could recalculating involve citizen/volunteers working with guidance from original authors?

I know reworking previous papers isn’t a high priority for most scientists or grad students.

They ought to be left free to do more interesting and new work.

At the rate the “datasets and/or calibrations and/or age models” are improved — it’d sure be interesting.

On sea ice records and the probability of setting new records:

I haven’t done a quantitative analysis, but my guess is that given an old record, “A”, and a new record, “B”, the expectation that a year soon after B will be even less than B (a newer record) is probably smaller the larger the B minus A difference (e.g., reversion to the mean), BUT the expectation that a year soon after B will be less than A should increase the larger that difference (e.g., there is more confidence in a larger decreasing trend due to Bayesian updating).

(I might also guess that the shorter the time period between A and B, the more expectation there should be that a year soon after B will exceed B)

So, to apply this to 2012: the large 2012 minus 2007 difference means that I’d expect the next record to be more years off than I would have had 2012 barely beat 2007. It will be interesting to watch over the next few years… if we don’t see a new record until 2017, I wonder how many “sea ice recovery!” posts from WUWT we’ll have to endure…
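The first half of this guess (reversion to the mean) can be checked with a quick Monte Carlo on synthetic minima, assuming a fixed linear trend plus white noise. The trend, noise level, horizon and the one-standard-deviation threshold for a ‘large’ margin are all hypothetical choices, the units are arbitrary, and because the trend is known exactly the sketch does not capture the Bayesian trend-updating half of the argument or any sea ice physics.

```python
import random

def record_followups(trend=-0.1, noise=0.5, years=40, horizon=5, trials=2000, seed=1):
    """Monte Carlo on synthetic 'linear trend + white noise' annual minima.

    Each new record low is classed as a 'small' or 'large' margin (beating the previous
    record by less or more than one noise standard deviation), and we check whether a
    further record follows within `horizon` years.
    Returns the follow-up fraction for (small-margin, large-margin) records.
    """
    rng = random.Random(seed)
    counts = {"small": [0, 0], "large": [0, 0]}  # [followed by a new record, total]
    for _ in range(trials):
        series = [trend * t + rng.gauss(0.0, noise) for t in range(years + horizon)]
        record = series[0]
        for t in range(1, years):
            if series[t] < record:
                margin = record - series[t]
                followed = min(series[t + 1:t + 1 + horizon]) < series[t]
                key = "large" if margin > noise else "small"
                counts[key][0] += followed
                counts[key][1] += 1
                record = series[t]
    return tuple(counts[k][0] / counts[k][1] for k in ("small", "large"))

small_margin, large_margin = record_followups()
print(f"P(new record within 5 yr | small margin) = {small_margin:.2f}")
print(f"P(new record within 5 yr | large margin) = {large_margin:.2f}")
```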

“Both the 2007 and 2012 lows were affected by favourable weather conditions…”

The only really favorable 2012 circumstance I can think of, weather-wise, was the cyclone. And that was in effect for about a week.

The melt weather otherwise was fairly ‘middle of the road,’ according to assessments I’ve read. Yet the 2007 record was obliterated, and apparently still would have been without the cyclone.

Moreover, as Tamino and others have pointed out, this is all extent, yet volume is in some ways more to the point, as implied by Ray’s comments in #52. The most remarkable year for volume decline was actually 2010, if the PIOMAS results are correct:

http://tinyurl.com/WipneusSeptemberPIOMASannuals

This decline isn’t just weird weather–though goodness knows, we seem to be seeing more of that:

http://www.cbc.ca/news/technology/story/2013/01/08/sci-aussie-heat-map.html

I don’t pretend to know what will happen with next year’s minimum. But it is much more likely to be below 2007 than not–and a new record would sadden but not shock me. (If I had to guess odds, I’d probably say 50-50.)

32 Lennart said, “It seems Hansen thinks less, about 4 W/m2 as well,”

Thanks. I wouldn’t bet too wide of Hansen. Was he assuming cold turkey? If so, we’d end up a lot lower than our current 400ppm, so the persistent modern forcing (on which everything else must pile) is lots lower than the current CO2 forcing.

@David Lea #42-43: thanks for the comment, from where I’m sitting, it seems to be more of a disconnect between two sides of the paleodata community :-) I’m not trying to take sides, just using the most recent and comprehensive proxy compilations. You are certainly right that a colder tropical LGM would result in a higher sensitivity estimate.

44. Paul Williams @37: The ice sheets become dirtier over time.

Comment by David B. Benson — 7 Jan 2013

———

Antarctica and Greenland and mountain glaciers seem to stay white enough. Every time it snows, they are back to albedos of 0.8.

Now dirt and material will migrate to the top of a glacier as it is melting back/receding and the edges can even become black. But the main glacial region will still be white as long as it is stable or advancing and snow falls over a long enough period of the year. Sounds like a last glacial maximum.

Jim @59,

As I understand Hansen he’s saying: if we double CO2 this century (so up to about 550-600 ppm), that will mean a forcing of about 4 W/m2 and 3 degrees C warming in the short term (decades), and through slow feedbacks (albedo + GHG) another 4 W/m2 and 3 degrees in the long term (centuries/millennia).

@James Annan #60. Your point is a fair one; what’s in print doesn’t always reflect what’s discussed in the halls. But more importantly, I think your paper provides a way forward on the sensitivity problem by providing a plausible scaling between tropical cooling and sensitivity, something that has eluded me in past papers. I am very confident that we can nail down the tropical cooling (if we haven’t already) and, as I said previously, I would be very surprised if it’s much less than 2.5 deg tropics-wide, partly because of the agreement between the various geochemical proxies, partly because of the oxygen isotope constraint. If you adjusted the LGM tropical cooling to 2.8 ± 0.7 deg (my published value from 2000), what would it translate to in terms of sensitivity?

Paul Williams @61 — But during LGM it didn’t snow over the entire Laurentide ice sheet. There were two accumulation centers. Around the margins the winds blew loess in great quantities. Much of it lies here in the Palouse but I’m rather sure that much of it ended up on the ice sheet.

Ray,

The most vulnerable ice has already melted, and was likely enhanced by changes in the AO.

http://www.nasa.gov/topics/earth/features/earth20120104.html

The remaining ice is further from the inflow of warm waters, and closer to the Greenland glaciers. This ice is thicker than the ice that melted previously. Going forward, it is likely not to melt linearly, but less so, as the ice becomes harder to melt.

Gavin Schmidt

I am glad to see that my input into the Wall Street Journal op-ed pages has prompted a piece on climate sensitivity at RealClimate. I think that some comment on my energy balance based climate sensitivity estimate of 1.6-1.7°C (details at http://www.webcitation.org/6DNLRIeJH), which underpinned Matt Ridley’s WSJ op-ed, would have been relevant and of interest.

[Response: Part III. – gavin]

You refer to the recent papers examining the transient constraint, and say “The most thorough is Aldrin et al (2012). … Aldrin et al produce a number of (explicitly Bayesian) estimates, their ‘main’ one with a range of 1.2ºC to 3.5ºC (mean 2.0ºC) which assumes exactly zero indirect aerosol effects, and possibly a more realistic sensitivity test including a small Aerosol Indirect Effect of 1.2-4.8ºC (mean 2.5ºC).”

The mean is not a good central estimate for a parameter like climate sensitivity with a highly skewed distribution. The median or mode (most likely value) provide more appropriate estimates. The mode of Aldrin’s main sensitivity estimate is between 1.5 and 1.6ºC; the median is about halfway between the mode and the mean.

[Response: All the pdfs are skewed – but using the mode to compare to the mean in previous work is just a sleight of hand to make the number smaller. The WSJ might be happy to play these kinds of games, but don’t do it here. – gavin]
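Much of this exchange turns on which central statistic is quoted. For any right-skewed distribution the mode, median and mean differ, so comparisons between studies are only like-for-like if the same statistic is used throughout. A lognormal is assumed below purely for illustration; it is not Aldrin et al’s actual posterior, and the parameters are hypothetical.

```python
import math

def lognormal_summary(mu, sigma):
    """Mode, median and mean of a lognormal distribution with log-mean mu and log-sd sigma."""
    return {
        "mode": math.exp(mu - sigma ** 2),
        "median": math.exp(mu),
        "mean": math.exp(mu + sigma ** 2 / 2),
    }

# Hypothetical right-skewed sensitivity distribution with a median of 2 K.
stats = lognormal_summary(math.log(2.0), 0.4)
for name, value in stats.items():
    print(f"{name:>6}: {value:.2f} K")
```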

I agree with you that Aldrin (available at http://folk.uio.no/gunnarmy/paper/aldrin_env_2012.pdf) is the most thorough study, although its use of a uniform prior distribution for climate sensitivity will have pushed up the mean, mainly by making the upper tail of its estimate less well constrained than if an objective Bayesian method with a noninformative prior had been used.

It is not true that Aldrin assumes zero indirect aerosol effects. Table 1 and Figure 15 (2nd panel) of the Supplementary Material show that a wide prior extending from -0.3 to -1.8 W/m^2 (corresponding to the AR4 estimated range) was used for indirect aerosol forcing. The (posterior) mean estimated by the study was circa -0.3 W/m^2 for indirect aerosol forcing and -0.4 W/m^2 for direct. The total of -0.7 W/m^2 is the same as the best observational (satellite) total aerosol adjusted forcing estimate given in the leaked Second Order Draft of AR5 WG1, which includes cloud lifetime (2nd indirect) and other effects.

When Aldrin adds a fixed cloud lifetime effect of -0.25 W/m^2 forcing on top of his variable parameter direct and (1st) indirect aerosol forcing, the mode of the sensitivity PDF increases from 1.6 to 1.8. The mean and the top of the range go up a lot (to 2.5ºC and 4.8ºC, as you say) because the tail of the distribution becomes much fatter, a reflection of the distorting effect of using a uniform prior for ECS. But, given the revised aerosol forcing estimates given in the AR5 WG1 SOD, there is no justification at all for increasing the prior for aerosol indirect forcing by adding either -0.25 or -0.5 W/m^2. On the contrary, it should be reduced, by adding something like +0.5 W/m^2, to be consistent with the lower AR5 estimates.

[Response: They aren’t ‘AR5’ estimates yet. Still being reviewed, remember? – gavin]

It is rather surprising that adding cloud lifetime effect forcing makes any difference, insofar as Aldrin is estimating indirect and direct aerosol forcings as part of his Bayesian procedure.

[Response: Not sure this is true. I think they are starting to do so in subsequent papers. – gavin]

The reason is probably that the normal/lognormal priors he is using for direct and indirect aerosol forcing aren’t wide enough for the posterior mean fully to reflect what the model-observational data comparison is implying. When extra forcing of -0.25 or -0.5 W/m^2 is added, his prior mean total aerosol forcing is very substantially more negative than -0.7 W/m^2 (the posterior mean without the extra indirect forcing). That results in the data maximum likelihoods for direct and indirect aerosol forcing being in the upper tails of the priors, biasing the aerosol forcing estimation to more negative values (and hence biasing ECS estimation to a higher value).

Ring et al. (2012) (available from http://www.scirp.org/fileOperation/downLoad.aspx?path=ACS20120400002_59142760.pdf&type=journal) is another recent climate sensitivity study based on instrumental data. Using the current version, HadCRUT4, of the surface temperature dataset used in a predecessor study, it obtains central estimates for total aerosol forcing and climate sensitivity of respectively -0.5 W/m^2 and 1.6 ºC. This is a 0.9ºC reduction from the sensitivity of 2.5°C estimated in that predecessor study, which used the same climate model. The reduction resulted from correcting a bug found in the climate model computer code. (Somewhat lower and higher estimates of aerosol forcing and sensitivity are found using other, arguably less reliable, temperature datasets.)

> Dan H. says:…

> The most vulnerable ice has already melted

Begins with an assumption, without citation.

Conflates sea level (floating) ice and glacial ice.

Ignores multiple ways that ice shelves protect glaciers.

Arrives at his usual conclusion.

Search

http://nsidc.org/cryosphere/quickfacts/iceshelves.html

“… Ice streams and glaciers constantly push on ice shelves, but the shelves eventually come up against coastal features such as islands and peninsulas, building pressure that slows their movement into the ocean. If an ice shelf collapses, the backpressure disappears. The glaciers that fed into the ice shelf speed up, flowing more quickly out to sea….”

http://www.ldeo.columbia.edu/news-events/scientists-predict-faster-retreat-antarcticas-thwaites-glacier

Search better

Dan H. also says: “This ice is thicker than the ice that melted previously.”

And yet most of the multi-year ice has already disappeared, and ice thickness continues to drop, as best as we can tell.

Kevin,

The minimum Arctic sea ice has declined by a little over half since its maximum extent of the past three decades.

http://neven1.typepad.com/blog/2012/10/nsidc-arctic-sea-ice-news-september-2012.html

The following animation from MIT shows the sea ice changes. Notice the ice repeatedly melts along the Siberian, Alaskan, and northern Canadian coastlines. The ice around northern Greenland and the northern Canadian islands remains year after year. This is the thick ice to which I was referring. Yes, it is thinner than two decades ago, but it is thicker than the ice around the continents which has melted in the previous summers. Does this adequately explain my previous posts?

http://www.staplenews.com/home/2013/1/10/understanding-arctic-sea-ice-at-mit.html

I thought James Annan had demonstrated that using a uniform prior was bad practise. That would tend to spread the tails of the distribution such that the mean is higher than the other measures of central tendency. So is it justified in this paper?

Can we quit chasing Dan H.’s red herrings? He is _so_ good at diverting a topic to talking about his mistakes. Paste his claim into Google, and sigh.

Large ice age animation

The older ice is not up against the shoreline, and is not protected.

“Does this adequately explain my previous posts?”

No, in view of the fact that the thick multi-year ice which formerly made up a considerable proportion of the sea ice has nearly disappeared.

Dr. Joel Norris of the Scripps Institution gave an excellent colloquium presentation titled “Cloud feedbacks on climate: a challenging scientific problem” to Fermilab National Laboratory, May 12, 2010. Please see the archived video and his powerpoint presentation at this link:

http://www-ppd.fnal.gov/EPPOffice-w/colloq/Past_09_10.html

New paper mixing “climate feedback parameter” with climate sensitivity… “climate feedback parameter was estimated to 5.5 ± 0.6 W m−2 K−1” “Another issue to be considered in future work should be that the large value of the climate feedback parameter according to this work disagrees with much of the literature on climate sensitivity (Knutti and Hegerl, 2008; Randall et al., 2007; Huber et al., 2011). However, the value found here agrees with the report by Spencer and Braswell (2010) that whenever linear striations were observed in their phase plane plots the slope was around 6 W m−2 K−1. Spencer and Braswell (2010) used middle tropospheric temperature anomalies and although they did not consider any time lag they may have observed some feedback processes with negligible time lag considering that the tropospheric temperature is better correlated to the radiative flux than the surface air temperature. The value found in this study also agrees with Lindzen and Choi (2011) who also considered the effects of lead-lag relations.”

Open for interactive discussion.

http://www.earth-syst-dynam-discuss.net/4/25/2013/esdd-4-25-2013.html

[Response: Another paper confusing short term variations with long-term shifts. – gavin]
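For scale, a linear feedback parameter λ converts to an equilibrium sensitivity via S = F2x/λ. Assuming F2x = 3.7 W/m2, the quoted 5.5 W m−2 K−1 implies well under 1 K per doubling; the comparison value of 1.2 is chosen only because it corresponds to about 3 K.

```python
F2X = 3.7  # assumed forcing for doubled CO2, W/m^2

def sensitivity_from_feedback(lam, f2x=F2X):
    """Equilibrium warming (K) for doubled CO2 implied by a linear feedback parameter lam (W/m^2/K)."""
    return f2x / lam

for lam in (5.5, 1.2):
    print(f"lambda = {lam} W/m^2/K -> {sensitivity_from_feedback(lam):.2f} K per doubling")
```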

Regarding my #74: On sea ice thickness, here is an unreviewed but sensible discussion/analysis of Arctic sea ice volume and thickness as modeled by PIOMAS. Note particularly the plots of June and September thickness time series.

http://dosbat.blogspot.co.uk/2012/09/why-2010-piomas-volume-loss-was.html

Kevin,

Not sure about your contention that the thick ice has nearly disappeared (it could be a difference in our definitions of “thick ice”). I am not disputing that the thicker ice has thinned. Indeed, there has been a general thinning of the entire sea ice. The thickness of the remaining, multi-year ice, along with its geographic location, will make it more difficult to melt than the ice that was spread across the Arctic, and exposed to Pacific and Atlantic ocean currents, along with runoff from freshwater rivers.

Also, using volume to determine when the Arctic may be ice-free suffers from exponential decay. Volume will also decrease faster than area initially, but volumetric decrease will slow as less ice remains (simple mathematics). Hence, any prediction that volumetric losses will continue exponentially is mathematically flawed.

> the remaining, multi-year ice, along with its geographic location

Still no cite for this theory that ice is protected north of Greenland.

The multi-year ice is not protected by its geographic location.

http://journals.ametsoc.org/doi/abs/10.1175/JCLI-D-11-00113.1

Dan H. linked to a video at MIT that shows dark red for older ice next to Greenland. Other models, and the satellite imagery, show that the older ice circulates — and is melting.

that’s from February 2012:

Comiso, Josefino C., 2012: Large Decadal Decline of the Arctic Multiyear Ice Cover. J. Climate, 25, 1176–1193.

doi: http://dx.doi.org/10.1175/JCLI-D-11-00113.1

Anyone with a better background in this area who wants to point out the worst mistakes in the paper in #76 can still do so.

http://www.earth-system-dynamics.net/comment_on_a_paper.html

https://www.realclimate.org/index.php/archives/2013/01/on-sensitivity-part-i/comment-page-2/#comment-313595

#80–read the discussion linked, Dan. You’ll find that ice more than 3 meters thick now forms an insignificant proportion of the total population. It’s true, of course, that the Transpolar Drift tends to accumulate ice in the area we’ve been discussing, but that hardly means that it is going to slow the overall melt any.

As to your second paragraph, nobody knows, at this point, whether volume will continue to follow an exponential curve right down to zero extent. That’s not a mathematical question, but an empirical one–and it will remain empirical unless and until we can model sea ice physically a lot better than we can now. Therefore, what you say in that paragraph is pure bravado, unsupported by any evidence. It would be nice if it were true, but there’s really no reason to think that it actually is.

> The total of -0.7 W/m^2 is the same as the best observational (satellite) total aerosol adjusted forcing estimate given in the leaked Second Order Draft of AR5 WG1, which includes cloud lifetime (2nd indirect) and other effects.

There are only a handful of published estimates for total anthropogenic aerosol forcing, including first indirect and cloud lifetime effects. Of these, the smallest best estimate I can find is -0.85W/m^2, which means the reported -0.7 is unlikely to be representative of total aerosol forcing, whatever else it relates to.

Kevin, the real reason that sea ice volume will likely not reach zero any time soon is that calving from Greenland and from the Canadian archipelago will continue and will likely increase. That will keep some (comparatively small) amount of ice in the sea for a good while.

That is why informed folks, like those at Neven’s Arctic Sea Ice blog, talk instead about time frames for an ‘essentially’ or ‘virtually’ ice-free Arctic Ocean–by this they generally mean anything under one million square kilometers of sea ice extent. And many see it as likely that we will reach this level very soon–if not this September, then within the next two or three years.

But you are right that, given the massive failure of earlier modelers, we have to conclude that we can’t really know what is coming at us with any certainty.

Following on from the comments by Nic Lewis and Graeme,

Yes, using a flat prior for climate sensitivity doesn’t make sense at all.

Subjective and objective Bayesians disagree on many things, but they would agree on that. The reasons why are repeated in most text books that discuss Bayesian statistics, and have been known for several decades. The impact of using a flat prior will be to shift the distribution to higher values, and increase the mean, median and mode. So quantitative results from any studies that use the flat prior should just be disregarded, and journals should stop publishing any results based on flat priors. Let’s hope the IPCC authors understand all that.

Nic (or anyone else)…would you be able to list all the studies that have used flat priors to estimate climate sensitivity, so that people know to avoid them?

Steve Jewson,

The problem is that the studies that do not use a flat prior wind up biasing the result via the choice of prior. This is a real problem given that some of the actors in the debate are not “honest brokers”. It has seemed to me that at some level an Empirical Bayes approach might be the best one here–either that or simply use the likelihood and the statistics thereof.

Hank:

It’s as easy as not reading them at all, which many of us are already doing. Try it! When catching up on a thread, at the point one’s eyes come to “Dan H. says:”, they simply skip past the entire comment without taking it in. Think of it as a mental killfile.

Ray,

I agree that no-one should be able to bias the results by their choice of prior: there needs to be a sensible convention for how people choose the prior, and everyone should follow it to put all studies on the same footing and to make them comparable.

And there is already a very good option for such a convention…it’s Jeffreys’ Prior (JP).

JP is not 100% accepted by everybody in statistics, and it doesn’t have perfect statistical properties (there is no framework that has perfect statistical properties anywhere in statistics), but it’s by far the most widely accepted option for a conventional prior, it has various nice properties, and basically it’s the only chance we have of resolving this issue (the alternative is that we spend the next 30 years bickering about priors instead of discussing the real issues). Among the nice properties, in particular, the results are independent of the choice of coordinates (e.g. you can use climate sensitivity, or inverse climate sensitivity, and it makes no difference).

Using a flat prior is not the same as using Jeffreys’ prior, and the results are not independent of the choice of coordinates (e.g. a flat prior on climate sensitivity does not give the same results as a flat prior on inverse climate sensitivity).
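To make the coordinate dependence concrete, here is a minimal worked example. It assumes, for illustration only, that the quantity the observations constrain is the climate feedback parameter λ = F₂ₓ/S, where F₂ₓ is the forcing from doubled CO2 (the symbols are introduced here, not taken from any of the papers discussed). A density that is flat in λ, carried over to S by the usual change-of-variables rule, is not flat in S:

$$
p_S(S) = p_\lambda\big(\lambda(S)\big)\left|\frac{d\lambda}{dS}\right|,
\qquad
p_\lambda = \text{const} \;\Longrightarrow\; p_S(S) \propto \frac{F_{2\times}}{S^{2}} \propto \frac{1}{S^{2}}.
$$

Jeffreys’ rule sidesteps this because the Fisher information I transforms with the square of the same Jacobian, so the prior √I picks up exactly the factor |dλ/dS| that the change of variables requires:

$$
\sqrt{I_S(S)} = \sqrt{I_\lambda(\lambda)\left(\frac{d\lambda}{dS}\right)^{2}} = \sqrt{I_\lambda(\lambda)}\,\left|\frac{d\lambda}{dS}\right|.
$$

For a single Gaussian observation of λ with known error σ, I_λ = 1/σ², so under this assumed model Jeffreys’ prior is flat in λ and therefore ∝ 1/S² in S, whichever coordinate you start from.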

Using likelihood alone isn’t a good idea because again the results are dependent on the parameterisation chosen…you could bias your results just by making a coordinate transformation. Plus you don’t get a probabilistic prediction.

When Nic Lewis referred to objective Bayesian statistics in post 66 above, I’d guess he meant the Jeffreys’ prior.

Steve

ps: I’m talking about the *second* version of JP, the 1946 version not the 1939 version, which resolves the famous issue that the 1939 version had related to the mean and variance of the normal distribution.

Steve, Ray

First, when I refer to an objective Bayesian method with a noninformative prior, that means using what would be the original Jeffreys’ prior for inferring a joint posterior distribution for all parameters, appropriately modified if necessary to give as accurate inference (marginal posteriors) for individual parameters as possible. In general, that would mean using Bernardo and Berger “reference priors”, one targeted at each parameter of interest. In the case of independent scale and location parameters, doing so would equate to the second version of the Jeffreys’ prior that Steve refers to. In practice, when estimating S and Kv, marginal parameter inference may be little different between using the original Jeffreys’ prior and targeted reference priors.

Secondly, here is a list of climate sensitivity studies that, for their main results, used a uniform prior for S when estimating climate sensitivity on its own, or uniform priors for S and/or Kv when estimating climate sensitivity S jointly with effective ocean vertical diffusivity Kv (or any other parameter like those two in which observations are strongly nonlinear).

Forest et al (2002)

Knutti et al (2002)

Frame et al (2005)

Forest et al (2006)

Forster and Gregory (2006) – results as presented in IPCC AR4 WG1 report (the study itself used a prior uniform in 1/S, which is the Jeffreys’ prior in this case, where S is the only parameter being estimated)

Hegerl et al (2006)

Forest et al (2008)

Sanso, Forest and Zantedeschi (2008)

Libardoni and Forest (2011) [uniform for Kv, expert for S]

Olson et al (2012)

Aldrin et al (2012)

This includes a large majority of the Bayesian climate studies that I could find.

Some of these papers also used other priors for climate sensitivity as alternatives, typically either informative “expert” priors, priors uniform in the climate feedback parameter (1/S), or in one case a prior uniform in TCR. Some also used nonuniform priors for Kv or other estimated parameters as alternatives.

Sorry to go on about it, but this prior issue is important. So here are my 7 reasons why climate scientists should *never* use uniform priors for climate sensitivity, and why the IPCC report shouldn’t cite studies that use them.

It pains me a little to be so critical, especially as I know some of the authors listed in Nic Lewis’s post, but better to say this now, and give the IPCC authors some opportunity to think about it, than after the IPCC report is published.

1) *The results from uniform priors are arbitrary and hence non-scientific*

If the authors that Nic Lewis lists above had chosen different coordinate systems, they would have got different results. For instance, if they had used 1/S, or log S, as their coordinates, instead of S, the climate sensitivity distributions would change. Scientific results should not depend on the choice of coordinate system.

2) *If you use a uniform prior for S, someone might accuse you of choosing the prior to give high rates of climate change*

It just so happens that using S gives higher values for climate sensitivity than using 1/S or log S.
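A rough numerical illustration of points 1 and 2, under assumptions that are mine rather than taken from any of the listed studies: a single hypothetical Gaussian constraint on the feedback parameter λ = F2X/S (λ = 1.25 ± 0.4 W/m²/K, with F2X = 3.7 W/m²), combined in turn with a prior uniform in S, uniform in log S, or uniform in 1/S, on a grid of S values cut off at an arbitrary 20 K. The sketch reports the posterior median of S under each prior.

```python
import math

F2X = 3.7        # assumed forcing for doubled CO2, W/m^2
LAM_OBS = 1.25   # hypothetical observed feedback parameter, W/m^2/K
LAM_SD = 0.4     # hypothetical 1-sigma observational error, W/m^2/K
S_MAX = 20.0     # arbitrary upper cut-off for the S grid, K


def likelihood(s):
    """Gaussian likelihood of the lambda observation, given sensitivity s (K)."""
    lam = F2X / s
    return math.exp(-0.5 * ((lam - LAM_OBS) / LAM_SD) ** 2)


def posterior_median(prior, n=100_000, s_min=0.1, s_max=S_MAX):
    """Median of likelihood * prior, evaluated on a uniform grid in S."""
    step = (s_max - s_min) / n
    grid = [s_min + (i + 0.5) * step for i in range(n)]
    weights = [likelihood(s) * prior(s) for s in grid]
    total = sum(weights)
    running = 0.0
    for s, w in zip(grid, weights):
        running += w
        if running >= 0.5 * total:
            return s
    return grid[-1]


PRIORS = {
    "uniform_S": lambda s: 1.0,            # flat in S
    "uniform_logS": lambda s: 1.0 / s,     # flat in log S
    "uniform_invS": lambda s: 1.0 / s**2,  # flat in lambda = F2X / S
}

medians = {name: posterior_median(prior) for name, prior in PRIORS.items()}
for name, med in medians.items():
    print(f"{name:13s} posterior median S = {med:.2f} K")
```

Same data, same likelihood, three answers; only the coordinate in which "flat" was declared differs. Because this likelihood does not fall to zero as S grows (λ tends to zero, which is only about three sigma from the assumed observation), the uniform-in-S result also depends on the arbitrary 20 K cut-off, and changing S_MAX is a quick way to see that.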

3) *The results may well be nonsense mathematically*

When you apply a statistical method to a complex model, you’d want to first check that the method gives sensible results on simple models. But flat priors often give nonsense when applied to simple models. A good example is if you try to fit a normal distribution to 10 data values using a flat prior for the variance…the final variance estimate you get is higher than anything that any of the standard methods will give you, and is really just nonsense: it’s extremely biased, and the resulting predictions of the normal are much too wide. If flat priors fail on such a simple example, we can’t trust them on more complex examples.
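The normal-distribution example in point 3 can be checked in closed form. A sketch, assuming a flat prior on the unknown mean (integrated out) and taking the posterior mean as "the estimate" (my choice; the comment doesn't specify one): the marginal posterior for the variance is then inverse-gamma, with mean SS/(n−5) under a flat prior on the variance and SS/(n−3) under the Jeffreys-type 1/variance prior, where SS is the sum of squared deviations. The seed, sample size and true variance of 1 are arbitrary.

```python
import random
import statistics


def variance_estimates(data):
    """Point estimates of a normal variance from the same data.

    Assumes a flat prior on the mean, which is integrated out. The marginal
    posterior for the variance is then inverse-gamma with scale SS/2 and
    shape (n-3)/2 (flat prior on variance) or (n-1)/2 (1/variance prior),
    whose means are SS/(n-5) and SS/(n-3) respectively.
    """
    n = len(data)
    if n <= 5:
        raise ValueError("the flat-prior posterior mean needs n > 5")
    xbar = statistics.fmean(data)
    ss = sum((x - xbar) ** 2 for x in data)  # sum of squared deviations
    return {
        "mle": ss / n,                  # maximum likelihood
        "unbiased": ss / (n - 1),       # usual sample variance
        "jeffreys_mean": ss / (n - 3),  # posterior mean, prior ~ 1/variance
        "flat_mean": ss / (n - 5),      # posterior mean, prior flat in variance
    }


rng = random.Random(1)
sample = [rng.gauss(0.0, 1.0) for _ in range(10)]  # true variance = 1
print(variance_estimates(sample))

# Average each estimator over many samples of size 10 to expose its bias.
reps = 20_000
totals = dict.fromkeys(("mle", "unbiased", "jeffreys_mean", "flat_mean"), 0.0)
for _ in range(reps):
    est = variance_estimates([rng.gauss(0.0, 1.0) for _ in range(10)])
    for key in totals:
        totals[key] += est[key] / reps
print(totals)
```

Since E[SS] = (n−1)σ² = 9 here, the averages should land close to 0.9, 1.0, 1.29 and 1.8: the flat-prior estimate runs about 80% high, while the 1/variance prior stays much closer to the standard estimators.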

4) *You risk criticism from more or less the entire statistics community*

The problems with flat priors have been well understood by statisticians for decades. I don’t think there is a single statistician in the world who would argue that flat priors are a good way to represent lack of knowledge, or who would say that they should be used as a convention (except for location parameters…but climate sensitivity isn’t a location parameter).

5) *You risk criticism from scientists in many other disciplines too*

In many other scientific disciplines these issues are well understood, and in many disciplines it would be impossible to publish a paper using a flat prior. (Even worse, pensioners from the UK and mathematicians from the insurance industry may criticize you too :)).

6) *If your paper is cited in the IPCC report, IPCC may end up losing credibility*

These are much worse problems than getting the date of melting glaciers wrong. Uniform priors are a fundamentally unjustifiable methodology that gives invalid quantitative results. If these papers are cited in the IPCC, the risk is that critics will (quite rightly) heap criticism on the IPCC for relying on such stuff, and the credibility of IPCC and climate science will suffer as a result.

7) *There is a perfectly good alternative, that solves all these problems*

Harold Jeffreys grappled with the problem of uniform priors in the 1930s, came up with the Jeffreys’ prior (well, I guess he didn’t call it that), and wrote a book about it. It fixes all the above problems: it gives results which are coordinate independent and so not arbitrary in that sense, it gives sensible results that agree with other methods when applied to simple models, and it’s used in statistics and many other fields.

In Nic Lewis’s comment (number 89 above), Nic describes a further refinement of the Jeffreys’ Prior, known as reference priors. Whether the 1946 version of Jeffreys’ Prior, or a reference prior, is the better choice, is a good topic for debate (although it’s a pretty technical question). But that debate does muddy the waters of this current discussion a little: the main point is that both of them are vastly preferable to uniform priors (and they are very similar anyway). If reference priors are too confusing, just use Jeffreys’ 1946 Prior. If you want to use the fanciest statistical technology, use reference priors.

ps: if you go to your local statistics department, 50% of the statisticians will agree with what I’ve written above. The other 50% will agree that uniform priors are rubbish, but will say that JP is rubbish too, and that you should give up trying to use any kind of noninformative prior. This second 50% are the subjective Bayesians, who say that probability is just a measure of personal beliefs. They will tell you to make up your own prior according to your prior beliefs. To my mind this is a non-starter in climate research, and maybe in science in general, since it removes all objectivity. That’s another debate that climate scientists need to get ready to be having over the next few years.

Steve

This thread now appears under “Older Entries”. Maybe the dialogue between Nic Lewis and Steve Jewson merits some continuing attention, unless it is accepted (as Ray Ladbury has confidently asserted) that Climate Sensitivity is now a “mature field” with a trend around +2.8K generally agreed.

#66 [Response: Part III. – gavin] Any expected release date?

Steve Jewson,

I agree that Jeffreys’ Prior is attractive in a lot of situations. However, it is not clear that it would help in this case, is it? I mean, in some cases JP is flat.

To Steve Jewson:

Steve,

You have clarified many things that I have appreciated but have been unable to express with your clarity. I offer my thanks.

I have been aware that some specific choices of priors are necessary in even the most mundane of statistical issues, e.g. the choice of a prior for the variance (or standard deviation) of the normal distribution. Also that there are desirable properties that should be maintained (be invariant) under coordinate transformations, and that parameter estimators should be unbiased in at least one of the mean, median, or mode. Such things are elemental requirements based on the generic class of the problem.

At the risk of disagreeing with you, I do have a problem with the notion of anything being non-informative. To me, such priors would be better described as elementally, generically, ideally, or statistically informed, if that gets my intention across to you.

I doubt I have the reserves of wit to grapple with how the JP is derived from Fisher information. For my purposes, and I suspect for those required by the problem at hand, simpler arguments based directly on the need for invariance under coordinate transformations (specifically under a coordinate flip), and perhaps on the desire for well-behaved estimators, would suffice and be easier for people like me to comprehend.
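The coordinate-flip invariance can be checked numerically without following the general derivation. A minimal sketch, assuming a single Gaussian observation of the feedback parameter λ = F2X/S with a made-up error SIGMA = 0.4 W/m²/K and F2X = 3.7 W/m²: it computes Jeffreys’ prior √I from the Fisher information in each coordinate (using a finite-difference derivative) and compares the prior computed directly in S with the λ-coordinate prior carried over by the Jacobian |dλ/dS|.

```python
import math

F2X = 3.7    # assumed forcing for doubled CO2, W/m^2
SIGMA = 0.4  # hypothetical 1-sigma error on the observed feedback, W/m^2/K


def fisher_info(mean_fn, theta, h=1e-6):
    """Fisher information about scalar theta from y ~ N(mean_fn(theta), SIGMA^2).

    For a Gaussian with known variance this is (d mean / d theta)^2 / SIGMA^2;
    the derivative is taken by central finite difference.
    """
    dmu = (mean_fn(theta + h) - mean_fn(theta - h)) / (2.0 * h)
    return dmu ** 2 / SIGMA ** 2


def jeffreys_in_S(s):
    """Unnormalised Jeffreys' prior when the model is written in terms of S."""
    return math.sqrt(fisher_info(lambda t: F2X / t, s))


def jeffreys_in_lambda(lam):
    """Unnormalised Jeffreys' prior when written in terms of lambda = F2X/S."""
    return math.sqrt(fisher_info(lambda t: t, lam))


for s in (1.5, 3.0, 6.0):
    lam = F2X / s
    jacobian = F2X / s ** 2  # |d lambda / d S|
    direct = jeffreys_in_S(s)
    carried_over = jeffreys_in_lambda(lam) * jacobian
    print(f"S = {s:.1f} K: direct {direct:.6f}, from lambda {carried_over:.6f}")
```

In λ the prior comes out constant (flat, which is the "JP is sometimes flat" case), while in S it falls off as 1/S²; the two agree because √I already contains the Jacobian. A flat prior has no such built-in factor, which is why flat-in-S and flat-in-1/S disagree.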

As it happens, I have no objection to completely subjective priors providing people are prepared to hold the ensuing argument, and be clear and transparent in their reasoning, which I can either embrace or dismiss. That said, it seems that the JPs would be the better points for departure (as opposed to flat priors) to argue from.

Many Thanks

Alex

In 2006, Barton Paul Levenson counted 62 papers that estimated climate sensitivity, starting with Arrhenius in 1896.

http://bartonpaullevenson.com/ClimateSensitivity.html

| Value | Number of papers |
|---|---|
| Less than 1 | 6 |
| Between 1 and 2 | 19 |
| Between 2 and 3 | 12 |
| Between 3 and 4 | 12 |
| Between 4 and 5 | 4 |
| Between 5 and 6 | 1 |
| Greater than 6 | 1 |

Average across all is 2.86, median 2.6.

Ari Jokimaki lists more recent papers here: http://agwobserver.wordpress.com/2009/11/05/papers-on-climate-sensitivity-estimates/

A certain picture emerges.

[Response: These are not all commensurable as discussed above, and some are just wrong or rely on out-of-date data. A proper assessment requires a little more work, not just counting. – gavin]

Not looking too good, is it?

Nic Lewis out first with his study on climate sensitivity, comments above by Steve Jewson on the use of flat priors, now a Norwegian group on their new revised estimate for climate sensitivity.

http://www.forskningsradet.no/en/Newsarticle/Global_warming_less_extreme_than_feared/1253983344535/p1177315753918

That 1.9 C for CO2 doubling not so unreasonable after all…

nvw,

It seems that nature has a greater influence than first thought. So much for Simon’s claim above.

If a dominance of La Nina/ocean variability is causing a hiatus, does that mean climate sensitivity is lower? Doesn’t sound right to me.

#96 from your link:

“When the researchers at CICERO and the Norwegian Computing Center applied their model and statistics to analyse temperature readings from the air and ocean for the period ending in 2000, they found that climate sensitivity to a doubling of atmospheric CO2 concentration will most likely be 3.7°C, which is somewhat higher than the IPCC prognosis.

But the researchers were surprised when they entered temperatures and other data from the decade 2000-2010 into the model; climate sensitivity was greatly reduced to a “mere” 1.9°C.”

Well, doesn’t this just show that the method is not robust? How can a single decade be enough to overturn previous results that much, especially when there are other straightforward methods explaining the differences in trend from empirical data, e.g. Foster/Rahmstorf 2012? I assume that climate sensitivity does not change with time.

Has anyone seen the paper in #99? I cannot find a published article…