There has been a lot of discussion related to the Hansen et al (2012, PNAS) paper and the accompanying op-ed in the Washington Post last week. But in this post, I’ll try and make the case that most of the discussion has not related to the actual analysis described in the paper, but rather to proxy arguments for what people think is ‘important’.

The basic analysis

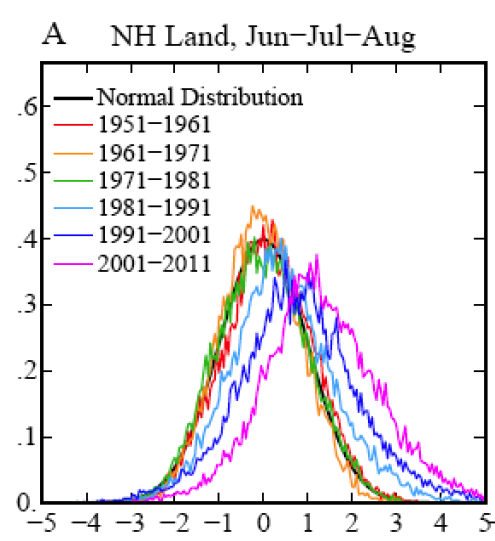

What Hansen et al have done is actually very simple. If you define a climatology (say 1951-1980, or 1931-1980), calculate the seasonal mean and standard deviation at each grid point for this period, and then normalise the departures from the mean, you will get something that looks very much like a Gaussian ‘bell-shaped’ distribution. If you then plot a histogram of the values from successive decades, you will get a sense for how much the climate of each decade departed from that of the initial baseline period.
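The recipe above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code behind the paper: the 200 "grid points", their local means and standard deviations, and the 0.8 degree warming of the later decade are all synthetic, and the function names are made up for this example.

```python
import random
import statistics


def normalised_anomalies(values, baseline):
    """Departures from the baseline mean, in units of the baseline standard deviation."""
    mean = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    return [(v - mean) / sigma for v in values]


def histogram(z_scores, lo=-5.0, hi=5.0, width=0.5):
    """Count z-scores in fixed-width bins; values outside [lo, hi) are dropped."""
    n_bins = int(round((hi - lo) / width))
    counts = [0] * n_bins
    for z in z_scores:
        if lo <= z < hi:
            index = min(int((z - lo) // width), n_bins - 1)
            counts[index] += 1
    return counts


rng = random.Random(0)
z_base, z_later = [], []
for _ in range(200):  # 200 synthetic grid points, not real observations
    local_mean = rng.uniform(10.0, 30.0)
    local_sigma = rng.uniform(0.5, 2.0)
    # 30 baseline seasons at this grid point
    baseline = [rng.gauss(local_mean, local_sigma) for _ in range(30)]
    # Hypothetical later decade: same local variability, 0.8 degrees warmer
    later = [rng.gauss(local_mean + 0.8, local_sigma) for _ in range(10)]
    # Normalise each grid point by its own baseline, then pool the results
    z_base.extend(normalised_anomalies(baseline, baseline))
    z_later.extend(normalised_anomalies(later, baseline))

print("baseline histogram:", histogram(z_base))
print("later histogram:   ", histogram(z_later))
print("mean shift in baseline sigmas:", round(statistics.fmean(z_later), 2))
```

In this synthetic setup the later histogram also comes out somewhat wider even though local variability is unchanged, partly because the same 0.8 degrees is a different number of sigmas at each grid point.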

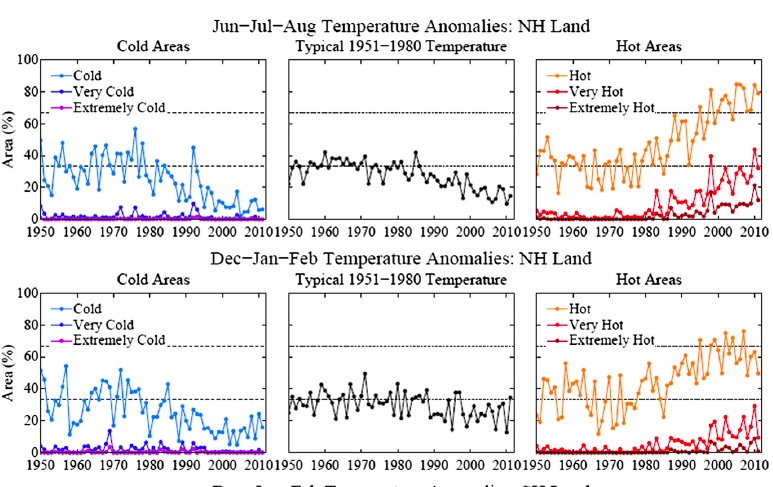

The shift in the mean of the histogram is an indication of the global mean shift in temperature, and the change in spread gives an indication of how regional events would rank with respect to the baseline period. (Note that the change in spread shouldn’t be automatically equated with a change in climate variability, since a similar pattern would be seen as a result of regionally specific warming trends with constant local variability). This figure, combined with the change in areal extent of warm temperature extremes:

are the main results that lead to Hansen et al’s conclusion that:

“hot extreme[s], which covered much less than 1% of Earth’s surface during the base period, now typically [cover] about 10% of the land area. It follows that we can state, with a high degree of confidence, that extreme anomalies such as those in Texas and Oklahoma in 2011 and Moscow in 2010 were a consequence of global warming because their likelihood in the absence of global warming was exceedingly small.”

What this shows first of all is that extreme heat waves, like the ones mentioned, are not just “black swans” – i.e. extremely rare events that happened by “bad luck”. They might look like rare unexpected events when you just focus on one location, but looking at the whole globe, as Hansen et al. did, reveals an altogether different truth: such events show a large systematic increase over recent decades and are by no means rare any more. At any given time, they now cover about 10% of the planet. What follows is that the likelihood of 3 sigma+ temperature events (defined using the 1951-1980 baseline mean and sigma) has increased by such a striking amount that attribution to the general warming trend is practically assured. We have neither long enough nor good enough observational data to have a perfect knowledge of the extremes of heat waves given a steady climate, and so no claim along these lines can ever be for 100% causation, but the change is large enough to be classically ‘highly significant’.

The point I want to stress here is that the causation is for the metric “a seasonal monthly anomaly greater than 3 sigma above the mean”.

This metric follows on from work that Hansen did a decade ago exploring the question of what it would take for people to notice climate changing, since they only directly experience the weather (Hansen et al, 1998) (pdf), and is similar to metrics used by Pall et al and other recent papers on the attribution of extremes. It is closely connected to metrics related to return times (i.e. if the areal extent of extremely hot anomalies in any one summer increases by a factor of 10, then the return time at an average location goes from 1 in 330 years to 1 in 33 years).
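The return-time arithmetic is just the reciprocal relationship between how often an event occurs and how long you wait for it on average. A minimal sketch (the function name is hypothetical, and it assumes the areal extent translates directly into the frequency at an average location):

```python
def return_time_after_scaling(base_return_time_years, frequency_factor):
    """Return time once the event frequency is multiplied by frequency_factor."""
    if base_return_time_years <= 0 or frequency_factor <= 0:
        raise ValueError("both arguments must be positive")
    # Return time is the reciprocal of frequency, so it shrinks by the same factor
    return base_return_time_years / frequency_factor


print(return_time_after_scaling(330, 10))  # 33.0
```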

A similar conclusion to Hansen was reached by Rahmstorf and Coumou (2011) (pdf), but for a related but different metric: the probability of record-breaking events rather than 3-sigma events. For the Moscow heat record of July 2010, they found that the probability of a record had increased five-fold due to the local climatic warming trend, as compared to a stationary climate (see our previous articles The Moscow warming hole and On record-breaking extremes for further discussion). An extension of this analysis to the whole globe, which reaches a similar conclusion, is currently in review.
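To get a feel for the record-breaking metric, here is a toy Monte Carlo sketch. It is not the calculation from the paper: the 100-year series length, the trend of 0.02 standard deviations per year and the white-noise variability are all assumptions chosen for illustration. In a stationary series of independent values, the chance that year n sets a new record is 1/n, which gives a check on the simulation.

```python
import random


def records_in_last_decade(n_years, trend_per_year, sigma, n_trials, seed):
    """Average number of new record highs in the final 10 years of simulated series."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_trials):
        running_max = float("-inf")
        for year in range(n_years):
            # Linear trend plus independent Gaussian noise (an assumed toy model)
            value = trend_per_year * year + rng.gauss(0.0, sigma)
            if value > running_max:
                running_max = value
                if year >= n_years - 10:
                    total += 1
    return total / n_trials


stationary = records_in_last_decade(100, 0.0, 1.0, 2000, seed=1)
warming = records_in_last_decade(100, 0.02, 1.0, 2000, seed=1)
# Theory for the stationary case: sum of 1/n over years 91 to 100
expected_stationary = sum(1.0 / n for n in range(91, 101))

print("stationary (simulated):", round(stationary, 3))
print("stationary (theory):   ", round(expected_stationary, 3))
print("with trend (simulated):", round(warming, 3))
```

The ratio of the last two numbers plays the role of the "increase in the probability of a record" for this toy setup; the value it takes depends entirely on the assumed trend and noise.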

There have been some critiques of Hansen et al. worth addressing – Marty Hoerling’s statements in the NY Times story referring to his work (Dole et al, 2011, and Hoerling et al, submitted) on attribution of the Moscow and Texas heat-waves, and a blog post by Cliff Mass of the U. of Washington. *

*We can just skip right past the irrelevant critique from Pat Michaels – someone well-versed in misrepresenting Hansen’s work – since it consists of proving wrong a claim (that US drought is correlated to global mean temperature) that appears nowhere in the paper – even implicitly. This is like criticising a diagnosis of measles by showing that your fever is not correlated to the number of broken limbs.

The metrics that Hoerling and Mass use for their attribution calculations are the absolute anomaly above climatology. So if a heat wave is 7ºC above the average summer, and since global warming could have contributed 1 or 2ºC (depending on location, season etc.), the claim is that only 1/7th or 2/7ths of the anomaly is associated with climate change, and that the bulk of the heat wave is driven by whatever natural variability has always been important (say, La Niña or a blocking high).

But this Hoerling-Mass ratio is a very different metric than the one used by Hansen, Pall, Rahmstorf & Coumou, Allen and others, so it isn’t fair for Hoerling and Mass to claim that the previous attributions are wrong – they are simply attributing a different thing. This only rarely seems to be acknowledged. We discussed the difference between those two types of metrics previously in Extremely hot. There we showed that the more extreme an event is, the more its relative likelihood increases as a result of a warming trend.
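That last point is easy to check for an idealised case. The sketch below assumes a Gaussian distribution whose mean shifts by one baseline standard deviation while its width stays fixed; the size of the shift is a made-up illustrative value, not a number from any of the papers.

```python
import math


def tail_probability(threshold_sigma, mean_shift_sigma=0.0):
    """P(z > threshold) for a unit-variance Gaussian whose mean has been shifted."""
    return 0.5 * math.erfc((threshold_sigma - mean_shift_sigma) / math.sqrt(2.0))


def likelihood_ratio(threshold_sigma, mean_shift_sigma):
    """How much more likely an exceedance becomes after the mean shift."""
    shifted = tail_probability(threshold_sigma, mean_shift_sigma)
    unshifted = tail_probability(threshold_sigma)
    return shifted / unshifted


for threshold in (1.0, 2.0, 3.0):
    ratio = likelihood_ratio(threshold, mean_shift_sigma=1.0)
    print(f"{threshold:.0f}-sigma events: {ratio:.1f} times more likely")
```

For this assumed one-sigma shift the ratios come out at roughly 3, 7 and 17 for 1-, 2- and 3-sigma thresholds respectively, so the same warming loads the dice far more heavily for the rarest events.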

So which metric ‘matters’ more? And are there other metrics that would be better or more useful?

A question of values

What people think is important varies enormously, and as the French say ‘Les goûts et les couleurs ne se discutent pas’ (Neither tastes nor colours are worth arguing about). But is the choice of metric really just a matter of opinion? I think not.

Why do people care about extreme weather events? Why, for instance, is a week of 1ºC above climatology uneventful, yet a day with a 7ºC anomaly is something to be discussed on the evening news? It is because the impacts of a heat wave are very non-linear. The marginal effect of an additional 1ºC on top of 6ºC on many aspects of a heat wave (on health, crops, power consumption etc.) is much more than the effect of the first 1ºC anomaly. There are also thresholds – temperatures above which systems will cease to work at all. One would think this would be uncontroversial. Of course, for some systems not near any thresholds and over a small enough range, effects can be approximated as linear, but at some point that will obviously break down – and the points at which it does are clearly associated with extremes that have the most important impacts.

Only if we assume that all responses are linear can there be a clear separation between the temperature increases caused by global warming and the internal variability over any season or period, with the attribution of effects scaling like the Hoerling-Mass ratio. But even then the “fraction of the anomaly due to global warming” is somewhat arbitrary because it depends on the chosen baseline for defining the anomaly – is it the average July temperature, or typical previous summer heat waves (however defined), or the average summer temperature, or the average annual temperature? With the last (admittedly somewhat unusual) choice of baseline, the fraction of last July’s temperature anomaly that is attributable to global warming is tiny, since most of the anomaly is perfectly natural and due to the seasonal cycle! So the fraction of an event that is due to global warming depends on what you compare it to. One could just as well choose a baseline of climatology, conditioned e.g. on the phase of ENSO, the PDO and the NAO, in which case the global warming signal would be much larger.

If, however, the effects are significantly non-linear, then this separation can’t be done so simply. If the effects are quadratic in the anomaly, an extra 1ºC on top of 6ºC is responsible for 26% of the effect, not 14%. For cubic effects, it would be 37% etc. And if there was a threshold at 6.5ºC, it would be 100%.
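These percentages follow from asking how much of the effect disappears if the last 1ºC is removed. A minimal sketch, assuming the effect scales as a simple power of the anomaly or switches on at a threshold (both are idealisations, and the function names are invented for this example):

```python
def fraction_from_increment(total, increment, power):
    """Share of an effect proportional to anomaly**power that is lost without the increment."""
    return 1.0 - ((total - increment) / total) ** power


def fraction_with_threshold(total, increment, threshold):
    """Same question when the effect only occurs above a threshold."""
    if total <= threshold:
        return 0.0  # no effect at all, so nothing to attribute
    # If the anomaly without the increment stays below the threshold,
    # the increment is responsible for the whole effect
    return 1.0 if (total - increment) <= threshold else 0.0


for name, power in (("linear", 1), ("quadratic", 2), ("cubic", 3)):
    share = fraction_from_increment(7.0, 1.0, power)
    print(f"{name}: {100 * share:.1f}%")
print(f"threshold at 6.5: {100 * fraction_with_threshold(7.0, 1.0, 6.5):.0f}%")
```

The linear case reproduces the 1/7th of the Hoerling-Mass ratio (about 14%), while the quadratic and cubic cases give about 26.5% and 37%.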

Since, however, we don’t know exactly what the effect/temperature curve looks like in any specific situation, let alone globally (and in any case this would be very subjective), any kind of assumed effect function needs to be justified. However, we do know that in general effects will be non-linear, and that there are thresholds. Given that, looking at changes in frequency of events (or return times, as is sometimes done) is more general and allows different sectors/people to assess the effects based on their prior experience. And choosing highly exceptional events to calculate return times – like 3-sigma+ events, or the record-breaking events – is sensible for focusing on the events that cause the most damage because society and ecosystems are least adapted to them.

Using the metric that Hoerling and Mass are proposing is equivalent to assuming that all effects of extremes are linear, which is very unlikely to be true. The ‘loaded dice’/‘return time’/‘frequency of extremes’ metrics being used by Hansen, Pall, Rahmstorf & Coumou, Allen etc. are going to be much more useful for anyone who cares about what effects these extremes are having.

References

- J. Hansen, M. Sato, and R. Ruedy, "Perception of climate change", Proceedings of the National Academy of Sciences, vol. 109, 2012. http://dx.doi.org/10.1073/pnas.1205276109

- J. Hansen, M. Sato, J. Glascoe, and R. Ruedy, "A common-sense climate index: Is climate changing noticeably?", Proceedings of the National Academy of Sciences, vol. 95, pp. 4113-4120, 1998. http://dx.doi.org/10.1073/pnas.95.8.4113

- S. Rahmstorf and D. Coumou, "Increase of extreme events in a warming world", Proceedings of the National Academy of Sciences, vol. 108, pp. 17905-17909, 2011. http://dx.doi.org/10.1073/pnas.1101766108

- R. Dole, M. Hoerling, J. Perlwitz, J. Eischeid, P. Pegion, T. Zhang, X. Quan, T. Xu, and D. Murray, "Was there a basis for anticipating the 2010 Russian heat wave?", Geophysical Research Letters, vol. 38, 2011. http://dx.doi.org/10.1029/2010GL046582

Hank in 148 linked to a very interesting discussion and asked “So — what does the data show?”

It shows that our statistical Kung Fu is weak: http://www.youtube.com/watch?v=3g92KbAPJMg

It is incredibly difficult to tease out changes in the shape of distributions from such small amounts of data. I believe that these temperature extremes, heat waves, etc are a consequence of global warming, but that is a belief. Before the economic collapse I believed we were in a housing bubble. When I casually observed housing prices I could see a spike. Paul Krugman wrote for years before the collapse that we were in a bubble and I believed he was right. Alan Greenspan didn’t. I don’t think Greenspan was an idiot. I think that there was no rigorous analysis demonstrating that we were in a bubble possibly because a rigorous analysis is impossible with such small amounts of data. You look at a short stochastic time series and say “Gee prices are going up awfully fast.” or “Gee, temperatures are going up awfully fast” but showing that such increases are statistically meaningful is hard when you have a time series of about 130 years or so and want to argue in a statistically rigorous way that the last few decades are special. I think that doing this on the basis of time series analysis is somewhere between hard and impossible. I think more and better analyses are needed. I predict that no one will argue with that.

John (#151)

You may be able to estimate the number of spatial grid points used by looking at the figures involving the US lower 48 here: http://www.columbia.edu/~mhs119/PerceptionsAndDice/

Once you do, I’m sure you’ll revise your statement about too few data.

John,

I agree with you completely. While we may think something will happen based on the evidence, drawing a conclusion will require more data. This can be confirmed (or refuted) with more and better analyses.

“…but you can’t relax the independent condition.” John E. Pearson — 25 Aug 2012 @ 9:14 AM

But if you impose a forcing (AGW) which changes the degree of independence, making the tails fatter/less Gaussian, doesn’t that mean the forcing ACTUALLY makes the probability of what were once 3,4,5 sigma events MUCH higher than indicated by tamino’s method of analysis, which removes these effects? Doesn’t using a “baseline for anomaly calculation” “equal to the time span being analyzed” decrease REAL extreme weather event probabilities much the same way as using a sliding baseline minimizes the slope of temperature increase? This appears to me to be using Middleton’s Method for Minimizing Risk.

reCAPTCHA – “spread thatdo” – Yes, it do spread that distribution &:>)

Re 177 the reason Siberia was not glaciated was that with the lower sea levels it was too far inland to get any precipitation. No snow no glaciers.

As a newbie here, I want to raise an issue I haven’t seen addressed. The thrust of the present discussion, and most discussions I have seen related to climate change, is the effect of climate on weather. The long-term trend of temperature increase appears to enable more extreme weather events, covering a number of different definitions of ‘extreme’. But, what about the impact of weather on climate?

Because of the potential non-linearity, or non-hysteresis, of extreme events, mechanisms could be triggered by these extreme events that could impact climate. These are over and above those positive feedback mechanisms triggered by evolving climate mechanisms. One could postulate, for example, an overly warm Arctic week that crosses a threshold and triggers a burst of methane that would not be triggered at even slightly lower temperatures. This, of course, would add GHG to the atmosphere, feeding into further warming. So, it appears there is a symbiosis between climate and weather, each feeding upon the other to exacerbate the situation. Is this weather-climate symbiosis considered in the models?

I am reading the book “Hockey Stick and the Climate Wars”. My 8-year-old asked what it is about and I did not know how to respond without overwhelming him. Can you recommend any books that are truthful about the upcoming effects of climate change that I can read to my son?

8 years is too young to be given ownership of this problem. Stick to generally modeling good values instead, that’s my advice. Or failing that, make a plan for communications that isn’t the equivalent of emptying a bag of hammers over a young mind.

Repeating some remarks I made at P3, my son was the inadvertent subject of well-intended willy-nilly environmental destruction narratives delivered by his parents, various teachers, NPR, the “Planet Earth” documentary, IMAX movies, nearly every magazine he picks up, etc. etc. etc.

The healthy response to this is to let the snorkel valve rise to the “flooded” position and shut off the source of emotional drowning. My son has his valves shut now, presumably will crack the hatch and peek out at some point in the future.