There has been a lot of discussion related to the Hansen et al (2012, PNAS) paper and the accompanying op-ed in the Washington Post last week. But in this post, I’ll try and make the case that most of the discussion has not related to the actual analysis described in the paper, but rather to proxy arguments for what people think is ‘important’.

The basic analysis

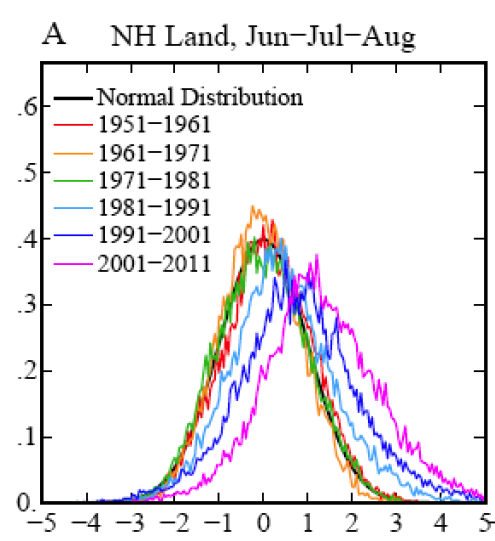

What Hansen et al have done is actually very simple. If you define a climatology (say 1951-1980, or 1931-1980), calculate the seasonal mean and standard deviation at each grid point for this period, and then normalise the departures from the mean, you will get something that looks very much like a Gaussian ‘bell-shaped’ distribution. If you then plot a histogram of the values from successive decades, you will get a sense for how much the climate of each decade departed from that of the initial baseline period.
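The procedure above is easy to try on made-up numbers. The sketch below illustrates the idea under stated assumptions and is not Hansen et al's code. The grid, the local means and spreads, and the 0.03 ºC/yr warming after 1980 are all hypothetical, and the helper names (`make_synthetic_grid`, `normalise`, `pooled_by_decade`, `histogram`) are invented for the example. Each point is normalised by its own 1951-1980 mean and standard deviation before pooling, so the baseline decades are centred on zero and later decades shift to the right.

```python
import math
import random
import statistics
from collections import Counter

BASE_START, BASE_END = 1951, 1980  # baseline climatology, as in the post

def make_synthetic_grid(n_points=500, years=range(1951, 2010), seed=1):
    """Hypothetical stand-in for gridded seasonal means: every point gets its own
    mean and spread, plus an assumed warming of 0.03 C/yr after 1980."""
    rng = random.Random(seed)
    grid = []
    for _ in range(n_points):
        local_mean = rng.uniform(10.0, 30.0)
        local_sd = rng.uniform(0.5, 1.5)
        grid.append({yr: rng.gauss(local_mean + 0.03 * max(0, yr - BASE_END), local_sd)
                     for yr in years})
    return grid

def normalise(series):
    """Express one point's seasonal means in units of its own baseline sigma."""
    base = [t for yr, t in series.items() if BASE_START <= yr <= BASE_END]
    mu, sigma = statistics.mean(base), statistics.stdev(base)
    return {yr: (t - mu) / sigma for yr, t in series.items()}

def pooled_by_decade(grid):
    """Pool the normalised anomalies of all grid points, decade by decade."""
    by_decade = {}
    for series in grid:
        for yr, z in normalise(series).items():
            by_decade.setdefault(10 * (yr // 10), []).append(z)
    return by_decade

def histogram(values, bin_width=0.5):
    """Counts per bin, keyed by the left edge of each bin (in sigma units)."""
    return Counter(bin_width * math.floor(v / bin_width) for v in values)

by_decade = pooled_by_decade(make_synthetic_grid())
for decade in sorted(by_decade):
    zs = by_decade[decade]
    share = sum(z > 3 for z in zs) / len(zs)
    print(f"{decade}s: mean {statistics.mean(zs):+.2f} sigma, {100 * share:.2f}% above 3 sigma")
hist_2000s = histogram(by_decade[2000])  # plot these counts to get one decade's curve
```

Plotting `histogram(by_decade[d])` for each decade `d` gives curves of the same kind as in the figure, built here from synthetic rather than observed data.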

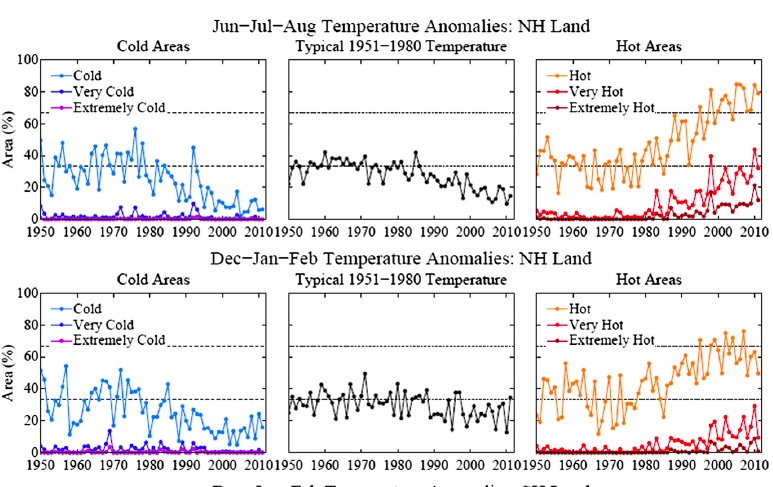

The shift in the mean of the histogram is an indication of the global mean shift in temperature, and the change in spread gives an indication of how regional events would rank with respect to the baseline period. (Note that the change in spread shouldn’t be automatically equated with a change in climate variability, since a similar pattern would be seen as a result of regionally specific warming trends with constant local variability). This figure and the change in areal extent of warm temperature extremes are the main results that lead to Hansen et al’s conclusion that:

“hot extreme[s], which covered much less than 1% of Earth’s surface during the base period, now typically [cover] about 10% of the land area. It follows that we can state, with a high degree of confidence, that extreme anomalies such as those in Texas and Oklahoma in 2011 and Moscow in 2010 were a consequence of global warming because their likelihood in the absence of global warming was exceedingly small.”

What this shows first of all is that extreme heat waves, like the ones mentioned, are not just “black swans” – i.e. extremely rare events that happened by “bad luck”. They might look like rare unexpected events when you just focus on one location, but looking at the whole globe, as Hansen et al. did, reveals an altogether different truth: such events show a large systematic increase over recent decades and are by no means rare any more. At any given time, they now cover about 10% of the planet. What follows is that the likelihood of 3 sigma+ temperature events (defined using the 1951-1980 baseline mean and sigma) has increased by such a striking amount that attribution to the general warming trend is practically assured. We have neither long enough nor good enough observational data to have a perfect knowledge of the extremes of heat waves given a steady climate, and so no claim along these lines can ever be for 100% causation, but the change is large enough to be classically ‘highly significant’.

The point I want to stress here is that the causation is for the metric “a seasonal monthly anomaly greater than 3 sigma above the mean”.

This metric follows on from work that Hansen did over a decade ago exploring the question of what it would take for people to notice climate changing, since they only directly experience the weather (Hansen et al, 1998), and is similar to metrics used by Pall et al and other recent papers on the attribution of extremes. It is closely connected to metrics related to return times (i.e. if the areal extent of extremely hot anomalies in any one summer increases by a factor of 10, then the return time at an average location goes from 1 in 330 years to 1 in 33 years).
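The return-time conversion above is just a reciprocal. The sketch below assumes, as the argument implicitly does, that the fraction of area covered by an extreme in a given summer can be read as the chance per summer at an average location. `return_time_years` is a hypothetical helper name.

```python
def return_time_years(areal_fraction):
    """Average years between events at a typical location, assuming the share of
    area affected in a given summer equals the per-summer chance at one place."""
    return 1.0 / areal_fraction

baseline_fraction = 1 / 330  # illustrative: about 1 summer in 330
for factor in (1, 10):
    years = return_time_years(baseline_fraction * factor)
    print(f"areal extent x{factor}: about 1 summer in {years:.0f}")
```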

A similar conclusion to Hansen’s was reached by Rahmstorf and Coumou (2011), but for a related but different metric: the probability of record-breaking events rather than 3-sigma events. For the Moscow heat record of July 2010, they found that the probability of a record had increased five-fold due to the local climatic warming trend, as compared to a stationary climate (see our previous articles The Moscow warming hole and On record-breaking extremes for further discussion). An extension of this analysis to the whole globe, reaching a similar conclusion, is currently in review.
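The record-breaking metric can be illustrated with a small Monte Carlo experiment. This is a generic sketch in the spirit of the record-breaking argument, not Rahmstorf and Coumou’s actual calculation. It assumes independent Gaussian year-to-year noise and an arbitrary, hypothetical trend of 0.02 standard deviations per year, and `record_probability` is an invented helper. In a stationary series the chance that year n sets a record is 1/n; adding a trend raises it.

```python
import random

def record_probability(n_years, trend_per_year, trials=10000, seed=0):
    """Monte Carlo chance that the final year of a series (unit-variance Gaussian
    noise plus a linear trend) sets a new record high."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        values = [trend_per_year * t + rng.gauss(0.0, 1.0) for t in range(n_years)]
        if values[-1] > max(values[:-1]):
            hits += 1
    return hits / trials

n = 100
stationary = record_probability(n, 0.0)  # theory for i.i.d. noise: 1/n
trending = record_probability(n, 0.02)   # hypothetical trend, in sigma per year
print(f"stationary: {stationary:.4f} (1/n = {1 / n:.4f})")
print(f"with trend: {trending:.4f}, about {trending / stationary:.1f} times as likely")
```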

There have been some critiques of Hansen et al. worth addressing: Marty Hoerling’s statements in the NY Times story referring to his work (Dole et al, 2011) and Hoerling et al (submitted) on attribution of the Moscow and Texas heat waves, and a blog post by Cliff Mass of the U. of Washington.*

*We can just skip right past the irrelevant critique from Pat Michaels – someone well-versed in misrepresenting Hansen’s work – since it consists of proving wrong a claim (that US drought is correlated to global mean temperature) that appears nowhere in the paper – even implicitly. This is like criticising a diagnosis of measles by showing that your fever is not correlated to the number of broken limbs.

The metric that Hoerling and Mass use for their attribution calculations is the absolute anomaly above climatology. So if a heat wave is 7ºC above the average summer, and global warming could have contributed 1 or 2ºC (depending on location, season etc.), the claim is that only 1/7th or 2/7ths of the anomaly is associated with climate change, and that the bulk of the heat wave is driven by whatever natural variability has always been important (say, La Niña or a blocking high).

But this Hoerling-Mass ratio is a very different metric than the one used by Hansen, Pall, Rahmstorf & Coumou, Allen and others, so it isn’t fair for Hoerling and Mass to claim that the previous attributions are wrong: they are simply attributing a different thing. This only rarely seems to be acknowledged. We discussed the difference between those two types of metrics previously in Extremely hot. There we showed that the more extreme an event is, the more its relative likelihood increases as a result of a warming trend.
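The point that relative likelihood grows fastest for the most extreme events can be checked in the simplest case. The sketch below assumes a Gaussian distribution whose mean shifts by a hypothetical one standard deviation while its width stays fixed. Hansen’s histograms also widen, which this ignores.

```python
import math

def exceedance(threshold_sigma, shift_sigma=0.0):
    """P(Z > threshold) for a Gaussian whose mean has moved by shift_sigma."""
    return 0.5 * math.erfc((threshold_sigma - shift_sigma) / math.sqrt(2))

shift = 1.0  # hypothetical warming of one baseline standard deviation
for k in (1, 2, 3, 4):
    ratio = exceedance(k, shift) / exceedance(k)
    print(f"> {k} sigma: {exceedance(k):.5f} -> {exceedance(k, shift):.5f} (x{ratio:.1f})")
```

The ratio rises from roughly 3 at the 1-sigma threshold to roughly 17 at 3 sigma, which is the ‘loaded dice’ effect in its most basic form.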

So which metric ‘matters’ more? And are there other metrics that would be better or more useful?

A question of values

What people think is important varies enormously, and as the French say ‘Les goûts et les couleurs ne se discutent pas’ (Neither tastes nor colours are worth arguing about). But is the choice of metric really just a matter of opinion? I think not.

Why do people care about extreme weather events? Why for instance is a week of 1ºC above climatology uneventful, yet a day with a 7ºC anomaly is something to be discussed on the evening news? It is because the impacts of a heat wave are very non-linear. The marginal effect of an additional 1ºC on top of 6ºC on many aspects of a heat wave (on health, crops, power consumption etc.) is much more than the effect of the first 1ºC anomaly. There are also thresholds: temperatures above which systems will cease to work at all. One would think this would be uncontroversial. Of course, for some systems not near any thresholds and over a small enough range, effects can be approximated as linear, but at some point that will obviously break down, and the points at which it does are clearly associated with the extremes that have the most important impacts.

Only if we assume that all responses are linear can there be a clear separation between the temperature increases caused by global warming and the internal variability over any season or period, and the attribution of effects scales like the Hoerling-Mass ratio. But even then the “fraction of the anomaly due to global warming” is somewhat arbitrary because it depends on the chosen baseline for defining the anomaly: is it the average July temperature, or typical previous summer heat waves (however defined), or the average summer temperature, or the average annual temperature? In the latter (admittedly somewhat unusual) choice of baseline, the fraction of last July’s temperature anomaly that is attributable to global warming is tiny, since most of the anomaly is perfectly natural and due to the seasonal cycle! So the fraction of an event that is due to global warming depends on what you compare it to. One could just as well choose a baseline of climatology conditioned, e.g., on the phase of ENSO, the PDO and the NAO, in which case the global warming signal would be much larger.

If, however, the effects are significantly non-linear, then this separation can’t be done so simply. If the effects are quadratic in the anomaly, an extra 1ºC on top of 6ºC is responsible for 26% of the effect, not 14%. For cubic effects, it would be 37%, etc. And if there were a threshold at 6.5ºC, it would be 100%.
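The percentages above are simple ratios: the share of the total impact that would disappear without the last 1ºC of a 7ºC anomaly. The sketch below uses hypothetical impact curves; the function names and curves are illustrative and not taken from any study. The linear case is the Hoerling-Mass ratio, 1/7 ≈ 14%, while the quadratic and cubic cases give 13/49 ≈ 26.5% and 127/343 ≈ 37%.

```python
def share_of_effect(anomaly, extra, effect):
    """Fraction of the total impact that would vanish without the last `extra` degrees."""
    return (effect(anomaly) - effect(anomaly - extra)) / effect(anomaly)

impact_curves = {  # hypothetical impact functions of the anomaly x (in C)
    "linear (Hoerling-Mass ratio)": lambda x: x,
    "quadratic": lambda x: x ** 2,
    "cubic": lambda x: x ** 3,
    "threshold at 6.5 C": lambda x: 1.0 if x > 6.5 else 0.0,
}
for name, curve in impact_curves.items():
    print(f"{name}: {100 * share_of_effect(7.0, 1.0, curve):.1f}%")
```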

However, since we don’t know exactly what the effect/temperature curve looks like in any specific situation, let alone globally (and in any case this would be very subjective), any kind of assumed effect function needs to be justified. What we do know is that, in general, effects will be non-linear and that there are thresholds. Given that, looking at changes in the frequency of events (or return times, as is sometimes done) is more general and allows different sectors/people to assess the effects based on their prior experience. And choosing highly exceptional events to calculate return times, like 3-sigma+ events or record-breaking events, is sensible for focusing on the events that cause the most damage, because society and ecosystems are least adapted to them.

Using the metric that Hoerling and Mass are proposing is equivalent to assuming that all effects of extremes are linear, which is very unlikely to be true. The ‘loaded dice’/’return time’/’frequency of extremes’ metrics being used by Hansen, Pall, Rahmstorf & Coumou, Allen etc. are going to be much more useful for anyone who cares about what effects these extremes are having.

References

- J. Hansen, M. Sato, and R. Ruedy, "Perception of climate change", Proceedings of the National Academy of Sciences, vol. 109, 2012. http://dx.doi.org/10.1073/pnas.1205276109

- J. Hansen, M. Sato, J. Glascoe, and R. Ruedy, "A common-sense climate index: Is climate changing noticeably?", Proceedings of the National Academy of Sciences, vol. 95, pp. 4113-4120, 1998. http://dx.doi.org/10.1073/pnas.95.8.4113

- S. Rahmstorf, and D. Coumou, "Increase of extreme events in a warming world", Proceedings of the National Academy of Sciences, vol. 108, pp. 17905-17909, 2011. http://dx.doi.org/10.1073/pnas.1101766108

- R. Dole, M. Hoerling, J. Perlwitz, J. Eischeid, P. Pegion, T. Zhang, X. Quan, T. Xu, and D. Murray, "Was there a basis for anticipating the 2010 Russian heat wave?", Geophysical Research Letters, vol. 38, 2011. http://dx.doi.org/10.1029/2010GL046582

There are three simple points in the Hansen paper

First if there is an increasing/decreasing linear trend on a noisy series, the probability of reaching new maxima or minima increases with time

Second, if you put more energy into a system variability increases.

Third, if you put more energy into a system variability increases asymmetrically towards the direction favored by higher energy

Fourth, if you keep going in the direction you’re headed, you’ll get there.

101 Eli wrote: “Second, if you put more energy into a system variability increases.

Third, if you put more energy into a system variability increases asymmetrically towards the direction favored by higher energy”

I’m not sure you meant this but the way those two points are stated makes it sound as if these statements are true in general, i.e. for systems other than the climate system. While I expect that they are true for the climate system it’s unlikely that they’re generally true as they would comprise new physical laws.

“There is no reason to expect this…either from modeling or theoretical results”

As you can see, Mass just waves away newer research showing the impact of melting arctic ice on NH atmospheric patterns. He feels the research is unconvincing, but I’ve yet to see him explain why. (If I’ve missed his explanation, could someone point me to it?)

It’s not clear if he thinks it’s fundamentally flawed research, or just too new — e.g. not enough support from other studies/researchers. I wish he’d address this in his blog.

I applaud the fact that you link to Tamino’s explanation why the increased variance may simply be a natural artifact of regional variability and where the baseline was chosen, and *not* actual increased variability. Apparently not many people on this thread read it, or understood it.

The real blowback against Hansen has a lot more to do with the alarmist and scientifically unsupportable statements he makes in his op-eds at the WP and NYT. In this article you speak to how science communication to the public should occur, and I would submit that there is a lot more communication happening in these op-eds than the PNAS paper.

“To the contrary, our analysis shows that, for the extreme hot weather of the recent past, there is virtually no explanation other than climate change.”

“Sea levels would rise and destroy coastal cities. Global temperatures would become intolerable. Twenty to 50 percent of the planet’s species would be driven to extinction. Civilization would be at risk.”

Many of the cheerleaders here may well agree with Hansen’s statements, made with absolute conviction and certainty, but I would venture to say that the consensus of scientists does not.

Choose your leaders wisely.

63 cliff mass says

Cliff Mass, I can understand your point. Here is an article that would support your view. Blocking events have increased, some think because of global warming, but that may not be the case. There is a graph at the end of this article that shows blocking days in the North Atlantic since 1901 by decade. It clearly shows that the number of blocking events varies by decade and that we are in a phase with more blocking days than the 1951 to 1980 period used for the Hansen research. Looking at the graph in the article I am linking to, what was the frequency of heat or cold waves from 1921 to 1950, which shows many more blocking days than the 1951 to 1980 period?

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/20110008410_2011008681.pdf

@105 Tom Scharf,

‘Choose your leaders wisely’. I would say we ignore Hansen at our collective peril. As far as I know, all those scientists who think Hansen is being too alarmist have not put forward convincing arguments for why his reasoned suspicion, that changes may come faster than the current scientific consensus allows for, is not justified. As long as these suspicions are reasonable, we should be cautious and take them seriously.

Case in point (verbatim):

GEOPHYSICAL RESEARCH LETTERS, VOL. 39, L16703, 7 PP., 2012

doi:10.1029/2012GL052775

Trends in record-breaking temperatures for the conterminous United States

Key Points

Standard methods of identifying record-setting trends are complex and not robust

Record low minimums decreasing, record high maximums increasing

Novel, simple method presented here will be useful for climate impact studies

Clinton M. Rowe

Department of Earth and Atmospheric Sciences, University of Nebraska-Lincoln, Lincoln, Nebraska, USA

Logan E. Derry

Department of Earth and Atmospheric Sciences, University of Nebraska-Lincoln, Lincoln, Nebraska, USA

In an unchanging climate, record-breaking temperatures are expected to decrease in frequency over time, as established records become increasingly more difficult to surpass. This inherent trend in the number of record-breaking events confounds the interpretation of actual trends in the presence of any underlying climate change. Here, a simple technique to remove the inherent trend is introduced so that any remaining trend can be examined separately for evidence of a climate change. As this technique does not use the standard definition of a broken record, our records* are differentiated by an asterisk. Results for the period 1961–2010 indicate that the number of record* low daily minimum temperatures has been significantly and steadily decreasing nearly everywhere across the United States while the number of record* high daily minimum temperatures has been predominantly increasing. Trends in record* low and record* high daily maximum temperatures are generally weaker and more spatially mixed in sign. These results are consistent with other studies examining changes expected in a warming climate.

Trends in record*-breaking temperatures for the conterminous United States http://www.agu.org/pubs/crossref/pip/2012GL052775.shtml

Link to it…

105 Tom Scharf told me to choose my leader wisely:

I plan to. http://contribute.barackobama.com

prokaryotes says: 21 Aug 2012 at 8:18 PM

Trends in record*-breaking temperatures…

Published by AGU, the erratic defender of ethics in science communications, potential samaritan, inadvertent author of irony.

#103 / John Pearson:

101 Eli wrote: “Second, if you put more energy into a system variability increases.

Third, if you put more energy into a system variability increases asymmetrically towards the direction favored by higher energy”

I’m not sure you meant this but the way those two points are stated makes it sound as if these statements are true in general, i.e. for systems other than the climate system. While I expect that they are true for the climate system it’s unlikely that they’re generally true as they would comprise new physical laws.

Eli thinks this is indeed a fairly general proposition rooted in statistical mechanics. All systems are constrained globally by conservation of energy but the possibility of local fluctuations depends on the amount of energy available locally, e.g. the distribution of energy in the system. The more energy in the system, the larger these local fluctuations can be. Examples can be found in many areas including protein folding and unfolding, molecular dynamics, etc. For example http://jcp.aip.org/resource/1/jcpsa6/v89/i9/p5852_s1

Many of the cheerleaders here may well agree with Hansen’s statements, made with absolute conviction and certainty, but I would venture to say that the consensus of scientists does not.

Sure, because some guy said so on some blog. I guess that explains why many previous civilizations collapsed when their local climate changed as well, and their local sources of food and water became unreliable.

You understand the difference between local and global, right? You understand the difference between a few hundred or thousands of people having to evacuate their desert homes and seven to nine billion on a planet? I would venture to say you don’t have a clue about the physics and biology at play here.

> The more energy in the system, the larger these local fluctuations

I’d been trying to think of any case John P. could cite where you wouldn’t expect to see local fluctuations toward the high end while energy is being added, and toward the low end when energy is being removed. Short of a superconductor, anyhow.

Imagine a pot of water at 50C.

Drop in a block of iron at 1C; you’ll see fluctuations, bits of colder water coming off the cold iron and mixing. Nowhere does any of it go above 50C.

Instead drop in a block of iron at 99C; you’ll see some water almost at 99C swirling as the heat goes into the water — but none of it goes below 50C.

Example needed where change _isn’t_ in the direction suggested.

http://www.gfdl.noaa.gov/blog/isaac-held/2012/08/04/30-extremes/

Tom Scharf wrote: “the alarmist and scientifically unsupportable statements he makes in his op-eds at the WP and NYT”

There is absolutely nothing whatsoever “scientifically unsupportable” about anything Hansen wrote in those op-eds, and given the reality that he accurately describes, “alarm” is fully justified.

Hansen is right. You are wrong. Period. End of story.

re John E Pearson (@103) and the “laws” concerning distribution of fluctuations in physical systems according to temperature (energy).

In general Eli’s postulates which you reproduce don’t constitute “new physical laws” since they’re already more or less contained within existing laws! Most notably Boltzmann’s law defining the distribution of states (that differ in energies) according to temperature.

According to Boltzmann’s law, p ∝ exp(−E/kT), where p is the probability of a state occurring, E is its energy, T is the temperature and k is Boltzmann’s constant.

So as you increase the temperature of a system that is defined by a number of different states separated by different energies (a climate system, or perhaps a protein whose configuration in solution may be characterized by a set of fluctuations around the sort of structure one might observe in a crystal), you not only increase the variability by populating more states[*], but the distribution of sub-states shifts towards those that are favoured at higher temperature.

[*] Note that at any temperature all of the states exist according to the Boltzmann distribution. However according to an observer a particular state may not be apparent until it is sufficiently populated. Thus increasing the temperature may populate a particular protein fluctuational state at a level (say 1% of the total distribution) where it might be detectable spectroscopically…..and increasing the global temperature may increase the frequency of a particular climate state (e.g. a severe drought or heat-wave) so that it becomes annoyingly apparent.

In reality the climate system won’t accord strictly to Boltzmann’s laws of distribution of states due to its complexity and the possibility of threshold effects (phase changes)/feedbacks and so on. However this also applies to simpler physical systems like the protein: heat the protein sufficiently and it will undergo an abrupt phase change to an unfolded (denatured) state…
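Chris’s Boltzmann argument can be illustrated numerically for an equilibrium toy system. This is only a sketch. It assumes ten hypothetical, equally spaced energy levels and a 1% ‘detection’ level, and the helpers `boltzmann` and `describe` are invented for the example. As other commenters point out, the climate is driven far from equilibrium, so this illustrates the statistical-mechanics point rather than the climate system itself.

```python
import math

def boltzmann(energies, kT):
    """Occupation probabilities p_i proportional to exp(-E_i / kT)."""
    weights = [math.exp(-e / kT) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

def describe(energies, kT, detectable=0.01):
    """Mean energy, energy variance, and number of states above a detection level."""
    p = boltzmann(energies, kT)
    mean = sum(pi * e for pi, e in zip(p, energies))
    var = sum(pi * (e - mean) ** 2 for pi, e in zip(p, energies))
    return mean, var, sum(pi >= detectable for pi in p)

levels = list(range(10))  # hypothetical, equally spaced energy levels
for kT in (1.0, 2.0):
    mean, var, n_seen = describe(levels, kT)
    print(f"kT={kT}: mean E={mean:.2f}, var={var:.2f}, states above 1%: {n_seen}")
```

Doubling kT raises both the mean energy and its variance, and the number of states populated above 1% goes from 5 to 8.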

112 and 114:

If those points are generally true then they constitute physical laws and their domain of validity ought to be specified, and of course they ought to have names: Rabett’s second and third laws, I guess. I’m not sure they’re true in the case of hard turbulence.

117 Chris wrote about Boltzmann statistics:

The connection to equilibrium statistical mechanics is opaque because we’re talking about driven systems far from equilibrium. At least that is what I thought we were talking about.

It sounds plausible that a system with higher energy has greater variability, but under what conditions? And with what held constant? Is there a theorem about this, or is it just a plausibility argument? (And is this actually claimed as a general theorem in the Hansen article, as the summary in @101 seems to imply?)

Consider a box filled with gas, under two conditions: (1) the first box is in equilibrium, at high temperature, and thus has a high energy content; (2) the second box has low energy content, but is out of equilibrium: it is stirred by turbulent convection, produced by heating from below and cooling from above. Which box has more hydrodynamic fluctuations (i.e., weather)? It seems plausible to me from this thought experiment that while the amount of energy may affect the size of the fluctuations, the degree of disequilibrium is also important in determining their size.

@114 Hank Roberts. Here is an example of a system where there is locally a change in the wrong direction. Suppose we have a block of iron and a beaker of water, initially at the same temperature. The iron and the water are connected to a heat engine which is connected to an air conditioner in a room also at the same initial temperature. Now we add energy to the system by heating the iron block. The temperature of the room (and the energy in it) decreases. While this particular situation might not occur by chance in nature, it shows that it is not a law of nature that if you add energy to a system, all sub-parts of the system experience only fluctuations in the same direction.

Tom Scharf @105 ends his comment “Choose your leaders wisely.”

This may be indeed a criticism of Hansen who attempts instead (in one of the Op-Eds that Tom Scharf quotes from) to get action from a reluctant leader. “President Obama speaks of a “planet in peril,” but he does not provide the leadership needed to change the world’s course.”

However the actual brickbats Tom Scharf tosses in are far from well aimed.

The first Hansen Op-Ed quote Tom Scharf objects to begins “To the contrary…” so presumably Tom Scharf is more at ease with what is being disavowed by Hansen when he said “…it is no longer enough to say that global warming will increase the likelihood of extreme weather … (nor) to repeat the caveat that no individual weather event can be directly linked to climate change.” I suppose Tom S. thinks it still remains “enough to say…” That’s fine by me. It will always take some folk a bit of time to catch up with the game.

The second Hansen Op-Ed dates from May 2012 and the apocalyptic prediction is about a world following continued oil/coal/gas use as well as exploited tar sands. So this is what? 750ppm of atmospheric CO2? It would be difficult to argue that such a level would fail to leave a big legacy like flooded cities etc.

That is not to let Hansen off without a better aimed brickbat. He says “…concentrations of carbon dioxide in the atmosphere eventually would reach levels higher than in the Pliocene era, more than 2.5 million years ago…” Surely this is an error. It should be Paleogene 34 million years ago and you are not going to get full marks with mistakes like that.

80 Jonathan asks, “Is there any simple way that doesn’t do violence to the data to plot temperature on the x-axis instead of standard distributions?”

No. On Vancouver Island, a 10 degree increase in temperature is astounding, but in Phoenix it’s a yawner. Since Vancouver Islanders often live without owning AC, such a change is more important than it would be in Phoenix. So, by using sigma instead of temperature, you automagically warp the data into something important to people.

I am not smart enough to post on Tamino’s site http://tamino.wordpress.com/2012/07/23/temperature-variability-part-2/ or maybe it is closed to comments now. Tamino presents a fairly convincing argument that Hansen’s method for estimating changes in variance is flawed.

Two remarks:

(1) If you consider the distribution of a sum of independent Gaussian random variables, the variance is the sum of the variances of the individual variables; differences in the means don’t affect the variance. If I’ve understood Tamino, he considered dependent variables. In particular, he considered a distribution p(x) which was a sum of distributions p_i(x) with arbitrary means. In that case the variance of p is the arithmetic mean of the variances of the p_i plus contributions due to differences in the means. It seems to me that one ought to be trying to ascertain the dependence/independence of different sites.

(2) That being said, it becomes clear that Clifford Mass’ claim that you can only attribute the global mean anomaly to local heat waves is wrong too. If it is correct that you can only attribute changes in mean temperature to heat waves, it ought to be the change in the local mean, for example the anomaly in a particular region for a particular month averaged over, say, the last decade. Local anomalies can be much larger than the global anomaly because, as everyone knows, some places are warming more than others.

http://data.giss.nasa.gov/gistemp/maps/
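Remark (1) above is the law of total variance. The sketch below assumes three hypothetical ‘regions’ with equal weights, different means and the same local sigma; `mixture_variance` is an invented helper. Pooling their values (a mixture, which is what a histogram over grid points does) adds the spread of the means to the average local variance, whereas summing one independent value from each region gives a variance equal to the sum of the variances, whatever the means. This is the mechanism behind the caveat in the post that a wider histogram need not mean more local variability.

```python
import random
import statistics

def mixture_variance(means, variances):
    """Variance of an equal-weight mixture: average within-component variance
    plus the spread (population variance) of the component means."""
    return statistics.mean(variances) + statistics.pvariance(means)

rng = random.Random(42)
means, sds = [0.0, 1.0, 2.0], [1.0, 1.0, 1.0]  # hypothetical regions, same local sigma

# Pooling draws from the regions (a mixture): the differing means widen the spread.
pooled = [rng.gauss(m, s) for m, s in zip(means, sds) for _ in range(20000)]
# Adding one draw from each region (a sum of independent variables): means drop out.
sums = [sum(rng.gauss(m, s) for m, s in zip(means, sds)) for _ in range(20000)]

print(f"pooled: {statistics.pvariance(pooled):.2f} "
      f"(formula {mixture_variance(means, [s * s for s in sds]):.2f})")
print(f"sum: {statistics.pvariance(sums):.2f} (sum of variances {sum(s * s for s in sds):.2f})")
```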

John @119, the connection to equilibrium statistical mechanics isn’t so opaque I think.

If we forget about enhanced greenhouse forcing (say the climate system has come to equilibrium with respect to enhanced greenhouse forcing), then a local seasonal climate will be characterised by a distribution of states that we might consider the equilibrium (e.g. so many days of such and such a temperature with characteristic minimum and maximum temperatures, some sort of characteristic rainfall and wind patterns etc.). That’s pretty much what climate is at the local scale.

Now make the Earth’s global temperature warmer. This will shift the distribution of states on the local scale to a new equilibrium (i.e. a new climatology).

Of course where this differs from a true Boltzmann relationship is the presence of non-linear effects, thresholds, phase changes and complex second order effects that I referred to in my post. For example, tropical storms (hurricanes) don’t form unless the sea surface temperature reaches a threshold temperature (~26.5 °C according to William Gray), and while the hurricane wind speed increases around 5% for each 1 °C of temperature rise, the destructive power in monetary terms increases with something like the cube of the wind speed (e.g. Emanuel, Nature 436, 686 (2005)).

However, we expect Rabett’s laws of distribution of climate states to apply. Just like the distributions under Boltzmann’s laws, the number of detectable states will increase (increased variability) and states characteristic of higher temperature will take an increasing share of the distribution as the temperature rises. That’s certainly what one expects in the majority of local climatologies in a warming world, although of course the local response to global warming will be larger in some areas than in others, etc.
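The hurricane example shows how a modest change in one quantity becomes a larger change in impacts. The sketch below uses the numbers quoted in the comment (about 5% more wind speed per 1 °C, damage roughly proportional to wind speed cubed). Compounding the 5% per degree is an added assumption, `relative_damage` is an invented helper, and the 26.5 °C formation threshold is not modelled.

```python
def relative_damage(warming_c, wind_gain_per_c=0.05, exponent=3):
    """Damage relative to today if peak wind rises ~5% per degree (compounded,
    an assumption) and damage scales as wind speed to the given power."""
    wind_ratio = (1.0 + wind_gain_per_c) ** warming_c
    return wind_ratio ** exponent

for dt in (1, 2, 3):
    print(f"+{dt} C: wind x{1.05 ** dt:.3f}, damage x{relative_damage(dt):.3f}")
```

With these assumptions, 1 °C of warming gives about 16% more damage for only 5% more wind.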

Mike P, I don’t think you’ve described what you are trying to think about.

Your air conditioner is bringing energy in from outside the room, and dumping heat outside. Try doing this without a heat pump, just adding energy to the system.

If we had a way of dumping the excess heat, we wouldn’t have the climate problem.

Oh well, put up something on Eli’s Laws (with Hank’s codicil) on Rabett Run, but pretty much the same as what Chris said in 117 and 124

Re:Increasing energy implying increasing variability

I see that my attempt to put in greater than and less than signs mangled my post. Let me try again.

Consider a system having a phase transition at a temperature Tc, initially at temperature T less than Tc, and I increase the temperature toward Tc. I will observe fluctuations which are correlated over larger and larger regions of space and time, and increased variability. But this will also occur if I begin at T greater than Tc and _decrease_ the temperature toward Tc.

I am not implying that the global climate system may be close to a phase transition, but as we see, large parts of the Arctic Ocean are evolving toward seasonal ice free state.

sidd

John E. Pearson: On Vancouver Island, a 10 degree increase in temperature is astounding, but in Phoenix it’s a yawner. Since Vancouver Islanders often live without owning AC, such a change is more important than it would be in Phoenix.

Phoenix has just concluded a heat wave that set records for consecutive days above 110F and, perhaps more importantly, for nights not falling below 90F. Some local produce truck markets have suspended operations due to the impact on crops and won’t be operating again this year. The heat was a distinct hazard or even a showstopper on construction sites and at other places where people must work outdoors.

Temperatures were about 10F above normal during this event.

Before anybody begins yapping about specific attribution (sophisticated meteorologists tell us that such record-breaking sequences are perfectly in keeping with an unchanging climate), I mention this as an example counter to Jim’s implication that living in a place with a torrid climate toughens the local culture. I’d offer that while that may be true, there’s also precious little headroom available.

#129–

Yes, one of the “non-linearities” we need to consider is the capacity of large mammals to lose heat at sufficient rates.

125 Hank, I guess I don’t understand the constraints of the puzzle you posed. I thought that your challenge was to add energy to a system, and cause the temperature of some sub-part system to decrease spontaneously. Heat pumps do operate spontaneously, if you have a high temperature reservoir and a low temperature reservoir, so I don’t know why you would exclude them from the solution to your puzzle, but I’ll try again without using a heat pump.

My system is a balloon, a pulley, a rope, a rock, and the atmosphere. The balloon starts on the ground at neutral buoyancy. I raise the rock (adding energy to the system) and tie it to the rope. The rock then falls back to Earth, lifting the balloon. The balloon expands as it rises, losing energy by doing work on the atmosphere, and cooling adiabatically.
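For a rough sense of how much the rising balloon cools, the dry-adiabatic (Poisson) relation T2 = T1 (p2/p1)^(R/c_p) can be evaluated directly. A minimal sketch, assuming the gas inside behaves like dry air (R/c_p of about 0.286) and using hypothetical starting conditions of 288 K at 1000 hPa:

```python
def adiabatic_temperature(t1_kelvin: float, p1_hpa: float, p2_hpa: float,
                          kappa: float = 0.286) -> float:
    """Temperature after a reversible adiabatic pressure change.

    Uses Poisson's relation T2 = T1 * (p2 / p1) ** kappa, where
    kappa = R / c_p (about 0.286 for dry air; an assumption here).
    """
    return t1_kelvin * (p2_hpa / p1_hpa) ** kappa


# Hypothetical example: the balloon rises from 1000 hPa to 900 hPa,
# very roughly the lowest kilometre of the atmosphere.
t_start = 288.0
t_end = adiabatic_temperature(t_start, 1000.0, 900.0)
print(f"{t_start:.1f} K -> {t_end:.1f} K (cooling of {t_start - t_end:.1f} K)")
```

A gas with a different R/c_p (helium, for instance) would cool by a different amount, so the kappa argument is left adjustable.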

I suppose there are much simpler solutions to the problem I’ve attempted to address, which makes me think that I still haven’t understood what the question is.

This doesn’t seem to be related to the question of how to refrigerate the Earth as a whole. There is a low temperature reservoir available (outer space), but the problem, or so it seems to me, is that our thermal contact with it is poor, being essentially limited to radiation from the upper atmosphere. So what we need to do is to add giant heat sinks to the Earth, yes?

But this seems distant from the original topic of whether or not increasing the energy in the atmosphere leads to wider (or even counter-varying) variations in the local weather, which only requires energy transport within the system.

> add giant heat sinks to the Earth

Problem solved, then.

dbostrom 129 attributed Jim Larson’s post 122 to me

Mr. Mike P. writes on the 22nd of August, 2012 at 2:43 PM:

“My system is a balloon, a pulley, a rope, a rock, and the atmosphere.”

I am sorry, but as a sometime fizicist, I must say this:

Wot, no banana, no monkey ?

sidd

Chris in 124 went back to the specific case of “climate states” but Eli’s original phrasing was not climate-specific and it is the general claim that I have trouble with, not the specific case of climate. In any event I don’t want to be guilty of hijacking the thread any further off-topic.

Mike P.: So what we need to do is to add giant heat sinks to the Earth, yes?

Well, we already have a giant heat sink connecting Earth to space, a free gift, but we’re busily degrading its efficiency.

“I’m too warm because I’m wearing a sweater. I’ll put on another sweater, then call in a ventilation contractor to install air conditioning. While they’re doing their work and taking my money, I’ll continue bundling up with more sweaters.”

Not to pick on Mike P., but that’s a totally crazy path to take, unless we’re prepared to give up on ending our relationship with hydrocarbon slaves.

dbostrom “…living in a place with a torrid climate toughens the local culture. I’d offer that while that may be true, there’s also precious little headroom available.”

Absolutely. When we had our “once in 3000 years” heatwave a few years ago, it was at the same time as our major arts festival. Normally, thousands of people wander and bustle around night and day to various events and installations, skipping around all the crowded pavement cafes.

After a few days (we’re quite accustomed to a few days here and there of 38C+, but in summer not autumn), the whole city became like a ghost town on minimum speed. No building or other outside workers, lots of outdoor events cancelled. When it got to a fortnight, it was like the place had switched off. And people died.

Reading the paper, it is hard to find within it the three points that Eli gives in #101. There is a QED point in the post-publication discussion that sounds a little like Eli’s first point, but neither of the other two points seems to be touched on.

I would say that the chief point of the paper is:

“The climate dice are now loaded to a degree that a perceptive person old enough to remember the climate of 1951–1980 should recognize the existence of climate change, especially in summer.”

What we experience directly as weather is now also a direct experience of climate change when we undergo heat anomalies. The variability that used to disguise climate change is now making it apparent.

See discussion at Open Mind starting here.

When looking at PDFs that seem more-or-less normal, it would be really nice to compute the mean, S.D., skew and kurtosis, and maybe do a normality test.

I.e., the first 4 moments.

As noted in the post, there may well not be enough data for these to be significant, but when they are not shown in a little table, people sometimes comment on whether or not a curve is skewed or has more heavily weighted tails.

An author might explicitly say: there is not enough data to say anything meaningful about skew or tail-weighting, but if people are going to discuss those anyway, it seems helpful to compute the 3rd and 4th moments along with the first two, rather than leaving it to eyeballs.
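Such a "little table" is cheap to produce. A minimal sketch in plain Python, assuming the input is a flat list of normalized anomalies; the normality check used here is the Jarque-Bera test (one choice among several), whose statistic is approximately chi-squared with 2 degrees of freedom under normality. Like most such tests it assumes independent samples, which neighbouring grid points are not, so its p-value would be optimistic for real gridded data.

```python
import math
import random


def moments_summary(values):
    """Mean, standard deviation, skewness, excess kurtosis, and a
    Jarque-Bera normality test for a sample of anomalies."""
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]
    # Population (divide-by-n) central moments; adequate for large samples.
    m2 = sum(d ** 2 for d in deviations) / n
    m3 = sum(d ** 3 for d in deviations) / n
    m4 = sum(d ** 4 for d in deviations) / n
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    # Jarque-Bera statistic; a chi-squared variable with 2 degrees of
    # freedom has survival function exp(-x / 2), giving the p-value.
    jb = n / 6.0 * (skew ** 2 + excess_kurtosis ** 2 / 4.0)
    return {
        "mean": mean,
        "sd": math.sqrt(m2),
        "skewness": skew,
        "excess_kurtosis": excess_kurtosis,
        "jarque_bera": jb,
        "p_value": math.exp(-jb / 2.0),
    }


# Usage on a synthetic Gaussian sample (a stand-in for real anomalies).
random.seed(1)
sample = [random.gauss(0.0, 1.0) for _ in range(5000)]
summary = moments_summary(sample)
for name, value in summary.items():
    print(f"{name:>16}: {value:.4f}")
```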

Thank you, John Mashey, for the pointer -into- the long Tamino thread. It stays interesting for quite a while after that point (with a few excursions), and seems to reach a bottom line where MT comments and Tamino replies.

My brief excerpt of that:

_______________________________

MT: … This all said, would you agree that none of it matters in terms of the lesson for policy?

T: [Response: Yes.]

MT: With respect to baseline statistics, severe hot outliers are increasing to an impressive extent. That’s what matters no matter how you slice it. It’s important not to confuse an academically interesting disagreement with one that has practical policy implications.

T: [Response: I agree that pretty much any method will be prone to confusing trend and variation. Still I think it’s an issue worthy of closer inspection — as an academically interesting problem. From a practical policy viewpoint, any way you slice it severe hot outliers are increasing.]

————end excerpt———-

140: This is not a mere academic challenge. It is important to quantify the level to which the current weather has been influenced by climate change. Policy requires some level of agreement on the science. You can’t expect a group of policy makers (roughly half of whom don’t believe in anthropogenic warming no matter what the science says) to make policy if an iron clad case hasn’t been made that we’re seeing the effects of climate change now. For every op-ed piece Hansen writes Lindzen writes one saying the opposite.

Jim: For every op-ed piece Hansen writes Lindzen writes one saying the opposite.

We desperately need for Lindzen to be authoritatively contextualized, pronounced upon. Yes, there would be a hue and cry but that clamor would necessarily put Lindzen’s activities under the spotlight. “Why are they picking on poor Lindzen? Let’s take a look.” No pain, no gain.

Isn’t it remarkable that Hansen writes an op-ed with an apparent peripheral glitch poking out of a bulky and dire implication and the glitch becoming the topic of the day, while Lindzen can claim in his respective op-ed that there’s no recent statistical anomaly in record temperatures and get away with it without a peep?

An error versus… what? An oversight? Abject ignorance while speaking as an authority? Worse?

Enough of wrong with no opportunity costs, already. Great research done 40 years ago isn’t a free pass to waltz around the world changing governments in pursuit of romantic economic fantasies.

Lindzen is the Moby-Dick of this fiasco, drives some of us crazy.

Another example of the noise vs. real change dilemma is ascribing extreme events to human-caused climate change. If tropical storm Isaac does turn into a hurricane disrupting the Republican National Convention, some may see some irony in the party with the strongest climate change denial credentials having their convention disrupted, but I doubt it will do any good, and I wouldn’t wish a storm big enough to matter on the people of Florida. Anyway these guys deny lots of other well-established science, so a monster storm wouldn’t do it.

142 dbostrom called me Jim and said: “Isn’t it remarkable that Hansen writes an op-ed with an apparent peripheral glitch poking out of a bulky and dire implication and the glitch becoming the topic of the day”

I believe what you are calling a “peripheral glitch” is the artifactual broadening of the anomaly distribution which Tamino suggests is due to spatial variance.

Hansen said in the opening paragraph of the first section of the Discussion, “Principal Findings”: “Seasonal-mean temperature anomalies have changed dramatically in the past three decades, especially in summer. The probability distribution for temperature anomalies has shifted more than one standard deviation toward higher values. In addition, the distribution has broadened, the shift being greater at the high temperature tail of the distribution.”

By my reading this is dead center. If it is really self-evident that the temperature extremes have gotten enormously hotter then there ought to be a valid analysis indicating that.

>> John E Pearson says: …

>> 140: This is not a mere academic challenge….

>> Policy requires … an iron clad case ….

Sorry, John, but you’re expressing hopelessness about politics there, which is not the same thing as assessing the question Tamino and MT are talking about.

The question Tamino raised is, as he says, an interesting academic one, which Hansen may want to think hard about while continuing to revise the paper — remember it’s a draft paper for comment. The thread at Tamino’s is comments on the draft. This is how science works.

When you say policy requires iron clad science papers, you’re dismissing the use of statistics — which is how the deniers work, they insist on the impossible.

It’s an interesting academic question Tamino raised over there — a question that doesn’t affect the implications of Hansen’s paper for policy, for any values of policy that rely on sensible consideration of the real world.

Yes, today, in the USA, policy decisions aren’t being made. Period.

No “iron clad” presentation is going to change the denial.

Work to get the science and statistics done right, including uncertainty.

Work on the politics where that work can be productive.

We need better politicians, not “iron clad” science.

John @ 144

I don’t see anything in either Hansen’s PNAS paper or discussion paper that accords with your assertion about temperature extremes:

“By my reading this is dead center. If it is really self-evident that the temperature extremes have gotten enormously hotter then there ought to be a valid analysis indicating that.”

Hansen doesn’t say/show that “temperature extremes have gotten enormously hotter”. He shows that hot/very hot temperatures (> 3 sigma) constitute a much higher proportion of the temperature distribution, and that the spatial extent of hot/very hot periods has considerably increased.

But I don’t see that this interpretation requires that temperature extremes have gotten hotter at all (let alone “enormously hotter”). For example, since the temperature anomalies used in the analyses are local seasonal averages, then an increase in the value of a temperature anomaly might arise simply from a shift in the local temperature distribution. One could model an increase in a local summer mean from about 21.3 °C (in 1951, say) to about 24.7 °C (in 2011) by a shift in the seasonal mix of 18 °C cool days and 28 °C hot days, from a cool-to-hot ratio of 2 in 1951 to 0.5 in 2011. There is no increase in the maximum temperature at all, but a large effect on the anomaly.
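The arithmetic of this toy model is easy to check. A minimal sketch, treating the season as consisting only of the hypothetical 18 °C and 28 °C days from the example; the two seasonal means come out at 64/3 (about 21.3 °C) and 74/3 (about 24.7 °C), while the hottest day stays at 28 °C.

```python
def seasonal_mean(cool_c: float, hot_c: float, cool_to_hot_ratio: float) -> float:
    """Mean of a season made only of cool days and hot days, given the
    ratio of cool days to hot days (the two-temperature toy model)."""
    cool_fraction = cool_to_hot_ratio / (1.0 + cool_to_hot_ratio)
    return cool_fraction * cool_c + (1.0 - cool_fraction) * hot_c


mean_1951 = seasonal_mean(18.0, 28.0, 2.0)   # 2 cool days per hot day
mean_2011 = seasonal_mean(18.0, 28.0, 0.5)   # 1 cool day per 2 hot days
hottest_day = max(18.0, 28.0)                # the same in both seasons
print(f"1951: {mean_1951:.2f} C, 2011: {mean_2011:.2f} C, max: {hottest_day} C")
```

The seasonal mean, and hence the anomaly, rises by about 3.3 °C purely through the change in the mix of days.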

Of course temperature extremes have gotten hotter as we can observe in the temperature records. But the point is that the analysis doesn’t indicate “temperature extremes have gotten enormously hotter” at all, and the “valid analysis” that indicates that temperature extremes have gotten a bit hotter are readily apparent simply by inspection of local temperature series.

The point about increased variability is a rather minor one I would have thought. I suspect that the increased variability relates to the global (or hemispheric) scale and not necessarily the local scale as others have indicated (including the top article).

In 144 I used the phrase “temperature extremes have gotten enormously hotter” which I meant as a paraphrase of Tamino who wrote: “any way you slice it severe hot outliers are increasing.” I submit that my phrase was not a severe distortion of Tamino’s meaning.

Everyone seems to claim that hot outliers are increasing and that this is self-evident. I believe it might be so, but I’d like to see a published analysis.

Chris wrote “The point about increased variability is a rather minor one I would have thought.”

I think that a result presented in the 3rd sentence in the first paragraph of a section entitled “Principal Findings” is being presented as a principal finding of the manuscript in question.

Chris wrote “then an increase in the value of a temperature anomaly might arise simply from a shift in the local temperature distribution.”

I agree. It might. I suggested that in 123. I don’t know that anyone has done such an analysis. What seems reasonably clear to me (based on Tamino’s analysis and the discussion) is that Hansen’s analysis generates spurious variance. You cannot use the spurious variance to attribute hot outliers to a changed distribution.

You can play around with these maps (http://data.giss.nasa.gov/gistemp/maps/) to get some idea of where the local mean is higher, but you quickly get to the point where “playing around” is inadequate.

“the “valid analysis” that indicates that temperature extremes have gotten a bit hotter are readily apparent simply by inspection of local temperature series.”

You can’t tell anything about the statistics of hot outliers by simple inspection of local time series. Statistical analysis is required. I once flipped 10 consecutive heads. My immediate response was that there was something wrong with the coin. 90 flips later the coin looked fair. If you can demonstrate that the outliers imply that the distribution is altered beyond a simple shift of the mean in a statistically meaningful way you should write it up and publish it.
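The coin anecdote can be made quantitative. Ten heads on ten specified flips has probability 2^-10 (about 0.001), but the chance of such a run appearing somewhere in a longer sequence is far larger, which is one reason eyeballing a series for "unusual" stretches is unreliable. A minimal sketch, assuming a fair coin and independent flips, that computes run probabilities with a small Markov-chain recursion over the length of the current streak:

```python
def prob_run_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability of at least one run of k or more consecutive heads
    in n independent flips of a coin with P(heads) = p."""
    # state[j] = probability that no run of length k has occurred yet and
    # the current streak of trailing heads has length j (0 <= j < k).
    state = [1.0] + [0.0] * (k - 1)
    for _ in range(n):
        new_state = [0.0] * k
        for j, prob in enumerate(state):
            new_state[0] += prob * (1.0 - p)   # tails resets the streak
            if j + 1 < k:
                new_state[j + 1] += prob * p   # heads extends the streak
            # if j + 1 == k, a run of k heads occurred; that mass leaves the chain
        state = new_state
    return 1.0 - sum(state)


first_ten_heads = 0.5 ** 10                  # 10 heads on the first 10 flips
run_somewhere = prob_run_at_least(10, 100)   # such a run anywhere in 100 flips
print(f"first 10 flips: {first_ten_heads:.5f}; anywhere in 100: {run_somewhere:.4f}")
```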

To quote Isaac Held again (cited earlier), consider two descriptions that sound very different:

“‘the number of very hot days increases dramatically’

[or]

‘the number of very hot days does not change but they are on average δT warmer’”

[and then he writes]

“My gut reactions to these two descriptions of the same physical situation are rather different. The goal has to be to relate these changes to impacts (things we care about) to decide what our level of concern should be, rather than relying on these emotional reactions to the way we phrase things.”

Those are my brief snips from the original at his blog:

http://www.gfdl.noaa.gov/blog/isaac-held/2012/08/04/30-extremes/#more-4862
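Both of Held's descriptions can be computed from the same distribution. A minimal sketch, assuming a Gaussian that keeps its shape and simply shifts by a hypothetical 1 standard deviation: counted against a fixed 3-sigma threshold, the hot tail grows roughly seventeenfold, while the hottest 1% of days (a fixed count by construction) are warmer by exactly the shift.

```python
from statistics import NormalDist

baseline = NormalDist(mu=0.0, sigma=1.0)   # normalized baseline climate
shift = 1.0                                # hypothetical warming, in sigma units
warmed = NormalDist(mu=shift, sigma=1.0)   # same shape, shifted mean

threshold = 3.0                            # "3 sigma" relative to the baseline
frac_before = 1.0 - baseline.cdf(threshold)
frac_after = 1.0 - warmed.cdf(threshold)

# Description 1: the number of days beyond a fixed threshold.
ratio = frac_after / frac_before

# Description 2: the hottest 1% of days are warmer by the size of the shift.
q_before = baseline.inv_cdf(0.99)
q_after = warmed.inv_cdf(0.99)

print(f"fraction > 3 sigma: {frac_before:.5f} -> {frac_after:.5f} (x{ratio:.1f})")
print(f"99th percentile: {q_before:.3f} -> {q_after:.3f} "
      f"(warmer by {q_after - q_before:.3f})")
```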

So — what does the data show?

147 John said, “If you can demonstrate that the outliers imply that the distribution is altered beyond a simple shift of the mean in a statistically meaningful way you should write it up and publish it.”

The shapes of the distribution curves have changed each decade as the mean has increased, so Hansen’s paper does show exactly that. Now, as I suggested earlier, subtracting the median change (local, regional, global?) before assigning the sigma rating would explicitly show the result you’re asking for. I hope some kind statistician does such an analysis.
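The suggested check can be sketched as follows, under stated assumptions: the anomalies are already in units of the baseline standard deviation, `fraction_beyond` is a hypothetical helper, and the "median change" is taken over the whole sample. For a distribution that has only shifted, removing the median brings the fraction above 3 sigma back to its baseline value (about 0.0013); any excess remaining after the subtraction would point to a change in shape.

```python
from statistics import NormalDist, median


def fraction_beyond(anomalies, threshold=3.0, remove_median_shift=False):
    """Fraction of normalized anomalies (in baseline-sigma units) above a
    threshold, optionally after subtracting the sample's median shift."""
    offset = median(anomalies) if remove_median_shift else 0.0
    return sum(1 for a in anomalies if a - offset > threshold) / len(anomalies)


# Hypothetical decade: the baseline distribution shifted by +1 sigma with no
# change in shape, represented by evenly spaced quantiles (no random noise).
n = 10_000
std_normal = NormalDist()
decade = [1.0 + std_normal.inv_cdf((i + 0.5) / n) for i in range(n)]

raw = fraction_beyond(decade)                                      # about P(Z > 2)
shift_removed = fraction_beyond(decade, remove_median_shift=True)  # about P(Z > 3)
print(f"raw: {raw:.4f}, after removing median shift: {shift_removed:.4f}")
```

Subtracting a single global median would not remove broadening caused by local shifts that differ from place to place; that would need the local or regional variant the comment mentions.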

149 Jim said: “The shapes of the distribution curves have changed each decade as the mean has increased, so Hansen’s paper does show exactly that.”

You need to read Tamino’s analysis here, along with the comments, particularly John N-G’s. I think they made a convincing argument that Hansen’s methodology produces spurious variance.

http://tamino.wordpress.com/2012/07/23/temperature-variability-part-2/

I think that ultimately this comes down to whether the temperatures at different sites are independent or not. The central limit theorem says that the distribution of the sum of a bunch of independent random variables tends to be Gaussian, with variance equal to the sum of the individual variances (for the mean of n such variables, that sum divided by n²).

This is a fairly general result, but you can’t relax the independence condition. My interpretation of Tamino’s discussion (particularly the 3rd and 4th figures here: http://tamino.wordpress.com/2012/07/21/increased-variability ) is that Hansen lumped together dependent variables and treated them as independent. I don’t think this means Hansen’s paper shouldn’t have been published, but it does mean that the method he used to ascertain variance generates spurious variance, so that aspect of Hansen’s analysis is flawed.
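The spatial-variance explanation of the broadening can be illustrated with a toy simulation. A minimal sketch under stated assumptions: every hypothetical grid point has exactly unit local variability, but its mean shift differs from place to place (a spread of 0.8 sigma, an arbitrary choice). By the law of total variance, pooling all points into one histogram gives a standard deviation near sqrt(1 + 0.8²), about 1.28, even though no location became more variable. This illustrates the mechanism only; it does not reproduce Tamino's or Hansen's actual calculations.

```python
import random
import statistics

random.seed(42)

n_points = 2_000        # hypothetical grid points
n_seasons = 30          # seasons per grid point in the later period
shift_spread = 0.8      # spread of local warming, in local-sigma units (assumed)

pooled = []
local_sds = []
for _ in range(n_points):
    # Each grid point keeps unit local variability; only its mean shifts,
    # by an amount that differs from place to place.
    local_shift = 1.0 + random.gauss(0.0, shift_spread)
    seasons = [local_shift + random.gauss(0.0, 1.0) for _ in range(n_seasons)]
    local_sds.append(statistics.stdev(seasons))
    pooled.extend(seasons)

mean_local_sd = statistics.fmean(local_sds)   # close to 1: local variability unchanged
pooled_sd = statistics.pstdev(pooled)         # close to sqrt(1 + shift_spread**2)
print(f"mean local sd: {mean_local_sd:.3f}, pooled sd: {pooled_sd:.3f}")
```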