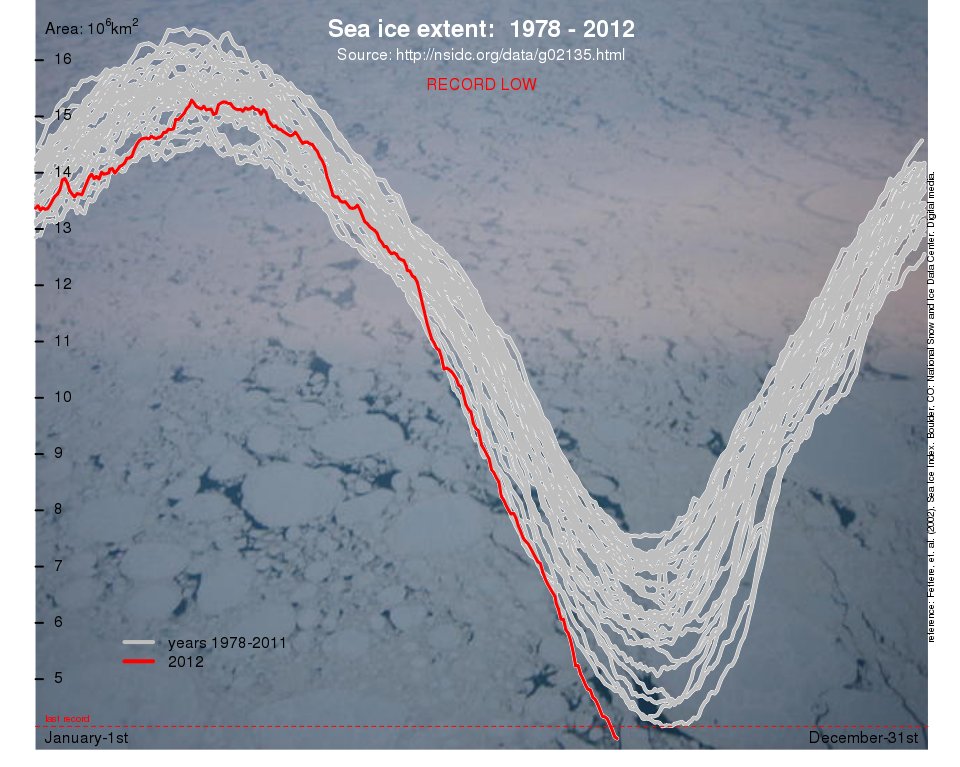

We noted earlier that the Arctic sea-ice extent was approaching a record minimum. The record has now been broken, almost a month before the annual minimum is usually observed, and there is probably more melting in store before the 2012 minimum is reached and the autumn sea-ice starts to form.

The figure shows the seasonal cycle of Arctic sea-ice extent. The x-axis marks the time of year, from January 1st to December 31st. The grey curves show the sea-ice extent for each previous year, and the red curve shows the extent for 2012.

(The figure was plotted with an R script that reads the data directly from NSIDC; the R environment is available from CRAN.)
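The R script itself isn't reproduced here. What follows is only a minimal Python sketch of the "record minimum" logic behind the figure. It assumes the daily extents have already been downloaded from NSIDC and parsed into (year, day-of-year, extent) tuples. The function names are hypothetical, and the sample rows are toy values, not NSIDC data.

```python
def annual_minima(records):
    """Map each year to (lowest extent, day of year) from (year, doy, extent) rows."""
    minima = {}
    for year, doy, extent in records:
        if year not in minima or extent < minima[year][0]:
            minima[year] = (extent, doy)
    return minima


def is_new_record(minima, year):
    """True if `year` has a lower minimum than every earlier year in `minima`."""
    earlier = [ext for y, (ext, _) in minima.items() if y < year]
    return bool(earlier) and minima[year][0] < min(earlier)


# Toy rows (year, day of year, extent in million km^2), not real NSIDC values.
rows = [(2010, 200, 7.0), (2010, 260, 4.9), (2011, 250, 4.6), (2012, 230, 4.4)]
mins = annual_minima(rows)
print(is_new_record(mins, 2012))
```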

UPDATE on the update: The National Snow and Ice Data Center announced today (August 27th, 2012) that the 2007 record has now also been broken under their more conservative 5-day running average criterion. They also note that “The six lowest ice extents in the satellite record have occurred in the last six years (2007 to 2012).”
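To see why a running-average criterion is more conservative than a single-day one, consider this minimal Python sketch. It assumes a simple window of 5 consecutive daily values. The comment doesn't say exactly how NSIDC positions the window, and the function names and toy numbers are hypothetical. A single low day does not break the record unless the whole 5-day mean falls below it.

```python
def running_mean(values, window=5):
    """Mean of each run of `window` consecutive daily values."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [sum(values[k:k + window]) / window
            for k in range(len(values) - window + 1)]


def record_broken(daily_extents, previous_record, window=5):
    """True if the lowest `window`-day mean falls below the previous record."""
    return min(running_mean(daily_extents, window)) < previous_record


# Toy daily extents (million km^2): one low day, but not a low 5-day average.
daily = [4.3, 4.3, 4.0, 4.3, 4.3]
print(min(daily) < 4.17, record_broken(daily, 4.17))
```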

MARodger @290 — Thank you.

DP @298: The oceans continue to absorb CO2 until equilibrium is reached, and that equilibrium will be well above pre-industrial levels.

DP @298.

You presumably know that about 57% of the CO2 we emit into the atmosphere is effectively absorbed by the biosphere & oceans. This occurs under a regime of on-going & rising CO2 emissions.

This does not mean the system is in equilibrium at the end of each year. As I understand it, the oceans are the main reason it is not. If CO2 emissions ceased, the oceans would continue to draw CO2 out of the air for centuries. However, that uptake shrinks year on year as CO2 accumulates in the oceans (and also as the ocean warms, although warming at depth can lag behind surface temperature rises). As a result, the process all but stops after a couple of centuries, with about 20% of the CO2 left in the atmosphere. That remainder is eventually removed from the oceans (and thus the atmosphere) by calcium made available from the weathering of rocks, a process that would take thousands (some say hundreds of thousands) of years.
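Here is a minimal Python sketch of how a pulse of CO2 decays in this picture. It uses the impulse-response fit that the IDL script later in this thread labels "Kharecha and Hansen eqn 1". The coefficients are copied from that script, and the function name is hypothetical. The fit levels off at a constant 18% rather than zero. That constant roughly stands in for the slow weathering tail described above.

```python
import math


def airborne_fraction(years):
    """Fraction of a CO2 pulse still in the air after `years`,
    using the five-term fit quoted as Kharecha and Hansen eqn 1."""
    return (18.0
            + 14.0 * math.exp(-years / 420.0)
            + 18.0 * math.exp(-years / 70.0)
            + 24.0 * math.exp(-years / 21.0)
            + 26.0 * math.exp(-years / 3.4)) / 100.0


for t in (0, 10, 100, 200, 1000):
    print(t, round(airborne_fraction(t), 2))
```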

298 DP asks, “Why would co2 levels drop if emissions stopped? Why does nature seek to reduce concentrations above pre-industrial levels?”

Rough numbers with errors and omissions, meant to give a visual, not a robust answer:

The ratio of atmospheric carbon to total atmospheric, biosphere and ocean carbon is about 1/10. So if you spike atmospheric carbon, the eventual result is that about 1/10th of the initial spike remains in the atmosphere, as carbon flows into the ocean and biosphere. (390 ppm − 280 ppm) / 10 = 11 ppm, so the planet would tend to settle back to ~291 ppm if we disappeared and no further feedback occurred.
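The back-of-envelope partition fits in a one-line function. This sketch assumes, as the comment does, that the atmosphere holds about 1/10 of the exchangeable carbon. It ignores ocean chemistry and feedbacks, and the function name is hypothetical.

```python
def settled_concentration(current_ppm, preindustrial_ppm, total_to_atmos=10):
    """Long-run CO2 if emissions stopped and the excess spread across all
    reservoirs in proportion to their carbon content (no chemistry or feedbacks)."""
    excess = current_ppm - preindustrial_ppm
    return preindustrial_ppm + excess / total_to_atmos


print(settled_concentration(390, 280))  # 291.0
```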

Currently 1/2 of human emissions are absorbed, so the rate of decline would be roughly the same as the current increase, at least at first. It took us 130 years to get here, so by 2150 we’d be somewhere around 300 or 325ppm. Not a permanent solution, but this implies that cutting emissions in half would stabilize current concentrations.
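The "cut emissions in half" argument amounts to a simple mass balance. This toy sketch assumes the natural sink currently removes half of this year's emissions. Crucially, it also assumes that the sink responds to the existing excess concentration rather than to how much we emit in a given year. The emission value `E0` is a hypothetical round number, not a measured one.

```python
def yearly_change(emissions, sink):
    """Change in atmospheric CO2 (ppm/yr): emissions minus natural uptake."""
    return emissions - sink


# Hypothetical round numbers in ppm-equivalent per year.
E0 = 4.0          # current emissions (illustrative only)
sink = 0.5 * E0   # assumed fixed by today's excess, not by this year's emissions

print(yearly_change(E0, sink))        # 2.0: concentration keeps rising
print(yearly_change(0.5 * E0, sink))  # 0.0: roughly stable
print(yearly_change(0.0, sink))       # -2.0: falls at about today's rate of rise
```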

299 Toni,

The area affected by an icebreaker is teensy compared to the whole ocean, but…

Another way to look at it is an icebreaker stacks ice in piles while leaving open water. Just like snow plows increase the longevity of snow, icebreakers should increase ice. Plus, in winter open water is grand for ice formation. Combine the two and shouldn’t we take non-fossil icebreakers and plow the Arctic Ocean all winter?

On a similar level of possibility, we could put a net across the Fram Strait…

Lots of things should be forbidden by international treaty. But the issue of icebreakers will soon be moot.

Toni M @299: I am sure the effect is small in comparison to the additional warmth.

“I have been scanning the scientific press and see no reference to this mechanical effect, which may have contributed to accelerating the extent and depth of melt rates, by increasing the surface area exposed to thermal effects of solar radiation.”

Maybe because there isn’t a lot of “solar radiation” in the Arctic in the winter? Nor is there [yet] much melting going on in winter, especially in the Russian Arctic. Nor is there even much icebreaker traffic there in winter; the icebreakers primarily facilitate tourist and cargo shipping during the summer season.

Russia has had a fleet of nuclear-powered icebreakers for a while now. Adding another, larger one isn’t likely to accelerate the Arctic melt, and they probably wouldn’t take kindly to the suggestion that use of their Northern Sea Route be outlawed.

#299–Toni, please try to do some math first. Even at the current record low minimum, the ice extent is about 3.5 million square kilometers, roughly twice the size of Alaska. In that area, there are fewer than a dozen ships.

If you imagine a dozen snowmobiles driving around two Alaskas for a couple of months, how much do you think they will mark the snow? How often will they meet one another, or cross one another’s tracks? What percentage of the surface will they leave unvisited?

Hard to say? Well, let’s make some assumptions and run some numbers. Let’s say they travel 200 kilometers a day. For simplicity, let’s also say that territory within 1/2 a kilometer of a track is ‘covered,’ so each machine leaves a 1-km-wide track of ‘covered’ snow behind it. Then:

12 snowmobiles x 60 days x 200 km/day x 1 km = 144,000 square kilometers, less than a 20th of the total. (And icebreakers don’t make 200 km a day through solid ice, and don’t break kilometer-wide swathes.)
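The snowmobile estimate is an upper bound, because overlapping tracks would only reduce it. Here is a sketch using the comment's own numbers; the function name is hypothetical.

```python
def covered_area_km2(n_vehicles, days, km_per_day, swath_km=1.0):
    """Upper bound on area swept by vehicles, ignoring overlapping tracks."""
    return n_vehicles * days * km_per_day * swath_km


covered = covered_area_km2(12, 60, 200)
ice_extent = 3.5e6                     # km^2, the figure quoted in the comment
print(covered, covered / ice_extent)   # fraction is about 0.041, roughly 1/24
```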

Neven has a new post on models: http://neven1.typepad.com/blog/2012/09/models-are-improving-but-can-they-catch-up.html#more

Perk (288),

That lag can be used to set a limit on how high temperatures can rise. In my 265, I realize I forgot to integrate eqn. 1 of Kharecha and Hansen when writing my comments. So killing emissions today leads to about 0.65 C of final warming over the pre-industrial climate, not 0.3 C. But that is still lower than the 0.8 C we have experienced, so cooling must be the final result of ending emissions now. The eqn was integrated in the code to calculate the concentration.

How is the lag useful? We can say that we are about 30 years behind where we should be in warming with a 3 C per doubling fast feedback climate sensitivity. And, because the system is driven, that is a lower limit on the lag time for a straight step-function response. It is not just the concentration from 1982 that has got us to the warming we expect for 1982 today. We have a higher concentration, which drives warming faster, so the observed lag must be shorter than its physical value. Since 30 years is a lower limit on the lag, all we need to ask is at what point the lagging curve crosses the instantaneous temperature curve and has to turn over to cooling.

If each year the temperature moves one thirtieth of the way between the lagged curve and the instantaneous curve, the maximum temperature is 1.1 C above the pre-industrial climate. So, while at 400 ppm we’d expect 1.5 C of warming, we can’t get there if we end emissions now. We can’t get above 1.1 C, or some lower value, before having to start cooling. For a longer lag of 60 years we stop at 1.0 C, and for 120 years at 0.96 C. The dashed, dot-dashed and triple-dot-dashed lines in the first panel produced by the script below show this. Longer lags give lower maximum temperatures.

```idl
a=findgen(1000) ;year since 1850
b=fltarr(1000) ;BAU concentration profile
b(0)=1
for i=1,999 do b(i)=b(i-1)*1.02 ;2 percent growth
;plot,b(0:150)*4.36+285.,/ynoz ; 370 ppm year 2000
c=(18.+14.*exp(-a/420.)+18.*exp(-a/70.)+24.*exp(-a/21.)+26.*exp(-a/3.4))/100. ;Kharecha and Hansen eqn 1
e=fltarr(1000) ;annual emissions
for i=1,999 do e(i)=b(i)-b(i-1)
d=fltarr(1000) ; calculated concentration
t=400.-285. ;target concentration
f=0 ;flag to end BAU growth
for i=1,499 do begin & d(i:999)=d(i:999)+e(i)*c(0:999-i)*4.36*2. & if d(i) gt t then begin & e(i+1:999)=0 & f=1 & h=i & endif else if f eq 1 then e(i+1:999)=0 & endfor ;factor of two reproduces BAU growth
!p.multi=[0,2,2]
g=alog(d/285.+1)/alog(2.)*3; instantaneous temperature curve.
gg=g*0.8/1.5
for i=h, 998 do gg(i+1)=gg(i)+(g(i)-gg(i))/30. ; 30 year lag curve
gg6=g*0.8/1.5
for i=h, 998 do gg6(i+1)=gg6(i)+(g(i)-gg6(i))/60. ; 60 year lag curve
gg12=g*0.8/1.5
for i=h, 998 do gg12(i+1)=gg12(i)+(g(i)-gg12(i))/120. ; 120 year lag curve
plot,a+1850.,alog(d/285.+1)/alog(2.)*3.,/ynoz,xtit='Year',ytit='Final warming (C)',charsize=1.5 ; Temperature assuming 3 C per doubling of carbon dioxide concentration
oplot,a+1850.,gg,linesty=2
oplot,a+1850.,gg6,linesty=3
oplot,a+1850.,gg12,linesty=4
plot,a(0:499)+1850,e(0:499),xtit='year',ytit='carbon dioxide emissions (AU)',charsize=1.5 ;emission profile in arbitrary units
```
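For readers without IDL, here is a rough Python port of the same steps. It generates BAU emissions at 2%/yr, convolves them with eqn 1, cuts emissions once the excess passes 400 − 285 ppm, and then relaxes toward the instantaneous curve with 30-, 60- and 120-year lags. It is a sketch, not the commenter's code. Plotting is dropped, and the IDL's `else` branch is omitted because emissions are already zero once the flag is set. Python also uses double precision, so results need not match the IDL to the last digit.

```python
import math

N = 1000                  # years since 1850 (a in the IDL)
PREINDUSTRIAL = 285.0     # ppm

# Kharecha and Hansen eqn 1: airborne fraction of a pulse after a years (c)
KERNEL = [(18 + 14 * math.exp(-a / 420) + 18 * math.exp(-a / 70)
           + 24 * math.exp(-a / 21) + 26 * math.exp(-a / 3.4)) / 100
          for a in range(N)]


def concentration(target_ppm=400.0, scale=4.36 * 2.0, last_year=499):
    """Convolve 2 %/yr BAU emissions with KERNEL, zeroing later emissions once
    the excess passes target_ppm - PREINDUSTRIAL. Returns (excess, stop year)."""
    bau = [1.02 ** i for i in range(N)]                          # b
    emis = [0.0] + [bau[i] - bau[i - 1] for i in range(1, N)]    # e
    excess = [0.0] * N                                           # d
    stop = None                                                  # h
    for i in range(1, last_year + 1):
        for k in range(i, N):
            excess[k] += emis[i] * KERNEL[k - i] * scale
        if excess[i] > target_ppm - PREINDUSTRIAL:
            emis[i + 1:] = [0.0] * (N - i - 1)   # end all later emissions
            stop = i   # as in the IDL, updated every year still above target
    return excess, stop


def lagged(instant, start, lag_years, ratio=0.8 / 1.5):
    """From `start`, close 1/lag_years of the gap to `instant` each year,
    beginning from ratio * instant (gg, gg6, gg12 in the IDL)."""
    out = [ratio * x for x in instant]
    for i in range(start, N - 1):
        out[i + 1] = out[i] + (instant[i] - out[i]) / lag_years
    return out


excess, stop = concentration()
# Instantaneous warming for 3 C per doubling (g in the IDL)
instant = [3.0 * math.log2(d / PREINDUSTRIAL + 1.0) for d in excess]
for lag in (30, 60, 120):
    print(lag, "yr lag: peak", round(max(lagged(instant, stop, lag)), 2), "C")
```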

CD #309: Interesting clarification; however, it still raises the question of how FF emissions would stop. Maybe enough renewables, Ecat cold fusion or hot fusion, or economic collapse. Something else to consider is that once we stop spewing aerosols in such enormous tonnage, we lose global dimming and there’s more warming too.

In any case, I popped on here tonight to post this. Talking about the state of the climate, let’s get a geographic understanding of how much smaller 2012’s ice extent is than 2007’s record.

Here’s a graph at NSIDC:

http://nsidc.org/data/seaice_index/images/daily_images/N_stddev_timeseri…

Looking at the numbers at left, it appears the extent right now is about 3370k square kilometers. 2007’s record was 4170k, for a difference of ~800,000 sq. kilometers.

Here’s a link showing the size of different US states in miles and kilometers: http://www.enchantedlearning.com/usa/states/area.shtml

The reduced ice extent for 2012 has so far fallen below the 2007 record by an area approximately equal to Texas (695k sq. km) plus Kentucky (104k sq. km)!
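The comparison is plain subtraction. Here it is with the figures quoted above, which were read off the NSIDC graph and the state-area table at the time, in thousands of km².

```python
# Areas in thousands of km^2, as quoted in the comment.
extent_2012 = 3370
record_2007 = 4170
texas, kentucky = 695, 104

shortfall = record_2007 - extent_2012
print(shortfall, texas + kentucky)   # 800 799
```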

Chris Dudley #309,

The paper by Friedlingstein and Solomon, PNAS, 2005, lays it out in this excerpt from their Abstract:

“We estimate that the last and the current generation contributed approximately two thirds of the present day CO2-induced warming. Because of the long time scale required for removal of CO2 from the atmosphere as well as the time delays characteristic of physical responses of the climate system, global mean temperatures are expected to increase by several tenths of a degree for at least the next 20 years even if CO2 emissions were immediately cut to zero; that is, there is a commitment to additional CO2-induced warming even in the absence of emissions.”

A 21st record low Arctic sea ice extent was set on the 16th of September. The 17th saw a rise in extent. The ten-year average tracks converge on October 28th. At present, the recovery rate needed to reach that ten-year average extent by October 28th is a factor of 1.6 lower than the strongest seven-day recovery rate seen during that period. If the current extent still holds on October 2nd, then the most rapid recovery seen in the last ten years would need to be sustained for 26 days to converge on the 28th. It is interesting to consider how such a rapid, sustained release of latent heat might affect weather patterns. Would rain be made more likely relative to snow in central Canada or Siberia during this type of event? Examples in 2007 and 2011 might be worth a look.
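The comment doesn't give the underlying extents or rates, so this sketch only shows the arithmetic behind "a factor of 1.6" and "26 days". The extents and the fastest-rate value are placeholders, NOT NSIDC data, and the function names are hypothetical.

```python
from datetime import date


def required_rate(current, target, start, end):
    """Mean daily gain needed to go from `current` on `start` to `target` on `end`."""
    return (target - current) / (end - start).days


def headroom(required, fastest_observed):
    """How many times the fastest observed recovery rate exceeds the required one."""
    return fastest_observed / required


# Placeholder extents (million km^2) and rate (million km^2/day); NOT NSIDC data.
need = required_rate(4.0, 6.6, date(2012, 10, 2), date(2012, 10, 28))
print((date(2012, 10, 28) - date(2012, 10, 2)).days, need, headroom(need, 0.16))
```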

Perk (#310) and Superman1 (#311),

My script gives 0.3 C of further warming for a 30 year lag, with the turn around to cooling starting at about 2050. That is fairly close agreement with what Superman1 cites. I suspect that reducing sulphate aerosols cuts the lag time, leading to a slightly higher rise in temperature and a quicker turn around to cooling. I’m not entirely happy with my lag implementation. But I do think that it captures the crucial aspect that the target is the instantaneous fast feedback climate sensitivity. There is no inertia, in the sense of the oceans continuing to warm out of habit (momentum) without an energy imbalance. The instantaneous curve is an attractor that pulls against honey-thick drag (lag). There is no coasting. So, once that instantaneous curve indicates that the energy imbalance has reversed owing to reduced carbon dioxide concentration, cooling starts, with lag (drag) now applied in the opposite direction.
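The "attractor with drag, no coasting" picture is a first-order lag. This minimal sketch uses toy assumptions: a made-up target curve that rises for 50 years and then declines slowly, and a hypothetical function name `relax`. The response keeps rising only while it is below the target. It turns to cooling as soon as it overtakes the falling target, with no momentum carrying it further.

```python
def relax(target, start_value, lag_years):
    """First-order lag: each year close 1/lag_years of the remaining gap.
    There is no momentum term, so the response turns around as soon as it
    overtakes a falling target."""
    out = [start_value]
    for g in target[:-1]:
        out.append(out[-1] + (g - out[-1]) / lag_years)
    return out


# Toy target (C): rises to 1.5 over 50 years, then falls 0.002 C per year.
target = [1.5 * min(t, 50) / 50 - 0.002 * max(t - 50, 0) for t in range(300)]
resp = relax(target, 0.0, 30)
peak = max(range(len(resp)), key=resp.__getitem__)
print(peak, round(resp[peak], 2), round(target[peak], 2))
```

The peak lands where the lagged response meets the falling target, and it stays below the target's own maximum.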

This is really a mathematical investigation. Policy-wise, I think Hansen is correct that we will control climate through setting the concentration of carbon dioxide. Since cutting emissions to zero now hits his target concentration of 350 ppm around 2150, further emissions are required to maintain that target and not sink to 330 ppm. Substantial emissions are needed to achieve and maintain the popular 450 ppm target. So, cutting emissions to zero is not a realistic policy. If Hansen’s target is correct (and he is usually correct) there may be some scramble to soak up past emissions if it takes us too long to realize that. But, even so, we would be using the concentration of carbon dioxide as a tool. It is in our nature to make and use tools and this looks to be the most powerful we have ever made. Wielding it is irresistible really.

If the world is not warming as is suggested here http://elizaphanian.blogspot.co.uk/2012/09/those-odd-and-pesky-little-facts.html why is the sea ice melting? I’m not a sceptic but where did this data come from? I cannot decide whether the site Sam Norton links to is sceptic or not.

[Response: It is a standard cherry pick – show only one record (which is obsolete) and pick the start point to give a misleading impression. The woodfortrees.com website allows you to play around with this kind of stuff. The point being that it is almost always possible to insist that the world is cooling when it is not, just by virtue of the weather noise in the system. This is a good demonstration of the tactic: http://skepticalscience.com/still-going-down-the-up-escalator.html – gavin]

> elizaphanian

Sad to see that guy, whose blog is mostly about his personal faith and belief and reliance – mostly religious — has been relying on the “climate4you” site’s bogus claims.

If he’s open to looking at facts, he’ll be changing his mind.

How about a time lapse animation for 2012’s Arctic ice melt?

http://phys.org/news/2012-09-manhattan-sized-ice-island-sea.html

It has a sliding bar at the bottom showing the months rolling by, and when it gets to July & August it melts incredibly fast! It also looks like what’s left is pretty wispy.

Chris Dudley @ 313 wrote:

“Since cutting emissions to zero now hits his target concentration of 350 ppm around 2150, further emissions are required to maintain that target and not sink to 330 ppm.”

I have never gotten the impression that 350 is a goal we should *not* get _below_. My impression is that it is the *maximum* level of global atmospheric concentration we can hit and have some reasonable assurance that really bad things won’t happen. But even that is probably too high. Since during all of human civilization the level was never above 300ppm iirc, getting below that would seem to me to be the really rational goal to try to reach. If you have a clear passage that makes it clear that Hansen doesn’t think we should go below 350, please link it.

Nuclear warheads are also a “tool,” but I, for one, am glad that we have managed to resist using them in war since WWII. Sometimes resisting seemingly irresistible desires for control is the wisest route. At one point, Lovelock said that putting humans in charge of tending the global climate would be like putting a goat in charge of tending a garden–an apt comparison.

The Hansen et al. paper expressing 350 ppm stated something to the effect of a maximum of 350 ppm and quite likely lower than that.

My own estimate is that even 300 ppm is too high.

318 David B said, “My own estimate is that even 300 ppm is too high.”

You had to know you’d be asked for clarification when you made that statement, so you’re probably well-prepared to answer my question: Say what??

wili (#317),

You may be right that 300 ppm is better for now, and bringing emissions to zero and then capturing some past emissions would be needed to get there. I doubt that 300 is good over the next 10,000 years; we would want to adjust upward to avoid glaciation over the Northern Hemisphere. As I recall, that was the point Hansen was getting at. We won’t allow an ice age to happen since we can control that.

Still, as a practical matter, a numeric target is going to be what we aim at and we may go along at 350 ppm for a bit before deciding on a new one and taking steps to control at the new level.

If there is a god of goats, may it teach the goats some self-control so that we might learn from the example.

As I recall, we did use a couple of nuclear bombs to end a war and then lots of nuclear tests to warn off an enemy, so we did wield them. Climate control will require much more cooperation than that though. Perhaps that would be a good thing.

Jim Larsen @319: First of all, any such reduction is going to take a long time, so there is plenty of time to research the preferred level. My preference is to actually go enough below the preferred level to regrow ice sheets and glaciers to the extent practicable. We have good reason to suspect that such regrowth would occur at around 270 ppm. Then increase the CO2 concentration just enough to avoid a descent into a glacial (with a small safety margin). I suspect that about 285 ppm should do it for the next millennium or so.

After that the orbital forcing begins to decline, so possibly lowering the CO2 concentration might be wise. In about 100,000 years the orbital forcing will make a serious and sustained stab at a glacial. That can be avoided by again raising the CO2 concentration a little; I think Jim Hansen has suggested one modest CFC factory instead.

David B, please answer on the Unforced V thread.

This article on arctic resources has some depth to it.

http://www.nytimes.com/2012/09/19/science/earth/arctic-resources-exposed-by-warming-set-off-competition.html

Thanks for that response, Gavin. I found http://skepticalscience.com/ very helpful; of course, as a scientist you always want the biggest data set you can get…

CD @ #320 wrote: “If there is a god of goats, may it teach the goats some self-control so that we might learn from the example.”

LOL. Nice, thanks.

So if we can stop beating each other up and get back to the science–this is the situation as I understand it: the large number of models that were used to predict future Arctic sea ice melt for the last IPCC report turned out to have predicted a vastly slower rate of melt than what we have actually seen in the succeeding years.

For the work of these people to be of use at all, it seems to me that the important thing now is to figure out what factor or factors they overlooked or gave too little weight to. That way, future models for both poles might be more accurate and yield more understanding.

Any ideas?

It sounds like melting from below played a very large role in this season’s loss of volume. Was that figured in as a possibility? Are there good ideas about why and how exactly that happened? Did that warm water come from outside the Arctic? From super-heated surface waters flowing under the ice sheet? From deeper warmer and saltier layers of Arctic water getting brought up to the surface by whatever mechanism?

Are there feedbacks that weren’t adequately accounted for?

Did black carbon play a larger role than expected?

Were the various effects of increased water vapor (both as a GHG itself, and as a source of snow that might insulate thin sea ice in early winter and keep it from getting thicker…) under-appreciated?…

And while I’m barraging you good people with annoying questions: What in sam hill is going on with sea surface temperature in the Beaufort Sea, especially near the Alaska-Canada border?

http://polar.ncep.noaa.gov/sst/ophi/color_anomaly_NPS_ophi0.png

http://polar.ncep.noaa.gov/sst/ophi/color_sst_NPS_ophi0.png

(The opening note above at 326 was a reference to the mudslinging going on in the open thread. We’ve been quite polite over here, I think–maybe too quiet??)

Regarding 309: is this concept of the accelerated curve proven? It seems that the warming we have experienced is consistent with emissions up to approx. 1982. Surely the warming to 1.5C is already in the system regardless of future concentrations.

http://scienceblogs.com/stoat/2012/09/18/wadhams-on-seaice-again/

RE: Wili, 326,327: Too quiet. I like that kind of probing attitude.

In regards to your questions, here are my thoughts & questions. Most of us have noticed that persistent open patch of water not too far from the North Pole. I’ve been wondering for a while now if that’s due to methane emissions. It would be interesting to find out the water depth in that area relative to the areas around it. Is it shallower? Also, are the high water temperatures near the coast in the satellite photos, i.e. in shallow water, also due to methane emissions? Could methane be the missing (feedback) factor in the inaccurate melt projections?

Perk Earl: I think you are searching for explanations that aren’t needed. The ice is very thin this year. A large area of first year ice had drifted into the area where the hole appeared.

No further explanation is called for. This year, virtually no first year ice has survived.

326 wili said, “For the work of these people to be of use at all,”

The amygdala is a region of the brain that is great for running away from potential predators. Make a mistake by running when you didn’t have to, and you’re out some calories. The converse mistake was fatal. Unfortunately, the amygdala is our enemy when logic is required. Once activated, it is designed to override all other input. One good example of the amygdala in action is Wayne D. Mention the n-word and he’s 100% running from the MegaLion. Just guessing here, but I sense some amygdala in your post.

Models and scientists’ interpretations, which are not based solely on models by any stretch, are useful now, and will be useful in the future even if the amazingly hard task of building a virtual planet isn’t complete before the minimum hits 1 or 2. Ice free-ish isn’t game over, but game beginning. Remember when I did the 2012 ice area compared to the last decade as weighted by potential solar insolation? 2012 is average. You don’t start getting into serious albedo trouble until after the minimum gets way low. But ice-free-ish in September means we’ll need seriously accurate info on how quickly open water will spread towards June.

And if you’re going to blame anybody, blame computer makers. With a few orders of magnitude more processing power, scientists could make those models dance.

There is a claim now that the melt season is over: http://www.nytimes.com/2012/09/20/science/earth/arctic-sea-ice-stops-melting-but-new-record-low-is-set.html

On models,

Several times I’ve read articles which say that scientists believe the inaccuracies in their models seem to arise from the size of their “boxes” – I have no idea how big they are, but if a box 100m across is needed to ‘capture’ an event, then if your boxes are 100km across, you’ll miss it, and there is no way to legitimately capture it using a bottom-up philosophy, as models do.

So the options might be to wait for computers to get fast enough to allow for the capture of crucial events, or to use real world data to apply fudges which approximate those events. When informing policy-makers and the public, the latter seems smarter to me, with appropriate disclosure, of course.
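Here is a rule-of-thumb sketch of why "wait for faster computers" is a long wait. It assumes cost scales with the number of horizontal cells. It further assumes that, for an explicit scheme limited by the CFL condition, cost also scales with the number of time steps. Vertical levels and everything else are ignored, and the function name is hypothetical.

```python
def refinement_cost(coarse_m, fine_m, horizontal_dims=2, cfl_limited=True):
    """Rough relative cost of shrinking grid spacing from coarse_m to fine_m.

    Cell count grows as ratio**horizontal_dims; if the time step is tied to
    the grid spacing (CFL condition, explicit scheme), the number of steps
    grows by one more factor of ratio. Vertical levels are ignored.
    """
    ratio = coarse_m / fine_m
    exponent = horizontal_dims + (1 if cfl_limited else 0)
    return ratio ** exponent


print(refinement_cost(100_000, 100))                     # 100 km boxes -> 100 m boxes
print(refinement_cost(100_000, 100, cfl_limited=False))  # cell count alone
```

Under these assumptions, going from 100 km to 100 m boxes costs about a billion times more, which is why the comment's second option (calibrated approximations) is usually what modellers reach for.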

wili @326,

This paper was included in SkepticalScience’s round-up of new papers. I haven’t got round to reading it yet but it should throw at least some light on why modelling Arctic Ice levels is proving so difficult. (And semantics can easily add to all the difficulty so I will no more than mention that your use of the word ‘predicted’ @326 may not be entirely exact.)

‘Limitations of a coupled regional climate model in the reproduction of the observed Arctic sea-ice retreat’. Dorn et al 2012

Over at NSIDC they’ve announced that the melt has probably maxed out on 16 September at 3.41 million square km, breaking the previous 2007 record of 4.17 million square km. That’s an 18% drop, or just over half the 1979-2000 average minimum extent. Here’s the most recent graph.
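Here is a quick check of the 18% figure, using the two extents quoted above.

```python
record_2007 = 4.17   # million km^2
min_2012 = 3.41      # million km^2, NSIDC's provisional 16 September figure
drop = (record_2007 - min_2012) / record_2007
print(f"{drop:.1%}")  # 18.2%
```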

wili, to be fair, the best recent example of my amygdala taking control was my hyperbole about EVs spewing more carbon than fossil cars. It’s not an insult to say somebody has something which everybody has. It’s knowledge folks can use to override natural errors.

PE, much as I am concerned about methane, I don’t quite see how it could explain the bottom melt I was talking about. But maybe I’m missing something.

Thanks for the link to the Stoat Blog, Hank. In the back-of-envelope calculations they do there, they don’t seem to consider anything but the effect from the change of albedo. Won’t a whole new open ocean also generate a lot of new water vapor, itself a GHG? Won’t that increase the effect on GW of a newly ice-free (or nearly so) Arctic Ocean? Or did they just leave that out because it’s in the nature of BoE calculations to just consider the most basic factor?

[Response: Changes in water vapour in the Arctic go with the near-constant relative humidity, and occur mainly due to advection from lower latitudes, not local evaporation (except near the surface). The enhancement in LW absorption is certainly part of the feedback but there was a paper – I think by Jennifer Kaye et al on what makes the polar signal amplify relative to the globe – that found that the water vapour effect was not important – but I might not be remembering it completely. – gavin]

Jim Larsen #335,

“Several times I’ve read articles which say that scientists believe the inaccuracies in their models seem to arise from the size of their “boxes” – I have no idea how big they are, but if a box 100m across is needed to ‘capture’ an event, then if your boxes are 100km across, you’ll miss it, and there is no way to legitimately capture it using a bottom-up philosophy, as models do.

So the options might be to wait for computers to get fast enough to allow for the capture of crucial events, or to use real world data to apply fudges which approximate those events. When informing policy-makers and the public, the latter seems smarter to me, with appropriate disclosure, of course.”

This is a long-standing issue that is by no means confined to climate modeling. Many real-world complex systems involve phenomena that operate on both long and short time scales, and on both long and short spatial scales, and all of them have to be incorporated. Rather than wait for computer power to increase sufficiently, one needs to be clever about choosing the appropriate grids to capture such phenomena. Grids that can adapt and place higher resolution in regions of large temporal and spatial gradients are preferable, albeit more complex.
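Here is a toy one-dimensional illustration of the adaptive-grid idea. It starts from a coarse grid and repeatedly splits only the intervals where the field changes sharply. This is a generic refinement sketch under made-up assumptions (a tanh front, a jump threshold, and the hypothetical function `refine`). It is not how any particular climate model does it.

```python
import math


def refine(xs, f, threshold, max_passes=5):
    """Insert a midpoint into every interval where f jumps by more than
    `threshold`, repeating until nothing changes or max_passes is reached."""
    for _ in range(max_passes):
        new = [xs[0]]
        changed = False
        for a, b in zip(xs, xs[1:]):
            if abs(f(b) - f(a)) > threshold:
                new.append((a + b) / 2)
                changed = True
            new.append(b)
        xs = new
        if not changed:
            break
    return xs


def front(x):
    """Toy field with a sharp front at x = 0.5."""
    return math.tanh((x - 0.5) / 0.01)


grid = refine([i / 10 for i in range(11)], front, threshold=0.2)
spacings = [b - a for a, b in zip(grid, grid[1:])]
print(len(grid), min(spacings))
```

Points cluster around x = 0.5 while the flat regions keep the original 0.1 spacing. That is the trade-off the comment describes: extra resolution only where the gradients demand it, at the price of more complex bookkeeping.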

Equally important, incorporating critical feedbacks and capturing their synergy is mandatory for credible future projections. I don’t see the major models as having this capability yet, and their projections should be viewed as extremely conservative. My preference is to examine all the observations and trends we are seeing, and to think hard about the physics underlying these trends and where the feedback mechanisms could take us. Then we should use our best judgment and the precautionary principle for future planning.

> the Stoat Blog

Oh.

You don’t know William Connolley’s writing, available both here at RC and there at his blog.

Do the reading. Asking FAQs here about information available there shows you didn’t yet read what’s already written there.

Take the time and effort, catch up, and after doing that ask thoughtful questions — questions that show you’ve already read what’s already been typed in and made available.

Seriously. Take a week off from posting pointers to stuff you worry about. Instead, read in depth there while the melt season ends. Watch the discussions among those who placed bets on it, as they go over everything about how they’ve made their published guesses year after year. See how it’s been done.

Google’s site search tool wants to be your friend.

I am disturbed by the constant demeaning of Maslowski and Wadhams on this site. It almost seems like the reaction of some little high school clique; they’re not in our club so let’s talk bad about them.

Wadhams has been doing polar field research since 1969. Maslowski and the Naval Postgraduate School have a regional arctic climate model that takes into account many of the variables missing from GCMs – and at far better resolution.

I really don’t know why there is always some snide remark at hand whenever these two names are mentioned. I would really like to see it stop.

The thread Pondering the Path To an Open Polar Sea in http://dotearth.blogs.nytimes.com/ contains a short slide show well worth the time of anyone interested in Arctic sea ice.