In the Northern Hemisphere, the late 20th / early 21st century has been the hottest time period in the last 400 years at very high confidence, and likely in the last 1000 – 2000 years (or more). It has been unclear whether this is also true in the Southern Hemisphere. Three studies out this week shed considerable new light on this question. This post provides just brief summaries; we’ll have more to say about these studies in the coming weeks.

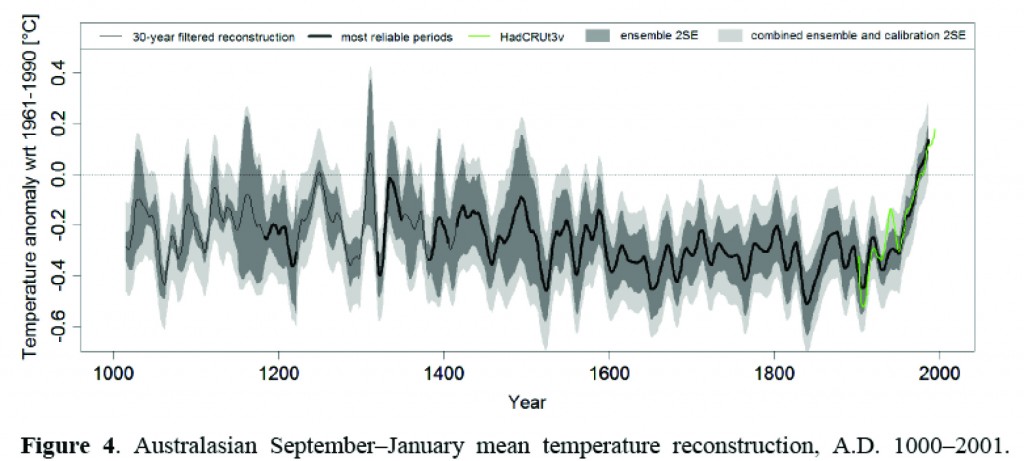

First, a study by Gergis et al., in the Journal of Climate [Update: this paper has been put on hold – see comments] uses a proxy network from the Australasian region to reconstruct temperature over the last millennium, and finds what can only be described as an Australian hockey stick. They use an ensemble of 3000 different reconstructions, using different methods and different subsets of the proxy network. Worth noting is that while some tree rings are used (which can’t be avoided, as there simply aren’t any other data for some time periods), the reconstruction relies equally on coral records, which are not subject to the same potential (though often-overstated) issues at low frequencies. The conclusion reached is that summer temperatures in the post-1950 period were warmer than anything else in the last 1000 years at high confidence, and in the last ~400 years at very high confidence.

Gergis et al. Figure 4, showing Australian mean temperatures over the last millennium, with 95% confidence intervals.

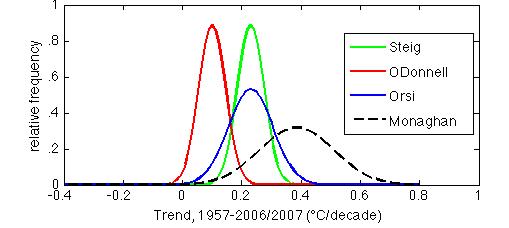

Second, Orsi et al., writing in Geophysical Research Letters, use borehole temperature measurements from the WAIS Divide site in central West Antarctica, a region where the magnitude of recent temperature trends has been the subject of considerable controversy. The results show that the mean warming over the last 50 years has been 0.23°C/decade. This result is in essentially perfect agreement with that of Steig et al. (2009) and in reasonable agreement with Monaghan (whose reconstruction for nearby Byrd Station was used in Schneider et al., 2012). The result is totally incompatible (at >80% confidence) with that of O'Donnell et al. (2010).

Probability histograms of temperature trends for central West Antarctica (Byrd Station [80°S, 120°W; Monaghan] and WAIS Divide [79.5°S, 112°W; Orsi, Steig, O’Donnell]), using published means and uncertainties. Note that the histograms are normalized to have equal areas; hence the greater height where the published uncertainties are smaller.
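To make the idea of "compatible" trend estimates concrete, here is a minimal sketch, assuming each published trend is an independent Gaussian with the stated mean and standard deviation. It computes the probability that one trend exceeds the other. This is one simple way to frame such a comparison, not necessarily how the confidence figures in this post were computed; the function name and the numbers in the example call are hypothetical.

```python
import math

def prob_exceeds(mean1, sd1, mean2, sd2):
    """P(T1 > T2) for independent estimates T1 ~ N(mean1, sd1**2), T2 ~ N(mean2, sd2**2)."""
    # The difference T1 - T2 is Gaussian with mean (mean1 - mean2)
    # and standard deviation sqrt(sd1**2 + sd2**2).
    z = (mean1 - mean2) / math.hypot(sd1, sd2)
    # Standard normal CDF evaluated at z
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Hypothetical trends in degC/decade (illustrative values, not the published ones)
p = prob_exceeds(0.23, 0.09, 0.08, 0.07)
print(f"P(first trend > second trend) = {p:.2f}")
```

A result near 0.5 means the two estimates are indistinguishable; values close to 0 or 1 mean they are hard to reconcile.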

This result shouldn’t really surprise anyone: we have previously noted the incompatibility of O’Donnell et al. with independent data. What is surprising, however, is that Orsi et al. find that warming in central West Antarctica has actually accelerated in the last 20 years, to about 0.8°C/decade. This is considerably greater than reported in most previous work (though it does agree well with the reconstruction for Byrd, which is based entirely on weather station data). Although twenty years is a short period, the 1987-2007 trend is statistically significant (at p<0.1), putting West Antarctica definitively among the fastest-warming areas of the Southern Hemisphere, and warming more rapidly than the Antarctic Peninsula over the same period. We and others have shown (e.g. Ding et al., 2011, doi:10.1038/ngeo1129) that the rapid warming of West Antarctica is intimately tied to the remarkable changes that have also occurred in the tropics in the last two decades. Note that the Orsi et al. paper actually focuses very little on the recent temperature rise; it is mostly about the "Little Ice Age-like" signal of temperature in West Antarctica. Also, these results cannot address the question of whether the recent warming is exceptional over the long term: borehole temperatures are highly smoothed by diffusion, and the farther back in time, the greater the smoothing. We'll discuss both these aspects of the Orsi et al. study at greater length in a future post.

Last but not least, a new paper by Zagorodnov et al. (doi:10.5194/tcd-5-3053-2011) in The Cryosphere uses temperature measurements from two new boreholes on the Antarctic Peninsula to show that the 1990s (the paper states “1995 ± 5 years”) were the warmest decade of at least the last 70 years.
This is not at all a surprising result from the Peninsula: it was already well known that the Peninsula has been warming rapidly, but these new results add considerable confidence to the assumption that the warming is not just a recent event. Note that the “last 70 years” conclusion reflects the relatively shallow depth of the boreholes, and the fact that diffusive damping of the temperature signal means that one cannot say anything about high-frequency variability before that. Nor can one infer that it was warmer than present more than 70 years ago. In the one and only century-long meteorological record from the region, at Orcadas in the South Orkney Islands just north of the Antarctic Peninsula, warming has been pretty much monotonic since the 1950s, and the period from 1903 to 1950 was cooler than anything after about 1970 (see e.g. Zazulie et al., 2010). Whether recent warming on the Peninsula is exceptional over a longer time frame will have to await new data from ice cores.
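The diffusive smoothing of borehole temperatures can be illustrated with the textbook solution for a sinusoidal surface temperature in a uniform, purely conducting half-space, where the amplitude decays with depth z as exp(-z/d), with d = sqrt(κP/π) for period P. This is a minimal sketch only. The thermal diffusivity κ ≈ 1.1 × 10⁻⁶ m²/s is an assumed value for solid ice (near-surface firn conducts less), ice flow and accumulation are ignored, and neither Orsi et al. nor Zagorodnov et al. necessarily use this formula. The function names are hypothetical.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600

def damping_depth(period_years, kappa=1.1e-6):
    """e-folding depth (m) of a sinusoidal surface temperature signal in a
    uniform, purely conducting half-space: d = sqrt(kappa * P / pi).
    kappa is an ASSUMED diffusivity for ice, in m^2/s."""
    return math.sqrt(kappa * period_years * SECONDS_PER_YEAR / math.pi)

def amplitude_ratio(depth_m, period_years, kappa=1.1e-6):
    """Fraction of the surface amplitude that survives at depth_m."""
    return math.exp(-depth_m / damping_depth(period_years, kappa))

for period in (1, 10, 100):
    print(f"period {period:>3} yr: e-folding depth {damping_depth(period):5.1f} m, "
          f"amplitude left at 50 m = {amplitude_ratio(50.0, period):.3f}")
```

Because d grows only as the square root of the period, decadal wiggles are largely erased within a few tens of metres while centennial signals persist deeper, which is why borehole profiles speak mainly to low-frequency change.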

References

- J. Gergis, R. Neukom, S.J. Phipps, A.J.E. Gallant, D.J. Karoly, and . , "Evidence of unusual late 20th century warming from an Australasian temperature reconstruction spanning the last millennium", Journal of Climate, pp. 120518103842003, 2012. http://dx.doi.org/10.1175/JCLI-D-11-00649.1

- A.J. Orsi, B.D. Cornuelle, and J.P. Severinghaus, "Little Ice Age cold interval in West Antarctica: Evidence from borehole temperature at the West Antarctic Ice Sheet (WAIS) Divide", Geophysical Research Letters, vol. 39, 2012. http://dx.doi.org/10.1029/2012GL051260

- E.J. Steig, D.P. Schneider, S.D. Rutherford, M.E. Mann, J.C. Comiso, and D.T. Shindell, "Warming of the Antarctic ice-sheet surface since the 1957 International Geophysical Year", Nature, vol. 457, pp. 459-462, 2009. http://dx.doi.org/10.1038/nature07669

- D.P. Schneider, C. Deser, and Y. Okumura, "An assessment and interpretation of the observed warming of West Antarctica in the austral spring", Climate Dynamics, vol. 38, pp. 323-347, 2012. http://dx.doi.org/10.1007/s00382-010-0985-x

- R. O’Donnell, N. Lewis, S. McIntyre, and J. Condon, "Improved Methods for PCA-Based Reconstructions: Case Study Using the Steig et al. (2009) Antarctic Temperature Reconstruction", Journal of Climate, vol. 24, pp. 2099-2115, 2011. http://dx.doi.org/10.1175/2010JCLI3656.1

- N. Zazulie, M. Rusticucci, and S. Solomon, "Changes in Climate at High Southern Latitudes: A Unique Daily Record at Orcadas Spanning 1903–2008", Journal of Climate, vol. 23, pp. 189-196, 2010. http://dx.doi.org/10.1175/2009JCLI3074.1

Ray Ladbury @146 — In the strict sense of proof, a hypothesis can only be proven false via deduction from some prior axioms. A hypothesis can be demonstrated to be false in this universe by an appropriate experiment, but that is not a proof sensu stricto.

Could you write a bit more about what you mean by information theoretic methods? These are recent enough that many here on Real Climate would probably appreciate a short description.

toto #144: actually the Gergis et al. paper already contains an example like the one you show, in Figure 2 on page 47. I would like to see that one duplicated using random synthetic proxies only…

I did some numerical experiments of my own and posted the result and the Octave code. It is simply not true that ‘screening’ selects for hockey stick like proxies. Yes, it selects for proxies having the same trend as that screened against (artificial trend line in green, 1900-2000), and the effect of this propagates back to about 1850 due to the assumed autocorrelation; but before that the proxies are unaffected. They are exactly the same as without the trend screening, centering on zero. There is no ‘screening bias’ or ‘screening fallacy’.

Note that in my figure the ‘stick’ of the hockey stick points to the middle of the ‘blade’, i.e., it’s more like a bishop’s staff! That is not at all like real reconstructions (such as Gergis et al. Figure 4 on page 49, or indeed all serious hockey sticks since the dawn of time, like MBH98/99), where the pre-instrumental temperatures average well below the mean of the instrumental period, which is precisely the point.
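The commenter's Octave code is not reproduced here, so what follows is an independent Python sketch of the same kind of experiment under assumed settings, none of which come from the comment: 1000 pure AR(1) red-noise proxies with φ = 0.9 and no temperature signal, a linear ramp over 1900-2000 standing in for the instrumental target, and a hypothetical screening threshold of r > 0.3. If the argument holds, the surviving proxies should average near zero well before the calibration window, and the composite should cross zero near the middle of the window rather than rise from a flat, low shaft.

```python
import numpy as np

def red_noise_proxies(n_proxies, n_years, phi, rng):
    """Pure AR(1) 'proxies' that contain no temperature signal whatsoever."""
    x = np.empty((n_proxies, n_years))
    # Start from the stationary distribution so the first years are not special.
    x[:, 0] = rng.standard_normal(n_proxies) / np.sqrt(1.0 - phi**2)
    for t in range(1, n_years):
        x[:, t] = phi * x[:, t - 1] + rng.standard_normal(n_proxies)
    return x

def screen_and_average(proxies, years, calib_start=1900, r_min=0.3):
    """Keep proxies whose correlation with a linear ramp over the calibration
    window exceeds r_min, and return the average of the survivors."""
    calib = years >= calib_start
    ramp = years[calib] - years[calib].mean()
    seg = proxies[:, calib]
    seg = seg - seg.mean(axis=1, keepdims=True)
    r = seg @ ramp / (np.linalg.norm(seg, axis=1) * np.linalg.norm(ramp))
    keep = r > r_min
    return proxies[keep].mean(axis=0), int(keep.sum())

years = np.arange(1000, 2001)
rng = np.random.default_rng(42)
proxies = red_noise_proxies(1000, years.size, phi=0.9, rng=rng)
composite, n_kept = screen_and_average(proxies, years)

pre_1850 = composite[years < 1850].mean()
late = composite[years >= 1990].mean()
print(f"kept {n_kept} of 1000 noise proxies")
print(f"composite mean before 1850: {pre_1850:+.2f}")
print(f"composite mean 1990-2000:   {late:+.2f}")
```

With φ = 0.9 the influence of screening on earlier years decays roughly as 0.9^k, so it is negligible by about 50 years before 1900, in line with the comment's observation about 1850.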

FWIW, there’s what appears to my untutored eye to be a sadly predictable biased take on this over at DotEarth, where McIntyre and others are holding court about all the nasty science druids:

http://dotearth.blogs.nytimes.com/2012/06/11/australian-warming-hockey-sticks-and-open-review/

Gavin said above “despite personally feeling bad after making a mistake, fixing errors is a big part of making progress”; well said indeed. But Lotharsson, in linking to a recent attack on me by Tim Lambert at Deltoid, which picked up on Tamino’s pointing out an error in my recent paper in TSWJ (also noted here), did not mention that I (1) admitted the error and then (2) explained why I was in error: I overlooked that the independent variable in my Table 1 (radiative forcing by the aggregate of all GHGs) was unlikely to be autocorrelated, as for example CH4 and CO2 are not correlated (see Table 2.1 in AR4 WG1). Tamino did not find that I repeated my error in the many other regressions I reported in my paper and its SI.

More generally, why is it that the expert econometricians here and at Lambert’s and Tamino’s never themselves undertake and report regressions rejecting the null hypothesis that increases in GHGs since 1958 do NOT explain temperature anomalies?

And, AFAIK, nobody here has commented on what seems to me the basic problem with the Gergis et al paper, given its sweeping title,

“Evidence of unusual late 20th century warming from an Australasian temperature reconstruction spanning the last millennium”.

That is its total absence of any proxy records for mainland Australia, as shown in its Fig.1. A more modest title would have been appropriate and would have attracted less attention.

[Response: There are only a few TR records of multi-centennial length on the mainland (Neukom and Gergis, 2012) and, not surprisingly given the climate and topography of Australia, they are apparently not good T proxies. To me, it appears that the authors have a pretty good spatial distribution of proxies across their defined region–Jim]

Further to Susan’s mention of the DotEarth mention of McM, what struck me about Revkin’s piece was his generalization about the improving effects of “blog review” on science, while failing to note that this “blog review” activity is confined almost, if not entirely, to those provinces of investigation that collide with revenue streams and other worldly matters not having to do with science.

As Revkin feels free to make his unsupported claims, I’ll offer that the confusion and retardation of public understanding of science is not worth the apparently sparse benefits of “blog review.”

Scientific progress is pretty much an accidental effect of “blog review,” rather like being “saved” from driving over a cliff by crashing into a telephone pole.

It’s also interesting to note that even as Oransky warningly waves the Dutch finger at Gergis, cautioning about retractions, he uses Wegman as an example without mentioning the silent, post hoc “redo” of one of Wegman’s papers. Revkin doesn’t help his readers with additional information, a spot-on example of what journalists call “phoning it in.”

David Benson,

By information theoretic methods, I mean the methods that allow comparison of models and characterization of the efficiency of models in accommodating new information/data. Hirotugu Akaike’s Information Criterion (AIC) was the first entry in this regard: although most people look on AIC simply as a useful tool, it is actually an (asymptotically) unbiased estimator, up to a constant, of the Kullback-Leibler divergence (the expected logarithm of the ratio between two distributions) between the “real” distribution and the model being examined. Similar quantities approach the problem from a Bayesian viewpoint (e.g. BIC/SIC) or from a more general viewpoint (DIC). All of these quantities are useful for comparing the predictive power of statistical models, but what do they mean? As it turns out, you can look at the behavior of quantities like AIC as measuring not just the goodness of fit of the model, but also how sharply the data define the parameters of the model.
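As a concrete illustration of the criteria just mentioned, here is a minimal sketch comparing polynomial fits of increasing degree to synthetic, truly linear data, assuming Gaussian errors so that the maximized log-likelihood has a closed form. Lower AIC or BIC is preferred. The data, the seed, and the helper `gaussian_aic_bic` are hypothetical.

```python
import numpy as np

def gaussian_aic_bic(y, y_hat, k):
    """AIC and BIC for a least-squares fit with Gaussian errors.
    k counts every estimated parameter, including the error variance."""
    n = y.size
    rss = float(np.sum((y - y_hat) ** 2))
    # Maximized log-likelihood, with sigma^2 estimated as rss / n
    log_lik = -0.5 * n * (np.log(2.0 * np.pi * rss / n) + 1.0)
    aic = 2.0 * k - 2.0 * log_lik
    bic = k * np.log(n) - 2.0 * log_lik
    return aic, bic

rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 50)
y = 1.0 + 2.0 * x + rng.normal(scale=0.3, size=x.size)  # synthetic, truly linear data

scores = {}
for degree in (1, 2, 5):
    y_hat = np.polyval(np.polyfit(x, y, degree), x)
    k = degree + 2  # (degree + 1) coefficients, plus the error variance
    scores[degree] = gaussian_aic_bic(y, y_hat, k)
    aic, bic = scores[degree]
    print(f"degree {degree}: AIC = {aic:7.2f}, BIC = {bic:7.2f}")
```

The two criteria differ only in the penalty per parameter (2 for AIC, ln n for BIC), so BIC penalizes extra parameters more heavily once n exceeds about 7.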

A further step in this direction–which I haven’t seen applied too much yet–is information geometry, in which spaces defined by the parameters of a model have a metric defined by the Fisher Information matrix. This is a very elegant approach, and has some interesting features when you look at different models–e.g. the fact that the exponential distribution is a limiting case of both the Weibull and the Beta distribution means that the parameter spaces of these distributions coincide along a line in their spaces. I’ve used this somewhat in my own research–though I haven’t explicitly used the information geometric point of view as nobody in my field would understand it.
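For the simplest one-parameter case, the exponential distribution with rate λ, the Fisher information (the 1×1 "metric" of information geometry) is 1/λ². A minimal sketch, assuming NumPy, estimates it by Monte Carlo as the mean squared score and compares it with the exact value; the helper name is hypothetical.

```python
import numpy as np

def exponential_score(x, lam):
    """Derivative with respect to lam of log f(x; lam) = log(lam) - lam * x."""
    return 1.0 / lam - x

lam = 2.0
rng = np.random.default_rng(0)
# NumPy parameterizes the exponential by its scale, which is 1 / lam
x = rng.exponential(scale=1.0 / lam, size=200_000)

# Fisher information = expected squared score (the score has mean zero)
fisher_mc = float(np.mean(exponential_score(x, lam) ** 2))
fisher_exact = 1.0 / lam**2
print(f"Monte Carlo: {fisher_mc:.4f}   exact 1/lam^2: {fisher_exact:.4f}")
```

Since the metric here is ds² = dλ²/λ², the information distance between two rates is |ln(λ₂/λ₁)|: doubling the rate covers the same distance whether one starts at 0.1 or at 10.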

As I noted on that Deltoid thread, Tim Curtin has entirely missed the point of my reference to that thread.

DBostrom @~155, thanks.

There is nothing unintentional about the escalating and rather successful effort to derail scientific communication and scientific progress. We are moving from the naming of RealClimate, Mann et al. as charlatans and traitors to actual threats of violence, and I find it disturbing, to put it mildly. Inasmuch as Andy appears to be unable to do as much science as I can in reviewing these claims, and that’s not much, he should acknowledge that he does not know what he does not know, and stop stoking the fires of ignorance and hatred. This is not a high school social and merit is merit. That’s why I persist in a discussion here that is largely above my head (though I still work hard and read almost all of it), in the hope that there is some way to penetrate the fog before it’s way too late.

#159–In a way, the disgusting developments you rightly deplore, Susan, are encouraging: the famous dictum Isaac Asimov put in the mouth of Salvor Hardin, that “Violence is the last refuge of the incompetent,” is fairly intuitive. Most of us know that the loudness of one’s shouts is not correlated positively with the soundness of one’s knowledge–and that in fact the need to scream often screams most loudly of intellectual impotence.

One hopes at least that the recent over-reach of the Heartland Institute will prove to be something of a pattern–or a deterrent.

Susan: Inasmuch as Andy appears to be unable to do as much science as I can in reviewing these claims, and that’s not much, he should acknowledge that he does not know what he does not know, and stop stoking the fires of ignorance and hatred.

Or at least ponder on the meaning of the word “promoter.” Revkin’s not the only journalist who seems to fall prey to the role of being a bucket on a conveyor belt raising what is low to a higher level.

Serving as Steve McIntyre’s volunteer editor to clean up his blog review speech habits and pass McIntyre’s opinions upward after being stripped of revealing context is a form of promotion.

Jim

In post #133, I asked whether you could point out any specific examples of data that had a correlation in the range of p=.05 to .10.

You said: “[Response:Of course…almost every reconstruction study uses a p value in that range. Indeed, point me to one that uses some higher value–Jim]”

But let me rephrase the question, because what I really want to know is whether the data has beat the odds (in support of your “…relationship to local temperature are waaaaaaaaaaaaaaaaaay too strong ….” argument above.)

[Response:My “waaaay too strong” statement addresses strength of relationship, rather than frequency of significant relationship, just to clarify a little, but I’m following.–Jim]

As I understand it, the p value is the probability, assuming the null hypothesis is true, of obtaining a test statistic at least as extreme as the one that was actually observed. So obtaining one result with a p value of .1 out of, say, 10 tree-ring series (10%) could hardly be considered remarkable. Nor could obtaining around 100 correlations out of 1000 with p=.1. I would argue that in those cases, a p=.1 could easily be attributable to random noise.

[Response:Yes, I agree, but with a potentially important caveat depending on exactly what you mean by “random noise”.–Jim]
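A quick way to see the arithmetic in this exchange is to correlate many pure-noise series with one target and count how many pass p < 0.1. This is a minimal sketch, assuming white-noise series of length 100 and using Fisher's z-transform as an approximation to the exact t-test p-value; the helper `corr_p_value` is hypothetical.

```python
import math
import numpy as np

def corr_p_value(r, n):
    """Approximate two-sided p-value for a Pearson correlation r from n pairs,
    using Fisher's z-transform (a large-sample approximation)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2.0))

rng = np.random.default_rng(7)
n_years = 100
target = rng.standard_normal(n_years)      # stand-in "temperature" series (white noise)
n_series = 2000

hits = 0
for _ in range(n_series):
    proxy = rng.standard_normal(n_years)   # pure-noise "proxy", unrelated to the target
    r = np.corrcoef(proxy, target)[0, 1]
    if corr_p_value(r, n_years) < 0.1:
        hits += 1

frac = hits / n_series
print(f"{hits} of {n_series} noise series pass p < 0.1 ({frac:.1%})")
```

With white noise the pass rate sits near the nominal 10%. If both the proxy and the target were strongly autocorrelated, the same naive test would pass noticeably more than 10% of unrelated series, which is one reason the meaning of "random noise" matters.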

So my rephrased question is: Can you point to any specific examples where the data show that much more than 10% of the tree-ring samples matched temperatures with a correlation at p=.1 or better?

Thanks

[Response:Thanks for clarifying, wasn’t clear to me before. Yes, I can point you to such. But have you had a look yourself first?–Jim]

I had hoped that Andy would take as a caveat the fact that Heartland considered him to be sympathetic. It appears that instead he is rushing obliviously on into a status worthy of parody on Comedy Central.

To rise weakly to his defense, I really think Andy desperately wants to believe in the bona fides of all players in the so-called “climate debate”. However, to persist in such a belief in the face of the behavior exhibited by the denialati betrays a naivete so extreme that I just want to reach out and comfort him by inviting him to a p-o-ker game.

David B. Benson wrote: “The overwhelming weight of the evidence establishes beyond a reasonable doubt that humans have added more than enough carbon dioxide to cause the climate to warm.”

I feel like I’m getting pedantic here, but this is an important point about the semantics of effectively communicating the realities of AGW to the general public.

As I understand it, you are arguing that it’s wrong to say that anthropogenic warming has been “proved” — and indeed, wrong to ask whether it’s been “proved” — because, as you wrote earlier, “outside of mathematics (and deductive logic) there are no proofs”.

So it’s interesting that you use the phrase “beyond a reasonable doubt” in suggesting an alternative way of asserting anthropogenic causation — since “beyond a reasonable doubt” is the standard of proof required of the prosecution in a criminal trial.

As it happens, I’ve served on a jury in a criminal trial. When the judge instructed the jury before we went to deliberate, he very clearly and carefully stated that the standard of proof (his word) required for a conviction was “beyond a reasonable doubt” — and explicitly distinguished that from proof “to a mathematical certainty” (his exact words). In effect, he told us “the word ‘proof’ has multiple meanings, and this is the meaning that applies here”.

So, I think your very use of the phrase “beyond a reasonable doubt” serves to point out that there are other “formal” uses of the word “proof” that are different from the use of that word in mathematics and logic, and that in context are entirely legitimate, appropriate, and well-understood.

My point again is this:

When scientists are asked “Has it been proved that humanity’s emissions of CO2 are causing the Earth to heat up?” they have two choices.

They can launch into a lecture about how the one and only “correct” use of the word “proved” is the way it’s used in formal mathematics, and that outside of that context nothing can ever be “proved”. Which is both counterproductive as it leaves the questioner confused and doubtful about the reality of anthropogenic causation, and a semantic fallacy since there are, in fact, other legitimate, formal and well-understood meanings of the word “proof” — in law, for example.

Or, they can recognize that the questioner is using the word “proved” in something much closer to its legal sense than its mathematical sense — that the questioner is a juror and not a mathematician — and simply answer, “Yes, it has been proved beyond a reasonable doubt”.

Susan @ 158

Well said.

As to “…some way to penetrate the fog before it’s way too late” I can only suggest a re-examination of the roles played by deniers and clueless “promoters” (very apt) and how they end up shaping the conversation to either contain or destroy opposition. Distraction, screening, intimidating, bluffing, and perhaps more than anything else wasting time, ends up defining what takes place for those trapped in the denier arena –out of all proportion to actual numbers or scientific acumen.

And always, always, always ask who exactly is your audience? Take it to them.

“[Response:Thanks for clarifying, wasn’t clear to me before. Yes, I can point you to such. But have you had a look yourself first?–Jim]”

Okay, so you are answering and not blocking. Good. My mistake for making an assumption, and I retract calling you cowards.

In answer to your question: I have looked and have not been able to find any study with local data to compare against. My question was genuine.

[Response:Very busy right now, but I’ll put a short list together in the next day or two if nobody else answers.–Jim]

#163

Here are some screening stats from Mann et al 2008.

| Period | Dendro | Other | Total |
|---|---|---|---|
| 1400–1499 | 46/119 | 20/26 | 66/145 |
| 1000–1099 | 4/22 | 14/19 | 18/41 |

(Each entry is the number of proxies passing screening / the number available.)

It’s also worth noting that Mann et al produced EIV reconstructions with both screened and full proxy sets, with similar results.

The full table is here in the SI.
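The question upthread was whether far more than 10% of tree-ring series pass a p = 0.1 screen. Here is a minimal sketch of how one might check these counts against chance, assuming each proxy independently has a 10% chance of passing if it contained no signal. That assumption is a simplification (neighbouring proxies are correlated, and the exact screening test in Mann et al. 2008 may differ), and the helper name is hypothetical.

```python
from math import comb

def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k, n + 1))

# (passed, available) counts from the Mann et al. 2008 screening stats quoted in this thread
screening = {
    ("1400-1499", "Dendro"): (46, 119),
    ("1400-1499", "Other"): (20, 26),
    ("1400-1499", "Total"): (66, 145),
    ("1000-1099", "Dendro"): (4, 22),
    ("1000-1099", "Other"): (14, 19),
    ("1000-1099", "Total"): (18, 41),
}

P_CHANCE = 0.1  # ASSUMED per-proxy chance of passing screening if it were pure noise
for (period, group), (passed, total) in screening.items():
    tail = binomial_tail(passed, total, P_CHANCE)
    print(f"{period} {group:6s}: {passed}/{total} = {passed / total:5.1%}, "
          f"P(>= {passed} by chance) = {tail:.2g}")
```

Under these assumptions, 46 of 119 dendro series passing would be extremely unlikely by chance, whereas 4 of 22 for 1000–1099 is not unusual (a tail probability of roughly 0.17).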


Ray@~162,

You’re right, I overstated: a bad unhelpful habit. Promoting, or just giving a pass and cleaning up is not stoking fires, though the end effect is the same. The framers of the hate talk make it an almost irresistible force. Since the planet is an immovable object there are fireworks ahead.

Andy’s desire to find good in people permits advantage taking. I think many indulge in wishful thinking when they assume it’s meant well; people are to be prevented from thinking for themselves.

Looking beyond to the real audience, this seems the point to make as often as possible: that people can and must think for themselves.

Re- Comment by Susan Anderson — 15 Jun 2012 @ 6:50 PM:

You say- “The framers of the hate talk make it an almost irresistible force.”

How about “hate tank.”

Steve

Ray Ladbury @156 — Clear. Thank you.

SecularAnimist @163: On the other hand, scientists require unambiguously defined terms (to the greatest possible extent). I realize not all follow the high standards which the mathematicians must maintain, but increasingly that is (uniformly) so. Thereby for STEM folk, scientists, technicians, engineers and mathematicians (but also philosophers), proof has only one meaning, that as used in deductive logic.

I fully realize that it has other meanings in other settings, including jurisprudence and potable alcohol testing. But climatology is a STEM subject, one which, being science, has to make do with less than certainty in matching observations and measurements to calculations from models.

However that may be, I see nothing wrong with an answer of “beyond a reasonable doubt”, which almost all will understand correctly.

> can and must think for themselves

well, most people “might could” think for themselves,

on some subjects, but not so likely able to think on other subjects;

“if y’can think for yourself y’ought to;

if not, think hard on who you trust.”

In most people’s heads, I think,

it’s a zoo in there.

Going only by my own.

Susan,

One of the aspects of science that I think it is hard for laymen to appreciate is the fact that the truth doesn’t lie in the middle. Even scientific consensus is not so much an “averaging” effect as it is an assessment of what theories/viewpoints are making most rapid progress. Truth is where the evidence points.

This makes science different from other disciplines–like journalism or theology–that at least purport to seek truth. They try to find balance between extremes. Science tries to minimize entropy.

BTW, one of the clearest statements of the 2nd law of thermo applies here:

If you add a teaspoon of wine to a gallon of sewage, you get sewage. If you add a teaspoon of sewage to a gallon of wine, you get sewage.

Thanks Ray. Time for me to brush up on those laws. I am not your typical layperson, I’m afraid, as it would be nice if most laypeople gave science its proper value. It took a lot of hard work for me to know as little as I do, but understanding how science works is deeply embedded in my life. Dad continues to produce physics, and we wonder if he will be alive by the time it is recognized, or if he is suffering from emeritusitis. He recently attended a meeting about new developments with cold atoms and such and said it was just like his early work, only different (OT, maundering on as usual).

Hi Susan,

I think I knew your father when I was an editor at Physics Today. Physics gets a lot tougher to do as one gets older.

Ray, for me yes. For him, not so much. I think he finds it hard not to do physics. Time for me to shut up and let everyone talk about the topic, I think.

layman: have you checked, e.g., the doctoral thesis of H. Grudd, where he states “The Torneträsk density record is the longest in the world and with a temperature signal that is exceptionally strong: The correlation to instrumental summer (June – August) temperature is 0.79.”

http://swepub.kb.se/bib/swepub:oai:DiVA.org:su-1034
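For scale, and assuming a simple linear relationship, a correlation of r = 0.79 corresponds to a fraction of shared variance of

$$
r^2 = 0.79^2 \approx 0.62,
$$

that is, roughly 62% of the year-to-year variance in the instrumental June–August temperatures would be shared with the density record.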

I’m wondering. Dendrochronology work aimed at temperature reconstruction sometimes picks places where temperature change has a stronger effect than, say, drought on tree-ring growth, in order to get a clearer signal.

When I read about the ice bore hole results here, I thought, well, you can constrain earlier temperatures to some extent, since NYC would not be built where it is if it had been much warmer than now for very long (say 300 years) owing to induced sea level rise. But that is not very high temporal resolution. So I wonder if, just as a dendrochronologist might choose a forest edge to gain sensitivity, a glaciologist might use ice on different slopes to set an upper limit on past temperature by saying this or that configuration could not have survived any warming similar to the present for three decades or six, etc. Combined with the age of the ice, some fairly robust limits might be worked out for a region, perhaps.

Andy,

Thanks for the link. The coldest summer in the past 1500 years was 1904, which appears to match the glacial extent of the LIA.

[Response: Not according to anyone else’s definition of the term. – gavin]

The warmest summers were centered around 1000, which showed both higher and prolonged warmth compared to the recent century.

Of particular note is the increased sensitivity of the growth to temperature in the past two centuries. While no explanation could be given for this change, could it be the combined effect of increasing temperatures and atmospheric CO2 concentrations?

Gavin,

Glacial maximums during the LIA differed considerably between regions of the globe.

http://www.grida.no/graphicslib/detail/overview-on-glacier-changes-since-the-end-of-the-little-ice-age_cc01

While the glacial maximum from the LIA is generally given as mid to late 19th century (earlier in places like Alaska), Norway experienced a second maximum around and after 1900.

http://www.uio.no/studier/emner/matnat/geofag/GEG2130/h09/Reading%20list/Norwegian%20mountain%20glaciers%20in%20the%20past,%20present%20and%20future.pdf

[Response: So is your contention that any cold period at any point on the Earth at any time in the past 500 years is the Little Ice Age? Regardless of how coherent that is with anything else? Good to know. Generally speaking, things are defined so that they can be discussed as distinct entities – people who then subsequently extend them to everything are kind of missing the point. – gavin]

> definition of the term.

Which term? whose definition?

I’m guessing Gavin means “summer” — redefined in this thesis for this location explicitly.

This is not the summer you were thinking of.

Dan H. uses several terms in his sentence, besides the one unattributed quotation he takes out of context without quotes. The rest is his usual.

The thesis (by a student of Briffa’s) describes four statistical approaches used to reconstruct temperature for a particular location: “… an area of about 100×100 km … mountains of alpine type …. The climate is continental subarctic ….”

It seems this author had to redefine “summer” to get the thesis result.

That’s reasonable, I suppose, to document a small local area with unique conditions. Perhaps it’s why this didn’t get published, as it’s not directly comparable to other work?

Oh, heck, I meant to give up on Dan H.

I’m swearing off.

Gavin,

I think you added another 0 to the 50 years from the mid 19th century to 1900. An event like the LIA, which lasted several centuries, could very easily have local ending times which vary by 50 years (not 500). Actually reading the report would shed some light on these definitions, instead of guessing.

[Response: I suggest you read up on some definitions: American heritage dictionary; Encyclopedia of Global Environmental Change, Lamb (1972) etc. In no description does after 1900 fall within the Little Ice Age. – gavin]

The fuss made me look for a definition or explanation of “screening fallacy”. There were three useful things on the first 6 Google pages; then the topic ran out. One piece by a stats guy explained things to me.

The interesting thing is that the “fallacy” only exists at CA. Other climate parrot sites repeat McIntyre’s stuff (incoherent to me) and the Blackboard has a couple of attempts.

My conclusion is that the “fallacy” doesn’t exist. It’s just made up by McI. He seems to be saying that if you check data for relevance you’re cheating, but if you don’t check data you’re a fraud.

I just wasted a couple of hours. Thanks Steve.

Gavin,

Perhaps you should read some of the following, with the quote, “The cold period around AD 1900 marks the culmination of the Little Ice Age in northern Sweden:”

http://sp.lyellcollection.org/content/344/1/207.abstract

http://hol.sagepub.com/content/7/1/45.abstract

[Response: Ha! You post a link to the Nesje et al paper that says “The maximum ‘Little Ice Age’ glacial extent in different parts of southern Norway varied considerably, from the early 18th century to the late 19th century.”, with a comment where you misleadingly claimed that they actually said the LIA extended ‘after 1900’. Now instead of defending your previous post, you have googled around to find something else entirely. Your first link doesn’t mention the ‘Little Ice Age’ at all, while the second manages to use the phrase 22 times without ever defining it. Their conclusion is clear though “Maximum glaciation occurred during the ’Little Ice Age’ starting with a pronounced glacial advance in the thirteenth or fourteenth centuries, and culminating at the most distal moraines during the nineteenth century.”. So, yet again we have you supposedly citing a conclusion or statement from a paper, that on inspection doesn’t actually support your statement, and when challenged on this, you produce links to other papers, none of which support the original quote either. While this might be tremendous fun for you, it is mildly irritating to everyone else, so let me propose a new rule – just for you. If you want to post here, any actual scientific claims need to be backed by a real citation, and the claim has to be actually backed by the citation you give. If you make claims with no reference, they will get binned. If you make a claim that is not supported by your reference, it will get binned. So the way to not get binned is to make scientific claims that can be supported by the literature you cite. Should be easy, no? – gavin]

>Actually reading the report would shed some light on these definitions, instead of guessing.

Oh, the irony! Thank you, Gavin, for the service you (and other RC contributors) provide to those actually wishing to learn and discuss the science in an honest fashion. I lack your ability to stay focused in the face of this kind of nonsense. I have learned a lot in the past 2 years here, not just about climate science, but about the relationship of science and society as well.

@ 184: Thanks Gavin.

[In no description does after 1900 fall within the Little Ice Age. – gavin]

That doesn’t make it incorrect. Glaciers in much of the Arctic, on Baffin Island, Western Greenland and Bylot Island, had their LIA maximum dates between 1880 and 1910… If you need references, I can provide them; my work is reconstructing former glacier advances during the LIA.

One would think that this late advance probably has something to do with the significant impact of Krakatau and Novarupta on temperatures along the North Atlantic basin during this time period.

[Response: I’m not saying that there were not glacier advances in some places in the early 1900s, rather that it makes no sense to associate them with the ‘Little Ice Age’. – gavin]

[Response: I agree with Gavin here, but in point of fact it is quite common to refer, in published literature, to glacier moraines reflecting advances in the late 1800s and early 20th century as “LIA moraines”. It is equally common to refer to moraines in the 1600s as “LIA moraines.” Hence the total confusion on this topic. It’s time we did an LIA post, methinks. –eric]

Gavin, I’m curious as to what your thoughts are regarding this quote from the Gergis paper: “Although the Mount Read record from Tasmania extends as long as 3602 years, in this study we only examine data spanning the last 1000 years which contains the better replicated sections of the Silver Pine chronology from New Zealand (Cook et al., 2002b; Cook et al., 2006) and is the key period for which model simulations have been run for comparison with palaeoclimate reconstructions (e.g. Schmidt et al., 2012).”

Can you explain why the longer record was not ‘examined’? The original study was supposed to cover 2000 years, but apparently somewhere along the way it was chopped in half. How and why did that happen? And when did your ‘model simulation’ for comparison with these palaeoclimate reconstructions begin? Before or after they decided to examine only the past 1000 years rather than the past 2000 years? And does their reanalysis of the data have any effect on your model simulations?

[Response: The specifications for the PMIP last millennium runs were worked out in ~2010 and were a compromise that took into account the existence of suitable forcing time histories (solar, volcanic, land use etc), length of simulations, scientific interest etc. We decided to start the runs in 850AD so that we had multiple estimates for the solar forcings and enough time before the high medieval period to see any structure around then. See Schmidt et al (2011) for more details. The decisions had nothing to do with the existence or not of a few very long annually resolved paleo records. Since Gergis et al are looking at a multiproxy reconstruction, they need multiple proxies, and even at 1000AD they only have two, so extending back beyond that adds little or nothing. People will hopefully use the simulations in all sorts of analyses, and comparisons with regional reconstructions are likely to be part of that. But there is nothing specific about any one reconstruction that influenced the simulations (many of which have been completed and are downloadable from the CMIP5 database). – gavin]

> “LIA moraines”

Eric #187, perhaps the more generic subject of easily misunderstood jargon? My favourite nit is the use of “orbital” and “sub-orbital” by climatologists for time periods corresponding to, or shorter than, Milankovitch periods. In fact, changes in the Earth’s orbit are only one contributing factor in this; the orientation of the Earth’s axis of rotation is actually more important.

Lost in all the discussion about the ending of the LIA is the question of why tree rings have been more sensitive to temperature recently. Does anyone have any other thoughts besides higher CO2 concentrations?

I doubt there is a need to say this, but I’m a skeptic, or denier, or whatever you want to call me. Ignorant, stupid, whatever.

Am I correct in understanding that the effects of CO2 are so well understood that many, some, most, hold that without change, massive warming will occur, change the planetary climate system, etc.?

Given that the climate system is so well understood, shouldn’t the cooling agents, such as sulfur compounds, also be understood to a certainty? And if they are, why aren’t those who think CO2 is such a danger offering up the cheap alternative of injecting sulfur compounds into the atmosphere?

In other words, help me to understand: if CO2 is going to cause these problems, and yet curtailing CO2 emissions would be so incredibly difficult, and the climate system is so well understood, why are there no advocates of sulfur compounds?

[Response: There ARE advocates for sulfur compounds. Bizarrely, many of them are “skeptics”, which contradicts the logic you very reasonably lay out. Don’t ask me why; ask Bjorn Lomborg.

There are at least two fundamental differences between CO2 and sulfur in this context. First, CO2 (being a gas) is well mixed through the atmosphere, and so we can know to very high accuracy how much there will be at any location as long as we know how much there is on average. Second, CO2 is CO2 is CO2: its radiative properties in the lab are the same as anywhere else. The “sulfur” you refer to is really sulfate, which is a solid, and a hygroscopic solid besides. SO2 gas is emitted to the atmosphere, but it is quickly oxidized to sulfate (SO4(2-)) before it has a chance to become well mixed. So it’s not uniformly distributed, which means its global radiative effect can’t be known (for example, lots of sulfate over Antarctica during winter, when there is no sunlight, will have no effect). And sulfate in the lab isn’t sulfate in the atmosphere. Sulfate particles can be various sizes, and they can have various compositions (since it isn’t just sulfate in the aerosol; it’s sulfate and water and organic nitrogen compounds and so on). So its radiative properties cannot be known very precisely in the real atmosphere just from lab measurements.

Now, some experts on aerosol radiative properties will tell you we know all this well enough to start pumping SO2 into the atmosphere. Note that this can’t just be done once – it has to be done continuously because the darned stuff keeps glomming onto water and falling out as (acid) rain.

Most (all?) of us at RC think this is a silly idea, despite the fact that, yes, the CO2 part is very, very certain.

I strongly encourage you to read Alan Robock’s excellent RC article on this, here. –eric]

Gavin, thanks so much for your reply. Now I have some silly questions: Do authors contact other authors before referencing them in their papers? Does that need approval or is it just done? In this case it just seems so out of the blue…

[Response: No, it’s like links on the Internet: once something is published, anyone can reference it. Sometimes people send out preprints, but that is optional and varies widely. – gavin]