In the Northern Hemisphere, the late 20th / early 21st century has been the hottest time period in the last 400 years at very high confidence, and likely in the last 1000 – 2000 years (or more). It has been unclear whether this is also true in the Southern Hemisphere. Three studies out this week shed considerable new light on this question. This post provides just brief summaries; we’ll have more to say about these studies in the coming weeks.

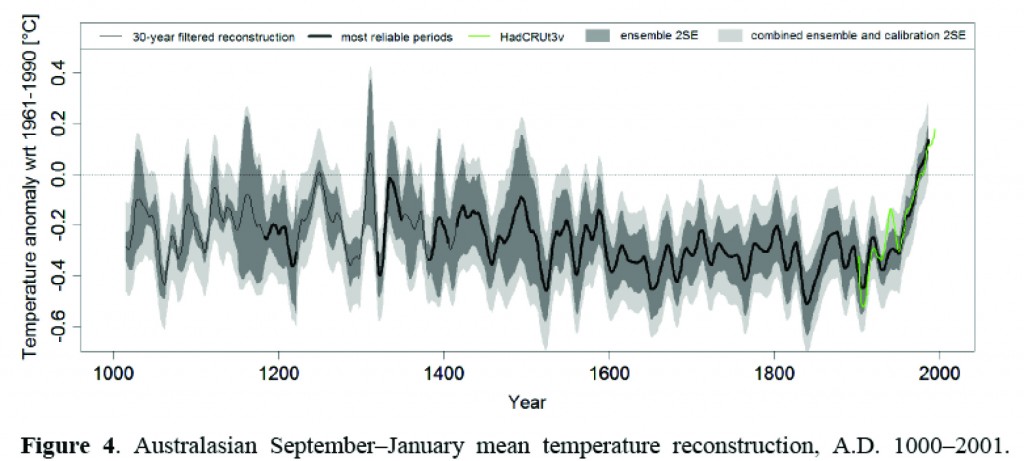

First, a study by Gergis et al., in the Journal of Climate [Update: this paper has been put on hold – see comments] uses a proxy network from the Australasian region to reconstruct temperature over the last millennium, and finds what can only be described as an Australian hockey stick. They use an ensemble of 3000 different reconstructions, using different methods and different subsets of the proxy network. Worth noting is that while some tree rings are used (which can’t be avoided, as there simply aren’t any other data for some time periods), the reconstruction relies equally on coral records, which are not subject to the same potential (though often-overstated) issues at low frequencies. The conclusion reached is that summer temperatures in the post-1950 period were warmer than anything else in the last 1000 years at high confidence, and in the last ~400 years at very high confidence.

Gergis et al. Figure 4, showing Australian mean temperatures over the last millennium, with 95% confidence levels.

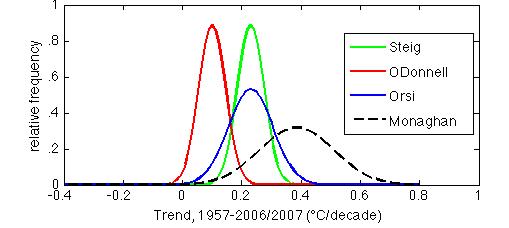

Second, Orsi et al., writing in Geophysical Research Letters, use borehole temperature measurements from the WAIS Divide site in central West Antarctica, a region where the magnitude of recent temperature trends has been the subject of considerable controversy. The results show that the mean warming of the last 50 years has been 0.23°C/decade. This result is in essentially perfect agreement with that of Steig et al. (2009) and reasonable agreement with Monaghan (whose reconstruction for nearby Byrd Station was used in Schneider et al., 2012). The result is totally incompatible (at >80% confidence) with that of O'Donnell et al. (2010).

Probability histograms of temperature trends for central West Antarctica (Byrd Station [80°S, 120°W; Monaghan] and WAIS Divide [79.5°S, 112°W; Orsi, Steig, O’Donnell]), using published means and uncertainties. Note that the histograms are normalized to have equal areas; hence the greater height where the published uncertainties are smaller.

This result shouldn’t really surprise anyone: we have previously noted the incompatibility of O’Donnell et al. with independent data. What is surprising, however, is that Orsi et al. find that warming in central West Antarctica has actually accelerated in the last 20 years, to about 0.8°C/decade. This is considerably greater than reported in most previous work (though it does agree well with the reconstruction for Byrd, which is based entirely on weather station data). Although twenty years is a short time period, the 1987-2007 trend is statistically significant (at p<0.1), putting West Antarctica definitively among the fastest-warming areas of the Southern Hemisphere -- more rapid than the Antarctic Peninsula over the same time period. We and others have shown (e.g. Ding et al., 2011, doi:10.1038/ngeo1129) that the rapid warming of West Antarctica is intimately tied to the remarkable changes that have also occurred in the tropics in the last two decades.

Note that the Orsi et al. paper actually focuses very little on the recent temperature rise; it is mostly about the "Little-ice-age-like" signal of temperature in West Antarctica. Also, these results cannot address the question of whether the recent warming is exceptional over the long term -- borehole temperatures are highly smoothed by diffusion, and the farther back in time, the greater the diffusion. We'll discuss both these aspects of the Orsi et al. study at greater length in a future post.

Last but not least, a new paper by Zagorodnov et al. (doi:10.5194/tcd-5-3053-2011) in The Cryosphere uses temperature measurements from two new boreholes on the Antarctic Peninsula to show that the decade of the 1990s (the paper states “1995+/-5 years”) was the warmest of at least the last 70 years. This is not at all a surprising result from the Peninsula: it was already well known that the Peninsula has been warming rapidly, but these new results add considerable confidence to the assumption that that warming is not just a recent event. Note that the “last 70 years” conclusion reflects the relatively shallow depth of the boreholes, and the fact that diffusive damping of the temperature signal means that one cannot say anything about high frequency variability prior to that. One cannot infer that it was warmer than present more than 70 years ago. In the one and only century-long meteorological record from the region (on the Island of Orcadas, just north of the Antarctic Peninsula), warming has been pretty much monotonic since the 1950s, and the period from 1903 to 1950 was cooler than anything after about 1970 (see e.g. Zazulie et al., 2010). Whether recent warming on the Peninsula is exceptional over a longer time frame will have to await new data from ice cores.

References

- J. Gergis, R. Neukom, S.J. Phipps, A.J.E. Gallant, D.J. Karoly, et al., "Evidence of unusual late 20th century warming from an Australasian temperature reconstruction spanning the last millennium", Journal of Climate, pp. 120518103842003, 2012. http://dx.doi.org/10.1175/JCLI-D-11-00649.1

- A.J. Orsi, B.D. Cornuelle, and J.P. Severinghaus, "Little Ice Age cold interval in West Antarctica: Evidence from borehole temperature at the West Antarctic Ice Sheet (WAIS) Divide", Geophysical Research Letters, vol. 39, 2012. http://dx.doi.org/10.1029/2012GL051260

- E.J. Steig, D.P. Schneider, S.D. Rutherford, M.E. Mann, J.C. Comiso, and D.T. Shindell, "Warming of the Antarctic ice-sheet surface since the 1957 International Geophysical Year", Nature, vol. 457, pp. 459-462, 2009. http://dx.doi.org/10.1038/nature07669

- D.P. Schneider, C. Deser, and Y. Okumura, "An assessment and interpretation of the observed warming of West Antarctica in the austral spring", Climate Dynamics, vol. 38, pp. 323-347, 2011. http://dx.doi.org/10.1007/s00382-010-0985-x

- R. O’Donnell, N. Lewis, S. McIntyre, and J. Condon, "Improved Methods for PCA-Based Reconstructions: Case Study Using the Steig et al. (2009) Antarctic Temperature Reconstruction", Journal of Climate, vol. 24, pp. 2099-2115, 2011. http://dx.doi.org/10.1175/2010JCLI3656.1

- N. Zazulie, M. Rusticucci, and S. Solomon, "Changes in Climate at High Southern Latitudes: A Unique Daily Record at Orcadas Spanning 1903–2008", Journal of Climate, vol. 23, pp. 189-196, 2010. http://dx.doi.org/10.1175/2009JCLI3074.1

The application of the “screening fallacy” to this scenario somewhat escapes me. I’m not inclined to try for an explanation at McIntyre’s site as it’s such an emotional mess.

[Response:My experience is that you are very unlikely to get an answer that means anything. I’ve already tried, several times, very directly and the lack of a clear and definite answer of what he means by the term, supported with a clear conceptual formulation and specific code and other evidence, has been complete. Accordingly, I don’t believe he himself knows what he means–Jim]

McIntyre’s central objection seems to be this:

“In the terminology of the above articles, screening a data set according to temperature correlations and then using the subset for temperature reconstruction quite clearly qualifies as Kriegeskorte “double dipping” – the use of the same data set for selection and selective analysis. Proxies are screened depending on correlation to temperature (either locally or teleconnected) and then the subset is used to reconstruct temperature.”

[Response:It’s just a foolish argument. It’s this simple: trees respond to multiple potential external inputs and you want to find that set of trees that is responding most strongly and clearly to that external input in which you are most interested. This necessarily involves a selection process, and that selection process is based on the correlation between an observed process (radial tree growth) and a variable (temperature) that we know from many other lines of evidence is a legitimate physical driver of that observed process. The fact that other drivers can also affect that process, and might sometimes even be correlated with that observed process in a statistically confounding way, does not negate the legitimacy of this procedure.–Jim]

I’m not sure how this does not actually rule out calibration as applied by McIntyre. For instance, if we have an assembly line churning out thermometers and we select samples for various brackets of accuracy against an independent standard, does that mean we can’t obtain useful measurements from thermometers found to be performing within certain brackets of accuracy? Would our measurements be better if we ignored a known good standard and simply included all the thermometers coming off the line?

Gergis and crew didn’t use tree rings or coral for samples for screening; rather as in the case of the thermometer production line example they used an independent standard:

“Our instrumental target was calculated as the September–February (SONDJF) spatial mean of the HadCRUT3v 5°x5° monthly combined land and ocean temperature grid (Brohan et al., 2006; Rayner et al., 2006) for the Australasian domain over the 1900–2009 period.”

McIntyre’s objection seems to come down to Gergis’ rejection of measurements that don’t hold up against an independent standard, as described in Gergis et al:

“Only records that were significantly (p < 0.05) correlated with the detrended instrumental target over the 1921–1990 period were selected for analysis.”
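The screening step quoted above can be illustrated with a small sketch. It is not the authors' code: the function names, the choice to detrend the proxy as well as the target, and the fixed threshold `R_CRIT` (roughly the two-sided p < 0.05 critical correlation for about 70 annual values, ignoring autocorrelation) are all assumptions made for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def detrend(y):
    """Subtract the least-squares straight line from a series."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    slope = (sum((i - t_mean) * (v - y_mean) for i, v in enumerate(y))
             / sum((i - t_mean) ** 2 for i in range(n)))
    return [v - y_mean - slope * (i - t_mean) for i, v in enumerate(y)]


# Hypothetical threshold: approximate two-sided p < 0.05 critical |r| for
# about 70 annual values, with no allowance for autocorrelation.
R_CRIT = 0.24


def passes_screen(proxy, target, r_crit=R_CRIT):
    """True if the detrended proxy correlates with the detrended target."""
    return abs(pearson(detrend(proxy), detrend(target))) >= r_crit


# Made-up series standing in for 70 years of data (e.g. 1921-1990).
years = range(70)
target = [0.05 * i + math.sin(i) for i in years]
tracks_wiggles = [0.03 * i + 0.5 * math.sin(i) for i in years]
trend_only = [0.05 * i + math.cos(1.7 * i) for i in years]

print(passes_screen(tracks_wiggles, target))
print(passes_screen(trend_only, target))
```

In this toy case the second series shares the target's trend but not its year-to-year variations, so it correlates with the raw target yet fails the detrended screen. That is the point of detrending before screening: a proxy should not be selected merely because it has a 20th-century trend.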

Ok. So looking at this through McIntyre’s lens, how would one obtain a proxy record calibrated against an independent standard? As far as I know, all thermometers are proxies (unless there’s an experimental physicist who can say otherwise?) so it seems that if we test McIntyre’s logic to the breaking point, we don’t really know the temperature of anything because all of our measurement devices have been tested, with under-performing devices rejected, thus leading to a “screening fallacy.”

[Response:Right. As I noted in my response to Martin, his arguments if taken to their logical extreme, will eventually close off all use of tree rings as a climatic proxy. He says otherwise (that tree rings are not completely worthless as a proxy) but this conclusion is where his arguments ultimately take you if you follow them.–Jim]

Or is the “screening fallacy” a “get out of jail free” card?

Re: dbostrom

“manuscript is still available for those interested; see upthread for link”

I skimmed it prior to the dust up but can’t find it in my downloads folder anymore, so I must have looked at it in my browser (the stupid way).

Where is the article still available? The link in the reference list doesn’t work.

I’ve got a question for the dendro-folks…It’s probably already discussed in a paper I can be pointed to.

In other sampling-type studies, a certain number needs to be taken so that the power of the sample can accurately describe the whole with a manageable margin of error. Does this sort of thing not play a role at all in dendrochronology after selecting a site a priori for its determined ability to signal global temperature (ie, ‘treeline’, etc.)?

I’m just thinking there are hundreds of thousands of trees in a region, and the cores per site can be 20-30. I’m assuming since that’s not enough to be representative of the whole that there’s got to be a set of arguments why:

(a) out of an entire population of, say 10,000 trees, 10 cores out of 20-30 are said to effectively model the instrumental temperature record (regardless of what they display in other eras), and have that be certainly NOT a product of chance, but rather a lead-pipe lock that it is describing the signal, or

[Response:Why you say “10 cores out of 20-30” I don’t know, because all the cores of a site, not just some fraction thereof, are used to provide the climate signal of interest. But to your point specifically: please point me to any verbal concept, any algorithm, any model code, ANY WAY in which a stochastic process can lead to the types of high inter-series (i.e. between cores) correlations typically seen in the tree ring data archived at the ITRDB. Just go there and start randomly looking at the mean interseries correlations documented in the COFECHA output files of each site, and tell me exactly how you would generate such high numbers with any type of stochastic process that’s not in fact related to climate. And then how you would, on top of that, further relate the mean chronology of those sites to local temperature data at the levels documented in many large scale reconstructions. I want to see that model. And if I don’t see that model, I’m not buying these bogus “screening fallacy” arguments. Make sense?–Jim]

(b) that it doesn’t matter how many trees exist in a region, nor how many cores are taken relative to the total number of trees– it’s simply about discovering any individual trees that evidence the temperature signal and discovering as many co-located as possible. It doesn’t matter how many there are, but instead how well they match the signal.

[Response:At the risk of having this statement completely misunderstood and mangled by the usual suspects…if you only had one tree out of 10K that responded well to temperature, and you found and cored that one tree, you would have legitimate evidence of a temperature signal. Fortunately, the situation is nowhere remotely so extreme as that, and that’s because, in fact, many trees respond in this way, and therefore you only need some couple dozen or similar to get a signal that emerges strongly from the noise at any given location. And why do many trees respond this way? Because, lo and behold….temperature is a fundamental determinant of tree radial growth in general, i.e. a fundamental tenet of tree biology.–Jim]
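Jim's challenge above, to produce high inter-series correlations from a stochastic process unrelated to climate, can be explored with a toy simulation. This is only a sketch under stated assumptions: cores are modelled as AR(1) red noise with a hypothetical persistence `phi`, the "climate" is an optional shared AR(1) series, and the statistic is a plain mean of pairwise correlations rather than COFECHA's own calculation.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_interseries_r(cores):
    """Average Pearson r over all distinct pairs of cores."""
    pairs = [(a, b) for i, a in enumerate(cores) for b in cores[i + 1:]]
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def ar1_noise(n, phi, rng):
    """Red noise: x[t] = phi * x[t-1] + white Gaussian innovation."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out


def simulate_site(n_cores, n_years, shared_signal, rng, phi=0.5):
    """Each core = optional common signal + its own independent AR(1) noise."""
    signal = ar1_noise(n_years, phi, rng) if shared_signal else [0.0] * n_years
    return [[s + e for s, e in zip(signal, ar1_noise(n_years, phi, rng))]
            for _ in range(n_cores)]


rng = random.Random(0)  # fixed seed so the run is repeatable
noise_only = simulate_site(20, 200, shared_signal=False, rng=rng)
with_signal = simulate_site(20, 200, shared_signal=True, rng=rng)

print("independent noise:", round(mean_interseries_r(noise_only), 3))
print("shared signal:    ", round(mean_interseries_r(with_signal), 3))
```

With independent noise the mean inter-series correlation sits near zero, while cores that share a common driver show a clearly positive value. The simulation says nothing about what the common driver is; it only illustrates why independent noise alone does not produce coherent chronologies.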

Salamano,

OK, there are thousands of trees. However, if the same physical conditions persist over the entire range, then trees of a particular species ought to respond in a comparable fashion, no? And remember, the time series consists of rings for a single individual. You are looking at multiple time series to average out extraneous effects–e.g. microclimate.

“…if you only had one tree out of 10K that responded well to temperature”

But how do you know that one tree is not just more noise, which by coincidence just happens to match roughly to 20th century temperatures? You have not answered this question.

(expecting the typical blockage of comments you don’t like, a response would still be nice, nonetheless…)

[Response:Yeah, no kidding, because that’s not the question that was asked. It’s a hypothetical given; the point is that if you knew you had one good thermometer and 9,999 bad ones, the one is all you need to make your estimates. The answer to the question you are trying to ask is the one I already answered: the mean interseries correlations and their relationship to local temperature are waaaaaaaaaaaaaaaaaay too strong and too frequent to be explained by chance. It’s not even a topic for legitimate consideration frankly; indeed, it’s game-playing–Jim]

Rephrasing Jim’s remarks, I suppose we might say that if we had 100,000 monkeys sitting in 100,000 trees banging away on typewriters and one of ’em turned out Moby-Dick while the rest were spewing gibberish that singular primate would indeed turn out to be Melville. The probability of an actual lower-functioning primate authoring a single chapter let alone 135 is effectively nil.

In this case there are even fewer trees so the chances of an accidental masterpiece are rather less, even though the plot still involves monkeys and obsession.

“Moby Dick seeks thee not. It is thou, thou, that madly seekest him!”

I think there’s a minor, legitimate but well-understood point underlying the selection fallacy talk at CA that could be better observed in scientific publication. It is actually true that if you select proxies according to their correlation with instrumental temperature in some training period, then you don’t have an independent measure of the temperature in that period. If you have ten clocks in a shop and set them going according to your watch, then the fact that 11 timepieces show the same does not improve your knowledge of the time, though each clock does tell the time.

The lack of real info in the training period isn’t serious – we have more reliable instruments.

When a graph shows instrumental and proxy temps for the last 1000 years, it’s intended to convey the best temp estimate. But it usually includes the 20Cen proxy curves, and for that purpose, they don’t belong. Instead, their proper rationale is to show how well the proxies did in fact align with instrumental – ie how well they were chosen.

This confounding underlies a lot of recent troubles. With “hide the decline”, I imagine post-1960 divergent temps sometimes aren’t shown because we know they aren’t right, and nobody, most of CA included, seriously believes that they are. The legitimate objection is to hiding the divergence.

But I think the proper remedy is not to try to show both things on one plot. To show temperatures, just show proxies pre-training period and instrumental after. Or to show how effectively the proxies correlated, just show instrumental and proxies in the training period (and include divergence).

A great deal of the fussing at CA is over suspicions that someone is favoring proxies that show hockey sticks. But the hockey stick, correctly understood, has a proxy shaft and an instrument blade. The proxies only tell you about the shaft (because of “selection fallacy”). So there should be nothing to achieve by selecting for HS-ness. If the proxies were not plotted post 1900, that would be made clearer.

[Response: This is a fair enough point, but the real test to prevent overfitting (which is the real accusation) is cross-validation and out-of-sample verification. This is something that has been done in many papers but which is generally completely ignored by McI et al when they start talking about fallacies. There are multiple ways to do CV and I wouldn’t pretend to be an expert on all the different methods, but it seems to me that this would be the most positive contribution that the critics could make. If they don’t think that is done properly, what would they suggest instead, and what impact would it have? – gavin]
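One common form of the out-of-sample verification mentioned in the response above is a split-period test: calibrate on one part of the instrumental record and score the prediction on the withheld part. The sketch below uses the reduction of error (RE) statistic with a simple one-proxy linear regression; the function names and the toy series are assumptions for illustration, not any particular paper's procedure.

```python
import math


def fit_line(x, y):
    """Least-squares intercept and slope for y ~ a + b * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = (sum((u - mx) * (v - my) for u, v in zip(x, y))
         / sum((u - mx) ** 2 for u in x))
    return my - b * mx, b


def reduction_of_error(proxy, temp, n_cal):
    """Calibrate on the last n_cal values, verify on the earlier ones.

    RE = 1 - SSE / SSE_ref, where the reference prediction is simply the
    calibration-period mean temperature. RE > 0 means the proxy beats
    that naive reference on data it was never fitted to.
    """
    cal_p, cal_t = proxy[-n_cal:], temp[-n_cal:]
    ver_p, ver_t = proxy[:-n_cal], temp[:-n_cal]
    a, b = fit_line(cal_p, cal_t)
    cal_mean = sum(cal_t) / len(cal_t)
    sse = sum((t - (a + b * p)) ** 2 for p, t in zip(ver_p, ver_t))
    sse_ref = sum((t - cal_mean) ** 2 for t in ver_t)
    return 1.0 - sse / sse_ref


# Made-up 60-year "instrumental" record and two made-up proxies.
temp = [0.1 * i + math.sin(i) for i in range(60)]
responsive = [2.0 * t + 1.0 for t in temp]           # linear in temperature
unrelated = [math.cos(1.7 * i) for i in range(60)]   # no link to temperature

print("RE, responsive proxy:", round(reduction_of_error(responsive, temp, 30), 3))
print("RE, unrelated proxy: ", round(reduction_of_error(unrelated, temp, 30), 3))
```

A proxy that genuinely tracks temperature keeps its skill in the withheld years, whereas one that does not scores no better than the calibration mean. Swapping the calibration and verification halves gives a second, independent check.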

But surely you need to know why discarded trees don’t respond to temperature like they should. Because what happened to them in the 20th century might have happened to the ‘chosen’ ones 500 years ago?

Or is it just a kind of ‘genetic’ thing?

[Response:There’s always a genetic element in trees due in large part to their outcrossing nature and consequent generally high genetic diversity, but that’s not the primary consideration here. All trees, indeed all plants, respond to temperature in some way; the questions are ones of “how?” and “how much?” and “when?”. Yes, it’s important to know why a given set of trees may not be responding to T in a simple and stable way, given that unimodal responses to T are very common across a wide range of response variables in the biological world, and hence, these nonlinearities can create real errors in the paleo-estimates. I’d recommend reading carefully (among many others) the following set of articles:

Kingsolver, J. (2009) The Well-Temperatured Biologist. American Naturalist 174:755-768.

D’Arrigo, R., et al (2008) On the ‘Divergence Problem’ in Northern Forests… Global and Planetary Change 60:289–305

Loehle, C. (2009) A mathematical analysis of the divergence phenomenon. Climatic Change 94:233–245

–Jim]

In fact the timber industry depends on this, the entire point of planting even-aged stands is that they’ll all grow at very close to the same rate within a given stand which grows within a uniform microclimate, so you can go in and harvest them all in one fell swoop 40, 60, or 80 years later and end up with a very uniform product.

Romain #107: redundancy, redundancy, redundancy. Yes, anything might have happened 500 years ago, but hardly to all trees or tree species at the same time, or to trees and corals and lake sediments and speleothems at the same time (and if it did, let’s call it ‘climate’). Compare subsets and look for outliers.

Screening is just a way to get rid of obvious problem trees / problem sites right away. Like not hiring a CFO with a conviction for embezzlement, though lack of a criminal record doesn’t make people honest. Trust, but verify.

> why discarded trees don’t respond to temperature like they should.

You’re assuming “they should” — but why are you assuming that?

Look into the reasons.

Basic Principles and Methods of Dendrochronological … – BioOne

http://www.bioone.org/doi/abs/10.3959/2011-2.1

by PP Creasman – 2011 –

May 25, 2011 – The adjustment for cockchafer beetle outbreaks (Melolontha melolontha L. and M. hippocastani F.) in the Hohenheim University oak and pine tree-ring …

http://www.sciencedirect.com/science/article/pii/S1040618299000154

by M Friedrich – 1999 – Cited by 106 –

Jan 24, 2000 – … In this respect, cockchafer (Melolontha Melolontha) is most important. … trees with such rhythms were identified and discarded from the chronologies. …

The “McIntyre team” needs to spend some time down on the farm. Every farmer knows that his field of corn responds almost uniformly to annual weather conditions. The corn farmer doesn’t need to go out and survey a thousand stalks to see how his corn is growing. Just a glance here and there, and a careful examination of a few plants gives him a really good “statistically meaningful” sampling. When I grew up on the farm, our local farmers had a saying about how fast the corn had to grow to get a good crop… the corn had to be “knee high by the 4th of July” (in western Pennsylvania and eastern Ohio, and in general across the American Midwest).

Too bad plant biology doesn’t play by the rules the McIntyre team pretends hold sway. Farming would be far less risky, if a significant proportion of the corn field grew well in bad years and matured to produce a reasonable crop. But like the unfortunate farmers in Texas found out last summer, in a bad year the entire field grows badly, and there isn’t a salable crop. They don’t call ’em “crop failures” for no reason.

[Response:Paul, that’s different. A corn crop in a local area is a system with very high levels of genetic and environmental uniformity. Therefore, you can as you state, get a pretty good idea of performance from a pretty small sample of plants (I had a job inspecting corn fields for a while). But perennial, woody plants growing on the fringes of their growth tolerance envelope are a different beast. There’s a lot more variability that has to be accounted for and signal to noise is harder to discern. What we need to do, and what I’m trying to emphasize without explicitly stating it, is, if we are to approach this problem fairly, to neither under-sell, nor over-sell, the real analytical challenges that exist with estimating former climates from tree rings. No need to mountainize molehills, nor vice versa. Indeed, no legitimacy to either.–Jim]

Romain,

Exactly! That is why different proxy studies yield different results. The following paper shows that some tree rings (genus Pilgerodendron uvifera) respond positively to temperature in one area, and negatively in another. Oftentimes, the trees respond most significantly to minimum temperatures.

http://www.glaciologia.cl/textos/lar.pdf

[Response: “Exactly!”… err.. not quite. Actually, not at all. Rather, different places with identical trees are often limited by different factors – temperature, rainfall, competition, soil nutrients etc. and so will have different patterns of change over time. Read the papers Jim suggested. – gavin]

“But surely you need to know why discarded trees don’t respond to temperature like they should. Because what happened to them in the 20th century might have happened to the ‘chosen’ ones 500 years ago?”

I think you’re still missing Jim’s point.

Why certain trees diverge from the record is a question, but it’s a question that does not pertain here.

An airplane crashes. The DFDR is mangled in the wreck but the QAR is not. The crash can still be reconstructed because the conditions that destroyed the DFDR did not ruin the recording on the QAR. The exact circumstances of the DFDR’s destruction are interesting but not relevant to understanding why the crash happened. Meanwhile the chances of the QAR inadvertently mimicking the record of the flight are nil.

Follow Jim’s response to Salamano at 103 above. “I doubt it” isn’t a useful argument; if you choose not to believe Jim’s description you’ll need to do the work to show with numbers how a proxy temperature record can match an independent record in detail, by chance.

> Dan H

> Pilgerodendron uvifera) respond positively to temperature

> in one area, and negatively in another.

That’s half the story presented in a misleading way.

Read the paper for the reason:

” … Pilgerodendron uvifera is the only conifer growing between 43°30ʼ and 54° S, which shows both a temperature and precipitation signal …”

That’s the trick to this citation thing — you need to read the paper.

112 Dan H. “Treeline and high elevation sites in the central and southern Chilean Andes (32°39ʼ to 55°S) have shown to be an excellent source of paleoenvironmental records because their physical and biological systems are highly sensitive to climatic and environmental variations. In addition, most of these sites have been less disturbed by logging and other human induced disturbances, which enhances the climatic signals present in the proxy records (Luckman 1990; Villalba et al. 1997).” Did you actually read the paper?

Credit where credit is due: a brief search suggests that “Exactly! That is why…” appears to be an entirely novel misinterpretation. It appears nobody has previously been wrong in exactly that way.

Freshly minted contrarian talking points are as rare as hen’s teeth. Congratulations, and be careful with that tradecraft!

Tokodave,

Yes, I did. The question is did you? Your quote from the report simply states that region is an excellent source of records, because of their sensitivity and the undisturbed environment. Had you read further, you would have read that not all trees respond similarly. The trick is that sometimes, you have to read past the first paragraph.

For instance, “Sites located in the southern portion of this range show a strong negative response to summer temperatures. Conversely, a positive response to summer and annual temperatures is reported for two sites at or near treeline in the Coastal Archipelagoes (46°10ʼ, 700 m asl). A marked increase in tree-growth since the mid-20th century, attributed to an increase in mean annual temperature in this period, is reported (Szeics et al. 2000). However, none of the other Pilgerodendron sites show evidence of a warming trend during the 20th century, following a similar pattern as the one described for the 3,622-year temperature reconstruction from Fitzroya tree-rings (Lara and Villalba 1993).”

This is evidently not the only species showing this response.

Notice how we’re no longer discussing the droids we were looking for?

Pilgerodendron uvifera has zero to do with the point being made by Jim regarding the impossibility of a proxy temperature history as recorded by tree rings accidentally resembling the instrumental record.

You have to watch the hands and the ball, or you’ll miss the instant when you’ve been had.

Links to the three papers suggested inline (in reply to Romain) above at 107:

http://www.mendeley.com/research/the-welltemperatured-biologist-american-society-of-naturalists-presidential-address/

http://www.wsl.ch/info/mitarbeitende/cherubin/download/D_ArrigoetalGlobPlanCh2008.pdf

http://www.springerlink.com/content/45u6287u37x5566n/

[Response:Thanks Hank. The Kingsolver paper is not freely accessible unfortunately, which is too bad, because it’s excellent. Anybody with literature access, interested in a truly synthetic and insightful discussion of the effects of temperature on biological systems, should grab it and read it–Jim]

118 dbostrom: Well put.

All the authors need to do is show that the selected proxies correlate with each other outside the screening period just as well as they do during the screening period, then you have firm evidence that the temperature correlation is maintained throughout the reconstruction. (the alternative is that they correlate based on a non-temperature variable and just happened to correlate with temperature during the screening period – unlikely – especially seeing we’ve chosen these proxies based on an a priori understanding that they should correlate with temperature)

Is it typical to show this?

[Response:If you are referring to the within-site scale of analysis, which I think you are, then your fundamental point is sound and I agree with it. All data submitted for archive at the NOAA Paleo ITRDB are evaluated for a number of basic quantitative characteristics using a program called COFECHA. One of these metrics is the mean inter-series correlation, the average of all pairwise correlations on the annual resolution data over the common interval. This is similar to what you are suggesting, but not broken out by instrumental vs pre-instrumental period (obviously, since these periods are not defined given that the relevant climate data are not part of the submitted tree ring data), nor by each individual pair of ring series. It would be easy to write an R script or function to do this once the climate data are in hand. As for existing studies, I’m pretty sure Ed Cook et al did analyses along these lines some few years ago, but don’t have exact refs. at fingertips–Jim]
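The comparison described in the response above, mean inter-series correlation broken out by instrumental versus pre-instrumental period, might be sketched as follows. The response mentions R; Python is used here only for illustration. The function names and the `first_instrumental_year` parameter are hypothetical, and the sketch assumes every series covers every year in `years`.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_pairwise_r(series):
    """Average Pearson r over all distinct pairs of series."""
    pairs = [(a, b) for i, a in enumerate(series) for b in series[i + 1:]]
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def rbar_by_period(series, years, first_instrumental_year):
    """Return (pre-instrumental rbar, instrumental rbar).

    Assumes all series are aligned with `years` over a common interval.
    """
    pre = [i for i, y in enumerate(years) if y < first_instrumental_year]
    post = [i for i, y in enumerate(years) if y >= first_instrumental_year]

    def subset(idx):
        return [[s[i] for i in idx] for s in series]

    return mean_pairwise_r(subset(pre)), mean_pairwise_r(subset(post))


# Made-up example: three series agree after 1900, but one wanders off before.
years = list(range(1800, 2000))
a = [math.sin(i) for i in range(200)]
b = [2.0 * v + 3.0 for v in a]
c = [math.cos(1.7 * i) if y < 1900 else math.sin(i)
     for i, y in enumerate(years)]

pre_rbar, post_rbar = rbar_by_period([a, b, c], years, 1900)
print(round(pre_rbar, 2), round(post_rbar, 2))
```

A large drop in coherence outside the instrumental period, as in this contrived example, would be the warning sign the commenter is asking about. Comparable values in both periods would suggest the common signal persists.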

If correlation fails outside the screening period then it must be said that the temperature correlation is failing also in that period – to the extent indicated. In this case the noise outside the screening period will have a tendency to cancel and we will see the instrumental record given undue emphasis (relative to the rest) over the screening period and hence claims about “unprecedented [whatever characterised the screening period]” become rather dubious.

(above true for decadal – higher resolution subject to regional differences)

[Response: It isn’t the case that proxies need to correlate with themselves – because of course the actual temperatures don’t either (assuming you are looking at proxies of local temperatures). For a local or regional reconstruction where you expect more coherence, this might be a more useful test. – gavin]

Gavin:

But isn’t it so that areal temperature reconstructions (e.g., for the NH) based on subsets of proxies (like tree rings vs. non- tree rings, or why not even-numbered vs. odd-numbered) each with acceptable geographical coverage, can be meaningfully intercompared?

Thank you Jim and others for your kind answers and paper references.

But yes the papers are not freely available except the D’Arrigo…

So I’ll start with this one.

[Response:No problem Romain. Contact address for a reprint for the Kingsolver article is: jgking@bio.unc.edu, and the Loehle article is here. Another good one is Way and Oren (2010).]

In addition to what Nick Stokes brings up above (107), the “screening fallacy”, according to McIntyre c.s., potentially causes a hockeystick shape out of random data.

McI links to a post by Lucia, who explains it quite well and without the insinuating framing that is so common at CA: http://rankexploits.com/musings/2009/tricking-yourself-into-cherry-picking/

In discussions over at my blog, Jeff Id gave a very succinct explanation of why this is ( http://ourchangingclimate.wordpress.com/2010/09/28/open-thread-2/#comment-8324 ):

“Temperature proxies are millennia long series of suspected but unknown temperature sensitivity among other things (noise). Other things include moisture, soil condition, CO2, weather pattern changes, disease and unexpected local unpredictable events. Proxies are things like tree growth rates, sediment rates/types, boreholes, isotope measures etc.

In an attempt to detect a temperature signal in noisy proxy data, today’s climatologists use math to choose data which most closely match the recently thermometer measured temperature (calibration range) 1850-present.

The series are scaled and/or eliminated according to their best match to measured temperature which has an upslope. The result of this sorting is a preferential selection of noise in the calibration range that matches the upslope, whereas the pre-calibration time has both temperature and unsorted noise. Unsorted noise naturally cancels randomly (the flat handle), sorted noise (the blade) is additive and will average to the signal sorted for.”

That to me was a helpful explanation of what their central criticism is about. It is the same as what McIntyre describes as “screening fallacy”, afaict.

I responded to Jeff (http://ourchangingclimate.wordpress.com/2010/09/28/open-thread-2/#comment-8330 ) that this is only problematic insofar as the correlation with temperature is indeed random, but much less so if there is a physical reason for a relation with temperature:

“To me it sounds entirely reasonable to weigh the proxies based on how well they reproduce the instrumental temperature record. You seem to assume a good correlation over this period is based on noise, i.e. coincidence? Or at least, that noise could have contributed to the good correlation, which is fair enough. (With sorting, you mean weighing, right? (giving it more weight, i.e. importance, in the final reconstruction) )

You argue that insofar as by coincidence the noise correlated with the measured temperature increase since 1850, that takes care of the upswing in the proxy reconstruction (the blade), whereas the pre-1850 proxies have random noise which causes the flat shaft.

Is that good paraphrasing of your position? [to which Jeff later replied “yes it is very close.”]

In that case the critical point is really, to what extent is a good correlation between measured temperature and proxies coincidence (i.e. not due to a causal relation between the proxy and temperature), and to what extent is it due to a real causal relationship? Insofar as the latter dominates, there shouldn’t be a problem.

This could -and I think has- been investigated by people studying the actual dynamics of the proxies involved, eg plant physiologists for tree proxies.

It also shows that in the end, finding statistical relation still has to rest on physics (or chem or biology) in order to be properly interpreted.”

In trying to understand where the other is coming from, this was -to me at least- a very useful discussion.
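For readers who want to see the effect Jeff describes, and the distinction Bart draws, here is a minimal sketch. Everything in it is an assumption made for illustration: the proxies are synthetic AR(1) red noise, the "instrumental record" is an idealised straight upslope, screening is a simple correlation threshold against that trend, and the function names are invented. It does not reproduce any published reconstruction method, and it leaves out within-site replication and verification testing.

```python
import numpy as np

def ar1_noise(rng, n_series, n_years, phi):
    """Red noise: each row is an independent AR(1) series with coefficient phi."""
    x = np.zeros((n_series, n_years))
    shocks = rng.standard_normal((n_series, n_years))
    x[:, 0] = shocks[:, 0]
    for t in range(1, n_years):
        x[:, t] = phi * x[:, t - 1] + shocks[:, t]
    return x

def screened_mean(proxies, target, n_cal, r_min):
    """Average only the proxies whose last n_cal years correlate with target above r_min."""
    cal = proxies[:, -n_cal:]
    r = np.array([np.corrcoef(row, target)[0, 1] for row in cal])
    keep = r > r_min
    return proxies[keep].mean(axis=0), int(keep.sum())

rng = np.random.default_rng(1)
n_years, n_cal = 1000, 150
target = np.linspace(0.0, 1.0, n_cal)            # idealised instrumental upslope

noise_only = ar1_noise(rng, 1000, n_years, phi=0.9)
signal = np.concatenate([np.zeros(n_years - n_cal), target])
with_signal = noise_only + 3.0 * signal          # proxies that really do respond

results = {}
for label, proxies in [("noise only", noise_only), ("noise + signal", with_signal)]:
    recon, n_kept = screened_mean(proxies, target, n_cal, r_min=0.3)
    # Mean of the last 30 years minus the mean of the pre-calibration period.
    rise = recon[-30:].mean() - recon[:-n_cal].mean()
    results[label] = (n_kept, rise)
    print(f"{label}: kept {n_kept} of {len(proxies)}, late rise = {rise:.2f}")
```

Comparing the two printed lines shows how much of the late rise comes from screening alone under these assumptions, and how the picture changes once the proxies carry a real response to the target.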

[Response:Bart, the problem is that these explanations are just fundamentally wrong, for a number of reasons. Let’s start with the last one you mentioned, because these “correlation by chance” arguments implicitly assume that we have no fundamental understanding (i.e. at the population, individual plant, tissue, cellular or molecular levels of analysis) of the effect of temperature on tree growth. This is so ludicrous that it hardly deserves mention; you have to close your eyes to the mountains of evidence at these levels of analysis to make those arguments, which is why I cited the Kingsolver article below.]

[Continued: Second, knowledge of tree ring sampling and statistics provides another set of arguments. Each site is sampled by, typically, 20 to 30 tree cores (and sometimes many more, as in Gergis et al here). A robust mean (to downweight extreme outliers) of each year’s set of sampled rings is then taken (after “detrending” out the age/size effect from each core), to give the site “chronology” (i.e. a single estimate of the climate parameter of interest, for each year). Even with extremely high levels of auto-correlation in each such resulting series (much higher than typically observed), what is the chance of getting this robust mean to correlate at say p = .05 or .10 over a typical 100 year instrumental calibration period, with the climate variable of interest? Remember, the red noise process that is hypothesized as the cause of the supposed spurious correlations has to be operating on each tree individually. And that is not even counting that such relationships, once computed, typically have to pass verification tests based on e.g. two 50 year intervals, in both directions.]

[Continued: So, to summarize this… Notwithstanding these two extremely strong and entirely independent arguments, and also not even including the previously mentioned fact that the typical ITRDB tree ring site has much higher inter-series correlations over the full chronology length than could ever possibly result by chance, we have people who are neither biologists nor tree ring experts making these kinds of nebulous, unclear, and just flat out unfounded arguments that fly under vague and concocted terms such as “pre-selection bias” and “screening fallacy”. What do they expect, that nobody’s going to know enough about these topics to counter these bogus arguments? Well, good luck on that.–Jim [edited for clarity]]
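The split-period verification Jim mentions (calibrate on one 50 year interval, verify on the other, then swap) can be sketched as follows. This is a simplified illustration on synthetic data with a single chronology and ordinary least squares; the function name and the numbers are assumptions, and real studies use more elaborate models and additional statistics. The reduction of error (RE) score used here measures skill relative to simply predicting the calibration-period mean.

```python
import numpy as np

def calibrate_and_verify(proxy, temp, cal, ver):
    """Fit temp ~ proxy on slice `cal`, then score the predictions on slice `ver`."""
    slope, intercept = np.polyfit(proxy[cal], temp[cal], 1)
    pred = intercept + slope * proxy[ver]
    r = np.corrcoef(pred, temp[ver])[0, 1]
    # Reduction of error: 1 means perfect, <= 0 means no better than the calibration mean.
    sse = np.sum((temp[ver] - pred) ** 2)
    sse_ref = np.sum((temp[ver] - temp[cal].mean()) ** 2)
    return r, 1.0 - sse / sse_ref

rng = np.random.default_rng(2)
n = 100
temp = 0.01 * np.arange(n) + 0.3 * rng.standard_normal(n)   # synthetic temperatures
proxy = 2.0 * temp + 0.5 * rng.standard_normal(n)           # synthetic site chronology

early, late = slice(0, 50), slice(50, 100)
scores = []
for name, cal, ver in [("early->late", early, late), ("late->early", late, early)]:
    r, re = calibrate_and_verify(proxy, temp, cal, ver)
    scores.append((r, re))
    print(f"{name}: verification r = {r:.2f}, RE = {re:.2f}")
```

A relationship that only fits by chance in the calibration half would be expected to score poorly on the withheld half, in at least one of the two directions.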

Jim — inline response needs paragraph breaks, please, for us older readers.

Good explanation there from you and from Bart.

[Response:Thanks Hank; have to break into separate “Responses” to make paragraphs, which I did.–Jim]

Good summary by Bart of the idea put forward by Jeff that the data could just happen to give a slope recently and a flatline earlier.

[Response:An important point I forgot to make relates to that: the selection of sites to include is not influenced by the multi-decadal trend during the calibration interval, it depends only on the yearly scale correlation probability; i.e. there is no selection bias for chronologies that have positive slopes during the instrumental period.–Jim]

Seems to me it’s like the argument about how likely it is that all the molecules that are warmer go to the north side of the room and all the cooler ones go to the south side — seems like it could happen. Understanding the probability, though, makes it something you aren’t likely to expect to see.

[Response:Right, could happen, but at such a low likelihood as to be meaningless.–Jim]

Jim,

You seem to argue the same as what I’m arguing: That there are physical reasons for a relationship between the variables to exist. I agree. But that doesn’t seem to be the crux of the argument with McIntyre. His argument is hardly ever physics based, but rather maths based, as it is in this case.

[Response:I agree Bart, that’s why I added the second argument based purely on statistical considerations, to counter that.–Jim]

If I understand his criticism correctly, it is that just from the methodology, one cannot distinguish whether the hockeystick shape is due to such a shape existing in the underlying data or due to the screening process. That means that even if one expects a physics-based relation, the fact that a relation arises is no proof of anything.

[Response:I think it’s better not to think in terms of “proof” Bart, but in terms of strength of evidence. The fact that we know that temperature affects radial growth, and the processes that lead to radial growth, lends evidence to the idea that when we see radial growth corresponding to temperature change, that there is indeed a relationship. To ignore such biophysical evidence is completely unwarranted.–Jim]

You say that the probability that the relation with the instrumental period is by chance, is very small. Ok, I accept that. As I accept that there are sound physical and biological reasons to expect such a relation.

But the methodology would still create a hockeystick shape out of random noise. Lucia’s example is quite convincing, as is Jeff’s explanation of why that would be. That’s a problem, because it lowers trust in the results.

[Response:Only very rarely in any realistic situation Bart. That’s the overall point. Think perhaps a little more about the replication issue mentioned.–Jim]

Bart’s comment and synopsis along w/Jim’s response could well serve as the basis for a fuller treatment as an RC posting. Might save an awful lot of continued grinding on the issue to have a fully developed popular treatment available? Half-life of the issue shows signs of being long (after all, it’s an isotope of something that’s been going on for a full 14 years).

> if one expects a physics-based relation,

> the fact

for “fact” read statistical likelihood

> that a relation arises

for “arises” read appears likely

> is no proof of anything

outside of mathematics proof is not possible

If you’re describing the problem correctly, what they’re saying is you can’t prove anything by doing science.

We agree on that. Proof isn’t an option, except in mathematics.

What’s the problem then?

Is someone unhappy about living life without proofs?

That, again outside of mathematics, is the only option available.

Statistics, which is quite a new branch of math, is giving some help here.

Hank Roberts wrote: “outside of mathematics proof is not possible”

Often repeated but not true.

Predictions from theory can be proved correct, or disproved, by empirical observation of the real world.

Without the ability to prove or disprove the predictions from theory through empirical observation, there is no such thing as science.

SA, I halfway agree with you.

A testable prediction may be proved wrong by observation.

Point taken about proof vs evidence. I entirely agree and make that same point frequently.

The problem is, that a hockeystick shape could have appeared because of A) the method used (in spite of the underlying data having no such shape)

[Response:Bart, it can’t, that’s the whole point. The argument is wrong; it’s not a realistic explanation for the observed data.–Jim]

or B) because the underlying data indeed have such a shape (which is then still emphasized by the method used).

People who trust that there is a physical relation and who trust the scientists will go for explanation B. Those who’re not so sure about one or both of the above will go for explanation A.

A more robust method would clearly be preferable.

DBostrom: seconded.

Jim, in comment #125, you said: “Even with extremely high levels of auto-correlation in each such resulting series (much higher than typically observed), what is the chance of getting this robust mean to correlate at say p = .05 or .10 over a typical 100 year instrumental calibration period, with the climate variable of interest?”

Can you point out any specific examples of data which had a correlation in the range of p=.05 to .10?

[Response:Of course…almost every reconstruction study uses a p value in that range. Indeed, point me to one that uses some higher value–Jim]

SecularAnimist — can you give examples of theories proven or disproven by empirical observation?

My normal SOP as a scientist would be to publish results with an error analysis to show that my experimental data made theory X (un)likely, very (un)likely etc. Look at particle physics for example and the hunt for the Higgs. When they find a peak with a 5-sigma deviation from the background they might say they’ve found it — but really they are saying it is extremely likely this is it. Most areas of science won’t reach statistical levels of certainty as high as this, but theories will still be accepted as the framework for understanding the data.
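To put a number on "extremely likely": a sigma level can be converted into a tail probability under a Gaussian assumption. The sketch below uses the one-sided convention, which is an assumption here; the helper name is made up for the example.

```python
import math

def one_sided_p(n_sigma):
    """Upper-tail probability of a standard normal beyond n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))

for n_sigma in (2, 3, 5):
    print(f"{n_sigma} sigma -> p = {one_sided_p(n_sigma):.2e}")
```

At 5 sigma the probability of a background fluctuation that large is roughly 3 in 10 million, which is small but still not zero.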

SecularAnimist, Hank Roberts & Bart Verheggen — Outside of mathematics (and deductive logic) there are no proofs. Proofs only exist in deductive logic, which is what mathematicians use. [So do physicists, economists, etc., but when acting in the role of establishing some axioms and deducing consequences. For a fine example, see

http://en.wikipedia.org/wiki/Arrow%27s_impossibility_theorem ]

In science we use inductive logic, making decisions based on the weight of the evidence. IMO the best (but not only) approach is Bayesian reasoning for which please read the highly informative and entertaining Probability Theory: The Logic of Science by the late E.T. Jaynes.

“Science is organized common sense where many a beautiful theory was killed by an ugly fact.” Thomas Henry Huxley

Gator wrote: “can you give examples of theories proven or disproven by empirical observation?”

That’s not what I said. I said that predictions from theory can be proven or disproven by empirical observation.

David B. Benson wrote: “Outside of mathematics (and deductive logic) there are no proofs.”

Einstein’s theory of relativity predicted that the path of light would be altered by the gravitational field of a star. Are you saying that it is impossible to prove that prediction correct or incorrect by empirical observation?

I understand that the point of a scientific theory is not to be “true” or “false” in the abstract mathematical sense, but to make correct predictions about the results of observation. Which requires the ability to prove whether or not those predictions are correct through empirical observation.

If there is no such thing as “proof” outside of the abstract mathematical sense, then there is no possibility of testing predictions against empirical observation to prove those predictions correct or incorrect, and there is no possibility of doing science.

SecularAnimist wrote “Einstein’s theory of relativity predicted that the path of light would be altered by the gravitational field of a star. Are you saying that it is impossible to prove that prediction correct or incorrect by empirical observation?”

Like Hank said above: you’re half right! You can prove the theory or prediction is incorrect with empirical study. But with successful empirical tests of a theory, all you’ve shown is that the predicted behavior holds in the instances you’ve tested it. Once you’ve tested it enough times and in enough different settings, you become more confident in the theory (possibly to the extent that you stop evaluating it for most purposes), but it’s still not proven in the formal sense of the word.

There’s the rub. When you talk to scientists, they’re likely to insist on the formal sense of proof. You are using proof in a much more casual way.

Cheers!

Secular Animist et al.

I *think* we’re running into that hoary old problem of how scientists use specific words, though I couldn’t *prove* it in the scientific sense – couldn’t resist the silly turn of speech.

Scientists, in order to communicate, use words to which they assign more precise meanings than we generally apply in day-to-day life. In this case, I think proof is overdefined but that’s just me. I ran into an example in Oreskes, I think, about how we couldn’t prove that the sun would rise, but we are quite sure it will, something along those lines. Or, say, if one sees someone on the road using a cell phone one gives them a wide berth, being convinced without high-level scientific proof of danger in distraction (not saying there isn’t statistical information, just that on the roads we use a much looser definition to avoid trouble).

I am not entirely convinced that this stringent and careful practice is serving us well in this day of ignorant attacks being taken as gospel by the public, but we’re stuck with it.

MartinJB put it better than I did. That’s the idea.

MartinJB wrote: “When you talk to scientists, they’re likely to insist on the formal sense of proof. You are using proof in a much more casual way.”

Which is exactly why scientists are generally so ineffective at communicating with the public about global warming and climate change.

John Q. Public asks, “Has it been proved that humanity’s emissions of CO2 are causing the Earth to heat up?”

The scientist answers, “Well, it’s really impossible to ‘prove’ anything in science, because, you see, in a strict formal sense of the word ‘proof’, you can only ‘prove’ things in abstract mathematics, so … blah, blah, blah …”

John Q. Public hears, “No.”

WRONG ANSWER.

The RIGHT answer is, simply, “Yes.”

SecularAnimist — The overwhelming weight of the evidence establishes beyond a reasonable doubt that humans have added more than enough carbon dioxide to cause the climate to warm.

Longer than just yes but absolutely factual without the slightest hedging. Take your pick.

MartinJB @138 — That is Karl Popper’s notion of falsifiability as the hallmark of science. Unfortunately it is actually not always applicable and towards the end of his days, Sir Karl came to agree that falsifiability is too strong a notion for all the sciences. I suspect that in parts of biology and the veterinary & medical sciences, for example, that criterion can only rarely be met.

Instead one uses a form of

http://en.wikipedia.org/wiki/Bayes_factor

to determine, for two hypotheses, H0 and H1 say (often called models in this context), whether or not there is enough evidence to distinguish the two. That is the hard part in that simply collecting more evidence may be out of the question. Even so, there is a certain subjective aspect to determining whether the weight of the evidence clearly supports one of the two hypotheses. There are various tests such as

http://en.wikipedia.org/wiki/Akaike_information_criterion

to assist in the determination. Nonetheless, determining whether treatment B is actually ‘better’ than treatment A remains overly difficult. I’ll just say it is much easier than it was 15 years ago.
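As a small illustration of comparing two hypotheses by an information criterion, here is a sketch using AIC for least-squares fits with Gaussian errors. The data are synthetic, the helper name is invented, and AIC is not itself a Bayes factor; it is shown only because it is the simpler of the two linked quantities to compute.

```python
import numpy as np

def gaussian_aic(y, fitted, n_params):
    """AIC for a least-squares fit with Gaussian errors, up to a constant shared by all models."""
    n = y.size
    rss = np.sum((y - fitted) ** 2)
    return n * np.log(rss / n) + 2 * n_params

rng = np.random.default_rng(3)
x = np.linspace(0.0, 1.0, 60)
y = 1.0 + 2.0 * x + 0.5 * rng.standard_normal(x.size)   # synthetic outcomes

h0 = np.full_like(y, y.mean())                  # H0: constant mean
h1 = np.polyval(np.polyfit(x, y, 1), x)         # H1: linear dependence on x
aic0 = gaussian_aic(y, h0, n_params=1)
aic1 = gaussian_aic(y, h1, n_params=2)
print(f"AIC(H0) = {aic0:.1f}, AIC(H1) = {aic1:.1f}, difference = {aic0 - aic1:.1f}")
```

The model with the lower AIC is preferred; how large a difference counts as decisive is where the subjective element comes in.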

Bart & Jim: you’re talking past each other.

Bart:

Steve’s (and Lucia’s, and JeffID’s) argument only appears convincing because they omit the other half of the “Mannian” method: the part where the final reconstruction is actually tested against new, unseen data that was not used in the calibration / selection process.

I believe the reason why Lucia and Jeff don’t “register” this bit is due to unfamiliarity with basic machine learning concepts. Mann is basically applying standard machine learning to climate reconstructions (that’s pretty much the defining contribution of his career). People with a “hard” engineering background have usually not been exposed to machine learning. So when they see the “training” bit, they go berserk and ignore the “testing” bit. Or they see it just as a distraction, rather than the entire point of the method. The punchline of the paper isn’t the reconstruction itself, it’s the p-value of the fit on the (unseen) test data. It’s a completely different approach, so people can get confused.

As for Steve McIntyre, well, I suppose he has his reasons.

Jim:

You’re talking about pure dendrochronology, where the situation is different. There, you can find matches so strong that the probability of getting them by chance vanishes (example), so you don’t really need machine learning methods – though of course you want to have some external validation, especially in the distant past where uniformity is far from guaranteed (independent ENSO reconstructions or volcanic eruptions provide important sanity checks).

The “vanishing p-value” argument does not apply to massive multiproxy reconstructions a la Mann (or Gergis), where the match of each individual proxy to temperature is relatively weak. That’s what Bart is talking about. In this case, simply selecting proxies based on match with historical records *would* be a mistake – if you did *just* that. Which Mann doesn’t (neither did Gergis, apparently).

[Response:I don’t agree with this assessment. I’ve already mentioned that any calibration relationship has to pass validation/verification tests–that idea’s been around a long time; it’s not a “completely different approach” but a fundamental tenet of model testing and evaluation, something you learn as an undergraduate student. And the point of these papers is indeed the reconstruction itself–that’s the whole point. As for the relationship between proxy and climate, that can be strong, weak or non-existent; you can’t make the sweeping statement you did. Whatever fundamental relationship exists between ring response and environment is what will emerge in the final estimate. And please, don’t refer this whole issue back to Mike; that’s the skeptic approach of trying to focus and blame everything on him. The approaches used in this field have long histories and many people and viewpoints are involved–Jim]

Hey SecAn,

while I totally get your point, it has its problems. If a scientist says “yes, it’s proven” when speaking to the public, someone who wants to cause trouble will say that “look, this scientist said it’s proven, but in his publication he has these error bars. Liar!!!!” I’ll take David Benson’s formulation.

Cheers!

David,

Popper’s falsifiability is certainly a powerful notion, and one that works very well where it works. However, where it works is for relatively simple logical systems. A hypothesis can certainly be proven false. However, a theory that has a long track record of successful predictions, but suddenly gets one wrong…not so much. It is much more likely that the theory will be “tweaked,” or that some previously unconsidered influence will be found to be important for that situation. That is why the whole notion of “falsifying” anthropogenic warming or evolution is just silly. The theories will certainly change–I doubt either Arrhenius or Darwin would recognize their respective creations. The probabilistic/Bayesian approach does come a lot closer, and when joined with the information theoretic approach is pretty powerful.

Hi David,

as a former biologist (I did fisheries growth and population modeling), I absolutely agree. The idea of reducing the complexity of a system sufficiently or collecting enough data to even approach falsifying a model is, well, amusing. Almost as amusing as when my current colleagues (I’m in finance now) speak with certainty about the conclusions of economic models….

Cheers!

> something you learn as an undergraduate student.

Since when, youngster? :-)

Agreeing with Jim to watch the ‘founding father’ myth — statistical methods change a lot still, it’s a very new field, and new ideas get passed around and used by one group and adopted by others if they’re useful and if the statisticians consulted agree it may be worth applying new methods to new kinds of data.

I’ve seen some mighty complicated statistical analysis plans.

For climate change, there are no groups, no test and control planets, no pre- and post-treatment comparisons.

But the data are often paleo and point source records for comparisons.

I think y’all could restate the comparisons in really simple terms, here or somewhere, and begin to teach the rest of us some statistics.

Tim Curtin (as one example) provides an ongoing object lesson in the perils of that approach.

Does John Q. Public respond to statements that sound like they were made by Dr. Sheldon Cooper, and do they run to the scientific lit so they can jibber jabber about error bars?

How about something like, we know way beyond a reasonable doubt that human beings are causing the climate to warm too quickly. We know this has the potential to turn very ugly. Etc.

Or “proof enough”…

You ought to turn on your TVs and listen to the language aimed at certain segments of John Q. Public for technical matters: The Doctors, Dr. Oz… If they misestimated their audience by much, they’d probably be off the air already. And yes I know, it’s depressing.