Guest commentary from Barry Bickmore (repost)

The Wall Street Journal has published yet another op-ed by 16 scientists and engineers, who even include a few climate scientists (!!!). Here is the editor’s note explaining the context.

Editor’s Note: The authors of the following letter, listed below, are also the signatories of “No Need to Panic About Global Warming,” an op-ed that appeared in the Journal on January 27. This letter responds to criticisms of the op-ed made by Kevin Trenberth and 37 others in a letter published Feb. 1, and by Robert Byer of the American Physical Society in a letter published Feb. 6.

A relative sent me the article, asking for my thoughts on it. Here’s what I said in response.

Hi [Name Removed],

I don’t have time to do a full reply, but I’ll take apart a few of their main points.

- The WSJ authors’ main point is that if the data doesn’t conform to predictions, the theory is “falsified”. They claim to show that global mean temperature data hasn’t conformed to climate model predictions, and so the models are falsified.

- The WSJ authors say that, although something like 97% of actively publishing climate scientists agree that humans are causing “significant” global warming, there really is a lot of disagreement about how much humans contribute to the total. The 97% figure comes from a 2009 study by Doran and Zimmerman.

- The WSJ authors further imply that the “scientific establishment” is out to quash any dissent. So even if almost all the papers about climate change go along with the consensus, maybe that’s because the Evil Empire is keeping out those droves of contrarian scientists that exist… somewhere.

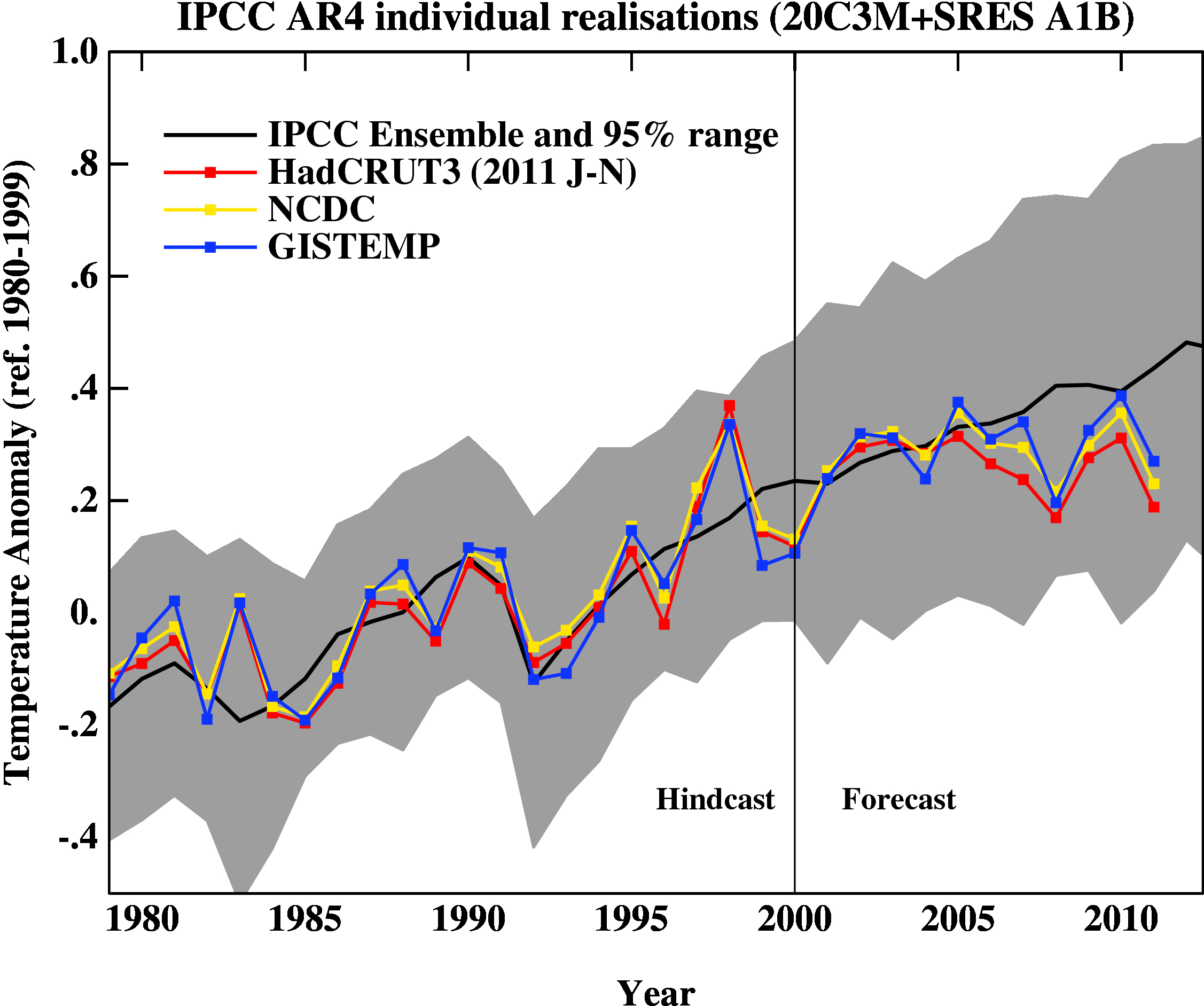

But let’s look at the graph. They have a temperature plot, which wiggles all over the place, and then they have 4 straight lines that are supposed to represent the model predictions. The line for the IPCC First Assessment Report is clearly way off, but back in 1990 the climate models didn’t include important things like ocean circulation, so that’s hardly surprising. The lines for the next 3 IPCC reports are very similar to one another, though. What the authors don’t tell you is that the lines they plot are really just the average long-term slopes of a bunch of different models. The individual models actually predict that the temperature will go up and down for a few years at a time, but the long-term slope (30 years or more) will be about what those straight lines say. Given that these lines are supposed to be average, long-term slopes, take a look at the temperature data and try to estimate whether the overall slope of the data is similar to the slopes of those three lines (from the 1995, 2001, and 2007 IPCC reports). If you were to calculate the slope of the data WITH error bars, the model predictions would very likely be in that range.
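To make “calculate the slope WITH error bars” concrete, here is a minimal sketch of an ordinary least-squares trend and its standard error in plain Python. The temperature values are made up for illustration (they are not the WSJ data or any real record), and the error estimate assumes independent residuals. Real temperature residuals are autocorrelated, which widens the true uncertainty, so treat this as a lower bound on the error bars.

```python
import math


def trend_with_error(years, temps):
    """Ordinary least-squares slope and its standard error.

    Assumes independent, identically distributed residuals. Real
    temperature residuals are autocorrelated, so the true uncertainty
    is larger than this naive estimate.
    """
    n = len(years)
    if n < 3:
        raise ValueError("need at least 3 points to estimate a slope error")
    x_mean = sum(years) / n
    y_mean = sum(temps) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Residual variance uses n - 2 degrees of freedom (slope and intercept fitted)
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    se = math.sqrt(sse / (n - 2) / sxx)
    return slope, se


if __name__ == "__main__":
    # Hypothetical anomalies (deg C): a 0.02 deg C/yr trend plus a
    # repeating wiggle standing in for year-to-year variability.
    years = list(range(1995, 2012))
    wiggle = [0.1, -0.05, 0.15, -0.1, 0.0, 0.05, -0.12]
    temps = [0.02 * (y - 1995) + wiggle[i % len(wiggle)] for i, y in enumerate(years)]
    slope, se = trend_with_error(years, temps)
    # Roughly a 95% range if residuals were independent and normal
    print(f"trend = {slope:.3f} +/- {2 * se:.3f} deg C/yr")
```

Comparing a model's long-term slope against the range slope ± 2 × SE, rather than against the bare fitted slope, is the kind of check the paragraph above describes.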

Comparison of the spread of actual IPCC projections (2007) with observations of annual mean temperatures

That brings up another point. All climate models include parameters that aren’t known precisely, so the model projections have to include that uncertainty to be meaningful. And yet, the WSJ authors don’t provide any error bars of any kind! The fact is that if they did so, you would clearly see that the global mean temperature has wiggled around inside those error bars, just like it was supposed to.
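A sketch of what showing the spread of projections looks like: for each year, take the mean and standard deviation across a set of model runs, and ask whether the observation falls inside mean ± 2 standard deviations. The runs and observations below are small hypothetical numbers, and the ±2σ envelope is an illustrative choice, not the IPCC's actual procedure.

```python
import statistics


def envelope_check(ensemble, observed, k=2.0):
    """For each time step, report whether the observation lies within the
    ensemble mean +/- k sample standard deviations.

    `ensemble` is a list of model runs, each a list of values on the same
    time steps as `observed` (needs at least two runs).
    """
    inside = []
    for t, obs in enumerate(observed):
        values = [run[t] for run in ensemble]
        center = statistics.mean(values)
        spread = statistics.stdev(values)
        inside.append(center - k * spread <= obs <= center + k * spread)
    return inside


if __name__ == "__main__":
    # Hypothetical anomalies (deg C) from three runs and one observed series
    runs = [[0.0, 0.1, 0.2], [0.1, 0.3, 0.2], [-0.1, 0.0, 0.4]]
    obs = [0.05, 0.5, 0.25]
    print(envelope_check(runs, obs))
```

Judging an observation against the whole envelope, rather than against the ensemble-mean line alone, is what makes the comparison meaningful.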

So before I go on, let me be blunt about these guys. They know about error bars. They know that it’s meaningless, in a “noisy” system like global climate, to compare projected long-term trends to just a few years of data. And yet, they did. Why? I’ll let you decide.

So they don’t like Doran and Zimmerman’s survey, and they would have liked more detailed questions. After all, D&Z asked respondents to say whether they thought humans were causing “significant” temperature change, and who’s to say what is “significant”? So is there no real consensus on the question of how much humans are contributing?

First, every single national/international scientific organization with expertise in this area, and every single national academy of science, has issued a statement saying that humans are causing significant global warming and that we ought to do something about it. So they are saying that the human contribution is “significant” enough that we need to worry about it and can/should do something about it. This could not happen unless there was a VERY strong majority of experts. Here is a nice graphic to illustrate this point (H/T Adam Siegel).

But what if these statements are suppressing significant minority views–say 20%. We could do a literature survey and see what percentage of papers published question the consensus. Naomi Oreskes (a prominent science historian) did this in 2004 (see also her WaPo opinion column), surveying a random sample of 928 papers that showed up in a standard database with the search phrase “global climate change” during 1993-2003. Some of the papers didn’t really address the consensus, but many did explicitly or implicitly support it. She didn’t find a single one that went against the consensus. Now, obviously there were some contrarian papers published during that period, but I’ve done some of my own not-very-careful work on this question (using different search terms), and I estimate that during 1993-2003, less than 1% of the peer-reviewed scientific literature on climate change was contrarian.
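The “say 20%” hypothetical can be checked with a little arithmetic. Treating the 928 papers as a random sample of independent draws (the assumption in the paragraph above), the chance of finding zero contrarian papers when a fraction p of the literature is contrarian is (1 - p)^928. The sketch below evaluates that, plus the largest fraction still consistent with zero hits at 95% confidence. The function names are hypothetical.

```python
def prob_zero_hits(n, p):
    """Probability of zero contrarian papers in a random sample of n,
    if a fraction p of the literature were contrarian (binomial model)."""
    return (1.0 - p) ** n


def upper_bound_fraction(n, confidence=0.95):
    """Largest contrarian fraction still consistent with seeing zero in n
    papers at the given confidence: solves (1 - p)**n = 1 - confidence."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n)


if __name__ == "__main__":
    n = 928
    print(f"P(0 of {n} | p = 20%) = {prob_zero_hits(n, 0.20):.1e}")
    print(f"95% upper bound on p = {upper_bound_fraction(n):.2%}")
```

For p = 0.2 the probability is around 10^-90, and the 95% upper bound comes out near 0.3%, close to the familiar “rule of three” value 3/n.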

Another study, published in the Proceedings of the National Academy of Sciences in 2010 (Anderegg et al., 2010), looked at the consensus question from a different angle. I’ll let you read it if you want.

Once again, the WSJ authors (at least the few that actually study climate for a living) know very well that they are a tiny minority. So why don’t they just admit that and try to convince people on the basis of evidence, rather than lack of consensus? Well, if their evidence is on par with the graph they produced, maybe their time is well spent trying to cloud the consensus issue.

The WSJ authors give a couple of examples, both of which are ridiculous, but I have personal experience with the Remote Sensing article by Spencer and Braswell, so I’ll address that one. The fact is that Spencer and Braswell published a paper in which they made statistical claims about the difference between some data sets without actually calculating error bars, which is a big no-no, and if they had done the statistics, it would have shown that their conclusions could not be statistically supported. They also said they had analyzed certain data, but then left out of their results the portion that just happened to completely undercut their main claims. This is serious, serious stuff, and it’s no wonder Wolfgang Wagner resigned from his editorship–not because of political pressure, but because he didn’t want his fledgling journal to get a reputation for publishing any nonsense anybody sends in. [Ed. See this discussion]
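For readers wondering what “calculating error bars” on a difference between data sets involves, here is a generic sketch: the difference of two sample means, its standard error, and a rough two-standard-error test. This is NOT a reconstruction of Spencer and Braswell's actual analysis; the numbers are hypothetical and the function names are illustrative.

```python
import math
import statistics


def difference_with_error(a, b):
    """Difference of two sample means and its standard error
    (independent samples, variances not assumed equal)."""
    diff = statistics.mean(a) - statistics.mean(b)
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return diff, se


def clearly_different(a, b, k=2.0):
    """Rule of thumb: treat the difference as meaningful only if it
    exceeds about k standard errors."""
    diff, se = difference_with_error(a, b)
    return abs(diff) > k * se


if __name__ == "__main__":
    # Hypothetical measurements from two data sets
    a = [1.0, 1.2, 0.8, 1.1, 0.9]
    b = [1.1, 1.3, 0.9, 1.2, 1.0]
    diff, se = difference_with_error(a, b)
    print(f"difference = {diff:.2f} +/- {se:.2f}; clearly different: {clearly_different(a, b)}")
```

Here the means differ by 0.1, but so does one standard error, so without the error bars the difference would look more meaningful than it is.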

The level of deception by the WSJ authors and others like them is absolutely astonishing to me.

Barry

PS. Here is a recent post at RealClimate that puts the nonsense about climate models being “falsified” in perspective. The fact is that they aren’t doing too badly, except that they severely UNDERestimate the Arctic sea ice melt rate.

Barry Bickmore says that the “individual models actually predict that the temperature will go up and down for a few years at a time, but the long-term slope (30 years or more) will be about what those straight lines say.” I’ve plotted annual temperature for 23 models on my site here, which shows that both parts of the statement (that predictions go up and down for a few years and that the 30-year trend is followed) are true.

What is surprising is the difference between the models. The average temperature of the ‘hottest’ model is 15.4 °C and of the ‘coolest’ is 12.4 °C. I’ve also plotted precipitation and there are similarly large differences. The ‘wettest’ model has an average precipitation of 1184 mm/year and the ‘driest’ a precipitation of 918 mm/year. These represent large differences in forcing.

I’ve got two questions:

1. Do the differences between the models matter; if not, why not?

2. Why does the IPCC almost completely ignore precipitation, which is as important as temperature for climate prediction?

While the criticism of the WSJ article is well justified, the comment on error bars raises an obvious problem: the readers of the WSJ are unlikely to have the faintest idea of what an error bar means. Ideally our institutions of higher learning would be dealing with this problem, but I fear they are falling short. When I checked the otherwise excellent text “Global Warming…” by Archer, I failed to find any discussion of error bars or statistics. Apparently the students of a prestigious institution of higher learning are not expected to understand such basic concepts as standard deviation and the normal (Gaussian) distribution. I did find one “bell” curve, but sad to say it described the expected production of oil by year. Surely it would have been more useful to show how the IPCC uses the bell curve to estimate the probability distribution for climate sensitivity. Might I suggest that the NSIDC data on Arctic ice coverage could provide a very nice illustration of how to determine trend curves (even if you can only find the error bars in the responses to the FAQs)? A minor peeve with the climate community: ppm vs. ppmv. Archer quotes CO2 concentrations in ppm while most of the literature uses ppmv. Students who have a hard time with standard deviations are unlikely to see that, assuming Avogadro was right, ppm and ppmv are equivalent. So could we please standardize on one unit or the other?
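On the ppm vs. ppmv point: for an ideal gas, Avogadro's law says equal volumes at the same temperature and pressure hold equal numbers of molecules, so a mole fraction and a volume fraction are the same number. A minimal check using the ideal gas law, with a hypothetical 390 ppm mixture (the temperature and pressure values are arbitrary assumptions):

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def partial_volume(moles, temperature_k, pressure_pa):
    """Volume a component would occupy on its own at the mixture's
    temperature and pressure (ideal gas law, V = nRT/P)."""
    return moles * R * temperature_k / pressure_pa


# Hypothetical sample: 1 mol of air containing 390 micromoles of CO2
total_mol = 1.0
co2_mol = 390e-6
T, P = 288.0, 101325.0  # arbitrary; they cancel in the ratio

ppm_by_moles = co2_mol / total_mol * 1e6
ppmv = partial_volume(co2_mol, T, P) / partial_volume(total_mol, T, P) * 1e6
print(f"{ppm_by_moles:.3f} ppm (mole fraction) vs {ppmv:.3f} ppmv")
print(math.isclose(ppm_by_moles, ppmv))  # True for an ideal gas
```

R, T and P cancel in the ratio, which is exactly why the two units agree; a mass fraction would give a different number, since a CO2 molecule is heavier than the average air molecule.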

Q:

If we assume the earth is absorbing more energy due to increased CO2 levels, then some parts of the earth must warm. Since the oceans are the largest potential heat carrier we expect them to carry most of this burden if we had an equilibrium state (do we ever expect this?). Since humans live on land we are interested in the air temperature a few feet off of the ground, but that doesn’t carry much heat.

So, an interesting question is how much temperature variability of this low atmosphere measurement can be due to heat just sloshing around the globe, from ocean to air and back, without increasing or decreasing the total heat content? What time constant would this have?

I suppose this is one type of “natural variability”. Another is variations in cloud cover, or moisture content of the atmosphere. What time constant does this have?

Why don’t we measure or estimate overall heat content of the globe instead of the temperature of something like the atmosphere 6 ft off the ground with a tiny heat capacity? Why is this the headline number? Is it a good proxy for heat content? Is there a less noisy measurement possible with better instrumentation?

CPV, I’ll take a stab at answering your questions. You have hit the nail on the head in noting that ocean temperatures are the ideal measurement of the earth’s heat content, and that air temperatures are less useful. Our problem is a pragmatic one: we have lots more air temperature data than ocean subsurface temperature data. This breaks down into several dimensions:

Historical: we have widespread reliable air temperature data going back more than a hundred years for many different locations on the planet; our ocean temperature data only goes back to (roughly) the 1950s, and even that is patchy.

Proxies: we have lots of proxies for air temperature in tree rings, isotopic ratios in ice, and various biological markers. The only proxy we have for water temperatures is coral, and that doesn’t go back very far, is limited to warm areas, and doesn’t go very deep.

Depth: Most of the ocean’s water is far below the surface and so participates in climate with a very long time constant. That time constant varies greatly with the degree of mixing, both lateral and vertical, but is on the order of decades.

Satellites: our best modern data comes from satellites, but they can measure only surface temperatures, which again are not good representatives of overall water temperatures.

The ‘sloshing around’ of heat that you mention is in fact observed in the various decadal oscillations. Obviously, these complicate the analysis.

Here’s another kicker: the greenhouse effect directly increases surface temperatures, and these increases are then communicated to the ocean depths by a slower, indirect process.

The ideal measurement, which is only now becoming feasible, would be obtained by a large set of globally distributed thermometers deep in the ocean. The weighted average of these readings would give us a stable, almost noiseless long-term measure of the overall heat content of the earth’s thermodynamic system. However, it would suffer from its disconnect from what we actually experience. After all, the “weather” deep in the ocean isn’t what we worry about; it’s the weather we experience on the surface.

BTW, this suggests one simpler measure of ocean heat content: average global sea level. Sea level rise comes from two components: melting ice and thermal expansion. That thermal expansion component is an excellent measure of overall ocean heat content. But disentangling it from the ice melt contribution is a bit tricky, and only recently have we started getting satellite data good enough for this task.

[Response:Actually radiation comes from the atmosphere, not from deep in the ocean, and the atmospheric temperature profile scales pretty well with changes in near-surface air temperature. So, the temperature that will warm to bring the Earth back into equilibrium is the surface temperature. The deep ocean is important only as a delay mechanism, determining how long it takes for the surface ocean to warm up. Measurements of the deep ocean heat storage are important since they give a direct indication of how much the oceans have delayed the approach to equilibrium, but the air temperature measurements are of prime importance since we live in the air, and it’s the air temperature changes that will get us most directly. –raypierre]
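raypierre's point that the deep ocean acts as a delay mechanism, and the lag discussed further down the thread, can be illustrated with a two-box energy-balance model: a shallow surface layer coupled to a deep ocean with a much larger heat capacity. This is a textbook-style toy, not a GCM, and every parameter value below is an illustrative assumption. It also holds the forcing constant, which is not the same thing as stopping emissions.

```python
def two_box_response(forcing=3.7, years=200, dt=0.1,
                     c_surface=8.0, c_deep=100.0,
                     feedback=1.2, exchange=0.7):
    """Integrate a two-box energy-balance model under constant forcing
    (forward Euler; dt should divide one year evenly).

    Units: forcing and fluxes in W m^-2, heat capacities in
    W yr m^-2 K^-1, feedback and exchange in W m^-2 K^-1.
    All default values are illustrative assumptions.
    Returns (year, surface_anomaly_K, deep_anomaly_K) once per year.
    """
    t_s = t_d = 0.0
    steps_per_year = round(1 / dt)
    out = []
    for step in range(1, years * steps_per_year + 1):
        # Surface layer: gains the forcing, loses heat to space (feedback)
        # and to the deep ocean (exchange)
        d_ts = (forcing - feedback * t_s - exchange * (t_s - t_d)) / c_surface
        # Deep ocean: slowly absorbs what the surface hands down
        d_td = exchange * (t_s - t_d) / c_deep
        t_s += d_ts * dt
        t_d += d_td * dt
        if step % steps_per_year == 0:
            out.append((step // steps_per_year, t_s, t_d))
    return out


if __name__ == "__main__":
    eq = 3.7 / 1.2  # equilibrium surface warming = forcing / feedback
    for year, ts, td in two_box_response():
        if year in (5, 20, 50, 100, 200):
            print(f"year {year:3d}: surface {ts:.2f} K, deep {td:.2f} K (equilibrium {eq:.2f} K)")
```

With these assumed numbers the surface gets most of the way to its fast-response value within a decade, then creeps toward the equilibrium F/λ over centuries as the deep ocean fills up; the deep box always trails the surface.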

CPV @103

Addressing your first couple of questions, Foster & Rahmstorf 2011 show what happens when you extract the impacts of ENSO, TSI & volcanic aerosols from the surface temperature record. The ‘sloshing heat’ of ENSO is by far the biggest cause of temperature wobbles. I add a link to a graph I maintain to demonstrate the point.

Foster & Rahmstorf 2011

http://sciences.blogs.liberation.fr/files/rahmstorf-vraies-temp%C3%A9ratures.pdf

A wobbly graph (two clicks down link).

https://1449103768648545175-a-1802744773732722657-s-sites.googlegroups.com/site/marclimategraphs/collection/G09.jpg?attachauth=ANoY7cohb1ILqnK1chNctcIAnvynh7pkWLTPzYtyWWzbseaIJ4EjD2OO0LO0tTkriGuEfbg1Rg8Zuywu8IRZo2KSB8Z5JMqXKMXKOvwGwusY8xkxj–sNxBLal4TX4EPJkFcyvXKtHWJNfRLDjsxDNyyXBWRkJPHqlHyqkeLlrIOogzVkDqXwiAbJ16dtOhmssKIyJtVoKQd51QmwhbDSdkm9hHD5V8vHg%3D%3D&attredirects=0

Thanks, Chris (#104)!

The measurement problem seems hard. I can see how it leads to disputes in interpretation, especially in the short term. I don’t think the critical need for much more sophisticated or widely deployed instrumentation gets heard out of this noisy debate. Is there a good summary somewhere of what instrumentation is in the pipeline?

Without any measurements, you can start with the baseline zero feedback approach, which seems plausible to me ceteris paribus and seems to match the rough magnitude of the long-term trend data, such as it is. It would then be up to feedback models to match some atmospheric observable or other better-quality data in order to be validated, IMO. Is there a scientific counterargument to this?

There aren’t many serious disputes over the basic temperature record; we have so many different sources that agree with each other that there is no question about the basic conclusion that the earth’s temperatures have been rising. The complexities arise from noise and lag. In the case of global temperatures, the signal to noise ratio is now high enough to reject any hypothesis that temperature changes we have seen in the last century are attributable to noise. But we always like to get every detail nailed down, and so much work continues in cleaning up the signal.

The lag issue arises from the fact that the oceans are lagging the atmosphere in their response to the greenhouse effect. This means that they are holding back temperature increases that we would be experiencing were there not so much water soaking up heat. To put it in stark terms: the temperatures we are now experiencing reflect the state of affairs several decades ago; were we to stop all carbon emissions today, the earth’s temperature would continue to rise for some time. That’s a truly pessimistic thought.

CPV:

And when it is stated, it’s frequently taken out of context and twisted by the denialist community. For instance, Kevin Trenberth’s “travesty” e-mail was about the lack of observational data and our need to improve monitoring, as was clear if you read the paper of his that he referred to, but has been spun as being something very different by denialist interpreters of “Climategate”.

Physics argues against this: it’s hard to get around the fact that warmer temperatures tend to lead to greater evaporation from the oceans and therefore higher absolute humidity, which makes water vapor a positive feedback.
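The water-vapor argument rests on the Clausius–Clapeyron relation: saturation vapor pressure rises steeply with temperature, so if relative humidity stays roughly constant (a common approximation), absolute humidity rises too. A quick check with a Magnus-type approximation; the coefficients are one commonly used set, assumed here rather than taken from the thread:

```python
import math


def saturation_vapor_pressure_hpa(temp_c):
    """Saturation vapor pressure over liquid water in hPa, from a
    Magnus-type approximation (assumed coefficients 6.1094, 17.625, 243.04)."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


if __name__ == "__main__":
    for t in (0.0, 15.0, 25.0):
        ratio = saturation_vapor_pressure_hpa(t + 1.0) / saturation_vapor_pressure_hpa(t)
        print(f"{t:4.1f} C: +1 K raises saturation vapor pressure by {100 * (ratio - 1):.1f}%")
```

Over this range the increase is roughly 6 to 7.5% per kelvin, which is why a warmer atmosphere tends to hold more water vapor.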

That’s not what the professionals seem to think. Do you know something they don’t ???

Thanks MA Rodger!

A lot of the trend arguments seem to revolve around one side or the other picking these peaks or valleys as a starting point for some calculation that fits an agenda!
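A minimal, deterministic illustration of the start-point problem: a series with a steady 0.02 °C/yr trend plus a single one-year warm spike (a stand-in for a big El Nino year). Everything here is hypothetical; real series have random noise rather than one clean spike.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return sxy / sxx


def trend_from(years, temps, start):
    """Slope fitted only to the points from `start` onward."""
    kept = [(y, t) for y, t in zip(years, temps) if y >= start]
    return ols_slope([y for y, _ in kept], [t for _, t in kept])


# Hypothetical series: a steady 0.02 deg C/yr trend plus a single
# +0.3 deg C spike in 1998 standing in for a strong El Nino year.
years = list(range(1980, 2010))
temps = [0.02 * (y - 1980) + (0.3 if y == 1998 else 0.0) for y in years]

for start in (1980, 1997, 1998, 1999):
    print(start, round(trend_from(years, temps, start), 4))
```

Starting the fit on the spike year cuts the apparent trend to about 0.0085 °C/yr, while starting one year later recovers the underlying 0.02 °C/yr, even though nothing about the underlying warming changed.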

I am having trouble sorting out several issues when it comes to OHC and how it relates to decadal oscillations versus OHC as an explanation for the radiation imbalance. As I understand it, with regard to the earth’s radiation imbalance, the ocean has great potential to alter its baseline heat content, which could help explain why there has not been surface warming in the last 10 years despite a net intake of radiation. On the other hand, there is a decadal trend whereby the atmosphere and the ocean trade heat content over roughly 10-year periods.

To what extent is the former true? How much of the heat that one might expect to affect surface temperature could be absorbed by the ocean? If that heat is not going to be returned to the surface, does that mean we should be less concerned about surface temperature warming and more concerned about sea level rise?

With regard to the decadal oscillations, have we just gone through a period in which heat has been traded into the ocean, and should we expect it to oscillate back to the surface in the next decade? It seems like there are a number of noise variables potentially working against the current trend in surface temperature warming. To what extent can we model El Nino/La Nina events or decadal oscillations (the noise) in future predictions?

Would it be possible to differentiate forcing events to surface temperature from forcing events to the earth’s temperature?

The key line in Doug Proctor’s comment (#51) is this:

“all you who hope to correct Man’s ways through CO2 control”

Based on that, you could pretty much predict how the rest of his comment will go.

Is there a good summary somewhere of what instrumentation is in the pipeline?

One place to look, find other places to look:

Argo

Forgot to mention: with regard to Argo and other programs of that type, the currently dominant party in the US House of Representatives is quite desperate to defund these activities. Good thing to remember when making choices as a voter.

> the baseline zero feedback … seems plausible to me ceteris paribus,

Translating:

“baseline no feedback seems plausible [assuming no feedbacks, no changes]”

Why assume what always happened in the past won’t happen now?

Scholar “climate feedbacks” — Results … about 1,580,000.

I am unclear as to the difference between: 1) due to higher than expected (but still within the uncertainty range) sea level rise we need to revisit the way we model future SLR and 2) due to the lower than expected (but still within the uncertainty range) surface temperature rise, we need to revisit the way we model future surface temperature change.

It seems that while it is OK for Stefan Rahmstorf to take this line of logic with regard to SLR, it is not OK when applied to surface temperature modelling.

http://www.sciencemag.org/content/315/5810/368.full

Now granted, SLR modelling is a lot more challenging and a lot less mature than surface temperature modelling and probably does need revision. I also understand the timelines are different. Nevertheless, it strikes me as inconsistent. It comes down to the confidence you have in the underlying physics and the methods used to create the model, not what the trendline is at any given moment. It is so easy to make a graph look like it is accelerating or decelerating (or not) by adjusting the smoothing and the starting point.

[Response: Any kind of mismatch is interesting from a research point of view – and going in, you have no idea whether the mismatch might cause you to rethink some (or all) of your initial assumptions. Each case needs to be investigated separately. Sometimes it’s quickly clear that something important was left out (dynamic ice sheet effects for SLR for instance, or aerosols as in some of the early work on transient predictions), other times, it is related to a mismatch in the forcing scenario (CFCs were discontinued in the 1990s – something not envisaged in the 1980s predictions, which makes them harder to evaluate), sometimes the observed data itself is wrong (e.g. the MSU ‘cooling’ error), other times it is the nature of the comparison that is at fault (e.g. comparing ensemble means to single realisations over short periods). It is never as simple as just comparing two trends and deciding. – gavin]

CPV, We have all the data we are willing to pay for. We don’t have more data because although climate change is the most important thing going on it gets only a small fraction of the research budget. Some potentially valuable data collection is just too much for some in Congress to allow. We don’t use our most reliable launch vehicles for climate satellites and some that have been launched did not survive. We measure surface temperatures a lot because that’s where we live.

Gavin,

It is very clear that sceptics are using the recent pause in warming to successfully attack the models, which of course we agree is very premature, as the sample period they refer to is not long enough to exclude short term natural variations.

But, just for the record, how many more continued years of ‘no warming’ would it take for you to start doubting the models? (Assuming no large volcanic eruptions.) Personally, I would be concerned if the trend remained flat until 2020 (of course I don’t expect this to happen). But putting a timeframe on this might help squash the sceptics’ argument.

[Response: Well it might if it was a serious argument, but since it isn’t, it won’t. But I’ve made such statements in the past – here – here etc. – and I’m happy to be held to those ~4 year old opinions. – gavin]

Pete: We don’t use our most reliable launch vehicles for climate satellites…

Recycled missile boosters with a positively wretched (25%) “success” rate* used when data necessary to assure safety for millions of people is in play, human-rated vehicles absolutely mandatory when one or a handful of lives are at risk.

Human nature is sometimes very difficult to figure out.

*Although to be fair the last two climate research satellites going to their graves in the ocean were killed by el-cheapo aerodynamic fairings. Still, Orbital describes these botches as “cost-effective”; how does the definition of “cost-effective” include “does not actually work?”

Hank Roberts in #99 suggested that I look up a blog post presenting Mr. Proctor’s ideas on the effects of clouds on climate. I found it readily enough and read Mr. Proctor’s piece. It was embarrassingly bad, riddled with pedestrian blunders. I posted a criticism of just the first paragraph, which was rejected by the moderators on the grounds that it constituted libel. Surprised at their response, I rewrote my post to remove all references to Mr. Proctor, relying heavily on passive tense and references to quotations from his piece. This too was rejected on the grounds that it was “attacking”. Ironically enough, the blog declares that its only rule is that there are no rules.

Except, of course, for the rule banning critical commentary. ;-) I should have known better — it’s a denialist website.

Ack! “passive VOICE”, not “passive TENSE”. I’m covered with shame!

dhogaza:

Last 100 yrs temperature rise is 1.2 C, CO2 goes from 280-390 ppm. That fits an equation like 1.9 C * ln(CO2(2)/CO2(1))/ln(2), which I think is pretty close to zero feedback estimates. If this is not a reasonable way of looking at the record, why not?

[Response: It’s not a reasonable way to look at it because (a) you haven’t allowed for aerosol cooling effects (or for that matter any non-CO2 effects), and (b) you haven’t allowed for ocean heat uptake, which has caused the warming to lag the equilibrium value. Before going off half cocked, you should read some of the extensive literature on estimating climate sensitivity from data. Knutti and Hegerl is a good place to start. –raypierre]
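Evaluating CPV's expression directly is a useful sanity check. As a sketch, using a plain logarithmic response with no aerosols, no other forcings and no ocean lag (exactly the simplifications the response objects to):

```python
import math


def warming_from_co2(sensitivity_per_doubling, c_start, c_end):
    """Warming from a purely logarithmic CO2 response:
    dT = S * ln(c_end / c_start) / ln(2).
    Ignores aerosols, other forcings and ocean heat uptake."""
    return sensitivity_per_doubling * math.log(c_end / c_start) / math.log(2)


doublings = math.log(390 / 280) / math.log(2)  # about 0.48 of a doubling
for s in (1.0, 1.9, 3.0):
    print(f"S = {s:.1f} C per doubling -> {warming_from_co2(s, 280, 390):.2f} C")
print(f"S needed to get 1.2 C: {1.2 / doublings:.2f} C per doubling")
```

As written, S = 1.9 °C per doubling gives about 0.91 °C for 280 to 390 ppm, and S = 1 °C (the no-feedback figure cited later in the thread) gives about 0.48 °C; matching 1.2 °C with this formula alone would need about 2.5 °C per doubling.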

dhogaza:

That’s not an argument. That’s a political statement of some sort. No credit.

The 1.2 C rise over the past 100 yrs matches a simple no-feedback logarithmic model pretty well. Given the uncertainties in the measurements, I’d be inclined to use the longest data set we have and take guidance from that.

[Response: No it doesn’t – with such a scenario you wouldn’t see anything like as much ocean heat content change. – gavin]

Is there a good summary somewhere of what instrumentation is in the pipeline?

A rhetorical question, apparently, and yet another red flag missed.

In their first op-ed the sixteen made a point which seemed quite plausible at first sight.

where ‘this’ refers to the CO2 fertiliser effect. But is it true that the major foods evolved so long ago? It would be absurd for me to try to become an expert in about two minutes of searching on Google; furthermore, using Google involves some typical problems, such as the need to navigate around this sort of web page:

“Liberals, how does evolution explain the Irish potato famine?”

so I hope others will help me. Potatoes seem to be quite simple: it appears that they are supposed to have emerged in Peru about 7,000 years ago, when CO2 was far lower than claimed in the quote. So I now have doubts about the other crops. What about the emergence of the modern forms of rice, maize, wheat and sorghum? I did find this

http://www.pnas.org/content/101/26/9903.long

which I have not digested yet.

Incidentally I hope creationists will accept my assurance that I did not originally set out to open up a split between them and these authors. I am more concerned with ensuring that all sixteen of them have checked their article for accuracy. That is supposed to be an advantage of multiple authorship.

CPV:

The no-feedback sensitivity to CO2 doubling alone is about 1C, so with a zero feedback scenario we wouldn’t expect to get 1C warming until CO2 rises from the late 1800s value of 280 to 560. Yet here we are with a 1.2C rise with much less than a doubling of CO2.

1.9C is about midway between the zero feedback and IPCC best estimates for sensitivity. And that ~3C estimate is for *equilibrium* sensitivity; we’ve not seen all of the warming that is expected for the rise we’ve seen thus far. And there are other non-feedback factors involved: higher TSI in the first half of the 19th century, solar minimum the last decade, increased aerosol output due to human industrial activity, etc. These factors (and more) need to be teased out rather than waved away as though CO2 and feedbacks are the *only* factors that affect climate.

CPV,

Your argument assumes we know the total radiative forcing with good enough accuracy and that the system has re-established radiative equilibrium. Neither is true.

deconvoluter, plants and animals have been evolving for hundreds of millions of years. So what?

http://www.washington.edu/news/articles/models-underestimate-future-temperature-variability-food-security-at-risk

Ocean acidification? Don’t worry, evolution has been going on for a long time. Don’t worry, sea life has been evolving for a long time. Yes, hasn’t it.

deconvoluter #124 asks about the statement that plants and animals evolved when CO2 concentrations were about ten times higher than they are today. There are indeed some problems with this statement. First, it appears to confuse photosynthesizing creatures, which arose more than 3 billion years ago, with non-photosynthesizing creatures. But let’s give them the benefit of the doubt and focus exclusively on photosynthesizing creatures. Yes, the early atmosphere in which they evolved was quite different from the modern atmosphere. In the first place, it had no oxygen. The oxygen in our atmosphere came from all the photosynthesis. I’m not sure that they would prefer such an atmosphere. Their basic point, that higher concentrations of CO2 promote faster growth rates of plants, is solid. What they don’t mention is that an earth with lots more CO2 in its atmosphere would be a very different place than today’s earth. Most of its surface would enjoy tropical conditions and the sea level would be quite a bit higher. I’m tempted to suggest to them a temporal variation on the old line “love it or leave it”: if you want to live in the Carboniferous Period, build a time machine and go there! ;-)

Seeing how the WSJ has been so wrong about the subject it claims to be expert in (economics, high inflation as a result of the stimulus, etc.), it’s not surprising they are also clueless about climatology.

I am not a scientist, but have been following this as closely as I can. My understanding of what Dr. Hansen at GISS has said is that La Nina and the solar minimum during the past 3 years have prevented temperatures from rising as much as during an El Nino and solar maximum, both of which we will probably see during the next few years. Did the WSJ take Nino/Nina and solar cycle into account?

CPV, were the temperature record of the past 100 years all we had to explain, and were the system we are dealing with not a complex, interconnected one with various delays and couplings, your suggestion might be a reasonable one. Unfortunately, that doesn’t look much like Earth, does it? It turns out that we cannot explain a broad range of phenomena–from climatic response to volcanic eruptions to temperature swings from glacial to interglacial to… unless the climatic system has significant positive feedback.

What is more, we know that strong positive feedbacks exist in the system, and we know that heat is exchanged with the deep ocean. You need to look at ALL the evidence and then look at the successful predictions by the theory that best explains ALL the evidence. You will notice that the so-called skeptical scientists are not proposing alternatives.

I am still stunned when I read some commenters claiming no warming, a stall in global temperatures, or even a cooling since 1998. We must present things differently, so that it becomes very difficult for the gullible or ignorant to make such claims. An alternative “there is no lull” presentation is badly needed: a standard reference link that would have to be shown wrong before anyone could claim, in effect, that ice melts more when temperatures cool.

I must remind everyone that we live at the bottom of a hydrostatic atmosphere, where even cooling far aloft has an impact on us bottom dwellers. An apparent cooling at the surface may not match what is going on higher up. Everything in our atmosphere shifts constantly, seeking equilibrium. The surface temperature record is only one of many ways of measuring warming. Claims of true cooling require strong evidence, and the best metrics are not thoroughly measured: 3D ocean temperatures, small glaciers, the true surface temperature for melting sea ice (with its shifting sea-water thermal signatures), the effects of wind sublimation. All of these are important, yet I read again and again that it hasn’t warmed in ten years. Time to change gears and focus on making the case unassailable.

Previously in this thread somebody asked for leads on information about gathering data on the heat content of the ocean-atmosphere system. There’s no such thing as bad ink: even if the original question was simply part of the theatrical setting for subsequent drama, delving into the UCSD Argo site turns up detailed Argo meeting reports. This is quite fascinating stuff.

Reading the Argo meeting reports makes contemplated vandalism by the party in control of the US House even more irritating to imagine, sort of like picturing malicious adolescents equipped with spray paint being let loose in a museum of fine art.

You might also consider whether all sixteen had suitable skills to apply those checks – and this might not be entirely obvious from the descriptions given by the WSJ.

(I’d say Burt Rutan has ruled himself out on that measure over at Scholars & Rogues although he continues to imply otherwise – e.g. most recently by posting a link to a rehashed piece by David Evans claiming it answers critiques of his claims. Evans’ piece claims amongst other things that climate sensitivity is about 0.6 C per doubling.

Not sure about the other fifteen.)

An aside, but addressing the general issue raised in the 1st paragraph of the WSJ16’s response, “expertise is important … in any matter of importance to humans or our environment.”

Revkin headlined a post on his blog about the original WSJ16 op-ed of Jan 27th with “…[They] Appear to Flunk Climate Economics.” He solicited a reaction from economist William Nordhaus, whom the WSJ16 had explicitly cited in their January op-ed as the source for their statement “… nearly the highest benefit-to-cost ratio is achieved for a policy that allows 50 more years of economic growth unimpeded by greenhouse gas controls …. And it is likely that more CO2 and the modest warming that may come with it will be an overall benefit to the planet.”

Nordhaus replied, “The piece completely misrepresented my work …. I can only assume they [are] either completely ignorant of the economics on the issue or are willfully misstating my findings.”

This time around, they confine themselves to two paragraphs on economics and abandon any effort at claiming support of an “economist”, instead relying on variations of the self-evident, to them, argument that if atmospheric CO2 abatement were economically “efficient”, it would be happening now through the agency of Adam Smith’s farsighted fairies.

Speaking of the skills of some of the other fifteen, this:

and this:

may prove useful.

And this also surveys the climate change research credentials of the 16 (within a larger article also looking at the credibility of some of their claims and more…).

Perhaps the owner of the WSJ needs to be pressured into applying his new ethical standards for the Sun to the WSJ as well.

Which journalistic standards and ethics were violated by the WSJ in the Oped in question?

The Wall Street Journal is an American English-language international daily newspaper. It is published in New York City by Dow Jones & Company, a division of News Corporation, along with the Asian and European editions of the Journal.

Sun on Sunday hits news stands with ethics pledge

Rupert Murdoch’s Sun on Sunday tabloid hit news stands on Sunday, replacing the defunct News of the World with a pledge to meet high ethical standards after a “challenging” chapter in its history.

Inside, an editorial titled “A new Sun rises today” said the newspaper was appointing a so-called Readers’ Champion to deal with complaints and correct errors, while also vowing that its journalists would be ethical.

“Our journalists must abide by the Press Complaints Commission’s editors code, the industry standard for ethical behaviour, and the News Corporation standards of business conduct,” the editorial read.

102 Dave Griffiths: “Global Warming” by Archer is intended for non-science majors. I agree that everybody should be required to take the Engineering and Science Core Curriculum plus a laboratory course in probability and statistics. Push for it, but don’t count on it happening any time soon. The innumerate humanitologists and people in general do not have that much math IQ. Maybe when we become the Borg…. There is a statistics book that is suitable for third graders. The title is “Probability” and the author is Jeanne Hendrix or Bendix or something like that. My daughter liked it so much that she made a histogram when she was 8 years old. Then the public school teachers convinced her that she didn’t like math.

Actually, it’s arguably worse than that. In the article text it claims:

It provides no citation for the claim, although it does have a footnote at that point – but it says, in entirety:

So Evans argues – without evidence – that a doubling of CO2 may lead to as little as 0.275 degrees C warming.

And then there’s the absolute howler of a deliberately deceptive claim that:

This, from a guy who is touted as having a Stanford Ph.D. in Electrical Engineering, a discipline about which the article claims:

All of this means that he must have studied automatic control theory (likely to an advanced level) and knows that positive feedbacks with gain below unity are stable – and should know that net feedback in the climate system is considered to have a gain of well below 1.
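To make the control-theory point concrete, here is a minimal Python sketch, assuming an idealised linear loop with no delays; the function name `feedback_response` and the unit initial response are illustrative choices, not anything from Evans’ article. Each pass around the loop feeds back `loop_gain` times the previous increment, so the cumulative response is a geometric series: it settles to a finite value when the gain is below 1 and grows without bound only when the gain reaches or exceeds 1.

```python
def feedback_response(initial_response: float, loop_gain: float, rounds: int) -> list[float]:
    """Cumulative response after each pass around a simple linear feedback loop.

    The first pass contributes initial_response; every later pass contributes
    loop_gain times the previous increment, so the running total is the partial
    sum initial_response * (1 + f + f**2 + ...).
    """
    totals = []
    total = 0.0
    increment = initial_response
    for _ in range(rounds):
        total += increment
        totals.append(total)
        increment *= loop_gain  # what gets fed back into the next pass
    return totals


if __name__ == "__main__":
    # Gains below 1 converge (positive but stable); 1.0 and above run away.
    for gain in (0.5, 2.0 / 3.0, 1.0, 1.2):
        totals = feedback_response(1.0, gain, 50)
        print(f"loop gain {gain:.3f}: after 10 passes {totals[9]:10.3f}, "
              f"after 50 passes {totals[-1]:10.3f}")
```

With a gain of 0.5 the total levels off near 2 and with 2/3 near 3; at 1.0 it climbs by the same amount every pass, and at 1.2 it grows exponentially. Positive feedback alone does not imply a runaway.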

And yet he has the unmitigated gall to argue that:

I think Stanford might want their Ph.D. back.

Doug posits “CAGW narratives and solar-dominant theories” and Dave123 asks “What solar dominant theories?”

I think Doug means the Svensmark/Lindzen theory where less solar activity causes more GCRs which cause more clouds and that causes cooling which counteracts trace gas CO2 forcing which is saturated or minor or something and so the increasing temperatures aren’t the result of fossil fuel combustion.

Which means that we don’t have to change anything about our fossil-fueled society, since such changes are, he alleges, “considered too harmful.”

Until the fossil fuel runs out.

At which point society will have to convert to renewable energy sources. Hubbert predicted peak oil and increasing fuel prices back in ’56, at about the same time Gilbert Plass was expanding upon Callendar’s work on global warming, infrared radiation and anthropogenic carbon dioxide, which was in turn based on Svante Arrhenius’ 1896 work. All part of the socialist econazi conspiratorial CAGW hoax, no doubt.

“I understand why Barack Obama wants to send every kid to college, because of their indoctrination mills, absolutely,” Rick Santorum said in an interview with Glenn Beck. “The indoctrination that is going on at the university level is a harm to our country.” I’m surprised he didn’t note that reality has a liberal bias, hence the need for conservative Republicans “to create our own reality”.

Liberals are the most educated group with 49% being college graduates compared to an average of 26.5% among all the conservative groups.

College graduates (59%) and those with some college experience (55%) are more likely than those with less education to view the Republican Party as more extreme.

According to Pew Research, 70% of college grads view global warming as a somewhat or very serious problem; 58% of Republicans think GW is not a problem (which probably boils down to “not MY problem”).

deconvoluter @124

While worries about the combined impact of higher CO2 and climate change on most plants are often dismissed with the silly ‘they-evolved-when-CO2-was-much-much-higher‘ argument or the ‘plant-food‘ argument, the case of C4 grasses points out the silliness, as it directly contradicts their statements.

While grasses as a whole began to thrive under ‘low’ CO2, C4 grasses appear to have actually evolved under such conditions. There are relatively few species but they account for 30% of terrestrial carbon fixation, are predominantly tropical & include major food crops maize, sugar cane, sorghum, and millet.

http://en.wikipedia.org/wiki/C4_carbon_fixation

(I should clarify that I’m talking about “loop gain” being below unity to amplify without triggering runaway feedback; Evans talks first about the mainstream conclusion that net feedbacks result in a net amplification which is finite and positive (e.g. ~3x) and therefore indicates positive feedback with a loop gain < 1, e.g. ~2/3 if I recall my geometric series correctly.)
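The recollection in the parenthetical checks out: with a loop gain f below 1, the net amplification is the geometric-series sum 1 + f + f² + … = 1/(1 − f), so an amplification of about 3 corresponds to f = 1 − 1/3 = 2/3. A small Python sketch of the conversion in both directions (the function names are hypothetical, chosen only for illustration):

```python
def amplification_from_loop_gain(loop_gain: float) -> float:
    """Closed-loop amplification 1 / (1 - f).

    For |f| < 1 this equals the geometric series 1 + f + f**2 + ...;
    for f >= 1 there is no finite steady amplification (runaway).
    """
    if loop_gain >= 1.0:
        raise ValueError("no finite amplification for loop gain >= 1")
    return 1.0 / (1.0 - loop_gain)


def loop_gain_from_amplification(amplification: float) -> float:
    """Invert A = 1 / (1 - f) to get f = 1 - 1 / A.

    A > 1 means net positive feedback with 0 < f < 1;
    0 < A < 1 means net negative feedback (f < 0).
    """
    if amplification <= 0.0:
        raise ValueError("amplification must be positive")
    return 1.0 - 1.0 / amplification


if __name__ == "__main__":
    print(loop_gain_from_amplification(3.0))         # roughly 2/3
    print(amplification_from_loop_gain(2.0 / 3.0))   # roughly 3
```

The key design point is the guard at f ≥ 1: a finite, positive amplification such as ~3x can only come from a loop gain below unity, which is exactly the regime that does not run away.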

re: my #124 (after some sleep)

I still think that the sixteen’s attempt to invoke evolution as an argument that food production will be assisted by increasing CO2 is highly non-rigorous. Contrarians are all too quick to drop their usual argument that it is impossible to predict anything because there are too many variables.

[I am open to correction in the following]

Very roughly, natural selection tends to optimise numbers, whereas artificial selection following domestication optimised what humans require, such as food and protein. As just one example, it appears that most of the grasses used for food emerged during the Holocene and were then further modified by artificial selection, and both stages occurred at relatively low levels of CO2. By the way, I am aware of the CO2 fertilisation effect.

How about Fox News?

Anyway, the trouble is that these standards refer to a promise not to bribe the police for private information or to continue with phone hacking on an industrial scale; they may not extend to telling the truth about science and health.

I saw this recently on Paul Krugman’s blog. (Of course, mentioning that will send certain folks into the denial sphere: anyone who reads him is a xxxx and thus should not be listened to.) But the comment was very valuable for understanding some of what is going on in the very perilous situation we are in, where some people who ought to be part of finding solutions and mitigations don’t even seem able to face the evidence in front of them.

From Krugman’s blog – “Digby sends us to Chris Mooney on how conservatives become less willing to look at the facts, more committed to the views of their tribe, as they become better-educated:

For Republicans, having a college degree didn’t appear to make one any more open to what scientists have to say. On the contrary, better-educated Republicans were more skeptical of modern climate science than their less educated brethren. Only 19 percent of college-educated Republicans agreed that the planet is warming due to human actions, versus 31 percent of non-college-educated Republicans.

…

But it’s not just global warming where the “smart idiot” effect occurs. It also emerges on nonscientific but factually contested issues, like the claim that President Obama is a Muslim. Belief in this falsehood actually increased more among better-educated Republicans from 2009 to 2010 than it did among less-educated Republicans, according to research by George Washington University political scientist John Sides.

The same effect has also been captured in relation to the myth that the healthcare reform bill empowered government “death panels.” According to research by Dartmouth political scientist Brendan Nyhan, Republicans who thought they knew more about the Obama healthcare plan were “paradoxically more likely to endorse the misperception than those who did not.””

If being educated and supposedly being versed in what a bill contained provided little to no protection from taking a really weird stance, then how can we address the problem? Note that these stances make the person seem less educated and informed, and make their criticisms less likely to be heard, because it then becomes hard to take them seriously.

So education, facts, and what you would think is a need to appear credible have little impact on getting these folks to change their minds. What is left?

By the way, I don’t see this as an indictment of conservatives; I am sure the same thing would be found if certain other groups were checked. The issue is how to reach people and get them to see the problems and the facts.

@97…I realize this is not science, but the “the cost is prohibitive, therefore do nothing” argument has a lot of holes in it. There are a number of rather cheap things that we could be doing that could have significant impacts on ultimate costs. As a couple of examples (which are occurring in some places already), zoning changes can be made to stop building in what will become flood zones, and subsidized property insurance for zones with a high likelihood of flooding could be stopped. These 2 alone could save many, many billions worldwide and cost very little up front, except to property speculators and truly committed denier types! There are any number of additional relatively cheap things we could experiment with right now. In my opinion, not doing so actually risks “prohibitive costs” far more than doing so. More sophisticated economic modeling could probably identify specifics in fairly short order.

Lotharsson

The claim by Evans is ridiculous. As it happens, I just did a post discussing why positive feedbacks don’t necessarily lead to a runaway effect (using some of the CCM radiation code from Ray Pierrehumbert’s online supplement to his book). It’s even possible for the feedback factor to exceed unity locally and bifurcate into some new climate regime that is not necessarily a runaway. Perhaps he should have bothered to actually work out the problem.
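As a rough illustration of the point about local feedback factors and bifurcation, here is a toy zero-dimensional energy-balance model in Python. It is not the CCM radiation code mentioned above; the albedo curve, effective emissivity, and 265 K transition are all assumed values picked only to make the behaviour visible. With an ice-albedo feedback the model has three equilibria. In the middle one the albedo feedback outpaces the Planck restoring term (roughly what a local feedback factor above unity means), so that state is unstable, yet nothing runs away: a nudge sends the system into one of the two stable regimes on either side.

```python
import math

SOLAR_CONSTANT = 1361.0  # W m^-2, present-day total solar irradiance
SIGMA = 5.67e-8          # Stefan-Boltzmann constant, W m^-2 K^-4
EMISSIVITY = 0.61        # assumed effective emissivity (crude stand-in for the greenhouse effect)


def albedo(temp_k: float) -> float:
    """Assumed smooth switch from an icy planet (0.6) to an ice-free one (0.3) around 265 K."""
    return 0.45 - 0.15 * math.tanh((temp_k - 265.0) / 5.0)


def net_flux(temp_k: float) -> float:
    """Absorbed sunlight minus emitted infrared, in W m^-2 (positive means warming)."""
    absorbed = SOLAR_CONSTANT * (1.0 - albedo(temp_k)) / 4.0
    emitted = EMISSIVITY * SIGMA * temp_k ** 4
    return absorbed - emitted


def find_equilibria(t_min=200.0, t_max=350.0, step=0.5, tol=1e-6):
    """Scan for sign changes of net_flux, refine each by bisection, and classify stability."""
    results = []
    n_steps = int(round((t_max - t_min) / step))
    for i in range(n_steps):
        lo = t_min + i * step
        hi = lo + step
        f_lo, f_hi = net_flux(lo), net_flux(hi)
        if f_lo * f_hi > 0:
            continue  # no crossing in this interval
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            f_mid = net_flux(mid)
            if f_lo * f_mid <= 0:
                hi = mid
            else:
                lo, f_lo = mid, f_mid
        root = 0.5 * (lo + hi)
        # Stable if a small warming produces net cooling (d(net_flux)/dT < 0).
        slope = (net_flux(root + 0.01) - net_flux(root - 0.01)) / 0.02
        results.append((root, slope < 0))
    return results


if __name__ == "__main__":
    for temp, stable in find_equilibria():
        print(f"{temp:6.1f} K  {'stable' if stable else 'unstable'}")
```

With these assumed numbers the scan should report a stable cold state near 251 K, an unstable state near 263 K, and a stable warm state near 288 K. The exact values mean nothing physically; the point is only the structure of two stable regimes separated by an unstable one.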

Yep, reliable prediction in the face of uncertainty for me, but not for thee…

This observation needs a memorable name and needs to be pointed out over and over and over again.

“This observation needs a memorable name and needs to be pointed out over and over and over again.”

Hard to think of something pithy, how about

“the uncertainty flip”? Or “the uncertainty U-turn”?

Maybe “Uncertainty U-ey?”

I think it is something broader than just prediction: YOU can know nothing because of all the uncertainties, but I’M certain it’s X, or certain that it can’t be what you say…


So someone is claiming in writing that positive feedbacks must lead to a runaway climate? That’s just plain ordinary dumb.

Link for Donna’s comment:

http://krugman.blogs.nytimes.com/2012/02/27/this-tribal-nation/

When the denying gets harder, deniers deny harder.