And so it goes – another year, another annual data point. As has become a habit (2009, 2010), here is a brief overview and update of some of the most relevant model/data comparisons. We include the standard comparisons of surface temperatures, sea ice and ocean heat content to the AR4 and 1988 Hansen et al simulations.

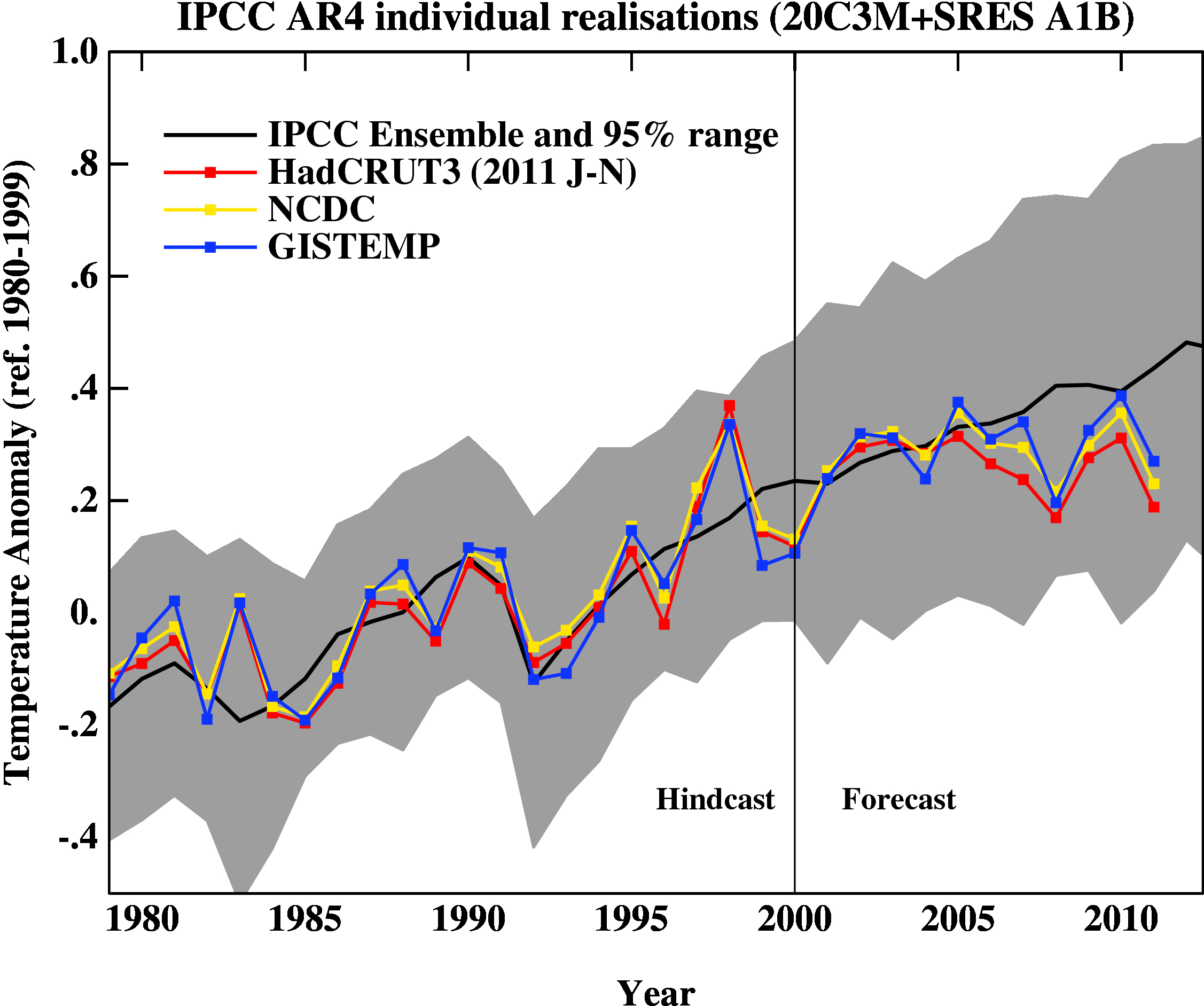

First, a graph showing the annual mean anomalies from the IPCC AR4 models plotted against the surface temperature records from the HadCRUT3v, NCDC and GISTEMP products (it really doesn’t matter which). Everything has been baselined to 1980-1999 (as in the 2007 IPCC report) and the envelope in grey encloses 95% of the model runs.
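For readers who want to build a similar figure, the two steps described above (re-baselining every series to 1980-1999, then finding bounds that enclose 95% of the runs in each year) can be sketched as follows. This is a minimal illustration and not the script behind the figure: the function names are hypothetical, the percentile interpolation is only one of several reasonable choices, and the three toy runs are synthetic stand-ins for model output.

```python
from statistics import mean


def baseline_anomalies(series, base_start=1980, base_end=1999):
    """Re-express a {year: value} series as anomalies from its base-period mean."""
    base = mean(v for y, v in series.items() if base_start <= y <= base_end)
    return {y: v - base for y, v in series.items()}


def percentile(sorted_vals, q):
    """Linearly interpolated percentile (q in [0, 100]) of an already sorted list."""
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def envelope(runs, year, lower=2.5, upper=97.5):
    """Bounds enclosing the central 95% of the runs for a single year."""
    vals = sorted(run[year] for run in runs)
    return percentile(vals, lower), percentile(vals, upper)


# Synthetic runs (NOT model output): different offsets and warming rates.
toy_runs = [
    baseline_anomalies({y: offset + slope * (y - 1980) for y in range(1980, 2012)})
    for offset, slope in ((13.8, 0.01), (14.0, 0.02), (14.3, 0.03))
]
low, high = envelope(toy_runs, 2011)
print(f"2011 envelope: {low:.3f} to {high:.3f}")
```

Baselining first removes each run's absolute offset, so the envelope reflects the spread in changes rather than in absolute temperature.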

The La Niña event that emerged in 2011 definitely cooled the year in a global sense relative to 2010, although there were extensive regional warm extremes. Differences between the observational records are mostly related to interpolations in the Arctic (but we will check back once the new HadCRUT4 data are released). Checking up on our predictions from last year, I forecast that 2011 would be cooler than 2010 (because of the emerging La Niña), but would still rank in the top 10. This was true looking at GISTEMP (2011 was #9), but not quite in HadCRUT3v (#12) or NCDC (#11). However, this was the warmest year that started off (DJF) with a La Niña (previous La Niña years by this index were 2008, 2001, 2000 and 1999 using a 5 month minimum for a specific event) in the GISTEMP record, and the second warmest (after 2001) in the HadCRUT3v and NCDC indices. Given current indications of only mild La Niña conditions, 2012 will likely be a warmer year than 2011, so again another top 10 year, but not a record breaker – that will have to wait until the next El Niño.

People sometimes claim that “no models” can match the short term trends seen in the data. This is not true. For instance, the range of trends in the models for 1998-2011 is [-0.07,0.49] ºC/dec, with MRI-CGCM (run5) the laggard in the pack, running colder than observations.

In interpreting this information, please note the following (repeated from previous years):

- Short term (15 years or less) trends in global temperature are not usefully predictable as a function of current forcings. This means you can’t use such short periods to ‘prove’ that global warming has or hasn’t stopped, or that we are really cooling despite this being the warmest decade in centuries.

- The AR4 model simulations were an ‘ensemble of opportunity’ and vary substantially among themselves with the forcings imposed, the magnitude of the internal variability and of course, the sensitivity. Thus while they do span a large range of possible situations, the average of these simulations is not ‘truth’.

- The model simulations use observed forcings up until 2000 (or 2003 in a couple of cases) and use a business-as-usual scenario subsequently (A1B). The models are not tuned to temperature trends pre-2000.

- Differences between the temperature anomaly products are related to: different selections of input data, different methods for assessing urban heating effects, and (most important) different methodologies for estimating temperatures in data-poor regions like the Arctic. GISTEMP assumes that the Arctic is warming as fast as the stations around the Arctic, while HadCRUT3v and NCDC assume the Arctic is warming as fast as the global mean. The former assumption is more in line with the sea ice results and independent measures from buoys and the reanalysis products.

- Model-data comparisons are best when the metric being compared is calculated the same way in both the models and data. In the comparisons here, that isn’t quite true (mainly related to spatial coverage), and so this adds a little extra structural uncertainty to any conclusions one might draw.

Foster and Rahmstorf (2011) showed nicely that if you account for some of the obvious factors affecting the global mean temperature (such as El Niños/La Niñas, volcanoes etc.) there is a strong and continuing trend upwards. An update to that analysis using the latest data is available here – and shows the same continuing trend:

There will soon be a few variations on these results. Notably, we are still awaiting the update of the HadCRUT (HadCRUT4) product to incorporate the new HadSST3 dataset and the upcoming CRUTEM4 data which incorporates more high latitude data (Jones et al, 2012). These two changes will impact the 1940s-1950s temperatures, the earliest parts of the record, the last decade, and will likely affect the annual rankings (and yes, I know that this is not particularly significant, but people seem to care).

Ocean Heat Content

Figure 2 compares the ocean heat content (OHC) changes in the models with the latest data from NODC. As before, I don’t have the post-2003 AR4 model output, so I have extrapolated the ensemble mean to 2012 (understanding that this is not ideal). New this year are the OHC changes down to 2000m, which NODC has started to produce, alongside the usual top-700m record. For better comparisons, I have plotted the ocean model results from 0-750m and for the whole ocean. All curves are baselined to the period 1975-1989.

I’ve left off the data from the Lyman et al (2010) paper for clarity, but note that there is some structural uncertainty in the OHC observations. Similarly, different models have different changes, and the other GISS model from AR4 (GISS-EH) had slightly less heat uptake than the model shown here.

Update (May 2012): The figure has been corrected for an error in the model data scaling. The original image can still be seen here.

As can be seen the long term trends in the models match those in the data, but the short-term fluctuations are both noisy and imprecise [Update: with the correction in the graph, this is less accurate a description. The extrapolations of the ensemble give OHC changes to 2011 that range from slightly greater than the observations, to quite a bit larger]. As an aside, there are a number of comparisons floating around using only the post 2003 data to compare to the models. These are often baselined in such a way as to exaggerate the model data discrepancy (basically by picking a near-maximum and then drawing the linear trend in the models from that peak). This falls into the common trap of assuming that short term trends are predictive of long-term trends – they just aren’t (There is a nice explanation of the error here).

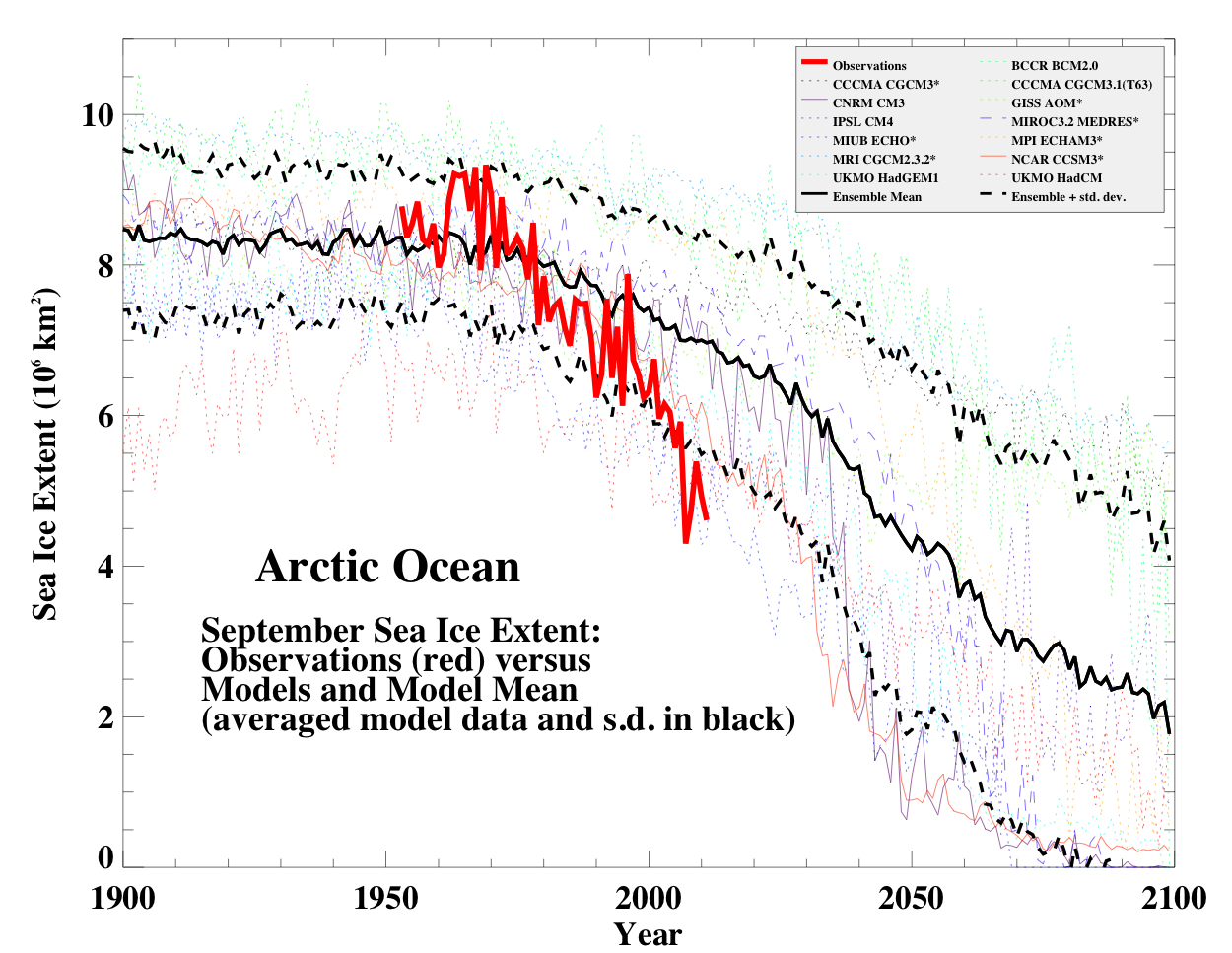

Summer sea ice changes

Sea ice changes this year were dramatic, with the Arctic September minimum reaching record (or near record) values (depending on the data product). Updating the Stroeve et al, 2007 analysis (courtesy of Marika Holland) using the NSIDC data we can see that the Arctic continues to melt faster than any of the AR4/CMIP3 models predicted.

This may not be true for the CMIP5 simulations – but we’ll have a specific post on that another time.

Hansen et al, 1988

Finally, we update the Hansen et al (1988) comparisons. Note that the old GISS model had a climate sensitivity that was a little higher (4.2ºC for a doubling of CO2) than the best estimate (~3ºC) and as stated in previous years, the actual forcings that occurred are not exactly the same as the different scenarios used. We noted in 2007, that Scenario B was running a little high compared with the forcings growth (by about 10%) using estimated forcings up to 2003 (Scenario A was significantly higher, and Scenario C was lower).

For the period 1984 to 2011 (1984 being chosen because that is when these projections started), scenario B has a trend of 0.28+/-0.05ºC/dec (95% uncertainties, no correction for auto-correlation). For GISTEMP and HadCRUT3, the trends are 0.18+/-0.05 and 0.17+/-0.04ºC/dec respectively. For reference, the trends in the AR4 models for the same period have a range of 0.21+/-0.16 ºC/dec (95%).
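As a rough guide to how trend figures of this kind (a slope in ºC/dec with a 95% range and no correction for auto-correlation) can be computed, here is a minimal ordinary least squares sketch. It is an assumption-laden illustration rather than the calculation used for the numbers above: the function name is hypothetical, the 95% half-width uses a normal approximation (1.96 standard errors) instead of a t-distribution, and the input series is synthetic.

```python
import math


def ols_trend_per_decade(years, values):
    """Return (trend, half_width) per decade from an ordinary least squares fit.

    half_width is 1.96 standard errors of the slope: a normal approximation
    to a 95% interval, with no correction for autocorrelation.
    """
    n = len(years)
    xbar = sum(years) / n
    ybar = sum(values) / n
    sxx = sum((x - xbar) ** 2 for x in years)
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = ybar - slope * xbar
    resid_ss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, values))
    stderr = math.sqrt(resid_ss / (n - 2) / sxx)
    return 10 * slope, 10 * 1.96 * stderr


# Synthetic anomalies (NOT observations): 0.018 per year plus alternating noise.
years = list(range(1984, 2012))
values = [0.018 * (y - 1984) + (0.05 if y % 2 else -0.05) for y in years]
trend, half_width = ols_trend_per_decade(years, values)
print(f"trend = {trend:.2f} +/- {half_width:.2f} per decade")
```

Because year-to-year temperatures are correlated, an interval computed this way is narrower than one that accounts for auto-correlation.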

As we stated before, the Hansen et al ‘B’ projection is running warm compared to the real world (exactly how much warmer is unclear). As discussed in Hargreaves (2010), while this simulation was not perfect, it has shown skill in that it has out-performed any reasonable naive hypothesis that people put forward in 1988 (the most obvious being a forecast of no-change). However, the use of this comparison to refine estimates of climate sensitivity should be done cautiously, as the result is strongly dependent on the magnitude of the assumed forcing, which is itself uncertain. Recently there have been some updates to those forcings, and so my previous attempts need to be re-examined in the light of that data and the uncertainties (particularly in the aerosol component). However, this is a complicated issue, and requires more space than I really have here to discuss, so look for this in an upcoming post.

Overall, given the latest set of data points, we can conclude (once again) that global warming continues.

References

- G. Foster, and S. Rahmstorf, "Global temperature evolution 1979–2010", Environmental Research Letters, vol. 6, pp. 044022, 2011. http://dx.doi.org/10.1088/1748-9326/6/4/044022

- P.D. Jones, D.H. Lister, T.J. Osborn, C. Harpham, M. Salmon, and C.P. Morice, "Hemispheric and large‐scale land‐surface air temperature variations: An extensive revision and an update to 2010", Journal of Geophysical Research: Atmospheres, vol. 117, 2012. http://dx.doi.org/10.1029/2011JD017139

- J.C. Hargreaves, "Skill and uncertainty in climate models", WIREs Climate Change, vol. 1, pp. 556-564, 2010. http://dx.doi.org/10.1002/wcc.58

Thanks, Mike! Looking forward to reading your new book.

[Response: Thanks ThingsBreak :) -mike]

Thingsbreak@46

Hmm, Andy seems to make a lot of “honest errors”…and always in the same direction.

Lindzen was not trying to make a prediction. Quite the opposite.

He was trying to cast doubt on Hansen’s work, while avoiding any testable alternative predictions. He sought to have it both ways.

#36

“When I look at the gray bands for the ensemble model projections, it seems to be that a model could be created that has temperatures declining to 0 or less abnormality with a gray band that easily contains the actual recorded temperatures.”

But you would need to be able to explain the reason. eg 1998, 2009

There is a clear understanding for both years. I did not think that 1998 would have been so near the top of the 95% range, and I thought that 2009 would have been much nearer to the low end of the 95% range.

If temperatures declined to zero for no apparent reason then you may have a point, but I am not sure at all that climate sensitivity is less than 3°C; it looks to me that it may be higher.

Sorry 2008 not 2009

How did Revkin make an error? CO2 continues to climb and the model estimates are all higher than observed temperatures. If this does not suggest that sensitivities are a tad too high, then what is it suggesting?

First of all the graphs are too small to read and enlarging them shows them to be of too low a resolution to be enlarged usefully.

Second, I note that, using LANL’s seaice model for sea ice, CCSM can actually reproduce the observations

Third, this site needs a thorough discussion of transient climate sensitivity (TCS) comparing the rather low values from observations with the higher ones the models usually get. There is increasing literature indicating that TCS is closer to 2.0-2.5 °C than the 3°C model average. One complicating factor is the increasing information that the AMO has a ~60 yr cycle (off and on over the past 8,000 yrs) that added significantly to warming in the 1990s and to the lack thereof in the past decade. While models do capture some of the AMO variability, it is generally agreed that they underestimate it. Finally, only a few modeling exercises attempt to get the AMO synchronized as observed (from careful initializations). Where the AMO is not specifically in the simulations it is hard to assess the models’ accuracy in reproducing observed temperatures in the past quarter century.

Can Real Climate give us a careful look at all this literature and its implications for model accuracy?

> Woodshedder …

> … model estimates are all higher

Why do you believe that’s the case?

What source are you relying on for that misinformation?

Did you read the main post above? Start with

“People sometimes claim that “no models” can match the short term trends seen in the data. This is not true.”

Then below that is a bullet list repeated each year.

Oh, wait — Woodshedder, when you wrote “model estimates are all higher” — are you saying that because in the picture at the top, the black line is higher than the blue, yellow, and red lines? If so — you missed the meaning of the gray background.

Hey Gavin,

do you think there could be a better way of presenting sea ice and temperature data? Why don’t you use percentages? For example, Arctic Sea Ice could be -5%, global temperature could be +7%, etc. That way people might not be so alarmed at the changes, still have 95% of NH sea ice, Phew!

Just a thought.

I wonder if you could explain the choice of comparison with Hansen’s scenario ‘B’ rather than scenario ‘A’?

Scenario ‘A’ assumed that the growth rate of trace gas emissions typical of 1970s and 1980s continue indefinitely; the assumed annual growth was about 1.5% of current emissions, so the net greenhouse forcing increases exponentially.

Scenario ‘B’ had decreasing trace gas growth rates such that annual increase of greenhouse forcings remained constant at the 1988 level.

According to Skeptical Science http://www.skepticalscience.com/iea-co2-emissions-update-2010.html CO2 emissions rose from 21BT per year in 1990 to 30.6BT in 2010. I make that an average annual rate of increase of 1.8%, which is higher than the 1.5% mentioned as the “Business as Usual” growth of emissions up to 1988.
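The growth-rate arithmetic in this comment can be checked with a short calculation. The sketch below assumes compound (year-on-year) growth between the two quoted figures, 21 BT in 1990 and 30.6 BT in 2010; the function name is hypothetical, and the commenter does not say which averaging convention was used.

```python
def compound_annual_growth(start, end, years):
    """Average compound growth rate per year between two values."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted in the comment: 21 BT in 1990, 30.6 BT in 2010.
rate = compound_annual_growth(21.0, 30.6, 2010 - 1990)
print(f"{100 * rate:.2f}% per year")
```

Under this compounding assumption the result comes out a little under 2% per year, in line with the roughly 1.8% quoted and above the 1.5% assumed in Scenario A.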

[Response: The important factor for these models and these metrics is the net forcing, and the real world forcings are significantly less than scenario A. How close they are to scenario B is to be determined in the light of updates to the aerosol forcing. – gavin]

Transient climate sensitivity seems to be the new buzzword in denial land–although this is pretty much meaningless wrt the ultimate consequences of climate change. What is more, I would think that volcanic eruptions put some fairly tight constraints on it. It might be a reasonable topic for a post, as it seems to be the latest straw at which the denialists are grasping.

A few months ago a similar comparison was done on the Blackboard. The graphs look pretty similar, except that Lucia smooths her observation lines (I don’t know why, but it doesn’t look to make much of a difference). The biggest difference seems to be the size of the 95% CI. How is that calculated?

The Blackboard post is here: http://rankexploits.com/musings/2011/la-nina-drives-hadcrut-nhsh-13-month-mean-outside-1sigma-model-spread/

Hank, I understand error bars.

Revkin said that this post suggested the CO2 sensitivity was a tad too high. It seems you are suggesting that observed temps would have to break out of the gray area in order for CO2 sensitivity to be a tad too high. IMO, if observed temps break lower than the error bars, CO2 sensitivity is not a tad too high; rather, the models are broken.

Anyway, I assumed Revkin was referring to the Hansen predictions.

I’d like to see the ensemble model results and Foster and Rahmstorf’s adjusted data on the same graph. Despite the admonitions to not rely on eyeballing graphs, I’d like to eyeball those together all the same because, if I have this right, the ensemble results are an average of models and model runs and would tend to smooth the impacts of the strongest known influence on year to year global temperature variations – ENSO. That the adjusted data graph got prominence in the post suggests such a comparison would have some validity.

> Woodshedder … “model estimates are all higher” …

> Woodshedder … “I assumed Revkin was referring to the Hansen ….”

Quoting from the original post above:

“… the old GISS model had a climate sensitivity that was a little higher (4.2ºC for a doubling of CO2) than the best estimate (~3ºC) …”

Is that all you meant by “all higher”? Just trying to figure out what you’re describing.

There are a _lot_ of climate models, and a lot of runs with each, and the runs will be different. Certainly it’s not correct to say what you said.

But what did you mean?

[Response: This was discussed further up in the comment thread. In a comment on twitter about the last RealClimate post, Revkin (@Revkin) mistakenly took Gavin’s comment about the old (80s) GISS model having a high sensitivity as if it applied to all/current models. I (@MichaelEMann) pointed out the error on twitter and he corrected it. – mike]

Is this the ‘woodshedder’ writing about climate in stock trading blogs and in Amazon book reviews? If that’s you, it’s clear what you mean I think.

Yes, it is. And it’s obvious he doesn’t know the first thing about climate models, as he parrots the oft-repeated claim that they’re built by statistical fitting of long time series of data.

Woodshedder: if you don’t know the first thing about a subject you’re pontificating on, you’re not going to impress the knowledgeable. Your equally ignorant buddies on your own blog are fooled, but that’s not a particularly impressive trick, ya know?

#60–“Sea ice could be -5%.”

It *could* be–if you picked your metric carefully enough.

However, if you compare the September minimum, as a simple-to-calculate instance, to the (roughly) 30-year average, the sea ice *is* about -31%.

Oh boy, I’m reassured now.

In general, monthly trends are declining at about 3-5% PER DECADE.

http://nsidc.org/arcticseaicenews/2011/09/

#54

Not really. The simplest model would be a random walk around constant temperature with a little bit of inertia built-in. My point is that a model like that would perform as well as the ensemble models for the short time period in the graph.

[Response: I agree. Just one more reason why testing the long-term forced response of GCMs based on short time series is non-informative. The first graph above is not there to show that the models are perfect, but rather that what has happened is not inconsistent with the ensemble. This is of course a weak test, and wouldn’t be worth stressing at all if it wasn’t for people saying otherwise. – gavin]

So we have to wait for the next El Nino year for the next significant record setter. Do we have any idea when that might be? How many La Nina years are there likely to be in a row?

Meanwhile, isn’t China hurrying to put scrubbers on its coal plants? Won’t that rapidly decrease the amount of global atmospheric aerosol and bring about an end (or a great reduction, at least) in global dimming, kicking the global temp up .5-2 degrees C?

Granted it is a relatively minor contribution, but the sun is also coming out of a relatively quiet phase, so that should contribute a bit more energy into the system.

And atmospheric methane concentrations are on the rise again, so this should be a new forcing that will add to upward pressure on global temps in the coming years.

Does all this add up to a fairly strong probability of a rather large upward swing in global temps in the next few years?

Oh, and on the last point about methane, note that the latest AIRS maps show a fairly stunning increase in Arctic methane over the same time period last year. Compare especially methane concentrations over the Arctic Ocean and Siberia this January versus last January:

ftp://asl.umbc.edu/pub/yurganov/methane/MAPS/NH/ARCTpolar2012.01._AIRS_CH4_400.jpg

ftp://asl.umbc.edu/pub/yurganov/methane/MAPS/NH/ARCTpolar2011.01._AIRS_CH4_400.jpg

Should we be worried yet?

#65 Ken Fabian:

Perhaps, but let me point out two risks.

1. Yes, ensemble averaging tends to remove the effect of natural variability from the model result. But ENSO removal as done in Foster & Rahmstorf removes only part of natural variability (though a prominent part affecting the appearance of smoothness) from the single instance of reality we have access to.

2. The forcings may be wrong! The comparison you propose does not just evaluate model logic but also forcings correctness. And note that forcings errors affect models and F&R in opposite ways: say that there is an erroneous trend (or a missing, trending contribution) in one of the forcings which is used by both the model runs and by F&R. This will then affect the models in one direction (as they are driven by the forcings) and the F&R result in the other direction (as the effect of the forcings is backed out of the result). This would double up any discrepancies found.

So, one should be careful.

Gavin: You’ve listed the Model-ER as the source of your simulations in your OHC model-data graph. But I can only count 5 ensemble members for each of the depths. The Model-ER in the CMIP3 archive has 9 ensemble members for the 20C3M simulations, while the Model-EH has 5. Why does your Model-ER only include 5 ensemble members? Is it based on a paper that didn’t use the CMIP3 20C3M simulations data? If so, what paper?

Regards

[Response: There were 9 GISS-ER simulations – labelled a through i. The first 5 were initialised from control run conditions that were only a year apart (done when we needed to have some results early, and before the control run was complete). The subsequent 4 were initialised in (more appropriate) 20 year intervals. Thus runs b,c,d,e are not really independent enough of run a to be useful in a proper IC ensemble. All of our papers therefore used runs a,f,g,h,i as the ensemble. – gavin]

It’s a good thing to revisit this each year, and for those of us ‘fixated’ on global temperature records it seems reasonable to ask the question: is there something missing?

The individual realisations contributing to the ensemble mean are so different over the decadal timeframe that the confidence intervals remain outrageously wide, allowing any trend you like (i.e. positive or negative) within that timeframe.

Surely it would be better to form subgroups according to the various external forcings conceived in the models and display these over the original gray area to give insight into which group seems more or less likely? (Alternatively, plot them separately with their own CIs.)

It would be nice to plot the F+R index annually given its apparent popularity, and surely prospective validation against observations is appropriate.

A response to Wili’s 12 Feb 2012 at 12:29 PM on methane is in the unforced-variations-february-2012 thread

These graphs might help some better understand why Scenario A is not what has happened.

#69

Yep, so I only did a rough calc. for the satellite era, calculated from annual anomalies….

and it’s like -5%. Interesting, because if you talk to some of the more alarming supporters of climate change, they seem to think that climate change in the arctic is ‘where it’s at’.

Where it’s at is 95% Sea Ice. I’ll go with the glass ‘95%’ full.

Thanks for posting this; I hope you will be able to continue doing this in future years.

I have only one doubt/question. If I have done my arithmetic correctly, a TOA imbalance of 1 watt/M^2 corresponds to an ocean accumulation of about 1.6 * 10^22 joules per year, and 0.5 watt/M^2 is ~0.8 * 10^22 joules per year.

Looking at the limited (2003 to present) NOAA 0-2000 meter ocean heat data, the OLS trend suggests a most probable value somewhere near ~7 * 10^21 joules per year accumulation rate. I have read a recent estimate (based on deep ocean temperature transects) of the >2000 meter ocean which says there are perhaps another 1 * 10^21 joules per year accumulating below 2000 meters, which means in total ~0.8 * 10^22 per year, and a TOA imbalance of about 0.5 watt/M^2. So it seems to me unlikely that much higher imbalances (near 1 watt/M^2) are correct. Of course, the ever accumulating 0 – 2000 meter data from ARGO should help narrow the uncertainty in the accumulation rate within a decade or so.
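The conversion used in this comment, between a top-of-atmosphere imbalance in watts per square metre and a heat accumulation in joules per year, can be sketched as follows. The sketch assumes the imbalance is averaged over the whole surface of the Earth (radius about 6371 km) and that all of the energy is counted; the constant and function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6.371e6                 # mean Earth radius in metres
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # length of a year in seconds
EARTH_AREA_M2 = 4 * math.pi * EARTH_RADIUS_M ** 2


def joules_per_year(imbalance_w_m2):
    """Energy gained per year for a given global-mean imbalance in W/m^2."""
    return imbalance_w_m2 * EARTH_AREA_M2 * SECONDS_PER_YEAR


def imbalance_w_m2(joules_per_yr):
    """Global-mean imbalance in W/m^2 implied by a yearly energy gain."""
    return joules_per_yr / (EARTH_AREA_M2 * SECONDS_PER_YEAR)


one_watt = joules_per_year(1.0)
# Figures quoted in the comment: ~7e21 J/yr above 2000 m plus ~1e21 J/yr below.
implied = imbalance_w_m2(7e21 + 1e21)
print(f"1 W/m^2 is about {one_watt:.2e} J/yr")
print(f"8e21 J/yr implies about {implied:.2f} W/m^2")
```

This reproduces the commenter's arithmetic only. It says nothing about the uncertainty in the short 2003-onward OHC trend, or about heat going into ice, land and atmosphere rather than the ocean.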

Isotopious,

Do you really equate year-old ice with hundred-year old ice? Do you think ice won’t freeze in the winter? Look at September ice extent–that is the relevant statistic.

Re #57: Upon reading your comment, Chick, my first thought was that Petr must have made a recent contribution to the AMO literature. A quick check of Google Scholar confirms that. (Although wait, Petr says 20 years, not so much the 60. This is so confusing…)

You say “Where the AMO is not specifically in the simulations it is hard to assess model’s accuracy in reproducing observed temperatures in the past quarter century.”

I think you’re getting ahead of yourself. The question of whether the AMO is a cycle or just variability remains open at the least, and the FOD would seem to indicate that the relevant IPCC authors are unconvinced. What they do cite when discussing the AMO is Tokinaga and Xie (2011b) (press release), which identifies a phenomenon that would seem to make it very difficult to point to a meaningful non-anthropogenic AMO signal.

So, contrary to your surmise, right now the AMO may be more wild goose chase than cycle and perhaps not a high priority for modelers to try to incorporate.

Martin @72, climate scientists need to do the best they can to be accurate but inspiring real action requires the ability to illuminate and persuade. As a tool of persuasion the first graph, of ensemble vs global temperatures will, for those who choose to cherry pick the last decade, work quite effectively to demonstrate a mismatch between models and data irrespective of what most people here know about the inappropriateness of extrapolation from a too short selection of data. Foster and Rahmstorf are providing something very valuable by accounting as best as reasonably possible for the known impacts of known natural influences. Isn’t that one of the constant refrains from climate science deniers, that science fails to consider known natural influences?

It may not be cutting edge science but as a tool to illuminate and persuade, both about the true state of our planet’s climate as well as the intellectual dishonesty of climate denialist arguments, comparing ensemble averages to F&R’s adjusted data could be quite useful. Time to turn that ‘failure to consider known natural influences’ argument against those who selectively ignore known natural influences and are enabling the building global climate problem to continue to gather strength unchecked.

Thanks for the clarification, Gavin.

Funny how the “It’s the sun!” people can’t figure out why arctic sea ice is at 95% coverage in the winter.

Ken Fabian #81, yes a good point in principle, but any such comparison should be simple in order to be convincing. Adding complexity adds scope for obfuscation, and some — not me, I hasten to point out :-) — would expect this to be exploited to the hilt.

The problem is that while models produce simulated real global temperature paths, Foster&Rahmstorf provide something more complicated — so, not apples and apples, making proper comparison nontrivial.

Note again that in a comparison of this kind there is model uncertainty — our imperfect understanding, or deficient modelling, of the physical processes — but also uncertainties on the forcings that drive the models — and the F&R reduction. The latter has nothing to do with the quality of our understanding or running code, and everything with our observational capabilities; Trenberth’s lament.

This is why I feel that a comparison as you propose will find it hard to give a realistic view of model capabilities, and possibly cause more confusion than enlightenment. To some extent this is a problem common to all model-data comparisons.

#77–“Yep, so I only did a rough calc. for the satellite era, calculated from annual anomalies….”

Oh, really? Just where did you find these annual anomalies?

Ken Fabian #81, before you ask, a better way of comparing models with data is intercomparing them with/without the forcing of interest, as done for the well known Figure 9.5 in AR4. IMHO.

Gavin,

Regarding the comparison of Hansen’s 1988 scenarios with observations I’ve been looking at something which has got me a bit confused.

I took the hindcast forcings, from observed emissions data, on the GISS site and used them to calculate total net forcing change so I could compare with Hansen’s projected forcings. The 1988 forcings appear to be baselined to 1958, compared to 1880 for hindcast forcings so I adjusted the latter to match and found a net RF change of ~1.2W/m^2 at 2010.

Looking at the 1988 projected forcings from this file you provided in 2007, Scenario C appears to be closest to this value by quite a distance. Given that this scenario is usually ignored in these discussions have I missed something or taken a wrong turn somewhere?

[Response: No. But note that these forcings are from Hansen’s recent paper (2011) and the aerosols are derived from inverse modelling – so using them would be a bit circular in this context. The forcings I used originally (from 2006?) had a smaller aerosol component. Other estimates – such as used for the CMIP5 runs – are different still. Thus I wanted to put all of that together in a new post before saying much about it – the aerosol uncertainty really is much larger than we would like it to be. – gavin]

Hi, I have been having a look at the data at KNMI Climate Explorer, and it seems there are only 54 model runs for SRES A1B, can you tell me how you calculate the 95% coverage interval from the 54 runs (just so I can reproduce the first figure exactly)?

#77, #85–Crickets, so far, but perhaps Isotopius missed my question, what with all the traffic on the “Free Speech” thread.

The reasons that I am skeptical about I’s claim are twofold. First, to find any metric which shows 95% of sea ice remaining is not trivial–the decline is (of course) quite sharp, as most of us are aware. Second, “annual anomalies” are not so easy to find, in my experience–the NSIDC data is organized by month, and the only “anomalies” I found were on maps–spatial anomalies.

What I had to do was to download the monthly data from NSIDC, copy them into Excel (yeah, I know, Excel is for amateurs–but that would be me!), calculate annual extents (interpolating for the two missing months of data in 1987-88), calculate a baseline (I went with ’79-2010, since that is used by NSIDC elsewhere), and calculate anomalies from there.

But then how do you compare the anomalies? The most naive comparison would be to compare the first and last anomalies: 5.62 and -10.09, for a decline of 15.71. Slightly more sophisticated would be five-year averages: the ’79-’83 mean anomaly is 5.41, while the 2007-2011 anomaly is -7.75, for a decline of 13.16.
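The procedure described in this comment (annual mean extents, a baseline mean, then anomalies relative to that baseline) can be sketched in a few lines. This is a hedged illustration, not the commenter's spreadsheet: the function name is hypothetical, the anomalies are assumed to be expressed as a percentage of the baseline mean, and the toy extents are made up. Only the four anomaly values in the final check come from the comment.

```python
from statistics import mean


def percent_anomalies(annual, base_start, base_end):
    """Anomalies of a {year: extent} series as a percentage of the baseline mean."""
    base = mean(v for y, v in annual.items() if base_start <= y <= base_end)
    return {y: 100.0 * (v - base) / base for y, v in annual.items()}


# Toy extents (NOT NSIDC data) to show the mechanics.
toy = percent_anomalies({2000: 10.0, 2001: 10.0, 2002: 8.0}, 2000, 2001)

# Checking the arithmetic on the anomalies quoted in the comment.
first_last_decline = round(5.62 - (-10.09), 2)   # first year vs last year
five_year_decline = round(5.41 - (-7.75), 2)     # '79-'83 mean vs 2007-2011 mean
print(first_last_decline, five_year_decline)
```

Comparing multi-year means rather than single years reduces the influence of any one unusual year on the result.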

I think, though, that the annual extents are a perfectly good metric. Fitting a linear trend to the annual extents gives a decline of about 14%:

http://preview.tinyurl.com/NISDC-Annual-Mean-Extent

Of course, annual means obscure the stunning decline observed at minimum, which is close to 38%.

But even the most conservative value I calculated–2011 anomaly WRT a ’79-2010 baseline–shows a decline of a tad over 10%.

So I think Isotopius’ claim is–shall I say?–“unsupported.” There’s no way that any sensible comparison can find that 95% of the Arctic sea ice remains.

Of course, all that work basically leaves me right where I started: with decadal declines of nearly 5%, which is what I mentioned to “I” in the first place. But I guess I’ve gone from “most monthly declines” to actual calculated annual declines, which is what he proposed–a bit of added confidence, essentially.

Hank Roberts @75

Hank

I think I think a bit like wili – except that I think that there is a possibility that our plight might be so serious that we may need geo-engineering to stand much of a chance of a non-horrific scenario for the only planet we have.

I worry about the feedbacks that are missing from estimates of climate change, particularly carbon dioxide and methane being released (or generated) in the northern regions of Europe and Asia. Add to that worry the missing feedbacks of methane from dissociating clathrates in Arctic seas.

More specifically the climate models being used in AR5 have these feedbacks missing.

Do you accept this?

If you do, you might expect people like wili and myself to look for clues to see if these feedbacks are happening.

Just look at the sequence of images from Yurganov

January 2011,

February 2011,

March 2011,

April 2011,

May 2011,

June 2011,

July 2011,

August 2011,

September 2011,

October 2011,

November 2011,

December 2011

and finally

January 2012

These images suggest temporal and geographical distributions of methane compatible with some of the missing feedbacks that scare us.

Is “suggest” part of the scientific method?

What if we call it a heuristic?

Having looked through the 13 images, I just can’t see that the graph you referenced in the unforced-variations-february-2012 thread tells us much. It seems to fit the images OK – but so what?

Have you any idea where the methane concentrations are coming from?

Are they instrumental artifacts?

Let me repeat the important question:

Do you accept the climate models being used in AR5 have important feedbacks missing?

“Lindzen’s ‘prediction’, which has been fairly consistent over the preceding 20 years, is that any warming from CO2 will likely barely rise above natural variation.”

A prediction which has failed in a most spectacular way! I deal with the reality of these projected past 24 years in the high Arctic. What happened approaches Hansen et al., but no one expected the Arctic to show such demonstrably strong warming as exemplified by the Arctic Ocean sea ice extent graph above. Arctic land glaciers, in particular small ones, have been hit so hard as to change the Arctic landscape, as it appears, forever. I deal with this on my website: an artist’s presentation of small islands or hillsides once patched with spots of ice surviving the summer, an iconic description of the Arctic itself, will soon be a thing of the past.

I have found the model presentations here very instructive, but there is another way of depicting climate change: the ascension of air, convection as seen in summer cumuliform clouds, as opposed to its lateral displacement under boundary layers, mostly observed during winter anywhere. The former is gaining over the latter in the Arctic, with some serious implications. The lower atmosphere, now in close relation with a warmer sea and land surface, is feeding heat to the upper atmosphere, again changing the look of the clouds themselves from mostly wind-sculpted stratiform to more cumuliform. This summer-like heat transfer process may not be depicted well by surface temperature anomalies, since the heat gained quickly ascends, but it is the primary reason small Arctic glaciers are vanishing: the stratiform-like boundary itself is severely perforated by increasing convection. It is likely the nature and size of these convections that the models do not simulate at high enough resolution.

A transformed, warmer Arctic atmosphere will soon overtake, if it has not already overtaken, ENSO’s impact on Northern Hemisphere weather patterns. Although the voices of the few people Up Here are scantily heard, the absent or unrecognizable winters sure are making an impression. This is just the beginning of a radically different worldwide climate to come.

Might I suggest comparing the curve from Foster and Rahmstorf (2011) to the model average? This would be much clearer than your first figure, as it would allow a direct comparison of the slopes.

Dumb question time:

What happened to Hansen’s Scenario C which is an almost perfect match?

I should state in advance that I don’t think the current trend of actual temps is going to remain at the current relatively low level as years since 2010 start to be incorporated. Still, I’d like to understand more, and also why nobody else ever brings it up. I did check this:

https://www.realclimate.org/index.php/archives/2007/05/hansens-1988-projections/

#94 Susan

I understand scenario C was a moderate mitigation scenario. What happened? No mitigation.

But maybe the increase in dirty coal burning in countries like China during the last couple of decades effectively amounts to moderate mitigation in the short run. I have seen no evidence that the aerosol effect is strong enough to result in a forcing in line with scenario C but maybe it’s plausible considering the uncertainty.

I suppose it’s more likely that Hansen’s 1988 model warms a bit faster than the real climate.

Susan, the most recent paper on energy balance will help:

http://www.columbia.edu/~mhs119/

Thanks, that’s a great link! Yes, I know “moderate mitigation” has not happened, quite the reverse, tragic. And I live in the modern world and watch world weather, so I know things are getting out of hand. Good reminder about the news about China et al.’s coal and aerosols. Then there’s the developing understanding of ocean heat absorption at various depths, currents, El Niño and La Niña. It *is* complicated.

In addition, it is quite weird to insist that work done in 1988 be perfect in 2012. What is astounding is how well it has held up.

Too bad it’s so easy for shallow thinkers with an agenda to grab a teensy little piece of the puzzle and bring it to mommy.

Response to Steve Bloom Comment #80:

Good points, but for clarity let’s not refer to the two large warmings of the 20th Century as AMO; let’s just say there is reason to look carefully at natural variations in Atlantic Ocean temperatures in that time frame. Several studies suggest that a large fraction of warming in the 1940s and 1990s was due to abnormally warm oceans. Petr is more interested in the 20 yr cycles seen in the Arctic, but his recent work confirms earlier work on both 20 and 60 yrs. Gerry North and Petr Chylek (Third Santa Fe Climate Conference, Nov. 2011) have looked for both the 20 and 60 yr cycles in ice core records. Gerry didn’t find the 60 yr one, but Chylek’s work corroborated Knudsen et al (2011) showing that both these cycles are not continuous, but come and go over the centuries. So it’s likely that the past two cycles of 60 years are present and important. When Chris Follen (2011) deconvolved recent temps, in addition to other factors, he got a substantial signal roughly similar to AMO which contributed to warming in the 1990s and the subsequent lack thereof after 2005. Other authors (Knudsen, 2011; Frankcombe et al, 2010; Kravtsov & Spannagle, 2007; Grossmann & Klotzbach, 2009) get similar results. So I think we should look more seriously at this work because, if it’s close to being correct, expected warming due to AGHGs will be slightly less than currently accepted.

http://tamino.wordpress.com/2011/03/02/8000-years-of-amo/

How did Hansen do? Well it looks like Scenario ‘C’ is the best match for the surface temperature record.

Maybe there is something in his Chinese aerosols (although Trenberth disagrees) but his ‘delayed Pinatubo rebound effect’ – bizarre.