It is not every day that I come across a scientific publication that so totally goes against my perception of what science is all about. Humlum et al., 2011 present a study in the journal Global and Planetary Change, claiming that most of the temperature changes that we have seen so far are due to natural cycles.

They claim to present a new technique to identify the character of natural climate variations, and from this, to produce a testable forecast of future climate. They project that

the observed late 20th century warming in Svalbard is not going to continue for the next 20–25 years. Instead the period of warming may be followed by variable, but generally not higher temperatures for at least the next 20–25 years.

However, their claims of novelty are overblown, and their projection is demonstrably unsound.

First, the claim of presenting “a new technique to identify the character of natural climate variations” is odd, as the techniques Humlum et al. use (Fourier transforms and wavelet analysis) have been around for a long time. It is commonplace to apply them to climate data.

Using these methods, the authors conclude that “the investigated Svalbard and Greenland temperature records show high natural variability and exhibit long-term persistence, although on different time scales”. No kidding! Again, it is not really a surprise that local records have high levels of variability, and the “long-term persistent” character of climate records has been reported before and is even seen in climate models.

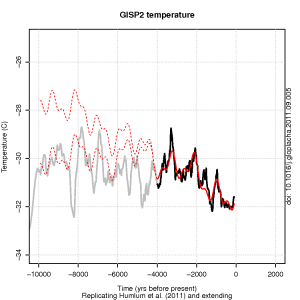

The most problematic aspect of the paper concerns the Greenland temperature from GISP2 and their claim that they can “produce testable forecasts of future climate” from extending their statistical fit.

Of course, these forecasts are testable – we just have to wait for the data to come in. But why should extending these fits produce a good forecast? It is well known that one can fit a series of observations to arbitrary accuracy without having any predictability at all. One technique to demonstrate credibility is by assessing how well the statistical model does on data that was not used in the calibration. In this case, the authors have produced a testable forecast of the past climate by leaving out the period between the end of the last ice age and up to 4000 years before present. This becomes apparent if you extend their fit to the part of data that they left out (figure below).
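
To make that concrete, here is a minimal Python sketch (not the analysis behind the figure in this post). It assumes a synthetic random walk, which has no cycles and no predictability by construction, fits the mean plus the three strongest Fourier harmonics to the recent half, and then scores the same fit against the older half that was left out. The function names, the 200-point window and the choice of three harmonics are illustrative assumptions, not anything taken from Humlum et al.

```python
import math
import random


def fit_harmonics(window, k):
    """Least-squares fit of the mean plus the k strongest Fourier harmonics."""
    n = len(window)
    mean = sum(window) / n
    found = []
    for j in range(1, n // 2):  # harmonics that complete j whole cycles in the window
        c = sum((x - mean) * math.cos(2 * math.pi * j * t / n) for t, x in enumerate(window))
        s = sum((x - mean) * math.sin(2 * math.pi * j * t / n) for t, x in enumerate(window))
        a, b = 2 * c / n, 2 * s / n
        found.append((a * a + b * b, j, a, b))
    found.sort(reverse=True)  # strongest harmonics first
    return mean, n, [(j, a, b) for _, j, a, b in found[:k]]


def predict(model, t):
    """Evaluate the fitted cycles at time t, measured from the start of the window."""
    mean, n, harmonics = model
    return mean + sum(
        a * math.cos(2 * math.pi * j * t / n) + b * math.sin(2 * math.pi * j * t / n)
        for j, a, b in harmonics
    )


def rmse(model, values, t0):
    """Root-mean-square error of the model against values that start at time t0."""
    errors = [(predict(model, t0 + i) - x) ** 2 for i, x in enumerate(values)]
    return math.sqrt(sum(errors) / len(errors))


def hindcast_skill(seed, n=200, k=3):
    """Calibrate on the recent half of a random walk, then hindcast the older half."""
    rng = random.Random(seed)
    walk, level = [], 0.0
    for _ in range(2 * n):
        level += rng.gauss(0.0, 1.0)
        walk.append(level)
    older, recent = walk[:n], walk[n:]
    model = fit_harmonics(recent, k)
    # The older half sits at negative times relative to the calibration window.
    return rmse(model, recent, 0), rmse(model, older, -n)


if __name__ == "__main__":
    for seed in range(5):
        fit_error, hindcast_error = hindcast_skill(seed)
        print(f"seed {seed}: calibration RMSE {fit_error:.2f}, hindcast RMSE {hindcast_error:.2f}")
```

For most seeds the calibration error should come out well below the hindcast error, which is the signature of a fit that describes the calibration data without predicting anything outside it.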

I extended their analysis back to the end of the last ice age. The figure here shows my replication of part of their results, and I’ve posted the R-script for making the plot. The full red line shows their fit (“model results”) and the dashed red lines show two different attempts to extend their model to older data.

For the initial attempt, keeping their trend obviously caused a divergence. So in the second attempt, I removed the trend to give them a better chance of making a good hindcast. Again, the fit is no longer quite as remarkable as presented in their paper.

Clearly, their hypothesis of 3 dominant periodicities no longer works when extending the data period. So why did they not show the part of the data that breaks with the pattern established for the last 4000 years? According to the paper, they

chose to focus on the most recent 4000 years of the GISP2 series, as the main thrust of our investigation is on climatic variations in the recent past and their potential for forecasting the near future.

One could of course attempt to rescue the fit by proposing that some other missing factor is responsible for the earlier divergence. But this would be entirely arbitrary. Choosing to ignore the well known (anthropogenic) factors affecting current climate, on the other hand, is not arbitrary at all.

Humlum et al. also suggest, on the basis of a coincidence between one of their cycles (8.7 years) and a periodicity in the Earth-Moon orbital distance (8.85 years), that the Moon plays a role for climate change (seriously!):

We hypothesise that this may bring about the emergence of relatively warm or cold water masses from time to time in certain parts of oceans, in concert with these cyclic orbit variations of the Moon, or that these variations may cause small changes in ocean currents transporting heat towards high latitudes, e.g. in the North Atlantic.

How wonderfully definitive. They, however, admit that their

main focus is the identification of natural cyclic variations, and only secondary the attribution of physical reasons for these.

So, if the curve-fitting points to periodicities that are anywhere near any of the frequencies that can be associated with a celestial object, then that’s apparently sufficient. You can get quite a range of periodicities if you consider all the planets in our solar system, their resonances and harmonics; see any of Scafetta’s recent papers for more examples. And of course, if there are parts of the data that do not match the periodicity you believe to be there, you can just throw them away to make the cycles fit. Quite easy really.

In short, Humlum et al.’s results are similar to those I discussed concerning meaningless numerical exercises, and their efforts really bring out the points I made in my previous post: arbitrarily splitting a time series up into parts generally does not allow one to learn anything.

References

- O. Humlum, J. Solheim, and K. Stordahl, "Identifying natural contributions to late Holocene climate change", Global and Planetary Change, vol. 79, pp. 145-156, 2011. http://dx.doi.org/10.1016/j.gloplacha.2011.09.005

#98 Ray Tomes

Are you aware that with any large data set of any type you can apply computer analysis and find patterns within… the larger the better? Take for example the hypothesis about the bible code.

Without a mechanism all you have is a guess based on the analysis. It has been said that statistics are like bikinis: they show a lot, but often critical components remain hidden.

At the same time, while cycles do exist in natural variability, that does not diminish the impacts and potentials of human induced forcing on the system. It’s too easy to use ‘cycles’ as a red herring distraction to divert attention to a more real and serious problem. And when it comes to economics, it is unwise to ignore real and serious problems.

Fourier prediction of tides has its own interesting history. For one thing, this approach lends itself to solution by an elegant mechanical combination of gears and pulleys. NOAA has its No. 2 tide machine on exhibit in the Rockville, MD headquarters of the National Ocean Survey. It’s not as complicated as Babbages’s mechanical computer, but still a fine sample of mathematical machinery. It takes me only a few seconds to look at a circuit board, but I spent hours admiring the design of the tide machine when I worked for NOAA. Here’s the place to look: http://co-ops.nos.noaa.gov/images/mach1b.gif

The fact that the Greenland record covers the entire Holocene suggests that another test – slightly different to that usefully applied by Rasmus – can be applied to the technique (which is a frequency domain description being extrapolated into a forecast). A 4kyr “training set” can be regressed in 1ka steps back to the start of the Holocene, the major component putative cycles extracted by Fourier analysis, and the effectiveness of the “prediction” for the subsequent 1 ka can then be evaluated. This makes the test closer to the “local” forecast being suggested in this paper. I wouldn’t be too optimistic about it working, though!

The fact that the original dataset covers the entire Holocene suggests another slightly different test for the technique (which is a frequency-domain description extrapolated into a prediction) apart from the one already usefully applied by Rasmus. The 4 kyr “training set” can be taken back in 1 ka steps to the start of the Holocene. A Fourier analysis of each 4 kyr interval can then be used to produce a local “prediction” for the subsequent 1 ka, based on the putative cycles in the preceding 4 kyr. I’m not too optimistic that the method will pass this test, though! It is obviously unsuited to anticipating the effects of unprecedented forcing components, but it would be nice to know whether it works at all for natural background cycles.
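
The test described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `rolling_hindcast`, `fit_harmonics` and `predict` are hypothetical helper names, the series is assumed to be evenly sampled and ordered from oldest to newest, and the cycles are taken to be the strongest Fourier harmonics of each training window. Each forecast is scored against a flat forecast at the training-window mean, so a cycle model that cannot beat the flat line has shown no skill.

```python
import math


def fit_harmonics(window, k):
    """Mean plus the k strongest Fourier harmonics of one training window."""
    n = len(window)
    mean = sum(window) / n
    found = []
    for j in range(1, n // 2):
        c = sum((x - mean) * math.cos(2 * math.pi * j * t / n) for t, x in enumerate(window))
        s = sum((x - mean) * math.sin(2 * math.pi * j * t / n) for t, x in enumerate(window))
        a, b = 2 * c / n, 2 * s / n
        found.append((a * a + b * b, j, a, b))
    found.sort(reverse=True)
    return mean, n, [(j, a, b) for _, j, a, b in found[:k]]


def predict(model, t):
    """Extend the fitted cycles to time t, measured from the start of the window."""
    mean, n, harmonics = model
    return mean + sum(
        a * math.cos(2 * math.pi * j * t / n) + b * math.sin(2 * math.pi * j * t / n)
        for j, a, b in harmonics
    )


def rolling_hindcast(series, train_len, horizon, k=3):
    """Slide a training window along the series and score each forecast of the next block."""
    scores = []
    for start in range(0, len(series) - train_len - horizon + 1, horizon):
        train = series[start:start + train_len]
        target = series[start + train_len:start + train_len + horizon]
        model = fit_harmonics(train, k)
        train_mean = model[0]
        cycle_err = math.sqrt(
            sum((predict(model, train_len + i) - x) ** 2 for i, x in enumerate(target)) / horizon
        )
        flat_err = math.sqrt(sum((train_mean - x) ** 2 for x in target) / horizon)
        scores.append((start, cycle_err, flat_err))
    return scores


if __name__ == "__main__":
    # Sanity check on a series that really is periodic: the cycle model should win easily.
    periodic = [math.sin(2 * math.pi * t / 100) for t in range(1200)]
    for start, cycle_err, flat_err in rolling_hindcast(periodic, train_len=400, horizon=100):
        print(f"window at {start}: cycle RMSE {cycle_err:.3g}, flat RMSE {flat_err:.3g}")
```

For a record like the one discussed here, `train_len` would be the number of samples in 4 kyr and `horizon` the number in 1 ka. The periodic test series only confirms that the procedure rewards genuine cycles; whether a real temperature record passes is exactly the open question.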

Just to add to Eric’s inline response @79, one should also be aware of the 100,000 year problem.

The obliquity correlates to temperature very well between 0.8 million and 2.7 million years ago (41K obliquity world).

However, since 800,000 years ago, the earth started to skip the 2nd and 3rd cycles. Scientists cannot explain why this happens. There are plenty of theories, but very little confidence (hence the name: 100k problem).

Why wasn’t it warm like today 40,000 years ago, instead of being cool?

Thus, there is no clear physical mechanism to explain our current interglacial. The clear explanation became untenable 800,000 years ago.

[Response: The 100kyr ‘problem’ is greatly overstated. Check back here in a day or two and I’ll have a slew of references for you on this point.-eric]

Eric reply to @98: Why do you say that my suggestions are unsubstantiated? The cycles that I have mentioned are found in a variety of proxies. They have been given names and appear frequently in the literature. (more below)

Ray Ladbury @100: Fourier series does not always make good predictions because it does not determine the accurate period. A subtle point perhaps. Determining significant cycle frequencies is interesting because it does allow prediction. Of course for a series like sunspots, predictions are not awfully good for the 11 year cycle because its period is not very stable. But it will tell you about coming turning points. Likewise, the 50 – 60 year cycle in climate is not of stable period. But we can see that it had peaks around 1941 and 1998. So the next trough is expected about 2025 and the following peak around 2055.

Please see http://cyclesresearchinstitute.wordpress.com/2011/07/13/analysis-of-be10-records-as-a-solar-irradiance-proxy/ again, especially the graph of the 207 year component. In the case of this 207 year cycle it does tell us that the cycle was at a low around 1900 and a high around 2000 and will now be contributing a downward trend for the next century whereas it was upward for the last century. This is very important because it will now be opposite to any CO2 effects rather than the same direction.

[Response: Sure. But the magnitude is important — which is my repeated point here. The solar variations are more than an order of magnitude smaller than the greenhouse forcing.–eric]

Isotopius @105: The 100,000 year problem (to explain) is that the 41,000 year component of Milankovitch is expected to dominate over the 100,000 year component. In some past periods (millions of years ago) it did. But now it is the other way around. The fact is that there are some problems with Milankovitch theory.

My suggestion (and I have seen others suggest this too) is that insolation is not the only factor. Another factor could be the variations of the earth’s orbital shape cause it to vacuum clean a different part of the near earth space, picking up more dust and meteorites as the orbit moves into new regions. This would explain why the 100,000 year period has more effect than expected.

Dan @102: Interesting, thanks.

John @101: Bible codes are quite a different thing. Are you suggesting that meaningful codes are found more often than would be suggested by chance? I think not. I am suggesting that cycle periods are found more often than would be expected by chance, and that the ones that I have referred to are accepted as real for that reason. I recommend again, look at http://www.cyclesresearchinstitute.org/dewey/case_for_cycles.pdf which is a well checked and tested result which lists hundreds of time series in many disciplines where significant cycles have been found. This does tell us things about the Universe that are not in the common awareness of scientists looking at only one discipline.

Isotopius @105: I know Eric is going to address this, but Isotopius might like to consider Peter Huybers’ latest paper, in the current edition of Nature, which effectively concedes that precession, tilt and eccentricity all play a role in the climate forcing of the last 1Ma. He doesn’t identify eccentricity as such in the title of the paper, but it is there in his orbital forcing model, as the amplitude modulation of precession, and needed for his model to work. Significantly, this paper is from one of the initiators of a small “we don’t trust Milankovitch tuning” splinter-group within palaeoceanography.

The editorial describes the paper as the first firm quantitative evidence in favour of the Milankovitch theory – in my opinion this is absurd, because the Milankovitch hypothesis was validated by Imbrie, Hays and Shackleton in 1976 and by many papers afterwards – I expect Eric will document them. Huybers’ paper would be better described as the end (for the time being at least) of yet another attempt to challenge the already well established, and justifiably dominant, Milankovitch hypothesis. This is the current model of the governing principle of climate behaviour on 5kyr – 1Ma timescales over the Cenozoic at least.

The “100,000 year problem” has many possible solutions: the difficulty is selecting which one is the best, i.e. closest to reality. Muller and McDonald’s “inclination” model, by the way, isn’t one of the currently viable ones. Strangely, Huybers’ paper characterises the Hays, Imbrie and Shackleton 1976 solution as a “precession only” solution, whereas it manifestly and clearly identified all three Milankovitch components (eccentricity, tilt and precession) as involved. Huybers has in effect admitted it was right all along.

Ray Tomes.

I am a little confused by your comments in this thread. Possibly others are also.

You appear to be discussing cycles in the sun’s activity. But you also stray beyond this @87 where you talk of the question of identifying the different drivers of climate change – human activities & natural cycles. Then without pause for an answer to this question you continue – “The levelling off in temperatures since about 1998 was entirely expected on the basis of the dominant cycles.” This is strong stuff, Ray Tomes.

So can we please go back to your question about how (I assume you are arguing) the sun is affecting the climate in some major or minor way? Otherwise your comments will remain as intractable as them there biblical codes.

Solar and temperature oscillations are not always synchronous, and are often in anti-phase, but that doesn’t exclude the need for further research.

http://www.vukcevic.talktalk.net/CET-GMF.htm

One could claim the above is just a coincidence, but considering that each set of data is the product of averaging daily records, the statistical probability of it being a coincidence is likely to be negligible. It is not claimed that the link is direct, indirect or even two parallel processes with a common cause.

Since the above is not in line with the current understanding, it is worth attention even if the moderator does not approve of the post.

#108 Ray Tomes

Are bible codes really ‘quite a different thing’?

The paper you mention speaks of the influence from a man from Mars. It discusses many different concepts and parameters regarding cycles. But what I am saying is that you can take specific time periods of climate data or all of the climate data and identify all sorts of cycles in it.

But that does not mean you understand why the cycles happen. So some may see cycles in the data and then try to claim why that cycle happens without actually understanding the mechanism. In other words, identifying cycles in data without mechanisms is basically like identifying patterns in the bible through various forms of analysis.

So the man from Mars might see climate patterns on Earth and deduce that something is causing natural fluctuations, but even deductive reasoning may not be able to show the really interesting parts hidden under the bikini, and therefore leave any such claims about mechanism wanting.

But, pertaining to whatever your argument may be, what do natural cycles have to do with anthropogenic forcing and increased radiative forcing or human induced albedo changes or the like?

Let me help you out on this one. Nothing, other than their usefulness in determining the difference between natural variation cycles and human induced forcing and change.

So what I can determine by logical reasoning, using the same logic that is illustrated in the paper you linked to, is that your focus is to hold up natural cycles as if it means something, or possibly means human induced forcing is not occurring and that natural cycles dominate in current climate change…

Of course if that is your position, you’re wrong.

#108 Ray Tomes

My friend was just contemplating your postulation. She said of course there are natural cycles in nature. There is winter and spring, summer and fall. There is night and day. Then she said what does that have to do with anthropogenic global warming?

#108 Ray Tomes

And Ray, the whole point of the article about curve fitting and natural cycles is that it is inappropriate to make strong claims about random fits without mechanism, attribution and supporting physics and observations, unless you are perfectly willing to accept the fact that the confidence in any assumptions indicated by any such ‘curve fitting’ is likely lower in contrast to more relevant methods.

Eric’s reply @106 & MARogers @110: Does anyone doubt that solar fluctuations must translate into climate fluctuations? I presume the question is also related to the question of amplitude. This assumes that we understand all of the mechanisms, which it is generally being stated we do not (e.g. even the Milankovitch cycles). I suggest that if solar proxies and temperature proxies both show the same length cycle of the same phase over a long period of time, then, even if your calculations do not support the amplitude of the temperature variations, there is something going on.

I will give just one possible explanation which no-one has probably serious considered. Suppose that there are cyclical fluxes of energy throughout the Universe which affect both the activity and temperature of the Sun and Earth. In that case a 207 year cycle might appear of the same phase in both. If you then calculate from the Solar “cause” to the Earth “effect” you will get too small an answer, as you do.

Now, this idea is no doubt way out of left field to all climate researchers. But to cycles researchers that is not the case. As Dewey showed in his “The Case for Cycles” paper, and as many others have shown before, contemporaneously and since, there are often found cycles in seemingly unrelated things which have common period and phase – Dewey calls this cycle synchrony. I urge you to look at the evidence for such things before coming to the conclusion that you really understand how the entire universe works. And I refer you back to Dewey’s quote about medicine before the discovery of germs.

Ray Tomes,

I guess that I am not all that impressed when people rediscover–for the nth time–the Fourier series. Cycles and quasi-cycles are visible everywhere we look. Select a period, and probably somewhere you will find something that “sort of” oscillates with that period. If you ignore the physics, you’ll be able to approximate any time series with a few terms. What is missing is the mechanism by which the cycle becomes a forcing. Hell, they don’t even usually bother looking for the mechanism behind the oscillation.

Contrast this approach with that of Foster and Rahmstorf 2011, who consider just the 3 most important “noise” terms and show that they explain the vast majority of the noise about the linear trend in global temperatures. F&R 2011 is scientific modeling; the “fun-with-Fourier” types are simply mathturbating.

#115 Ray Tomes

Milankovitch cycles are quite well understood actually.

So you’re saying things that are not well understood should be considered… that is fair.

What is not fair is to include them in well quantified GCMs. You can’t add something that is unknown.

The current observation and physics are extremely clear. Without increased greenhouse gases, there is no other quantifiable way to reasonably explain current warming.

Also, the amplitude of Schwabe cycles is well understood at this time.

Let me give you just one possible explanation for climate variability. Suppose Tinker Bell really does exist and has great power to alter Earth climate, and every so often in regular cycles does so…

This is not to say that other influences should not be examined, but if you can’t quantify what you are looking at then you’ve still got nothing. But do keep at it.

Ray Tomes @115

You say it is not the sun that is driving these oscillations in the earth’s climate, the oscillations that you said ‘levelled off temperatures since about 1998’. You are proposing that the driver is possibly “cyclical fluxes of energy throughout the Universe” and that is why the effect is greater than the changes in the sun would cause alone.

You urge us “to look at the evidence for such things” which perhaps I would if you point me in the right direction. But don’t rush with those directions as I (and my colleague Higgs Boson), to be able to look, will first have to stop laughing.

And suppose further that you have a pack of organisms that flux up those cycles by injecting a linear spurt of energy into one atmosphere ….

As you say, “I urge you to look at the evidence for such things before coming to the conclusion that you really understand how the entire universe works.”

> no-one has probably serious considered ….

> that there are cyclical fluxes of energy

> throughout the Universe

Epicycles.

Ray Tomes @ 115

Universal Business Time Cube explains climate:

Now seriously, you may be able to help yourself out if you go back and re-read what people have been telling you about curve fitting. Only this time, play devil’s advocate against yourself and try to understand the topic from their point of view assuming that they may actually be on to something and are not somehow simply closed minded.

Speculation is a fun way to get the juices going, but too much impatient fantasizing and you start to part company with reality– slow to discern and often unfriendly though it may be.

Evidence: It’s a good thing.

Fortunately, we now are entering into the 23rd year of an out of sample test (Hansen, 1988)

Of course, the only tests to base policy on are out of sample tests.

Several of my replies have not appeared. Please at least tell people that you are censoring me if you are, so that they do not think that I am not answering.

[Response: Repetitive and uninformative comments go to the Bore Hole in order to maintain signal to noise. – gavin]

Repeatedly people say “curve fitting” and such. Yes, all science is in fact curve fitting if you think about it. The question is, is it statistically significant or not? Those that say I did not understand the accusations have ignored this point. If a cycle has p<0.0001 chance of happening by chance then it makes no sense to refer to it as curve fitting. It is very likely real. Likewise, if a cycle shows in a variety of different time series with same period and each p<0.01 say. I trained in statistics and can assure you that Dewey's findings stand up to very close scrutiny.

A question for you: Can you explain why about a dozen different species around the world show a population cycle of 9.6 years, all with the same phase? In the case of Canadian Lynx, the population grows to about 10x what it was and collapses again each cycle. Please do not tell me it is a predator prey cycle with snowshoe rabbits, because you will not be able to explain all the other species with that sort of thinking. Coincidentally, there is evidence for a cycle of 9.6 years in ozone with same phase. Why? There are things going on that do not vaguely fit any accepted human "science" models.

[Response: I’m a little confused here – calculating significance with respect to a reasonable null hypothesis is very much a human ‘science’ model, and yet you appear to be claiming that your results lie outside of that. That seems contradictory. But regardless, all science is not ‘curve fitting’ – it is based on finding physical explanations for phenomena, not randomly correlating things to numerological fantasies. – gavin]

Ray Tomes, to contend that all science is curve fitting betrays a deep misunderstanding of what science is and how it is done. A p<0.0001 means nothing if you are using the wrong probability model. What you are doing is just a poor approximation of the first stage of scientific inquiry–exploratory data analysis. Moreover, you are applying only a tiny fraction of the tools available for EDA, so you are doing a poor job of that.
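
The dependence of a p-value on the probability model can be illustrated with a small Monte Carlo experiment. The Python sketch below is a hypothetical example, not a reanalysis of any series discussed in this thread: it generates persistent AR(1) noise that contains no cycle, takes the strongest periodogram peak, and estimates how often an equally strong peak arises under two null models, uncorrelated white noise and red noise with the same lag-1 autocorrelation as the data. All function names and parameter values (`phi=0.9`, 64 points, 200 surrogates) are assumptions chosen for illustration.

```python
import math
import random


def peak_fraction(series):
    """Share of the periodogram power carried by the single strongest harmonic."""
    n = len(series)
    mean = sum(series) / n
    powers = []
    for j in range(1, n // 2):
        c = sum((x - mean) * math.cos(2 * math.pi * j * t / n) for t, x in enumerate(series))
        s = sum((x - mean) * math.sin(2 * math.pi * j * t / n) for t, x in enumerate(series))
        powers.append(c * c + s * s)
    return max(powers) / sum(powers)


def ar1(n, phi, rng):
    """AR(1) noise: each value keeps a fraction phi of the previous one (phi=0 is white noise)."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out


def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation, used to match the red-noise null to the data."""
    n = len(series)
    mean = sum(series) / n
    num = sum((series[t] - mean) * (series[t - 1] - mean) for t in range(1, n))
    den = sum((x - mean) ** 2 for x in series)
    return num / den


def monte_carlo_p(observed, phi, n, rng, trials=200):
    """Fraction of surrogate series whose strongest peak is at least as large as observed."""
    hits = sum(peak_fraction(ar1(n, phi, rng)) >= observed for _ in range(trials))
    return hits / trials


def compare_nulls(seed=1, n=64, phi=0.9):
    """Return the p-value of the same peak under a white-noise null and a red-noise null."""
    rng = random.Random(seed)
    data = ar1(n, phi, rng)  # persistent noise with no built-in cycle
    observed = peak_fraction(data)
    p_white = monte_carlo_p(observed, 0.0, n, rng)
    p_red = monte_carlo_p(observed, lag1_autocorrelation(data), n, rng)
    return p_white, p_red


if __name__ == "__main__":
    p_white, p_red = compare_nulls()
    print(f"p under white-noise null: {p_white:.3f}")
    print(f"p under red-noise null:   {p_red:.3f}")
```

The same peak will typically look far more significant against the white-noise null than against the red-noise null, even though the data contain no cycle at all. The number attached to a cycle is only as meaningful as the null model behind it.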

As to your contention about 9.6 years, google the law of large numbers. Or better yet, actually do some work and look into the population biology of the Canadian Lynx and try to understand the mechanism. Then you would be a lot closer to doing science. Right now, you're just a friggin' Pythagorean.

A cautionary blog post — remember this when you hear “hockey stick” — it’s a visual illusion, one of the common ways we fool ourselves about rate of change. There’s no point out in the future to worry about. It’s here, now.

http://www.kazabyte.com/2011/12/we-dont-understand-exponential-functions.html

Excerpt follows:

—————-

… there seems to be a fundamental misunderstanding about the properties of an exponential function. And the lack of a fundamental understanding of the exponential have grave consequences — we may draw incorrect conclusions that have huge errors because of it. Albert A. Bartlett perhaps said it best, “The greatest shortcoming of the human race is our inability to understand the exponential function.”

…

There is no such thing as a knee to an exponential function.

…

It’s not completely clear to me why we are fooled. But basically, I think it has something to do with the idea that regardless of where your observation point is, looking “left” always looks flat and small, while looking “right” always looks steep.

Not understanding the power of the exponential will result in us making bad decisions. …the “knee of the of curve” (or where the curve “hockey sticks”). “Where is it?” you are asked. You should think carefully about how you are going to answer this question that doesn’t quite make sense. It’s quite the “gotcha.”
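
The point about there being no knee can be checked numerically. The short Python sketch below (the function name and the growth rate of 0.07 are arbitrary assumptions) scales a window of an exponential curve by its value at the end of the window. The scaled shape is the same wherever the window sits, because exp(k(t − a)) / exp(kt) = exp(−ka) does not depend on t.

```python
import math


def relative_shape(rate, window_end, window_length, points=5):
    """Values of exp(rate * t) across a window, divided by the value at the window's end."""
    end_value = math.exp(rate * window_end)
    step = window_length / (points - 1)
    start = window_end - window_length
    return [math.exp(rate * (start + i * step)) / end_value for i in range(points)]


if __name__ == "__main__":
    # Three observers at different times each look back over the same span.
    for end in (10.0, 50.0, 90.0):
        print(end, [round(v, 3) for v in relative_shape(0.07, end, 10.0)])
```

Every observer sees the same curve: flat behind and steep ahead, with no special point where the growth takes off.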

Simon C.

Thank you for highlighting Huybers’ latest paper. I read it all, and it was interesting. It takes a while to get your head around, but I think this analogy helps:

‘Nearly all anomalously cool summer days are associated with significant cloud cover, however, well over two-thirds of days with significant cloud cover are not associated with anomalously cool summer days.’

….So major deglaciation nearly always coincides with insolation maxima, however, over two-thirds of the time insolation maxima are not associated with major deglaciation events.

108, Ray Tomes,

This link was broken:

http://www.cyclesresearchinstitute.org/dewey/case_for_cycles.pdf

91, Ray Ladbury: It is by no means necessary to understand everything to make reliable predictions.

I agree wholeheartedly!

What is necessary is to accumulate a bunch of independent tests to confirm that the predictions are reliable enough for the intended purposes. How large a “bunch” is necessary, and how reliable is “reliable enough for the intended purposes” are next to impossible to specify a priori. To date, climate predictions a few years in advance have not been reliable enough for planning purposes anywhere on earth (witness the Queensland Australia mistaken decision not to enlarge their dam and reservoir system, for one example.)

Ray Tomes’s contention, to which you objected, could be toned down a little to: unknown parts of the total mechanism might be influential enough that our ignorance of them makes the predictions of our models too inaccurate for practical purposes. Toned down that way, I doubt that you could make a case that the point is demonstrably false.

90, John P. Reisman: Funny how those that are most confused about climate change often claim the kettle is black while simultaneously… such as it’s cooling and we are heading back to an ice age, or it’s warming, but it’s natural cycle…

Can you answer this for me: How can it be cooling and warming at the same time?

Do you have examples of the first claim?

It can be warming in some places and cooling in other places. It can be alternately warming and cooling.

OR,

We can have some evidence of cooling, and have some evidence of warming, and not know whether there is any net warming or cooling for a span of time.

63, mike: Surely you do not want us to think of you as a turd? Or is this an attempt at irony? If it is, I have to tell you that the precise meaning of your irony laden name evades me.

A “septic skeptic” is a skeptic who repeatedly doesn’t accept the truth of assertions (refutations, explanations, etc) of AGW promoters. That fairly characterizes me. I chose “Septic Matthew” to distinguish myself from another “Matthew”; I took the insult into my monicker the same as others adopted the insults “Yankee”, “Knickerbocker”, “Hoosier”, “Dodger” and others.

#129–In my experience, John is correct; many garden-variety faux skeptics do indeed maintain rhetorical positions that are internally inconsistent. That’s what convinced me, more than anything else, that the mainstream has it more or less right.

The clearest public example of ‘warming and cooling’ (IMO) was Monckton in 2006 with the infamous ‘no SUVs in space’ gotcha. He was arguing, in essence, that Earth wasn’t warming, but Mars was warming at the same rate that Earth was.

Other inconsistencies I’ve noted over the years include selective approval of the use of modeling techniques (‘climate models bad, DMI high Arctic temps [based upon reanalysis data] good‘), selective views of climate sensitivity, redefining IR absorption and re-emission to be equivalent to IR reflection (I think that was the late John Daly), and of course the ever-popular temporal inconsistency of the moving goal post.

The irony of the current discussion is that you can claim that not only can it be ‘warming and cooling’ at the same time, it almost always is–that is, on different time-scales.

For example, where I am, it’s clearly cooling right now as a result of normal season changes, but it is just as clearly warming due to cyclic diurnal variability. (And, based upon the HadCRU monthly means I glanced at yesterday, it may also be cooling on a yearly scale while warming on a monthly scale, due to ENSO-related effects and other sorts of ‘natural variability.’) At the same time, I have every reason to think that the GE-driven warming trend–robust over lo, these thirty-plus years–continues unabated. Yet has the Holocene cooling trend really ended?

And so on. This may seem a pointless bit of Alice-in-wonderland fantasizing, but perhaps it’s worth reminding some of us what a world of epistemological hurt can be carried in that little word “is.” It’s very apt to make unwitting Platonists of us all–that is, to make us think that there is a simple, easily-stated core reality which trumps all else. What, we ask plaintively, is the temperature really doing? (And let’s spare a thought for the undefined “it” as well, since that’s what let me sneakily conflate global and local temps in the preceding paragraph.)

What we really want to know, of course, is not what it ‘is doing,’ but what is going to happen next. And that requires us to specify–in this particular Atlanta suburb, it’s going to get warmer for the next several hours, get cooler for the next several weeks (though with lots of ‘noise,’ and correspondingly low confidence.) Globally, it will probably warm over the next several months as La Nina fades, and will continue to warm over multi-decadal timescales due to greenhouse forcing.

The trouble is, now we’re starting to talk a bit like scientists ourselves, which means we’re starting to lose folks who just want to know what the hell it’s really doing.

Re: Warming/Cooling on different timescales

Fig. 4 in Hansen’s latest is a beautiful depiction of the evolution of temperature distribution over the last 60 years.

http://www.columbia.edu/~jeh1/mailings/2011/20111110_NewClimateDice.pdf

sidd

131, Kevin McKinney,

That’s a good comment.

Has the “Holocene cooling” been widely accepted as real? I thought that it was based mostly on a select few data series of disputed applicability to global average trends. Has the “Holocene cooling” ended?

> climate predictions a few years in advance have not been reliable enough

Which ones, by whom, for whom?

http://www.cpc.ncep.noaa.gov/products/expert_assessment/

climate predictions a few years in advance have not been reliable enough

…

How about Hansen’s 1988 forecast, which has temps trending below Scenario C (no growth in emissions from 2000 forward)?

[Response: So… Let’s say that yesterday I predicted that *if* it rained today, most people would have carried red umbrellas. But it didn’t rain – that implies that my prediction about the colour of people’s umbrellas is moot, since it was contingent on it raining (which it didn’t). Scenario C is similar – you cannot validate a prediction for which the contingent factors did not occur. Thus Scenario B is the closest one can come to a prediction that is testable, and we have examined that on numerous occasions. – gavin]

I don’t know if the last went through; so I’ll be brief

Can you direct me to a quantitative analysis of the forecast? I understand that Scenario B is closest to what occurred; the link you gave me just states the obvious, that temps are running low. Well, they are running below C as well (just as an easy benchmark for eyeball analysis).

You could say we got the temps expected in C but without the economic pain that C would have brought. A win/win.

So it’s not about C vs B; it’s about how good was B. C happens to be an easy comparison. My prior (based on the provided data) is that the forecast performed poorly, since temps are below what was predicted for a scenario with fewer forcings.

But analysis is needed; what sort of diagnostics have been performed on the 1988 Hansen forecast? And I don’t mean the calibrations that the link describes, since that just accepts the forecast and adjusts it ex post.

[Response: See Hargreaves (2010) – the prediction had skill (i.e. it was more informative than any reasonable ‘naive’ forecast that anyone was proposing in 1988). Additionally, the forecast would have been better if the model had a sensitivity closer to 3ºC – the value independently determined to be the most likely. – gavin]
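For readers wondering what “skill” means quantitatively here: one common convention scores a forecast by its error relative to a naive reference, such as a no-change (persistence) forecast. The sketch below assumes an RMSE-based form, 1 - E_forecast / E_reference. The function names and the numbers are made up purely for illustration; they are not the Hansen forecast, not observations, and not necessarily the exact metric used in the cited analysis.

```python
import math

def rmse(predicted, observed):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))

def skill_score(forecast, reference, observed):
    """1 - E_forecast / E_reference: positive means the forecast beats the reference."""
    return 1.0 - rmse(forecast, observed) / rmse(reference, observed)

# Toy anomalies, invented for illustration only (not real data).
observed = [0.0, 0.1, 0.3, 0.2, 0.4]
forecast = [0.0, 0.2, 0.3, 0.4, 0.5]
persistence = [0.0] * len(observed)  # naive "no change" reference

skill = skill_score(forecast, persistence, observed)
print(f"skill relative to persistence: {skill:.2f}")
```

A score of 1 is a perfect forecast, 0 is no better than the reference, and negative values are worse than the reference. Note that a forecast can run warm and still score above zero, which is the distinction being drawn in the response above.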

#133–I don’t know how bombproof “Holocene cooling” is these days, though I don’t have the sense that it’s terribly controversial in general.

Just to expand for those who may know even less about it than I do, the general idea is that the Holocene era–the current one, pending official certification of the “Anthropocene”–reached its peak temperature around eight thousand years ago, and global climate has gradually been cooling since. (An inconvenient perspective for those who try to claim that “Of course we’re warming, we’re recovering from an Ice Age!”)

Robert Rodale prepared the figure below on the question back in 2004; I’m not sure how different the picture would look today. Perhaps someone will chime in with the latest research news on the topic.

http://www.globalwarmingart.com/wiki/File:Holocene_Temperature_Variations_Rev_png

Note the arrow to 2004 temps at the right of the figure.

> globalwarmingart

by Robert Rohde, who recently got his PhD and who has become a bit better known since then.

#137–Ack. Indeed. Must have got gardening on the brain. . .

Thanks; I’ll have a look at the link

I’ll note another prior: being better than a naive forecast does not mean a model is good enough to make policy decisions on. Plus, the model needs to be better than the forecast made in 1988.

Happy New Year!

From Hargreaves:

In the first section, it was argued that it is impossible to assess the skill (in the conventional sense) of current climate forecasts. Analysis of the Hansen forecast of 1988 does, however, give reasons to be hopeful that predictions from current climate models are skillful

You stated that there is some ‘skill’; Dr Hargreaves said there’s hope that there might be.

Is there an actual quantitative analysis of the Hansen forecast?

[Response: Please don’t play games. It reduces any possibility that you will get a substantive response to almost exactly zero. (For the hard of reading Hargreaves is referring to the impossibility of directly assessing the skill of a ~20 year forecast prior to the 20 years actually happening). – gavin]

Number9:

As gavin said above: “the forecast would have been better if the model had a sensitivity closer to 3ºC – the independently determined to be the most likely value.” (I believe the sensitivity in the late 80s model Hansen was using was closer to 4C).

Implicit in his comment is that 20-odd years later, models like NASA GISS Model E *do* exhibit climate sensitivity near 3C (just slightly less in the case of Model E, IIRC), and also fit observations quite well in a lot of other ways.

This “about 3C” sensitivity is constrained by a bunch of data and has been independently derived by a bunch of different groups employing a bunch of different methods including a bunch that don’t use GCMs at all.

So, many paths lead to the conclusion that modern models are getting sensitivity about right, while Hansen’s was a smidge high. For work done over two decades ago (today’s smart phones have computational power equivalent to a pretty sizable research computer from the 1980s) it’s held up remarkably well.

Number9:

Nor does it mean that totally ignoring science is a sound basis for making policy decisions on, which would seem to be your choice …

Suppose you have two identical pendulums. One of them is connected to a mechanism which regulates mechanical energy delivered to the pendulum depending on the position of the pendulum – an escapement mechanism in a grandfather clock. The other pendulum has random bits of energy delivered to it by a paddle poking into a randomly turbulent airflow. If you record the displacement of the pendulums versus time over a few cycles, and perform FFTs on the data sets, both will show a strong peak at the pendulum frequency. However, the pendulum driven by the airflow is not an oscillator (assuming the swing of the pendulum doesn’t change the coupling of the paddle to the airflow); it’s a noise source, with the high and low frequency components filtered out by the pendulum.
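The noise-driven pendulum is easy to try numerically. Here is a minimal sketch, assuming the lightly damped pendulum can be approximated by a second-order autoregressive (AR(2)) process kicked by white noise; the frequency, damping, time step and function names are all arbitrary choices for illustration.

```python
import numpy as np

def noise_driven_pendulum(n_steps, f0, damping, dt, rng):
    """Lightly damped pendulum kicked by white noise, written as an AR(2) process."""
    r = np.exp(-damping * dt)        # per-step amplitude decay
    theta = 2.0 * np.pi * f0 * dt    # per-step phase advance
    a1, a2 = 2.0 * r * np.cos(theta), -r * r
    kicks = rng.standard_normal(n_steps)  # no periodic forcing anywhere
    x = np.zeros(n_steps)
    for n in range(2, n_steps):
        x[n] = a1 * x[n - 1] + a2 * x[n - 2] + kicks[n]
    return x

def peak_frequency(x, dt):
    """Frequency of the largest periodogram peak, excluding zero frequency."""
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    freqs = np.fft.rfftfreq(len(x), d=dt)
    return freqs[1:][np.argmax(power[1:])]

rng = np.random.default_rng(0)
x = noise_driven_pendulum(n_steps=20000, f0=0.5, damping=0.05, dt=0.1, rng=rng)
f_peak = peak_frequency(x, dt=0.1)
print(f"spectral peak at {f_peak:.3f} Hz; pendulum frequency is 0.5 Hz")
```

The input is pure white noise, yet the spectrum peaks near the pendulum frequency. The peak alone says nothing about whether a periodic driver exists, and extrapolating a sine wave fitted to this record would have no predictive value beyond the decay time of the pendulum.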

Now let’s replace the pendulum coupled to the turbulent airflow with a balanced stick – that has no natural frequency, and no elastic force like gravity. Couple the paddle to the stick with a leaky piston, so that low frequency perturbations are suppressed. Put a mechanism that turns the paddle for preferential coupling of turbulence that returns the stick to center, proportional to the displacement of the stick from center. The limit of force that can be generated by the paddle, combined with the mass it’s driving, will filter out high frequency motions. Perform an FFT on the stick motion, and you’ll see a “peak” that looks the same as if there were some periodic mechanism involved, when there is only noise and dissipative filtering.

Because of the filtering, you can’t even tell if the turbulence noise is red, pink, or white. The climate also has very regular periodic drivers (diurnal, seasonal, Milankovitch cycles) that couple into noisy processes (Hadley & Ferrel circulation, AMOC, NAO, ENSO). The irregular landforms and distribution of surface features, and the chaotic nature of fluid (atmospheric and oceanic) flow create noisy drivers, that couple into filtering mechanisms that result in “periodic” phenomena, like ocean waves, and probably ENSO. The warmest part of a day is usually in the afternoon, and the warmest days occur in the summer, but the temperature trajectories aren’t neat sine waves of constant amplitude and phase. It may be impossible to tell from mathematical manipulations of limited data whether there is a periodic driver and noisy filtering process, or vice versa. I think that most climate cyclists don’t appreciate the difference.

There is also the issue of relaxation oscillators. They are characterized by a rate limited source (of energy or mass), a storage mechanism, and a triggerable mechanism or switch which quickly empties the storage – common examples are tipping buckets, neon lamp oscillators, and glacial cycles. One unifying feature is asymmetry of the rates of charge and discharge. Another is the ease with which their behavior can be made chaotic. Start at the Wikipedia entries on the Van der Pol oscillator and on chaos. Fig 10-8 here looks a lot like glacial cycles.
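The tipping bucket is the simplest of these to sketch. The toy model below assumes a constant fill rate, a fixed capacity that trips the switch, and a much faster constant drain rate; all parameter values and names are invented for illustration and are not fitted to any physical system.

```python
def tipping_bucket(fill_rate, drain_rate, capacity, dt, n_steps):
    """Toy relaxation oscillator: slow fill, fast drain once the bucket tips."""
    level, draining = 0.0, False
    levels, fill_steps, drain_steps = [], 0, 0
    for _ in range(n_steps):
        if draining:
            level = max(level - drain_rate * dt, 0.0)
            drain_steps += 1
            if level == 0.0:
                draining = False  # bucket rights itself and starts refilling
        else:
            level = min(level + fill_rate * dt, capacity)
            fill_steps += 1
            if level == capacity:
                draining = True   # threshold reached: the switch trips
        levels.append(level)
    return levels, fill_steps, drain_steps

levels, fill_steps, drain_steps = tipping_bucket(
    fill_rate=1.0, drain_rate=20.0, capacity=10.0, dt=0.01, n_steps=100_000)
print(f"time filling / time draining = {fill_steps / drain_steps:.1f}")
```

The resulting sawtooth spends roughly twenty times longer charging than discharging, which is the asymmetry mentioned above. A Fourier fit would represent it as a fundamental plus a train of harmonics, none of which corresponds to a separate physical cycle.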
