It is a good tradition in science to gain insights and build intuition with the help of thought-experiments. Let’s perform a couple of thought-experiments that shed light on some basic properties of the statistics of record-breaking events, like unprecedented heat waves. I promise it won’t be complicated, but I can’t promise you won’t be surprised.

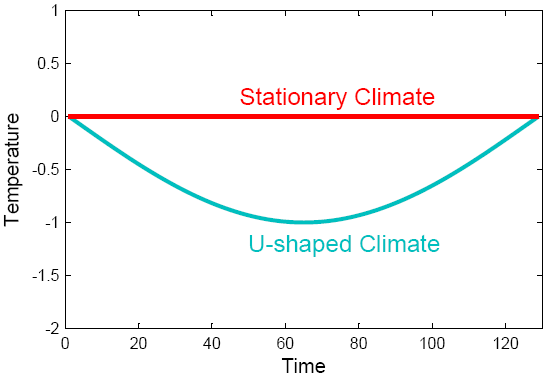

Assume there is a climate change over time that is U-shaped, like the blue temperature curve shown in Fig. 1. Perhaps a solar cycle might have driven that initial cooling and then warming again – or we might just be looking at part of a seasonal cycle around winter. (In fact what is shown is the lower half of a sinusoidal cycle.) For comparison, the red curve shows a stationary climate. The linear trend in both cases is the same: zero.

Fig. 1. Two idealized climate evolutions.

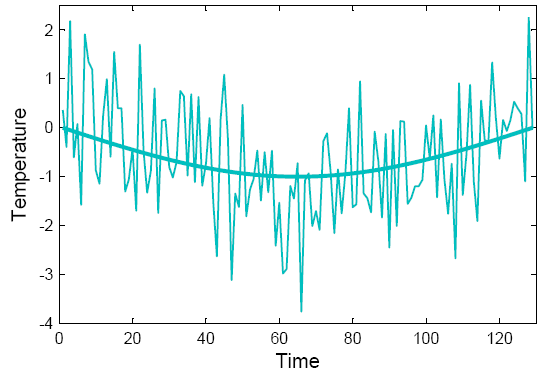

These climates are both very boring and look nothing like real data, because they lack variability. So let’s add some random noise – stuff that is ubiquitous in the climate system and usually called ‘weather’. Our U-shaped climate then looks like the curve below.

Fig. 2. “Climate is what you expect, weather is what you get.” One realisation of the U-shaped climate with added white noise.

So here comes the question: how many heat records (those are simply data points warmer than any previous data point) do we expect on average in this climate at each point in time? And how does that compare with the number we expect in the stationary climate? Don’t look at the solution below – first try to guess what the answer might look like, shown as the ratio of records in the changing vs. the stationary climate.

When I say “expected on average” this is like asking how many sixes one expects on average when rolling a dice a thousand times. An easy way to answer this is to just try it out, and that is what the simple computer code appended below does: it takes the climate curve, adds random noise, and then counts the number of records. It repeats that a hundred thousand times (which just takes a few seconds on my old laptop) to get a reliable average.

For the stationary climate, you don’t even have to try it out. If your series is n points long, then the probability that the last point is the hottest (and thus a record) is simply 1/n. (Because in a stationary climate each of those n points must have the same chance of being the hottest.) So the expected number of records per time step declines as 1/n along the time series.
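The symmetry argument can be checked by brute force. The following is a minimal Python sketch (the post's own code is MATLAB; the function names here are illustrative and not from the original). It enumerates every ordering of n distinct values to confirm the 1/n rule for small n, then sums 1/n over points 120 to 129 of a 129-point series, the length used in the code at the end of this post, to get the stationary expectation for the final decade.

```python
from fractions import Fraction
from itertools import permutations


def prob_last_is_record(n):
    """Exact probability that the last of n exchangeable values is the largest,
    found by enumerating all orderings (only practical for small n)."""
    orderings = list(permutations(range(n)))
    hits = sum(1 for p in orderings if p[-1] == n - 1)
    return Fraction(hits, len(orderings))


def expected_records(first, last):
    """Expected number of records among points first..last (1-based, inclusive)
    of a stationary series: the sum of 1/n over those positions."""
    return sum(Fraction(1, n) for n in range(first, last + 1))


if __name__ == "__main__":
    for n in range(1, 7):
        print(n, prob_last_is_record(n))
    # Final decade of a 129-point stationary series
    print(float(expected_records(120, 129)))
```

The last line prints roughly 0.08, i.e. about 8 records per hundred series of this length.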

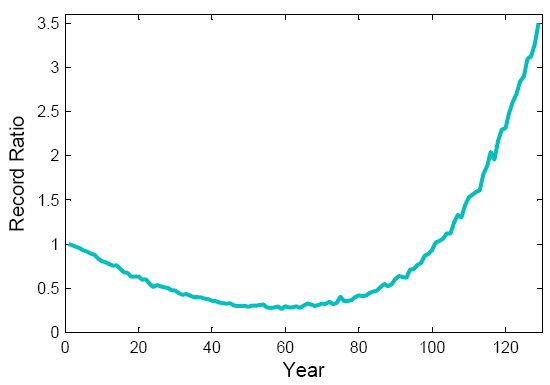

Ready to look at the result? See next graph. The expected record ratio starts off at 1, i.e., initially the number of records is the same in both the U-shaped and the stationary climate. Subsequently, the number of heat records in the U-climate drops down to about a third of what it would be in a stationary climate, which is understandable because there is initial cooling. But near the bottom of the U the number of records starts to increase again as climate starts to warm up, and at the end it is more than three times higher than in a stationary climate.

Fig. 3. The ratio of records for the U-shaped climate to that in a stationary climate, as it changes over time. The U-shaped climate has fewer records than a stationary climate in the middle, but more near the end.

So here is one interesting result: even though the linear trend is zero, the U-shaped climate change has greatly increased the number of records near the end of the period! Zero linear trend does not mean there is no climate change. About two thirds of the records in the final decade are due to this climate change; only one third would also have occurred in a stationary climate. (The numbers of course depend on the amplitude of the U as compared to the amplitude of the noise – in this example we use a sine curve with amplitude 1 and noise with standard deviation 1.)
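For readers who would rather experiment than guess, here is a rough Python sketch of the Monte Carlo experiment just described. It is an illustration under stated assumptions, not the code used for Fig. 3: the series length of 129 points, the 5,000 realisations, the fixed seed and the function names are all choices made here.

```python
import math
import random


def record_counts(trend, n_series, rng):
    """Count, for each time step, how often it sets a new heat record
    across n_series realisations of trend + unit white noise."""
    counts = [0] * len(trend)
    for _ in range(n_series):
        t_max = -math.inf
        for j, mu in enumerate(trend):
            value = mu + rng.gauss(0.0, 1.0)
            if value > t_max:      # warmer than every previous point
                t_max = value
                counts[j] += 1
    return counts


def record_ratio(trend, n_series=5000, seed=0):
    """Monte Carlo record probability at each step, divided by the
    stationary value 1/(j+1) for the (j+1)-th point."""
    rng = random.Random(seed)
    counts = record_counts(trend, n_series, rng)
    return [c / n_series * (j + 1) for j, c in enumerate(counts)]


def mean(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    n = 129
    # Lower half of a sine cycle: amplitude 1, zero linear trend
    u_shape = [-math.sin(math.pi * j / (n - 1)) for j in range(n)]
    ratio = record_ratio(u_shape)
    print("start :", round(ratio[0], 2))   # the first point is always a record
    print("middle:", round(mean(ratio[60:70]), 2))
    print("end   :", round(mean(ratio[-10:]), 2))
```

With so few realisations the ratios are noisy, especially in the middle of the series where records are rare; increasing `n_series` smooths them out at the cost of run time.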

A second thought-experiment

Next, pretend you are one of those alarmist politicized scientists who allegedly abound in climate science (surely one day I’ll meet one). You think of a cunning trick: how about hyping up the number of records by ignoring the first, cooling half of the data? Use only the second half of the data in the analysis, and you get a strong linear warming trend instead of zero trend!

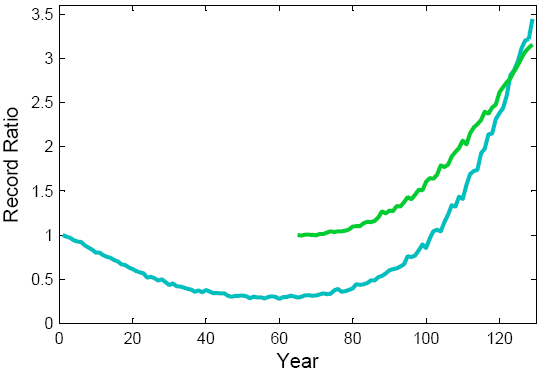

Here is the result shown in green:

Fig. 4. The record ratio for the U-shaped climate (blue) as compared to that for a climate with an accelerating warming trend, i.e. just the second half of the U (green).

Oops. You didn’t think this through properly. The record ratio – and thus the percentage of records due to the climatic change – near the end is almost the same as for the full U!

The explanation is quite simple. Given the symmetry of the U-curve, the expected number of records near the end has doubled. (The last point has to beat only half as many previous points in order to be a record, and in the full U each climatic temperature value occurs twice.) But for the same reason, the expected number of records in a stationary climate has also doubled. So the ratio has remained the same.

If you try to go to even steeper linear warming trends, by confining the analysis to ever shorter sections of data near the end, the record ratio just drops, because the effect of the shorter series (which makes records less ‘special’ – a 20-year heat record simply is not as unusual as a 100-year heat record) overwhelms the effect of the steeper warming trend. (That is why using the full data period rather than just 100 years gives a stronger conclusion about the Moscow heat record despite a lesser linear warming trend, as we found in our PNAS paper.)
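The effect of truncating the series can also be checked without random numbers. The Python sketch below swaps the Monte Carlo approach for direct numerical integration (an assumption of this example, not the method of the paper). For Gaussian white noise with unit standard deviation, the probability that the last point beats all earlier ones is the integral over x of pdf(x - mu_last) times the product of cdf(x - mu_i) over the earlier points. The sketch looks only at the final point rather than the final decade, and the window lengths, grid settings and function names are illustrative.

```python
import math


def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def last_point_record_prob(trend, lo=-8.0, hi=8.0, step=0.02):
    """P(last point exceeds all earlier ones) for trend + unit white noise,
    by a simple Riemann sum over a regular grid in x."""
    n_steps = int(round((hi - lo) / step))
    total = 0.0
    for k in range(n_steps + 1):
        x = lo + k * step
        term = norm_pdf(x - trend[-1])
        for mu in trend[:-1]:
            term *= norm_cdf(x - mu)
        total += term * step
    return total


def record_ratio_at_end(trend):
    """Record probability of the last point relative to the stationary 1/n."""
    return last_point_record_prob(trend) * len(trend)


if __name__ == "__main__":
    n = 129
    u_shape = [-math.sin(math.pi * j / (n - 1)) for j in range(n)]
    for length in (129, 65, 33, 17):
        window = u_shape[-length:]   # keep only the last `length` points
        print(length, round(record_ratio_at_end(window), 2))
```

If the argument above holds, the ratios for 129 and 65 points should come out similar, and the shorter windows should give progressively smaller ratios.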

So now we have seen examples of the same trend (zero) leading to very different record ratios; we have seen examples of very different trends (zero and non-zero) leading to the same record ratio, and we have even seen examples of the record ratio going down for steeper trends. That should make it clear that in a situation of non-linear climate change, the linear trend value is not very relevant for the statistics of records, and one needs to look at the full time evolution.

Back to Moscow in July

That insight brings us back to a more real-world example. In our recent PNAS paper we looked at global annual-mean temperature series and at the July temperatures in Moscow. In both cases we find the data are not well described by a linear trend over the past 130 years, and we fitted smoothed curves to describe the gradual climate changes over time. In fact both climate evolutions show some qualitative similarities, i.e. a warming up to ~1940, a slight subsequent cooling up to ~1980 followed by a warming trend until the present. For Moscow the amplitude of this pattern is just larger, as one might expect (based on physical considerations and climate models) for a northern-hemisphere continental location.

NOAA has in a recent analysis of linear trends confirmed this non-linear nature of the climatic change in the Moscow data: for different time periods, their graph shows intervals of significant warming trends as well as cooling trends. There can thus be no doubt that the Moscow data do not show a simple linear warming trend since 1880, but a more complex time evolution. Our analysis based on the non-linear trend line strongly suggests that the key feature that has increased the expected number of recent records to about five times the stationary value is in fact the warming which occurred after 1980 (see Fig. 4 of our paper, which shows the absolute number of expected records over time). Up until then, the expected number of records is similar to that of a stationary climate, except for an earlier temporary peak due to the warming up to ~1940.

This fact is fortunate, since there are question marks about the data homogeneity of these time series. Apart from the urban adjustment problems discussed in our previous post, there is the possibility for a large warm bias during warm sunny summers in the earlier part, because thermometers were then not shaded from reflected sunlight – a problem that has been well-documented for pre-1950 instruments in France (Etien et al., Climatic Change 2009). Such data issues don’t play a major role for our record statistics if these are determined mostly by the post-1980 warming. This post-1980 warming is well-documented by satellite data (shown in Fig. 5 of our paper).

It ain’t attribution

Our statistical approach nevertheless is not in itself an attribution study. This term usually applies to attempts to attribute some event to a physical cause (e.g., greenhouse gases or solar variability). As Martin Vermeer rightly said in a comment to our previous post, such attribution is impossible by only analysing temperature data. We only do time series analysis: we merely split the data series into a ‘trend process’ (a systematic smooth climate change) and a random ‘noise process’ as described in time-series textbooks (e.g. Mudelsee 2010), and then analyse what portion of record events is related to either of these. This method does not say anything about the physical cause of the trend process – e.g., whether the post-1980 Moscow warming is due to solar cycles, an urban heat island or greenhouse gases. Other evidence – beyond our simple time-series analysis – has to be consulted to resolve such questions. Given that it coincides with the bulk of global warming (three quarters of which occurred from 1980 onwards) and is also predicted by models in response to rising greenhouse gases, this post-1980 warming in Russia is, in our view, very unlikely to be due just to natural variability.

A simple code

You don’t need a 1,000-page computer model code to find out some pretty interesting things about extreme events. The few lines of MATLAB code below are enough to perform the Monte Carlo simulations leading to our main conclusion regarding the Moscow heat wave – plus allowing you to play with the idealised U-shaped climate discussed above. The code takes a climate curve of 129 data points – either half a sinusoidal curve or the smoothed July temperature in Moscow 1881-2009 as used in our paper – and adds random white noise. It then counts the number of records in the last ten points of the series (i.e. in the last decade of the Moscow data). It does that 100,000 times to get the average number of records (i.e. the expected number). For the Moscow series, this code reproduces the calculations of our recent PNAS paper. In a hundred tries we find on average 41 heat records in the final decade, while in a stationary climate it would just be 8. Thus, the observed gradual climatic change has increased the expected number of records about 5-fold. This is just like using a loaded dice that rolls five times as many sixes as an unbiased dice. If you roll one six, there is then an 80% chance that it occurred because the dice is loaded, while there is a 20% chance that this six would have occurred anyway.
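The loaded-dice arithmetic fits in one line. As a small illustration in Python (the function name is made up for this example), the share of records attributable to the trend is the excess over the stationary expectation divided by the total:

```python
def fraction_due_to_trend(expected_with_trend, expected_stationary):
    """Share of expected records that would not have occurred without the trend."""
    return (expected_with_trend - expected_stationary) / expected_with_trend


if __name__ == "__main__":
    # Numbers quoted in the post: 41 vs. 8 records per hundred tries
    share = fraction_due_to_trend(0.41, 0.08)
    print(f"{100 * share:.0f}% due to trend, {100 * (1 - share):.0f}% by chance")
    # prints "80% due to trend, 20% by chance"
```

This is the same quantity that the MATLAB code computes as `probability_due_to_trend`, there expressed in per cent.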

To run this code for the Moscow case, first download the file moscow_smooth.dat here.

----------------------

load moscow_smooth.dat
sernumber = 100000;                     % number of Monte Carlo realisations
trendline = (moscow_smooth(:,2))/1.55;  % trendline normalised by std dev of variability
%trendline = -sin([0:128]'/128*pi);     % an alternative, U-shaped trendline with zero trend
excount = 0;                            % initialise extreme counter
for i = 1:sernumber                     % loop through individual realisations of Monte Carlo series
    t = trendline + randn(129,1);       % make a Monte Carlo series of trendline + noise
    tmax = -99;                         % running maximum, starts far below any value
    for j = 1:129
        if t(j) > tmax                  % new record
            tmax = t(j);
            if j >= 120                 % count records in the last ten points only
                excount = excount + 1;
            end
        end
    end
end
expected_records = excount/sernumber    % expected number of records in last decade
probability_due_to_trend = 100*(expected_records-0.079)/expected_records  % 0.079: stationary-climate value

----------------------

Reference

Mudelsee M (2010) Climate Time Series Analysis. Springer, 474 pp.

Dan H. might want to find out where that image comes from originally — what data, when, by whom; it’s much blogged, but I didn’t chase that particular red herring down once I saw how much it’s been reused.

Here’s a link for a Google image search (apology for the length)

https://www.google.com/search?tbs=sbi:AMhZZiuDMqGX6GVcIghi467Y9FLS_19bcEKq7rAP2cROXXB8ElQ99MWWZFALoRPT19mVl616s4dk6hNZ1I9VCXIgv-AvZ9CfrPeHN0bSB8qjM9VUr97EAhoHv_18_1vSge2vG-EXA1mHNyKXi1LpfPlqDCcprf1-TXpcLjsEedekzDd-DraKoa3C6oyQP35m5y6Rn7LZbGSB5sEAHJxHPOUATuCjCkWlwLNyp5H7gLBMAr9vrd890ZmTTsvTj5FX9Dv3-a6WZ8BRiKOG2QXNdS4wsO1OlLB6j_1Yu8vavNo_18FyJ4kD-VlSARjYE_19gZBHsuHy98TeTpwMPHtjBDJYOnr48qT_1uaZBTyHMoiOvZCopebYQUPWqOkOo9uvtprZwLTgAxR_1Pnslli9Gbn5ct9K-ITSigmBODa0lma3VjxBGs8jPf_1NV97YprYjM601g-4n62yHp1SkaKlUsbKKUYw3LRmrL46gJ4ClFLNNCjrBQnxtelTFy9C8hd6mxuikiyW5-w09jO7TWbNjCRhvjPhIKELQ-IabUOeAQAprtBmtkf-IsYesLLnxnllkgvxzEwPq-Sy2jJxBx6E66o1XUi4lIi95F56iCnrxUOVQ9kxmpPKVPE_1P1X3QUMA4e7jFho_1GgCo0SD4vy_1BLIeqsCD7-aC4QgEvhpZEkpHgFKfGdrq8DJrO2iaz-mhrmQMfm8bZScfw56XOPXFwDmSVG9ZukbK_1itSywjCZ-EwoU8ThyPHWId2ikjP6RZGv1wpAm6fs9HZeBM2P6PlTlvzocx46Wdxane4DTifV7AmQnkV_1YUUcoRzuk_1t0rEo-5YV9nboL8qn81vh9aDJFerR9mPyPTUHvUQE7Lt4_1pLcU5IezhnXcqyeOCJ15KZ0DvmWKooA2WO2NIz-1Pq2LhON3ToRZRqfcE4Y5-xZMR449UC-nV6c8bL5lVelPKMPGHWp3Shbba0uVV17Yyjb5ZGP3w56YprAd398cXsTxE6kPBr3LY1oyZccOaOOVShK37ugG0e0GLMqSJ2ZTHJe6XYKmvhITa8khCW6TgTtMkUdXGus_1_1oM_1wPYZVwkidfD28TgblvSjJfmNHhB0rZZJ8QpI18yHePKjQ_15C_1GOJfQEhey3OFtlDl4qUTjDJir_1zDTyuTz4JcALGlXhKrOSD05Gu1qe3YJx-cASz3GdZv31KU12dX1QStb3lChgdWQHifqamCpas7bbD6BVx0N5k6kni-nK3vvt7wv2w02vc-wqOX8rJDJv-UQ1-_1VkbKmKHldDsJf58yX25M6NiAiS9cIYV_1Y3eIE4s_1miOdDAVN3Q&hl=en&sa=G&biw=1505&bih=889&gbv=2&site=search&ei=qFrFTuSYGeegiQKwuLjaBQ&ved=0CD4Q9Q8

I must love the smell of red herring in the morning — it was too tempting.

Looks like Dan H’s chart originates with this blogger, attributed to “NOAA data”

http://hallofrecord.blogspot.com/2008/02/2007-monthly-us-high-temperature.html

Kevin, Hank, Dan,

I mainly posted that link to show how many records are really occurring so we could think about what is extreme. We would indeed expect the ratio of highs to lows to go in the direction of the temperature trend. We also might expect more record cold in the 30s as the temperature record really only goes back to the 1880s with large numbers of thermometers and without using proxies. The 30s were extreme when it came to records but so are the 2000s, and when measured just by record temperatures part of the reason has to be warming.

Now there is another point to all this. If we describe extreme a different way, do those extremes in the 1930s like the dust bowl still look extreme in the world of 2011? They definitely do to me. I live in Texas, and this summer has been categorized as extreme. I would agree, but my city and state did not break the record high for the year, but did break many daily highs and had very little rain. But even given the summer of 2011’s extremes we would still consider the dust bowl in the 30s and the decade without rain in the 40s and 50s to be extreme.

Norm’s link has data through Sep 2009. The ratio keeps getting worse:

http://capitalclimate.blogspot.com/2010/10/endless-summer-xii-septembers.html

I wonder if Capital Climate could round up the full year’s result for 2010? and 2011 in a couple months?

Dan H. keep in mind that the plethora of new highs are all higher than anything in earlier decades including the 30’s.

Hank,

See Figure 7 bottom right panel of the Hansen paper. It wouldn’t surprise me if US records weren’t currently beating previous decades. Although the NCAR article linked to in #146 should give anyone pursuing that line in an honest fashion pause for thought.

#148–

Dan, thank you for clarifying–though it wasn’t necessary to explain stacked columns.

If I understand you correctly, you agree that climate is currently warming, but are skeptical that there are more extremes occurring in general. Yes?

You speak about the absolute numbers of US records. I had a quick look, but found no survey of them over the decades. It sounds as if you have. Care to share?

Thanks, Hank–

The original shows that the data are for *monthly statewide high records.* That is, if any station anywhere in the state has a record high, then that state is considered to have a record high for that month; otherwise, no record. Hence, there are 12 months x 50 states, or 600 “opportunities” for records per year. (Presumably modern definitions of “states” are extended backward to the beginning of the record.) I’ll leave the statistical heavy lifting to others, but it would seem that this individual methodology would necessitate care in making comparisons.

Also, what I couldn’t see in the small version linked is that the graph was made in 2007, and so has dated a tad. It is quite clear that the blogger thinks he has refuted Meehl et al., even if Dan isn’t making that claim–and quite clear that he hasn’t, since his ‘proxy’ for warming is not validated in any way, just assumed to be conclusive:

“it is reasonable to infer that:

–Since 2000 there has been a noticeable absence of high temperature extremes or “heat waves”

–The 2001-07 period may have been cooler than the 1994-2000 period”

Um, no–“heat waves” need not set record maxima, and the maxima by themselves aren’t sufficient to characterize the mean temps. (Especially when it’s the minima expected to be affected most strongly by greenhouse warming.)

I notice he updated in 2009, but not since. Pity. It would certainly be interesting to see what 2010 and 2011 looked like through this particular lens.

Repeatedly one reads that our atmosphere has 4% more water vapor in it, and that this fact contributes to greater precipitation and more catastrophic weather events.

But the 4% figure seems small in comparison to the magnitude of the deluges experienced in so many places recently. Surely the 4% extra atmospheric H2O is not evenly distributed in the atmosphere. Aren’t some places just as arid as before and other places finding themselves (or their weather) with atmospheric H2O boosted much more than 4%? Shouldn’t this point of clarification be made when catastrophic weather is discussed?

Scott Ripman @159 – Yes and there are many papers on the subject of rainfall. Briefly, precipitation has increased in mid and high latitudes and seems to be decreasing in the so-called subtropics. The latter includes all across the American south and all around the Mediterranean. Moreover, it may be that increased aerosols lead to more extreme rain events; I left a link regarding that on the Open Variations thread. Also there is a link by Hank Roberts indicating a tendency to increased extreme events in the Mediterranean region.

#156–Yes, the Hansen paper’s figure 7 does present a different picture. See here:

http://www.columbia.edu/~jeh1/mailings/2011/20111110_NewClimateDice.pdf

Scott @ 159, yes you raise an important point regarding communication, and yes water vapor is very unevenly distributed. Total arid area is increasing and expected to increase more. Drought is a major concern of those who are paying attention. See for instance Dai’s Drought Under Global Warming: A Review. (where is that latest paper of Hansen’s?)

But excessive rain and flooding are also a growing problem: See my comment # 29 under Scientific Confusion earlier today.

https://www.realclimate.org/index.php/archives/2011/11/scientific-confusion/comment-page-1/#comment-219404

This paper:

Recent trends of the tropical hydrological cycle inferred from Global Precipitation Climatology Project and International Satellite Cloud Climatology Project data

Y. P. Zhou et al. 2011

gives a general idea of dry and wet areas and reasons. You might look up Hadley cells, Inter Tropical Convergence Zone (ITCZ) in Wikipedia. I think Zhou’s paper is online somewhere but is cryptic just now so I’ll give you the abstract:

You might also like a certain book titled “Hell and High Water.”

Pete Dunkelberg (154) wrote:

I would also keep in mind that in terms of the warming trend the United States has been very fortunate so far. In terms of five-year averages we are still pretty close to where we were in the 1930s, but much of the world left the 1930s behind a long time ago. For the Northern Hemisphere as a whole, the last time the 5-yr running mean was equal to that of the late 1930s was about 1985. Globally? About 1978.

Please see:

GISS Surface Temperature Analysis

Analysis Graphs and Plots

http://data.giss.nasa.gov/gistemp/graphs/

The United States may very well play catch-up at some point. But temperature itself isn’t the worst of it. In terms of the food supply the changes to the hydrological cycle are probably far more important. There is the flooding…

Groisman et al note:

In the lower 48 states the trend between 1910-1999 was for annual rain to increase by 6% per century, but heavy rain (above the 95th percentile) has increased by 6%, very heavy rain (above the 99%-les) by 24%, and extreme rain (above the 99.9%-les) by 33%. (Ibid.)

A recent fingerprint study shows that models do well in matching the pattern of increases:

… although they seem to underestimate the actual trend.

But in my view drought is far more important.

There will be the drying out of the continental interiors, particularly during summer. As I said earlier, ocean has greater thermal inertia than land. It takes longer to heat it up or cool it down. And in a warming world this implies land will warm more quickly than ocean. Thus the relative humidity of moist maritime air will drop more quickly than before as it moves inland and with it the chances for precipitation. In contrast, precipitation may increase during the wintertime. But with an earlier melt this will imply flooding. And at higher temperatures earlier in the year the moisture will tend to evaporate more quickly, leaving less moisture for later in the year. Reduced moisture during the summer will imply a reduction in moist air convection at the land’s surface. This will imply higher summertime temperatures and in time a net decrease in plant productivity.

As measured by the Palmer Drought Severity Index (PDSI), we have observed the drying out of land on a global scale.

Please see:

… and also see:

http://www.tiimes.ucar.edu/highlights/fy06/dai.html

Then there is drought driven by the expansion of the Hadley cells. I don’t know how well the models are doing at modeling this expansion now, but previously it was noted that the Hadley cells were expanding at roughly 3X the rate projected by the models. With Hadley cells, moist air rises in the tropics, gives up its moisture as it cools with increasing altitude, then subsides once it has given up its moisture at roughly 30 N/S of the equator. This may very well be a factor in the recent Texas drought. Dallas is at about 32 N.

Drought will likely have the greatest effect upon our food supply, and we have already observed a net global decrease in plant productivity.

Please see:

This global shift in plant productivity is an expected result of global warming, but we didn’t expect it so early.

http://web.mit.edu/newsoffice/2011/mass-extinction-1118.html

“… the end-Permian extinction was extremely rapid, triggering massive die-outs both in the oceans and on land in less than 20,000 years … coincides with a massive buildup of atmospheric carbon dioxide, which likely triggered the simultaneous collapse of species in the oceans and on land.

… the average rate at which carbon dioxide entered the atmosphere during the end-Permian extinction was slightly below today’s rate of carbon dioxide release into the atmosphere due to fossil fuel emissions.

…

… The researchers also discovered evidence of simultaneous and widespread wildfires that may have added to end-Permian global warming, triggering what they deem “catastrophic” soil erosion and making environments extremely arid and inhospitable.”

The researchers present their findings this week in Science

Sir,

Those are all valid explanations for the recently observed temperature increases, and generally accepted (although there are a few who will deny them).

#163–Thank you, Timothy Chase. Good information, as usual. The Zhao and Running was interesting, if not particularly cheery, and I’d missed it completely.

#165–Dan, to what does “those” refer? I really don’t know what you mean.

> all valid explanations for the recently observed temperature increases

> Dan H.

“Those” aren’t happening now — “anything but the IPCC” requires _two_ hypothetical forcings — one to cancel out the known physics explaining the effect of increasing CO2, and another to explain the facts by something besides CO2.

“Those” from the end-Permian – except the rate of change of CO2 – aren’t happening.

Kevin McKinney wrote in 166:

Not a problem. Fortunately the paper itself, two comments and a response have been made open access by Science:

Maosheng Zhao and Steven W. Running (20 August 2010) Drought-Induced Reduction in Global Terrestrial Net Primary Production from 2000 Through 2009, Science, Vol. 329 no. 5994 pp. 940-943

http://www.sciencemag.org/content/329/5994/940.full

Arindam Samanta et al. (26 August 2011) Comment on “Drought-Induced Reduction in Global Terrestrial Net Primary Production from 2000 Through 2009”, Science, Vol. 333 no. 6046 p. 1093

http://www.sciencemag.org/content/333/6046/1093.3.full

Belinda E. Medlyn (26 August 2011) Comment on “Drought-Induced Reduction in Global Terrestrial Net Primary Production from 2000 Through 2009”, Science, Vol. 333 no. 6046 p. 1093

http://www.sciencemag.org/content/333/6046/1093.4.full

Maosheng Zhao, Steven W. Running (26 August 2011) Response to Comments on “Drought-Induced Reduction in Global Terrestrial Net Primary Production from 2000 Through 2009”, Science, Vol. 333 no. 6046 p. 1093

http://www.sciencemag.org/content/333/6046/1093.5.full

Might make for interesting discussion at some point. There has been some movement on the other points as well. I might try to get back to them a little later.

Those oil-rich black shales we hear so much about lately?

They were laid down in anoxic conditions.

http://www.sciencedirect.com/science/article/pii/S0264817206001061

Does this mean that by pushing climate to the kind of anoxic ocean that Peter Ward warns of, we’re setting up conditions to restore the Earth’s petroleum supply over the longer term?

That would be good news for the descendants of, you know, the opossums or squids or whatever form of intelligent life eventually evolves on this planet.

Kevin,

I was responding to SirCharge where he stated that the difference between last year’s heat wave and the earlier heat wave (1936) was that Moscow has been built up significantly since then, such that the increase above 1936 levels could be attributed to steel and asphalt.

The “plethora” of new highs mentioned earlier are largely the result of more stations being in existence. Many of the neighboring stations which recorded record highs in 1934 or 36 did not record records recently.

Your assessment about my beliefs in #157 is correct.

> steel and asphalt … new highs … more stations

Watts’s ‘surface stations’ notion failed for the US — he’s been shown wrong. You assert it now for Russia, because — why?

And

> new highs mentioned earlier are largely the result of more stations

More thermometers used, and you assert this makes temperatures go up?

——

More on the end-Permian extinction study:

http://www.cbc.ca/news/technology/story/2011/11/17/science-mass-extinction.html

“Scientists finally know the date — and hence the likely cause — of a massive extinction that wiped out 95 per cent of life in the oceans and 70 per cent of life on land …. Most affected species met their demise within 20,000 years — a blink of an eye on the geological timescale. In all, the mass extinction lasted less than 200,000 years, wiping out huge forests of conifer trees, tree ferns, big amphibians, large reptiles such as dimetrodons, mammal ancestors called synapsids and a huge diversity of fish and shellfish.

Charles Henderson (middle) of the University of Calgary collects material from a sedimentary layer in Shangsi, Sichuan Province, China. The precise timing coincides with a huge outpouring of carbon dioxide and methane from volcanic lava flows in northwest Asia known as the Siberian traps.”

—–

Reminder: http://web.mit.edu/newsoffice/2011/mass-extinction-1118.html

“… the end-Permian extinction … coincides with a massive buildup of atmospheric carbon dioxide, which likely triggered the simultaneous collapse of species in the oceans and on land.

… the average rate at which carbon dioxide entered the atmosphere during the end-Permian extinction was slightly below today’s rate of carbon dioxide release into the atmosphere due to fossil fuel emissions.”

A map for you Dan H. I forget now who posted this on another blog:

http://www.nrdc.org/globalWarming/hottestsummer/images/summernighttime.png

#170–Thank you, Dan. I still can’t find his comment, but no matter.

Have to say, UHI as an explanation doesn’t really wash, if that was the point. Temporally, 30s Moscow may have been less built up, but wasn’t Rahmstorf et al referring to ‘the Moscow station?’ In that case, we’d need to see what the temporal structure of the ‘build up’ *around the station* was before proposing it to have some explanatory value.

But the main thing is, the “Moscow heat wave” affected a huge area of central Russia–not just Moscow. Satellite image:

http://earthobservatory.nasa.gov/IOTD/view.php?id=45069

All those forest fires weren’t due to asphalt being solar-heated. . .

Kevin, Hank, and Holly,

I just said that his explanation was plausible. Moscow broke its all time record high by 1.5C. The summer of 2010 was similar to the summer of 1936. I cannot say whether the record broke the previous highs because of the urban buildup, an extended blocking event, or global warming. Likewise, I will not make an assertion that the U.S. did not break their record highs for any of the above reasons.

I will maintain that these types of record highs are not the result of an increasing mean, but rather a specific weather event, whose frequency of occurrence has not changed. When the weather event (blocking) occurs, specific local conditions will determine whether new records are broken. Russia broke many records in 2010. In the U.S., many of the 1936 records still stand.

> plausible

arguable, at least, which may be closer to what you’re looking for.

Plausible, only by ignoring the infrared absorption physics – the same physical behavior that makes your laser CD player work.

#174–Well, of course there are specific “local” causes when records fall. But that doesn’t mean that the bigger context isn’t important, or doesn’t contribute to the outcome.

But leaving the specifics aside, Dan, if you accept a warming trend, how does an increased probability of warm records *not* follow–unless you posit a decrease in variability, perhaps? Your agnosticism seems a tad “over-determined!”

Kevin,

Exactly! Much of the warming trend has been attributed to higher low temperatures. This has resulted in an increased mean with a smaller standard deviation. If you go to the NOAA weather site and check the records for 2011, the monthly high maximum temperatures have outpaced the low maximum temperature 527 – 324. However, the monthly high minimum temperatures have exceeded the low minimum temperatures 781 – 240. More of the observed warming can be attributed to an increase in minimum temperatures.

[Response: Unintelligible nonsense. You are really confused on this whole topic. Further unsupported opinions and gibberish are going straight to the borehole.–Jim]

> exactly

Not exactly.

“The eight warmest years on record (since 1880) have all occurred since 2001, with the warmest year being 2005.

Additionally (from IPCC, 2007):

The warming trend is seen in both daily maximum and minimum temperatures, with minimum temperatures increasing at a faster rate than maximum temperatures….”

http://www.epa.gov/climatechange/science/recenttc.html

Another reason that’s a problem:

Rice yields decline with higher night temperature from global warming

Jim,

A question for you. Hank’s post claims that minimum temperatures have risen faster than maximums, yet there are no claims of gibberish or unsupported opinion. Yet when I show actual numbers that say the same thing, with a reference, it is called “unintelligible nonsense.” Therefore, do you feel that Hank is as confused as you claim I am?

[Response: No, I don’t think Hank is 1/10 as confused as you are. And your “actual numbers” are meaningless without proper reference and discussion.–Jim]

He’s such a mixer, and so eager for attention. Not worth much though.

He doesn’t cite sources, so you can’t see the context for what he says. He consistently provides fake context so when he does post something factual, its meaning for a naive reader is — bent, distorted.

That’s how ‘out of context’ and ‘unsupported’ claims are useful — to delay and confuse.

Tamino nailed this tactic inline, weeks ago, here: http://tamino.wordpress.com/2011/11/07/berkeley-and-the-long-term-trend/#comment-56469

Decent summary as of last January here:

Is the U.S. climate getting more extreme?

Posted by: JeffMasters, 2:37 PM GMT on January 24, 2011

“… The portion of the U.S. experiencing month-long maximum or minimum temperatures either much above normal or much below normal has been about 10% over the past century (black lines in Figures 2 and 3.) However, over the past decade, about 20% of the U.S. has experienced monthly maximum temperatures much above normal, and less than 5% has experienced maximum temperatures much cooler than normal. Minimum temperatures show a similar behavior, but have increased more than the maximums (Figure 3).*

Climate models [tell us that] minimum temperatures should be rising faster than maximum temperatures if human-caused emissions of heat-trapping gases are responsible for global warming, which is in line with what we are seeing in the U.S. using the CEI.

While there have been a few years (1921, 1934) when the portion of the U.S. experiencing much above normal maximum temperatures was greater than anything observed in the past decade, the sustained lack of maximum temperatures much below normal over the past decade is unique. The behavior of minimum temperatures over the past decade is clearly unprecedented–both in the lack of minimum temperatures much below normal, and in the abnormal portion of the U.S. with much above normal minimum temperatures….”

____________

*Figure 3.

http://www.wunderground.com/hurricane/2011/2010_cei_min.png

The Annual Climate Extremes Index (CEI) for minimum temperature, updated through 2010, shows that about 35% of U.S. had minimum temperatures much warmer than average during 2010. This was the 7th largest such area in the past 100 years. The mean area of the U.S. experiencing minimum temperatures much warmer than average over the past 100 years is about 10% (thick black line.) Image credit: National Climatic Data Center.

[edit – stay substantive – we are not interested in attacks on other commenters]

Ten key messages from the IPCC Special report on extreme events.

http://cdkn.org/2011/11/ipcc-srex/

Not quite as being depicted in the craposphere.

#176-182, mostly–

I don’t think that records are the best way to analyze this (sorry, Dr. Meehl.) You throw out a lot of information–which is perhaps part of the attraction, since there is a ton of data and if you don’t have software which can automate some of the processing, it can get tedious. But records are ‘pre-culled!’

But I digress–the point is, all those non-record data can give a more complete picture, and don’t introduce the complication of past records being ‘wiped out.’ Which is why Figure 4 of Hansen et al, 2011–the “climate dice” paper–seems to me to have quite a bit more to say about variability over time. See figure 4, where the distribution is clearly broader for recent decades:

http://www.columbia.edu/~jeh1/mailings/2011/20111110_NewClimateDice.pdf

177 Dan said, “Much of the warming trend has been attributed to higher low temperatures. This has resulted in an increased mean with a smaller standard deviation.”

You’re doing the apples to oranges thing. That there is a lower difference between daily highs and daily lows does not change the standard deviation of daily highs, daily averages, or daily lows in and of itself.
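
A toy illustration of that distinction, as a sketch under stated assumptions: the synthetic temperatures, the 0.5 and 1.5 degree warming offsets, and the variable names below are made up for the example and are not observations. Lows are warmed more than highs, so the mean high-minus-low range shrinks, yet the day-to-day standard deviation of each series is untouched because a uniform shift does not change spread.

```python
import random
import statistics

rng = random.Random(1)

# Hypothetical daily highs and lows (degrees C) with the same day-to-day spread.
highs = [25.0 + rng.gauss(0.0, 3.0) for _ in range(1000)]
lows = [12.0 + rng.gauss(0.0, 3.0) for _ in range(1000)]

# Assumed warming: lows rise faster than highs.
warmer_highs = [h + 0.5 for h in highs]
warmer_lows = [l + 1.5 for l in lows]

def mean_range(hi, lo):
    """Mean difference between daily high and daily low."""
    return statistics.mean([h - l for h, l in zip(hi, lo)])

range_before = mean_range(highs, lows)
range_after = mean_range(warmer_highs, warmer_lows)

print(f"mean high-low range: {range_before:.2f} -> {range_after:.2f}")
print(f"std of highs: {statistics.pstdev(highs):.2f} -> {statistics.pstdev(warmer_highs):.2f}")
print(f"std of lows:  {statistics.pstdev(lows):.2f} -> {statistics.pstdev(warmer_lows):.2f}")
```

Whether real-world variability of highs or lows has changed is a separate empirical question; the sketch only shows that a narrower gap between highs and lows does not by itself imply it.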

The whole question of whether a single event was caused by global warming is moot. Every day for a region is completely different due to AGW, including very cold days that wouldn’t have occurred without AGW. The 2010 blocking event? Wouldn’t have occurred without AGW. Of that we’re essentially certain. Another blocking event that didn’t happen in August 2007? Might have occurred without AGW. We can say with certainty that we’ll get more Moscow warming type events with AGW, but the dates of their occurrences will be completely different compared to a non-AGW world. If we get 5 times as many of these events in a specific AGW world, then the odds are that none of those 5 events will coincide with the 1 event we would get without AGW. Thus, AGW both prevents and causes heat waves.

100% of the weather we get is completely different because of AGW and there is no way to guess what the weather would have been without AGW. All we can determine is means and variability. Hank pointed out that if the mean increases due to AGW, then the only way to get fewer extreme highs is by lowering variability. That’s correct whether we’re talking daily high, mean, or low temperatures. That’s a good question for the moderators – does AGW change variability?

Of course, all this breaks down if we drive temperatures high enough that heat waves which had 0% probability without AGW start occurring.

Richard,

How can you be so certain that the 2010 blocking event would not have occurred without AGW? The records that were broken last year were set in 1936, in what many assert was a similar blocking event. A similar situation occurred in the U.S. See the link that Kevin posted in #184, comparing the two hottest summers in the U.S. in the 1930s with those of the past decade. AGW did not cause this recent blocking event any more than it caused a similar occurrence 75 years ago. I am curious how you can say that we know with certainty that these events will increase in the future.

The NOAA has an ongoing investigation into the Russian heat wave of 2010. A recent assessment has been published :

http://www.agu.org/pubs/crossref/2011/2010GL046582.shtml

Kevin,

Thanks for the link. I just wish Hansen had expanded his graphs further back in time. His figure 8 indicates that there may have been much greater spread in the data in previous years.

“Monday, March 21, 2011

Tamino is watching the food

Open Mind takes on what I have argued is the critical “final common pathway” of global warming risks — crop and supply chain failures ….

…

‘Why did the Russian wheat harvest suffer so? Because of the record-breaking heat wave and drought which plagued a massive region, at just the wrong time for Russian agriculture. And one of the contributing factors is: global warming.

That’s the ugly, deadly dangerous secret the denialists don’t want you to think about. That’s why they sank so low as to try to blame inflation of food prices on attempts to fight global warming, when it’s really due to: global warming.’

…”

http://theidiottracker.blogspot.com/2011/03/tamino-is-watching-food.html

Oh, for those tracking the tactics of assertion without citation, compare the assertions posted above without any source to what you find looking for information from sources.

Yes, you’ll find crap sources out there that the deniers fall back on when pressed for any citation. Stay with the science sites and avoid the “worldclimatereport” kind of spin sites.

One characteristic of reliable science writing — it evolves over time:

Scientific Assessment of 2010 Western Russian Heatwave

http://www.esrl.noaa.gov/psd/csi/events/2010/russianheatwave/

Sep 9, 2010

“The western Russia heat wave during summer 2010 was the most extreme heat wave in the instrumental record of 1880-present for that region…. Here we provide the various stages of one such scientific assessment ….

… a chronology of the scientific investigation, including questions asked, hypotheses posed, data used, and tools applied. The effort began with a rapid initial response, assembled as preliminary material and discussion as a web page, which can be read under the header Preliminary Assessment that was last updated 9 September 2010.

… the team posed the rhetorical question whether the western Russia heat wave of July 2010 could have been anticipated. The nature of this analysis was more extensive that the initial rapid response, and the vetting of our analysis was conducted through the peer review process leading to the publication of a paper in Geophysical Research Letters in March 2011. The contents of this paper including the Supplemental figures that accompanied the work can be read under the header Published Assessment.

The assessment process continues at NOAA/ESRL, and we provide further ongoing analysis …. we attempt to reconcile several differing perspectives on the causes for the heat wave, illustrate the diversity of questions being posed and how answers to those may at times confound the public (and even scientific) understanding of causes.

… Several papers have appeared subsequent to ours in the peer reviewed literature…. We provide links to these papers and their principal conclusions on this web page under the header Related Publications….”

And follow the links they give at ESRL, e.g. to

http://www.esrl.noaa.gov/psd/csi/events/2010/russianheatwave/warming_detection.html

Ongoing Scientific Assessment of the 2010 Western Russia Heatwave

Draft – Last Update: 3 November 2011

Disclaimer: This draft is an evolving research assessment and not a final report. Comments are welcome. For more information, contact Dr. Martin Hoerling …”

Remember how science works. There’s no great original founder on which everything later is based, and this is a good example, citing work and assessing whether what appear to be contradictions or disagreements can be drawn out with more work to get closer to a better description of what happened.

Science doesn’t grow like a single tree, it grows like kudzu.

From the previous link from NOAA:

“Model simulations and observational data are used to determine the impact of observed sea surface temperatures (SSTs), sea ice conditions and greenhouse gas concentrations. Analysis of forced model simulations indicates that neither human influences nor other slowly evolving ocean boundary conditions contributed substantially to the magnitude of this heat wave. They also provide evidence that such an intense event could be produced through natural variability alone. Analysis of observations indicate that this heat wave was mainly due to internal atmospheric dynamical processes that produced and maintained a strong and long-lived blocking event, and that similar atmospheric patterns have occurred with prior heat waves in this region. We conclude that the intense 2010 Russian heat wave was mainly due to natural internal atmospheric variability.”

Dan asks, “Richard,

How can you be so certain that the 2010 blocking event would not have occurred without AGW? ”

Let’s use Hansen’s visualization that weather is like rolling dice. An extreme blocking event of a specific magnitude starting on a specific day in a specific area and lasting for a specific duration might be like rolling a 6 one hundred times in a row. Very, very unlikely. The key is that the system without AGW represents a new reality, and so the two (with AGW vs. without AGW) are independent events. That there was such an event in our reality has zero influence on the odds of such an event at the same time in the alternative reality where humans never emitted any CO2. Thus, we MUST re-roll those 100 dice! Depending on how picky you want to get in the definitions, one could say it would be impossible to get the same results.

Another way to think about it is GCMs. Do runs all starting 10 years before the event until you get the event in question. Now change the parameters to represent no AGW. Do ONE more run. What are the odds of getting the same event? Essentially zero. Thus, it is incorrect to say that the 2010 event would have been “the same” but a little lower temperature without AGW. It is correct to say that without AGW the 2010 event would not have occurred. Without AGW, 100% of all weather on the planet would be different from what occurred, including the heat waves that did not occur because of AGW.
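
To put a number on the dice analogy, here is a minimal sketch assuming fair, independent dice. It computes the chance of one hundred sixes in a row exactly, and notes that an independent re-roll has the same chance no matter what the first run produced.

```python
from fractions import Fraction

# Probability of a six on one fair die, and of 100 sixes in a row.
p_six = Fraction(1, 6)
p_run = p_six ** 100

# Independence: the re-roll in the "other reality" has the same probability
# of 100 sixes, regardless of what happened in the first run.
p_rerun_given_first = p_run

print(f"P(100 sixes in a row) is about {float(p_run):.2e}")
```

The analogy is only as good as the independence assumption; the arithmetic just shows how quickly such a probability becomes negligible.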

Oh, pathetic; Dan handwaves “from NOAA” and quotes from the 2010 publication. That works because people won’t have a link and see the explicit pointers to more recent work on the same page. This is how obfuscation works; claim what seems to be almost a cite to a good source, but quote a bit out of context, so anyone not skeptical can be fooled into thinking it’s up to date information.

Tiresome.

Dan ponders, ” I am curious how you can say that we know with certainty that these events will increase in the future.”

I believe Hank’s point. With an increased mean and constant variability, the number of extreme highs will invariably increase. This is true regardless of whether climate sensitivity is 1C or 3C or 6C. To counter this, you’ll have to provide some evidence that weather variability will decrease as global mean temperature increases. I’m assuming you aren’t proposing that climate sensitivity is 0C or below.
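
The mean-versus-variability argument can be made concrete with a short calculation. This is a sketch that assumes temperature anomalies are normally distributed, which is an idealisation; the threshold of 2 standard deviations, the 1 standard deviation shift in the mean, and the function name `prob_exceed` are illustrative choices, not values from any dataset.

```python
import math

def prob_exceed(threshold, mean, sigma):
    """P(X > threshold) for X ~ Normal(mean, sigma)."""
    z = (threshold - mean) / sigma
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Baseline climate: "extreme" means more than 2 sigma above the old mean.
base = prob_exceed(2.0, mean=0.0, sigma=1.0)            # about 0.023

# Mean shifted up by 1 sigma, variability unchanged.
shifted = prob_exceed(2.0, mean=1.0, sigma=1.0)         # about 0.159

# Same shift, but variability halved: the threshold is again 2 sigma away.
shifted_narrow = prob_exceed(2.0, mean=1.0, sigma=0.5)

print(f"baseline:               {base:.4f}")
print(f"shifted mean:           {shifted:.4f}")
print(f"shifted mean, half std: {shifted_narrow:.4f}")
```

In this idealised setup the shift alone makes the old extreme roughly seven times more likely, and the variability would have to be cut in half just to bring the exceedance probability back to its baseline value.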

I have to say, Dan H. is really good at what he does.

He takes a graph from a blog and posts a link to the image, making up a claim about it.

The blog he took the image from is gailtheactuary — Gail Tverberg.

You could look at the source — if you take the time to figure that out, because Dan won’t tell you where he got it.

Nor will he tell you what the caption says, nor how to find what the author had to say about the chart.

Clever, he is.

[Response: Not very actually. Just persistent–Jim]

Here’s the context:

https://www.google.com/search?q=site%3Aourfiniteworld.com+food-price-index-vs-brent-oil-price-june-2011

Background from the blogger:

http://ourfiniteworld.com/2011/02/16/a-look-behind-rising-food-prices-population-growth-rising-oil-prices-weather-events/

I’m tired of chasing Dan’s stuff.

Anyone else want a turn?

http://www.pbfcomics.com/archive_b/PBF216-Thwack_Ye_Mole.jpg

[Response: He’s used up his allotment of tolerance, thinking that it was unlimited.–Jim]

Hank,

In case you did not notice, the quote that I posted was from the abstract of the same publication that you referenced in post #188 (i.e., GRL, March, 2011). Do you have the link to a more recent publication? None of your commentary, just facts please.

If you have any other publication that shows another explanation, I would like to see that also. It would be nice to back up assertions with facts.

Dan H,

On this page the first graphic is a copy of figure 2 from Barriopedro et al. Notice how 5 of the last 10 years are at the very warmest edge of the distribution, or beyond it. Notice also the mini-graph below the main graph of the statistical distribution. Barriopedro et al. find that, when taking into account the uncertainty ranges in proxy reconstructions of temperature, “at least two summers in this decade have most likely been the warmest of the last 510 years in Europe.” That is significant because those uncertainty ranges are large.

On this page you’ll find part of one of the graphics from the recent Hansen paper. This shows that not only is there a shift due to the warming trend, but there is also an increase in the high end, i.e. extreme warm events. Note in particular the top graphic there; that’s for summer which is the season being discussed.

Never mind the discussion on those pages, you can discuss those over there if you want, but you’ll also find links to the papers involved.

As a former sceptic (I was quietly in denial), I still have some doubts about the models; I suspect you do too. So let’s leave aside considerations based on models.

Barriopedro et al’s argument about incidents like the Moscow 2010 heatwave being unlikely to recur for decades is based on model results, and a key thread of the NOAA argument is again based on models. The element of the NOAA argument that isn’t is how much of an outlier 2010 was (top graphic). But Barriopedro figure 2 shows us that of the last 10 years in Europe five have been at the very top end of the statistical distribution, and Hansen shows us that globally there has been a massive increase in high sigma warm events.

What conclusions do you draw from the observations I outline – no quote mining from other sources – what do you think?

> Dan H. says 22 Nov 2011 at 3:54 PM

> the quote that I posted was from …

> GRL, March, 2011). Do you have the link

> to a more recent publication? None of your commentary, just facts please.

As I had posted earlier on 22 Nov 2011 at 11:31 AM

>> … follow the links they give at ESRL ….

I quoted their directions at length for how to find that material.

Here again, briefly, supplying a clickable link:

“… Several papers have appeared subsequent to ours in the peer reviewed literature…. We provide links to these papers and their principal conclusions on this web page under the header Related Publications”

“Related Publications” link should go to:

http://www.esrl.noaa.gov/psd/csi/events/2010/russianheatwave/pubs.html

SREX (The emails are boring this time around, the trolls more so )

Certainly the report signals a need for countries to reassess their investments in measures to manage disaster risk. New disaster risk assessments that take climate change into account may require countries and people to refresh their thinking on what levels of risk they are willing and able to accept. This comes into sharper focus when considering that today’s climate extremes will be tomorrow’s ‘normal’ weather and tomorrow’s climate extremes will stretch our imagination and capacity to cope as never before. Smart development and economic policies will need to consider changing disaster risk as a core component unless ever more money, assets and people are to be washed away with the coming flood.

While both the authors of this blog are Co-ordinating Lead Authors of the IPCC Special Report on Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation, and members of the Core Writing Team of the Report’s Summary for Policy Makers, the article does not represent the views of the IPCC or necessarily of either of the author’s host organisations.

Well, this is top of the pops isn’t it:

Increase of extreme events in a warming world

Stefan Rahmstorf and Dim Coumou

Potsdam Institute for Climate Impact Research, PO Box 601203, 14412 Potsdam, Germany

Edited by William C. Clark, Harvard University, Cambridge, MA, and approved September 27, 2011 (received for review February 2, 2011)