It is a good tradition in science to gain insights and build intuition with the help of thought-experiments. Let’s perform a couple of thought-experiments that shed light on some basic properties of the statistics of record-breaking events, like unprecedented heat waves. I promise it won’t be complicated, but I can’t promise you won’t be surprised.

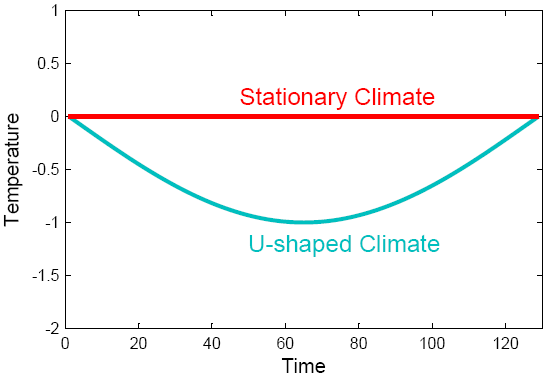

Assume there is a climate change over time that is U-shaped, like the blue temperature curve shown in Fig. 1. Perhaps a solar cycle might have driven that initial cooling and then warming again – or we might just be looking at part of a seasonal cycle around winter. (In fact what is shown is the lower half of a sinusoidal cycle.) For comparison, the red curve shows a stationary climate. The linear trend in both cases is the same: zero.

Fig. 1. Two idealized climate evolutions.

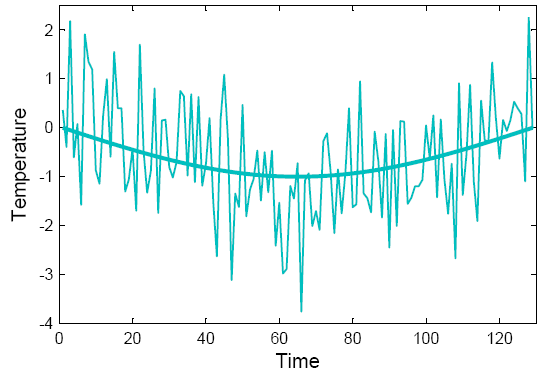

These climates are both very boring and look nothing like real data, because they lack variability. So let’s add some random noise – stuff that is ubiquitous in the climate system and usually called ‘weather’. Our U-shaped climate then looks like the curve below.

Fig. 2. “Climate is what you expect, weather is what you get.” One realisation of the U-shaped climate with added white noise.

So here comes the question: how many heat records (those are simply data points warmer than any previous data point) do we expect on average in this climate at each point in time? And how does that compare with the number we expect in the stationary climate? Don’t look at the solution below – first try to guess what the answer, expressed as the ratio of records in the changing vs. the stationary climate, might look like.

When I say “expected on average” this is like asking how many sixes one expects on average when rolling a dice a thousand times. An easy way to answer this is to just try it out, and that is what the simple computer code appended below does: it takes the climate curve, adds random noise, and then counts the number of records. It repeats that a hundred thousand times (which just takes a few seconds on my old laptop) to get a reliable average.

For the stationary climate, you don’t even have to try it out. If your series is n points long, then the probability that the last point is the hottest (and thus a record) is simply 1/n. (Because in a stationary climate each of those n points must have the same chance of being the hottest.) So the expected number of records declines as 1/n along the time series.
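The 1/n rule can be checked with a few lines of arithmetic. The sketch below is in Python rather than the MATLAB used later in this post, and the function name is purely illustrative. It assumes independent, identically distributed values with no ties, and simply adds up 1/n over the positions of interest.

```python
from fractions import Fraction


def expected_records_stationary(first, last):
    """Expected number of records among positions first..last (1-based,
    inclusive) of a stationary series: the sum of 1/n over those positions."""
    return sum(Fraction(1, n) for n in range(first, last + 1))


# A 129-point series, the length used for the Moscow data later in the post.
whole_series = float(expected_records_stationary(1, 129))
final_decade = float(expected_records_stationary(120, 129))

print(f"expected records in all 129 points: {whole_series:.2f}")
print(f"expected records in the last 10:    {final_decade:.3f}")
```

The second number comes out at roughly 0.08, i.e. about 8 records per hundred realisations in the final decade of a stationary 129-point series.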

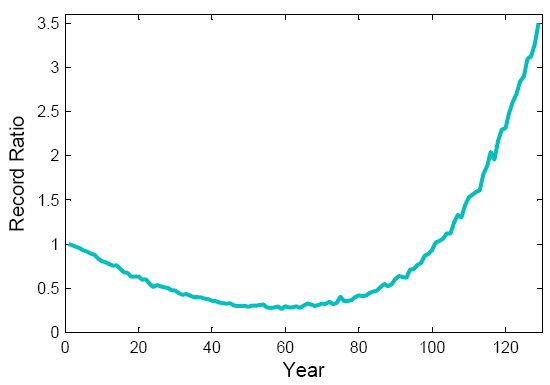

Ready to look at the result? See next graph. The expected record ratio starts off at 1, i.e., initially the number of records is the same in both the U-shaped and the stationary climate. Subsequently, the number of heat records in the U-climate drops down to about a third of what it would be in a stationary climate, which is understandable because there is initial cooling. But near the bottom of the U the number of records starts to increase again as climate starts to warm up, and at the end it is more than three times higher than in a stationary climate.

Fig. 3. The ratio of records for the U-shaped climate to that in a stationary climate, as it changes over time. The U-shaped climate has fewer records than a stationary climate in the middle, but more near the end.

So here is one interesting result: even though the linear trend is zero, the U-shaped climate change has greatly increased the number of records near the end of the period! Zero linear trend does not mean there is no climate change. About two thirds of the records in the final decade are due to this climate change; only one third would also have occurred in a stationary climate. (The numbers of course depend on the amplitude of the U as compared to the amplitude of the noise – in this example we use a sine curve with amplitude 1 and noise with standard deviation 1.)
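For readers who want to try the experiment themselves, here is a minimal Monte Carlo sketch in Python (not the MATLAB listing given at the end of this post). It assumes the setup described above: a 129-point half-sine with amplitude 1 plus white noise with standard deviation 1. The function name, the seed and the choice of 10,000 realisations are illustrative only; more realisations give smoother estimates.

```python
import math
import random


def record_probabilities(trend, trials, rng):
    """Monte Carlo estimate of the chance that each position sets a new record."""
    counts = [0] * len(trend)
    for _ in range(trials):
        running_max = -math.inf
        for j, mean in enumerate(trend):
            value = mean + rng.gauss(0.0, 1.0)  # climate curve plus 'weather'
            if value > running_max:
                running_max = value
                counts[j] += 1
    return [c / trials for c in counts]


n = 129
u_trend = [-math.sin(math.pi * j / (n - 1)) for j in range(n)]  # lower half of a sine
p_u = record_probabilities(u_trend, trials=10000, rng=random.Random(0))


def stationary_sum(first, last):
    """Expected records in positions first..last (1-based) of a stationary series."""
    return sum(1.0 / k for k in range(first, last + 1))


# Record ratio (U-shaped vs. stationary) near the bottom of the U and at the end.
ratio_middle = sum(p_u[55:75]) / stationary_sum(56, 75)
ratio_last10 = sum(p_u[-10:]) / stationary_sum(n - 9, n)

print(f"record ratio, positions 56-75: {ratio_middle:.2f}")
print(f"record ratio, last 10 points:  {ratio_last10:.2f}")
```

The first ratio should come out well below 1 and the second well above 1, in line with the curve in Fig. 3.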

A second thought-experiment

Next, pretend you are one of those alarmist politicized scientists who allegedly abound in climate science (surely one day I’ll meet one). You think of a cunning trick: how about hyping up the number of records by ignoring the first, cooling half of the data? Only use the second half of the data in the analysis: this will get you a strong linear warming trend instead of zero trend!

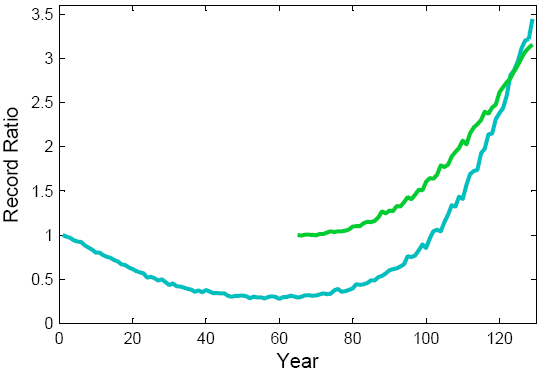

Here is the result shown in green:

Fig. 4. The record ratio for the U-shaped climate (blue) as compared to that for a climate with an accelerating warming trend, i.e. just the second half of the U (green).

Oops. You didn’t think this through properly. The record ratio – and thus the percentage of records due to the climatic change – near the end is almost the same as for the full U!

The explanation is quite simple. Given the symmetry of the U-curve, the expected number of records near the end has doubled. (The last point has to beat only half as many previous points in order to be a record, and in the full U each climatic temperature value occurs twice.) But for the same reason, the expected number of records in a stationary climate has also doubled. So the ratio has remained the same.

If you try to go to even steeper linear warming trends, by confining the analysis to ever shorter sections of data near the end, the record ratio just drops, because the effect of the shorter series (which makes records less ‘special’ – a 20-year heat record simply is not as unusual as a 100-year heat record) overwhelms the effect of the steeper warming trend. (That is why using the full data period rather than just 100 years gives a stronger conclusion about the Moscow heat record despite a lesser linear warming trend, as we found in our PNAS paper.)
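The effect of shortening the analysis window can also be checked without random numbers. The Python sketch below is an illustration, not the calculation from our paper. It assumes unit-variance Gaussian white noise and evaluates the probability that the final point is a record as the integral of pdf(x - m_last) times the product of cdf(x - m_j) over all earlier points, using a simple trapezoidal rule. Function names, the integration limits and the step count are arbitrary choices.

```python
import math


def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def final_point_record_probability(trend, lo=-8.0, hi=8.0, steps=1600):
    """P(last point exceeds all earlier points) for trend + unit white noise."""
    *earlier, last = trend
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        term = norm_pdf(x - last)          # last point takes the value x ...
        for m in earlier:
            term *= norm_cdf(x - m)        # ... and every earlier point is below x
        total += term * (0.5 if i in (0, steps) else 1.0)
    return total * h


n = 129
u_trend = [-math.sin(math.pi * j / (n - 1)) for j in range(n)]

# Record ratio at the final point when only the last `window` points are used.
# The stationary probability for a series of that length is 1/window.
ratios = {}
for window in (129, 65, 33, 17):
    segment = u_trend[-window:]
    ratios[window] = final_point_record_probability(segment) * window
    print(f"last {window:3d} points: record ratio at the end = {ratios[window]:.2f}")
```

With these settings the full series (129 points) and its second half (65 points) should give similar ratios, while the shorter windows should give smaller ones even though their linear trends are steeper.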

So now we have seen examples of the same trend (zero) leading to very different record ratios; we have seen examples of very different trends (zero and non-zero) leading to the same record ratio, and we have even seen examples of the record ratio going down for steeper trends. That should make it clear that in a situation of non-linear climate change, the linear trend value is not very relevant for the statistics of records, and one needs to look at the full time evolution.

Back to Moscow in July

That insight brings us back to a more real-world example. In our recent PNAS paper we looked at global annual-mean temperature series and at the July temperatures in Moscow. In both cases we find the data are not well described by a linear trend over the past 130 years, and we fitted smoothed curves to describe the gradual climate changes over time. In fact both climate evolutions show some qualitative similarities, i.e. a warming up to ~1940, a slight subsequent cooling up to ~1980 followed by a warming trend until the present. For Moscow the amplitude of this pattern is just larger, as one might expect (based on physical considerations and climate models) for a northern-hemisphere continental location.

NOAA has in a recent analysis of linear trends confirmed this non-linear nature of the climatic change in the Moscow data: for different time periods, their graph shows intervals of significant warming trends as well as cooling trends. There can thus be no doubt that the Moscow data do not show a simple linear warming trend since 1880, but a more complex time evolution. Our analysis based on the non-linear trend line strongly suggests that the key feature that has increased the expected number of recent records to about five times the stationary value is in fact the warming which occurred after 1980 (see Fig. 4 of our paper, which shows the absolute number of expected records over time). Up until then, the expected number of records is similar to that of a stationary climate, except for an earlier temporary peak due to the warming up to ~1940.

This fact is fortunate, since there are question marks about the data homogeneity of these time series. Apart from the urban adjustment problems discussed in our previous post, there is the possibility of a large warm bias during warm sunny summers in the earlier part, because thermometers were then not shaded from reflected sunlight – a problem that has been well-documented for pre-1950 instruments in France (Etien et al., Climatic Change 2009). Such data issues don’t play a major role for our record statistics if these are determined mostly by the post-1980 warming. This post-1980 warming is well-documented by satellite data (shown in Fig. 5 of our paper).

It ain’t attribution

Our statistical approach nevertheless is not in itself an attribution study. This term usually applies to attempts to attribute some event to a physical cause (e.g., greenhouse gases or solar variability). As Martin Vermeer rightly said in a comment to our previous post, such attribution is impossible by only analysing temperature data. We only do time series analysis: we merely split the data series into a ‘trend process’ (a systematic smooth climate change) and a random ‘noise process’ as described in time-series text books (e.g. Mudelsee 2010), and then analyse what portion of record events is related to either of these. This method does not say anything about the physical cause of the trend process – e.g., whether the post-1980 Moscow warming is due to solar cycles, an urban heat island or greenhouse gases. Other evidence – beyond our simple time-series analysis – has to be consulted to resolve such questions. Given that it coincides with the bulk of global warming (three quarters of which occurred from 1980 onwards) and is also predicted by models in response to rising greenhouse gases, this post-1980 warming in Russia is, in our view, very unlikely just due to natural variability.

A simple code

You don’t need a 1,000-page computer model code to find out some pretty interesting things about extreme events. The few lines of MATLAB code below are enough to perform the Monte Carlo simulations leading to our main conclusion regarding the Moscow heat wave – plus allowing you to play with the idealised U-shaped climate discussed above. The code takes a climate curve of 129 data points – either half a sinusoidal curve or the smoothed July temperature in Moscow 1881-2009 as used in our paper – and adds random white noise. It then counts the number of records in the last ten points of the series (i.e. in the last decade of the Moscow data). It does that 100,000 times to get the average number of records (i.e. the expected number). For the Moscow series, this code reproduces the calculations of our recent PNAS paper. In a hundred tries we find on average 41 heat records in the final decade, while in a stationary climate it would just be 8. Thus, the observed gradual climatic change has increased the expected number of records about 5-fold. This is just like using a loaded dice that rolls five times as many sixes as an unbiased dice. If you roll one six, there is then an 80% chance that it occurred because the dice is loaded, while there is a 20% chance that this six would have occurred anyway.

To run this code for the Moscow case, first download the file moscow_smooth.dat here.

----------------------

load moscow_smooth.dat

sernumber = 100000;                      % number of Monte Carlo realisations
trendline = (moscow_smooth(:,2))/1.55;   % trendline normalised by std dev of variability
%trendline = -sin([0:128]'/128*pi);      % an alternative, U-shaped trendline with zero trend

excount = 0;                             % initialise extreme counter

for i = 1:sernumber                      % loop through individual realisations of Monte Carlo series
    t = trendline + randn(129,1);        % make a Monte Carlo series of trendline + noise
    tmax = -99;                          % running maximum, starts below any value in the series
    for j = 1:129
        if t(j) > tmax                   % point j is warmer than all previous points: a record
            tmax = t(j);
            if j >= 120                  % count only records in the last ten points
                excount = excount + 1;
            end
        end
    end
end

expected_records = excount/sernumber     % expected number of records in last decade

% percentage of records due to the trend; 0.079 is the stationary expectation
probability_due_to_trend = 100*(expected_records-0.079)/expected_records

----------------------
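The last line of the listing is the loaded-dice arithmetic from the paragraph above it. As a small worked example, here it is in Python with the rounded numbers quoted in this post (41 and 8 records per hundred realisations). The function name is hypothetical.

```python
def fraction_due_to_trend(expected_with_trend, expected_stationary):
    """Share of records that would not have occurred without the trend."""
    return (expected_with_trend - expected_stationary) / expected_with_trend


share = fraction_due_to_trend(0.41, 0.08)
print(f"share of final-decade records due to the trend: {100 * share:.0f}%")
print(f"share expected in a stationary climate anyway:  {100 * (1 - share):.0f}%")
```

This reproduces the roughly 80% / 20% split quoted for the Moscow series.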

Reference

Mudelsee M (2010) Climate Time Series Analysis. Springer, 474 pp.

#38

“It is the nature of knowledge that it is never completely certain, because the contents of our mind is limited by our human abilities to perceive, analyze, and conceive.”

An exercise in uncertainty on science…

1. Take a 10 Kg weight to a height of say 10 metres – makes the numbers nice and easy.

2. Place your foot directly below the weight.

3. Allow the weight to fall.

4. Use the stimulus of pain as a focus for meditation on the issue of certainty in science.

5. Use the period of convalescence to learn the sort of basic physics that may stop you doing anything as stupid again.

6. er

7. …that’s it.

> precipitation … fewer … stronger … definitive

David (replying to Pete D.)– can you tell us which studies you’ve looked at that you don’t find definitive or convincing?

That would help narrow the list to consider what might be useful.

There are so many hits on this:

http://www.google.com/search?q=precipitation+intensity+frequency+concentration+trend

(I thought Google had discontinued using + in searches but it’s still putting them in, so maybe this will work for a while)

Is western Alaska’s snowicane a record breaker?

Hank Roberts @52 — Pete Dunkleberg found

Seung-Ki Min, Xuebin Zhang, Francis W. Zwiers & Gabriele C. Hegerl

Human contribution to more-intense precipitation extremes

NATURE VOL 470, 17 FEBRUARY 2011, 378–381

http://www.nature.com/uidfinder/10.1038/nature09763

which is very good IMO. However, that study (and many others) fails to confirm that there are areas with decreased average rainfall but increased extreme rains. I’m certainly prepared to believe that so-called desertification will lead to such a result, but I’d like to see some evidence.

perhaps:

http://www.uib.es/depart/dfs/meteorologia/ROMU/formal/paradoxical/paradoxical.pdf

The paradoxical increase of Mediterranean extreme daily

rainfall in spite of decrease in total values

GEOPHYSICAL RESEARCH LETTERS, VOL. 29, NO. 0, 10.1029/2001GL013554, 2002

“Earlier reports indicated some specific isolated regions

exhibiting a paradoxical increase of extreme rainfall in spite of

decrease in the totals. Here, we conduct a coherent study of the

full-scale of daily rainfall categories over a relatively large

subtropical region-the Mediterranean-in order to assess whether

this paradoxical behavior is real and its extent. …”

Cited by 127

“David B. Benson asks, Is western Alaska’s snowicane a record breaker?”

I believe Northern Norway has had a couple of storms of similar intensity, and certainly some Antarctic coasts and Southern Ocean islands, but otherwise it’s pretty close. Definitely that’s a storm that imho qualifies quite high on the NH snow storm list, though I don’t know if there’s a comprehensive list made anywhere. We had a Beaufort 10 snow storm last year and that too was pretty nasty.

Hank Roberts @55 — That was both prompt and exactly to the point! Moreover, the variations by locality are also of interest. Good find.

The changes twixt die and dice are quick.

Yes, we’ve been losing our adverbs really quick.

I am confused. Well, I understand that “If your series is n points long, then the probability that the last point is the hottest (and thus a record) is simply 1/n”. This is true for every point if you look at the whole dataset. However, a record (in my opinion) is not independent. When I roll a dice, every throw is independent of the others and therefore the probability of each throw giving a ‘6’ is 1/6. But if I look at the probability of the following throw being a record, this is not independent of the throw before. Example: I throw a 4. Then the probability of the next throw being a new record (meaning a 5 or a 6) is 1/3. If I throw a 1, it is 5/6. Looking at the series afterwards, of course each of these 2 throws has the same probability of being the record.

So in a linear climate, I expect, that new records tend to go near zero.

Where do I think wrong?

[Response: You have the concepts right, you just slipped up with the phrasing of your last statement there. Replace “linear” with “stationary” (unchanging) climate, and it’s correct. Since n increases with time, 1/n goes toward zero. The only thing linear here is the increase in value of n with time–Jim]

Here’s a start:

Allan and Soden 2006. Atmospheric Warming and the Amplification of Precipitation Extremes.

Min et al. 2011. Human contribution to more-intense precipitation extremes.

Intensification of Summer Rainfall Variability in the Southeastern United States HUI WANG et al. 2010

http://media.miamiherald.com/smedia/2008/08/07/15/rainwarm.source.prod_affiliate.56.pdf

Atmospheric Warming and the Amplification of Precipitation Extremes

Richard P. Allan and Brian J. Soden

http://www.pnas.org/content/106/35/14773.full.pdf+html

The physical basis for increases in precipitation

extremes in simulations of 21st-century climate change

Paul A. O’Gorman and Tapio Schneider

124. Trenberth KE, Dai A, Rasmussen RM, Parsons DB.

The changing character of precipitation. Bull Am Met

Soc 2003, 84:1205–1217.

http://portal.iri.columbia.edu/~alesall/ouagaCILSS/articles/trenberth_bams2003.pdf

125. Sun Y, Solomon S, Dai A, Portmann R. How often

will it rain? J Clim 2007, 20:4801–4818.

http://journals.ametsoc.org/doi/pdf/10.1175/JCLI3672.1

http://scholar.google.com/scholar?q=allen+soden+precipitation&hl=en&btnG=Search&as_sdt=1%2C22&as_sdtp=on

To Thomas on 59

IMHO the loaded dice example is a good one for understanding what the 80% means. If you know you have a loaded dice that rolls a 6 five out of six times, there is an 80% chance when you roll a 6 that you would not have gotten it if the dice was not loaded. You are absolutely correct that if there are only 6 discrete values, you have 0% chance of rolling a higher number if you have already rolled a 6.

Which brings us to the 1/n question. If you have n unique numbers, there is a 1/n probability that the last one is the highest, independent of the probability distribution. I do not think this is a good assumption on temperature data though, and I doubt they used this approach in the paper. If we characterize the distribution of temperatures, and the high, we should be able to calculate the probability that a new temperature exceeds that one. Now the paper did not take into account climate variability in the “non loaded” case, so we are comparing data for a non-variable or stationary climate versus the current one.

Your model leaves out the magnitude of new records. The Moscow heat wave wasn’t just a record, but an extreme outlier. The more interesting question is, “Given that a random record heat wave occurred (just weather), how probable was the Moscow heat wave?”

From WunderBlog:

http://www.wunderground.com/blog/JeffMasters/comment.html?entrynum=1441

“Haughty”? Resort to name-calling is a fairly certain indicator that the commenter really doesn’t have anything substantive to add to an argument.

I admire Gavin Schmidt, Eric Steig …all the folks at RealClimate. The very fact that they allow relevant debate to continue on this website is to their credit. So, when someone says they are “irritated” that RealClimate allows the debate to continue, because “the debate is over” I’m inclined to defend what I regard as completely appropriate dialog on the many uncertainties about climate science that remain. I also respect that they don’t bother to discuss every disproved conjecture of some folks – why waste time on what has been disproved, when there is so much remaining to discover and understand.

Comments about the Earth orbiting the sun, etc. seemingly betray a lack of understanding that despite all the advances scientists have made in understanding orbital mechanics, even with Einsteinian relativistic effects included, our models still fail to predict with complete accuracy the Earth’s orbit. In other words, there is opportunity here for further discovery, at least for those willing to look further.

Comments about “phlogiston” and the like are also disappointing. There is still a great deal we do not understand about the nature of space, electromagnetic radiation, gravity, the strong force, and the weak force. We don’t understand mass and how it comes about, nor why quarks seemingly have the masses they do, or why their masses don’t add up to the measured masses of protons and neutrons.

You want certainty? Sorry, you will be disappointed. Even though we may someday be able to see beyond the veil of Heisenberg’s uncertainty principle, uncertainty will remain.

Extreme? About 1,000 people died in Southeast Asia floods: UN.

#64,

“…the many uncertainties about climate science that remain…”

None of which undermine the central points:

1. The debate over the reality of the post 1975 warming is over.

2. The debate over the primary human role in most/all (I say ‘all’) of this warming is over.

Because CO2 is a primary driver of the post 1975 warming it must have had a role in the pre-1975 warming, the physics of the matter didn’t suddenly apply after 1975 and not before. So while claimed solar correlations prior to 1975 may well be correct, there was a background of warming due to CO2 which has been increasing since the late 1800s.

Yes there are issues still up for discussion – that’s why I read the science as an amateur. Were there no issues for discussion it wouldn’t be an interesting branch of science.

But the science is settled on the core issues that are relevant to the question: Is anthropogenic global warming (AGW) a problem that we need to do something about? And the answer is a clear, unequivocal, ‘Yes.’

The valid question is what to do. Key to deciding that is what impacts we will face, since severity of impacts is a crucial consideration in any cost-benefit decision. To say anything of worth about that I need to read more: I had thought that whilst the AGW signal emerged from noise around 1975 the signal of AGW related weather events was still within the noise. I now suspect it is starting to emerge from the noise of natural variability in key regions and key indices.

Geno Canto del Halcon,

You evidently do not understand scientific uncertainty. It is knowing the uncertainties that allows us to proclaim with high confidence that a satellite that we slingshot off of half a dozen celestial bodies will arrive at its intended target on a day certain. That is certainly good enough for most purposes.

Likewise, we can state with near certainty that adding CO2 to the atmosphere will warm the planet–and with high confidence about how much.

We have reached the point where it is no longer profitable to argue about whether CO2 is a greenhouse gas. It is. And once you have 97% of experts agreeing on anything–even if it is where to go for dinner–you can pretty much take that to the bank.

If you require certainty, may I direct you to the theology department.

Will RealClimate have a post on Hansen’s new paper where Hansen shows that the area of the Earth with a greater than 3SD heat anomaly has increased tremendously? I would be very interested in what scientists think of this paper.

If the 3SD anomaly was 0.5% of the Earth in the 1960’s and is 10% now, does that mean that 95% of the anomaly can be attributed to AGW? Can a % of the 3SD anomaly be attributed to AGW in a scientific way?

Thank you for your informative blog.

hi guys… long time no post…

whenever it’s cold where i live, as with that crazy october eastern snow storm this year, there’s someone who says, “so… how’s that global warming theory working out? hahaha…”

is there a website that would show all the hi and low records set GLOBALLY on a given day? also, is there a site showing the “running” or current global average temperature (and how it compares to “normal”)? i’d like to be able to illustrate how just because it’s cold here, it’s not cold GLOBALLY.

I second Mr. Sweet’s call for a discussion of the Hansen paper. I really like Figure 4, which summarizes well the evolution of the temperature anomaly distribution over the decades. I would really like to see the same graphs for precipitation, humidity and pressure.

sidd

I think that this is the new paper from Hansen about climate extremes.

“Climate Variability and Climate Change: The New Climate Dice” Hansen/Sato/Ruedy 10/11/11

PDF here

Ray Ladbury says:

“…. We have reached the point where it is no longer profitable to argue about whether CO2 is a greenhouse gas.”

Except, of course, for them as is profiting from the uncertainty and delay. Cough.

You guys from RC will never stop amazing me.

You even wrote a paper about the July 2010 heatwave. It was a blocking high, and what happens in cases like that? On one end you get unprecedented heat and on the other one unprecedented cold. It’s not that hard to work out.

http://www.esrl.noaa.gov/psd/csi/events/2010/russianheatwave/images/Global_sfcT_July2010.gif

[Response: And so where exactly is the unprecedented cold you speak of?–Jim]

Vlasta,

No, a blocking event will get you high temperatures–not necessarily a record–and it certainly won’t get you a 3.5 sigma event as we saw in Moscow. If you learned some math, perhaps you wouldn’t be continually amazed.

Thanks to RC and James Hansen; http://www.columbia.edu/~jeh1/mailings/2011/20111110_NewClimateDice.pdf

I now know why the price of pecans has gone up.

Ray, thanks for the reply.

I don’t dispute that it was a 100-year event, but if you spent so much time writing your paper you could have mentioned that Siberia was 8 deg below normal.

It’s pretty much obvious the blocking high was 1500 km east of Moscow, and on the eastern side of the high, cold air from the Barents Sea was drawn into Siberia.

http://earthobservatory.nasa.gov/IOTD/view.php?id=45069

Ray, thanks for the reply.

I don’t dispute it was a 100-year event.

The blocking high was 1500 km east of Moscow, and on the eastern side cold air from the Barents Sea was drawn into Siberia. If you spent so much time writing a paper about it, you could have mentioned it too.

http://earthobservatory.nasa.gov/IOTD/view.php?id=45069

Vlasta @76 — Based on historical records, it was more like a 1000 year event.

I read that Hansen paper yesterday and blogged on it last night – the implications are very disturbing. I’d go so far as to suggest that if you don’t find it unsettling, you don’t understand it.

The meteorological conditions leading to the 2010 Russia event are not relevant to the paper’s methodology or findings. Just as the fact that fires have happened naturally before humans would be irrelevant in a trial for arson.

Figure 2 of Barriopedro et al puts the 2003 and 2010 European Heatwaves into the same perspective as Hansen 2011. That figure also shows the shift Hansen finds.

The bottom line is that Hansen et al 2010, Rahmstorf & Coumou 2011, and Barriopedro et al 2011 all* support the contention that the 2010 Russian Heatwave event was very unlikely without global warming – a warming driven by human activity. Dr Rob Carver of Weather Underground considers the 2010 Russian Heatwave to be a 1000 to 15,000 year event assuming a stationary climate – see here for detail and caveats.

*Paywall-free links to all those papers here, bottom of page.

I contributed this post on much the same topic to Skeptical Science last year.

Though the analysis is much more crude, the results seem to agree. I find that pleasing :)

http://www.skepticalscience.com/Maximum-and-minimum-monthly-records-in-global-temperature-databases.html

Vlasta:

Examining figure 6 from Hansen’s paper, the cooling in Siberia you mention was a 1 sigma event, hardly unexpected. In addition it was much smaller in area than the Moscow 3.5 sigma heating. Please provide a reference for your extraordinary claim that the Siberia heat was unusual.

Chris R (#79),

FWIW, I reached the same chilling conclusion you blogged about when I read the Hansen paper. Two things struck me: I don’t know that this has been peer-reviewed, and you have to have a moderate understanding of math/stats to appreciate what it is telling us.

Frankly, it is so simple, I’d like to see critics try to poke holes in it. So, the attacks on it will come in the form of tangential issues, or from those not skilled at math. I heard once that any idiot can make something complicated; it takes genius to make something simple. There is beauty in this one’s simplicity.

I think the most important trick this paper accomplishes is to convey that, while it is true that you can not say much about any isolated event, these events are not isolated.

Edward Greisch (75),

Thank you very much for the link to that paper. Not even half way through, but this particular paragraph really stands out:

Whenever you bring up weather extremes such as the heat wave in Russia or the flooding in Australia there is a pretty good chance that the “skeptics” are going to say something to the effect, “Well, during any given year there is always extreme weather somewhere, highly unusual for one location or another, but if you were to look at the globe as a whole it really wouldn’t be that unusual.” However, that particular paragraph really puts some numbers on it.

Stated a little less technically, as you might put it in conversation or a letter to the editor, “There is the warming trend, which is bad enough. But on top of this trend is a variability. That part of the world that is experiencing extremely unusual temperatures, in addition to the trend, has increased in size roughly by a factor of 60 over what it was in the 1950s, 60s and 70s.” Then if someone asks what you mean by “highly unusual” you can say something like, “Summers with heat waves, for example, that would normally occur only once every three hundred years.”

When the evening news begins with: “Today, the debate over Global Warming,…”, that’s a lie. There is no debate over Global Warming.

There is a debate over ‘modals’, math, probabilities, statistics,…but not over Global Warming. The debate is over refinements.

No debate over CO2 in the atmosphere.

(“The Keeling Curve provided the first clear evidence that carbon dioxide was accumulating in the atmosphere as the result of humanity’s use of fossil fuels. It turned speculations about increasing CO2 from theory into fact. Over time, it served to anchor other aspects of the science of global warming.”)

No debate over temperature rise.

(“We see a global warming trend that is very similar to that previously reported by the other groups. The world temperature data has sufficient integrity to be used to determine global temperature trends.” Muller)

Friedman and Smith use the ‘invisible hand’ as a metaphor proving that ‘Laissez Faire’ generates a more robust system. Unfortunately, as the ‘invisible hand’ put the last propeller on the International Debt Market, the wheels fell off. Laissez Faire (deregulation) produced a catastrophe in the US Banking System, US Housing Market (read ‘Liar’s Loans’), and now, International Sovereign Debt. Laissez Faire produces a house of cards.

Should not the world be warned that there is an intersection coming up between weather and agriculture? Should not the World be warned that the systems of Supply, Distribution, and Finance are too fragile to withstand the coming onslaught? Should they not be told, “These are facts; these are hypotheses.”?

If the death of a billion people can be reduced to 900 million, is it a worthwhile thing to do?

Chris R said

“The bottom line is that Hansen et al 2010, Rahmstorf & Coumou 2011, and Barriopedro et al 2011 all* support the contention that the 2010 Russian Heatwave event was very unlikely without global warming – a warming driven by human activity.”

Which is far from the truth. If Hansen et al is correct, then there are only three possibilities – the standard deviation is incorrect, the variation is increasing, or it is random chance. Now the variation is much higher than global warming in these cases. Rahmstorf and Coumou specifically assume variation does not change with warming, and since they did not do attribution nor account for climate variation, Hansen et al. and Barriopedro et al. would lead someone who understands statistics to say they can not attribute it to warming and not variation. Note they do not do any attribution in the paper, and never look at climate variation.

Now there is the possibility that global warming is causing an increase in standard deviation or causing these climate variation events such as atmospheric blocking. The main paper that examines this is Dole et al. with the Russian Heat Wave, and it can not find attribution. Someone needs to do a great deal more work to find causation between global warming and the Russian heat wave if it is to have a scientific basis, and not just a political argument. If climate change is going to cause things like the Russian heat wave, the mitigation may be to have better HVAC in those areas, which will actually increase GHG. Most deaths were related to alcohol or inhalation exacerbated by lack of air conditioning following the drought-induced wild fires.

Re: #85 (Norm Hull)

You said:

You left one out: the mean is increasing. That’s the entire point of Rahmstorf & Coumou — to show that even when the standard deviation is larger than the warming, warming still makes such extremes much more likely.

That’s why the Russian heat wave was so much more likely with global warming. A blocking event makes a heat wave — global warming makes it a once-in-a-thousand-years heat wave. The truly sad part is, it’s no longer once-in-a-thousand-years.
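The size of this effect can be illustrated with a few lines of arithmetic. What follows is a minimal sketch, assuming Gaussian temperatures whose standard deviation stays fixed while the mean shifts upward; it is not the analysis from the paper, and the function names and shift values are hypothetical choices for illustration.

```python
import math

def upper_tail(z):
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def exceedance_ratio(threshold_sigma, shift_sigma):
    """Factor by which the chance of exceeding a fixed threshold grows
    when the mean shifts up by shift_sigma (standard deviation unchanged)."""
    before = upper_tail(threshold_sigma)
    after = upper_tail(threshold_sigma - shift_sigma)
    return after / before

# Assumed shifts, all smaller than or equal to one standard deviation.
for shift in (0.25, 0.5, 1.0):
    ratio = exceedance_ratio(3.0, shift)
    print(f"mean shift of {shift} sigma: old 3-sigma level exceeded {ratio:.1f}x as often")
```

Under these assumptions, a shift of one standard deviation makes exceeding the old 3-sigma level roughly 17 times as likely, even though the shift is no larger than the year-to-year noise.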

tamino,

If it is 3 sigma greater than the mean, it doesn’t matter if the mean is higher. If you mean that the 3-sigma level gets higher, sure. Rahmstorf and Coumou did not even look at what made the heat wave so bad: the length of time, the droughts, etc. For the grid, the Russian heat wave had an average July temperature of almost 4 standard deviations from the mean; for June, July, August it was 3.

Responding to Norm Hull, Tamino (86) writes:

Rahmstorf and Coumou state as much on page 4:

They don’t deny that variability may increase, in fact they acknowledge as much. But for the sake of instruction they consider the simpler case where variation remains the same and the baseline moves in order to demonstrate how a little warming may make extremely unlikely events far more likely.

Norm Hull writes:

Trenberth examines the weaknesses of their analysis in an interview by Joe Romm here:

NOAA: Monster crop-destroying Russian heat wave to be once-in-a-decade event by 2060s (or sooner)

http://thinkprogress.org/romm/2011/03/14/207683/noaa-russian-heat-wave-trenberth-attribution/

Regarding their (lack of) attribution, in part Trenberth states:

Later he continues:

The change in atmospheric circulation that made the heatwave so intense wasn’t due to a change in variability, a moving of the baseline, or a moderate El Niño. Rather, it is reasonable to conclude that each of these played a part. Each was a factor. And thus certain highly improbable events become much more probable under global warming.

Walter Crain @ 69:

Because of the accumulated ocean heat content and CO2 it will not be cool globally for many thousands of years. Perhaps you could teach your friends some amazing natural history like winter is cooler than summer in the hemisphere that is having winter at the time, or some advanced technical concepts like “average”.

http://data.giss.nasa.gov/gistemp/maps/

You can check by month. Pick any month and it is warmer than it used to be. Look for the anomaly at the top right of the map. La Niña years are globally “cool” but they are now warmer than El Niño used to be.

http://data.giss.nasa.gov/gistemp/

http://skepticalscience.com/argument.php

http://capitalclimate.blogspot.com/2010/10/endless-summer-xii-septembers.html

thanks much, pete. i really appreciate your answering.

that first link you gave is pretty cool – so i can show them the current month. what i’d really like is something for TODAY (or even yesterday). i want to be able to show someone who during a snow storm says, “how’s that global warming going?” that it’s warm pretty much everywhere else today, right now. i don’t want anything nuanced about yearly, monthly averages. i’d like to know the global average for today, and possibly the number of cold vs warm records set yesterday.

“skepticalscience” is one of my favorite sites to send “skeptics” to. i love how it just knocks down the specious arguments one-by-one.

Tamino,

The probability of the blocking event which caused the Russian heat wave neither increases nor decreases as global temperatures change. The great plains of the US experienced record high temperatures during the historic “dust bowl” in the 1930s, culminating in the summer of 1936. Whether this was a blocking event similar to what occurred in Russia may never be known, but two such events, 74 years apart, seem to be much more common than one in a thousand.

It is less a question of the standard deviation as Norm suggested, but more a statement that the distribution is non-Gaussian, resulting in higher probabilities of both record highs and lows than expected in a true Gaussian distribution. The slight warming experienced over the 20th century is unlikely to increase the chances of these types of temperature extremes, as the extremes are several standard deviations higher than the mean, and are tied to weather events rather than temperature averages. The North American record high was set in 1913, hardly a year of record warmth, in general.

Re: #91 (Dan H.)

As far as I know that’s quite true. But if a blocking event which causes a heat wave does occur, then global warming dramatically increases the probability of its being a record-setting heat wave, even a once-in-a-thousand-years record event. That’s the point of Rahmstorf & Coumou 2011.
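A quick simulation in the spirit of the post's thought experiment shows the same thing. This is only a sketch under stated assumptions: Gaussian white noise with unit standard deviation, a linear trend, and made-up values for the series length and trend size. It is not the code or the data used in the paper.

```python
import random

def record_rate_last_year(n_years, trend_per_year, n_trials, seed=0):
    """Estimate the chance that the final year of a noisy series
    is warmer than every earlier year (i.e. sets a heat record)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        # Linear trend plus white noise with standard deviation 1.
        series = [trend_per_year * t + rng.gauss(0.0, 1.0) for t in range(n_years)]
        if series[-1] > max(series[:-1]):
            hits += 1
    return hits / n_trials

n_years = 100      # assumed record length
n_trials = 10000   # Monte Carlo repetitions
stationary = record_rate_last_year(n_years, 0.0, n_trials)
warming = record_rate_last_year(n_years, 0.01, n_trials)  # assumed: 1 sigma per century

print(f"stationary climate: {stationary:.4f} (theory: {1 / n_years:.4f})")
print(f"warming climate:    {warming:.4f}")
```

In the stationary case the estimate should land near 1/n, since each year is equally likely to hold the record. With the trend switched on, the same noise produces records in the final year more often, although the total warming here is no larger than one standard deviation of the noise.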

Rather than explain your error, I’ll simply suggest that you think about this more carefully.

Well, thank god Dan H has set the scientific world straight!

Who needs research when hand-waving will suffice?

I mean, “Whether this was a blocking event similar to what occurred in Russia may never be known, but two such events, 74 years apart, seem to be much more common than one in a thousand” … wow. Add in a good Saharan drought and the Russian event will seem downright common!

#85 Norm Hull,

“If hansen et al is correct, then their (sic) are only three possibilities – the standard deviation is incorrect [1], the variation is increasing [2], or it is random chance[3].”

Tamino has already given you one addition – the mean is increasing [4].

The Hansen paper gives 3 different approaches using 1951-80 sigma, detrended sigma for ’81-10, and sigma for 81-10. All three give the same overall result – with each passing decade since the 1970s the local temperature anomaly distribution shifts to the right (gets warmer). This dispenses with 1 and 3, leaving us with 2 and 4. We can accept 4, this is a trite observation, the planet is warming.

However, whilst I’m no mathematician (I’m in Electronics), I’ve noticed something at work and have reproduced it in Excel: a histogram of a timeseries with a trend in it is skewed; it rises more slowly than the original timeseries histogram and drops faster. I’ve just done some runs in Excel using the data analysis pack to make a normally distributed series of random numbers, and for each new set this behaviour holds true. Like I say, I’m no mathematician, but I guess this is due to the rising trend.

This is not however what Hansen finds, and this is the reason Hansen’s paper alarmed me. In figure 4 all of the curves for all periods in both summer and winter approach the x axis at around -3. Yet at the other side there is a positive going skew that has all of the post 1970 periods meeting the X axis above 3. This suggests to me that what is going on is not simply a matter of the curves being shifted by a linear trend (change in mean) but is also a significant upward shift of variance.
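For anyone who wants to repeat the Excel experiment, here is a minimal sketch that measures the skewness of a trend-plus-noise series directly. It assumes Gaussian white noise with unit standard deviation; the two trend shapes (one linear, one accelerating) and their sizes are hypothetical choices, and none of this reproduces Hansen's calculation.

```python
import random
import statistics

def sample_skewness(values):
    """Third standardized moment: about 0 for a symmetric histogram,
    positive when the warm tail is the longer one."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)

def noisy_series(n, trend_fn, seed=0):
    """Trend evaluated on a 0..1 time axis plus unit-variance white noise."""
    rng = random.Random(seed)
    return [trend_fn(t / (n - 1)) + rng.gauss(0.0, 1.0) for t in range(n)]

n = 20000
linear = noisy_series(n, lambda x: 3.0 * x)        # assumed: steady rise of 3 sigma
curved = noisy_series(n, lambda x: 3.0 * x ** 4)   # assumed: rise concentrated at the end

print(f"skewness with linear trend:       {sample_skewness(linear):+.3f}")
print(f"skewness with accelerating trend: {sample_skewness(curved):+.3f}")
```

With a strictly linear trend the pooled values are a uniform spread of means blurred by symmetric noise, so the histogram should come out close to symmetric in a long run. A trend that accelerates towards the end stretches the warm tail instead. Whether a skew appears therefore depends on the shape of the trend as well as on any change in variance, and short series can show an apparent skew by chance.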

Dan H,

I thought like you, that the small increase in GAT would not cause a substantial increase in high sigma warm episodes. However the Hansen paper suggests (strongly IMO) that this view is incorrect. See also Barriopedro, 2011, “The Hot Summer of 2010: Redrawing the Temperature Record Map of Europe.”

http://www.see.ed.ac.uk/~shs/Climate%20change/Climate%20model%20results/Heat%20waves.pdf

Vukcevic,

Could you expand on the North Atlantic precursor in your graph? Is it related to the NAO, AMO, or some other factor?

Dan H.,

Yes, I think you explained my objection better than I did. If the odds are being beaten so easily there must be something wrong with the model.

Timothy Chase,

If you look at the model this thought experiment is based on, there is simply climate variation. Rahmstorf and Coumou do discuss the possibilities of higher variation, but they do not use it in their model. They also never try to model global warming, and only separate low frequency from high frequency data from the local temperature record. Their trend line clearly has local climate variability, and they plainly state local warming is much higher recently than global warming. Hence you cannot attribute results from this analysis to global warming.

As for Climate Progress and Trenberth’s criticism of Dole, I find it quite hollow. Romm also claimed Muller didn’t understand climate science because he dared to criticize some of Al Gore’s exaggerations. Let’s stick to real scientific criticisms and not go off to a political block.

Chris R.

This is the important part of the paper you linked

“The enhanced frequency for small to moderate anomalies of 2-3 SDs is mostly accounted for by a shift in mean summer temperatures (compare Fig. 4 with fig. S18). However, the future probabilities of ‘mega-heatwaves’ with SDs similar to 2003 and 2010 are substantially amplified by enhanced variability. Particularly in WE, variability has been suggested to increase at interannual and intraseasonal time-scales (1, 2) as a result of increased land-atmosphere coupling (28) and changes in the surface energy and water budget (2, 29). Models indicate that the structure of circulation anomalies associated with ‘mega-heatwaves’ remains essentially unchanged in the future (SOM text).”

In other words, smaller variations are accounted for by warming, but not these heat waves, the extreme temperature anomalies. They suggest increases in variability but suggest that another heatwave of this magnitude is unlikely in the next 50 years. It had been since the 30s for western Russia to have a big anomaly. There is coupling, so we can look back to the 1930s and find many anomalies worldwide.

Norm Hull:

For starters, global warming includes that 3/4 of the globe that is covered by the earth’s oceans. Warming over land, in particular the interior of large continents (as opposed to places like the UK or the Pacific Northwest which have maritime climates) is more pronounced.

You seem to think global warming should be uniform, and that’s just not true.

Norm Hull wrote: “Romm also claimed Muller didn’t understand climate science because he dared to criticize some of Al Gore’s exaggerations. Let’s stick to real scientific criticisms and not go off to a political block.”

There are various Internet conventions, from emoticons to acronyms, which attempt to use plain text to convey a guffaw. I do not find any of them adequate.