There is a new paper on Science Express that examines the constraints on climate sensitivity from looking at the last glacial maximum (LGM), around 21,000 years ago (Schmittner et al, 2011) (SEA). The headline number (2.3ºC) is a little lower than IPCC’s “best estimate” of 3ºC global warming for a doubling of CO2, but within the likely range (2-4.5ºC) of the last IPCC report. However, there are reasons to think that the result may well be biased low, and stated with rather more confidence than is warranted given the limitations of the study.

Climate sensitivity is a key characteristic of the climate system, since it tells us how much global warming to expect for a given forcing. It usually refers to how much surface warming would result from a doubling of CO2 in the atmosphere, but is actually a more general metric that gives a good indication of what any radiative forcing (from the sun, a change in surface albedo, aerosols etc.) would do to surface temperatures at equilibrium. It is something we have discussed a lot here (see here for a selection of posts).

Climate models inherently predict climate sensitivity, which results from the basic Planck feedback (the increase of infrared cooling with temperature) modified by various other feedbacks (mainly the water vapor, lapse rate, cloud and albedo feedbacks). But observational data can reveal how the climate system has responded to known forcings in the past, and hence give constraints on climate sensitivity. The IPCC AR4 (9.6: Observational Constraints on Climate Sensitivity) lists 13 studies (Table 9.3) that constrain climate sensitivity using various types of data, including two using LGM data. More have appeared since.

It is important to regard the LGM studies as just one set of points in the cloud yielded by other climate sensitivity estimates, but the LGM has been a frequent target because it was a period for which there is a lot of data from varied sources, climate was significantly different from today, and we have considerable information about the important drivers – like CO2, CH4, ice sheet extent, vegetation changes etc. Even as far back as Lorius et al (1990), estimates of the mean temperature change and the net forcing were combined to give estimates of sensitivity of about 3ºC. More recently, Köhler et al (2010) (KEA) used estimates of all the LGM forcings, and an estimate of the global mean temperature change, to constrain the sensitivity to 1.4-5.2ºC (5–95%), with a mean value of 2.4ºC. Another study, using a joint model-data approach (Schneider von Deimling et al, 2006b), derived a range of 1.2 – 4.3ºC (5-95%). The SEA paper, with its range of 1.4 – 2.8ºC (5-95%), is merely the latest in a series of these studies.
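The arithmetic behind forcing-based estimates of this kind is a simple ratio, sketched below. The function name and the LGM inputs are illustrative assumptions, not values taken from any of the papers cited; the only fixed number is the roughly 3.7 W/m2 forcing conventionally associated with a doubling of CO2.

```python
# Forcing of a CO2 doubling in W/m^2 (commonly quoted approximate value).
F_2XCO2 = 3.7

def sensitivity_from_lgm(delta_t, delta_f, f_2x=F_2XCO2):
    """Scale the LGM temperature change per unit forcing to a CO2 doubling.

    delta_t: global mean LGM temperature change (deg C, negative for cooling)
    delta_f: net LGM radiative forcing (W/m^2, negative)
    """
    if delta_f == 0:
        raise ValueError("net forcing must be non-zero")
    return f_2x * delta_t / delta_f

# Hypothetical inputs, chosen only to show the calculation.
example = sensitivity_from_lgm(delta_t=-5.0, delta_f=-7.4)
print(f"{example:.2f} deg C per doubling")
```

The ratio assumes that the response per unit forcing is the same for LGM cooling as for future warming, which is exactly the assumption questioned later in this post.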

Definitions of sensitivity

The standard definition of climate sensitivity comes from the Charney report in 1979, where the response was defined as that of an atmospheric model with fixed boundary conditions (ice sheets, vegetation, atmospheric composition) but variable ocean temperatures, to 2xCO2. This has become a standard model metric because it is relatively easy to calculate. It is not, however, the same thing as what would really happen to the climate with 2xCO2, because of course, those ‘fixed’ factors would not stay fixed.

Note then, that the SEA definition of sensitivity includes feedbacks associated with vegetation, which was considered a forcing in the standard Charney definition. Thus for the sensitivity determined by SEA to be comparable to the others, one would need to know the forcing due to the modelled vegetation change. KEA estimated that LGM vegetation forcing was around -1.1+/-0.6 W/m2 (because of the loss of trees in polar latitudes, replacement of forests by savannah etc.), and if that was similar to the SEA modelled impact, their Charney sensitivity would be closer to 2ºC (down from 2.3ºC).
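One way to see where a number near 2ºC comes from is to move the vegetation term from the feedback side to the forcing side of the ratio. The sketch below does that under a stated assumption: the non-vegetation LGM forcing of -7.4 W/m2 is a hypothetical placeholder (the SEA model's own value is not given here), and only the -1.1 W/m2 vegetation forcing comes from the KEA estimate quoted above.

```python
def charney_equivalent(s_with_veg_feedback, f_other, f_veg):
    """Convert a sensitivity that treats vegetation as a feedback into one
    that treats it as a forcing (Charney-style).

    The same temperature change is attributed to a larger total forcing,
    so the sensitivity shrinks by f_other / (f_other + f_veg).
    """
    total = f_other + f_veg
    if total == 0:
        raise ValueError("total forcing must be non-zero")
    return s_with_veg_feedback * f_other / total

# f_other is an assumed value for illustration; f_veg is the KEA central estimate.
charney = charney_equivalent(2.3, f_other=-7.4, f_veg=-1.1)
print(f"{charney:.2f} deg C per doubling")
```

With the KEA uncertainty of +/-0.6 W/m2 on the vegetation term, the adjusted value moves by roughly a tenth of a degree either way under the same assumed `f_other`.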

Other studies have also expanded the scope of the sensitivity definition to include even more factors, a definition different enough to have its own name: the Earth System Sensitivity. Notably, both the Pliocene warm climate (Lunt et al., 2010), and the Paleocene-Eocene Thermal Maximum (Pagani et al., 2006), tend to support Earth System sensitivities higher than the Charney sensitivity.

Is sensitivity symmetric?

The first thing that must be recognized regarding all studies of this type is that it is unclear to what extent behavior in the LGM is a reliable guide to how much it will warm when CO2 is increased from its pre-industrial value. The LGM was a very different world than the present, involving considerable expansions of sea ice, massive Northern Hemisphere land ice sheets, geographically inhomogeneous dust radiative forcing, and a different ocean circulation. The relative contributions of the various feedbacks that make up climate sensitivity need not be the same going back to the LGM as in a world warming relative to the pre-industrial climate. The analysis in Crucifix (2006) indicates that there is not a good correlation between sensitivity on the LGM side and sensitivity to 2XCO2 in the selection of models he looked at.

There has been some other work to suggest that overall sensitivity to a cooling is a little less (80-90%) than sensitivity to a warming, for instance Hargreaves and Annan (2007), so the numbers of Schmittner et al. are less different from the “3ºC” number than they might at first appear. The factors that determine this asymmetry are various, involving ice albedo feedbacks, cloud feedbacks and other atmospheric processes, e.g., water vapor content increases approximately exponentially with temperature (Clausius-Clapeyron equation) so that the water vapor feedback gets stronger the warmer it is. In reality, the strength of feedbacks changes with temperature. Thus the complexity of the model being used needs to be assessed to see whether it is capable of addressing this.
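The Clausius-Clapeyron point can be made concrete with a short calculation. The sketch below uses a Magnus-type approximation for saturation vapor pressure over water (an approximation chosen here for convenience, not something used in the papers discussed); the function names are illustrative. It shows only how much more water vapor the air can hold per degree at different temperatures, not the strength of the feedback itself.

```python
import math

def saturation_vapor_pressure_hpa(temp_c):
    """Magnus-type approximation to saturation vapor pressure over water (hPa)."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))

def increase_per_degree(temp_c):
    """Absolute rise in saturation vapor pressure (hPa) for 1 deg C of warming."""
    return saturation_vapor_pressure_hpa(temp_c + 1.0) - saturation_vapor_pressure_hpa(temp_c)

# Compare a colder, a mild and a warmer surface temperature.
for t in (5.0, 15.0, 25.0):
    e_s = saturation_vapor_pressure_hpa(t)
    print(f"{t:5.1f} C  e_s = {e_s:6.2f} hPa  +1 C adds {increase_per_degree(t):.2f} hPa")
```

The absolute increment per degree grows with temperature, which is the sense in which a colder LGM climate would be expected to have a weaker water vapor response than a warmer future one.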

Does the model used adequately represent key climate feedbacks?

Typically, LGM constraints on climate sensitivity are obtained by producing a large ensemble of climate model versions where uncertain parameters are systematically varied, and then comparing the LGM simulations of all these models with “observed” LGM data, i.e. proxy data, by applying a statistical approach of one sort or another. It is noteworthy that very different models have been used for this: Annan et al. (2005) used an atmospheric GCM with a simple slab ocean, Schneider et al. (2006) the intermediate-complexity model CLIMBER-2 (with both ocean and atmosphere of intermediate complexity), while the new Schmittner et al. study uses an oceanic GCM coupled to a simple energy-balance atmosphere (UVic).

These models all suggest potentially serious limitations for this kind of study: UVic does not simulate the atmospheric feedbacks that determine climate sensitivity in more realistic models, but rather fixes the atmospheric part of the climate sensitivity as a prescribed model parameter (surface albedo, however, is internally computed). Hence, the dominant part of climate sensitivity remains the same, whether looking at 3ºC cooling or 3ºC warming. Slab oceans on the other hand, do not allow for variations in ocean circulation, which was certainly important for the LGM, and other intermediate models have all made key assumptions that may impact these feedbacks. However, in view of the fact that cloud feedbacks are the dominant contribution to uncertainty in climate sensitivity, the fact that the energy balance model used by Schmittner et al cannot compute changes in cloud radiative forcing is particularly serious.

Uncertainties in LGM proxy data

Perhaps the key difference of Schmittner et al. to some previous studies is their use of all available proxy data for the LGM, whilst other studies have selected a subset of proxy data that they deemed particularly reliable (e.g., in Schneider et al. SST data from the tropical Atlantic, Greenland and Antarctic ice cores and some tropical land temperatures). Uncertainties of the proxy data (and the question of knowing what these uncertainties are) are crucial in this kind of study. A well-known issue with LGM proxies is that the most abundant type of proxy data, using the species composition of tiny marine organisms called foraminifera, probably underestimates sea surface cooling over vast stretches of the tropical oceans; other methods like alkenone and Mg/Ca ratios give colder temperatures (but aren’t all coherent either). It is clear that this data issue makes a large difference in the sensitivity obtained.

The Schneider et al. ensemble constrained by their selection of LGM data gives a global-mean cooling range during the LGM of 5.8 +/- 1.4ºC (Schneider von Deimling et al, 2006), while the best fit from the UVic model used in the new paper has 3.5ºC cooling, well outside this range (weighted average calculated from the online data; a slightly different number is stated in Nathan Urban’s interview – not sure why).

Curiously, the mean SEA estimate (2.4ºC) is identical to the mean KEA number, but there is a big difference in what they concluded the mean temperature at the LGM was, and a small difference in how they defined sensitivity. Thus the estimates of the forcings must be proportionately less as well. The differences are that the UVic model has a smaller forcing from the ice sheets, possibly because of an insufficiently steep lapse rate (5ºC/km instead of a steeper value that would be more typical of drier polar regions), and also a smaller change from increased dust.

Model-data comparisons

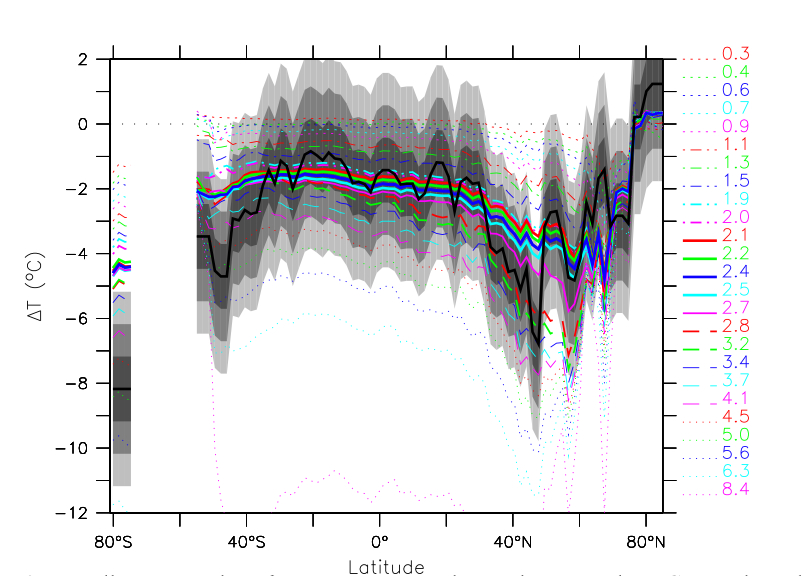

So there is a significant difference in the headline results from SEA compared to previous results. As we mentioned above though, there are reasons to think that their result is biased low. There are two main issues here. First, the constraint to a lower sensitivity is dominated by the ocean data – if the fit is made to the land data alone, the sensitivity would be substantially higher (though with higher uncertainty). The best fit for all the data underpredicts the land temperatures significantly.

However, even in the ocean the fit to the data is not that good in many regions – particularly the southern oceans and Antarctica, but also in the Northern mid-latitudes. This occurs because the tropical ocean data are weighing more heavily in the assessment than the sparser and possibly less accurate polar and mid-latitude data. Thus there is a mismatch between the pattern of cooling produced by the model, and the pattern inferred from the real world. This could be because of the structural deficiency of the model, or because of errors in the data, but the (hard to characterise) uncertainty in the former is not being carried into the final uncertainty estimate. None of the different model versions here seem to get the large polar amplification of change seen in the data, for instance.

Response and media coverage

All in all, this is an interesting paper and methodology, though we think it slightly underestimates the most likely sensitivity, and rather more seriously underestimates the chances that the sensitivity lies at the upper end of the IPCC range. Some other commentaries have come to similar conclusions: James Annan (here and here), and there is an excellent interview with Nathan Urban here, which discusses the caveats clearly. The perspective piece from Gabi Hegerl is also worth reading.

Unfortunately, the media coverage has not been very good. Partly, this is related to some ambiguous statements by the authors, and partly because media discussions of climate sensitivity have a history of being poorly done. The dominant frame was set by the press release which made a point of suggesting that this result made “extreme predictions” unlikely. This is fair enough, but had already been clear from the previous work discussed above. This was transformed into “Climate sensitivity was ‘overestimated’” by the BBC (not really a valid statement about the state of the science), compounded by the quote that Andreas Schmittner gave that “this implies that the effect of CO2 on climate is less than previously thought”. Who had previously thought what was left to the readers’ imagination. Indeed, the latter quote also prompted the predictably loony IBD editorial board to declare that this result proves that climate science is a fraud (though this is not Schmittner’s fault – they conclude the same thing every other Tuesday).

The Schmittner et al. analysis marks the insensitive end of the spectrum of climate sensitivity estimates based on LGM data, in large measure because it used a data set and a weighting that may well be biased toward insufficient cooling. Unfortunately, in reporting new scientific studies a common fallacy is to implicitly assume a new study is automatically “better” than previous work and supersedes this. In this case one can’t blame the media, since the authors’ press release cites Schmittner saying that “the effect of CO2 on climate is less than previously thought”. It would have been more appropriate to say something like “our estimate of the effect is less than many previous estimates”.

Implications

It is not all that earthshaking that the numbers in Schmittner et al come in a little low: the 2.3ºC is well within previously accepted uncertainty, and three of the IPCC AR4 models used for future projections have a climate sensitivity of 2.3ºC or lower, so that the range of IPCC projections already encompasses this possibility. (Hence there would be very little policy relevance to this result even if it were true, though note the small difference in definitions of sensitivity mentioned above).

What is more surprising is the small uncertainty interval given by this paper, and this is probably simply due to the fact that not all relevant uncertainties in the forcing, the proxy temperatures and the model have been included here. In view of these shortcomings, the confidence with which the authors essentially rule out the upper end of the IPCC sensitivity range is, in our view, unwarranted.

Be that as it may, all these studies, despite the large variety in data used, model structure and approach, have one thing in common: without the role of CO2 as a greenhouse gas, i.e. the cooling effect of the lower glacial CO2 concentration, the ice age climate cannot be explained. The result — in common with many previous studies — actually goes considerably further than that. The LGM cooling is plainly incompatible with the existence of a strongly stabilizing feedback such as the oft-quoted Lindzen’s Iris mechanism. It is even incompatible with the low climate sensitivities you would get in a so-called ‘no-feedback’ response (i.e just the Planck feedback – apologies for the terminological confusion).

It bears noting that even if the SEA mean estimate were correct, it still lies well above the ever-more implausible estimates of those that wish the climate sensitivity were negligible. And that means that the implications for policy remain the same as they always were. Indeed, if one accepts a very liberal risk level of 50% for mean global warming of 2°C (the guiderail widely adopted) since the start of the industrial age, then under midrange IPCC climate sensitivity estimates we have around 30 years before the risk level is exceeded. Specifically, to reach that probability level, we can burn a total of about one trillion metric tonnes of carbon. That gives us about 24 years at current growth rates (about 3%/year). Since warming is proportional to cumulative carbon, if the climate sensitivity were really as low as Schmittner et al. estimate, then another 500 GT would take us to the same risk level, some 11 years later.
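The kind of calculation behind "years until the budget is used up" can be sketched as follows. The post does not state its starting emissions or how much of the trillion tonnes has already been burned, so the inputs below (500 GtC remaining, 10 GtC/year today) are placeholders, and the output is not expected to match the 24 and 11 years quoted above exactly. The function name is also illustrative.

```python
import math

def years_to_exhaust(budget_gtc, emissions_gtc_per_yr, growth_rate):
    """Years until cumulative emissions reach budget_gtc.

    Emissions start at emissions_gtc_per_yr and grow continuously at the
    rate equivalent to growth_rate per year (0.03 means 3%/year).
    """
    if growth_rate == 0:
        return budget_gtc / emissions_gtc_per_yr
    r = math.log(1.0 + growth_rate)
    # Solve E0 * (exp(r * t) - 1) / r = budget for t.
    return math.log(1.0 + r * budget_gtc / emissions_gtc_per_yr) / r

# Placeholder inputs: remaining budget, and the same budget plus another 500 GtC.
base = years_to_exhaust(500.0, 10.0, 0.03)
extended = years_to_exhaust(1000.0, 10.0, 0.03)
print(f"budget reached after {base:.0f} years; extra 500 GtC buys {extended - base:.0f} more")
```

Whatever inputs are used, the second 500 GtC always lasts for fewer years than the first, because emissions are higher by the time it starts being consumed.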

References

- A. Schmittner, N.M. Urban, J.D. Shakun, N.M. Mahowald, P.U. Clark, P.J. Bartlein, A.C. Mix, and A. Rosell-Melé, "Climate Sensitivity Estimated from Temperature Reconstructions of the Last Glacial Maximum", Science, vol. 334, pp. 1385-1388, 2011. http://dx.doi.org/10.1126/science.1203513

- C. Lorius, J. Jouzel, D. Raynaud, J. Hansen, and H.L. Treut, "The ice-core record: climate sensitivity and future greenhouse warming", Nature, vol. 347, pp. 139-145, 1990. http://dx.doi.org/10.1038/347139a0

- P. Köhler, R. Bintanja, H. Fischer, F. Joos, R. Knutti, G. Lohmann, and V. Masson-Delmotte, "What caused Earth's temperature variations during the last 800,000 years? Data-based evidence on radiative forcing and constraints on climate sensitivity", Quaternary Science Reviews, vol. 29, pp. 129-145, 2010. http://dx.doi.org/10.1016/j.quascirev.2009.09.026

- T. Schneider von Deimling, H. Held, A. Ganopolski, and S. Rahmstorf, "Climate sensitivity estimated from ensemble simulations of glacial climate", Climate Dynamics, vol. 27, pp. 149-163, 2006. http://dx.doi.org/10.1007/s00382-006-0126-8

- D.J. Lunt, A.M. Haywood, G.A. Schmidt, U. Salzmann, P.J. Valdes, and H.J. Dowsett, "Earth system sensitivity inferred from Pliocene modelling and data", Nature Geoscience, vol. 3, pp. 60-64, 2010. http://dx.doi.org/10.1038/NGEO706

- M. Pagani, K. Caldeira, D. Archer, and J.C. Zachos, "An Ancient Carbon Mystery", Science, vol. 314, pp. 1556-1557, 2006. http://dx.doi.org/10.1126/science.1136110

- M. Crucifix, "Does the Last Glacial Maximum constrain climate sensitivity?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL027137

- J.C. Hargreaves, A. Abe-Ouchi, and J.D. Annan, "Linking glacial and future climates through an ensemble of GCM simulations", Climate of the Past, vol. 3, pp. 77-87, 2007. http://dx.doi.org/10.5194/cp-3-77-2007

- J.D. Annan, J.C. Hargreaves, R. Ohgaito, A. Abe-Ouchi, and S. Emori, "Efficiently Constraining Climate Sensitivity with Ensembles of Paleoclimate Simulations", SOLA, vol. 1, pp. 181-184, 2005. http://dx.doi.org/10.2151/sola.2005-047

- T. Schneider von Deimling, A. Ganopolski, H. Held, and S. Rahmstorf, "How cold was the Last Glacial Maximum?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL026484

#150,

Their ~2.3C finding applies to a doubling from preindustrial, not to what happened in the past when CO2 was a feedback.

They found ~2.3C and not ~3C. That’s “the beef”. It’s not a big deal (see comments by the RC crew above).

This finding is not some kind of observation. You cannot determine sensitivity or convert from one type to another without a model.

Sensitivity is confusing. I’d rather not say more considering how excitable some commenters get when the limitations of the concept are brought up.

What was apparently said to that journalist is that a doubling from preindustrial would lead to a temperature difference commensurate with the difference between LGM and today. That’s all.

RW @ 142: The bottom line though is permanent ice melting or ice loss generally requires yearly averaged temperatures above 0C. Below 0C there just isn’t going to be any permanent or long term melting. Also, most of the ice on Greenland and Antarctica is located in areas where the yearly average temperature is already significantly below 0C – many areas multiple 10s of degrees below 0C.

Can you please explain how so much of the globe where mean annual temperatures are below 0C is not covered with ice year-round then? The following map (I know, it uses data from 1899, but we know any warming since then is all made up, don’t we?) seems to think that much of northern Canada and Siberia are below 0C (32F on the map), yet so much of that area does not have permanent snow/ice cover. Permafrost, yes, but even then the top metre of soil thaws each summer.

Global Temperature Map from 1899

Bob,

Here is a more recent map of global isotherms:

http://www.physicalgeography.net/fundamentals/7m.html

#151,

Thanks again. That seems to be the gist of it but something still seems to be backwards in his thinking. A doubling should have a greater effect than less than a doubling….

#154,

Naturally a doubling would have a greater effect than going from 180 to 280 ppm if everything else was the same.

But everything else isn’t the same. You shouldn’t assume there’s a simple relationship between atmospheric CO2 and temperatures.

The uncertainties and the ultimate effect of a stabilization at 560 ppm weren’t mentioned in the communication you linked to. I guess it could have mentioned for the sake of completeness that higher sensitivities are plausible and that feedbacks which were not anticipated or quantified in AR4 might cause further warming down the road.

But does it matter really? The communication was trying to convey the big picture. We don’t know exactly what emissions scenarios would lead to a stabilization at 560 ppm or what exactly the impacts of a given average temperature increase would be. There are uncertainties all over the place. But you’ve got to cut to the chase and give people a glimpse of the big picture anyway. Going into minutiae about sensitivity isn’t helpful. Journalists in general are not helpful.

Anonymous,

I would add that the sensitivity may not be a constant. While it may have been ~2.3 during the LGM, that is their calculation based on their inputs at the time. There were uncertainties in their calculations, just as there are uncertainties today. The sensitivity today could be higher or lower, and similarly for a future in which atmospheric CO2 levels are higher.

Journalists are only helpful when they have adequate knowledge about the field in which they are writing, which appears to be rather rare these days.