There is a new paper on Science Express that examines the constraints on climate sensitivity from looking at the last glacial maximum (LGM), around 21,000 years ago (Schmittner et al, 2011) (SEA). The headline number (2.3ºC) is a little lower than IPCC’s “best estimate” of 3ºC global warming for a doubling of CO2, but within the likely range (2-4.5ºC) of the last IPCC report. However, there are reasons to think that the result may well be biased low, and stated with rather more confidence than is warranted given the limitations of the study.

Climate sensitivity is a key characteristic of the climate system, since it tells us how much global warming to expect for a given forcing. It usually refers to how much surface warming would result from a doubling of CO2 in the atmosphere, but is actually a more general metric that gives a good indication of what any radiative forcing (from the sun, a change in surface albedo, aerosols etc.) would do to surface temperatures at equilibrium. It is something we have discussed a lot here (see here for a selection of posts).

Climate models inherently predict climate sensitivity, which results from the basic Planck feedback (the increase of infrared cooling with temperature) modified by various other feedbacks (mainly the water vapor, lapse rate, cloud and albedo feedbacks). But observational data can reveal how the climate system has responded to known forcings in the past, and hence give constraints on climate sensitivity. The IPCC AR4 (9.6: Observational Constraints on Climate Sensitivity) lists 13 studies (Table 9.3) that constrain climate sensitivity using various types of data, including two using LGM data. More have appeared since.

It is important to regard the LGM studies as just one set of points in the cloud yielded by other climate sensitivity estimates, but the LGM has been a frequent target because it was a period for which there is a lot of data from varied sources, climate was significantly different from today, and we have considerable information about the important drivers – like CO2, CH4, ice sheet extent, vegetation changes etc. Even as far back as Lorius et al. (1990), estimates of the mean temperature change and the net forcing were combined to give estimates of sensitivity of about 3ºC. More recently, Köhler et al. (2010) (KEA) used estimates of all the LGM forcings, and an estimate of the global mean temperature change, to constrain the sensitivity to 1.4-5.2ºC (5–95%), with a mean value of 2.4ºC. Another study using a joint model-data approach (Schneider von Deimling et al., 2006b) derived a range of 1.2 – 4.3ºC (5-95%). The SEA paper, with its range of 1.4 – 2.8ºC (5-95%), is merely the latest in a series of these studies.
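The logic behind the Lorius et al. and KEA style of estimate can be written as S = F_2x ΔT / ΔF, where F_2x is the forcing from doubled CO2, ΔT the LGM temperature change and ΔF the net LGM forcing. The Python sketch below propagates uncertainty in ΔT and ΔF into a sensitivity range by Monte Carlo sampling. It is only an illustration: the Gaussian inputs and the value F_2x = 3.7 W/m2 are assumptions, not the numbers used in any of these papers.

```python
import random
import statistics

F_2X = 3.7  # W/m^2, assumed forcing for doubled CO2

def sensitivity(delta_t, delta_f, f_2x=F_2X):
    """Equilibrium sensitivity (K per CO2 doubling) from a paired temperature change and forcing."""
    return f_2x * delta_t / delta_f

def sample_sensitivity(t_mean, t_sd, f_mean, f_sd, n=100_000, seed=1):
    """Propagate Gaussian uncertainty in LGM cooling and forcing into a median and 5-95% range."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        dt = rng.gauss(t_mean, t_sd)
        df = rng.gauss(f_mean, f_sd)
        if df < 0 and dt < 0:  # keep physically consistent draws: cooling driven by negative forcing
            samples.append(sensitivity(dt, df))
    samples.sort()
    lo = samples[int(0.05 * len(samples))]
    hi = samples[int(0.95 * len(samples))]
    return statistics.median(samples), lo, hi

# Hypothetical inputs for illustration only: cooling -4.0 +/- 1.0 K, forcing -6.5 +/- 1.5 W/m^2
median, lo, hi = sample_sensitivity(-4.0, 1.0, -6.5, 1.5)
print(f"median {median:.2f} K, 5-95% range {lo:.2f}-{hi:.2f} K")
```

Because S is a ratio, uncertainty in the forcing stretches the upper tail more than the lower one, which is one reason such ranges tend to be asymmetric about the central value.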

Definitions of sensitivity

The standard definition of climate sensitivity comes from the Charney report in 1979, where the response was defined as that of an atmospheric model with fixed boundary conditions (ice sheets, vegetation, atmospheric composition) but variable ocean temperatures, to 2xCO2. This has become a standard model metric (because it is relatively easy to calculate). It is not, however, the same thing as what would really happen to the climate with 2xCO2, because of course, those ‘fixed’ factors would not stay fixed.

Note, then, that the SEA definition of sensitivity includes feedbacks associated with vegetation, which was considered a forcing in the standard Charney definition. Thus for the sensitivity determined by SEA to be comparable to the others, one would need to know the forcing due to the modelled vegetation change. KEA estimated that LGM vegetation forcing was around -1.1+/-0.6 W/m2 (because of the loss of trees in polar latitudes, replacement of forests by savannah etc.), and if that was similar to the SEA modelled impact, their Charney sensitivity would be closer to 2ºC (down from 2.3ºC).
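The effect of moving vegetation from the feedback side to the forcing side follows from ΔT = S ΔF / F_2x. Holding the simulated LGM cooling fixed, counting an extra forcing ΔF_veg gives S_Charney = S × ΔF_other / (ΔF_other + ΔF_veg). In the sketch below the non-vegetation forcing of -7.0 W/m2 is a hypothetical value chosen for illustration; only the -1.1 W/m2 vegetation term comes from KEA.

```python
def charney_from_earth_system(s_veg_feedback, f_other, f_veg):
    """Rescale a sensitivity that treats vegetation as a feedback into one that counts
    vegetation change as a forcing, holding the simulated LGM cooling fixed.

    s_veg_feedback: sensitivity with vegetation as a feedback (K per CO2 doubling)
    f_other:        LGM forcing excluding vegetation (W/m^2, negative for cooling)
    f_veg:          vegetation forcing (W/m^2, negative for cooling)
    """
    # dT = S * F / F_2x: the same dT explained by a larger total forcing implies a smaller S
    return s_veg_feedback * f_other / (f_other + f_veg)

# f_other = -7.0 W/m^2 is hypothetical; f_veg = -1.1 W/m^2 is the KEA estimate quoted above
print(f"{charney_from_earth_system(2.3, -7.0, -1.1):.2f} K")
```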

Other studies have also expanded the scope of the sensitivity definition to include even more factors, a definition different enough to have its own name: the Earth System Sensitivity. Notably, both the Pliocene warm climate (Lunt et al., 2010), and the Paleocene-Eocene Thermal Maximum (Pagani et al., 2006), tend to support Earth System sensitivities higher than the Charney sensitivity.

Is sensitivity symmetric?

The first thing that must be recognized regarding all studies of this type is that it is unclear to what extent behavior in the LGM is a reliable guide to how much it will warm when CO2 is increased from its pre-industrial value. The LGM was a very different world from the present, involving considerable expansions of sea ice, massive Northern Hemisphere land ice sheets, geographically inhomogeneous dust radiative forcing, and a different ocean circulation. The relative contributions of the various feedbacks that make up climate sensitivity need not be the same going back to the LGM as in a world warming relative to the pre-industrial climate. The analysis in Crucifix (2006) indicates that there is not a good correlation between sensitivity on the LGM side and sensitivity to 2XCO2 in the selection of models he looked at.

There has been some other work to suggest that overall sensitivity to a cooling is a little less (80-90%) than sensitivity to a warming, for instance Hargreaves and Annan (2007), so the numbers of Schmittner et al. are less different from the “3ºC” number than they might at first appear. The factors that determine this asymmetry are various, involving ice albedo feedbacks, cloud feedbacks and other atmospheric processes, e.g., water vapor content increases approximately exponentially with temperature (Clausius-Clapeyron equation) so that the water vapor feedback gets stronger the warmer it is. In reality, the strength of feedbacks changes with temperature. Thus the complexity of the model being used needs to be assessed to see whether it is capable of addressing this.
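If the asymmetry is summarized by a single ratio r = S_cool / S_warm (a simplification; the studies cited derive it from model ensembles), a cooling-based estimate translates into a warming sensitivity of S_cool / r. A minimal Python sketch, using the 80-90% range quoted above:

```python
def warming_sensitivity(s_cool, ratio):
    """Convert a sensitivity inferred from a cooling (e.g. the LGM) into the implied
    sensitivity for warming, given ratio = S_cool / S_warm (assumed 0.8-0.9 here)."""
    if not 0 < ratio <= 1:
        raise ValueError("ratio should be in (0, 1]")
    return s_cool / ratio

for r in (0.8, 0.9):
    print(f"ratio {r}: {warming_sensitivity(2.3, r):.2f} K")
```

Applied to the 2.3ºC headline number, this range of ratios gives roughly 2.6-2.9ºC for warming.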

Does the model used adequately represent key climate feedbacks?

Typically, LGM constraints on climate sensitivity are obtained by producing a large ensemble of climate model versions where uncertain parameters are systematically varied, and then comparing the LGM simulations of all these models with “observed” LGM data, i.e. proxy data, by applying a statistical approach of one sort or another. It is noteworthy that very different models have been used for this: Annan et al. (2005) used an atmospheric GCM with a simple slab ocean, Schneider et al. (2006) the intermediate-complexity model CLIMBER-2 (with both ocean and atmosphere of intermediate complexity), while the new Schmittner et al. study uses an oceanic GCM coupled to a simple energy-balance atmosphere (UVic).
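The ensemble-weighting idea can be sketched with a toy "model" in which LGM cooling scales linearly with sensitivity, ΔT = S ΔF_LGM / F_2x. Each ensemble member is weighted by a Gaussian likelihood of the "observed" cooling, and the weighted members give a posterior estimate of S. Everything here is hypothetical (the forcing, the observed cooling and its error, the flat prior); real studies run full climate models and compare spatial patterns, not a single global number.

```python
import math

F_2X = 3.7    # W/m^2 per CO2 doubling (assumed)
F_LGM = -6.5  # W/m^2, hypothetical total LGM forcing

def simulated_cooling(s):
    """Toy stand-in for a climate model run: LGM cooling scales linearly with sensitivity."""
    return s * F_LGM / F_2X

def weight_ensemble(sensitivities, obs_cooling, obs_sigma):
    """Normalized Gaussian-likelihood weights of each member given the observed cooling."""
    weights = [math.exp(-0.5 * ((simulated_cooling(s) - obs_cooling) / obs_sigma) ** 2)
               for s in sensitivities]
    total = sum(weights)
    return [w / total for w in weights]

def weighted_quantile(values, weights, q):
    """Smallest value whose cumulative weight reaches q (values assumed sorted ascending)."""
    cum = 0.0
    for v, w in zip(values, weights):
        cum += w
        if cum >= q:
            return v
    return values[-1]

# Ensemble: sensitivity varied uniformly from 0.5 to 8 K (a flat prior)
ensemble = [0.5 + 0.01 * i for i in range(751)]
w = weight_ensemble(ensemble, obs_cooling=-4.0, obs_sigma=1.0)
mean = sum(s * wi for s, wi in zip(ensemble, w))
print(f"posterior mean {mean:.2f} K, 5-95%: "
      f"{weighted_quantile(ensemble, w, 0.05):.2f}-{weighted_quantile(ensemble, w, 0.95):.2f} K")
```

Because the toy model is linear and the prior flat, the posterior simply mirrors the observational error; structural model error, discussed below, does not enter at all.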

Each of these models has potentially serious limitations for this kind of study: UVic does not simulate the atmospheric feedbacks that determine climate sensitivity in more realistic models, but rather fixes the atmospheric part of the climate sensitivity as a prescribed model parameter (surface albedo, however, is internally computed). Hence, the dominant part of climate sensitivity remains the same, whether looking at 3ºC cooling or 3ºC warming. Slab oceans, on the other hand, do not allow for variations in ocean circulation, which was certainly important for the LGM, and other intermediate models have all made key assumptions that may impact these feedbacks. However, in view of the fact that cloud feedbacks are the dominant contribution to uncertainty in climate sensitivity, the fact that the energy balance model used by Schmittner et al. cannot compute changes in cloud radiative forcing is particularly serious.

Uncertainties in LGM proxy data

Perhaps the key difference between Schmittner et al. and some previous studies is their use of all available proxy data for the LGM, whilst other studies have selected a subset of proxy data that they deemed particularly reliable (e.g., in Schneider et al. SST data from the tropical Atlantic, Greenland and Antarctic ice cores and some tropical land temperatures). Uncertainties of the proxy data (and the question of knowing what these uncertainties are) are crucial in this kind of study. A well-known issue with LGM proxies is that the most abundant type of proxy data, using the species composition of tiny marine organisms called foraminifera, probably underestimates sea surface cooling over vast stretches of the tropical oceans; other methods like alkenone and Mg/Ca ratios give colder temperatures (but aren’t all coherent either). It is clear that this data issue makes a large difference in the sensitivity obtained.

The Schneider et al. ensemble constrained by their selection of LGM data gives a global-mean cooling range during the LGM of 5.8 +/- 1.4ºC (Schneider von Deimling et al., 2006), while the best fit from the UVic model used in the new paper has 3.5ºC cooling, well outside this range (weighted average calculated from the online data; a slightly different number is stated in Nathan Urban’s interview – not sure why).

Curiously, the mean SEA estimate (2.4ºC) is identical to the mean KEA number, but there is a big difference in what they concluded the mean temperature at the LGM was, and a small difference in how they defined sensitivity. Thus the estimates of the forcings must be proportionately less as well. The differences are that the UVic model has a smaller forcing from the ice sheets, possibly because of an insufficiently steep lapse rate (5ºC/km instead of a steeper value that would be more typical of drier polar regions), and also a smaller change from increased dust.
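Both points follow from simple energy-balance arithmetic: for a given sensitivity S, the forcing implied by a cooling ΔT is F_2x ΔT / S, so a smaller cooling requires a proportionately smaller forcing; and the surface cooling over an ice sheet due to its elevation alone scales with the lapse rate. A sketch in Python, where F_2x = 3.7 W/m2, the ice-sheet height (3 km) and the steeper lapse rate (8ºC/km) are hypothetical, and the two coolings (3.5ºC and 5.8ºC) are taken from the text purely as illustrative inputs:

```python
F_2X = 3.7  # W/m^2 per CO2 doubling (assumed)

def implied_forcing(delta_t, s, f_2x=F_2X):
    """Forcing (W/m^2) needed for a cooling delta_t (K) given sensitivity s (K per doubling)."""
    return f_2x * delta_t / s

def elevation_cooling(ice_height_m, lapse_rate_k_per_km):
    """Surface cooling (K) from raising the surface onto an ice sheet of the given height."""
    return lapse_rate_k_per_km * ice_height_m / 1000.0

# Same sensitivity (2.4 K), two illustrative LGM coolings
for dt in (-3.5, -5.8):
    print(f"dT = {dt} K -> forcing {implied_forcing(dt, 2.4):.1f} W/m^2")

# Hypothetical 3 km ice sheet: a 5 K/km lapse rate versus a steeper 8 K/km
print(elevation_cooling(3000, 5.0), elevation_cooling(3000, 8.0))
```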

Model-data comparisons

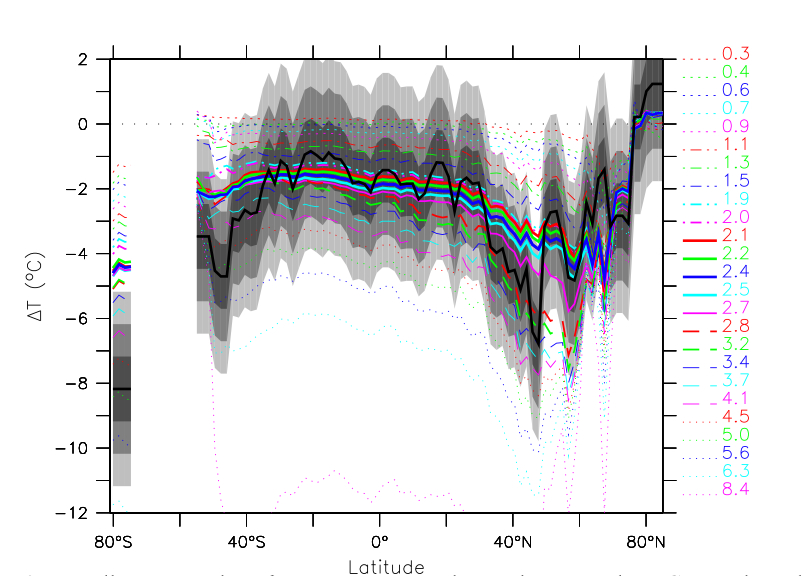

So there is a significant difference in the headline results from SEA compared to previous results. As we mentioned above though, there are reasons to think that their result is biased low. There are two main issues here. First, the constraint to a lower sensitivity is dominated by the ocean data – if the fit is made to the land data alone, the sensitivity would be substantially higher (though with higher uncertainty). The best fit for all the data underpredicts the land temperatures significantly.

However, even in the ocean the fit to the data is not that good in many regions, particularly the Southern Ocean and Antarctica, but also in the Northern mid-latitudes. This occurs because the tropical ocean data weigh more heavily in the assessment than the sparser and possibly less accurate polar and mid-latitude data. Thus there is a mismatch between the pattern of cooling produced by the model and the pattern inferred from the real world. This could be because of structural deficiencies in the model, or because of errors in the data, but the (hard to characterise) uncertainty in the former is not being carried into the final uncertainty estimate. None of the different model versions here seem to get the large polar amplification of change seen in the data, for instance.
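The weighting effect can be illustrated with a weighted least-squares fit of a single scale factor (a stand-in for varying sensitivity) to synthetic data. The numbers are invented: many precise tropical points that match the model pattern, and a few noisier polar points that show twice the model's polar amplification. The fit is pulled toward the tropical value, and no choice of scale factor removes the polar misfit, because the mismatch is in the pattern, not the amplitude.

```python
def fit_scale(pattern, data, sigma):
    """Weighted least-squares k minimizing sum(((data - k * pattern) / sigma) ** 2)."""
    num = sum(p * d / s ** 2 for p, d, s in zip(pattern, data, sigma))
    den = sum(p * p / s ** 2 for p, s in zip(pattern, sigma))
    return num / den

# Synthetic example (not real proxies): 40 tropical points and 4 polar points.
# Model cooling pattern per unit scale: 2 K in the tropics, 6 K at high latitudes.
pattern = [2.0] * 40 + [6.0] * 4
# "Observed" cooling: tropics match k = 1, poles show twice the model's amplification.
data = [2.0] * 40 + [12.0] * 4
sigma = [1.0] * 40 + [2.0] * 4  # polar proxies assumed noisier

k = fit_scale(pattern, data, sigma)
polar_residual = 12.0 - k * 6.0
print(f"best-fit scale {k:.2f}; remaining polar misfit {polar_residual:.1f} K")
```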

Response and media coverage

All in all, this is an interesting paper and methodology, though we think it slightly underestimates the most likely sensitivity, and rather more seriously underestimates the chances that the sensitivity lies at the upper end of the IPCC range. Some other commentaries have come to similar conclusions: James Annan (here and here), and there is an excellent interview with Nathan Urban here, which discusses the caveats clearly. The perspective piece from Gabi Hegerl is also worth reading.

Unfortunately, the media coverage has not been very good. Partly, this is related to some ambiguous statements by the authors, and partly because media discussions of climate sensitivity have a history of being poorly done. The dominant frame was set by the press release, which made a point of suggesting that this result made “extreme predictions” unlikely. This is fair enough, but had already been clear from the previous work discussed above. This was transformed into “Climate sensitivity was ‘overestimated’” by the BBC (not really a valid statement about the state of the science), compounded by the quote that Andreas Schmittner gave that “this implies that the effect of CO2 on climate is less than previously thought”. Who had previously thought what was left to the readers’ imagination. Indeed, the latter quote also prompted the predictably loony IBD editorial board to declare that this result proves that climate science is a fraud (though this is not Schmittner’s fault – they conclude the same thing every other Tuesday).

The Schmittner et al. analysis marks the insensitive end of the spectrum of climate sensitivity estimates based on LGM data, in large measure because it used a data set and a weighting that may well be biased toward insufficient cooling. Unfortunately, in reporting new scientific studies a common fallacy is to implicitly assume a new study is automatically “better” than previous work and supersedes this. In this case one can’t blame the media, since the authors’ press release cites Schmittner saying that “the effect of CO2 on climate is less than previously thought”. It would have been more appropriate to say something like “our estimate of the effect is less than many previous estimates”.

Implications

It is not all that earthshaking that the numbers in Schmittner et al come in a little low: the 2.3ºC is well within previously accepted uncertainty, and three of the IPCC AR4 models used for future projections have a climate sensitivity of 2.3ºC or lower, so that the range of IPCC projections already encompasses this possibility. (Hence there would be very little policy relevance to this result even if it were true, though note the small difference in definitions of sensitivity mentioned above).

What is more surprising is the small uncertainty interval given by this paper, and this is probably simply due to the fact that not all relevant uncertainties in the forcing, the proxy temperatures and the model have been included here. In view of these shortcomings, the confidence with which the authors essentially rule out the upper end of the IPCC sensitivity range is, in our view, unwarranted.

Be that as it may, all these studies, despite the large variety in data used, model structure and approach, have one thing in common: without the role of CO2 as a greenhouse gas, i.e. the cooling effect of the lower glacial CO2 concentration, the ice age climate cannot be explained. The result, in common with many previous studies, actually goes considerably further than that. The LGM cooling is plainly incompatible with the existence of a strongly stabilizing feedback such as the oft-quoted Lindzen’s Iris mechanism. It is even incompatible with the low climate sensitivities you would get in a so-called ‘no-feedback’ response (i.e. just the Planck feedback – apologies for the terminological confusion).
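For reference, the simplest "no-feedback" estimate treats the Earth as a blackbody emitting at about 255 K, so the Planck restoring strength is λ0 = 4σT^3. The sketch below computes it, the resulting warming for 2xCO2 (assuming F_2x = 3.7 W/m2), and the no-feedback cooling for a hypothetical LGM forcing of -6.5 W/m2. GCM-based estimates of the Planck response are somewhat smaller (around 3.2 W/m2/K, or roughly 1.2ºC per doubling), but for forcings of this size the no-feedback cooling stays well below the 3.5-5.8ºC LGM coolings discussed above.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
F_2X = 3.7              # W/m^2 per CO2 doubling (assumed)

def planck_feedback(t_emit=255.0):
    """Blackbody (Planck-only) restoring strength 4*sigma*T^3 at emission temperature t_emit (K)."""
    return 4.0 * SIGMA * t_emit ** 3

lam0 = planck_feedback()
print(f"lambda_0 = {lam0:.2f} W/m^2/K")
print(f"no-feedback warming for 2xCO2: {F_2X / lam0:.2f} K")
# Hypothetical LGM forcing, for illustration only
print(f"no-feedback cooling for -6.5 W/m^2: {-6.5 / lam0:.2f} K")
```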

It bears noting that even if the SEA mean estimate were correct, it still lies well above the ever-more implausible estimates of those that wish the climate sensitivity were negligible. And that means that the implications for policy remain the same as they always were. Indeed, if one accepts a very liberal risk level of 50% for mean global warming of 2°C (the guiderail widely adopted) since the start of the industrial age, then under midrange IPCC climate sensitivity estimates we have around 30 years before the risk level is exceeded. Specifically, to reach that probability level, we can burn a total of about one trillion metric tonnes of carbon. That gives us about 24 years at current growth rates (about 3%/year). Since warming is proportional to cumulative carbon, if the climate sensitivity were really as low as Schmittner et al. estimate, then another 500 GtC would take us to the same risk level, some 11 years later.
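The "years remaining" arithmetic is a cumulative sum of emissions growing at a fixed rate: E0((1+g)^n - 1)/g = B, which gives n = ln(1 + Bg/E0) / ln(1+g). The post does not state its assumed current emission rate or how much of the trillion tonnes has already been emitted, so the inputs below (450 GtC remaining, 8 or 10 GtC/yr) are hypothetical; the point is how strongly the answer depends on them.

```python
import math

def years_to_budget(remaining_gtc, current_gtc_per_yr, growth=0.03):
    """Years until emissions growing at `growth` per year use up the remaining budget.

    Solves E0 * ((1 + g) ** n - 1) / g = B for n (treated as continuous for simplicity).
    """
    return math.log(1.0 + remaining_gtc * growth / current_gtc_per_yr) / math.log(1.0 + growth)

# Hypothetical inputs (not from the post): ~450 GtC of the 1000 GtC budget left
for e0 in (8.0, 10.0):
    print(f"start at {e0} GtC/yr: budget used in {years_to_budget(450, e0):.0f} years")
```

Since warming scales with cumulative carbon, a lower sensitivity corresponds to a larger remaining budget, which can be explored by increasing `remaining_gtc`.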

References

- A. Schmittner, N.M. Urban, J.D. Shakun, N.M. Mahowald, P.U. Clark, P.J. Bartlein, A.C. Mix, and A. Rosell-Melé, "Climate Sensitivity Estimated from Temperature Reconstructions of the Last Glacial Maximum", Science, vol. 334, pp. 1385-1388, 2011. http://dx.doi.org/10.1126/science.1203513

- C. Lorius, J. Jouzel, D. Raynaud, J. Hansen, and H.L. Treut, "The ice-core record: climate sensitivity and future greenhouse warming", Nature, vol. 347, pp. 139-145, 1990. http://dx.doi.org/10.1038/347139a0

- P. Köhler, R. Bintanja, H. Fischer, F. Joos, R. Knutti, G. Lohmann, and V. Masson-Delmotte, "What caused Earth's temperature variations during the last 800,000 years? Data-based evidence on radiative forcing and constraints on climate sensitivity", Quaternary Science Reviews, vol. 29, pp. 129-145, 2010. http://dx.doi.org/10.1016/j.quascirev.2009.09.026

- T. Schneider von Deimling, H. Held, A. Ganopolski, and S. Rahmstorf, "Climate sensitivity estimated from ensemble simulations of glacial climate", Climate Dynamics, vol. 27, pp. 149-163, 2006. http://dx.doi.org/10.1007/s00382-006-0126-8

- D.J. Lunt, A.M. Haywood, G.A. Schmidt, U. Salzmann, P.J. Valdes, and H.J. Dowsett, "Earth system sensitivity inferred from Pliocene modelling and data", Nature Geoscience, vol. 3, pp. 60-64, 2010. http://dx.doi.org/10.1038/NGEO706

- M. Pagani, K. Caldeira, D. Archer, and J.C. Zachos, "An Ancient Carbon Mystery", Science, vol. 314, pp. 1556-1557, 2006. http://dx.doi.org/10.1126/science.1136110

- M. Crucifix, "Does the Last Glacial Maximum constrain climate sensitivity?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL027137

- J.C. Hargreaves, A. Abe-Ouchi, and J.D. Annan, "Linking glacial and future climates through an ensemble of GCM simulations", Climate of the Past, vol. 3, pp. 77-87, 2007. http://dx.doi.org/10.5194/cp-3-77-2007

- J.D. Annan, J.C. Hargreaves, R. Ohgaito, A. Abe-Ouchi, and S. Emori, "Efficiently Constraining Climate Sensitivity with Ensembles of Paleoclimate Simulations", SOLA, vol. 1, pp. 181-184, 2005. http://dx.doi.org/10.2151/sola.2005-047

- T. Schneider von Deimling, A. Ganopolski, H. Held, and S. Rahmstorf, "How cold was the Last Glacial Maximum?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL026484

and stated with rather more confidence than is warranted given the limitations of the study.

That’s a good observation, especially considering that there is no evidence relative to the question of whether “the climate sensitivity” is even constant.

[Response: By “constant” I believe you are referring to the issue of asymmetry between climate sensitivity that best fits cooling vs. warming climates. If so, you are right that this is one of the key issues, as we noted in the post. The MARGO LGM reconstruction team made a similar remark in their Nature Geoscience paper on the implications of their reconstruction. They state a 2xCO2 climate sensitivity range of 1. to 3.6 C, and that’s not even taking into account the possibility that MARGO underestimates LGM cooling by under-weighting modern proxies like Mg/Ca. What odds do you want to put on Mg/Ca being more likely to be right than foram assemblages?–raypierre]

Anonymous,

CO2 absorption and degassing occur separately. Cold, polar waters constantly absorb CO2, sink as it becomes more dense, and is transported to the equatorial waters via the ThermoHaline and outgases in the warmer waters of the Indian and Pacific Oceans. The colder, polar waters have a ~3x higher CO2 solubility than the warmer, equatorial waters. There is a debate as to how long the THC takes to complete this absorption/degassing process, with the Vostok data indicating that it is on the order of a millennium.

The CO2 solubility change due to the increase in ocean temperatures is small compared to the change in the atmospheric concentration. Therefore, the concentration gradient may be increasing the rate of absorption much faster than the warmer, equatorial waters are outgassing.

This is just an over-simplification of a very complex interaction.
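The solubility contrast can be roughly checked with Henry's law and a van't Hoff temperature dependence. This sketch assumes approximate fresh-water constants for CO2 (about 0.034 mol/(L·atm) at 25ºC and a temperature coefficient of about 2400 K, typical of standard Henry's-law compilations); seawater solubility is somewhat lower and is usually computed with empirical fits, so treat the result only as an order-of-magnitude check on the "~3x" figure.

```python
import math

KH_298 = 0.034    # mol/(L*atm), approximate CO2 Henry's-law solubility in fresh water at 298.15 K
VANT_HOFF = 2400  # K, approximate temperature-dependence constant for CO2

def co2_solubility(t_kelvin):
    """Henry's-law solubility of CO2 in fresh water via the van't Hoff relation."""
    return KH_298 * math.exp(VANT_HOFF * (1.0 / t_kelvin - 1.0 / 298.15))

cold, warm = co2_solubility(273.15), co2_solubility(303.15)
print(f"0 C vs 30 C solubility ratio: {cold / warm:.1f}")
```

With these constants the 0ºC to 30ºC ratio comes out between 2 and 3.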

Isotopious,

I really do not know what you are trying to “challenge.” If you want a tutorial into how climate scientists think about feedbacks, a good article to read is Roe, 2009. It takes nothing more than a little multi-variable calculus and algebra to fully digest, and you should be able to fully understand why positive feedback doesn’t imply some sort of runaway warming or cooling. You can think of the widely cited 1/(1-f) feedback dependence as a sort of converging series, though I’m not so sure that is the best place to start for intuition.

Climate sensitivity can be thought of as the inverse of the slope of a line relating the net top-of-atmosphere energy balance to the surface temperature. For low climate sensitivity, the outgoing radiation is a stronger function of temperature; for a higher sensitivity, the response of the surface temperature is “more sluggish” and must rise more to accommodate the same change in IR emission that is necessary to come to balance. Water vapor makes the outgoing radiation less sensitive (more linear) than σT^4, but the system can still equilibrate at a higher temperature due to the Planck restoring effect winning out in the end. The water vapor just makes the Planck response less effective, so you need a higher temperature change for the same perturbation than in a no feedback case.
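The "inverse slope" picture corresponds to a linear energy balance, N = F - λΔT, where N is the net top-of-atmosphere imbalance and λ the slope. Setting N = 0 gives ΔT = F/λ, so the sensitivity to doubled CO2 is F_2x/λ. A minimal sketch, with illustrative values of λ (the largest roughly a Planck-only case, the smallest a strongly amplified one) and F_2x = 3.7 W/m2 assumed:

```python
F_2X = 3.7  # W/m^2 per CO2 doubling (assumed)

def toa_imbalance(forcing, feedback_param, delta_t):
    """Net downward TOA flux N = F - lambda * dT in a linear energy-balance model."""
    return forcing - feedback_param * delta_t

def equilibrium_warming(forcing, feedback_param):
    """Temperature change at which the TOA imbalance N returns to zero."""
    return forcing / feedback_param

for lam in (3.2, 1.8, 1.2):  # illustrative feedback parameters, W/m^2/K
    print(f"lambda = {lam} -> sensitivity {equilibrium_warming(F_2X, lam):.2f} K")
```

A shallower slope (smaller λ) means the surface must warm more before the outgoing radiation balances the same forcing.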

The primary limit to the pressure of a vapor in equilibrium with a liquid (or solid) at a given T is governed by the Clausius-Clapeyron equation; the vapor pressure is a rapidly increasing function of temperature, and the T dependence is determined by the magnitude of the latent heat of vaporization. This imposes a strong constraint on the ability of water vapor to grow in the atmosphere, although there are also dynamical processes which keep the atmosphere unsaturated that are important. It is of course possible that the increase in greenhouse effect overwhelms the tendency for increased vapor pressure to result in saturation, and this is what happens in the runaway greenhouse; in this limit, the vapor pressure relates the optical depth to the radiating temperature of the moist troposphere, and the surface OLR no longer contributes to the planetary OLR. The water vapor feedback overwhelms the Planck response and, provided you have enough solar insolation, equilibrium is never established until the water vapor feedback is terminated (i.e., when the oceans are gone).
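Integrating the Clausius-Clapeyron equation with a constant latent heat L gives e_s(T) = e_0 exp[(L/R_v)(1/T_0 - 1/T)], so the fractional increase per kelvin is L/(R_v T^2): a larger L means a stronger temperature dependence. The sketch below uses standard approximate constants (L = 2.5×10^6 J/kg, R_v = 461.5 J/(kg·K), e_0 = 611 Pa at 273.15 K); treating L as constant is itself an approximation.

```python
import math

L_V = 2.5e6             # J/kg, latent heat of vaporization (approximate, near 0 C)
R_V = 461.5             # J/(kg K), gas constant for water vapor
E0, T0 = 611.0, 273.15  # reference saturation vapor pressure (Pa) and temperature (K)

def saturation_vapor_pressure(t_kelvin, latent_heat=L_V):
    """Integrated Clausius-Clapeyron relation, assuming a constant latent heat."""
    return E0 * math.exp(latent_heat / R_V * (1.0 / T0 - 1.0 / t_kelvin))

for t in (255.0, 273.15, 288.0, 300.0):
    es = saturation_vapor_pressure(t)
    pct_per_k = 100.0 * L_V / (R_V * t * t)  # d(ln e_s)/dT, in percent per K
    print(f"T = {t:6.2f} K: e_s = {es:7.1f} Pa, +{pct_per_k:.1f}% per K")
```

The fractional rate (roughly 6-7% per K near typical surface temperatures) falls slightly with warming, while the absolute increase in e_s per kelvin grows.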

@102 & raypierre,

Constant climate sensitivity or not: in cooling vs warming periods, but also in more or less glaciated periods, I suppose?

Deglaciation seems to be a much faster process than glaciation, and depending on the amount of ice on the planet these processes may be slower or faster, if I understand Hansen correctly on this. How much scientific agreement or discussion is there on this point and what could be the full range of climate sensitivities for different periods, approximately?

Thanks Chris,

So the reference system climate sensitivity parameter is based on a negative feedback due to Stefan’s law. As Roe says in the paper ‘However, it should be borne in mind that, in so choosing, the feedback factor becomes dependent on the reference-system sensitivity parameter’. So the feedback loop takes some ‘fraction’ of output and feeds it back into the input.

I suppose the point I was trying to make is that even though water vapor is condensable, once in place, it is essentially a forcing. It may only last for 9 days, but that in my opinion would be sufficient time for it to be stable, since its concentration is determined by heat in the system (you mentioned the remarkable latent heat of water, so the effect of the ocean heat content would provide plenty of stability).

I guess an event such as Pinatubo may provide an insight into what I have suggested. The water vapour would have dropped a fair bit, then bounced back soon after. Is that bounce back due to non-condensable GHGs or thermal inertia?

I would suggest it is due to the latter (for obvious reasons). So this poses a potential problem for CO2 – water vapor feedback theory. It is entirely possible that water vapor feedback has very little to do with non-condensable GHGs.

That’s the challenge.

Steinar Midtskogen (#97),

“when we can use observations to say something about the sensitivity (even only its lower limit). IPCC seem to say that we can already”

That’s also my understanding.

“Also a bit puzzling is the IPCC statement “values substantially higher than 4.5 °C cannot be excluded, but agreement of models with observations is not as good for those values”. Since equilibrium could take a very long time, I would think that the observations (the instrument record) would say very little about the upper limit.”

Perhaps you should refer to section 9.6.2 of the IPCC’s AR4 (WG1).

There is more to observations than the global warming in the instrumental record.

What this is saying I think is that no one had managed at that point to build a physics-based model which produces a very high sensitivity while agreeing well with observations such as the effect of the Pinatubo eruption.

But IANAC so…

Isotopious,

Huh? Re-read what people have said. You aren’t making much sense and trying to decipher what you are saying is troublesome, and I suspect at this point you’re just playing games.

Once again, the water vapor feedback responds to temperature, not *specifically* to CO2. In the case of a volcanic eruption, it’s the sulfate aerosols injected into the stratosphere that provide the large cooling, and after they are removed (over a timescale of a few years), conditions return to near their original state (as it happens, Brian Soden has a paper validating the WV effect after the Pinatubo eruption here). This has nothing to do with thermal inertia, except that the response would have been even stronger on a planet with no buffering capability, as occurs with Martian dust storms for example. The instantaneous radiative forcing was comparable to that of a doubling of CO2, except in the cooling direction, but was very short-lived.

If CO2 were only a minor part of the terrestrial greenhouse effect, the influence on temperature may not be severe enough to trigger the snowball scenario. This wouldn’t work with N2O or methane for example. But in the case of CO2, which is a rather sizable portion of the greenhouse effect, the temperatures would be cold enough to trigger the scenario raypierre discussed (in the work of Aiko Voigt; he also discusses this in his 2007 general circulation paper, and more recently was discussed in the Lacis et al 2010 paper in Science).

Ocean currents that may carry large amounts of heat are not calculated into the GCM, and thus we do not have a good estimate of the rate of energy transfer at the boundaries of specific sea-floor methane systems. Recent methane measurements at Terceira Island, Azores, Portugal and Tae-ahn Peninsula, Republic of Korea (See http://www.esrl.noaa.gov/gmd/dv/iadv/index.php ) in the context of outlier data points over the last decade at sites such as Storhofdi, Vestmannaeyjar, Iceland, and reports of methane releases from the Arctic seabed, tell us that at current levels of AGW, the Earth’s sea-floor methane systems are not stable. Add in CO2/CH4 from Arctic and tropical wetlands along with carbon from deforestation, and the assumption that carbon feed-backs can be ignored as we estimate future conditions is wrong. Any policy based on projections made without including all feed-backs will be flawed.

Thus, short term climate sensitivity is moot. We now face long term climate sensitivity for the coal that we burned 50 or 100 years ago. Long term climate sensitivity is twice (or three times?) the short term value.

The last paragraph in the post on risk should have made an honest estimate of all warming as a result of applying all feedbacks to all greenhouse gases in the atmosphere. You may not be able to “prove” such an honest estimate, but it is more likely to be correct than a value based on some estimate of short term climate sensitivity. Models using short term climate sensitivity for carbon that we released a century ago do not provide a realistic estimate of what can be reasonably expected over the next 24 to 35 years. (i.e., Total feed-backs for anthropogenic carbon in the atmosphere for a period of 135 years or more.)

Use of long term climate sensitivity also changes the cost (and time for depreciation) of releasing more carbon. Facing (and planning for) the full impact of long term climate sensitivity is the rational risk management approach.

Dan H.: “Cold, polar waters constantly absorb CO2, sink as it becomes more dense, and is transported to the equatorial waters via the ThermoHaline and outgases in the warmer waters of the Indian and Pacific Oceans.”

This is precisely why warming in the poles is of such concern–there’s a whole helluva lot of carbon sequestered up there…in oceans, permafrost, clathrates…

And some human activities had no parallel in the paleo record:

GEOPHYSICAL RESEARCH LETTERS, doi:10.1029/2011GL049784

Arctic winter 2010/2011 at the brink of an ozone hole

“… severe ozone depletion like in 2010/2011 or even worse could appear for cold Arctic winters over the next decades if the observed tendency for cold Arctic winters to become colder continues into the future….”

http://www.agu.org/pubs/crossref/pip/2011GL049784.shtml

GEOPHYSICAL RESEARCH LETTERS, doi:10.1029/2011GL049761

Stratospheric heating by potential geoengineering aerosols

Geoengineering aerosols change stratospheric radiative heating rates

Heating rates depend on aerosol species and size

Lennart van der Linde (#104),

Yes, a different planet should have a different sensitivity.

Traditionally, the definition of climate sensitivity excludes changes in ice cover over land among other things. The temperature variations across glacial cycles are obviously larger than what can be attributed to changes in atmospheric CO2.

So the actual (very) long term warming should be higher than what you would get from sensitivity alone.

Chris,

Please note, I have not provided any mechanism for system change (i.e. I have not provided a substitute theory).

All I am highlighting is the possibility that water vapor feedback may have very little to do with non-condensable GHGs. The argument that water vapour is a feedback, rather than a forcing, seems to rest on the fact that it is condensable within a few days, rather than 100’s of years like CO2.

In a hypothetical carbon feedback, a positive feedback amplifies the signal (CO2), by releasing more carbon from a sink. In this case it is possible, for the CO2 attributed to feedback, to then become as large a signal as the initial signal. Now we have doubled the signal. In this case, simply reversing the initial perturbation back to zero will not bring the system back to its initial state.

I am arguing that there is no difference between CO2 and water vapour in this regard. That one is non-condensing makes little difference for the simple fact that the heat in the entire climate system is enormous. I disagree with the Soden paper you linked to. The water vapour responds to the temperature, not the CO2. Comparing the heat contribution of CO2, with the heat in the entire climate system on any time scale, is like comparing an elephant with an ant.

Isotopious, Do you know that the southerners among us are right this moment reading your post and saying, “Bless his heart.”

Dude, water vapor is determined by temperature–it is therefore a feedback. CO2, when it is released thermally, is a feedback. When CO2 is varied independently of temperature, it is a forcing.

Isotopious — I admire your good natured persistence, but former students of mine control elephants with ants all the time.

First of all, simplify by imagining a box with orbital forcing as input and an (unobservable) temperature as output; the water vapor feedback is inside and is effectively instantaneous on the scale of millennia appropriate for considering ice age variations. Call that output temperature a signal which goes into a box with the CO2 feedback; the output of this second box is the observable temperature from paleoclimate proxies.

Considering just the second box, suppose the signal s=1 and the amplifying feedback from CO2 is f=1/2. Then the response becomes 1+1/2, except that the new 1/2 also feeds back, giving another 1/4, and so on; we have a response

r = 1 + 1/2 + 1/4 + 1/8 + … = 2

and the general form (in this simple situation) is r = s/(1-f) for values of f less than 1. This form holds whether the signal is positive or negative. In the case of f=1/2 we see that the response is twice the signal. [That is approximately the correct value for ice age variations once water vapor is treated properly, which I haven’t done.]
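A minimal Python sketch of the second-box arithmetic above, assuming only what the comment states: a signal s passed repeatedly through a single linear feedback of strength f. The function names are illustrative and not from any package. It sums the series term by term and compares the result with the closed form r = s/(1-f), for both a positive and a negative signal.

```python
def feedback_series(signal: float, f: float, n_terms: int = 60) -> float:
    """Truncated sum s + s*f + s*f**2 + ... (one term per pass through the feedback)."""
    if not 0.0 <= f < 1.0:
        raise ValueError("this sketch assumes an amplifying feedback with 0 <= f < 1")
    return sum(signal * f**k for k in range(n_terms))


def closed_form(signal: float, f: float) -> float:
    """Limit of the series: r = s / (1 - f)."""
    return signal / (1.0 - f)


# The comment's example: s = 1 and f = 1/2, then the same with a negative signal.
for s in (1.0, -1.0):
    print(s, feedback_series(s, 0.5), closed_form(s, 0.5))
```

With f=1/2 both routes give a response of 2 for s=1 and -2 for s=-1, so the sign of the signal does not change the amplification. As f approaches 1 the sum grows without bound, which is the "blow up" mentioned later in the thread.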

So yes, the system response to orbital forcing is about double the ‘raw’ value. But as Ray Ladbury points out, there is another way to perturb the system: add more atmospheric CO2 from a previously untapped reservoir. Charney (equilibrium) climate sensitivity is a (partial) indication of the system response to instantly doubling CO2. The goal of the paper under review, as I take it, is an attempt to put an upper bound on the Charney climate sensitivity by considering the LGM paleoclimate.

Isotopious – Einstein? Galileo? Or Bozo the Clown?

You decide …

Hey, dude …

Climate scientists will be stunned, stunned I say, to learn this!

(actually, the only reason you know this is that this is exactly what atmospheric physics tells us. Trivially, you’re right, except you’re in total denial over the fact that CO2 does raise the temperature.)

I suggest RC should do a whole article on sensitivity, all the way down to zero CO2 and all the way up to 2000 ppm. How logarithmic does it stay? You might need 5-dimensional graph paper.

Or comparing an elephant with LSD …

And died.

0.00065477292 pounds killed a 7000 pound bull elephant.

Apparently ants weigh something like 0.1 mg, i.e. 1/2970th of the weight of LSD in this case.

What makes you think that magnitude comparisons of this sort are particularly useful?

Perhaps this is the right place to mention a new paper:

Pagani et al

The Role of Carbon Dioxide During the Onset of Antarctic Glaciation

http://www.sciencemag.org/content/334/6060/1261

David Benson. Going off these calculations, how much did CO2 change (in ppm) during the ice cycles?

100ppm

What is the radiative perturbation to the system without feedback?

0.6 deg C

What is the radiative perturbation to the system with feedback (f =0.67)?

1.8 deg C
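The 0.6 to 1.8 step can be checked with the same r = s/(1-f) gain from David Benson's two-box comment. This is a sketch that takes the commenter's 0.6 deg C no-feedback figure and f = 0.67 as given; neither number is checked here, and the function name is illustrative.

```python
def amplified_response(no_feedback_c: float, f: float) -> float:
    """Equilibrium response r = s / (1 - f) for a single linear feedback f < 1."""
    if f >= 1.0:
        raise ValueError("feedback f must be below 1 for a finite response")
    return no_feedback_c / (1.0 - f)


gain = 1.0 / (1.0 - 0.67)                 # roughly 3
response = amplified_response(0.6, 0.67)  # roughly 1.8 deg C
print(f"gain {gain:.2f}, response {response:.2f} deg C")
```

A gain of about 3 is what turns 0.6 into roughly 1.8; the arithmetic says nothing about whether 0.6 deg C is the right no-feedback figure for the glacial CO2 change.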

I can understand when RC thinks this current study underestimates climate sensitivity.

“Comparing the heat contribution of CO2, with the heat in the entire climate system on any time scale, is like comparing an elephant with an ant.”

Or, in other words, “Oooh! Look how small it is!”

Yeah. I’ve had years’ worth of conversation now in which “Oooh! Look how small it is!” (repeated incessantly in various forms) was pretty much the whole contribution from the ‘skeptic’ in question. Maybe I’m a bit jaded, but this seems one of the more foolish hand-wavings to me at this point.

At least, it’s a very poor substitute for actual attempts to quantify properly. You know, like this paper we’re discussing tries to do.

Isotopious seems to be repeating a meme that is gaining popularity amongst the less conspiracy-theory minded denialati–namely that the greenhouse effect is water vapor all the way down, and that CO2 “is a weak greenhouse gas”.

This “theory” at least has the merit that the warming produced would look like greenhouse warming. However, it would utterly fail to explain why the stratosphere is cooling (not much water vapor there). It also utterly ignores the transient nature of water vapor.

Anyone have any idea which denialist site this latest rebunking started on?

My understanding, Ray, is that an increase in water vapor in the troposphere will cause cooling in the stratosphere. Just another correlation fallacy.

[Response: Based on what? Increases in local stratospheric water vapour cool the stratosphere (e.g. Oinas et al, 2005), but I’m not aware of any study indicating that tropospheric water vapour increases have the same effect. Perhaps I’ve missed it? – gavin]

CO2 is a GHG and plays a role in warming, and as for the greenhouse effect of 30-odd deg C, estimates made by Gavin here on Real Climate are probably spot on: about 25% CO2, etc., and about 75% water vapor and clouds. That would suggest CO2 is far from ‘weak’; however, I would suggest that it is no more important than water vapor in the role it plays in past ice cycles (look at the numbers!). CO2 is no more a feedback than water vapor; look at the ice core data.

[Response: CO2/GHG changes add about 40% to LGM cooling, water vapour feedback adds about 60%, so they are comparable in size – and both large! – gavin]

The desire to attribute water vapor to CO2 is easily understood from the denialist point of view. Like any good competitor, you need to knock out the competition, propose a joint venture.

Unfortunately, you can’t have it both ways. A stronger feedback is not plausible, it will blow up. And the IPCC estimate of around 3 deg C is what I used to change 0.6 into 1.8. But even that number is suspect since there is no proof that water vapor needs CO2 to ‘hold it up’.

Lots of assumptions for very little effect.

I’m just guessing, Gavin.

From memory, I’m pretty sure that when there is warming in the troposphere, you get cooling in the stratosphere, and vice versa.

I think that’s what you see on the Aqua channels.

[Response: CO2/GHG changes add about 40% to LGM cooling, water vapour feedback adds about 60%, so they are comparable in size – and both large! – gavin]

Whatever, you get ~0.6 deg C from CO2 alone. It’s likely such a small forcing will have some serious competition.

Yes, ~8 deg C is large, however, a change from 8 to 8.6 is not very large at all.

Isotopious, you are missing the point. You start with a proximate cause. It causes warming or cooling. The climate responds to the warming or cooling, in part by increasing or decreasing water vapor à la Clausius-Clapeyron. Other greenhouse gases also change. The changes in forcing brought about by these greenhouse gas changes (including water vapor) are a feedback on the initial forcing of the proximate cause.

For Milankovitch cycles, the proximate cause is changes in insolation, especially in the Northern Hemisphere. In the current warming epoch, the proximate cause is anthropogenic CO2. After all, the initial warming that led to the increase in H2O would not have happened without it. How hard is that to understand?

> the denialist point of view. Like any good competitor,

> … knock out the competition, propose a joint venture.

For those who can’t figure out where ‘iso’ gets this version of “science” this may help — it’s market-based “scientific” thinking. Quite popular among those who like that sort of thing.

The Rise of the Dedicated Natural Science Think Tank

http://www.ssrc.org/workspace/images/crm/new_publication_3/%7Beee91c8f-ac35-de11-afac-001cc477ec70%7D.pdf

Seriously — read it. It’s a worldview unimaginable to most in the sciences.

“… to have the ambition to change the very nature of knowledge production about both the natural and social worlds. Analysts need to take neoliberal theorists like Hayek at their word when they state that the Market is the superior information processor par excellence. The theoretical impetus behind the rise of the natural science think tanks is the belief that science progresses when everyone can buy the type of science they like, dispensing with whatever the academic disciplines say is mainstream or discredited science….

… the provision of targeted scientific findings (from ‘data’ to ‘theories’ to full-fledged scientific publications) within a concerted “marketplace of ideas” framework, with the intention of altering the balance of orthodoxy and heterodoxy from outside the university sector….

… The expanding role of natural science think tanks have due to two high profile events over the last few years: …. the numerous think tanks behind the contrarian science opposing the IPCC and academic global warming studies. But the real eye-opener for those concerned with science policy was the cache of documents made public in the series of tobacco industry settlements of the mid-1990s …..”

Those in that worldview believe everyone does this, it’s their whole world. They try to come up with ideas they can sell, not ideas they can falsify.

Just watch.

Ray, you are arguing that a 100ppm change in radiative forcing for CO2, amounting to 0.6 deg C change, multiplied by a wv feedback giving 1.8 deg C change, plays an important part in raising the sea level by 100 meters.

I’m saying it doesn’t, because even if the above were true (which it probably isn’t, where’s the proof?) it’s not enough warming, and even if you add some orbital wobble, it still isn’t enough. Indeed, most of the response is probably due to obliquity, which has close to zero radiative forcing all said and done.

It’s possible the global anomaly is around 8 deg C for the ice cycle. If that were the case then CO2 alone would explain 7.5%.

92 meters of sea level to go, add some IPCC water vapor feedback, 77 meters to go, add some more positive feedbacks. Indeed, multiple feedbacks can have confounding effects so maybe that would help ‘fudge’ the data a little more.
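For readers trying to follow where the 7.5%, 92 m and 77 m come from, here is the commenter's bookkeeping as a short Python sketch. It assumes, as the comment does, an 8 deg C glacial anomaly, a round 100 m of sea-level change, and that each contribution accounts for sea level in proportion to its share of the temperature change. That proportionality is the commenter's premise, not established physics; Ray Ladbury's reply disputes the framing, since CO2 is treated there as a feedback on orbital forcing rather than the trigger. The names are hypothetical.

```python
TOTAL_COOLING_C = 8.0  # commenter's assumed global ice-age anomaly, deg C
SEA_LEVEL_M = 100.0    # commenter's round figure for glacial sea-level change, m


def share_and_remaining(contribution_c: float) -> tuple[float, float]:
    """Share of the anomaly, and the sea level left 'unexplained' under linear scaling."""
    share = contribution_c / TOTAL_COOLING_C
    return share, SEA_LEVEL_M * (1.0 - share)


for label, dT in [("CO2 alone", 0.6), ("CO2 + water vapour", 1.8)]:
    share, remaining = share_and_remaining(dT)
    print(f"{label}: {share:.1%} of the anomaly, {remaining:.1f} m 'to go'")
```

This reproduces 7.5% and 92.5 m for 0.6 deg C, and 77.5 m for 1.8 deg C.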

But the point is that you are stretching reality. I have only tried to explain the climate here, let alone the cycle. As Benson said, ‘whether the signal is positive or negative’, it doesn’t matter. That may be true for one simple feedback state, but in the case of multiple feedbacks with confounding effects I very much doubt that would happen.

‘How hard is that to understand?’

Extremely complex and difficult to follow.

Isotopious,

Uh, Dude, where did I say CO2 was the forcing that was responsible for the Ice ages and the interglacials? That is clearly the Milankovitch cycles that initiate the process–and CO2 and water vapor (along with changes in albedo due to snow and vegetation) are both feedbacks.

Might I suggest reading for comprehension?

“100ppm change in radiative forcing for CO2”–Don’t want to seem churlish here, but you can’t specify “radiative forcing” in ppm.

Just saying.
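To make the ppm vs. W/m^2 distinction concrete: a concentration change only becomes a radiative forcing through a conversion, and because that conversion is logarithmic, the same 100 ppm gives different forcings at different baselines. The sketch assumes the widely used simplified expression ΔF = 5.35 ln(C/C0) W/m^2 (Myhre et al., 1998); that formula and the 390 to 490 ppm comparison are additions for illustration, not taken from the thread. The 185 to 280 ppm glacial change is the figure used elsewhere in this thread.

```python
import math

ALPHA_WM2 = 5.35  # W/m^2, coefficient of the simplified logarithmic CO2 expression (assumed)


def co2_forcing(c_ppm: float, c0_ppm: float) -> float:
    """Radiative forcing in W/m^2 for a CO2 change from c0_ppm to c_ppm."""
    return ALPHA_WM2 * math.log(c_ppm / c0_ppm)


glacial = co2_forcing(280.0, 185.0)   # LGM to pre-industrial, about 2.2 W/m^2
modern = co2_forcing(490.0, 390.0)    # another ~100 ppm on top of 390, about 1.2 W/m^2
doubling = co2_forcing(560.0, 280.0)  # 2xCO2, about 3.7 W/m^2
print(f"{glacial:.2f} {modern:.2f} {doubling:.2f}")
```

Roughly 100 ppm added to a glacial baseline is worth nearly twice as much forcing as 100 ppm added to today's concentration, which is why a change in ppm is not itself a forcing.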

Isotopious:

Speak for yourself. Don’t imagine that it’s true for scientists working in the field, or for PhD physicists like Ray who don’t work in the field, or even for humble software engineers like myself who have a mathematical background.

[edit – please don’t]

Isotopious @126 – So long as the system response is linear, the internal complexity doesn’t matter; the positive, i.e., enhancing, feedbacks remain positive and similarly for the negative ones. However, the ice age variations certainly involve some non-linear components. That makes the analysis more difficult but doesn’t change the polarity of the various feedbacks, just the strengths involved.

Obliquity is not the only aspect to orbital forcing; an appropriate graphic is found in A Moveable Trigger. More generally, the entire Quaternary was analyzed in Paillard and found to fit his trigger model rather well; studying that is recommended.

Gavin @ 122,

The stratospheric cooling may be caused by the tropospheric water vapor (see figure 3 of http://www.springerlink.com/content/6677gr5lx8421105/fulltext.pdf) – but in that figure water vapor is fixed only above sigma = 0.14 (~140 hPa), so the cooling may also be caused by the increase in lower stratospheric water vapor.

# 80, raypierre in the inline response,

…. But to reiterate: the difference between climate sensitivity estimates based on land vs. ocean data indicates that something is seriously wrong, either with the model, or the data, or some of both. In my view, that mismatch alone is reason not to put too much credence in Schmittner’s claim to have trimmed of the upper end of the IPCC climate sensitivity tail

Thanks, as this punter has been seeking simply stated reasons for the view that the paper under discussion doesn’t constrain the upper bound as well as it is variously reported to.

I would welcome any further substantive illumination on this point from anyone.

And, I add a 2nd (more a 12th or 17th) to the expressions of thanks to Drs. Urban and Schmittner for their engagement here and other places on the net. And thanks, always, to the Real Climate providers.

Unless I’m missing something obvious, I don’t see how one can extrapolate or estimate current climate sensitivity from the temperature change in response to the solar forcing change that occurred from the last glaciation to the present interglacial period.

For starters, one simply cannot equate the positive feedback from melting ice (both reduced albedo and increased water vapor) when leaving maximum ice with what is available at minimum ice, where the climate is now (and is during every interglacial period). There just isn’t much ice left, and what is left would be extremely difficult to melt, as most of it is located at high latitudes around the poles, which are mostly dark 6 months out of the year with temperatures well below freezing. A lot of the ice is thousands of feet above sea level too, where the air is significantly colder on average. Unless we wait a few 10s of millions of years for plate tectonics to move Antarctica and Greenland to lower latitudes (if they are even moving in that direction), no significant amount of ice is going to melt from a relatively small ‘intrinsic’ rise in global average temperature from 2xCO2.

Furthermore, the relatively high ‘sensitivity’ from glacial to interglacial is largely driven by the change in the orbit relative to the Sun, which changes the distribution of incident solar energy into the system quite dramatically (more energy is distributed to the higher latitudes in the NH summer, in particular). This, combined with the positive feedback from melting a huge amount of surface ice, was enough to cause the 5-6C rise. The roughly +7 W/m^2 or so increase from the Sun was likely only a minor contributor to the total net temperature change. Moreover, we are also nearing the end of this interglacial period, so if anything the orbital component has already flipped back in the direction of glaciation and cooling.

[Response: Your thinking is very much right on this in general, but note that what is done with these estimates of climate sensitivity for LGM climate is to use the state of the climate already in place at the LGM, including the ice albedo. Note also that the orbital configuration is not very different from today's. You’re entirely right that huge seasonal forcing (40 W/m^2 or so) is what causes the demise of the ice in the first place (or -40 to cause it to start building up). But the actual forcing difference at the LGM, which is the period being considered, is minimal. And globally averaged, it is very close to zero (and definitely negligible in this context). It’s all about transient vs. equilibrium. Hope that helps.–eric]

RW

You’ve hit on one of the weaknesses of the paper, as the model they use admittedly doesn’t model changes in albedo (at least, not as a model output).

Thank you for pointing out one reason why the paper’s estimate of ECR is too low …

[Response: UVic doesn’t model changes in cloud albedo, but I’m quite sure it models changes in albedo due to sea ice and land ice. –raypierre]

Eric,

“note that what is done with these estimates of climate sensitivity for LGM climate is to use the state of the climate already in place at the LGM — including the ice albedo.”

I know, but the bottom line is most of the positive feedback from the melting of ice has already been used up as we left the LGM. For the reasons I stated, it’s mostly a ‘clamped’ effect in the current climate.

“Note also that orbital configuration is not very different than today. You’re entirely right that huge seasonal forcing (40 W/m^2 or so) is what causes the demise of the ice in the first place (or -40 to cause it to start building up). But the actual forcing difference at the LGM, which is the period being considered, is minimal. And globally averaged, it is very close to zero (and definitely negligible in this context. It’s all about transient vs. equilibrium.”

I was mainly referring to the change in the distribution of the incoming solar energy, which from what I understand is rather large. My point is this particular mechanism or influencing factor is not in play in the current climate.

I know the actual radiative forcing increase at the beginning of the transition from the LGM started out small and gradually increased, but the point is in total it’s estimated to be about 7 W/m^2. As I understand it, they are more or less trying to equate the 0.8C per 1 W/m^2 needed for a 3C rise from 2xCO2 (0.8 x 3.7 = 3C) to the +7 W/m^2 of net incident solar from the orbital change that ultimately resulted in about an increase of 5-6C from the LGM to the current interglacial period (0.8 x 7 = 5.6C).
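RW's proportional reading can be written out in a few lines. This is a sketch of the arithmetic in the comment only, assuming a fixed sensitivity parameter of 0.8 C per W/m^2 applied to both forcings; as the inline responses explain, it is not how the study actually estimates sensitivity. The names are illustrative.

```python
SENSITIVITY_C_PER_WM2 = 0.8  # the ~0.8 C per W/m^2 quoted in the comment


def equilibrium_warming(forcing_wm2: float, lam: float = SENSITIVITY_C_PER_WM2) -> float:
    """Linear equilibrium response dT = lambda * dF."""
    return lam * forcing_wm2


for label, forcing in [("2xCO2", 3.7), ("orbital figure quoted by RW", 7.0)]:
    print(f"{label}: {equilibrium_warming(forcing):.1f} C")
```

The two lines reproduce the comment's 0.8 x 3.7 ≈ 3C and 0.8 x 7 = 5.6C.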

[Response: No. They are comparing under nearly identical orbital conditions, and the ice is treated as a forcing, not a feedback. Of course in reality it is a slow feedback, but it can be treated as a forcing for the ‘fast feedback’ analysis that is being done. At least, this is (strongly) how I understand what was done (and definitely what older papers did).–eric]

If the two main factors that drove the bulk of temperature change from the LGM to the current interglacial are either non-existent (the orbital distribution change) or significantly reduced (the positive feedback from melting ice), I don’t see how it can be extrapolated or proportional to the current climate sensitivity.

[Response: You don’t understand how the estimate is done. It is not a simple extrapolation. The calculation (like many before it) uses a model subjected to LGM forcings, but with various settings for the atmospheric feedback magnitudes, to see how well the LGM is reproduced with various assumptions about feedbacks. The presumption is that the same feedbacks are operating in the future — it is not that the forcing is the same, or even that the ice-albedo feedback operates symmetrically. It’s a reasonable approach, and the only one that can deal with the fact that the LGM was so different than the present — but it’s an approach that is only as good as the model used. Hence my reservations about whether UVic is a suitable model for this kind of study. –raypierre]

Ray,

“You don’t understand how the estimate is done. It is not a simple extrapolation. The calculation (like many before it) uses a model subjected to LGM forcings, but with various settings for the atmospheric feedback magnitudes, to see how well the LGM is reproduced with various assumptions about feedbacks. The presumption is that the same feedbacks are operating in the future — it is not that the forcing is the same, or even that the ice-albedo feedback operates symmetrically.”

I understand it’s not an overtly direct extrapolation, but fundamentally it more or less still is one, if the underlying assumptions about the feedbacks are presumed not only to be correct but also to operate in the same proportion to the forcing from the LGM as they do in response to future forcings in the current climate.

BTW, I have your book and enjoy it.

RW,

You wrote: “A lot of the ice is thousands of feet above sea level too where the air is significantly colder on average. Unless we wait a few 10s of millions of years for plate tectonics to move Antarctica and Greenland to lower latitudes (if they are even moving in that direction), no significant amount of ice is going to melt from a relatively small ‘instrinstic’ rise in global average temperature from 2xCO2.”

The idea is that ice flows downwards to the sea level around Antarctica and Greenland where it has always been melting. Warming simply makes it flow and melt faster, shrinking the ice sheets.

So it wouldn’t take that much warming to get rid of all the ice which geography isn’t keeping from flowing downwards. It must also have been really cold most of the year at the top of the Laurentide ice sheet when it melted away.

This post should get you started about expectations with regard to melting on human timescales: https://www.realclimate.org/index.php/archives/2008/09/on-straw-men-and-greenland-tad-pfeffer-responds/

You may also be interested in an article about a recent publication looking at CO2 and ice sheets on a geological timescale:

http://www.sciencedaily.com/releases/2011/12/111201174225.htm

Anonymous,

You must remember that the ice sheets flow due to the pressure imposed upon them by the ice pushing down at the center. While melting at the edges may cause the termini to recede, it will not cause the ice to flow any faster.

[Response: Not true at all. Glacier speed also depends on bottom drag (which is a function of temperature and lubrication by meltwater) and also on stresses within the ice sheet/shelf. Ice flow sped up by a factor of 4 to 5 in the source glaciers to the Larsen B ice shelf after it collapsed, for instance. – gavin]

Lubrication by meltwater is a contentious subject. Stresses within the ice typically result from the pressures exerted by the bulk ice. Terminus melting has little to do with these stresses. The collapse of the Larsen B ice shelf was a special case, whereby the blocking of the glacier path was removed, and the ice was allowed to advance once more. Again, this is related to the pressure exerted on the glacier by the ice at the center. This is significantly different from termini melting. You may want to rethink your first line above.

[Response: Oh, so now you’re an expert on glacial dynamics too?–Jim]

he’s rebunking, I suspect, claims like those in the article debunked here:

http://www.geolsoc.org.uk/gsl/pid/7523

“As glaciologists and glacial geologists, we respond to the article “Glaciers – science and nonsense” by Cliff Ollier in the March issue of Geoscientist [1]. We believe that the standfirst of this piece, which states that the author “takes issue with some common misconceptions about how ice-sheets move, and doubts many pronouncements about the “collapse” of the planet’s ice sheets” misleads the reader by assuming that Ollier’s arguments are correct.

We demonstrate in this article that those arguments are not, in fact, based on an accurate understanding of contemporary glaciology. Our response is structured around five general themes that are misrepresented by Ollier in his argument that ice sheets are not responding to recent climate warming …”

[Note the time scale changes]

http://www.geolsoc.org.uk/webdav/site/GSL/shared/images/geoscientist/Geoscientist%2020.06/Fig%201resized.jpg

Current and future cryospheric change

In contrast to the message portrayed by Ollier, extensive scientific evidence indicates that ice masses are in fact melting at rates that far exceed background trends and this is happening in nearly all glacierised (glacier-covered) regions on Earth.”

Jim,

Do you have anything productive to add, or are you just making snide comments? These little snipes of yours are getting old.

[Response: Then go find a place that likes your various proclamations and assertions and opinions.–Jim]

Anonymous Coward,

“The idea is that ice flows downwards to the sea level around Antarctica and Greenland where it has always been melting. Warming simply makes it flow and melt faster, shrinking the ice sheets. So it wouldn’t take that much warming to get rid of all the ice which geography isn’t keeping from flowing downwards. It must also have been really cold most of the year at the top of the Laurentide ice sheet when it melted away.”

“This post should get you started about expectations with regard to melting on human timescales:”

I was mainly referring to the melting of ice in the context of potential decreased surface albedo – not potential sea level rise. That’s for another thread.

The bottom line though is permanent ice melting or ice loss generally requires yearly averaged temperatures above 0C. Below 0C there just isn’t going to be any permanent or long term melting. Also, most of the ice on Greenland and Antarctica is located in areas where the yearly average temperature is already significantly below 0C – many areas multiple 10s of degrees below 0C.

… ratio of retreat to the along-flow stress-coupling length is proportional to the relative increase in speed, consistent with typical ice flow and sliding laws. This affirms that speedup results from loss of resistive stress at the front during retreat, which leads to along-flow stress transfer. Many retreats began with an increase in thinning rates near the front in the summer of 2003, a year of record high coastal-air and sea-surface temperatures.

Dan H,

I’m rather ignorant of glacial interiors/dynamics, except for some stuff I picked up in an undergrad geology class on the subject, but there are a number of real-world examples that show you are wrong. For instance, see Zwally et al (2002, Science, Vol 297), which showed clear evidence for velocity variations at Swiss Camp (Greenland), with speed-ups in the summer that likely reflect increased basal motion in response to surface meltwater that can reach the glacier bed.

Also, the outlet glaciers on Greenland are all variable in flow speed and ice discharge, and I think RC did something before on the acceleration of Jakobshavn Isbrae. And for glaciers grounded in deep water, acceleration will be promoted whenever you get lowered resistance via lowering the average basal effective pressure.

RW,

Ice sheets affect both the sea level and albedo so ice sheet dynamics matter.

You wrote “The bottom line though is permanent ice melting or ice loss generally requires yearly averaged temperatures above 0C. Below 0C there just isn’t going to be any permanent or long term melting.”

What do you figure the average temperature is in Nunavut, for instance? The Laurentide ice sheet is nevertheless gone, and that definitely affected the albedo of the area.

I think it’s simple enough to understand: ice is mobile so the surface or interior temperature of most of the ice sheet doesn’t affect the ultimate result. If more ice is lost at the margins than gained at the core, the ice sheet shrinks, ultimately affecting albedo as (depending on the underlying geography) lakes form, some rockbed is exposed and areas are reconquered by the ocean.

I guess a relatively small change in temperatures wouldn’t affect the albedo of a flat highland near the poles. But slopes do not remain white so easily unless they are covered by an ice sheet. Next to the oceans, it’s not going to be so cold and continental interiors could possibly (I don’t know) be very dry and therefore lack a year-round white cover in spite of very low winter temperatures (like areas in Northeastern Asia).

Dan,

I know virtually nothing about ice sheet dynamics, but even I can understand that, even assuming no lubrication or sliding at the base, lateral ice flow is not going to be caused by the weight of the ice at the center. Thin glaciers would barely flow compared to thick ice sheets if that were the case.

Intuitively, it seems weight gradients and not absolute weight should drive the flow, as neighboring columns of ice buttress each other. So if the margin was lost without ice loss at the center, the new margin, having lost its support, would become very unstable and the whole ice sheet would have to lose volume to establish a new equilibrium. At least that would be my ignorant assumption…

Does anyone understand the math in this statement?

“We have increased CO2 from 280 to 390, going up faster each year. [There’s relevant news here.] If we continue we’ll soon get close to 560 [double the carbon dioxide concentration preceding the industrial revolution] or 2.3 (our estimate) to 3 (I.P.C.C.) degrees C. (4.1-5.4 degrees F.) global average surface air warming.

This is getting close to the warming from the LGM [peak of the last ice age] to today (3-4 degrees C.), hence we can expect dramatic changes over land, at mid- to high latitudes, in vegetation, mountain glaciers, ice sheets, and sea level.”

http://dotearth.blogs.nytimes.com/2011/12/05/more-on-the-sensitive-climate-question/

The change in the carbon dioxide concentration from the LGM to the pre-industrial period was less than a doubling. The change from then to “today” (390 ppm) is about a doubling, but because the recent increase is so rapid, today’s temperature is not representative of typical 390 ppm conditions.

Maybe this is what is meant, though. 2.3C (their estimate from LGM to pre-industrial) plus 0.7C (from pre-industrial to “today”) comes to 3C, while the IPCC figure comes to 3.7C, or about 4, consistent with “3-4 degrees C” above.

But then, Schmittner is saying 3C for a doubling rather than 2.3C so where is the beef? It seems hard to believe that this boils down to forgetting to correct to a doubling sensitivity by carelessly shifting from a pre-industrial end point to a “today” endpoint.

Chris,

You are essentially correct. The pressures exerted from the interior of the glacier will force movement into those areas with the least resistance. Glacial advance will speed up or slow down based on this pressure difference. There are several examples of neighboring glaciers moving in opposite directions.

NOAA has a nice visual of the recession of the Jakobshavn glacier which RC posted here:

https://www.realclimate.org/images/jakobshavn.jpg

There was a rather large retreat from 1851 until 1913, then slowing until 2001, with a recent acceleration. There was very little movement from 1964 – 2001.

The most recent changes in Greenland’s largest glaciers can be found here:

http://www.staff.science.uu.nl/~lenae101/pubs/Howat2011.pdf

#142–

“Also, most of the ice on Greenland and Antarctica is located in areas where the yearly average temperature is already significantly below 0C –”

But it doesn’t all stay there; it flows toward the margins, where it can and does melt. That was AC’s point–cf. #140 and #143 as well.

#146 Chris Dudley,

I understand 2.3C is the center of the range they estimate for 2xCO2 Charney sensitivity while the center of the AR4 range is 3C.

I don’t think they’re saying the temperature difference from LGM to preindustrial is 2.3C.

What you may be missing is that that difference is greater than what you would get from Charney sensitivity alone. CO2 wasn’t the forcing anyway.

#149,

Thanks for your response. Looking at this pdf, http://mgg.coas.oregonstate.edu/~andreas/pdf/S/schmittner11sci_man.pdf I think that in the paper they are talking about pre-industrial controls so ‘today’ should mean 1700 or so. 185 to 280 ppm is 60% of a doubling logarithmically. So, 3 to 4 C cooling between the LGM and 1700 is a 5 to 7 C long term doubling sensitivity (all feedbacks). If the Charney sensitivity is half of that as proposed by Hansen, then we are at 2.5 to 3.5 for the Charney sensitivity. Again, where’s the beef?
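Chris Dudley's numbers can be reproduced as follows. This sketch takes his stated assumptions at face value: the whole 3 to 4 C LGM to pre-industrial change is scaled by the logarithmic CO2 change (185 to 280 ppm) as if it were a pure "all feedbacks" CO2 response, and the Charney sensitivity is taken to be half of that, per his reading of Hansen. Those are the commenter's assumptions, not the paper's method, and the function name is illustrative.

```python
import math


def doubling_fraction(c_start_ppm: float, c_end_ppm: float) -> float:
    """Number of CO2 doublings separating two concentrations (log base 2)."""
    return math.log(c_end_ppm / c_start_ppm, 2)


frac = doubling_fraction(185.0, 280.0)  # about 0.60 of a doubling

for cooling_c in (3.0, 4.0):
    all_feedbacks = cooling_c / frac  # per-doubling value if CO2 carried the whole change
    charney = all_feedbacks / 2.0     # "half of that", as the comment assumes
    print(f"{cooling_c:.0f} C -> {all_feedbacks:.1f} C all feedbacks, {charney:.1f} C Charney")
```

This gives a doubling fraction of about 0.60, "all feedbacks" values of about 5.0 to 6.7 C and halves of about 2.5 to 3.3 C, which the comment rounds to 5 to 7 C and 2.5 to 3.5 C.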

It is quite confusing. The claim that a doubling of CO2 would begin to approach the difference from the LGM only makes sense if “today” means today, since then it would be a doubling in both cases. But the paper seems to take “today” to be pre-industrial, which is probably the correct thing to do. In that case, doubling CO2 should have a larger effect than the change from the LGM to pre-industrial conditions, and the wording over at dotearth does not make sense.