There is a new paper on Science Express that examines the constraints on climate sensitivity from looking at the last glacial maximum (LGM), around 21,000 years ago (Schmittner et al, 2011) (SEA). The headline number (2.3ºC) is a little lower than IPCC’s “best estimate” of 3ºC global warming for a doubling of CO2, but within the likely range (2-4.5ºC) of the last IPCC report. However, there are reasons to think that the result may well be biased low, and stated with rather more confidence than is warranted given the limitations of the study.

Climate sensitivity is a key characteristic of the climate system, since it tells us how much global warming to expect for a given forcing. It usually refers to how much surface warming would result from a doubling of CO2 in the atmosphere, but is actually a more general metric that gives a good indication of what any radiative forcing (from the sun, a change in surface albedo, aerosols etc.) would do to surface temperatures at equilibrium. It is something we have discussed a lot here (see here for a selection of posts).

Climate models inherently predict climate sensitivity, which results from the basic Planck feedback (the increase of infrared cooling with temperature) modified by various other feedbacks (mainly the water vapor, lapse rate, cloud and albedo feedbacks). But observational data can reveal how the climate system has responded to known forcings in the past, and hence give constraints on climate sensitivity. The IPCC AR4 (9.6: Observational Constraints on Climate Sensitivity) lists 13 studies (Table 9.3) that constrain climate sensitivity using various types of data, including two using LGM data. More have appeared since.

It is important to regard the LGM studies as just one set of points in the cloud yielded by other climate sensitivity estimates, but the LGM has been a frequent target because it was a period for which there is a lot of data from varied sources, climate was significantly different from today, and we have considerable information about the important drivers – like CO2, CH4, ice sheet extent, vegetation changes etc. Even as far back as Lorius et al (1990), estimates of the mean temperature change and the net forcing were combined to give estimates of sensitivity of about 3ºC. More recently, Köhler et al (2010) (KEA) used estimates of all the LGM forcings, and an estimate of the global mean temperature change, to constrain the sensitivity to 1.4-5.2ºC (5–95%), with a mean value of 2.4ºC. Another study using a joint model-data approach (Schneider von Deimling et al, 2006b) derived a range of 1.2 – 4.3ºC (5-95%). The SEA paper, with its range of 1.4 – 2.8ºC (5-95%), is merely the latest in a series of these studies.

Definitions of sensitivity

The standard definition of climate sensitivity comes from the Charney report in 1979, where the response was defined as that of an atmospheric model with fixed boundary conditions (ice sheets, vegetation, atmospheric composition) but variable ocean temperatures, to 2xCO2. This has become a standard model metric because it is relatively easy to calculate. It is not, however, the same thing as what would really happen to the climate with 2xCO2, because, of course, those ‘fixed’ factors would not stay fixed.

Note, then, that the SEA definition of sensitivity includes feedbacks associated with vegetation, which was considered a forcing in the standard Charney definition. Thus, for the sensitivity determined by SEA to be comparable to the others, one would need to know the forcing due to the modelled vegetation change. KEA estimated that LGM vegetation forcing was around -1.1 ± 0.6 W/m² (because of the loss of trees in polar latitudes, replacement of forests by savannah etc.), and if that were similar to the SEA modelled impact, their Charney sensitivity would be closer to 2ºC (down from 2.3ºC).
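The conversion rests on sensitivity scaling as cooling divided by forcing: moving vegetation from the feedback side to the forcing side enlarges the forcing while the cooling stays fixed, so the Charney-style number shrinks. Below is a minimal Python sketch of that arithmetic. SEA's non-vegetation forcing total is not given here, so `HYPOTHETICAL_FORCING_EXCL_VEG` is a placeholder chosen only for illustration; the 1.1 ± 0.6 W/m² vegetation range is the KEA estimate quoted above.

```python
# Placeholder magnitude (W/m^2) of the LGM forcing excluding vegetation.
# NOT a value from SEA; chosen only to illustrate the arithmetic.
HYPOTHETICAL_FORCING_EXCL_VEG = 7.0


def charney_equivalent(s_ext, forcing_excl_veg, veg_forcing):
    """Convert a sensitivity that treats vegetation change as a feedback into
    one that treats it as a forcing (Charney-style), for the same LGM cooling.

    Forcings are magnitudes in W/m^2 (LGM forcings are negative, but only
    their sizes matter here). Since S = F2x * dT / F with dT held fixed:
        S_charney = S_ext * F_excl_veg / (F_excl_veg + F_veg)
    """
    return s_ext * forcing_excl_veg / (forcing_excl_veg + veg_forcing)


# Span the KEA vegetation-forcing estimate, 1.1 +/- 0.6 W/m^2 (magnitude).
for veg in (0.5, 1.1, 1.7):
    s = charney_equivalent(2.3, HYPOTHETICAL_FORCING_EXCL_VEG, veg)
    print(f"vegetation forcing {veg:.1f} W/m^2 -> Charney-style S = {s:.2f} K")
```

With this placeholder the central case lands near 2.0 K, but the size of the adjustment depends directly on the assumed non-vegetation forcing.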

Other studies have also expanded the scope of the sensitivity definition to include even more factors, a definition different enough to have its own name: the Earth System Sensitivity. Notably, both the Pliocene warm climate (Lunt et al., 2010), and the Paleocene-Eocene Thermal Maximum (Pagani et al., 2006), tend to support Earth System sensitivities higher than the Charney sensitivity.

Is sensitivity symmetric?

The first thing that must be recognized regarding all studies of this type is that it is unclear to what extent behavior in the LGM is a reliable guide to how much it will warm when CO2 is increased from its pre-industrial value. The LGM was a very different world from the present, involving considerable expansions of sea ice, massive Northern Hemisphere land ice sheets, geographically inhomogeneous dust radiative forcing, and a different ocean circulation. The relative contributions of the various feedbacks that make up climate sensitivity need not be the same going back to the LGM as in a world warming relative to the pre-industrial climate. The analysis in Crucifix (2006) indicates that there is not a good correlation between sensitivity on the LGM side and sensitivity to 2xCO2 in the selection of models he looked at.

There has been some other work to suggest that overall sensitivity to a cooling is a little less (80-90%) than sensitivity to a warming, for instance Hargreaves and Annan (2007), so the numbers of Schmittner et al. are less different from the “3ºC” number than they might at first appear. The factors that determine this asymmetry are various, involving ice albedo feedbacks, cloud feedbacks and other atmospheric processes, e.g., water vapor content increases approximately exponentially with temperature (Clausius-Clapeyron equation) so that the water vapor feedback gets stronger the warmer it is. In reality, the strength of feedbacks changes with temperature. Thus the complexity of the model being used needs to be assessed to see whether it is capable of addressing this.
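Two pieces of the asymmetry argument can be made concrete with a short Python sketch. First, if sensitivity to cooling is 80-90% of sensitivity to warming, dividing SEA's 2.3ºC by that ratio gives the implied warming-side value, roughly 2.6 to 2.9ºC. Second, the Bolton (1980) form of the Magnus approximation to Clausius-Clapeyron shows that a 1 K warming adds far more water vapour in absolute terms at warm temperatures than at cold ones. This is only a simple illustration of why feedbacks can be state-dependent, not a calculation of the water vapour feedback itself.

```python
import math


def warming_side_sensitivity(s_cooling, ratio):
    """If sensitivity to cooling is `ratio` times the sensitivity to warming
    (e.g. 0.8-0.9), return the implied warming-side sensitivity."""
    return s_cooling / ratio


def saturation_vapour_pressure_hpa(t_celsius):
    """Saturation vapour pressure over liquid water in hPa (Bolton 1980 form
    of the Magnus approximation to Clausius-Clapeyron)."""
    return 6.112 * math.exp(17.67 * t_celsius / (t_celsius + 243.5))


for ratio in (0.8, 0.9):
    s_warm = warming_side_sensitivity(2.3, ratio)
    print(f"cooling/warming ratio {ratio}: warming-side S = {s_warm:.2f} K")

# Absolute increase in saturation vapour pressure for a 1 K warming,
# starting from a cold and from a warm temperature.
for t in (-10.0, 25.0):
    de = saturation_vapour_pressure_hpa(t + 1) - saturation_vapour_pressure_hpa(t)
    print(f"{t:+.0f} C -> {t + 1:+.0f} C: +{de:.2f} hPa")
```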

Does the model used adequately represent key climate feedbacks?

Typically, LGM constraints on climate sensitivity are obtained by producing a large ensemble of climate model versions where uncertain parameters are systematically varied, and then comparing the LGM simulations of all these models with “observed” LGM data, i.e. proxy data, by applying a statistical approach of one sort or another. It is noteworthy that very different models have been used for this: Annan et al. (2005) used an atmospheric GCM with a simple slab ocean, Schneider et al. (2006) used the intermediate-complexity model CLIMBER-2 (with both ocean and atmosphere of intermediate complexity), while the new Schmittner et al. study uses an oceanic GCM coupled to a simple energy-balance atmosphere (UVic).
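As a rough illustration of the ensemble approach, the Python sketch below replaces the climate model with a hypothetical one-line stand-in (cooling proportional to ECS times forcing), treats a grid of ECS values as the "ensemble", and weights each member by a Gaussian likelihood against a single "observed" cooling. The forcing, cooling and uncertainty values are placeholders, not numbers from any of the studies discussed; real analyses compare spatial fields and use considerably more careful statistics.

```python
import math

F2X = 3.7  # approximate radiative forcing of doubled CO2, W/m^2


def toy_lgm_cooling(ecs, lgm_forcing):
    """Hypothetical stand-in for a climate model: equilibrium LGM cooling
    (K, as a positive magnitude) scales with ECS and the forcing magnitude."""
    return ecs * lgm_forcing / F2X


def constrain_ecs(ecs_grid, lgm_forcing, obs_cooling, obs_sigma):
    """Weight each ensemble member by a Gaussian likelihood of the 'observed'
    cooling (uniform prior over the grid); return mean and 5-95% range."""
    loglik = [-0.5 * ((toy_lgm_cooling(s, lgm_forcing) - obs_cooling) / obs_sigma) ** 2
              for s in ecs_grid]
    peak = max(loglik)  # subtract the maximum for numerical stability
    weights = [math.exp(ll - peak) for ll in loglik]
    total = sum(weights)
    weights = [w / total for w in weights]
    mean = sum(w * s for w, s in zip(weights, ecs_grid))
    # Weighted 5% and 95% quantiles from the cumulative weights.
    lo = hi = None
    cum = 0.0
    for w, s in zip(weights, ecs_grid):
        cum += w
        if lo is None and cum >= 0.05:
            lo = s
        if hi is None and cum >= 0.95:
            hi = s
    return mean, lo, hi


# Illustrative numbers only.
ecs_grid = [0.5 + 0.01 * i for i in range(551)]  # 0.5 to 6.0 K
mean, lo, hi = constrain_ecs(ecs_grid, lgm_forcing=7.0, obs_cooling=5.0, obs_sigma=1.0)
print(f"ECS ~ {mean:.2f} K (5-95%: {lo:.2f}-{hi:.2f} K)")
```

Shrinking `obs_sigma` narrows the range, which is why the treatment of proxy and model uncertainty matters so much for the final interval.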

Each of these models has potentially serious limitations for this kind of study. UVic does not simulate the atmospheric feedbacks that determine climate sensitivity in more realistic models, but rather fixes the atmospheric part of the climate sensitivity as a prescribed model parameter (surface albedo, however, is internally computed). Hence, the dominant part of climate sensitivity remains the same, whether looking at 3ºC cooling or 3ºC warming. Slab oceans, on the other hand, do not allow for variations in ocean circulation, which was certainly important for the LGM, and other intermediate models have all made key assumptions that may impact these feedbacks. However, since cloud feedbacks are the dominant contribution to uncertainty in climate sensitivity, the inability of the energy balance model used by Schmittner et al. to compute changes in cloud radiative forcing is particularly serious.

Uncertainties in LGM proxy data

Perhaps the key difference between Schmittner et al. and some previous studies is their use of all available proxy data for the LGM, whilst other studies have selected a subset of proxy data that they deemed particularly reliable (e.g., in Schneider et al., SST data from the tropical Atlantic, Greenland and Antarctic ice cores and some tropical land temperatures). Uncertainties of the proxy data (and the question of knowing what these uncertainties are) are crucial in this kind of study. A well-known issue with LGM proxies is that the most abundant type of proxy data, using the species composition of tiny marine organisms called foraminifera, probably underestimates sea surface cooling over vast stretches of the tropical oceans; other methods like alkenone and Mg/Ca ratios give colder temperatures (but aren’t all coherent either). It is clear that this data issue makes a large difference in the sensitivity obtained.

The Schneider et al. ensemble constrained by their selection of LGM data gives a global-mean cooling range during the LGM of 5.8 ± 1.4ºC (Schneider von Deimling et al, 2006), while the best fit from the UVic model used in the new paper has 3.5ºC cooling, well outside this range (a weighted average calculated from the online data; a slightly different number is stated in Nathan Urban’s interview, and we are not sure why).

Curiously, the mean SEA estimate (2.4ºC) is identical to the mean KEA number, but there is a big difference in what they concluded the mean temperature at the LGM was, and a small difference in how they defined sensitivity. Since sensitivity scales with the ratio of temperature change to forcing, the SEA estimates of the forcings must be proportionately smaller as well. The differences are that the UVic model has a smaller forcing from the ice sheets, possibly because of an insufficiently steep lapse rate (5ºC/km instead of a steeper value that would be more typical of drier polar regions), and also a smaller change from increased dust.
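To see why the lapse rate matters, consider the extra surface cooling produced simply by raising the surface onto a tall ice sheet. The Python sketch below assumes a constant lapse rate and a hypothetical 3 km ice-sheet surface elevation; the 8 K/km value is an assumed "steeper" rate for comparison, not a number from the paper.

```python
def surface_cooling_from_elevation(height_km, lapse_rate_k_per_km):
    """Extra surface cooling (K) from raising the surface by height_km,
    assuming a constant lapse rate (K/km)."""
    return height_km * lapse_rate_k_per_km


ICE_SHEET_HEIGHT_KM = 3.0  # hypothetical ice-sheet surface elevation
for gamma in (5.0, 8.0):   # 5 K/km as in UVic; 8 K/km is an assumed steeper value
    dT = surface_cooling_from_elevation(ICE_SHEET_HEIGHT_KM, gamma)
    print(f"lapse rate {gamma} K/km -> {dT:.0f} K colder at the ice-sheet surface")
```

Under these assumptions the shallower lapse rate gives 15 K of elevation cooling instead of 24 K, so a model using it would tend to credit the ice sheets with less cooling.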

Model-data comparisons

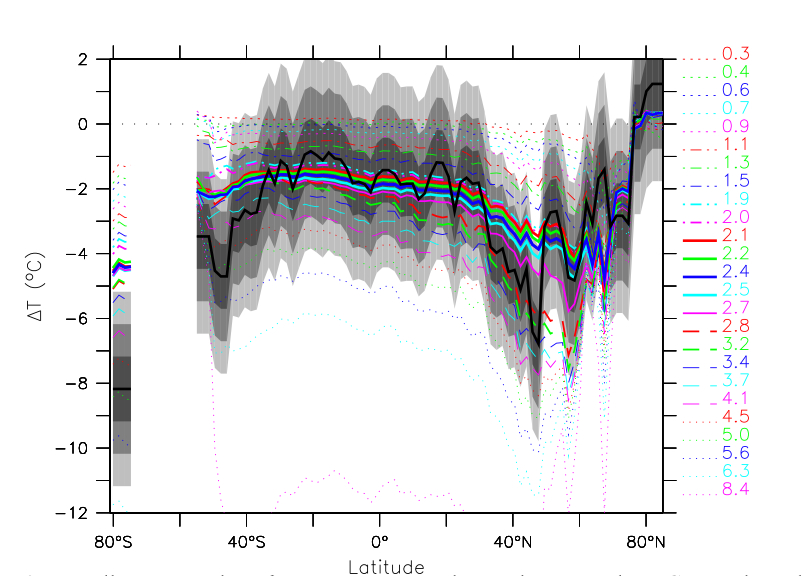

So there is a significant difference in the headline results from SEA compared to previous results. As we mentioned above, though, there are reasons to think that their result is biased low. There are two main issues here. First, the constraint to a lower sensitivity is dominated by the ocean data – if the fit is made to the land data alone, the sensitivity would be substantially higher (though with higher uncertainty). The best fit for all the data significantly underestimates the cooling over land.

However, even in the ocean the fit to the data is not that good in many regions, particularly the Southern Ocean and Antarctica, but also the northern mid-latitudes. This occurs because the tropical ocean data carry more weight in the assessment than the sparser and possibly less accurate polar and mid-latitude data. Thus there is a mismatch between the pattern of cooling produced by the model and the pattern inferred from the real world. This could be because of a structural deficiency of the model, or because of errors in the data, but the (hard to characterise) uncertainty in the former is not being carried into the final uncertainty estimate. None of the different model versions here seem to get the large polar amplification of change seen in the data, for instance.
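A toy example shows how dense data can dominate a fit. The Python sketch below fits a single scale factor k to a model cooling pattern by weighted least squares. The data are hypothetical: 40 tropical points where the model pattern matches the proxies, and 4 polar points where the proxies show twice the model's polar amplification. Because every point counts equally, the fitted k stays close to the tropical value and the polar misfit remains large.

```python
def fit_scale(pattern, obs, weights=None):
    """Weighted least-squares scale factor k minimising
    sum(w * (obs - k * pattern)**2)."""
    if weights is None:
        weights = [1.0] * len(pattern)
    num = sum(w * p * o for w, p, o in zip(weights, pattern, obs))
    den = sum(w * p * p for w, p in zip(weights, pattern))
    return num / den


# Hypothetical data: 40 dense tropical points, 4 sparse polar points.
pattern = [1.0] * 40 + [2.0] * 4
obs = [1.0] * 40 + [4.0] * 4

k_all = fit_scale(pattern, obs)
k_polar = fit_scale(pattern[40:], obs[40:])
k_upweighted = fit_scale(pattern, obs, [1.0] * 40 + [10.0] * 4)
print(f"all points: k = {k_all:.2f}; polar only: k = {k_polar:.2f}; "
      f"polar weighted x10: k = {k_upweighted:.2f}")
print("polar residuals with k_all:", [o - k_all * p for p, o in zip(pattern[40:], obs[40:])])
```

The choice of weights, not just the data, shapes the answer. That is why a structural model error that cannot reproduce the polar pattern ought to appear somewhere in the uncertainty budget.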

Response and media coverage

All in all, this is an interesting paper and methodology, though we think it slightly underestimates the most likely sensitivity, and rather more seriously underestimates the chances that the sensitivity lies at the upper end of the IPCC range. Some other commentaries have come to similar conclusions: James Annan (here and here), and there is an excellent interview with Nathan Urban here, which discusses the caveats clearly. The perspective piece from Gabi Hegerl is also worth reading.

Unfortunately, the media coverage has not been very good. Partly this is because of some ambiguous statements by the authors, and partly because media discussions of climate sensitivity have a history of being poorly done. The dominant frame was set by the press release which made a point of suggesting that this result made “extreme predictions” unlikely. This is fair enough, but had already been clear from the previous work discussed above. This was transformed into “Climate sensitivity was ‘overestimated’” by the BBC (not really a valid statement about the state of the science), compounded by the quote that Andreas Schmittner gave that “this implies that the effect of CO2 on climate is less than previously thought”. Who had previously thought what was left to the readers’ imagination. Indeed, the latter quote also prompted the predictably loony IBD editorial board to declare that this result proves that climate science is a fraud (though this is not Schmittner’s fault – they conclude the same thing every other Tuesday).

The Schmittner et al. analysis marks the insensitive end of the spectrum of climate sensitivity estimates based on LGM data, in large measure because it used a data set and a weighting that may well be biased toward insufficient cooling. Unfortunately, in reporting new scientific studies a common fallacy is to implicitly assume a new study is automatically “better” than previous work and supersedes this. In this case one can’t blame the media, since the authors’ press release cites Schmittner saying that “the effect of CO2 on climate is less than previously thought”. It would have been more appropriate to say something like “our estimate of the effect is less than many previous estimates”.

Implications

It is not all that earthshaking that the numbers in Schmittner et al come in a little low: the 2.3ºC is well within previously accepted uncertainty, and three of the IPCC AR4 models used for future projections have a climate sensitivity of 2.3ºC or lower, so that the range of IPCC projections already encompasses this possibility. (Hence there would be very little policy relevance to this result even if it were true, though note the small difference in definitions of sensitivity mentioned above).

What is more surprising is the small uncertainty interval given by this paper, and this is probably simply due to the fact that not all relevant uncertainties in the forcing, the proxy temperatures and the model have been included here. In view of these shortcomings, the confidence with which the authors essentially rule out the upper end of the IPCC sensitivity range is, in our view, unwarranted.

Be that as it may, all these studies, despite the large variety in data used, model structure and approach, have one thing in common: without the role of CO2 as a greenhouse gas, i.e. the cooling effect of the lower glacial CO2 concentration, the ice age climate cannot be explained. The result, in common with many previous studies, actually goes considerably further than that. The LGM cooling is plainly incompatible with the existence of a strongly stabilizing feedback such as Lindzen’s oft-quoted Iris mechanism. It is even incompatible with the low climate sensitivity you would get from a so-called ‘no-feedback’ response (i.e. just the Planck feedback; apologies for the terminological confusion).
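For scale, the "no-feedback" sensitivity can be estimated in a few lines. The Python sketch below treats Earth as a blackbody radiating at an effective emission temperature of about 255 K, so the Planck response is 4σT³, and uses the widely quoted simplified CO2 forcing expression 5.35 ln(C/C0). It gives roughly 1 K per doubling. Estimates that account for the real atmosphere's vertical and latitudinal structure are usually quoted somewhat above 1 K, still well below the LGM-derived values discussed above.

```python
import math

SIGMA = 5.670374419e-8       # Stefan-Boltzmann constant, W m^-2 K^-4
F2X = 5.35 * math.log(2.0)   # simplified 2xCO2 forcing expression, ~3.7 W/m^2


def planck_only_sensitivity(t_emission=255.0, f2x=F2X):
    """No-feedback (Planck-only) warming for 2xCO2, treating Earth as a
    blackbody radiating at effective emission temperature t_emission (K)."""
    lambda_0 = 4.0 * SIGMA * t_emission ** 3  # d(sigma*T^4)/dT, W m^-2 K^-1
    return f2x / lambda_0


print(f"Planck-only 2xCO2 warming: {planck_only_sensitivity():.2f} K")
```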

It bears noting that even if the SEA mean estimate were correct, it still lies well above the ever-more implausible estimates of those who wish the climate sensitivity were negligible. And that means that the implications for policy remain the same as they always were. Indeed, if one accepts a very liberal risk level of 50% for mean global warming of 2°C (the guiderail widely adopted) since the start of the industrial age, then under midrange IPCC climate sensitivity estimates we have around 30 years before the risk level is exceeded. Specifically, to reach that probability level, we can burn a total of about one trillion metric tonnes of carbon. That gives us about 24 years at current growth rates (about 3%/year). Since warming is proportional to cumulative carbon, if the climate sensitivity were really as low as Schmittner et al. estimate, then another 500 GtC would take us to the same risk level, some 11 years later.

References

- A. Schmittner, N.M. Urban, J.D. Shakun, N.M. Mahowald, P.U. Clark, P.J. Bartlein, A.C. Mix, and A. Rosell-Melé, "Climate Sensitivity Estimated from Temperature Reconstructions of the Last Glacial Maximum", Science, vol. 334, pp. 1385-1388, 2011. http://dx.doi.org/10.1126/science.1203513

- C. Lorius, J. Jouzel, D. Raynaud, J. Hansen, and H.L. Treut, "The ice-core record: climate sensitivity and future greenhouse warming", Nature, vol. 347, pp. 139-145, 1990. http://dx.doi.org/10.1038/347139a0

- P. Köhler, R. Bintanja, H. Fischer, F. Joos, R. Knutti, G. Lohmann, and V. Masson-Delmotte, "What caused Earth's temperature variations during the last 800,000 years? Data-based evidence on radiative forcing and constraints on climate sensitivity", Quaternary Science Reviews, vol. 29, pp. 129-145, 2010. http://dx.doi.org/10.1016/j.quascirev.2009.09.026

- T. Schneider von Deimling, H. Held, A. Ganopolski, and S. Rahmstorf, "Climate sensitivity estimated from ensemble simulations of glacial climate", Climate Dynamics, vol. 27, pp. 149-163, 2006. http://dx.doi.org/10.1007/s00382-006-0126-8

- D.J. Lunt, A.M. Haywood, G.A. Schmidt, U. Salzmann, P.J. Valdes, and H.J. Dowsett, "Earth system sensitivity inferred from Pliocene modelling and data", Nature Geoscience, vol. 3, pp. 60-64, 2010. http://dx.doi.org/10.1038/NGEO706

- M. Pagani, K. Caldeira, D. Archer, and J.C. Zachos, "An Ancient Carbon Mystery", Science, vol. 314, pp. 1556-1557, 2006. http://dx.doi.org/10.1126/science.1136110

- M. Crucifix, "Does the Last Glacial Maximum constrain climate sensitivity?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL027137

- J.C. Hargreaves, A. Abe-Ouchi, and J.D. Annan, "Linking glacial and future climates through an ensemble of GCM simulations", Climate of the Past, vol. 3, pp. 77-87, 2007. http://dx.doi.org/10.5194/cp-3-77-2007

- J.D. Annan, J.C. Hargreaves, R. Ohgaito, A. Abe-Ouchi, and S. Emori, "Efficiently Constraining Climate Sensitivity with Ensembles of Paleoclimate Simulations", SOLA, vol. 1, pp. 181-184, 2005. http://dx.doi.org/10.2151/sola.2005-047

- T. Schneider von Deimling, A. Ganopolski, H. Held, and S. Rahmstorf, "How cold was the Last Glacial Maximum?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL026484

One of the very best things about the present study is co-author Dr. Nathan Urban’s participation online explaining the result, the methods and the problems. If you have not read his excellent interview at Planet 3.0 now is the time. A combination of circumstances makes model-based sensitivity estimates of distant times and different climates hard to do, but at least we are getting a good education about it.

I’m confused on one point: you report the LGM cooling as -3.5 °C; Urban reports it as -3.3 °C. Yet the paper states that “The best-fitting model (ECS = 2.4 K) reproduces well the reconstructed global mean cooling of 2.2 K…” I assume the difference is that the global mean cooling cited in the paper includes the contribution of SST change, which, according to MARGO, is -1.9 ± 1.8 °C, whereas the -3.3 or -3.5 °C is for SAT. If so, why is the SAT the relevant point of comparison?

[Response: The 2.2 deg C cooling is the average only where there is data in the reconstruction. The 3.5 deg C cooling is the global mean SAT change in the ECS=2.35 model version. The latter is important for the climate sensitivity issue, while the former is key for the constraint. The model+statistical model is there to link the two. – gavin]

So IPCC has 2.0 – 4.5 and this latest study has 1.6 – 2.8. It shifts the range as much as it narrows it. In either case the range is somewhat wide.

Pardon my ignorance, but we’re now halfway through a doubling of CO2 since preindustrial times (the current 392 ppm divided by sqrt(2) is 277 ppm, right in the 260-280 ppm range given by Wikipedia for the level just before the industrial emissions began). We also have fairly reliable instrumental records of the temperature through most of this increase. If we abandon the models and simply extrapolate the trend, shouldn’t that by now, unless there is a huge or unknown temperature lag, give us a target with a similar range, and that range would more or less equal the estimated natural variation? I suppose an estimate based more on direct observations would cause less controversy than one based on models and pre instrument temperature reconstructions/proxies.
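The "halfway" arithmetic holds in logarithmic terms, which is what matters because CO2 forcing grows roughly with the logarithm of concentration. The Python sketch below uses the widely quoted simplified expression 5.35 ln(C/C0) W/m². As the replies point out, scaling observed warming by this fraction ignores non-CO2 forcings (notably aerosols) and yields something closer to a transient response than to the equilibrium sensitivity.

```python
import math


def doublings(c_now_ppm, c_ref_ppm):
    """Number of CO2 doublings between two concentrations (log base 2)."""
    return math.log2(c_now_ppm / c_ref_ppm)


def co2_forcing(c_now_ppm, c_ref_ppm):
    """Simplified logarithmic CO2 forcing in W/m^2: 5.35 * ln(C / C0)."""
    return 5.35 * math.log(c_now_ppm / c_ref_ppm)


for c_ref in (277, 280):
    print(f"392 vs {c_ref} ppm: {doublings(392, c_ref):.3f} doublings, "
          f"{co2_forcing(392, c_ref):.2f} W/m^2")
```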

Every decade that passes should narrow the range. How warm must this decade become to dismiss a value of 1.6 and how cold must this decade become to dismiss a value of 4.5?

David Lea,

You’re right: 2.2 K (the average over grid points where there is paleo-data) refers to the SST change over the ocean and SAT over land, and 3 K refers to the global SAT change. Also, they use a 5×5° grid for the oceans (or SSTs and Shakun et al 2011) and a 2×2° grid for the land, and because there are more data in the oceans, the global mean is probably too biased toward the ocean.

Steinar,

There are several problems with your approach (if it were so easy the problem would be trivial and would have been solved a long time ago). First, global temperature does not just respond to the CO2 forcing, but to the total forcing. We don’t know the total forcing that well, primarily because we don’t know the aerosol (direct or indirect) effects. If the negative aerosol forcing were very large, then the net forcing might only be a few tenths of a watt per square meter, and it would require a rather high sensitivity to explain the observed trend. And we don’t expect the aerosol forcing to continue to grow and indefinitely offset CO2 in the future.
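The aerosol point can be illustrated with a simple energy-budget estimate, S = F2x·ΔT/(ΔF − N), where N is heat still going into the ocean. The Python sketch below holds warming and greenhouse forcing fixed at illustrative placeholder values and varies only the aerosol forcing. With N left at zero the result behaves more like a transient than an equilibrium number, which is the second caveat raised in the next reply.

```python
def energy_budget_sensitivity(delta_t, total_forcing, heat_uptake=0.0, f2x=3.7):
    """Sensitivity (K per 2xCO2) implied by warming delta_t (K) under
    total_forcing (W/m^2), with heat_uptake (W/m^2) still entering the ocean."""
    net = total_forcing - heat_uptake
    if net <= 0:
        raise ValueError("forcing minus heat uptake must be positive")
    return f2x * delta_t / net


# Illustrative placeholders: same warming and greenhouse forcing, two aerosol cases.
DELTA_T = 0.8
GHG_FORCING = 2.6
for aerosol in (-0.5, -2.0):
    s = energy_budget_sensitivity(DELTA_T, GHG_FORCING + aerosol)
    print(f"aerosol forcing {aerosol:+.1f} W/m^2 -> S = {s:.1f} K")
```

The same observed warming implies a sensitivity several times larger when the aerosol offset is strong, which is why an uncertain aerosol forcing makes the observed trend a weak constraint.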

The other issue is that the value you cite is close to correct for the transient climate response. This is a very important number, and probably the most relevant number as we progress into the 21st century, but paleoclimate studies are primarily focused on the equilibrium response (i.e., after the oceans have warmed up sufficiently to allow the top-of-atmosphere radiative budget to be balanced). In other words, the current climate might be at 390 ppm CO2, but the amount of warming we’ve seen (or for that matter, the extent of many glaciers, sea level, etc.) has not equilibrated to a 390 ppm CO2 world.

William P wrote: “We are fiddling with scientific minutia while the world burns.”

Studying the climate is what climate scientists do. I hope they will continue doing so through hell and high water.

Having said that, I agree that climate scientists have already learned and communicated far more than enough to justify urgent action to end anthropogenic GHG emissions as quickly as possible — which numerous national and international scientific organizations, and many individual climate scientists, have explicitly called for in public statements.

However, it’s not up to the climate scientists to take those actions, or even to identify which specific actions are the most effective (or cost-effective) — or even to blog about such things. It’s up to the rest of us.

Re #52 Gavin – thanks. perfect!

I see that some very helpful comments were posted ahead of mine this morning. I think the air has cleared. I have another question. This paper gives a detailed account of the UVic model, albeit a ten year old version. Evidently the model finds or found then the usual sensitivity of 3.0 for the present time, and a lower sensitivity for the LGM. Does the current UVic model get 3.0 for the present?

[Response: Because of the way the radiation is written in the UVic model (which is typical for energy balance models of this sort), you can dial in whatever sensitivity parameter you want. In the Schmittner et al paper, they dial in a range of sensitivities, and then see which one looks most like the LGM. The numbers on the lines in the graph we reproduced in the post correspond to the respective 2xCO2 sensitivities for each of the settings of the dial. –raypierre]
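A minimal sketch of what "dialing in" a sensitivity means for a linear energy balance: choose the feedback parameter λ = F2x/ECS, and the equilibrium response to any forcing F is F/λ. This Python sketch is a simplified stand-in, not UVic's actual radiation code. Because λ is a constant in this setup, the response to a cooling forcing is exactly the mirror image of the response to an equal warming forcing, which is the symmetry issue raised in the post.

```python
F2X = 3.7  # approximate 2xCO2 forcing, W/m^2


def feedback_parameter(ecs):
    """'Dial' setting: net feedback parameter (W m^-2 K^-1) that yields the chosen ECS."""
    return F2X / ecs


def equilibrium_response(forcing, ecs):
    """Equilibrium temperature change of a linear energy balance: dT = F / lambda."""
    return forcing / feedback_parameter(ecs)


for ecs in (1.5, 2.3, 3.0, 4.5):
    warm = equilibrium_response(+F2X, ecs)
    cool = equilibrium_response(-F2X, ecs)
    print(f"dial ECS {ecs}: +2xCO2 forcing -> {warm:+.2f} K, "
          f"equal and opposite forcing -> {cool:+.2f} K")
```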

When Andreas’ paper was first written about in our local newspaper the Oregonian my first thought was “Whew! Glad that climate change scare is over!” since that’s how the paper’s lead more or less spun it.

I’d like to ask the scientists here what they feel the next IPCC Report will conclude about this.

I’d also like to ask them, “Are most things about climate change more dangerous or more benign than previously thought?”

If one thing is more benign but a dozen others more dangerous, then overall things are more dangerous than previously thought. Is this the case?

[Response: The first problem here is the term ‘previously thought’. By whom? And when did they think it? And have they been asked whether they’ve changed their minds? You are far better off being specific, i.e. ‘as concluded/estimated/discussed in the last IPCC report’ – that way people actually know what is being compared, and you are comparing a well-reviewed assessment rather than some bloke’s opinion. The second problem is the term ‘dangerous’ – this is very context-laden. What is dangerous for an Inuit is probably not the same as what is dangerous to someone living in Delhi, or New York. So again you are better off being more specific: are predicted changes of greater or lesser magnitude, more or less certain, etc., than in the last IPCC report?

The answer is that it will be a mixed bag. Some things are changing faster (Arctic ice, Greenland & Antarctic melt, wildfires and heat waves perhaps), some things are within what was expected (temperatures, rainfall), and a few things might not have changed as quickly as expected (though I can’t think of any). For the projections, the differences will mostly depend on different scenarios this time around (including some very optimistic ones) rather than any great improvement in physical understanding. I don’t anticipate any big change in the bottom line, though there will be a lot of progress in the details. – gavin]

Pete Dunkelberg (#58),

I am a coauthor on a manuscript in revision, Olson et al., JGR-Atmospheres (2011), which has a climate sensitivity analysis from modern (historical instrumental) data, using a similar UVic perturbed-physics ensemble approach. It finds a little under 3 K for ECS (best estimate).

[Response: Welcome back, Nathan. I’m glad my grouchiness up there hasn’t deterred you from continuing to contribute your insights to this discussion –raypierre]

Isotopious @43: It is generally agreed that orbital forcing [at high northern latitudes] is the cause of the changes between the three states of interglacial/interstade (mild glacial)/stade (full glacial). The exact details are unknown AFAIK, but one simple model is the trigger hypothesis of Paillard [for a highly readable use of this model I strongly recommend Archer/Ganopolski’s Movable Trigger]. Some of the big AOGCMs have attempted to reproduce the full behavior from the Eemian interglacial to the Holocene interglacial, but I’m not qualified to comment on the success of these endeavors.

Regarding feedbacks, both positive and negative, I recommend a goodly dose of what is called linear systems theory [or was 50 years ago when I studied it from David Cheng’s admirable textbook]. You will discover that the total system response depends upon both the input signal [forcing in climatological terminology] and the nature of the feedback; neither can be neglected in the general case.
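The linear-systems point can be sketched with the textbook closed-loop relation y = G·x/(1 − G·H), where positive H means amplifying feedback. In the usual climate analogy, G·x is the no-feedback warming and G·H is the combined feedback factor f, so ΔT = ΔT0/(1 − f). The Python sketch below is only this generic relation; its point is that the output scales with both the input and the feedback, and that f ≥ 1 has no stable linear equilibrium.

```python
def closed_loop_response(signal, forward_gain, feedback_fraction):
    """Output of a linear feedback loop: y = G*x / (1 - G*H),
    with positive H meaning amplifying feedback.

    Climate analogy: G*x is the no-feedback warming and G*H the combined
    feedback factor f, giving dT = dT0 / (1 - f).
    """
    loop_gain = forward_gain * feedback_fraction
    if loop_gain >= 1.0:
        raise ValueError("loop gain >= 1: the linear system has no stable equilibrium")
    return forward_gain * signal / (1.0 - loop_gain)


# Same input, different feedbacks...
for f in (-0.5, 0.0, 0.5, 0.7):
    print(f"f = {f:+.1f}: response = {closed_loop_response(1.0, 1.0, f):.2f}")
# ...and same feedback, doubled input.
print(f"doubled input, f = 0.5: response = {closed_loop_response(2.0, 1.0, 0.5):.2f}")
```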

Chris Colose @ 39: Thanks as always, but I am baffled by your “The larger thermal inertia of the ocean is important, but the higher sensitivity over land than in the ocean is also seen in equilibrium simulations when the ocean has had time to ‘catch up,’ so that argument doesn’t hold as equilibrium is approached.” [Emphasis added]. What argument? I don’t recall that I made one.

Further, perhaps you are under the misimpression that I actually understand all the fine points. Nothing I’ve written should be viewed as either (direct) criticism or defense of the paper undergoing this [extensive] review.

I, too, live in Oregon and apparently Brenne only read the headline. The article wasn’t as strong as it could be, but in no sense did it convey the notion that “the climate change scare is over”.

Tch, tch, Brenne.

And Brenne should know better …

Nathan Urban

I think I recollect a statement by Gavin a year or so (or less) stating that the most recent (at that time) GISS Model E results are around 2.75.

Not inconsistent with what you’re saying (though my memory may be totally whacked out here).

Glad to see that you’re assisting on a variety of approaches, and that you’re not wedded to the Schmittner results …

Thank you for the response. If there’s no immediate plans for an e-book version I’ll pick up a hard copy in the early new year sometime (after Christmas bills are paid and I have contracts lined up again–any time someone tells me scientists are in it for the money I want to bop them on the head with something heavy).

Nathan Urban, I will be very interested in your new paper and I want to thank you very much for your online explanations and patience. You and Andreas Schmittner have I think made a greater contribution this way than by the original paper! I have read your two interviews at Planet 3.0 and of course your contributions here along with Andreas, and his help and yours at Skeptical Science. First your paper created great interest and then you both clarified many questions for the online science community. Since sensitivity is such a key parameter in climate change, the whole online climate community will be sharper overall thanks to you and Andreas and your coauthors and the resulting discussions, and this sharpness will filter out to all the people we communicate with.

Thanks very much!

David Benson- sorry for the misunderstanding, I wasn’t trying to address an argument you made specifically, just my way of discussing the mechanism of land:ocean sensitivity. Perhaps it’s because I’m accustomed to most people just talking about the thermal inertia in this context.

Chris (#55),

So we don’t really know the temperature lag or how the temperature is supposed to behave until equilibrium, and the sensitivity will be difficult to confirm by direct observation (say, in our lifetime)?

You say that if the negative forcings that already affect temperature are large, the sensitivity will be high, and vice versa. That would mean that if those who say that the recent warming has everything to do with CO2 are right, that is, the masking/delay is low, then the sensitivity is also low, so there is less to worry about. And if those who say that the recent warming doesn’t say much about CO2 are right (disregarding whether they’re right for the right reasons), it might be because the masking/delay is high, and they’re in for a surprise. That sounds ironical.

Please direct me to any peer-reviewed paper which shows a credible sea level rise beyond the 59 cm maximum by 2100 that the IPCC gives. Preferably, post it on the E+E section of British Democracy Forum. Thanks.

P.S. your security is a complete pain this is the 5th time I have tried to post this!

#55, #68–

Which brings up a question: what do we know about the actual time to equilibrium? I’ve seen some comments here which basically add up to “a few centuries,” and a quick search of the RC site turned up nothing more. On the other hand, John Cook has this piece which, quoting Hansen 2005 as authority, says “several decades.” I suspect the difference to be not so much a disagreement as a product of definitional differences, especially since the RC comments were somewhat informal.

Is there a more detailed post on this? Some other source for which someone can offer a pointer?

[Response: Check out the transient vs. equilibrium climate discussion in our National Research Council report, “Climate Stabilization Targets” (free from the NAS web site. Just google the title and you’ll find it.). Some part of the response does equilibrate within decades but a lot of the rest (easily half) takes a thousand years to be realized. The exact proportion is model dependent, and depends on deep ocean circulations. –raypierre]

Kevin,

In terms of modelling I think there is quite a bit of variation in time to equilibrium. The clearest indicator of those differences is the ratio between transient and equilibrium sensitivity. A GCM with relatively low transient response but relatively high equilibrium sensitivity probably has large thermal inertia, therefore will take longer to equilibrate, and vice versa.

I recall reading in one paper on the CCSM model (I can’t recall which paper) that the time taken to achieve near-complete equilibration was 3000 years after a pulse perturbation. However, the approach to equilibrium is non-linear in time, so it could be that most of the distance to equilibration was made up in the first couple of centuries.

I’ve read some snippets which suggest Hansen believes thermal inertia is too large in most GCMs, which would mean he thinks time to reach equilibrium will be fairly short (?)

I don’t know if there have been any observation-based studies on this.

Steinar-

Both hindcasts and projections are strongly influenced by climate sensitivity and also by vertical ocean diffusivity. Because of the uncertainty in ocean heat uptake and surface-to-depth transport of excess heat, there will obviously be uncertainty in the transient evolution of surface warming. Moreover, the timeframe over which the planet comes to equilibrium increases with higher climate sensitivity. In terms of global temperature change there are also several timescales of consideration: there is a fast component (roughly proportional to the instantaneous radiative forcing), and a slow component that reflects the surface manifestation of changes in the deep ocean. The long timescales (even ignoring the “Earth system” responses like ice sheets and vegetation) are not easy to get at in the instrumental record or by studying “abrupt forcing” events like volcanic eruptions.
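The fast and slow components, together with raypierre’s remark that part of the response equilibrates within decades while easily half takes about a thousand years, can be sketched as a sum of two exponentials. The 50/50 split and the 5-year and 1000-year e-folding times are assumptions for illustration, not values from any particular model.

```python
import math

FAST_FRACTION = 0.5   # assumed share of the equilibrium response that is fast
TAU_FAST = 5.0        # assumed fast e-folding time, years
TAU_SLOW = 1000.0     # assumed slow (deep-ocean) e-folding time, years

def fraction_realized(t_years, a=FAST_FRACTION, tau_f=TAU_FAST, tau_s=TAU_SLOW):
    """Fraction of the equilibrium warming realized t_years after a step forcing."""
    fast = a * (1.0 - math.exp(-t_years / tau_f))
    slow = (1.0 - a) * (1.0 - math.exp(-t_years / tau_s))
    return fast + slow

for t in (10, 50, 100, 500, 1000, 3000):
    print(f"{t:5d} yr: {fraction_realized(t):.2f} of equilibrium warming")
```

With these assumed numbers a little under half of the equilibrium warming appears within a decade, but only about 80% after a thousand years, which is one reason a single "time to equilibrium" is hard to quote.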

Similarly, many studies that attempt to examine the co-variability between Earth’s energy budget and temperature (such as those discussed in many of the pieces here at RC concerning the Spencer and Lindzen literature) are only as good as the assumptions made about the base state of the atmosphere relative to which changes are measured, and about the “forcing” that is supposedly driving the changes (which is often just something like ENSO, and is irrelevant to the radiatively induced changes that will be important for the future); they are also limited by short and discontinuous data records. This is why people are motivated to look at ancient climates, where there are much larger temperature changes and forcing signals that we can hopefully relate to each other to interrogate the sensitivity problem in a more robust fashion.

Your point about “those who say that the recent warming has everything to do with CO2 are right” is irrelevant because they are wrong, but I don’t know anyone who actually thinks that. Despite the uncertainty in aerosol forcing, there is a lot of confidence that it is negative, and thus has at least helped offset some global warming to date. This figure gives a sense of the wide uncertainty distribution in the total anthropogenic forcing relative to just the GHG forcing (which also includes methane, N2O, etc) which is a prime reason the instrumental record doesn’t inherently give good constraints on sensitivity.

The definitions of climate sensitivity always talk about a “doubling of the CO2 level.” But they don’t say doubling from what starting point.

Without this information, it is hard to make much sense out of the discussion.

[Response: This is because it doesn’t much matter. The radiative forcing from 180 to 360 ppm, or from 280 to 560 ppm, or from 380 to 760 ppm are all approximately the same (~4 W/m2). This is because forcing from CO2 is logarithmic in the concentration (F=5.35*log_e(CO2/CO2_orig) ), and the ‘doubling’ metric is preferred accordingly. -gavin]
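The claim that the starting concentration barely matters follows directly from the logarithmic expression quoted in the response. A short sketch evaluating F = 5.35 ln(CO2/CO2_orig) for the three doublings listed (the function name is just for illustration):

```python
import math

def co2_forcing(c_ppm, c0_ppm):
    """Radiative forcing in W/m^2 for a CO2 change from c0_ppm to c_ppm: F = 5.35 ln(c/c0)."""
    return 5.35 * math.log(c_ppm / c0_ppm)

for start, end in [(180, 360), (280, 560), (380, 760)]:
    print(f"{start} -> {end} ppm: {co2_forcing(end, start):.2f} W/m^2")
```

Every doubling gives the same value (about 3.7 W/m2, the "~4 W/m2" above), and a halving gives the same magnitude with the opposite sign.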

It’s very revealing how some journalists reported the findings of this study on their blogs. Andy Revkin’s Dot Earth and Eric Berger of the Houston Chronicle both delivered inaccurate posts, and they both cited each other’s writings as supporting their own beliefs.

This shows how important this blog is where climate scientists can openly discuss and debate their work. Thanks for taking the time to do this.

I hope this is not off topic, but where could I find some good info on the updated Lindzen & Choi 2011 paper? All the links Google gives are just to “the usual suspects” like Watts, Curry, Spencer.

This paper is about sensitivity also so maybe it’s pertinent.

Did they correct the problems in the 2009 paper for example?

KTB @ 75, this will get you started:

http://www.skepticalscience.com/Richard_Lindzen_art.htm

Chris Colose (#72) and especially Paul S (#71)–

Thanks very much. It sounds as if there are both practical and definitional issues standing in the way of a clear and simple statement at present–so no-one is going to flatly say “The time to equilibrium is ‘x’.” Too bad, though not really surprising–most of this stuff is not so simple, after all.

But at least I’ve garnered a little more detail about the problem–a bit like ‘being confused at a higher level,’ I suppose.

@William Jockusch: The starting point is typically the preindustrial value of about 280 ppm.

@Gavin: I think the difference is not so much in the forcing itself but in the albedo feedback. The snow and sea-ice feedbacks in particular may differ, depending on their extent.

KTB, trying Google Scholar for that paper:

http://scholar.google.com/scholar?q=lindzen++choi+2011

Cited by 10

finds http://www.mdpi.com/2072-4292/3/9/2051

Remote Sens. 2011, 3(9), 2051-2056; doi:10.3390/rs3092051

Commentary–Issues in Establishing Climate Sensitivity in Recent Studies

Trenberth, Fasullo and Abraham

“… many of the problems in LC09 [6] have been perpetuated, and Dessler [10] has pointed out similar issues with two more recent such attempts [7,8]. Here we briefly summarize more generally some of the pitfalls and issues involved in developing observational constraints on climate feedbacks. […]”

Gavin,

Thanks for this. I had earlier asked you to give us your take on this and similar papers (see below). One thing in SEA I don’t think you discussed was their graph of “climate sensitivity” probability separated into land and ocean. Curiously the land sensitivity centered right about the IPCC value of 3.0°C! They comment that this is probably due to poor land-sea heat fluxes—another possible problem with this work.

[Response: Note please that group posts like this one are written collectively by most or all of the RC participants, not by any one of us. As for your actual question, yes that should have been emphasized more in the post itself, since it’s a key point. If you look through the comments you’ll see some discussion of the point, and there’s a very good discussion of the importance of the mismatch in the interview with Urban on Planet 3. But to reiterate: the difference between climate sensitivity estimates based on land vs. ocean data indicates that something is seriously wrong, either with the model, or the data, or some of both. In my view, that mismatch alone is reason not to put too much credence in Schmittner’s claim to have trimmed off the upper end of the IPCC climate sensitivity tail. –raypierre]

The LGM is the wrong study period. We are facing the extinction of glaciers and multi-year sea ice in the Arctic. Although there is great data for the LGM, we have live observations in the Arctic, which offer far more facts. Better that sensitivity be tested today, when the evidence from disappearing ice is compelling, especially when some, like Pielke, claim that global warming has stopped since 2002, when in fact it never stops, despite short-time-span GT trends. I have proof of even greater melting since 2006 http://eh2r.blogspot.com/

[Response: I wouldn’t say it’s the wrong study period. It has a lot going for it, in that it is a big event, we know the CO2 well and it dropped significantly, and the major forcings are well known (dust being the prime exception). The trick is to tease out the correct sort of information from it, and that requires a suitable model. There’s no way to do it from data alone, because of the asymmetry problem. Except as a “proof of concept,” I myself do not think that the UVic model is a remotely suitable model for this sort of study. –raypierre]

Chris (#72),

You say that you don’t know anyone who thinks “that the recent warming has everything to do with CO2”. That is, it’s too early to see any clear effects of CO2 emissions in global temperature, as others have also pointed out. Still, a great portion of the “summary for policymakers” deals with the recent temperature rise, and it concludes that it’s “likely” that there is a human contribution to the observed trend (by which I assume CO2 emissions in particular are meant, all the more so considering the negative forcings mentioned). While “everything to do with CO2” was a figure of speech meant to push the point, the IPCC certainly seems to lean heavily in that direction. Are they jumping to conclusions here?

Which takes me to the next thing which raises a question in light of the delay that we’ve been discussing. The summary gives various estimated temperature increases for the 21st century. A doubling of CO2 from 300 ppm in 1880 to 600 ppm in 2100 has a best estimate of 1.8 degrees (scenario B1) or about 2.3 degrees warming since 1880, which happens to be precisely the sensitivity figure given by Schmittner et al. The question that arises is how IPCC has conjured this figure, whose range (1.8 – 3.6) is even narrower than the sensitivity estimates, when research seems to suggest that we can only begin to guess at the temperatures that we’ll have when most of the distance to equilibrium is made up centuries later? The 2100 temperature is a key measure for politicians and activists, so this seems important.

[Response: You need to study the IPCC report and its references more carefully. From what you write, it’s not even clear to me that you know that there are general circulation models involved (not “conjuring”). And further, in stating the year 2100 values, you are mixing up transient with equilibrium climate sensitivity. I don’t really see where you’re going with this. Please clarify. –raypierre]

#61

David Benson, it is generally agreed that the system is highly sensitive to changes in obliquity. This is interesting, since the amount of incoming radiation from the sun is unchanged. What changes is where the radiation goes. Instead of being more evenly distributed from the equator to the poles, the sunlight becomes more concentrated in the tropics. So it’s likely to be both the tropics and the poles regarding ‘the cause of the changes’.

So again it comes back to the proxy data, is there lots of cooling, or just lots of ice, or both?

Anyway, on the topic of feedback, you can’t have it both ways. If you’re talking about water vapour feedback, then it is dependent on temperature change. As I have demonstrated, positive feedback works fine up to a point…until you want it to reverse. After the Eemian peak, there was quite rapid cooling. There is no change in INSOLATION with regards to tilt, all the GHGs together don’t add up to a huge forcing, and it’s very cloudy in the polar regions, so you need a system which is very sensitive to the small amount of forcing for this to work. Unfortunately for this beautiful theory, there is an ugly fact: the feedback loop is temperature dependent. Therefore the resulting temperature changes quickly overpower whatever started them. Gavin’s model, which says non-condensable GHGs ‘hold up’ the water vapour, lacks proof. It is the TEMPERATURE which holds up the water vapour. You are arguing for a positive feedback loop, but only when it suits.

But again, you can ‘try’ and fudge your theory by saying ‘oh, it wasn’t that cold, so it doesn’t need to be that sensitive’ or ‘it’s just the polar regions, don’t worry about the tropics’, etc..

Or you could accept the fact that interglacial cycles are, well..cycles, and like most cycles…could be negative.

[Response: The concept of water vapor feedback (which goes back to Arrhenius, and was fully consolidated in the 1960’s by Manabe and co-workers) has always stated that the water vapor was determined by temperature. Anything that “holds up” the temperature, whether it be CO2 or changes in solar brightness, allows the atmosphere to hold more water. Now, for ice ages where the CO2 plays a crucial role is as a globalizer of the temperature response. Low obliquity makes it easier to accumulate ice at the poles, and the precession effects alternate between one pole and another as to where ice most easily persists. However, without the CO2 feedback the climate change would be much more confined to the Northern Hemisphere extratropics, where the great ice sheets wax and wane. This is why the LGM response in the tropics and Southern midlatitudes is such a crucial test of CO2 response. One gets relatively little Milankovic signal there without an effect from CO2. –raypierre]

Rate of change question — would methane-using microorganisms be likely to have handled most methane as it was released from warming at geological rates of change, but not increase fast enough to metabolize the amounts of methane described at current rate of change?

http://www.nature.com/nature/journal/v480/n7375/full/480032a.html?WT.ec_id=NATURE-20111201

(paywalled)

Climate change: High risk of permafrost thaw

Edward A. G. Schuur & Benjamin Abbott

Nature 480, 32–33 (01 December 2011) doi:10.1038/480032a

Published online 30 November 2011

Northern soils will release huge amounts of carbon in a warmer world, say Edward A. G. Schuur, Benjamin Abbott and the Permafrost Carbon Network.

News story: http://www.msnbc.msn.com/id/45494959/ns/us_news-environment/

—excerpt follows—

Heat-trapping gases under the frozen Arctic ground may be a bigger factor in global warming than the cutting down of forests, and a scenario that climate scientists hadn’t quite accounted for, according to a group of permafrost experts. The gases won’t contribute as much as pollution from power plants, cars, trucks and planes, though.

[Image: Researcher Katey Walter Anthony ignites trapped methane from under the ice in a pond on the University of Alaska, Fairbanks campus in 2009. Photo: Todd Paris / University of Alaska, Fairbanks]

The permafrost scientists predict that over the next three decades a total of about 45 billion metric tons of carbon from methane and carbon dioxide will seep into the atmosphere when permafrost thaws during summers. That’s about the same amount of heat-trapping gas the world spews during five years of burning coal, gas and other fossil fuels.

And the picture is even more alarming for the end of the century. The scientists calculate that about 300 billion metric tons of carbon will belch from the thawing Earth from now until 2100.

Adding in that gas means that warming would happen “20 to 30 percent faster than from fossil fuel emissions alone,” said Edward Schuur of the University of Florida. “You are significantly speeding things up by releasing this carbon.”

—-end excerpt—-

Steinar,

Like raypierre, I can’t really interpret what you are saying.

You need to distinguish more carefully between an end date (like “by 2100”) and an end CO2 target (like a “doubling of CO2”). The impact on climate when thinking “by 2100” depends not only on the sensitivity, but also on socio-economic pathways and carbon cycle uncertainties, which determine what the CO2 concentration will be. Furthermore, you also need to distinguish the equilibrium response from the response at any point in time when following a particular scenario.

Concerning your first paragraph, please have a harder look at the graph I linked to in my last comment to you, which is from IPCC AR4. This shows the best understanding (at the time of the AR4) of the relative contribution of different “forcings” to global temperature change since year 1750. Clearly, there are many positive forcings (warming influences) and negative forcings (cooling influences)– the total includes methane, N2O, black carbon, small changes in sunlight, aerosols, etc. You can also see the relative uncertainty between forcings (e.g., it is much larger for aerosols than for GHGs).

The reason people “lean heavily” on CO2 has to do somewhat with the fact that it is the largest positive forcing over this time interval, but also because we’d expect it to grow even more strongly relative to other contributions in the future. This is due to the fact that it has the strongest potential to warm the globe in the long-run based on its long lifetime in the atmosphere (ranging from decades to centuries, and a tail end that extends to millennia, and with many climate impacts occurring over these slow timescales). In contrast, anthropogenic aerosols only affect climate insofar as they are emitted persistently. Moreover, any internal variability in the system will be superimposed on this even stronger growing positive trend, shifting the base climate into a state not seen for at least a few million years.

“… without the CO2 feedback the climate change would be much more confined to the Northern Hemisphere extratropics, where the great ice sheets wax and wane. This is why the LGM response in the tropics and Southern midlatitudes is such a crucial test of CO2 response. One gets relatively little Milankovic signal there without an effect from CO2. –raypierre”

That’s very interesting – I had assumed that the circulation of the atmosphere and oceans would be the ‘globaliser of temperature response’. If it’s actually CO2, does that mean the absorption of CO2 (when the climate is cooling) and the emission of CO2 (when the climate is warming) is mainly going on at high latitudes and therefore transferring the temperature response to lower latitudes, rather than being a global process in response to global temperature change?

[Response: It would be a very reasonable assumption to think the atmosphere is a globalizer, but Manabe and Broccoli showed it ain’t so, back in the 80’s. It’s surprisingly hard to get the cooling past the equator, and it doesn’t even penetrate all that well into the tropics. The ocean is somewhat better at connecting the hemispheres, but not all that great either. The importance of the details of atmospheric heat transport into the tropics, which David Battisti has shown is dependent on some rather intricate fluid dynamical behavior, is another reason that the UVic model (which represents all that by diffusion) is completely unsuitable for the uses it was put to in this study (except, as I said, as a proof of concept). –raypierre]

Isotopious @83 — A positive feedback amplifies the signal (forcing). This is true whether the signal is increasing or decreasing. So at the end of the Eemian interglacial the signal (orbital forcing) decreased and the positive feedbacks of water vapor + CO2 enhanced the decline.
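The point that a positive feedback amplifies a decreasing signal just as it amplifies an increasing one can be seen in the standard linear feedback relation ΔT = λ0 ΔF / (1 − f). In this sketch λ0 (the no-feedback response) and f (the combined feedback factor) are assumed illustrative values, not estimates from the paper or the thread.

```python
LAMBDA_0 = 0.3         # assumed no-feedback response, K per W/m^2
FEEDBACK_FACTOR = 0.5  # assumed combined feedback factor, must be below 1

def equilibrium_response(delta_f, lambda_0=LAMBDA_0, f=FEEDBACK_FACTOR):
    """Equilibrium temperature change (K) for forcing delta_f (W/m^2) with linear feedback f."""
    if not 0.0 <= f < 1.0:
        raise ValueError("this linear sketch assumes 0 <= f < 1")
    return lambda_0 * delta_f / (1.0 - f)

for delta_f in (+1.0, -1.0):
    no_feedback = LAMBDA_0 * delta_f
    print(f"forcing {delta_f:+.1f} W/m^2: no feedback {no_feedback:+.2f} K, "
          f"with feedback {equilibrium_response(delta_f):+.2f} K")
```

The gain 1/(1 − f) is the same for both signs, so a decline in forcing is amplified by exactly the same factor as a rise.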

Raypierre, Thanks.

On temperature dependence: will water vapour feedback, once in place, be self-sustaining (i.e., will it require a greater forcing to reverse it than the initial forcing it started with)?

Lower obliquity should result in more water vapour in the tropics if the water vapour feedback holds true. What do the proxies show?

Are you saying the tropics are insensitive to INSOLATION, but sensitive to CO2?

Or maybe ‘all the action takes place in the extra tropics’…..?

[Response: Please go read some books. I can’t give you a complete climate physics education in the comments. Dave Archer’s “Understanding the Forecast” book and lectures make a good place to start. No, water vapor feedback, once in place, is emphatically not self-sustaining. Take out the CO2, the atmosphere cools down, water goes, it cools down more, and BINGO you are in a Snowball. My colleague Aiko Voigt was the first to show this in print in a clear fashion, though this is something a lot of us knew from classroom arguments beforehand. I can’t make much sense of the rest of your comments, but no, I am not saying that the tropics are insensitive to insolation. You’d better learn something about Milankovic forcing, and what the ocean does to average the precessional cycle out, and how it depends on latitude. –raypierre]
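That water vapor feedback is not self-sustaining can be illustrated in a deliberately linear toy: pass a temperature anomaly around the feedback loop repeatedly, where each pass adds the direct response to the non-condensing (CO2) forcing plus a water vapor contribution that depends only on the previous temperature. The no-feedback response and feedback factor are assumed values. The sketch only shows that the warming, water vapor part included, decays away once the non-condensing forcing is removed instead of holding itself up; the further slide into a Snowball involves nonlinear ice-albedo feedback that it does not model.

```python
LAMBDA_0 = 0.3          # assumed no-feedback response, K per W/m^2
FEEDBACK_FACTOR = 0.5   # assumed water vapor feedback factor (linear, below 1)

def iterate_feedback(forcing, t_start, passes=50):
    """Return the temperature anomaly (K) after repeated passes around the feedback loop."""
    t = t_start
    for _ in range(passes):
        # direct response to the non-condensing forcing + water vapor response to the last temperature
        t = LAMBDA_0 * forcing + FEEDBACK_FACTOR * t
    return t

with_co2 = iterate_feedback(forcing=4.0, t_start=0.0)          # CO2 forcing applied
co2_removed = iterate_feedback(forcing=0.0, t_start=with_co2)  # forcing taken away again
print(f"with forcing: {with_co2:.3f} K; after removing it: {co2_removed:.6f} K")
```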

Icarus- In this context it doesn’t really matter where the CO2 is coming from (since it becomes well-mixed in the air over less than a few years), though the most plausible hypotheses usually require the Southern Ocean to be involved, and the associated feedbacks of ocean biogeochemistry and its interaction with the ocean’s physical circulation.

CO2 provides only a minor effect in the obliquity and precession timescale band, but over 30% of the forcing in the 100 kyr band, so it is a key forcing agent that allows us to explain the magnitude of glacial-interglacial temperature variations. If only Milankovitch mattered the ice ages would be more localized than global.

#87

David Benson. That would only work if the forcing was greater (and therefore dominant) than the resulting temperature change (which it most certainly isn’t).

[Response: This comment makes no sense at all. Forcing is in watts per square meter. Temperature is, well, temperature, like in Kelvins. Different units. Makes no sense to say one is bigger than the other. Think first, write second. –raypierre]

Re: Raypierre’s response to Wayne Davidson. I thought there was still a long way to go in cataloging the ocean temperatures of the LGM: there are problems with ferreting out overlapping signals from different temperatures in unmixed layers and with the geographic distribution of sediment core data, and then with putting it all together to describe heat transfer by ocean currents. Otherwise, why the mismatch seen in this paper between the land and ocean LGM temperature deltas and carbon dioxide sensitivities? I thought the big deal with this paper was not necessarily its new estimate of sensitivity, but rather the methods used to tease out LGM ocean temperature estimates?

I’m lost. Can you point to a new book on paleoceanography/paleoclimatology that has up-to-date information on what the ongoing sediment drilling program is telling us? Or is it all still in the journals? I’m coming at this as a biologist who has necessarily had to learn outside of his comfort zone.

Thank-you.

[Response: I don’t understand this question. Where did I say we know the LGM temperatures well? We know them a whole lot better than in CLIMAP days, but there are still important mismatches between the various proxies. But there is enough data to say that the tropics and SH did cool much more than they would without the effect of CO2. Something even the UVic model can more or less do is to demonstrate that you don’t get anything remotely resembling the LGM in the tropics and SH unless you include the CO2 effect — though I suspect that the tropical results are very much contaminated by inadequacies in the diffusive representation of heat and moisture transport. –raypierre]

Isotopious @90 — I assure you that what I wrote follows directly from what is called Linear Systems Theory. I recommend studying before commenting.

Thanks for the discussion of time to equilibrium. It makes sensitivity all the more difficult to define because you have to say “When” and it introduces more opportunities for denialists.

Raypierre,

you said yourself “Anything that “holds up” the temperature, whether it be CO2 or changes in solar brightness, allows the atmosphere to hold more water….”

Does that “Anything” include water vapour?

If not, why not.

[Response: Yes, that includes water vapor too. But that does not in any way conflict with my statement regarding the key importance of CO2. The warming due to water vapor helps the air hold water, but in the Earth’s orbit, it is not actually sufficient to keep the air warm enough to keep the water it already has — so you go into the death spiral, with a bit of cooling, less water, then more cooling, and so on to Snowball. The difference with CO2 (in Earth’s orbit) is that it doesn’t condense as the air gets a bit cooler. Get it? Take Benson’s advice, and learn a bit about feedbacks in general systems theory. Your ignorance transcends merely climate science, and extends really to the basics. –raypierre]

Taking the precession period as 26,000 years (26 ky), note that about 2 kya the thermal equator was at the southern extreme. This means that it was at the northern extreme around 15 kya, the (end) time of the LGM. Compare that to the extent of the tropical rain forest in the Americas at that time (map previously posted) and note that the Amazon basin was largely savanna, warm but dry. That this seems to be controlled by precession is indicated by the fact that around 41 kya the Amazon basin was also savanna; but also see the presentation on this matter 2–3 years ago at the AGU fall meeting.

The relevance here is that precession appears to play some role in the climate state during LGM while the current value of precession is near the opposite extreme. I suppose this has some role in the difficulty in using LGM to determine Charney climate sensitivity with accuracy.
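The dating argument in this comment is simple arithmetic: opposite extremes of a cycle are half a period apart. A sketch using the 26 ky period and the ~2 kya southern extreme stated in the comment (the climatic precession cycle is usually quoted at roughly 19 to 23 ky, so these dates should be read as approximate):

```python
PRECESSION_PERIOD_KY = 26.0  # period assumed in the comment, thousand years
SOUTHERN_EXTREME_KYA = 2.0   # thermal equator at its southern extreme, per the comment

def precession_extremes(n_cycles):
    """List (kya, extreme) pairs going back n_cycles full periods from the southern extreme."""
    half_period = PRECESSION_PERIOD_KY / 2.0
    extremes = []
    for k in range(2 * n_cycles + 1):
        kya = SOUTHERN_EXTREME_KYA + k * half_period
        extremes.append((kya, "south" if k % 2 == 0 else "north"))
    return extremes

for kya, side in precession_extremes(2):
    print(f"{kya:5.1f} kya: thermal equator at {side}ern extreme")
```

Under these assumptions both 15 kya and 41 kya fall at the northern extreme, which is the coincidence the comment relies on.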

[Response: Again, this is why you need a model to infer climate sensitivity from the LGM. To do that, you need to take into account all the forcings that are different between the LGM and the present, not just the CO2. Milankovic forcing is one of those things. Of course, one needs a model that responds accurately to those forcings, otherwise it will lead to incorrect attributions of the part of the climate change due to CO2. In some sense, though, almost any known forcing is useful in inferring climate sensitivity, since the same feedbacks that determine the response to Milankovic also determine response to CO2, though the relative weightings of the different feedbacks are likely to be different. That is why the post emphasizes that it is unclear that the model that does best at reproducing the LGM also does best at forecasting the future. There was more ice around in the LGM and that changes the weighting of ice-albedo feedback, but also the operation of the cloud feedback since clouds over ice have different effects than clouds over water. Just one of many caveats. –raypierre]

Regarding raypierre’s response: I agree about the problems with the simple energy balance model and its lack of spatial representation, but it’s tough to fault the authors for the lack of cloud detail, since the science is not up to the task of solving that problem (and doing so would be outside the scope of the paper; very few paleoclimate papers that tackle the sensitivity issue do much with clouds).

[Response: I can’t agree with this assessment. General circulation models do simulate clouds, and the clouds they simulate are a big part of the nature of their response to both doubled CO2 and to LGM forcing. However, because of the various unknowns in cloud processes, the models give quite different climate sensitivities, accounting for much of the IPCC spread. So, the key thing in evaluating climate sensitivity is to use the LGM as a test of how well the models are doing clouds, and then see what happens in the same model when you project to the future. You cannot do that in a model which doesn’t have the dynamics needed to simulate changes in clouds. –raypierre]

The context of what I wrote is the discussion above, which talks about how observed temperatures can’t yet say much about the sensitivity, perhaps not yet for many decades. I’m trying to reconcile that with the summary for policymakers: the likelihood (“likely”) that emissions are behind the observed temperature rise and the estimated 21st century temperature rise. Yes, the discussion above is about equilibrium climate sensitivity and by picking 2100 (or 2011) we’ll be measuring the transient climate sensitivity. I’m aware of the difference, that equilibrium requires a very long time, but I do assume that these sensitivities are connected, in particular that the transient sensitivity can be used as a lower limit for the equilibrium sensitivity.

My original question was about when we can use observations to say something about the sensitivity (even if only its lower limit). The IPCC seems to say that we already can, despite the complexity and uncertainties of the different forcings. In that case observations in the next few decades could narrow the sensitivity range. But the figure that was pointed out seems to suggest that the uncertainties of the negative forcings may postpone warming from emissions significantly. Also a bit puzzling is the IPCC statement “values substantially higher than 4.5 °C cannot be excluded, but agreement of models with observations is not as good for those values”. Since equilibrium could take a very long time, I would think that the observations (the instrumental record) would say very little about the upper limit.

Thanks for the criticisms, raypierre, I will try again:

Benson: “A positive feedback amplifies the signal (forcing). This is true whether the signal is increasing or decreasing. So at the end of the Eemian interglacial the signal (orbital forcing) decreased and the positive feedbacks of water vapor + CO2 enhanced the decline.”

If a positive feedback amplifies a signal, and the resulting change attributable to water vapour feedback is greater than the initial signal, then any further perturbations will be competing with the change attributable to water vapor. Both cause temperature change so both will play a role in any future water vapour feedback process that is dependent on temperature.

That one component is non-condensing, so may play a stabilising role, definitely strengthens your argument and is definitely good science. I’m just trying to challenge your good science, that’s all.

I don’t see any answer to RichardC (#40). So here’s an attempt:

When temperatures change because of an orbital forcing, you’ve got a strong CO2 feedback because the CO2 in the atmosphere was in equilibrium with the CO2 in the oceans before temperatures changed.

In the case of warming caused by a disproportionate increase in atmospheric CO2 (compared with oceanic CO2), an increase in temperatures only slows down the rate at which CO2 is absorbed by the oceans. This effect is probably significant but it’s slow-acting and the CO2 self-feedback would only be fully realized when very little of the original CO2 pulse was left in the atmosphere.

I imagine the CO2 feedback would be more important as a feedback to any albedo changes brought by warming. If a spike in temperatures due to CO2 causes a non-reversible change in ice cover, you have a situation more analogous to a deglaciation because you now have a forcing that has a strong effect on the equilibrium amount of CO2 in the atmosphere.

There’s so much CO2 in the oceans that, unless some kind of tipping point is triggered, atmospheric emissions shouldn’t affect that (very) long-term equilibrium anywhere near as much as they affect the atmosphere in the short run.

But IANAC so I could be way off…

Isotopious, I think you are thinking of this too much in the abstract. These are all physical processes. Think how the system responds physically, and it might help.

Example: CO2 increases. This takes a big bite out of the outgoing IR spectrum and throws the system out of equilibrium. Temperature rises. This shifts the IR spectrum just slightly and increases the IR emitted. It also increases the water vapor in the air slightly–which takes another bite out of the IR spectrum. You have to look at how the added ghgs–both CO2 and H2O respond to the altered IR spectrum and how all of the feedbacks and temperature evolve as the system moves again toward equilibrium. Does that help at all?
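The physical sequence described here can be sketched as a zero-dimensional energy balance, C dT/dt = F − (λ_Planck − λ_wv) T, stepped forward in time: the forcing F throws the system out of balance, warming increases outgoing IR (λ_Planck), and the extra water vapor takes some of that back (λ_wv). All parameter values are rough assumptions for illustration, and the model ignores lapse rate, cloud and albedo feedbacks and the deep ocean.

```python
import math

C = 8.0                      # assumed heat capacity, W yr m^-2 K^-1 (roughly a 60 m ocean mixed layer)
F_2X = 5.35 * math.log(2.0)  # W/m^2, forcing for doubled CO2
LAMBDA_PLANCK = 3.2          # assumed Planck response, W/m^2 per K
LAMBDA_WV = 1.6              # assumed water vapor feedback, W/m^2 per K

def simulate(forcing, lambda_net, years=200.0, dt=0.1):
    """Forward-Euler integration of C dT/dt = forcing - lambda_net * T, starting from T = 0."""
    temp = 0.0
    for _ in range(round(years / dt)):
        imbalance = forcing - lambda_net * temp  # W/m^2 still being absorbed by the system
        temp += dt * imbalance / C
    return temp

planck_only = simulate(F_2X, LAMBDA_PLANCK)
with_wv = simulate(F_2X, LAMBDA_PLANCK - LAMBDA_WV)
print(f"Planck only: {planck_only:.2f} K (equilibrium {F_2X / LAMBDA_PLANCK:.2f} K)")
print(f"with water vapor: {with_wv:.2f} K (equilibrium {F_2X / (LAMBDA_PLANCK - LAMBDA_WV):.2f} K)")
```

Both runs settle to F/λ_net. With these numbers, including water vapor doubles the equilibrium warming and also doubles the e-folding time C/λ_net (from 2.5 to 5 years), the same link between higher sensitivity and slower equilibration that Chris mentions above.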