This week, PNAS published our paper Increase of Extreme Events in a Warming World, which analyses how many new record events you expect to see in a time series with a trend. It does that with analytical solutions for linear trends and Monte Carlo simulations for nonlinear trends.

A key result is that the number of record-breaking events increases with the ratio of trend to variability. Large variability reduces the number of new records – which is why the satellite series of global mean temperature have fewer expected records than the surface data, despite showing practically the same global warming trend: they have more short-term variability.

Another application shown in our paper is to the series of July temperatures in Moscow. We conclude that the 2010 Moscow heat record is, with 80% probability, due to the long-term climatic warming trend.

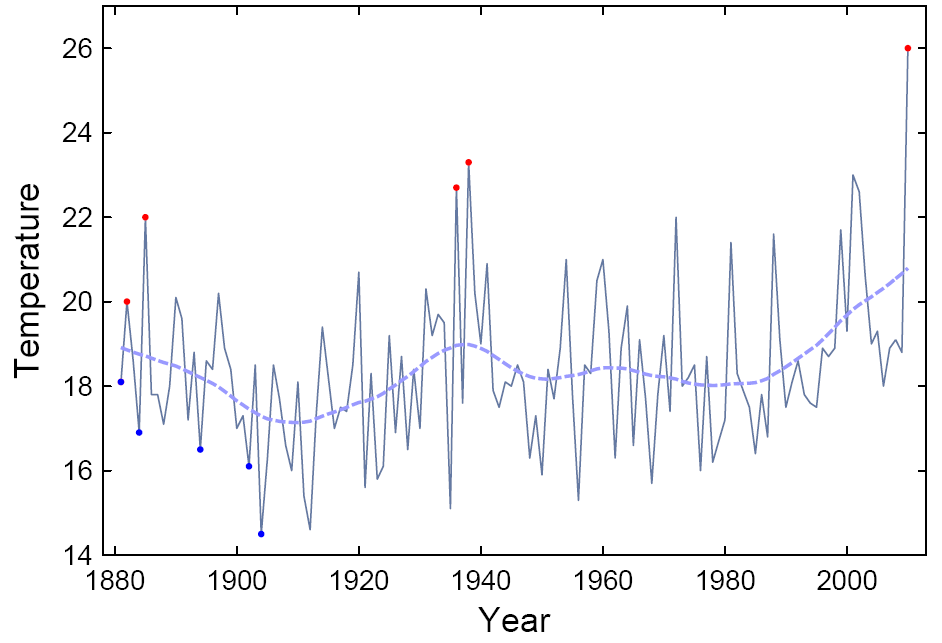

Figure 1: Moscow July temperatures 1880-2010. New records (hottest or coldest months until then) are marked with red and blue dots. Dashed is a non-linear trend line.

With this conclusion we contradict an earlier paper by Dole et al. (2011), who put the Moscow heat record down to natural variability (see their press release). Here we would like to briefly explain where this difference in conclusion comes from, since we did not have space to cover this in our paper.

The main reason Dole et al. conclude that climatic warming played no role in the Moscow heat record is that they found no warming trend in July in Moscow. They speak of a “warming hole” in that region, and show this in Fig. 1 of their paper. Indeed, the linear July trend since 1880 in the Moscow area in their figure is even slightly negative. In contrast, we find a strong warming trend. How come?

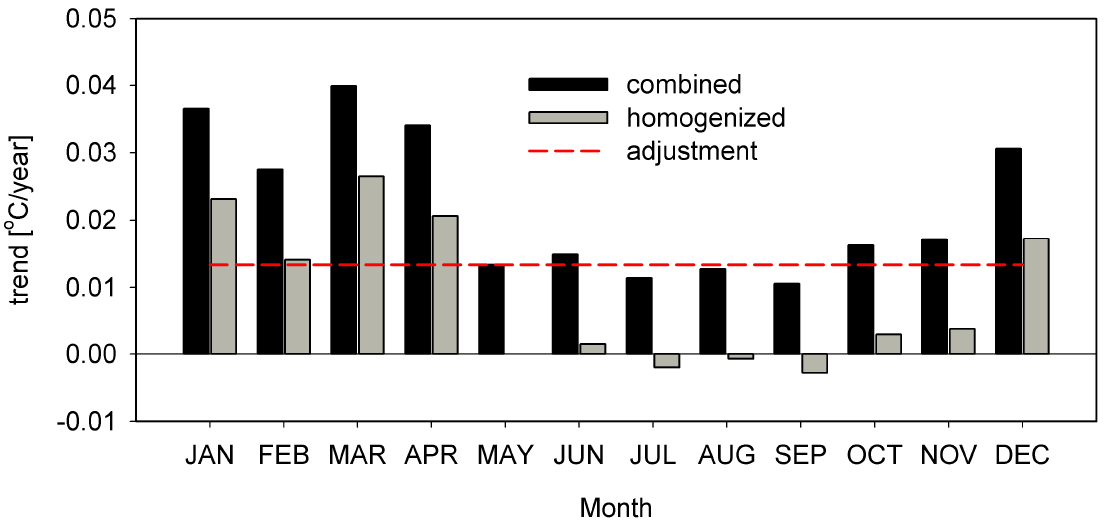

The difference, we think, boils down to two factors: the urban heat island correction and the time interval considered. Dole et al. relied on linear trends since 1880 from standard gridded data products. Figure 2 shows these linear trends for the GISS data for each calendar month, for two data versions provided by GISS: unadjusted and ‘homogenised’. The latter involves an automatic correction for the urban heat island effect. We immediately see that the trend for July is negative in the homogenised data, just as shown by Dole et al. (Randall Dole has confirmed to us that they used data adjusted for the urban heat island effect in their study.)

Figure 2: Linear trends since 1880 in the NASA GISS data in the Moscow area, for each calendar month.

But the graph shows some further interesting things. Winter warming in the unadjusted data is as large as 4.1°C over the past 130 years, summer warming about 1.7°C – both much larger than global mean warming. Now look at the difference between adjusted and unadjusted data (shown by the red line): it is exactly the same for every month! That means: the urban heat island adjustment is not computed for each month separately but just applied in annual average, and it is a whopping 1.8°C downward adjustment. This leads to a massive over-adjustment for urban heat island in summer, because the urban heat island in Moscow is mostly a winter phenomenon (see e.g. Lokoshchenko and Isaev). This unrealistic adjustment turns a strong July warming into a slight cooling.

The automatic adjustments used in global gridded data probably do a good job for what they were designed to do (remove spurious trends from global or hemispheric temperature series), but they should not be relied upon for more detailed local analysis, as Hansen et al. (1999) warned: “We recommend that the adjusted data be used with great caution, especially for local studies.” Urban adjustment in the Moscow region would be on especially shaky ground given the lack of coverage in rural areas. For example, in the region investigated by Dole et al. (50°N–60°N, 35°E–55°E) no single (or combined) rural GISS station (with a population less than 10,000) covers the post-Soviet era, a period when Moscow expanded rapidly.

For this reason we used unadjusted station data (i.e. the “GISS combined Moskva” data) and also looked at various surrounding stations, as well as talking to scientists from Moscow. In our study we were first interested in how the observed local warming trend in Moscow would have increased the number of expected heat records – regardless of what caused this warming trend. What contribution the urban heat island might have made to it was only considered subsequently.

We found that the observed temperature evolution since 1880 is only very poorly characterized by a linear trend, so we used a non-linear trend line (see Fig. 1 above) together with Monte Carlo simulations. What we found, as shown in Fig. 4 of our paper, is that up to the 1980s, the expected number of records does not deviate much from that of a stationary climate, except for the 1930s. But the warming since about 1980 has increased the expected number of records in the last decade by a factor of five. (That gives the 80% probability mentioned at the outset: out of five expected records, one would have occurred also without this warming.)
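The step from a fivefold increase to an 80% probability can be written out as a small calculation. This is only a sketch of the arithmetic described above; the function name is hypothetical and the inputs are the rounded values from the text, not the exact numbers in the paper.

```python
def fraction_due_to_trend(expected_with_trend, expected_stationary):
    """Share of expected records that would not occur without the trend."""
    return (expected_with_trend - expected_stationary) / expected_with_trend

# Rounded figures from the text: five records expected with the warming trend
# for every one expected in a stationary climate.
share = fraction_due_to_trend(expected_with_trend=5.0, expected_stationary=1.0)
print(f"{share:.0%} of expected records are attributable to the trend")
```

In other words, the probability is one minus the inverse of the increase factor, so a smaller factor would give a correspondingly smaller share.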

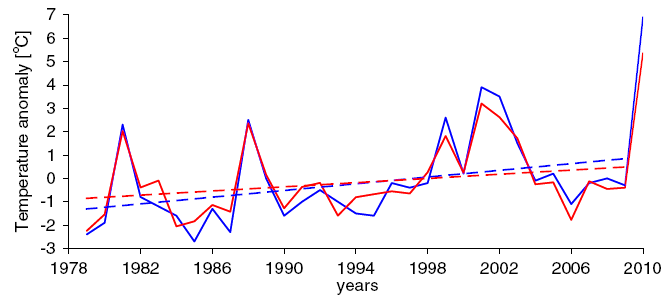

Figure 3: Comparison of temperature anomalies from RSS satellite data (red) over the Moscow region (35°E–40°E, 54°N–58°N) versus Moscow station data (blue). The solid lines show the average July value for each year, whereas the dashed lines show the linear trend of these data for 1979–2009 (i.e., excluding the record 2010 value).

So is this local July warming in Moscow since 1980 just due to the urban heat island effect? That question is relatively easy to answer, since for this time interval we have satellite data. These show for a large region around Moscow a linear warming of 1.4°C over the period 1979–2009. That is (even without the high 2010 value) a warming three times larger than the global mean!

So much for the “Moscow warming hole”.

Stefan Rahmstorf and Dim Coumou

Related paper: Barriopedro et al. have recently shown in Science that the 2010 summer heat wave set a new record Europe-wide, breaking the previous record heat of summer 2003.

PS (27 October): Since at least one of our readers failed to understand the description in our paper, here we give an explanation of our approach in layman’s terms.

The basic idea is that a time series (global or Moscow temperature or whatever) can be split into a deterministic climate component and a random weather component. This separation into a slowly-varying ‘trend process’ (the dashed line in Fig. 1 above) and a superimposed ‘noise process’ (the wiggles around that dashed line) is textbook statistics. The trend process could be climatic changes due to greenhouse gases or the urban heat island effect, whilst the noise process is just random variability.

To understand the probabilities of hitting a new record that result from this combination of deterministic change and random variations, we perform Monte Carlo simulations, so we can run this situation not just once (as the real Earth did) but many times. Just like rolling dice many times to see what the odds are to get a six. This is extremely simple: for one shot of this we take the trend line and add random ‘noise’, i.e. random numbers with suitable statistical properties (Gaussian white noise with the same variance as the noise in the data). See Fig. 1 ABC of our paper for three examples of time series generated in this way. We do that 100,000 times to get reliable statistics.
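For readers who like to see this in code, here is a minimal sketch of such a Monte Carlo experiment. It is not the code used for the paper: the function name, the toy trend (flat, then a linear rise over the last 30 years), the noise standard deviation of 1.7 and the 20,000 runs (chosen to keep memory use small) are all illustrative assumptions.

```python
import numpy as np

def expected_recent_records(trend, sigma, n_sim=20_000, window=10, seed=0):
    """Mean number of record highs in the final `window` steps of trend + white noise."""
    rng = np.random.default_rng(seed)
    trend = np.asarray(trend, dtype=float)
    # Each row is one synthetic history: the fixed trend plus Gaussian white noise.
    series = trend + rng.normal(0.0, sigma, size=(n_sim, trend.size))
    # Highest value seen up to and including each year.
    running_max = np.maximum.accumulate(series, axis=1)
    # A year sets a record if it exceeds the maximum of all earlier years.
    is_record = series[:, 1:] > running_max[:, :-1]
    return is_record[:, -window:].sum(axis=1).mean()

years = 130
flat = np.zeros(years)
# Hypothetical trend: no change for 100 years, then a linear rise of 1.5 units.
warming = np.concatenate([np.zeros(years - 30), np.linspace(0.0, 1.5, 30)])

stationary = expected_recent_records(flat, sigma=1.7)
with_trend = expected_recent_records(warming, sigma=1.7)
print(f"stationary: {stationary:.3f}  with trend: {with_trend:.3f}")
```

Swapping in a trend line estimated from real data, and the variance of the residuals around it, turns this toy into the kind of experiment described above.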

There is a scientific choice to be made on how to determine the trend process, i.e. the deterministic climate change component. One could use a linear trend – not a bad assumption for the last 30 years, but not very smart for the period 1880-2009, say. For a start, based on what we know about the forcings and the observed evolution of global mean temperature, why would one expect climate change to be a linear warming since 1880 in Moscow? Hence we use something more flexible to filter out the slow-changing component, in our case the SSAtrend filter of Moore et al., but one could also use e.g. a LOESS or LOWESS smooth (as preferred by Tamino) or something else. That is the dashed line in Fig. 1 (except that in Fig. 1 it was computed including the 2010 data point; in the paper we excluded that to be extra conservative, so the warming trend we used in the analysis is a bit smaller near the end).

After making such a choice for determining the climate trend, one then needs to check whether this was a good choice, i.e. whether the residual (the data after subtracting this trend) is indeed just random noise (see Fig. 3 and Methods section of our paper).

One thing worth mentioning: whether a new heat record is hit in the last ten years only depends on the temperature of the last ten years and on the value of the previous record. Whether one includes the pre-1910 data or not only matters if the previous record dates back to 1910 or earlier. That has nothing to do with our choice of analysis technique but is simply a logical fact. In the real data the previous record was from 1938 (see Fig. 1) and in the Monte Carlo simulations we find that only rarely does the previous record date back to before 1910, hence it makes just a small difference whether one includes those earlier data or not. The main effect of a longer time series is that the expected number of recent new records in a stationary climate gets smaller. So the ratio of extremes expected with climate change to that without climate change gets larger if we have more data. I.e., the probability that the 2010 record was due to climate change is a bit larger if it was a 130-year heat record than if it was only a 100-year heat record. But this is anyhow a non-issue, since for the final result we did the analysis for the full available data series starting from 1880.
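The effect of series length on the stationary case can be checked with a few lines of arithmetic. In a stationary series of independent values, year k is the highest of the first k years with probability 1/k, so the expected number of records in the last decade is a short sum. The sketch below assumes exactly that idealised case; the function name is hypothetical.

```python
def stationary_expected_records(n_years, window=10):
    """Expected record highs in the last `window` years of an i.i.d. series."""
    # Year k is a new record with probability 1/k, so sum over the final years.
    first = n_years - window + 1
    return sum(1.0 / k for k in range(first, n_years + 1))

for n_years in (100, 130):
    print(n_years, round(stationary_expected_records(n_years), 3))
```

This gives about 0.105 expected records per decade for a 100-year series and about 0.080 for a 130-year series, which is why the same number of records expected with warming translates into a somewhat larger ratio for the longer series.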

Update 16 January 2013: As hinted at in the discussion, at the time this was published we had already performed an analysis of worldwide temperature records which confirms that our simple statistical approach works well. In global average, the number of unprecedented heat records over the past ten years is five times higher than in a stationary climate, based on 150,000 temperature time series starting in the year 1880. We did not discuss this in public at the time because it was not published yet in the peer-reviewed literature. Now it is.

> I am trying to understand how CO2 is driving the weather patterns

http://www.aip.org/history/climate/GCM.htm

Re:100

Hey Hank,

The closest that history came to describing how CO2 and other anthropogenic activities affect weather patterns was the blurb about the “GCM” modelers trying to analyze one- and two-dimensional models before the Charney Panel moved on to CLIMAP. I had read this or something similar back in 2003.

The point remains that I know CO2 increases water vapour (wv) content. I do not dispute that CO2, at a level 130 ppm greater than in the last 1 ky, adds up to 1.85 W/m^2 to the atmosphere 24/7. I am comfortable that the scientists have a series of models which can replicate current changes and can project future changes within a reasonable margin of error.

I am still trying to understand the three-dimensional process of how the radiant energy of CO2 and other human activities affects weather patterns. I am confident eventually it will be demonstrated. As a hobbyist, I want to try to understand the science and the basis of knowledge that will go into defining the global processes.

So far I have seen excellent work wrt the radiative components. Dr. Schmidt and several discussions on UKww Climate Discussion and Analysis in 2007-8 did a lot for helping me understand the combination of band saturation and “splatter”.

Moving into the third dimension is a bit confusing, as the advection of tropical heat, when “enhanced” with added atmospheric radiative energy, convects to a higher altitude and requires an in-rush/updraft of more surface air/wv content, in essence chilling the surface (to close to its original temp., though with a higher salinity) and increasing the specific humidity at altitude over the ocean. Which leaves me with a wealth of questions and so far few answers. I do appreciate your help though; hopefully as time goes on more will come to light.

Cheers!

Dave Cooke

Jim,

Thanks for taking the time to respond. My comment was a bit half-baked, sorry about that. Bad netiquette on my part.

You wrote:

“It does get tricky though if you have these multi-decadal scale oscillations overlaid on the trend. Then you need more time, or more series, to get the same level of confidence. But that’s sort of a different point than you’re making isn’t it?”

No, that was pretty much my point. My earlier post was meant to establish that the Moscow event is not unique, independent of autocorrelation, if you look at all stations worldwide. There were probably similar events caused by blocking highs in the cooler 1970s. The rest of the comment would be that if you performed a post hoc analysis of an extreme event in the early 1930s, you would see a similar nonlinear temperature excursion preceding it, and be able to make a similar claim of improbability. From that perspective, I am unable to see how the study can differentiate between a trend-induced improbable event and an oscillation-induced improbable event, in a post hoc analysis of a single event.

[Response: There is always the chance that you’ve got some low frequency oscillation going on, with a wavelength greater than the length of your record, rather than a monotonic trend. It’s not meant to distinguish between these two, and indeed it can’t, without at some point resorting to physics, paleodata and other external information, or widening the scope of the study, in space or in time or both. It’s meant only to address the probability of a stationary process (i.e. temperature) over the given record.–Jim]

“By the way, this was fished out of the spam folder.”

I’m not sure what this is meant to convey.

Matt, #103 — It only means your post got caught in an automatic spam filter by mistake. Expect this to happen sometimes. In this case a moderator spotted it and helped out. Don’t expect this to happen always.

> I am still trying to understand the three dimension process of how the

> radiant energy of CO2 and other human activities affects weather patterns.

You’re not alone in that effort. Weart says: “The climate system is too complex for the human brain to grasp with simple insight. No scientist managed to devise a page of equations that explained the global atmosphere’s operations….”

http://www.aip.org/history/climate/GCM.htm

Re – my post at #89, Jim’s comments, #91 (Rob Painting) and #97 (tamino),

I’ve looked at the paper briefly. Thanks Jim for your comments. I think I’ve got it. I still have an important issue to raise.

Although Stefan’s attention was drawn to the Moscow record because it was so extreme, the initial question is to what extent can a new record in the past decade in Moscow be attributed to random variability about a stationary time series versus an underlying trend. The actual record was (correctly) ignored in order to estimate this trend.

The task then is to estimate the expected number of records (in the last decade) in a stationary setting (0.105 for the shorter time series, 1910-2009) versus the expected number in the presence of an underlying trend (0.47 records per decade). So [(0.47 − 0.105)/0.47] ~ 80% probability that A new Moscow record is due to the warming trend.

All well and good, except the conclusion states, “…we estimate that the local warming trend has increased the number of records expected in the past decade fivefold, which implies an approximate 80% probability that THE 2010 July heat record would not have occurred without climate warming.”

This is where the problem occurs. I’ll first try to illustrate using Bayes theorem then I’ll do a thought experiment.

For shorthand, let WR => record due to warming & A => analysis was done, and noWR => no warming record; so P(WR|A) means the “probability a record event is due to warming GIVEN that you have chosen to analyse it”. This is what the concluding statement is in fact saying, as soon as you swap from “a record” to “the record”.

Using Bayes theorem:

P(WR|A) = P(A|WR)*P(WR)/P(A). We expand P(A) to give:

P(WR|A) = P(A|WR)*P(WR)/[P(A|noWR)*P(noWR) + P(A|WR)*P(WR)]

Now P(WR) is estimated in the paper to be 0.8. It is immaterial to this argument that its estimation ignores the 2010 record itself, because it is then used post-hoc to make statements about the probability of the 2010 record. I suspect that this analysis would not have been done if 2010 was not a record year. To give a realistic estimate of the probability that THE 2010 record was due to the warming trend we must be able to calculate the ratio

P(A|WR)/P(A),

i.e. what is the probability this event would have been analysed given that it was a record, divided by the total probability it would be analysed, record or not. The expansion shows that this calculation must in practice consider the probability of analysis if the record had not occurred (quite low I assume). This ratio is almost certainly much greater than 1.

Consider two people who buy lottery tickets. One buys one $1 ticket among the millions available a week. He wins the $1 million prize by luck after 10 years. The other starts buying one a week but each year she increases this by an extra ticket. Two a week in year 2,…, ten a week in year 10. Finally she wins the jackpot. Now we want to calculate in each case how much the trend in ticket buying has contributed to their respective jackpot wins.

We can say definitively that the trend has not contributed at all to the probability of the man winning in year 10 but multiplied the probability of the woman winning in the 10th year 10-fold. However, we cannot attribute the woman’s actual win 90% to the trend (the man had no trend so it is clearly dumb luck). The reason is that we chose to analyse them because they won the jackpot.

So until you can quantify the probability that this event was analysed given that it was a record, you cannot make post-hoc attributions of a record to a regional climate trend.

Now my intention is not to give succor to the likes of Roger Pielke, but rather to contribute to this difficult problem of attribution of regional climate change, as in my view, global climate change is beyond reasonable doubt.

[Response: Bruce, see my response to Tamino at #97. According to our analysis, the warming trend in Moscow has increased the likelihood of a record to five times what it would be without such a trend. This is true whether a jackpot (the 2010 record) was then won or not – it is a result of the Monte Carlo analysis. But the question then is: is such a warming trend special to Moscow? Did we find and analyse this special case because we were alerted to it by the news about the record? (Did we find that woman who bought ever more lottery tickets because she won in the end?) For the woman the answer is probably yes, for Moscow, no. Similar warming trends, raising the odds in a similar manner, are found all over the globe, this is nothing special to Moscow. A woman who increases her ticket-buying like this, in contrast, would be an extremely special case.

But: even if we only found this woman because she won, this would not change the facts of how her ticket-buying strategy has increased her odds of winning, the analysis of this would remain completely true. It just would be a rare case, not a common one. -stefan]

I have an observation and a question related to multidecadal fluctuations, and the interpretation of this as a study relating solely to global warming.

I realize this is a statistical analysis, not an attribution study. That said, it is going to be discussed as if it were an attribution study — that global warming raised the odds of this extreme event. If discussed as such, the results appear a high outlier relative to similar-looking attribution studies. The ones I’ve read about (here in Realclimate, mostly) seem to find that global warming is resulting in a modest increase in the likelihood of occurrence of specific extreme events. In the 20% range or so. So something is materially different here, to find 80%, compared to attribution studies typically showing much smaller changes in likelihood of extreme events.

Let me cut to the chase. As I understand this, you asked the question, how likely would you have been to see the new high if there had been no recent trend. (Where your definition of trend is clear, and defined in a neutral, statistical sense as the curve fit to the data). Then, how likely with the recent trend added. So far, so good.

Now we get to the attribution part. If the 1930s and 2010 peaks in this region were due in part to the Atlantic Multidecadal Oscillation (AMO), then would a more conservative measure of the pure effect of global warming have been to ask, not how likely the new high would have been absent any trend, but how likely if the 1930s trend had repeated itself?

I note that you clearly describe this in terms of the statistical trend. I also see that it is widely interpreted as showing that “global warming” greatly increased the odds. But if “the trend” in the most recent years combines the effects of AMO and global warming, then that interpretation is wrong. (In effect, just as you will see people plot the raw sea surface temperature data and incorrectly attribute all the change in the region to “AMO”, you’ve tracked the raw surface temperature change, and others are incorrectly attributing the entire effect to “global warming”.)

Let me put it another way: If the recent statistical trend combines both AMO and global warming, then the correct heuristic interpretation of the result is an 80% increase in likelihood, relative to a world in which both global warming and AMO had ceased.

In a crude sense, to the extent that GCMs either replicate an AMO-like phenomenon, or produce large but nonperiodic fluctuations in sea-surface temps (AMO-like in size but not periodicity), then implicitly, the alternative hypothesis in those studies (a world without global warming) has a lot more variation in it than this study does.

Maybe that reconciles the 80% here compared to much lower estimates in attribution studies. (Alternatively, I may completely have misunderstood what you did).

I’m suggesting that if this is going to be interpreted as a study of the impact of global warming, maybe a more conservative measure would be to Monte Carlo a world in which the 1930s trend repeated itself (a simple proxy for AMO), instead of a world with no trend. Surely that would show a smaller increase in the likelihood of extreme events. I would be curious to see if that would align the results of this study more closely with prior attribution studies of extreme events.

In short, my question is not about how you defined the trend. That seems clear. It’s about whether, if the recent trend combines AMO and global warming signals, interpretation of this as if it were an attribution study of global warming may overstate the pure effect of global warming.

Re: #106 (Bruce Tabor)

I believe your analysis is mistaken — particularly your application of Bayes’ theorem. The condition “WR” for “record due to warming trend” is fine, but you shouldn’t be comparing that to “noWR” for “no record.”

You should really be comparing “WR” to “RnoTR” — that a record is observed but it’s NOT due to trend. Then we have

P(WR|A) = P(A|WR) * P(WR) / [ P(A|RnoTR) * P(RnoTR) + P(A|WR) * P(WR) ]

The only sensible estimate is P(A|WR) and P(A|RnoTR) are the same — analyzing the Moscow record was equally likely whether it’s due to a trend or not.

RE:107

Hey Christopher,

Regardless of the application of tools the provided sample would be fairly small if examining simple extremes. In Figure 1, that there is an underlying 1deg.C rise in the extremes regardless the assumed source appears to be unaddressed, a question seems to be whether this could be related to UHI, (I believe it unlikely). Remove this, (positive extreme range 1880 time frame, equates to 3deg.C, @1940 roughly 4deg.C and @2000 roughly 5deg.) And part of the trend remains. That fossil fuel emissions would likely have been kept in check, either by the 1920’s-30’s cooling ocean SSTs globally, there had to be a different process at work.

Proceeding, if we remove the trending many associate with GW, or roughly a 0.8degC rise, the outliers continue to trend. If we suggest the AMO is responsible for the 2degC rise in 1880 and it were a fixed value, the remaining change between 1940 and 2000 would still appear to be roughly 1degC positive. In essence, if we are considering an AMO of between 60 and 70yrs, and a temperature contribution of between 2-3deg.C, the GAT trend remains; it would require a stretch to suggest that the AMO could be responsible for a 2-4degC variation, though not impossible.

That we are aware of other contributing processes both having positive and negative influences would seem to suggest that the effects of the AMO are not likely to be responsible for the extremes we see. As we increase our knowledge of the forcings and their observable effects I believe the picture will improve in its focus. Dealing with probabilities at this scale and current state of science makes a hard conclusion difficult to define at this time, IMHO. However, the pathway to the necessary knowledge is based on the platforms established by works such as this one.

Cheers!

Dave Cooke

To 109, I’m not suggesting that AMO by itself explains the new record. I understand that. The state of the AMO now is not materially different from the 1930s (largely, I guess, because it’s defined by de-trended sea surface temperatures).

I’m suggesting that it’s improper to characterize the results as showing that “global warming”, by itself, accounts for the 80% increase in likelihood of a new record temperature. Not that the authors did that, but that others will.

If I understand how the study worked, the 80% reflects the comparison of likelihood with actual trend, relative to likelihood with stationary (no trend) temperatures. That is a comparison of temperatures reflecting both AMO and “global warming”, relative to a world that had neither. I’m asking that, to isolate the pure effect of global warming, probabilities conditional on actual trend be compared to a different alternative, which would be a trend reflecting only AMO. And my suggestion for getting a back-of-the-envelope estimate was literally to replicate the (e.g.) 1910 to 1930 trend, and tack it on the end of the timeseries ending in 2010, then proceed with the analysis. (I realize there’s more to it than that, but that’s the gist of it.)

If, when you did that, the marginal increase in likelihood of new extremes fell more in line with the (e.g.) 20% that seems typical of recent attribution studies, then this would place what is now an extreme outlier result (as it is being interpreted, not as the authors described it) more in line with the rest of the literature.

Re:110

Hey Christopher,

At issue is the difficulty in separating the various contributors. I believe the primary issue with the AMO effect is that the Arctic patterns are likely the driving force of circulation. The issue that this analysis is based on is: how do you separate primary forcing and secondary or even tertiary forcing from natural variation? In this case I believe they made the right choice by not separating them or assigning a “SWAG” value.

If you knew the local variation limits or range maximums you could then suggest that any value that exceeds the maximum range would have to be driven by another process. The question is how do you determine what the maximum range component is? If we look at the apparent repeating maximums and subtract the variation in the mean, you are in essence normalizing the data set. (Removing the trend.) To then assign a value of 100 to the 1940s peak you get what appears to be a normal distribution if you remove the @2000 and above values. Though there are major indications of a process change when the normal variation starts stagnating on one side or the other of the mean. The cooling stagnation with a snap temperature rebound is very characteristic of weather, which is abnormal in normal statistics.

If anything, it is this one characteristic which appears to suggest that the extreme warming event is a normal weather event. Weather unlike large populations of random numbers or measures of single processes does not “behave”. Though short periods may appear to be elastic, they are not elastic in the sense of equal and opposite effect. Again the reason is we are measuring both a closed system and an open system which appears to share the same source; but different sinks. One of the two, leaks…

That the source does not seem to vary, then the destination of the energy in the system should be entropy. Hence, if the energy is increasing in the system there has to be a change in the sinks. Thus, a rebound which is higher can only happen if the total energy in the system is increasing. The measure of that increase is what is being attempted. To move beyond the work that has been done here will require additional effort and measures. I believe many would like to achieve as you request; however, it will have to wait for another day and more information before it can be achieved.

Cheers!

Dave Cooke

(PS: And yes I know I bury it, I just write as I think it and it takes too long for me to render the husk from the kernel to respond in a clear concise manner, sorry.)

Re. Stefan’s response to my #106 post, Tamino at #97 and #108,

Thanks guys,

I stand corrected (in the algebra as well, thanks Tamino). I realised there was an error in my thinking soon after pressing “Submit”. It’s in the nature of blogs that not enough thinking and error correction occurs before publication.

In a nutshell, my concern was that the estimated ATTRIBUTION of the record to a warming trend versus random variation about the mean was not made INDEPENDENTLY of the 2010 record itself, a record which clearly drew the attention of Stefan and Dim Coumou and other researchers.

As Tamino points out, the decision to analyse was driven by the record itself, INDEPENDENT of whether it was ATTRIBUTED (due) to a trend or random variation.

Furthermore the process of estimating the ATTRIBUTION excluded the 2010 record. So the only remaining issue is whether the trend immediately prior to 2010 contains INFORMATION about the 2010 record that is a result of short range AUTOCORRELATION rather than a longer term trend. Tamino in #97 points out that the autocorrelation is weak. The six years leading up to 2010 were close to the long term mean.

So congratulations to Stefan & Dim on the paper and thank you Tamino for helping me get my statistical thinking right.

I’m looking forward to your paper Stefan!

Why do I have to search into the farthest depths of the internet to find any relevant and compelling research on GW, while mainstream outlets these days have nothing to say unless it’s some quack scientist with a quack theory that they think will bring some ratings? Thanks for the post.