This week, PNAS published our paper Increase of Extreme Events in a Warming World, which analyses how many new record events you expect to see in a time series with a trend. It does that with analytical solutions for linear trends and Monte Carlo simulations for nonlinear trends.

A key result is that the number of record-breaking events increases depending on the ratio of trend to variability. Large variability reduces the number of new records – which is why the satellite series of global mean temperature have fewer expected records than the surface data, despite showing practically the same global warming trend: they have more short-term variability.

Another application shown in our paper is to the series of July temperatures in Moscow. We conclude that the 2010 Moscow heat record is, with 80% probability, due to the long-term climatic warming trend.

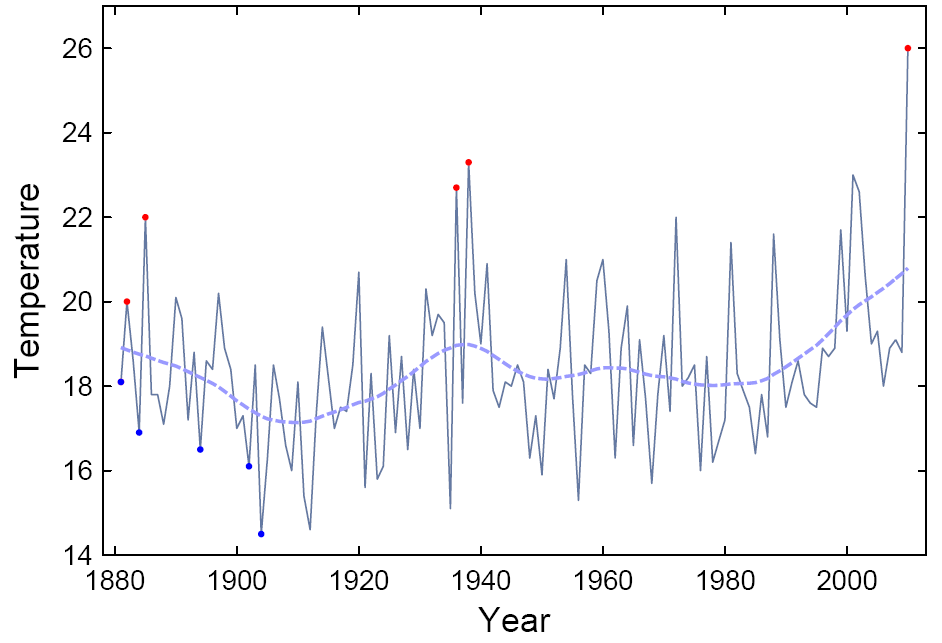

Figure 1: Moscow July temperatures 1880-2010. New records (hottest or coldest months until then) are marked with red and blue dots. Dashed is a non-linear trend line.

With this conclusion we contradict an earlier paper by Dole et al. (2011), who put the Moscow heat record down to natural variability (see their press release). Here we would like to briefly explain where this difference in conclusion comes from, since we did not have space to cover this in our paper.

The main reason Dole et al. conclude that climatic warming played no role in the Moscow heat record is that they found no warming trend in July in Moscow. They speak of a “warming hole” in that region, and show this in Fig. 1 of their paper. Indeed, the linear July trend since 1880 in the Moscow area in their figure is even slightly negative. In contrast, we find a strong warming trend. How come?

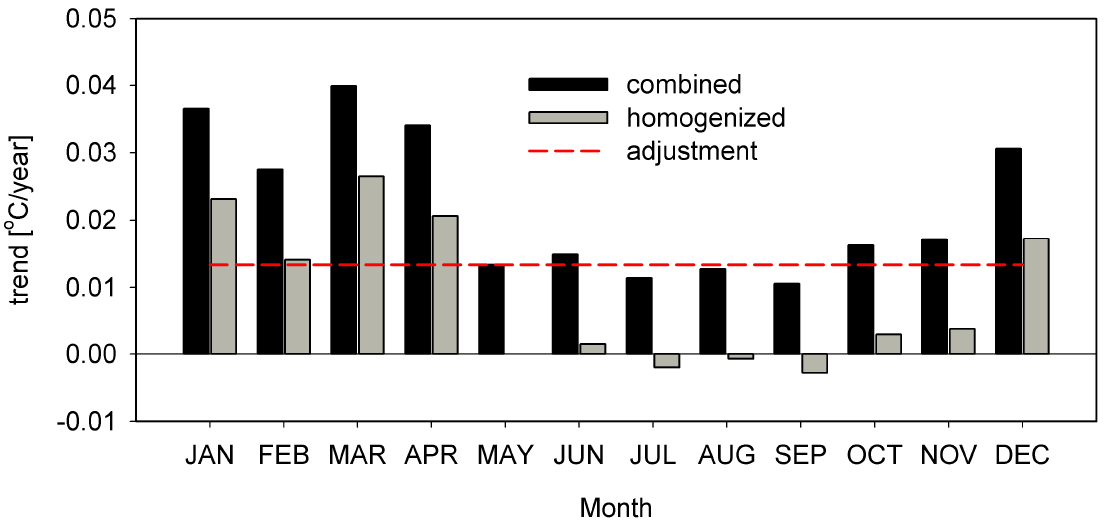

The difference, we think, boils down to two factors: the urban heat island correction and the time interval considered. Dole et al. relied on linear trends since 1880 from standard gridded data products. The figure below shows these linear trends for the GISS data for each calendar month, for two data versions provided by GISS: unadjusted and ‘homogenised’. The latter involves an automatic correction for the urban heat island effect. We immediately see that the trend for July is negative in the homogenised data, just as shown by Dole et al. (Randall Dole has confirmed to us that they used data adjusted for the urban heat island effect in their study.)

Figure 2: Linear trends since 1880 in the NASA GISS data in the Moscow area, for each calendar month.

But the graph shows some further interesting things. Winter warming in the unadjusted data is as large as 4.1°C over the past 130 years, summer warming about 1.7°C – both much larger than global mean warming. Now look at the difference between adjusted and unadjusted data (shown by the red line): it is exactly the same for every month! That means: the urban heat island adjustment is not computed for each month separately but applied as an annual average, and it is a whopping 1.8°C downward adjustment. This leads to a massive over-adjustment for urban heat island in summer, because the urban heat island in Moscow is mostly a winter phenomenon (see e.g. Lokoshchenko and Isaev). This unrealistic adjustment turns a strong July warming into a slight cooling.

The automatic adjustments used in global gridded data probably do a good job for what they were designed to do (remove spurious trends from global or hemispheric temperature series), but they should not be relied upon for more detailed local analysis, as Hansen et al. (1999) warned: “We recommend that the adjusted data be used with great caution, especially for local studies.” Urban adjustment in the Moscow region would be on especially shaky ground given the lack of coverage in rural areas. For example, in the region investigated by Dole et al. (50°N–60°N, 35°E–55°E) no single (or combined) rural GISS station (with a population less than 10,000) covers the post-Soviet era, a period when Moscow expanded rapidly.

For this reason we used unadjusted station data (i.e. the “GISS combined Moskva” data) and also looked at various surrounding stations, as well as talking to scientists from Moscow. In our study we were first interested in how the observed local warming trend in Moscow would have increased the number of expected heat records – regardless of what caused this warming trend. What contribution the urban heat island might have made to it was only considered subsequently.

We found that the observed temperature evolution since 1880 is only very poorly characterized by a linear trend, so we used a non-linear trend line (see Fig. 1 above) together with Monte Carlo simulations. What we found, as shown in Fig. 4 of our paper, is that up to the 1980s, the expected number of records does not deviate much from that of a stationary climate, except for the 1930s. But the warming since about 1980 has increased the expected number of records in the last decade by a factor of five. (That gives the 80% probability mentioned at the outset: out of five expected records, one would have occurred also without this warming.)
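The arithmetic behind the 80% figure can be written out as follows. This is only a sketch in notation introduced here for illustration: $N_{\text{trend}}$ and $N_{\text{stat}}$ are assumed symbols for the expected number of records in the last decade with and without the recent warming, and the factor of five is the rounded ratio quoted above.

```latex
P_{\text{trend}}
  = \frac{N_{\text{trend}} - N_{\text{stat}}}{N_{\text{trend}}}
  = 1 - \frac{N_{\text{stat}}}{N_{\text{trend}}}
  \approx 1 - \frac{1}{5}
  = 0.8
```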

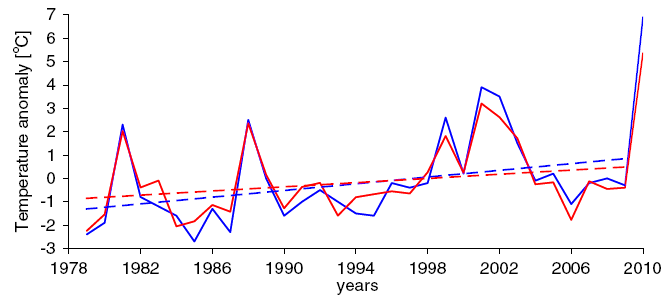

Figure 3: Comparison of temperature anomalies from RSS satellite data (red) over the Moscow region (35°E–40°E, 54°N–58°N) versus Moscow station data (blue). The solid lines show the average July value for each year, whereas the dashed lines show the linear trend of these data for 1979–2009 (i.e., excluding the record 2010 value).

So is this local July warming in Moscow since 1980 just due to the urban heat island effect? That question is relatively easy to answer, since for this time interval we have satellite data. These show for a large region around Moscow a linear warming of 1.4 °C over the period 1979–2009. That is (even without the high 2010 value) a warming three times larger than the global mean!

So much for the “Moscow warming hole”.

Stefan Rahmstorf and Dim Coumou

Related paper: Barriopedro et al. have recently shown in Science that the 2010 summer heat wave set a new record Europe-wide, breaking the previous record heat of summer 2003.

PS (27 October): Since at least one of our readers failed to understand the description in our paper, here we give an explanation of our approach in layman’s terms.

The basic idea is that a time series (global or Moscow temperature or whatever) can be split into a deterministic climate component and a random weather component. This separation into a slowly-varying ‘trend process’ (the dashed line in Fig. 1 above) and a superimposed ‘noise process’ (the wiggles around that dashed line) is textbook statistics. The trend process could be climatic changes due to greenhouse gases or the urban heat island effect, whilst the noise process is just random variability.

To understand the probabilities of hitting a new record that result from this combination of deterministic change and random variations, we perform Monte Carlo simulations, so we can run this situation not just once (as the real Earth did) but many times. Just like rolling dice many times to see what the odds are to get a six. This is extremely simple: for one shot of this we take the trend line and add random ‘noise’, i.e. random numbers with suitable statistical properties (Gaussian white noise with the same variance as the noise in the data). See Fig. 1 ABC of our paper for three examples of time series generated in this way. We do that 100,000 times to get reliable statistics.
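The procedure described above can be sketched in a few lines of Python. This is a minimal illustration and not the code used for the paper: the trend shape (flat for 100 years, then a linear rise of 1.5 units over 30 years), the noise standard deviation of 1.7, the number of runs, and all function and variable names are assumptions chosen for the example.

```python
import random

def expected_recent_records(trend, sigma, last_k=10, n_runs=10_000, seed=0):
    """Average number of new high records in the final `last_k` steps.

    Each run adds Gaussian white noise (standard deviation `sigma`) to the
    same deterministic trend line and counts record-breaking values.
    """
    rng = random.Random(seed)
    first_recent = len(trend) - last_k
    total = 0
    for _ in range(n_runs):
        running_max = float("-inf")
        for i, level in enumerate(trend):
            value = level + rng.gauss(0.0, sigma)
            if value > running_max:          # a new record high
                running_max = value
                if i >= first_recent:        # only count recent records
                    total += 1
    return total / n_runs

n_years = 130
# Hypothetical trend lines (not the SSA trend from the paper)
flat = [0.0] * n_years
warming = [0.0 if i < 100 else 1.5 * (i - 99) / 30 for i in range(n_years)]

stationary = expected_recent_records(flat, sigma=1.7)
with_trend = expected_recent_records(warming, sigma=1.7)
print("stationary:", stationary)
print("with trend:", with_trend)
print("fraction due to trend:", 1 - stationary / with_trend)
```

With more runs (the paper uses 100,000) the estimates become smoother; the fixed seed here only makes the sketch reproducible.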

There is a scientific choice to be made on how to determine the trend process, i.e. the deterministic climate change component. One could use a linear trend – not a bad assumption for the last 30 years, but not very smart for the period 1880-2009, say. For a start, based on what we know about the forcings and the observed evolution of global mean temperature, why would one expect climate change to be a linear warming since 1880 in Moscow? Hence we use something more flexible to filter out the slow-changing component, in our case the SSAtrend filter of Moore et al, but one could also use e.g. a LOESS or LOWESS smooth (as preferred by Tamino) or something else. That is the dashed line in Fig. 1 (except that in Fig. 1 it was computed including the 2010 data point; in the paper we excluded that to be extra conservative, so the warming trend we used in the analysis is a bit smaller near the end).

After making such a choice for determining the climate trend, one then needs to check whether this was a good choice, i.e. whether the residual (the data after subtracting this trend) is indeed just random noise (see Fig. 3 and Methods section of our paper).

One thing worth mentioning: whether a new heat record is hit in the last ten years only depends on the temperature of the last ten years and on the value of the previous record. Whether one includes the pre-1910 data or not only matters if the previous record dates back to 1910 or earlier. That has nothing to do with our choice of analysis technique but is simply a logical fact. In the real data the previous record was from 1938 (see Fig. 1) and in the Monte Carlo simulations we find that only rarely does the previous record date back to before 1910, hence it makes just a small difference whether one includes those earlier data or not. The main effect of a longer timeseries is that the expected number of recent new records in a stationary climate gets smaller. So the ratio of extremes expected with climate change to that without climate change gets larger if we have more data. I.e., the probability that the 2010 record was due to climate change is a bit larger if it was a 130-year heat record than if it was only a 100-year heat record. But this is anyhow a non-issue, since for the final result we did the analysis for the full available data series starting from 1880.
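The effect of series length on the stationary case can be checked directly. The sketch below relies on the standard result that, in a series of independent values drawn from the same continuous distribution, the n-th value is a record with probability 1/n; the function name is made up for this example.

```python
from fractions import Fraction

def expected_records_stationary(n_years, last_k=10):
    """Expected number of records in the last `last_k` years of an
    independent, identically distributed series of length `n_years`."""
    first = n_years - last_k + 1
    return float(sum(Fraction(1, n) for n in range(first, n_years + 1)))

for n_years in (100, 130):
    print(n_years, round(expected_records_stationary(n_years), 4))
```

For a 100-year series this gives roughly 0.105 expected records in the final decade, and for a 130-year series roughly 0.080, so the stationary baseline shrinks as the series gets longer.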

Update 16 January 2013: As hinted at in the discussion, at the time this was published we had already performed an analysis of worldwide temperature records which confirms that our simple statistical approach works well. In global average, the number of unprecedented heat records over the past ten years is five times higher than in a stationary climate, based on 150,000 temperature time series starting in the year 1880. We did not discuss this in public at the time because it was not published yet in the peer-reviewed literature. Now it is.

RE:48

Hey Hank,

Though slightly OT, look to Dr. Muller’s paper a clear example can be found near the same latitude between the heating in Alberta Canada contrasting the cooling in Siberia. To wax philosophical, Einsteinian physics suggests that for every point of dark matter (“black hole” if you will) there should be a contrasting “white hole”. The basics of closed system physical principles going back to Newton suggests this.

(Cosmologically, if we remove matter from our perception/space-time you are left with “dark energy”.)

Similarly in atmospheric physics, change the conditions and the physics of the process change. (One thing does not change, you can transform matter to energy, if you invest sufficient energy, which should have a counter of remove sufficient energy and you get matter.)

The point being in a closed system the physics dictates balanced/equal reactions. It is when a closed system is partially open you change the equation. We have sufficient evidence of equal and opposites though the may be a difference in perception due to one being concentrated and the other diverse. We can percieve the concentrated effect, we cannot percieve the diverse.

Now as to specific URLs, sorry my current tools do not support that ability. As to URL availability they are clearly available as most have either been referenced here in RC or in other media, (those which are based on integrity rather then popularity).

(Hence the reason I have tried to now coach my point in universal principles. Using a sledge to hammer a nail is possible; but, you end up with a sore thumb…, sorry…)

Cheers!

Dave Cooke

Pete @ 49,

But surely an honest lecturer would make sure the students had access to a copy of the paper under discussion, as well as a link to the informative post by the authors here at RC? Oh, but being open and honest will not help Roger’s narrative…never mind then.

For a first approximation, an outlier in the direction of a trend tells you what’s coming. Granted you have to know what the trends are first. Just from the shape of a bell curve (and this is far from original) one can see that you don’t have to move the mean very far in one direction for outliers on that side to become much more frequent. A one hundred year heat wave or flood readily becomes a ten or five year event. The new hundred year flood is going to drown people.
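The bell-curve argument can be made concrete with a small sketch. It assumes a normal distribution with fixed variance whose mean shifts by a given number of standard deviations; the function name and the shift values are illustrative only.

```python
from statistics import NormalDist

def return_period_after_shift(return_period, shift_in_sigma):
    """Return period of a former 1-in-`return_period` event after the mean
    of a normal distribution moves up by `shift_in_sigma` standard deviations."""
    std_normal = NormalDist()
    # Threshold that was exceeded once per `return_period` before the shift
    threshold = std_normal.inv_cdf(1 - 1 / return_period)
    # Exceedance probability of the same threshold after the shift
    exceed_prob = 1 - std_normal.cdf(threshold - shift_in_sigma)
    return 1 / exceed_prob

for shift in (0.0, 0.5, 1.0, 1.5):
    print(shift, round(return_period_after_shift(100, shift), 1))
```

Under these assumptions, a shift of one standard deviation turns a 100-year event into roughly an 11-year event.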

Those objecting to the 100 year Russian record seem oblivious to how extraordinary that century’s outliers may be.

In 1915, reflecting the abrupt increase in settlement caused by the opening of the Trans-Siberian Railroad, Siberia hosted a wave of climate-related forest fires that released teragrams of smoke and soot over a burnt area possibly exceeding a million square kilometers.

Nature, 323, 116-117, 1986

MapleLeaf, John, thanks for the links.

Thanks, Hank. Lupo’s project seems still to be in the phase of announcement: http://www.eurekalert.org/pub_releases/2011-09/uom-mrt092011.php (from Sep 2011).

However, it is not only “blocking” (which relates to strong high pressure systems that “block” fronts from intruding an area) which is interesting. Blocking is only one part of the problem. It might be interesting to look at the whole system of Rossby waves. Persistence of waves cannot only occur with high pressure, but also e.g. with low pressure systems (potentially leading to flooding) or with persistent zonal flow (supporting the occurrence of extreme cyclones). We are planning a project in that direction. So I’m looking around for what already exists.

If you could provide links to the papers you found that look at changes in blocking, I would appreciate it.

Besides, I am currently at the WCRP conference in Denver, where the question of changes in circulation patterns due to anthropogenic climate change as an issue for attribution of events has been put up (but not answered…) just this morning…

oarobin @37 — I’m an amateur at this. Extreme value theory (EVT) appears to require discarding all but the most extreme event in a time interval, say a year. If that is correct, much important information has been lost. I can’t comment on a Monte Carlo approach because I’m uncertain just what you are referring to.

Regarding precipitation, hereinafter just rain, there are attempts to fit the tail of the rainfall histograms using a (generalized) Pareto distribution. This has the advantage of using all the big, hence rare, events. Even better IMHO is fitting the entire rainfall histogram. I have found efforts using the log-normal distribution and also the gamma distribution; neither works as well as desired measured over all locations.

There are but two preliminary attempts (that I have found on the web) using stable distributions. These are found under names such as Levy stable, Levy-Pareto or even alpha stable. For a taste of the remarkable properties of stable distributions, see the introductory chapter of John P. Nolan’s forthcoming book; this chapter is web accessible.

To whet your appetite, one of the stable distributions is called by Nolan, and maybe also Feller (1971), the Levy distribution. For large x it rolls off as the -3/2 power of x, so it looks like one of the generalized Pareto distributions out at the rare event end. But unlike the other distributions mentioned in earlier paragraphs, it is stable and so enjoys the i.i.d. property well known from the most popular of the stable distributions, the normal distribution. Of course the Levy distribution is one-sided and has a very heavy right tail, heavier than the log-normal or gamma distributions. To the extent that the Levy distribution or close relatives actually characterize rainfall events, it suggests that some rare events will be truly exceptional, so exceptional that perhaps for reasons of physical limitations one should instead consider a truncated version. (Various truncated versions of stable distributions similar to the Levy distribution appear to be popular in finance.)
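For readers who want to see the -3/2 roll-off numerically, here is a minimal sketch using the standard closed-form density of the Levy distribution. The function names and the chosen evaluation point are assumptions for illustration; nothing here is fitted to rainfall data.

```python
import math

def levy_pdf(x, scale=1.0, location=0.0):
    """Density of the Levy distribution (the one-sided stable law with
    index 1/2); zero at and below `location`."""
    if x <= location:
        return 0.0
    z = x - location
    return math.sqrt(scale / (2 * math.pi)) * math.exp(-scale / (2 * z)) / z ** 1.5

def log_log_slope(x, factor=10.0):
    """Slope of log(pdf) against log(x) between x and factor * x."""
    return (math.log(levy_pdf(x * factor)) - math.log(levy_pdf(x))) / math.log(factor)

# Far out in the right tail the slope approaches -3/2
print(log_log_slope(1e4))
```

The exponential factor tends to 1 for large x, which is why only the power law remains in the tail.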

I have, just in the past week+, discovered a remarkable property of the Levy distribution, not shared by any other stable distribution, which motivates using it to characterize rainfall events. This helps in encouraging you to study the Levy distribution for the purposes of better understanding rare rainfall events.

@David B. Benson,

Thanks for the reply (I am even more of an amateur at this). The Monte Carlo approach I was referring to is the one described in the Rahmstorf/Coumou paper, whereby one fits a statistical model with random and deterministic components to a time series, then generates a dataset of thousands of model runs with the same deterministic component but varying random components, and then calculates statistics on that dataset; see the update “PS (27 October)” in the main post for a better explanation. There is a nice introductory description of EVT available at http://www.samsi.info/communications/videos by Dan Cooley (including a short course by the same speaker and several other nice talks) that shows why it is a reasonable idea to throw away most of the data when looking at extremes (I think it has something to do with biasing the fit of the model parameters, as there are just far more non-extreme values).

I was looking for something along the lines of the strengths and weaknesses of the two approaches.

Your stuff on stable distributions looks nice. Have you got a writeup (link) on this, or is this original research?

RE:55

Hey Urs,

Just an interjection: the NOAA paper on Moscow’s summer temperature abnormality discussed that the driver of the blocking condition was related to a weakened polar vortex. Personally, after several years of examining the NCDC SRRS NH Analysis of the 250mb data set, it seems to me that cross zonal flow strengthens when the heat content differential between the equator and pole is greatest. What appears to happen is that the upper air currents shift from more zonal flows to more cross zonal flows, as evidenced in the N. Jet Stream. (This can be noted in the changes in the polar vortex early last Winter, with the weak vortex changing to a strong “split” AO pattern late Winter.)

This change in zonal flow reduces the zonal drive pushing Rossby waves within the zone, resulting in increases in Blocking/Cut-Off conditions. The end result is greater residence periods. Add in that the conditions seem to be indicated by the trailing condition of a La Nina event, and this seems to suggest that the contributor has more to do with Arctic heat flow patterns than with the equatorial heat content.

I have not seen any papers addressing this condition other than something in the 2004-05 range discussing the changes in the Walker circulation and the NASA papers regarding the Arctic upper atmosphere heat content being elevated during the El Nino event about the same time. Sorry, not much help.

Cheers!

Dave Cooke

David Cooke,

You do not need any “skills” to post a link. All you have to do is copy and paste it from your browser (I assume you know how to do that).

Like this:

https://www.realclimate.org/?comments_popup=9247

Put it on a line by itself, and it will take care of itself. It may not be elegant, but it works fine.

David and oarobin,

The issue with extreme-value statistics is that, since it seeks to characterize the extremes of a distribution, the data needed for meaningful characterization are rare. So, unless you have lots of data, extreme value analysis is at best an exercise in futility.

Basically, though, you can sort of think of extreme value theory (EVT) as being similar to the Central Limit Theorem. The Central Limit Theorem says that near the mode, any unimodal distribution looks Normal. EVT says that the extremes of a distribution will exhibit one of 3 behaviors, corresponding to 3 limiting distributions: decaying exponentially (Gumbel), decaying according to a power law (Frechet) or having negligible probability above some finite limit (reversed Weibull). You can represent all three behaviors with a single generalized extreme value (or Fisher-Tippett) distribution.
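For reference, the generalized extreme value distribution mentioned above is usually written in the following form. The symbols $\mu$ (location), $\sigma$ (scale) and $\xi$ (shape) follow the common textbook convention and are not taken from this thread.

```latex
G(x) = \exp\left\{ -\left[ 1 + \xi \, \frac{x - \mu}{\sigma} \right]^{-1/\xi} \right\},
\qquad 1 + \xi \, \frac{x - \mu}{\sigma} > 0, \quad \sigma > 0

% Limiting case \xi \to 0 (Gumbel):
G(x) = \exp\left\{ -\exp\left( -\frac{x - \mu}{\sigma} \right) \right\}

% \xi > 0: Frechet type (power-law tail)
% \xi < 0: reversed Weibull type (finite upper limit at x = \mu - \sigma / \xi)
```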

As David points out, you can use a few “highest” samples to get a fit in some cases. I can think of two reasons for using Monte Carlo over EVT:

1) limited (but still large) datasets

2) autocorrelation in the data

RE:59

Hey Susan,

Assuming, you use a browser with copy/paste features. It also assumes you have a personal library of bookmarks associated with your browsing tool…

Cheers!

Dave Cooke

Re: 60

Hey Ray,

Wouldn’t using an “R” chart over time provide a similar function, though with a larger data set? The point being, if Range is an indicator of change, then if there is a correlation with a trend you would have confirmation of its validity?

This would seem to be an obvious test and unlikely to have been missed. This was one of the questions I asked 6 years ago: “Why, if the daily range is dropping year over year, would the mean be increasing?” The answer appears to be related to wv.

This led to the next set of questions, such as: how can the wv be increasing if the reported specific humidity/temperature are not? Both of these led towards a solution set suggesting advection. Hence where I am consigned to today, trying to find the gating elements for maintaining flat specific values in an atmosphere dominated by a TSI imbalance.

Cheers!

Dave Cooke

Dave Cooke:

Copy/Paste is not a part of your browser, it is a basic operating system function that will work for any text or numbers in all programs you run.

I have pretty much stopped reading your posts because I have no way to read up on or verify the complicated material that you comment on. Citations are a normal component of scientific discussion and it is impolite to not provide them.

Steve

ldavidcooke,

I am not sure how range would help you in fitting extreme value distributions.

OTOH, decreased diurnal and annual range is a prediction for a greenhouse warming mechanism. CO2 works whether the sun is shining or not.

RE:63

Hey Steve,

Not on 1994 USL-V ver. 4.1113 via Mosaic Gopher or Nintendo’s Opera Internet service, neither of which supports flash or pdf capability… Similar to most 2nd gen. android 1.5-2.0 tablets… As to impolite, hmmm…, life experience and ambiguous research-connected thoughts are going to be hard to reference from memory over 10-40yrs, sorry…

[Response: Can we at least try and stay on topic? – gavin]

Hi Stefan,

I thought it would be fun to look at the different months in the Moscow data on the grounds that your result should be reproducible for any month. My comments are based on your post here. It will be some time before I can get a copy of the paper from the library.

I started this by creating the non-linear trend lines for each month, reproducing near enough the one shown above for July. It immediately becomes clear that each month has a very different trend line. It was also apparent that the July trend over the last 30 years is much higher than the average monthly trend. Given the amount of variation in the trend lines I couldn’t see an obvious reason to prefer using a trend line based on July rather than using the annual average trend.

I was wondering how sensitive the results are to the trend line. It looks to me that using a different trend line can affect the likelihood of a stationary climate setting a new record (because the trend line used will affect an estimate of the natural variance) and also the likelihood of a warming climate setting a new record, because of variation in the recent trend. The average trend is half that of the July trend. Would that not reduce the probability to approx (0.235-0.105)/0.235 = 55%?
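The arithmetic in that question can be checked with a short sketch. The numbers 0.235 and 0.105 are taken from the comment as given; the function name is made up for illustration, and the calculation says nothing about whether halving the expected record count is the right way to represent a halved trend.

```python
def fraction_due_to_trend(expected_with_trend, expected_stationary):
    """Share of expected records that would not occur in a stationary climate."""
    return (expected_with_trend - expected_stationary) / expected_with_trend

# Values quoted in the comment above
print(round(fraction_due_to_trend(0.235, 0.105), 3))
# Rounded factor of five from the main post
print(round(fraction_due_to_trend(5.0, 1.0), 3))
```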

I think my concern is that the July trend line is being distorted by noise, noise that is not due to the global warming trend in Moscow. I also wonder, given that trend lines for each month show so much variation, if it is sound to make trend lines based on monthly data?

NOTE PLEASE added text in the main post above.

“PS (27 October): Since at least one of our readers failed to understand the description in our paper, here we give an explanation of our approach in layman’s terms….”

Go to the top of the page for the new explanatory material.

Thank you Stefan.

RE: 64

Hey Ray,

Concur, inasmuch as a rising tide lifts all boats, and it does so regardless of the time of day. Hence, individual station Ranges would not be affected by GHG. (Until recently I thought it might have been instrumentation/interpretation changes. About a year ago, wv and heat transport started to make sense when looking at the NCDC, NCEP, SRRS NH analysis 250mb isotach station graphs for the last 10yrs and comparing the patterns to large scale weather patterns.)

Back to the extremes fit, I tried a similar analysis using the USHCN data record. My results found that my sample sites demonstrate an evenly distributed extreme monthly value over the last 80 years. The main change I saw was not so much TMax as Tavg. TMin for the 30 long range (roughly since 1880), rural, monthly histories I sampled demo’ed a roughly +0.35 C trend, with Tmax values in the +0.65 range. When I selected to remove the 1 std. deviation values, the classic skewed/tipped parabola emerged. The point is that variation and extreme fit seem to be resolution dependent in my experience. It is when we move to an annual value that the extremes clearly take on the classic trend.

It becomes curious to me as to how resolution plays into the analysis, have you insights into this?

Cheers!

Dave Cooke

oarobin @57 — The Wikipedia page on stable distributions is a place to start but I do recommend using your search engine to find Nolan’s chapter 1 of his forthcoming book on stable distributions.

I don’t produce web pages for my writeups; my discovery regarding the Levy distribution might be of interest to others and so mayhap be published.

I don’t entirely agree with Ray Ladbury’s comment at least for rare rainfall events; there are quite long records for some locations and from the satellite era worldwide data. But there is a substantive point behind insisting upon a stable distribution along the lines of Emmy Noether’s (first) Theorem; a symmetry discovered in nature leads to a conservation law. Since a stable distribution is given by the values of four parameters it seems plausible that some stable distribution ought to give a decent fit to the rainfall histogram for a given location. Then accepting that beauty is truth (and so stability is real) one can then read off the probability for exceeding a given amount, rare events which civil engineers need to plan for.

[I’ll just mention that I don’t currently see how to use these ideas for the rare events of droughts and heat waves.]

David, FWIW, I agree that rainfall events will be easier to fit to an extreme value distribution than will droughts and heat waves. After all, insurance companies have been doing just this for decades. That is also one reason why they are worried about climate change–the process generating extreme weather events may no longer be stationary.

Here’s the paper:

http://www.pik-potsdam.de/~stefan/Publications/Nature/rahmstorf_coumou_2011.pdf

Ray Ladbury @70: The Levy distribution is a pdf for all events, not just the extreme ones. (It has to be of Frechet type, i.e., type II, as it rolls off as a -3/2 power law way out at the right tail.) My goal is to justify a Levy distribution for daily rainfall totals based on some rather well-understood physics (which doesn’t appear to be known by hydrologists) in order to motivate those with lotsa data to see how well the Levy distribution fits the data. If it’s fairly good, one could then fine-tune by using a ‘nearby’ stable distribution; however I don’t intend to carry that very far forward.

Once done, I hope to be able to offer an approach which will better predict the recurrence time of rare rainfall events in the face of increasing temperatures. I opine it ought to do better than the usual EVT approaches using just the extreme events or even the (generalized) Pareto distribution, which perforce uses just the rare events as well, but more of those.

Since droughts and heat waves are well correlated, and having no decent ideas for a pdf to describe heat waves, I can’t go on to do the simplified physics of anti-rain, i.e., droughts. So unless a really good idea comes along, I’ll have to ignore that aspect of too-wet/too-dry to concentrate on just the too-wet part.

Does this paper or any other paper cover how temperature variability has changed with warming?

It seems trivial to me that if temperature variability stays the same, then there will eventually be more high temperature records over time if the overall trend is up.

Are there any studies that have documented statistically significantly more high temp extremes worldwide, i.e. not just in one location?

One of the things that continuous distributions, including those used in extreme value theory, ignore is that there are extreme values at which the thing they are representing breaks, and the next values of the variable being observed are simply an infinite repeat of the last extreme value. For example, a value of $0 for a bond or stock. There may be such points in the climate system.

Re: 74

Hey Eli,

For instance, Snowball Earth or possibly final sequence Red Star absorption of inner planets? That seems to define the potential range…, at least in the instance under discussion. Are you suggesting plateauing of trends, establishing a different baseline?

Cheers!

Dave Cooke

Filipzyk @ 73, Capital Climate http://capitalclimate.blogspot.com/ often shows a chart of high vs low records for the numerous weather stations in the US. It isn’t showing just now, but if you click on “older posts” at the bottom right a couple times you ought to find it.

Last two winters

Warm extremes versus cold snaps AGU

http://www.agu.org/news/press/pr_archives/2011/2011-30.shtml

Filipzyk @ 73/Pete Dunkelberg @ 76:

The latest analysis, through the summer, is here:

http://capitalclimate.blogspot.com/2011/09/us-heat-records-continue-crushing-cold.html

Thanks for the links everyone.

Thanks to John Byatt and Cap Climate for the links. John Byatt, this seems to be the paper:

“Recent warm and cold daily winter temperature extremes in the Northern Hemisphere” – Kristen Guirguis, Alexander Gershunov, Rachel Schwartz, and Stephen Bennett (2011)

http://meteora.ucsd.edu/cap/pdffiles/2011GL048762.pdf

ht AGW Observer

http://agwobserver.wordpress.com/2011/09/21/papers-on-northern-hemisphere-winters-2009-2010-and-2010-2011/

The last words of the paper may be of interest to some here:

It isn’t the continuity of a probability density function (pdf) but rather that physical (and economic) objects have state. A pdf is adequate for a memoryless object such as a pair of dice. If there is some form of memory then one studies a Markov process which is to represent the physical (or economic) system.

The mention of Snowball Earth in a previous comment reminded me of just how frequently I was surprised by the (relative) stability of Earth’s climate while reading and then studying Ray Pierrehumbert’s “Principles of Planetary Climate”. There is the Lovelock/Margulis Gaia hypothesis, which the thermodynamic lessons in “Out of the Cool” help keep in perspective. There is also Ward & Brownlee’s “Rare Earth” as well. Still in all, the stability continues to amaze.

Regarding oarobin’s earlier question about EVT versus Monte Carlo (MC) methods: I’m not impressed with the applications of EVT I’ve looked into regarding rainfall intensity; perhaps the fault is my own. I have now read the Stefan Rahmstorf and Dim Coumou PNAS paper linked at the beginning of this thread. I’m impressed by the clarity of the concise writing and only wish I could do somewhere near as well. For the questions addressed the methods appear to my amateur eyes to be completely sound and above reproach (other than the minor points addressed already in that paper). I will certainly keep this MC method in mind if I ever go so far as attempting to address the role of a warming world upon local rainfall, although I’m hoping to obtain an analytic expression for the particular (simplified) physics I have in mind.

How does this relate to:

http://dotearth.blogs.nytimes.com/2011/10/29/october-surprise-white-snow-on-green-leaves/

“October Surprise: White Snow on Green Leaves”

By ANDREW C. REVKIN?

It doesn’t seem at all reasonable to me that there would still be green leaves in the Hudson valley on October 29. Nor does it seem reasonable that snow would be a surprise on October 29, given that south of Buffalo, N.Y., it used to snow every September. The Hudson valley is only about 1400 feet lower in elevation, so the lapse rate shouldn’t do it.

Did variability grow with the warming? Did the Rossby waves suddenly rotate 45 degrees of longitude? I doubt that snow on October 29 is unprecedented in the historical record. The trees should have protected themselves by dropping their leaves. Will the trees die? September and most of October must have been like July to prevent the trees from “knowing” that it was time for the leaves to change color. Any comments on this?

Will the new climate force a change from broadleaf trees to evergreens? White snow on green broad leaves is indeed strange. Are seasons changing more abruptly now?

[Response: Good questions. There is some evidence for later dates of leaf drop in some locations, but I don’t have the details at my fingertips. Heavy snow before leaf drop is by no means unprecedented, particularly since early season snows tend to be very wet and heavy, as this one is. Yes, a lot of those deciduous trees will indeed die and others will sustain major damage that both weakens them and increases their susceptibility to pathogens. Deciduous trees do protect themselves by dropping their leaves, but since this process is dominated by photoperiod and climatic conditions, there’s no way they can just jettison all their leaves immediately in response to a freak storm. The leaves have to form an abscission layer, which is hormonally mediated, for them to drop, no matter how much weight you put on them. The amount of damage done would depend on how many leaves were in the process of doing this (i.e. about to drop), when the snow hit. Color change and leaf drop are temporally close, but not synchronous. You would have to have large and persistent/consistent changes in early season snow loads for that factor by itself to shift forests from deciduous to evergreen dominance, but it’s not impossible. A more likely contributor to such a shift is increasing aridity–conifers have the advantage in dryer climates, as a broad generalization. Lastly, at large spatial scales, there is more evidence for earlier springs than later autumns, but there is some evidence for the latter also.–Jim]

I’m trying to track down an article that was written up in RealClimate that made a very approachable argument for when something can be attributed to global warming. The idea was that if an event is far out of the ordinary but fits a global warming model very closely, a degree of probability can be assigned regarding the likelihood that the event was in fact due to global warming. If I remember correctly, they gave a probability for the French heat wave of 2003 being caused by global warming. Does anyone have a link to that article or the RealClimate writeup?

Erica Ackerman @83 — I don’t know about the 2003 West European heat wave, but the PNAS paper linked at the beginning of this thread offers a method to calculate that likelihood and does so for the East Europe/Central Asian heat wave of 2010.

@Edward Greisch #82

I got interested in this October event as well, so I downloaded GHCN for my most local weather station (which happens to be a rural site, so no UHIs for me!). The variable I was looking at is date of first freeze, since that’s the limiting factor I would think in October snows. For my location, the average date of first freeze is Nov. 5 with a standard deviation of 10+ days, so an event like this isn’t out of the ordinary.

Comparing 1900-1970 vs. 1970-2010, I found that there was barely any shift at all in the first freeze date (~1/2 of a day). However, the interesting thing I did find was that the date of first freeze was substantially more variable between 1970 and 2010, with a sigma of 10 days from 1900-1970 and 15 days from 1970-2010 (and it’s not just the smaller sample: 1900-2010 also had a larger standard deviation, of about 13). I also plotted the distribution of days and the increased variability was quite evident. Has anyone done a more global analysis along the same lines?
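For anyone who wants to repeat this kind of comparison on their own station, here is a minimal sketch. The freeze dates are synthetic stand-ins, not GHCN data: they are drawn around day 309 of the year (Nov. 5) with the two sigmas quoted above, and `spread_by_period` is a hypothetical helper name.

```python
import random
import statistics

def spread_by_period(freeze_doy, split_year):
    """Return ((mean, stdev) before split_year, (mean, stdev) from split_year on).

    freeze_doy maps year -> day of year of the first freeze.
    """
    early = [d for y, d in freeze_doy.items() if y < split_year]
    late = [d for y, d in freeze_doy.items() if y >= split_year]
    return (
        (statistics.mean(early), statistics.stdev(early)),
        (statistics.mean(late), statistics.stdev(late)),
    )

# Synthetic data only (assumption): same mean date, wider spread after 1970.
rng = random.Random(0)
freeze_doy = {}
for year in range(1900, 2011):
    sigma = 10 if year < 1970 else 15
    freeze_doy[year] = rng.gauss(309, sigma)  # day 309 = Nov. 5 in a non-leap year

(early_mean, early_sd), (late_mean, late_sd) = spread_by_period(freeze_doy, 1970)
print(f"before 1970: mean day {early_mean:.1f}, sd {early_sd:.1f}")
print(f"1970 onward: mean day {late_mean:.1f}, sd {late_sd:.1f}")
```

With only about 40 years in the later period, the estimated standard deviation is itself fairly uncertain, so a formal test of the variance ratio would be a sensible next step before reading much into the difference.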

Erica #83,

The 2003 article was Peter A. Stott, D. A. Stone, and M. R. Allen, “Human contribution to the European heatwave of 2003,” Nature 432, no. 7017 (December 2, 2004): 610-614.

The RC post was probably this:

https://www.realclimate.org/index.php/archives/2011/02/going-to-extremes/

fyi

http://rogerpielkejr.blogspot.com/2011/11/anatomy-of-cherry-pick.html

@87 ob:

Sigh.

Stefan,

I’m a statistician and this analysis bothers me. I’m NOT a global warming sceptic – the opposite in fact. One problem is I don’t have access to the paper, so I can only rely on the summary here.

This issue is attribution of a single local extreme seasonal weather event to a global trend.

[Response: No, the issue is the attribution of a local/regional record event to a local/regional trend.–Jim]

To a first approximation, there is only one global average temperature and we can do statistical inference on it on an ongoing basis. But there are numerous regional seasonal, monthly or daily temperatures. New York average in summer; Melbourne in January; hottest August night in Los Angeles etc.

It seems to me that the problem is we are naturally drawn to these extremes. And this is what has happened here. You have taken a single extreme, defined both by time (July) and a small region (around Moscow), and done an analysis on the basis that it was somehow independently chosen. If the analysis was done on Moscow after July 2009 without knowledge of the extreme event in 2010, the conclusion would be more credible.

[Response: That’s exactly what they did. They removed 2010 from the trend calculation and computed their numbers based on 1910 to 2009 and then also from 1880 to 2009. The 2010 value is not included–Jim]

A fair analysis would take into account the prior probability of picking that extreme event (defined both by location and time unit) after it occurred – probably unquantifiable – but as far as I know these analyses are not done a priori. Presumably the approach would be to define a large number of regional time slices and do a similar analysis.

If the same analysis (as in the paper) was done in 1939 – after the 1938 and 1936 records, one wonders if the conclusion would be even stronger. (An additional complication here is the climate time series show positive autocorrelation, so closely spaced records may not be as unusual as would first appear.)

[Response: If you did the same analysis before (not after), you would get the same basic finding, i.e. the climate was more likely non-stationary than stationary. Which it likely was, as the low frequency variation shows. And positive autocorrelation is what you expect in a trending series, the opposite of expectation in a non-trending one having the same amount of white noise. If the trend is upward, you will get record and near record high temperatures temporally close to each other–Jim]

I work in medical stats rather than climate stats, but I often wonder how extreme local events could be attributed to global warming. It seems to me the magnitude of the event is important – this maximum is 3 degrees greater than the previous – but this also suffers from selection bias. Repetition is important, but autocorrelation must be considered. In summary this is a non-trivial statistical problem.

[Response: Yes, the amount by which the 1930s record was beaten is yet more evidence that the cause of the 2010 record event is due to non-stationarity of the climate.–Jim]

MOSCOW | Mon Oct 25, 2010 3:21pm EDT

MOSCOW (Reuters) –

Nearly 56,000 more people died nationwide this summer than in the same period last year, said a monthly Economic Development Ministry report on Russia’s economy.

http://www.reuters.com/article/2010/10/25/us-russia-heat-deaths-idUSTRE69O4LB20101025

Bruce – the paper is freely available. How about reading it first? Try here.

@87 & 88

Are we feeling devastated yet?

See Roger’s comment on the NOAA draft.

I have a concern about nonlinear trending that shows increased warming in the 1980-2009 period:

Global temperatures, especially northern hemisphere temperatures, have a periodic, apparently naturally occurring cycle showing up in HadCRUT3.

I made some attempts at using Fourier analysis on HadCRUT3. I found a sinusoidal component holding up for 2 cycles (1876-2004) with a period of 64 years and an amplitude of 0.218 degrees C peak-to-peak, with peaks at 2004, 1940, and 1876. What I suspect causes this is the AMO and a possibly loosely linked long-period component of the PDO. This accounts for nearly half the warming from the 1973 dip to the 2005 peak in smoothed HadCRUT3.
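A rough sketch of how a single sinusoidal component can be pulled out of an annual series by Fourier projection. It runs on a synthetic cosine with the period, amplitude, and peak years quoted above, not on HadCRUT3, and `fourier_component` is a hypothetical helper name. It assumes evenly spaced values spanning a whole number of periods.

```python
import math

def fourier_component(values, period):
    """Peak-to-peak amplitude and phase of one sinusoid via Fourier projection.

    Assumes evenly spaced samples covering a whole number of periods.
    """
    n = len(values)
    mean = sum(values) / n
    w = 2 * math.pi / period
    a = 2 / n * sum((v - mean) * math.cos(w * t) for t, v in enumerate(values))
    b = 2 / n * sum((v - mean) * math.sin(w * t) for t, v in enumerate(values))
    return 2 * math.hypot(a, b), math.atan2(b, a)

# Synthetic series only (assumption): 128 annual values, 1876-2003,
# a pure 64-year cosine peaking in 1876 and 1940.
years = range(1876, 2004)
series = [0.109 * math.cos(2 * math.pi * (y - 1876) / 64) for y in years]

peak_to_peak, phase = fourier_component(series, 64)
print(f"peak-to-peak amplitude: {peak_to_peak:.3f} degrees C")
```

One caveat with this kind of fit: a long-term trend leaks into low-frequency Fourier components, so a trending series has to be detrended first, and the amplitude obtained depends on how that detrending is done.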

So, I would be concerned about warming in a time period largely coinciding with 1972-2004 being used as an indicator of how much warming is to be expected in the future.

Way back at # 74, Eli wrote: “One of the things that continuous distribution including those represented by extreme value theory ignore, is that there are extreme values at which the thing they are representing breaks and the next values of the variable being observed are simply an infinite repeat of the last extreme value. For example a value of $0 for a bond or stock. There may be such points in the climate system.”

I’ll suggest that these breaks in variable pattern are much more likely in climate proxy data than in the physical measurements of temperature, rainfall, etc in climate change.

The notion that the only significant break points in climate change trends would be “Snowball earth”, or “final sequence Red Star absorption of inner planets” is disingenuous, and distracting. Climate change on earth is unlikely to come anywhere near either end of that silly continuum, but proxy data already demonstrate a number of absolutist dynamic trends.

It’s not hard to argue that a primary motivation for the denial noise about global warming is to shift attention from those proxy data.

Tree rings do not accumulate when the tree dies due to drought, or after shifts in timing of first/last frost, or if bark beetle infestations surge due to milder deep frost in winter (as is absolutely true across millions of acres of evergreen forest in the US West and Alaska). A graph of human quality of life for residents in Shishmaref, Alaska will soon hit a static point, when the village moves inland to avoid encroaching sea level (and most residents scatter). Rising mortality due to hotter regional summer temps suggests a number of static points on the graph (50,000 in one summer, in one relatively small area?).

Of course insurance companies are worried – denial doesn’t work when losses are there in front of the agent. Oil and coal companies and their shills focus on denying the larger question, to postpone the inevitable attribution of harm and need for lifestyle changes for as many news cycles (and personal fortune accumulations) as can be.

Way back at #74, Eli said: “One of the things that continuous distribution including those represented by extreme value theory ignore, is that there are extreme values at which the thing they are representing breaks and the next values of the variable being observed are simply an infinite repeat of the last extreme value. There may be such points in the climate system.”

The notion that the only relevant extremes in climate change are “Snowball Earth or possibly final sequence Red Star absorption of inner planets” is disingenuous, as if only a single global average temperature matters, and distracting from the multitude of climate proxy data which absolutely do show absolutist break points.

Tree rings no longer vary in annual growth when the tree dies due to drought, or change in frost cycle, or bark beetles surge due to milder winter minimums (as is absolutely the case over millions of acres of evergreen forest in the US West and Alaska). Quality of life trends in Shishmaref, Alaska will reach static points on graphs when the village site is abandoned due to encroaching sea level, and the residents scatter. Proxy data have been useful to supplement and confirm measurable weather parameters in defining climate, and have even more value in defining the impact of the changes they document.

Of course insurance companies are worried: denial does not work when the damage is there in front of the agent. Oil and coal folks, and their willing shills, continue to deny the larger reality of global warming, to postpone any discussion of attribution for as many news cycles (and installments to personal fortune) as possible.

Maybe the next step is to shift focus on the proxy data from confirmation of climate change, to what it does even better – provide defining evidence of how we are spoiling the nest for ourselves, and not destroying the earth as snowball or red death. The debate over reality of global warming is settled for anyone seriously looking, and is simply the wrong old question.

Paul Middents – So Roger Pielke Jnr has a small coterie of sycophants. Is that unusual for fantasists? It’s difficult to ascertain whether he can’t understand Rahmstorf & Coumou’s paper, or his ideological blinkers are so powerful that he’s hell-bent on misrepresenting what they did.

[Response: He definitely doesn’t understand the paper, that’s for sure. Textbook case of misrepresentation. We’ll try to explain it to him one more time.–Jim]

As for the NOAA page, I wonder why they shift their definition of “Western Russia” in their response? Seems to be a strong warming hole in the north-east segment of their map, which includes Moscow. I’ve had a brief look at the RSS satellite temp data. Their global map shows strong warming in the region (from 1979 onwards), but I lack the technical nous to construct a decent image.

Also the NOAA guys seem to be somewhat inconsistent. Their analysis of the physical changes (attribution) was restricted in scope (as Kevin Trenberth pointed out), but their statistical analysis is more broad in scope. Seems a bit schizophrenic.

I guess it boils down to this: which is the better indicator of record-breaking warm extremes in Moscow, the local temp series or Western Russia temps?

Re: #89 (Bruce Tabor)

If I understand you correctly, then I think there’s something to what you say, which boils down to this: we wouldn’t even be talking about Moscow if it hadn’t had such an extreme heat wave. Hence we’re not discussing New York, Melbourne, or Los Angeles. Given that Moscow was chosen because of its temperature record, the extreme isn’t so unlikely, so its attribution to climate change is less plausible.

But it seems to me that this paper isn’t about “How likely is this extreme with climate change?” It’s about “How much more likely is this extreme with climate change, than without?” The fact that Moscow is chosen *because* of its heat wave doesn’t alter that likelihood ratio. In fact the main result of the paper doesn’t depend on observed data at all — no matter what the data or its origin, the likelihood of a new record changes when the time series is nonstationary, and when the series mean has shifted by a notable amount new records become far more likely. In addition: that seems to be what has happened in Moscow.
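The likelihood-ratio point can be illustrated with a small Monte Carlo sketch. This is not the calculation from the paper: it assumes Gaussian white noise with unit standard deviation, a linear trend, and a made-up trend of 0.02 standard deviations per year, and `record_probability` is a hypothetical helper name. For a stationary series of independent values, the chance that year n sets a new record is 1/n, so the stationary estimate should land near 0.01 for n = 100.

```python
import random

def record_probability(n_years, trend_per_year, n_trials=10000, seed=1):
    """Estimate P(final value is a new record high) for white noise with sd = 1."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        series = [trend_per_year * t + rng.gauss(0, 1) for t in range(n_years)]
        if series[-1] > max(series[:-1]):
            hits += 1
    return hits / n_trials

stationary = record_probability(100, 0.0)   # theory for independent values: 1/100
trending = record_probability(100, 0.02)    # hypothetical trend, in sd per year
print(f"stationary: {stationary:.4f}")
print(f"trending:   {trending:.4f}")
print(f"ratio:      {trending / stationary:.1f}")
```

The ratio of the two estimates is the "how much more likely" quantity; it depends on the trend-to-noise ratio and the series length, not on why a particular station was picked for study.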

I’ll also mention that the autocorrelation of, say, Moscow temperature for a given single month’s time series (say, July) is pretty weak. And if you take the view that the time series is nonstationary, then we should look at the autocorrelation of the residuals from the nonlinear trend, which look to me to be indistinguishable from zero.
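A quick way to check this on any series is to compare the lag-1 autocorrelation of the raw values with that of the residuals after removing a trend. The sketch below uses synthetic data (white noise on a hypothetical trend of 0.03 per year) and a straight-line fit as a simple stand-in for the nonlinear trend; `lag1_autocorrelation` and `linear_residuals` are illustrative names.

```python
import random

def lag1_autocorrelation(values):
    """Lag-1 autocorrelation coefficient of a sequence."""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    return sum(dev[i] * dev[i + 1] for i in range(n - 1)) / sum(d * d for d in dev)

def linear_residuals(values):
    """Residuals from an ordinary least-squares straight line."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    slope = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values)) / sum(
        (t - t_mean) ** 2 for t in range(n)
    )
    return [v - (v_mean + slope * (t - t_mean)) for t, v in enumerate(values)]

# Synthetic data only (assumption): 130 values, noise sd = 1, trend 0.03 per year.
rng = random.Random(42)
series = [0.03 * t + rng.gauss(0, 1) for t in range(130)]

print("raw series:", round(lag1_autocorrelation(series), 2))
print("residuals: ", round(lag1_autocorrelation(linear_residuals(series)), 2))
```

In a setup like this the raw series shows clearly positive autocorrelation that comes entirely from the trend, while the residuals should sit close to zero.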

[Response: Hi Tamino, we’ve thought quite a bit about this issue as well. If the five-fold increase in the expected number of records was something special to Moscow, and we had picked Moscow because of the record found there, then this would be a concern. But of course we’ve done this analysis for the whole globe, for all months, and this five-fold increase is nothing special – neither in the expected number of records (based on the trends) nor in the number actually observed. But that is another paper. -stefan]

Re: #97 (Tamino)

Some previous comments wandered very close to a post hoc fallacy, this nails it:

“But it seems to me that this paper isn’t about “How likely is this extreme with climate change?” It’s about “How much more likely is this extreme with climate change, than without?”

The likelihood that this extreme occurred is unity, 100% probability, with or without climate change. This gets back to the best known post hoc fallacy, “with all the possible outcomes of the universe, what is the probability that it would end up the way it did?” The answer again is unity.

[Response: That’s not what he meant. He’s talking about relative strengths of evidence before the fact.–Jim]

Jim and Tamino,

First some common ground:

“no matter what the data or its origin, the likelihood of a new record changes when the time series is nonstationary, and when the series mean has shifted by a notable amount new records become far more likely.”

Simple and clear. However, independent of climate change, what is the probability that a new high temp record will be set somewhere next year? I assert that it is close to unity in any given year.

[Response: That’s a function of the number of stations you evaluate and the record length at each one. Whether it’s close to one or not cannot be stated ahead of time without that information. If you mean every station in the GHCN record, then yes, you are right, because there are a lot of them. Every station in Iowa, then probably not]
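The dependence on station count and record length can be made concrete with a back-of-envelope sketch. It assumes a stationary climate, independent years, and fully independent stations, which is unrealistic because neighbouring stations are correlated, so the numbers are upper bounds at best. The station counts are made up for illustration and `prob_any_record` is a hypothetical helper name.

```python
def prob_any_record(n_stations, record_length):
    """P(at least one station sets a new record high next year).

    Assumes stationary, independent years and independent stations, so each
    station breaks its record with probability 1 / (record_length + 1).
    """
    p_single = 1 / (record_length + 1)
    return 1 - (1 - p_single) ** n_stations

# Illustrative station counts only, each with a 100-year record.
for n_stations in (1, 100, 5000):
    print(n_stations, round(prob_any_record(n_stations, 100), 3))
```

Under these assumptions a single station has about a 1% chance, a hundred independent stations about 63%, and several thousand make a record somewhere nearly certain. Stations packed into one region behave like far fewer independent ones, which pulls the regional figure down.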

I further assert that if the temperature record at that site is broadly similar to the Moscow record (nonlinear increasing trend), an analysis will yield similar results to what is shown in the paper. I think you need a statistically significant number of extreme events temporally clustered to avoid the post hoc fallacy and satisfy the claim of “more likely.” I just don’t see how you can look at one extreme event a posteriori and ascribe some level of improbability to the null hypothesis that it was caused by natural variation.

[Response: Yes, that’s the point. A record similar to the Moscow record demonstrates a nonlinear trend–the values are autocorrelated to some degree. They wouldn’t be so in a stationary situation. This is evidence that the record is more likely due to the trend than to combined chance and stationarity. It does get tricky though if you have these multi-decadal scale oscillations overlaid on the trend. Then you need more time, or more series, to get the same level of confidence. But that’s sort of a different point than you’re making isn’t it? By the way, this was fished out of the spam folder.–Jim]

Re: 95

Hey Phil,

If you will please notice in a discussion of extremes, recognition of the boundaries or range are used to establish a mean. It depends on the definition of the hypothesis to define the analysis being explored. I was mainly hoping for Eli to share the hypothesis…

Hence, if we define the range of the extremes to be the conditions under which life could possibly be seen on Earth, I suggested one possible range (keeping in mind that at some point in the final sequence Earth will become tidally locked, and that living organisms have been detected at depths of over several hundred feet underground).

If you are stating that the extremes are in reference to the maintenance of the climate of between 1880 and 1990 then the extremes are much smaller. However, it does raise a question as to how different would this 110yr period be when compared to the last 3ky, or 30ky? The issue is we have better measures of the changes between 12kya to today than we have of 24kya to 12kya.

As to extremes, clearly the current extremes have not been seen in over 1000 yrs. Sorry, I had not meant to be offensive, I was only hoping for a clarification. As I had stated elsewhere there have been rates of change at least as rapid in the last 130yrs, just the drivers were different and the baseline/mean lower. (Tilted parabola)

If we look at total outliers in a more variable climate (similar to the pre-anthropogene), the ratio of high to low shows that the higher variation counts in the last 130yrs do appear more often, when looking at my local records; however, overall my region is not very representative. There are changes occurring; the difficult thing for me is trying to define the processes involved.

Personally, I can see where changes in weather patterns can drive the averages higher, I am trying to understand how CO2 is driving the weather patterns. Applying an extreme mathematical tool in an attempt to differentiate between natural distribution and a forced/skewed distribution can be as simple as a dot plot. If we have multiple peaks a dot plot would easily point to multiple processes being measured. Likewise if the outliers show a skew the evidence should be clear. An examination of extremes would not seem to offer a clearer image, the point I guess is, what’s the point?

Cheers!

Dave Cooke