This week, PNAS published our paper Increase of Extreme Events in a Warming World, which analyses how many new record events you expect to see in a time series with a trend. It does that with analytical solutions for linear trends and Monte Carlo simulations for nonlinear trends.

A key result is that the number of record-breaking events increases depending on the ratio of trend to variability. Large variability reduces the number of new records – which is why the satellite series of global mean temperature have fewer expected records than the surface data, despite showing practically the same global warming trend: they have more short-term variability.
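To make the role of the trend-to-variability ratio concrete, here is a minimal sketch (not the code used for the paper). It builds synthetic series from a linear trend plus Gaussian white noise and counts record highs. The function name, the trend of 0.02 degrees per year and the noise levels are all illustrative assumptions.

```python
import random


def mean_record_count(trend_per_year, noise_sd, n_years=100, n_runs=2000, seed=1):
    """Average number of record highs in synthetic series (linear trend + white noise)."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_runs):
        running_max = float("-inf")
        for year in range(n_years):
            value = trend_per_year * year + rng.gauss(0.0, noise_sd)
            if value > running_max:  # new record high (the first year always counts)
                running_max = value
                total += 1
    return total / n_runs


if __name__ == "__main__":
    trend = 0.02  # hypothetical trend, degrees per year
    for noise_sd in (0.2, 0.5, 1.0):  # hypothetical variability levels
        ratio = trend / noise_sd
        print(f"trend/sd = {ratio:.3f}: {mean_record_count(trend, noise_sd):.1f} records")
    print(f"no trend:          {mean_record_count(0.0, 1.0):.1f} records")
```

With the trend held fixed, the runs with larger noise should show fewer records, and the no-trend case should come out close to the stationary expectation 1 + 1/2 + ... + 1/100, which is about 5.2.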

Another application shown in our paper is to the series of July temperatures in Moscow. We conclude that the 2010 Moscow heat record is, with 80% probability, due to the long-term climatic warming trend.

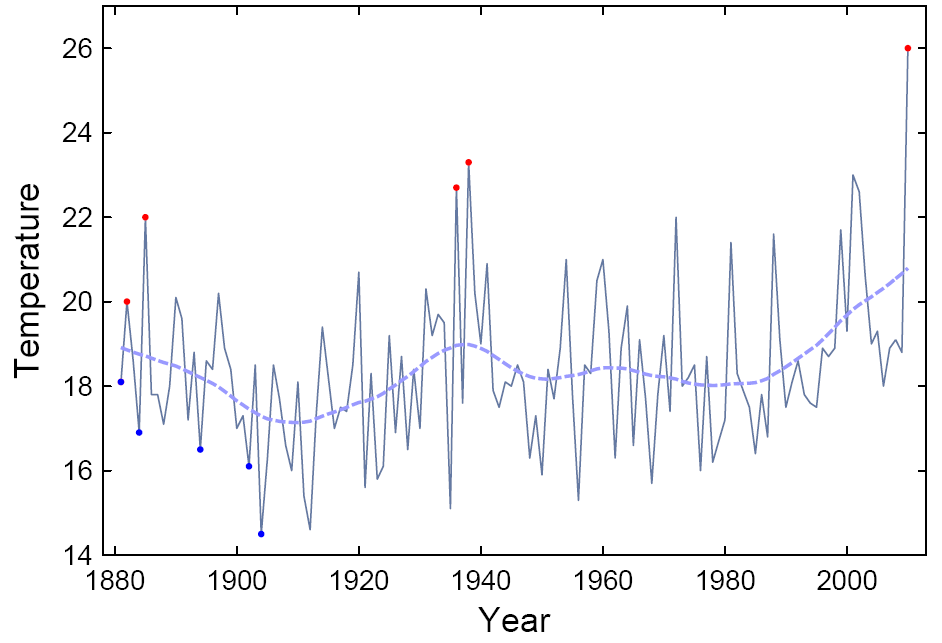

Figure 1: Moscow July temperatures 1880-2010. New records (hottest or coldest months until then) are marked with red and blue dots. Dashed is a non-linear trend line.

With this conclusion we contradict an earlier paper by Dole et al. (2011), who put the Moscow heat record down to natural variability (see their press release). Here we would like to briefly explain where this difference in conclusion comes from, since we did not have space to cover this in our paper.

The main reason Dole et al. conclude that climatic warming played no role in the Moscow heat record is that they found no warming trend in July in Moscow. They speak of a “warming hole” in that region, and show this in Fig. 1 of their paper. Indeed, the linear July trend since 1880 in the Moscow area in their figure is even slightly negative. In contrast, we find a strong warming trend. How come?

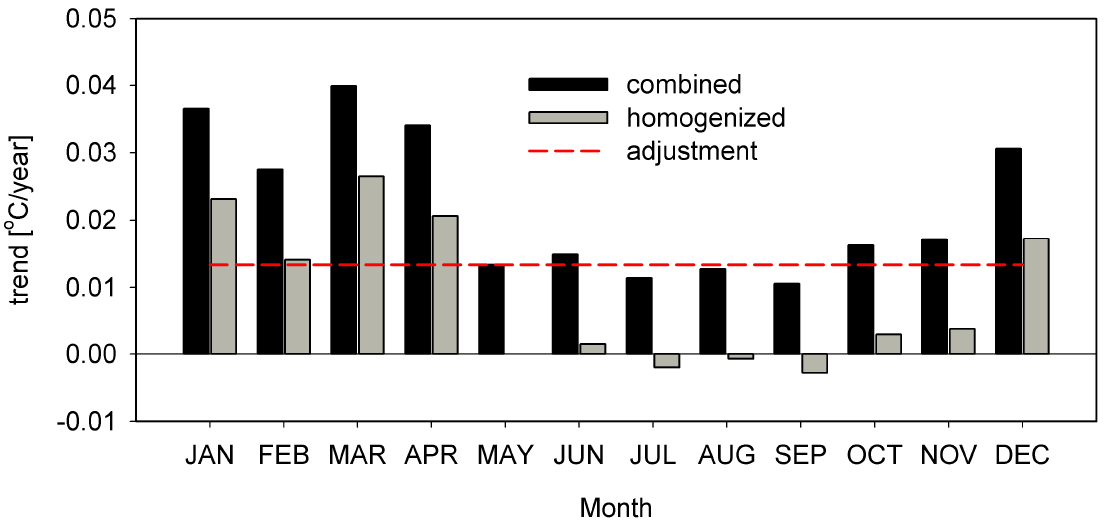

The difference, we think, boils down to two factors: the urban heat island correction and the time interval considered. Dole et al. relied on linear trends since 1880 from standard gridded data products. The figure below shows these linear trends for the GISS data for each calendar month, for two data versions provided by GISS: unadjusted and ‘homogenised’. The latter involves an automatic correction for the urban heat island effect. We immediately see that the trend for July is negative in the homogenised data, just as shown by Dole et al. (Randall Dole has confirmed to us that they used data adjusted for the urban heat island effect in their study.)

Figure 2: Linear trends since 1880 in the NASA GISS data in the Moscow area, for each calendar month.

But the graph shows some further interesting things. Winter warming in the unadjusted data is as large as 4.1°C over the past 130 years, summer warming about 1.7°C – both much larger than global mean warming. Now look at the difference between adjusted and unadjusted data (shown by the red line): it is exactly the same for every month! That means: the urban heat island adjustment is not computed for each month separately but just applied as an annual average, and it is a whopping 1.8°C downward adjustment. This leads to a massive over-adjustment for the urban heat island in summer, because the urban heat island in Moscow is mostly a winter phenomenon (see e.g. Lokoshchenko and Isaev). This unrealistic adjustment turns a strong July warming into a slight cooling. The automatic adjustments used in global gridded data probably do a good job for what they were designed to do (remove spurious trends from global or hemispheric temperature series), but they should not be relied upon for more detailed local analysis, as Hansen et al. (1999) warned: “We recommend that the adjusted data be used with great caution, especially for local studies.” Urban adjustment in the Moscow region would be on especially shaky ground given the lack of coverage in rural areas. For example, in the region investigated by Dole et al. (50N-60N/35E-55E), no single (or combined) rural GISS station (with a population less than 10,000) covers the post-Soviet era, a period when Moscow expanded rapidly.

For this reason we used unadjusted station data (i.e. the “GISS combined Moskva” data) and also looked at various surrounding stations, as well as talking to scientists from Moscow. In our study we were first interested in how the observed local warming trend in Moscow would have increased the number of expected heat records – regardless of what caused this warming trend. What contribution the urban heat island might have made to it was only considered subsequently.

We found that the observed temperature evolution since 1880 is only very poorly characterized by a linear trend, so we used a non-linear trend line (see Fig. 1 above) together with Monte Carlo simulations. What we found, as shown in Fig. 4 of our paper, is that up to the 1980s, the expected number of records does not deviate much from that of a stationary climate, except for the 1930s. But the warming since about 1980 has increased the expected number of records in the last decade by a factor of five. (That gives the 80% probability mentioned at the outset: out of five expected records, one would have occurred also without this warming.)
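The arithmetic behind the 80% figure can be written out as follows. The symbols are ours for this post: $N_{\text{trend}}$ is the expected number of records in the last decade with the warming trend and $N_{\text{stat}}$ the expected number in a stationary climate, with the ratio taken as the rounded factor of five.

$$
P = \frac{N_{\text{trend}} - N_{\text{stat}}}{N_{\text{trend}}} = 1 - \frac{N_{\text{stat}}}{N_{\text{trend}}} \approx 1 - \frac{1}{5} = 0.8
$$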

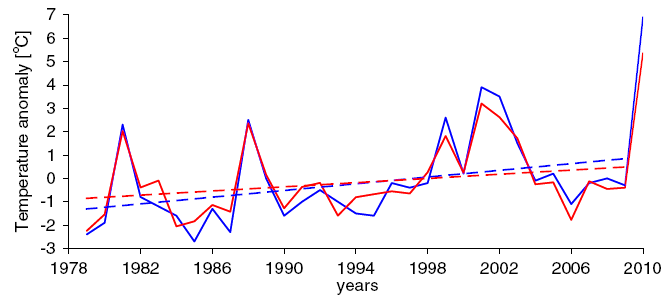

Figure 3: Comparison of temperature anomalies from RSS satellite data (red) over the Moscow region (35°E–40°E, 54°N–58°N) versus Moscow station data (blue). The solid lines show the average July value for each year, whereas the dashed lines show the linear trend of these data for 1979–2009 (i.e., excluding the record 2010 value).

So is this local July warming in Moscow since 1980 just due to the urban heat island effect? That question is relatively easy to answer, since for this time interval we have satellite data. These show for a large region around Moscow a linear warming of 1.4°C over the period 1979-2009. That is (even without the high 2010 value) a warming three times larger than the global mean!

So much for the “Moscow warming hole”.

Stefan Rahmstorf and Dim Coumou

Related paper: Barriopedro et al. have recently shown in Science that the 2010 summer heat wave set a new record Europe-wide, breaking the previous record heat of summer 2003.

PS (27 October): Since at least one of our readers failed to understand the description in our paper, here we give an explanation of our approach in layman’s terms.

The basic idea is that a time series (global or Moscow temperature or whatever) can be split into a deterministic climate component and a random weather component. This separation into a slowly-varying ‘trend process’ (the dashed line in Fig. 1 above) and a superimposed ‘noise process’ (the wiggles around that dashed line) is textbook statistics. The trend process could be climatic changes due to greenhouse gases or the urban heat island effect, whilst the noise process is just random variability.

To understand the probabilities of hitting a new record that result from this combination of deterministic change and random variations, we perform Monte Carlo simulations, so we can run this situation not just once (as the real Earth did) but many times. Just like rolling dice many times to see what the odds are to get a six. This is extremely simple: for one shot of this we take the trend line and add random ‘noise’, i.e. random numbers with suitable statistical properties (Gaussian white noise with the same variance as the noise in the data). See Fig. 1 ABC of our paper for three examples of time series generated in this way. We do that 100,000 times to get reliable statistics.
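The procedure just described can be sketched in a few lines. This is an illustration under stated assumptions, not the code used for the paper: the trend line below is a made-up shape (flat for 70 years, then warming), the noise standard deviation of 1.5 is a placeholder, and we use 10,000 runs rather than 100,000 to keep it quick.

```python
import random


def expected_recent_records(trend, noise_sd, window=10, n_runs=10000, seed=0):
    """Monte Carlo estimate of the expected number of new record highs
    falling in the last `window` years of a series = trend + white noise."""
    rng = random.Random(seed)
    n = len(trend)
    total = 0
    for _ in range(n_runs):
        running_max = float("-inf")
        for i, trend_value in enumerate(trend):
            value = trend_value + rng.gauss(0.0, noise_sd)
            if value > running_max:
                running_max = value
                if i >= n - window:  # only count records set in the final window
                    total += 1
    return total / n_runs


if __name__ == "__main__":
    flat = [0.0] * 100  # stationary climate
    # Hypothetical nonlinear trend: no change for 70 years, then 0.05 per year.
    warming = [0.0] * 70 + [0.05 * (k + 1) for k in range(30)]
    print("stationary:", expected_recent_records(flat, noise_sd=1.5))
    print("with trend:", expected_recent_records(warming, noise_sd=1.5))
```

For the flat case the estimate should land near the analytical value for a 100-year stationary series, roughly 0.105 records in the last decade. The warming case comes out several times larger.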

There is a scientific choice to be made on how to determine the trend process, i.e. the deterministic climate change component. One could use a linear trend – not a bad assumption for the last 30 years, but not very smart for the period 1880-2009, say. For a start, based on what we know about the forcings and the observed evolution of global mean temperature, why would one expect climate change to be a linear warming since 1880 in Moscow? Hence we use something more flexible to filter out the slow-changing component, in our case the SSAtrend filter of Moore et al., but one could also use e.g. a LOESS or LOWESS smooth (as preferred by Tamino) or something else. That is the dashed line in Fig. 1 (except that in Fig. 1 it was computed including the 2010 data point; in the paper we excluded that to be extra conservative, so the warming trend we used in the analysis is a bit smaller near the end).
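For readers who want to try a flexible trend themselves, here is a minimal LOESS-style smoother in plain Python. To be clear, this is not the SSAtrend filter we used. It is a simple tricube-weighted local linear fit without the robustness iterations of full LOWESS, and the function name and the `frac` parameter are our own choices for this sketch.

```python
def local_linear_smooth(years, values, frac=0.5):
    """LOESS-style smooth: tricube-weighted local linear fit at each year."""
    n = len(years)
    k = min(n, max(3, int(frac * n)))  # number of neighbours in each local window
    smoothed = []
    for x0 in years:
        # Bandwidth = distance to the k-th nearest point (fall back to 1.0 if zero).
        bandwidth = sorted(abs(x - x0) for x in years)[k - 1] or 1.0
        sw = swx = swy = swxx = swxy = 0.0
        for x, y in zip(years, values):
            u = abs(x - x0) / bandwidth
            if u >= 1.0:
                continue
            w = (1.0 - u ** 3) ** 3  # tricube weight
            dx = x - x0
            sw += w
            swx += w * dx
            swy += w * y
            swxx += w * dx * dx
            swxy += w * dx * y
        denom = sw * swxx - swx * swx
        if denom == 0.0:
            smoothed.append(swy / sw)  # degenerate window: weighted mean
        else:
            # Intercept of the weighted least-squares line, i.e. the fit at x0.
            smoothed.append((swxx * swy - swx * swxy) / denom)
    return smoothed


if __name__ == "__main__":
    years = list(range(1880, 2010))
    # Made-up series: flat until 1980, then warming by 0.05 per year.
    values = [0.0 if y < 1980 else 0.05 * (y - 1980) for y in years]
    trend = local_linear_smooth(years, values, frac=0.3)
    print(round(trend[0], 3), round(trend[-1], 3))
```

A smaller `frac` follows the data more closely; a larger one gives a stiffer curve. Whatever smoother is used, the residuals still need to be checked as described next.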

After making such a choice for determining the climate trend, one then needs to check whether this was a good choice, i.e. whether the residual (the data after subtracting this trend) is indeed just random noise (see Fig. 3 and Methods section of our paper).
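One simple way to look at this is the lag-1 autocorrelation of the residuals, sketched below. This is only an illustrative check and not necessarily the test applied in the paper; the function names are ours, and the band of 1.96 divided by the square root of n is the usual large-sample approximation for white noise.

```python
import math
import random


def lag_autocorrelation(residuals, lag=1):
    """Sample autocorrelation of the residuals at the given lag."""
    n = len(residuals)
    mean = sum(residuals) / n
    dev = [r - mean for r in residuals]
    denom = sum(d * d for d in dev)
    num = sum(dev[i] * dev[i + lag] for i in range(n - lag))
    return num / denom


def white_noise_band(n):
    """Approximate 95% band for the autocorrelation of white noise of length n."""
    return 1.96 / math.sqrt(n)


if __name__ == "__main__":
    rng = random.Random(42)
    residuals = [rng.gauss(0.0, 1.0) for _ in range(130)]  # stand-in for data minus trend
    r1 = lag_autocorrelation(residuals)
    print(f"lag-1 autocorrelation {r1:.3f}, band +/- {white_noise_band(130):.3f}")
```

If the lag-1 value falls well outside the band, the chosen trend has left structure in the residuals and the white-noise assumption behind the Monte Carlo runs is in doubt.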

One thing worth mentioning: whether a new heat record is hit in the last ten years only depends on the temperature of the last ten years and on the value of the previous record. Whether one includes the pre-1910 data or not only matters if the previous record dates back to 1910 or earlier. That has nothing to do with our choice of analysis technique but is simply a logical fact. In the real data the previous record was from 1938 (see Fig. 1) and in the Monte Carlo simulations we find that only rarely does the previous record date back to before 1910, hence it makes just a small difference whether one includes those earlier data or not. The main effect of a longer time series is that the expected number of recent new records in a stationary climate gets smaller. So the ratio of extremes expected with climate change to that without climate change gets larger if we have more data. That is, the probability that the 2010 record was due to climate change is a bit larger if it was a 130-year heat record than if it was only a 100-year heat record. But this is anyhow a non-issue, since for the final result we did the analysis for the full available data series starting from 1880.
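The effect of series length on the stationary expectation follows from the 1/n law: in a stationary series, year i has a 1/i chance of setting a new record. The sketch below sums those chances over the last decade for a 100-year and a 130-year series. The function names are ours; the value 0.47 for the expected number of records with the trend is the one quoted from the paper in the discussion below, and holding it fixed for both series lengths is an assumption made purely for illustration.

```python
def expected_stationary_records(n_years, window=10):
    """Expected number of new records in the last `window` years of a
    stationary series of length n_years (sum of 1/i over those years)."""
    return sum(1.0 / i for i in range(n_years - window + 1, n_years + 1))


def fraction_due_to_trend(expected_with_trend, expected_stationary):
    """Share of expected records that would not have occurred without the trend."""
    return (expected_with_trend - expected_stationary) / expected_with_trend


if __name__ == "__main__":
    with_trend = 0.47  # value quoted from the paper; held fixed here as an assumption
    for n_years in (100, 130):
        stationary = expected_stationary_records(n_years)
        share = fraction_due_to_trend(with_trend, stationary)
        print(f"{n_years} years: stationary {stationary:.3f}, share due to trend {share:.2f}")
```

The 100-year case gives a stationary expectation of about 0.105 and a share of about 78%; the 130-year case gives about 0.080 and a share just over 80%.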

Update 16 January 2013: As hinted at in the discussion, at the time this was published we had already performed an analysis of worldwide temperature records which confirms that our simple statistical approach works well. In global average, the number of unprecedented heat records over the past ten years is five times higher than in a stationary climate, based on 150,000 temperature time series starting in the year 1880. We did not discuss this in public at the time because it was not published yet in the peer-reviewed literature. Now it is.

In a typical display of good faith honest brokery, Roger Pielke Jr has accused you of playing games, being uninterested in furthering scientific knowledge, publishing the paper as an attempt to generate media coverage and generally being a pair of untrustworthy sneaks. Nice.

http://rogerpielkejr.blogspot.com/2011/10/games-climate-scientists-play.html

A pretty disgraceful attack if you ask me.

[Response: It is factually wrong, too. Pielke claims we did not report the sensitivity to the start date, but we did. With start date 1910 we get a probability of 78% that the record heat was due to the warming trend, and with start date 1880 we get a probability of just over 80%. It says so very clearly in the passage that Pielke emailed me about:

“Because July 2010 is by far the hottest on record, including it in the trend and variance calculation could arguably introduce an element of confirmation bias. We therefore repeated the calculation excluding this data point, using the 1910–2009 data instead, to see whether the temperature data prior to 2010 provide a reason to anticipate a new heat record. With a thus revised nonlinear trend, the expected number of heat records in the last decade reduces to 0.47, which implies a 78% probability [(0.47 − 0.105)∕0.47] that a new Moscow record is due to the warming trend. This number increases to over 80% if we repeat the analysis for the full data period in the GISS database (i.e., 1880–2009), rather than just the last 100 y, because the expected number for stationary climate then reduces from 0.105 to 0.079 according to the 1∕n law.”

The only reason why some of our analysis covers 100 years is because it started out as a purely theoretical study with synthetic time series produced by Monte Carlo simulations, and for those I just picked 100 years. Only later did we apply it to some observational series as practical examples.

Very sinister games indeed!

stefan]

Furthermore, I note from the comments of his blog that Roger has sent Stefan an email (somewhat more polite than his blog post) asking for clarification of the research. One would have thought he would do this before launching a blog based attack. I wonder what on earth makes him think that writing an innuendo laced diatribe and then sending an email to your target is a reasonable way to proceed?

[Response: Well, this approach has worked very well for McTiresome.–eric]

Truly excellent to see progress in this area.

Thank you Stefan and Dim Coumou

Stefan,

Roger informs me that you have confirmed his critique, and calls it a “fine example of cherrypicking”:

“Rahmstorf confirms my critique (see the thread), namely, they used 1910-2009 trends as the basis for calculating 1880-2009 exceedence probabilities. You can call that jackassery or whatever. I call it math and stand by my critique. A finer example of cherrypicking you will not find.”

[Response: That is truly bizarre, since what I responded to Pielke (in full) was: “We did not try this for a linear trend 1880-2009. The data are not well described by a linear trend over this period.” As shown in the paper and above, our main conclusion regarding Moscow (the 80% probability) rests on our Monte Carlo simulations using a non-linear trend line, and of course is based on the full data period 1880-2009. Nowhere did we “use 1910-2009 trends as the basis for calculating 1880-2009 exceedence probabilities”, and I can’t think why doing this would make sense. Faced with this kind of libelous distortion I will not answer any further questions from Pielke now or in future. As an aside, our paper was reviewed not only by two climate experts but in addition by two statistics experts coming from other fields. If someone thinks that using a linear trend would have been preferable, that is fine with me – they should do it and publish the result in a journal. I doubt, though, whether after subtracting a linear trend the residual would fulfill the condition of being uncorrelated white noise, an important condition in this analysis. -stefan]

SteveF wrote: “One would have thought he would do this before launching a blog based attack.”

Perhaps one who is unfamiliar with Roger Pielke Jr’s longstanding pattern of such behavior would have thought that.

A counterintuitive feature of all this: if there’s no trend, that makes the record still more of a statistical outlier, yet it must be due to natural variability since there’s nothing else left to which to attribute it. But if there is a trend, that opens up a possible alternate attribution–even as the record becomes ‘less extreme,’ so to speak.

Almost a paradox, and something that will probably generate some confusion. (Indeed, perhaps I have it wrong, and it has therefore already done so!)

The problem Stefan explains with the spuriously-normalized year-round urban heat island adjustment in the previous study is a crisp, iconic example of how statistical techniques can go astray if you lose track of the meanings behind the numbers.

Stefan’s “key result” is also very well-phrased: “The number of record-breaking events increases depending on the ratio of trend to variability.” But of course!

Thanks for your work here, and for another good read.

Well, since Roger has no reputation worth guarding, what, indeed, does he have to lose?

Stefan-

Could you answer the following two questions?

The addition of data from 1880 to 1910 to the analysis changed the trend (and expected probability of extremes in the current decade, using your unique definition of “trend”) as compared to the analysis starting in 1911 by:

a) zero

b) a non-zero amount

A second question for you:

Please point to another attribution study (any ever published) that uses the same definition and operationalization of “trend” that is introduced in this study.

Thanks!

[Response: I’ll let Stefan answer the second question if he can make sense of it (I cannot, since there is no new definition of trend introduced), but I’ll answer the first question. The answer is (b).–eric]

This is a bit off subject. I love what this site does and it’s helped to make me much more informed about AGW. I come every day and read the articles and postings.

I’ve been trying to find the YouTube video of the Heartland Institute’s forum on AGW, which I believe occurred in 2008. The main speaker asked the attendees to please quit using the “It hasn’t warmed since 1998” meme because, as I remember, he told them “When we make claims that are so easily debunked we lose credibility.”

I first saw this clip in one of your posts, as I remember.

Thanks, dj

Thanks Eric,

What then is (a) the value for the trend starting from 1880 vs. 1910 and (b) the expected probability of extremes in the current decade started from 1880 (it was 0.47 starting from 1911)?

Thanks!

[Response: You’re missing the argument about the importance of nonlinear trends, particularly with respect to the large increase in warming since 1980, in driving the pattern of expected and observed extremes, as discussed on pages 3-4 of the article. You seem to be thinking only in terms of a linear trend. With a nonlinear trend, what happened from 1880 to 1910 is relatively less important than it would be in an analysis based on a linear trend –Jim]

Thingsbreak has a relevant update that is apropos.

I don’t know about how other RC readers and lurkers would feel, but once again, Pielke’s games are boring and shrill IMO.

@ Dale

Try this one (but it’s from 2009): http://www.viddler.com/explore/heartland/videos/58/

@10 Dale, I think you refer to the presentation given by Scott Denning in 2010: http://www.youtube.com/watch?v=kkL6TDIaCVw

He gave another excellent presentation this year at the same conference: http://www.youtube.com/watch?v=P-oXWUdoXX0

Interesting about record setting events not being as frequent when the variability is greater compared to a long-term trend. Intuitively I guess that if the long-term trend is basically flat, there’s less likelihood of either record heat waves or cold snaps over time because there’s less nudging either way. If the trend is warming I’d expect fewer record-setting cold spells, but that would be more than offset by the rising frequency of record heat waves. With a long-term cooling trend I’d expect the opposite. Strong short-term variability would mean that even in a warming trend there would be more cool years than for the same trend with less variability, and vice-versa for cooling trends.

Is that about right?

@4 things break:

I could easily find a better example in his cherry-picking 1998 as the starting point to construct a temperature trend that was flat, when no other years around that time produced flat trends. He exited the discussion at SkS with the excuse that he’d blog about it later, but so far hasn’t. And as with Stefan and Dim’s paper, he misrepresented (or severely and continually misunderstood) what was actually presented to him.

@14WheelsOC

That (SkS) was Senior, not Junior.

The conclusion I reached in 1975 was “punctuated equilibrium”. This is the first study that I have seen that reaches in that direction.

While it is appropriate to consider the question from the “bottom up”, the specie will be gone before the data is all in. Calculating the category of the tornado is silly when the periphery is spawning chicks that are bending steel.

You are still applying mathematics to data which is appropriate because that is what you know how to do. We can generate no definitive answers by considering all the denizens of Lovelock’s lovely forest. But it’s a biological process, nevertheless.

@14 Regarding SkS and the short-term trend: this concerns the father of the Roger Pielke that things-break is talking about. Trees, apples and distance travelled seems to apply here.

This is the point when Pielke Jnr. should step down gracefully, be honorable and apologize for jumping the gun.

Instead what I’m reading here and on his blog is Roger Jnr. being contrarian, argumentative and making yet more unfounded and snide allegations. Ironic given that Roger authored a book titled “The Honest Broker”. His behaviour (now and prior to this) has been the very antithesis of that expected from an “honest broker”. Andy Revkin has Pielke Jnr. in his Rolodex, why?! Andy, are you reading this and taking note?

Roger might also want to read Barriopedro et al. (2011) who looked at much longer study period than the data GISS permit and who concluded:

Quite scary really.

There is another option open to Roger, instead of nit picking and engaging in innuendo and making false accusations, he can demonstrate convincingly and quantitatively to all here that he has a valid point that has a marked impact on the paper’s findings. In other words, he can try and stick to the science.

https://www.google.com/search?q=misunderstood+Pielke+Jr.

That’s settled.

Could we not talk about that any more?

Could we talk about the science paper as published?

[Response: Your link was broken so I fixed it. Here is a nice example of an economist ‘misunderstanding’ RPJr.]

Whoops, my mistake. I’ll just shut up now.

Thanks Jim,

I understand what you are saying, but I am asking for some numbers to back up Eric’s claim in #9 above.

Can you provide the numbers that show how (a) the addition of 1880-1909 alters the trend calculation (from that starting in 1911) and (b) based on adding 1880-1909, how that changes the expected number of heat records (from the 0.47 based on 1911).

My assertion is that the addition of 1880-1909 changes neither of these values, and Eric said otherwise. So I’d like to see the numbers.

I agree with you 100% that “With a nonlinear trend, what happened from 1880 to 1910 is relatively less important than it would be in an analysis based on a linear trend”.

What I would like to see is the quantification of “relatively less important” in this case. Can you provide the numbers behind (a) and (b)?

Thanks!

[Response: You can back calculate (b) from the numbers given: (x – .079)/x ~= 0.8, so x ~= 0.4–Jim]

I’ve been trying for some time to draw attention, including that of the excellent RealClimate crew, to this 1992-vintage JASON report:

http://www.fas.org/irp/agency/dod/jason/statistics.pdf

In 1992 they set a probability on there being no climate change at less than p=1e-5 by pretty much the same method this new article does.

I am not Stefan, but if I understood the paper correctly, I might be able to answer Roger’s questions.

(a): the trend is a curve, not a value. The curve from 1880 is essentially a backward extension of the curve from 1910. Around 1910 you might see small differences (due to the smoothing technique used, and edge effects) but not later.

(b) It too will be 0.47. Note that the last decade only has to “beat” the last previous record, and for this positive-trending time series that record will not be from between 1880 and 1910 — rather, from around 1930 or thereabouts.

I will not claim that low-pass filtering goes all the way back to Joseph Fourier… but I wouldn’t be surprised if it did ;-)

-24-Martin Vermeer

Indeed, this is my view as well from reading the paper. Another way to put the same point — the addition of 1880-1909 does not change anything. In fact, no matter what the temperature did prior to 1880, if I extended the record back to say 1750, I could be 99% certain that the recent record was due to trend (using the 1/n rule).

My question about the use of “trend” was specific to the climate attribution literature, and not the idea of smoothing itself;-)

Thanks.

Roger first claimed that Stefan left out the 1880-1910 data.

In actuality, data from 1880-2009 were used in the paper. Roger was grossly mischaracterizing Stefan and Dim.

Roger then claimed that Stefan “confirmed” Roger’s critique, explicitly claiming that Stefan confirmed that he “used 1910-2009 trends as the basis for calculating 1880-2009 exceedence probabilities.”

In actuality, Stefan merely agreed that he did not use a linear trend from 1880-2009. Roger was grossly mischaracterizing Stefan.

Roger is now claiming that his original position was that “the methodology used by Rahmstorf renders the data prior to 1910 irrelevant to the mathematics used to generate the top line result”, a position that appears nowhere in his blog post.

In actuality, Roger originally claimed that the 1880-1910 data were ignored entirely. Roger was grossly mischaracterizing Roger.

I’m seeing a pattern.

Very odd how Roger Jnr. is now trying to continue the discussion here while ignoring the fact that he has accused the authors of cherry picking and deceiving readers; I find that pretty duplicitous. Even more bizarrely, Roger is now asking other people to provide him with numbers while failing to back up his assertions with numbers.

Has Roger Jnr. any intention of apologizing to the authors and any intention of making his own calculations?

Stefan and the RC moderators continue to show incredible patience and tolerance.

for Dale: answered in the most recent of the Open threads

This is too funny. Roger is now saying that he never claimed Stefan and Dim did not include the 1880-1910 data, but rather that “the methodology used by Rahmstorf renders the data prior to 1910 irrelevant to the mathematics used to generate the top line result”.

Yet, Roger himself plainly said those data were excluded from analysis:

“any examination of statistics will depend upon the data that is included and not included. Why did Rahmsdorf and Coumou start with 1911?”

“There may indeed be very good scientific reasons why starting the analysis in 1911”

“my assertion [is] that they analyzed only from 1911.”

The last one is particularly amusing, in its full context:

“You can assert that they were justified in what they did, and explain why that is so, but you cannot claim that my assertion that they analyzed only from 1911 is wrong.”

No, Roger, I can and do say that your “assertion that they analyzed only from 1911 is wrong.”

[OT – please note your post was moved to the open thread]

Thanks for this article. It is applicable to

http://community.nytimes.com/comments/dotearth.blogs.nytimes.com/2011/10/20/skeptic-talking-point-melts-away-as-an-inconvenient-physicist-confirms-warming

where McTiresome misreads Dai.

Technically, if we’re going with non-linear (curvy) trendlines, then you only need about 30 years of recent uptick to claim correlatable significance (and >50% derived percentages) for nearly any meteorological deviations extending back all the way to 1850 if you like, no? Can we declare a non-linear trend and then start the data at 1950 and still be fine? The veritable attribution floodgates are open, yes?

A more appropriate title for Roger’s post is “The games ‘skeptics’ play”. Below is the latest inane commentary that Roger Jnr. is now peddling:

More empty rhetoric. Just because Dole et al. (the paper that gives Roger the answer he craves) used a linear trend it does not make it correct or superior. Which yields a better fit to the data (i.e., R^2) a linear trend or a non-linear trend?

Also, Stefan said “If someone thinks that using a linear trend would have been preferable, that is fine with me – they should do it and publish the result in a journal.”

Roger then presents Dole et al. again to try and say “ah ha!”. But nowhere in their paper do Dole et al. make the case that a linear trend is preferable to a non-linear trend. So that is not a valid answer to Stefan’s challenge. It seems that Roger should read the Dole et al. paper again too.

I’m assuming that Stefan used a linear trend in Fig. 2 above to be consistent with Dole et al. Roger also needs to read the main post above, it nicely explains why Dole et al did not find a warming trend in the data. So the entire point about trends is moot.

The fact remains that Roger Pielke Jnr’s initial libelous assertion is still wrong, but that has not stopped him from repeating it.

The large picture of cherries on Roger’s page (his dad prefers to use images of Pinocchio to smear climate scientists) is clearly intended to smear Stefan and Dim, and climate scientists in general. His doing that, and his disgraceful behaviour here and from the safety of his blog, go to underscore the vacuity of Roger’s supposed argument. It is Roger who is ‘flipping out’.

Fortunately for Roger, it is never too late to admit error and say sorry. Well, at least doing so is not an issue for men of honor and integrity, or “honest brokers” for that matter.

Stefan — Clear. Thank you.

A remark on the question of anthropogenic influence on the Moscow heatwave:

All discussions and the papers I have seen until now (including Dole et al. and the paper discussed here) have concentrated on the influence of the increase in mean temperature.

However, as is widely acknowledged and also stressed by Dole et al., a main reason for the heatwave was a long-lasting “blocking” situation (and they attribute that “automatically” to natural variability). Blocking has to do with the persistence of weather situations in general. One possible impact of anthropogenic climate change might be a change in the (spatial and temporal) persistence of weather situations (e.g. stationary Rossby waves). There is not much literature on this topic, although persistence of weather situations might have a high impact on extreme events (droughts as well as floods).

Any thoughts?

Ricky Rood looks at other ways in which the 2010 Russian heat wave was extreme: http://www.wunderground.com/blog/RickyRood/comment.html?entrynum=208.

I have just recently heard of Extreme Value Theory (EVT) and have been reading the blog post “Extreme Heat” at Tamino’s blog and also viewing Dan Cooley’s SAMSI talk on “Statistical Analysis of Rare Events”.

My question is: can you give me some idea of the tradeoffs involved in deciding when to take an EVT vs. a Monte Carlo approach to the topic of extreme events?

thanks.

RE: 35

Hey Urs,

As what I intended to share earlier today is lost, may I suggest in its place that before we consider that an event is abnormal that we examine some simple points. One, the runway numbers of Domodedovo International, as an indication of local normal seasonal winds. Two, the 2010 wind-rose for July in Moscow.

If the weather system was abnormal, the two values should not share a similarity. If the system was normal; but, more intense the runway directions and wind-rose should match up well. Suggesting it may not be a change in the pattern; but, the duration/intensity. This should point towards whether or not it is natural variation, “on steroids”.

The next effort should be to back out to get a wider view to see if there might be a corresponding; but, inverted weather variation within say, 3000km to the West. Point being, most extremes in the temperate zone should have a “inverted partner”.

If you have a condition changing say, the convective processes in one region there should be an equivalent; but, opposite event, (cool rainfall), in the vicinity. The distance between them being governed by the height of the Rossby convective plume, where heat and water vapor content suggest the residence time. (Add more heat and the duration and content of high altitude water vapor increases as well as the height that it is lifted to/falls from. (think PSCs))

The main point I am suggesting is that, though analysis and the law of large numbers can tell us a lot about processes that are measured with what seem to be random numbers, they may not actually apply here.

With an X-barred we can find the change about an expected mean. With a rule such as three points on the same side of the mean suggests the values around the mean may be skewed. I do not think we can equate that variability decreases in the face of extremes. I think that idea misses that weather may not follow normal analytic rules…. (As it is an irrational and partial open system process, the only limits are governed by airmass content and direction (phase).)

Cheers!

Dave Cooke
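The run rule mentioned in the comment above (several consecutive points on the same side of the mean) can be sketched in a few lines. This is only an illustration: the function name `runs_on_one_side` and its parameters are hypothetical, and the default run length of three follows the comment rather than any standard. Commonly used control-chart rule sets typically require a longer run before flagging a shift.

```python
from statistics import mean


def runs_on_one_side(values, center=None, min_length=3):
    """Find runs of consecutive values on the same side of a center line.

    Returns a list of (start_index, end_index, side) tuples, where side is
    +1 for a run above the center and -1 for a run below it. Values exactly
    equal to the center break a run. If center is None, the sample mean of
    values is used (this requires at least one value).
    """
    if center is None:
        center = mean(values)

    runs = []
    start = None   # index where the current run began
    side = 0       # +1 above, -1 below, 0 on the center line / no run yet

    for i, v in enumerate(values):
        s = (v > center) - (v < center)  # sign of v relative to center
        if s != side:
            # The previous run ends at i - 1; keep it if long enough.
            if side != 0 and i - start >= min_length:
                runs.append((start, i - 1, side))
            start, side = i, s

    # Close the run that is still open at the end of the series.
    if side != 0 and len(values) - start >= min_length:
        runs.append((start, len(values) - 1, side))
    return runs
```

Applied to a series of monthly temperature anomalies, a long run on one side of the long-term mean would hint at a shifted mean rather than symmetric scatter. It says nothing by itself about whether the variability has changed.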

@35 A recent publication that addresses stationary Rossby waves: “Warm Season Subseasonal Variability and Climate Extremes in the Northern Hemisphere: The Role of Stationary Rossby Waves” by S. Schubert et al. in Journal of Climate, Vol. 24, no. 18, 4773-92 (15 Sept. 2011). I quote from their abstract: http://journals.ametsoc.org/doi/abs/10.1175/JCLI-D-10-05035.1

“The first REOF in particular consists of a Rossby wave that extends across northern Eurasia where it is a dominant contributor to monthly surface temperature and precipitation variability and played an important role in the 2003 European and 2010 Russian heat waves.” (REOF is a rotated empirical orthogonal function)

> most extremes in the temperate zone should have a “inverted partner”.

Thus there can’t be an overall trend, just variations?

Has someone published work establishing such an “inverted partner” exists, do you recall? Or is this an idea tossed out hoping someone can find facts for it? (Not me, I’m done searching for such, but I remain fascinated by the process.)

Urs, I did find quite a few papers, poking around, some mentioning changes in blocking with warming climate. Have you any in particular? I know nothing about the subject, obviously. Here’s a press release: http://www.sciencedaily.com/releases/2010/02/100218125535.htm

http://www.eurekalert.org/pub_releases/2010-02/uom-wpt021810.php

from the Eurekalert link:

“Atmospheric blocking occurs between 20-40 times each year and usually lasts between 8-11 days, Lupo said. Although they are one of the rarest weather events, blocking can trigger dangerous conditions, such as a 2003 European heat wave that caused 40,000 deaths. Blocking usually results when a powerful, high-pressure area gets stuck in one place and, because they cover a large area, fronts behind them are blocked. Lupo believes that heat sources, such as radiation, condensation, and surface heating and cooling, have a significant role in a blocking’s onset and duration. Therefore, planetary warming could increase the frequency and impact of atmospheric blocking.

“It is anticipated that in a warmer world, blocking events will be more numerous, weaker and longer-lived,” Lupo said. “This could result in an environment with more storms. We also anticipate the variability of weather patterns will change dramatically over some parts of the world, such as North America, Europe and Asia, but not in others.”

Lupo, in collaboration with Russian researchers from the Russian Academy of Sciences, will simulate atmospheric blocking using computer models that mirror known blocking events, then introduce differing carbon dioxide environments into the models to study how the dynamics of blocking events are changed by increased atmospheric temperatures. The project is funded by the US Civilian Research and Development Foundation – one of only 16 grants awarded by the group this year. He is partnering with Russian meteorologists whose research is being supported by the Russian Federation for Basic Research.

Lupo’s research has been published in several journals, including the Journal of Climate and Climate Dynamics. He anticipates that final results of the current study will be available in 2011.”

Roger, minor nit… reaching 99% would mean, by the 1/n rule, going back 2 kyrs, not 260 years ;-)

More importantly, “no matter what the temperature did prior to 1880”, not true. Temperatures would have to remain well below 1930s level for the whole period 1750-1880, for this to work out, not setting any record that would not subsequently be broken in the 1930s. Only then would your statement hold.

But then, wouldn’t such a prolonged low-temperature period provide added background to the recent record-breaking decade? Don’t you agree that ‘unprecedented in 260 years’ is a legitimately stronger statement than ‘unprecedented in 130 years’?

Sure, and I only wanted to point out that people have been doing these things since well before climatology became a science with a name of its own. But no, the paper isn’t doing ‘attribution’. Attribution is physics, not something a purely statistical analysis can do.

[Response: Martin, that is actually one of those cases where a layperson’s intuition agrees with statistics. Any layperson will understand that a 1000-year heat record is more exceptional and less likely to happen just by chance – and thus more likely due to climate change – than a 100-year record or a 10-year record. That is indeed the case, and is why considering that the Moscow heat wave was a 130-year record gives a greater probability of it being due to climate change than looking at it just as a 100-year record. Very simple.

Spot-on observation about attribution, by the way. -stefan]
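The 1/n rule discussed in this exchange can be checked with a toy simulation. The sketch below is not the method of the paper. It assumes independent Gaussian noise with unit variance and an optional linear trend (in standard deviations per step), and all function names are hypothetical. Without a trend, the chance that the last of n values is the highest is 1/n, so a record becomes less likely by chance the longer the series is.

```python
import random


def record_probability(n):
    """Chance that the last of n i.i.d. values is the highest of all n."""
    return 1.0 / n


def expected_records(n):
    """Expected number of record highs in n i.i.d. values: 1 + 1/2 + ... + 1/n."""
    return sum(1.0 / k for k in range(1, n + 1))


def simulate_record_probability(n, trials=20000, trend=0.0, seed=0):
    """Monte Carlo estimate of the chance that the last value is a record.

    Each series is Gaussian noise (mean 0, standard deviation 1) plus
    trend * t, an assumed linear trend. Requires n >= 2.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        series = [rng.gauss(0.0, 1.0) + trend * t for t in range(n)]
        if series[-1] > max(series[:-1]):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (10, 100, 130):
        print(n, record_probability(n), simulate_record_probability(n, trials=2000))
```

Passing a positive `trend` raises the simulated record probability above 1/n, which is the qualitative effect at issue in the thread. The numbers it produces depend entirely on the assumed noise model and should not be read as an estimate for Moscow.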

RPJr writes in an update (2):

“I’ll be using the RC11 paper in my graduate seminar next term as an example of cherry picking in science — a clearer, more easily understandable case you will not find.”

Let’s hope the students also learn about critical thinking skills. Perhaps a pointer to SkS could provide them with a pool of cherrypicking examples that Roger could have used to his heart’s content.

RE:40

Hey Hank,

The original NOAA paper, that Drs. Trenberth and Rahmstorf countered concerning the lack of a warming trend, describes the “Siberian Partner”. You cannot have an “event” without a counter in a closed system. The changes we see are where the partial open system is closing down.

That there are extremes in both directions was projected tens of years ago. That these “events” should have a corresponding counter event is what concerns me wrt polar sea ice melt.

If there is a “pipeline” that exhausts equatorial heat, then it should point to a cooling effect at a lower latitude. It is kind of like the development of a Great Plains tornado outbreak. Overcome the 4km/700-500mb temperature inversion and like a cork in a soda bottle, the release of the backpressure should release most of the contents. We are not seeing that “event” in the weather patterns…

It is like if you reduce the heat by allowing it to escape, you are reducing the pressure, causing the “water” to “boil” anyway… You can’t win for losing until you either remove the pot from the stove or put a pressure lid on it…

Cheers!

Dave Cooke

#44–“Perhaps a pointer to SkS could provide them with a pool of cherrypicking examples that Roger could have used to his heart’s content.”

What a delightfully wicked suggestion!

Bart @44, you must then also be referring to Roger Pielke Jnr’s dad’s cherry picking exposed at Skeptical Science ;) Examples of cherry-picking by Pielke Snr. have also been discussed here at RealClimate. So Roger Jnr. need not look very far at all for real, genuine examples of shameless cherry picking, not trumped up ones.

And yes, any student with critical thinking skills will see right through Roger Jnr’s plan, especially when they read the paper in question ;)

Sorry, David, once again, I have no idea what you’re talking about.

This is why cites to sources help in discussing science, and you don’t.

MapleLeaf @ 47: “And yes, any student with critical thinking skills will see right through Roger Jnr’s plan, especially when they read the paper in question ;)”

Um, yes, about that paper, where is it besides somewhere over the paywall?

Very few people will read it, so RPJr scores for the absurd but ever so convenient for Big Carbon “evil climatologist” meme.

[Response: See link in our PS. ]

As entertaining as it is, we should probably ignore Pielke Jnr. digging himself ever deeper into denial and a deep hole ;) Urs Neu @35 asked about blocking. I found this paper that may be useful, but their focus is on winter temperatures.

Stefan,

I was very interested to read that the annual mean UHI adjustment was applied for all months in the GISTEMP data. What about HadCRUT and NCDC? Do they do the same? What would Dole et al. (and you) have found for July and August trends had you looked at NCDC or HadCRUT?

Much focus has been placed on July temperatures, but the Russian heat wave extended into mid August. Any thoughts on that?