Is the number 2.14159 (here rounded off to 5 decimal places) a fundamentally meaningful one? Add one, and you get

π ≈ 3.14159 = 2.14159 + 1.

Of course, π is a fundamentally meaningful number, but you can split it up in infinitely many ways, as in the example above, and most of the resulting terms have no fundamental meaning. They are just numbers.

But what does this have to do with climate? My interpretation of Daniel Bedford’s paper in the Journal of Geography is that such demonstrations may provide a useful teaching tool for climate science. He uses the term ‘agnotology’, which is “the study of how and why we do not know things”.

Furthermore, many descriptions of our climate are presented as series of numbers (referred to as ‘time series’), and when these are shown graphically, they are known as curves. Curves can be split into pieces in a way analogous to how π can be split into arbitrary numbers.

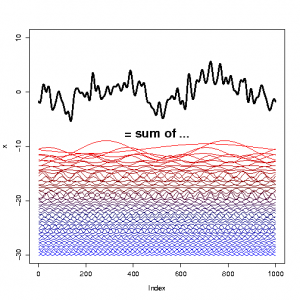

All curves (finite time series) can be represented as a sum of sine and cosine curves (sinusoids), describing cycles with different frequencies. This is demonstrated in the figure below (source code for the figure):

The random time series, represented here as the bold curve at the top, may be physically meaningful, but the cosine and sine components it is made up of may not all have a physical interpretation (especially if the time series comes from a chaotic or complex system).

However, cosine and sine curves represent only one special case, and other curves can equally well make up a time series. A technique called ‘singular spectrum analysis’ (SSA), for instance, is designed to find curves with shapes other than sinusoids.
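To make the SSA idea concrete, here is a minimal sketch of basic SSA, assuming NumPy. The function name `ssa_components`, the window length of 50 and the synthetic test series are illustrative choices, not the method of any particular SSA package. Like the sine/cosine decomposition, the SSA components always add back up to the original series, whether or not any of them means anything physically.

```python
import numpy as np


def ssa_components(x, window):
    """Basic singular spectrum analysis (SSA) of a 1-D series.

    Embeds x in a trajectory (Hankel) matrix, takes its SVD, and turns each
    rank-one term back into a series by averaging along anti-diagonals.
    Returns (components, singular_values); components has one row per term.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    k = n - window + 1
    # Column j of the trajectory matrix is the lagged window x[j : j + window]
    traj = np.column_stack([x[j:j + window] for j in range(k)])
    u, s, vt = np.linalg.svd(traj, full_matrices=False)

    comps = np.zeros((len(s), n))
    counts = np.zeros(n)
    for r in range(window):
        counts[r:r + k] += 1  # how many matrix entries map to each time step
    for i in range(len(s)):
        elem = s[i] * np.outer(u[:, i], vt[i])  # rank-one piece, shape (window, k)
        for r in range(window):
            # entry (r, c) of the matrix corresponds to time step r + c
            comps[i, r:r + k] += elem[r]
        comps[i] /= counts
    return comps, s


# Illustrative synthetic series (assumed, not climate data): trend + 25-step cycle + noise
rng = np.random.default_rng(0)
t = np.arange(200)
x = 0.01 * t + np.sin(2 * np.pi * t / 25) + 0.3 * rng.standard_normal(t.size)

comps, s = ssa_components(x, window=50)
print(comps.shape)                        # one component per singular value
print(np.allclose(comps.sum(axis=0), x))  # the pieces always add back up to x
```

In practice one groups a few leading components (for example a pair that together describes an oscillation); the grouping is a modelling choice, not something the algorithm decides for you.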

The process of representing a series of numbers as a sum of sinusoids (cycles) with different frequencies (or wavelengths) is known as a Fourier transform (FT). It is also possible to go the other way, from the information about the frequencies back to the original curve; this is known as the inverse Fourier transform.
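The forward and inverse transforms can be tried directly with NumPy's `numpy.fft.rfft` and `numpy.fft.irfft`. This is a minimal sketch on an assumed synthetic random-walk series of 160 values; the explicit loop writes the inverse transform out as a sum of phase-shifted cosines, so the "sum of cycles" picture is visible.

```python
import numpy as np

# Synthetic, assumed example: a random-walk "curve" of 160 annual values
rng = np.random.default_rng(1)
x = np.cumsum(rng.standard_normal(160))
n = len(x)

coeffs = np.fft.rfft(x)             # forward FT: one complex number per frequency k/n
x_back = np.fft.irfft(coeffs, n=n)  # inverse FT: back to the original curve

# The same reconstruction written out explicitly as a sum of cosines with phases
t = np.arange(n)
x_sum = np.zeros(n)
for k, c in enumerate(coeffs):
    # the mean (k = 0) and Nyquist (k = n/2, n is even here) terms have no mirror image
    weight = 1.0 if k in (0, n // 2) else 2.0
    # |c| sets the amplitude, angle(c) the phase, of a cycle with period n/k samples
    x_sum += weight / n * np.abs(c) * np.cos(2 * np.pi * k * t / n + np.angle(c))

print(np.allclose(x, x_back), np.allclose(x, x_sum))
```

Note that the transform silently treats the 160 values as one period of an endlessly repeating signal.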

Fourier transforms are closely related to spectral analysis, but the two concepts are not exactly the same. The reason is that all measurements contain a finite number of observations and provide just a taste – a sample – of the process. The FT assumes that the analysed curve repeats itself exactly forever, which is clearly not the case for real noisy or chaotic data.

One of the gravest mistakes in the attribution of cycles is trying to fit sinusoids with long time scales to short time series. We will see some examples of this below.

Meanwhile, it may be useful to note that spectral analysis tries to account for mathematical artifacts such as ‘spectral leakage’, for the probability that some frequencies are spurious, and for the significance of the results. There are a number of different spectral analysis techniques, and some are more suitable than others for certain types of data. Sometimes, one can also use regression to find the best-fit combination of sinusoids for a time series.
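Spectral leakage is easy to see numerically. In this minimal sketch (NumPy assumed; the 100-sample record, the "within 2 bins of the peak" criterion and the Hann taper are illustrative choices), a sinusoid that completes a whole number of cycles in the record shows up in a single frequency bin, whereas one that does not spreads power into other bins, because the FT implicitly treats the record as repeating and the repetition then contains a jump. Tapering the ends of the record with a window is one standard way to reduce the leakage.

```python
import numpy as np


def leaked_fraction(x):
    """Share of spectral power (mean excluded) lying more than 2 frequency bins from the peak."""
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    k = int(np.argmax(power))
    near_peak = power[max(k - 2, 0):k + 3].sum()
    return 1.0 - near_peak / power.sum()


n = 100
t = np.arange(n)
whole_cycles = np.sin(2 * np.pi * 10.0 * t / n)  # exactly 10 cycles: the record "wraps" smoothly
half_cycle = np.sin(2 * np.pi * 10.5 * t / n)    # 10.5 cycles: the implied repetition has a jump
taper = np.hanning(n)                            # Hann window, one common taper

print(leaked_fraction(whole_cycles))        # essentially zero
print(leaked_fraction(half_cycle))          # noticeably larger: power has leaked to other bins
print(leaked_fraction(half_cycle * taper))  # much smaller again after tapering
```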

A recent paper by Loehle & Scafetta (L&S2011) in a journal known as the ‘Bentham Open Atmospheric Science Journal’ (also discussed at Skeptical Science) presents an analysis using regression to describe cycles in the global mean temperature, showing us many strange tricks one can do with curves and sinusoids, in something they call “empirical decomposition” (whatever that means).

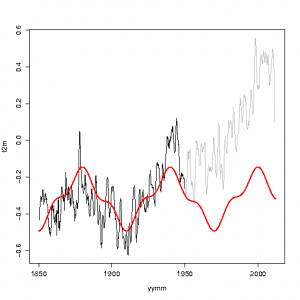

They fit 20-year and 60-year sinusoids to the early part (1850-1950) of the global mean temperature record from the Hadley Centre/Climatic Research Unit. I have reproduced their analysis below, although I do not recommend using it for any purpose other than having fun (source code):
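A fit of this kind can be sketched as ordinary least squares with a constant, a linear trend and one cosine/sine pair per period, assuming NumPy's `numpy.linalg.lstsq`. The helper `fit_sinusoids` is hypothetical and this is not L&S2011's code. Because the HadCRUT data are not reproduced here, the example uses synthetic red noise (an AR(1) process with an assumed coefficient of 0.7) that contains no cycles at all.

```python
import numpy as np


def fit_sinusoids(t, y, periods):
    """Ordinary least-squares fit of y to a constant, a linear trend and sinusoids.

    Each period p contributes a cos and a sin column; the fitted amplitude of
    that cycle is sqrt(a_p^2 + b_p^2). Returns (amplitudes, fitted curve).
    """
    t = np.asarray(t, dtype=float)
    cols = [np.ones_like(t), t - t.mean()]  # centred time keeps the problem well conditioned
    for p in periods:
        cols += [np.cos(2 * np.pi * t / p), np.sin(2 * np.pi * t / p)]
    design = np.column_stack(cols)
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    amps = np.hypot(coef[2::2], coef[3::2])  # (cos, sin) pairs follow the two trend columns
    return amps, design @ coef


# Assumed synthetic stand-in for 1850-1950: AR(1) red noise that contains no cycles at all
rng = np.random.default_rng(42)
years = np.arange(1850, 1951)
y = np.zeros(years.size)
for i in range(1, years.size):
    y[i] = 0.7 * y[i - 1] + 0.1 * rng.standard_normal()

amps, fitted = fit_sinusoids(years, y, periods=[20, 60])
print(amps)  # two non-zero "cycle" amplitudes, although none were put in
```

Least squares always returns some amplitude for whatever periods you ask for; the fit by itself says nothing about whether a physical 20- or 60-year cycle exists.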

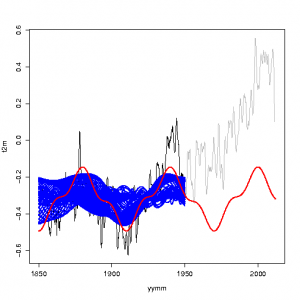

Geophysical time series such as the global mean temperature are, however, typically not characterised by one or two frequencies. If we fit sinusoids with other frequencies instead (here a single sinusoid at a time rather than two), we get the following picture (source code):

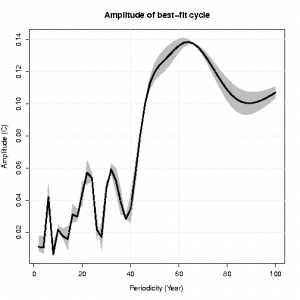

We can also compare the amplitudes of these different fits, and we see that the periods of 20 and 60 years are not the most dominant ones (source code):
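The comparison amounts to repeating a one-sinusoid regression for a range of trial periods and recording the fitted amplitude each time. A minimal sketch, assuming NumPy; the helper name, the 5-year period grid and the synthetic red-noise record (AR(1) coefficient 0.7) are assumptions standing in for the real temperature data.

```python
import numpy as np


def best_fit_amplitude(t, y, period):
    """Amplitude of the least-squares sinusoid of one period, fitted jointly with a mean and trend."""
    t = np.asarray(t, dtype=float)
    design = np.column_stack([
        np.ones_like(t),
        t - t.mean(),
        np.cos(2 * np.pi * t / period),
        np.sin(2 * np.pi * t / period),
    ])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return np.hypot(coef[2], coef[3])


# Assumed synthetic red noise standing in for a 101-year temperature record
rng = np.random.default_rng(7)
years = np.arange(1850, 1951)
y = np.zeros(years.size)
for i in range(1, years.size):
    y[i] = 0.7 * y[i - 1] + 0.1 * rng.standard_normal()

periods = np.arange(5, 101, 5)  # 5, 10, ..., 100 years, one sinusoid at a time
amps = np.array([best_fit_amplitude(years, y, p) for p in periods])
for p, a in zip(periods, amps):
    print(f"{int(p):3d}-yr fit: amplitude {a:.3f}")
print("largest amplitude at", int(periods[np.argmax(amps)]), "years")
```

At the longest trial periods the sinusoid becomes hard to distinguish from the linear trend over a 101-year window, so those amplitudes are especially poorly constrained.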

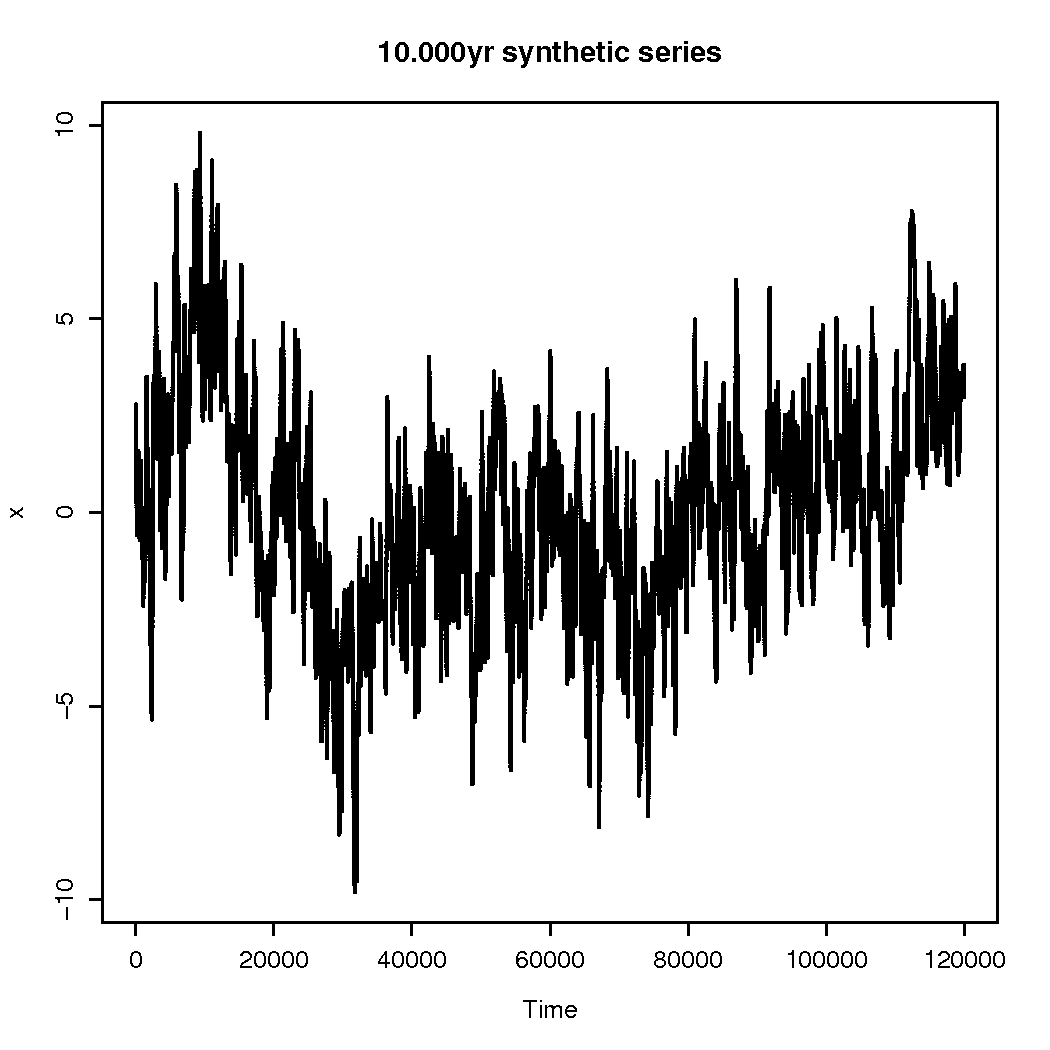

Fitting sinusoids with time scales that are long compared to the length of the time series is dangerous. This can be illustrated by constructing a synthetic time series that is much longer than the one we just looked at. This time series is shown below (source code):

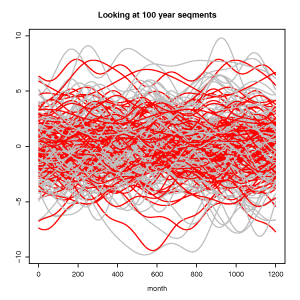

We can divide the above time series into sequences of the same length as the one L&S2011 used to fit their model, and then do a similar fit to each of these sequences (source code):

The red curves, representing the best fits, are all over the place, and they differ from one sequence to the next, although they are all part of the same original time series. The point here is that we could get similar results for other frequencies, and that the amplitudes of the fits to the shorter sequences would typically be 4 times greater than those from a similar fit to the original 10,000-year series (source code). This is because random, noisy and chaotic data contain a broad band of frequencies, which brings us back to our initial point: any number or curve can be split into a multitude of different components, most of which will not have any physical meaning.
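The segment experiment can be sketched as follows, assuming NumPy. The series is assumed synthetic AR(1) red noise (coefficient 0.7, noise scale 0.1) with no cycles built in, cut into 101-year pieces to mimic 1850-1950; it is not the series in the figure, and the exact ratio between the short-segment and whole-series amplitudes depends on these assumed settings and the random seed, so it need not match the factor quoted above.

```python
import numpy as np


def amplitude_60yr(y):
    """Amplitude of a least-squares 60-year sinusoid (fitted with mean and trend) for annual data y."""
    t = np.arange(y.size, dtype=float)
    design = np.column_stack([
        np.ones_like(t),
        t - t.mean(),
        np.cos(2 * np.pi * t / 60),
        np.sin(2 * np.pi * t / 60),
    ])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return np.hypot(coef[2], coef[3])


# Assumed synthetic series: 10,000 "years" of AR(1) red noise with no built-in cycles
rng = np.random.default_rng(2011)
n_years, seg_len = 10_000, 101  # 101-year pieces, like 1850-1950
y = np.zeros(n_years)
for i in range(1, n_years):
    y[i] = 0.7 * y[i - 1] + 0.1 * rng.standard_normal()

whole = amplitude_60yr(y)
pieces = np.array([amplitude_60yr(y[s:s + seg_len])
                   for s in range(0, n_years - seg_len + 1, seg_len)])

print(f"60-yr amplitude, whole 10,000-yr series: {whole:.4f}")
print(f"60-yr amplitude, 101-yr pieces: median {np.median(pieces):.4f}, "
      f"range {pieces.min():.4f} to {pieces.max():.4f}")
```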

Loehle & Scafetta also assume that the net forcing of the earth’s climate is a linear combination of solar and anthropogenic forcing. The fact that our climate system involves a series of non-linear processes, as well as non-linear feedback mechanisms that may be influenced by both, suggests that this position may be a bit simplistic; it ignores internal, natural variability, for instance.

Some of the basis of L&S2011’s analysis can be traced back to a 2010 paper that Scafetta wrote on the influence of the great planets on Earth’s climate. I’m not kidding – despite the April 1st joke and the resemblance to astrology – Scafetta claims that there is a 60-year cycle in the climate variations that is caused by the alignment of the gas giants Jupiter and Saturn. This conclusion is reached only three years after he argued, in 2007, that up to 50% of the warming since 1900 could be explained by 11- and 22-year cycles (a claim which Gavin and I contested in our 2009 paper in JGR).

The Scafetta (2010; S2010) paper presents a spectral analysis of two curves which are fundamentally different in character. To quote Scafetta himself:

> Spectral decomposition of the Hadley climate data showed spectra similar to the astronomical record, with a spectral coherence test being highly significant with a 96% confidence level.

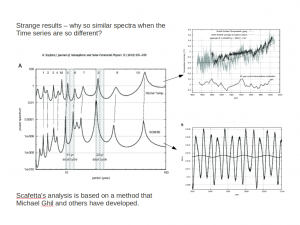

The problem is immediately visible in the figure below:

For any spectral analysis, it should in principle be possible to carry out the reverse operation to get the original curve back. S2010 presented a figure showing the alleged spectra of the terrestrial global mean temperature and of the rate of motion of the solar system’s centre of mass. It is conspicuous that the spectra of two such different-looking curves appear to produce similar peaks.

So how was the spectral analysis in S2010 carried out? Scafetta used the Maximum Entropy Method (MEM), keeping 1000 poles. The tool he used was developed by Michael Ghil and others, and the method is described in one of the chapters of a textbook by Anderson and Willebrand (1996).

> As the number of peaks increases with M [number of poles], regardless of spectral content of the time series, an upper bound for M is generally taken as N/2.

> If X [is] not stationary or close to auto-regressive, great care must be taken in applying MEM.

The choice of 1000 poles therefore cannot be justified, as it is more likely to lead to spurious results; moreover, one of the time series is not stationary (temperature), while the other is far from being auto-regressive (the astronomical record). It is worth pointing out that if the Akaike Information Criterion (AIC), which balances the number of parameters used against the gain in goodness of fit, is applied to this series, the number of poles is 32.
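MEM fits an autoregressive (AR) model and reads the spectrum off its poles, so choosing the number of poles is choosing an AR order. The sketch below shows how AIC makes that choice, assuming NumPy. It swaps in ordinary least squares for the Burg-type estimation MEM normally uses, uses one common form of AIC (n ln σ² + 2p), and tests it on an assumed AR(2) process with max_order=40; the helper name is hypothetical, and it does not reproduce the figure of 32 for the series in S2010.

```python
import numpy as np


def aic_ar_order(x, max_order):
    """Choose an autoregressive order by AIC = n*ln(sigma^2) + 2p.

    Every AR(p) model is fitted by ordinary least squares on the same
    n = len(x) - max_order target values, so the AIC scores are comparable.
    """
    x = np.asarray(x, dtype=float) - np.mean(x)
    target = x[max_order:]
    n = target.size
    scores = {}
    for p in range(1, max_order + 1):
        # column k-1 holds x lagged by k steps, aligned with target
        lags = np.column_stack([x[max_order - k:len(x) - k] for k in range(1, p + 1)])
        coef, *_ = np.linalg.lstsq(lags, target, rcond=None)
        sigma2 = np.mean((target - lags @ coef) ** 2)
        scores[p] = n * np.log(sigma2) + 2 * p
    best = min(scores, key=scores.get)
    return best, scores


# Assumed test signal: a known AR(2) process, x_t = 0.75 x_{t-1} - 0.5 x_{t-2} + noise
rng = np.random.default_rng(3)
x = np.zeros(2100)
for i in range(2, x.size):
    x[i] = 0.75 * x[i - 1] - 0.5 * x[i - 2] + rng.standard_normal()
x = x[100:]  # drop the start-up transient

order, scores = aic_ar_order(x, max_order=40)
print("AIC-selected order:", order)  # typically 2 or close to it, far below 40
```

Each extra pole has to "pay" for itself in reduced residual variance; without such a penalty, adding poles always improves the fit and, as the quoted passage warns, always adds peaks.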

L&S2011 also claim that a 60-year cycle must be the effect of Jupiter and Saturn. What are the implications of such an assertion? Does it mean that the broad frequency band seen in the global mean temperature record can be taken as evidence for many new, unknown celestial objects affecting the motion of the solar system’s centre of mass, and hence solar activity? No, I don’t think so.

Solar activity shows a clear 11-year cycle, no trend during the last 50 years, and no clear 60-year periodicity.

In my humble opinion, Loehle and Scafetta (2011) is a silly paper, making many claims with no support from science (about planets, the sun, GHGs, the urban heat island, aerosols, GCMs, significance levels, and weird coherence results). I would not be surprised if the rumours about the journal lacking rigour are true. On the other hand, Daniel Bedford may cherish the opportunity to learn from such mistakes.

References:

Anderson, D.L.T. and J. Willebrand (1996) ‘Decadal Variability’, Springer, NATO ASI Series, volume 44; chapter: ‘Spectral Methods: What They Can and Cannot Do for Climate Time Series’ by M. Ghil and P. Yiou.

Re 36 Patrick 027

See http://spaceplace.nasa.gov/barycenter/

You may be interested in this animation – http://www.youtube.com/watch?v=1iSR3Yw6FXo

The centre of mass is not subject to an inverse square law; it’s simply the mass-weighted average position of all the bodies. The sun orbits the barycentre of the solar system. The orbit is not constant, but the combined effect of the gas giants is such that its radius can approach 1% of the radius of the earth’s orbit.
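A quick check of the 1% figure, as a sketch: the barycentre is the mass-weighted mean position, so measured from the Sun's centre it sits at Σ m_i a_i / M_total. The rounded masses and mean orbital radii below are standard reference values; circular orbits and a perfect alignment of the four giant planets are simplifying assumptions, so the aligned case is an upper bound rather than a typical offset.

```python
# Rough check of the barycentre claim: how far can the gas giants pull the
# solar-system centre of mass away from the Sun's centre?
# Rounded reference masses (kg) and mean orbital radii (AU); circular orbits assumed.
M_SUN = 1.989e30
R_SUN_AU = 6.957e8 / 1.496e11  # solar radius expressed in AU
giants = {
    "Jupiter": (1.898e27, 5.203),
    "Saturn": (5.683e26, 9.537),
    "Uranus": (8.681e25, 19.19),
    "Neptune": (1.024e26, 30.07),
}

# Centre of mass = mass-weighted average position, measured from the Sun's centre
m_jup, a_jup = giants["Jupiter"]
jupiter_only = m_jup * a_jup / (M_SUN + m_jup)

total_mass = M_SUN + sum(m for m, _ in giants.values())
all_aligned = sum(m * a for m, a in giants.values()) / total_mass  # worst case: all on one side

print(f"Jupiter alone: {jupiter_only:.4f} AU ({jupiter_only / R_SUN_AU:.2f} solar radii)")
print(f"All four aligned: {all_aligned:.4f} AU (~{100 * all_aligned:.1f}% of Earth's orbital radius)")
```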

CM, 46: That is a good post.

It looks to me like “agnatology” is just one more pseudo-intellectual way to smear some of the skeptics. It’s as one-sided as Oreskes’ “Merchants of Doubt”, who somehow failed to note the “merchants of Belief” like Al Gore (I personally respect Al Gore’s work, but he is a merchant.)

Your post contributes to a case that I might be wrong.

There’s another way to look at the Loehle and Scafetta paper.

If (1) the temperature change since about 1850 is approximately the sum of a linear trend and some periodic components;

and if (2) the estimated function is a reasonable estimate of the effect of a persisting mechanism;

then their procedure is reasonable. Their paper does not establish or test the truth of either (1) or (2), and there is no good reason to believe that their result is accurate.

Such a use of mathematics is sometimes called “heuristic” (meaning leading in a useful direction), “hypothesis generation”, “the context of discovery” (vs. the context of testing), or “conjecture”.

A more elaborate example appears in section 3.3 of Raymond Pierrehumbert’s book Principles of Planetary Climate, which opens with some approximations (slightly reworded for brevity):

1. the only source of the temperature of the planet is absorption of light from the sun;

2. the planetary albedo is spatially uniform;

3. the planet is spherical and has a distinct solid or liquid surface which radiates as a perfect blackbody;

4. the planet’s temperature is uniform over its entire surface;

5. the planet’s atmosphere is perfectly transparent to the electromagnetic energy emitted by the surface.

Assumption 5 is relaxed later on.

How much inaccuracy is introduced by the simplifications 1 – 4? I don’t know. Later on (p 163) the derivation of climate sensitivity omits clouds completely — does that additional simplification introduce non-negligible inaccuracy? I don’t know the answer to that either, and I don’t think anyone does.

To me it seems that the two calculations of climate sensitivity (L & S, P) make testable predictions, but neither is trustworthy for any other purpose than designing more experiments to learn what the climate sensitivity, starting with earth as it is now, really is. They could both be accurate enough (for short term and long term, respectively), or they both could be way off.

I do not think that either should be judged an example of “agnatology”.

SM at 52

‘False Balance’, much…?!

> agnatology

You’re spelling it wrong.

This is not a new term. See for example

http://frantzmd.info/Miscellaneous%20Writings/Agnotology.htm

“a very early use of the new word agnotology, if not its coining, in an article by Linda Schiebinger titled Agnotology and Exotic Abortifacients: The Cultural Production of Ignorance in the Eighteenth Century Atlantic World…. The article emphasizes the work of Maria Sibylla Merian from 1699 to 1701 in Surinam, then Dutch Guiana. She was the only one of many naturalists and anthropologists active among the indigenous peoples in Africa and the Americas who mentioned extensive use of abortifacients for “menstrual regulation” (population control) …. The silence of the others had to be self-censorship imposed by no authority but by a rather universal cultural bias….

…

… Mark Twain called willful withholding of vital information a silent lie….

A Lesson in Skepticism

A couple of generations ago (1938) a prominent citizen of Monroe was confronted by his frantic wife, ‘I have heard on the radio that the Martians are invading. What should we do?’

Pearl Guess answered calmly, ‘Turn off the radio.’

… Twain is even more concise: The only difference between fiction and nonfiction is that fiction should be completely believable.”

Re 45 EFS_Junior – um, what? I skimmed a little bit of one of those papers and I ‘kinda’ thought that it was basically computer modelling based on physics. Of course if there is some prediction that can be made that can be used to test the output, great, but I would think simply trying to describe how some system likely evolved based on what is known, parameterizing (with care/justification) where grid-scale requires it, etc., can provide something useful. Sorry I haven’t had the time to thoroughly look through Laskar, so maybe I was way off. PS I didn’t think Laskar was proposing that Jupiter and Saturn cause significant 60-year climate cycles on Earth. Earth’s orbital cycles can/do have great influence on climate, even if the effects are a bit complicated.

Re 51 Tom P – Okay, I’ll look at those, but while I have the time now: yes, center of mass is shifted in proportion to mass and distance, whereas gravity follows an inverse square law, which is roughly why I wouldn’t expect all the planets or the sun to orbit the barycenter – well, at least not neatly as if all the mass was concentrated at the barycenter (perhaps I misunderstood what you meant). Earth’s orbit isn’t affected by all planets in proportion to distance and mass; it should be affected in proportion to mass and some inverse of distance, shouldn’t it? – considering tides on the orbit, maybe an inverse cube for some aspects, but that’s getting a bit outside what I’m familiar with.

54, Hank Roberts: You’re spelling it wrong.

Yeh, thanks for the correction.

Re CM #46

Thank you! This is a very nice, clear post and hits more or less exactly what I was trying to get at in the article.

@45:

Very roughly (just using Newton’s law of universal gravitation), the gravitational force between Earth and Jupiter varies from about 8*10^17 N to about 2*10^18 N, which is between about 2*10^-5 and 6*10^-5 as much as the force between the Earth and the Sun. Jupiter orbits the sun in about 11.859 yr in the same direction as earth, but not quite in the same plane. I don’t know the current phase, but it matters only for long-term solutions.
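These order-of-magnitude figures can be reproduced with Newton's law, F = G m1 m2 / r^2. This sketch uses rounded reference constants and treats the Earth-Jupiter distance as simply 5.2 ± 1 AU, i.e. circular, coplanar orbits, which is an assumption; eccentricities and inclinations are ignored.

```python
# Newton's law of universal gravitation, F = G m1 m2 / r^2, with rounded reference values
G = 6.674e-11         # m^3 kg^-1 s^-2
M_SUN = 1.989e30      # kg
M_EARTH = 5.972e24    # kg
M_JUPITER = 1.898e27  # kg
AU = 1.496e11         # m


def force(m1, m2, r):
    """Gravitational attraction (N) between masses m1 and m2 (kg) separated by r (m)."""
    return G * m1 * m2 / r ** 2


# Closest and farthest approach, taking Jupiter at 5.2 AU and Earth at 1 AU (circular, coplanar)
f_near = force(M_EARTH, M_JUPITER, 4.2 * AU)
f_far = force(M_EARTH, M_JUPITER, 6.2 * AU)
f_sun = force(M_EARTH, M_SUN, 1.0 * AU)

print(f"Earth-Jupiter: {f_far:.1e} N to {f_near:.1e} N")
print(f"relative to the Sun's pull: {f_far / f_sun:.1e} to {f_near / f_sun:.1e}")
```

This gives roughly 9×10^17 N to 2×10^18 N, or about 2.5×10^-5 to 5.4×10^-5 of the Sun's pull on Earth.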

I haven’t explored planetary mechanics, so I haven’t delved into Laskar’s methods. I’m curious that you find them so defective. You definitely should write a paper criticizing them, because Laskar is very widely cited (his 2004 paper has 367 cites in scholar.google.com). If he’s substantially off base, the consequences will be significant.

BTW, the solar system is not “not deterministic over long periods of time”. It’s chaotic, meaning that it’s deterministic in principle, but that the math that describes it is so sensitive to initial conditions that — in practice — it’s impossible to predict its state beyond some point. That said, the more accurately you measure a chaotic system’s initial conditions, and the less numerical error your models contain, the farther out you can validly predict the system’s state. Hence the “b = 0.00339677504820860133” that you criticized.
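The difference between "deterministic" and "predictable" can be illustrated with a toy system. The sketch below uses the logistic map at r = 4, a textbook chaotic map standing in here for the far more complex solar system (nothing about Laskar's model is assumed). The rule has no randomness at all, yet two runs that start a tiny distance apart eventually disagree completely, and shrinking the initial error buys only a roughly fixed number of extra steps per factor of 10^4: the horizon grows like ln(tol/δ)/λ, where λ is the Lyapunov exponent (ln 2 for this map).

```python
def steps_until_divergence(x0, delta, r=4.0, tol=0.1, max_steps=1000):
    """Iterate the logistic map x -> r*x*(1 - x) from x0 and from x0 + delta.

    The rule is fully deterministic; the two runs differ only in their starting
    value. Returns the first step at which they differ by more than tol.
    """
    a, b = x0, x0 + delta
    for step in range(1, max_steps + 1):
        a, b = r * a * (1 - a), r * b * (1 - b)
        if abs(a - b) > tol:
            return step
    return max_steps


for delta in (1e-4, 1e-8, 1e-12):
    print(f"initial error {delta:.0e}: runs diverge after {steps_until_divergence(0.2, delta)} steps")
```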

Finally, I don’t think it at all strange that a chaotic system’s state might be greatly perturbed, over long spans of time, by a force on the order of 10^-5 that of one of the principal forces in the system.

CAPTCHA: ready atsentsn

#59 & #56

Milankovitch cycles? Check mark (i.e. yes, they are real but not a full answer).

http://en.wikipedia.org/wiki/Milankovitch_cycles

Solar System barycenter? Check mark (background in naval architecture, been there, done that)

http://en.wikipedia.org/wiki/Center_of_mass

I’ve now had a more complete look at Laskar’s papers, quite readable, in fact.

His latest paper is here (free access):

http://www.aanda.org/index.php?option=com_article&access=standard&Itemid=129&url=/articles/aa/abs/2011/08/aa16836-11/aa16836-11.html

Titled “La2010: a new orbital solution for the long-term motion of the Earth”

So the main issue, as I see it, is eccentricity, from Laskar’s POV.

There are 95Kyr, 124Kyr, and 405Kyr eccentricity cycles; the 405Kyr cycle has been partially confirmed in the geological record, but the 95Kyr and 124Kyr cycles have not been to date.

The major problem I see with Laskar’s work is he assumes the now (today’s Solar System) for the then (60-250Myr in the past) and the to be (60-250Myr in the future).

So, for example, his modeling goes to the dumpster about 60Myr in EITHER direction. Meaning that if he were on the Yucatán Peninsula, say 65Mya, a few hours before you know what hit right aboot there, he would claim that 65Myr into the future (e.g. December 21, 2012AD), the Solar System would be in total chaos, simply because that’s when his theory/numerical model goes to the crapper (he’s doing these calculations at 80-bit extended precision BTW, but I really don’t know why he’s not using quad (128-bit) precision). AFAIK that is what he’s claiming, inevitable chaos, because his model said so.

There are other issues as well, but basically the instability is highly non-linear, it’s flatlined most of the way, but then literally explodes.

So like tree rings and ice cores, the geologic record should contain all ~165 405Kyr cycles going back to the demise of the dinosaurs ~65Mya (which, I conjecture, is what Laskar is really trying to get to: a more definitive dating of that extinction event). AFAIK that would have to be on the geologists’ to-do list: finding all ~165 405Kyr events in the geologic record.

Once that’s been done, then and only then will I be satisfied with the 405K “constant” resonance issue, and you need other dating techniques to confirm it. All ~165 events will have to be spot on with Laskar’s 405Kyr number; otherwise, no go.

Here are a few more links for you to mull over (in no particular order):

http://en.wikipedia.org/wiki/Geochronology

http://en.wikipedia.org/wiki/Stratigraphy

http://en.wikipedia.org/wiki/Lithostratigraphy

http://en.wikipedia.org/wiki/Biostratigraphy

http://en.wikipedia.org/wiki/Chronostratigraphy

and

http://en.wikipedia.org/wiki/Cyclostratigraphy

(e.g. Laskar’s work, but the word “around” is used before all cycle periods named there, as if to say it’s not “exact” science)

My original complaint has always been with those who claim a 60-year deterministic cycle for Earth’s climate, and then L&S2011 go aboot claiming a 20-year deterministic cycle, right aboot there I lose it, I’ve had it with those 60-year cycle nut jobs.

At some point in time things are no longer deterministic, and as far as the Solar System goes, I happen to think it is significantly less than 60Myr.

Laskar should be looking over this current ice age (say 2.4Mya to the present) and trying to explain the 41Kyr to 100Kyr transition; that’s what I’d be doing if I had his mathematical chops.

#59

An appeal to ignorance, an appeal to authority, and a double negative.

Great. :-(

Not not deterministic means deterministic; see:

http://en.wikipedia.org/wiki/Deterministic_system

http://en.wikipedia.org/wiki/Argument_from_ignorance

http://en.wikipedia.org/wiki/Argument_from_authority

See also:

http://en.wikipedia.org/wiki/Lyapunov_time

Set that number to zero then deterministic, set it to any small positive number, chaos eventually ensues.

So while the Solar System appears to have been markedly stable, oh say these past 4,500,000,000 years, Laskar predicts that everything goes to hell in a handbasket in less than 60,000,000 years (in the future, but most importantly, IN THE PAST!).

Further, he’s even predicted that Mercury will; 1) leave our Solar System, 2) collide with the Sun, 3) collide with Venus, 4) collide with Earth, 5) collide with Mars, 6) collide with itself (oops I made that one up), because … you guessed it … Jupiter did it.

Read all aboot it here:

http://www.imcce.fr/Equipes/ASD/person/Laskar/jxl_collision.html

Note the caption at the bottom of that webpage:

“The orbit sequences are made with real data from the numerical simulations.”

should read:

“The orbit sequences are made with let’s make believe data from the numerical simulations.”

“Real data” connotes empirical data, as in experimental or observational data.

Similarly, reanalysis data are also not real data; they are also data taken from a numerical model.

Matthew #52,

> Your post contributes to a case that I might be wrong.

I’ll happily settle for that. I think agnotology’s a nifty notion, but I’m not selling it, nor totally sold on it myself.

> It looks to me like “agn[o]tology” is just one more pseudo-intellectual

> way to smear some of the skeptics.

I think it’s mostly trying to be a fancy buzzword for a research program, one that, incidentally, covers broader ground than the climate debate. Possibly too broad, too conducive to intellectual smugness, and too likely to tick off research subjects. (“Hi, I’m studying ignorance. Could I interview you?”)

Still, it ain’t much of a smear. Us just plain folks who call’em as we see’em, we’ll just stick with “liars” and “cranks” for the deniers. (Not you, Matthew.)

> one-sided

Surely there are cases of ignorance-making in progressive and ecological causes, too, research opportunities just waiting for a conservative agnotologist to pounce. Might be healthy for the field.

> “merchants of Belief” like Al Gore

I’m sorry, #53 is right, that’s just false balance.

Dan Bedford #58,

Cheers! And sorry again about the name thing. I enjoyed your paper. Useful reading list and constructive tips for putting misinformation to good educational use. Defining and measuring success might take some more thinking about. If you train students to see through Crichton’s essay, you’d expect them to do well at an exam question about an op-ed-style text, and that’s a good thing in itself, but do the critical thinking skills and understanding of scientific method gained by considering misinformation carry over to other kinds of problem-solving? And if they’re trained on materials denying an environmental problem (admittedly the vast bulk of misinformation in this field), does it make them better able to spot materials that make similar errors in somehow overstating one?

Septic Matthew, Excuse me, but what the F*** does Al Gore have to do with any of this. Al Gore is a layman doing his best to present the scientific consensus. The idjit denialists are simply refusing to consider evidence because they don’t like the policy implications. See a difference?

Hint: One is trying to present science. The other is trying to present anti-science. You figure out which is which!

Matthew #53,

The comparison between L&S’s physics-free curve-fitting exercise and Raypierre’s textbook introduction to planetary radiation balance lost me, I’m afraid. Anyway, I think your suggestion about Loehle and Scafetta is testable:

Hypothesis (H): Loehle and Scafetta is a hypothesis-generating exercise of heuristic value.

Empirical consequence: Having generated the hypothesis (H’) that there might be a 60-year wobble in the solar system capable of forcing much of the temperature variation on 20th century Earth, Loehle and Scafetta set off to verify the physical existence of said wobble.

Observation: Well… not so much. Rather, they go off reassessing climate sensitivity, dismissing the role of aerosols, and making 21st-century projections as if H’ could be taken for granted.

Conclusion: H is falsified. New hypothesis H2: Loehle and Scafetta is a publicity-generating exercise of, uh, agnogenetic value.

Is this argument wrong or unfair?

(Captcha: ithink student-)

Re 60 EFS_Junior – I just thought that the time horizons on Laskar’s work were limits of predictability; that the meteorologist doesn’t bother trying to tell you what’s going to happen more than 1-2 weeks from now doesn’t imply a prediction of the end of the world (PS tying several things together, I’ve heard the same perspective voiced about the Mayan calendar – ie they just never got around to what happens after whatever o’clock on what’s that date, Dec 2012 :) ).

I thought predictions of possible future interplanetary impacts were a bit of a different matter from the orbital cycles.

Might there be categories of solar system dimensions with limits of predictability longer or shorter – like you can predict how much snow you will get in tonight’s blizzard but not know where each snowflake will land – you can predict a number of hurricanes of some intensity for the next year but not quite when and where each will be – you can predict in principle that the next ice age may start in 20,000?/30,000? or 50,000 or ? years (contingent on what we do), and the one after that, but at some point there could be some tectonic processes that make things more like the Cretaceous – and there is probably some time horizon for the weather of mantle convection, too, while the climate of the mantle (layered vs whole vs mixed convection, general descriptions of cell sizes and rates of motion) may be predicted for longer time frames. (And yes, you need to know the paleogeography in order to calculate tidal torques and thus the evolution of the periods of the orbital cycles as they are affected by the Earth’s rotation rate and the moon’s orbit and oh, Earth’s rotation also determines the Coriolis effect which affects the tides and the rotation also affects the weather and climate, and tides are important along with wind and plankton in ocean mixing too, but anyway, there is at least geologic evidence for changes in Earth’s rotation which constrains the possible trajectories over time.) Did the K/T impact throw things off so much in the solar system? For the range of directions and speeds and masses it could have involved, there is a range of possible prior momentum and angular momentum values the Earth could have had – perhaps we should do a ‘spaghetti model’ (as was much discussed on the news for the track of Irene)?

Might the ‘weather’ of orbital cycles be impacted by K/T but not the ‘climate’? Perhaps the trajectories of obliquity, precession and eccentricity would become completely different given sufficient time, but maybe with the same general character – periods and amplitudes and average values being similar enough that a casual glance at any given time segment (on the necessary scale to characterize the orbital cycle ‘climate’) wouldn’t reveal anything different.

I don’t see that a potential for future collisions among planets should be preposterous just because it hasn’t apparently happened except near the beginning; I thought it’s been accepted that there is long-term chaos in the orbits. That would make predicting a specific incident hard, but may allow some significant probability of a collision or massive rearrangement at some point.

@60-61: I agree that Milankovitch is not (thus far) a complete explanation for glacial cycles. The theory has been (and is actively being) revised in accord with what we’ve learned. I also agree that we hear all kinds of claims that lack physical basis, not just for “60 year cycles”, but also for such amusing (but unfortunately damaging) humbug as the idea that the GHE violates the 2nd LoT.

On the solar system, it is deterministic — but chaotic. If we could measure all relevant conditions, we could in principle predict its state at any point in time. But we cannot measure all relevant conditions, the ones we can measure all have some error, our calculations are approximations and have finite precision, and the minimum step size is limited by available computational power. These factors limit our ability to predict the system’s state beyond some point in time. Also there is an irreducible prediction limit arising from Heisenberg’s Uncertainty Principle (who knows where the next virtual particle pair will appear?), but I don’t know what it is, and none of the papers I’ve read mention it, probably because non-quantum uncertainties (unknown Kuiper belt objects, anyone?) swamp it by many orders of magnitude.

The question is thus: for what time span does state-of-the-art solar system simulation yield valid results? Laskar et al 2011 (thanks for the cite) claims usable eccentricity results for ±50 Myr.

You say

As far as I understand what you mean by “chaos” here (you seem to be using it in the sense of “disorder” or even “ruin” rather than in the sense of a limit on predictability), I disagree. Laskar et al 2011 claims only that their model can validly predict earth’s eccentricity over +- 50 Myr, not that its eccentricity assumes wild values beyond that time. Similarly, Laskar & Gastineau 2009’s take on Mercury’s orbit’s possible evolution is a probabilistic one, not a prediction.

All that said, it’d be great to get more geological data to confirm, refute, and/or recalibrate solar system models, a point which Laskar et al 2011 makes in the abstract, p.2, p.8, and elsewhere.

Thank you for the conversation. This topic is more interesting than I’d thought.

Re 51 Tom P – watched the video, and I agree that the sun does wobble around the center of mass of the solar system; the center of mass itself is not tending to wobble around the sun because conservation of momentum applies to the solar system as a whole (except of course for the forces applied to it by nearby stars, the rest of the galaxy, etc., but those are not varying so fast and so the center of mass should generally be moving along rather smoothly on the same timescale as planetary motions). However, the idea that this motion of Jupiter would result in the sunspot cycle is a bit odd – see above comments on ‘free fall’ and tides on the sun. Tides follow an inverse cube law (to a first approximation for systems experiencing tides due to masses relatively far away compared to the system’s size; for systems that are not small relative to the distance to the object producing the tidal acceleration, I think it’s more complicated), and so the inner planets gain in importance relative to the outer planets, although mass is still important (and nonetheless, tides raised on the Sun by the planets are so tiny it’s hard to imagine they could be of any such significance).
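The inverse-cube point can be made concrete with a rough comparison. This sketch ranks planets by M/r^3 (the first-order scaling of the tide-raising acceleration on the Sun) and, for contrast, by M/r^2 (their direct pull on the Sun). Masses and mean orbital radii are rounded reference values, orbits are treated as circular, and only relative sizes are meaningful; it is an illustration of the scaling argument, not a solar-physics model.

```python
# Relative tide-raising strength of planets on the Sun (~ M / r^3) versus
# their direct gravitational pull on it (~ M / r^2).
# Masses in kg, mean orbital radii in AU (rounded reference values; circular orbits assumed).
planets = {
    "Mercury": (3.301e23, 0.387),
    "Venus": (4.867e24, 0.723),
    "Earth": (5.972e24, 1.000),
    "Jupiter": (1.898e27, 5.203),
    "Saturn": (5.683e26, 9.537),
}

tidal = {name: m / r ** 3 for name, (m, r) in planets.items()}
gravity = {name: m / r ** 2 for name, (m, r) in planets.items()}

for name in planets:
    print(f"{name:8s} tide relative to Jupiter: {tidal[name] / tidal['Jupiter']:6.2f}   "
          f"pull relative to Jupiter: {gravity[name] / gravity['Jupiter']:8.5f}")
```

With these numbers Venus's tide on the Sun comes out comparable to Jupiter's, while Saturn, which pulls on the Sun harder than Earth does, raises a much smaller tide than Earth.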

And Earth is not orbiting the barycenter of the solar system. Consider that the Moon is in orbit about the Earth (well, their barycenter); it is not orbiting the barycenter of the Earth-Moon-Sun system except by being part of the Earth-moon system. Jupiter is (from memory) a bit over 5 times the distance from the sun as Earth is; thus, with about 1/1000 the mass of the sun, the gravitational acceleration of the Earth toward the sun due to the sun’s mass is about 1000 * (4 to 6)^2 = 16,000 to 36,000 times that of its acceleration toward Jupiter due to Jupiter’s mass, whereas if the Earth were accelerating toward the barycenter of the Sun-Jupiter system, shouldn’t that ratio be ~ 1000 * 1/(4 to 6) = 250 to ~ 167? The Earth-Moon doesn’t orbit the Earth-Moon-Sun barycenter exactly but it is not orbiting the barycenter of the solar system either; to some approximation the innermost planets and the sun must wobble around the barycenter together as they are similarly affected by the outermost planets which happen to be more massive as well as more distant and thus dominate in their effects on the barycenter – things should tend to get more complicated when the planets involved are at more similar distances.

Here is another quote from Raymond Pierrehumbert’s book “Principles of Planetary Climate”.

The thought experiment of varying L* in a hysteresis loop is rather fanciful, but many atmospheric processes could act to either increase or decrease the greenhouse effect over time. For the very young Earth with L* = 960 W/m^2, the planet falls into a Snowball when p_rad exceeds 500 mb, and thereafter would not deglaciate until p_rad was reduced to 420 mb or less (see Fig. 3.11). The boundaries of the hysteresis loop, which are critical thresholds for entering and leaving the Snowball, depend on the solar constant. For the modern solar constant, the hysteresis loop operates between p_rad = 690 mb and p_rad = 570 mb. It takes less greenhouse effect to keep out of the Snowball now than it did when the Sun was fainter, but the threshold for initiating a Snowball in modern conditions is disconcertingly close to the value of p_rad which reproduces the present climate.

For those of you who like RISK, the predicted (or modeled, or the scenario of) reduced solar output in the next decades ought to lead to even more “disconcert”, and a crusade to spend trillions of $$ to make sure that it does not happen.

In brief, the knowledge of planetary climate and the many heat flows do not justify any confidence in attributing some of the post-LIA temperature rise to CO2, or in forecasting a climate sensitivity of 3.5 K per doubling of global average CO2. There is too much that is only roughly known, too much that is not known, to justify a belief in such refined or seemingly precise conclusions. On present knowledge, taken all together, it is possible that Earth will heat (with consequent crop disruption), and it is possible that Earth will cool (with consequent crop disruption). Both risks should be remembered as you go about advocating the rebuilding of the human energy industry.

How that fits in with agnotology I leave to you.

Wait, you are attributing that to Ray Pierrehumbert?

Maybe some of it. But how much?

Please look for the " key on your computer and use it before and after quoted material. It’s a courtesy.

Matthew #69,

If you’re claiming that uncertainty means we cannot know whether we’re affecting the climate and temps can just as well go down as up, the fit with agnotology is clear. Instead of throwing up their hands, people have worked with multiple lines of evidence to bound the uncertainties. For the questions you raise, see e.g. Knutti and Hegerl’s review of climate sensitivity; the IPCC chapter on detection and attribution; or Feulner and Rahmstorf on the effect of a new grand minimum on climate.

Septic Matthew,

That the human energy industry will have to be “rebuilt” is inevitable, and it would happen even if climate change were to magically stop. Oil is running out. Coal is finite. Demand is growing.

As to your other assertion (which, by the way, you should indicate with quotes, block quotes, etc. what is yours and what is Raypierre’s), there is zero evidence that any Grand Solar Minimum is in any danger of putting us into a Snowball Earth scenario. (Read Usoskin) Talk about alarmists!

Hi MJD

MJD says:

3 Sep 2011 at 10:15 AM

To: vukcevic #23

“vukcevic says:

1 Sep 2011 at 6:14 AM

No doubt the Sun indeed runs ‘60 year cycle’, (pi/3 = 60 ; pi/…), as this NASA’s record confirms: http://sohowww.nascom.nasa.gov//data/REPROCESSING/Completed/2009/mdimag/20090330/20090330_0628_mdimag_1024.jpg”

Vukcevic: Your link just shows a photo of the sun. It doesn’t confirm anything about cycles (or much of anything else). Kindly post the correct link if you please.

——————–

Just examine the photo carefully, particularly top left hand side (10 o’clock area), 1024 pix resolution should be good enough. The sun definitely runs ‘pi/…’

@69: In addition to what’s already been said about misleading quote-mining, I’m sure you’ve got ample evidence that solar output will soon fall from ~1366 W/m^2 to ~1312 W/m^2 (a drop of ~4%, probably not seen since ~475 Mya!) for long enough that we should be concerned about runaway glaciation. Oh, wait, you haven’t produced any.
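The ~4% figure above checks out; a quick sketch using only the two TSI values quoted in the comment:

```python
# Relative drop between the two TSI values quoted in the comment above.
tsi_now = 1366.0        # W/m^2, value quoted in the comment
tsi_threshold = 1312.0  # W/m^2, value quoted in the comment
drop = (tsi_now - tsi_threshold) / tsi_now
print(f"relative drop: {drop:.1%}")  # prints "relative drop: 4.0%"
```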

d (septicism quality)/dt has fallen to << 0, which could indicate an incipient grand minimum. Watch out!

28, gavin: A careful reading of the Scafetta papers (2010; L&S 2011 etc.) shows that the ‘astronomical’ rationale is completely post-hoc.

True, but it doesn’t make them wrong, only untested and unreliable. The early history of the Bohr model of the atom was similarly confused (? to pick another mild pejorative for unreliable): it was invented post hoc to explain spectroscopic data, and required repeated revisions until, among other tortures, the hypothetical electron orbits had been replaced by hypothetical distributions and electrons had become subject to Pauli’s Exclusion Principle.

Loehle and Scafetta’s work should not be relied upon to guide policy, at least not exclusively, but I bet it is worthy of further tests.

[Response: I hope you aren’t really being serious, but Loehle and Scafetta’s contribution neither deals with any important uncertainty nor points to any interesting research avenues. They may as well have correlated temperature to the number of Tibetan monks for all the insight their work provides. – gavin]

Re my 68 re 51 Tom P: I worked on it a little more and, for position vectors rS and rJ toward masses MS and MJ, the vector to the center of mass CM is

rCM = ( MS*rS + MJ*rJ ) / (MS+MJ)

and the gravitational acceleration at the origin of the position vectors is

g = G*(MS*rS/rS^3 + MJ*rJ/rJ^3) = gS + gJ

Were it not for the r^3 terms in the denominators, this would be parallel to rCM and thus point toward CM. But since the larger mass is MS, we can take gS = G*MS*rS/rS^3 and note that the difference between that and a vector pointing toward CM is (from proportionalities) gJ’ = G*MJ*rJ/rS^3 = gJ * (rJ/rS)^3
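Written out with the same symbols (bold for vectors, r_S = |r_S|, r_J = |r_J|), the proportionality step amounts to the following; this is a sketch of the reasoning implied above, not a full orbital treatment:

$$
\mathbf{g}_J' = \frac{G M_J\,\mathbf{r}_J}{r_S^3} = \mathbf{g}_J\left(\frac{r_J}{r_S}\right)^3,
\qquad
\mathbf{g}_S + \mathbf{g}_J' = \frac{G}{r_S^3}\left(M_S\,\mathbf{r}_S + M_J\,\mathbf{r}_J\right) = \frac{G\,(M_S + M_J)}{r_S^3}\,\mathbf{r}_{CM}
$$

so the sum g_S + g_J' is parallel to r_CM and points at the barycenter, while the actual g_J is smaller than g_J' by the factor (r_S/r_J)^3.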

gJ/gJ’ = (rS/rJ)^3, and so the actual g = gS + gJ will point toward a point on the line between S and CM that is ~ (rS/rJ)^3 of the way from S to CM, at least for small gJ/gS and small (rCM – rS)/rS.
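A quick numerical check of that approximation, as a sketch under assumed illustrative geometry: Earth at the origin, the Sun at (1, 0) AU, Jupiter at (1, 5) AU, G = 1 and masses in solar masses with Jupiter at 1/1000. Because the Sun sits on the +x axis, each angle is simply the offset from the Sun’s direction. The helper names are hypothetical.

```python
import math


def direction_from_origin(v):
    """Angle (radians) of a 2-D vector as seen from the origin (Earth)."""
    return math.atan2(v[1], v[0])


def direction_check(m_sun=1.0, m_jup=1e-3, r_sun=(1.0, 0.0), r_jup=(1.0, 5.0), G=1.0):
    """Compare the direction of g = gS + gJ with the point a fraction
    f = (rS/rJ)^3 of the way from the Sun to the Sun-Jupiter barycenter."""
    rs = math.hypot(*r_sun)
    rj = math.hypot(*r_jup)
    # Acceleration at the origin: G*M*r/|r|^3 summed over the Sun and Jupiter
    g = tuple(G * (m_sun * s / rs**3 + m_jup * j / rj**3) for s, j in zip(r_sun, r_jup))
    # Sun-Jupiter barycenter
    cm = tuple((m_sun * s + m_jup * j) / (m_sun + m_jup) for s, j in zip(r_sun, r_jup))
    # Predicted target point: a fraction f of the way from the Sun to the barycenter
    f = (rs / rj) ** 3
    p = tuple(s + f * (c - s) for s, c in zip(r_sun, cm))
    return direction_from_origin(g), direction_from_origin(p), direction_from_origin(cm)


ang_g, ang_p, ang_cm = direction_check()
print(f"g: {ang_g:.3e} rad, predicted point: {ang_p:.3e} rad, barycenter: {ang_cm:.3e} rad")
```

With these inputs the direction of g and the predicted point agree to about 0.1%, while the barycenter lies more than 100 times farther off the Sun’s direction.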

So *if* the Earth’s orbit could be approximated as an orbit about a single point between Jupiter and the Sun, and assuming the above can be used as guidance, that point would be ~ 63 to 215 times closer to the sun than to the Jupiter-Sun barycenter. Thus, rather than changing the Earth-sun distance by ~ +/-0.5 % and thus solar TSI by ~ +/-1 % from average, it would change it by two orders of magnitude less (with the annual average effect perhaps smaller still, although varying with Jupiter’s alignment with the semimajor axis, etc.). Similarly for Saturn et al.
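The 63-to-215 factor and the ~0.5% / ~1% figures follow from simple arithmetic; here is a sketch assuming rJ/rS of 4 to 6, Jupiter’s mean distance of ~5.2 AU and a Sun/Jupiter mass ratio of ~1047 (rounded values, not ephemeris data). The helper `closer_factor` is illustrative.

```python
# Back-of-envelope check; all inputs are rounded assumptions.
JUPITER_A_AU = 5.2            # Jupiter's mean distance from the Sun, AU
SUN_TO_JUPITER_MASS = 1047.0  # Sun/Jupiter mass ratio (approximate)


def closer_factor(rj_over_rs: float) -> float:
    """How many times closer the orbit's center is to the Sun than to the barycenter,
    if it lies a fraction f = (rS/rJ)^3 of the way from the Sun to the barycenter."""
    f = rj_over_rs ** -3
    return (1.0 - f) / f


for ratio in (4.0, 6.0):
    print(f"rJ/rS = {ratio}: ~{closer_factor(ratio):.0f} times closer to the Sun")

# Sun-Jupiter barycenter offset from the Sun's center, as a fraction of 1 AU
offset_au = JUPITER_A_AU / (SUN_TO_JUPITER_MASS + 1.0)
# TSI ~ 1/r^2, so a fractional distance change dr/r gives roughly 2 dr/r in TSI
tsi_change = 2.0 * offset_au
print(f"distance change ~ +/-{offset_au:.1%}, TSI change ~ +/-{tsi_change:.1%}")
```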

A related but different matter: I’m curious what anybody thinks of these:

“Possible forcing of global temperature by the oceanic tides”

Charles D. Keeling, Timothy P. Whorf

http://www.pnas.org/content/94/16/8321.full

“The 1,800-year oceanic tidal cycle: A possible cause of rapid climate change”

Charles D. Keeling and Timothy P. Whorf

http://www.pnas.org/content/97/8/3814.full

for a little background:

http://www.aviso.oceanobs.com/en/applications/ocean/tides/tides-and-climate/index.html

(PS I recall elsewhere reading that wind, plankton, and tides contribute similarly to oceanic mixing. But is that just in the mixed layer (or entrainment into it) or in the deep ocean, and does this include upwelling with subsequent mixing or merging with the mixed layer via heating, etc.? Also, deep ocean mixing by tides may be through breaking of internal gravity waves – something about submarine ridges or seamounts rings a bell).

I read through part of the first paper and looked a little at the second. I couldn’t find any mention of the variation of the eccentricity of the Moon’s orbit, and I don’t understand why the parameter γ should be the best for rating the tides. It would be helpful to see a graph of how the tidal amplitude varies: how much variability remains outside of the spring-neap and other short-term cycles?

And as long as someone considers such (small?) variations in tide amplitude important for oceanic mixing, what about deep mixing (time delay effects?), or whether it matters how much tidal energy is dissipated in the diurnal cycle vs the semidiurnal cycle (if my understanding is correct, the equilibrium tide height is zero at the equator and the pole and maximum at midlatitudes for the diurnal cycle, and zero at the pole and maximum at the equator for the semidiurnal cycle)? Or what if stronger tides increased glacial flow or calving at the coasts, or tidal currents opened gaps in the sea-ice cover by piling sea ice against islands, thus affecting albedo and surface heat fluxes? (The mechanism proposed by the authors sounds a bit like tidal variations forcing a sort of ENSO-like effect – not the specific geographical effects but the general vertical redistribution of heat in the ocean. If the tides were turned off completely, presumably there would be some global warming, which would then decay with time as it would cause a radiative disequilibrium.)
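The latitude pattern described in the parenthesis matches the standard latitude factors of the equilibrium tide: the diurnal species scales with sin(2·latitude) and the semidiurnal species with cos²(latitude). The sketch below shows only those shapes, assuming fixed lunar declination and ignoring absolute amplitudes; the function names are illustrative.

```python
import math


def diurnal_factor(lat_deg: float) -> float:
    """Relative diurnal equilibrium-tide amplitude, proportional to |sin(2*latitude)|."""
    return abs(math.sin(2.0 * math.radians(lat_deg)))


def semidiurnal_factor(lat_deg: float) -> float:
    """Relative semidiurnal equilibrium-tide amplitude, proportional to cos^2(latitude)."""
    return math.cos(math.radians(lat_deg)) ** 2


for lat in (0, 30, 45, 60, 90):
    print(f"{lat:>2} deg: diurnal {diurnal_factor(lat):.2f}, "
          f"semidiurnal {semidiurnal_factor(lat):.2f}")
```

The diurnal factor vanishes at the equator and the pole and peaks at 45 degrees, while the semidiurnal factor peaks at the equator and vanishes at the pole.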

I haven’t checked with google scholar but the papers themselves don’t seem to list any other work citing them that really pertains to the hypothesis – unless I missed something.

75, Gavin: They may as well have correlated temperature to the number of Tibetan monks for all the insight their work provides. – gavin]

The calculation of climate sensitivity by Pierrehumbert (whose book supports a figure of 2K) assumes that it is constant (an untestable assumption, at least now) and ignores the effects of cloud formation and dissipation (there are other approximations/simplifications to what is known, but those 2 will do for now.) Loehle and Scafetta assume a persistent periodicity, a persistent linear trend, and an additional more recent (but persistent) linear trend attributable to CO2. Both sets of assumptions are deficient. Either or neither may be shown by 2020 (or 2030) to be sufficiently accurate for predicting the global mean temperature in 2050 (or accurate scenarios depending on the accumulation of CO2.)

If you like assigning numbers to represent degrees of belief, each should get a low number. If you are thinking of public policy, you/we should formulate a public policy for the next ten years that will not be too bad no matter which is best supported by evidence available then.

I think that your invocation of the Tibetan monks was a poor rhetorical flourish. If their number did correlate with some climate statistic, however, that would be intriguing.

> I haven’t checked with google scholar but the papers themselves don’t seem to list any other work citing them ….

Papers citing those papers were only published later; each of those web pages has links (which only work from the article page): look for and click on

View citing article information

Citing articles via CrossRef

Citing Articles via Web of Science (55)

Articles citing this article

Here’s one such link — you’ll find familiar names among the authors:

http://scholar.google.com/scholar?q=link:http://www.pnas.org/content/97/8/3814.abstract

Considering Chinese activity wrt Tibet, I wouldn’t be at all surprised if the number of monks showed a decline well correlated to the decadal increase in global temperature. ;)

81, flxible, That was cute — sincerely. But we already know of the correlation with the growth of Chinese industry. So we’d need some sort of (or sorts of) multivariate time series analysis.

Raymond Pierrehumbert’s book is a treasure trove of information. Because the author is so complete in describing the limitations and approximations in his derivations, it could be called “The AGW Skeptic’s Handbook”. If the so-called “denialists” weren’t so narrow-minded (some are for sure), they’d publish “Annotations” or “A Reader’s Guide” companion explicating the justifications for a skeptical or “lukewarm” assessment of AGW.

Re 79,80 Hank Roberts – thanks.

Re #82

If the so-called “denialists” weren’t so narrow-minded (some are for sure), they’d publish “Annotations” or “A Reader’s Guide” companion explicating the justifications for a skeptical or “lukewarm” assessment of AGW.

Or “How to cherry pick factoids you like from a barely understood text book you have just skimmed, without really having thought about working through it thoroughly”

Ok, probably too long

All the best,

Marcus

84, Marcus

“Cherry picking” hardly does this justice, it’s more like harvesting cherries by the bushel.

I suppose you’d have considered the inability to correctly model the precession of the perihelion of Mercury a “cherry picked factoid”. That error was far less than the errors to date in predicting climate change.

A lot of you don’t seem to understand that the models of climate science are approximations, and that in most cases they do not have demonstrated precision better than 3%. Even if Earth truly were a flat disk without terrain, and even if the energy transfer processes were linear, and even if the system were in steady state, the models would not be accurate enough to make a long-term forecast of the effects of doubling CO2, because the models cannot even predict changes in cloud cover.

Septic Matthew,

If the models were all we have, or if the purpose of the models were to make long-term predictions, I might agree. But neither of these assertions is true. We have mountains–both literal and figurative–of evidence that the planet is warming in a manner consistent with the output of the models–imperfect though they are.

And the purpose of the models is NOT to provide detailed predictions of every behavior the climate may exhibit, but rather so we can compare qualitative and quantitative trends in nature and in the models.

See, Matthew, here’s the deal. We know with near 100% certainty that CO2 is a greenhouse gas and that greenhouse gasses warm the climate. We know with better than 95% confidence that doubling CO2 in the atmosphere will raise the planet’s temperature by an average of more than 2 degrees. We also know that MOST of the uncertainty, by far, is on the high side of that sensitivity confidence curve; that is, it’s a whole helluva lot more likely that sensitivity is 6 degrees than that it’s 1 degree. If it’s 2 degrees, we might just barely manage if we act soon. If it is 6 degrees, we are cooked. And if you toss out the models, you further increase that high-side probability.

So, I have to say, I’m a little perplexed by denialists like you taking comfort in the “lack of precision” of the models. Uncertainty is NOT the friend of the complacent.

Dear Matthew,

You can always suspect that I myself do not know well enough what a model is and what restrictions it has, as you don’t know what I know… But if you accuse the pros here of such a thing, you fall victim to the Dunning-Kruger effect.

As I understand it, projections are, of course, made on the basis of what is known today, which includes the fact that greenhouse gases force the climate system through a well-understood mechanism of radiative heat transfer, yielding models that explain well what is observed and measured today. This knowledge will surely persist, even though there are model deficiencies and open questions.

Of course, surprising things can always happen in the next 20 years; maybe Tamino’s mid-Atlantic leprechauns will take over… not a consideration, due to Occam’s razor, though.

Cheers,

Marcus

Re my 76 – of course, the sun is also accelerating toward Jupiter by a similar amount and direction, which would make the Earth’s orbit even closer to being about the Sun (or the Earth-Sun barycenter).

86, Ray Ladbury: So, I have to say, I’m a little perplexed by denialists like you taking comfort in the “lack of precision” of the models. Uncertainty is NOT the friend of the complacent.

There are too many risks for everyone to “complacently”, so to speak, focus on one to the exclusion of others.

88, Marcus: As I understand it, projections are, of course, made on the basis of what is known today, which includes the fact that greenhouse gases force the climate system through a well-understood mechanism of radiative heat transfer, yielding models that explain well what is observed and measured today. This knowledge will surely persist, even though there are model deficiencies and open questions.

Radiative heat transfer is not the only mechanism, and some of what is observed today is not explained: on another thread is a discussion of clouds.

I recall more than one guest lecture at our physics department’s Centre for Global Change Studies displaying a graph of spectral analysis of temperature histories, with data from multiple time scale sources including thermometer records, ice core data, etc. Separate sources are color coded and aligned to scale.

The spectrum shows really strong peaks at one day and one year, obviously. The other peaks are the interesting bits. There is a small peak around a couple of days, apparently tied to weather such as the passage of warm and cold fronts. IIRC, any peak at 11 or 22 years for sunspot cycles is not at all strong. Much farther down the curve, there are some peaks at Milankovitch frequencies in the ice core data segment.
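The spectra described above combined several data sources, which this does not attempt to reproduce. As a hedged illustration of the basic technique only, the sketch below computes a periodogram (via NumPy’s FFT) of a synthetic hourly series built with assumed daily and annual cycles plus noise, and reports the periods of the two strongest peaks. All amplitudes and the noise level are made up, and the helper `dominant_periods` is hypothetical.

```python
import numpy as np


def dominant_periods(series: np.ndarray, dt_hours: float = 1.0, n_peaks: int = 2) -> list:
    """Periods (hours) of the n_peaks largest periodogram values, excluding zero frequency."""
    power = np.abs(np.fft.rfft(series - series.mean())) ** 2
    freqs = np.fft.rfftfreq(series.size, d=dt_hours)  # cycles per hour
    top = np.argsort(power[1:])[-n_peaks:] + 1         # skip the zero-frequency bin
    return sorted(float(1.0 / f) for f in freqs[top])


# Synthetic hourly "temperature": annual and daily cycles plus noise (illustrative only)
rng = np.random.default_rng(0)
t = np.arange(4 * 365 * 24)  # four 365-day years of hourly samples
temps = (10.0 * np.sin(2 * np.pi * t / (365 * 24))
         + 5.0 * np.sin(2 * np.pi * t / 24)
         + rng.normal(0.0, 1.0, t.size))
print([round(p, 1) for p in dominant_periods(temps)])  # prints [24.0, 8760.0]
```

Because the record length here is an exact multiple of both periods, each cycle falls on a single frequency bin; real records with gaps and mixed resolutions need more careful spectral estimation.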

I’m limited to working from memory here, as I’ve searched several times without managing to find the original sources for these graphs. Perhaps someone here is familiar with such sources. If so, please post any references you can offer. I’d really like to see these in context and study them more closely, along with the author’s discussion.

[Response: Here is a relevant reference, perhaps the one you are thinking of: Huybers and Curry, 2006. –eric]

For those who’d rather not spend $32 for Huybers and Curry, 2006, linked in eric’s response to #90 there is this:

http://dash.harvard.edu/bitstream/handle/1/3382979/Huybers_MilankoContinuumVar.pdf?sequence=1

It’s free, there.

(Not sure how to post URLs in any fancy way.)

Septic Matthew, could you include antecedents to the pronouns you have used in your #89. I’m not sure what “one” is referring to, and I don’t see how it relates to what I posted.

> some of what is observed today is not explained

You’re a statistician, you say? In what field?

89, Septic Matthew: There are too many risks for everyone to “complacently”, so to speak, focus on one to the exclusion of others.

There are too many risks for anyone to “complacently”, so to speak, focus on one risk to the exclusion of other risks.

No one is complacent. Most people in this debate are balancing multiple risks.

93, Hank Roberts: You’re a statistician, you say? In what field?

Nonstationary multivariate biological time series.

MJD, vukcevic is apparently a bit of a stirrer – if you look closely at the region around 10 o’clock, 1/3 in from the edge of the sun image, you will discover an example of the ubiquity of pi.