A group of colleagues has succeeded in producing the first continuous proxy record of sea level for the past 2000 years. According to this reconstruction, 20th-century sea-level rise on the U.S. Atlantic coast is faster than at any time in the past two millennia.

Good data on past sea levels is hard to come by. Reconstructing the huge rise at the end of the last glacial period (120 meters) is not too bad, because a few meters of uncertainty in sea level or a few centuries in dating don’t matter all that much. But tracing the subtle variations of the last millennia requires more precise methods.

Andrew Kemp, Ben Horton and Jeff Donnelly have developed such a method. They use sediments in coastal salt marshes, which are regularly flooded by tides. When sea level rises, the salt marsh grows upwards because it traps sediments. The sediment layers accumulating in this way can be examined and dated. Their altitude plotted against age already provides a rough sea-level history.

How is sea level reconstructed?

But then comes the laborious detail. Although on average the sediment buildup follows sea level, it sometimes lags behind when sea level rises rapidly, or catches up when sea level rises more slowly. Therefore we want to know how high, relative to mean sea level, the salt marsh was located at any given time. To determine this, we can exploit the fact that each level within the tidal range is characterized by a particular set of organisms that live there. This can be analyzed e.g. from the tiny shells of foraminifera (or forams for short) found in the sediment. For this purpose, the species and numbers of forams need to be determined under the microscope for each centimeter of sediment.

The foram Trochammina inflata under the microscope
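The step from foram counts to marsh elevation is typically done with a transfer function calibrated on modern marsh surface samples. The following is a minimal sketch using plain weighted averaging, one of the simplest such methods; it is not necessarily the method used in the paper, and the species optima and counts are invented for illustration. Sea level at the time of deposition then follows as the sample’s altitude minus its estimated elevation relative to mean sea level.

```python
from __future__ import annotations

# Hypothetical modern optima (metres above mean sea level) for a few
# salt-marsh foram species; real values come from a regional training set.
SPECIES_OPTIMA = {
    "Trochammina inflata": 0.45,
    "Jadammina macrescens": 0.55,
    "Miliammina fusca": 0.20,
}


def wa_elevation(counts: dict[str, int]) -> float:
    """Weighted-averaging estimate of marsh elevation for one sediment sample.

    Species without a known optimum are ignored.
    """
    known = {sp: n for sp, n in counts.items() if sp in SPECIES_OPTIMA}
    total = sum(known.values())
    if total == 0:
        raise ValueError("no species with known optima in this sample")
    return sum(SPECIES_OPTIMA[sp] * n for sp, n in known.items()) / total


def relative_sea_level(sample_altitude_m: float, counts: dict[str, int]) -> float:
    """Sea level at deposition: sample altitude minus estimated marsh elevation."""
    return sample_altitude_m - wa_elevation(counts)


sample = {"Trochammina inflata": 60, "Miliammina fusca": 40}
print(round(wa_elevation(sample), 3))              # 0.35
print(round(relative_sea_level(-1.0, sample), 3))  # -1.35
```

A real transfer function would also apply deshrinking and report a cross-validated error for each estimate; this sketch only shows how the counts are turned into an elevation and then into a sea-level index point.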

To get a continuous record of good resolution, we need a site with a rapid, continuous sea-level rise. Kemp and colleagues used salt marshes in North Carolina, where the land has sunk steadily by about two meters over the past two millennia due to glacial isostatic adjustment. This yields a sediment core roughly 2.5 meters long. The effect of land subsidence must later be removed in order to obtain the sea-level rise proper.

Ben Horton and Reide Corbett
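Because the subsidence is described as steady, a first-order correction can be sketched as a linear term. The sketch assumes a constant rate of 1 mm/yr (about two meters over two millennia, as stated above); actual glacial isostatic adjustment corrections come from geophysical models or independent data and need not be linear. The function name and default rate are hypothetical.

```python
# Assumed constant subsidence rate: ~2 m over ~2000 years is ~1 mm/yr.
SUBSIDENCE_MM_PER_YR = 1.0


def remove_subsidence(rsl_mm: float, age_yr: float,
                      rate_mm_per_yr: float = SUBSIDENCE_MM_PER_YR) -> float:
    """Correct a relative sea-level estimate for steady land subsidence.

    Since deposition, a sample of age `age_yr` has been carried down by
    rate * age, so its relative sea level reads too low by that amount;
    adding it back leaves the sea-level change proper.
    """
    return rsl_mm + rate_mm_per_yr * age_yr


print(remove_subsidence(-2000.0, 2000.0))  # 0.0
print(remove_subsidence(-2400.0, 1000.0))  # -1400.0
```

Under this assumed rate, a 2000-year-old sample found 2 m below today’s sea level would indicate no change in sea level proper; everything beyond the subsidence signal is the climate-related part.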

How did sea level evolve?

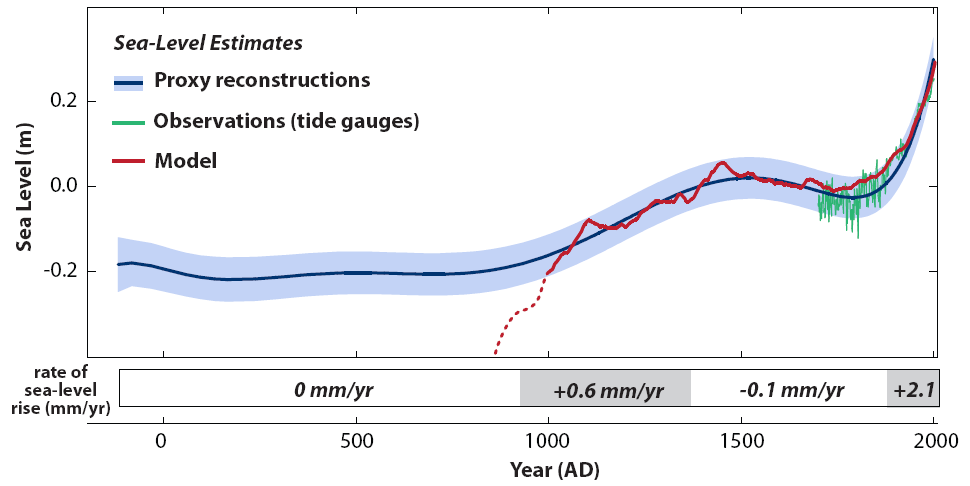

The graph shows how sea level changed over the past 2000 years. There are four phases:

- Stable sea level from 200 BC until 1000 AD

- A 400-year rise by about 6 cm per century up to 1400 AD

- Another stable period from 1400 AD up to the late 19th century

- A rapid rise of about 20 cm since then.

Sea level evolution in North Carolina from proxy data (blue curve with uncertainty range). Local land subsidence is already removed. The green curve shows
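The four phases listed above can be turned into a piecewise-linear idealization that makes the arithmetic explicit: 400 years at about 0.6 mm/yr (6 cm per century) gives roughly 24 cm, and adding the modern rise of about 20 cm puts today’s level roughly 44 cm above the pre-1000 AD level. The sketch treats 200 BC as year -200 and assumes, for illustration only, that the modern rise runs from 1880 to 2000; the real curve is of course not piecewise linear.

```python
# (start_year, end_year, rate in mm/yr); 1880 and 2000 are assumed dates.
PHASES = [
    (-200, 1000, 0.0),        # stable
    (1000, 1400, 0.6),        # ~6 cm per century
    (1400, 1880, 0.0),        # stable until the late 19th century
    (1880, 2000, 200 / 120),  # ~20 cm spread over an assumed 120 years
]


def sea_level_mm(year: float) -> float:
    """Idealized sea level relative to the pre-1000 AD level, in mm."""
    level = 0.0
    for start, end, rate in PHASES:
        if year <= start:
            break
        level += rate * (min(year, end) - start)
    return level


print(round(sea_level_mm(1400)))  # 240
print(round(sea_level_mm(2000)))  # 440
```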

These data are valid for North Carolina, where they also agree with a local tide gauge (green; Fig. 2 in the paper). They also agree with another proxy data set from Massachusetts. Sea-level changes along the US Atlantic coast need not fully coincide with global mean sea level, however. While the level rises uniformly when I fill water into my bathtub, the ocean has a number of mechanisms by which local sea level can deviate from the global mean. One of these can also occur in the tub: the water can “slosh around”, which in the ocean happens on multidecadal time scales. There are other factors as well, such as changing ocean currents or changes in the gravitational field (due to melting continental ice). The paper estimates these factors and concludes that the North Carolina curve should be within about 10 cm of global mean sea level.

Connection to climate

I was involved in this study, together with Martin Vermeer and Mike Mann, to analyse the connection of the sea level data with climate evolution. We used a simple semi-empirical model, which basically assumes that the rate of sea level rise is proportional to temperature. In other words: the warmer it gets, the faster the sea level rises. This connection has already been established for the past 130 years, but it also works well for the past millennium (red curve). There is a discrepancy before 1000 AD (see figure caption).
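The core assumption, that the rate of rise is proportional to temperature, can be written as dH/dt = a(T − T0), where T0 is the temperature at which sea level is stable. Below is a minimal forward-Euler sketch of that simple relation only; the paper’s fitted model has additional terms, and the parameter values and temperature series here are hypothetical.

```python
from __future__ import annotations


def integrate_sea_level(temps_k: list[float], a_mm_per_yr_per_k: float,
                        t0_k: float, dt_yr: float = 1.0) -> list[float]:
    """Forward-Euler integration of dH/dt = a * (T - T0).

    Returns the cumulative sea-level change (mm) after each time step.
    """
    level = 0.0
    levels = []
    for temp in temps_k:
        level += a_mm_per_yr_per_k * (temp - t0_k) * dt_yr
        levels.append(level)
    return levels


# Hypothetical: a century 0.5 K above T0 versus a century at T0.
warm = integrate_sea_level([0.5] * 100, a_mm_per_yr_per_k=2.0, t0_k=0.0)
stable = integrate_sea_level([0.0] * 100, a_mm_per_yr_per_k=2.0, t0_k=0.0)
print(warm[-1], stable[-1])  # 100.0 0.0
```

In this form a warm period produces a steady rise and a period at the equilibrium temperature produces a flat curve, which is the qualitative pattern described for the medieval warmth and the Little Ice Age.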

According to this model, the rise after about 1000 AD is due to the warm medieval temperatures and the stable sea level after 1400 AD is a consequence of the cooler “Little Ice Age” period. Then follows a steep rise associated with modern global warming. Modern tide gauge and satellite measurements indicate that sea level rise has accelerated further within the 20th Century.

Reference: Kemp et al., Climate related sea-level variations over the past two millennia, PNAS 2011.

Further info on sea level: see the PIK sea level pages.

Update (June 21): I’d like to clarify two issues that have come up in discussion of our paper.

The first is: what can we learn from this for future sea level? A proper answer has to be given in another paper, but we can note now that the model fit to the new proxy data is highly consistent with the fit we obtained in 2009 to the tide gauge data. Hence it implies almost the same future projections as in our 2009 paper (75-190 cm by 2100).

The second issue is a misunderstanding of our Fig. 4D. The blue and green curves shown there, labelled Rahmstorf (2007) and Vermeer & Rahmstorf (2009), do not show sea level predictions we made in those earlier papers. They show new predictions (or rather hindcasts) with the model equations used in those two papers, for comparison with the more sophisticated equation used in the present paper. As we write in the paper: “These two models were designed to describe only the short-term response, but are in good agreement with reconstructed sea level for the past 700 y.” The former means we never used them to compute long-range hindcasts – they are merely shown here for comparison purposes, so that readers can see what difference the additional term in Eq. 2 actually makes. And the good agreement for the past 700 years was quite a surprise to me – I did not expect these simple models to hold up for such a long time scale.

Update 2 (June 23)

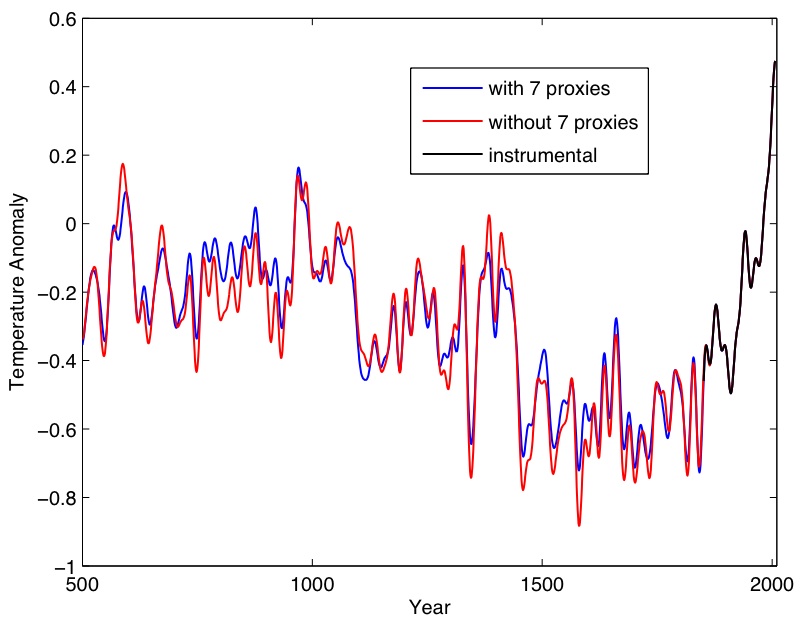

People have asked whether the use of the Tiljander proxies in the Mann et al (2008) EIV surface temperature reconstructions matters for the conclusions of this or any related studies. The answer, as provided previously in the literature (see this reply to a comment in PNAS) is no.

The impact of whether or not these proxies are used was demonstrated to be minimal for the Northern Hemisphere land+ocean EIV reconstruction featured in Mann et al (2008) [see Figure S7b of the Supplementary Information of that article, which compares the reconstruction both with and without 7 potential ‘problem proxies’, including the Tiljander proxies; a similar comparison was also made in Figure S8 of the Supplementary Information for the follow-up article by Mann et al (2009)]. The same holds for the specific global mean EIV temperature reconstruction used in the present study, as shown in the graph below (interestingly, eliminating the proxies in question actually makes the reconstruction slightly cooler overall prior to AD 1000, which, as noted in the article, would bring the semi-empirical sea level estimate into closer agreement with the sea level reconstruction prior to AD 1000).

Comparison of Mann et al (2008) global mean (land+ocean) temperature reconstruction with and without the 7 proxy records discussed in the text [shown in both cases is the low-frequency (>20 year timescale) component of the reconstruction]. Reconstruction is based on calibration against the HadCRUT3 series using the global proxy network

References:

Mann, M.E., Zhang, Z., Hughes, M.K., Bradley, R.S., Miller, S.K., Rutherford, S., Proxy-Based Reconstructions of Hemispheric and Global Surface Temperature Variations over the Past Two Millennia, Proc. Natl. Acad. Sci., 105, 13252-13257, 2008

Mann, M.E., Zhang, Z., Rutherford, S., Bradley, R.S., Hughes, M.K., Shindell, D., Ammann, C., Faluvegi, G., Ni, F., Global Signatures and Dynamical Origins of the Little Ice Age and Medieval Climate Anomaly, Science, 326, 1256-1260, 2009

Fifty years ago I worked at a lab that did submarine launched ICBM targeting. Calculations included tides, sun/moon gravity, air/water temps, and location at point of launch… virtually anywhere in the world’s oceans. The gravitational model of the earth required variations due to the density and depth of stone layers, bays, swamps, etc. The information was derived by analysis of thousands of orbits by hundreds of man-made objects. The results were proven to be quite precise.

If you truly believe there is a significant rise in sea level (and compensation required by “glacial rebound”) then certainly these classified datasets should show the impact of 50 years of warming.

You may want to submit a suggestion to NRL or other Naval R&D facilities to review this data and compare with modern ICBM targeting data/models.

IMHO, you are talking about tracking gnats in a hurricane. The changes over geologic time are so much more massive; the REAL news is that we’ve seen ridiculously little variation over the past 4000 (10,000?) years, and THAT is what we should be surprised and shocked about.

Such stability suggests some form of natural feedback mechanism that has compensated for far more severe changes in inputs than man-made CO2.

Rather than focusing on detecting the impact of man-made CO2, try to understand how the much greater stimuli of the past made so little relative impact on life on this planet.

Humankind is far, far too self-centered and self-absorbed; nature does not dance to our tune, no matter how much it pumps up our ego to think so.

[Response: I might suggest that whether humanity is affecting nature (and how could it not be?) is more a question of investigating the degree than of a priori assumptions that it isn’t possible, regardless of its effect (or not) on our (collective?) ego. – gavin]

Karl Quick (#51)

The gravitational models used can’t have been that good, because the US Navy launched the GEOSAT mission in 1985 specifically to map the marine geoid (and gravity field) as the fields they had weren’t good enough for much of the world’s oceans.

This data has now been declassified because gravity data from civilian missions (e.g. ESA’s ERS-1) made the continued classification of it pointless. There is no need to ask the NRL for this data.

More recently, NASA’s GRACE and ESA’s GOCE gravity missions have improved gravity fields, both time-varying (GRACE) and time mean (GOCE).

The GRACE data shows the increase in mass of the oceans from ice melt.

Neil White

#51–

“. . . some form of natural feedback mechanism. . .” that was effective over the past 4000 (10,000?) years but not previously?

Please.

Karl Quick (#51):

Could you let us know what these much greater stimuli of the past (10,000 years, i.e. after the last ice-age) are? Strange that you’re so confident they existed while I’ve never heard of anything “much greater”.

Karl Quick:

Don’t you like it when an amateur tells professional scientists what they should be researching. Talk about condescending.

As Neil White points out, though, Karl’s time might be better spent getting up-to-date on the data that’s available rather than pontificating on how scientists should do their job …

Yes, over a small fraction of geologic time we’ve seen the evolution of hominids; over a tiny, almost imperceptible period, the evolution of humans; and, over an eyeblink of geologic time, the growth and flourishing of human civilization.

Yet you’d have us marvel over the fact that Pangaea once existed and stuff like that, rather than concentrate on our human future.

Based on falsehoods like:

Little relative impact on life? You mean those huge extinction events like that which led to the demise of dinosaurs?

RE: 49 comment

Hey Dr. Mann,

My apologies, it was a 40 yr old memory. The images that come to mind are, a shaggy brown bearded researcher, (a grad. student, maybe, working with a European Professor/Scientist) standing in front of a large fresh redwood cutting. Then there was an image of a cross cut tree sample with reference arrows, maybe it was a rare fold out page… As it would fit with Dr. Furgeson’s C-14 dating paper, I would be more inclined to think the bearded man was a young Mr. Hughes, now Dr. Hughes, though I can find no references of their working together.

Again, my apologies, though I am curious as to why the idea of CO isotope or calcium leaching was not considered in the prior research: are there too many degrees of freedom to establish a robust evidence base, or is there no reliable means to track calcium erosion or the acidic signature responsible? Never mind; if it were worthy of analysis it would already have been done.

Again my thanks and appreciation for your tolerance over the years, for an “old man’s” meanderings and rants…

Cheers!

Dave Cooke

Wow, it really is true. More than one author worked this paper! (Interesting comments about paper in the linked article.)

Another thing I was thinking about…

I was at a weather conference in New England a few years back, and we heard a presentation by a company whose primary function was to forecast eddy currents and such, so that those involved in yacht races could take advantage of them as they swirl current toward (or away from) their intended directions.

One of the identifiers for those eddies was noticeable water temperature perturbations within these swirls (sometimes as great as 2-4C as I recall).

Anyway, could the presence of these eddy swirls (and the sea-surface temperature garbling that goes with them) have affected the sea-surface temperature record from ships that unknowingly passed through them over the last 50-100 years? Or is it simply assumed that all of this evens out in the end, so that it can be treated as negligible? (I acknowledge this may be slightly OT, since SST measurement is not specifically part of this post about SLR and temperature correlation. But if someone can help, or point me to a better place, that would be fine.)

[Response: These eddies are a big part of the variability seen by shipboard SST measurements and indeed of the SST variability more generally. They last longer than eddies in the atmosphere, so the necessary sampling frequency is lower, but in areas like the Gulf Stream extension, the Agulhas retroflection (off South Africa), or the Antarctic Circumpolar Current, they are really important. – gavin]

[Response: Getting at your question in a slightly different way, one thing that is peculiar about eddies embedded in the western boundary currents (there are analogies for the atmosphere too, e.g. the jet stream), is that they exhibit the property of “negative viscosity”. That is to say, the transport of energy is (over some range of wavelengths) from shorter to longer wavelengths, the opposite of what is typically seen in a fluid, where energy is dissipated by the small-scale turbulent structures. The eddies actually work to maintain the mean large-scale flow (e.g. the Gulf Stream). This was noted decades ago by my academic grandfather, Victor Starr of MIT, who in fact even wrote a book about all of this entitled “Physics of Negative Viscosity Phenomena”. So the short answer to the question is, the properties of these eddies are not only important, but in fact they are fundamental to the large-scale planetary circulation. And there are things about them we still don’t fully understand. – Mike]

A further follow-up to Terry (#46) and my response (#48): actually reading the paper (what a concept) made me realize that the semi-empirical model contains two important terms: one that is relative to an absolute equilibrium temperature (T0,0), and another that is relative to T0(t), where T0(t) depends on the historic temperature…

T0,0 ranges from 0 to -10K (or more) based on a Bayesian analysis: if it is less than about -0.4 K, that would imply that the long-term term of the semi-empirical equation is contributing to SLR for the entire reconstruction. That would also imply that (T-T0(t)) must be negative during the pre-900 period when SLR=0… would a plausible physical explanation be that the deep ocean and ice sheets are still responding somewhat to the post-glacial temperature increase (eg, T-T0,0>0), but that the faster components of SLR like the surface oceans and glaciers were actually responding to the decrease in temperature since the early Holocene?

-M
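The two-term structure described in this comment can be sketched numerically. The sketch assumes that T0(t) relaxes toward the current temperature with a time scale tau, which is one common way to model a lagging baseline; the exact equation and any additional terms in the paper may differ, and all parameter values here are hypothetical. It shows how a positive long-term term a1(T − T0,0) can be cancelled by a negative fast term a2(T − T0(t)) when temperature sits below its recent baseline, giving roughly zero sea-level rise.

```python
from __future__ import annotations


def two_term_model(temps_k: list[float], a1: float, a2: float, t00_k: float,
                   tau_yr: float, t0_init_k: float,
                   dt_yr: float = 1.0) -> list[float]:
    """Forward-Euler sketch of dH/dt = a1*(T - T00) + a2*(T - T0(t)).

    Assumption: the moving baseline T0(t) relaxes toward T with time scale
    tau, i.e. dT0/dt = (T - T0) / tau. Returns cumulative sea level (mm).
    """
    if tau_yr <= 0:
        raise ValueError("tau_yr must be positive")
    level, t0 = 0.0, t0_init_k
    levels = []
    for temp in temps_k:
        rate = a1 * (temp - t00_k) + a2 * (temp - t0)  # mm/yr
        level += rate * dt_yr
        t0 += (temp - t0) / tau_yr * dt_yr  # baseline slowly catches up
        levels.append(level)
    return levels


# Hypothetical case: T is 0.5 K above T00 (slow term positive) but 0.25 K
# below its recent baseline (fast term negative); the two cancel at first.
levels = two_term_model([0.0] * 10, a1=1.0, a2=2.0, t00_k=-0.5,
                        tau_yr=1000.0, t0_init_k=0.25)
print(levels[0])  # 0.0
```

As the baseline T0(t) drifts down toward the current temperature, the fast term weakens and the slow term starts to dominate, so the cancellation only holds while temperature stays below its recent history.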

#57 (If I may…)

So does this sort of phenomenon affect the paleo-reconstruction of global SSTs? I’m assuming there’s no way to know if boat-bucket measurements were conducted in eddy anomalies back-in-the-day.

Is it thought that occurrences are probably evenly distributed (toward hot and cold) and thus not worrisome? Or are these eddies long-lasting enough that we can simply assume they ‘do’ or ‘can’ represent grid-smoothed temperature accurately for a particular time interval in the olden days?

[Response: The presence of eddies is taken into account in the estimation of uncertainties in gridded SST products because they express themselves in the sampling variability estimated for a given location. The error bars in state-of-the-art SST compilations take into account such sampling uncertainties, and indeed they become larger back in time, especially the earliest decades (1850s-1870s) in part due to the fact that there is substantial eddy variance. This is especially true e.g. in boundary current regions, and other areas of large eddy variance as mentioned by Gavin earlier. A good discussion is provided by Brohan et al (2006). – Mike]
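A rough sense of why eddy variance inflates the early error bars: if the individual ship observations in a grid box are treated as independent draws with spread sigma (largely eddy-driven in boundary-current regions), the standard error of the box mean is sigma divided by the square root of the number of observations. State-of-the-art products use more careful estimates that also account for correlation between observations; the spread and observation counts below are hypothetical.

```python
import math


def sampling_error(sigma_k: float, n_obs: int) -> float:
    """Standard error of a grid-box mean from n independent observations.

    sigma_k is the spread of individual observations (here thought of as
    mostly eddy-driven); real SST products also account for correlation.
    """
    if n_obs < 1:
        raise ValueError("need at least one observation")
    return sigma_k / math.sqrt(n_obs)


# Hypothetical boundary-current grid box with 1.5 K observational spread:
for n in (4, 25, 100):
    print(n, round(sampling_error(1.5, n), 2))  # 0.75, 0.3, 0.15
```

Sparse early sampling (small n) in a high-variance region therefore yields a much wider error bar than the same region with dense modern sampling.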

Looking at Figure S6 of the supporting document, I notice that the decline in sea level from 2000 back to 1900 is measured by tide gauges as 200 mm. For the new proxy it is about 300 mm. This implies that the new proxy is 50% out in its ability to hindcast after just 100 years. In that case, would it not be reasonable to suggest it is likely to be 1000% out over a 2000-year period?

[Response: Only if you don’t know what % means (i.e. a linear trend that is 50% off another trend in one period remains 50% off if the trend is extended). – gavin]
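The arithmetic in the response can be checked directly. Using the rates implied by the comment (about 300 mm versus 200 mm per century, i.e. 3 versus 2 mm/yr), the relative difference between two linear trends does not grow with the length of the period. The function name is illustrative.

```python
def relative_difference(rate_a: float, rate_b: float, years: float) -> float:
    """Relative difference of linear trend A from trend B after `years`."""
    if rate_b == 0 or years == 0:
        raise ValueError("reference change must be non-zero")
    change_a = rate_a * years
    change_b = rate_b * years
    return (change_a - change_b) / change_b


for span in (100, 1000, 2000):
    print(span, relative_difference(3.0, 2.0, span))  # 0.5 each time
```

The absolute gap grows with time (100 mm after a century, 2000 mm after two millennia), but as a percentage it stays at 50%.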

Looking at the sea level curve, it appears this study would preclude a mid-Holocene sea-level stand equal to or higher than present. I can’t imagine that sea level would have been higher than today, dipped 0.4 m below present, and then risen again. That seems like too much variation.

A mid-Holocene high stand is something that’s been debated for quite a while in my neck of the woods as it would say a lot about barrier island and tidal marsh formation and migration.

The standard model used to be a slow, steady rise up to about 6,000 YBP and then steady till modern times. I’ve seen the 6,000 YBP date chipped away over the decades to about 4,000 YBP, but this study seems to chip it down to almost nothing (500 YBP). Of course, I work in the microtidal environment of the Texas coast where 0.2m of sea level rise is something we fight about at every conference after a couple of beers.

Would you agree that the mid-Holocene high level stand is dead?

M #58:

Very perceptive, M. That is pretty much what I believe is happening.

I wonder: have vertical tectonic movements been considered?

Eric #65, Luckily there are people who regard difficulty as a challenge rather than an excuse for inaction.

This is some great, and scary, information. It obviously shows a correlation between our emissions and the rising sea level. Although people will always say that correlation is not cause and effect, the evidence is far too strong to ignore the fact that sea levels are rising at a rapid rate.

Working with forams in UK salt marshes, the big problem is the whole teasing apart of isostatic/eustatic influences – I noted the correction for subsidence in the paper, but are you confident in not accounting for other sources?

Can I also please ask what was your take on the transfer function error? Things like infaunal species, bioturbation, non parametric salinity tolerances etc all can produce quite significant error, and I am impressed that in this data set it was less than 10cm!

Cheers,