We’ve been a little preoccupied lately, but there have been some developments in the field of do-it-yourself climate science that are worth noting.

First off, the NOAA/BAMS “State of the Climate 2009” report arrived in mailboxes this week (it has been available online since July though). Each year this gets better and more useful for people tracking what is going on. And this year they have created a data portal for all the data appearing in the graphs, including a lot of data previously unavailable online. Well worth a visit.

Second, many of you will be aware that the UK Met Office is embarking on a bottom-up renovation of the surface temperature data sets, including daily data and more extensive sources than have previously been available. Their website is surfacetemperatures.org, and they are canvassing input from the public until Sept 1 on their brand new blog. In related news, Ron Broberg has made a great deal of progress on a project to turn the much more extensive daily weather report data into a useful climate record. Something that the pros have been meaning to do for a while….

Third, we are a little late to the latest hockey stick party, but sometimes taking your time makes sense. Most of the reaction to the new McShane and Wyner paper so far has been more a demonstration of wishful thinking than any careful examination of the paper or results (with some notable exceptions). Much of the technical discussion, for instance, has not been very well informed. However, the paper commendably comes with extensive supplementary info and code for all the figures and analysis (it’s not the final version though, so caveat lector). Most of it is in R which, while not the easiest-to-read language ever devised by mankind, is quite easy to run and mess around with (download it here).

The M&W paper introduces a number of new methods to do reconstructions and assess uncertainties that haven’t previously been used in the climate literature. That’s not a bad thing of course, but it remains to be seen whether they are an improvement, and those tests have yet to be done. One set of their reconstructions uses the ‘Lasso’ algorithm, while the other reconstruction methods use variations on a principal component (PC) decomposition and simple ordinary least squares (OLS) regressions among the PCs (varying the number of PCs retained in the proxies or the target temperatures). The Lasso method is used a lot in the first part of the paper, but their fig. 14 doesn’t clearly show the actual Lasso reconstructions (though they are included in the background grey lines). So, as an example of the easy things one can look at, here is what the Lasso reconstructions actually gave:

‘Lasso’ methods in red and green over the same grey line background (using the 1000 AD network).

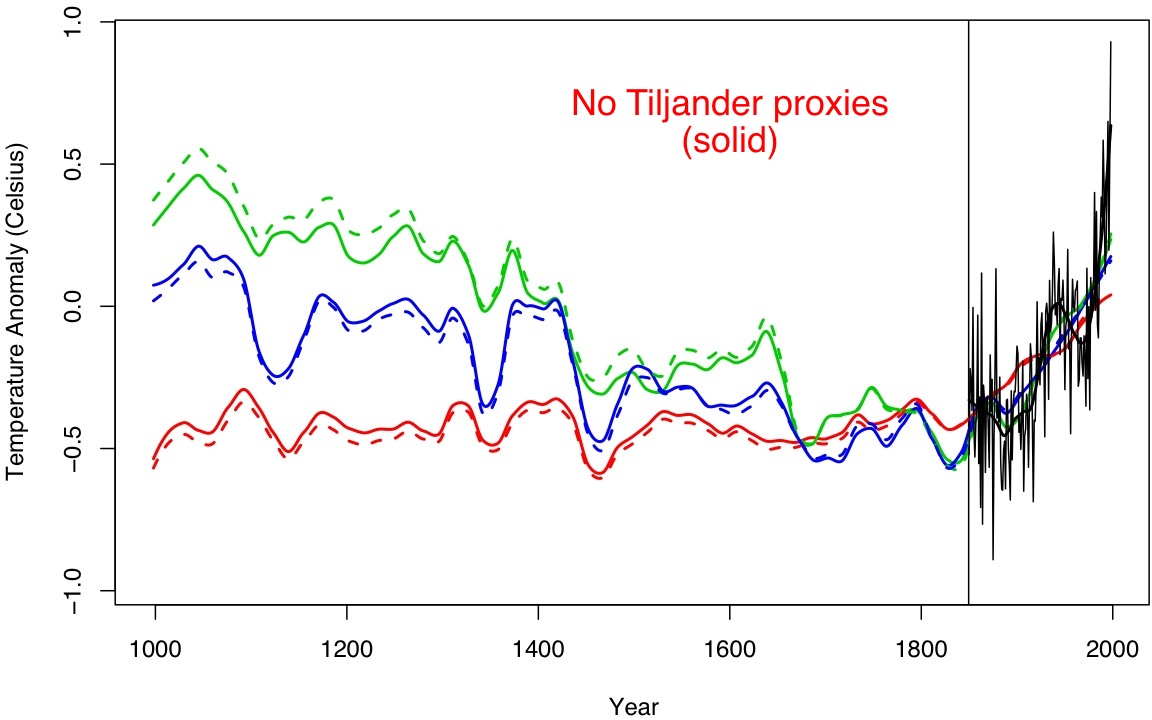
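For readers who haven’t met it, the Lasso is least-squares regression with an extra penalty on the sum of the absolute values of the coefficients, which tends to set many proxy weights exactly to zero. Below is a minimal pure-Python sketch using cyclic coordinate descent. It is not the M&W code (theirs is in R), and the function names, the penalty scaling and the toy data in the usage note are assumptions for illustration only.

```python
"""Minimal Lasso (L1-penalised least squares) via cyclic coordinate descent.

Illustrative sketch only, not the McShane & Wyner implementation. Minimises
    (1 / (2n)) * ||y - X b||^2 + lam * ||b||_1
with an unpenalised intercept handled by centring X and y.
"""


def soft_threshold(z, gamma):
    """Shrink z towards zero by gamma; values inside [-gamma, gamma] become 0."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso(X, y, lam, n_iter=500, tol=1e-10):
    """Return (intercept, coefficients) for rows X (list of lists) and target y."""
    n, p = len(X), len(X[0])
    # Centre each column and the target so the intercept drops out of the fit.
    col_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - col_means[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    # (1/n) * sum of squares of each centred column: the coordinate-wise curvature.
    col_sq = [sum(r[j] ** 2 for r in Xc) / n for j in range(p)]
    b = [0.0] * p
    resid = yc[:]  # residual y - X b, starting from b = 0
    for _ in range(n_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0.0:
                continue  # a constant column carries no information
            # Correlation of column j with the partial residual (b_j added back in).
            rho = sum(Xc[i][j] * resid[i] for i in range(n)) / n + col_sq[j] * b[j]
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - b[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= Xc[i][j] * delta
                b[j] = new_bj
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    intercept = y_mean - sum(col_means[j] * b[j] for j in range(p))
    return intercept, b
```

With `lam=0` this reduces to ordinary least squares; as `lam` grows, the coefficients shrink towards zero and eventually drop out, which is why the choice of penalty matters so much for a reconstruction. For example, `lasso([[1, 0], [2, 1], [3, 0], [4, 1], [5, 0]], [3, 5, 7, 9, 11], 1.0)` shrinks the true slope of 2 on the first column down to 1.5 and keeps the second column at zero.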

It’s also easy to test a few sensitivities. People seem inordinately fond of obsessing over the Tiljander proxies (a set of four lake sediment records from Finland that have indications of non-climatic disturbances in recent centuries – two of which are used in M&W). So what happens if you leave them out?

No Tiljander (solid) vs. original (dashed) for the three highlighted ‘OLS’ curves in the original figure, loess-smoothed for clarity.

… not much, but it’s curious that for the green curves (which show the OLS 10PC method used later in the Bayesian analysis) the reconstructed medieval period gets colder!
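The curves in the No-Tiljander comparison are loess-smoothed. A rough sketch of the idea follows, assuming degree-1 local fits with tricube weights over a fixed fraction of the nearest points and no robustness iterations; R’s own `loess()` has different defaults and more options, so treat this as an illustration of the technique rather than a reproduction of the figure.

```python
def loess(x, y, frac=0.3):
    """Degree-1 locally weighted regression with tricube weights (no robustness steps)."""
    n = len(x)
    k = max(2, int(round(frac * n)))  # number of nearest points in each local window
    fitted = []
    for x0 in x:
        # The k-th smallest distance to x0 sets the half-width of the local window.
        h = sorted(abs(xi - x0) for xi in x)[k - 1] or 1e-12
        # Tricube weights: (1 - (d/h)^3)^3 inside the window, 0 at or beyond h.
        w = [(1 - min(abs(xi - x0) / h, 1.0) ** 3) ** 3 for xi in x]
        sw = sum(w)
        # Weighted least-squares straight line through the local neighbourhood.
        xm = sum(wi * xi for wi, xi in zip(w, x)) / sw
        ym = sum(wi * yi for wi, yi in zip(w, y)) / sw
        sxx = sum(wi * (xi - xm) ** 2 for wi, xi in zip(w, x))
        sxy = sum(wi * (xi - xm) * (yi - ym) for wi, xi, yi in zip(w, x, y))
        slope = sxy / sxx if sxx > 0 else 0.0
        fitted.append(ym + slope * (x0 - xm))
    return fitted
```

Because each local fit is a straight line, a series that is already linear passes through unchanged, while year-to-year wiggles are averaged out over a window whose width is set by `frac`.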

There’s lots more that can be done here (and almost certainly will be) though it will take a little time. In the meantime, consider the irony of the critics embracing a paper that contains the line “our model gives a 80% chance that [the last decade] was the warmest in the past thousand years”….
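The quoted 80% figure is a probability taken across an ensemble of reconstructions. A minimal sketch of how such a number can be computed follows, assuming each ensemble member is a list of annual values ending in the most recent year, and that ‘warmest’ means the final non-overlapping ten-year block beats every earlier block. M&W’s exact definition may well differ, and the function names are hypothetical.

```python
def decade_means(series, width=10):
    """Means of consecutive non-overlapping blocks of `width` values, aligned to the end."""
    # Drop the oldest leftover values so the final block is exactly the latest decade.
    start = len(series) % width
    return [sum(series[i:i + width]) / width for i in range(start, len(series), width)]


def prob_last_decade_warmest(draws, width=10):
    """Fraction of ensemble members whose final decade is warmer than every earlier one.

    Each member needs at least two full blocks of data.
    """
    hits = 0
    for series in draws:
        means = decade_means(series, width)
        if means[-1] > max(means[:-1]):  # strict: ties do not count as warmest
            hits += 1
    return hits / len(draws)
```

The answer is only as good as the ensemble: if the reconstruction spread is too narrow or too wide, the probability inherits that error directly.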

Gavin,

“You need to start with the code, not a paper. That comes afterwards. Try EdGCM, NCAR CSM or GISS ModelE. – gavin]”

No, Gavin, you start with the paper, because the raw code is meaningless without an explanation of which equations are which, and what’s coming from where. I’m not going to spend a week trying to piece a fairly large model together, finding all the background for each equation from scratch. Luckily the GISS model does have a paper to go with it, which happened to be the Schmidt paper you gave me. It’s far too late to do much with it now, but give me a day or so.

And those FAQs from your own site…not useful here.

But hey, you’ve stopped with the appeals to ridicule and with condescendingly explaining simplistic issues that don’t answer the criticism, so maybe we can get somewhere.

It is interesting that Wally and Gilles both emphasize the uncertainty in climate sensitivity, but without any indication of which way those uncertainties are skewed. It is pretty much impossible to understand Earth’s climate if you presume sensitivity is less than 2 degrees per doubling. However, even 2 degrees per doubling would give us a temperature rise of about 4 degrees by the end of the century–well into the red zone. It is much easier to make the models work with a sensitivity of 4 degrees per doubling–which puts us into the range of mass extinction by century’s end.

Guys, I’ll keep saying it until you get it. Uncertainty is not your friend.
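The numbers in this comment rest on the usual logarithmic dependence of equilibrium warming on CO2, ΔT = S·log2(C/C0), where S is the warming per doubling. A quick sketch follows, assuming CO2 alone and equilibrium conditions (no ocean lag, no other forcings); the function names are hypothetical.

```python
import math


def equilibrium_warming(sensitivity, co2_ratio):
    """Equilibrium warming (deg C) for a CO2 ratio C/C0, given warming per doubling."""
    return sensitivity * math.log2(co2_ratio)


def co2_ratio_for_warming(sensitivity, warming):
    """CO2 ratio C/C0 needed to reach `warming` (deg C) at equilibrium."""
    return 2 ** (warming / sensitivity)
```

At S = 2 °C per doubling, a 4 °C rise needs C/C0 = 4, i.e. two doublings; at S = 4 °C per doubling the same rise needs only one.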

Wally: The irony! You first lecture Gavin on what he can do with GCMs, and when he points out you don’t know how they work (which you admit) you accuse him of being condescending.

You say that logical argument could swing your opinion, but it actually looks like you have already reached the conclusion you want.

Rocco,

You apparently did not understand my post. I may not be able to write out any particular model from scratch or make perfect sense of the raw code without a paper to explain it, but that does not mean I do not appreciate what these models are comprised of. One issue is the raw code, the other is basic theory and rationale. They are two different things.

Wally 13 September 2010 at 3:07 PM

In an earlier comment you said something similar:

You’re not here to do anyone any favours except yourself. Whether you let your emotions interfere with your judgment is your choice.

Wally: But you have yet to demonstrate that you really “appreciate what these models are comprised of”. Perhaps it would be wise to do so before you try to convince climate modellers that you understand GCMs better than they do.

Wally says: “I may not be able to write out any particular model from scratch or make perfect sense of the raw code without a paper to explain it, but that does not mean I do not appreciate what these models are comprised of.”

Do you have any idea how often I had students in introductory physics classes try to tell me, “I may not understand the math, but I just know relativity can’t be right!”

Anne,

My emotions are not the problem. The problem is getting useful criticism that can advance my own knowledge, or as you want to put it “do me favors,” from a generally abusive set of posts.

There is not much to gain if someone more or less calls you stupid and tells you to read a stat book. If there is something specific that causes someone to say such a thing, that can be useful, however. Unfortunately, it’s difficult for most people to do both at the same time, but I’ve tried to pull what I can out of this discussion.

“That doesn’t win you any favors.” — Wally

“as you want to put it ‘do me favors'” — Wally

“explain it rationally, logically and respectively” — Wally

People are giving you the same answers others get when they ask the same questions, and you’re asking very frequently asked questions so far, and taking offense at almost everything.

Maybe finishing the PhD first would be a good idea, before starting to dig into a whole different field?

@359: Hank, the first quote that you attribute to Wally was mine. Pay attention, please. I won’t comment on your insulting tone as I promised not to do it.

On the debate: of the 9 posts I currently see on this page, only 2 have *something* to do with climate science (351 and 352). The others are pointless attacks claiming that someone does not really know anything and has to go take lessons (5 posts), plus the predictable reactions to them (2 posts). Pathetic, particularly from the side of the attackers.

On models: of course, the ultimate truth is in the code, but the argument in 351 that there has to be some guiding document behind any good model that would describe its behavior without going into a lot of detail has merit as well. Could anyone please point Wally to such a document for, say, ModelE? I would do that myself, but I am not familiar with that model.

Wally 14 September 2010 at 7:0 PM

That requires effort from both sides. Focus is key. Try not to get carried away by economics and etiquette. And even in the field of climate science there is too much to cover in a few exchanges of comments. Focus on those areas where you see most issues (I think you have already identified climate models as one of those areas, so a suggestion might be to try and stick to that subject).

Gavin saying “you’re confused” is not being offensive, he is being direct. He doesn’t know you and cannot look inside your head. He only has your comments. If those comments show confusion, he’ll tell you that. Directly and without detours. I always find it very helpful in a discussion if people leave me no guesswork about where they stand.

As a famous president once said, “Don’t ask what your country….” Maybe if you forget about what you can get out of this discussion and start looking for what you can contribute, you’ll learn much more from it. For example, instead of demanding evidence from the other posters, bring up some yourself.

Do you really think you’ve already tried enough? You knew you were entering the lion’s den, don’t tell me you didn’t expect stiff opposition to your viewpoints. Don’t give up so easily.

Ah, sure enough, ‘favors’ was first used by Nick Rogers; y’all are both complaining about being referred to statistics and code sources.

But those are regular answers to frequently asked questions.

It’s easy to make a blog exchange of text into a Monty Python sketch,* but it takes effort to have a human conversation in text without visual and verbal cues to feeling. Make the effort, others here will work with you. Yes, there are always going to be people trying to stir things up, and people thin-skinned enough; people do make mistakes. Don’t take it personally. Nobody’s sounded angry or upset with you yet, tho’ you’ve heard some impatience. Seriously, look at “Start Here” and Weart’s book, and consider that even easily irritated and cranky people will respond to questions that are asked in the right way, e.g.

http://www.catb.org/esr/faqs/smart-questions.html

______

* http://www.ibras.dk/montypython/episode29.htm#11

Nick, reread 351. You’re asking people to give Wally what he has now.

Wally writes “Luckily the GISS model does have a paper to go with it, which happened to be the schmidt paper you gave me…. FAQs from your own site…not useful here.”

Luckily? happened to be?

He’s been given what he asked for, and without having read it yet he is dismissing it as luck and happenstance.

That’s not respective.

The Model E page says how to join the mailing list. Done that?

There are a lot of people doing what you all are asking help with.

Ask good questions, get better answers.

http://imgs.xkcd.com/comics/physicists.png

Gavin, you write: “The number of variable parameters that can be used for the tuning exercises you seem to want to do are tiny (a handful or so), and even they can’t be changed radically because you’ll lose radiative balance, or reasonable climatology.”

I am interested to know your reaction to Jeffrey T. Kiehl, 2007. Twentieth century climate model response and climate sensitivity. GEOPHYSICAL RESEARCH LETTERS, VOL. 34, L22710, doi:10.1029/2007GL031383, 2007

My reading of Kiehl is that there is a great deal of uncertainty in historical estimates of aerosol forcings and that these uncertainties are one of the primary sources of the uncertainty in the climate sensitivities generated by the climate models. Although I am not familiar with all the ins and outs of creating climate models, it seems to me that Kiehl’s observations at least create an appearance that climate models are “tuned” to historical data with choices of historical aerosol forcing from a plausible range of historical estimates.

[Response: These are two different issues. I was discussing the tuning of the model physics, while you are alluding to the potential for the tuning of the model forcings. I cannot speak for any of the other groups, but we chose our aerosol forcings based on a best guess estimate of aerosol distributions (from an offline aerosol model) and an estimate of the aerosol indirect effect from the literature (-1 W/m2 pre-industrial to present). We did not go back and change this based on our transient temperature response in the 20th C simulations. For AR5, the model has changed significantly, the aerosol distribution as well, and yet in the configuration that requires an imposed aerosol indirect effect we are using the same magnitude as in AR4 (i.e. -1 W/m2 pre-industrial to present). No tuning is being done. We will also have configurations in which the indirect effect is calculated from first principles – this is going to give whatever it gives. And again, we are not going to tune it based on the transient temperature response. – gavin]

Gavin’s inline response @ #332 received the response:

“Here’s a hint Gavin, create an argument that makes sense. . .” That’s two insults in ten words: “hint” is pretty clearly sarcastic, while the assertion by implication that the argument did not make sense would have a pretty high probability of causing offense with most people, IMO.

And what was Gavin’s comment?

“Why do you think that GCMs are like random low order differential equations? They are nothing like it. The vast majority of the code is tied very strongly to very well known physics (conservation of energy, radiative transfer, equations of motion etc.) which can’t be changed in any significant aspect at all. The number of variable parameters that can be used for the tuning exercises you seem to want to do are tiny (a handful or so), and even they can’t be changed radically because you’ll lose radiative balance, or reasonable climatology. I strongly suggest you actually look at some GCM code, and read a couple of papers on the subject – GCMs are far more ‘stiff’ than you imagine. – gavin]”

By contrast, there is to my eye no insult here. The closest thing to it is the initial question “Why do you think. . .” But when I (for example) ask for information, there’s a tacit assumption that whoever I ask actually does know more than I do on that topic. I can hardly fault them for accepting that assumption; rather, I should be grateful for their time. I am certainly not doing them a favor! The remainder is straight declarative sentences, substantive and relevant.

The final sentence is a recommendation, true enough, but as I said, when you come around looking for information, there’s a tacit assumption. . .

Cognitive bias isn’t your friend when people are telling you that you have everything wrong. I’m reasonably certain this is the only reason that Wally can’t extract anything from these discussions.

xkcd is very interesting, today. Painfully apt, too.

Gavin, can you expand on your response to 365? How exactly do you use the information that the aerosol indirect effect is 1 W/m2 from the preindustrial in a model? I assume it is not just a global perturbation to the radiation budget. Do you add an extra cooling to the radiative transfer calculations when aerosols are present? Does there have to be both aerosols and clouds? I don’t see how you could have a configuration with an ‘imposed’ indirect effect, without essentially building a simple parameterisation.

Secondly, have you seen the paper: Knutti, Why are climate models reproducing the observed global surface warming so well?, GRL, 2005. Knutti seems to suggest that the trend in temperatures over the 20th century has been used, consciously or not, in model development.

Finally, why is there so much room within CMIP for modelling groups to choose their own forcing? Shouldn’t at least the aerosol concentration, ozone concentration etc., as well as any offline/imposed forcing values, be consistent across the board?

[Response: The details need to be read – this paper (Hansen et al, 2005) has the rationale and actual mechanics of the parameterisation (section 3.3.1). Not sure where the idea came from that this was not a parameterisation though. Knutti’s paper is interesting (as is almost everything he writes), but as I said (and I can’t speak for any other groups), we do not tune the forcings to get a better match to the transient temperatures. We aren’t far off though and so we have no incentive to do so – that might be a factor in some collective emergent behaviour. As for why groups vary in what they use to force the models, it is inevitable given the range of sophistication in modelling groups. I’ll give an easy example – many early codes did not have absorption or scattering by aerosols in the radiation code. This is a hard problem, and not necessarily the first thing you would get to when you start out. Yet these groups still wanted to include some accounting of the effects of volcanoes (which are clear in the record). So they applied the aerosol forcing from volcanoes as if it was the exact equivalent of a dimming of the sun. This works ok for some things (global cooling), but doesn’t work at all for strat. warming, circulation impacts, ozone feedbacks etc. So along comes a committee of climate modellers who try and get everyone to do the same experiment that involves volcanic forcing. Can they mandate that everyone use the exact same aerosol mass distribution in the stratosphere? No, because that won’t work at all for some groups. Do they force everyone to the lowest common denominator? (i.e. a change in TSI?) Again no, because once people have implemented complex physics in the code, they hate turning it off to keep people who didn’t make that effort happy. They want to see if it works. So you are stuck – everyone will end up applying the forcings their own way. 
This is even more of a problem when it comes to the complex 3D forcings – tropospheric aerosols, indirect effects and ozone. Plus you have the even more fundamental issue that the amount of radiative forcing from a physical change in GHGs or aerosols is a function of the model base climate and the radiative transfer code – so even if you forced everyone to do things identically, you still aren’t going to get identical impacts. Of course, some experiments like this are useful (2xCO2 + slab ocean for instance) – but they are useful only for comparing models. For comparing to the real world, over-specification of some ideal (but uncertain) forcing restricts the phase space of where the models can be and underestimates the uncertainty. In some cases an unfortunate choice can mean that none of the models can get anywhere close to the real world – and what is the point in that? – gavin]
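The ‘dimming of the sun’ shortcut described in this response amounts to converting a global-mean forcing into an equivalent change in total solar irradiance (TSI). Since global-mean absorbed sunlight is S(1 − α)/4 (a sphere intercepting light over a disc), a forcing ΔF matches a TSI change of 4ΔF/(1 − α). A sketch follows, assuming a planetary albedo of 0.3; the function names are hypothetical.

```python
def equivalent_tsi_change(forcing_wm2, albedo=0.3):
    """TSI change (W/m^2) that produces the same global-mean forcing as `forcing_wm2`."""
    # Absorbed sunlight per unit area averaged over the globe is S * (1 - albedo) / 4,
    # so a forcing dF corresponds to dS = 4 * dF / (1 - albedo).
    return 4.0 * forcing_wm2 / (1.0 - albedo)


def forcing_from_tsi_change(tsi_change_wm2, albedo=0.3):
    """Global-mean forcing (W/m^2) from a change in TSI, using the same geometry."""
    return tsi_change_wm2 * (1.0 - albedo) / 4.0
```

On these assumptions, a −1 W/m² volcanic forcing corresponds to a TSI drop of roughly 5.7 W/m². The conversion preserves the global energy budget, which is why it gets the global cooling right; it cannot represent the absorption by stratospheric aerosols that drives the stratospheric warming.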

Nick,

I’ve been looking at ModelE in what time I can spare (which hasn’t been much) for the last 2 days, and I’m not exactly satisfied with the explanations for it. The Schmidt paper that supposedly describes it does a pretty good job of explaining the basic rationale from a high level; however, the model code is not explained. We need a list of where the model equations and parameter values are coming from, including references and discussion. I know most model papers have a hard time with this because it is quite a lot of work and requires many, many additional pages that journals often don’t want to bother with. But it makes reproducing these models very difficult or even impossible.

However, to go back to the point of looking at GISS ModelE, it’s obvious from the Schmidt paper that they are estimating, guessing, or “tuning” several parameter values, such as the aerosols that were brought up before, drag coefficients, radiation scattering effects by clouds, cloud formation, and precipitation.

Gavin is now attempting a bait and switch in his latest comment to pauld. We were talking about GCMs in their entirety. Earlier he wanted me to believe these things were ‘stiff’, but now he’s retreating from that position and saying that the “physics” is known, but the forcings are more malleable. I was speaking to the entirety of these models, including CO2/aerosol forcings, as well as black body radiation and conduction. Obviously we know some things very well, but others not so much.

Off topic, I suppose, but

“Nanodiamonds Discovered in Greenland Ice Sheet, Contribute to Evidence for Cosmic Impact”:

http://www.sciencedaily.com/releases/2010/09/100914143626.htm

Clovis comet?

Anne,

“Gavin saying “you’re confused” is not being offensive, he is being direct. He doesn’t know you and cannot look inside your head. He only has your comments. If those comments show confusion, he’ll tell you that.”

No, it isn’t direct, it’s ridicule. Plain and simple. Since, as you say, he can’t look inside my head, there are several other possibilities beyond me being confused.

1) Gavin is confused

2) My post was confusing (notice this is different than ME being “very confused”)

3) There is just some general misunderstanding through slightly different use of vocabulary or something, which you might not qualify as confusion on the part of any one party

Gavin does not have the required information to make a broad statement about my general understanding from just a couple of posts (you even state as much). Thus, making such a statement about me personally, instead of about my argument, is an appeal to ridicule. I’m not being sensitive here; I’m stating the facts. Comments such as that one are ad hominems. If you, or Gavin or Hank, don’t want me to point them out, you all had better get together and decide not to throw them out there.

And of course after this statement Gavin goes on to describe a statistical concept that is extremely remedial, as if somehow he’s “teaching” me, which doesn’t even do anything to advance his argument. He came in assuming I don’t even have a high schooler’s understanding of stats. As Hank was moaning about, that isn’t doing him any favors. Isn’t helping to convince people like me at least one of the reasons for this blog?

And Kevin, my “hint” was quite serious. Was it disrespectful? Probably, but I generally don’t give a lot of respect to those that don’t show me any.

Anyway, the general point is that Gavin, and I, as well as others on this board would be better served if we depersonalized this debate. No more, “you’re confused”, “you’re wrong”, “you need to go learn some stats”. If you have a point explain it as it pertains to the arguments only, not those making the arguments. As we know, such statements are ad hominems regardless of truth. I’m sure I’m ignorant of a great many things, but someone else stating as much in argument is pointless. Let the facts be the facts.

1- Livingston and Penn have popped up again.

http://www.probeinternational.org/Livingston-penn-2010.pdf

2- There’s also an October Science Magazine ref which I’ve misplaced

3- My first real live experience with MSM. From the Financial Post (Energy rag???):

“…The upshot for scientists and world leaders should be clear, particularly since other scientists in recent years have published analyses that also indicate that global cooling could be on its way. Climate can and does change toward colder periods as well as warmer ones. Over the last 20 years, some $80-billion has been spent on research dominated by the assumption that global temperatures will rise. Virtually no research has investigated the consequences of the very live possibility that temperatures will plummet. Research into global cooling and its implications for the globe is long overdue…”

Read more: http://opinion.financialpost.com/2010/09/16/lawrence-solomon-chilling-evidence/#ixzz0zjDkaElt”

This is surely OT. Does it belong anywhere on RC?

> Lawrence Solomon

Shorter Lawrence Solomon: maybe the sun _won’t_ come up tomorrow!

It’s Possible! We should plan for that happening!!!

On the less dramatic possibility of another solar minimum, not to worry even if it happens:

http://www.agu.org/pubs/crossref/2010/2010GL042710.shtml

John Peter @372 — Sure you didn’t find it on The Onion?

Gavin: Earlier I received the following response from you to the question I asked at about #365:

“[Response: These are two different issues. I was discussing the tuning of the model physics, while you are alluding to the potential for the tuning of the model forcings. I cannot speak for any of the other groups, but we chose our aerosol forcings based on a best guess estimate of aerosol distributions (from an offline aerosol model) and an estimate of the aerosol indirect effect from the literature (-1 W/m2 pre-industrial to present). We did not go back and change this based on our transient temperature response in the 20th C simulations. For AR5, the model has changed significantly, the aerosol distribution as well, and yet in the configuration that requires an imposed aerosol indirect effect we are using the same magnitude as in AR4 (i.e. -1 W/m2 pre-industrial to present). No tuning is being done. We will also have configurations in which the indirect effect is calculated from first principles – this is going to give whatever it gives. And again, we are not going to tune it based on the transient temperature response. – gavin]”

I appreciate your response. While it helped clarify some issues in my mind, it raises a host of other questions that would be off-topic on this thread.

I think that it would be very helpful for RealClimate to post a discussion of Jeffrey T. Kiehl, Twentieth Century Climate Model Response and Climate Sensitivity, 34 Geo. Res. Lett. L22710 (2007), http://www.atmos.washington.edu/twiki/pub/Main/ClimateModelingClass/kiehl_2007GL031383.pdf , along with the related article, Stephen E. Schwartz, Robert J. Charlson and Henning Rodhe, Quantifying Climate Change – Too Rosy a Picture?, 2 Nature Reports: Climate Change 23 (2007). http://www.ecd.bnl.gov/pubs/BNL-78121-2007-JA.pdf

The way you describe incorporating estimates of historical forcing of aerosols sounds reasonable, but I am surprised that you are not aware of how other modelers are handling this issue. What do you think of Schwartz et al.’s recommendation that “A much more realistic assessment of present understanding would be gained by testing the models over the full range of forcings”? Is there any movement towards implementing this suggestion in the next IPCC report, or at least towards developing a standard set of historical forcings and evaluating models based upon them?

RE 372

Science Writer added this:

“…The phenomenon has happened before. Sunspots disappeared almost entirely between 1645 and 1715 during a period called the Maunder Minimum, which coincided with decades of lower-than-normal temperatures in Europe nicknamed the Little Ice Age…”

Full story at: http://news.sciencemag.org/sciencenow/2010/09/say-goodbye-to-sunspots.html

Gavin,

Thanks for your detailed reply and reference. I come from idealised modelling world, so when I see ‘imposed’ I read ‘specified’ ;-).

Your point is well taken – the inputs used must be appropriate for the level of physics within each model. However, your assertion that allowing more freedom in terms of inputs leads to a wider exploration of the phase space is at odds with Knutti’s conclusion, in which the correlation between aerosol forcing and climate sensitivity narrows the ensemble of 20th century runs.

Do you think we should adopt his interpretation of the 20th century runs as conditional on observations? If this is the case, we can’t really claim reproduction of the global mean 20th century temperature as any kind of prediction success. While it may be true that formal attribution studies do not rely on the global mean temperature history, the IPCC literature does give a lot of weight to these figures as showing model skill.

If we don’t adopt this view, we have to explain why the correlation between aerosol forcing and climate sensitivity exists. While you, and no doubt other modelling groups, are adamant they only use the climatology and seasonal cycle for tuning, is there no possibility that the 20th century change enters at some level? Most of these models have been around for a long time in one form or another, and thus the models’ ability to simulate the 20th century climate is known to those choosing aspects of the model to improve.

I would appreciate hearing your views on this issue.

[Response: The basic fact is that the 20th C trend does not constrain the total aerosol forcing or the climate sensitivity and the space of allowable combinations that provide basically the same temperature trend is large. Clearly we could create a set of model runs that mapped out the uncertainty in each independently and get a much wider range of 20th C trends (and indeed this has been done in simpler contexts). You can then ask what the projections are for the future given the actual temperature trend (plus or minus some tolerance), and you will get a range that is quite close to the range of AR4 projections (maybe a little wider). That is certainly a useful exercise. But the AR4 models were not created in such a fashion, and so thinking about them like that is not as useful. Each group strives to create the best simulation they can and therefore most of the models cluster around ‘best estimates’ of various quantities. But even then, some of the models didn’t use any aerosol indirect effects and have late 20th C trends that are significantly too warm. Nonetheless, the existence of a reasonable match to the observational trend in a specific GCM does not preclude the potential existence of another reasonable match with a different sensitivity and a different forcing. Thus you have to look at the models as plausible and consistent renditions of what happened, but that are not definitive. Widening the scope of variables, time periods, and complexity will give much better chances of constraining important facets of the projections. – gavin]
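The point that the 20th C trend does not pin down sensitivity and aerosol forcing separately (and the correlation Kiehl and Knutti describe) can be illustrated with a deliberately crude toy model in which warming responds instantly to total forcing. The forcing values, the observed warming and `F_2X` below are hypothetical placeholders, and the zero-lag assumption ignores ocean heat uptake entirely; the sketch only shows the shape of the trade-off, not any real model’s numbers.

```python
F_2X = 3.7  # assumed forcing for a doubling of CO2, in W/m^2


def aerosol_forcing_to_match(sensitivity, observed_warming, ghg_forcing, f2x=F_2X):
    """Aerosol forcing (W/m^2) that lets the toy model reproduce `observed_warming`.

    Toy model (an assumption, not how a GCM works): warming responds instantly
    to total forcing, warming = (sensitivity / f2x) * (ghg_forcing + aerosol_forcing).
    """
    total_needed = observed_warming * f2x / sensitivity
    return total_needed - ghg_forcing


def toy_warming(sensitivity, ghg_forcing, aerosol_forcing, f2x=F_2X):
    """Warming (deg C) from the same zero-lag toy relation."""
    return sensitivity / f2x * (ghg_forcing + aerosol_forcing)


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


if __name__ == "__main__":
    # Hypothetical inputs, chosen only for illustration.
    observed, ghg = 0.8, 3.0
    sensitivities = [2.0, 3.0, 4.0, 5.0]
    aerosols = [aerosol_forcing_to_match(s, observed, ghg) for s in sensitivities]
    for s, a in zip(sensitivities, aerosols):
        print(f"S = {s:.1f} C/doubling -> aerosol forcing {a:+.2f} W/m^2, "
              f"warming {toy_warming(s, ghg, a):.2f} C")
    print("correlation(S, aerosol forcing) =", round(pearson(sensitivities, aerosols), 3))
```

Every (sensitivity, aerosol forcing) pair reproduces the same warming, yet the required aerosol forcing becomes more negative as sensitivity rises, so the set shows a negative correlation even though nothing was tuned against anything else.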

Martin Singh (368) and Wally (369) (Anyone else as well)

If you want to understand how GCMs work, I would encourage you to look at my GoGCM project. My intent with this project is to try to show how these models are made, what assumptions go with them and what they can, and can’t, do.

http://gogcm.blogspot.com/

“Gavin is now attempting a bait and switch in his latest comment to pauld.”

“Anyway, the general point is that Gavin, and I, as well as others on this board would be better served if we depersonalized this debate.”

Indeed, “we” would. Personally, I think Gavin makes a major contribution toward that end by not responding to the first quoted comment. Many could profit by his refusal to take (perhaps unintended) slurs personally.

Oh, and “ad homs” are arguments of the form: “A is a bad person, therefore his argument is discredited.” Simple name-calling is not an ad hom, much less a statement (correct or not) that someone is “confused.”

Anne et al.,

I have become convinced, after many long conversations with Wally, that he is acutely sensitive to any criticism, and so becomes reactionary whenever anyone disagrees with him, even when the criticism is deserved, and that he is genuinely tone-deaf to his own online persona. He honestly does not know how he comes off.

The ‘you-are-not-in-my-head’ / ‘you-don’t-know-me’ response is also a motif with him; I have no idea why.

It is probably better not to engage in these kinds of conversations.

Hank @373

David @374

I believe both of you are in error.

According to the Science blog, it is actually global warming that is causing the sunspots to start to disappear.

Thanks to both of you for your help anyway. It pointed me in the right direction.

for those who didn’t click the link above:

GEOPHYSICAL RESEARCH LETTERS, VOL. 37, L05707, 5 PP., 2010

doi:10.1029/2010GL042710

On the effect of a new grand minimum of solar activity on the future climate on Earth

Georg Feulner, Stefan Rahmstorf

Potsdam Institute for Climate Impact Research, Potsdam, Germany

The current exceptionally long minimum of solar activity has led to the suggestion that the Sun might experience a new grand minimum in the next decades, a prolonged period of low activity similar to the Maunder minimum in the late 17th century. The Maunder minimum is connected to the Little Ice Age, a time of markedly lower temperatures, in particular in the Northern hemisphere. Here we use a coupled climate model to explore the effect of a 21st-century grand minimum on future global temperatures, finding a moderate temperature offset of no more than −0.3°C in the year 2100 relative to a scenario with solar activity similar to recent decades. This temperature decrease is much smaller than the warming expected from anthropogenic greenhouse gas emissions by the end of the century.

—-

Hank, Don – After a lot of screwing around here’s a copy of what I hope is my last post to the Morningstar forum:

Re: Re: Greenhouse effect vs Maunder Minimum | Post #2899434

I suggest that the reason it’s being reported now is as stated in the 3rd paragraph of the OP:

We are now in the onset of that next sunspot cycle, called Cycle 24 (these cycles typically last 11 years), and Livingston and Penn have this month published new, potentially ominous findings in a paper entitled Long-term Evolution of Sunspot Magnetic Fields: “we are now seeing far fewer sunspots than we saw in the preceding cycle; solar Cycle 24 is producing an anomalously low number of dark spots and pores,” they reported.

Don

You are cherry picking. Read further, the Science blog clearly states that “it is global warming that is causing the sunspots to disappear”.

Seriously now, it’s a bit late, but I just finished reading the entire Penn and Livingston paper. The paper presents evidence that magnetic field measurements can be used as a proxy for sunspot size and for sunspot intensity. The only “trends” they mention are in their statistical PDFs (probability distribution functions) correlating the magnetic field measurements to the spots’ sizes and intensities. It’s a solar physics instrumentation paper: good, careful work.

P&L reference only 2008 sunspot data, almost three years old. Nowhere do they even mention climate science or the Maunder Minimum. The Science reporter, Phil Berardelli, who should be canned for misrepresenting the P&L work, slipped that one past you. To put it simply, you’ve been had.

Read P&L.

John

Of course P&L’s work should be funded and continued. The biggest problem in astrophysics is meaningful data. Anyone willing to do peer reviewable tracking should be encouraged.

@377: So now we are going to keep repeating that it is better not to make personal attacks, while still making them, like you?

On topic:

Is there anybody on this thread who has been able to reproduce the results reported by ModelE?

[Response: Yes. ;) But what is in question here? The code is available, and as long as you have enough computer power (a laptop) you can run it. If you want replication of the basic results independent of modelE, look at the other GCMs. Perhaps you can be a little clearer about what you want? – gavin]

I should have been more clear.

Is there anybody in this thread [except Gavin and other keepers of RC] who has been able to reproduce [any of] the results reported by ModelE [concerning global temperatures, for years past 2010, like those on the page below]?

http://data.giss.nasa.gov/modelE/transient/dangerous.html

Example graph:

http://data.giss.nasa.gov/cgi-bin/cdrar/do_LTmapE.py

Wally 371: No more, “you’re confused”, “you’re wrong”, “you need to go learn some stats”.

BPL: We’re no longer allowed to tell anyone they’re wrong? I have news for you, Wally–sometimes people really are wrong. All viewpoints are NOT equally valid.

Thanks for #373 and #379, Hank–a denialist “regular” whom I encounter has pushed this line periodically in the past. My impression is that Livingston & Penn are presenting a reasonable case as astronomers studying solar activity, and that they are not responsible for the misuse of their conclusions by people such as Lawrence Solomon. Is that the case, or are they more similar to the Soon & Baliunas prototype? Here’s a report from NASA science news, which to me suggests the former:

http://science.nasa.gov/science-news/science-at-nasa/2009/03sep_sunspots/

Of course, the idea that a new solar minimum would result in Little Ice Age-like conditions is logical enough in a way, but ignores the obvious fact that climate can be affected by more than one factor at a given time. Ignoring AGW is logical enough, I suppose, if you don’t believe it exists–which is to say that the “new solar minimum” meme represents a denier position that at least has some internal consistency (by no means a given in that community.) But of course you can’t–successfully!–go so far as to use the assumption that AGW isn’t real as evidence that AGW isn’t real!

#377–I think you’re right, Waldo.

#378–“According to the Science blog, it is actually global warming that is causing the sunspots to start to disappear.”

Wow, that’s some feedback loop! ;-)

In case anyone wants to read another item about the rapid growth of renewable energy, here is one:

http://www.renewableenergyfocus.com/view/12482/solar-pv-shipments-to-exceed-16-gw-in-2010-but-tough-2011/

Two of my opinions: (1) 16 GW per year of new generating capacity is a truly non-negligible amount of new power generation; (2) although exponential growth cannot continue forever on a finite planet, doubling every 1–2 years (as is currently the case for biofuels, wind and solar) can continue for the next 20 years, as far as can be known now.
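The compounding behind a fixed doubling time is easy to check. This is a minimal sketch that only does the arithmetic: it takes the 16 GW/yr shipment figure from the linked article as a hypothetical starting point and assumes that annual figure itself keeps doubling every 1 or 2 years for 20 years. The growth factor after t years with doubling time d is 2^(t/d).

```python
# Compound growth implied by a fixed doubling time.
# START_GW_PER_YEAR takes the 16 GW/yr figure from the linked article as an
# assumed starting point; the 1- and 2-year doubling times are the range stated above.

START_GW_PER_YEAR = 16.0
YEARS = 20


def growth_factor(years, doubling_time):
    """Multiplicative growth after `years` at a constant doubling time."""
    return 2.0 ** (years / doubling_time)


if __name__ == "__main__":
    for d in (1.0, 2.0):
        factor = growth_factor(YEARS, d)
        print(f"doubling every {d:.0f} yr: x{factor:,.0f} after {YEARS} yr, "
              f"i.e. {START_GW_PER_YEAR * factor:,.0f} GW/yr if the annual rate keeps doubling")
```

Over 20 years, a 2-year doubling time multiplies the starting rate by 2^10 = 1024 and a 1-year doubling time by 2^20, about a million.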

No matter what you think about anything else, that will be a lot of new power generation by any standard.

John Peter says: 16 September 2010 at 11:23 PM

“According to the Science blog, it is actually global warming that is causing the sunspots to start to disappear.”

“the Science blog” John Peter refers to has to be Wattsup.

Congrats, JP, for the best Poe I’ve seen in quite a while.

Google finds that phrase and excerpts from the source, thus:

“NASA: Are Sunspots Disappearing? | Watts Up With That?

Sep 3, 2009 … This disappearing act is possible because sunspots are made of magnetism…. I expect the feedback effect of CO2 is causing a disturbance to the magnetic field and inevitably leading to the sun going out and the extinction of all life in the solar system. Possibly within days….”

Barton,

“We’re no longer allowed to tell anyone they’re wrong? I have news for you, Wally–sometimes people really are wrong. All viewpoints are NOT equally valid.”

I suppose I should have explained the “you’re wrong” example further. First, simply stating it doesn’t accomplish anything. You need to explain why, and then it will become obvious. What is often found here is a grand claim that someone is wrong or confused, followed by very little actual proof. Take Gavin’s example: he explained a concept that doesn’t apply to this situation, and after I explained why, he hasn’t responded. So, was Gavin confused about calling me confused? Better to just stick to facts and save the grand claims of who’s right, wrong, or confused.

Second, if all you do is say the equivalent of “you’re wrong, go read X,” you’re basically putting forth ridicule, because you need to establish what specifically in X you’re talking about and where and why it is useful in this discussion. Just telling someone to go read is not an argument; it’s an ad hominem.

[Response: Nonsense. If someone makes a claim that is based on false premises, telling them to go read some background is perfectly appropriate. You are not the first person to ask very similar questions, and so answers given in other cases are likely to be relevant. Indeed, the existence of this record is one of the boons of the internet since, hopefully, it allows for some progress in the discussion. Please note that my ability to engage in multiple conversations at all times is constrained by the existence of the rest of my life (including my day job). If you feel that you have a question I haven’t addressed, then repeat it (but leave out the attitude – it doesn’t increase my inclination to be responsive). – gavin]

Kevin,

>Oh, and “ad homs” are arguments of the form: “A is a bad person, therefore his argument is discredited.” Simple name-calling is not an ad hom, much less a statement (correct or not) that someone is “confused.”<

You don't need to have a strict "if A, then B" link between the insult or ridicule and the conclusion. Gavin attempted to add strength to his argument by casting this ridicule, in the first sentence no less. Further, ad hominems do not need to be strict insults. They can come in many forms, but the basic point is that they are about the author of the argument and not the argument itself. Thus, saying "you're X", where X = something bad, abusive or similar, is going to pretty much always be an ad hominem. Logically, there are ways to prove these kinds of statements, but generally they would then be red herrings to your original topic anyway.

[Response: Please stick to real issues. Endless debates about what is and is not ad hom and who is the most outraged are extremely tedious. – gavin]

Surely you’re joking, John Peter.

John Peter refers to the opening post of the (inverted) “discussion” thread accompanying the article @ Science: http://news.sciencemag.org/sciencenow/2010/09/say-goodbye-to-sunspots.html

The thread reads much like something from WUWT.

http://www.google.com/search?q=do_LTmapE

Nick, are you getting your material from old blog posts Google turns up searching on that string? It was apparently quite a popular topic on that side of the blogosphere for a while.

But did you check your second link?

It doesn’t find anything at the moment.

I’m referring to blogstuff mentioning that old link a few years ago.

The ridicolous 40% by 2020 campaign | Kiwiblog

Jul 20, 2009 … http://data.giss.nasa.gov/cgi-bin/cdrar/do_LTmapE.py.

http://www.kiwiblog.co.nz/

…

Tropical Troposphere « Climate Audit

Apr 26, 2008 … http://data.giss.nasa.gov/cgi-bin/cdrar/do_LTmapE.py

For folks who persist in trying to make computer models look silly by suggesting they break down at extreme values, while ignoring physically realistic limits, a comparison to experience with aircraft simulation might be instructive:

http://catless.ncl.ac.uk/Risks/26.16.html#subj12

“… a simulator cannot be known accurately to represent what would happen during unusual piloting rudder-reversal behavior because, well, until the accident nobody knew at what point airframe structure would fail (it turned out to be some one-third stronger than required by certification regulations)!”

…

Simulators do not necessarily accurately represent the behavior of aircraft close to the “edge” of their “flight envelope”, and they cannot be taken to do so for flight outside the envelope. Aerodynamicists study these “out of envelope” characteristics by use of wind tunnel models, but actual aircraft are not flown in flight test “out of envelope” except for certain restricted manoeuvres prescribed in the certification regulations …. For most “out of envelope” flight, aerodynamicists can make very well-educated guesses (from their wind-tunnel modeling) as to what might happen, but they are the first people to say that they are not at all certain. Nobody goes out to flight-test Boeing 747 aircraft in partially-inverted almost-vertical semi-spins, such as what happened to a China Air Lines Boeing 747 over the Pacific near San Francisco in 1985 …[link to details in original post] So there are limits to what simulators can achieve, and it is a matter for research how much “out of envelope” behavior can be usefully and veridically simulated.

_______________

And for global climate, we’re piloting our flight by committee(s).