We’ve been a little preoccupied recently, but there are some recent developments in the field of do-it-yourself climate science that are worth noting.

First off, the NOAA/BAMS “State of the Climate 2009” report arrived in mailboxes this week (it has been available online since July though). Each year this gets better and more useful for people tracking what is going on. And this year they have created a data portal for all the data appearing in the graphs, including a lot of data previously unavailable online. Well worth a visit.

Second, many of you will be aware that the UK Met Office is embarking on a bottom-up renovation of the surface temperature data sets including daily data and more extensive sources than have previously been available. Their website is surfacetemperatures.org, and they are canvassing input from the public until Sept 1 on their brand new blog. In related news, Ron Broberg has made a great deal of progress on a project to turn the much more extensive daily weather report data into a useful climate record. Something that the pros have been meaning to do for a while….

Third, we are a little late to the latest hockey stick party, but sometimes taking your time makes sense. Most of the reaction to the new McShane and Wyner paper so far has been more a demonstration of wishful thinking, rather than any careful examination of the paper or results (with some notable exceptions). Much of the technical discussion has not been very well informed for instance. However, the paper commendably comes with extensive supplementary info and code for all the figures and analysis (it’s not the final version though, so caveat lector). Most of it is in R which, while not the easiest to read language ever devised by mankind, is quite easy to run and mess around with (download it here).

The M&W paper introduces a number of new methods to do reconstructions and assess uncertainties, that haven’t previously been used in the climate literature. That’s not a bad thing of course, but it remains to be seen whether they are an improvement – and those tests have yet to be done. One set of their reconstructions uses the ‘Lasso’ algorithm, while the other reconstruction methods use variations on a principal component (PC) decomposition and simple ordinary least squares (OLS) regressions among the PCs (varying the number of PCs retained in the proxies or the target temperatures). The Lasso method is used a lot in the first part of the paper, but their fig. 14 doesn’t show clearly the actual Lasso reconstructions (though they are included in the background grey lines). So, as an example of the easy things one can look at, here is what the Lasso reconstructions actually gave:

‘Lasso’ methods in red and green over the same grey line background (using the 1000 AD network).

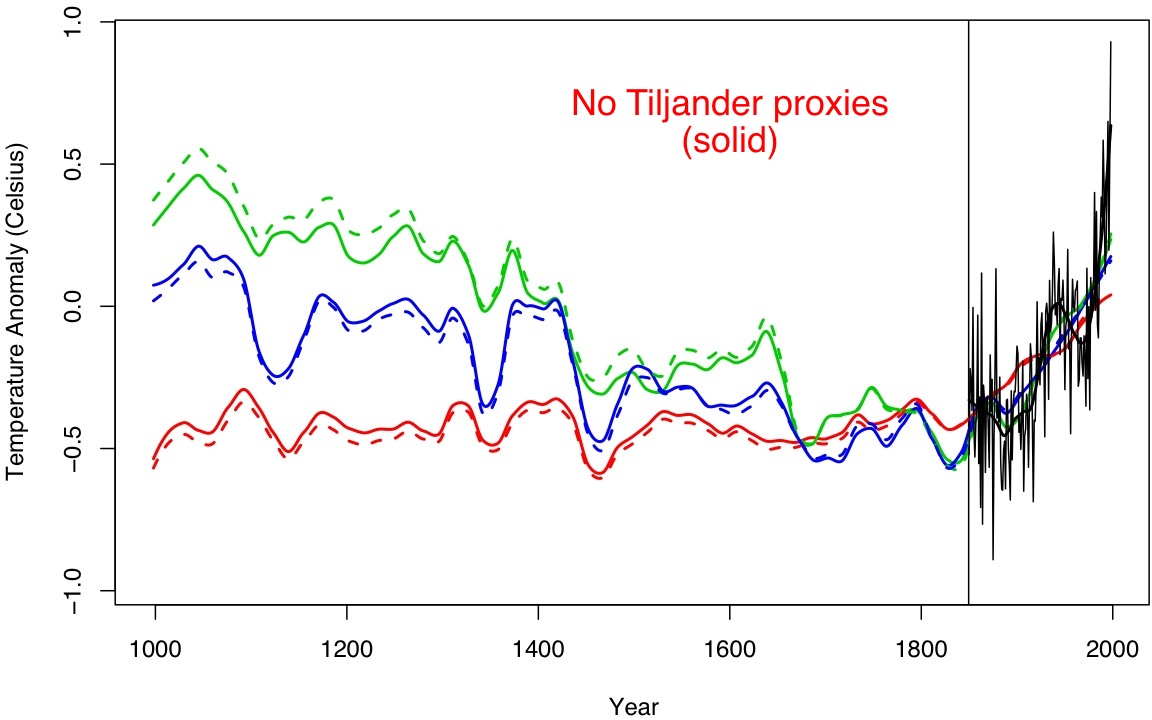
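For readers who have not met the Lasso before, here is a minimal sketch of the general technique in plain Python. It is not M&W's code (theirs is mostly in R), and the function names, the penalty values and the tiny "proxy" and "temperature" arrays are all made up for illustration. The idea is ordinary least squares plus an L1 penalty, which shrinks coefficients and sets some of them exactly to zero.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; return 0.0 inside the dead zone."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso_coordinate_descent(X, y, alpha, n_iter=200):
    """Minimise (1/2n) * ||y - X b||^2 + alpha * ||b||_1 by cyclic coordinate descent.

    X is a list of rows (one row per year, one column per proxy), y the target.
    No intercept is fitted, so columns and target are assumed to be centred.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0  # covariance of column j with the partial residual
            z = 0.0    # mean square of column j
            for i in range(n):
                others = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - others)
                z += X[i][j] ** 2
            rho /= n
            z /= n
            beta[j] = soft_threshold(rho, alpha) / z if z > 0 else 0.0
    return beta


# Hypothetical toy data: two "proxies", and a target that depends only on the first.
proxies = [[1, 0], [0, 1], [1, 1], [-1, 1], [2, -1]]
temperature = [2 * row[0] for row in proxies]

for alpha in (0.0, 0.5, 10.0):
    print(alpha, lasso_coordinate_descent(proxies, temperature, alpha))
```

With no penalty the fit recovers the plain least-squares answer; a moderate penalty shrinks the first coefficient and keeps the irrelevant one at zero; a large penalty zeroes everything. How the penalty is chosen matters a great deal in practice, and this sketch does not address that.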

It’s also easy to test a few sensitivities. People seem inordinately fond of obsessing over the Tiljander proxies (a set of four lake sediment records from Finland that have indications of non-climatic disturbances in recent centuries – two of which are used in M&W). So what happens if you leave them out?

No Tiljander (solid), original (dashed), loess smooth for clarity, for the three highlighted ‘OLS’ curves in the original figure.

… not much, but it’s curious that for the green curves (which show the OLS 10PC method used later in the Bayesian analysis) the reconstructed medieval period gets colder!

There’s lots more that can be done here (and almost certainly will be) though it will take a little time. In the meantime, consider the irony of the critics embracing a paper that contains the line “our model gives a 80% chance that [the last decade] was the warmest in the past thousand years”….

I tried posting this in the new thread. First try, I got Captcha problems. Second try, they told me it was a duplicate posting. I don’t know if it got in or not; thus my posting here. Apologies if this is a duplicate.

OT: Gavin, Mike, Ray, somebody who has published in peer reviewed journals–I really need someone to look over my paper and make sure I’m not making any stupid mistake that will get it rejected on the first pass. Tamino is very kindly reviewing the statistics for me, and it’s a largely statistical analysis paper. But I don’t want to get the climate science wrong. If you’re sick of hearing about this from me, let me know and I’ll shut up.

To Barton Paul Levenson @38: This paper has relevant recipes…

Rangaswamy, M., Weiner, D., and Ozturk, A., “Computer Generation of Correlated Non-Gaussian Radar Clutter,” IEEE Trans. on Aerospace and Electronic Systems, v.31, p.106-116 (1995).

Thanks, Chris.

This seems like the applicable thread to ask this question: I am having trouble finding details on the radiation models in the recently released Community Earth System Model (CESM). I am interested in both solar spectrum absorption and scattering (as a function of wavelength) and the flip-side infrared from earth. All I can find and download are users guides for the linked model. In the interest of “Doing it yourselves”, do you have any links or info that may help? Thanks.

Re: ~#193 Hank

Have you considered enhancing the transpiration ‘of’ your roof instead of its albedo?

http://www.guardian.co.uk/environment/2010/sep/02/green-roof-urban-heat-island

[Stuart Gaffin’s site is down now which is another reason why I cannot vouch for its effectiveness].

Bill McKibben, founder 350.org, author ‘Eaarth’, with David Letterman; on climate change and the 10/10/10 ‘Global Work Party’: http://bit.ly/McKLet (Youtube video)

This blog piece alleging the gulf oil spill has dramatically affected the North Atlantic current is doing the rounds. http://europebusines.blogspot.com/2010/08/special-post-life-on-this-earth-just.html Can we ignore this as largely unfounded speculation? This http://environmentalresearchweb.org/cws/article/news/43681 would suggest so.

Geoff, yes, I checked; a ‘green roof’ adds weight not allowed by code on old structures; rebuilding to support them isn’t in the code yet in our area (every old house has different issues; cheaper to tear down and rebuild completely).

Some white “cool” roofing plastic is the same stuff used as the base waterproof layer for green roofs, which might leave the option open for later structural improvement.

No good pure choices for roofs I can find. All have issues.

Speaking of “do-it-yourself,” there is a rather big brouhaha at the “Climate Skeptic” blogsite. One of the commentators, under the moniker of “Russ R,” has posted the following to demonstrate the statistical relevance of recent climate models. I have challenged him to peer-review his findings–which he believes are unique to climate science–but he has refused. I wonder if anyone at Real Climate could comment upon them?

This is the verbatim post; the complete trainwreck thread can be seen at the link above. Russ R posts:

“I’ve fed the UAH and GISS data into excel and done a little basic statistical analysis.

Based on IPCC (1990), we are testing whether the 1990-2010 data support a predicted temperature rise of 0.3 degrees per decade or 0.03 degrees per year.

UAH data (1990 – 2010)

Observations = 247

Slope = 0.01840195

Standard Error = 0.001953884

Ho: slope ≠ 0.03

T Stat = 5.935894464

GISS data (1990 – 2010)

Observations = 247

Slope = .019243

Standard Error = 0.001579303

Ho: slope ≠ 0.03

T Stat = 6.811077087

Turning to IPCC (1995), we are testing whether the 1995-2010 data support a predicted temperature rise of 2.5 degrees per century or 0.025 degrees per year.

UAH data (1995 – 2010)

Observations = 187

Slope = 0.013700253

Standard Error = 0.002937661

Ho: slope ≠ 0.025

T Stat = 3.84651187

GISS data (1990 – 2010)

Observations = 187

Slope = 0.016804263

Standard Error = 0.002198422

Ho: slope ≠ 0.025

T Stat = 3.728008917

“If I recall correctly, a t-stat greater than 1.96 indicated a significant difference at the 95% confidence level. But I’ll leave the interpretation of the above t-stats to Waldo.

“The raw data are here, for anyone who’d like to check my work.”

Russ R then corrects himself a few posts later.

“And after typing that last comment, I just caught one of my own mistakes.

In my statistical analysis for the IPCC (1995) predictions, I accidentally used a prediction of 2.5 degrees per century when I should have used 2.0 degrees per century for the null hypothesis. This was a misreading on my part and it changes the T-stats substantially. (This mistake did not impact the 1990 analysis.)

Correction follows for IPCC (1995):

UAH data (1995 – 2010)

Observations = 187

Slope = 0.013700253

Standard Error = 0.002937661

Ho: slope ≠ 0.020

T Stat = 2.144477

GISS data (1990 – 2010)

Observations = 187

Slope = 0.016804263

Standard Error = 0.002198422

Ho: slope ≠ 0.020

T Stat = 1.45365

Using the corrected T-stats, the P-values (two-tailed) are 0.03199 (UAH) and 0.14605 (GISS). Therefore, the UAH data would allow us to reject the IPCC (1995) predictions with more than 95% confidence. The GISS data show a difference with only ~85% confidence.”
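For anyone who wants to check the arithmetic in the quoted post, here is a minimal sketch in Python. It assumes the numbers were obtained by treating the predicted slope as the null value (that is, H0: slope = prediction, even though the post writes it with a ≠ sign) and by taking the two-tailed p-value from a normal approximation; the function name is made up for illustration. It only reproduces the calculation and says nothing about whether the ordinary least squares standard errors are appropriate for monthly temperature data.

```python
import math


def slope_t_test(observed_slope, standard_error, predicted_slope):
    """Return (t statistic, two-tailed p-value) for H0: true slope == predicted_slope.

    The p-value uses the normal approximation, which is close to the
    t distribution when there are a couple of hundred observations.
    """
    t_stat = (predicted_slope - observed_slope) / standard_error
    p_value = math.erfc(abs(t_stat) / math.sqrt(2.0))
    return t_stat, p_value


# Slopes and standard errors (deg C per year) as posted for 1995-2010,
# tested against 2.0 deg C per century = 0.020 deg C per year.
uah_t, uah_p = slope_t_test(0.013700253, 0.002937661, 0.020)
giss_t, giss_p = slope_t_test(0.016804263, 0.002198422, 0.020)

print(f"UAH:  t = {uah_t:.4f}, p = {uah_p:.5f}")
print(f"GISS: t = {giss_t:.4f}, p = {giss_p:.5f}")
```

Run as is, this agrees with the corrected T-stats and P-values in the post to the precision quoted there.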

Thanks.

Waldo @209 — Use statistical tests to determine how long an interval produces a result one can be confident of. For climate, 15 years might work but 20 years is safer.

Nothing of significance can be learned from a mere decade of data.

Waldo, given that the guy doesn’t even know how to formulate a proper null hypothesis, I think we can dispense with peer review and simply reject.

Thanks David and Ray. I hope you don’t mind if I cross-post your responses?

Waldo, this finds a good high-school level explanation with exercises for the student, written by someone who knows how to do this stuff professionally.

http://www.google.com/search?q=grumbine+how+detect+trends

Always provide the best most useful answer you can, even if the person asking won’t follow the pointer; some later reader may appreciate it.

It looks to me like someone hasn’t also considered the error bounds on what the IPCC models would predict for a 15-20 year trend. The IPCC prediction is invalidated when the 95% error bars on the observed trend don’t intersect the 95% bounds on the prediction.
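The overlap criterion described here can be sketched in a few lines of Python. The observed slopes and standard errors are the 1990-2010 values posted earlier in the thread; the prediction interval is the IPCC (1990) stated range of 0.2 to 0.5 deg C per decade, used here as a stand-in even though the report does not attach a 95% level to it. The function names are made up for illustration, and the standard errors are the plain regression ones, which assume independent residuals.

```python
def confidence_interval(estimate, standard_error, z=1.96):
    """Approximate 95% interval: estimate +/- z standard errors."""
    half_width = z * standard_error
    return (estimate - half_width, estimate + half_width)


def intervals_overlap(a, b):
    """True if closed intervals a = (lo, hi) and b = (lo, hi) share any point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Posted slopes are per year; multiply by 10 to get deg C per decade.
uah_ci = confidence_interval(0.01840195 * 10, 0.001953884 * 10)
giss_ci = confidence_interval(0.019243 * 10, 0.001579303 * 10)

# Assumption: the IPCC (1990) stated range stands in for a prediction interval.
ipcc_1990_range = (0.2, 0.5)

for name, ci in (("UAH", uah_ci), ("GISS", giss_ci)):
    print(name, tuple(round(v, 3) for v in ci), intervals_overlap(ci, ipcc_1990_range))
```

With these inputs the upper ends of both observed intervals reach a little above 0.2 deg C per decade, so under this criterion the two ranges do intersect.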

@ Waldo 7 September 2010 at 1:45 PM re Russ R “…demonstrate the statistical relevance of recent climate models …”

“For the mid-range IPCC emission scenario, IS92a, assuming the “best estimate” value of climate sensitivity[7] and including the effects of future increases in aerosol concentrations, models project an increase in global mean surface temperature relative to 1990 of about 2 deg C by 2100.” IPCC Second Assessment Climate Change 1995 – http://www.ipcc.ch/pdf/climate-changes-1995/ipcc-2nd-assessment/2nd-assessment-en.pdf

Over the period of his analysis – 1995-2010 – CO2 only increased by ~30ppm, from ~360 to 390+ ppmv, at current fossil C emissions of ~7-8 GT/yr. By 2100, under scenario IS92a anthropogenic C emissions will be ~20 GT/yr (see fig 2, p10 of the IPCC report). His extrapolation fails to account for this, and he’s making the same sort of inept (intentional?) mistakes as Monckton, demonstrating the statistical irrelevance of bad analyses.

One also might wonder why he’s choosing to attack the 1995 IPCC report instead of AR4 which came out in 2007; or why he chose 1990 and 1995 as start dates when data is available back to 1980?

Waldo@209 – Thanks for cross-posting the statistical results here at Real Climate. I’d appreciate having them checked out by anyone who’s got the know-how, time and interest.

To provide readers here with some context I’ll copy over my original concerns with the predictive accuracy of the warming projections made in IPCC (1990) and (1995):

“Regarding IPCC (1990), it predicted: “An average rate of increase of global mean temperature during the next century of about 0.3°C per decade (with an uncertainty range of 0.2—0.5°C per decade) assuming the IPCC Scenario A (Business-as-Usual) emissions of greenhouse gases;…” In the 20 years since then, the world has gone about “business as usual” regarding emissions, but actual warming has been only 0.184°C per decade (UAH) or 0.192°C per decade (GISS). Both observations are below the lower bound of uncertainty given by the prediction.

Moving on to IPCC (1995), they reduced their warming prediction by approximately a third compared to their 1990 estimate: “Temperature projections assuming the “best estimate” value of climate sensitivity, 2.5°C, (see Section D.2) are shown for the full set of IS92 scenarios in Figure 18. For IS92a the temperature increase by 2100 is about 2°C. Taking account of the range in the estimate of climate sensitivity (1.5 to 4.5°C) and the full set of IS92 emission scenarios, the models project an increase in global mean temperature of between 0.9 and 3.5°C”

Evidence from 1995 to date: emissions have been business as usual, but actual warming has been only 0.137°C per decade (UAH) or 0.168°C per decade (GISS).”

The data I used to test the projections are neatly compiled here: http://woodfortrees.org/data/uah/from:1990/plot/uah/from:1990/trend/plot/gistemp/from:1990/plot/gistemp/from:1990/trend

If you prefer, you can find original data sets here: http://vortex.nsstc.uah.edu/data/msu/t2lt/uahncdc.lt and here: http://data.giss.nasa.gov/gistemp/tabledata/GLB.Ts.txt

And Waldo was kind enough to post the statistical results above, so I won’t duplicate his efforts.

David @210: “Use statistical tests to determine how long an interval produces a result one can be confident of. For climate, 15 years might work but 20 years is safer. Nothing of significance can be learned from a mere decade of data.” Well David, there are 20 years of data to test the IPCC (1990) projections, and 15 years for the IPCC (1995) projections. The results show with 99.99…% confidence that the 1990 predictions were too high, and very good (>85%) confidence that the 1995 predictions were too high.

Ray @211: “…given that the guy doesn’t even know how to formulate a proper null hypothesis, I think we can dispense with peer review and simply reject.” It would be a more convincing rejection if you actually evaluated the statistical results on their merits and pointed out any errors or misinterpretations. Thanks.

Phil Scadden @214 – “It looks to me like someone hasn’t also considered the error bounds on what the IPCC models would predict for a 15-20 year trend.” I have never seen any 95% error bars on the IPCC’s reports, otherwise I would have happily considered them. I did take into consideration the IPCC (1990) stated range of uncertainty (0.2 – 0.5 deg C per decade). And the actual warming trend as measured by both GISS (0.192) and UAH (0.184) was outside that range.

Brian Dodge @215 – If I’m understanding your concern correctly, you’re saying the CO2 emissions in Scenario IS92a are back-end loaded, so the early years (1995-2010) should by definition see less warming than the average of 2.0 deg C per century? If so, that’s a fair point. Is there a more specific warming forecast that can be tested with the observations since the 1995 report was issued? Or do we have to wait until 2100 to validate the model?

“One also might wonder why he’s choosing to attack the 1995 IPCC report instead of AR4 which came out in 2007; or why he chose 1990 and 1995 as start dates when data is available back to 1980?” No need to wonder… I’ll explain as clearly as I can. I’m testing the 1990 and 1995 projections for exactly the reason specified by David B. Benson @210. “Nothing of significance can be learned from a mere decade of data”. And I chose 1990 and 1995 as start dates because I’m not interested in how well models can be tuned to replicate the past… the success of a model is based solely on how well it can predict the future.

Russ R., The statistics are inextricably related to the errors–which you utterly ignore. Look at the confidence intervals rather than the point estimates. Moreover, consider that the IPCC estimates are equilibrium values, and we probably have not achieved equilibrium on a timescale of a decade (oceans take ~30 years to reach equilibrium). I’m sorry, Russ, but you might want to look at the analysis that has been done (some of it on this very site) before claiming your Nobel prize.

Ray Ladbury @218,

No need to be snide… I’m not planning on flying to Norway any time soon.

You say I “utterly ignore” the errors. Not so. The IPCC (1990) warming projections gave a range of uncertainty, and in the 20 years since they were published, the warming trend has been below the lower bound of that range (See my comment #217 responding to Phil Scadden). IPCC (1995) gave a range of estimates that, by my reading, were the point estimates for the set of IS92 scenarios and a range of climate sensitivities, so are not “error bars” per se (See my comment #216). If you can point me toward where I could find the uncertainties around the 1995 projections it would be appreciated.

In regard to your “timescale of a decade” comment, I’m looking at 20 years of observed temperatures since IPCC (1990) and 15 years since IPCC (1995). If you’re arguing that it requires no less than 30 years of observations before a model’s predictions can be reliably tested, you’re saying that all of the models currently in use are not only untested, but are, in any practical sense, untestable.

Your argument around confidence intervals and equilibrium timescales is a double-edged sword. While these factors make it more difficult to reject a model, they also reduce the amount of confidence in the model’s predictions. At some point, wide uncertainties and long timescales render predictions untestable.

There’s a rather crude saying (which I believe originates in quantum physics) likening models which can’t be tested to toilets which can’t be flushed. I’ll leave the punch line to your imagination.

Joking aside, if you’re serious about the predictive ability of climate models (and not merely their ability to recreate the past), you should want to see model outputs rigorously tested and validated. If your argument is that I’m going about testing predictions in the wrong way, then please point me in the right direction. How would you propose testing model predictions of warming against observations? (And if it’s been discussed in a prior thread, please direct me there.)

“Waldo@209 – Thanks for cross-posting the statistical results here at Real Climate.”

You are welcome, Russ, I thought you’d like to have some experts look over your equations just in case you might have missed something along the way.

Ray, you dodged Russ’s request. If he’s to consider these “error bars,” he’d like you to point to what they actually are outside this .2-.5 degrees/decade which, if I recall correctly, doesn’t come with any specific confidence range in those older reports. Either way link to it, or at least explain it to us. Don’t just sit back and throw out vague criticisms. It’s completely unconstructive.

Further, you don’t test a model against the error bars, you test it against the most likely predicted result, then get the confidence that this most likely result is the same as the observed result. So your null hypothesis is a slope of whatever the average predicted warming was, i.e. .25 degrees/decade, then you take the observed values and get the derivatives, sum them, then test against your observed slope using a T test. This is how it is done. The specific model predictions for each year are not data, as much as you’d like them to be, so you don’t test each group of points like a normal T test. You certainly don’t test against the lower bound 95% CI, that would be double counting the error. If you did, then you’d basically be able to say X is within, for example, the 60% confidence interval of the 95% confidence interval of Y. Err, no, not how you do it.

Russ R. @219 — The climate exhibits multidecadal internal variability. This variability is not predictable in a deterministic sense. Crudely speaking, this means that the error bounds on predictions have to be fairly wide.

But even ultrasimple climate models offer some predictability. Here is mine:

https://www.realclimate.org/index.php/archives/2010/03/unforced-variations-3/comment-page-12/#comment-168530

Russ R 219,

Try here:

http://BartonPaulLevenson.com/ModelsReliable.html

Russ R.,

> so the early years (1995-2010) should by definition see less warming than

> the average of 2.0 deg C per century?

Not by definition, by model results, but yes. Have you looked at the projection you’re discussing? [1] It’s not a straight line. It curves up. And the estimate given is explicitly for 2100, not for 2010. It won’t do to take a straightedge ruler, draw a line from 1990 to 2100, and say the model fails if observations to date don’t have the same slope. The IPCC in 1995 did not project a linear warming and you cannot just divide 2.0 deg C by ten to get a 0.2 deg warming rate for the first decades.

(Incidentally — since the projection is a 2 deg rise from 1990 to 2100, [2] isn’t that eleven decades and 0.18 deg per decade, well within one standard error of the GISS observations?)

I think what you’d really want to do is take the SAR’s model output for 1990-2010 (or 1995-2010, which is very short, but whatever), calculate a linear trend for that period, and test that against the trend in the observations (with uncertainties). For inspiration, look e.g. here:

https://www.realclimate.org/index.php/archives/2009/12/updates-to-model-data-comparisons/

Now, I don’t know if the model output data is online (anyone got a link?), so I don’t have a least squares fit for you. But perhaps we can get some use out of that straightedge ruler after all: You can get an idea of the 1990-2010 model trend by taking the graph of the projection [1], printing it out, and drawing a line from the origin through the graph at year 2010. If you prefer to draw the line through the graph at 1995 and 2010, go ahead, same difference. What do you get? I got a line crossing 2100 at about 1.43 deg C, so I figure we’re looking at a projection of about 0.14 deg per early decade, which seems almost complacent compared to what GISTEMP is showing.

Or 0.13 deg/decade, really, if we’re dividing by eleven decades. Uh, what was the UAH observed trend again?

:-)

Notes:

[1] IPCC SAR WG1, p. 322, fig. 6.21 (the solid “all” line). All you climate science memorabilia buffs out there, take note: the IPCC has finally got the full FAR and SAR digitized and online (http://www.ipcc.ch/publications_and_data/publications_and_data_reports.htm). Don’t try this over dial-up, though, the SAR WG1 report alone is 51 MB.

[2] Just to be clear, we’re talking about the SAR’s projection for the then mid-range IPCC emission scenario (IS92a), assuming the “best estimate” value of climate sensitivity (then 2.5 deg C) and including the effects of future increases in aerosol, using a simple upwelling diffusion-energy balance climate model.
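The back-of-the-envelope arithmetic in this comment is easy to reproduce. A minimal Python sketch follows, using the 2 deg C rise from 1990 to 2100, the 1.43 deg C ruler estimate read off the graph, and the 1995-2010 GISS and UAH slopes posted earlier in the thread. The variable names are made up for illustration, and the ruler reading is the commenter's own eyeball value, not model output.

```python
# SAR IS92a "best estimate": about 2 deg C between 1990 and 2100.
projected_rise = 2.0
decades = (2100 - 1990) / 10            # eleven decades, not ten
average_rate = projected_rise / decades  # deg C per decade over the whole period

# Posted 1995-2010 observed trends, converted from per year to per decade.
giss_trend, giss_se = 0.016804263 * 10, 0.002198422 * 10
uah_trend = 0.013700253 * 10

# Is the eleven-decade average within one standard error of the GISS trend?
within_one_se = abs(average_rate - giss_trend) <= giss_se

# Ruler estimate: a straight line through the early part of the curve
# crosses 2100 at about 1.43 deg C.
ruler_2100 = 1.43
early_rate_ten = ruler_2100 / 10
early_rate_eleven = ruler_2100 / decades

print(f"average rate 1990-2100: {average_rate:.2f} deg C/decade")
print(f"within one SE of GISS ({giss_trend:.3f} +/- {giss_se:.3f}): {within_one_se}")
print(f"early-decade rate: {early_rate_ten:.2f} (/10) or {early_rate_eleven:.2f} (/11)")
print(f"UAH observed trend: {uah_trend:.3f} deg C/decade")
```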

Wally, You and Russ can find the answers here, among other places on this very site:

https://www.realclimate.org/index.php/archives/2008/05/what-the-ipcc-models-really-say/

https://www.realclimate.org/index.php/archives/2009/12/updates-to-model-data-comparisons/

The fact Russ is declaring the models dead without having even done a 5 minute search suggests he isn’t really serious. This isn’t that hard.

Ray,

“The fact Russ is declaring the models dead without having even done a 5 minute search suggests he isn’t really serious. This isn’t that hard.”

Please, drop this kind of BS. It’s nothing but ad hominem appeal to motives. Stay on topic.

Second, those links don’t answer any of the issues brought up. One is talking about AR4, which is 2007 right? That report is not being discussed here, it’s far too early to test it. Then the next talks about Hansen 1988, but it doesn’t TEST it at all. It simply draws a couple lines on a graph. No statistical test was used AT ALL. The author drew on the graph and proclaimed something “reasonable.” Sorry, no sale.

Maybe you think this stuff is easier than it is?

Russ R – the point you are trying to make has been discussed often before. As a starting point, look at fig 1 in:

global cooling wanna bet

This is a model output which shows that decade of slow warming is completely consistent with the physics. At decadal scale there is too much internal variability in the climate system to be making predictions.

More discussion on this can be found in:

What the ipcc models really say and

decadal predictions

CM,

“It’s not a straight line. It curves up. And the estimate given is explicitly for 2100, not for 2010. It won’t do to take a straightedge ruler, draw a line from 1990 to 2100, and say the model fails if observations to date don’t have the same slope. The IPCC in 1995 did not project a linear warming and you cannot just divide 2.0 deg C by ten to get a 0.2 deg warming rate for the first decades. ”

The 1990 result was linear, or at least very close to it; the scanned-in pdf is not wonderfully clear.

“I got a line crossing 2100 at about 1.43 deg C, so I figure we’re looking at a projection of about 0.14 deg per early decade, which seems almost complacent compared to what GISTEMP is showing.”

I don’t think there is a lot of validity to testing a model through roughly 10% of the predicted time scale, if the predictions drastically change in the next 90%. For instance if you look at figure 18 in IPCC 1995, all the predictions are basically the same through at least 2020. So our models are not discernible after at least 25 years, though they don’t truly diverge from each other until 2050 to 2060.

So, I think what we’re coming to here is that IPCC 1990 sucks, and everything after that is untestable for at least the next 20 or 30 years. And people wonder why we’re skeptical of doing all that is proposed to save us from this disaster?

Wally, the subject is internal variability, and those two posts are quite relevant. The fact of the matter is that the warming seen to date is quite consistent with predictions. Do you have any idea how tiring it is to have neophytes/trolls (one cannot tell the difference) come on here every 2 months or so claiming to disprove climate change based on a very simple, WRONG statistical analysis? And the mistakes are always the same.

I suggest both of you pay a visit to Tamino’s and READ.

Russ R.,

The models are testable. They reproduce the overall behavior of Earth’s climate quite well over millennia. They reproduce regional patterns fairly convincingly. And I am sorry if you don’t like the fact that climate takes decades to settle. Don’t blame me. Take it up with reality.

I would also say that estimating 95% confidence limit model predictions for a 1-2 decade period is difficult to say the least. It is my understanding that so far we don’t have remotely enough computer power to explore the possible space for this level of internal variability. It is only on longer scales that climate trends in response to particular forcing scenarios can be made with any confidence.

> take a straightedge ruler, draw a line from 1990 to

> 2100, and say the model fails

That’s

https://www.realclimate.org/index.php/archives/2010/08/monckton-makes-it-up/

Ray,

Well my post to CM that is still awaiting moderation, despite lacking anything that could even remotely be considered abusive, may have helped here, but I suppose I might as well attempt to restate some of those things to both specifically address what little you brought up and to maybe get through this apparently biased filter (which is famous around the climate-blogosphere, though I’ve never run into it myself maybe until now).

Anyway, yes of course the issue is variability, but you had better expand on what you think to be “internal variability.” Are you referring to the variability of the actual climate or of the models, or both? I assume because you’re likely referring to the first figure from that “what the ipcc models really say” article that you’re mostly talking about the models’ internal variability. Well, that I have little use for. The only reason you have variability in a model is because your model lacks confidence in trying to account for what we think of as a chaotic system and you need many runs (think simulations of a baseball season or playoffs). This doesn’t really matter when it comes time to test your model, because your model has essentially turned into the pooled set of model runs. It is no longer any individual run. And like I said before, you don’t really care about the model’s internal error when it comes time to test it against reality. You don’t essentially get to double count error and say something like well this is within 95% of the 95th percentile run. You want to know how it did against the average of all the model runs, because, as I said, this is essentially your model now.

“The fact of the matter is that the warming seen to date is quite consistent with predictions.”

Prove it, or give me a link that actually tests this statistically instead of just drawing lines and calling it “reasonable” as Gavin, if I recall correctly, did.

“Do you have any idea how tiring it is to have neophytes/trolls (one cannot tell the difference) come on here every 2 months or so claiming to disprove climate change based on a very simple, WRONG statistical analysis?”

I love how you attempt to sneakily stick in a little ad hominem attack while playing the victim card here. I don’t care if you’re tired, more to the point, it doesn’t matter in establishing what’s right or wrong. If you’re so tired, too tired to adequately explain your position or levy any reasonable criticism to help us reach the truth of this matter, why do you bother commenting? I was around reading and commenting on some of those threads, I guess the problem here is that you (which I mean both specifically and generally) can’t actually convincingly show us what’s wrong, much less what’s actually right.

“I suggest both of you pay a visit to Tamino’s and READ.”

Please, stop talking down to me, and Russ for that matter; to the unbiased, undecided reader you just make your argument look weak. I’ll be happy to read something specific you think makes your case, but telling me I need some sort of general refresher is nothing short of attempting to make an argument out of an insult. Just stop. That doesn’t work out here in what you refer to as “reality” in that post to Russ.

Wally, there is no getting around the 30 year wait for some kinds of prediction. You want some long term validation? How about

Broecker

Okay, based on an incredibly primitive model and frankly lucky. However, models make MANY predictions that you can validate. BPL’s link earlier (Model prediction) has a good list. You really want to wait another 30 years before you do anything? Suppose they were right?

Wow… I’ve got some catching up to do:

David B. Benson @222 – Very nice work there. What I like most is that you state a clear prediction (with a range of uncertainty) for a specific time period (2010s: 0.686 +- 0.024 K), such that anyone can easily look at it in 2020 for validation. I only wish all the projections out there were so straightforward.

Barton Paul Levenson @223 – That’s quite the reading list… and after skimming through I haven’t found anything I disagree with. However, I notice Hansen (1988) isn’t on your list of model predictions that have been confirmed.

CM @224 – Thanks for your critique, most useful thing I’ve read all day… Very much appreciated. I’ll correct my work and update the results here. I’ll first have a crack (below) at statistically testing Hansen (1988) since I’ve finally found the model output data (though I haven’t seen any error ranges).

Ray @225 & Wally @226 – I’ve already seen lots of squiggly lines on charts presented as evidence either supporting or refuting model predictions. I’m simply trying to apply some basic statistics to come up with an objective evaluation of my own, without cherry picking, distorting, or otherwise trying to bias the conclusion. My hypothesis is that certain predictions were too high, but if the data say they’re good, I’ll happily accept what the data say. And I appreciate honest input and guidance if I’m doing something incorrectly.

So, to test Hansen (1988), I’m using the model output data from here: https://www.realclimate.org/data/scen_ABC_temp.data

If anyone can point me to uncertainty values, it would be appreciated.

I’ve seen plenty of back and forth debate over whether Scenario A or B is more appropriate. I’m in no position to choose one over the other, so I’ll test them both (I’ll throw Scenario C in as well, since the data are already in the table).

Using a start year of 1988 and an end year of 2010, I get the following best fit linear regression slope coefficients (deg C / year):

          Scenario_A     Scenario_B     Scenario_C
Slope     0.029844862    0.027396245    0.019274704

I’ll test those against the following GISTEMP and UAH observations from 1988 to present (up to July 2010) available here: http://woodfortrees.org/data/uah/from:1988/to:2010.58/plot/gistemp/from:1988/to:2010.58

I’ve noticed that some evaluations of Hansen (1988) use 1984 as a start date. I’m choosing not to follow that approach, instead only testing predictions against future observations.

Here are the results:

SUMMARY OUTPUT (UAH)

Observations      271
Slope             0.017105791
Standard Error    0.001694254

          Scenario_A     Scenario_B     Scenario_C
Ho:       0.029844862    0.027396245    0.019274704
T Stat    7.518986439    6.073738521    1.280157957
P value   5.52891E-14    1.24966E-09    0.200489589

SUMMARY OUTPUT (GISTEMP)

Observations      271
Slope             0.018145974
Standard Error    0.00137222

          Scenario_A     Scenario_B     Scenario_C
Ho:       0.029844862    0.027396245    0.019274704
T Stat    8.525519713    6.741099703    0.822557306
P value   0              1.57192E-11    0.410759786

Would appreciate any thoughts, guidance, etc.
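For anyone who wants to check the arithmetic, here is a minimal Python sketch that recomputes the test statistics from the slopes and standard errors posted above. It assumes the P values came from a two-sided test against the standard normal distribution (that assumption reproduces the posted figures; with about 270 points a t distribution would give nearly the same answer). The function name `slope_z_test` is made up for illustration, and the standard errors are taken as given, so this checks the arithmetic only, not whether those errors are appropriate.

```python
import math

def slope_z_test(observed_slope, standard_error, hypothesized_slope):
    """Return (test statistic, two-sided p-value) for H0: true slope == hypothesized_slope."""
    z = (hypothesized_slope - observed_slope) / standard_error
    # Two-sided normal tail probability: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p

# Model slopes (deg C / year), copied from the table above.
SCENARIOS = {"A": 0.029844862, "B": 0.027396245, "C": 0.019274704}

# Observed slope and its standard error (deg C / year), copied from the summaries above.
OBSERVED = {
    "UAH": (0.017105791, 0.001694254),
    "GISTEMP": (0.018145974, 0.00137222),
}

for dataset, (slope, se) in OBSERVED.items():
    for scenario, h0 in SCENARIOS.items():
        z, p = slope_z_test(slope, se, h0)
        print(f"{dataset:8s} Scenario {scenario}: stat = {z:.4f}, p = {p:.3e}")
```

Using `erfc` rather than `1 - CDF` keeps very small tail probabilities from rounding to zero, which may be why the spreadsheet shows a P value of exactly 0 for GISTEMP Scenario A.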

> Tamino’s

Site search is a useful tool; try these for some places to begin:

http://www.google.com/search?q=site:tamino.wordpress.com/+Hansen+prediction

@236: I tried the first six or so links in the search and they return 404. Using the search box on the site for “hansen” comes up empty. Could you please post links to exact posts?

Phil,

I know there is no way to get around the 30 year wait (or more). This is not good or bad, it’s just “reality” as Ray so kindly points out. But just because it’s difficult or takes a lot of time to really gain knowledge or confidence in a theory does not mean we can simply settle for less confidence before we drastically alter our lifestyle so as to avoid some dubiously predicted catastrophe.

Yes, we all know raising the GMT by 4 degrees or more over 100 years or less is going to have some adverse effects. Yes, we also know that we should probably expect at least some warming (say 0-2 degrees/100 years). What we disagree about is the confidence surrounding how much warming there will be and what that means we should do. So you ask, suppose they are right? Well, even if they are right, I still don’t think we understand the Earth’s climate well enough to know if over the whole this “catastrophic” warming will even be bad. Sure, some people will likely be hurt in a myriad of ways, but it would be completely naive not to also study and consider possible benefits. Yet I don’t think very many people are looking at such things, do you?

So, ok, let’s suppose they are right, despite the lack of any convincing proof that they are. Now convince me I need to do something about it.

> Tamino’s

Ah, that blog had some WordPress problems; older topics may be missing.

I don’t have the time to find you individual posts, and blog posts aren’t going to answer basic questions without some background; most of us here are just readers like yourself, not professional searchers or reference librarians.

Try the “Start Here” link above, or http://www.google.com/search?q=site:realclimate.org+Hansen+predictions, or

http://www.google.com/search?q=site:skepticalscience.com+Hansen+predictions

Most of this assumes you’ve had statistics 101. If you haven’t, Robert Grumbine will help, and invites questions.

http://moregrumbinescience.blogspot.com/2009/01/results-on-deciding-trends.html

Ray : “Russ R., The statistics are inextricably related to the errors–”

True, but the specific problem is the following: in order to prove a theory, you have to test definite predictions that are substantially different from those of other theories. Consider for example the problem of general relativity. The 43″/century precession of the perihelion of Mercury was successfully explained by GR, but it wasn’t a definite proof because it could be obtained from a number of other causes (actually ANY deviation from a 1/r² law produces a precession!) that could possibly be ill known and underestimated (a possible ellipticity of the sun, for instance). BUT the deviation of light from stars close to the sun during the eclipse in 1919 was definitely, beyond any doubt, twice the classical Newtonian result, and there was absolutely no hope of explaining it except by relativistic effects. Proving a theory “beyond any doubt” requires exactly that – actually for me *means* exactly that: exhibiting a definite observational result that is really impossible to explain without the theory at stake (same for all “classical” great experiments like Michelson-Morley, Davisson and Germer, etc.).
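To put numbers on the “twice the Newtonian result” point, here is a small Python sketch using the standard textbook expressions for light grazing the sun: a deflection of 4GM/(c²R) in general relativity against 2GM/(c²R) for the Newtonian corpuscular estimate. The constants are nominal values and the function name is made up for illustration; it says nothing about the measurement uncertainties of the 1919 observations.

```python
import math

GM_SUN = 1.32712440018e20   # m^3 / s^2, solar gravitational parameter (nominal)
C = 299_792_458.0           # m / s, speed of light
R_SUN = 6.957e8             # m, nominal solar radius

RAD_TO_ARCSEC = math.degrees(1.0) * 3600.0

def deflection_arcsec(impact_parameter_m, relativistic=True):
    """Deflection angle (arcseconds) of a light ray passing the sun at the given distance."""
    factor = 4.0 if relativistic else 2.0   # GR predicts twice the Newtonian value
    return factor * GM_SUN / (C ** 2 * impact_parameter_m) * RAD_TO_ARCSEC

gr = deflection_arcsec(R_SUN, relativistic=True)
newtonian = deflection_arcsec(R_SUN, relativistic=False)
print(f"GR: {gr:.3f} arcsec, Newtonian: {newtonian:.3f} arcsec")
```

For a ray grazing the solar limb this comes out at roughly 1.75 arcseconds for GR and about half that for the Newtonian estimate.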

I don’t blame climate science for uncertainties: I just don’t understand very well how one can both recognize the uncertainties AND claim that science is proved “beyond any reasonable doubts” – and put the blame on those who question these uncertainties.

Oh, and if you search for a few minutes you’ll find this sort of help:

http://eesc.columbia.edu/courses/ees/climate/labs/globaltemp/index.html

Gilles: “I just don’t understand very well how one can both recognize the uncertainties AND claim that science is proved “beyond any reasonable doubts” – and put the blame on those who question these uncertainties.”

Very simple.

1)The models have been around since the late 70s and predicted the trend reasonably well for the level of maturity they had at the time. These are dynamical, not statistical models, so if they had been substantively wrong, you would have expected large errors by now.

2)The models predict MANY things in addition to temperature.

3)The trend thus far is consistent with the predicted trend modulo the inherent internal variability of the climate system.

Perhaps a visit to Barton’s page would be in order, Gilles.

http://www.bartonpaullevenson.com/ModelsReliable.html

And then maybe you can explain to us why you guys want the models to be wrong so badly that you can’t even wait for the required period to assess the prediction–after all, if the models are wrong, it doesn’t make the reality of climate change go away.

@239: I am sorry, that’s not helpful. You posted a link that turned out to be dead. If you don’t have time to search to where that material is now, that’s OK, but please don’t suggest that someone might not know how to use Google or might not have had statistics 101. That doesn’t win you any favors.

Russ,

My question is why are you concentrating on only a single quantity–temperature–despite the fact that

1)the time series is too short

2)you don’t have good estimates for the uncertainties (they are provided in one of the references I gave)

3)temperature is a particularly noisy variable

4)we don’t know how much warming is in the pipeline before the system returns to equilibrium

There is also the question of what, precisely, you intend to do with this info. Let’s say you find significant disagreement (you won’t). Does that mean that the models are substantively wrong? Perhaps. However, it could also imply 1)more warming in the pipeline, 2)the models do not include all the global dimming we are seeing (and this is a transient effect, probably due to aerosols from fossil fuel consumption in India, China, etc.).

I really think that your time would be better spent learning the science rather than trying to assess predictions you don’t understand.

Wally 238: I still don’t think we understand the Earth’s climate well enough to know if over the whole this “catastrophic” warming will even be bad.

BPL: How does “harvests failing around the world due to massive increases in drought” grab you?

Gilles

You know that the basics are way beyond doubt – the physical properties of gases being the central issue. After that there are observations of many kinds in dozens of fields, and they go together to show a coherent picture. That picture is consistent with the underlying physics.

Uncertainties? Absolutely. But they only show gaps in the jigsaw, they don’t change the overall picture.

Science is not a house of cards. Even if one or several lines of evidence need adjustment or replacement, the structure will not fail. The only killer item is the foundation in basic physics, and overturning that is Nobel Prize material. Even then, there will still be all the other lines of investigation and evidence which will need a new basis to align around. Take out the underlying physics and you then have to explain everything from lasers to the climate temperature record and sea level rise on the new basis.

Climate disruption is the big picture on the box. We’ve pretty well got the corners right and the edges look pretty good. The fact that we can’t yet see the pieces to complete a few patches in the picture we’ve so far put together does not mean that the boats in the foreground are upside down or that the horizon should be beneath the waterline. The picture is coming together and even if we get frustrated that we can’t see how to complete a house or a tree in the background it does not mean that we should chuck it all back in the box and start again.

Uncertainties do not change or challenge the overall picture, they just tell us where to do the next lot of work.

Russ (#235),

You’re welcome. But there are more pitfalls for the unwary. Before you rush back to Excel to do more tests, I’d like to suggest you read and digest some of the links people have given above, plus the FAQ and other posts here:

https://www.realclimate.org/index.php/archives/2004/12/index/#ClimateModelling

Among other things, you may want to read up on what model “tuning” actually involves, since you appear to think that the models are tinkered with until they match the past temperature trend.

As for testing Hansen ’88, the link I provided already covers that (see also discussion of the limitations of the model, scenarios, and different temperature records in this earlier post.)

Wally (#228),

> I don’t think there is a lot of validity to testing a model through

> roughly 10% of the predicted time scale

Well, the elapsed time in empirically observable reality is what we have to work with. Feel free to suggest a better way to test the projection that does not involve time travel, psychic powers, or substituting a straight line of your own fancy for the model projection.

The state of the art has moved on a bit since the 1990 projection, done with a simple model before aerosol forcing was taken properly into account. Still, if you compare with a “no warming” null, even the 1990 projection doesn’t half suck so far.

And yes, I do wonder why you are “skeptical” about addressing a risk just because it’s poorly bounded. Poorly bounded risk is not a happy thought.

Ray Ladbury – Please stop assuming my intent. I don’t “want the models to be wrong”. I want to objectively assess how well some widely publicized models have predicted warming over the longest time period available. I don’t have any preferred outcome. The facts are what they are.

“why are you concentrating on only a single quantity–temperature–despite the fact that

1)the time series is too short

2)you don’t have good estimates for the uncertainties (they are provided in one of the references I gave)

3)temperature is a particularly noisy variable

4)we don’t know how much warming is in the pipeline before the system returns to equilibrium”

1) The time series wasn’t too short to evaluate the Hansen (1988) model in the links you provided me. How is it too short now, even with another 2-3 years of added observations?

2) I saw mentions of uncertainty in one of the links you gave (+/-0.05 and +/-0.06 deg C/decade), but I couldn’t trace them back to the original data, which is why I asked. (FYI, the slope of both data sets is well outside this range of uncertainty.)

3) Yes, temperature is a noisy variable, which is why I’m looking at the oldest predictions. Again, you seemed happy with the use of temperature data to support the model in the links you sent.

4) How do you even know whether the current temperature is above or below equilibrium? Why assume that warming is in the pipeline and not cooling? Transitioning from an El Nino to a La Nina would suggest cooling lies ahead, right?

“There is also the question of what, precisely, you intend to do with this info.” I intend to use what the data say to better understand the reliability of climate projections. Wouldn’t you want to do the same? And at present, the data are saying that the Hansen (1988) temperature projections for both Scenarios A and B are significantly higher than the subsequent warming trend in the 22.5 years since they were published. The same can be said for the IPCC (1990) temperature projection and the 20 years of available temperature data. IPCC (1995) is looking much more reliable, based on CM’s analysis above (comment #224). Yes, all of these assessments may change if temperatures move sharply in the future.

“Let’s say you find significant disagreement (you won’t).” Actually, I did. Take a look at the T-stats I posted above which for Scenarios A and B range from 6.1 to 8.5. Wouldn’t you call those significant?

“Does that mean that the models are substantively wrong? Perhaps.” No, it doesn’t mean the models are wrong, at least not structurally. But it does suggest that some factors may be poorly understood, with incorrect values assumed. It could also mean that some structural elements are not factored into the models.

“However, it could also imply 1)more warming in the pipeline, 2)the models do not include all the global dimming we are seeing (and this is a transient effect, probably due to aerosols from fossil fuel consumption in India, China, etc.).” You’ll need to build a case for point 1 as to why there might be “warming in the pipeline”. However, I agree with you on point 2. The models don’t factor in everything… they are simplifications. Testing them from time to time is the only way to see how well they predict the future.

Barton,

“How does “harvests failing around the world due to massive increases in drought” grab you?”

Maybe I did not make myself entirely clear. I won’t be scared into believing you. You need to post a large and exhaustive set of studies on how climate change will affect basically everything. Grabbing one headline, without a source, is a meaningless scare tactic. Feel free to try again though.

Russ R #235: I get for your GISTemp data set, using ordinary least-squares with some software I had lying around:

The agreement seems to suggest that you are ignoring the autocorrelation present (right?), which is significant for monthly data and should be accounted for.
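One common way to account for this, sketched below in Python, is to estimate the lag-1 autocorrelation r1 of the regression residuals and replace the sample size n with an effective sample size n_eff = n(1 - r1)/(1 + r1) when computing the standard error of the slope. This is only a rough illustration under an assumed AR(1) noise model, not the method used by anyone in this thread; all function names are made up, and `synthetic_series` generates invented data purely so the sketch can be run. Slopes are per time step, so multiply by 12 to get per-year values from monthly data.

```python
import math
import random

def ols_trend(y):
    """OLS fit of y against t = 0..n-1. Returns (slope, intercept, residuals, sxx)."""
    n = len(y)
    t_mean = (n - 1) / 2.0
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    resid = [v - (intercept + slope * t) for t, v in enumerate(y)]
    return slope, intercept, resid, sxx

def lag1_autocorr(resid):
    """Lag-1 autocorrelation coefficient of a residual series."""
    mean = sum(resid) / len(resid)
    d = [r - mean for r in resid]
    return sum(a * b for a, b in zip(d[:-1], d[1:])) / sum(a * a for a in d)

def trend_standard_errors(y):
    """Return (slope, naive SE, AR(1)-adjusted SE, r1, effective sample size)."""
    n = len(y)
    slope, _, resid, sxx = ols_trend(y)
    sse = sum(r * r for r in resid)
    se_naive = math.sqrt(sse / (n - 2) / sxx)
    r1 = lag1_autocorr(resid)
    n_eff = n * (1.0 - r1) / (1.0 + r1)
    if n_eff <= 2:
        raise ValueError("effective sample size too small for a trend estimate")
    se_adjusted = math.sqrt(sse / (n_eff - 2) / sxx)
    return slope, se_naive, se_adjusted, r1, n_eff

def synthetic_series(n, slope, phi, sigma, seed=0):
    """Invented test data: linear trend plus AR(1) noise with coefficient phi."""
    rng = random.Random(seed)
    noise, out = 0.0, []
    for t in range(n):
        noise = phi * noise + rng.gauss(0.0, sigma)
        out.append(slope * t + noise)
    return out

# Demo on made-up monthly data (not real temperature observations).
demo = synthetic_series(271, slope=0.0015, phi=0.6, sigma=0.1, seed=1)
slope, se_naive, se_adj, r1, n_eff = trend_standard_errors(demo)
print(f"slope/yr = {12 * slope:.4f}, naive SE/yr = {12 * se_naive:.4f}, "
      f"adjusted SE/yr = {12 * se_adj:.4f}, r1 = {r1:.2f}, n_eff = {n_eff:.0f}")
```

With positively autocorrelated residuals the adjusted standard error is larger than the naive one, so any test statistic built on it shrinks accordingly.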

On a more general note, the test you are doing (the most appropriate one being against the B scenario) kicks over the straw man that the model outputs are “perfect”. Of course they are not. Here Hansen writes that the doubling sensitivity of the model used, 4.2 degrees, was at the upper edge of the then-known uncertainty range of 3 +/- 1.5 degrees. No, he gives no explicit uncertainty estimate, but that’s the ball park we’re looking at. I think 30% off was pretty good for then.