This is Hansen et al.’s end‐of‐year summary for 2009 (with a couple of minor edits). Update: A final version of this text is available here.

If It’s That Warm, How Come It’s So Damned Cold?

by James Hansen, Reto Ruedy, Makiko Sato, and Ken Lo

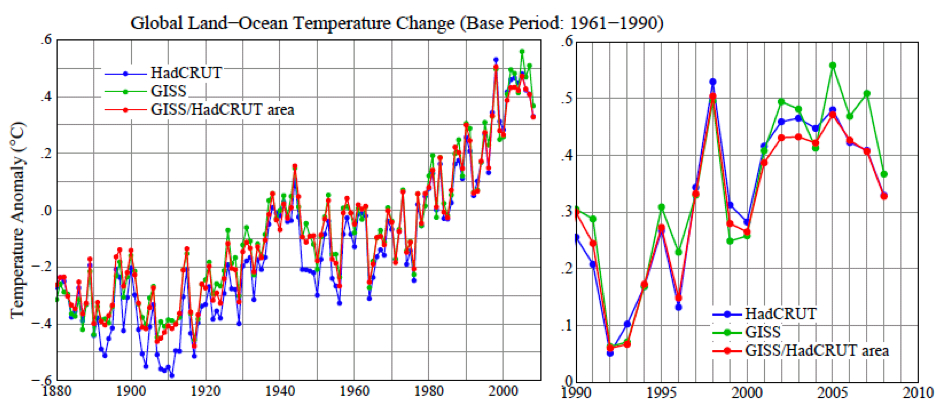

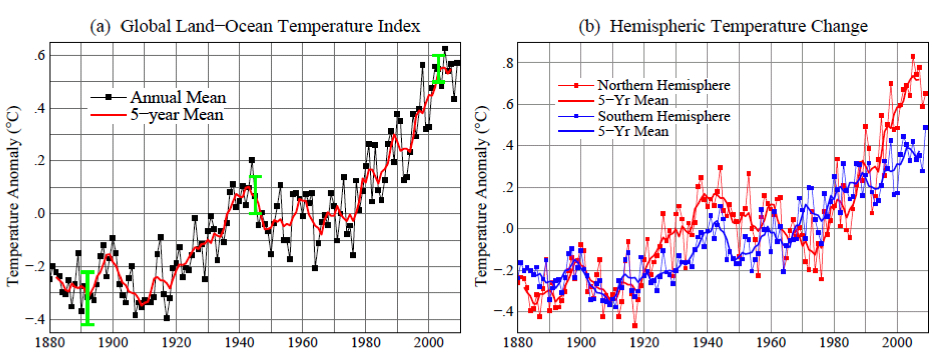

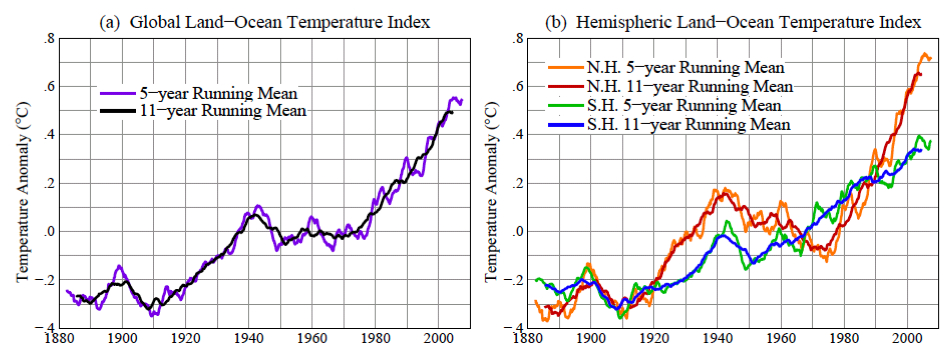

The past year, 2009, tied as the second warmest year in the 130 years of global instrumental temperature records, in the surface temperature analysis of the NASA Goddard Institute for Space Studies (GISS). The Southern Hemisphere set a record as the warmest year for that half of the world. Global mean temperature, as shown in Figure 1a, was 0.57°C (1.0°F) warmer than climatology (the 1951-1980 base period). Southern Hemisphere mean temperature, as shown in Figure 1b, was 0.49°C (0.88°F) warmer than in the period of climatology.

Figure 1. (a) GISS analysis of global surface temperature change. Green vertical bar is estimated 95 percent confidence range (two standard deviations) for annual temperature change. (b) Hemispheric temperature change in GISS analysis. (Base period is 1951-1980. This base period is fixed consistently in GISS temperature analysis papers – see References. Base period 1961-1990 is used for comparison with published HadCRUT analyses in Figures 3 and 4.)

The global record warm year, in the period of near‐global instrumental measurements (since the late 1800s), was 2005. Sometimes it is asserted that 1998 was the warmest year. The origin of this confusion is discussed below.

There is a high degree of interannual (year‐to‐year) and decadal variability in both global and hemispheric temperatures. Underlying this variability, however, is a long‐term warming trend that has become strong and persistent over the past three decades. The long‐term trends are more apparent when temperature is averaged over several years. The 60‐month (5‐year) and 132‐month (11‐year) running mean temperatures are shown in Figure 2 for the globe and the hemispheres. The 5‐year mean is sufficient to reduce the effect of the El Niño/La Niña cycles of tropical climate. The 11‐year mean minimizes the effect of solar variability: the brightness of the sun varies by a measurable amount over the sunspot cycle, which typically lasts 10‐12 years.


Figure 2. 60‐month (5‐year) and 132‐month (11‐year) running mean temperatures in the GISS analysis of (a) global and (b) hemispheric surface temperature change. (Base period is 1951‐1980.)

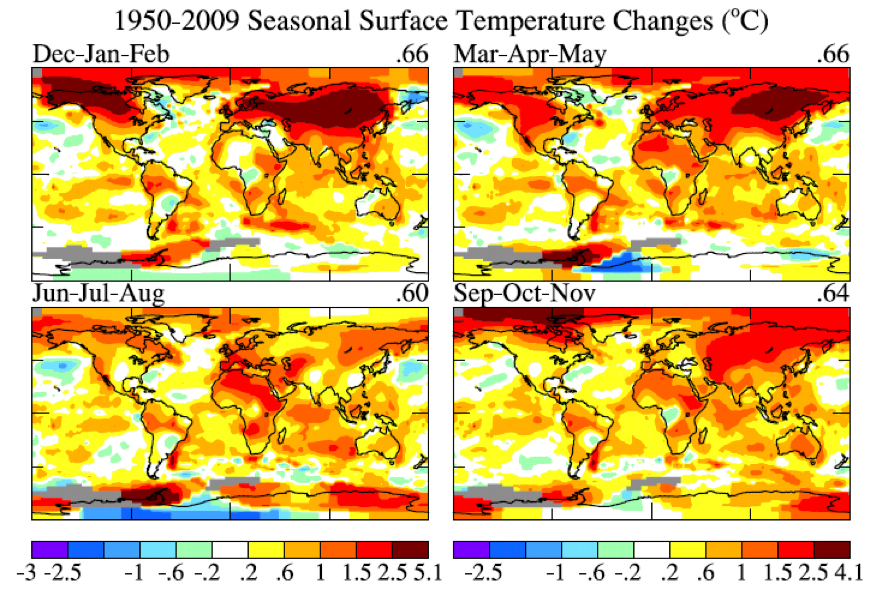
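The running means described for Figure 2 can be illustrated with a minimal sketch. The data here are synthetic (a made-up linear trend plus a 60‐month oscillation standing in for an El Niño‐like cycle), not GISS data, and the function name `running_mean` is only an illustrative choice. The GISS plots may center or handle series ends differently; this sketch simply averages every full window.

```python
import math


def running_mean(values, window):
    """Return the mean of every full run of `window` consecutive values."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    window_sum = sum(values[:window])
    means = [window_sum / window]
    for i in range(window, len(values)):
        # Slide the window forward by one month.
        window_sum += values[i] - values[i - window]
        means.append(window_sum / window)
    return means


# Synthetic monthly anomalies (assumption, not real data):
# a slow trend of 0.001 per month plus a cycle with a 60-month period.
synthetic = [0.001 * m + 0.2 * math.sin(2 * math.pi * m / 60) for m in range(240)]

# A 60-month mean spans exactly one cycle, so the cycle averages out
# and only the underlying trend remains.
smoothed = running_mean(synthetic, 60)
```

Because the window length matches the period of the oscillation, the smoothed series follows the trend alone, which is the reasoning behind choosing averaging periods comparable to the El Niño/La Niña and solar cycles.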

There is a contradiction between the observed continued warming trend and popular perceptions about climate trends. Frequent statements include: “There has been global cooling over the past decade.” “Global warming stopped in 1998.” “1998 is the warmest year in the record.” Such statements have been repeated so often that most of the public seems to accept them as being true. However, based on our data, such statements are not correct. The origin of this contradiction probably lies in part in differences between the GISS and HadCRUT temperature analyses (HadCRUT is the joint Hadley Centre/University of East Anglia Climatic Research Unit temperature analysis). Indeed, HadCRUT finds 1998 to be the warmest year in their record. In addition, popular belief that the world is cooling is reinforced by cold weather anomalies in the United States in the summer of 2009 and cold anomalies in much of the Northern Hemisphere in December 2009. Here we first show the main reason for the difference between the GISS and HadCRUT analyses. Then we examine the 2009 regional temperature anomalies in the context of global temperatures.

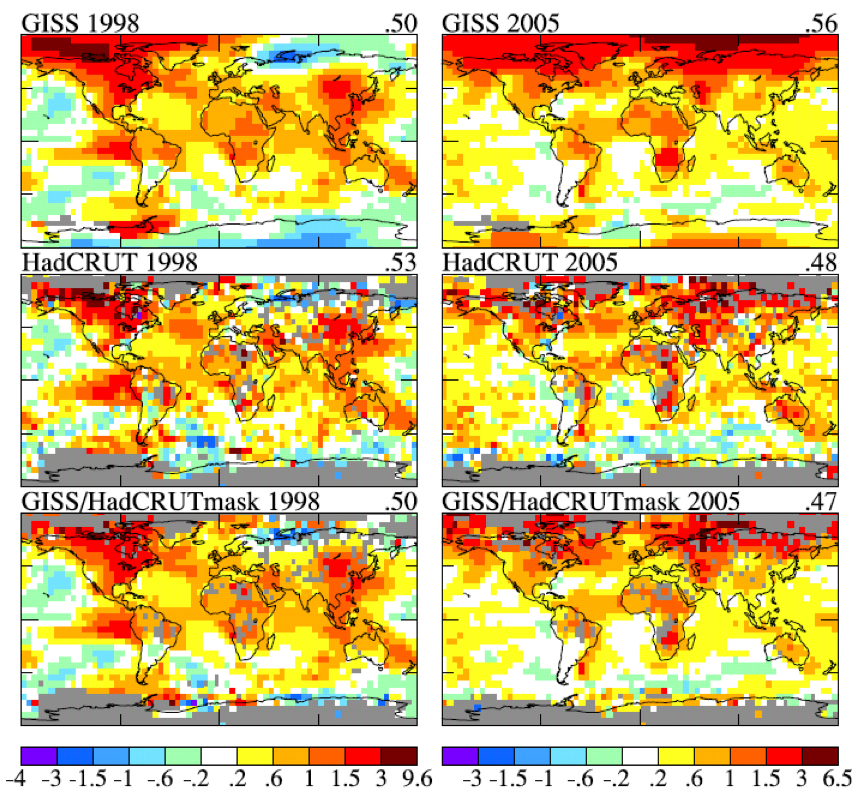

Figure 3. Temperature anomalies in 1998 (left column) and 2005 (right column). Top row is GISS analysis, middle row is HadCRUT analysis, and bottom row is the GISS analysis masked to the same area and resolution as the HadCRUT analysis. [Base period is 1961‐1990.]

Figure 3 shows maps of GISS and HadCRUT 1998 and 2005 temperature anomalies relative to base period 1961‐1990 (the base period used by HadCRUT). The temperature anomalies are at a 5 degree‐by‐5 degree resolution for the GISS data to match that in the HadCRUT analysis. In the lower two maps we display the GISS data masked to the same area and resolution as the HadCRUT analysis. The “masked” GISS data let us quantify the extent to which the difference between the GISS and HadCRUT analyses is due to the data interpolation and extrapolation that occurs in the GISS analysis. The GISS analysis assigns a temperature anomaly to many gridboxes that do not contain measurement data, specifically all gridboxes located within 1200 km of one or more stations that do have defined temperature anomalies.

The rationale for this aspect of the GISS analysis is based on the fact that temperature anomaly patterns tend to be large scale. For example, if it is an unusually cold winter in New York, it is probably unusually cold in Philadelphia too. This fact suggests that it may be better to assign a temperature anomaly based on the nearest stations for a gridbox that contains no observing stations, rather than excluding that gridbox from the global analysis. Tests of this assumption are described in our papers referenced below.
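As a rough illustration of assigning an anomaly to a gridbox from nearby stations, the sketch below averages station anomalies within 1200 km of a gridbox center. The linear distance weighting (1 at the station, 0 at 1200 km) follows my understanding of the method in the papers referenced below, but treat it as an assumption here. The station values are invented, and the function names are hypothetical rather than taken from the GISS code.

```python
import math

EARTH_RADIUS_KM = 6371.0
INFLUENCE_RADIUS_KM = 1200.0  # radius quoted in the text


def great_circle_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def gridbox_anomaly(box_lat, box_lon, stations):
    """Weighted mean anomaly of stations within 1200 km, or None if there are none.

    `stations` is a list of (lat, lon, anomaly) tuples. The weight falls
    linearly from 1 at zero distance to 0 at 1200 km (assumed weighting).
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for lat, lon, anomaly in stations:
        distance = great_circle_km(box_lat, box_lon, lat, lon)
        if distance < INFLUENCE_RADIUS_KM:
            weight = 1.0 - distance / INFLUENCE_RADIUS_KM
            weighted_sum += weight * anomaly
            weight_total += weight
    if weight_total == 0.0:
        return None
    return weighted_sum / weight_total


# Invented winter anomalies (deg C) at New York and Philadelphia.
stations = [(40.71, -74.01, -2.0), (39.95, -75.17, -1.5)]
nearby_box = gridbox_anomaly(42.5, -72.5, stations)   # gets a value
remote_box = gridbox_anomaly(-42.5, 100.0, stations)  # too far away: None
```

A gridbox with no station inside the radius stays undefined, which is why the GISS maps still contain some blank areas.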

Figure 4. Global surface temperature anomalies relative to 1961‐1990 base period for three cases: HadCRUT, GISS, and GISS anomalies limited to the HadCRUT area. [To obtain consistent time series for the HadCRUT and GISS global means, monthly results were averaged over regions with defined temperature anomalies within four latitude zones (90N‐25N, 25N‐Equator, Equator‐25S, 25S‐90S); the global average then weights these zones by the true area of the full zones, and the annual means are based on those monthly global means.]
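The zonal weighting described in the Figure 4 caption can be sketched as follows. The zone boundaries come from the caption; the area fractions follow from spherical geometry (the area between two latitudes is proportional to the difference of their sines). The function names and the example zonal means are illustrative assumptions.

```python
import math

# (southern edge, northern edge) in degrees, as listed in the caption.
ZONES = [(25.0, 90.0), (0.0, 25.0), (-25.0, 0.0), (-90.0, -25.0)]


def zone_area_fraction(lat_south, lat_north):
    """Fraction of a sphere's surface lying between two latitudes."""
    return (math.sin(math.radians(lat_north)) - math.sin(math.radians(lat_south))) / 2.0


def global_mean_from_zones(zone_means):
    """Weight the four zonal means by the true area of the full zones."""
    if len(zone_means) != len(ZONES):
        raise ValueError("expected one mean per latitude zone")
    weights = [zone_area_fraction(south, north) for south, north in ZONES]
    return sum(w * t for w, t in zip(weights, zone_means))


weights = [zone_area_fraction(south, north) for south, north in ZONES]

# Invented zonal anomalies (deg C), north to south.
example_global = global_mean_from_zones([0.9, 0.5, 0.4, 0.3])
```

Each extratropical zone covers about 29 percent of the globe and each tropical zone about 21 percent, so a zone with sparse data still contributes in proportion to its full area rather than to the number of gridboxes that happen to have data.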

Figure 4 shows time series of global temperature for the GISS and HadCRUT analyses, as well as for the GISS analysis masked to the HadCRUT data region. This figure reveals that the differences that have developed between the GISS and HadCRUT global temperatures during the past few decades are due primarily to the extension of the GISS analysis into regions that are excluded from the HadCRUT analysis. The GISS and HadCRUT results are similar during this period when the analyses are limited to exactly the same area. The GISS analysis also finds 1998 to be the warmest year if the analysis is limited to the masked area.

The question then becomes: how valid are the extrapolations and interpolations in the GISS analysis? If the temperature anomaly scale is adjusted such that the global mean anomaly is zero, the patterns of warm and cool regions look meteorologically realistic, providing qualitative support for the data extensions. However, we would like a quantitative measure of the uncertainty in our estimate of the global temperature anomaly caused by the fact that the spatial distribution of measurements is incomplete.

One way to estimate that uncertainty, or possible error, is to use the complete time series of global surface temperature data generated by a global climate model that has been demonstrated to have realistic spatial and temporal variability of surface temperature. We can sample this data set at only the locations where measurement stations exist, use this sub‐sample of data to estimate global temperature change with the GISS analysis method, and compare the result with the “perfect” knowledge of global temperature provided by the data at all gridpoints.
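The sampling test can be sketched in a much simplified form. Here a made‐up anomaly field stands in for climate model output, a random mask with no polar coverage stands in for the station network, and a plain area‐weighted mean stands in for the full GISS analysis method (no 1200 km extension is applied). All names and numbers are assumptions for illustration only.

```python
import math
import random


def area_weighted_mean(field, lats, mask=None):
    """Mean of field[i][j], weighted by cos(latitude), over unmasked gridboxes."""
    total = 0.0
    weight_sum = 0.0
    for i, lat in enumerate(lats):
        weight = math.cos(math.radians(lat))
        for j, value in enumerate(field[i]):
            if mask is None or mask[i][j]:
                total += weight * value
                weight_sum += weight
    if weight_sum == 0.0:
        raise ValueError("mask selects no gridboxes")
    return total / weight_sum


def coverage_error(field, lats, mask):
    """Sampled estimate minus the 'perfect' mean from all gridboxes."""
    return area_weighted_mean(field, lats, mask) - area_weighted_mean(field, lats)


rng = random.Random(0)
lats = [-87.5 + 5.0 * i for i in range(36)]  # centers of 5-degree latitude bands
n_lon = 72                                   # 5-degree longitude boxes

# Invented field: more warming toward the poles, plus random regional noise.
field = [[0.3 + 1.2 * math.sin(math.radians(lat)) ** 2 + rng.gauss(0.0, 0.5)
          for _ in range(n_lon)] for lat in lats]

# Invented coverage: nothing poleward of 65 degrees, half of the boxes elsewhere.
mask = [[abs(lat) < 65.0 and rng.random() < 0.5 for _ in range(n_lon)] for lat in lats]

error = coverage_error(field, lats, mask)
```

Repeating such a comparison over many model years and taking the spread of the errors is one way to arrive at an uncertainty estimate of the kind reported in Table 1.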

| | 1880‐1900 | 1900‐1950 | 1960‐2008 |
|---|---|---|---|
| Meteorological Stations | 0.2 | 0.15 | 0.08 |
| Land‐Ocean Index | 0.08 | 0.05 | 0.05 |

Table 1. Two‐sigma error estimate (°C) versus period for meteorological stations and land‐ocean index.

Table 1 shows the derived error due to incomplete coverage of stations. As expected, the error was larger at early dates when station coverage was poorer. Also the error is much larger when data are available only from meteorological stations, without ship or satellite measurements for ocean areas. In recent decades the 2‐sigma uncertainty (95 percent confidence of being within that range, ~2‐3 percent chance of being outside that range in a specific direction) has been about 0.05°C. The incomplete coverage of stations is the primary cause of uncertainty in comparing nearby years, for which the effect of more systematic errors such as urban warming is small.

Additional sources of error become important when comparing temperature anomalies separated by longer periods. The most well‐known source of long‐term error is “urban warming”, human‐made local warming caused by energy use and alterations of the natural environment. Various other errors affecting the estimates of long‐term temperature change are described comprehensively in a large number of papers by Tom Karl and his associates at the NOAA National Climatic Data Center. The GISS temperature analysis corrects for urban effects by adjusting the long‐term trends of urban stations to be consistent with the trends at nearby rural stations, with urban locations identified either by population or satellite‐observed night lights. In a paper in preparation we demonstrate that the population and night light approaches yield similar results on global average. The additional error caused by factors other than incomplete spatial coverage is estimated to be of the order of 0.1°C on time scales of several decades to a century, this estimate necessarily being partly subjective. The estimated total uncertainty in global mean temperature anomaly with land and ocean data included thus is similar to the error estimate in the first line of Table 1, i.e., the error due to limited spatial coverage when only meteorological stations are included.

Now let’s consider whether we can specify a rank among the recent global annual temperatures, i.e., which year is warmest, second warmest, etc. Figure 1a shows 2009 as the second warmest year, but it is so close to 1998, 2002, 2003, 2006, and 2007 that we must declare these years as being in a virtual tie as the second warmest year. The maximum difference among these in the GISS analysis is ~0.03°C (2009 being the warmest among those years and 2006 the coolest). This range is approximately equal to our 1‐sigma uncertainty of ~0.025°C, which is the reason for stating that these years are tied for second warmest.

The year 2005 is 0.061°C warmer than 1998 in our analysis. So how certain are we that 2005 was warmer than 1998? Given the standard deviation of ~0.025°C for the estimated error, we can estimate the probability that 1998 was warmer than 2005 as follows. The chance that 1998 is 0.025°C warmer than our estimated value is about (1 – 0.68)/2 = 0.16. The chance that 2005 is 0.025°C cooler than our estimate is also 0.16. The probability of both of these is ~0.03 (3 percent). Integrating over the tail of the distribution and accounting for the 2005‐1998 temperature difference being 0.061°C alters the estimate in opposite directions. For the moment let us just say that the chance that 1998 is warmer than 2005, given our temperature analysis, is at most about 10 percent. Therefore, we can say with a reasonable degree of confidence that 2005 is the warmest year in the period of instrumental data.
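The back‐of‐envelope estimate above can be compared with a slightly fuller calculation. This is only a sketch under stated assumptions that go beyond the text: the errors in the two annual means are treated as independent and Gaussian, each with a standard deviation of 0.025°C, so the error in their difference has a standard deviation of 0.025 × √2. The function names are illustrative.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def prob_order_reversed(difference, sigma_each):
    """Probability that the true difference between two years has the opposite sign.

    Assumes independent Gaussian errors with the same standard deviation
    in both annual means (an assumption, not a statement from the analysis).
    """
    sigma_difference = math.sqrt(2.0) * sigma_each
    return normal_cdf(-difference / sigma_difference)


# Rough estimate from the text: both one-sigma excursions occur together.
rough = 0.16 * 0.16

# 2005 minus 1998 is 0.061 deg C, with a 1-sigma error of 0.025 deg C per year.
p_1998_warmer = prob_order_reversed(0.061, 0.025)
```

Under these assumptions the probability comes out at roughly 4 percent, a little above the rough 3 percent figure and comfortably within the stated bound of about 10 percent. Correlated errors between the two years would change the number, which is one reason to quote only a cautious upper bound.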

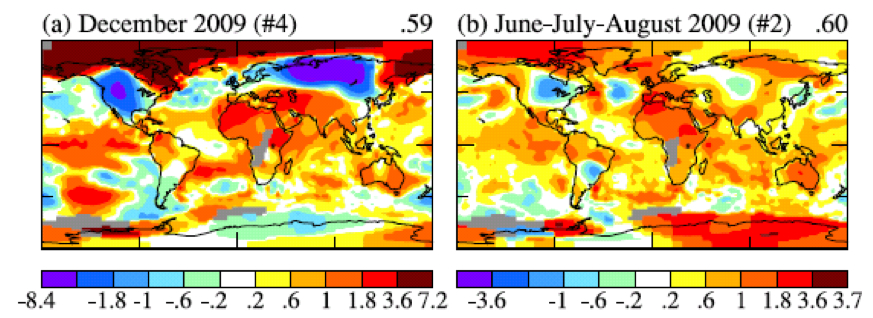

Figure 5. (a) global map of December 2009 anomaly, (b) global map of Jun‐Jul‐Aug 2009 anomaly. #4 and #2 indicate that December 2009 and JJA are the 4th and 2nd warmest globally for those periods.

What about the claim that the Earth’s surface has been cooling over the past decade? That issue can be addressed with a far higher degree of confidence, because the error due to incomplete spatial coverage of measurements becomes much smaller when averaged over several years. The 2‐sigma error in the 5‐year running‐mean temperature anomaly shown in Figure 2 is about a factor of two smaller than the annual mean uncertainty, thus 0.02‐0.03°C. Given that the change of 5‐year‐mean global temperature anomaly is about 0.2°C over the past decade, we can conclude that the world has become warmer over the past decade, not cooler.

Why are some people so readily convinced of a false conclusion, that the world is really experiencing a cooling trend? That gullibility probably has a lot to do with regional short‐term temperature fluctuations, which are an order of magnitude larger than global average annual anomalies. Yet many lay people do understand the distinction between regional short‐term anomalies and global trends. For example, here is a comment posted by “frogbandit” at 8:38 p.m. on 1/6/2010 on the City Bright blog:

“I wonder about the people who use cold weather to say that the globe is cooling. It forgets that global warming has a global component and that its a trend, not an everyday thing. I hear people down in the lower 48 say its really cold this winter. That ain’t true so far up here in Alaska. Bethel, Alaska, had a brown Christmas. Here in Anchorage, the temperature today is 31[ºF]. I can’t say based on the fact Anchorage and Bethel are warm so far this winter that we have global warming. That would be a really dumb argument to think my weather pattern is being experienced even in the rest of the United States, much less globally.”

What frogbandit is saying is illustrated by the global map of temperature anomalies in December 2009 (Figure 5a). There were strong negative temperature anomalies at middle latitudes in the Northern Hemisphere, as great as ‐8°C in Siberia, averaged over the month. But the temperature anomaly in the Arctic was as great as +7°C. The cold December perhaps reaffirmed an impression gained by Americans from the unusually cool 2009 summer. There was a large region in the United States and Canada in June‐July‐August with a negative temperature anomaly greater than 1°C, the largest negative anomaly on the planet.

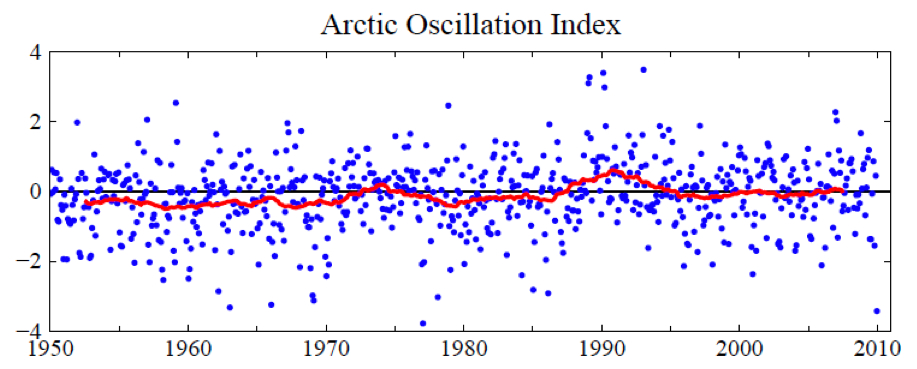

Figure 6. Arctic Oscillation (AO) Index. Positive values of the AO index indicate low pressure in the polar region and thus a tendency for strong zonal winds that minimize cold air outbreaks to middle latitudes. Blue dots are monthly means and the red curve is the 60‐month (5‐year) running mean.

How do these large regional temperature anomalies stack up against an expectation of, and the reality of, global warming? How unusual are these regional negative fluctuations? Do they have any relationship to global warming? Do they contradict global warming?

It is obvious that in December 2009 there was an unusual exchange of polar and mid‐latitude air in the Northern Hemisphere. Arctic air rushed into both North America and Eurasia, and, of course, it was replaced in the polar region by air from middle latitudes. The degree to which Arctic air penetrates into middle latitudes is related to the Arctic Oscillation (AO) index, which is defined by surface atmospheric pressure patterns and is plotted in Figure 6. When the AO index is positive, surface pressure is low in the polar region. This helps the middle latitude jet stream to blow strongly and consistently from west to east, thus keeping cold Arctic air locked in the polar region. When the AO index is negative, there tends to be high pressure in the polar region, weaker zonal winds, and greater movement of frigid polar air into middle latitudes.

Figure 6 shows that December 2009 was the most extreme negative Arctic Oscillation since the 1970s. Although there were ten cases between the early 1960s and mid 1980s with an AO index more extreme than ‐2.5, there were no such extreme cases since then until last month. It is no wonder that the public has become accustomed to the absence of extreme blasts of cold air.

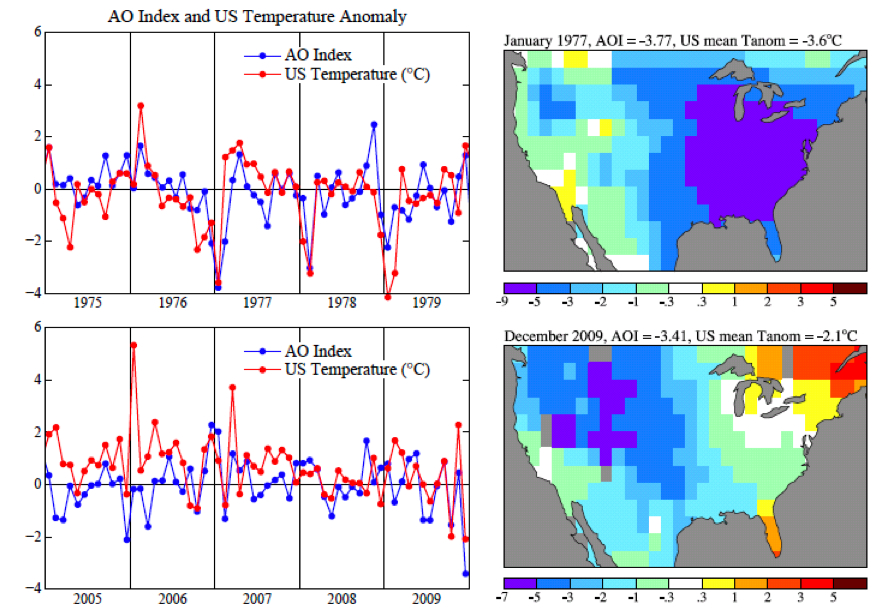

Figure 7. Temperature anomaly from GISS analysis and AO index from NOAA National Weather Service Climate Prediction Center. United States mean refers to the 48 contiguous states.

Figure 7 shows the AO index with greater temporal resolution for two 5‐year periods. It is obvious that there is a high degree of correlation of the AO index with temperature in the United States, with any possible lag between index and temperature anomaly less than the monthly temporal resolution. Large negative anomalies, when they occur, are usually in a winter month. Note that the January 1977 temperature anomaly, mainly located in the Eastern United States, was considerably stronger than the December 2009 anomaly. [There is nothing magic about a 31‐day window that coincides with a calendar month, and it could be misleading. It may be more informative to look at a 30‐day running mean and at the Dec‐Jan‐Feb means for the AO index and temperature anomalies.]

The AO index is not so much an explanation for climate anomaly patterns as it is a simple statement of the situation. However, John (Mike) Wallace and colleagues have been able to use the AO description to aid consideration of how the patterns may change as greenhouse gases increase. A number of papers, by Wallace, David Thompson, and others, as well as by Drew Shindell and others at GISS, have pointed out that increasing carbon dioxide causes the stratosphere to cool, in turn causing on average a stronger jet stream and thus a tendency for a more positive Arctic Oscillation. Overall, Figure 6 shows a tendency in the expected sense. The AO is not the only factor that might alter the frequency of Arctic cold air outbreaks. For example, what is the effect of reduced Arctic sea ice on weather patterns? There is not enough empirical evidence since the rapid ice melt of 2007. We conclude only that December 2009 was a highly anomalous month and that its unusual AO can be described as the “cause” of the extreme December weather.

We do not find a basis for expecting frequent repeat occurrences. On the contrary. Figure 6 does show that month‐to‐month fluctuations of the AO are much larger than its long term trend. But temperature change can be caused by greenhouse gases and global warming independent of Arctic Oscillation dynamical effects.

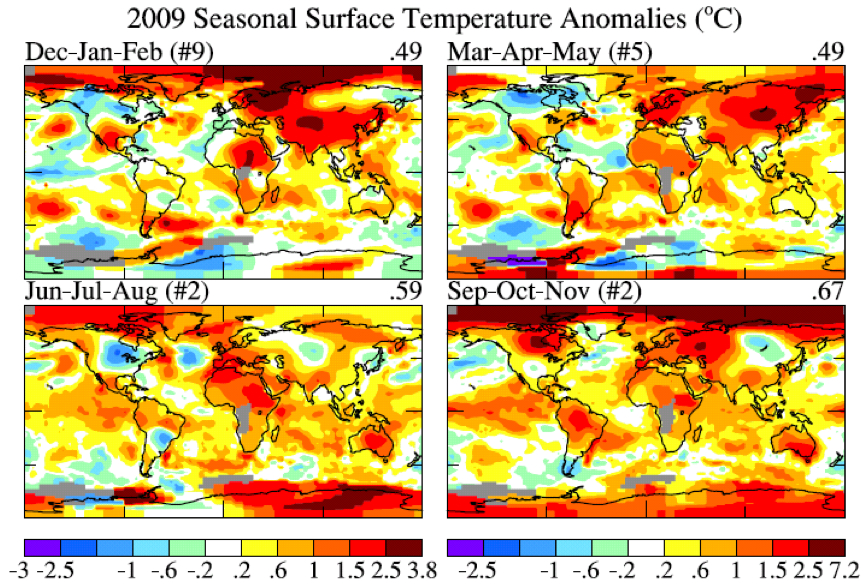

Figure 8. Global maps of 4‐season temperature anomalies for ~2009. (Note that Dec is December 2008. Base period is 1951‐1980.)

Figure 9. Global maps of 4‐season temperature anomaly trends for the period 1950‐2009.

So let’s look at recent regional temperature anomalies and temperature trends. Figure 8 shows seasonal temperature anomalies for the past year and Figure 9 shows seasonal temperature change since 1950 based on local linear trends. The temperature scales are identical in Figures 8 and 9. The outstanding characteristic in comparing these two figures is that the magnitude of the 60‐year change is similar to the magnitude of seasonal anomalies. What this is telling us is that the climate dice are already strongly loaded. The perceptive person who has been around since the 1950s should be able to notice that seasonal mean temperatures are usually greater than they were in the 1950s, although there are still occasional cold seasons.
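A temperature change "based on local linear trends" can be illustrated with an ordinary least‐squares fit at a single location. This is a minimal sketch with invented data; the function name is hypothetical, and defining the change as the fitted slope times the span between the first and last year is one convention among several, not necessarily the one used for Figure 9.

```python
def linear_trend_change(years, values):
    """Change over the record implied by a least-squares straight-line fit.

    Returns slope * (last year - first year).
    """
    n = len(years)
    if n < 2 or n != len(values):
        raise ValueError("need at least two matching (year, value) pairs")
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    return slope * (years[-1] - years[0])


years = list(range(1950, 2010))

# Invented seasonal anomalies (deg C): warming of 0.01 per year,
# with alternating +/- 0.3 fluctuations standing in for year-to-year weather.
values = [0.01 * (y - 1950) + (0.3 if y % 2 else -0.3) for y in years]

change = linear_trend_change(years, values)
```

In this invented series the fitted change over the record is of the same order as the individual yearly swings, which mirrors the comparison between Figures 8 and 9.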

The magnitude of monthly temperature anomalies is typically 1.5 to 2 times greater than the magnitude of seasonal anomalies. So it is not yet quite so easy to see global warming if one’s figure of merit is monthly mean temperature. And, of course, daily weather fluctuations are much larger than the impact of the global warming trend. The bottom line is this: there is no global cooling trend. For the time being, until humanity brings its greenhouse gas emissions under control, we can expect each decade to be warmer than the preceding one. Weather fluctuations certainly exceed local temperature changes over the past half century. But the perceptive person should be able to see that climate is warming on decadal time scales.

This information needs to be combined with the conclusion that global warming of 1‐2°C has enormous implications for humanity. But that discussion is beyond the scope of this note.

References:

Hansen, J.E., and S. Lebedeff, 1987: Global trends of measured surface air temperature. J. Geophys. Res., 92, 13345‐13372.

Hansen, J., R. Ruedy, J. Glascoe, and Mki. Sato, 1999: GISS analysis of surface temperature change. J. Geophys. Res., 104, 30997‐31022.

Hansen, J.E., R. Ruedy, Mki. Sato, M. Imhoff, W. Lawrence, D. Easterling, T. Peterson, and T. Karl, 2001: A closer look at United States and global surface temperature change. J. Geophys. Res., 106, 23947‐23963.

Hansen, J., Mki. Sato, R. Ruedy, K. Lo, D.W. Lea, and M. Medina‐Elizade, 2006: Global temperature change. Proc. Natl. Acad. Sci., 103, 14288‐14293.

L’année passée, 2009, passe pour être la seconde année la plus chaude depuis 130 ans d’enregistrements instrumentaux de la température globale, dans l’analyse de température de surface par l’Institut Goddard pour les études spatiales de la NASA (GISS). L’hémisphère sud bat un record comme le plus chaud pour cette moitié du monde. La température globale moyenne, comme montré dans l’illustration 1a, fut plus chaude de 0,57°C (1°F) que la période climatologique (période de base 1951-1980). L’hémisphère sud, comme montré dans l’illustration 1b, fut plus chaud de 0,49°C (0,88°F) que la période climatologique.

Illustration 1: (a) analyse du GISS pour les changements de la température globale de surface. La barre verticale verte est l’estimation à l’intervalle de confiance de 95% (deux écarts-type) pour le changement annuel de température. (b) Changement des

températures des hémisphères dans l’analyse du GISS. (Période de base 1951-1980. Cette période de base est est systématiquement fixée pour tous les articles du GISS concernant l’analyse de la température – voir les références. La période de base 1961-1990 est utilisée pour les comparaisons avec les analyses publiées du HadCRUT dans les illustrations 3 et 4).

L’enregistrement de l’année globalement la plus chaude, dans la période d’utilisation des mesures instrumentales globales (depuis la fin du XIXème siècle) était 2005. Il est quelques fois avancé que 1998 était la plus chaude. L’origine de cette confusion est discutée ci-après. Il y a un fort degré de variabilité interannuelle (année par année) et décénnale à la fois dans les températures globales et hémisphériques. Sous-tendant cette variabilité, néanmoins, on trouve une tendance au réchauffement de long terme qui devient plus fort et persistant [tenace] au cours des trois dernières décennies. Les tendances de long terme sont plus apparentes quand les températures sont moyennées sur plusieurs années. Les températures en moyennes mobiles sur 60 mois (5 ans) et 132 mois (11 ans) sont montrées dans la figure 2 pour le globe et les hémisphères. La moyenne sur 5 ans est suffisante pour réduire l’effet du cycle climatique tropical El Niño-El Niña. La moyenne sur 11 ans minimise l’effet de la variabilité solaire – la luminosité solaire varie significativement pendant le cycle de tâches solaires, qui est généralement d’une durée de l’ordre de 10-12 ans.

Illustration 2: Températures en moyennes mobiles sur 60 (5 ans) et 132 (11 ans) mois dans l’analyse du GISS pour les changements de température de surface (a) globale et (b) des hémisphères.(période de base 1951-1980).

Il y a une contradiction entre la tendance observée et continue au réchauffement et la perception populaire des tendances climatiques. Ce type de perception inclut fréquemment ces assertions « Il y a eu un refroidissement global ces dernières 10 années. » « Le réchauffement global s’est arrêté en 1998. » « 1998 est l’année la plus chaude jamais enregistrée. » De telles déclarations ont été répétées si souvent que la plupart des gens les acceptent comme vraies. Néanmoins, selon nos données, ces déclarations ne sont pas correctes.

L’origine de la contradiction se trouve probablement pour partie dans la différence entre les analyses du GISS et du HadCRUT (HadCRUT est une association entre le centre Hadley et l’unité de recherche sur l’analyse de température de l’université de East-Anglia). En effet, le HadCRUT a trouvé que 1998 était l’année la plus chaude enregistrée. De plus, les croyances populaires en un refroidissement sont renforcées par des anomalies froides aux USA à l’été 2009 et dans l’hémisphère nord en décembre 2009.

Nous montrerons d’abord les principales raisons des différences entre les analyses du GISS et du HadCRUT. Nous examinerons ensuite les anomalies régionales de 2009 dans le contexte des températures globales.

Illustration 3: Anomalies de températures en 1998 (colonne de gauche) et 2005 (colonne de droite). Le rang du haut est l’analyse du GISS, celui du milieu est l’analyse du HadCRUT et le rang du bas est l’analyse du GISS masquée [ndt : calée] sur les mêmes zones et résolution que l’analyse du HadCRUT. (La période de base est 1961-1990.)

L’illustration 3 montre les cartes des anomalies de températures du GISS et HadCRUT en 1998 et 2005 relativement à la période 1961-1990 (la période de base usuelle du HadCRUT). Les anomalies de températures sont dans une résolution de 5 en 5 degrés géographiques pour les données du GISS afin qu’elles correspondent à celles de l’analyse du HadCRUT. Dans les deux cartes du bas, nous montrons les données du GISS sous le même masque en termes de répartition géographique et de résolution que celui du HadCRUT. Les données du GISS « sous masque » nous permettent de quantifier la manière dont les différences entre les analyses du GISS et du HadCRUT sont dues à l’interpolation et l’extrapolation des données utilisées dans l’analyse du GISS. Cette analyse affecte

à de nombreuses cases [des modèles] une anomalie de température qui ne contiennent pas de données mesurées, spécifiquement dans des cases qui se trouvent à moins de 1200 km d’une ou plusieurs stations qui ont défini une anomalie de température.

La raison de cet aspect de l’analyse du GISS est basée sur le fait que le schéma d’une anomalie de température tend à se produire à grande échelle. Par exemple, s’il y a un hiver anormalement froid à New-York, il est probablement anormalement froid à Philadelphie aussi. Ce fait suggère qu’il peut être préférable d’affecter une anomalie de température basée sur les stations les plus proches de la case qui n’a aucune observation que d’exclure la case de l’analyse globale. Des tests de cette assertion sont décrits dans nos articles référencés plus bas.

Illustration 4: Anomalies de la température de surface globale relativement à la période de base 1961-1990 pour trois cas : HadCRUT, GISS et anomalies du GISS limitées à l’aire HadCRUT. [Pour obtenir des séries temporelles cohérentes pour les moyennes globales du HadCRUT et du GISS, les résultats mensuels ont été moyennés par régions avec des anomalies de températures définies à l’intérieur de 4 zones de latitudes (90N-25N, 25N-équateur, équateur-25S, 25S-90S) ; la moyenne globale pondère ainsi ces zones en fonction de la vraie surface de ces zones entières, et les moyennes annuelles sont basées sur ces moyennes mensuelles globales.]

L’illustration 4 montre des séries temporelles de température globale pour les analyses du GISS et du HadCRUT, aussi bien que pour l’analyse du GISS masquée sur les régions de données du HadCRUT. Cette illustration révèle que les différences qui se sont développées entre les températures globales du GISS et du HadCRUT ces dernières décennies sont principalement dues à l’extension de l’analyse du GISS à des régions exclues de l’analyse du HadCRUT. Les résultats du GISS et de HadCRUT sont similaires durant

cette période quand les analyses sont circonscrites exactement aux mêmes aires. L’analyse du GISS trouve aussi 1998 comme année la plus chaude, si l’analyse est limité aux données sous le même masque. La question devient alors : quelle est la valeur des interpolations et des extrapolations dans l’analyse du GISS ? Si l’échelle des anomalies de température est ajustée telle que l’anomalie de la moyenne globale est de zéro, alors les schémas des régions chaudes et froides ont un aspect cohérent avec les schémas météorologiques, apportant ainsi un support qualitatif pour l’extension des données. Néanmoins, nous aimerions une mesure quantitative sur l’incertitude de notre estimation pour l’anomalie de la température globale causée par le fait d’une distribution spatiale des mesures incomplète.

Une manière d’estimer cette incertitude, ou possible erreur, peut être d’utiliser les séries temporelles complètes générées par un modèle de climat global ayant déjà fait ses preuves d’une variabilité spatiale et temporelle des températures de surface réaliste. Nous pouvons échantillonner ce jeu de données seulement aux endroits où des stations de mesure existent, et utiliser ce sous-ensemble de données pour estimer le changement de la température globale avec l’analyse du GISS, puis comparer le résultat avec la connaissance « parfaite » de la température globale que nous avons avec les données de chacune des cases.

| 1880-1900 | 1900-1950 | 1960-2008 | |

|---|---|---|---|

| Stations météorologiques | 0.2 | 0.15 | 0.08 |

| Index « Land-Ocean » | 0.08 | 0.05 | 0.05 |

Tableau 1. Estimation de l’erreur à deux écart-type par période pour les stations météorologiques et l’index « Land-ocean ».

Le tableau 1 montre l’erreur dérivée due à la couverture incomplète des stations. Comme attendu, l’erreur est plus importante aux dates anciennes quand la couverture en stations était plus pauvre. Mais aussi, l’erreur est plus grande quand les données sont disponibles seulement depuis les stations météorologiques, sans mesure depuis des bateaux ou satellites pour les aires océaniques. Dans les décennies récentes, l’incertitude à 2 écarts-type (intervalle de confiance à 95% d’être à l’intérieur de ces valeurs, 2 à 3 % d’être en dehors d’un côté ou de l’autre) a été de 0,05°C. La couverture incomplètes des stations est la première cause d’incertitude pour les années récentes, pour lesquelles les erreurs plus systématiques sont petites, comme le réchauffement urbain.

Des sources additionnelles d’erreurs deviennent importantes quand on compare des anomalies de températures séparées par des périodes plus longues. La source d’erreur de long terme la plus connue est « le réchauffement urbain », un réchauffement local d’origine humaine causé par l’utilisation de l’énergie et les altérations de l’environnement naturel. D’autres erreurs variées, qui affectent les estimations des changements de températures sur le long terme, sont décrites de manière complète dans un grand

nombre d’articles par Tom Karl et ses associés du Centre national de données sur le climat (NCDC) de la NOAA. L’analyse du GISS pour la température corrige l’effet urbain en ajustant les tendances de long terme des stations urbaines de manière cohérente avec les stations rurales des alentours, et en identifiant les densités urbaines par leur population ou par l’observation par les satellites des lumières nocturnes. Dans un article en préparation, nous démontrons que les approches par la population et par les lumières nocturnes donne des résultats similaires sur la moyenne globale. Les erreurs additionnelles causées par des facteurs autres que

la couverture spatiale incomplète est estimée comme étant de l’ordre de 0,1°C sur des échelles de temps de plusieurs décennies à un siècle, cette estimation étant nécessairement partiellement subjective. L’incertitude totale dans les anomalies de température globale moyenne, avec les données « terre et océans » ainsi incluses, est équivalente à l’erreur estimée dans la première ligne du

tableau 1, i.e. l’erreur due à une couverture spatiale limitée quand seules les stations météorologiques sont incluses.

Maintenant, voyons voir si nous pouvons préciser un rang entre les températures annuelles globales récentes, i.e. quelle année est la plus chaude, la seconde plus chaude, etc. L’illustration 1a montre l’année 2009 comme la seconde plus chaude, mais si proche de 1998, 2002, 2003 et 2007 que nous devons considérer toutes ces années comme étant virtuellement la seconde année la plus chaude. La différence maximale entre elles dans l’analyse du GISS est de ~0,03°C (2009 étant la plus chaude et 2003 la plus froide). Cet écart est approximativement égal à notre incertitude à un écart-type de ~0,025°C, ce qui est la raison pour établir que ces années sont toutes la seconde année la plus chaude.

The year 2005 is 0.061°C warmer than 1998 in our analysis. So how certain are we that 2005 was warmer than 1998? Given the standard deviation of ~0.025°C for the estimated error, we can estimate the probability that 1998 was warmer than 2005 as follows. The chance that 1998 is 0.025°C warmer than our estimated value is about (1-0.68)/2 = 0.16. The chance that 2005 is 0.025°C cooler than our estimate is also 0.16. The probability of both of these happening together is ~0.03 (3 percent). Integrating over the tail of the distribution and accounting for the 2005-1998 temperature difference being 0.061°C alter the estimate in opposite directions. For the moment let us just say that the chance that 1998 is warmer than 2005, given our temperature analysis, is at most of the order of 10 percent. Therefore we can say with a reasonable degree of confidence that 2005 is the warmest year in the period of instrumental data.
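The back-of-the-envelope estimate above can be cross-checked with a short calculation. The sketch below assumes, which the text does not state, that the errors in the two annual means are independent and Gaussian, each with a standard deviation of 0.025°C; the function names are illustrative only.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_rank_reversed(diff, sigma):
    """Probability that the true difference is negative, given an
    estimated difference `diff` between two years whose errors are
    ASSUMED independent and Gaussian with standard deviation `sigma`."""
    sigma_diff = sigma * math.sqrt(2.0)  # error of a difference of two estimates
    return normal_cdf(-diff / sigma_diff)


# Values quoted in the text: 2005 minus 1998, and the one-sigma error.
p_1998_warmer = prob_rank_reversed(diff=0.061, sigma=0.025)

# The simpler product-of-tails estimate used in the text.
one_sigma_tail = (1 - 0.68) / 2
p_rough = one_sigma_tail ** 2

print(f"Gaussian estimate: {p_1998_warmer:.3f}")
print(f"Product of tails:  {p_rough:.3f}")
```

Under these assumptions the probability comes out at a few percent, consistent with the statement that it is at most of the order of 10 percent.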

Figure 5. (a) Global map of the December 2009 anomaly, (b) global map of the June-July-August 2009 anomaly. #4 and #2 indicate that December 2009 and June-July-August 2009 are the fourth and second warmest globally for those periods of the year.

What about the claim that the Earth's surface has been cooling over the past decade? That question can be addressed with a high degree of confidence, because the error due to incomplete spatial coverage of measurements becomes even smaller when averaged over several years. The two-standard-deviation uncertainty in the 5-year running-mean temperature anomaly shown in Figure 2 is about a factor of two smaller than the annual mean uncertainty, thus 0.02-0.03°C. Given that the change in the 5-year-mean temperature anomaly is about 0.2°C over the past decade, we can conclude that the world has become warmer, not cooler, over the past decade.
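To make the comparison concrete, the sketch below computes a running mean and the ratio of the decadal change to the uncertainty of the 5-year mean. The numbers are those quoted in the text (a one-standard-deviation annual error of ~0.025°C, hence ~0.05°C at two standard deviations, halved for the 5-year mean); the function name and the short sample series are illustrative assumptions, not GISS data.

```python
def running_mean(values, window):
    """Return the running mean over `window` consecutive values."""
    if window <= 0 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    total = sum(values[:window])
    means = [total / window]
    for i in range(window, len(values)):
        total += values[i] - values[i - window]  # slide the window by one step
        means.append(total / window)
    return means


# Made-up series, only to show the mechanics of the running mean.
print(running_mean([1, 2, 3, 4, 5], window=3))

annual_two_sigma = 2 * 0.025                # two-standard-deviation annual error, deg C
five_year_two_sigma = annual_two_sigma / 2  # "a factor of two smaller"
decadal_change = 0.2                        # change in 5-year mean over the decade, deg C

ratio = decadal_change / five_year_two_sigma
print(f"Decadal change is about {ratio:.0f} times the 5-year-mean uncertainty")
```

With these figures the warming over the decade is roughly eight times the two-standard-deviation uncertainty of the 5-year mean.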

Why are some people convinced of a false conclusion, that the world is really cooling? That gullibility probably has a lot to do with regional short-term temperature fluctuations, which are an order of magnitude larger than global annual temperature anomalies. Yet even lay people are able to understand the distinction between regional short-term anomalies and the global trend. For example, here is a comment posted by "frogbandit" at 8:38 p.m. on 6 January 2010 on the City Bright blog:

"I wonder about the people who use cold daily weather to say that the globe is cooling. They forget that global warming has a global component and that it is a trend, not an everyday thing. I hear people down in the lower 48 say it is really cold this winter. That isn't so true here in Alaska. Bethel, Alaska, had a brown Christmas. Here in Anchorage, the temperature today is 31°F [about -0.6°C]. I can't say, based on the fact that Bethel and Anchorage are so warm this winter, that we have global warming. It would be a really dumb argument to think my weather pattern is being repeated in the rest of the United States, much less globally."

What frogbandit is saying is illustrated by the global map of temperature anomalies in December 2009 (Figure 5a). There were strong negative temperature anomalies at middle latitudes in the Northern Hemisphere, as great as -8°C in Siberia, averaged over the month. But the temperature anomaly in the Arctic was as great as +7°C.

The cold December perhaps reaffirmed an impression gained by Americans from the unusually cool summer of 2009. There were extensive regions of the United States and Canada in June-July-August with a negative temperature anomaly greater than 1°C, the largest negative anomaly on the planet.

Figure 6. Arctic Oscillation (AO) index. Positive values of the AO index indicate low pressure in the polar region and thus a tendency for strong zonal winds that minimize cold air outbreaks to middle latitudes. Blue dots are monthly means and the red curve is the 60-month (5-year) running mean.

How do these large regional temperature anomalies stack up against the expectation, and the reality, of global warming? How unusual are these regional negative fluctuations? Do they have any relationship to global warming? Do they contradict it?

It is obvious that in December 2009 there was an unusual exchange of polar and mid-latitude air in the Northern Hemisphere. Arctic air rushed into both North America and Eurasia and, of course, was replaced in the polar region by air from middle latitudes. The degree to which Arctic air penetrates into middle latitudes is related to the AO index, which is defined by surface atmospheric pressure patterns and is plotted in Figure 6. When the AO index is positive, surface pressure is low in the polar region. This helps the mid-latitude jet stream to blow strongly and consistently from west to east, thus keeping cold Arctic air locked in the polar region. When the AO index is negative, there tends to be high pressure in the polar region, weaker zonal winds, and greater movement of frigid polar air into middle latitudes.

Figure 6 shows that December 2009 had the most extreme negative AO index since the 1970s. Although there were about ten cases of an AO index as extreme as -2.5 between the 1960s and the 1980s, there was nothing that extreme after that until last month. It is no wonder that the public has become accustomed to the absence of extreme blasts of cold air.

Figure 7. Temperature anomaly from the GISS analysis and AO index from the NOAA National Weather Service Climate Prediction Center. The United States mean refers to the 48 contiguous states.

Figure 7 shows the AO index with greater temporal resolution for two 5-year periods. It is obvious that there is a high degree of correlation between the AO index and temperature in the United States, with any possible lag between index and temperature anomaly less than the monthly temporal resolution. Large negative anomalies, when they occur, are usually in a winter month. Note that the January 1977 temperature anomaly, mainly located in the eastern states, was considerably stronger than the December 2009 anomaly. [There is nothing magic about a 31-day window that coincides with a calendar month, and it could be misleading. It would be more informative to look at a 30-day running mean and at the December-January-February means of the AO index and temperature anomalies.]

The AO index is not so much an explanation for climate anomaly patterns as it is a simple statement of the situation. However, John (Mike) Wallace and colleagues have been able to use the AO description to help understand how the patterns may change as greenhouse gases increase. A number of papers, by Wallace, David Thompson, and others, as well as by Drew Shindell and others at GISS, have shown that increasing carbon dioxide cools the stratosphere, which on average causes a stronger jet stream and thus a tendency for a more positive Arctic Oscillation.

Overall, Figure 6 shows a tendency in the expected sense. The AO is not the only factor that might alter the frequency of Arctic cold air outbreaks. For example, what is the effect of reduced sea ice on weather patterns? There is not enough empirical evidence since the rapid ice melt of 2007. We can conclude only that December 2009 was a highly anomalous month and that its unusual Arctic Oscillation can be described as the "cause" of the extreme December weather.

We do not find a basis for expecting frequent repeat occurrences. On the contrary. Figure 6 shows that month-to-month fluctuations of the AO are much larger than its long-term trend. But temperature change can be caused by greenhouse gases and global warming independent of the dynamical effects of the Arctic Oscillation.

Figure 8. Global maps of temperature anomalies for the four seasons of ~2009. (Note that Dec is December 2008. Base period is 1951-1980.)

Figure 9. Global maps of temperature anomaly trends for the four seasons over the period 1950-2009.

Now let us examine recent regional temperature anomalies and temperature trends. Figure 8 shows seasonal temperature anomalies for the past year and Figure 9 shows the change in seasonal temperature anomalies since 1950 based on local linear trends. The temperature scales are the same in Figures 8 and 9. The outstanding feature in comparing these two figures is that the magnitude of the 60-year change is similar to the magnitude of seasonal anomalies. What this tells us is that the climate dice are already strongly loaded. The perceptive person who has been around since the 1950s should be able to notice that seasonal mean temperatures are now usually higher than they were in the 1950s, although there are still occasional cold seasons.
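A local linear trend of the kind mapped in Figure 9 can be obtained by ordinary least squares, with the change over the period taken as the slope times the length of the record. The sketch below is a minimal illustration on synthetic data; the function names and the sample series are assumptions made for this example, not the GISS code or data.

```python
def linear_trend(years, anomalies):
    """Least-squares slope (deg C per year) of anomalies against years."""
    n = len(years)
    if n < 2 or n != len(anomalies):
        raise ValueError("need two or more paired values")
    mean_x = sum(years) / n
    mean_y = sum(anomalies) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, anomalies))
    return sxy / sxx


def change_over_period(years, anomalies):
    """Temperature change implied by the linear trend over the record."""
    return linear_trend(years, anomalies) * (years[-1] - years[0])


# Synthetic seasonal anomalies: a 0.01 deg C/yr trend plus an alternating
# +/-0.3 deg C wiggle standing in for year-to-year variability.
years = list(range(1950, 2010))
anomalies = [0.01 * (y - 1950) + (0.3 if y % 2 else -0.3) for y in years]

slope = linear_trend(years, anomalies)
change = change_over_period(years, anomalies)
print(f"trend: {slope:.4f} deg C/yr, change 1950-2009: {change:.2f} deg C")
```

In this synthetic case the fitted change over the record is about the same size as the year-to-year wiggle, which is the kind of comparison the two figures invite.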

The magnitude of monthly temperature anomalies is typically 1.5 to 2 times greater than the magnitude of seasonal anomalies. So it is not yet quite so easy to see global warming if one's figure of merit is monthly mean temperature. And, of course, daily weather fluctuations are much larger than the impact of the global warming trend.

The bottom line is this: there is no global cooling trend.

For the time being, until humanity brings its greenhouse gas emissions under control, we can expect each decade to be warmer than the preceding one. Weather fluctuations certainly exceed local temperature changes over the past half century. But the perceptive person should be able to see that climate is warming on decadal time scales.

This information still needs to be combined with the conclusion that global warming of 1-2°C has enormous implications for humanity. But that discussion is beyond the scope of this note.

References:

Hansen, J.E., and S. Lebedeff, 1987: Global trends of measured surface air temperature. J. Geophys. Res., 92, 13345-13372.

Hansen, J., R. Ruedy, J. Glascoe, and Mki. Sato, 1999: GISS analysis of surface temperature change. J. Geophys. Res., 104, 30997-31022.

Hansen, J.E., R. Ruedy, Mki. Sato, M. Imhoff, W. Lawrence, D. Easterling, T. Peterson, and T. Karl, 2001: A closer look at United States and global surface temperature change. J. Geophys. Res., 106, 23947-23963.

Hansen, J., Mki. Sato, R. Ruedy, K. Lo, D.W. Lea, and M. Medina-Elizade, 2006: Global temperature change. Proc. Natl. Acad. Sci., 103, 14288-14293.

Jacob (#594),

What a timely link, thanks. By the way, I have no idea what “Completely Fed Up” thinks he’s accomplishing with his hijacking of this and the IPCC thread. I see that he even jumped on my little comment. All I can say to the man without having followed his many dozen comments: Overshare.

Why this place can never be fun again is that people like him think it’s all about what they need to prove to the world.

I see that the chemistry is still being studied, and I of course know that the surface readings tell us that acidification is occurring, but I just sort of figured, hey, you have these chemicals, you have some idea of their relative quantities and properties…this isn’t as amorphous as atmospheric CO2, where fifteen other things have to happen before the surface warms. This is: Add carbon to ocean water, and what happens?

My larger point was that there is literally nothing that isn’t being fought over like a scrap of meat on a lifeboat.

We’ve come a long way in 3 years, and I’m here to report it was the wrong way.

As an addendum to the #599, you can find a nifty Google Earth visualization of all the stations with monthly updates in the GSN here: http://www.wmo.int/pages/prog/gcos/index.php?name=GCOSNetworksvisualised

You will notice plenty in Canada, and for that matter plenty worldwide.

You can see the actual data from each of these stations here: http://www.dwd.de/bvbw/appmanager/bvbw/dwdwwwDesktop/?_nfpb=true&_windowLabel=T15806838371147176099165&_state=maximized&_pageLabel=_dwdwww_klima_umwelt_datenzentren_gsnmc

So far, every station I’ve checked in Northern Canada seems to have plenty of data.

580

Ray Ladbury says:

25 January 2010 at 10:00 AM

“Richard Steckis says “Assertions from one paper does not make it fact.”

Please, everyone, take a moment and savor the delicious irony…”

When all else fails good old Ray resorts to the Ad-Hom attack. You are so predictable.

593

dhogaza says:

25 January 2010 at 3:13 PM

” Being a charitable guy, I’ll accept that you’re learning just like the rest of us.

Steckis, unfortunately, has demonstrated no capacity for learning.

If you are a scientist as you claim, you’ll naturally be absorbing the papers and other literature we’ve posted in reply to your comments and use them to evolve a deepened perspective.

Steckis has a BS, no more. He likes to describe himself as a “scientist” so folks who haven’t run across him in the past think he’s at the same level as PhDs doing research in climate science, in other words, an authority.”

Unlike you, I have a peer-reviewed publication record as both primary and co-author. For your information I was studying toward my Ph.D. and was about half way through my research. However, It became too much to complete on a part-time basis. I will complete it some time in the future.

596

David B. Benson says:

25 January 2010 at 3:33 PM

“Richard Steckis (563) — Actually, I have been an amateur student of geology for over 50 years. In even that interval textbook geology had to be revised; most notably because of the discovery of plate tectonics.

The paper in question raises serious doubts about at least one of the proxies used to estimate paleoclimate CO2 concentrations. The work was considered good enough to be accepted for publication in PNAS. I suggest you take it rather seriously.”

Mann’s hockey stick paper was considered good enough to be published in Nature. Yet it is still controversial.

Hank Roberts

It’s easy to figure actual and potential boiler efficiency, and it’s definitely myth that steam boilers from the 1800’s had anything like 90-95%, likely more on the order of 60-65% max when new and well setup, and rarely achieved considering the state of technology, engineering and “robber baron” business practices of the day ….. and considering modern boilers are rarely as much as 90% – professional boiler info – note that the Lancashire boiler [the “peak” of 1850’s tech] is 65-75% – some industry info, primarily from: ‘Boilers and Heaters: Improving Energy Efficiency’ Natural Resources Canada, 2001 PDF here

A good part of the reason England near asphyxiated itself back then was the inefficient coal burning with all the early steam powered industrialization

Gavin @599:

Okay, so a “limited” number of temperature records doesn’t refute the LIA or MWP being “localized events”, but a “limited” number of temperatures =does= mean that the Arctic is warming?

I also take offense to this comment —

How is it that thousands and thousands of Weather Underground stations — run by amateurs — can all have their data gathered in real time, but professionals can’t? And has anyone given any thought to firing the people who can’t seem to gather their data faster / better than people who don’t do it for a living?

If you are trying to convince me that GISS isn’t a valid data set, you are succeeding.

On which planet is capturing =less= data viewed as the right decision when questions about the validity of the data exist?

[Response: Oh please. The accusation was that NASA and NOAA were ‘deleting’ stations in cold places in order to somehow boost the global warming signal. This is untrue, defamatory and based on a complete ignorance of both where the data comes from (CLIMAT reports from WMO) and the whole point of the anomaly method. Pointing this out is not ignoring questions about data validity. If you want more Canadian data to be included in the global indices, ask Environment Canada to submit more CLIMAT reports. I’ve suggested this before though no-one took it up, but It would be possible to use the SYNOP and METAR daily reports to create an alternate global temperature index – and of course the reanalysis products do something analogous (and come up with the same answer in any case – Simmons et al (2010)). – gavin]

Re: 593 dhogaza says:

25 January 2010

Tim:

“If you are a scientist as you claim, you’ll naturally be absorbing the papers and other literature we’ve posted in reply to your comments and use them to evolve a deepened perspective.”

dhogaza:

“Steckis has a BS, no more. He likes to describe himself as a “scientist” so folks who haven’t run across him in the past think he’s at the same level as PhDs doing research in climate science, in other words, an authority.”

I always wonder about folks who can be shot down repeatedly and keep coming back with more. The tenacity to search through no-matter-what is replied to tease out the fatal flaw in the theory is a quality we can use.

If he wants to prove he’s right, prove that ocean acidification is a crock, then he could do an _inventory_ of the ocean’s animal and plant taxa by genus and species. He could classify them by degree of impact of CO2 poisoning for various life stages and various carbonic acid concentrations.

This would settle it.

Of course maybe he doesn’t want it settled. Perhaps he’s too lazy to take on finding the truth of it for himself and wants us to do it for him.

Or perhaps Mr. Steckis can concede the point that ocean acidification is a serious problem and we can all live happily ever after, having learned so much new cool stuff about the ocean. I think he should do the inventory.

591: Completely Fed Up… I see you do not often read others’ posts, or at least not mine. The physics of greenhouse gases is indisputable and AGW is a real phenomenon. I have never stated otherwise. Nature still has and can and might still do far more and far sooner than man’s emissions of greenhouse gases will in terms of environment detriment. Look at all the earthquakes for example. I also stated in this thread that we should lower greenhouse gas emissions, so please read before you reply… no worries I do not always read every single post either before I respond and usually at my own loss.

“Nature still has and can and might still do far more and far sooner than man’s emissions of greenhouse gases will in terms of environment detriment. Look at all the earthquakes for example.”

Which earthquakes?

I didn’t feel a single one.

The last one that affected anyone in the UK rattled plates and nothing else.

So, no, earthquakes are not worse.

How many earthquakes have reduced US wheat production? None. Warming climates have.

No, earthquakes are not worse.

Because they stop.

“When all else fails good old Ray resorts to the Ad-Hom attack. You are so predictable.”

RS is so predictable.

Making up an “ad hom attack” so he can become the victim.

Diddums.

“Please, everyone, take a moment and savor the delicious irony…”

Is not an attack, let alone an ad homming one.

It IS ironic that you state that one paper doesn’t make truth: you’ve very often posited one single paper as “PROOF!” that AGW was wrong.

This makes your statement ironic.

“Finally, a comment to Ray Ladbury [527]. I don’t think rude language has ever been effective as a tool for convincing people in a scientific discussion.”

And RS, Tilo, Septic and many, many more (Heironymous for example who doesn’t CARE if the science is sound: he doesn’t like some of the people) aren’t here for the science.

Richard Steckis @603

Somehow, I predicted that you wouldn’t know the meaning of ad hominem, either.

http://en.wikipedia.org/wiki/Ad_hominem

Steven Jorstater, Wow, I think you might have just scored a record for the most distortions in a single post.

Steven: “The only problem is, of course, that if you are looking for a trend change you must look at a reasonably short interval, mustn’t we?”

WRONG!!! If it is a trend change, it will show up in the long-term data. Good lord, why not just fit 30 years worth of data to a 29th degree polynomial! That’ll give you a really good fit, won’t it. A great fit, but zero predictive capability!

Steven: “For one thing, Hansen thinks that something like 5-10 years is enough.”

Absolute bullshit! This verges on mendacious. All Hansen is saying is that if you average over 5-10 years, you filter out enough of the noise that the trend starts to emerge. Good Lord, man, if you average over 10 years, 2 decades gives you only 2 data points!!!

As to why Hansen used GISS data–well, it’s his data set. DUH!!! Taken over a meaningful period of time, UAH, RSS, HADCRUT, GISS, ice melt, phenological data and any other dataset you care to name is consistent with warming. And your allegations of misconduct against Jim Hansen are beneath what one would expect of any true scientist!

As to the models–is it your serious contention that the physics of the greenhouse effect changes dramatically from 280 ppmv to 385 ppmv or even 600 ppmv? On what possible scientific finding could you base this contention?

Steven, your post betrays a stunning ignorance of climate science. Now you can either stay ignorant and keep posting absolute BS, or you can actually try to learn the science so you will at least be arguing against the real thing rather than a straw man. Your choice, but right now nearly everything you think you know is flat-assed wrong!

> Nature still has and can and might still do far more and far sooner

> than man’s emissions of greenhouse gases will in terms of environment

> detriment. Look at all the earthquakes for example.

Citation needed. How many earthquakes, of what magnitude, would it take to cause the predicted level of ice loss, sea level rise, ocean pH change, etc.?

Yes, climate change can’t destroy Haiti like one earthquake did. http://www.xkcd.com/687/

Hi Gavin

“les temps sont durs en ce moment”

for your suggestion:

“I’ve suggested this before though no-one took it up, but It would be possible to use the SYNOP and METAR daily reports to create an alternate global temperature index”

it is already done in ECA KNMI (http://eca.knmi.nl/dailydata/index.php)

“The series collected from participating countries generally do not contain data for the most recent years. This is partly due to the time that is needed for data quality control and archiving at the home institutions of the participants, and partly the result of the efforts required to include the data in the ECA database. To make available for each station a time series that is as complete as possible, we have included an automated update procedure that relies on the daily data from SYNOP messages that are distributed in near real time over the Global Telecommunication System (GTS). In this procedure the gaps in a daily series are also infilled with observations from nearby stations, provided that they are within 25km distance and that height differences are less than 50m.”

[Response: Interesting. Has anyone done a comparison? – gavin]

Sorry Gavin in this site, these are the raw data.

There is an homogeneity test but the series value are not changed.

For France, for example, the decadal trends (1980-2009) are, with these data, 0.6°C/dec (with 12 stations) and for Meteo France rather 0.45-0.50°C/dec.

The difference is not huge but real.

But a question: do you get the raw or the homogenized data?

And, in the case of raw, do you apply your own homogenization?

[Response: Me? I don’t do any of these things. GISTEMP uses the GHCN homogenization combined with an urban bias correction. With respect to the SYNOP data, you would need to do your own homogenization. – gavin]

The news is a good citation Hank for earthquakes, but that was just one example. There are terrible hurricanes, tsunamis and the like which also are devastating throughout our and the Earth’s history that kill so many. The flu epidemic of 1918 killed over 600,000 people. I do not see strong evidence that global warming will match that anytime soon. Again, I agree that GHG emissions should be lowered, but it is foolish for some to think global warming is the worst issue we face as the human race. Also it is impossible to do away with all GHG emissions ever. Just do a quick look on scholar too and see how many so called green technologies are leading to equal and even greater emissions as well. We need to keep this issue in context is all I am saying. Again: what we can do in regards to lowering GHG emissions we should do.

Fedup you are taking me out of context and I think you will find it very hard to back up your claims on wheat production and warming in the US and then attribute it to greenhouse gases. Bacteria, algae and plant life love CO2 and some love methane as well. Let us not forget natural weather patterns which are devastating and various cycles… my concern is as GHG get higher it will cause more extreme weather conditions to get the system back to equilibrium.

Fed up: saying you cannot feel an earthquake is like someone in Dallas saying they cannot feel the warming in these recent times of record cooling; these kind of statements do nothing for the science of global warming or any other global concern in terms of scientific research.

Jacob: you’re saying that the power of the earthquake is greater than that of global climate change.

Except the earthquakes are temporary and minor.

Your statement is one based on faith without any sort of thought behind it.

I don’t *have* to attribute the wheat farming losses to warming. You have to show that earthquakes are more damaging.

Because climate change WILL mean those wheat farms will die, unless we avoid the worst.

That they are bad enough to register yet the warming has hardly begun shows how much more of a threat to human existence CC is than earthquakes.

And yes I have been reading links like this: http://www.iop.org/EJ/article/1748-9326/2/1/014002/erl7_1_014002.html

Gavin’s response @ 607:

Yes, but I didn’t repeat that accusation because it’s irrelevant. All sorts of people can come up with all sorts of conspiracy theories.

What’s =relevant= is demonstrating the validity of the data, regardless of which accusation is used to question its validity.

So, I ask the question again — on what planet does using fewer data sources address questions about the validity of a data set? I get that Environment Canada isn’t providing the data — now, what’s actually being done about it? Who should Canadians (and everyone else) write in order to get more data into the models?

[Response: This has nothing to do with models. Where did you get that from? The only issue is whether there is sampling issue with the current CLIMAT network, but the match to the satellite data and the reanalysis products indicates that there isn’t. – gavin]

Quote:

“Because climate change WILL mean those wheat farms will die, unless we avoid the worst.” No one knows this and the line of evidence does not point in this direction; that is too far in the future to be answered with such high level of confidence.

“Except the earthquakes are temporary and minor.” Really? With the recent high level of confidence in the research by seismologists that California is going to be hit by the ‘big one’, an earthquake of extremely high magnitude, would you like to rethink that? How about the 150,000 plus dead in Haiti? Many seismologists believe based upon data collection and history that California may be under water within my life time at 31 years of age due to a cataclysmic earthquake. Now add to that power outages, and violent chaotic weather events and the picture starts to get into focus.

“I don’t *have* to attribute the wheat farming losses to warming. You have to show that earthquakes are more damaging.” You as of yet have not shown that global warming is more damaging and your assumption that I have to show you evidence when you have not is just an argument in futility.

“Because climate change WILL mean those wheat farms will die, unless we avoid the worst.” By all means better irrigation techniques should be used since some areas are drought prone to begin with; some wheat will evolve the necessary adaptations while others will not. However, some areas will become cooler and with increased precipitation due to climate change; even from the global warming we see changes in microclimates more conducive to crops and other forms of life.

“Your statement is one based on faith without any sort of thought behind it.” It seems to me it is you who are speaking with a lot of faith here.

“That they are bad enough to register yet the warming has hardly begun shows how much more of a threat to human existence CC is than earthquakes.”

You have not made your case. All that aside, however, rising lung cancer rates and asthma is good enough reason to me to lower emissions of air pollutants of all kinds. The 2 degree warming will affect regions of severe poverty the most as outlined in the IPCC report. Earthquakes, however can end whole civilizations far faster than the current trend of global warming can… I suggest to study the history of earthquakes and infectious disease epidemics. No doubt warming has, does and will encourage the incubation of some pathogens, but your view and resulting comparison of global warming due to man made means and natural disasters is a little misguided albeit sincere.

I leave you with this: http://earthquake.usgs.gov/earthquakes/states/us_deaths.php

Do not forget to look up the flu epidemics as well:)

FurryCatHerder says: 26 January 2010 at 12:54 PM

Just to clarify, FCH, are you looking at the sample size (sorry!) as a public perception issue?

Hank Roberts, my apologies and retraction about the steam boiler statement. I meant to say 1950’s not 1800’s; I was looking at several different related references. Matter of fact the boilers usually only reached 90% efficiency in the 1950’s. The supercritical boiler does get to 95% efficiency. Some modification can get a steam boiler to 95%, but that takes some work.

#622, “The only issue is whether there is sampling issue with the current CLIMAT network, but the match to the satellite data and the reanalysis products indicates that there isn’t. – gavin”

This is where laypeople have difficulties understanding science — sampling and probability distributions.

Even I (who teach the basics of that) am somewhat amazed how scientists can take a sample — a fairly small proportion of the total population of cases — and make fairly accurate inferences about the population, specifying the 95% confidence intervals, etc.

But I guess if people are going to question the science, then they really should read that chapter in a stat book.

I had a distant relative in the EPA, who had to decide whether old industrial sites around Chicago were contaminated. They only took a few random samples, and were able to make that decision.

Then there is calling 2000 people or even 1000 people to see who’s ahead in the national polls.

Hope this helps people who have a hard time understanding and complain that there are not enough weather stations. Read the basics of probability and statistics first, then understanding will begin to dawn.
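As a rough illustration of the polling point above, the textbook margin of error for a sample proportion can be computed in a few lines. This is a minimal sketch that assumes a simple random sample from a large population and the normal approximation; the function name `margin_of_error` is made up for this example, and it models an opinion poll, not the weather station network.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Half-width of an approximate 95% confidence interval for a proportion.

    Assumes simple random sampling and the normal approximation;
    proportion=0.5 is the worst case (widest interval).
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


# Sample sizes taken from the comment above: 1000 and 2000 respondents.
for n in (1000, 2000):
    print(n, "respondents: +/-", round(100 * margin_of_error(n), 1), "points")
```

Under these assumptions the interval is roughly 3 percentage points either way for 1000 respondents and roughly 2 for 2000, and the formula does not depend on the size of the population being sampled.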

Question of the Week: How many red herrings can denialists come up with in a week? (Hint: it’s a rhetorical question)

Richard Steckis (605) — Not by the knowledgeable. And your comment does seem to be somewhat changing the subject just to score a point. Not the purpose of serious scientific discussion, is it?

610 Completely Fed Up says:

26 January 2010 at 9:16 AM

How many earthquakes have reduced US wheat production? None. Warming climates have.

Really?

From U.S. Wheat Associates, 2009: http://www.uswheat.org/uswPublic2009.nsf/index?OpenPage

“USDA’s Annual Small Grains Summary released on Wednesday, Sept. 30, reports that total 2009 U.S. wheat production is 60.4 MMT, an increase of two percent from USDA’s previous estimate. The summary indicated record spring wheat yields (45.0 bushels per acre) and barley yields (72.8 bushels per acre), along with increased durum production.

The past two years have been the most prolific for global wheat production. As a result, supplies are abundant and more wheat is being stored. USDA’s quarterly Grain Stocks Report, also released Wednesday, revealed U.S. wheat stocks are at their highest level since 2000. The report estimates wheat stored in all positions as of Sept. 1, 2009 is 60.3 million metric tons (MMT), up 19 percent from this time last year and 21 percent higher than the five-year average of 50.0 MMT. USDA’s estimates exceeded trade expectations of 58.1 MMT. The indicated disappearance for June – August 2009 is down significantly (30 percent) from last year because of decreased exports compared to last year’s breakneck pace. First quarter exports for MY 2009/10 were down 42 percent from last year.

In addition to higher wheat stocks, USDA reported increased stocks for corn, sorghum, barley, and sunflower, which are competing with wheat for storage space. Kansas, the largest producer of hard red winter wheat, is facing its largest grain stocks since 2000. This has put significant pressure on local cash prices.”

Just a couple more sources on global wheat production:

http://westernfarmpress.com/mag/farming_world_wheat_production_3/

In 2007, Dr. Pachauri stated that climate change was affecting wheat production in India:

http://www.monstersandcritics.com/news/india/news/article_1376486.php/Climate_change_hurting_wheat_production_in_India_Pachauri

“Agriculture productivity, particularly of wheat, has shown signs of going down as a result of the climate change.”

So how has wheat production fared in India since then?

http://www.thaindian.com/newsportal/india-news/indias-wheat-production-estimated-to-surpass-record-78-million-tonnes_100171249.html

“New Delhi, Mar 25 [2009](ANI): The country is going to witness record production of wheat consecutively for the second year with output estimated to surpass 78 million tonnes.

Last year, 78.57 million tonnes of wheat was produced, which was the highest ever in the history of India.”

> My larger point was that there is literally nothing that isn’t being fought over like a scrap of meat on a lifeboat.

Walt Bennet: I believe that most of us lurkers and rare posters that have been here since before you “graduated” and are still here, learn to step over the trolls (it’s not easy when one is Completely Fed Up with folks like Mark).

I’m not ready to graduate as I am still learning.

I am reading Schmidt et al (2006) with particular reference to the clouds on ModelE. I’m afraid this will probably mean I will have completely weird questions to ask later.

But on first read, one thing struck me: you say (p172):

The planetary albedo is indeed reasonable, according to the provided figure. But I noticed that total cloud cover is particularly higher than expected in the polar regions. I just can’t reconcile that with “systematically too low”.

Snow and cloud have almost identical (and hugely overlapping) albedos. This means that the albedo is useless as a diagnostic in precisely the area that seems to have a problem.

I feel I am missing something here.

[Response: In the global mean, the optical thickness was high. Cloud cover estimates in the poles are particularly difficult, and so these are not a strong constraint on the models. – gavin]

http://books.google.com/books?hl=en&lr=&id=Qd9b8taIAqgC&oi=fnd&pg=PR9&dq=global+warming+is+a+hoax&ots=ZxIp7MFjtB&sig=rFYg9BX6ANluAN23TLNl6gb4NXw#v=onepage&q=global%20warming%20is%20a%20hoax&f=false

Re Inline response to 617:

The GISS webpage and Hansen’s publications explicitly state that GISS starts with the GHCN raw, not the GHCN homogenised. Has something changed of late?

RE responses to my post 574

The moderator’s response seems to promote an inductive over a deductive approach. I would say, as an empiricist, that if the empirical data does not align with the modelling, then the hypothesis that the modelling is wrong needs to be taken seriously.

Re 586: I reported modified R squared, which adjusts for the additional parameter. (One extra parameter out of three doesn’t increase the fit by two; I suspect what you are trying to say is that one can always get a perfect fit by using an n-1 polynomial to fit n data points.)

Re 598: all joint tests are significant at the 95% level. The CO2^2 term is just a trend variable; effectively I am hypothesising that the rate of temperature increase varies with CO2 concentration, and this appears to be a reasonable empirical conclusion from the data.
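The adjustment for extra parameters mentioned in the reply to 586 is usually computed as adjusted R squared. The sketch below uses the standard formula; the R squared values and sample size are invented for illustration and are not taken from the commenter's regression.

```python
def adjusted_r_squared(r_squared, n_obs, n_predictors):
    """Standard adjusted R^2: penalises each additional predictor.

    n_predictors excludes the intercept.
    """
    dof = n_obs - n_predictors - 1
    if dof <= 0:
        raise ValueError("need more observations than fitted parameters")
    return 1 - (1 - r_squared) * (n_obs - 1) / dof


# Hypothetical numbers: adding a third predictor nudges raw R^2 upward,
# but not by enough to offset the lost degree of freedom.
two_predictors = adjusted_r_squared(0.800, n_obs=30, n_predictors=2)
three_predictors = adjusted_r_squared(0.805, n_obs=30, n_predictors=3)
print(two_predictors, three_predictors)
```

With these made-up inputs the adjusted value falls when the third predictor is added, even though the raw R squared rises slightly, which is the situation the adjustment is meant to flag.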

John Storer,

What I am saying is that if the goodness of fit (Likelihood) doesn’t improve exponentially in the number of parameters, then the additional parameters represent an overfitting of the data. The Akaike Information Criterion (AIC) is one way to deal with this. It ensures that the model used has the best predictive power rather than merely giving the best fit to the data. See here:

http://en.wikipedia.org/wiki/Akaike_information_criterion
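To make the "exponentially" remark concrete: AIC is defined as 2k minus twice the maximised log-likelihood, where k is the number of fitted parameters. An extra parameter therefore lowers AIC only if it raises the log-likelihood by more than 1, that is, if it multiplies the likelihood by more than e. The sketch below shows that arithmetic; the log-likelihood values are invented and do not come from any model discussed in this thread.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion: lower is better."""
    return 2 * n_params - 2 * log_likelihood


def extra_params_justified(loglik_small, k_small, loglik_big, k_big):
    """True if the larger model has the lower AIC."""
    return aic(loglik_big, k_big) < aic(loglik_small, k_small)


# Hypothetical three-parameter baseline.
base_loglik, base_k = -100.0, 3

# A fourth parameter that gains only 0.5 in log-likelihood is not justified...
print(extra_params_justified(base_loglik, base_k, -99.5, 4))
# ...while one that gains 2.0 is.
print(extra_params_justified(base_loglik, base_k, -98.0, 4))
```

AIC is only one of several such criteria, and a lower AIC for one model says nothing about whether either model is physically sensible.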

Don Shor, First, your harvest numbers are not particularly informative because 1) what matters is yield and 2) 1-2 years is not a trend. Finally, get back to us in about 20 years on wheat production.

This website reminds me of that old game we played as kids, with a twist. The king of the hill (Gavin) has a whole army motivated to keep him there. Any time someone makes a comment that is even the least bit detrimental to the AGW cause, the troops immediately attack and put it under. When one does finally make it through, Gavin heroically puts it down with malice.

What a game!

ps re 586 AIC is lower for the four parameter model

I’ve looked at the problem of the GISS/HadCRUT divergence more closely, and I’ve come to the conclusion that the divergence is due to the way that changes in sea ice affect the readings of the coastal thermometers. I give a complete explanation here:

#629 Don, that is what’s expected with CO2 and GW — increasing crop production in the mid and northern latitudes, due to longer growing seasons and CO2 fertilization, up to about 2050, after which there is expected to be a sharp decline due to effects of GW.

See: Schlenker, W., and M. Roberts. 2009. “Nonlinear Temperature Effects Indicate Severe Damages to U.S. Crop Yields under Climate Change.” Proceedings of the National Academy of Sciences. 106.37: 15594-15598. Online at: http://www.scribd.com/doc/22765244/Nonlinear-Temperature-Effects-Indicate-Severe-Damages-to-U-S-Crop-Yields-Under-Climate-Change

RE #630, the news item re increased wheat crops in India was dated Mar 2009, so it might be a good idea to see if the devastating floods in several states in India this past Sept/Oct 2009 (probably enhanced by GW) reduced their wheat crops. The floods were more to the South and wheat is grown mainly in the North, but I know the floods caused huge crop losses.

See: NDTV. 2009. “India: Prices set to soar as crucial crops are lost in floods.” Oct. 7. http://www.ndtv.com/news/india/prices_set_to_soar_as_crucial_crops_are_lost_in_floods.php

“This website reminds me of that old game we played as kids, with a twist.”

All this proves is that you didn’t grow up.

Sometimes people are wrong.

Ever consider that?

Or is anyone saying AGW is wrong automatically right?

“I would say, as an empiricist, that if the empirical data does not align with the modelling, then the hypothesis that the modelling is wrong needs to be taken seriously.”

And the data aligns with the modeling.

You’ve not been reading much, have you?

There’s absolutely NO model that works if you don’t have AGW science and the role of anthropogenic CO2 in there.

But denialists will not let the idea go that such CO2 has no role.

You’re pointing that accusation over to the wrong place.

Point it over to Watts where his “model” that the UHI is making a warming trend appear doesn’t fit the data retrieved:

http://www.skepticalscience.com/On-the-reliability-of-the-US-Surface-Temperature-Record.html

“629

Don Shor says:

26 January 2010 at 3:38 PM

610 Completely Fed Up says:

26 January 2010 at 9:16 AM

How many earthquakes have reduced US wheat production? None. Warming climates have.

Really?”

Really.

Why else would you then quote a report that doesn’t mention earthquake disruption of wheat production and DOES mention how climate has?

Jacob says: “You have not made your case.”

Except even Don Shor has quoted a report that shows the case has been made and has much greater provenance than earthquakes being worse.

637 Ray Ladbury says:

26 January 2010 at 7:59 PM

Don Shor, First, your harvest numbers are not particularly informative because 1) what matters is yield and 2) 1-2 years is not a trend. Finally, get back to us in about 20 years on wheat production.

First of all, I was replying to CFU’s statement that climate change has already had an impact on wheat production. It hasn’t.

Second, the FAO has lots of data on world agriculture.

Total value ($) of world food production has increased (by amounts ranging from 2.4% to 2.1%) between 1993 and 2007.

Total world exports of wheat in tons, after dropping from 1992 – 1995, has increased steadily through 2007. The world’s total production of wheat in tons also has increased steadily through 2007.

Total arable land has increased somewhat. Arable land has increased in developing countries, and decreased in some developed countries (notably Europe). Forest cover has decreased.

You can fuss with these numbers. But they illustrate that there is no reasonable way to claim any linkage yet between existing climate change and agricultural yields. CFU did exactly that, with no statistical evidence. I am unaware of any basis for Dr. Pachauri’s statement with respect to productivity.

Shorter Toledo Tim@638

Waaaaaah! You meanies keep using evidence and logical argument! ‘Snot fair!

641 Lynn: #629 Don, that is what’s expected with CO2 and GW — increasing crop production in the mid and northern latitudes, due to longer growing seasons and CO2 fertilization, up to about 2050, after which there is expected to be a sharp decline due to effects of GW.

Thanks for the link; that is an interesting analysis.

As it says clearly on page 4, there are many caveats. “The simplest form of adaptation would be to change the locations or seasons where and when the crops are grown.” In other words, farmers aren’t stupid. Whether or not they will invest in irrigation supplies or more expensive varieties that tolerate heat will depend on crop prices and yields. “Greater precipitation partially mitigate damages….”

I live in an area where all agriculture is irrigated. Studies of agricultural impacts of climate change tend not to factor changes in crop practices and the willingness of farmers to invest to get higher yields. Most that I have read appear to be detailed statistical analyses based on the continuation of current cropping patterns. But farmers aren’t going to sit by and wring their hands while yields decline. Agribusiness will develop more heat-tolerant varieties, and growers will choose other things to grow.

It is common here in northern California for orchards to be top-worked to change the variety, or to be completely replanted, if prices are changing. Taking out pears and prunes, putting in walnuts or almonds; that is an investment that takes 3 – 5 years or more to pay off. Cropping patterns of annuals have changed markedly over the years. Farmers here choose between canning tomatoes, sunflowers, safflower or corn, or a couple of years of alfalfa. Or they decide to put some percentage of their acreage into tree crops. I realize that midwestern growers have fewer options.

Chilling and heating hours, availability of irrigation water, cost of fertilizers, and world market trends are all factors in the decision-making process. In the case of corn in the midwest, I’d imagine that the long-term viability of the ethanol market and the status of government price supports and tax credits are probably going to have big impacts. Climate change is just another factor in all of that, affecting some of those variables as well as perhaps some of the crops directly. I am very skeptical about the dire predictions about agriculture in developed countries due to AGW. But increased agronomic aid to developing countries will be crucial to maintaining the world food supply.

SJ: if you are looking for a trend change you must look at a reasonably short interval, mustn’t you? Because trend changes are what we are interested in if we want to know if global warming is still going on, aren’t they?

BPL: Will you for God’s sake CRACK A BOOK? I mean a book on statistics, preferably time-series analysis. NO, you do not want “a reasonably short interval” to find a trend change. The shorter your interval, the more likely the “trend change” isn’t a trend change at all.
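The point about short intervals can be put in numbers. For an ordinary least-squares trend fitted to evenly spaced annual values with independent noise, the standard error of the slope is the noise standard deviation divided by the square root of the summed squared time offsets. The sketch below is an idealised illustration, not an analysis of any temperature record: the noise level of 0.1 °C is an assumed value, and real temperature series are autocorrelated, which makes short-window trends even less certain than this suggests.

```python
import math


def slope_standard_error(n_years, noise_sd):
    """Standard error of an OLS slope over n evenly spaced annual points.

    Assumes independent, identically distributed noise with the given
    standard deviation (an idealisation).
    """
    t_mean = (n_years - 1) / 2
    sum_sq = sum((t - t_mean) ** 2 for t in range(n_years))
    return noise_sd / math.sqrt(sum_sq)


NOISE_SD = 0.1  # assumed year-to-year scatter in deg C, for illustration only

for window in (10, 30):
    se = slope_standard_error(window, NOISE_SD)
    print(window, "years: slope uncertainty of about", round(se, 4), "deg C per year")
```

Under these assumptions the slope uncertainty over 10 years is more than five times that over 30 years, so an apparent change in trend over a short window is much more likely to be noise.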