Kevin Wood, Joint Institute for the Study of the Atmosphere and Ocean, University of Washington

Eric Steig, Department of Earth and Space Sciences, University of Washington

In the wake of the CRU e-mail hack, the suggestion that scientists have been hiding the raw meteorological data that underpin global temperature records has appeared in the media. For example, New York Times science writer John Tierney wrote, “It is not unreasonable to give outsiders a look at the historical readings and the adjustments made by experts… Trying to prevent skeptics from seeing the raw data was always a questionable strategy, scientifically.”

The implication is that something secretive and possibly nefarious has been afoot in the way data have been handled, and that the validity of key data products (especially those produced by CRU) is suspect on these grounds. This is simply not the case.

It may come as a surprise to some that the first compilation of world-wide meteorological data was published by the Smithsonian Institution in 1927, long before anthropogenic climate change emerged as an important issue (Clayton et al., 1927). This volume is still widely available on the library shelf as are updates that were issued periodically. This same data collection provided the foundation for the World Monthly Surface Station Climatology, 1738-cont. As has been the case for many years, any interested party can access this from UCAR (http://dss.ucar.edu/datasets/ds570) and other electronic data archives.

Now, it is well known that these data are not perfect. Most records are not as complete as could be wished. Errors periodically creep in and have to be identified and weeded out. But beyond the simple errors of the key-entry type there are inevitably discontinuities or inhomogeneities introduced into the records due to changes in observing practices, station environment, or other non-meteorological factors. It is very unlikely there is any historical record in existence unaffected by this issue.
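Simple key-entry errors, such as a misplaced decimal point, are the easiest problem to catch. The sketch below is a minimal illustration of one common screening check, not CRU's actual quality-control procedure. It flags values far from the series median using a robust "modified z-score" based on the median and the median absolute deviation (MAD), so that a single bad value cannot inflate the spread it is judged against. The function name, the 3.5 cutoff and the sample numbers are illustrative assumptions.

```python
from statistics import median


def flag_outliers(values, cutoff=3.5):
    """Return indices of suspect values in one calendar month's series
    (e.g. all Januaries at one station), using the modified z-score
    0.6745 * (x - median) / MAD. None marks a missing value.
    The 3.5 cutoff is a conventional choice, assumed here for illustration."""
    present = [v for v in values if v is not None]
    if len(present) < 3:
        return []  # too few values to judge anything
    med = median(present)
    mad = median(abs(v - med) for v in present)
    if mad == 0:
        return []  # no spread to measure deviations against
    return [i for i, v in enumerate(values)
            if v is not None and abs(0.6745 * (v - med) / mad) > cutoff]


# Hypothetical January means (°C); 21.0 looks like a mistyped value.
januaries = [-1.2, -0.8, -1.5, -0.9, -1.1, 21.0, -1.3, -1.0, -0.7, -1.4]
print(flag_outliers(januaries))  # prints [5]
```

A flagged value would still need a human look (or a comparison with neighbouring stations) before being removed.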

Filtering inhomogeneities out of meteorological data is a complicated procedure. Coherent surface air temperature (SAT) datasets like those produced by CRU also require a procedure for combining different (but relatively nearby) record fragments. However, the methods used to undertake these unavoidable tasks are not secret: they have been described in an extensive literature over many decades (e.g. Conrad, 1944; Jones and Moberg, 2003; Peterson et al., 1998, and references therein). Discontinuities may nevertheless persist in data products, but when they are found they are published (e.g. Thompson et al., 2008).
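Many of the homogenization methods in this literature are relative: a candidate station is compared with a reference series built from nearby stations, so that shared climate variability cancels and a non-climatic jump (a station move, say) stands out in the difference series. The sketch below is a deliberately simplified illustration of that idea, not CRU's procedure. It searches for a single step change by scanning every possible breakpoint and keeping the one with the largest two-sample t statistic. The function name, the `min_seg` parameter and the synthetic data are assumptions; operational methods also handle multiple breaks, significance thresholds and station metadata.

```python
import math
from statistics import mean, variance


def best_breakpoint(candidate, reference, min_seg=5):
    """Scan the candidate-minus-reference difference series for the single
    step change with the largest two-sample t statistic.
    Returns (index where the second segment starts, t statistic),
    or (None, 0.0) if no breakpoint could be scored."""
    diff = [c - r for c, r in zip(candidate, reference)]
    n = len(diff)
    best_k, best_t = None, 0.0
    for k in range(min_seg, n - min_seg + 1):
        before, after = diff[:k], diff[k:]
        # Pooled variance of the two segments.
        pooled = ((len(before) - 1) * variance(before)
                  + (len(after) - 1) * variance(after)) / (n - 2)
        if pooled == 0:
            continue
        t = abs(mean(after) - mean(before)) / math.sqrt(
            pooled * (1 / len(before) + 1 / len(after)))
        if t > best_t:
            best_k, best_t = k, t
    return best_k, best_t


# Synthetic example: 40 years, the candidate jumps by +0.8 °C from year 20 on.
reference = [10.0 + 0.01 * i for i in range(40)]
noise = [0.1, -0.1, 0.05, -0.05] * 10
candidate = [r + e + (0.8 if i >= 20 else 0.0)
             for i, (r, e) in enumerate(zip(reference, noise))]
print(best_breakpoint(candidate, reference)[0])  # prints 20
```

Because the shared trend in the reference cancels in the difference series, only the artificial jump remains to be found.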

Furthermore, it is a fairly simple exercise to extract the grid-box temperatures from a CRU dataset (CRUTEM3v, for example) and compare them with raw data from the World Monthly Surface Station Climatology. CRU data are available from http://www.cru.uea.ac.uk/cru/data/temperature. One should not expect a perfect match, because of the issues described above, but an exercise like this does provide a simple way to evaluate how well the CRU data represent the underlying raw data. In particular, it would presumably be of interest to know whether the trends in the CRU data are very different from the trends in the raw data, since that could be taken as an indication that the methods used by CRU overstate the evidence for global warming.
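The comparison itself needs only two steps: convert each series to anomalies relative to the 1961–1990 reference period, then fit a straight-line trend. Below is a minimal sketch that assumes annual mean values have already been extracted (the real files are monthly, and reading the CRUTEM3v and station file formats is not shown). The function names and the synthetic numbers are hypothetical.

```python
from statistics import mean


def anomalies(years, temps, base=(1961, 1990)):
    """Subtract the reference-period mean; None marks a missing year."""
    ref = [t for y, t in zip(years, temps)
           if base[0] <= y <= base[1] and t is not None]
    climatology = mean(ref)  # raises StatisticsError if the base period is empty
    return [None if t is None else t - climatology for t in temps]


def trend_per_century(years, values):
    """Ordinary least-squares slope, skipping missing values, in units per century."""
    pts = [(y, v) for y, v in zip(years, values) if v is not None]
    x_bar = mean(y for y, _ in pts)
    y_bar = mean(v for _, v in pts)
    sxy = sum((y - x_bar) * (v - y_bar) for y, v in pts)
    sxx = sum((y - x_bar) ** 2 for y, _ in pts)
    return 100.0 * sxy / sxx


# Hypothetical station (absolute °C) and grid-box (already anomalies) series.
years = list(range(1901, 2006))
station = [8.0 + 0.006 * (y - 1901) for y in years]
grid_box = [0.005 * (y - 1961) for y in years]
print(round(trend_per_century(years, anomalies(years, station)), 3))   # prints 0.6
print(round(trend_per_century(years, anomalies(years, grid_box)), 3))  # prints 0.5
```

Converting to anomalies first puts a station's absolute temperatures and a grid box's anomalies on a common footing; it does not change either trend.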

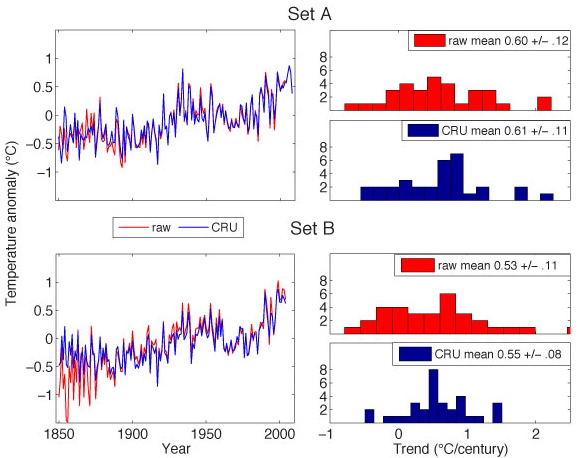

As an example, we extracted a sample of raw land-surface station data and corresponding CRU data. These were arbitrarily selected based on the following criteria: the length of record should be ~100 years or longer, and the standard reference period 1961–1990 (used to calculate SAT anomalies) must contain no more than 4 missing values. We also selected stations spread as widely as possible over the globe. We randomly chose 94 out of a possible 318 long records. Of these, 65 were sufficiently complete during the reference period to include in the analysis. These were split into two groups of 33 and 32 stations (Set A and Set B), which were then analyzed separately.
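The selection steps described above can be written down in a few lines. This is a sketch under stated assumptions, not the authors' script. Each station is assumed to be a dict mapping year to a list of 12 monthly values (None where missing). "~100 years" is taken as a hard 100-year minimum, and missing values are counted as months. The random split into Set A and Set B is our guess at how the two groups were formed, and the "spread as widely as possible over the globe" criterion is not modeled.

```python
import random


def record_length(station):
    """Span of the record in years, from first to last year present."""
    return max(station) - min(station) + 1


def missing_in_base(station, base=(1961, 1990)):
    """Count missing monthly values in the reference period."""
    missing = 0
    for year in range(base[0], base[1] + 1):
        months = station.get(year)
        if months is None:
            missing += 12  # the whole year is absent
        else:
            missing += sum(1 for m in months if m is None)
    return missing


def select_and_split(stations, n_sample=94, min_years=100, max_missing=4, seed=0):
    """Long records -> random sample -> completeness filter -> two sets."""
    rng = random.Random(seed)
    long_ids = sorted(sid for sid, st in stations.items()
                      if record_length(st) >= min_years)
    sample = rng.sample(long_ids, min(n_sample, len(long_ids)))
    usable = [sid for sid in sample if missing_in_base(stations[sid]) <= max_missing]
    rng.shuffle(usable)
    half = (len(usable) + 1) // 2  # e.g. 65 usable records -> 33 and 32
    return usable[:half], usable[half:]


# Hypothetical inventory: six long records (two with 5 missing months) and three short ones.
stations = {f"S{i}": {y: [1.0] * 12 for y in range(1890, 2006)} for i in range(6)}
stations.update({f"T{i}": {y: [1.0] * 12 for y in range(1950, 2006)} for i in range(3)})
for sid in ("S0", "S1"):
    stations[sid][1970] = [None] * 5 + [1.0] * 7
set_a, set_b = select_and_split(stations)
print(len(set_a), len(set_b))  # prints 2 2
```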

Results are shown in the following figures. The key points: both Set A and Set B indicate warming with trends that are statistically identical between the CRU data and the raw data (>99% confidence); the histograms show that CRU quality control has, as expected, narrowed the variance (both extreme positive and negative values removed).

Comparison of CRUTEM3v data with raw station data taken from the World Monthly Surface Station Climatology. On the left are the mean temperature anomalies from each pair of randomly chosen time series. On the right is the distribution of trends in those time series, with their means and standard errors. (The standard error provides an estimate of how well the sampling of ~30 stations represents the full global data set, assuming a Gaussian distribution.) Note that not all the trends are for identical time periods, since not all data sets are the same length.
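The mean-and-standard-error summary in the figure is easy to reproduce. Below is a minimal sketch that assumes one trend value per station for each data source. It reports each mean with its standard error (sample standard deviation divided by √n) and the ratio of the difference in means to its combined standard error. Treating the two sets of trends as independent samples is our simplification; the post does not state which test was used, and the numbers below are made up.

```python
import math
from statistics import mean, stdev


def mean_and_se(trends):
    """Mean trend and its standard error, stdev / sqrt(n)."""
    return mean(trends), stdev(trends) / math.sqrt(len(trends))


def compare_means(raw_trends, cru_trends):
    """Difference in mean trend, its standard error, and their ratio,
    treating the two sets of trends as independent samples."""
    m_raw, se_raw = mean_and_se(raw_trends)
    m_cru, se_cru = mean_and_se(cru_trends)
    diff = m_raw - m_cru
    se_diff = math.sqrt(se_raw ** 2 + se_cru ** 2)
    return diff, se_diff, diff / se_diff


# Made-up trends in °C per century for five stations and their grid boxes.
raw = [0.4, 0.6, 0.5, 0.7, 0.3]
cru = [0.45, 0.55, 0.5, 0.65, 0.35]
diff, se_diff, ratio = compare_means(raw, cru)
print(f"difference = {diff:.3f} ± {se_diff:.3f} (ratio {ratio:.2f})")
```

Under a normal approximation, a ratio well below about 2 in magnitude means the two mean trends cannot be distinguished at roughly the 95% level.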

Conclusion: There is no indication whatsoever of any problem with the CRU data. An independent study (by a molecular biologist in Italy, as it happens) came to the same conclusion using a somewhat different analysis. None of this should come as any surprise, of course, since any serious errors would have been found and published already.

It’s worth noting that the global average trend obtained by CRU for 1850-2005, as reported by the IPCC (http://www.ipcc.ch/pdf/assessment-report/ar4/wg1/ar4-wg1-chapter3.pdf), 0.54 degrees/century (corrected from 0.47),* is actually a bit lower (though not by a statistically significant amount) than what we obtained on average from our random sampling of stations.

*See table 3.2 in IPCC WG1 report.

References

Clayton, H. H., F. M. Exner, G. T. Walker, and C. G. Simpson (1927), World weather records, collected from official sources, in Smithsonian Miscellaneous Collections, Smithsonian Institution, Washington, D.C.

Conrad, V. (1944), Methods in Climatology, 2nd ed., 228 pp., Harvard University Press, Cambridge.

Jones, P. D., and A. Moberg (2003), Hemispheric and large-scale surface air temperature variations: An extensive revision and an update to 2001, Journal of Climate, 16, 206-223.

Peterson, T. C., et al. (1998), Homogeneity adjustments of in-situ atmospheric climate data: a review, International Journal of Climatology, 18, 1493-1517.

Thompson, D. W. J., J. J. Kennedy, J. M. Wallace, and P. D. Jones (2008), A large discontinuity in the mid-twentieth century in observed global-mean surface temperature, Nature, 453(7195), 646-649.

It would be fairly simple to compare the data if the CRU had not, inexplicably, killed the link to the data set.

Would the argument here be more compelling if the specific allegations of the deniers with respect to the New Zealand and Australian measurements (Darwin et al.) were explained?

[Response: The point is that individual stations are being cherry picked. An honest assessment would pick sites at random, as we have done. It is of course possible that some stations have problems that CRU didn’t catch. Picking on those isn’t objective.–eric]

Eric, I’m in the middle of writing a column for Examiner.com. (If you don’t know me, no worries, as I’m not a major player in the debate–but I am usually quite unsympathetic to ‘your side’ and have been very direct about it in the past.) If I include in my column that you and Real Climate say that the warming trend over the past 150 years is 0.47 degrees Celsius per century, my readers (well, at least those who comment regularly) will go berserk. I confess that this surprises me as well, given the regular predictions of much steeper rises. Before I spread the good news from Ghent to Aix, and my more skeptical commenters start singing ‘ding dong the witch is dead,’ could you maybe put this figure into context that would show why this rate of warming should not put the entire controversy to rest?

[Response: Tom: this is the *average* rate over the *entire* last 150 years. It’s taken directly from the IPCC report. To claim it means anything other than that would be totally dishonest of you. (Do you know what the word “exponential” means, by the way?)–eric]

Could you kindly indicate which 33 stations are in Set A and which 32 stations are in Set B? Many thanks!

[Response: I’ll add a link to the locations. The point, however, is that you can do this sort of experiment yourself. It’s simple, we’ve provided the links, and there is no “code” necessary except a pen and paper (or, if you like, Microsoft Excel).–eric]

This was very helpful. Thanks!

Nice to see a return to the science. And indeed a very interesting couple of graphs. The thing is, there are a lot of stations out there, and reports about Darwin, Nashville, New Zealand, Orland CA and Grand Canyon (although not CRU UEA stations) seem to make quite a compelling argument that sometimes the homogenization steps, unlike in your arbitrarily chosen sets here, turn flat raw data into warming adjusted data.

Now then, there are a lot of people interested in climate data, many of whom have a mathematics background. If people could collectively survey each and every dataset, raw versus homogenized, surely we could reach a point where the true condition of the full dataset could be assessed.

surfacestations managed this with actual station siting in the USA. Surely volunteers could be recruited, station data could be emailed out, and results calculated, graphed and sorted into UP / FLAT / DOWN for further research.

Just a thought.

KJ

[Response: Sure, but this would be repeating what CRU, GISS, etc. have been doing for years and years. If you want to reinvent the wheel, go ahead. Oh, while we’re at it, let’s redo the epidemiology on smoking and cancer. Until that’s done, let’s all take up smoking. After all, who can trust the corrupted peer-reviewed literature in leftist journals like the New England Journal of Medicine?–eric]

Is there an explanation for the difference in the set B data between 1850 and 1900?

Thanks, this is very helpful. I have not yet heard any skeptic claim that the contentious data prove that the warming described by the published temperature trend data has not occurred. Am I missing something, or should the burden of proof not at some point fall on the skeptics if there is a reasonable scientific basis for concern? Ethics would shift the burden of proof to those who want to continue potentially harmful behavior once it is established that there is reasonable scientific concern that harm could come to other people without their consent. It seems to me that this controversy is missing a crucial question: given what we know for sure, who should bear the burden of proof, since continuing potentially dangerous behavior has consequences?

Carbon monoxide gas in my home might be harmful, but that fact alone does not shift the burden from you to prove to me that it is there. ;)

Questions:

1. The CRU data is the simple average of the fully adjusted (?) data of the stations you are using in each of the two examples? [I assume your data is a simple average as well, but unadjusted in any way].

2. It’s interesting that the average GHCN adjustment over time from 1910 to the present shows a 0.25 °C warming, as Roman M and others have shown. I wonder why this doesn’t show up in this analysis. In other words, if it tracked GHCN, you would expect the CRU data to be about 0.25 °C above the unadjusted data for a simple average. Any thoughts? That is, your selected station samples show much less adjustment than an average sample from GHCN from 1910 to the present, for some reason…

Thanks for the analysis…..

So, am I correct in understanding that the raw samples provide an average temperature trend from 1850 to 2005 of 0.60 and 0.53 °C/century (±), whereas the CRU/IPCC reported figure was 0.45 °C/century?

At the crude newspaper level of sound-bites, that seems to me to be a useful counter to the ignorant charge that CRU have been over-egging the global warming pudding. Have I got that right?

[Response: Note my correction — the CRU number is actually 0.54. I was looking at the wrong part of the table in IPCC. Either way, there is no significant difference. It would not be correct to say that the CRU data have a smaller trend than the raw data, but it would be equally wrong to say CRU is bigger. And in general CRU gives smaller trends than other compilations.–eric]

If there is a bias suggested by your results, it is that the raw data shows an even colder past (SetB).

However Phil Jones’ recent presentation indicates that a global cooling trend in the raw data was turned into a warming trend by his adjustments.

Look at the homogenisation slide in:

http://www.cgd.ucar.edu/cas/symposium/061909presentations/Jones_Boulder_june2009.ppt

Maybe it is your selection of only the long records that has caused the problem?

One possible correction: the “0.47 C per century” trend for 1850-2005, I believe, applies to the northern hemisphere. Globally, it should be 0.42. This is from the decadal trends listed on page 248 of the IPCC chapter linked.

For Tom Fuller:

CRU decadal trends:

1850-2005: 0.042 per decade (0.42 per century)

1901-2005: 0.071 per decade

1979-2005: 0.163 per decade (NCDC and GISS are a bit higher)

[Response: That’s including oceans. We were looking at land-only data, and the number is 0.54.–eric]

Thank you for this analysis. It is well-explained and quite clear, and exactly what is needed right now.

Eric, you’re being too kind on those referring to ‘problems’ with measurements in e.g. New Zealand and Australia (Darwin in particular).

The New Zealand issue is based on “we don’t understand the procedures, and all references to the literature explaining the procedures will be duly neglected”. See for example:

http://hot-topic.co.nz/nz-temps-more-stations-no-adjustments-still-warming/

and references therein.

The ‘Darwin-issue’ is even worse: Willis Eschenbach, the one doing the analysis (which should be a big red flag already), indicates he knows corrections need to be made(!), decides not to make them because he can’t work out which ones or why… and then insinuates fraud because others *do* make corrections!

http://scienceblogs.com/deltoid/2009/12/willis_eschenbach_caught_lying.php

Are these raw data taken from the GHCN network?

If so, what adjustments have they undergone?

Would you agree that it’s necessary to have a good idea of what occurred prior to the 1850 start date?

For instance the Uppsala record http://www.kolumbus.fi/tilmari/gwuppsala.htm shows pronounced variations, with the rise from the LIA period showing periods of warming much greater than those seen currently – and with lower CO2 levels.

Do you also support the requests that the CRU provides the methodology behind its manipulation of raw (Or part-cooked already?) data?

I wonder how much of this will be cut?

Update on another dataset: NCDC global results for November are in.

Highlights:

Most categories come in at 5th-6th warmest ever;

Warmest Southern Hemisphere November & austral spring ever;

Warmest global UAH lower & mid-troposphere ever;

Very warm November for North America generally;

Central Asia was quite cold indeed;

And, yes, the stratosphere is still cooling, by the looks of it.

http://www.ncdc.noaa.gov/sotc/?report=global&year=2009&month=11&submitted=Get+Report

I’m signing off at this point.

Hopefully those with the energy to do so can correct the apparently good-faith misconceptions that get submitted. I strongly recommend ignoring the rest.

–eric

Gavin please, let’s not nit-pick, I am sorry I mixed up popular technology with PM. Here is the link again, with a link to the WT that pointed it out. Could you please link me to a similar list from your side, I want to put the two side by side and see what I can make out of them.

http://washingtontimes.com/news/2009/dec/11/the-tip-of-the-climategate-iceberg-55941015/

http://www.populartechnology.net/2009/10/peer-reviewed-papers-supporting.html

[Response: Look up the reference list for AR4. But you are completely wasting your time. Just look at the list you’ve posted – half are from an un-peer-reviewed journal (E&E) whose editor prints whatever she feels like. The others range from the absolutely kooky (G&T, Chilingar, Miskolczi) to the completely mainstream. There are a number of takedowns of the list around – read them first. – gavin]

How then did the administrators at RealClimate know that the 61-megabyte archive was the property of Phil Jones at the CRU, when RealClimate said in a statement on November 17, 2009: “. . . We were made aware of the existence of this archive last Tuesday morning when the hackers attempted to upload it to RealClimate, and we notified CRU of their possible security breach later that day . . .”?

RealClimate stated that “hackers ATTEMPTED to upload the file”. ‘Attempted’ in the English language is defined as: to make an effort to do, accomplish, solve, or effect without success? So…?

[Response: They uploaded the file and attempted to put out a post saying so. They did not succeed. – gavin]

#8 (David Wright) Are you saying that I have a responsibility to monitor the safety of your home? (Or RC does?) That’s news indeed–I would have thought the burden was all yours.

Say, who’s responsible for my place? It would be good to know!

Of course, we all live on this planet (don’t we?), so I suppose it’s our shared responsibility to ensure its safety either way.

Re: #12

Thanks. I saw the 0.047 per decade number in the IPCC table and made an assumption.

By AR4, do you mean the UN Assessment Report? Does that in fact represent the best climate science? Just checking, because I really want to get the best if I can. Thanks for the tip; I will be sure to exclude the E&E articles from my final report.

Simple, brilliant, and easily understood. A very nice piece of work.

A good companion piece would be NOAA’s analysis responding to criticism from Anthony Watts/Heartland Institute that most US weather stations were suboptimally sited. NOAA compared the trend from all 1200+ stations with that from the 70 that Watts et al. judged to be “good or best”, and showed that both sets of data gave essentially the same surface temperature trend. The .pdf of the NOAA analysis is here:

http://www.ncdc.noaa.gov/oa/about/response-v2.pdf

Adam Gallon (15)

“Would you agree that it’s necessary to have a good idea of what occurred prior to the 1850 start date?”

What happened prior to 1850 has absolutely nothing to do with the point of this post. This post demonstrates that the homogenization of the instrumental temperature data by CRU does NOT create any false warming trend when compared to the raw data. Eric & Kevin have now (not that anyone who follows the literature needed to be convinced) demonstrated that our surface temperature has indeed been warming.

Yggdrasil, the UN IPCC Fourth Assessment Report, aka AR4, was state of the art in 2007. Since then things have moved on a bit, and some of the authors produced an interim report titled Copenhagen Diagnosis which updates the science.

You can get IPCC AR4 here: http://www.ipcc.ch/

You can get the Copenhagen Diagnosis here: http://www.copenhagendiagnosis.com/

Oh and: good post Kevin/Eric, I’ve been looking for exactly this analysis. :)

As an aside, the Italian biologist was looking at GHCN homogenisations for the NCDC, not CRU. So his analysis is giving context to the Darwin hubbub, rather than any CRU controversy. That said, I haven’t read Jones’ papers; perhaps CRU uses the GHCN adjustments? I don’t know.

I would be interested to hear your thoughts on this comparison of your graph of a representative sample of HADCRU3 stations, to the graph presented in IPCC CH9 pg 182:

flickr comparison

IPCC certainly shows what looks like an “exponential” curve, but the HADCRU3 data you pulled does not.

A couple of other basic questions:

Is the HADCRU3 data adjusted for urban heat?

Can we get to the HADCRU3 data anywhere to do this exact same analysis against data for the last 40 years only?

Thank you.

Since reconstructing the palaeoclimatic record is a two-pipe problem par excellence, it’s inspiring to see Eric at last showing a little backbone in solidarity with the poor SOBs who brave Siberian mosquitoes, avalanching tropical bergschrunds, rabid bats and ravenous polar bears en route to collecting raw physical data.

Not to mention being confronted by raving Fox reporters on their return.

I suggest Eric commence with Arrhenius’s old favorite, the cigars of the Copenhagen firm of E. Nobel.

Mesa #9:

As I understand it, the CRU data is the average of the grid boxes corresponding to the members of either set A or set B that are being compared to. And yes, these grid box values were produced by gridding (i.e., re-sampling to grid nodes using some suitable technique) from the fully adjusted global set of station data. And the average of the “raw” station data is indeed just that.
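Finding the grid box that corresponds to each station is the only geographic step in this comparison. Below is a minimal sketch, assuming the 5° × 5° latitude-longitude boxes used by CRUTEM3. It returns the box edges rather than an array index, because the row and column ordering depends on how the gridded file is read. The function name is hypothetical.

```python
import math


def grid_box(lat, lon, size=5.0):
    """Edges (south, north, west, east) of the size-degree box holding a point.
    Longitudes are wrapped into [-180, 180); a point exactly at 90°N is put
    in the northernmost row."""
    if not -90.0 <= lat <= 90.0:
        raise ValueError("latitude must be within [-90, 90]")
    lon = (lon + 180.0) % 360.0 - 180.0
    south = min(math.floor(lat / size) * size, 90.0 - size)
    west = math.floor(lon / size) * size
    return south, south + size, west, west + size


print(grid_box(47.6, -122.3))  # Seattle: prints (45.0, 50.0, -125.0, -120.0)
```

The "CRU" series for a set is then just the average, year by year, of the grid-box anomalies found this way for its member stations.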

BTW I’m a bit surprised that you didn’t plot a histogram, for sets A and B, of the differences between each raw station trend and its corresponding CRU grid box trend… much narrower and more centred on zero, and a better metric for, well, how little damage the reductions could potentially do if they were indeed somehow all wrong. Isn’t that close to what ‘gg’ is doing?
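The paired comparison suggested in this comment takes only a few lines. Below is a sketch that assumes one raw-station trend and one matching grid-box trend per station, listed in the same order. The bin width and the made-up numbers are illustrative.

```python
import math
from collections import Counter
from statistics import mean, stdev


def paired_differences(raw_trends, grid_trends):
    """Raw station trend minus its own grid-box trend, station by station."""
    if len(raw_trends) != len(grid_trends):
        raise ValueError("need exactly one grid-box trend per station")
    return [r - g for r, g in zip(raw_trends, grid_trends)]


def text_histogram(values, width=0.1):
    """One line per occupied bin of the given width, lowest bin first."""
    counts = Counter(math.floor(v / width) for v in values)
    return [f"{b * width:+.2f} to {(b + 1) * width:+.2f}: {'#' * counts[b]}"
            for b in sorted(counts)]


# Made-up trends (°C per century) for six stations and their grid boxes.
raw = [0.62, 0.48, 0.71, 0.35, 0.55, 0.60]
grid = [0.58, 0.50, 0.64, 0.41, 0.52, 0.57]
diffs = paired_differences(raw, grid)
print(f"mean difference {mean(diffs):+.3f}, standard error "
      f"{stdev(diffs) / math.sqrt(len(diffs)):.3f}")
for line in text_histogram(diffs):
    print(line)
```

Pairing removes the station-to-station spread in trends that the two side-by-side histograms in the post still contain, which is why the distribution of differences should be narrower.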

I am concerned “researcher bias” is starting to be a major factor on this website and on “skeptic” websites as well. So much of the analysis seems to be an “us vs them” affair that it is hard for me to avoid thinking about researcher bias.

By the way, I am not implying a bias in the research, but rather a bias in the decision on whether or not to pursue / publish the results. This article is a good example. Would it have been trumpeted if it had shown significant problems with the CRU dataset? The researcher decides whether or not to pursue a topic (this is equally true on the skeptic side btw), and the researcher decides if the research is ready for publication.

Having done research myself, I know it is all too easy to stop when you get an answer that validates your view, and to keep digging when something looks funny.

I’m not sure what to do, but the “us vs. them” mentality is troubling.

JSC

Somewhat off-topic, but topical:

I see John Tierney has an article and a post on his blog at the NY Times discussing McKitrick’s idea of linking a carbon tax to an atmospheric temperature measurement. He quotes Gavin and Eric in his post. There are several problems with a “McKitrick Temperature Tax”, but I am intrigued by a “McKitrick Global Heating Tax”. I put this comment on Tierney’s blog (version here corrected slightly for grammar):

I think the idea of having a carbon tax linked to a measurement of global warming is a terrific idea. If we can identify a metric that responds quickly to changes in the planetary energy budget, then this is the best way to reward efforts to reduce global heating. And when the planet stops heating, this system will reward the decision makers who forecast the leveling off of heat buildup on earth.

The problem is that McKitrick has proposed a rather esoteric measurement to tie the carbon tax to. He and the skeptics, and Gavin Schmidt, all seem to agree that atmospheric and surface temperature measurements suffer from a great deal of natural variability, or measurement issues depending on which camp you want to believe.

A second problem: increasing temperatures in the atmosphere lag increasing heat energy on earth by decades, and likely centuries. The oceans, land areas, glaciers and ice caps are acting as heat sinks that absorb over 95% of any energy imbalance for the planet. Until the ice melts off, and the oceans heat and stop absorbing the excess energy in the planetary budget, the atmospheric temperature won’t increase as rapidly as the heat is building up. And if we eliminated GHG emissions tomorrow, the atmospheric temperature could continue to rise for a century! Clearly we need a faster response mechanism.

What is needed is a metric that more directly measures the planetary energy imbalance, and approximates the heat buildup on our planet.

Fortunately, the leading scientists seem to agree that ocean heating is the key parameter, with ice melt an important contributor. Dr. Trenberth has published a series of papers showing the planetary energy imbalance, and both Dr. Hansen and the skeptic Dr. Roger Pielke Sr. agree that ocean heating is the key parameter.

So tie the carbon tax to sea level rise, which is a very robust and well-known measurement. Sea level rise is due primarily to thermal expansion of sea water and melting of land-based ice sheets and glaciers, and thus reflects over 92% of the planetary energy imbalance. SLR can be measured by satellites to within 3 mm, roughly the annual rise seen over the last 15-20 years. There is less variability than in atmospheric temperature measurements.

When the planet stops heating, as the skeptics claim is happening now, SLR will stop or slow considerably. The amount of heat being absorbed and ice melt associated with SLR at 3 mm per year is enormous compared to atmospheric air heating. If the skeptics are correct, the “McKitrick planetary heating tax” would fall to zero within several years, probably 5-10 years at most, and Dr. McKitrick will get the credit for the largest tax cut in history.

If temperatures were used instead of SLR, the McKitrick tax could continue to rise for over a century before responding to the lack of heating or cooling of the planet. Clearly SLR is a better metric on which to base a carbon tax.

I suggest you contact Gavin Schmidt again, and propose a carbon tax based on SLR, using this well-known measurement from the University of Colorado (the most recent academic home of the Pielkes).

http://sealevel.colorado.edu/current/sl_ib_ns_global.jpg

Kudos to Austin Vidich in a comment posted at midnight last night who suggested SLR, and to many of the posters who quickly identified the lag problem with atmospheric temperatures.

JSC, better to light a replicable candle than to curse the meta darkness. That’s the main point of the post, in my view.

Jsc (30): It is us, who know and use the scientific method, versus them, who don’t.

Paul Klemencic (31): Actually, the time to near equilibrium from a large forcing is more like a dozen centuries, not just one. Admittedly, the latter part of the change is rather small and slow.

Mark Lindeman – but what was replicated? Certainly not the graphs of instrumental temperature presented by the IPCC:

temperature comparison: IPCC vs. HADCRU3

Looks like the U.S. was able to “teleconnect” with the “real” global trend afterall. I’ve been waiting for an analysis like this for a long time, thanks! Where’s the blade?

Very elegant and very clear, guys and thank you for the link to my post (yes, I am an Italian who lives in Wisconsin actually, but I am on the job market so God knows where I am going to end up… If you can use sleep scientists at NASA, I’d run.)

I hope the reader will grasp immediately that the goal of these analyses is not so much to add pieces of scientific evidence to the discussion, because these tests are actually simple and nice but quite trivial. The goal is really to show the blogosphere what kind of analysis should be done to properly address this kind of issue, if one really wants to. I believe there is so much data out there on the internet that amateur climatologists in the blogosphere could really contribute to the debate if only they would put paranoia and ideology aside.

Four questions:

(a) Why not do the full set of unbroken records instead of an “arbitrary” sampling of only a third of them?

(b) Explain your selection of A vs. B series. Why break it into two? You claim both A and B have statistically identical trends vs. raw yet B clearly shows a difference whereas A does not.

(c) Please list the full range of data sets out there (USHCN, GHCN, GISS, etc.) for which raw/adjusted are available and explain which is associated with CRU and why. Which global average plots are associated with which raw data sets? I have not seen a simple illustration of such relationships and data links do not explain it in simple fashion.

(d) Take a look at my identically scaled overlay of what are claimed to be the adjustments to (1) GHCN (global) vs. (2) USHCN (United States) over time. Since GHCN is evidently associated with CRU then if GHCN is exonerated, what implication does this have for the far greater-value adjustments to USHCN?:

http://i46.tinypic.com/6pb0hi.jpg

JSC: If there were such significant problems with one of the datasets, such as CRU, somebody would have noticed by now. Do you think this is the first time somebody is bothering to test the methods?

Further, I’d say that we shouldn’t always expect raw data to match the processed data so well. After all, you wouldn’t bother doing the processing unless it made some improvement (though yes, we do see fewer outliers above, so even here we see improvement). So for some regions and individual data series, homogenisation would be important.

Jsc #30: I think you got this backwards. Kevin and Eric picked a piece of public-outreach low hanging fruit (You do agree public outreach by scientists is a good thing, don’t you?), using data files they had lying around and a few dozen lines of their favourite scripting language, in order to prove to the hilt something they already bloody well knew to be the case, as anyone familiar with the subject does. You call this ‘research’? Hey, they do this kind of thing with their left foot before breakfast ;-)

After reading some of the Harry_readme.txt comments, one wonders whether those who use CRU gridded data (e.g., TS 2.1) for precipitation-runoff modeling do so at their own risk.

#31 It would be a better idea to connect that carbon tax to the current ppm levels of CO2.

“Further, I’d say that we shouldn’t always expect raw data to match the processed data so well”

Why?

If you interpolate an image correctly (trilinear mapping, for example), you can average the Red, Green and Blue intensities over all pixels and find that you get (within the limits of IEEE floating-point accuracy) the same numbers after interpolation as before.

The point of such careful and complex interpolation (as opposed to the simple “nearest neighbour” method) is that there is NO CHANGE in the overall numbers.

If the CRU number is 0.54° of warming per century, and half of the warming is tied to things other than CO2 (namely black carbon, methane, land use changes, UHI effects, and various CFCs), then is carbon dioxide only responsible for 0.27°? It is really mind-boggling that the big bad bully of CO2 is only good for a quarter of a degree. Am I missing something here?

CO2 is only half of the constituents of the warming. We can’t blame all of that 0.54°/century on carbon dioxide. So half of 0.54° = 0.27° of warming due to CO2 over a century?

JSC, frankly, the likelihood that this analysis could have come out differently is basically nil, because there are multiple research groups analyzing such climate data, so there is no way that one group could be “cooking the books” without a discrepancy showing up. For that reason, an analysis like this is almost certainly unpublishable; it is hard to get a publication for belaboring the obvious. I don’t think the point of this post was to convince the deniers, anyway. Anybody who believes that CRU, GISS, etc. are all engaged in a grand conspiracy has doubtless already dismissed RealClimate as co-conspirators, so why would they believe the results of randomly sampling the raw data just because RealClimate says so?

The key point here is that the data is readily available for anybody who is genuinely interested in temperature trends or who is concerned about the possibility of temperature adjustments introducing bias, and it provides an example of how to go about it. This is not sophisticated science, just random sampling that anybody who has taken a basic statistics course would understand. The remarkable thing, really, is the apparent total lack of interest of climate science critics/auditors in doing this kind of basic analysis. One cannot help but suspect the motives of those who focus on criticisms of cherry-picked individual stations, or who insist that the validity of the enterprise cannot be evaluated without access to every scrap of data and code used by climate scientists for their own analyses, but who cannot be bothered to do this kind of analysis using unbiased sampling techniques. Or perhaps they have done it, but have chosen not to report it?

[Response: Well said. My emphasis added.–eric]
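The random-sampling check described in the comment above can be sketched in a few lines. This is a minimal Python illustration, not anyone's actual analysis: the station data are synthetic, and the data layout (a dict mapping a hypothetical station ID to lists of years, raw temperatures, and adjusted temperatures) and the helper names `ols_slope`, `sampled_trends`, and `make_station` are assumptions made for the example. It draws stations at random without replacement and compares the mean least-squares trend of the raw and adjusted series.

```python
import random
import statistics


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs (units of y per unit of x)."""
    mx = statistics.fmean(xs)
    my = statistics.fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def sampled_trends(stations, n, seed=0):
    """Randomly sample n station IDs (without replacement) and return the
    mean raw trend and mean adjusted trend, in degrees per century."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(stations), n)
    raw, adj = [], []
    for sid in chosen:
        years, raw_t, adj_t = stations[sid]
        raw.append(100 * ols_slope(years, raw_t))
        adj.append(100 * ols_slope(years, adj_t))
    return statistics.fmean(raw), statistics.fmean(adj)


def make_station(rng):
    """Hypothetical synthetic station: a 0.6 deg/century signal plus noise,
    with a random +/-0.1 deg step applied before 1950 in the adjusted copy."""
    years = list(range(1900, 2000))
    raw = [0.006 * (y - 1900) + rng.gauss(0, 0.5) for y in years]
    step = rng.choice([-0.1, 0.1])
    adj = [t + (step if y < 1950 else 0.0) for t, y in zip(raw, years)]
    return years, raw, adj


# Usage: build a synthetic pool of 200 stations and sample 50 of them.
rng = random.Random(42)
stations = {f"ST{i:04d}": make_station(rng) for i in range(200)}
raw_mean, adj_mean = sampled_trends(stations, n=50, seed=1)
print(f"raw: {raw_mean:.2f}, adjusted: {adj_mean:.2f} deg/century")
```

Because the stations are chosen at random rather than hand-picked, the comparison is not biased toward any particular station; with real GHCN data, a loading step would take the place of `make_station`.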

Hi all,

Coincidentally, I tried a somewhat comparable exercise yesterday. I downloaded the raw and adjusted GHCN data, then wrote a MATLAB script that reads the data, selects all WMO stations, selects the measurement series that are present in both datasets, computes the differences between them (i.e., the ‘adjustment’ or ‘homogenization’), bins the adjustments in 5-year bins, and plots the means and standard deviations of the data in each bin. Not surprisingly, both for the global dataset and for the European subset this shows near-neutral adjustments (i.e., no “cooling the old data” or “cooking the recent data”). Additionally, the script shows the deviation of each measurement series (both raw and homogenized) from its 1961-1990 mean. Strong warming in the most recent decades is absolutely obvious in both datasets. Here’s a link to the resulting PDF for Europe:

RESULTS-EUROPE.pdf

If you want to try it yourself (data + simple script + example output):

GHCN-QND-ANALYSIS.zip

I’m not a climatologist (although I am a scientist, and have performed QC on environmental data – which I guess puts me squarely on the Dark Side of the debate on AGW). Yet I’ve done this analysis in 4 hours, without any prior knowledge of the GHCN dataset. What this shows, in my opinion, is that anyone who claims to have spent yeeeaaaars of his/her life studying the dark ways of the IPCC/NOAA/WMO/etc., and still cannot reproduce their results or still cannot understand/believe how the results were obtained, is full of sh#t… Thanks for implying the same, above (if I may read it that way). :)

Keep it up.

Steven.
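Steven's MATLAB script is not reproduced in this thread. As a hedged Python sketch of two of the steps he describes, assuming each series has already been reduced to a dict mapping year to annual mean temperature (a simplification; the GHCN files hold monthly values in fixed-width records, and parsing them is not shown), the adjustment binning and the 1961-1990 anomaly calculation might look like this. The function names `bin_adjustments` and `anomalies` are hypothetical.

```python
import statistics
from collections import defaultdict


def bin_adjustments(pairs, width=5):
    """pairs: iterable of (raw, adjusted) series, each a dict year -> temperature.
    Only years present in both series are used. Returns {bin_start: (mean, std)}
    of adjusted minus raw, pooled over all series, in ascending bin order."""
    bins = defaultdict(list)
    for raw, adj in pairs:
        for year in raw.keys() & adj.keys():
            # e.g. with width=5, 1963 falls in the bin starting at 1960
            bins[year - year % width].append(adj[year] - raw[year])
    out = {}
    for start in sorted(bins):
        diffs = bins[start]
        std = statistics.stdev(diffs) if len(diffs) > 1 else 0.0
        out[start] = (statistics.fmean(diffs), std)
    return out


def anomalies(series, base=(1961, 1990)):
    """Deviation of each year from the series' own mean over the base period
    (inclusive). Raises ValueError if the base period has no data."""
    ref = [t for y, t in series.items() if base[0] <= y <= base[1]]
    if not ref:
        raise ValueError("no data in base period")
    mean = statistics.fmean(ref)
    return {y: t - mean for y, t in series.items()}


# Hypothetical toy series for one station (values are made up for illustration).
raw = {1960: 9.8, 1961: 10.0, 1962: 10.2, 1990: 10.6}
adj = {1960: 9.9, 1961: 10.0, 1962: 10.1, 1991: 10.7}
print(bin_adjustments([(raw, adj)]))  # only 1960-1962 are common to both
print(anomalies({1961: 10.0, 1990: 12.0, 2000: 13.0}))
```

A near-zero mean in every bin is what "near-neutral adjustments" would look like in this representation; a systematic sign change between early and late bins would indicate adjustments that alter the trend.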

Eric,

Do you know if CRU uses homogenized GHCN data or does its own homogenization?

Ryan did a post at tAV which takes a brute force look at homogenization. It’s not particularly damaging to any case either way but it’s interesting.

http://noconsensus.wordpress.com/2009/12/15/3649/

My own opinion is that the homogenization may very well be acceptable. It would be nice to see it clearly spelled out why certain decisions are made. I have read several papers on it, and without someone to answer a few questions, it’s very difficult to figure out.

[Response: Jeff, I’m no expert on the homogenization process. I’m sure this information is available and others reading these posts will be able to help. Ok, I’m really signing off now. Real work to do, not to mention it is supposed to be holiday time of year.–eric]

Steven – genuinely curious – why do you think your Europe graph shows no warming mid-century, while Eric’s graph does? Is this a pure anomaly? Or does it represent some difference between the raw dataset that Eric uses and the v2.mean dataset from GHCN? GHCN feeds into GISS, doesn’t it? And GISS and HadCRUT3 match pretty closely, if I recall.

Do v2.mean and the World Monthly Surface Station Climatology, 1738-cont, use the same method for identifying stations? It would be fascinating to compare the two, given the differences between the graphs you produced and Eric’s.

Question – how do we know that these stations are not simply measuring the growing proximity of asphalt and air conditioning units with time?

I’ve posted a new page to my climate web site which shows, as clearly as I could put it, why you need 30 years or more to find a climate trend (and thus why we CAN’T say “it’s been cooling since 1998!”):

http://BartonPaulLevenson.com/30Years.html
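As an independent, hedged illustration of the record-length point (not taken from the linked page), the Python sketch below fits an ordinary least-squares trend to synthetic series of 10 and 30 years and reports roughly a 2-standard-error band, using SE = sqrt(RSS / (n − 2) / Σ(x − x̄)²). It assumes a hypothetical trend of 0.2° per decade and independent year-to-year noise with a standard deviation of 0.1°; real temperature noise is autocorrelated, which widens the uncertainty of short-window trends further. The function name `trend_with_se` is hypothetical.

```python
import math
import random
import statistics


def trend_with_se(years, temps):
    """OLS slope (degrees per year) and its standard error, assuming
    independent noise. Requires at least 3 points."""
    n = len(years)
    mx, my = statistics.fmean(years), statistics.fmean(temps)
    sxx = sum((x - mx) ** 2 for x in years)
    slope = sum((x - mx) * (y - my) for x, y in zip(years, temps)) / sxx
    intercept = my - slope * mx
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    se = math.sqrt(rss / (n - 2) / sxx)
    return slope, se


rng = random.Random(0)
true_trend = 0.02  # hypothetical: 0.2 deg per decade, expressed per year
for length in (10, 30):
    years = list(range(length))
    temps = [true_trend * x + rng.gauss(0, 0.1) for x in years]
    slope, se = trend_with_se(years, temps)
    # Convert deg/year to deg/decade (x10); the band is about 2 standard errors.
    print(f"{length} yr: {10 * slope:+.3f} +/- {2 * 10 * se:.3f} deg/decade")
```

Because Σ(x − x̄)² grows roughly with the cube of the record length, the standard error of the fitted trend shrinks quickly as the window lengthens, which is why a decade or so of data says little about the underlying trend.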