Interesting news this weekend. Apparently everything we’ve done in our entire careers is a “MASSIVE lie” (sic) because all of radiative physics, climate history, the instrumental record, modeling and satellite observations turn out to be based on 12 trees in an obscure part of Siberia. Who knew?

Indeed, according to both the National Review and the Daily Telegraph (and who would not trust these sources?), even Al Gore’s use of the stair lift in An Inconvenient Truth was done to highlight cherry-picked tree rings, instead of what everyone thought was the rise in CO2 concentrations in the last 200 years.

Who should we believe? Al Gore with his “facts” and “peer reviewed science” or the practitioners of “Blog Science”? Surely, the choice is clear….

More seriously, many of you will have noticed yet more blogarrhea about tree rings this week. The target du jour is a particular compilation of trees (called a chronology in dendro-climatology) that was first put together by two Russians, Hantemirov and Shiyatov, in the late 1990s (and published in 2002). This multi-millennial chronology from Yamal (in northwestern Siberia) was painstakingly collected from hundreds of sub-fossil trees buried in sediment in the river deltas. They used a subset of 224 of these trees that they judged long enough and sensitive enough (based on the interannual variability), supplemented by 17 living tree cores, to create a “Yamal” climate record.
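The post does not spell out how “sensitive enough” was judged. One standard dendrochronology statistic for interannual variability is mean sensitivity, the average relative change between consecutive ring widths. The sketch below computes it and filters trees against a threshold; the threshold, tree names and ring widths are all hypothetical, and nothing here claims to be Hantemirov and Shiyatov’s actual selection procedure.

```python
def mean_sensitivity(widths):
    """Mean sensitivity: the average of |2 * (x[t+1] - x[t]) / (x[t+1] + x[t])|
    over consecutive ring widths. 0 means no year-to-year change at all."""
    terms = [
        abs(2.0 * (b - a) / (b + a))
        for a, b in zip(widths, widths[1:])
        if a + b != 0  # skip pairs of missing (zero-width) rings
    ]
    if not terms:
        raise ValueError("need at least two rings with non-zero width")
    return sum(terms) / len(terms)


def select_sensitive(series_by_tree, threshold):
    """Keep only trees whose mean sensitivity reaches the (hypothetical) threshold."""
    return {
        tree: widths
        for tree, widths in series_by_tree.items()
        if mean_sensitivity(widths) >= threshold
    }


# Hypothetical ring widths (mm) for two made-up trees.
trees = {
    "complacent": [1.0, 1.0, 1.0, 1.0],
    "responder": [1.0, 2.0, 1.0, 2.0],
}
print(mean_sensitivity(trees["responder"]))   # roughly 0.667
print(sorted(select_sensitive(trees, 0.2)))   # ['responder']
```

A tree whose rings barely change from year to year carries little interannual signal, which is why a filter of this kind favours the more variable series.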


A preliminary set of this data had also been used by Keith Briffa in 2000 (processed with a different algorithm from the one H&S used, for consistency with two other northern high-latitude series) to create another “Yamal” record that was designed to improve the representation of long-term climate variability.

Since long climate records with annual resolution are few and far between, it is unsurprising that they get used in climate reconstructions. Different reconstructions have used different methods and have made different selections of source data depending on what was being attempted. The best studies tend to test the robustness of their conclusions by dropping various subsets of data or by excluding whole classes of data (such as tree-rings) in order to see what difference they make, so you won’t generally find that too much rides on any one proxy record (despite what you might read elsewhere).
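The “drop subsets and see what changes” test is easy to sketch. This minimal version assumes the records are already calibrated to temperature and are combined by simple year-by-year averaging (real reconstructions use regression-based methods); the record names and values are made up.

```python
from itertools import combinations
from statistics import mean


def composite(records):
    """Year-by-year average of aligned records (all the same length)."""
    return [mean(values) for values in zip(*records.values())]


def drop_k_composites(records, k):
    """Recompute the composite once for every possible set of k dropped records."""
    names = sorted(records)
    return {
        dropped: composite({n: records[n] for n in names if n not in dropped})
        for dropped in combinations(names, k)
    }


def spread(composites):
    """Per-year (min, max) across all the drop-k composites."""
    return [(min(v), max(v)) for v in zip(*composites.values())]


# Hypothetical, already-calibrated anomaly records (deg C) for three years.
records = {
    "A": [0.0, 0.1, 0.5],
    "B": [0.1, 0.0, 0.6],
    "C": [-0.1, 0.1, 0.4],
    "D": [0.0, 0.0, 2.0],  # one record with an unusually large final value
}
for dropped, series in drop_k_composites(records, 1).items():
    print(dropped, [round(x, 3) for x in series])
print(spread(drop_k_composites(records, 1)))
```

If the per-year spread stays small whichever records are dropped, no single record is carrying the result; a wide spread points straight at the influential one.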

****

So along comes Steve McIntyre, self-styled slayer of hockey sticks, who declares, without any evidence whatsoever, that Briffa didn’t just reprocess the data from the Russians but instead picked through it to get the signal he wanted.

McIntyre has based his ‘critique’ on a test conducted by randomly adding in one set of data from another location in Yamal that he found on the internet. People have written theses about how to construct tree ring chronologies in order to avoid end-member effects and preserve as much of the climate signal as possible. Curiously no-one has ever suggested simply grabbing one set of data, deleting the trees you have a political objection to and replacing them with another set that you found lying around on the web.

The statement from Keith Briffa clearly describes the background to these studies and categorically refutes McIntyre’s accusations. Does that mean that the existing Yamal chronology is sacrosanct? Not at all – all of these proxy records are subject to revision with the addition of new (relevant) data and whether the records change significantly as a function of that isn’t going to be clear until it’s done.

What is clear however, is that there is a very predictable pattern to the reaction to these blog posts that has been discussed many times. As we said last time there was such a kerfuffle:

However, there is clearly a latent and deeply felt wish in some sectors for the whole problem of global warming to be reduced to a statistical quirk or a mistake. This led to some truly death-defying leaping to conclusions when this issue hit the blogosphere.

Plus ça change…

The timeline for these mini-blogstorms is always similar. An unverified accusation of malfeasance is made based on nothing, and it is instantly ‘telegraphed’ across the denial-o-sphere while being embellished along the way to apply to anything ‘hockey-stick’ shaped and any and all scientists, even those not even tangentially related. The usual suspects become hysterical with glee that finally the ‘hoax’ has been revealed and congratulations are handed out all round. After a while it is clear that no scientific edifice has collapsed and the search goes on for the ‘real’ problem which is no doubt just waiting to be found. Every so often the story pops up again because some columnist or blogger doesn’t want to, or care to, do their homework. Net effect on lay people? Confusion. Net effect on science? Zip.

Having said that, it does appear that McIntyre did not directly instigate any of the ludicrous extrapolations of his supposed findings highlighted above, though he clearly set the ball rolling. No doubt he has written to the National Review and the Telegraph and Anthony Watts to clarify their mistakes and we’re confident that the corrections will appear any day now…. Oh yes.

But can it be true that all Hockey Sticks are made in Siberia? A RealClimate exclusive investigation follows:

We start with the original MBH hockey stick as replicated by Wahl and Ammann:

Hmmm… neither of the Yamal chronologies anywhere in there. And what about the hockey stick that Oerlemans derived from glacier retreat since 1600?

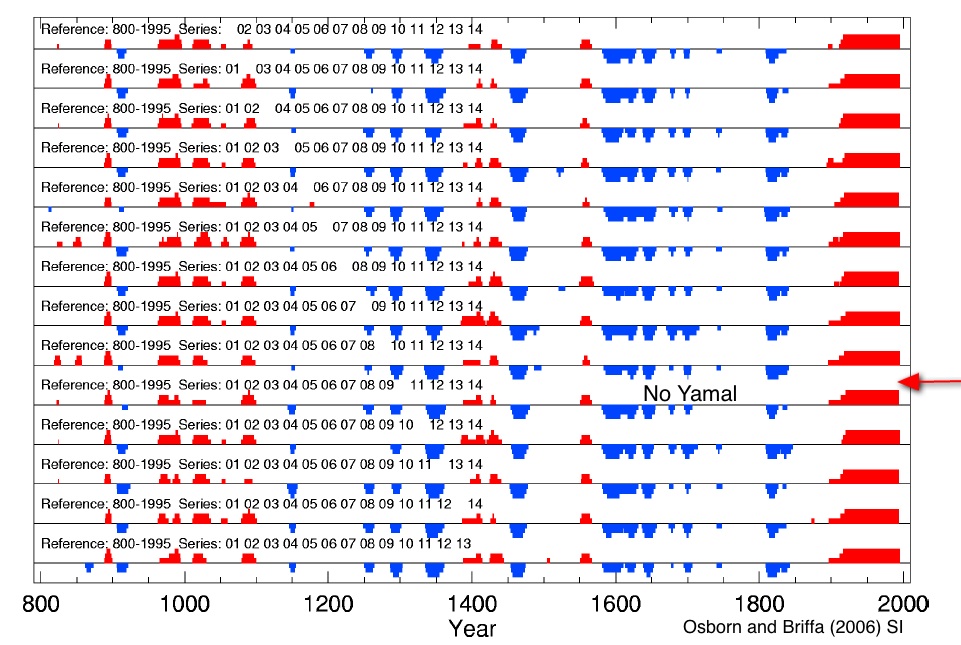

Nope, no Yamal record in there either. How about Osborn and Briffa’s results which were robust even when you removed any three of the records?

Or there. The hockey stick from borehole temperature reconstructions perhaps?

No. How about the hockey stick of CO2 concentrations from ice cores and direct measurements?

Err… not even close. What about the impact on the Kaufman et al 2009 Arctic reconstruction when you take out Yamal?

Oh. The hockey stick you get when you don’t use tree-rings at all (blue curve)?

No. Well what about the hockey stick blade from the instrumental record itself?

And again, no. But wait, maybe there is something (Update: Original idea by Lucia)….

Nah….

One would think that some things go without saying, but apparently people still get a key issue wrong so let us be extremely clear. Science is made up of people challenging assumptions and other peoples’ results with the overall desire of getting closer to the ‘truth’. There is nothing wrong with people putting together new chronologies of tree rings or testing the robustness of previous results to updated data or new methodologies. Or even thinking about what would happen if it was all wrong. What is objectionable is the conflation of technical criticism with unsupported, unjustified and unverified accusations of scientific misconduct. Steve McIntyre keeps insisting that he should be treated like a professional. But how professional is it to continue to slander scientists with vague insinuations and spin made-up tales of perfidy out of the whole cloth instead of submitting his work for peer-review? He continues to take absolutely no responsibility for the ridiculous fantasies and exaggerations that his supporters broadcast, apparently being happy to bask in their acclaim rather than correct any of the misrepresentations he has engendered. If he wants to make a change, he has a clear choice; to continue to play Don Quixote for the peanut gallery or to produce something constructive that is actually worthy of publication.

Peer-review is nothing sinister and not part of some global conspiracy, but instead it is the process by which people are forced to match their rhetoric to their actual results. You can’t generally get away with imprecise suggestions that something might matter for the bigger picture without actually showing that it does. It does matter whether something ‘matters’, otherwise you might as well be correcting spelling mistakes for all the impact it will have.

So go on Steve, surprise us.

Update: Briffa and colleagues have now responded with an extensive (and in our view, rather convincing) rebuttal.

Over at Dot Earth, McIntyre is taking another shot at Mann et al. 2008.

http://community.nytimes.com/comments/dotearth.blogs.nytimes.com/2009/10/05/climate-auditor-challenged-to-do-climate-science/?permid=302#comment302

He seems to still be worried about inverted data despite Mann et al. publishing a formal reply to this. At this point bizarre is not the word any more.

Jim Bouldin (#649) — Thanks very much for that very nice reference.

ref# 642: I said nothing untoward. My comment was “eschew right back at you Hank”; I don’t argue anyone’s opinions but my own. It seems to me a fair reply to being told I am using talking points, and I would expect it to be posted as written instead of edited in a way that makes me seem much less polite than I actually was.

Can you just show a graph of the actual temps, not this so-called anomaly.

Jim,

Thanks for the reference, very interesting. Dr Kalman is a very clever guy, his filter crops up everywhere :).

I have one more question, for you (just one!), I hope that by bribing you with some possibly useful information I can encourage you to break your “no more posts” vow…..

The search for the exogenous and endogenous signals described in sections 3 and 4 of your reference makes me think of channel estimation in cellular phone systems. Maybe this is a technique which might be of interest? Let me explain.

In a cellular system a sequence of data bits is transmitted. The channel between transmitter and receiver consists of a number of individual paths, each with its own time delay and amplitude – echoes, if you like. The receiver receives the vector sum of the signals from the paths. This makes the channel look highly dispersive. However by occasionally feeding a known sequence of bits (a training sequence) into the channel, and setting a receiver algorithm (a channel estimator) to look for those bits, we can derive the channel response function (often called the channel impulse response) and use this to get a much better estimate of subsequent transmitted data bits. Usually the receiver contains some form of maximum likelihood sequence estimator like the Viterbi algorithm.

This looks a bit like the intervention analysis described in Section 3. A pulse (caused eg by logging) is fed into a stand of trees. Each surviving tree introduces species characteristic attenuation and delay to the “signal”. We observe this signal at a later time. Perhaps it would be possible to use algorithms analogous to the above to extract the tree response function and then use it to determine the characteristics of previous, unknown events like a forest fire?

It’s a long shot: the cellular problem is highly constrained (each echo has only an amplitude, phase and timing delay) and there is only one measurement signal (the vector sum at the receiver). In dendro you’ll have a much less constrained problem, but many more measurement signals.
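The channel-estimation step described above can be sketched as an ordinary least-squares fit: given a known training sequence and what the receiver actually saw, solve for the impulse-response taps. This is a simplified, noise-free, real-valued version (real receivers work with complex baseband samples and follow this with an equalizer or a Viterbi sequence estimator, not shown); the training sequence and channel taps are made up.

```python
def convolve(x, h):
    """Multipath channel: y[n] = sum_k h[k] * x[n - k] (noise-free, real-valued)."""
    return [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0) for n in range(len(x))]


def solve(a, b):
    """Solve the small dense system a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z


def estimate_channel(training, received, taps):
    """Least-squares channel impulse response from a known training sequence.

    Only rows where all `taps` previous symbols are known are used; the taps
    solve the normal equations (X^T X) h = X^T y."""
    rows = [[training[n - k] for k in range(taps)] for n in range(taps - 1, len(training))]
    ys = received[taps - 1:]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(taps)] for i in range(taps)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(taps)]
    return solve(xtx, xty)


# Hypothetical +/-1 training sequence and a made-up 3-path channel (direct path + 2 echoes).
training = [1, -1, 1, 1, -1, -1, 1, -1, 1, 1, 1, -1]
true_h = [1.0, 0.5, -0.2]
received = convolve(training, true_h)
estimate = estimate_channel(training, received, taps=3)
print([round(v, 6) for v in estimate])
```

In the tree analogy, the known pulse (e.g. a logging date) would play the role of the training sequence and the ring series the received signal, though, as noted above, the tree problem is far less constrained.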

My question…..

You mentioned in a previous post that good dendros like H&S can use their skill and experience to identify “responder” trees. My question is not a new one, it’s been posed eg on CA before, but I’ve never heard a decent answer amongst all the foaming at the mouth on there :)

What characteristics do dendros use to determine responder trees, and are these characteristics equally present in living trees and long dead trees?

At the extremes, do they use characteristics like foliage or proximity to other trees, which won’t be preserved in dead trees; or do they use the cellular structure of the wood, or isotopes, or bark, which might be preserved in a dead tree for thousands of years.

Putting the question in more statistical language:- If we take a dendro to a site which has a set of living trees, and a set of similar but long dead trees, will he be able to identify “responder” trees in the living and dead sets with equal success?

cheers

Mark

Google: site:IPCC.ch “terms of reference”

http://www.ipcc.ch/ipccreports//sres/emission/index.php?idp=501

“As required by the Terms of Reference, the SRES preparation process was open with no single “official” model and no exclusive “expert teams.” To this end, in 1997 the IPCC advertised in relevant scientific journals and other publications to solicit wide participation in the process. A web site documenting the SRES process and intermediate results was created to facilitate outside input. Members of the writing team also published much of their background research in the peer-reviewed literature and on web sites.”

stevenc, eschew means to avoid.

How much of a come-back is “avoid right back at you” intended to be?

“The expert reviewers were invited to make comments by the IPCC. These people were selected based upon their knowledge in the selected areas.”

We know that isn’t true, stevenc.

Please, if you think this is true for McI, please show us the invite.

US didn’t do anything other than ask anyone if they wanted a read.

UK did something fairly similar.

Why? You can’t visualize things easily if you do, given that summer temps are so much higher than winter temps. Showing the anomaly (why do you say “so-called”?) gets rid of that.
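Computing an anomaly just means subtracting each calendar month’s baseline average (its “climatology”) from the raw temperature. A minimal sketch, with a made-up station and baseline period:

```python
from statistics import mean


def monthly_anomalies(temps, base_years):
    """temps maps (year, month) -> temperature in deg C.
    Returns (year, month) -> temperature minus that calendar month's
    average over the baseline years (the 'climatology')."""
    climatology = {}
    for month in range(1, 13):
        base = [temps[(y, month)] for y in base_years if (y, month) in temps]
        if base:
            climatology[month] = mean(base)
    return {(y, m): t - climatology[m] for (y, m), t in temps.items() if m in climatology}


# Hypothetical station: cold Januaries, warm Julys, both warming by 1 deg C/year.
temps = {
    (2000, 1): -5.0, (2001, 1): -4.0, (2002, 1): -3.0,
    (2000, 7): 20.0, (2001, 7): 21.0, (2002, 7): 22.0,
}
anoms = monthly_anomalies(temps, base_years=[2000, 2001])
print(anoms[(2002, 1)], anoms[(2002, 7)])  # 1.5 1.5
```

The raw January and July values differ by 25 deg C, which would swamp the trend on a plot; the anomalies line up and show the same warming in both months.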

“Some of the most outspoken global-warming skeptics in fact participated in the formal peer review of the SAR, including Chapter 8. Since Seitz is not a climate scientist, and Singer is no longer active in research, they did not qualify as formal reviewers. However, Singer regularly attends IPCC meetings. In 1995, as the IPCC prepared the SAR, Singer was present at both the Madrid meeting and the IPCC plenary at Rome. Representatives from a number of NGOs which typically take a skeptical stance, including the Global Climate Coalition and several energy and automotive industry lobbies, also participated in the SAR peer review. Other skeptic referees included Patrick Michaels, Hugh……”

This is from Chapter 7. Self-Governance and Peer Review in Science-for-Policy: The Case of the IPCC Second Assessment Report

Written by Paul Edwards of the University of Michigan and Stephen Schneider from Stanford.

It is clear from this statement that persons were excluded from the formal review process based upon lack of qualifications.

> skeptics in fact participated in the formal review

> Seitz … and Singer … did not qualify as formal reviewers

> Singer regularly attends IPCC meetings

> NGOs … lobbies, also participated in the SAR peer review

> Other skeptic referees included Patrick Michaels, ….

You’re confusing two different things

—- being qualified as a formal reviewer

—- participating in the formal review, which was open to people who did not qualify as formal reviewers.

The comment process was open; people other than those who _reviewed_ the comments made comments. That’s what that multiple-column table available from Harvard shows you: the comments, and what the reviewers did with ’em.

You are restating the same misunderstanding, over and over, and misreading what you find yourself.

It’s over. Gavin has tried to put a stop to this previously. No more from me. A heartfelt plea to anyone who would argue more with stevenc: eschew.

stevenc doesn’t understand what “However” means: he quotes examples of skeptics participating in the IPCC process (“Since Seitz is not a climate scientist, and Singer is no longer active in research, they did not qualify as formal reviewers. However,”) and concludes “It is clear from this statement that persons were excluded from the formal review process based upon lack of qualifications.”

http://www.ipcc.ch/pdf/ipcc-principles/ipcc-principles-appendix-a.pdf

4. EXPERT REVIEWERS

Function:

To comment on the accuracy and completeness of the scientific/technical/socio-economic content and the overall scientific/technical/socio-economic balance of the drafts.

Comment:

Expert reviewers will comment on the text according to their own knowledge and experience. They may be nominated by Governments, national and international organisations, Working Group/Task Force Bureaux, Lead Authors and Contributing Authors.

“Since Seitz is not a climate scientist, and Singer is no longer active in research, they did not qualify as formal reviewers. However, Singer regularly attends IPCC meetings.”

Singer isn’t McIntyre. And as you say, he wasn’t a formal reviewer.

Patrick Michaels was put in by the lobbying group (I.e. not individually invited). Nor is he McI.

Hugh?

Hank, if Singer and Seitz are listed as expert reviewers then obviously I am mistaken. I’m done with this topic and I’m sorry for taking up so much of everyone’s time. It isn’t my report after all.

He seems to still be worried about inverted data despite Mann et al. publishing a formal reply to this. At this point bizarre is not the word any more.

The word we’re all groping for is “dishonest.”

I’m sure everyone is as shocked as I am.

Actually, those would be *review* comments and *author* responses.

Stevenc, you don’t even have to be wrong for that reason. There are plenty other reasons for your position to be incorrect.

This isn’t your fault, however. You’ve been given a half-truth and no way to locate the rest of it.

Another beat-up doing the rounds: CRU is being accused of destroying the raw data on which their published temperature numbers are based. Search for “We have 25 years or so invested in the work. Why should I make the data available to you, when your aim is to try and find something wrong with it?” and you will find this all over the denialosphere. This arose out of New Zealand denialist (living in Australia) Warwick Hughes asking after the original data, and getting this response from Phil Jones in 2005. If Jones said this, it’s a bit unfortunate, though you can see where he’s coming from. The two problems with releasing the raw data are that limitations on disk space in the 1980s meant it could not all be kept, and much of the data was released to CRU under limited proprietary licenses. There’s not much you can do about the former, but it amuses me how people who would ordinarily be raving loony capitalists are demanding something for free. In a perfect utopian social-ist world (hyphen for spam filter), all data would be free. Let’s see if they keep campaigning for that :) As for the 1980s problem, just the subset of the data that CRU is able to release publicly (still not raw data but closer) amounts to about 6.5GB per year. The total data is probably at least double this amount. Those who were using computers in the 1980s will remember that by 1990, a 40MB disk was pretty big for a personal computer, and 1GB of storage was very expensive (over the decade, the cost of disk storage dropped from around $200/MB to $12/MB). No doubt in the social-ist utopia these guys would like, scientific organizations would have the kind of funding they need to pay for this kind of thing, and an army of clerks and database administrators to curate the data.
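The storage argument is easy to put into numbers using only the figures quoted above. This back-of-the-envelope sketch assumes decimal units (1 GB = 1000 MB) and treats “at least double” as exactly double; none of the inputs are independently checked.

```python
# Figures as quoted in the comment above; none are independently checked.
PUBLIC_SUBSET_GB_PER_YEAR = 6.5  # releasable subset, "about 6.5GB per year"
TOTAL_MULTIPLIER = 2             # total "probably at least double"
DISK_1990_MB = 40                # "a 40MB disk was pretty big" in 1990


def storage_estimate(gb, multiplier, cost_per_mb, disk_mb):
    """Rough size and cost of storing one year's worth of data.
    Assumes decimal units (1 GB = 1000 MB); binary units add about 2.4%."""
    mb = gb * 1000 * multiplier
    return {"megabytes": mb, "dollars": mb * cost_per_mb, "disks": mb / disk_mb}


for label, cost in [("start of decade, $200/MB", 200.0), ("end of decade, $12/MB", 12.0)]:
    est = storage_estimate(PUBLIC_SUBSET_GB_PER_YEAR, TOTAL_MULTIPLIER, cost, DISK_1990_MB)
    print(label, est)
```

On these assumptions one year’s worth comes to about 13,000 MB, or 325 of those “big” 40MB disks, costing roughly $156,000 at end-of-decade prices and $2.6 million at start-of-decade prices.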

But more seriously, some good answers are: this is not the only data set out there; others like GISS, various proxies and satellite data follow the same trend. If the temperature data is bogus, someone please explain what is causing sea levels to rise, and sea ice and glaciers to melt.

Nonetheless we know the purpose in all this is not to improve the science but to undermine it. I wonder how many other research projects in the 1980s have all their data preserved. Somehow you have to know in advance that you are going to be the subject of an anti-science campaign. In retrospect, the fact that the science runs contrary to the short-term interests of a major industry is clear, but in the 1980s, we had not yet seen the full impact of campaigns like that of tobacco against science.

The key point is that McIntyre is way, way out on the fringe, with about as much credibility as a 9-11 conspiracy theorist or a moon-landing-was-staged type of person. Even these guys apparently get an opportunity to speak at an IPCC public comment session, so what?

Steve Mc showed up at Dot Earth, with several comments, and we gave him a pretty good whuppin, in honor of what Gavin did. Since even the oil and coal companies would be ashamed to come up with the claims that he does, it’s time he was officially assigned to a seat on Planet Ozone. We’re used to the riffraff on Dot Earth. You smart guys need to keep up the good work here.

Jones’ wording was unfortunate, though the remaining text where he mentions intellectual property rights is frequently truncated; remember also that this was meant to be a private communication.

Scientists should of course be delighted to expose their data to scrutiny, and pleased when someone finds faults [Ok I am being idealistic], this being a key aim of peer-review, enabling conclusions to be reconsidered, and understanding refined.

But this presupposes that the scrutiny will be performed with some integrity. Given Hughes’ record of dishonesty a more fitting response would have been along the lines of …

“Dear Mr Hughes, I am refusing your request for data. Notwithstanding various ownership and commercial issues, your website contains many instances where you have distorted and misrepresented scientific data in a manner incompatible with our standards of academic integrity. This data was collected under the imprimatur of a British University and we are unwilling to risk it being abused in a similar fashion.

We are of course willing to share results and data via the normal medium of journal publication”

or something.

Steve McIntyre publishes some correspondence with CRU here

http://www.climateaudit.org/correspondence/cru.correspondence.pdf

he describes CRU’s actions as ‘concealing’, which I guess is one step above ‘stonewalling’. A more reasonable observer might see an organisation, limited by commercial, historical and contractual constraints, bending over backwards to comply with an increasingly unreasonable request….

I wonder when we can expect a McIntyre-esque ‘audit’ of Piers Corbyn’s data and methods? He does, after all, charge money for his ‘forecasts’….

The total data is probably at least double this amount. Those who were using computers in the 1980s will remember that by 1990, a 40MB disk was pretty big for a personal computer, and 1GB of storage was very expensive…

When I graduated from college in the early ’80s and went to work, a 300MB hard-drive cost tens of thousands of dollars and was the size of a Maytag washing-machine. The multi-gigabyte disk-farm in the lab where I worked back then looked like a laundromat! Needless to say, raw data were rolled off to reel-to-reel tapes ASAP to avoid project-draining disk storage charges. I remember many times where I rolled off data to tape Friday evenings (and restored the backups the following Monday mornings) to avoid weekend disk-storage charges.

People forget (or if they are younger, don’t appreciate) what an expensive hassle it was dealing with large raw datasets not so long ago.

hi all … I’m a civil engineer and I don’t completely understand some numbers, and to an engineer, numbers are sacred! :-) … I googled the H&S data referenced in the post and was directed to http://www.ncdc.noaa.gov/paleo/pubs/hantemirov2002/hantemirov2002.html … I downloaded the data there (calendar year, summer temperature anomaly reconstruction (.01 deg.C), larch tree ring chronology index value, and sample depth) into a simple Excel sheet and sorted it by the larch tree ring chronology index value (LTRCIV) … if I then take an LTRCIV of 14, there are 7 values for the summer temperature anomaly reconstruction (STA), ranging from -1.64°C in 287BC to -2.71°C in 550BC … in the middle of the table, an LTRCIV of 100 gives 30 STA values ranging from -1.09°C in AD436 to 0.87°C in 1317BC … towards the end of the table, an LTRCIV of 196 yields 7 STA values ranging from 1.68°C in AD1782 to 2.87°C in AD206 … can someone direct me to an explanation of how I get from the one (LTRCIV) to the other (STA)? Many thanks in advance …
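The tabulation described above can be reproduced in a few lines, which makes the question concrete: if the temperature reconstruction were a fixed function of this one index column, each index value would map to a single temperature. The thread does not settle why it doesn’t, and this sketch doesn’t either; it only checks the mapping, using just the extreme values quoted in the comment.

```python
from collections import defaultdict

# Only the extreme values quoted in the comment above:
# (calendar year, anomaly in units of 0.01 deg C, chronology index).
# Negative years stand for BC (used as labels only); sample depth is omitted.
ROWS = [
    (-287, -164, 14), (-550, -271, 14),
    (436, -109, 100), (-1317, 87, 100),
    (1782, 168, 196), (206, 287, 196),
]


def anomaly_range_by_index(rows):
    """Group rows by chronology index and report the (min, max) anomaly in deg C."""
    groups = defaultdict(list)
    for _year, anomaly_hundredths, index in rows:
        groups[index].append(anomaly_hundredths / 100.0)  # 0.01 deg C -> deg C
    return {index: (min(v), max(v)) for index, v in sorted(groups.items())}


for index, (lo, hi) in anomaly_range_by_index(ROWS).items():
    print(f"index {index}: {lo:+.2f} to {hi:+.2f} deg C")
```

Run against the full downloaded file instead of these six rows, the same grouping shows how wide the spread is for every index value, not just the three picked out in the comment.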

Re 665

“He seems to still be worried about inverted data despite Mann et al. publishing a formal reply to this. At this point bizarre is not the word any more.”

The word we’re all groping for is “dishonest.”

Could someone point me to where this “inverted data” issue is addressed by Mann or someone else who knows? I’ve so far been unable to debunk McIntyre’s claims that there was an error there.

Thanks!

[Response: The original commenter appears to be referring to: Mann, M.E., Bradley, R.S., Hughes, M.K., Reply to McIntyre and McKitrick: Proxy-based temperature reconstructions are robust, Proc. Natl. Acad. Sci., 106, E11, 2009. – mike]

Mark:

What characteristics do dendros use to determine responder trees, and are these characteristics equally present in living trees and long dead trees?

At the extremes, do they use characteristics like foliage or proximity to other trees, which won’t be preserved in dead trees; or do they use the cellular structure of the wood, or isotopes, or bark, which might be preserved in a dead tree for thousands of years.

Putting the question in more statistical language:- If we take a dendro to a site which has a set of living trees, and a set of similar but long dead trees, will he be able to identify “responder” trees in the living and dead sets with equal success?

In most dendrochronology work, it’s site- and taxon-based, not individual tree based. That is, you identify beforehand, via observations of the variability of your response variable (esp. ring widths, maximum latewood density, latewood percentage, etc.) against the instrumental record, how a particular taxon responds in particular environmental situations. The textbook example is the 5-needle pines, including bristlecones, in the Sierra Nevada and Great Basin: they are sensitive to temperature near treeline. Because it’s open subalpine woodland, competitive interactions are minimal to begin with, and because decay is extremely slow (dead wood lies around a long time) there is some ability to corroborate an individual’s former competitive environment by simple observation. And whatever inter-individual growth differences there may have been are presumed to average out in the (typically) ~15 trees that constitute one chronology (one specific geographic location). In that situation the answer to your last question is yes, but it’s based on site identification as much as tree identification.

What H&S are doing is a horse of another color, though. Not only are they going WAY back in time (like the bristlecone work), they’re doing it with short-lived trees, many of which may have been moved from their growing location by alluvial processes, or, if they didn’t move, grew at a time when tundra hydrological conditions were different. All of this complicates the simple assumption that the trees used to calibrate the growth/instrumental-record relationship grew in the same site conditions as the sub-fossil trees did. This means that cross-dating the 241 samples is a hugely important task, not only because of the long time factor, but because it is a way of identifying, from the complete set of over 2000 slabs, the sub-fossil specimens that respond most closely to the way the calibration set does. That is, the very ability/necessity to cross-date to extend the series back in time (and establish a decent sample size for a given time), which depends on inter-annual ring variability, ensures that you will at the same time be selecting specimens from the past that responded in a way similar to the modern, calibration trees. And this conclusion is further strengthened if you find these spread across an area that can reasonably be assumed to have been fairly homogeneous for the climate variable of interest, in this case summer temps.

In short, typically it’s based mainly on site and species criteria, which are roughly constant through time, but in the H&S case, it’s also greatly aided by the fact that cross-dating inherently ensures a degree of similarity of response between samples for which confidence in the similarity of growing conditions may be lower.

Hope that helps.

Jim

Mike (in response to Benjamin #673):

That is the correct reference. I note that McIntyre has again ignored it by failing to acknowledge the existence of your in line comment. http://community.nytimes.com/comments/dotearth.blogs.nytimes.com/2009/10/05/climate-auditor-challenged-to-do-climate-science/?permid=379#comment379

Re: Jim Bouldin 598 etc.

I’m just catching up on the latest discussion from one of the supposedly “silent” dendros.

I’m planning to do a post on McIntyre’s “sensitivity analysis” and Jim’s points will certainly be part of it. In comments at my DeepClimate blog I’ve already noted the weighting/geographic sampling problem (i.e. Briffa says the Hantemirov live 17 are taken from at least three different locations).
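The weighting/geographic sampling problem can be illustrated with a toy example: pooling every tree equally lets the best-sampled location dominate, while averaging within each location first gives each location equal weight. This is just one of several possible weighting schemes, not a claim about what Briffa or H&S did; the locations and values are hypothetical.

```python
from statistics import mean


def pooled_mean(groups):
    """Every tree counts equally, so the best-sampled location dominates."""
    return mean(v for values in groups.values() for v in values)


def location_weighted_mean(groups):
    """Average within each location first, then give each location equal weight."""
    return mean(mean(values) for values in groups.values())


# Hypothetical standardized indices for one year at two locations,
# one sampled far more heavily than the other.
groups = {"location_a": [1.0] * 8, "location_b": [0.0, 0.0]}
print(pooled_mean(groups), location_weighted_mean(groups))  # 0.8 0.5
```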

Another salient fact I’ve noted is that McIntyre has now acknowledged that the live 17 were present in Briffa’s version of the Yamal data set all along. Apparently McIntyre missed the 5 earliest live series. Also the Schweingruber set of 34 (although it looks like 33 to me) contains both dead and live trees. (I didn’t see these points made above, sorry if they were).

So McIntyre’s rhetoric about the 12 “picked” trees was completely off base. And the “sensitivity analysis” is a mess, if the idea was to substitute the Schweingruber live tree series for the H&S live tree series (aside from the fact that this was misguided in the first place).

Some questions for Jim:

Are you saying that the selection for “sensitive” (wider tree-ring variance) tree series would be unnecessary at a “regular” site like Schweingruber Khadyta River? Or to put it differently, should the utility of such a site for paleoclimatology be evaluated on an “all or nothing” basis?

As far as I know this site has not been used in a published study even by Schweingruber himself (at least I can’t find one). Is this likely because of the apparent “divergence problem” at this site, or some other reason? (I guess that calls for speculation, and I probably should ask Schweingruber that question … still I’d like to know your thoughts).

Assuming the Schweingruber site were considered useful, is there a “proper” way to include Schweingruber into the H&S Yamal network (e.g. weighting), or are the incompatibilities between H&S approach and Schweingruber too great?

Re 674

Thanks again Jim for your time, very helpful. Very interesting problems & nice to learn about a new field!

Mark

Re 672

I can’t answer that question, but maybe I can answer a different one which will help!

It looks like you are referring to a 2002 publication. Most of the discussion here is about a Briffa paper published in 2000 and based on earlier H&S work. So maybe the data you found is from an unrelated reconstruction. Also the fact that the data you found goes back to 550 BC makes me think this is a different paper. Briffa (2000) only deals with a 2000-year reconstruction from 1990, ie back to around 10 BC.

But that’s just a guess.

Mark

Continuing #676

It could be that I’m just misunderstanding what Schweingruber was up to.

There are many, many Schweingruber sites: 36 for Siberian larch alone. Khadyta River just happens to be the one in the same region as H&S Yamal.

The idea seems to have been to sample many sites, but not to go back very far in time, using only easily available living and dead trees (as opposed to the sub-fossil tree series of H&S). Like Khadyta River, all the other Schweingruber sites seem to go back to the 1790s and no further, except for one: Polar Ural-historical, another bête noire of McIntyre’s if I recall correctly.

This stuff becomes entrenched in the denialosphere very quickly as gospel truth carved in stone. The BBC is running a blog, http://www.bbc.co.uk/blogs/thereporters/richardblack/2009/10/climate_issue.html, by “Richard Black, environment correspondent”, to answer all the criticism of their appalling recent “global warming has stopped” article. And sure enough, here is one contribution: “May I ask why the totally discredited hockey stick appears on your blog? Others have commented already. I add only that this hockey stick chart ends with a wild upswing that was based on a few tree rings and trees do not make really good thermometers. Your text does not mention the graph. Why? You have put this graph with a statement about being unbiased and that seems to show exactly the opposite of what you say in the text. Odd!” Note especially “this hockey stick chart ends with a wild upswing that was based on a few tree rings and trees do not make really good thermometers”. Do you ever get down-hearted, Gavin?

#676 Deep Climate

Why bother?

1. We have established that the guy is unqualified to be an “expert” IPCC reviewer.

2. IPCC argues that nothing hinges on the paleoclimate data.

3. If you want to replicate somebody’s work, read the methods section in his papers. Or go get your own samples.

You are just giving the other guys some attention. Ignore them.

You do a poor parody, Sycamore, of the kind of position you like to pretend others hold so you can dismiss them as foolish.

#682 Hank

-Perhaps you can do a better job summarizing? I’ve tried my best.

-Are you deliberately ignoring my previous question about polar ice melt and lack of temperature rise? If so, why? Is it ill-posed?

Thanks.

Richard,

I’m not sure where you’re coming from, although HR, who knows all and sees all, seems to be on the right track.

Be that as it may, did you happen to notice that I answered your previous “question” about how the 17 H&S live tree series got “whittled” down to 12 in Briffa? In case you missed it, that was yet another mistake by McIntyre – all 17 were in the H&S/Briffa data set all along.

The opening post mentions the Kaufman et al 2009 Arctic reconstruction. Has that paper been discussed yet? Because I have a question about it.

Thanks.

By the way, I have a big problem with McIntyre’s definition of “replication”, at least as it applies in this case.

Removing selected data from one research team’s data set, and adding in other sample data, collected and screened with a different set of criteria by another research team, is hardly a valid replication test.

When you boil away all the rhetoric and mistakes, we’re left with the fact that Schweingruber’s Khadyta site chronology does not corroborate H&S’s regional chronology in the 20th century, and that this discrepancy is due to a “divergence problem” in Schweingruber’s chronology, which fails to match the temperature record.

I suppose one could argue that this is significant or needs to be explained. However, it should also be noticed that this observation/analysis could have been made years ago. McIntyre didn’t need the H&S Yamal raw measurement set to make that point, whether or not he knew he had it in his possession all along.

#682

Hank, maybe you forgot to read this. I guess your melting ice cubes model doesn’t explain the pause in warming after all. Seems your time scaling was off by an order of magnitude. Confirmation bias will get you every time if you don’t think skeptically! Enjoy:

Mark C. Serreze says:

18 November 2008 at 10:30 AM

Note that we’ve got a paper soon to come out in “The Cryosphere” (and we’ll have a poster at AGU) looking at recent “Arctic Amplification” that you discuss (the stronger rise in surface air temperatures over the Arctic Ocean compared to lower latitudes). We make use of data from both the NCEP/NCAR and JRA-25 reanalyses; JRA-25 is a product of the Japan Meteorological Agency. It’s a pretty impressive signal, and is clearly associated with loss of the sea ice cover. You see the signal in autumn, as this is when the ocean is losing the heat it gained (in summer) back to the atmosphere. Very little is happening in summer itself (as expected), as the melting ice surface and sensible heat gain in the mixed layer limit the surface air temperature change.

#683, 685, 687

Richard Sycamore, please stay on topic.

Sycamore’s trolling. There’s no “melting ice cubes model”, neither from me nor from anyone else. Sycamore misrepresents a good desktop experiment answering a question about heat measurement as an Arctic climate model, and a stupid one at that.

Sycamore’s doing fairly good trolling; he distracts from this topic, gets attention, reiterates bogosity from another topic, and sets up for concern trolling afterward.

Deep Climate:

Are you saying that the selection for “sensitive” (wider tree-ring variance) tree series would be unnecessary at a “regular” site like Schweingruber Khadyta River? Or to put it differently, should the utility of such a site for paleoclimatology be evaluated on an “all or nothing” basis?

No, not saying that at all. You always want sensitive trees–in all specimens–that is the only way to infer past environments. This is regardless of condition–living, dead, buried, etc. The first criterion is always that you have functioning thermometers.

As far as I know this site has not been used in a published study even by Schweingruber himself (at least I can’t find one). Is this likely because of the apparent “divergence problem” at this site, or some other reason? (I guess that calls for speculation, and I probably should ask Schweingruber that question … still I’d like to know your thoughts).

It would be very inappropriate for me to speculate on why Fritz Schweingruber has or has not used his Yamal-area chronology, or what his purposes were in collecting it originally, but I most definitely do not believe that he has avoided using it because it doesn’t act the way he would “like it to”, with the exception of possible ring complacency, which is always a valid reason. Same for Keith Briffa.

An important but under-emphasized point in this thread is that the main purpose of multi-millennial dendroclimate work is to estimate the more distant PAST, not the present and recent past: those we can get from the instrumental record (with some important caveats that I will ignore here). The divergence problem is not helpful, but neither is it fatal, because in most cases I’ve seen there is still a significant overlap between the instrumental record and the portion of the ring series not affected by divergence (i.e. for calibration), divergence being almost entirely a problem of the last few decades only, as far as I know.
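Jim's point that a divergence problem confined to recent decades need not be fatal can be illustrated with a toy calibration: fit a straight line of temperature on ring index over a chosen window that predates the divergence, then apply that fit to the whole series. This is a minimal sketch, not Briffa's or H&S's procedure; the linear model, the function name `calibrate_on_window`, the window years and all of the data are hypothetical.

```python
def calibrate_on_window(ring_index, temps, years, cal_start, cal_end):
    """Least-squares fit of temperature on ring index using only years in
    [cal_start, cal_end], then apply the fit to every year of ring_index.

    temps may hold None for years with no instrumental data.
    """
    pairs = [(r, t) for r, t, y in zip(ring_index, temps, years)
             if cal_start <= y <= cal_end and t is not None]
    if len(pairs) < 3:
        raise ValueError("calibration window needs at least three years")
    n = len(pairs)
    mean_r = sum(r for r, _ in pairs) / n
    mean_t = sum(t for _, t in pairs) / n
    sxx = sum((r - mean_r) ** 2 for r, _ in pairs)
    sxy = sum((r - mean_r) * (t - mean_t) for r, t in pairs)
    slope = sxy / sxx
    intercept = mean_t - slope * mean_r
    return [intercept + slope * r for r in ring_index]


# Hypothetical data: no instrumental record before 1900; rings track
# temperature (temp = 2 * index - 1) from 1900 to 1904, then "diverge"
# (temperature keeps rising while the ring index does not follow).
years = list(range(1890, 1910))
ring_index = [1.0 + 0.1 * (i % 3) for i in range(len(years))]
temps = ([None] * 10
         + [2.0 * r - 1.0 for r in ring_index[10:15]]
         + [1.5, 1.6, 1.7, 1.8, 1.9])
reconstruction = calibrate_on_window(ring_index, temps, years, 1900, 1904)
```

Fitting on 1900 to 1904 recovers the built-in relation for the pre-instrumental years; widening the window into 1905 to 1909 would mix the divergent years into the fit.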

Assuming the Schweingruber site were considered useful, is there a “proper” way to include Schweingruber into the H&S Yamal network (e.g. weighting), or are the incompatibilities between H&S approach and Schweingruber too great?

I don’t know enough about the specifics of his chronology, but in theory there’s no reason why not. Certainly some weighting based on spatial location and/or differences in standard errors could be involved. The major difference between the two is the use of buried logs by H&S; I think they both used mainly Siberian larch, so no problem there.
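One common way to weight by standard errors is inverse-variance weighting, where each chronology's value for a year counts in proportion to 1/SE². This is a minimal sketch of that general technique only, not something either H&S or Schweingruber are known to have done; the function name and the numbers are hypothetical, and spatial weighting is left out.

```python
def combine_inverse_variance(values, std_errors):
    """Combine one year's index values from several chronologies.

    values and std_errors are parallel sequences (one entry per chronology).
    Returns the inverse-variance weighted mean and its standard error.
    """
    if len(values) != len(std_errors) or not values:
        raise ValueError("need matching, non-empty sequences")
    weights = [1.0 / (se * se) for se in std_errors]
    total = sum(weights)
    weighted_mean = sum(w * v for w, v in zip(weights, values)) / total
    combined_se = (1.0 / total) ** 0.5
    return weighted_mean, combined_se


# Hypothetical year: the chronology with the smaller SE dominates the mean.
mean, se = combine_inverse_variance([1.10, 0.90], [0.05, 0.10])
```

Here the first chronology gets four times the weight of the second, so the combined value is 1.06 rather than the plain average of 1.00.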

Hank, #689. I just was happy for Sycamore that he at last had something.

It’s nice to have things, even if they’re only questions.

Be happy for him! He’s the RC Eeyore with a Useful Pot For Keeping Things in and Something To Put In A Pot.

:-)

#689 Hank, I asked you to clarify how you thought your desktop experiment had relevance for the real world question being asked, and you chose not to. I didn’t mean to be insulting calling it an “ice cube model” – but is that not what it was? If this is so far off-topic, why not just transfer it to the “warming pause” thread?

Thanks a lot Jim. Wow. I’m even more confused now.

Hmmm, with respect I think that the actual answer to my question “If we take a dendro to a site which has a set of living trees, and a set of similar but long dead trees, will he be able to identify “responder” trees in the living and dead sets with equal success?” seems to be No.

In a nutshell, dendros identify living responders using information which simply isn’t available about dead responders. Instead for dead trees they rely upon weaker correlations and assumptions. I would conclude from this that the ability to detect dead responder trees is worse than the ability to detect living responder trees. How much worse seems hard to quantify, it might only be quite a small difference, but given the low signal to noise regime I doubt it can be ignored.

For living trees they can use:

i – known growth location

ii – measured correlation with instrumental temperature

For dead trees they can use:

a – an assumption about averaging growth differences over the sample set

b – an assumption about site homogeneity

c – (for H&S) a daisy chain of correlations from the dead trees back to the living responder trees

The dead tree assumptions seem a lot weaker than the live tree hard data. Therefore the ability to detect responders must be worse in the past than the present.

In my view this causes a problem. The averaging-across-samples step includes a larger percentage of responder trees (good thermometers) for living trees than for dead trees. This is a selection bias which increases the temperature sensitivity in the present relative to the past. In Mickey Mouse terms: if we have 10 thermometers, in the present we get an average temperature from 10 known good thermometers by adding their readings and dividing by 10. In the past we might have 5 good and 5 broken thermometers. If we average across them all by adding the readings and dividing by 10, our average temperature will be very wrong. Exactly how wrong depends on the number of broken thermometers and the way in which they are broken.
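The thermometer analogy can be put in a few lines. This toy sketch assumes a "broken" thermometer is one stuck at a fixed reading (standing in for a non-responding tree); the function names are hypothetical. It shows how the averaged record's response to a true temperature change scales with the fraction of working instruments. Whether the real tree samples behave like this is exactly what the thread goes on to debate.

```python
def mean_reading(true_temp, n_good, n_broken, broken_reading=0.0):
    """Average of n_good thermometers that read true_temp and n_broken
    thermometers stuck at broken_reading (a hypothetical non-responder)."""
    readings = [true_temp] * n_good + [broken_reading] * n_broken
    return sum(readings) / len(readings)


def apparent_sensitivity(n_good, n_broken):
    """Change in the averaged reading per 1 degree change in true temperature."""
    return mean_reading(1.0, n_good, n_broken) - mean_reading(0.0, n_good, n_broken)


present = apparent_sensitivity(10, 0)  # all ten thermometers respond
past = apparent_sensitivity(5, 5)      # half of them are stuck
```

In this setup the "past" average responds to only half of a real temperature change; a stuck reading other than zero would also shift the baseline.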

There’s also a signal to noise ratio issue:- older parts of the reconstruction have degraded signal to noise with respect to recent parts, because of the presence of more non-responding trees in the average over dead trees. This could be important since most reconstructions seem to have quite low SNR even in the present day.
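The SNR point can be made concrete with a simple noise model. Assume (hypothetically) that every tree's ring index carries independent noise with the same standard deviation, responders also carry the temperature signal, and non-responders carry noise only. The mean over n trees then has signal amplitude (k/n)·s and noise standard deviation σ/√n, so its SNR is k·s/(σ·√n). The function below is an illustrative sketch under exactly those assumptions.

```python
import math


def averaged_snr(n_responders, n_total, signal_amp=1.0, noise_sd=1.0):
    """Amplitude SNR of a simple mean over n_total trees, assuming responders
    carry signal_amp * T plus noise, non-responders carry noise only, and all
    noise is independent with standard deviation noise_sd."""
    signal = signal_amp * n_responders / n_total
    noise = noise_sd / math.sqrt(n_total)
    return signal / noise


all_responders = averaged_snr(10, 10)   # present-day case
half_responders = averaged_snr(5, 10)   # same sample size, half non-responders
```

With the sample size held at 10, replacing half the responders with non-responders halves the SNR of the mean.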

How do dendros correct for this temperature bias? It seems intractable to me. If you could quantify the selection bias accurately, you could correct it with some kind of time-varying temperature gain adjustment. But quantification seems awfully difficult. And gain adjustments are a nightmare in themselves, since you apply the gain to the noise as well as the signal. If you assume the noise is additive white Gaussian noise (AWGN), you could instead oversample the past set of trees to make the temperature sensitivity and the SNR match the present day. But that would rely upon a very accurate model of the bias, and the AWGN assumption seems very weak.
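Under the same toy assumptions (independent Gaussian noise of equal size in every tree, and an exactly known responder fraction f), the two remedies can be compared directly. A gain of 1/f restores the signal amplitude but scales the noise by the same factor, so the SNR does not change; matching the present-day SNR of n all-responder trees instead needs about n/f² past trees. Both function names are hypothetical, and knowing f is the hard part in practice.

```python
import math


def gain_corrected(n_responders, n_total, signal_amp=1.0, noise_sd=1.0):
    """Rescale a diluted mean back to full amplitude.

    Returns (signal, noise_sd_after_gain, snr); assumes the responder
    fraction n_responders / n_total is known exactly."""
    gain = n_total / n_responders
    signal = gain * signal_amp * n_responders / n_total  # back to signal_amp
    noise = gain * noise_sd / math.sqrt(n_total)         # noise is amplified too
    return signal, noise, signal / noise


def trees_needed(n_present, responder_fraction):
    """Past sample size whose mean matches the SNR of n_present
    all-responder trees under independent Gaussian noise: n / f**2."""
    return math.ceil(n_present / responder_fraction ** 2)
```

For example, with half the past trees non-responding, `gain_corrected(5, 10)` restores an amplitude of 1.0 but keeps the SNR at half the present-day value, while `trees_needed(10, 0.5)` gives 40 trees.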

When a scientist like Briffa receives a chronology from field dendros like H&S, does he receive a lot of metadata and analysis of this temperature bias, or does he get some kind of bias correction curve estimate? It seems to me impossible to do the gain correction based purely on the samples themselves; he’d need a body of out-of-sample data to build any such correction.

Or am I completely wrong? I often am :)

Cheers

Mark

#693 Mark P,

Jim will no doubt have better, more complete answers.

But as far as I know, chronologies are never constructed by comparing live trees to instrumental temperature. The calibration comes after any screening and mean chronology construction.

My understanding is that sensitivity can be imputed from the degree of variability in tree-ring width. As explained by H&S (Hantemirov & Shiyatov, Holocene, 2002):

It seems to me this method could be applied to trees of any age, living or dead. I’m not sure about the details. For example, I suppose one could normalize measures of interannual sensitivity against trees of the same age.
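A widely used dendrochronology statistic for interannual variability is "mean sensitivity": the average over consecutive rings of |2(x[t+1] − x[t]) / (x[t+1] + x[t])|. It uses only the ring widths themselves, so it can be computed for living or dead trees alike. Whether H&S used this exact statistic is not stated here; the function below is a sketch of the standard formula, and both ring-width series are made up.

```python
def mean_sensitivity(widths):
    """Mean sensitivity of a ring-width series: the average absolute relative
    difference between consecutive rings, |2(x[t+1]-x[t]) / (x[t+1]+x[t])|."""
    if len(widths) < 2:
        raise ValueError("need at least two rings")
    terms = []
    for prev, curr in zip(widths, widths[1:]):
        if prev + curr <= 0:
            raise ValueError("ring widths must be positive")
        terms.append(abs(2.0 * (curr - prev) / (curr + prev)))
    return sum(terms) / len(terms)


complacent = [1.00, 1.02, 0.98, 1.01, 0.99]  # hypothetical, nearly flat series
sensitive = [1.00, 0.60, 1.30, 0.70, 1.20]   # hypothetical, strongly varying series
```

The complacent series scores about 0.03 and the sensitive one about 0.59. Normalizing against trees of the same age, as suggested above, would be an extra step not shown here.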

What I’m not clear on, though, is whether a similar method was used by Schweingruber. I tend to think not. If one looks at the “raw measurements” and “chronology” files for Khadyta River, we see the same number of samples for 1990 in both: 18.

ftp://ftp.ncdc.noaa.gov/pub/data/paleo/treering/chronologies/asia/russ035w_crns.crn

ftp://ftp.ncdc.noaa.gov/pub/data/paleo/treering/measurements/asia/russ035w.rwl

http://www.nosams.whoi.edu/PDFs/papers/Holocene_v12a.pdf

I could be way off too. I guess we’ll both have to wait for Jim.

Deep Climate,

Selecting “sensitive” trees is fairly standard in dendro. One reason this is useful is that it aids in crossdating.

#694

Just in case anyone is confused by the files I posted, the first one contains four different ring width chronologies (crn):

ftp://ftp.ncdc.noaa.gov/pub/data/paleo/treering/chronologies/asia/russ035w_crns.crn

And the second the raw sample data (rwl):

ftp://ftp.ncdc.noaa.gov/pub/data/paleo/treering/measurements/asia/russ035w.rwl

#695 Rattus Norvegicus

Sure, that makes sense. But presumably the samples in the raw measurements file for the Schweingruber Khadyta site represent the whole site data set before selecting for sensitivity. If so, then that implies that sensitivity screened data from H&S was mixed with unscreened data from Schweingruber by McIntyre in his “sensitivity test” (whether or not Schweingruber himself selected sensitive trees in his chronology).

Or am I on the wrong track?

Re 694, 693

Deep Climate, thanks for your comments. Sounds like I might have been premature in my comments on selection, and in my strawman above. Apologies. I thought I didn’t understand the selection of dead trees; it sounds like I also don’t understand the selection of living trees!

Re my assumption (c) it sounds like I might have it wrong, but I need to think through the implications. Jim says:-

“That is, the very ability/necessity to cross date to extend the series back in time (and establish a decent sample size for a given time), which depends on inter-annual ring variability, ensures that you will at the same time be selecting specimens from the past that responded in a way similar to the modern, calibration trees”. And your quote from the H&S paper says something similar.

I guess the question then becomes “how do they select LIVING trees?” If living trees are also selected only on inter-annual ring variability, then Jim’s comment seems correct. I can see this working if dendros took a truly random sample of living trees (e.g. walk past 100 trees, roll a die at each tree, and take a sample if you throw a 6) and then performed the same inter-annual ring variability selection among those random samples. But my (limited) understanding is that living tree selection is an on-site process of looking for trees at the right location within the stand. Jim’s earlier comments kind of support this understanding.

The upshot is I still don’t understand how to reconcile the selection process for living trees with that for dead trees.

Thanks

Re 694

Deep Climate, Jim, I really don’t understand how H&S’s selection criterion pertains to climate. (I’m spending waaaay too much time on this). Can you help me understand?

I agree that selecting the samples with the highest inter-annual variability helps develop the most accurate chronology (x-axis). But the climatological signal is (literally) orthogonal to this (y-axis). (also comments 695 and 697).

What H&S have done seems to involve a hidden assumption:- that the inter-annual temperature variation was high at all times in the past. I can’t see any justification for this assumption, without a-priori knowledge of inter-annual temperature variations in the past.

During periods when inter-annual temperature variation is low, the trees which best represent temperature will be those with low inter-annual variability. But H&S’s algorithm in such periods will select the wrong trees:- ones which have suffered from small-scale events which were spatially inhomogeneous.

So for example if there was a long period of stable temperature, but during that time there was a localised event affecting part of the region (eg a flood), H&S’s algorithm will select the trees in the flooded region, because they show a larger inter-annual variation. But that variation was a result of the flood, not temperature changes. This won’t affect the chronology, but it will affect the temperature reconstruction.

Analogy:- if you’re studying history, war and famine events are great ways to date your chronology. But they are very bad ways of inferring the average citizen’s lifestyle during a long historical period.

A while ago I kind of liked Jim’s argument that responder trees in the present have high inter-annual variability, so trees in the past with the same property are probably responders too. But now I think that all we can say about highly variable dead trees is just that – they are highly variable. I don’t understand how that variability can be attributed to temperature without a-priori information.

I guess H&S would argue that events which are small spatially will be small temporally, and that selecting long-lived trees will average them out. But I think that even if this is true, their selection algorithm will perform worse with respect to a climate signal than using the entire data set. H&S’s algorithm will always have some bias (however small) towards spatially inhomogeneous events which are not temperature related. Using the entire data set will have the best chance of averaging these out.
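The flood scenario can be played out as a small deterministic toy. Assumptions, all hypothetical: 10 trees share the same low-variance temperature signal, 3 of them grew in a flooded patch and lost 0.5 units of growth in one year, and the screening step keeps the 3 trees with the largest interannual variance. It illustrates the mechanism described above, not how H&S actually screened their samples or how large such an effect would be in real data.

```python
from statistics import mean, pvariance

# Hypothetical quiet period: small year-to-year temperature anomalies.
temperature = [0.0, 0.1, -0.1, 0.0, 0.1, -0.1, 0.0, 0.1]
FLOOD_YEAR = 3  # hypothetical year index of a local flood


def tree_series(flooded):
    """Ring index = 1 + temperature, minus a non-climatic drop if flooded."""
    series = [1.0 + t for t in temperature]
    if flooded:
        series[FLOOD_YEAR] -= 0.5
    return series


trees = [tree_series(flooded=(i < 3)) for i in range(10)]  # 3 of 10 flooded


def select_most_variable(series_list, k):
    """Screening step: keep the k series with the largest variance."""
    return sorted(series_list, key=pvariance, reverse=True)[:k]


def composite(series_list):
    """Simple year-by-year mean across series."""
    return [mean(year) for year in zip(*series_list)]


def max_error(chronology):
    """Largest departure of a composite from the true 1 + temperature."""
    return max(abs(c - (1.0 + t)) for c, t in zip(chronology, temperature))


screened = select_most_variable(trees, 3)
screened_error = max_error(composite(screened))
full_error = max_error(composite(trees))
```

Here the screen picks exactly the three flooded trees, whose composite misses the flood year by 0.5, while the composite of all ten misses it by 0.15.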

Do you or Jim have any views on this?

Cheers

Mark

I think that’s exactly what he did.