This last week has been an interesting one for observers of how climate change is covered in the media and online. On Wednesday a notable paper (Thompson et al) was published in Nature, pointing to a clear artifact in the sea surface temperatures in 1945 and associating it with the changing mix of fleets and measurement techniques at the end of World War II. The mainstream media by and large got the story right – puzzling anomaly tracked down, corrections in progress after a little scientific detective work, consequences minor – even though a few headline writers got a little carried away in equating a specific dip in 1945 ocean temperatures with the gentler 1940s-1970s cooling that is seen in the land measurements. However, some blog commentaries have gone completely overboard on the implications of this study in ways that are very revealing of their underlying biases.

The best commentary came from John Nielsen-Gammon’s new blog where he described very clearly how the uncertainties in data – both the known unknowns and unknown unknowns – get handled in practice (read that and then come back). Stoat, quite sensibly, suggested that it’s a bit early to be expressing an opinion on what it all means. But patience is not one of the blogosphere’s virtues and so there was no shortage of people extrapolating wildly to support their pet hobbyhorses. This in itself is not so unusual; despite much advice to the contrary, people (the media and bloggers) tend to weight new individual papers that make the news far more highly than the balance of evidence that really underlies assessments like the IPCC. But in this case, the addition of a little knowledge has made the usual extravagances look a little more scientific and given them some extra steam.

Like almost all historical climate data, ship-board sea surface temperatures (SST) were not collected with long term climate trends in mind. Thus practices varied enormously among ships and fleets and over time. In the 19th Century, simple wooden buckets would be thrown over the side to collect the water (a non-trivial exercise when a ship is moving, as many novice ocean-going researchers will painfully recall). Later on, special canvas buckets were used, and after WWII, insulated ‘buckets’ became more standard – though these aren’t really buckets in the colloquial sense of the word as the photo shows (pay attention to this because it comes up later).

The thermodynamic properties of each of these buckets are different, and so when blending data sources together to get an estimate of the true anomaly, corrections for these biases are needed. For instance, canvas buckets can read up to 1ºC cooler than modern insulated buckets in some circumstances; the difference depends on season and location, is largest over warm water in winter, and is about 0.4ºC in the global average. Insulated buckets in turn have a slight cool bias compared with measurements taken at the engine-room water inlet, which is the most widely used method at present. Automated buoys and drifters, which have become more common in recent decades, also tend to read cooler than engine-intake measurements. The recent IPCC report had a thorough description of these issues (section 3.B.3), fully acknowledging that these corrections are a work in progress.
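To make the idea of blending concrete, here is a minimal Python sketch that puts readings from different methods on a common engine-inlet basis before averaging them. The offsets are illustrative assumptions loosely based on figures quoted in this post (canvas about 0.4ºC cooler than insulated buckets on the global average, insulated buckets roughly 0.1ºC cooler than inlets); the buoy offset is a hypothetical placeholder, and real corrections vary with season, location and ship.

```python
# Assumed mean bias of each method relative to an engine-inlet reading (ºC).
# Negative = the method reads cooler. Illustrative values only, not published corrections.
METHOD_BIAS = {
    "engine_inlet": 0.0,
    "insulated_bucket": -0.1,
    "canvas_bucket": -0.5,   # about 0.4 cooler than insulated, which is itself 0.1 cooler
    "buoy": -0.1,            # hypothetical placeholder
}


def adjust(reading: float, method: str) -> float:
    """Remove the assumed method bias from one reading."""
    return reading - METHOD_BIAS[method]


def blended_mean(obs: list[tuple[float, str]]) -> float:
    """Average (temperature, method) pairs after bias adjustment."""
    adjusted = [adjust(temp, method) for temp, method in obs]
    return sum(adjusted) / len(adjusted)


if __name__ == "__main__":
    cell = [(18.0, "canvas_bucket"), (18.4, "insulated_bucket"), (18.5, "engine_inlet")]
    print(f"{blended_mean(cell):.2f}")  # 18.50
```

Keeping the offsets in a lookup table separates the correction from the averaging, so the bias estimates can be revised as the work progresses without touching the rest of the calculation.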

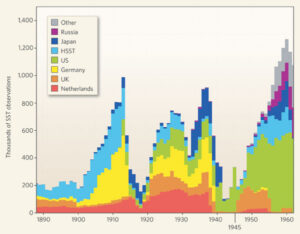

And that is indeed the case. The collection and digitisation of ship logbooks is a huge undertaking and continues to add significant amounts of 20th Century and earlier data to the records. This dataset (ICOADS) is continually growing, and the impacts of the bias adjustments are continually being assessed. The biggest transitions in measurements occurred at the beginning of WWII, between 1939 and 1941, when the sources of data switched from European fleets to almost exclusively US fleets (which tended to use engine inlet temperatures rather than canvas buckets). This offset was large and dramatic and was identified more than ten years ago from comparisons with simultaneous measurements of night-time marine air temperatures (NMAT), which did not show such a shift. The experimentally-based adjustment to account for the canvas bucket cooling brought the sea surface temperatures much more into line with the NMAT series (Folland and Parker, 1995). (Note that this reduced the 20th Century trends in SST.)
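The logic of the NMAT check can be sketched as a simple changepoint search: night-time air temperatures should not jump when ships change how they sample the water, so a step in the SST-minus-NMAT difference flags an instrumental shift. This is a generic least-squares step fit on synthetic data, an illustration under that assumption, not the procedure Folland and Parker actually used; the function name `best_step` is hypothetical.

```python
import numpy as np


def best_step(diff: np.ndarray, min_seg: int = 3) -> tuple[int, float]:
    """Return (index, offset) of the single mean shift that best fits `diff`.

    For every candidate break k the series is split into diff[:k] and diff[k:],
    each segment is replaced by its own mean, and the break with the smallest
    residual sum of squares wins. offset = mean(after) - mean(before).
    """
    n = len(diff)
    best_k, best_rss = None, np.inf
    for k in range(min_seg, n - min_seg + 1):
        left, right = diff[:k], diff[k:]
        rss = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if rss < best_rss:
            best_k, best_rss = k, rss
    offset = diff[best_k:].mean() - diff[:best_k].mean()
    return int(best_k), float(offset)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    years = np.arange(1930, 1951)
    # Synthetic SST-minus-NMAT series: an artificial +0.3 C jump from 1941
    # (a stand-in for a switch from buckets to engine inlets) plus noise.
    diff = np.where(years >= 1941, 0.3, 0.0) + rng.normal(0.0, 0.05, years.size)
    k, offset = best_step(diff)
    print(years[k], round(offset, 2))
```

Because NMAT is measured independently of the water-sampling method, a real climate signal should largely cancel in the difference, leaving the instrumental step easier to see.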

More recent work (for instance, at this workshop in 2005) has focussed on refining the estimates and incorporating new sources of data. For instance, the 1941 shift in the original corrections was reduced and pushed back to 1939 with the addition of substantial and dominant amounts of US Merchant Marine data (which mostly used engine inlet temperatures).

The version of the data that is currently used in most temperature reconstructions is based on the work of Rayner and colleagues (reported in 2006). In their discussion of remaining issues they state:

Using metadata in the ICOADS it is possible to compare the contributions made by different countries to the marine component of the global temperature curve. Different countries give different advice to their observing fleets concerning how best to measure SST. Breaking the data up into separate countries’ contributions shows that the assumption made in deriving the original bucket corrections—that is, that the use of uninsulated buckets ended in January 1942—is incorrect. In particular, data gathered by ships recruited by Japan and the Netherlands (not shown) are biased in a way that suggests that these nations were still using uninsulated buckets to obtain SST measurements as late as the 1960s. By contrast, it appears that the United States started the switch to using engine room intake measurements as early as 1920.

They go on to mention the modern buoy problems and the continued need to work out bias corrections for changing engine inlet data as well as minor issues related to the modern insulated buckets. For example, the differences in co-located modern bucket and inlet temperatures are around 0.1 deg C:

(from John Kennedy, see also Kent and Kaplan, 2006).

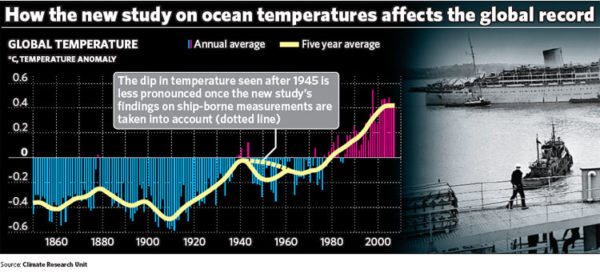

However, it is one thing to suspect that biases might remain in a dataset (a sentiment shared by everyone); it is quite another to show that they really have an impact. The Thompson et al paper does the latter quite effectively by removing the variability associated with some known climate modes (including ENSO) and seeing the 1945 anomaly pop out clearly. In doing this, in fact, they show that the previous adjustments in the pre-war period were probably ok (though there is substantial additional evidence of that in any case – see the references in Rayner et al, 2006). The Thompson anomaly seems to coincide strongly with the post-war shift back to a mix of US and UK ships, implying that post-war bias corrections are indeed required and significant. This conclusion is not much of a surprise to any of the people working on this, since they have been saying it in publications and meetings for years. The issue, of course, is quantifying and validating the corrections, for which the Thompson analysis might prove useful. The use of canvas buckets by the Dutch, Japanese and some UK ships is most likely to blame, and given the mix of national fleets shown above, this will make a noticeable difference from 1945 to perhaps the early 1960s – the details will depend on the seasonal and areal coverage of those sources compared to the dominant US information. The schematic in the Independent is probably a good first guess at what the change will look like (remember that the ocean changes are constrained by the NMAT record shown above):
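The "remove known modes and see what is left" idea can be sketched as a lagged linear regression on a climate index. This is a much-simplified stand-in (one index, one lag, synthetic data), not Thompson et al's actual method; the function name `remove_index` and the three-month lag are assumptions for illustration.

```python
import numpy as np


def remove_index(series: np.ndarray, index: np.ndarray, lag: int = 0) -> np.ndarray:
    """Subtract the least-squares fit of series[t] on index[t - lag] (plus an intercept).

    The first `lag` points have no matching index value and come back as NaN.
    """
    y = np.asarray(series, dtype=float)[lag:]
    x = np.asarray(index, dtype=float)[: len(index) - lag]
    design = np.column_stack([np.ones_like(x), x])      # intercept + index
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residual = np.full(len(series), np.nan)
    residual[lag:] = y - design @ coef
    return residual


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n = 240                                          # 20 years of synthetic monthly data
    enso = rng.normal(0.0, 1.0, n)                   # synthetic ENSO-like index
    drop = np.where(np.arange(n) >= 180, -0.3, 0.0)  # artificial step at month 180
    sst = np.zeros(n)
    sst[3:] = 0.2 * enso[:-3]                        # SST follows the index 3 months later
    sst += drop + rng.normal(0.0, 0.05, n)
    resid = remove_index(sst, enso, lag=3)
    print(f"step in residual: {np.nanmean(resid[180:]) - np.nanmean(resid[:180]):.2f}")
```

In the raw synthetic series the step is partly hidden by the ENSO-driven wiggles; once the fitted ENSO part is subtracted, the drop stands out against a much quieter background.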

Note that there was a big El Niño event in 1941 (independently documented in coral and other records).

So far, so good. The fun for the blog-watchers is what happened next. What could one do to get the story all wrong? First, you could incorrectly assume that scientists working on this must somehow be unaware of the problems (that is belied by the frequent mention of post-WWII issues in workshops and papers since at least 2005, but never mind). Next, you could conflate the ‘buckets’ used in recent decades (as seen in the graphs in Kent et al 2007’s discussion of the ICOADS metadata) with the buckets in the pre-war period (see photo above) and exaggerate how prevalent they were. If you do make those mistakes, however, you can extrapolate to get some rather dramatic (and erroneous) conclusions. For instance, that the effect of the ‘corrections’ would be to halve the SST trend from the 1970s. Gosh! (You should be careful not to mention the mismatch this would create with the independent NMAT data series.) But there is more! You could take the (incorrect) prescription based on the bucket confusion, apply it to the full global temperatures (land included, hmm…) and think that this merits a discussion on whether the whole IPCC edifice had been completely undermined (Answer: no). And it goes on – once the bucket confusion was pointed out, the complaint switched to the scandal that it wasn’t properly explained and, well, there must be something else…

All this shows wishful thinking overcoming logic. Every time there is a similar rush to judgment that is subsequently shown to be based on nothing, it still adds to the vast array of similar ‘evidence’ that keeps getting trotted out by the ill-informed. The excuse that these are just exploratory exercises in what-if thinking wears a little thin when the ‘what if’ always leads to the same (desired) conclusion. This week’s play-by-play was quite revealing on that score.

[Belated update: Interested in knowing how this worked out? Read this.]

DR,

Neither: you’d move. But we can’t move. If we were to build the metaphorical boats, the problem would remain that our crops would be devastated, and so only a few of us would survive. That’s not acceptable, so we would fix the dams as well as we could, as quickly as we could. The major earthquake should give us enough time to do that, but we’ve spent the last decade sitting here arguing that it’s not worth it when we can all learn to swim anyway.

The changes are massive, yes, but necessary as your flood waters wouldn’t subside without us pumping them away.

Gary, you quote from a 1995 article then say “This would indicate to me that natural causes for a change in C12/C13 can not be ruled out. I must also question ….”

Here are some 250 subsequent articles citing that one; you can check your supposition — likely someone else thought of the same questions you raise and may have published. Science works like that.

http://scholar.google.com/scholar?num=100&cites=873523141873022731

#195 DR: Developing countries cannot get fossil fuel-based energy at the cost older countries did. Oil is already about 30% above the 1979 spike ($39, about $100 in today’s money). Coal prices have doubled in world markets over the last year. If every poor country rapidly converted over to the modes of energy use in Europe let alone the US, prices would go through the roof.

As to the enormous expense, a coal-fired power plant doesn’t last forever. When existing plants wear out, instead of replacing them with new coal plants, we should replace them with clean power. If we couple this with aggressive efficiency drives, while cranking up the cost of carbon pollution, the cost need not be very high. Better: once you have renewables in place, you are on a Moore’s Law type cost reduction curve: as each plant wears out, you replace it with one that costs less. And your running costs don’t include consumables.

Better still, renewables don’t rely on massive scale the way coal-fired plants do for low cost, so you can put them in inaccessible places or countries without a national grid.

Contrary to this being a disaster for developing countries, it could be what saves them from being terminally behind.

Your analogy is good. To take it a step further, you seem to want poorer countries who have not built dams yet to build more to the same shonky standard.

Since this is a bit OT, can I invite follow-ups to go to my blog?

Re DR #186:

The IPCC’s Fourth Assessment Report (AR4) was a review of the scientific literature and made it pretty clear that if emissions continue as is, then we are likely or highly likely to experience warming that is likely to bring about significant impacts. If you want peer-reviewed literature, look into the list of papers and reports that they cite.

The impacts of the current droughts in India and Australia demonstrate that you don’t need a huge shift in temperatures and rainfall to cause severe problems. The 5-year drought in India has brought impoverishment and starvation to 50 million people. The ongoing decade-long drought in Australia is bringing about the ecological collapse of the Murray-Darling Basin river system, and bringing our once highly productive irrigation, broad-acre cropping and rangeland grazing sectors to their knees – raising prices domestically and crimping our capacity to export huge quantities of food to the world.

It is apparent from this report from the Royal Society that it is virtually inevitable that CO2-induced ocean acidification will have a very dire impact on oceanic ecosystems and thereby on fisheries.

I am continuously astounded by skeptics quibbling about scientists adjusting and improving their datasets as if this somehow disproves global warming and its predicted impacts, when the science is so clear and the implications are staring us in the face.

I look forward to a delegation of skeptics and conservative politicians heading off to central India to explain that it is not worthwhile trying to prevent predicaments such as theirs because it is much more cost-effective for them to adapt than for us to attempt to reduce the risk that such catastrophes occur. And that there have been droughts before, so it is natural, so there is no reason for us to risk reducing our growth in wealth by a few percent in order to prevent such events becoming more frequent.

DR: “massive changes to the existing infrastructure at enormous expense.”

Whether we want it or not, these massive changes will happen. If it’s not the climate, it will be the mere scarcity of resources mandating the change. We have a choice between starting now and having some level of control on it, and a little time, or to wait until the massive change happens on its own.

There is no lack of signs, see the recent protests in Spain and their victims.

I agree with you that it sucks to be a developing country at the time when everyone realizes that the “wealth” we thought was there turns out to be a liability as much as an asset. They won’t get to ride the illusion of wealth that we have enjoyed for a while. Kinda like an 18-year-old who sees his parents digging their own hole into bankruptcy through debt and comes to the realization that he will have to refrain from all the niceties that they got out of it if he wants to follow a better path. However, is there really another viable option?

DR writes:

We also built our wealth on slavery and exploitation of native peoples. Should we allow the third world to use those as well, since they should have the same rights as us to development?

RE: 197

Forget all that biofuel nonsense. Methanex (Vancouver, BC) sells methanol for $1.50/US gal, and it is used to make biodiesel. Westport (Vancouver, BC) has developed methods for using methane and other light hydrocarbon gas formulations as fuel for Diesel engines. These engines, manufactured by Cummins, are now in service in several BC Transit city buses. China will have about 100 of these buses in service for the Olympics. If the Westport engineers can figure out how to use methane as Diesel fuel, they can certainly figure out how to use methanol.

FlexFuel cars use E85, which is a mixture of about 85% ethanol and 15% light hydrocarbons. The latter are added to lower the flash point of the mixture and promote smooth combustion of the mixture in engines using fuel injection. These hydrocarbons are also required for low temperature service.

With modification, methanol could be used as fuel instead of ethanol in these engines. Racing cars use methanol, and they go really fast.

Methanol is made from natural gas, and there is lots of it in the ocean basin near Dubai and Qatar. The Japanese have several pilot projects under way to recover natural gas from permafrost.

Steve Reynolds, A & H take a reasonable approach, but they run into the same problem that you do any time you use Bayesian statistics–how do you justify your choice of a prior. This was precisely the problem that caused Fisher to give up on Bayesian methods. When you use a uniform prior, at least you cannot be accused of having your prior dominate your data. However, until your data are so overwhelming that the choice of prior does not matter, any other choice requires justification. In particular, the choice of 3 degrees per doubling as the location parameter for the Cauchy prior could be questioned, and you could make arguments for either higher or lower. Likewise, you could question the scale parameter as well. The fact that the choice of prior makes a significant difference means that you must be very careful in your choice of prior and confident in your justification.

One result that is particularly interesting is how dependent the results are on the level of constraint provided by the data. A reduction of 50% in constraining power could raise the upper limit by 1.5-2x and more than double the cost of mitigation.

As to choice of prior (be it uniform, Cauchy or whatever), probably one of the strongest constraints is something along the lines of a weak anthropic argument: we’re here, so the sensitivity can’t be too high (or too low). This makes values much above 20 degrees, and probably above 10 degrees, unlikely. (Note that another problem with the Cauchy is that it is defined from -infinity to +infinity, so it has to be artificially truncated for low values.)

The bottom line is that the data by themselves are not sufficient to constrain us to a level below 5-6 with much confidence. To do this you need to rely to some extent on the prior. Also, notice that while they don’t quote lower limits, there’s very little probability of a sensitivity below 2 in these analyses.
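A toy grid calculation shows both effects described above: the prior matters, and weaker data push the upper limit up. The likelihood here is hypothetical: a Gaussian constraint on the feedback parameter F/S, with F = 3.7 W/m² per doubling, an assumed observed value of 1.2 W/m²/K and an assumed error of 0.4. The Cauchy prior (location 3, assumed scale 2) and the uniform prior are both truncated to the 0-20ºC grid. None of these numbers come from A&H.

```python
import numpy as np

F2X = 3.7                              # W/m^2, round value for doubled CO2
S = np.linspace(0.05, 20.0, 4000)      # sensitivity grid (C); S > 20 excluded


def likelihood(s, lam_obs=1.2, sigma=0.4):
    """HYPOTHETICAL Gaussian constraint on the feedback parameter F2X / S."""
    return np.exp(-0.5 * ((F2X / s - lam_obs) / sigma) ** 2)


def uniform_prior(s):
    return np.ones_like(s)


def cauchy_prior(s, loc=3.0, scale=2.0):
    """Cauchy shape, truncated to the grid (the negative half is dropped)."""
    return 1.0 / (1.0 + ((s - loc) / scale) ** 2)


def interval(prior, sigma=0.4, lo=0.05, hi=0.95):
    """5-95% posterior interval for S, using a cumulative sum on the uniform grid."""
    post = prior(S) * likelihood(S, sigma=sigma)
    cdf = np.cumsum(post)
    cdf /= cdf[-1]
    return S[np.searchsorted(cdf, lo)], S[np.searchsorted(cdf, hi)]


if __name__ == "__main__":
    for name, prior in [("uniform", uniform_prior), ("cauchy", cauchy_prior)]:
        for sigma in (0.4, 0.8):   # 0.8 = data with half the constraining power
            a, b = interval(prior, sigma)
            print(f"{name:8s} sigma={sigma}: {a:.1f}-{b:.1f} C")
```

Because this likelihood levels off at a small but nonzero value as S grows (F/S tends to zero), a uniform prior lets the long upper tail carry real weight, which is why the choice of prior, and the cap at 20ºC, matter so much in this toy setup.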

Finally, note that even if we can constrain sensitivity to below 4.5 degrees per doubling, that doesn’t necessarily mean we’re out of the soup. As Harold Pierce’s missive (#207) shows, even if there weren’t a risk that we’d start seeing outgassing from natural sources (and there is), we might recover and liberate the carbon in the permafrost and the clathrates ourselves.

A correction to my #199: The possible tripling of Arctic warming is not due to the melting of permafrost, which is a consequence. The cause is the loss of Arctic sea ice. It is reported here by NSIDC:

http://nsidc.org/news/press/20080610_Slater.html

“The rate of climate warming over northern Alaska, Canada, and Russia could more than triple during extended episodes of rapid sea ice loss, according to a new study from the National Center for Atmospheric Research (NCAR) and the National Snow and Ice Data Center (NSIDC). The findings raise concerns about the thawing of permafrost, or permanently frozen soil, and the potential consequences for sensitive ecosystems, human infrastructure, and the release of additional greenhouse gases.

‘The rapid loss of sea ice can trigger widespread changes that would be felt across the region,’ said Andrew Slater, NSIDC research scientist and a co-author on the study, which was led by David Lawrence of NCAR. The findings will be published Friday in Geophysical Research Letters.”

Thanks to all. I appreciate the points that were made.

Climate science and even just the possibility of CO2 driven AGW tells us that any new investment in infrastructure should be made with low or zero CO2 emissions in mind. I’ve been a proponent of nuclear energy for years, thinking that we should cut down on pollutants in general and save the limited resources of fossil fuels for transportation. The U.S. should have been building nuclear plants for the last 30 years as Western Europe has but we didn’t due to activism. I hope our rising energy costs will spark innovation and clear the hurdles put in place by the “not in my backyard” crowd.

To the issue of “unequivocal” proof. Not to turn this into an English lesson, but the science of AGW is not unequivocal. It is not an absolute, it’s not proven, it’s not fact, CO2 sensitivity is not X (as opposed to, say, gravity, where objects WILL accelerate at 32.2 feet per second, per second until they reach terminal velocity). Obviously the problem is that if and when CO2 – AGW has been shown to be unequivocal, much damage will have been done.

I can tell you this, we are not in a runaway feedback condition, runaway feedbacks do not pause. This means that a negative feedback (PDO or AMO or reduction in TSI or whatever) is having an effect that may be temporary on global temperatures. This will cause the Oceans to sink more CO2 and give off less, it will by AGW theory reduce water vapor and low cloud cover which will further impact temperature.

So now I have a question: in general terms, what positive feedbacks were used in the development of the GCMs? What negative feedbacks were used?

Re #210, GHG theory is scientifically sound and proven, and as there is now an additional 220 billion tonnes of CO2 and other greenhouse gases in the atmosphere, it can be determined that we are warming up somewhat in line with the expectations of GW theory. Add in land use changes and albedo effects and the balance of evidence states that AGW is happening and is not, scientifically at any rate, incorrect.

The Arctic is warming faster than anywhere else on the planet, and that is an accurate prediction/projection/forecast. The bernard/barnard convection cells that make up the ITCZ etc are expanding (was that predicted, or demonstrated in a GCM?) and will cause deserts to move ever northwards.

Scientifically speaking, RealClimate has spent years spelling this out now, and if someone wants to come around here arguing the opposite they had better have better science than RC does, which is doubtful. Anyone would think that NASA/GISS are making this all up in order to line their pockets, no doubt (sigh).

DR, I will leave your question about feedbacks included in the GCMs to others more qualified. But I will continue to disagree about the meaning of “unequivocal.” No one has been able to produce a mechanism for warming of the past several decades without including the effect of anthropogenic greenhouse gases. How long do you think we need to continue the search for alternative explanations before we decide that it must be AGW?

#210 [DR] “I can tell you this, we are not in a runaway feedback condition, runaway feedbacks do not pause. This means that a negative feedback (PDO or AMO or reduction in TSI or whatever) is having an effect that may be temporary on global temperatures.”

I can tell you this: you don’t understand feedback. Positive feedback is not the same as runaway feedback, as discussed repeatedly on this site.

DR, if you want unequivocal in that sense, might I suggest the Church (and perhaps a denomination other than the Unitarians would be appropriate). No, we cannot say CO2 sensitivity=x. In fact we cannot say that the acceleration due to gravity is x, but rather must say that it is x +/- dx with Y degree of confidence at this particular point on Earth’s surface. We can also say, with about 90% confidence, that CO2 sensitivity is not much less than 2. So, since the subject matter is science and not language arts, I’d suggest that this is about as unequivocal as it gets.

As to nuclear power, the main reason why we have not been building new plants has less to do with NIMBYism and more to do with concerns over proliferation (both wrt fuel and reprocessing). I, too, favor increased use of nuclear power, but I do realize that proliferation and waste storage concerns remain to be addressed satisfactorily.

Finally, a little lesson in physics. A system with positive feedback is not necessarily in a runaway condition, and it is not necessary for positive and negative feedbacks to balance (i.e. an infinite series can converge).
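The convergent-series point can be checked numerically. In this sketch each term is the extra warming the feedback produces in response to the previous increment; the 1.2ºC starting value is the commonly quoted no-feedback response to doubled CO2, and the gain f = 0.5 is an arbitrary assumption. The function name `feedback_series` is hypothetical.

```python
def feedback_series(dt0: float, f: float, n_terms: int) -> float:
    """Partial sum dt0 * (1 + f + f^2 + ... + f^(n_terms - 1)).

    Each term is the extra warming the feedback adds on top of the previous
    increment. For 0 <= f < 1 the sum converges to dt0 / (1 - f); only for
    f >= 1 does it grow without bound (a true runaway).
    """
    total, term = 0.0, dt0
    for _ in range(n_terms):
        total += term
        term *= f
    return total


if __name__ == "__main__":
    # Positive feedback with gain 0.5: converges to 1.2 / (1 - 0.5) = 2.4
    print(f"{feedback_series(1.2, 0.5, 60):.3f}")  # 2.400
    # Gain above 1: the partial sums keep growing as more terms are added
    for n in (10, 20, 40):
        print(n, round(feedback_series(1.2, 1.1, n), 1))
```

Positive feedback here doubles the response without any runaway; only a gain of 1 or more makes the sum diverge.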

Read about feedbacks here:

https://www.realclimate.org/index.php/archives/2007/08/the-co2-problem-in-6-easy-steps/langswitch_lang/sw

here:

https://www.realclimate.org/index.php/archives/2007/10/the-certainty-of-uncertainty/langswitch_lang/sw

here:

https://www.realclimate.org/index.php/archives/2007/04/learning-from-a-simple-model/langswitch_lang/sw

and here:

https://www.realclimate.org/index.php/archives/2006/08/climate-feedbacks/langswitch_lang/sw

to start with.


DR,

science does not “prove” things, because 100% certainty rarely exists in science. Everything comes with uncertainty, and political and economic decisions are made all the time in the face of it. Most of the decisions you make come without 100% confidence in the intended outcome. Maybe you shouldn’t spend money on an education because you might not get the job you want. Maybe you shouldn’t get in your car to work because there is a small chance you’ll get in a catastrophic accident. What the science shows is that there is very high confidence that the modern warmth is predominantly caused by greenhouse gases, and other human activity like deforestation, etc…and that “business-as-usual” consequences out to 2100 will not be negligible. The paradigm of AGW has not only been consistent with collected data sets, but has spawned a wide range of tests which have been borne out, and continues to hold remarkable explanatory and predictive power. Not much more you can ask for.

A “runaway feedback” is a strawman. Of more importance than the end point (which is certainly not going to be like Venus) is the rate of change, and the associated consequences as we go there: glacier melt and sea level rise leading to human displacement, loss of seasonal sea ice, temperature responses of ecosystems and agriculture, enhancement of precipitation gradients and droughts in already dry areas, etc. What matters for the climate sensitivity is the forcing and the sum of the feedbacks (i.e. the net effect), and that appears to be positive. The OLR is the big negative feedback which prevents a runaway, but net positive feedbacks (after the Planck response, water vapor, clouds, albedo, etc) do not imply that the Earth will eventually heat up without bound. Keeping it simple, all that net positive feedbacks imply is that a doubling of CO2 will give a response greater than 1.2 C.

I don’t think it’s correct to say “which feedbacks were used” since, for example, the effects of water vapor are not built-in assumptions but rather predictions by the model, or an emergent property. The short answer is that

positive feedbacks = water vapor (largely in the upper, colder layers of the atmosphere, which moisten as temperature goes up); ice-albedo (the ratio of ice to ocean/land changes as the climate warms, resulting in less reflection of incoming sunlight).

negative feedbacks = the outgoing longwave radiation (OLR), since Earth radiates more at higher temperature according to Planck’s law. With just this feedback (no others), a doubling of CO2 gives 1.2 C warming. Lapse rate is another one, since the strength of the greenhouse effect is affected by how fast temperature decreases with altitude.

You also have the cloud feedback, whose sign we still don’t know with as much certainty as the others, but the best evidence seems to suggest that it is approximately neutral to positive. If there is a net negative feedback from clouds, it can’t be too strong, since the paleoclimatic and modern observational records don’t suggest a low sensitivity, and we have observations showing that lower clouds decline in a warming climate (low clouds control the albedo more than any other kind).
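The same bookkeeping can be written in terms of feedback parameters: equilibrium warming is the forcing divided by the net restoring strength, i.e. the Planck response minus the sum of the other feedbacks. The feedback values below are illustrative round numbers (assumptions, not figures from this thread), with the cloud entry the least certain; the names `FEEDBACKS` and `equilibrium_warming` are hypothetical.

```python
F2X = 3.7        # W/m^2, round value for the forcing from doubled CO2
PLANCK = 3.2     # W/m^2/K, strength of the Planck (OLR) restoring response

# Illustrative feedback strengths in W/m^2/K (positive = amplifying). Assumed values.
FEEDBACKS = {
    "water_vapor": 1.8,
    "lapse_rate": -0.8,
    "surface_albedo": 0.3,
    "clouds": 0.7,       # least certain entry
}


def equilibrium_warming(forcing: float, feedbacks: dict[str, float]) -> float:
    """Warming at which the extra outgoing radiation balances the forcing.

    Net restoring strength = Planck response minus the sum of the other
    feedbacks; it must stay positive for a finite (non-runaway) answer.
    """
    net = PLANCK - sum(feedbacks.values())
    if net <= 0:
        raise ValueError("net feedback amplifies without bound (runaway)")
    return forcing / net


if __name__ == "__main__":
    print(f"{equilibrium_warming(F2X, {}):.2f}")         # 1.16  (Planck response only)
    print(f"{equilibrium_warming(F2X, FEEDBACKS):.2f}")  # 3.08  (with assumed feedbacks)
```

With the Planck response alone this reproduces the roughly 1.2ºC no-feedback figure; adding net positive feedbacks raises the answer while it stays finite, as long as the Planck term still dominates.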

Ray Ladbury: …A & H take a reasonable approach, but they run into the same problem that you do any time you use Bayesian statistics–how do you justify your choice of a prior. … When you use a uniform prior, at least you cannot be accused of having your prior dominate your data.

Thanks for the thoughtful response, but one of the major points of the paper was that choosing a ‘uniform’ prior is inappropriate and does dominate the data!

You may want to make your other points about the paper on Annan’s web site where he can answer them much better than I can:

http://julesandjames.blogspot.com/2008/05/once-more-into-breech-dear-friends-once.html#comments

Ray: Also, notice that while they don’t quote lower limits, there’s very little probability of a sensitivity below 2 in these analyses.

They do quote a lower limit of 1.2C: “…present the results in Figure 2. The resulting 5–95% posterior probability interval is 1.2–3.6C…”

Also, note raypierre’s response did not claim that sensitivity below 2C was ruled out by model results.

Harold (207) says, “…Racing cars use methanol, and they go really fast…”

Yes, but their MPG really sucks ;-) !

I’ve always wondered why methane isn’t higher on the candidate list. I know very little of the specifics, though I understand it has some really nasty inherent properties, among perhaps other problems.

Ron (212), from a purely logical perspective, lack of a proven alternative does not, by itself, prove the primary and make it “unequivocal”, though proof of an alternative can disprove the primary.

OT:

http://www.sciencenews.org:80/view/generic/id/33092/title/Science_Academies_Call_for_Climate_Action

National science academies from over twelve nations, including the G8 countries (among them the USA), China, India and Brazil, issued a joint statement on climate change (“to limit the threat of climate change by weaning themselves off of their dependence on fossil fuels”).

Steve Reynolds–the prior dominates the results because there is not enough data to overcome its influence. That occurs regardless of which prior one chooses early in a Bayesian analysis. The thing is that if you choose an “intelligent” prior, its influence is still felt, but it is at some level prejudging the outcome of the analysis. For instance, what would have happened had A&H chosen 6 as the location parameter for their distribution? Or 12? Or 0.5? You’d wind up with a much different distribution. I’ve run into similar problems in my day job trying to come up with distributions that bound the radiation hardness of microelectronics using multiple types of data. It’s not an easy nut to crack.
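The "what if the location were 6, 12 or 0.5" question is easy to explore on a grid. This sketch assumes a hypothetical Gaussian constraint on the feedback parameter F/S (F = 3.7 W/m², assumed observed value 1.2 W/m²/K, assumed error 0.4) and a Cauchy prior truncated to 0-20ºC with an assumed scale of 2; only the location changes. The function name `posterior_upper` is hypothetical.

```python
import numpy as np

F2X = 3.7                              # W/m^2, round value for doubled CO2
S = np.linspace(0.05, 20.0, 4000)      # sensitivity grid (C), truncated at 20


def posterior_upper(loc, scale=2.0, lam_obs=1.2, sigma=0.4, q=0.95):
    """q-quantile of S under a truncated-Cauchy prior and a HYPOTHETICAL
    Gaussian likelihood on the feedback parameter F2X / S."""
    prior = 1.0 / (1.0 + ((S - loc) / scale) ** 2)
    like = np.exp(-0.5 * ((F2X / S - lam_obs) / sigma) ** 2)
    cdf = np.cumsum(prior * like)      # uniform grid, so cumsum ~ integral
    cdf /= cdf[-1]
    return S[np.searchsorted(cdf, q)]


if __name__ == "__main__":
    for loc in (0.5, 3.0, 6.0, 12.0):
        print(f"location {loc:4.1f}: 95th percentile of S = {posterior_upper(loc):.1f} C")
```

The particular numbers are made up; the point is that the upper limit moves by several degrees as the location changes, which is why the choice needs a defensible justification.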

And yes, while Raypierre’s comment does not completely rule it out, it’s bloody difficult to make a model with a sensitivity that low–and if you did, you’d probably have some pretty unphysical assumptions or results.

Ray Ladbury wrote: “As to nuclear power, the main reason why we have not been building new plants has less to do with NIMBYism and more to do with concerns over proliferation (both wrt fuel and reprocessing).”

The reason the USA has not been building nuclear power plants is that investors don’t like throwing money away. It’s not because “anti-nuclear activists” or “NIMBYs” object to it. It’s because Wall Street won’t touch it. Nuclear power is a proven economic failure — even after a half century of massive subsidies.

That’s why the nuclear industry will not put a shovel in the ground to start building even a single new nuclear power plant unless the taxpayers underwrite all the costs and absorb all the risks — not only the risks of catastrophic accidents but the risks of economic losses. That’s why the nuclear industry has been aggressively (and successfully) pushing for hundreds of billions of dollars in federal subsidies, guarantees and insurance — like the half trillion dollars in nuke subsidies in the Lieberman-Warner climate change legislation that the Senate debated last week. Proponents of a nuclear expansion were unhappy with that bill because in their view it did not offer enough support for nuclear power.

#189: raypierre should have added that France’s great per capita CO2 performance comes mostly from its world-record nuclear capacity: about 80% of the electricity produced in France is of low-CO2 nuclear origin. There is nobody in the world who matches this, and it is heartbreaking to see how Germany’s environmentalists have, by flatly refusing the nuclear option and clinging to the “Ausstieg” (the nuclear phase-out), put the country on the rails leading to energy poverty.

DR, here is another point to consider when deciding when the evidence is sufficient to act. The evidence being considered is based on what is actually observed in the data. However, because of the thermal lag of the system, whatever is observed will only be a fraction of the guaranteed final result, no matter what is done. Stop greenhouse gas emissions totally today and the temperature (say the ten year mean of the annual global average) is expected to continue to increase for at least decades. So if you do not act until things get serious, you can be certain that they are going to get considerably more serious, very possibly catastrophic.
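The thermal-lag argument can be illustrated with the simplest possible energy-balance model. This is a minimal sketch, assuming a single heat reservoir with one time constant and a step change in forcing that is then held fixed (a constant-concentration commitment, which is not quite the same thing as zero emissions). Real forcing ramps up gradually and the deep ocean adds much longer timescales; the 0.8 K, 30-year and time-constant values below are hypothetical.

```python
import math

def realized_fraction(t_years, tau_years):
    """Fraction of the equilibrium warming reached t_years after a step increase in
    forcing, in a one-box model C dT/dt = F - lam*T with time constant tau = C/lam."""
    return 1.0 - math.exp(-t_years / tau_years)

def warming_still_to_come(observed_k, t_years, tau_years):
    """Extra warming if the forcing were held at its current level from now on.
    Equilibrium is T_eq = observed / f, so the remainder is observed * (1 - f) / f."""
    f = realized_fraction(t_years, tau_years)
    return observed_k * (1.0 - f) / f

# Hypothetical: 0.8 K observed, 30 years after the step, for three time constants.
for tau in (10.0, 30.0, 100.0):
    print(f"tau = {tau:5.0f} yr: {warming_still_to_come(0.8, 30.0, tau):.2f} K still to come")
```

The longer the system’s response time, the smaller the fraction of the final warming that has shown up in the observations so far, and the more is still in the pipeline.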

Francis Massen wrote: “France’s great per capita CO2 performance comes mostly from its world record nuclear capacity: About 80% of the electricity produced in France is of CO2 poor nuclear origin. There is nobody in the world who matches this”

It is always surprising to me when nuclear advocates cite France as the world leader in nuclear electricity generation. France has 59 nuclear power plants generating about 63,000 MW. The USA has 104 nuclear power plants, generating about 99,000 megawatts — the largest number of nuclear power plants and the most nuclear generating capacity of any country in the world. The USA is the world leader in nuclear electricity generation, not France.

The French-designed EPR reactor under construction at Olkiluoto, Finland, touted by the nuclear industry as the flagship of new reactor designs, is at least two years behind schedule and 50 percent over budget and has been plagued with safety and quality problems. This is of course normal for the nuclear industry.

Francis Massen wrote: “… it is heartbreaking to see how Germany’s environmentalists have, by flatly refusing the nuclear option and clinging to the “Ausstieg”, put the country on the rails leading to energy poverty.”

Germany is on track to become a powerhouse of clean renewable energy technologies. According to WorldWatch Institute:

Germany is also a world leader in wind-generated electricity:

Worldwide, solar and wind generated electricity are the fastest growing forms of energy production by far. This is the direction we need to go in, not nuclear — which is the most expensive, least effective and — crucially — the slowest path to reducing CO2 emissions from electricity generation.

Ray Ladbury (208): Bayesians make quite a point of ‘background information’. In the current setting of estimating equilibrium climate sensitivity, that means we may use all of physics except the observations that will be used to update the prior. So we are allowed to redo Arrhenius’s calculation correctly to obtain a prior equilibrium climate sensitivity of about 3 K. Now choose to wrap a distribution around that; a Weibull distribution, for sanity and safety. That leaves the issue of the remaining parameter; use the median of the distribution together with the weak anthropic principle (or whatever).

Now update the prior using paleoclimate data. The result should be quite good, IMO, but will still leave very small probabilities attached to large values. So, collect more data and repeat.
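The recipe above (a Weibull prior with its median pinned at about 3 K, then repeated updates) can be sketched on a grid. Assumptions, all hypothetical: a shape parameter of 2 for the “remaining parameter”, and a Gaussian stand-in (centre 3 K, width 1.5 K) in place of real paleoclimate data; “collect more data” is mimicked by applying the same constraint twice.

```python
import math

def weibull_scale_for_median(median, shape):
    """Median of a Weibull(shape k, scale lam) is lam * (ln 2)**(1/k); solve for lam."""
    return median / math.log(2.0) ** (1.0 / shape)

def weibull_pdf(x, shape, scale):
    if x <= 0.0:
        return 0.0
    z = x / scale
    return (shape / scale) * z ** (shape - 1.0) * math.exp(-z ** shape)

def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]

def update(weights, xs, likelihood):
    """One Bayesian update on a grid: posterior proportional to prior times likelihood."""
    return normalize([w * likelihood(x) for w, x in zip(weights, xs)])

def tail_prob(weights, xs, threshold):
    return sum(w for w, x in zip(weights, xs) if x > threshold)

def paleo_likelihood(s):
    """Hypothetical stand-in for a paleoclimate constraint (not real data)."""
    return math.exp(-0.5 * ((s - 3.0) / 1.5) ** 2)

XS = [0.005 * i for i in range(1, 4001)]  # sensitivity grid, 0.005..20 K
SHAPE = 2.0                                # hypothetical choice for the remaining parameter
prior = normalize([weibull_pdf(x, SHAPE, weibull_scale_for_median(3.0, SHAPE)) for x in XS])
post1 = update(prior, XS, paleo_likelihood)
post2 = update(post1, XS, paleo_likelihood)  # "collect more data and repeat"

for name, w in (("prior", prior), ("one update", post1), ("two updates", post2)):
    print(f"{name:12s} P(S > 6 K) = {tail_prob(w, XS, 6.0):.4f}")
```

With shape 2 the prior puts exactly 1/16 of its mass above twice the median (6 K); each update shrinks that tail without ever removing it, which is the “very small probabilities attached to large values” point.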

Ron Taylor (223) wrote “Stop greenhouse gas emissions totally today and the temperature (say the ten year mean of the annual global average) is expected to continue to increase for at least decades.” I would prefer using at least 22-year averages, but that is a small point. The larger one is that it takes several centuries for the oceans to warm to near equilibrium with the air.

My own amateur estimate is that if there were no further emissions after today, there is about 0.5+ K of further global warming to come. That’ll melt some, but not all, of the Greenland ice sheet.

Ray Ladbury: –the prior dominates the results because there is not enough data to overcome its influence.

I don’t think resolving that was the point of the paper (since it only used ERBE data). There is independent data available from paleoclimate and volcanic effects that could be used for the prior.

Ray: And yes, while Raypierre’s comment does not completely rule out, it’s bloody difficult to make a model with a sensitivity that low–and if you did, you’d probably have some pretty unphysical assumptions or results.

I still have not seen any real evidence to support that statement. The only expert opinion we got did not support it.

Apparently a slightly negative (or even zero) cloud feedback would probably allow a sensitivity of less than 2C. From the published info that I have seen, that is within current uncertainty.
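The cloud-feedback argument rests on the usual linear feedback relation, S = S0 / (1 - f), where f is the sum of the feedback factors. A sketch with assumed numbers: S0 of about 1.2 K for the no-feedback (Planck-only) response is a commonly quoted round figure, and the 0.4 lumped together for water vapour, lapse rate and albedo is a hypothetical value chosen for illustration.

```python
def sensitivity(s0_k, feedback_factors):
    """Equilibrium sensitivity S = S0 / (1 - f), with f the sum of feedback factors.
    Only meaningful for f < 1 (f >= 1 would be a runaway in this linear picture)."""
    f = sum(feedback_factors)
    if f >= 1.0:
        raise ValueError("total feedback factor must be < 1")
    return s0_k / (1.0 - f)

S0 = 1.2           # assumed no-feedback response to doubled CO2, in K
F_NON_CLOUD = 0.4  # hypothetical combined water vapour + lapse rate + albedo factor

for f_cloud in (-0.1, 0.0, 0.1, 0.2):
    s = sensitivity(S0, [F_NON_CLOUD, f_cloud])
    print(f"cloud feedback {f_cloud:+.1f} -> S = {s:.2f} K")
```

Because f sits in the denominator, the same-sized change in the cloud term moves S further on the high side than on the low side, which is one reason sensitivity estimates have long upper tails.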

Re #217: go to http://www.westport.com and read about the clean diesel technology they have developed.

Methanol has a much lower energy density than gasoline. So what? The low price compensates for that. However, if it were used as a motor fuel, governments would slap a lot of taxes on it.

But in the meantime, suppose you have your engine modified to run on methanol, just like the racing cars, and you have drums of methanol delivered right to your doorstep from a commercial supplier of this “solvent”. Who is to know that you are using it as motor fuel? If you really want to juice it up, you can add some propylene oxide, which is also readily available and cheap.

You could become a methanol “bootlegger”, get really rich, and then drive a big honking Dee-Lux Lincoln Navigator or a Viper with that monster V-10 engine! And then watch all the neighbors go nuts!
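The energy-density point about methanol comes down to price per unit of energy rather than price per litre. A sketch, assuming approximate volumetric lower heating values of about 15.8 MJ/L for methanol and 32 MJ/L for gasoline (real fuels vary) and a hypothetical gasoline price; it ignores differences in engine efficiency and, as the comment notes, taxes.

```python
# Approximate volumetric lower heating values (assumed round figures; real fuels vary).
METHANOL_MJ_PER_L = 15.8
GASOLINE_MJ_PER_L = 32.0

def cost_per_mj(price_per_litre, mj_per_litre):
    """Fuel cost per megajoule of energy content."""
    return price_per_litre / mj_per_litre

def break_even_methanol_price(gasoline_price_per_litre):
    """Methanol price per litre at which a megajoule costs the same as from gasoline."""
    return gasoline_price_per_litre * METHANOL_MJ_PER_L / GASOLINE_MJ_PER_L

# Hypothetical gasoline price of 1.00 (any currency) per litre.
print(f"break-even methanol price: {break_even_methanol_price(1.00):.3f} per litre")
```

In other words, with these figures methanol has to cost less than about half as much per litre as gasoline before “the low price compensates”.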

My own amateur estimate is that if there were no further emissions after today, there is about 0.5+ K of further global warming to come. That’ll melt some, but not all of, the Greenland ice sheet.

Really? 0.5 degrees is all that separates Greenland from becoming green again? I’m sure someone on a long-bets site would take you up on that.

Re #228, Harold: how much road tax is there on methanol?

And what are the drawbacks of methanol if you spill it into the environment?

And what happens if you spill methanol on yourself?

The problem with biofuels will always be that people will start growing fuel rather than food. That will automatically lead to less food and more expensive food, which will make the poorest people in the world suffer even more, just because we focused on cars rather than looking at the entire energy sector and picking the most effective measures first, which clearly means replacing coal and gas power plants with renewables and nuclear. Cars are a popular and telegenic focus: politicians driving around in Teslas and activists slicing SUV tires, all for the “greater good”, but somewhat pointless and rather symbolic compared to the real issues.

@Secular Animist #224

Those numbers are misleading. Wind contributed 5% in 2007, with average output of just 20% of installed capacity. Schleswig-Holstein is an exception because it’s the small state directly south of Denmark (a narrow, flat strip of land), and its figures are considerably better because it has no big cities, very little industry and rather constant wind conditions. But even there, 100% is laughable; they will need backup. The 25% claim for PV is a joke that went through the press a couple of months ago. Somebody had simply looked at the installed PV capacity and assumed exponential growth, exactly the sort of science usually and rightfully smashed on this website. The “Ausstieg” from nuclear will not persist, BTW. The conservative parties most likely to rule the country after the next elections have already announced that they will go back to nuclear rather than build the new coal power plants that the old government had planned just to get rid of nukes.
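The gap between installed capacity and delivered energy is simple arithmetic: annual energy = nameplate power × 8760 hours × capacity factor. A sketch with a hypothetical 20 GW fleet (not Germany’s actual figure), plus the fixed-rate compound growth that makes naive extrapolations of installed capacity run away; the 40%/yr rate is likewise hypothetical.

```python
HOURS_PER_YEAR = 8760

def annual_energy_twh(installed_gw, capacity_factor):
    """Energy delivered in a year: nameplate GW x hours x capacity factor, in TWh."""
    return installed_gw * HOURS_PER_YEAR * capacity_factor / 1000.0

def naive_extrapolation(value_now, annual_growth, years):
    """Compound growth at a fixed rate, the kind of projection the comment criticises."""
    return value_now * (1.0 + annual_growth) ** years

# Hypothetical fleet: 20 GW of nameplate wind capacity.
print(f"at capacity factor 0.20: {annual_energy_twh(20.0, 0.20):.1f} TWh/yr")
print(f"if nameplate were always delivered: {annual_energy_twh(20.0, 1.0):.1f} TWh/yr")
# Hypothetical 40%/yr growth sustained for 10 years multiplies capacity about 29-fold.
print(f"growth factor: {naive_extrapolation(1.0, 0.40, 10):.1f}x")
```

At a capacity factor of 0.2, every gigawatt of nameplate delivers only a fifth of what the headline capacity suggests.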

Re #224: very true and well put, but the world will need 7 TW more power by 2030, and renewables, although set to expand aggressively, will not even cover that increase over the 14 TW we use today.

There is little chance of avoiding 2C, but a good chance of averting anything more, unless CCS (carbon capture and storage) does not work and we still intend to go coal-mad, which even Germany is planning to do.

The complexities of the energy-mix requirements for heat, electricity and liquid fuel are all underpinned by liquid fuel. Global warming will not be a worry to most people if we see $250-a-barrel oil, as a large part of the economy will be wiped out, along with globalisation etc.
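The 14 TW to 21 TW figure implies a steady growth rate that is easy to check. A sketch, assuming “today” means 2008 (the year of this thread is not stated in the comment) and smooth compound growth.

```python
def implied_annual_growth(start, end, years):
    """Constant annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0

TODAY_TW = 14.0
EXTRA_TW = 7.0
YEARS = 2030 - 2008  # assumes "today" means 2008

rate = implied_annual_growth(TODAY_TW, TODAY_TW + EXTRA_TW, YEARS)
print(f"implied growth in world power demand: {rate:.2%} per year")
print(f"average new supply needed: {EXTRA_TW / YEARS:.2f} TW per year")
```

Under these assumptions demand grows by a little under 2% a year, which means adding roughly a third of a terawatt of new supply every year just to keep pace.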

#230: What happens if you spill methanol on yourself? Nothing. Bathing in it, however, is not recommended, and drinking it is deadly. I speak from experience on the first and from intelligence on the latter two.

Thanks, all, for the education.

Ron Taylor – What do you have in mind when you say “act now”? My car gets good mileage, I’ve just re-insulated my home, I use CF bulbs where I can stand to, and I have modern, efficient appliances and all that. I’m a firm supporter of nuclear and hydro power (wind and solar wouldn’t make a dent in the NorthEast’s needs). I’d love to be able to buy a diesel-electric car, with a diesel generator running at a steady RPM to drive electric motors; at 50 to 100 mpg it would eat silly hybrids and fuel cells for lunch, and I do believe in conservation.

But what do you mean, or what do RealClimate regulars mean, by “act now”?

Re: Bayesian analysis, priors and sensitivity. Bayesian analysis is easy when you have lots and lots of data–then your choice of prior doesn’t matter. However, in the realm of limited data, where we usually live, your choice of prior effectively determines your result. One approach I’ve discussed with a colleague is doing the analysis over “all possible priors”. Then you can look at a weighted average to get your best fit, and at how things change with the choice of prior. In reality, of course, you have to choose a subset of possible priors, and you have to figure out how to weight them (weighting them all evenly is in fact akin to a “maximum entropy” prior on priors), but it is probably still an improvement.

The thing is, the advantage of Bayesian analysis is that it allows you to include all sorts of information that couldn’t be included in a standard probabilistic analysis, and so get more definitive answers than you could otherwise. Unfortunately, there are few generally accepted guidelines as to HOW TO include this information.

My general caveat is that when your choice of prior influences your results significantly, you need to be very careful. Since this is almost always the case (or why else would you be using Bayesian methods), caution is the watchword.
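The “all possible priors” idea can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical three-member family of priors (flat, Cauchy centred on 3 K, and flat in log S), a Gaussian stand-in for the data, and equal weights; as the comment says, in practice both the family and the weighting are choices you have to make.

```python
import math

XS = [0.01 * i for i in range(1, 2001)]  # sensitivity grid, 0.01..20 K

# A small, hypothetical family of priors (unnormalized; constants cancel below).
def flat_prior(s):
    return 1.0

def cauchy_prior(s, loc=3.0, scale=3.0):
    return 1.0 / (1.0 + ((s - loc) / scale) ** 2)

def log_flat_prior(s):
    return 1.0 / s  # flat in log S

def likelihood(s, mean=3.0, sd=1.5):
    """Hypothetical Gaussian stand-in for the observations."""
    return math.exp(-0.5 * ((s - mean) / sd) ** 2)

def posterior_mean(prior):
    w = [prior(s) * likelihood(s) for s in XS]
    return sum(s * wi for s, wi in zip(XS, w)) / sum(w)

def average_over_priors(priors, weights=None):
    """Weighted average of posterior means, plus the range they span.
    Equal weights by default, i.e. a 'maximum entropy' prior on priors."""
    means = [posterior_mean(p) for p in priors]
    if weights is None:
        weights = [1.0 / len(priors)] * len(priors)
    avg = sum(w * m for w, m in zip(weights, means))
    return avg, min(means), max(means)

avg, lo, hi = average_over_priors([flat_prior, cauchy_prior, log_flat_prior])
print(f"averaged posterior mean {avg:.2f} K (range over priors {lo:.2f} to {hi:.2f} K)")
```

The spread between `lo` and `hi` is the useful diagnostic: when it is large, the prior is doing much of the work, which is exactly the situation the caveat above warns about.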

DR, “Act now” means do whatever we can to reduce CO2 emissions, so we can hopefully buy enough time to come up with a real solution to the issue. It means trying to convince others of the necessity of such action. It means voting for “reality-based” politicians who recognize the necessity of decreasing CO2 emissions. It means realizing that this is a global problem that invalidates the usual economic zero-sum game model. Progress in China or India or Africa is as much a gain as progress in the US.

It means planting trees to buy time and a whole lot more. Above all, it means banishing complacency. The more progress we make reducing CO2, the more time we have to come up with a workable solution. Perhaps our great-great-great grandchildren can afford to look behind them.

BlogReader (229) wrote “0.5 degrees is all that separates Greenland from becoming green again?” No, just that more of the Greenland ice sheet would eventually melt. Remember, that is the global temperature change, distributed unequally between the tropics and the polar regions.

My take on the Greenland ice sheet is that it is already in negative mass balance. Somewhere earlier on RealClimate (I think) there is a link to a paper simulating the extent of the ice sheet during the Eem (the Eemian interglacial period). Although the Eemian was considerably warmer than now, by about 2+ K, some of the simulated ice sheet survived.

In any case CO2 emissions didn’t stop yesterday, aren’t stopping today, and won’t tomorrow. It’ll become even warmer.

Henning (231): Not entirely. First of all, there is a considerable (to put it mildly) supply of biomass as biofuel feedstock in the form of waste: agricultural, animal, forestry and, now that half the world’s people live in cities, municipal. This alone will make a substantial contribution.

Moreover, in the global South especially, there is an abundant supply of unused, sometimes degraded, soils. Many plants suggested as biofuel feedstocks will thrive under conditions where typical food and fodder plants fare only poorly. So using those areas and plants does not compete with food.

Then there are the semi-competitive plants, for example tubers such as sweet potatoes and cassava. People prefer ‘better’ foods, but the tubers will grow on poorer soils. Using the tubers as biofuel feedstock when the food crop does well makes good sense; if the food crop (partially) fails, then eat the tubers. It is a form of insurance.

Finally there are the directly competitive crops such as maize, rapeseed, soy and palm oil. Ethanol from maize doesn’t even help the climate. (Ethanol from sugarcane does.) Biodiesel from rapeseed is hardly better. Both, in an ideal world, would be discouraged. That leaves, in this brief analysis, the competition between food and fuel for major oil crops such as soy and palm. With regard to palm oil, it is now in such great demand as food that the biodiesel producers in Southeast Asia are having serious difficulties obtaining affordable supplies. A ‘free market’ proponent would surely find this to be an appropriate situation. I’d rather see saner policies which don’t lead to so much waste of resources, myself.

DR, “Act now” also means writing letters to the press and answering posts on discussion forums that claim climate change is a hoax. The will to act at the top level of politics is ultimately driven by the fear of losing votes; a small but vocal climate inactivist community can give politicians the insulation from reality that permits them to avoid unpopular steps like encouraging everyone to use trains rather than cars (e.g. by ripping out roads where trains are a viable alternative). This is a useful prerequisite to emissions reduction because any reduction in emissions from power generation is immediately effective for trains; for cars it only helps if we all use electric cars (and likewise buses).

DR – I have done the things you have done. By “act now”, I mean that, as a society, we need to dedicate ourselves to the systemic change that is needed to address this issue. I am talking about government policy: not the pandering, short-term, politically expedient stuff, but the kind of long-term commitment that the problem demands. It means changing our approach to transportation, electric power generation, etc. And that will not happen without a comprehensive commitment to research, tax incentives, and everything else the government can do to make this happen (without taking it over and thus killing it).

DR writes:

And you know this how? Based on what?

@David B. Benson #239

In a perfect, sane world, people would grow fuel exclusively on soil not suitable for food, and there would probably be laws ensuring that soil worldwide is used for food until everybody has enough to eat and nobody suffers. But what we see every day is that people do what’s best for them as individuals. And you can’t blame a farmer for planting fuel rather than food when he can make more money that way. Suddenly those of us fueling our cars are competing directly with people who want to eat and already have trouble affording it. This won’t save the world; it will split it even further. I’m totally with you when it comes to using waste that would otherwise just rot in the sun, and maybe even when it comes to exploiting soil that wouldn’t be used at all if it weren’t for biofuel, but I don’t see how this can be ensured at all. It didn’t work against the insanity of people growing drugs for the rich in the poorest parts of the world, replacing food for many with money for a few, and those are illegal. I don’t want to find out what it’ll be like for something that isn’t.

DR wrote: “… wind and solar wouldn’t make a dent in the NorthEast’s needs …”

On the contrary, the offshore wind power resources of the Northeast alone could “make a dent” in the entire country’s needs. According to a September 2005 article in Cape Cod Today:

A good source for information on wind energy potential in the Northeast is the New England Wind Forum on the US Department of Energy website.

How much wood would a woodchuck chuck if the woodchuck had a contract to fuel the number of power plants (displacing coal/natural gas burners) necessary to supplement power when the wind/sun fail to do the job?

re #239 and 243

Don’t you think that your comments relating to production of biofuels on “unused or waste land” are somewhat anthropocentric? We do share our planet with other species. We have already appropriated vast areas of the globe for our agricultural activities and pushed many other species to the edges. If it is deemed necessary to sustain an ever-growing human population in the face of the twin threats of peak oil and global warming, we should make more efficient use of the land we do farm. By eating much less meat, we would probably free up well over half the area we currently use for the production of biomass. I say this with regret because I am an enthusiastic carnivore with a repugnance for rabbit food.

[Response: There is a strong case for wilderness untouched by humans, but in the spirit of Aldo Leopold, it is also possible for human uses of land to co-exist with the needs of other species. Industrial agriculture doesn’t do that, but that is not the only model of land use. With regard to biofuels, there’s increasing interest in natural prairie ecosystems as a source of feedstock for cellulosic ethanol. –raypierre]

JCH has an interesting point — is wood-burning a viable energy source?

re Raypierre’s response to #246

I agree that it is possible for some human uses of land to co-exist with the needs of (some) other species. However, your apparent readiness to damn so-called industrial agriculture in favour of a more sustainable organic approach is IMHO somewhat naive. The latter model would/will serve us well when and if global population levels drop to 2-3 billion and if we still have a reasonable climate by then. It is how you get there from here that should be exercising everyone (i.e. from 6.5 to 10 and back to, say, 3 billion).

I accept that industrial agriculture is energy-intensive, but it is the very use of all this extra energy that has allowed massive yield increases, which in turn have supported population growth that would otherwise have been unsustainable. I am led to believe that the green revolution doubled the world’s carrying capacity. A sudden widespread reversion to organic farming would lead to Malthusian consequences, although, in the West, the affluent appear to acquire feelings of virtue from the purchase of overpriced organic produce.

The only way we may be able to have a soft landing for the planet and its human population (assuming you physicists have your climate sensitivities properly calculated) is to work as rapidly as possible on acceptable methods of population control while maintaining high but ultimately declining levels of industrial-scale agriculture.

I suggested that, if you want more bang for your buck, you might have to consider moving human dietary habits away from a heavy dependence on meat. This would require top chefs and food writers to start developing meals from vegetables that the carnivores among us could find remotely palatable, instead of banging on about the mythical health and taste virtues of organic produce. Currently, over half of all agricultural land is grassland, largely but not entirely because it is relatively less suitable for arable production. Much of this could be given over to forestry or other forms of biomass.

The human digestive system is designed to handle diets similar to those of pigs and poultry. Generally, vegetable diets are lacking in some of the essential amino acids needed by monogastric animals. Traditionally, these limiting amino acids are provided through meat, fish, eggs or dairy products. In productive poultry or pigs, up to 7% of old-fashioned diets consisted of meat or fish meal. On economic grounds, these have mainly been replaced with synthesised limiting amino acids with no associated performance loss. It should also be noted that pig and poultry diets are based on cereals and vegetable protein concentrates (solvent-extracted soya bean meal, for example) with additions of appropriate mineral/vitamin supplements. Monogastric farm animals are not fed salads (flavoured water with a tad of minerals and vitamins) or even green winter vegetables. It follows that, if the name of the game is to maximise the number of humans that can be sustained for a minimum of agricultural production, feed them all poultry or pig diets and get rid of the poultry and pigs. Replace ruminants with trees, harvesting the latter for (transport) fuel and CO2-capturing biochar while simultaneously reducing methane emissions.

O, brave new world. I’ll soon be out of it.

Excellent post by Douglas Wise. Appealing ideas are not the same as *effective* ideas. Low-yield “organic” agriculture, like most alternative power scenarios, simply cannot (or won’t) ever scale to meet even a fraction of the growing global demand. An exception, and therefore a major part of the solution, is nuclear power, which scales up very well.

The claims that Mars and Jupiter are warming up get lots of play, especially from “conservative cartoonist” Mallard Fillmore. What’s up with that? What is the science and the not-science there?