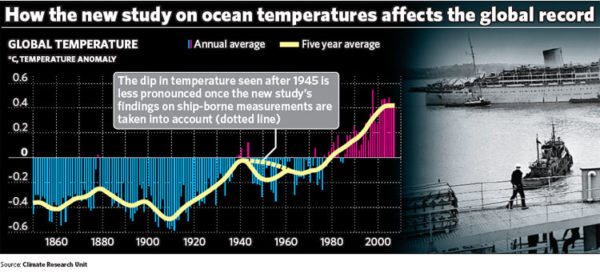

This last week has been an interesting one for observers of how climate change is covered in the media and online. On Wednesday an interesting paper (Thompson et al) was published in Nature, pointing to a clear artifact in the sea surface temperatures in 1945 and associating it with the changing mix of fleets and measurement techniques at the end of World War II. The mainstream media by and large got the story right – puzzling anomaly tracked down, corrections in progress after a little scientific detective work, consequences minor – even though a few headline writers got a little carried away in equating a specific dip in 1945 ocean temperatures with the more gentle 1940s-1970s cooling that is seen in the land measurements. However, some blog commentaries have gone completely overboard on the implications of this study in ways that are very revealing of their underlying biases.

The best commentary came from John Nielsen-Gammon’s new blog where he described very clearly how the uncertainties in data – both the known unknowns and unknown unknowns – get handled in practice (read that and then come back). Stoat, quite sensibly, suggested that it’s a bit early to be expressing an opinion on what it all means. But patience is not one of the blogosphere’s virtues and so there was no shortage of people extrapolating wildly to support their pet hobbyhorses. This in itself is not so unusual; despite much advice to the contrary, people (the media and bloggers) tend to weight new individual papers that make the news far more highly than the balance of evidence that really underlies assessments like the IPCC. But in this case, the addition of a little knowledge made the usual extravagances a little more scientific-looking and has given it some extra steam.

Like almost all historical climate data, ship-board sea surface temperatures (SST) were not collected with long term climate trends in mind. Thus practices varied enormously among ships and fleets and over time. In the 19th Century, simple wooden buckets would be thrown over the side to collect the water (a non-trivial exercise when a ship is moving, as many novice ocean-going researchers will painfully recall). Later on, special canvas buckets were used, and after WWII, insulated ‘buckets’ became more standard – though these aren’t really buckets in the colloquial sense of the word as the photo shows (pay attention to this because it comes up later).

The thermodynamic properties of each of these buckets are different, so when blending data sources together to estimate the true anomaly, corrections for these biases are needed. For instance, canvas buckets give a temperature up to 1ºC cooler than modern insulated buckets in some circumstances (depending on season and location: the biggest differences come over warm water in winter, and the global average is about 0.4ºC cooler). Insulated buckets in turn have a slight cool bias compared with measurements taken at the engine-room water intake, which is the most common method at present. Automated buoys and drifters, which have become more common in recent decades, also tend to read cooler than engine intake measurements. The recent IPCC report had a thorough description of these issues (section 3.B.3), fully acknowledging that these corrections are a work in progress.

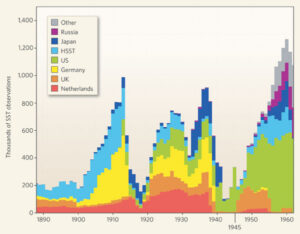

And that is indeed the case. The collection and digitisation of the ship logbooks is a huge undertaking and continues to add significant amounts of 20th Century and earlier data to the records. This dataset (ICOADS) is continually growing, and the impacts of the bias adjustments are continually being assessed. The biggest transition in measurements occurred at the beginning of WWII, between 1939 and 1941, when the sources of data switched from European fleets to almost exclusively US fleets (which tended to use engine inlet temperatures rather than canvas buckets). This offset was large and dramatic and was identified more than ten years ago from comparisons with simultaneous measurements of night-time marine air temperatures (NMAT), which did not show such a shift. The experimentally-based adjustment to account for the canvas bucket cooling brought the sea surface temperatures much more into line with the NMAT series (Folland and Parker, 1995). (Note that this reduced the 20th Century trends in SST.)
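To see why a change in the mix of fleets matters, here is a minimal sketch with made-up numbers: two measurement methods with different assumed biases, a constant true anomaly, and a shift in each method's share of the observations. The method names, the −0.4ºC canvas offset (borrowed from the global-average figure above and, as a simplification, treated as constant) and the fleet shares are all illustrative assumptions, not the actual ICOADS corrections.

```python
# Hypothetical bias of each method relative to engine-inlet readings (deg C).
# Treating the canvas-bucket bias as one constant is a simplification.
METHOD_BIAS = {"canvas_bucket": -0.4, "engine_inlet": 0.0}

def blended_anomaly(true_anomaly, mix, correct=False):
    """Share-weighted average of the readings from each measurement method.

    true_anomaly: hypothetical true SST anomaly (deg C)
    mix: method name -> fraction of observations (fractions sum to 1)
    correct: if True, remove each method's assumed bias before averaging
    """
    total = 0.0
    for method, share in mix.items():
        reading = true_anomaly + METHOD_BIAS[method]
        if correct:
            reading -= METHOD_BIAS[method]
        total += share * reading
    return total

# Hypothetical fleet mix before and after a switch to inlet-dominated data.
before = {"canvas_bucket": 0.8, "engine_inlet": 0.2}
after = {"canvas_bucket": 0.1, "engine_inlet": 0.9}

# The true anomaly is identical in both periods, so any jump is an artifact.
raw_jump = blended_anomaly(0.0, after) - blended_anomaly(0.0, before)
fixed_jump = (blended_anomaly(0.0, after, correct=True)
              - blended_anomaly(0.0, before, correct=True))
print(f"uncorrected jump: {raw_jump:+.2f} C, corrected jump: {fixed_jump:+.2f} C")
```

Because the true anomaly never changes, the whole +0.28ºC jump in the uncorrected average comes from the changing mix; subtracting each method's bias before averaging removes it. Real corrections are harder because the biases vary with season, location and ship.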

More recent work (for instance, at this workshop in 2005) has focussed on refining the estimates and incorporating new sources of data. For instance, the 1941 shift in the original corrections was reduced and pushed back to 1939 with the addition of substantial and dominant amounts of US Merchant Marine data (which mostly used engine inlet temperatures).

The version of the data that is currently used in most temperature reconstructions is based on the work of Rayner and colleagues (reported in 2006). In their discussion of remaining issues they state:

Using metadata in the ICOADS it is possible to compare the contributions made by different countries to the marine component of the global temperature curve. Different countries give different advice to their observing fleets concerning how best to measure SST. Breaking the data up into separate countries’ contributions shows that the assumption made in deriving the original bucket corrections—that is, that the use of uninsulated buckets ended in January 1942—is incorrect. In particular, data gathered by ships recruited by Japan and the Netherlands (not shown) are biased in a way that suggests that these nations were still using uninsulated buckets to obtain SST measurements as late as the 1960s. By contrast, it appears that the United States started the switch to using engine room intake measurements as early as 1920.

They go on to mention the modern buoy problems and the continued need to work out bias corrections for changing engine inlet data as well as minor issues related to the modern insulated buckets. For example, the differences in co-located modern bucket and inlet temperatures are around 0.1 deg C:

(from John Kennedy, see also Kent and Kaplan, 2006).

However, it is one thing to suspect that biases might remain in a dataset (a sentiment shared by everyone); it is quite another to show that they really have an impact. The Thompson et al paper does the latter quite effectively by removing variability associated with some known climate modes (including ENSO) and seeing the 1945 anomaly pop out clearly. In doing this, in fact, they show that the previous adjustments in the pre-war period were probably ok (though there is substantial additional evidence of that in any case – see the references in Rayner et al, 2006). The Thompson anomaly seems to coincide strongly with the post-war shift back to a mix of US and UK ships, implying that post-war bias corrections are indeed required and significant. This conclusion is not much of a surprise to any of the people working on this since they have been saying it in publications and meetings for years. The issue is of course quantifying and validating the corrections, for which the Thompson analysis might prove useful. The use of canvas buckets by the Dutch, Japanese and some UK ships is most likely to blame, and given the mix of national fleets shown above, this will make a noticeable difference from 1945 to perhaps the early 1960s – the details will depend on the seasonal and areal coverage of those sources compared to the dominant US information. The schematic in the Independent is probably a good first guess at what the change will look like (remember that the ocean changes are constrained by the NMAT record shown above):

Note that there was a big El Niño event in 1941 (independently documented in coral and other records).
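The idea of removing variability tied to a known climate mode and looking at what is left can be sketched as a simple least-squares regression. This is a generic illustration, not a reproduction of Thompson et al.'s method: the ENSO-like index, the −0.3ºC step in 1945 and the noise levels are all synthetic assumptions.

```python
import math
import random

def regress_out(series, index):
    """Subtract the least-squares linear fit of `series` on `index`.

    Returns the residuals (the series mean is removed as well).
    """
    n = len(series)
    mean_s = sum(series) / n
    mean_x = sum(index) / n
    cov = sum((s - mean_s) * (x - mean_x) for s, x in zip(series, index))
    var = sum((x - mean_x) ** 2 for x in index)
    slope = cov / var
    return [s - mean_s - slope * (x - mean_x) for s, x in zip(series, index)]

rng = random.Random(1)
years = list(range(1930, 1961))

# Hypothetical ENSO-like index: a roughly 4.5-year oscillation plus noise.
enso = [math.sin(2 * math.pi * (y - 1930) / 4.5) + rng.gauss(0, 0.3) for y in years]
# Hypothetical SST anomaly: ENSO response + a -0.3 C step from 1945 + noise.
sst = [0.5 * e + (-0.3 if y >= 1945 else 0.0) + rng.gauss(0, 0.05)
       for e, y in zip(enso, years)]

resid = regress_out(sst, enso)
pre = [r for r, y in zip(resid, years) if y < 1945]
post = [r for r, y in zip(resid, years) if y >= 1945]
step_est = sum(post) / len(post) - sum(pre) / len(pre)
print(f"step recovered from residuals: {step_est:+.2f} C")
```

In the raw series the step is mixed in with larger ENSO-like swings; once the fitted index contribution is subtracted, it shows up in the residuals at close to its injected size.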

So far, so good. The fun for the blog-watchers is what happened next. What could one do to get the story all wrong? First, you could incorrectly assume that scientists working on this must somehow be unaware of the problems (that is belied by the frequent mention of post WWII issues in workshops and papers since at least 2005, but never mind). Next, you could conflate the ‘buckets’ used in recent decades (as seen in the graphs in Kent et al.'s 2007 discussion of the ICOADS meta-data) with the buckets in the pre-war period (see photo above) and exaggerate how prevalent they were. If you do make those mistakes, however, you can extrapolate to get some rather dramatic (and erroneous) conclusions. For instance, that the effect of the ‘corrections’ would be to halve the SST trend from the 1970s. Gosh! (You should be careful not to mention the mismatch this would create with the independent NMAT data series.) But there is more! You could take the (incorrect) prescription based on the bucket confusion, apply it to the full global temperatures (land included, hmm…) and think that this merits a discussion on whether the whole IPCC edifice had been completely undermined (Answer: no). And it goes on – once the bucket confusion was pointed out, the complaint switched to the scandal that it wasn’t properly explained and well, there must be something else…

All this shows wishful thinking overcoming logic. Every time there is a similar rush to judgment that is subsequently shown to be based on nothing, it still adds to the vast array of similar ‘evidence’ that keeps getting trotted out by the ill-informed. The excuse that these are just exploratory exercises in what-if thinking wears a little thin when the ‘what if’ always leads to the same (desired) conclusion. This week’s play-by-play was quite revealing on that score.

[Belated update: Interested in knowing how this worked out? Read this.]

Ray, BPL, et al: A question that may be old hat and I just missed it: why would the natural CO2 sinks absorb a percentage of emissions versus the capability of absorbing a fixed amount? In other words if the sinks are absorbing 14 gigatonnes of CO2 in ~ 2004 at about 50% of emissions, why are they not capable of that absorption always — which would have absorbed ~all of the emissions through about 1970 and so show zero increase in CO2 atmospheric concentration from 1800 to ~1970?

btw, I agree that CO2 emissions compared to the total weight of the atmosphere (my earlier post) has no probative value.

Hank (127), looks like a good reference; may answer some of my questions and save you guys’ time! A quick clarification: do you know why the largest C/CO2 sink, carbonaceous rock was omitted in the discussion? Because its time interval is so long and “non-interesting”? Or is there something else?

RE: #150 Hello Ray! GO:

http://www-airs.jpl.nasa.gov/index.cfm?fuseaction=ShowNews_DynamicContent&NewsID=10

Note the scale on the image: it is for standard dry air, but this is misleading. At 25,000 ft the air pressure is 6 psi, and the amount of CO2 would be 154 ml/cu meter without a temperature correction.

Check out the video.

If a model run starts with a value of 385 ppmv for CO2, the projected results will be a little bit too high. Models should start with ca 365 ppmv as calculated above. This may be the reason the mid troposphere is a little cooler than projected.

ATTN Gavin

How are your newer climate models incorporating the CO2 distribution data from the AIRS satellite? This would seem really important for the polar regions. The guys over at the AIRS place imply that you modelers are looking into this.

“Rod B Says:

6 June 2008 at 11:08 PM

Mark, 9 gigatonnes (I assume tonnes is correct) is (about) what % of the total flora?”

Rod, that would be 9 gigatonnes EACH YEAR of EXTRA plant growth. Add to that the reduction of the rainforests. But even so, where are these nine billion tons of new growth over and above the natural rate hiding themselves?

All you’ve managed to say is “well, it’s not a lot compared with the total, is it?”. Which doesn’t really answer the question, does it. It answers the question you’d like it to have been.

Ray Ladbury: …but I rather doubt that your model will match the data (all the data) very well.

Martin: …but that tuning is done to produce realistic model behaviour for known test situations.

Finally back to the thread topic! The data is so uncertain (and changing) that a very broad range of models could claim to match it nearly equally well.

And, while I know the time is too short to prove anything, the lack of warming this century (according to satellite data) does not match high sensitivity model predictions very well.

Re 151–the question of why sinks absorb a percentage rather than a fixed amount. I think that it has to do with the fact that they are not becoming fully saturated. In general reaction rates are proportional to concentrations unless one reactant saturates.
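The proportional-uptake argument can be made concrete with a one-box sketch: excess atmospheric carbon X, a sink that removes k·X per year, and emissions growing exponentially at rate r. The single box, the first-order sink and the parameter values are simplifying assumptions; the real carbon cycle has several reservoirs with different time scales.

```python
import math

def uptake_fraction(k=0.02, r=0.02, e0=1.0, years=300, dt=0.1):
    """Integrate dX/dt = E(t) - k*X with E(t) = e0 * exp(r*t) and return
    the fraction of current emissions removed by the sink at the end.

    k: hypothetical first-order sink rate (per year); uptake = k * X
    r: hypothetical exponential growth rate of emissions (per year)
    X: excess carbon in the atmosphere, arbitrary units
    """
    x = 0.0
    t = 0.0
    for _ in range(round(years / dt)):
        emissions = e0 * math.exp(r * t)
        x += (emissions - k * x) * dt  # forward-Euler step
        t += dt
    return k * x / (e0 * math.exp(r * t))

for k in (0.01, 0.02, 0.04):
    print(f"k={k}: sink removes {uptake_fraction(k=k):.2f} of emissions; "
          f"k/(k+r) = {k / (k + 0.02):.2f}")
```

Because the sink responds to the accumulated excess rather than having a fixed capacity, it removed only a small absolute amount while the excess was small and removes more as the excess grows. With steadily growing emissions the removed fraction settles near k/(k+r), so a roughly constant percentage falls out without any saturation.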

Harold, you seem to be implying that CO2 is 154 ppmv at 8 km, and that’s not what the link shows. Indeed, it appears quite consistent with 385 ppmv.

Rod, time interval; rate of change of rate of change. Most biogeochemical cycles are far, far slower than the rate at which human fossil fuel use is increasing CO2 in the atmosphere.

Natural sinks change over time: plankton may bloom in one season and take up more CO2; fish and whale populations may increase over a few decades and consume more plankton; erosion of exposed minerals changes slowly unless there are extreme rainfall/erosion events.

Look — seriously, look, read, for example the study linked below — you can expose a lot of fresh material with events like the sudden massive erosion at the end of the PETM when climate changed extremely fast compared to ‘normal’ rates.

This kind of change would wipe much of our coastal urban development off the map with severe flooding, if it happens again:

Abrupt increase in seasonal extreme precipitation at the Paleocene-Eocene boundary

B Schmitz, V Pujalte – Geology, 2007 – ic.ucsc.edu

http://ic.ucsc.edu/~jzachos/eart120/readings/Schmitz_Puljate_07.pdf

——excerpt from abstract———

… during the early, most intense phase of CO2 rise, normal, semiarid coastal plains with few river channels of 10–200 m width were abruptly replaced by a vast conglomeratic braid plain, covering at least 500 km2 and most likely more than 2000 km2. This braid plain is interpreted as the proximal parts of a megafan. Carbonate nodules in the megafan deposits attest to seasonally dry periods and together with megafan development imply a dramatic increase in seasonal rain and an increased intra-annual humidity gradient.

The megafan formed over a few thousand years to 10 k.y. directly after the Paleocene-Eocene boundary. Only repeated severe floods and rainstorms could have contributed the water energy required to transport the enormous amounts of large boulders and gravel of the megafan during this short time span.

The findings represent evidence for considerable changes in regional hydrological cycles following greenhouse gas emissions…

Steve Reynolds #155:

and yet, not one in nineteen manages to come up with sub-2C sensitivity… repeating your claim doesn’t make it any more true.

Steve, your caveat shows you’re learning, slowly. Now repeat after me: a trend hasn’t changed unless and until the change is statistically significant.

You’re getting there.

Steve Reynolds, First, we are talking about physical models, not statistical models. That is, you have to start with physical processes that you know are actually occurring. Then you use independent data to constrain those processes. Now it is true that any single data set may not provide a tight constraint. However, as different phenomena are analyzed and more data are added, the constraints for having the forcing explain all the data become increasingly tight. Because CO2 has properties as a forcing that are not shared by most others, it is a fairly easy one to constrain. Now it is true that each single dataset probably assumes some statistical model, but it would not be enough to show that any single model is wrong. You’ll have to find fault with most of them. In effect you would have to show that most of the correct models are either peaked much lower or at the very least were skewed leftward. If you just spread out the probability distribution to the left and right, you will wind up increasing the probability of sensitivity higher than 4.5 degrees, and that would make the risk for this portion of the curve even more dominant. Be very careful what you wish for with respect to the models. I for one am hoping they are right wrt the most probable value for sensitivity, as that at least preserves some chance of avoiding dangerous warming without draconian measures.
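Ray's point that widening the distribution symmetrically fattens the high-sensitivity tail can be checked with a toy calculation. This sketch assumes, purely for illustration, a normal distribution centred on 3ºC with two hypothetical spreads; published sensitivity estimates are typically skewed, so the numbers only show the direction of the effect.

```python
import math

def prob_above(threshold, mean, sd):
    """P(S > threshold) for a normal distribution with the given mean and sd."""
    z = (threshold - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Hypothetical: same central estimate (3 C), narrow vs. widened spread.
for sd in (0.75, 1.5):
    print(f"sd = {sd} C: P(S > 4.5 C) = {prob_above(4.5, 3.0, sd):.3f}")
# Expected: about 0.023 for sd = 0.75 and about 0.159 for sd = 1.5
```

Doubling the spread raises the probability of exceeding 4.5ºC from roughly 2% to roughly 16% in this toy case.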

Ray: Because CO2 has properties as a forcing that are not shared by most others, it is a fairly easy one to constrain.

I have not been questioning CO2 forcing. Feedback effects are the issue, especially clouds. I have still not seen any evidence about model physical parameters that the feedback effects are constrained to prevent sensitivity values less than 2C.

How do you know that modelers have not chosen parameters (affecting clouds for example) that determine feedback to produce a sensitivity consistent with paleoclimate and volcanic recovery time?

Only repeated severe floods and rainstorms could have contributed the water energy required to transport the enormous amounts of large boulders and gravel of the megafan during this short time span.

So perhaps this weekend’s rain is a mere prelude for what is yet to come. Great. Really looking forward to it.

Steve, the feedbacks don’t depend on the forcing, since they are thermally activated. That knob is not unconstrained either.

Mark (154), I never said 9 gigatonnes of flora was “not a lot compared with the total”. I’m just asking the question: how much is 9 Gt compared with the base? I have no idea. Thought you might.

RE: #156 Hello Ray!

The relative concentration is 385 ppmv on the mole fraction scale at STP for dry air. The absolute amount of CO2 is 156 ml/cu meter at this elevation, and the absolute concentration is 0.000007 moles/liter.

At sea level the absolute conc of CO2 for dry air is 0.017 millimoles/liter. For air with 1% water vapor, the absolute conc of water vapor is 0.45 millimoles/liter. The ratio of H2O molecules to CO2 molecules is about 27 to 1.

Real air has this very important property: It always contains water vapor. With respect to the greenhouse effect, water vapor is auto-forcing and auto-feedback.
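Harold's absolute concentrations can be checked with the ideal gas law, n/V = p/(RT). This is a minimal sketch assuming ideal dry air at 0ºC (no temperature correction) and the 6 psi figure from his comment; it ignores the small dilution of CO2 by the water vapour itself.

```python
R = 8.314462618        # J/(mol K), molar gas constant
T0 = 273.15            # K; assumed 0 C, i.e. no temperature correction
ATM_PA = 101325.0      # Pa in one standard atmosphere
PSI_PA = 6894.757      # Pa per psi

def mol_per_litre(mole_fraction, pressure_pa, temp_k=T0):
    """Absolute concentration (mol/L) of a gas with the given mole fraction,
    treating air as an ideal gas: total n/V = p / (R T)."""
    air_mol_per_m3 = pressure_pa / (R * temp_k)
    return mole_fraction * air_mol_per_m3 / 1000.0   # 1 m^3 = 1000 L

CO2_FRACTION = 385e-6                                 # 385 ppmv
co2_sea = mol_per_litre(CO2_FRACTION, ATM_PA)
co2_aloft = mol_per_litre(CO2_FRACTION, 6 * PSI_PA)   # 6 psi, as quoted in the comment
h2o_sea = mol_per_litre(0.01, ATM_PA)                 # 1% water vapour at sea level

print(f"CO2, sea level: {co2_sea:.2e} mol/L")         # about 1.72e-05
print(f"CO2, 6 psi:     {co2_aloft:.2e} mol/L")       # about 7.01e-06
print(f"H2O : CO2 = {h2o_sea / co2_sea:.0f} : 1")     # about 26
```

It gives about 1.7 × 10⁻⁵ mol/L (0.017 mmol/L) of CO2 at sea level, about 7 × 10⁻⁶ mol/L at 6 psi, and a water-to-CO2 ratio of about 26:1 for 1% water vapour. The mole fraction passed in, 385 ppmv, is the same at both pressures; only the absolute amount per litre changes with altitude.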

Harold, a 15 micron photon traversing that same liter of air will encounter about a billion CO2 molecules within a radius of its wavelength. And it will continue to do so well into the upper troposphere.

Ray: That knob [feedbacks] is not unconstrained either.

While of course there are some constraints, how tight are they?

What I’m looking for is a credible source that can answer the question: How much are the feedback effects in climate models constrained by known physics and high confidence real world data?

Steve, the feedbacks for CO2 will be pretty much the same as any other forcing. That implies a fair amount of data, and fairly tight constraints. Perhaps Gavin or Raypierre could help us out here.

“# Rod B Says:

9 June 2008 at 11:16 AM

Mark (154), I never said 9 gigatonnes of flora was “not a lot compared with the total”. I’m just asking the question: 9 gt is how much compared to what the base is. I have no idea. Thought you might.”

Well, someone else did the calculation. About 3 kg per square metre.

That would be each year. And for each square metre, whether or not it could hold plants (though you may get some back on gently sloped hills).

And, I think, they used a figure for “best Carbon content” of plant matter. There’s water in there too, y’know.

And you still haven’t figured out why total airmass matters.

Steve Reynolds asks:

“What I’m looking for is a credible source that can answer the question: How much are the feedback effects in climate models constrained by known physics and high confidence real world data?”

Well, if the IPCC scientific papers aren’t considered a credible source, first we must find a credible source.

Know of anyone?

Now what we CAN infer is that if even the denialists haven’t trotted this point out, it’s likely solid. After all, they harped on for AGES about the hockey stick. So, lacking anything other than the lack of attack on this subject, we have a baseline conclusion of “the constraints are solid”.

That shouldn’t rely on finding a “credible source”, either.

Steve Reynolds #160:

If there’s a modeller out there smart enough to pull that off, I’m sure Gavin would hire him :-)

You see, again, sensitivity is not a tunable parameter. It doesn’t help if you think you know what the sensitivity should be from extraneous sources like palaeo and Pinatubo. You cannot do anything with that knowledge through the cloud parametrization. It would be like blindly poking a screwdriver into a clock and turning it, in the hope it will run on time.

You can only improve cloud parametrization by studying clouds. Real clouds, or clouds modelled physically in a high-resolution model called a CSRM (Cloud System Resolving Model). And then you test how well you’ve done, not on palaeo or Pinatubo, but e.g. on weather prediction! The physics is largely the same… and, surprise surprise, as cloud modelling performance improves by this metric, out comes the right response to Pinatubo and palaeoclimate forcings.

But it’s a painfully slow process. See http://www.sc.doe.gov/ober/berac/GlobalChange.pdf

Steve Reynolds (166) — Some of what I believe you are after can be found in Ray Pierrehumbert’s

http://geosci.uchicago.edu/~rtp1/ClimateBook/ClimateBook.html

Re 131 and Raypierre response

I am not an expert in this field, but I am able to review the CO2 information, and I still have a problem with the claim that all of the increase in the past 100 years is due to humans. The IPCC says that over 99% of CO2 is the C12 isotope, and the claim is that the change in the less-than-1% C13 isotope indicates that humans have caused the increase in CO2. They cite Francey, R.J., et al., 1995: Changes in oceanic and terrestrial carbon uptake since 1982. Nature, 373, 326–330, to show that you can distinguish between human and other causes of CO2. Looking at this article, and since seasonal and longer-term variations in CO2 are known to exist, any decrease in natural C12O2 output would cause an apparent increase in the C12/C13 ratio. In fact the article specifically notes “we observe a gradual decrease in 13C from 1982 to 1993, but with a pronounced flattening from 1988 to 1990” and “The flattening of the trend from 1988 to 1990 appears to involve the terrestrial carbon cycle, but we cannot yet ascribe firm causes.” This would indicate to me that natural causes for a change in C12/C13 cannot be ruled out. I must also question how accurately these changes, representing such a small part of the atmosphere, can be measured. Error bars, anyone?

#52 Alastair McDonald:

>You may wish to declare your Australia a global warming free zone but …

He’s not the only one. Australia has one of the most ardent inactivist movements on the planet, supported by a powerful coal lobby and incompetent press.

The oil price trend is kind of rough justice on all those people who thought climate change and peak oil were hoaxes and bought SUVs.

#82 Timo Hämeranta:

> When discussing sea surface temperatures please see the following new study about sea level rise:

[…]

> They reconstructed approximately 80 mm sea level rise over 1950–2003, i.e. a rise of 1.48 mm yr⁻¹. This rise is below the estimate of the IPCC and have no acceleration.
>
> And after 2003 sea levels are falling…

Sorry, I can’t see where in the paper you cite they discuss levels after 2003. Also, it seems to me that the paper is about exploring alternative methodologies with some uncertainty as to whether they are yielding credible results, not about validating or overturning previous results.

#90 Timo Hämeranta:

> Everyone, whether neutral, ‘mainstream’, alternative, critical or sceptical, who proclaims certainty, only proves poor knowledge, false confidence and lack of scientific understanding (& behavior).

Good that you point that out. The inactivist crew in general exhibit this flaw to the highest degree because arguing that you should do nothing in the face of a potentially extreme threat requires supreme confidence that you’re right. I have yet to see an article by Bob Carter (for example), who has the scientific background to know the difference between science and absolute certainty, admitting that there is a scintilla of probability that he could be wrong.

Take for example the threat of the West Antarctic Ice Sheet collapsing in the next century. What probability of that would we tolerate before taking extreme measures to prevent climate change? 10%? 5%? 1%? 0.01%? You can argue that even with only a 1 in 1000 chance of it happening, the cost is so high, you’d pay the cost of prevention. Those who cannot accept on ideological grounds that humans are altering the climate in potentially harmful ways demand 100% certainty. You’re quite right to point out that that is not science (more like religion in my opinion).

Unfortunately as Richard Feynman told us, you can’t fool nature…

RE #30 & the much worse condition of sampling.

I’m thinking that if there is no bias in those sample errors you mentioned, and that they are “random,” then they might pretty much cancel each other out. So one would have to find some bias toward getting fairly consistently warmer or cooler samples than the actual SST being measured to have more serious problems. And even then if such a bias were found, it could be accounted for and corrections could be made.
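This intuition can be checked with a small simulation: average many readings of the same (hypothetical) true SST, first with purely random errors and then with the same errors plus a shared systematic offset. The 15ºC true value, 1ºC noise and −0.3ºC bias are illustrative assumptions.

```python
import random
import statistics

def mean_error(n, noise_sd, bias, seed=0):
    """Error of the average of n readings of one hypothetical true SST.

    Each reading = true value + shared systematic bias + independent
    Gaussian noise with standard deviation noise_sd.
    """
    rng = random.Random(seed)
    true_sst = 15.0
    readings = [true_sst + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.fmean(readings) - true_sst

for n in (10, 1_000, 100_000):
    random_only = mean_error(n, noise_sd=1.0, bias=0.0)
    with_bias = mean_error(n, noise_sd=1.0, bias=-0.3)
    print(f"n={n:>6}: random errors only {random_only:+.3f} C, "
          f"with -0.3 C bias {with_bias:+.3f} C")
```

The random part of the error shrinks roughly as 1/√n, while the shared offset survives averaging untouched, so it is a consistent bias, not the scatter of individual readings, that needs a correction.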

Re Gary @172: “This would indicate to me that natural causes for a change in C12/C13 can not be ruled out.”

When you find a natural source of the required size be sure to let us know. Until then it’s just another ‘what if’ straw to grasp at for those looking for a reason–any reason–to do nothing.

Martin, your link does provide some useful info, but I think it makes my point rather than yours:

“The behavior of clouds in a changed climate is often cited as the leading reason that climate models have widely varying sensitivity when they are given identical forcing such as that provided by a doubling of carbon-dioxide. [Cess et al. 1989 and Weilicki et al. 1996] One important way to reduce this uncertainty is to build models with the greatest possible simulation fidelity when compared to the observations of current climate. While the climate modeling community is making important and demonstrable progress towards this goal, the predictions of all types of models – including models such as “super-parameterization” – will remain uncertain at some level.”

Ray, yes, I agree that some help from Gavin or Raypierre would be useful here.

Gary #172,

You must realize that, while the CO2 content of the atmosphere is steadily increasing, the atmospheric CO2 is rapidly cycled all the time through the biosphere and ocean surface waters. These fluxes are many times the secular rate, but they very nearly cancel long term. This is why you see short term variations in atmospheric CO2 (the seasonal “ripple” on the Keeling curve, e.g., and ENSO-related fluctuations), and also in the d13C. The long-term trend in both is robust.

The technique of isotope ratio measurement is not a problem in spite of the small numbers. More of a challenge is proper sampling, i.e., choosing locations far from sources/sinks to get values representative for the whole atmosphere. Just as with ppmv determination.

As raypierre already pointed out, you will have to explain where the known-released CO2 from fossil fuel burning, about twice what we see appearing in the atmosphere, is going. It doesn’t do to postulate an unknown natural mechanism that magically kicks in in the 19th century just as we start burning fossil fuels (after having been stable for 8000 years or so), magically has the same quasi-exponential growth signature, and is magically just about the size you would get if half of the fossil-fuel (plus deforestation) CO2 disappeared into the oceans and biosphere… while at the same time failing to give said explanation.
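The mass-balance argument can be written down as a few lines of arithmetic. The inputs are illustrative assumptions: 28 Gt CO2/yr of emissions (implied by the “14 gigatonnes … at about 50%” figure earlier in the thread), an atmospheric growth rate of 1.9 ppm/yr, and the usual conversion of roughly 2.13 GtC per ppm.

```python
# Conversion: roughly 2.13 GtC per ppm of CO2, times 44/12 to get CO2 mass.
GT_CO2_PER_PPM = 2.13 * 44.0 / 12.0   # about 7.81 Gt CO2 per ppm

def net_natural_flux(emissions_gt, growth_ppm):
    """Net flux from land + ocean into the atmosphere, in Gt CO2 per year.

    Mass balance: atmospheric increase = emissions + net natural flux,
    so a negative result means the natural reservoirs are a net sink.
    """
    atmospheric_increase = growth_ppm * GT_CO2_PER_PPM
    return atmospheric_increase - emissions_gt

emissions = 28.0   # Gt CO2/yr (illustrative assumption)
growth = 1.9       # ppm/yr (illustrative assumption)
flux = net_natural_flux(emissions, growth)
print(f"atmosphere gains {growth * GT_CO2_PER_PPM:.1f} Gt CO2/yr; "
      f"net natural flux {flux:+.1f} Gt CO2/yr")
# The flux stays negative for any growth rate below emissions / GT_CO2_PER_PPM.
print(f"break-even growth rate: {emissions / GT_CO2_PER_PPM:.1f} ppm/yr")
```

With these inputs the atmosphere gains about 14.8 Gt CO2/yr, so land and ocean together must be taking up about 13 Gt CO2/yr. Any growth rate below about 3.6 ppm/yr would still leave the natural reservoirs as a net sink, so the sign of the result does not hinge on the exact numbers.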

#173 Philip Machanick Says:

“You can argue that even with only a 1 in 1000 chance of it happening, the cost is so high, you’d pay the cost of prevention.”

That depends on the cost of prevention and how effective this prevention is. For example, let’s say your $200,000 home has a 1 in 1000 chance of burning down in 30 years. Would you pay for fire insurance if the policy was going to cost you $25,000 per year and only had a 1 in 100 chance of paying out due to fine print? Most people would simply live with the risk of being uninsured if those were the terms.

Meaningful CO2 reductions are not going to happen as long as the world population is growing no matter what governments do. Even if there was some initial success at coercing people into a ‘carbon control regime’ you can bet that carbon limits would be tossed out the window at the first sign of a stalling economy. For that reason it makes no sense to pay the ‘cost of prevention’ if that cost involves restricting access to currently viable energy sources. Paying for R&D and hoping that a miracle occurs would likely be a worthwhile effort but it is not guaranteed to produce results either. Adaptation is the only plausible and cost effective strategy remaining.
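The expected values behind the house insurance analogy are easy to compute. This sketch simply plugs in the hypothetical figures from that example; it says nothing about whether those figures resemble real mitigation costs or climate risks.

```python
def insurance_expectations(home_value, p_fire, annual_premium, years, p_payout):
    """Expected loss, expected payout and total premiums over the policy term."""
    expected_loss = home_value * p_fire          # fire probability over the whole term
    expected_payout = expected_loss * p_payout   # insurer pays only if the fine print allows
    total_premiums = annual_premium * years
    return expected_loss, expected_payout, total_premiums

# Hypothetical figures taken from the insurance example above.
loss, payout, premiums = insurance_expectations(200_000, 1 / 1000, 25_000, 30, 1 / 100)
print(f"expected loss ${loss:,.0f}; expected payout ${payout:,.0f}; "
      f"premiums ${premiums:,.0f}")
```

On those terms the policy costs $750,000 in premiums against an expected payout of about $2, so the conclusion rests entirely on the assumed probabilities and premium.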

Lynn #174:

The good news is that you don’t have to stop at “thinking”. There are ways to check and obtain a precise correction. The second and fourth figures in the article show how it is done: you need redundancy, i.e., the same, or nearly the same, thing measured in more than one way. Co-located air and bucket temperatures. Co-located engine inlet and bucket temperatures.

This is also what fools statistically naive critics of the land temperature record: for climatology it is massively redundant. We know when a measurement is wrong and don’t have to rely on Anthony Watts’ pretty pics for circumstantial evidence.

BTW I would happily believe that individual bucket readings are off by 2C or even more :-)
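The redundancy point can be sketched with synthetic co-located pairs: each bucket reading carries a hypothetical −0.3ºC bias plus noise, each inlet reading carries only noise, and the offset is estimated from the mean of the paired differences. The bias, noise levels and sample size are assumptions chosen for illustration, not values from the figures in the article.

```python
import random
import statistics

def estimate_offset(pairs):
    """Estimate a systematic inlet-minus-bucket offset from co-located pairs.

    pairs: list of (bucket_reading, inlet_reading) taken at the same time and place.
    Returns (mean difference, standard error of the mean difference).
    """
    diffs = [inlet - bucket for bucket, inlet in pairs]
    return statistics.fmean(diffs), statistics.stdev(diffs) / len(diffs) ** 0.5

rng = random.Random(42)
pairs = []
for _ in range(500):
    true_sst = rng.uniform(5.0, 28.0)              # unknown in practice
    bucket = true_sst - 0.3 + rng.gauss(0.0, 0.5)  # hypothetical cool bias + noise
    inlet = true_sst + rng.gauss(0.0, 0.5)         # hypothetical unbiased + noise
    pairs.append((bucket, inlet))

offset, se = estimate_offset(pairs)
print(f"estimated bucket offset: {offset:.2f} +/- {se:.2f} C")
# Apply the estimated correction to the bucket readings.
corrected = [bucket + offset for bucket, _ in pairs]
```

Differencing the pairs cancels the unknown true SST, so even though individual readings scatter by half a degree or more, 500 pairs pin the offset down to within a few hundredths of a degree.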

Re Raven 178:

Providing a house insurance example with wildly inflated estimates of insurance costs and understated risk does not in any way justify your assertion that it is pointless to work hard at reducing emissions. If your house burns down then you can build or rent another one. Not so with the planet. And most climate scientists with any credibility appear to be convinced that if we don’t radically reduce emissions soon then very nasty impacts are more likely than not (rather than the one in 1000 chance that you imply).

#172, Gary, over the long term, the bulk of the CO2 increase can be shown to be from fossil fuels (e.g. https://www.realclimate.org/index.php/archives/2004/12/how-do-we-know-that-recent-cosub2sub-increases-are-due-to-human-activities-updated/langswitch_lang/sw)

However, that thread lacks quantitative analysis. As you point out, there are natural sources causing isotope ratio fluctuations in the short term and they could act in the long term as well. It would be nice to have a fully quantitative analysis of the fossil contribution to the past and current isotope ratio over various time scales.

re #172, Gary

you need to distinguish between variations in the rates of change of the 13C/12C ratio in atmospheric CO2 and the absolute changes. The bottom line is that the oceans and terrestrial environment are taking up excess CO2 released by humans (they are nett sinks), and therefore they cannot also be nett sources, even if occasionally the rates of uptake of CO2 from the atmosphere may vary, e.g. due to biomass burning (such as that of 1994/5 and 1997/8 [*]) when the rate of CO2 uptake by the terrestrial environment may slow considerably (or potentially reverse). However, the latter are temporary changes in the rate of CO2 exchange with the terrestrial environment, since plant growth following biomass burning will recover somewhat.

Now it’s very likely that variations in 13C/12C (and absolute atmospheric [CO2]) in the early part of the “industrial age” were due to land clearance, which will have both rendered the terrestrial environment a nett source and contributed to the reduction in atmospheric CO2 13C/12C, since plants and fossil fuel are similarly 13C-depleted. But that’s also an anthropogenic source of both atmospheric CO2 and reduced 13C/12C. In fact there’s evidence that the terrestrial environment was nett neutral for long periods in the late 20th century (e.g. throughout the 1980s [**]).
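Why adding 13C-depleted carbon (fossil or plant-derived) lowers atmospheric 13C/12C can be seen from a simple two-pool mass balance in delta notation. The numbers below are assumed round values for illustration (280 ppm at about -6.4 per mil for the pre-industrial atmosphere, about -28 per mil for the added carbon), not data from the papers cited here. The sketch ignores isotopic exchange with the oceans and land, so it overstates the real shift; it shows only the direction of the effect.

```python
def mix_delta(c1: float, d1: float, c2: float, d2: float) -> float:
    """Approximate delta13C (per mil) of two mixed carbon pools: concentration-weighted mean."""
    return (c1 * d1 + c2 * d2) / (c1 + c2)

if __name__ == "__main__":
    # Assumed, illustrative values only.
    pre_industrial_ppm, pre_industrial_delta = 280.0, -6.4
    added_ppm, added_delta = 100.0, -28.0  # fossil or land-clearance carbon
    print(round(mix_delta(pre_industrial_ppm, pre_industrial_delta, added_ppm, added_delta), 2))
```

Pure mixing gives about -12 per mil, far more depleted than the observed atmosphere, which is the footprint of exchange with the ocean and land reservoirs diluting the signal.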

But the bottom line is that if one adds CO2 to the atmosphere, this is going to suppress natural release of CO2 from the oceans and the terrestrial environment, since both (and especially the oceans) are linked to the atmosphere by equilibria in which CO2 partitions according to its atmospheric concentration. In other words, enhanced atmospheric CO2 results in enhanced physical partitioning into the oceans (easily measured by changes in ocean pH) and enhanced uptake by plants and the soil. Notice that each of these is potentially saturable in various respects. The only ways one might get a positive feedback (higher CO2 resulting in enhanced natural CO2 release) would be if the CO2-induced greenhouse enhancement raised temperatures enough to kill large amounts of biomass irreversibly, or released sequestered carbon in tundra, that sort of thing.
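The “physical partitioning” step can be sketched with Henry’s law, under which dissolved CO2 scales with its partial pressure in the overlying air. The constant below (about 0.034 mol per litre per atm, roughly fresh water near 25C) is an assumed approximate value. The sketch deliberately ignores carbonate chemistry, which in the real ocean holds most of the dissolved carbon and limits how much extra CO2 can be taken up.

```python
K_H = 0.034  # mol L^-1 atm^-1; assumed approximate Henry's-law constant for CO2 near 25C

def dissolved_co2(ppm: float, pressure_atm: float = 1.0, k_h: float = K_H) -> float:
    """Equilibrium dissolved CO2 (mol/L) for a given atmospheric mixing ratio, via Henry's law."""
    partial_pressure = ppm * 1e-6 * pressure_atm  # atm
    return k_h * partial_pressure

if __name__ == "__main__":
    for ppm in (280, 380, 560):
        print(ppm, f"{dissolved_co2(ppm):.2e} mol/L")
```

In this simplified picture, dissolved CO2 rises in direct proportion to atmospheric CO2.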

But that’s not to say that natural processes don’t lead to variations in the rates of change of atmospheric [CO2] and 13C/12C. That (and non-natural contributions to the terrestrial CO2 “budget”) is, I suspect, what the Francey Nature paper refers to.

You asked about errors. The error in measuring the isotopic composition of carbon using mass spectrometry is small, and on published graphs the error bar is normally smaller than the symbol. So, for example (to cite yet another Francey paper! [***]), the authors determine the accuracy of their 13C/12C measurements to be around 0.015‰ (per mil); in other words, if the 13C/12C ratio is given as delta 13C and has a value of -7.83‰ in 1996, that’s -7.83 ± 0.015‰. Any errors of any significance lie elsewhere (e.g. in the dating of gas in ice cores for historical measures of 13C/12C, or the possibility of gas contamination, and so on).
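How small a 0.015 per mil uncertainty is becomes clearer when delta values are converted back to absolute ratios, using delta13C = (R_sample / R_standard - 1) × 1000. The reference ratio below (0.0112372 for VPDB) is a commonly quoted value, but other values are in use, so treat it as an assumption.

```python
R_VPDB = 0.0112372  # 13C/12C of the VPDB reference; commonly quoted, other values exist

def delta_from_ratio(r: float, r_std: float = R_VPDB) -> float:
    """delta13C in per mil from an absolute 13C/12C ratio."""
    return (r / r_std - 1.0) * 1000.0

def ratio_from_delta(delta: float, r_std: float = R_VPDB) -> float:
    """Absolute 13C/12C ratio from delta13C in per mil."""
    return r_std * (1.0 + delta / 1000.0)

if __name__ == "__main__":
    r = ratio_from_delta(-7.83)
    dr = ratio_from_delta(-7.83 + 0.015) - r
    print(f"R = {r:.7f}; +0.015 per mil shifts R by {dr:.1e} ({dr / r:.1e} relative)")
```

A 0.015 per mil uncertainty corresponds to a relative change in the 13C/12C ratio of about 1.5 parts in 100,000.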

[*] Langenfelds et al (2002) Interannual growth rate variations of atmospheric CO2 and its δ13C, H2, CH4, and CO between 1992 and 1999 linked to biomass burning. Global Biogeochemical Cycles 16, article 1048.

[**] Battle et al (2000) Global carbon sinks and their variability inferred from atmospheric O2 and δ13C. Science 287, 2467-2470.

[***] Francey et al (1999) A 1000-year high precision record of δ13C in atmospheric CO2. Tellus B: Chemical and Physical Meteorology 51, 170-193.

Steve, note that the reference Martin supplied was advocating a way of calculating cloud feedbacks, not describing how they are done at present. Be that as it may, keep in mind that feedbacks affect all forcings equally. Thus if you changed, say, the cloud feedback to preclude large temperature rises, that change would seem to be contradicted by the large temperature rises we have had in the paleoclimate.

Ultimately, there’s a reason why climate models yield fairly consistent results–there’s just not that much wiggle room. Climate models aren’t telling us, qualitatively, anything we didn’t already know–they’re just making it possible to estimate how severe things could get. This is why I emphasize that if you are leary of draconian action motivated by panic, climate models are your best friends.

Steve Reynolds asks:

Well, for example, in 1964 Manabe and Strickler, in the first radiative-convective model, specified the vertical distribution of water vapor in terms of absolute humidity. In a second model, Manabe and Wetherald (1967) constrained relative humidity instead, using the Clausius-Clapeyron law to calculate how much vapor pressure was available at each level as the temperature changed. Research since then has shown that the vertical distribution of relative humidity does tend to stay about the same (although not in every place from day to day). They improved the physics and got a better model. Note that they used nothing from climate statistical data; they simply improved the physics. This has been going on in model development since the 1960s.
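Holding relative humidity fixed, as in the 1967 model, means the actual vapor pressure tracks the saturation vapor pressure, which rises steeply with temperature. The sketch uses a Magnus-type approximation to Clausius-Clapeyron (coefficients from Alduchov and Eskridge, 1996), not whatever formulation Manabe and Wetherald used, and an assumed relative humidity of 70%.

```python
import math

def saturation_vapor_pressure(t_celsius: float) -> float:
    """Saturation vapor pressure over water in hPa (Magnus-type fit, Alduchov & Eskridge 1996)."""
    return 6.1094 * math.exp(17.625 * t_celsius / (t_celsius + 243.04))

def vapor_pressure(t_celsius: float, relative_humidity: float) -> float:
    """Actual vapor pressure (hPa) when relative humidity (0-1) is held fixed."""
    return relative_humidity * saturation_vapor_pressure(t_celsius)

if __name__ == "__main__":
    rh = 0.7  # assumed fixed relative humidity
    for t in (15.0, 16.0, 18.0):
        print(t, round(vapor_pressure(t, rh), 2))
    growth = vapor_pressure(16.0, rh) / vapor_pressure(15.0, rh) - 1
    print("fractional increase per K near 15C:", round(growth, 3))
```

Near typical surface temperatures, fixed relative humidity implies roughly 6-7% more water vapor per kelvin of warming.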

Raven, I don’t think anything you’ve said contradicts Philip’s point. The problem is that with the current state of knowledge, we cannot rule out truly catastrophic consequences, so catastrophic that any effort short of bankrupting us could be justified.

To take your example: perhaps I might not pay for the insurance policy you posit, but I would invest heavily in protective equipment to preclude the possibility of my family and me being caught in a burning house, even if the probability were perhaps 1 in a hundred.

Chris:

“And most climate scientists with any credibility appear to be convinced that if we don’t radically reduce emission soon then very nasty impacts are more likely than not”

Wow, “More likely than not” is a strong statement. Do you have some links, or even names, of peer-reviewed studies that give quantitative probabilities above 50% for such outcomes?

Ray Ladbury

“Cannot rule out truly catastrophic consequences” – well said.

Ray Ladbury: Climate models aren’t telling us, qualitatively, anything we didn’t already know–they’re just making it possible to estimate how severe things could get. This is why I emphasize that if you are leary of draconian action motivated by panic, climate models are your best friends.

Of course motivation should not matter if we are trying to determine truth. However, I think sensitivity is currently constrained more tightly by volcanic and paleoclimate data than by models (see James Annan’s papers).

Barton: …They improved the physics and got a better model.

That’s great and I hope similar progress eventually leads to accurate models based only on objective physics, but I think we have a long way to go to resolve the initial question.

Let me restate my question to more clearly reflect the original discussion:

Are the feedback effects in climate models sufficiently constrained by known physics and high confidence real world data to preclude a climate sensitivity less than 2C?

[Response: Steve: You’ve got the wrong question. The right question is: are the feedback effects sufficiently constrained by known physics and high confidence real world data to preclude a climate sensitivity of 8C or more? At present, I’d argue that the answer to that is no. As long as there is a credible threat that 8C is the right answer (or even 4C), that threat has to be factored into the expected damages. It’s no different than what you’d do when designing a dam in a region where there’s a 1% chance of no earthquake over 100 years and a 1% chance of a very severe earthquake. And by the way, though I don’t think you can completely rule out a sensitivity below 2C, such a low sensitivity looks more and more incompatible with the 20th century record and past events, notably the PETM. Finally, without models, we wouldn’t even know that 8C is possible, or what kind of cloud feedbacks could give that value. For that matter, it’s models that tell us a Venus type runaway greenhouse is essentially impossible. –raypierre]
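The point that a credible chance of high sensitivity “has to be factored into the expected damages” can be illustrated with a toy expected-value calculation. Both the probabilities and the quadratic damage function below are hypothetical placeholders. They are chosen only to show that when damages grow faster than linearly, a low-probability tail can carry a large share of the expected cost.

```python
# Hypothetical sensitivity outcomes (C per doubling) and their probabilities: illustration only.
SCENARIOS = {2.0: 0.2, 3.0: 0.5, 4.5: 0.2, 8.0: 0.1}

def damage(sensitivity: float) -> float:
    """Hypothetical convex damage function (arbitrary units)."""
    return sensitivity ** 2

def expected_damage(scenarios: dict[float, float]) -> float:
    """Probability-weighted sum of damages over all scenarios."""
    return sum(p * damage(s) for s, p in scenarios.items())

def tail_share(scenarios: dict[float, float], threshold: float) -> float:
    """Fraction of expected damage coming from outcomes at or above the threshold."""
    tail = sum(p * damage(s) for s, p in scenarios.items() if s >= threshold)
    return tail / expected_damage(scenarios)

if __name__ == "__main__":
    print(round(expected_damage(SCENARIOS), 2), round(tail_share(SCENARIOS, 8.0), 2))
```

With these made-up numbers, the 10% chance of 8C accounts for about 40% of the expected damage.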

“Meaningful CO2 reductions are not going to happen as long as the world population is growing no matter what governments do.”

Germany, France, Japan and the UK produce about half as much CO2 per person as the US, Canada and Australia do. Getting those three down to typical EU levels of per capita CO2 emissions would be a substantial cut globally.

[Response: Actually France is more like 1/4, and is struggling to do even better than that. As you note, if the US even met France halfway on per capita emissions, that would be a huge reduction. And if China’s and India’s future per capita emissions could be capped at the French level, which we know is technologically feasible and compatible with a prosperous society, that would make a good interim target. It wouldn’t be enough to prevent doubling of CO2, but it would delay the date of doubling and buy time for more technology and more decarbonization. But yes, preventing doubling, with a world population of 10 billion, will require that the energy system ultimately be almost totally decarbonized. Even a per capita target of 1 tonne C per person worldwide (somewhat less than French per capita emissions today) amounts to 10 Gtonne per year, which would double CO2 in around a century, even if the ocean and land continue to be as good sinks as they are today. –raypierre]
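The back-of-envelope figure in the response can be reproduced under a few stated assumptions: about 2.13 GtC per ppm of atmospheric CO2 (an approximate, commonly used conversion), a starting concentration of 380 ppm, a doubling target of 560 ppm (twice the pre-industrial 280 ppm), and an airborne fraction held at an assumed 0.45 as a stand-in for the sinks staying “as good as they are today”.

```python
GTC_PER_PPM = 2.13  # approximate GtC per ppm of atmospheric CO2

def years_to_reach(target_ppm: float, start_ppm: float,
                   emissions_gtc_per_yr: float, airborne_fraction: float) -> float:
    """Years of constant emissions needed to raise CO2 from start to target,
    assuming a fixed fraction of each year's emissions stays in the atmosphere."""
    ppm_per_year = emissions_gtc_per_yr * airborne_fraction / GTC_PER_PPM
    return (target_ppm - start_ppm) / ppm_per_year

if __name__ == "__main__":
    population = 10e9
    per_capita_tc = 1.0  # tonnes of carbon per person per year
    emissions = population * per_capita_tc / 1e9  # GtC per year
    print(emissions, round(years_to_reach(560.0, 380.0, emissions, 0.45)))
```

With these assumptions the answer is about 85 years, in line with “around a century”.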

Ray (165), this is probably worth nothing, but maybe you could verify your math re the billion CO2 atoms. I get (but would bet little on it), with 380ppmv of evenly distributed CO2 molecules, ~2.15×10^3 molecules along a 10cm side (height) of a cubed liter, and 3.25×10^3 molecules in an area slice of 7×10^-10 sq. meters (circle area for r = 15 microns), for an encounter of ~7 million CO2 molecules, or ~1/100th of your billion. It probably doesn’t change your basic point (still a lot); maybe nobody cares, but I thought I’d ask…

Re #189: and as emissions come down, we must also contain the emissions due to economic growth, which is not going to be easy, because even if we doubled the fuel efficiency of every vehicle in the USA, we would still be consuming the same amount of oil seven years later due to 2% economic growth per year.
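Growth arithmetic of this kind can be checked with the doubling time of a quantity growing at a constant annual rate, ln 2 / ln(1 + r). The sketch assumes, for simplicity, that the 2% growth applies directly to oil demand.

```python
import math

def doubling_time(rate: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log1p(rate)

def rate_for_doubling_in(years: float) -> float:
    """Constant annual growth rate that doubles a quantity in the given number of years."""
    return 2 ** (1 / years) - 1

if __name__ == "__main__":
    print(round(doubling_time(0.02), 1))      # years to double at 2% per year
    print(round(rate_for_doubling_in(7), 3))  # annual rate needed to double in 7 years
```

At 2% per year, demand doubles in about 35 years. Erasing a doubling of efficiency within seven years would take roughly 10% annual growth.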

The task of decarbonising whilst meeting projected energy demand growth is daunting and requires some serious strategic thought which I personally doubt will happen in time to avoid >2C rises in global temperature.

People keep on talking about CCS for coal, but that ain’t gonna work in time, though it may stop >3C warming, I suppose.

Rod B., You’re right–forgot to divide by ~22.

Here is a graph prepared by the Carbon Dioxide Information Analysis Center at ORNL of the yearly anthropogenic emissions of CO2:

http://cdiac.ornl.gov/trends/emis/tre_glob.htm

Re #176 Steve Reynolds:

On the contrary — you’re changing the goalposts again.

I provided the link to demonstrate that your rather libelous claim that modellers tweak the cloud parametrization in order to get more “appropriate” sensitivity values has no relationship with how the process of improving the parametrization works in reality. You fooled even Ray Ladbury on that one (Ray, read the article with comprehension.)

At this point I usually give up. Horse, well, drink.

raypierre:

“It’s no different than what you’d do when designing a dam in a region where there’s a 1% chance of no earthquake over 100 years and a 1% chance of a very severe earthquake.”

Your analogy is not a great one. This is NOT a situation where we are planning a greenfield factory, power plant or hospital. In developed countries we are talking about massive changes to the existing infrastructure at enormous expense that would have a debatable impact on a problem that is not unequivocally proven. In the developing countries we’re talking about restricting their rights to the same inexpensive energy that we built our wealth on.

It just isn’t that simple. It’s more like we’ve found out that 100,000 dams have already been built in areas with a chance of an earthquake. The technology exists to build better dams, but it takes time, it’s expensive, and we’re not the least bit sure we can replace a significant number of the dams before the major earthquake that scientists tell us is coming. Do we fix the dams or buy boats?

raypierre: Steve: You’ve got the wrong question. The right question is: are the feedback effects sufficiently constrained by known physics and high confidence real world data to preclude a climate sensitivity of 8C or more?

Thanks for the response; it answers two questions.

For the high sensitivity limit, is your answer just addressing results from models, or do you disagree with James Annan, who writes:

“Even using a Cauchy prior, which has such extremely long and fat tails that it has no mean or variance, gives quite reasonable results. While we are reluctant to actually endorse such a pathological choice of prior, we think it should at least be immune from any criticism that it is overly optimistic. When this prior is updated with the analysis of Forster and Gregory (2006), the long fat tail that is characteristic of all recent estimates of climate sensitivity simply disappears, with an upper 95% probability limit for S easily shown to lie close to 4C, and certainly well below 6C.”

http://www.jamstec.go.jp/frcgc/research/d5/jdannan/prob.pdf
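The effect described in that quote can be sketched with a generic grid-based Bayesian update. Put a prior on sensitivity S, update it with a Gaussian likelihood for an observed feedback parameter λ (with S = F2x/λ), and compare 95% upper limits. With a uniform prior, a long tail survives because the likelihood levels off at a nonzero constant as λ approaches zero. The Cauchy prior’s 1/S² tail is what lets the posterior tail die away. This is not Annan and Hargreaves’ own code. All numbers (F2x = 3.7 W m^-2, λ = 2.3 ± 0.7 W m^-2 K^-1, the prior’s location and scale, and truncation at 100C) are illustrative assumptions, not values from the paper or from Forster and Gregory (2006).

```python
import numpy as np

# All numbers below are illustrative assumptions, not values from the papers.
F_2X = 3.7                        # W m^-2, forcing for doubled CO2
LAMBDA_OBS, LAMBDA_SD = 2.3, 0.7  # observed feedback parameter and 1-sigma, W m^-2 K^-1

def cauchy_prior(s, loc=3.0, scale=3.0):
    """Unnormalised Cauchy density for sensitivity S (location and scale assumed)."""
    return 1.0 / (1.0 + ((s - loc) / scale) ** 2)

def likelihood(s):
    """Gaussian likelihood of the observed feedback parameter, given S = F_2X / lambda."""
    return np.exp(-0.5 * ((LAMBDA_OBS - F_2X / s) / LAMBDA_SD) ** 2)

def upper_bound(s, density, level=0.95):
    """S value below which `level` of the probability mass lies (uniform grid assumed)."""
    cdf = np.cumsum(density)
    cdf = cdf / cdf[-1]
    return float(s[np.searchsorted(cdf, level)])

if __name__ == "__main__":
    s = np.linspace(0.05, 100.0, 200_000)  # uniform grid, truncated at 100 C
    for name, prior in (("uniform", np.ones_like(s)), ("Cauchy", cauchy_prior(s))):
        posterior = prior * likelihood(s)
        print(f"{name} prior: 95% upper limit on S ~ {upper_bound(s, posterior):.1f} C")
```

The exact limits depend entirely on the assumed inputs. The qualitative result is that the uniform-prior limit sits far out in the truncated tail, while the Cauchy-prior limit stays at a few degrees.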

DR (195) wrote “In the developing countries we’re talking about restricting their rights to the same inexpensive energy that we built our wealth on.” Fossil fuel isn’t so ‘inexpensive’ anymore, is it? So maybe they (and everybody) need alternative sources of energy. The recent IEA report offers one plan. For many developing countries, pushing hard for biofuels seems to me to be extremely sensible. Many countries in the global south are already doing so.

DR, if you feel the threat of climate change is not unequivocally proven, I wonder what additional proof you are looking for. After all, the IPCC models use the relatively conservative best-fit sensitivity of 3 degrees per doubling and still conclude that we will likely face serious consequences this century. A real risk calculation would have to use a higher bounding value for the sensitivity and would likely find that catastrophic results cannot be ruled out with confidence. It may not be a matter of fixing the dams or buying boats, but rather some of both, and both will take time. The only way we buy time is by decreasing CO2 emissions.

As to your arguments about the industrial and developing world, WE are going to have to significantly alter our infrastructure to deal with the end of cheap fossil fuel. The developing world’s lack of infrastructure is actually an advantage, since we can significantly diminish the impact of their economic growth in return for assisting them with clean energy. We are certainly justified in investing as much in risk mitigation as we would lose if the threat becomes reality.

DR says “…we are talking about massive changes to the existing infrastructure at enormous expense that would have a debatable impact on a problem that is not unequivocally proven.”

That is where we disagree. What will it take to convince you? The physics is solid and its predictions, which are conservative since they do not include some of the positive feedbacks, are being realized daily. The Arctic ice cover is reaching historic lows and may be ice-free within a few years, something that has not happened for a million years. Australia and Spain are developing new areas of desert, California is considering rationing water, and the Southeastern US is in prolonged drought. The WAIS and GIS are melting at ever faster rates, and on and on. A recent study shows that the warming rate may triple in the Arctic because of the melting of permafrost. That is the kicking in of a positive feedback, and represents a possible tipping point. Your demand for unequivocal proof could well take us to irreversible change of a catastrophic nature. We dare not take that risk.

DR,

even if a climate sensitivity >4.5 C could be completely ruled out (I would say it could, for purposes of political action; raypierre or others might disagree), it probably doesn’t matter, because a climate sensitivity of around 3 C is most likely. But this number is just a scientific benchmark; there is nothing inherently special about 560 ppmv aside from being the “first doubling.” The world doesn’t end at 2x CO2 or 2100 or what have you, so if we keep burning, it keeps getting hotter. The people living 500 years from now won’t care too much whether the 8 C hotter temperature came from a doubling or a tripling or a quadrupling of CO2, only that it happened.
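The remark about doubling, tripling or quadrupling follows from the roughly logarithmic dependence of CO2 forcing on concentration. Equilibrium warming scales with the number of doublings, log2(C/C0). The sketch assumes a fixed equilibrium sensitivity S per doubling and a pre-industrial baseline of 280 ppm. It ignores other forcings and the time needed to reach equilibrium.

```python
import math

def equilibrium_warming(co2_ppm: float, sensitivity: float, baseline_ppm: float = 280.0) -> float:
    """Equilibrium warming (C) for a CO2 level, assuming warming = S * log2(C / C0)."""
    return sensitivity * math.log2(co2_ppm / baseline_ppm)

if __name__ == "__main__":
    for multiple in (2, 3, 4):
        c = 280.0 * multiple
        print(multiple, [round(equilibrium_warming(c, s), 1) for s in (2.0, 3.0, 4.0)])
```

With S = 3C, a quadrupling gives about 6C. An 8C warming would correspond to a quadrupling with S = 4C, or to roughly 6.3 times pre-industrial CO2 with S = 3C.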

Given that the CO2 we add will influence climate for thousands of years to come, there is probably an ethical (as well as socio-economic) responsibility to do something about it, since the change we will get with a doubling of CO2 or more is of the same order of magnitude as a transition into an ice age (only going the other way). And as people like Hansen or Richard Alley say in public, we may be looking not just at a rising trend but also at a more variable climate that flickers back and forth between different states on yearly to decadal scales, which is very difficult to adapt to.

As for “solutions,” I only leave you with a few quotes from Daniel Quinn in his book “Beyond Civilization” without any implication or further comment:

“Old minds think: How do we stop these bad things from happening?”

“New minds think: How do we make things the way we want them to be?”

“Old minds think: If it didn’t work last year, let’s do MORE of it this year”

“New minds think: If it didn’t work last year, let’s do something ELSE this year.”