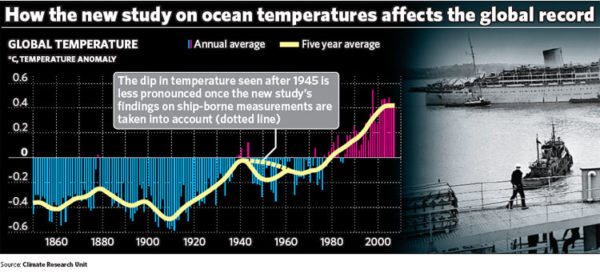

This last week has been an interesting one for observers of how climate change is covered in the media and online. On Wednesday a new paper (Thompson et al) was published in Nature, pointing to a clear artifact in the sea surface temperatures in 1945 and associating it with the changing mix of fleets and measurement techniques at the end of World War II. The mainstream media by and large got the story right – puzzling anomaly tracked down, corrections in progress after a little scientific detective work, consequences minor – even though a few headline writers got a little carried away in equating a specific dip in 1945 ocean temperatures with the more gentle 1940s-1970s cooling that is seen in the land measurements. However, some blog commentaries have gone completely overboard on the implications of this study in ways that are very revealing of their underlying biases.

The best commentary came from John Nielsen-Gammon’s new blog where he described very clearly how the uncertainties in data – both the known unknowns and unknown unknowns – get handled in practice (read that and then come back). Stoat, quite sensibly, suggested that it’s a bit early to be expressing an opinion on what it all means. But patience is not one of the blogosphere’s virtues and so there was no shortage of people extrapolating wildly to support their pet hobbyhorses. This in itself is not so unusual; despite much advice to the contrary, people (the media and bloggers) tend to weight new individual papers that make the news far more highly than the balance of evidence that really underlies assessments like the IPCC. But in this case, the addition of a little knowledge made the usual extravagances a little more scientific-looking and has given it some extra steam.

Like almost all historical climate data, ship-board sea surface temperatures (SST) were not collected with long term climate trends in mind. Thus practices varied enormously among ships and fleets and over time. In the 19th Century, simple wooden buckets would be thrown over the side to collect the water (a non-trivial exercise when a ship is moving, as many novice ocean-going researchers will painfully recall). Later on, special canvas buckets were used, and after WWII, insulated ‘buckets’ became more standard – though these aren’t really buckets in the colloquial sense of the word as the photo shows (pay attention to this because it comes up later).

The thermodynamic properties of each of these buckets are different, so when blending data sources together to get an estimate of the true anomaly, corrections for these biases are needed. For instance, the canvas buckets give a temperature up to 1ºC cooler than the modern insulated buckets in some circumstances (these depend on season and location: the biggest differences come over warm water in winter, and the global average is about 0.4ºC cooler). Insulated buckets have a slight cool bias compared to temperatures measured at the engine-room water inlet, which is the most common method at present. Automated buoys and drifters, which have become more common in recent decades, also tend to read cooler than the engine intake measurements. The recent IPCC report had a thorough description of these issues (section 3.B.3), fully acknowledging that these corrections are a work in progress.
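To make the blending step concrete, here is a minimal sketch that shifts each reading onto a common engine-inlet reference before averaging. The offsets are illustrative assumptions chained from the round numbers in this post (canvas about 0.4ºC cooler than insulated on global average, insulated about 0.1ºC cooler than inlet), and the names `OFFSET_VS_INLET` and `adjust_to_inlet` are hypothetical; real corrections vary with season, location and ship, and are not a single constant.

```python
# Illustrative offsets (deg C) of each method relative to engine-inlet readings.
# ASSUMPTION: chained from round numbers in the text (canvas ~0.4 cooler than
# insulated, insulated ~0.1 cooler than inlet); real corrections are not constant.
OFFSET_VS_INLET = {
    "engine_inlet": 0.0,
    "insulated_bucket": -0.1,
    "canvas_bucket": -0.5,
}


def adjust_to_inlet(reading_c: float, method: str) -> float:
    """Shift a reading onto the engine-inlet reference by removing its method bias."""
    return reading_c - OFFSET_VS_INLET[method]


def blended_mean(observations: list[tuple[float, str]]) -> float:
    """Average bias-adjusted readings from a mix of measurement methods."""
    adjusted = [adjust_to_inlet(t, m) for t, m in observations]
    return sum(adjusted) / len(adjusted)


# Hypothetical co-located readings of the same water by three methods.
obs = [(15.0, "engine_inlet"), (14.5, "canvas_bucket"), (14.9, "insulated_bucket")]
print(round(blended_mean(obs), 2))  # the three readings agree once adjusted
```

Without the adjustment, the raw mean of these three readings would be 14.8ºC, so a changing mix of methods can masquerade as a climate signal, which is exactly the kind of problem behind the 1945 artifact.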

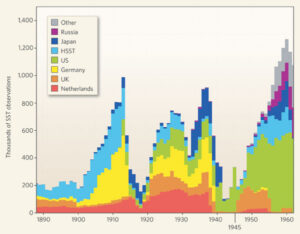

And that is indeed the case. The collection and digitisation of the ship logbooks is a huge undertaking and continues to add significant amounts of 20th Century and earlier data to the records. This dataset (ICOADS) is continually growing, and the impacts of the bias adjustments are continually being assessed. The biggest transitions in measurements occurred at the beginning of WWII, between 1939 and 1941, when the sources of data switched from European fleets to almost exclusively US fleets (which tended to use engine inlet temperatures rather than canvas buckets). This offset was large and dramatic and was identified more than ten years ago from comparisons with simultaneous measurements of night-time marine air temperatures (NMAT), which did not show such a shift. The experimentally-based adjustment to account for the canvas bucket cooling brought the sea surface temperatures much more into line with the NMAT series (Folland and Parker, 1995). (Note that this reduced the 20th Century trends in SST.)
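The NMAT cross-check works because a change in SST measurement practice should show up as a step in the SST-minus-NMAT difference, while a genuine climate change should not. A minimal sketch of that idea is a brute-force change-point search on an idealized, noise-free difference series. The data and the function `best_step` are hypothetical, and this generic search is not the experimentally based procedure of Folland and Parker (1995).

```python
def best_step(years: list[int], diff: list[float]) -> tuple[int, float]:
    """Return the first year after the largest jump in mean difference,
    and the size of that jump (mean after minus mean before)."""
    best_year, best_jump = years[1], 0.0
    for i in range(1, len(diff)):
        before = sum(diff[:i]) / i
        after = sum(diff[i:]) / (len(diff) - i)
        jump = after - before
        if abs(jump) > abs(best_jump):
            best_year, best_jump = years[i], jump
    return best_year, best_jump


# Hypothetical SST-minus-NMAT differences (deg C): buckets read cool until 1941.
years = list(range(1930, 1951))
diff = [-0.3 if y < 1941 else 0.0 for y in years]
print(best_step(years, diff))
```

Real difference series are noisy and the switch between fleets was gradual, so in practice the step is estimated rather than read off exactly.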

More recent work (for example, at this workshop in 2005) has focussed on refining the estimates and incorporating new sources of data. For instance, the 1941 shift in the original corrections was reduced and pushed back to 1939 with the addition of substantial and dominant amounts of US Merchant Marine data (which mostly used engine inlet temperatures).

The version of the data that is currently used in most temperature reconstructions is based on the work of Rayner and colleagues (reported in 2006). In their discussion of remaining issues they state:

Using metadata in the ICOADS it is possible to compare the contributions made by different countries to the marine component of the global temperature curve. Different countries give different advice to their observing fleets concerning how best to measure SST. Breaking the data up into separate countries’ contributions shows that the assumption made in deriving the original bucket corrections—that is, that the use of uninsulated buckets ended in January 1942—is incorrect. In particular, data gathered by ships recruited by Japan and the Netherlands (not shown) are biased in a way that suggests that these nations were still using uninsulated buckets to obtain SST measurements as late as the 1960s. By contrast, it appears that the United States started the switch to using engine room intake measurements as early as 1920.

They go on to mention the modern buoy problems and the continued need to work out bias corrections for changing engine inlet data as well as minor issues related to the modern insulated buckets. For example, the differences in co-located modern bucket and inlet temperatures are around 0.1 deg C:

(from John Kennedy, see also Kent and Kaplan, 2006).

However, it is one thing to suspect that biases might remain in a dataset (a sentiment shared by everyone); it is quite another to show that they really have an impact. The Thompson et al paper does the latter quite effectively by removing variability associated with some known climate modes (including ENSO) and seeing the 1945 anomaly pop out clearly. In doing this, in fact, they show that the previous adjustments in the pre-war period were probably ok (though there is substantial additional evidence of that in any case – see the references in Rayner et al, 2006). The Thompson anomaly seems to coincide strongly with the post-war shift back to a mix of US and UK ships, implying that post-war bias corrections are indeed required and significant. This conclusion is not much of a surprise to any of the people working on this, since they have been saying it in publications and meetings for years. The issue is, of course, quantifying and validating the corrections, for which the Thompson analysis might prove useful. The use of canvas buckets by the Dutch, Japanese and some UK ships is most likely to blame, and given the mix of national fleets shown above, this will make a noticeable difference from 1945 up to perhaps the early 1960s – the details will depend on the seasonal and areal coverage of those sources compared to the dominant US information. The schematic in the Independent is probably a good first guess at what the change will look like (remember that the ocean changes are constrained by the NMAT record shown above):

Note that there was a big El Niño event in 1941 (independently documented in coral and other records).
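The Thompson et al approach of removing variability linked to known climate modes can be illustrated with a single predictor. The sketch below, a hypothetical `remove_linear_influence` helper, fits an ordinary least-squares slope of an SST series on an ENSO-like index and subtracts it, leaving any step that the index cannot explain. The series is synthetic, and the paper's actual method uses more modes and differs in detail.

```python
def remove_linear_influence(series: list[float], index: list[float]) -> list[float]:
    """Subtract the least-squares fit of `series` on `index`, keeping the mean."""
    n = len(series)
    mean_x = sum(index) / n
    mean_y = sum(series) / n
    sxx = sum((x - mean_x) ** 2 for x in index)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(index, series))
    slope = sxy / sxx
    return [y - slope * (x - mean_x) for x, y in zip(index, series)]


# Synthetic example: an alternating ENSO-like index plus a hidden -0.3 deg C step
# halfway through (standing in for a measurement artifact).
enso = [1.0, -1.0] * 10
step = [0.0] * 10 + [-0.3] * 10
sst = [0.5 * e + s for e, s in zip(enso, step)]
residual = remove_linear_influence(sst, enso)
print([round(r, 2) for r in residual])
```

In this toy case the index and the step are uncorrelated, so the fit recovers the ENSO slope exactly and the residual is the step itself; with real data the separation is only approximate.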

So far, so good. The fun for the blog-watchers is what happened next. What could one do to get the story all wrong? First, you could incorrectly assume that scientists working on this must somehow be unaware of the problems (that is belied by the frequent mention of post WWII issues in workshops and papers since at least 2005, but never mind). Next, you could conflate the ‘buckets’ used in recent decades (as seen in the graphs in Kent et al 2007’s discussion of the ICOADS meta-data) with the buckets in the pre-war period (see photo above) and exaggerate how prevalent they were. If you do make those mistakes, however, you can extrapolate to get some rather dramatic (and erroneous) conclusions. For instance, that the effect of the ‘corrections’ would be to halve the SST trend from the 1970s. Gosh! (You should be careful not to mention the mismatch this would create with the independent NMAT data series.) But there is more! You could take the (incorrect) prescription based on the bucket confusion, apply it to the full global temperatures (land included, hmm…) and think that this merits a discussion on whether the whole IPCC edifice had been completely undermined (Answer: no). And it goes on – once the bucket confusion was pointed out, the complaint switched to the scandal that it wasn’t properly explained and well, there must be something else…

All this shows wishful thinking overcoming logic. Every time there is a similar rush to judgment that is subsequently shown to be based on nothing, it still adds to the vast array of similar ‘evidence’ that keeps getting trotted out by the ill-informed. The excuse that these are just exploratory exercises in what-if thinking wears a little thin when the ‘what if’ always leads to the same (desired) conclusion. This week’s play-by-play was quite revealing on that score.

[Belated update: Interested in knowing how this worked out? Read this.]

Re 84 Roger, some more comments (then I’ll stop, since it’s not the topic here):

– In wintertime, when there is no wind, there is often fog, at least in many places. And in fog layers you will not find the temperature inversions typical of the nocturnal boundary layer.

– In valleys you often have slope or valley winds during the night, which are still cool but mix a layer that is often deeper than 10 meters. Coasts and lakeshores also have their own local wind systems. These situations are not comparable to open plains.

– Stability in the nocturnal boundary layer is influenced by wind speed, but also by clouds. Trends in stability are therefore influenced by trends in wind speed and clouds, and hence by trends in the frequency distribution of distinct synoptic situations. Since there is considerable regional decadal variation in synoptic situations, a regional trend over a decade might be influenced by regional synoptic decadal variability. So before extrapolating over the globe, you have to assess such trends.

– Stability is influenced by longwave downward radiation. Especially in calm, clear-sky conditions, where you have a fully developed nocturnal boundary layer, a decrease in stability is exactly what can be expected if the concentration of greenhouse gases rises (enhanced longwave downward radiation partly offsets the cooling at the surface). Thus, if it is not a regional synoptic effect, the decrease in stability and the ‘enhanced’ surface warming might well be the result of increased greenhouse gas concentration and represent global warming. If greenhouse gases warm the air at 10m height somewhat less than at 2m, this does not help much, since humans, vegetation, soil, etc. are more affected by temperatures at 2m than by those at 10m.

“Sounds like special instruments would be preferable, avoiding people blogging about how the Boeing 737 thermometers were known biased and the Airbus temperature gauges were placed downwind of the engines….”

Just as long as they’re not using canvas buckets…

RE 96: In fact, commercial passenger aircraft have been used to collect near-real-time meteorological data since the 1970s, first on an experimental basis and for research purposes, and now in a fully operational manner. The system development was rather slow, as finding transmission slots in radio channels and the related standardization took time. The issue of cost sharing also had to be addressed.

Now many aircraft routinely report temperature and wind readings, and humidity measurements are being added to the programs. In many areas most of the in-situ upper-air measurement data is generated from these aircraft reports. Adding measurements of ozone and other atmospheric composition parameters is in the works.

Pilots did provide weather reports on written de-briefing forms even earlier, but these seldom included detailed data such as temperature readings.

Timo Hämeranta says: “Science is not about credibility, but facts. When Climatology is mostly probabilities, there’s always room to debate.”

Oh, please. Anybody who has ever actually done science knows that over time you get to know the contributors to a field–and guess what, everybody has a pet theory, a personal rivalry or an axe to grind. Those who establish themselves as credible are those who demonstrate willingness to put these biases aside and do good science. On the other hand, if a scientist goes to the media and misrepresents the state of the art, that is the surest way to destroy his or her credibility.

This does not mean that every technical contribution they make will be dismissed or even that it will be more heavily scrutinized, but everything they do is viewed with their agenda in mind.

Chuck has already pointed out the probabilistic nature of “scientific fact”. Along these lines I recommend the attached essay (as I’ve done many, many times):

http://ptonline.aip.org/journals/doc/PHTOAD-ft/vol_60/iss_1/8_1.shtml

As to the references you’ve cited–it is nearly impossible to come up with a generally applicable GCM with a sensitivity much less than about 2 degrees per doubling, and 3 degrees is strongly favored by a range of independent lines of evidence. Moreover, the evidence suggests that if 3 degrees is wrong, it’s more likely too low than too high.

Pekka’s right about commercial aircraft reports:

http://amdar.noaa.gov/docs/bams/

Bulletin of the American Meteorological Society, February 2003, 84, pp 203-216

Automated Meteorological Reports from Commercial Aircraft

ABSTRACT excerpt follows

Commercial aircraft now provide over 130,000 meteorological observations per day, including temperature, winds, and in some cases humidity, vertical wind gust, or eddy dissipation rate (turbulence). The temperature and wind data are used in most operational numerical weather prediction models at the National Centers for Environmental Prediction (NCEP) and at other centers worldwide. At non-synoptic times, these data are often the primary source of upper air information over the U.S. Even at synoptic times, these data are critical in depicting the atmosphere along oceanic air routes.

A web site (http://acweb.fsl.noaa.gov/) has been developed that gives selected users access to these data. Because the data are proprietary to the airlines, real-time access is restricted to entities such as government agencies and nonprofit research institutions ….

I realize this is OT and that climate is an average over 10 years or so, but I can’t help comparing the extent of ice and snow from last year to this.

http://igloo.atmos.uiuc.edu/cgi-bin/test/print.sh?fm=06&fd=04&fy=2007&sm=06&sd=04&sy=2008

In summary, the current snow cover in the NH is significantly less than last year, and last year, as many on this thread know, produced record minimums of sea ice extent.

So, with the albedo of the NH so low, it’s looking like another record summer for sea ice.

Most of the ACWEB site is restricted, as it says in the AMDAR article linked above, but poking at it, some information is public; here’s an online course in the operation of the program, which may interest some.

http://amdar.noaa.gov/2007course/

Thanks again, Pekka, for pointing out this exists.

More evidence that Google lacks a Wisdom button; I continue to rely on others to know what to look for!

Re #90 …

However, obviously it is incorrect to say, as Tim H. said, that “Climatology is mostly probabilities”…

Instead, when climate change is happening, climatology is, or should be, mostly about measuring and identifying trends in climate data that began years ago and are continuing and/or increasing.

Re #95 – Hi Wayne.

“At non-synoptic times, these data are often the primary source of upper air information over the U.S. Even at synoptic times, these data are critical in depicting the atmosphere along oceanic air routes.”

This information is very useful to the European models as it’s a very good source of upper air data over the Atlantic.

Hi Andrew,

Re #106 “that climate is an average over 10 years or so.”

Not to be picky, but I believe the IPCC is more comfortable defining climate as a much longer-term average than just ten years: at minimum, about 30-50 years are needed to filter out noise and natural cycles:

“The classical period is 30 years, as defined by the World Meteorological Organization (WMO).”

“Climate in a narrow sense is usually defined as the “average weather”, or more rigorously, as the statistical description in terms of the mean and variability of relevant quantities over a period of time ranging from months to thousands or millions of years. The classical period is 30 years, as defined by the World Meteorological Organization (WMO). These quantities are most often surface variables such as temperature, precipitation, and wind. Climate in a wider sense is the state, including a statistical description, of the climate system.” (IPCC glossary)

http://www.grida.no/climate/ipcc_tar/wg1/518.htm

Although ten years versus 30-50 does not sound like much of a difference, some natural repeating cycles, such as the Pacific Decadal Oscillation (~22 years or so), are thought to last more than ten years. So if climate were defined over only ten years, you wouldn’t be able to filter out noise like this, would you?
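The point about averaging periods can be checked with a little arithmetic. A running mean over a window of W years multiplies a sinusoid of period P by |sin(πW/P) / (πW/P)|. The sketch below evaluates that factor and confirms it numerically. Treating the PDO as a clean 22-year sine, as the figure in this comment suggests, is an idealizing assumption, and the function names are illustrative.

```python
import math


def boxcar_attenuation(period: float, window: float) -> float:
    """Fraction of a sinusoid's amplitude that survives a running mean (analytic)."""
    x = math.pi * window / period
    return abs(math.sin(x) / x)


def boxcar_attenuation_numeric(period: float, window: float, n: int = 2000) -> float:
    """Same quantity by averaging cos(2*pi*t/period) over a window centred on t=0
    (midpoint rule); the cosine peaks there, so the mean is the surviving amplitude."""
    omega = 2 * math.pi / period
    total = 0.0
    for k in range(n):
        t = -window / 2 + (k + 0.5) * window / n
        total += math.cos(omega * t)
    return abs(total / n)


for window in (10, 30):
    print(window, round(boxcar_attenuation(22, window), 2))
```

For a 22-year cycle, a 10-year mean leaves roughly 70% of the amplitude, while a 30-year mean leaves roughly 20%, which is the commenter's point in numbers.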

Wait a minute: could we not use the aircraft temperature readings to cross-check the radiosondes and, through them, the satellite readings?

Of course it would depend on the reliability and reproducibility of the aircraft method, and it’s probably got some faults, but nevertheless I wonder if it could help.

Re #6 and 16

My understanding is that humans produce about 4% of the CO2 released into the atmosphere annually. Is there a reference for the 27% that you stated? Thanks

Ray Ladbury: …it is nearly impossible to come up with a generally applicable GCM with a sensitivity much less than about 2 degrees per doubling…

As someone familiar with computer models, this seems unlikely to me. Do you have a reference?

Ray: Moreover, the evidence suggests that if 3 degrees is wrong, it’s more likely too low than too high.

That seems in conflict with James Annan (to whom you have previously linked) in this paper:

http://www.jamstec.go.jp/frcgc/research/d5/jdannan/prob.pdf

re 112

//”Re #6 and 16

My understanding is that humans produce about 4% of the CO2 released into the atmosphere annually. Is there a reference for the 27% that you stated? Thanks”//

I think you’re confusing emissions and concentrations…the latter is what matters for the greenhouse effect. CO2 has changed from 280 ppmv to 384 ppmv, so about 30% of the CO2 in the air is anthropogenic. The 4% number sounds about right for annual emissions (compared to all sources), but it is not incredibly relevant, since CO2 is long-lived and forcing is cumulative.

Re 113

In fact, he’s right. The range is most likely within 2 to 4.5 C (IPCC 2007), and the paleo record in particular argues against low sensitivity…but if anything we cannot discount the long tail that exists at the high end (i.e., low-probability, high-consequence outcomes). See Roe and Baker 2007 in Science.
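The long tail mentioned here follows from how feedbacks combine. In the simple framework discussed by Roe and Baker (2007), sensitivity is S = S0 / (1 - f), where S0 is the no-feedback response and f is the total feedback factor, so a symmetric uncertainty in f becomes a skewed one in S. The sketch below maps quantiles of a normal distribution of f through that formula. The values S0 = 1.2 ºC and f ~ N(0.65, 0.13) are illustrative assumptions, not numbers from this thread.

```python
from statistics import NormalDist

S0 = 1.2  # ASSUMPTION: no-feedback sensitivity, deg C per doubling
FEEDBACK = NormalDist(mu=0.65, sigma=0.13)  # ASSUMPTION: spread of feedback factor f


def sensitivity(f: float) -> float:
    """Equilibrium sensitivity for feedback factor f (valid for f < 1)."""
    return S0 / (1.0 - f)


def sensitivity_quantile(p: float) -> float:
    """S increases with f for f < 1, so quantiles of f map directly onto S."""
    return sensitivity(FEEDBACK.inv_cdf(p))


low, median, high = (sensitivity_quantile(p) for p in (0.05, 0.5, 0.95))
print(f"5%: {low:.1f}  median: {median:.1f}  95%: {high:.1f}")
```

With these inputs the median is about 3.4 ºC, but the 95th percentile (about 8.8 ºC) sits much further above the median than the 5th percentile (about 2.1 ºC) sits below it. That asymmetry is the long tail.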

> aircraft temperature

You may suspect they’re doing that already, given the existence of that large website dedicated to applying the data — but note the altitudes reached by sonde balloons: “The balloon carries the instrument package to an altitude of approximately 25 mi (27-37 km)….” http://www.aos.wisc.edu/~hopkins/wx-inst/wxi-raob.htm

Re Comment by Fred Moolten — 2 June 2008 @ 11:51 AM

Like you I’ve noticed that there seems to be a concentration on the fall-off and an ignoring of the rise. You attribute the WWII temperature excursion to an ENSO event. Isn’t it rather odd for such a feature? If you look at the Hadley SST graphs without the major Folland and Parker correction — Folland and Parker [1995] Figure 3. Annual anomalies from a 1951-80 average of uncorrected SST (solid) and corrected NMAT (dashed) for (a) northern (b) southern hemisphere, 1856-1992. Only collocated 5 deg. x 5 deg. SST and NMAT values were used — the strangeness of the temperature excursion stands out like the proverbial dog’s whatsits, larger than anything else on the graph, larger even than the huge 1998 surge which got us all so worried. There is a rise of about .4 deg in a year, sustained for several years.

I haven’t read the paper with the latest correction, but I can’t understand why there’s a correction afterwards and not before the excursion, unless it’s because someone hasn’t noticed that the F&P adjustment hides a rise which is even more striking than the fall. If the procedure used is just to strip out temperature blips on the basis that they are merely ENSO events then the chance to find an explanation for this interesting anomaly will be missed.

BTW, nice blues at your site — thanks.

JF

Andrew writes:

The World Meteorological Organization defines climate as mean regional or global weather over a period of 30 years or more.

Re #112 Gary

In relation to [CO2] in the atmosphere we need to be clear what we are talking about!

The parameter of interest is the increase in atmospheric CO2 as time progresses.

So, for example, for the roughly 2000 years before the mid 19th century, atmospheric CO2 levels were near 280 ppm [***]. That’s despite the massive release of CO2 into the atmosphere by plant decay in the Northern hemisphere in late summer/fall. This massive release of CO2 is reabsorbed by the terrestrial environment due to plant regrowth in the Northern hemisphere Spring and early summer.

This is overall pretty much nett neutral with respect to year on year changes in atmospheric [CO2]. That’s rather clear from the atmospheric CO2 record of the approx 2000 years before the industrial era [***].

Pretty much all of the excess and increasing [CO2] resulting from excess release of CO2 over and above the plant growth/decay cycles is from human emissions (digging up and burning fossil fuels sequestered underground for many millions of years). This has resulted in an increase in atmospheric [CO2] from 280 ppm to 385 ppm now (and growing).

The proportion of the total atmospheric [CO2] resulting from human emissions is (385-280)/385 = 27%

The percentage increase in atmospheric [CO2] due to human emissions is (385-280)/280 = 37.5% (in other words we have increased atmospheric CO2 levels by 37.5% over preindustrial levels).

Note that only about 40-50% of our emissions have ended up in the atmosphere. Around 40-50% has been absorbed by the oceans, and a small amount has been absorbed by the terrestrial environment.

[***]Meure CM et al (2006) Law Dome CO2, CH4 and N2O ice core records extended to 2000 years BP Geophys. Res. Lett. 33 L14810
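The two percentages in this comment answer different questions, and it is easy to mix them up. A minimal sketch, using the comment's round numbers of 280 ppm (pre-industrial) and 385 ppm (present); the function names are illustrative:

```python
PREINDUSTRIAL_PPM = 280.0  # from the comment (Law Dome record)
PRESENT_PPM = 385.0        # from the comment (2008 value)


def anthropogenic_share(present: float, preindustrial: float) -> float:
    """Fraction of today's atmospheric CO2 that is excess over pre-industrial."""
    return (present - preindustrial) / present


def increase_over_preindustrial(present: float, preindustrial: float) -> float:
    """Fractional rise relative to the pre-industrial baseline."""
    return (present - preindustrial) / preindustrial


share = anthropogenic_share(PRESENT_PPM, PREINDUSTRIAL_PPM)
rise = increase_over_preindustrial(PRESENT_PPM, PREINDUSTRIAL_PPM)
print(f"share of current CO2: {share:.1%}, rise since pre-industrial: {rise:.1%}")
```

Both use the same 105 ppm excess; only the denominator differs. Neither is the same thing as the roughly 4% share of annual gross emissions raised earlier in the thread, which compares flows rather than stocks.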

Gary says:

It’s actually less than 1% that’s released every year. But the vast natural sources are balanced by vast natural sinks, which is why atmospheric CO2 was steady for thousands of years at around 280 parts per million by volume. Since about 1750, human technology has increased the amount coming in, so that CO2 is now at about 385 ppmv. (385 – 280) / 385 = 27% of the CO2 out there is of human technological origin.

Spam filter note — I kept talking about the a m b i e n t level of CO2, and my posts kept getting flagged as spam because a m b i e n is a drug.

Barton (120), I’m flabbergasted by the numbers and granularity of CO2 absorption they suggest. I may also just be ignorant, but while I’m thinking: are you saying that the natural balance of CO2 in the atmosphere (source = sink) is so finely tuned and sensitive that its sink cannot handle any of the 0.000000062% [6.2×10^-8 %] of its weight added in an average year?? Also, are our physics and math that exact? [Or…, is my arithmetic faulty?]

Apologies but there’s nowhere I could find (before I got bored.. :-)) to ask. Anyone know who Steven Goddard is?

He’s put a couple of things up on The Register about how the NASA figures are fiddled so that NASA can continue the “lie” about AGW. The latest one is here:

http://www.theregister.co.uk/2008/06/05/goddard_nasa_thermometer/

There are plenty of holes, but getting them pointed out to him is impossible: too many posts go “disappearing” in moderation. The last one was about how the UAH and RSS figures were the second coldest southern hemisphere temperatures and only a little bit away from the world average.

However, I think he’s not reading correctly, or at the very least assuming a meaning to the report that isn’t actually there.

Ta.

#113 Steve Reynolds:

The IPCC AR4 WG1 report, page 630.

“The current generation of GCMs covers a range of equilibrium climate sensitivity from 2.1C to 4.4C (with a mean value of 3.2C, see Table 8.2 and Box 10.2), which is quite similar to the TAR”.

Table 8.2 tells that this is based on 19 model values.

So, getting well below 2C seems to be a bit of an effort, probably requiring you to do something wrong :-)

Rod B asks: “may also just be ignorant, but while I’m thinking: are you saying that the natural balance of CO2 in the atmosphere (source = sink) is so finely tuned and sensitive that its sink can not handle any of the 0.000000062% [6.2×10^-8 %] of its weight added in an average year??”

Well, where would the 9 gigatons of trees and plants each year be growing? We can’t see them from the satellite.

Maybe they’re growing underground…

And is that ~10^-8 % based on total mass of the atmosphere? How does having nitrogen in the air help carbon absorption?

Rod B.,

Look here:

http://www.epa.gov/climatechange/emissions/co2_human.html

and here:

http://en.wikipedia.org/wiki/Carbon_cycle

Then check your math.

Off topic, but does anyone know if Wally Broecker’s “greenhouse scrubbing” CO2-absorbing plastic “tree” project is feasible, or have more information about it?

He and others have proposed needing ~60 million of these plastic towers, each ~50 feet (~15 meters) high. The plastic absorbs CO2. The “full” or CO2-saturated trees would then be turned into a solid or compressed and stored underground.

Broecker points out that humanity can produce 55 million automobiles every year, so the scale is feasible, especially if spread out over a 30-40 year period.

His calculations apparently work out to needing ~60 million trees to capture “all of the CO2 currently emitted”.

The “trees” might be placed in “remote desert areas”. According to the (non-peer-reviewed) Scientific American magazine, Arizona currently seems to be open to projects like this (or, more precisely, to massive solar farms).

This would of course only be a “bandaid” and would not solve the CO2 emissions problem…but it might help stop, slow down or reduce the higher-emission-scenario tipping point/violent climate change/really nasty possibilities.

I’m not for it or against it. I just don’t know enough about it.

http://www.SciAm.com Jan 08, “A Solar Grand Plan” pp. 63-71

No, Rod. RC threads have been over this same question several times before, I am pretty sure you were in the threads in the past.

Recall, natural processes have handled about half the added CO2 from human activity, and the remainder is in the atmosphere. You can always find new publications on this easily using Google and careful reading of the results. Here is just one example; you will find others:

The Science of The Total Environment

Volume 277, Issues 1-3, 28 September 2001, Pages 1-6

doi:10.1016/S0048-9697(01)00828-2

The biogeochemical cycling of carbon dioxide in the oceans — perturbations by man

David W. Dyrssen

Abstract (excerpt):

“… Using data on the emission of CO2 and the atmospheric content in addition to the value recently presented by Takahashi et al. for the net sink for global oceans the following numbers have been calculated for the period 1990 to 2000, annual emission of CO2, 6.185 PgC (Petagram=10^15 g). Annual atmospheric accumulation, 2.930 PgC. …”

Click the ‘Cited by’ and “Related articles” links for more info as usual.
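The two Dyrssen figures quoted above imply the "about half" airborne fraction directly: divide the annual atmospheric accumulation by the annual emission. A minimal sketch; the variable names are illustrative and the inputs are the 1990-2000 averages from the abstract:

```python
ANNUAL_EMISSION_PGC = 6.185      # Dyrssen (2001), 1990-2000 average
ANNUAL_ACCUMULATION_PGC = 2.930  # Dyrssen (2001), 1990-2000 average

airborne_fraction = ANNUAL_ACCUMULATION_PGC / ANNUAL_EMISSION_PGC
absorbed_by_sinks = ANNUAL_EMISSION_PGC - ANNUAL_ACCUMULATION_PGC

print(f"airborne fraction: {airborne_fraction:.0%}")
print(f"taken up by ocean and land sinks: {absorbed_by_sinks:.3f} PgC per year")
```

That is, slightly less than half of the emitted carbon stays in the air each year, and the rest, about 3.3 PgC, is taken up by the oceans and the land.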

Re: #121: I think your math is way off. There are about 3000 gigatonnes of CO2 in the atmosphere. Anthropogenic emissions are about 30 gigatonnes a year.

Rod B (121) — There are several decent web sites about the carbon cycle. Just now, in effect, the oceans (and other sinks) are absorbing as much as possible as fast as possible. Compared to recent human activities, that’s not fast enough to provide balance, i.e., equilibrium, yet.

Wait quite a few centuries…

The idea of the CO2 sink not absorbing is not that far-fetched. With deforestation, land clearing, agricultural use and rising sea temperatures, the sink is decreasing in size and capacity, when it is not becoming a source itself.

Re #119,120,121 Chris et al, thank you. I understand the basic idea and have read the IPCC section on the C12O2/C13O2 ratio etc. Thank you for the Law Dome reference. I can’t figure out how you can attribute the entire rise in CO2 since 1900 to humans without showing NO change in non-human sources, ocean degassing, biosphere CO2 resorption and a host of other variables which cannot be known for the earlier part of the 1900’s and are probably not known today. The idea that biological systems are not malleable and cannot respond to changes in environment is not easily supported. Since it is known that plant growth is in general accelerated by increased levels of CO2, it seems possible that there are gigaton increases in the mass of trees, plants, algae, seaweed etc. on a global scale. You may be interested in this NASA note: http://science.nasa.gov/headlines/y2001/ast07sep_1.htm

[Response: You clearly haven’t understood what you have been reading. Ocean degassing would be incompatible with the C13 record, and in any event it can be directly shown by ocean monitoring that oceans are taking up CO2, not releasing it. And the land biosphere is indeed taking up CO2, not releasing it — which together with the ocean offsets part of what we are putting into the atmosphere. So, if the oceans are a sink and the land biosphere is a sink, what can be causing an increase in atmospheric CO2 other than the additional (and well documented) amount put into the atmosphere by fossil fuel burning and deforestation? Where is all that carbon supposed to be going, hmm? –raypierre]

Martin,

I don’t see how the existence of 19 models with sensitivity above 2C comes close to demonstrating that “it is nearly impossible to come up with a generally applicable GCM with a sensitivity much less than about 2 degrees”.

Mark, 9 gigatonnes (I assume tonnes is correct) is (about) what % of the total flora?

My percent figures are based on total atmosphere. I’ll have to think that through…
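Rod's check against the total atmosphere is easy to redo. The sketch below assumes a total atmospheric mass of about 5.1 × 10^18 kg (an assumption added here, not a figure from the thread) and uses the ~30 Gt/yr emission figure quoted in the thread; the names are illustrative.

```python
ATMOSPHERE_MASS_KG = 5.1e18   # ASSUMPTION: total mass of Earth's atmosphere
ANNUAL_EMISSIONS_KG = 3.0e13  # ~30 Gt CO2 per year, as quoted in the thread
ROD_FIGURE_PERCENT = 6.2e-8   # the percentage quoted in comment 121


def percent_of_atmosphere(added_kg: float, total_kg: float) -> float:
    """Annual addition as a percentage of the whole atmosphere's mass."""
    return 100.0 * added_kg / total_kg


pct = percent_of_atmosphere(ANNUAL_EMISSIONS_KG, ATMOSPHERE_MASS_KG)
print(f"{pct:.1e} % of the atmosphere's mass per year")
print(f"about {pct / ROD_FIGURE_PERCENT:.0e} times the quoted figure")
```

On these assumptions the annual addition is roughly 6 × 10^-4 % of the atmosphere's mass, about ten thousand times the figure in comment 121. In any case, the relevant comparison for the carbon cycle is with the CO2 already in the air (about 3000 Gt), not with the nitrogen and oxygen, which is why the annual addition comes out closer to 1%.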

Steve,

think about it. Nineteen groups independently (more or less) try to build models of the climate system where the requirement is that they be as physically realistic as possible. Not a single one comes up with a model having a sensitivity under 2 degrees. To me that strongly suggests that physical realism and low sensitivity are incompatible.

It’s a statistical argument (sampling the population of physically realistic models), but a pretty strong one I would say.
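The statistical argument can be made a little more concrete. Suppose a fraction p of all physically realistic models had a sensitivity below 2ºC, and the 19 AR4 models were independent random draws from that population. That is a strong simplifying assumption, since the models share code and ideas. Under it, the chance that none of them falls below 2ºC would be (1 - p)^19. A minimal sketch, with the function name illustrative:

```python
def prob_none_below(p_low: float, n_models: int = 19) -> float:
    """Chance that n independent draws all land above the threshold when a
    fraction p_low of the population lies below it."""
    return (1.0 - p_low) ** n_models


for p_low in (0.05, 0.1, 0.2):
    print(f"p = {p_low:.2f}: P(no model below 2C) = {prob_none_below(p_low):.3f}")
```

So if even one realistic model in five had a low sensitivity, seeing none in nineteen would be roughly a 1-in-70 event. A low-sensitivity fraction of a few percent cannot be ruled out this way, which is why the argument is strong but not conclusive.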

Totally off topic, but the posts on carbon sequestration are closed. On 30 May, The New York Times, along with a couple of other papers (including the FT, I think), announced that any hope for the often-advertised “clean coal” solution was, for all practical purposes, abandoned.

Re #118,

I think it’s inappropriate for the World Meteorological Organization (and NOAA) to continue to define climate as “mean regional or global weather over a period of 30 years or more” … climate is changing rapidly.

Steve Reynolds, The short and flippant answer in the face of the fact that such a beast does not exist is: “You’re welcome to try.” The thing is that for a dynamical model, you have to fix (or at least constrain) the forcing with independent data, and the data simply aren’t compatible with a forcing that low. The thing is that CO2 forcing has some fairly unique properties in that it provides a consistent, long-term forcing and remains well mixed into the stratosphere.

Erik, what’s your source for the 30 gigaton/year number from fossil fuels that you posted above?

Below is a link to a recent MIT course outline page covering petagram = gigaton and other useful information.

Climate Physics and Chemistry Fall 2006 Ocean Carbon Cycle Lectures

Carbon Cycle 1: Summary Outline

http://ocw.mit.edu/NR/rdonlyres/Earth–Atmospheric–and-Planetary-Sciences/12-301Fall-2006/66D1CB48-09FA-4CF5-8D9D-9C41834EEA94/0/handout.pdf

“… CO2 rise (at present) in atmosphere is about half of the rate of fossil fuel consumption – where does the rest go? Answer to follow: about half goes into the ocean and about half goes into organic matter storage (almost certainly on the continents)…”
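The "where does the rest go?" question is a simple mass balance: atmospheric increase = emissions − ocean uptake − land uptake. A minimal sketch, using only the rough "about half" fractions from the handout quote (assumed round numbers, not measured values) and a hypothetical emission normalised to 1:

```python
def partition_emissions(emitted: float, airborne_fraction: float = 0.5,
                        ocean_share_of_uptake: float = 0.5) -> dict:
    """Split an annual emission into atmosphere, ocean and land parts.

    The default fractions are the rough 'about half' figures quoted from
    the MIT handout; they are illustrative assumptions."""
    to_atmosphere = emitted * airborne_fraction
    uptake = emitted - to_atmosphere      # what the two sinks absorb together
    to_ocean = uptake * ocean_share_of_uptake
    to_land = uptake - to_ocean           # remainder goes into land storage
    return {"atmosphere": to_atmosphere, "ocean": to_ocean, "land": to_land}


budget = partition_emissions(emitted=1.0)  # one (hypothetical) unit of emissions
# Mass balance: the atmospheric increase equals emissions minus both sinks.
assert abs(budget["atmosphere"] - (1.0 - budget["ocean"] - budget["land"])) < 1e-12
print(budget)
```

With both sinks taking up carbon (positive uptake), the atmospheric increase is necessarily smaller than the emissions, which is the point raypierre makes above: no extra natural source is needed to explain the rise.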

Rod, I don’t know where you got your percentage figure. There are about 3 x 10^15 kg of CO2 in the air. We add about 3 x 10^13 kg per year, about 1%. A bit more than half of that gets absorbed, so net CO2 goes up by about 0.4% per year.
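The arithmetic above can be checked in a few lines. This sketch takes the two round numbers from the comment, and the absorbed fraction of 0.55 is an assumption standing in for "a bit more than half". It also uses the petagram = gigaton equivalence from the MIT link to express the annual addition as mass of CO2 and as mass of carbon, since figures in the thread may be quoted in either unit (which unit each commenter meant is not stated).

```python
CO2_IN_AIR_KG = 3e15        # approximate atmospheric CO2 stock quoted above
ADDED_PER_YEAR_KG = 3e13    # approximate annual addition quoted above
ABSORBED_FRACTION = 0.55    # assumption: "a bit more than half" is absorbed

C_PER_CO2 = 12.011 / 44.009  # mass fraction of carbon in a CO2 molecule
KG_PER_GT = 1e12             # 1 Gt = 1 Pg = 1e12 kg

emitted_fraction = ADDED_PER_YEAR_KG / CO2_IN_AIR_KG
net_growth = emitted_fraction * (1 - ABSORBED_FRACTION)

added_gt_co2 = ADDED_PER_YEAR_KG / KG_PER_GT
added_gt_c = added_gt_co2 * C_PER_CO2    # same emission expressed as carbon

print(f"added each year: {emitted_fraction:.1%} of the CO2 stock")
print(f"net growth: {net_growth:.2%} per year")
print(f"{added_gt_co2:.0f} Gt CO2 per year is about {added_gt_c:.1f} Gt C")
```

With these assumptions the net growth is about 0.45% per year, the same ballpark as the rough 0.4% above, and 30 Gt of CO2 corresponds to a little over 8 Gt of carbon.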

Just a relevant aside: it’s 103 °F in Norfolk VA right now, beating the old record from around 1900 by five degrees …

Ray: …for a dynamical model, you have to fix (or at least constrain) the forcing with independent data, and the data simply aren’t compatible with a forcing that low. The thing is that CO2 forcing has some fairly unique properties in that it provides a consistent, long-term forcing and remains well mixed into the stratosphere.

For my hypothetical model, I will accept the standard CO2 forcing, but that by itself only gives about a 1C sensitivity. The rest is feedback, both positive and negative. I’m sure that it is possible within the uncertainty in cloud and other effects, to produce a model with net sensitivity less than 2C.
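A textbook simplification makes the role of feedback explicit: if the no-feedback response is ΔT0 and all feedbacks are lumped into one net factor f, the equilibrium response is ΔT = ΔT0 / (1 − f). This is only a sketch under that linear-feedback assumption (GCMs do not compute sensitivity this way), and the function names are hypothetical.

```python
def equilibrium_warming(no_feedback_warming: float, feedback_factor: float) -> float:
    """Simplified linear feedback: dT = dT0 / (1 - f), valid only for f < 1."""
    if feedback_factor >= 1.0:
        raise ValueError("f >= 1 implies a runaway in this simple model")
    return no_feedback_warming / (1.0 - feedback_factor)


def max_feedback_for(target_warming: float, no_feedback_warming: float) -> float:
    """Largest net feedback factor that keeps warming at or below the target."""
    return 1.0 - no_feedback_warming / target_warming


DT0 = 1.0  # the roughly 1 C no-feedback figure quoted above (approximate)
for f in (0.0, 0.3, 0.5, 0.67):
    print(f"f = {f:.2f}: sensitivity ~ {equilibrium_warming(DT0, f):.1f} C")
print(f"staying below 2 C needs f < {max_feedback_for(2.0, DT0):.2f}")
```

In this framing, Steve's hypothetical model needs a net feedback factor below about 0.5, so the disagreement is really about whether the combined water vapour, lapse rate, albedo and cloud feedbacks can plausibly sum to that little.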

For Martin: I wonder how many times someone has done this and been told to fix their model so that it agrees with the expected sensitivity? Now there is paleoclimate data and volcanic recovery time data that does say 3C sensitivity may be more likely, but I have not seen anything from models that says less than 2C is nearly impossible.

There is also a heat wave in Norway. Good for ice cream vendors…

Steve, again, the feedback is part of the package; it doesn’t matter whether the warming is due to insolation or greenhouse gases. Models operate under many constraints. There’s not a lot of wiggle room, and there’s less wiggle room for CO2 and other greenhouse gases than for many other causes. This is not a Chinese menu where you choose one from column A, one from column B … until you match the trend.

So you are welcome to try, but I rather doubt that your model will match the data (all the data) very well.

Re # 141 Steve Reynolds

“I wonder how many times someone has done this and been told to fix their model so that it agrees with the expected sensitivity?”

So, who would tell the modelers to fix their models?

[Can one of the RC moderators tell me why this thread, unlike all the other RC threads, doesn’t save my name and email address in the Leave a Reply fields, or whatever they are called?]

But sensitivity is not a tunable parameter in them. As I understand it, there are tunable parameters, especially in the cloud/aerosol parametrization, which involves a difficult upscaling from cloud scale to model cell scale, but that tuning is done to produce realistic model behaviour for known test situations. Which brings us back to physical realism. Yes, as a side effect this may pin sensitivity to a 2C-plus value… my argument.

Barton (139) yes, using CO2 into CO2 I get 0.9% emitted each year, which does seem to be more relevant (though I’m still mulling…) than CO2 added to total atmosphere.

RE #137

As a matter of fact, there is no uniform temporal and spatial distribution of CO2 in the air. The concentration of CO2 in the atmosphere as determined by analysis of local or real air at Mauna Loa or any site is always reported for standard dry air, which is dry air at 273.2 K and 1 atm pressure composed of only nitrogen, oxygen and the inert gases. Standard dry air presently has ca. 385 ml of CO2 per cubic meter. Real air is a term for local air at the intake ports of air separation plants and in the HVAC industries.

The relative ratios of the gases in the air are quite uniform around the world and are independent of elevation, temperature and humidity. This is the origin of the term “well-mixed gases”.

The absolute or actual amount of the gases is site specific and depends mostly on elevation, pressure, temperature, and humidity.

For example, if standard dry air is heated to 30 deg C with pressure at 1 atm, the amount of CO2 is about 350 ml/cu meter. If this air were to become saturated with water vapor (ca. 5% for 100% relative humidity), the amount of CO2 is about 330 ml/cu meter. If standard dry air is cooled to -40 deg C at 1 atm pressure, the amount of CO2 is about 450 ml/cu meter. This is the origin of the polar amplification effect.

For a mean global temp of 15 deg C and about 1% humidity, the amount of CO2 is 365 ml/cu meter.

In textbooks the ideal gas law is usually given as PV = nRT, which can be rearranged to n/V = P/(RT), where n is the sum of the moles of gases in the mixture and R is the gas constant. Locally, the amount of CO2 and all the other gases will fluctuate with the weather. Water vapor will vary the most, over wide ranges.
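The figures in the comment above follow from n/V = P/(RT): at fixed pressure the total moles of gas per cubic metre scale as 1/T, and water vapour dilutes the dry-air share. A minimal sketch, assuming 385 ml of CO2 per m^3 of standard dry air (the figure quoted), a fixed CO2 mixing ratio relative to dry air, and water vapour given as a simple mole fraction; the function names are hypothetical.

```python
R = 8.314462618          # J/(mol K), molar gas constant
P_STD = 101325.0         # Pa, 1 atm
T_STD = 273.15           # K, standard temperature (the comment rounds to 273.2)
CO2_ML_PER_M3_STD = 385.0  # figure quoted above for standard dry air


def molar_density(pressure_pa: float, temp_k: float) -> float:
    """Total moles of gas per cubic metre from PV = nRT, i.e. n/V = P/(RT)."""
    return pressure_pa / (R * temp_k)


def co2_ml_per_m3(temp_c: float, pressure_pa: float = P_STD,
                  h2o_fraction: float = 0.0) -> float:
    """CO2 per cubic metre of local air, expressed as ml at standard conditions.

    The CO2 mixing ratio relative to dry air is held fixed; only the total
    amount of gas per m^3 (P/RT) and the dilution by water vapour change."""
    ratio = molar_density(pressure_pa, temp_c + 273.15) / molar_density(P_STD, T_STD)
    return CO2_ML_PER_M3_STD * ratio * (1.0 - h2o_fraction)


print(f"{molar_density(P_STD, T_STD):.1f} mol of gas per m^3 at standard conditions")
for label, t, h in [("30 C, dry", 30, 0.0), ("30 C, ~5% H2O", 30, 0.05),
                    ("-40 C, dry", -40, 0.0), ("15 C, 1% H2O", 15, 0.01)]:
    print(f"{label:>14}: {co2_ml_per_m3(t, h2o_fraction=h):.0f} ml CO2 per m^3")
```

This reproduces the roughly 350, 330 and 450 ml figures. For 15 C the 365 ml figure corresponds to dry air; 1% water vapour lowers it to about 361 ml. Note that the mixing ratio itself never changes in this calculation, which is the sense in which CO2 is called well mixed.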

CO2 is fairly soluble in water, and exchange with clouds can affect local distributions.

There are some images from AIRS that show that the distribution of CO2 is not constant, especially over the land.

Steve Reynolds writes:

So produce one. Prove your point.

What makes you think any such events have occurred at all?

Steve Reynolds, I think that you are attaching way too much importance to the adjective “impossible” and too little to the adverb “nearly”. You will also note the modifiers of GCM–“generally applicable”. Think about the characteristics of CO2 forcing. It is a net positive and acts 24/7 and over a period of hundreds of years. It acts at all levels of the troposphere. It is not unreasonable to think that a forcing with such unique characteristics will leave a pretty unique signature. You could probably “mimic” such a signature with a combination of factors, but it would probably mean taking values other than the most probable. It’s a question of probabilities and confidence intervals.

Harold Pierce, Jr., note that I did not say or imply a uniform concentration, but said well mixed. Uniformity is not necessary for the greenhouse effect to take place. Mixing high into the troposphere and above is.